1. Introduction

Concrete structures that have been in service for a long time in turbid water environments, such as sea-crossing bridge piers and docks in marine environments, and dams, flood gates, revetments and piers in river and lake environments, are subjected to adverse conditions such as erosion, chemical erosion, and freeze–thaw cycles. Their surfaces are therefore prone to cracking and spalling, which seriously threatens the safe and stable operation of these structures [1]. Surface defects not only weaken the durability of concrete structures but can also lead to more serious internal damage, threatening the overall safety of the structure [2]. However, the high risk of underwater inspection and the complexity of the water environment make detecting surface defects on underwater concrete extremely challenging [3,4].

Currently, robots equipped with image acquisition devices and combined with machine vision for intelligent inspection have shown great potential for identifying and measuring surface defects on underwater concrete structures [5,6]. For example, Espinosa used an ROV (remotely operated vehicle) and a static underwater video and image acquisition platform to collect underwater images [7]. Han et al. [8] used underwater robots and image segmentation algorithms to automatically detect damage in stilling basins.

However, underwater image acquisition faces physical challenges quite different from those in the atmospheric environment [9]. The principle of underwater optical imaging is illustrated schematically in Figure 1. First, the water body contains large numbers of suspended particles (such as plankton and sediment) and bubbles, which cause strong scattering [10]. Scattered light forms a halo in front of the lens, producing non-uniform bright and dark areas in the image: bright areas lose detail because light is over-concentrated, while dark areas mask features because light is insufficient. This phenomenon, called the “underwater snow effect”, is characterized by smooth transitions between light and dark areas (approximately Gaussian), which prevents traditional sharpening algorithms from effectively restoring details [11]. To complicate matters further, light of different wavelengths attenuates at different rates in water (red light fastest, blue and green light slowest), resulting in color distortion and reduced contrast [12]. Quantitative analyses show that unprocessed underwater images are significantly inferior to terrestrial images in structural similarity and other metrics [13]. Therefore, developing a brightness correction algorithm adapted to the smooth light–dark transitions of underwater images is a key prerequisite for restoring image details.

At present, underwater image enhancement algorithms fall into three main categories: non-physical-model methods, physical-model-based methods, and deep-learning-based methods. Non-physical-model methods are represented by Retinex theory and its improved models [14]. Retinex-like methods are based on illumination–reflection theory and combine it with nonlinear guided filtering to equalize illumination. Their key step is adaptively estimating the illumination component at different scales, which is prone to halo artifacts and loss of detail in bright areas; an example is Attenuated Color Channel Correction and Detail Preserved Contrast Enhancement (ACDC) [15]. Physical-model-based approaches introduce prior knowledge and use the imaging model to invert the degradation process [15]; they are represented by the Dark Channel Prior (DCP) algorithm and its improved variants, such as G-DCP. By analyzing the distribution of dark pixels in the image to separate the illumination component, DCP can effectively suppress over-enhancement [16,17]. However, it remains difficult to avoid failure of the dark channel prior in highly scattering areas [18], and DCP-like methods have high computational complexity, which makes real-time processing difficult. Data-driven deep learning algorithms are increasingly applied to computer vision tasks owing to their deep network structures and strong feature extraction capabilities [19]. However, because “truly clear” reference images cannot be obtained underwater, the performance of deep learning methods always depends on training data quality, parameter tuning, and the learning framework [20], and their generalization ability and computational efficiency are limited. Current research on underwater image processing focuses mainly on image data from shallow ocean areas [21,22]. Few image monitoring datasets exist for reservoirs and dams with high sediment concentrations and greater water depths, and few studies target these conditions.

At the same time, application scenarios such as underwater robots and monitoring systems require low processing latency, which existing algorithms struggle to achieve. For example, the original DCP algorithm achieves only 2–3 FPS on embedded CPUs [23], and deep learning models such as U-Net require more than 500 ms at 1080p resolution, which cannot meet real-time requirements [20]. Some studies improve computational efficiency by simplifying model parameters; for example, LU2Net adopts axial depthwise separable convolution, reducing the parameter count by 80% and reaching 12 FPS on an i7-10750H CPU [24], but real-time processing on ordinary processors remains difficult.

In summary, existing underwater image enhancement methods face three contradictions: Retinex-like methods are computationally efficient but suffer from halo artifacts and detail loss in bright areas; DCP-like methods can suppress over-enhancement but lack robustness because the dark channel prior can fail; and deep learning models are limited by training data quality and computing resources. To address these core problems, this paper develops the Dynamic Illumination and Vision Enhancement for Underwater Images (DIVE) algorithm. By constructing an illumination–scattering decoupling framework, DIVE handles Gaussian-distributed illumination correction (dynamic illumination module) and suspended-particle scattering correction (visual enhancement module) in separate stages. Fast local gamma correction in Lab space and dynamic decision-making based on the G/B channel means resolve non-uniform illumination and color cast step by step. In the visual enhancement stage, the Contrast Limited Adaptive Histogram Equalization (CLAHE) parameters are dynamically adjusted according to the image's contrast and detail level; for example, the block size in high-frequency (detail-rich) regions can be reduced by 50% to improve detail retention. To meet the needs of real-time monitoring, separable Gaussian convolution, a thread pool executor (ThreadPoolExecutor), and vectorized matrix operations are used to achieve a processing speed of approximately 25 frames per second for 1920 × 1080 underwater inspection video.

2. Dynamic Illumination and Vision Enhancement for Underwater Images

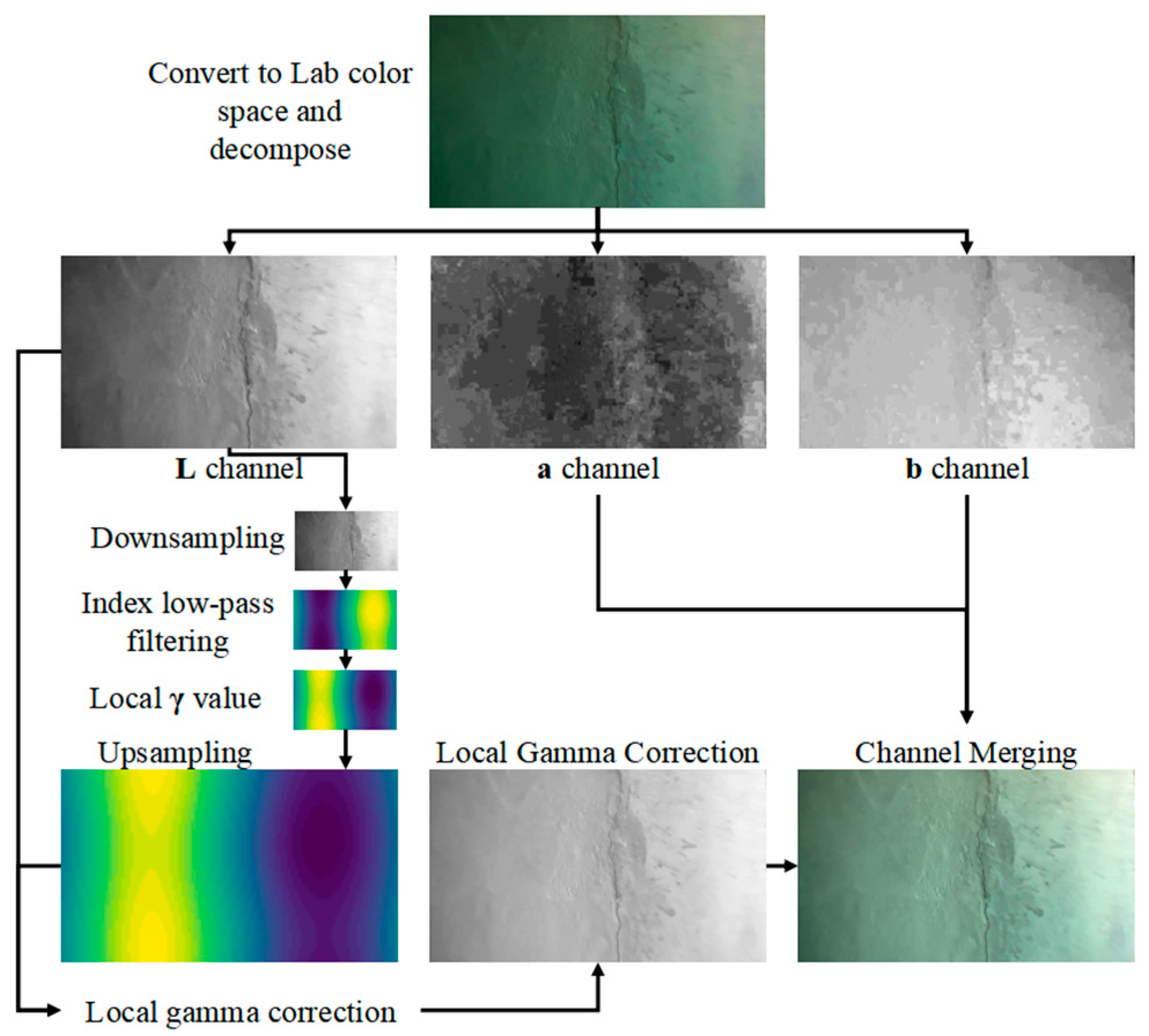

The DIVE algorithm is based on an illumination–scattering decoupling framework and comprises two core modules: dynamic illumination and visual enhancement. The dynamic illumination module, based on fast local gamma correction, uses Lab-space downsampling and separable Gaussian convolution to effectively address uneven underwater lighting. The adaptive dual-channel visual enhancement module corrects color through dynamic decision-making based on the G/B channel means and enhances details with a contrast-sensitive CLAHE algorithm. The processing flowchart is shown in Figure 2, and the detailed procedure is as follows.

2.1. Dynamic Illumination Based on Fast Local Gamma Correction

To address uneven underwater illumination caused by natural light and auxiliary lighting, this paper proposes a dynamic illumination method based on fast local gamma correction. Low-pass filtering and local gamma correction are applied to the L (lightness) channel of the original image in Lab color space, yielding a local nonlinear transformation driven by the illumination distribution. The process is shown in Figure 3, and the specific steps are as follows.

The L channel in Lab color space is independent of the chroma channels and has a larger dynamic range, which makes it well suited to high-dynamic-range image enhancement. Therefore, in the dynamic illumination stage, applying fast local gamma correction to the L channel alone can rapidly improve the brightness uniformity of the image.

(1) Lab color space conversion

Convert the image to the Lab color space to obtain the L (Lightness) channel of the image.
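As a minimal sketch of step (1), the L channel can be computed directly from an sRGB image with the standard CIE formulas. The function name `srgb_to_lightness` is hypothetical; the sketch assumes sRGB input scaled to [0, 1] and a D65 white point, and it returns only L* in [0, 100]. A full pipeline would normally use a library conversion such as OpenCV's `cv2.cvtColor` with `cv2.COLOR_BGR2LAB`, which also yields the a and b channels that are later recombined.

```python
import numpy as np


def srgb_to_lightness(rgb: np.ndarray) -> np.ndarray:
    """Return CIE L* in [0, 100] for an sRGB image of shape (..., 3) with values in [0, 1]."""
    rgb = np.clip(np.asarray(rgb, dtype=np.float64), 0.0, 1.0)
    # Undo the sRGB transfer curve to obtain linear RGB.
    linear = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    # Relative luminance Y (D65 white, Y_n = 1) from linear RGB.
    y = linear @ np.array([0.2126, 0.7152, 0.0722])
    # CIE lightness: cube root above the threshold, linear segment near black.
    delta = 6.0 / 29.0
    f = np.where(y > delta**3, np.cbrt(y), y / (3 * delta**2) + 4.0 / 29.0)
    return 116.0 * f - 16.0
```

White maps to L* = 100 and black to L* = 0, so the L channel can be normalized to [0, 1] by dividing by 100 before the gamma steps.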

(2) L channel downsampling

To improve computational efficiency while avoiding information loss, multi-scale downsampling is applied to the L channel before low-frequency illumination estimation. This paper employs a Gaussian-pyramid-based multi-scale downsampling method, which better preserves the structural information of the image through layer-by-layer Gaussian smoothing and downsampling and avoids the artifacts that simple interpolation may introduce.

Let the size of the original image’s L channel be (h, w), and define the target minimum side length as 64. Let the 0th layer be the original image, with $I_0 = L$. The k-th layer image is obtained by applying Gaussian convolution and downsampling to the (k − 1)-th layer image:

$$I_k = \mathrm{DOWN}\left(G * I_{k-1}\right)$$

where $G$ represents the Gaussian convolution kernel, $*$ denotes convolution, and $\mathrm{DOWN}(\cdot)$ denotes the downsampling operation.

Experimental results indicate that when the L channel of a 1080p image is downsampled with the Gaussian pyramid to a minimum side of about 64 pixels before subsequent processing, the total computation time is approximately 0.3% of that required to process the original-size image directly, a significant gain in computational efficiency. Meanwhile, the multi-scale approach better preserves frequency-domain characteristics, providing a reliable basis for subsequent processing.
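A minimal NumPy sketch of the pyramid recursion I_k = DOWN(G * I_{k-1}) follows. It assumes a 5-tap binomial kernel [1, 4, 6, 4, 1]/16 as the Gaussian approximation, factor-2 decimation as DOWN(·), and a stopping rule that halts once another halving would push the shorter side below 64. These choices and the function names are illustrative assumptions, not the paper's implementation.

```python
import numpy as np

# ASSUMPTION: 5-tap binomial kernel, a common Gaussian approximation for pyramids.
KERNEL_1D = np.array([1, 4, 6, 4, 1], dtype=np.float64) / 16.0


def blur(img: np.ndarray) -> np.ndarray:
    """Separable Gaussian-like blur with reflected borders (output has the input's shape)."""
    h, w = img.shape
    p = np.pad(img, 2, mode="reflect")
    # Horizontal pass: (h + 4, w + 4) -> (h + 4, w).
    tmp = sum(KERNEL_1D[i] * p[:, i:i + w] for i in range(5))
    # Vertical pass: (h + 4, w) -> (h, w).
    return sum(KERNEL_1D[i] * tmp[i:i + h, :] for i in range(5))


def gaussian_pyramid_to_min_side(L: np.ndarray, min_side: int = 64) -> list:
    """Return [I_0, I_1, ...] where I_k = DOWN(G * I_{k-1}); stops near min_side."""
    levels = [np.asarray(L, dtype=np.float64)]
    # ASSUMED stopping rule: halve only while the shorter side stays >= min_side.
    while min(levels[-1].shape) // 2 >= min_side:
        levels.append(blur(levels[-1])[::2, ::2])  # DOWN keeps every second row/column
    return levels
```

For a 1080 × 1920 L channel this produces levels of 540 × 960, 270 × 480, 135 × 240 and finally 68 × 120, on which the low-frequency illumination estimate is cheap to compute.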

(3) Low-frequency illumination component estimation

The bright and dark regions in underwater images typically exhibit smooth transitions (approximating a Gaussian distribution), making it difficult for traditional sharpening algorithms to handle such gradual illumination variations effectively [10].

This method applies Gaussian low-pass filtering in the downsampled low-resolution space to obtain a smooth brightness distribution. This approach not only effectively estimates the illumination distribution but also avoids edge blurring issues caused by using large-sized convolution kernels at the original resolution.

The standard deviation σ of the Gaussian low-pass filter directly determines its cutoff frequency. Underwater image experiments under different working conditions verified that, for higher-resolution images (above 720p), the selected σ effectively filters out high-frequency noise (such as fine texture) while retaining medium- and low-frequency illumination components (such as shading gradients).

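To meet real-time processing requirements, the two-dimensional Gaussian function is separated into two one-dimensional functions:

$$G(x, y) = \frac{1}{2\pi\sigma^2}\exp\left(-\frac{x^2 + y^2}{2\sigma^2}\right) = g(x)\,g(y), \qquad g(t) = \frac{1}{\sqrt{2\pi}\,\sigma}\exp\left(-\frac{t^2}{2\sigma^2}\right)$$

Then $G = g_x \otimes g_y$, where $\otimes$ denotes the outer product, so the two-dimensional convolution can be computed as a horizontal one-dimensional convolution followed by a vertical one. The separable convolution gives results identical to direct two-dimensional convolution.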
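To illustrate why the separation is exact, the sketch below compares a direct 2D filter built from the outer-product kernel with a horizontal pass followed by a vertical pass. The value σ = 2.0 and the 3σ kernel radius are arbitrary illustrative choices (the paper's calibrated σ is not reproduced here), only the "valid" region is computed to avoid border handling, and the function names are hypothetical.

```python
import numpy as np


def gaussian_1d(sigma: float) -> np.ndarray:
    """Normalized 1-D Gaussian g(t) sampled on integers with radius ceil(3 * sigma)."""
    radius = int(np.ceil(3 * sigma))
    t = np.arange(-radius, radius + 1, dtype=np.float64)
    g = np.exp(-t**2 / (2 * sigma**2))
    return g / g.sum()  # normalization replaces the 1 / (sqrt(2*pi) * sigma) factor


def conv2d_direct(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Direct 2-D filtering over the 'valid' region (symmetric kernel, so no flip needed)."""
    kh, kw = kernel.shape
    oh, ow = img.shape[0] - kh + 1, img.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * img[i:i + oh, j:j + ow]
    return out


def conv2d_separable(img: np.ndarray, g: np.ndarray) -> np.ndarray:
    """Horizontal 1-D pass followed by a vertical 1-D pass ('valid' region)."""
    n = len(g)
    oh, ow = img.shape[0] - n + 1, img.shape[1] - n + 1
    rows = sum(g[j] * img[:, j:j + ow] for j in range(n))  # shape (H, ow)
    return sum(g[i] * rows[i:i + oh, :] for i in range(n))  # shape (oh, ow)
```

Calling both on the same image with `np.outer(g, g)` and `g` gives matching arrays. With a kernel of width n, the direct form costs n² multiply-adds per pixel and the separable form 2n, for example 169 versus 26 when n = 13.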

(4) Calculate local gamma values and upsampling

According to the given parameter value (calibrated as 1.5 using images from various working conditions in this paper), the local gamma value is calculated using Equation (3):

The resulting low-resolution local gamma-value matrix is restored to the original size by bicubic interpolation upsampling, yielding a full-resolution gamma map.

(5) Gamma correction of the original L channel

Each pixel of the L channel is then gamma-corrected using the calculated local gamma values:

The adjusted L channel is combined with the original a and b channels to obtain the brightness-corrected image.
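Equations (3) and (4) are not reproduced in this text, so the sketch below does not show the paper's exact formula. It assumes a Moroney-style local gamma, γ = α^((B − 0.5)/0.5), where B is the low-resolution illumination estimate in [0, 1] and α = 1.5 is the calibrated value mentioned above; dark regions then receive γ < 1 (brightened) and bright regions γ > 1 (darkened). Bilinear upsampling stands in for the bicubic interpolation described in the text, and all function names are hypothetical.

```python
import numpy as np


def upsample_bilinear(small: np.ndarray, shape) -> np.ndarray:
    """Resize a 2-D array to `shape` by corner-aligned bilinear interpolation."""
    h, w = shape
    sh, sw = small.shape
    ys, xs = np.linspace(0, sh - 1, h), np.linspace(0, sw - 1, w)
    y0 = np.floor(ys).astype(int)
    y1 = np.minimum(y0 + 1, sh - 1)
    x0 = np.floor(xs).astype(int)
    x1 = np.minimum(x0 + 1, sw - 1)
    wy, wx = (ys - y0)[:, None], (xs - x0)[None, :]
    top = small[y0][:, x0] * (1 - wx) + small[y0][:, x1] * wx
    bottom = small[y1][:, x0] * (1 - wx) + small[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy


def local_gamma_correct(L, illum_small, alpha=1.5, mid=0.5):
    """L: full-size lightness in [0, 1]; illum_small: low-res blurred L in [0, 1]."""
    # ASSUMED form of Eq. (3) (Moroney-style): gamma < 1 where illumination < mid.
    gamma_small = alpha ** ((illum_small - mid) / mid)
    # Bilinear here; the text uses bicubic upsampling.
    gamma = upsample_bilinear(gamma_small, L.shape)
    # ASSUMED form of Eq. (4): per-pixel power law on the normalized L channel.
    return np.clip(L, 0.0, 1.0) ** gamma
```

The corrected L values would then be rescaled to the Lab range and recombined with the unchanged a and b channels. Computing the exponent on the small grid and upsampling only the gamma map keeps the full-resolution work to a single element-wise power.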

2.2. Adaptive Dual-Channel Visual Enhancement

2.2.1. Color Correction Based on G/B Channel Mean Dynamic Decision-Making

Inspired by ACDC [15], the color cast is corrected by adjusting the proportional relationships among the RGB channels, enhancing the color contrast and dynamic range of the image. The specific steps are as follows:

Separate the red, green, and blue channels of the RGB image and normalize them to [0, 1]; then adjust the red (R) and blue (B) channels according to the means of the green (G) and blue (B) channels.

Here, the three symbols denote the adjusted R, G, and B channel values.

Calculate the sum of each channel:

The sums of the other two channels are calculated in the same way.

To meet the needs of real-time monitoring, a ThreadPoolExecutor is used to process the RGB channels in parallel. In addition, matrix operations replace pixel-by-pixel operations, vectorizing the color correction and significantly reducing computational overhead.
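The color-correction equations are not reproduced in this text. As a hedged illustration only, the sketch below substitutes the red/blue compensation rule of Ancuti et al. (channel + α(mean_ref − mean_channel)(1 − channel)·ref) and a hypothetical G/B decision rule: the brighter of G and B serves as the reference for red, and blue is compensated from green only when green dominates. It also shows the two implementation ideas from the text: whole-array NumPy expressions instead of per-pixel loops, and a `concurrent.futures.ThreadPoolExecutor` that processes channels concurrently. Function names are hypothetical.

```python
import numpy as np
from concurrent.futures import ThreadPoolExecutor


def compensate(channel, ref, ref_mean, alpha=1.0):
    """Ancuti-style compensation: raise weak pixels where the reference channel is strong."""
    return channel + alpha * (ref_mean - channel.mean()) * (1.0 - channel) * ref


def color_correct(rgb, alpha=1.0):
    """rgb: float array (H, W, 3) in [0, 1]; returns the compensated image."""
    rgb = np.clip(np.asarray(rgb, dtype=np.float64), 0.0, 1.0)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    g_mean, b_mean = g.mean(), b.mean()
    # HYPOTHETICAL G/B decision: the brighter of G and B is the reference for red.
    ref, ref_mean = (g, g_mean) if g_mean >= b_mean else (b, b_mean)
    with ThreadPoolExecutor(max_workers=2) as pool:
        # Each job is a whole-array (vectorized) expression, not a per-pixel loop.
        r_job = pool.submit(compensate, r, ref, ref_mean, alpha)
        # HYPOTHETICAL: compensate blue from green only when green dominates.
        b_job = pool.submit(compensate, b, g, g_mean, alpha) if g_mean > b_mean else None
        r_c = r_job.result()
        b_c = b_job.result() if b_job is not None else b
    return np.clip(np.stack([r_c, g, b_c], axis=-1), 0.0, 1.0)
```

Because NumPy releases the GIL inside many array operations, the channel jobs can overlap in practice, but the actual speedup depends on image size and hardware.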

2.2.2. Detail Enhancement Based on Local Adaptive Mapping

To further enhance the details of underwater images, contrast is enhanced by a local adaptive mapping function.

First, the contrast and detail-level feature values of the image are calculated from the L channel after local gamma correction in the dynamic illumination stage. The contrast C of the image is calculated as:

Next, the detail level D is calculated. The gradient is computed with the 3 × 3 Sobel operator:

The gradient magnitude is then calculated:
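The formulas for C and D are not reproduced in this text. A minimal sketch, assuming C is the RMS contrast (standard deviation of the L channel normalized to [0, 1]) and D is the mean Sobel gradient magnitude; both definitions are assumptions, while the 3 × 3 Sobel kernels and the magnitude √(Gx² + Gy²) are the standard forms. Function names are hypothetical.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=np.float64)
SOBEL_Y = SOBEL_X.T  # [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def filter3x3(img: np.ndarray, k: np.ndarray) -> np.ndarray:
    """3x3 correlation with edge padding; the sign flip versus convolution does not affect magnitudes."""
    p = np.pad(img, 1, mode="edge")
    h, w = img.shape
    return sum(k[i, j] * p[i:i + h, j:j + w] for i in range(3) for j in range(3))


def contrast_and_detail(L: np.ndarray):
    """Return (C, D) for a lightness channel normalized to [0, 1]."""
    L = np.asarray(L, dtype=np.float64)
    C = float(L.std())  # ASSUMED: RMS contrast
    gx, gy = filter3x3(L, SOBEL_X), filter3x3(L, SOBEL_Y)
    magnitude = np.hypot(gx, gy)  # sqrt(gx**2 + gy**2)
    D = float(magnitude.mean())  # ASSUMED: mean gradient magnitude
    return C, D
```

A flat image gives C = D = 0, while a sharp vertical edge gives a large horizontal gradient and zero vertical gradient, so D grows with the amount of edge and texture content.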

In CLAHE processing, the CLAHE parameters are first adjusted dynamically according to the contrast C and detail level D. C controls the contrast-limiting threshold, preventing excessive enhancement in local regions (which can amplify noise).

Contrast Limit Threshold:

Each RGB channel is divided into tileSize × tileSize sub-regions, and a histogram is calculated independently for each region. The histogram is then truncated at the clip limit, and the excess pixels are redistributed:
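A minimal sketch of the clip-and-redistribute step for one tile, assuming 8-bit data and uniform redistribution of the clipped excess across all bins. This is a simplification: after uniform redistribution some bins can exceed the limit again, and some CLAHE implementations distribute the leftover in a further pass. The paper's own threshold formula is not reproduced here, and the function names are hypothetical.

```python
import numpy as np


def clip_and_redistribute(hist, clip_limit):
    """Clip histogram bins at clip_limit and spread the excess evenly over all bins."""
    hist = np.asarray(hist, dtype=np.float64)
    excess = np.maximum(hist - clip_limit, 0.0).sum()
    clipped = np.minimum(hist, clip_limit)
    return clipped + excess / hist.size  # total pixel count is preserved


def tile_mapping(tile, n_bins=256, clip_limit=None):
    """Equalization lookup table (scaled CDF) for one tile of 8-bit values."""
    hist = np.bincount(np.asarray(tile).ravel(), minlength=n_bins).astype(np.float64)
    if clip_limit is not None:
        hist = clip_and_redistribute(hist, clip_limit)
    cdf = np.cumsum(hist)
    return (n_bins - 1) * cdf / cdf[-1]
```

Lowering the clip limit flattens the histogram peaks before the CDF is built, which limits the slope of the mapping and hence the local contrast gain and noise amplification.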

Bilinear interpolation is then performed, and the final equalized value of each pixel is obtained by interpolating the mappings of its four adjacent blocks:

$$I'(x, y) = \sum_{k=1}^{4} w_k\, T_k\big(I(x, y)\big)$$

where k is the adjacent block index, $T_k$ is the equalization mapping of block k, and $w_k$ is the interpolation weight.
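For a pixel lying between four tile centers, the interpolation weights are the usual bilinear weights built from its fractional offsets fy and fx inside that cell, and they sum to 1. A minimal sketch with hypothetical names, assuming each tile's mapping is a 256-entry lookup table such as one produced by per-tile equalization.

```python
import numpy as np


def bilinear_weights(fy: float, fx: float) -> np.ndarray:
    """Weights for the (top-left, top-right, bottom-left, bottom-right) tiles."""
    return np.array([(1 - fy) * (1 - fx), (1 - fy) * fx, fy * (1 - fx), fy * fx])


def blend_four(value: int, luts, fy: float, fx: float) -> float:
    """Weighted sum of the four tiles' mappings evaluated at the pixel's gray value."""
    mapped = np.array([lut[value] for lut in luts])
    return float(bilinear_weights(fy, fx) @ mapped)
```

Blending the four mappings rather than using only the pixel's own tile removes the visible block boundaries that independent per-tile equalization would otherwise produce.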

This local adaptive mapping is contrast-sensitive and detail-adaptive: high-contrast images (large C) are enhanced less strongly to avoid over-enhancement, and regions rich in high-frequency content (large D) use smaller blocks to preserve details.

3. Algorithm Verification Based on Laboratory Test

To examine the performance of the image processing algorithm in specific scenarios, its effectiveness is verified under controlled extreme conditions of sediment concentration, lighting, and shooting distance.

3.1. Experimental Image Acquisition

3.1.1. Acquisition Platform

An image acquisition platform consisting of an underwater optical image acquisition system, an underwater dark chamber, and a cracked concrete block was designed and fabricated, as shown in Figure 4.

The platform uses an opaque water tank with inner dimensions of 50 cm × 50 cm × 60 cm (length × width × height) as the container and color-controllable spotlights as auxiliary lighting devices.

The sediment concentrations of the prepared water are 100, 200, 300, 400, and 500 g/m³. Two concrete members with cracks and pockmark defects are photographed at distances of 5, 10, 20, 30, and 40 cm.

3.1.2. Selection of Lighting Conditions

In related research, Chen [1] conducted comparative experiments with different light sources, and the results showed that, under the same illumination conditions, blue light significantly improves the average gray value, clarity, contrast, and number of keypoints of underwater defect images compared with white light. Based on this conclusion, this study selects white light and blue light as auxiliary light sources, and underwater images are captured at various distances.

The lighting conditions are divided into natural lighting conditions and auxiliary light source lighting conditions:

(1) Natural lighting conditions: two working conditions are set, with natural light and without natural light.

(2) Auxiliary light source lighting conditions: Programmable spotlights are selected as auxiliary lighting equipment, and white light and blue light are used for auxiliary lighting of underwater scenes, respectively.

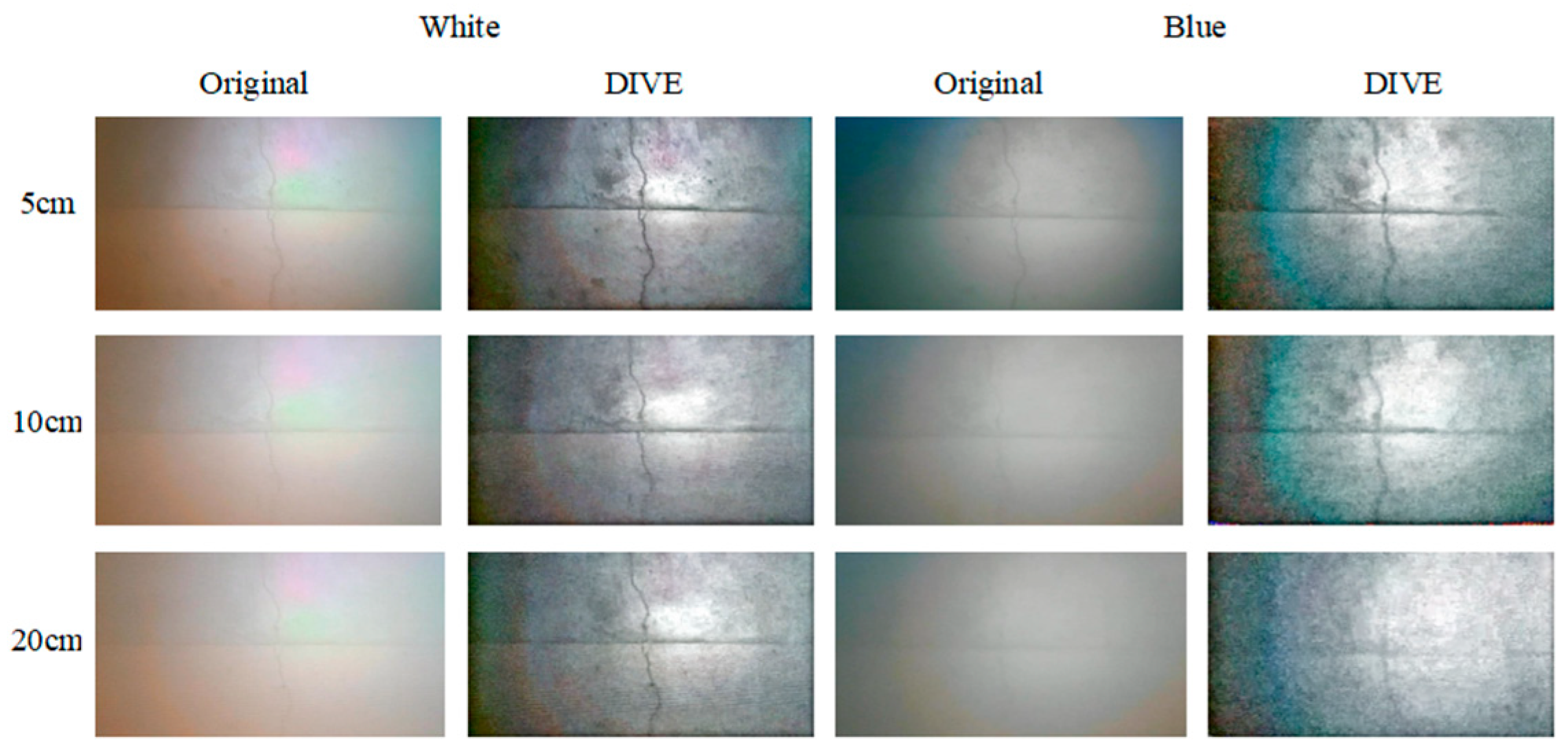

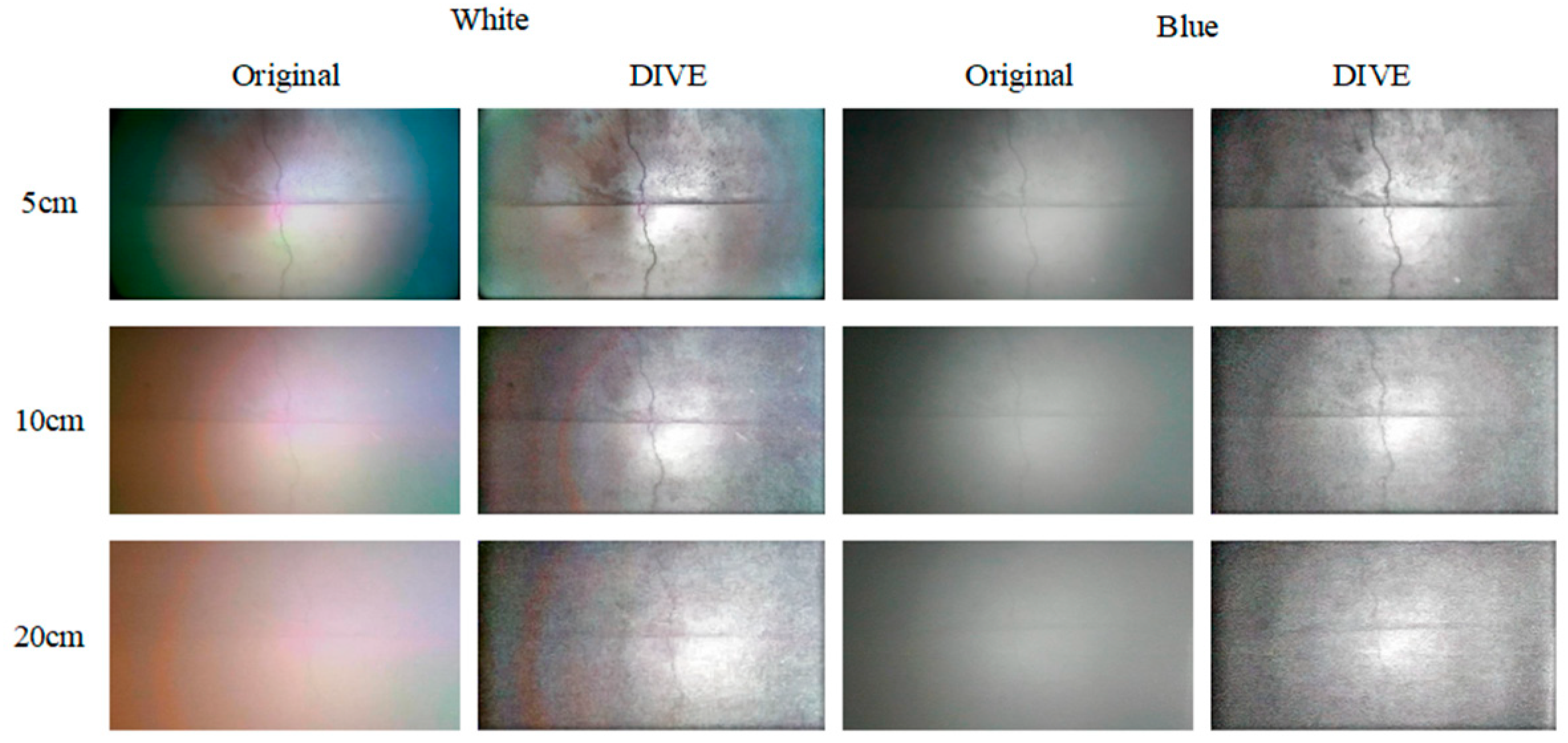

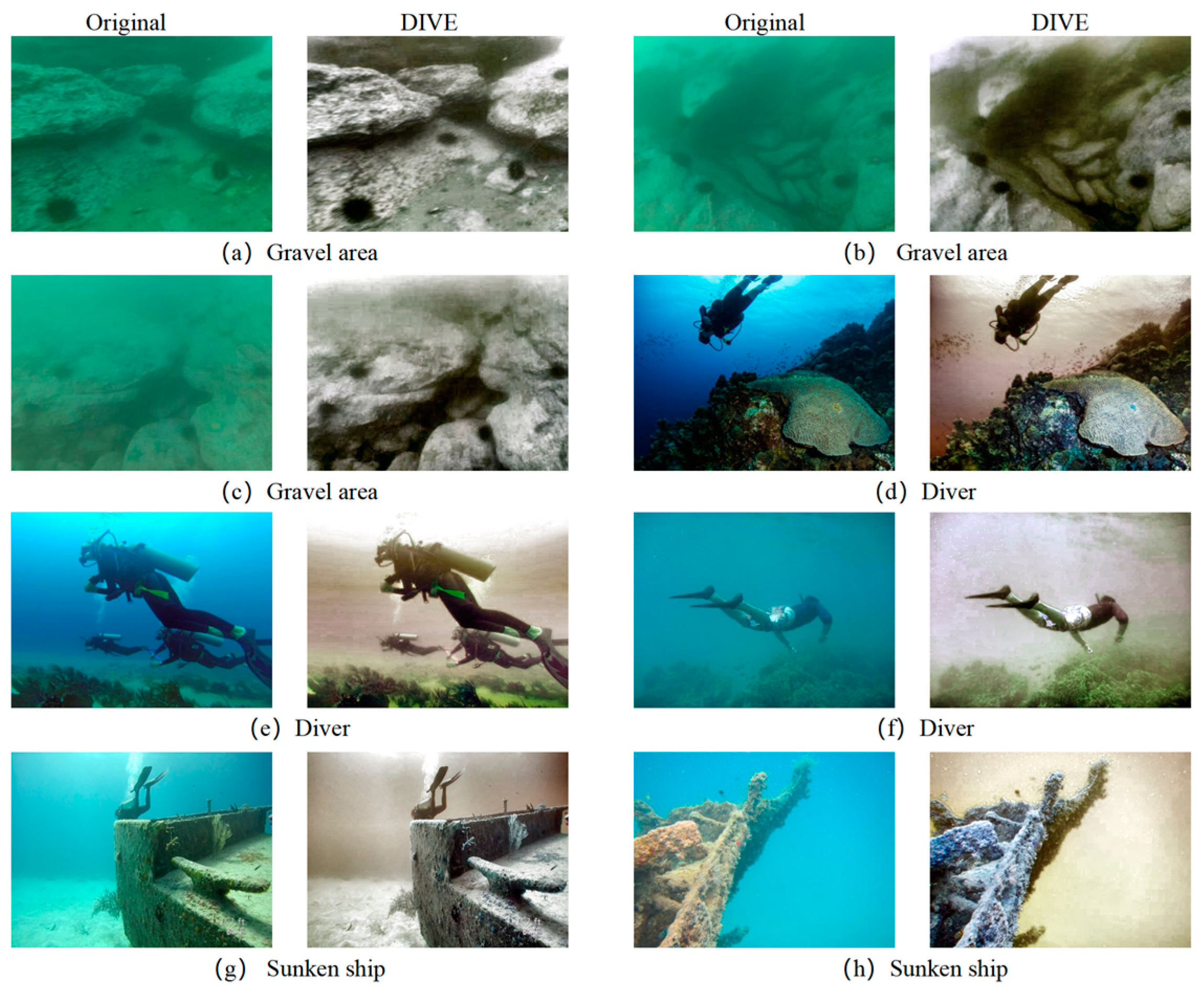

3.2. Subjective Evaluation of Image Processing Effect

In this section, the photos collected under different underwater lighting conditions, sediment concentrations, and shooting distances are processed by the DIVE algorithm, and its effectiveness is verified through subjective evaluation and objective evaluation indices. Figure 5 and Figure 6 show the images collected under different natural illumination conditions, shooting distances, and auxiliary illumination methods at a sediment concentration of 500 g/m³, together with the DIVE processing results.

Even in water with a high sediment concentration (500 g/m³), the DIVE algorithm better balances the bright and dark areas of the underwater image, corrects the color cast caused by scattering from suspended particles, and improves image details and tonal gradation.

With or without natural light, the DIVE algorithm improves image quality most noticeably under white light and green light auxiliary illumination. In a natural light environment with a sediment concentration of 500 g/m³, DIVE increases the effective shooting distance by approximately 20 cm under white light auxiliary lighting and by approximately 10 cm under blue light and natural light. Without natural light, DIVE increases the shooting distance by about 10 cm under white and green auxiliary lighting and by about 5 cm under red and blue auxiliary lighting.

3.3. Objective Evaluation of Image Processing Effect

Because the boundary regions of images captured under auxiliary illumination lack information severely (they are nearly black), the central region of each image (excluding a margin of 0.2 times the original image width from the top, bottom, left, and right boundaries) is cropped uniformly for quantitative evaluation.

3.3.1. Objective Evaluation Indicators

Image information entropy (E) and the Underwater Color Image Quality Evaluation metric (UCIQE) [25] were adopted as objective evaluation indicators. Based on the qualitative evaluation results, the impact of sediment concentration on image quality was most pronounced at a shooting distance of 20 cm. Therefore, for the 10 images captured under each sediment concentration condition at this distance, the mean and standard deviation (SD) of the objective evaluation indicators were calculated. Furthermore, the gain ratios of the indicators in the central region of the images processed by the DIVE algorithm were analyzed. The results are presented in Table 1.
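A minimal sketch of the evaluation bookkeeping, assuming 8-bit channels, E as the Shannon entropy in bits of the 256-bin gray-level histogram (computed per channel for E (R), E (G), E (B)), the gain ratio as processed value divided by original value, and a central crop that removes a margin of 0.2 × image width from every side, taking the cropping description in Section 3.3 literally. Function names are hypothetical, and UCIQE is not reproduced here.

```python
import numpy as np


def center_crop(img: np.ndarray, margin: float = 0.2) -> np.ndarray:
    """Remove a border of margin * width from each side (top, bottom, left, right)."""
    h, w = img.shape[:2]
    m = int(round(margin * w))
    return img[m:h - m, m:w - m]


def entropy(channel: np.ndarray) -> float:
    """Shannon entropy in bits of an 8-bit channel's 256-bin histogram."""
    hist = np.bincount(np.asarray(channel, dtype=np.uint8).ravel(), minlength=256)
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())


def gain_ratio(processed: float, original: float) -> float:
    """Values above 1 mean the indicator increased after processing."""
    return processed / original
```

For example, `gain_ratio(entropy(center_crop(out)[..., 0]), entropy(center_crop(raw)[..., 0]))` would give the E (R) gain for one image pair. Under this literal reading, a 1920 × 1080 frame keeps a 312 × 1152 central region.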

The results show that in environments with high sediment concentrations, the algorithm demonstrates more significant improvements across all indicators. Under natural light conditions, the algorithm generally exhibits superior image quality enhancement compared to environments without natural light.

In environments without natural light, the gain ratios for all indicators approach 1 under sediment-free conditions, indicating that the processed images are similar to the originals, with limited improvement. As sediment concentration increases, the gain ratios for each indicator gradually rise, indicating that the algorithm is more effective in high-sediment environments. Notably, for the E (R), E (G), E, and UCIQE indicators, the gain ratios exceed 1.2 when sediment concentration reaches 400 g/m³ and 500 g/m³, indicating that the algorithm significantly enhances these indicators.

Under natural light conditions, except for a slight improvement in E (B) under sediment-free conditions, the gain ratios for the remaining indicators are close to or slightly below 1, suggesting that the processed images are comparable to or slightly worse than the originals. As sediment concentration increases, the gain ratios for all indicators generally rise, with larger increases than in environments without natural light. The gain ratios for E (R) and E exceed 1.25 when sediment concentration is ≥300 g/m³, indicating that the algorithm significantly improves image quality under these conditions. The UCIQE indicator also increases with sediment concentration, though its overall improvement is slightly lower than that of E (R) and E. The E (G) and E (B) indicators show a similar upward trend, but with relatively smaller improvements.

3.3.2. Number of Key Points

Under each sediment concentration condition, the number of SIFT keypoints extracted from 10 images captured at a shooting distance of 20 cm was statistically analyzed to evaluate the effect of each processing stage on image features. The mean and standard deviation of the keypoint count in the central region of the images at each processing stage are presented in Table 2.

Analysis of Table 2 yields the following findings:

(1) In environments without natural light and under sediment-free conditions, the number of keypoints significantly increased from the original images to those after detail enhancement, indicating that the processing pipeline effectively enhanced image features. As sediment concentration increased, the number of keypoints in the original images dropped sharply, but after processing (especially during the detail enhancement stage), the keypoint count still rebounded significantly, demonstrating that the algorithm could effectively extract image features even in high-sediment environments. The detail enhancement step had the most pronounced effect on increasing the keypoint count, highlighting its importance in environments without natural light.

(2) In natural light environments and under sediment-free conditions, the number of keypoints gradually increased throughout the processing stages, though the increase was relatively small, suggesting that the processing provided some assistance in feature extraction but was less effective than in environments without natural light. As sediment concentration rose, the number of keypoints in the original images also decreased, but after processing, it remained higher than in the original images, indicating that the algorithm could still improve feature extraction capabilities in natural light environments. Detail enhancement remained the step with the most significant improvement, though the overall increase was lower than in environments without natural light.

(3) The gain ratio in the number of keypoints was generally lower in natural light environments compared to those without natural light, as the original images in natural light already had a higher number of keypoints. As sediment concentration increased, the gain ratio still showed an upward trend, but the increase was relatively small.

(4) Each processing step increased the number of keypoints, although color correction, which involved only minor adjustments to the red and green channels, had a relatively small effect.

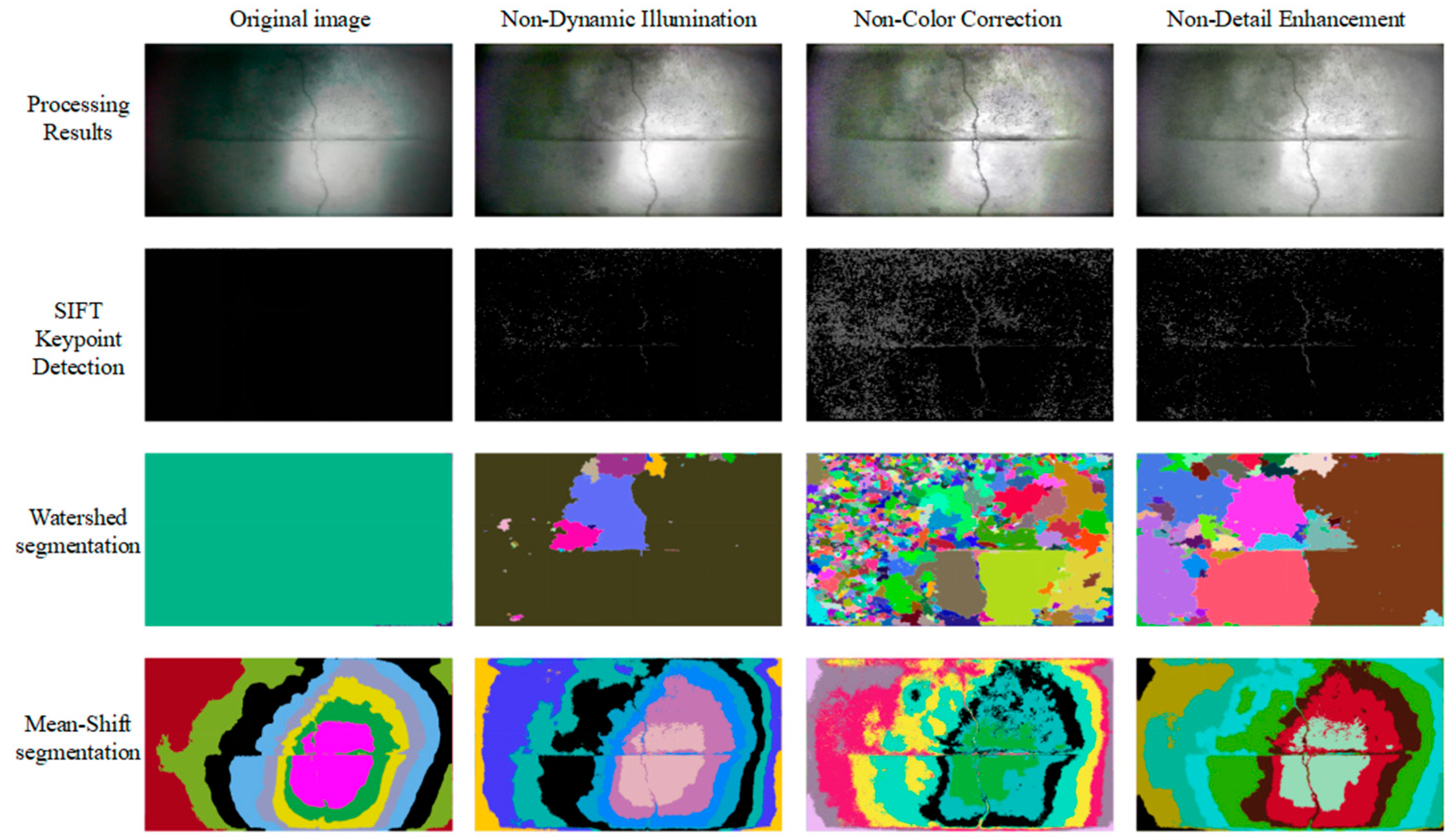

3.4. Modular Ablation Analysis and Feature Extraction Verification

SIFT keypoint detection and two basic image segmentation methods, watershed segmentation and mean-shift segmentation, are used to extract image features after each processing step. The results are shown in Figure 7. Each step is then removed in turn, feature extraction is performed on the resulting output, and the results are shown in Figure 8.

As Figure 7 shows, the dynamic illumination module largely brings out the key crack features in the image: the keypoints and segmentation boundaries reflect the key information well, and some pockmarks (small pits) on the concrete surface also appear. The color correction part of the visual enhancement module only adds some detailed features near the image edges; its main function is to balance the color distribution, and it has no obvious effect on enhancing feature information in the image. The detail enhancement part of the visual enhancement module further enhances image details on top of the preceding steps, mainly increasing the information content of small holes, pockmarks, and other features on the concrete surface.

Figure 8 shows that, after the dynamic illumination module is removed, crack features are difficult to extract through color correction and detail enhancement, because both the illumination and the cracks exhibit smooth transitions. After the color correction part of the visual enhancement module is removed, the results are essentially the same as before removal. After the detail enhancement part is removed, small holes and pockmarks on the concrete surface are difficult to extract.

In addition, considerable noise appears in overly dark areas in Figure 7 and Figure 8, mainly caused by the local detail enhancement operation, which is adopted to highlight key features such as cracks. However, the dark areas of the original image contain almost no valid information. During enhancement, the algorithm tries to extract “details” from these nearly information-free areas, producing noise (such as sand-like speckles) that may be mistakenly identified as keypoints.

Comprehensive analysis shows that each processing module of the DIVE algorithm plays an irreplaceable key role in the process of image enhancement:

The dynamic illumination module is the basis for feature extraction: by eliminating the influence of non-uniform underwater lighting, it makes key structural features such as cracks stand out from the background, providing clear initial images for subsequent processing. Although the direct effect of the color correction module on feature enhancement is limited, it significantly improves the recognizability of details near the image edges by balancing the color distribution and provides more accurate color information for subsequent analysis. As the final optimization step, the detail enhancement module specifically strengthens small defects (such as holes and pockmarks) on the concrete surface, greatly increasing the information content of the image and the detection accuracy.

Without the dynamic illumination module, the overall image features become blurred, while the local optimization of the visual enhancement module further compensates for the shortcomings of lighting correction alone. This stepwise architecture (global lighting correction → color balance → local detail enhancement) ensures the robustness of the algorithm in different underwater environments and provides complete technical support for the accurate detection of surface defects on concrete structures.

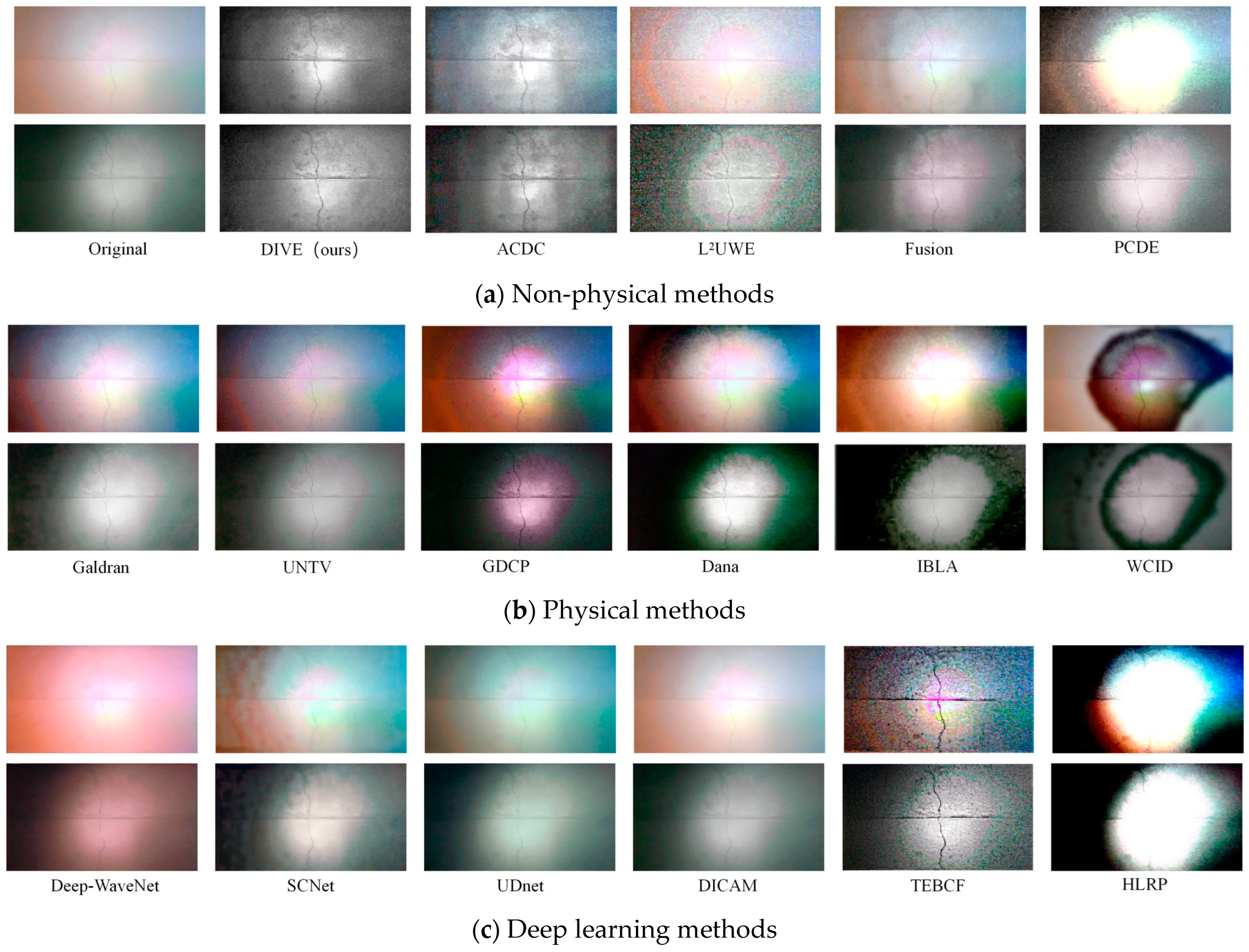

3.5. Comparison of Underwater Image Enhancement Algorithms

Following the classification of underwater image enhancement methods, non-physical methods, physical methods, and deep learning methods were employed to process two images, one captured with natural light and white light auxiliary illumination and the other without natural light under blue light auxiliary illumination, both at a sediment concentration of 400 g/m³ and a shooting distance of 10 cm. The results are shown in Figure 9.

For non-physical methods, four approaches were compared: ACDC [15], L2UWE [26], Fusion [27], and PCDE [17]. For physical methods, six approaches were evaluated: Galdran [28], UNTV [29], GDCP [16], Dana [30], IBLA [31], and WCID [32]. For deep learning methods, six models were compared: Deep-WaveNet [21], SCNet [33], UDnet [22], DICAM [34], TEBCF [35], and HLRP [36].

Among non-physical methods, the image processed by DIVE (ours) showed notable improvements in detail and clarity, with natural color restoration that effectively reduced blur and color cast. ACDC improved overall image brightness but left some details blurry, with mediocre color restoration. L2UWE performed well in enhancing brightness and contrast but suffered from over-enhancement, leading to color distortion in some areas and introducing significant noise. Fusion integrated multiple types of information and achieved good detail and color restoration, but it introduced some noise, which reduced contrast and detail. PCDE exhibited abnormal contrast adjustment; it effectively highlighted objects in the image, but its color restoration needs improvement.

Among physical methods, Galdran-processed images displayed vibrant colors and improved contrast but performed poorly in detail restoration. UNTV effectively restored image details with natural colors but suffered from local overexposure or underexposure under uneven lighting conditions. GDCP, Dana, IBLA, and WCID all showed some effectiveness in color restoration and detail enhancement but exhibited noticeable abnormalities in brightness processing, with dark region information almost entirely lost.

Most complex deep learning algorithms were trained and optimized using images from shallow marine waters, and this study directly applied their pre-trained models for processing. Consequently, these models performed poorly on dark, turbid water images. Deep-WaveNet exhibited obvious color distortion due to its over-enhancement of the red channel, as marine waters typically appear light blue or green, and it failed to address uneven illumination. SCNet predicted and compensated for dark regions in the image but introduced multiple abnormal halos. UDnet demonstrated abnormal color correction and had minimal effect on detail enhancement or uneven illumination. DICAM showed no significant changes. TEBCF’s processing results closely resembled those of L2UWE in non-physical methods, with excessive detail enhancement introducing substantial noise. HLRP, incorporating reflection priors (i.e., physical information) into its model, produced results similar to those of GDCP, Dana, IBLA, and WCID in physical methods, with abnormal illumination estimation exacerbating the imbalance between dark and bright regions after processing.

5. Discussion

Through its illumination–scattering decoupling framework, the DIVE algorithm introduces innovations in physical mechanism, algorithm architecture, and engineering implementation, effectively resolving the three contradictions of existing underwater image enhancement methods. It offers significant advantages when processing surface defect images of underwater concrete structures.

(1) Concrete surface images under different shooting distances, sediment concentrations, and lighting conditions were collected through laboratory experiments to simulate the concrete surface images of reservoir dams with high sediment content and great water depth.

(2) The decoupled design of the DIVE dynamic illumination module (for brightness correction) and the visual enhancement module (for color/detail restoration) overcomes the limitations of traditional methods, such as halo artifacts in Retinex and dark channel failure in DCP.

(3) Through downsampling, separable convolution, and parallel computing, processing at about 25 FPS is achieved on embedded devices, meeting the real-time inspection needs of underwater robots.

(4) Verification with images from laboratory tests, engineering sites (sluice sedimentation tanks), and public datasets (RUIE, UFO-120) covering different underwater environments, using various evaluation indices, shows that the DIVE algorithm can balance the bright and dark areas of underwater images, correct color cast, and improve image details and tonal gradation, performing well in qualitative evaluation, quantitative evaluation (objective evaluation indicators and keypoint counts), and feature extraction verification. The processing results under different working conditions verify the robustness of the algorithm, especially for detecting cracks, holes, and other defects in concrete.

The algorithm provides an efficient and reliable image enhancement tool for intelligent inspection of underwater structures and can be extended to marine engineering, dam monitoring, and other fields.