Adaptive Hybrid PSO–APF Algorithm for Advanced Path Planning in Next-Generation Autonomous Robots

Abstract

1. Introduction

- We developed a new adaptive hybrid PSO-APF algorithm in which PSO dynamically tunes the APF parameters (the repulsive scaling factor and the distance of influence), favoring global optimization while also providing rapid tuning at the local scope.

- In experiments with dynamically changing scenarios involving moving obstacles, the proposed algorithm outperforms two standalone benchmarks (PSO and APF) and one hybrid benchmark, producing significantly shorter paths (up to 18%) and achieving an 85% success rate.

- We performed a thorough parameter sensitivity and optimization analysis, which systematically explores how basic parameter choices affect performance and offers practical advice on which parameters to tune.

- The proposed adaptive hybrid PSO-APF algorithm is robust and flexible across contexts: it uses real-time replanning to handle dynamic or moving obstacles that degrade the current path, as well as moving or changing goals, which supports its reliability in real-world deployments.

2. Related Work

2.1. Global Path Planning

2.2. Local Path Planning

2.3. Hybrid Navigation

2.4. Multidisciplinary Navigation Insights

2.5. Reinforcement Learning–PSO Hybrid Approaches

3. Background and Motivation

3.1. Mechanics of PSO and APF Integration

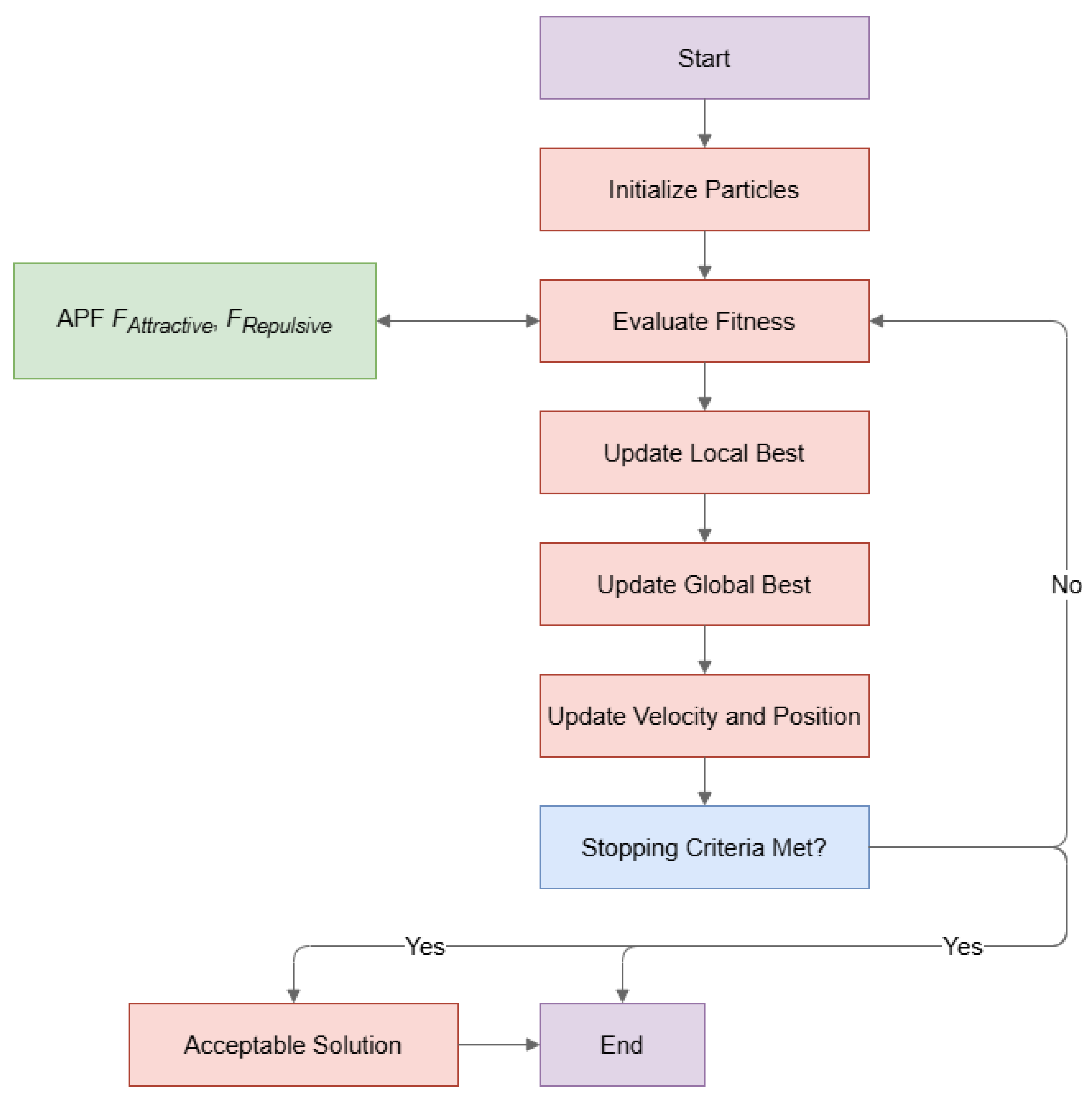

- PSO initializes a population of particles (potential paths) in the search space, evaluating their fitness based on a combined cost function that accounts for path length, smoothness, and goal proximity.

- APF calculates repulsive forces from obstacles and attractive forces toward the goal in real time. These forces provide local adjustments to particle trajectories, ensuring collision-free navigation.

- At each PSO iteration, the APF module provides feedback by modifying the velocity vector of each particle to account for dynamic obstacles. The refined trajectory is then evaluated by the PSO fitness function, integrating local obstacle avoidance into the global optimization process.

- The search space is dynamically adjusted by APF forces, effectively steering particles away from infeasible regions while maintaining focus on the global objective.

- Weaknesses addressed:

- While PSO is powerful for global optimization, it can be slow due to its iterative nature. In the hybrid approach, PSO is used for initial path planning, which is completed in advance. This enables the faster APF component to handle modifications in real-time, ensuring rapid responses during execution.

- APF can get stuck at a local minimum, preventing it from finding the target. The hybrid approach avoids this by using PSO to generate a globally optimized path, giving the APF a solid starting point and reducing the risk of getting stuck.

- APF often struggles to produce smooth motion near obstacles, leading to oscillations. The hybrid approach smooths these transitions, as the globally optimized path from PSO reduces the sudden adjustments required by APF.

- Enhanced strengths:

- PSO excels at finding efficient paths in congested environments. This global optimization ensures that the path is not only feasible but also efficient in terms of distance and energy usage.

- APF is fast and lightweight, making it ideal for responding to sudden changes, such as moving obstacles. In the hybrid approach, APF fine-tunes the path in real time without the need for the more computationally expensive PSO restart.

- Benefits: The hybrid APF-PSO method strikes a balance between optimization and adaptability. As shown in Table 3:

- It combines the efficient pathfinding of PSO with the real-time adjustment ability of APF, ensuring successful navigation even in dynamic environments.

- Smoother trajectories: By combining global planning with local adjustments, the resulting trajectories have fewer abrupt turns and appear more natural.

- Although it requires more computation than APF alone, the hybrid method remains efficient compared to standalone PSO or other hybrid methods.

- The hybrid method effectively adapts to changing environments, optimizes the search space for efficiency, and overcomes common challenges such as local minima. This synergy makes it a powerful and reliable choice for real-world robotics applications.
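The APF feedback step described at the start of this subsection, where attractive and repulsive forces modify each particle's velocity, can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the classical Khatib-style potential applied to a single 2D waypoint, with hypothetical gains `k_att` and `k_rep`, an influence distance `rho0`, and a blending factor `alpha` that adds the force to the PSO velocity.

```python
import math

def apf_force(pos, goal, obstacles, k_att=1.0, k_rep=100.0, rho0=2.0):
    """Total APF force (fx, fy) acting at pos (parameter values are hypothetical).

    Attraction: k_att * (goal - pos).
    Repulsion (classical Khatib form), active only within the influence distance rho0:
    magnitude k_rep * (1/rho - 1/rho0) / rho**2, directed away from each obstacle.
    """
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        rho = math.hypot(dx, dy)
        if 0.0 < rho < rho0:
            mag = k_rep * (1.0 / rho - 1.0 / rho0) / rho**2
            fx += mag * dx / rho
            fy += mag * dy / rho
    return fx, fy

def apf_adjust_velocity(pos, vel, goal, obstacles, alpha=0.1, **apf_params):
    """APF feedback on a PSO particle (assumed blending rule): v' = v + alpha * F(pos)."""
    fx, fy = apf_force(pos, goal, obstacles, **apf_params)
    return vel[0] + alpha * fx, vel[1] + alpha * fy
```

For example, `apf_force((0.0, 0.0), (10.0, 0.0), [(1.0, 0.0)])` returns `(-40.0, 0.0)`: the obstacle one unit ahead lies inside `rho0 = 2`, and its repulsion outweighs the pull toward the goal.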

3.2. Reinforcement Learning and Adaptive Control: Key Differences

3.3. Adaptive Parameter Optimization

- Parameter tuning: PSO dynamically adjusts the APF repulsive scaling factor and influence distance, together with the inertia weight (w) and the acceleration constants (c1, c2), during each iteration. In contrast, PSO-GA or BFO/PSO usually depend on fixed static values or offline tuning methods.

- Escaping local minima: While genetic algorithms rely on mutation and BFO on chemotaxis to avoid local minima, our approach combines the repulsive forces of APF with PSO’s global exploration, resulting in more effective trap avoidance.

- Real-time tuning: The proposed PSO-APF continuously updates APF forces during operation, whereas BFO/PSO/PSO-GA hybrids are usually designed for offline optimization and then operate in a fixed configuration.

- Two-way feedback loop: In PSO-APF, a two-way feedback loop exists: PSO sends updated parameters to APF for trajectory planning, and APF guides PSO particles via its force field evaluation. This interaction is absent in traditional hybrid methods.

4. The Proposed System

Algorithm 1 Hybrid PSO-APF Pseudocode

4.1. Initialization

- Generate a population of N particles, each representing a potential solution (path) for the robot.

- Each particle i is initialized with a position vector $X_i$ and a velocity vector $V_i$, assigned random values within a predefined range of the search space while taking obstacles into account. These vectors can be represented as follows:

- Position initialization: $X_i = (x_{i1}, x_{i2}, \ldots, x_{id})$, where d is the dimensionality of the problem space.

- Velocity initialization: $V_i = (v_{i1}, v_{i2}, \ldots, v_{id})$.

- Each particle i is represented by its position and velocity in the solution space.
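The initialization step can be sketched as below. It is an illustration under assumptions: a path with k intermediate 2D waypoints is flattened into a position vector of dimension d = 2k, the same (low, high) range is used for every dimension, and "taking obstacles into account" is implemented by rejection sampling through a hypothetical `is_feasible` callback.

```python
import random

def init_swarm(n_particles, dim, pos_bounds, vel_bounds, is_feasible=None, seed=None):
    """Randomly initialize N particles (positions X_i and velocities V_i) in d dimensions.

    pos_bounds / vel_bounds are (low, high) ranges applied to every dimension.
    is_feasible(x) is a hypothetical obstacle check; infeasible samples are redrawn.
    """
    rng = random.Random(seed)
    lo, hi = pos_bounds
    vlo, vhi = vel_bounds
    positions, velocities = [], []
    attempts = 0
    while len(positions) < n_particles:
        attempts += 1
        if attempts > 1000 * n_particles:
            raise RuntimeError("could not sample enough collision-free positions")
        x = [rng.uniform(lo, hi) for _ in range(dim)]
        if is_feasible is not None and not is_feasible(x):
            continue  # position falls inside an obstacle: resample it
        positions.append(x)
        velocities.append([rng.uniform(vlo, vhi) for _ in range(dim)])
    return positions, velocities
```

For example, `init_swarm(30, 2 * 5, (0.0, 20.0), (-1.0, 1.0), seed=42)` would create 30 particles, each encoding five intermediate waypoints.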

4.2. Fitness Evaluation

- $f(X_i) = \sum_{j=1}^{n-1} d\left(q_{i,j}, q_{i,j+1}\right)$, where n is the number of waypoints in the path, d is the Euclidean distance function, and $q_{i,j}$ represents the j-th waypoint in the path for particle i.

- Update the fitness value for each particle based on the length of the path found.
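The path-length fitness can be computed as in the following sketch. It assumes the particle's position vector holds the intermediate waypoints flattened as (x1, y1, ..., xk, yk), with fixed start and goal points added at the ends; the function names are hypothetical, and only the length term of Section 4.2 is modeled (no smoothness or obstacle penalties).

```python
import math

def path_length(waypoints):
    """Sum of Euclidean distances d(q_j, q_{j+1}) between consecutive waypoints."""
    return sum(math.dist(a, b) for a, b in zip(waypoints, waypoints[1:]))

def fitness(position, start, goal):
    """Fitness of one particle (lower is better).

    Assumes the position vector is flattened as (x1, y1, ..., xk, yk) intermediate
    waypoints, so the evaluated path is start -> waypoints -> goal.
    """
    inner = [tuple(position[j:j + 2]) for j in range(0, len(position), 2)]
    return path_length([start, *inner, goal])
```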

4.3. Local Best Solution

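- For each particle, we identify the best solution encountered so far (best local solution) by comparing its fitness value with those of its neighboring particles. For a minimization problem, this can be expressed mathematically as:

$$P_i^{t+1} = \begin{cases} X_i^{t+1}, & \text{if } f(X_i^{t+1}) < f(P_i^t) \\ P_i^t, & \text{otherwise} \end{cases}$$

where $P_i$ is the best position found so far by particle i.

- Update $P_i$ if $f(X_i)$ is better than the fitness value associated with $P_i$ or its neighbors.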

4.4. Global Best Solution

- The swarm collectively tracks the global best-known position $G$, representing the position with the best fitness value among all particles (global best solution). Mathematically, this is updated as:

$$G^{t+1} = \arg\min_{P_i^{t+1},\ i = 1, \ldots, N} f\left(P_i^{t+1}\right)$$

- Update $G$ if $f(P_i)$ is better than the fitness value associated with $G$.
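The local (personal) best and global best updates of Sections 4.3 and 4.4 can be combined into one pass over the swarm, as sketched below. This sketch assumes a global-best topology; a neighborhood (ring) variant would compare each particle only against its neighbors. The function and argument names are hypothetical.

```python
def update_bests(positions, fitnesses, pbest, pbest_fit, gbest, gbest_fit):
    """Update personal bests P_i in place and return the new global best (G, f(G)).

    Minimization: a lower fitness value is better. Because f(G) <= f(P_i) always
    holds, G can only change when some P_i improves.
    """
    for i, (x, f) in enumerate(zip(positions, fitnesses)):
        if f < pbest_fit[i]:          # f(X_i) better than f(P_i): replace P_i
            pbest[i], pbest_fit[i] = list(x), f
            if f < gbest_fit:         # f(P_i) better than f(G): replace G
                gbest, gbest_fit = list(x), f
    return gbest, gbest_fit
```

On the first iteration, `pbest_fit` and `gbest_fit` can be initialized to `float("inf")` so that every particle's initial position becomes its personal best.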

4.5. Velocity and Position Update

- For each particle, calculate the new velocity from its current velocity, its best local solution, and the global best solution, which balances exploration and exploitation. Mathematically, it can be expressed as:

$$V_i^{t+1} = w V_i^t + c_1 r_1 \left(P_i^t - X_i^t\right) + c_2 r_2 \left(G^t - X_i^t\right)$$

where w is the inertia weight, $c_1$ and $c_2$ are acceleration coefficients, $r_1$ and $r_2$ are random numbers between 0 and 1, $P_i^t$ is the best position of particle i found so far, and $G^t$ is the best position found by any particle in the swarm at iteration t.

- Limit the particle’s velocity to prevent excessive movements.

- Update the position of each particle i by adding its velocity to its current position, using the equation:

$$X_i^{t+1} = X_i^t + V_i^{t+1}$$

where $X_i^t$ is the current position of particle i at iteration t, and $V_i^{t+1}$ is the updated velocity of particle i computed from the velocity equation.
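The velocity update, velocity limit, and position update for one particle can be sketched as follows. The default values of `w`, `c1`, `c2`, and `v_max` are hypothetical placeholders, not the tuned values reported in Section 4.7, and limiting the velocity by clamping each component to [-v_max, v_max] is an assumption about how "limit the particle's velocity" is implemented.

```python
import random

def pso_step(x, v, pbest, gbest, w=0.7, c1=1.5, c2=1.5, v_max=1.0, rng=random):
    """One velocity and position update for a single particle.

    V^{t+1} = w V^t + c1 r1 (P_i - X^t) + c2 r2 (G - X^t), clamped to [-v_max, v_max],
    then X^{t+1} = X^t + V^{t+1}. r1 and r2 are drawn independently per dimension.
    """
    new_x, new_v = [], []
    for xd, vd, pd, gd in zip(x, v, pbest, gbest):
        r1, r2 = rng.random(), rng.random()
        vd = w * vd + c1 * r1 * (pd - xd) + c2 * r2 * (gd - xd)
        vd = max(-v_max, min(v_max, vd))  # velocity limit prevents excessive moves
        new_v.append(vd)
        new_x.append(xd + vd)
    return new_x, new_v
```

Passing a seeded `random.Random` instance as `rng` makes runs reproducible.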

4.6. Stopping Criteria

- We repeat steps 2 to 5 until a stopping criterion is met:

- A maximum number of iterations is reached.

- The algorithm converges with a solution within a predefined threshold (Acceptable Solution).

- Stagnation in the search process.

- We select the particle that produced the best solution as the final solution, representing the shortest path found by the algorithm. This algorithm iteratively refines the solutions by adjusting the velocities and positions of particles based on their own experiences (local best) and the collective knowledge of the swarm (global best). By combining PSO’s exploration capabilities with APF’s obstacle avoidance, the algorithm efficiently navigates the solution space to find an optimal path for the autonomous mobile robot in a complex environment.
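The three stopping criteria listed above can be checked with a small helper, sketched below under assumptions. `history` (the global best fitness after each iteration), `target`, `patience`, `tol`, and the default `max_iter` are hypothetical names and values. Stagnation is defined here as an improvement smaller than `tol` over the last `patience` iterations.

```python
def should_stop(history, iteration, max_iter=200, target=None, patience=20, tol=1e-6):
    """Check the stopping criteria: max iterations, acceptable solution, stagnation.

    history holds the global best fitness after each iteration (lower is better).
    Returns (stop, reason).
    """
    if iteration >= max_iter:
        return True, "max_iterations"
    if target is not None and history and history[-1] <= target:
        return True, "acceptable_solution"
    # Stagnation: less than `tol` improvement over the last `patience` iterations.
    if len(history) > patience and history[-patience - 1] - history[-1] < tol:
        return True, "stagnation"
    return False, ""
```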

4.7. The Effect of Different Parameters on Path Planning Performance

- A higher w promotes exploration, leading to better global search but slower convergence. Conversely, a lower w enhances exploitation, resulting in faster convergence but a higher risk of local minima. We tested values ranging from to and found an optimal value of , balancing path quality and computational efficiency.

- For the acceleration factors c1 and c2, a higher c1 improved responsiveness to obstacles, while a higher c2 yielded smoother paths by promoting global collaboration among particles. Optimal values were and .

4.8. Dynamic Environments

- Obstacles may change position or move unpredictably during the robot’s navigation. The algorithm accounts for this by continuously recalculating the APF based on updated obstacle positions and adjusting the PSO particles’ paths accordingly.

- The robot may need to accelerate or decelerate based on terrain or mission requirements. The algorithm dynamically adapts the step size in the velocity updates of PSO particles to maintain smooth and efficient navigation.

- In certain applications, the goal location may also move (e.g., tracking a moving target). The APF’s attractive force component is recalculated in real-time to guide the robot toward the updated goal.

- Environmental changes, such as the sudden appearance or disappearance of obstacles, are handled by dynamically updating the APF and recalculating the fitness of PSO particles.

- The speed of the robot: the speed at which the robot moves once it discovers a better position closer to the goal.

- The speed of the obstacle: the speed at which the obstacle moves, which matters because it affects the robot’s path. When the robot and the obstacle move at different speeds, the robot may be forced to deviate from its original path, or the obstacle may collide with the robot and push it off course while it is moving. When obstacles move frequently or unpredictably, the parameters of APF’s repulsive force may need dynamic adjustment to emphasize collision avoidance.

- Invert direction: the number of runs before the obstacle reverses its direction. A reversal can force the robot to recalculate and find a different path to the goal from the one it initially found.
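The obstacle-speed and invert-direction parameters can be modeled with a small class such as the following. It is an illustrative sketch: it treats the "number of runs" before a reversal as a number of simulation steps, which is an assumption, and the class and field names are hypothetical. The robot's own speed is not modeled here.

```python
from dataclasses import dataclass

@dataclass
class MovingObstacle:
    """Obstacle with constant speed that reverses after a fixed number of steps.

    (vx, vy) encodes the obstacle speed and heading; invert_every is the number of
    simulation steps between direction reversals (0 disables reversal).
    """
    x: float
    y: float
    vx: float
    vy: float
    invert_every: int
    steps: int = 0

    def step(self, dt: float = 1.0) -> None:
        self.x += self.vx * dt
        self.y += self.vy * dt
        self.steps += 1
        if self.invert_every > 0 and self.steps % self.invert_every == 0:
            self.vx, self.vy = -self.vx, -self.vy  # "invert direction"
```

After each `step()`, the APF repulsive terms would be recomputed from the obstacle's new `(x, y)`, which is what triggers the replanning described in this section.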

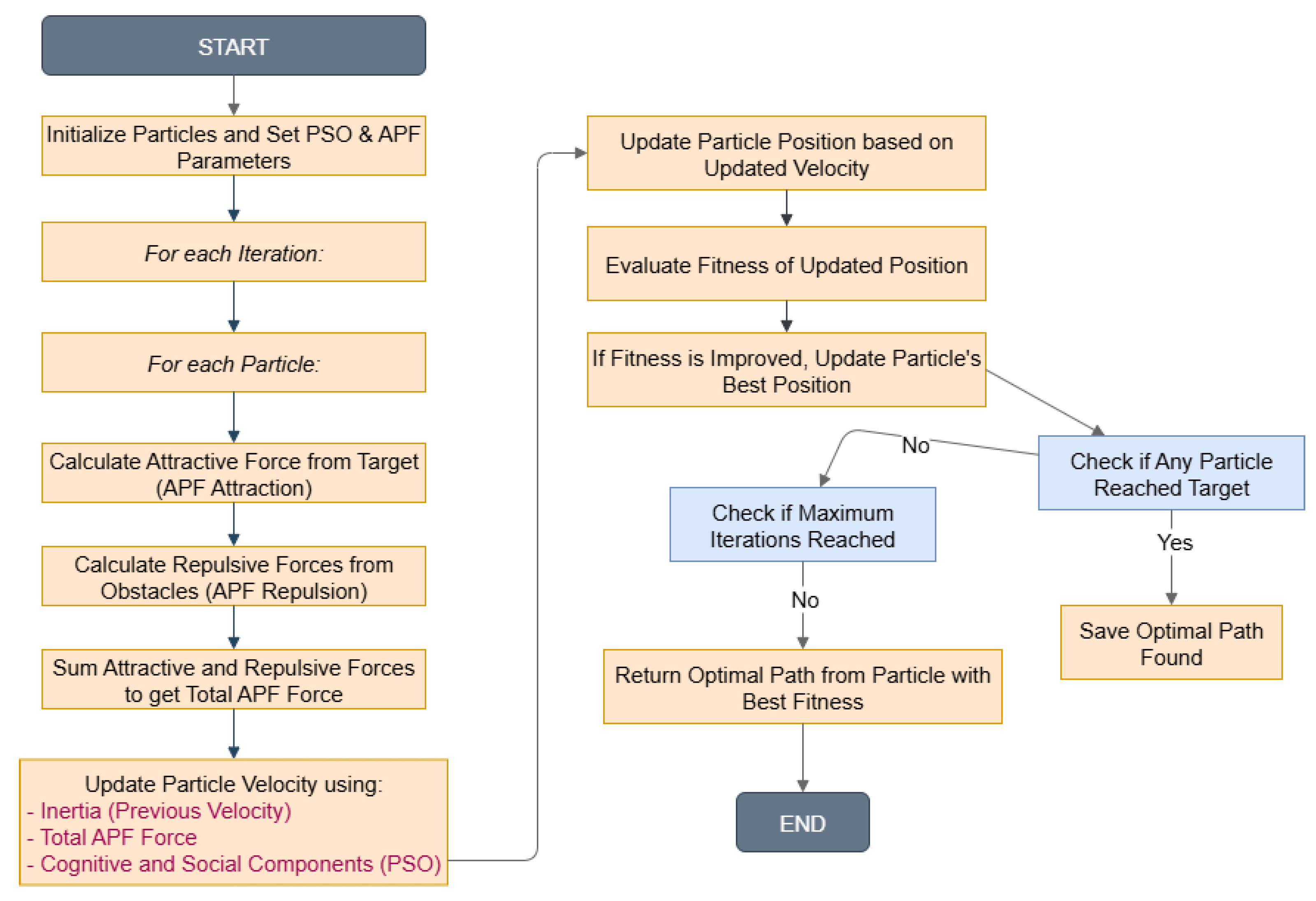

4.8.1. Mechanism of Adaptivity

- Initialization: First, a group of PSO particles is generated, each representing a candidate set of parameters (the APF repulsive scaling factor and influence distance, w, c1, and c2). These parameters control the magnitude and arrangement of the attractive and repulsive forces that shape the robot’s trajectory.

- APF field calculation: Using each particle’s parameters, the APF creates attractive forces that guide the robot toward the goal and repulsive forces that push it away from obstacles. This yields an initial path for the robot to follow.

- Cost evaluation: The trajectories are assessed using a fitness function that considers goal proximity, obstacle clearance, and path smoothness. This assessment provides feedback on the effectiveness of the selected parameters.

- PSO update: Using the fitness values, PSO updates the particles’ positions and velocities. Each particle searches for new parameter settings, guided by its own best performance (personal best) and the best performance found by the entire swarm (global best).

- Best parameter selection: The global best solution is selected from the swarm as the most effective set of APF parameters. This parameter set is then used to refine the navigation forces.

- Adaptive recalculation: With the new parameters, APF recalculates the force fields and adjusts the robot’s trajectory in real time. This introduces adaptability, as the parameters are continuously fine-tuned during navigation.

- Local minima handling: The algorithm checks whether the robot is stuck in a local minimum. If it detects a trap, PSO explores different parameter sets to reshape the force field and free the robot. Otherwise, navigation continues normally.

- Continuous navigation and goal achievement: The process repeats until the robot reaches the target. This closed-loop adjustment is designed to provide reliable navigation in dynamic and unpredictable settings.
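The adaptive mechanism above can be illustrated with a compact sketch in which each PSO particle is a candidate APF parameter vector and its fitness comes from simulating the resulting force field. This is not the authors' implementation. For brevity, a particle encodes only the repulsive scaling factor and the influence distance (`k_rep`, `rho0`), and the attractive gain is fixed at 1. The cost weights, collision radius `r_hit`, step size, PSO coefficients, and parameter bounds are all hypothetical. In a closed loop, `tune_apf` would be re-run from the robot's current position whenever the rollout stalls (zero net force), mirroring the local-minima handling step.

```python
import math
import random

def rollout(params, start, goal, obstacles, steps=300, step_size=0.1, r_hit=0.2):
    """Follow the APF force field from start using params = (k_rep, rho0).

    Returns a cost (lower is better): weighted remaining goal distance, travelled
    length, and a penalty for every step spent within r_hit of an obstacle.
    """
    k_rep, rho0 = params
    x, y = start
    length, hits = 0.0, 0
    for _ in range(steps):
        fx, fy = goal[0] - x, goal[1] - y  # attraction with k_att = 1 (assumed)
        for ox, oy in obstacles:
            dx, dy = x - ox, y - oy
            rho = math.hypot(dx, dy)
            if rho < r_hit:
                hits += 1
            if 0.0 < rho < rho0:
                mag = k_rep * (1.0 / rho - 1.0 / rho0) / rho**2
                fx, fy = fx + mag * dx / rho, fy + mag * dy / rho
        norm = math.hypot(fx, fy)
        if norm < 1e-9:
            break  # zero net force: the robot is trapped in a local minimum
        x, y = x + step_size * fx / norm, y + step_size * fy / norm
        length += step_size
        if math.hypot(goal[0] - x, goal[1] - y) < step_size:
            break  # goal reached
    return 10.0 * math.hypot(goal[0] - x, goal[1] - y) + length + 100.0 * hits

def tune_apf(start, goal, obstacles, n=15, iters=30,
             bounds=((1.0, 50.0), (0.5, 3.0)), seed=0):
    """PSO over APF parameter vectors (k_rep, rho0); returns (best params, cost)."""
    rng = random.Random(seed)
    w, c1, c2 = 0.7, 1.5, 1.5  # hypothetical PSO coefficients
    X = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n)]
    V = [[0.0] * len(bounds) for _ in range(n)]
    P = [x[:] for x in X]
    Pf = [rollout(x, start, goal, obstacles) for x in X]
    best = min(range(n), key=lambda i: Pf[i])
    G, Gf = P[best][:], Pf[best]
    for _ in range(iters):
        for i in range(n):
            for d, (lo, hi) in enumerate(bounds):
                V[i][d] = (w * V[i][d]
                           + c1 * rng.random() * (P[i][d] - X[i][d])
                           + c2 * rng.random() * (G[d] - X[i][d]))
                X[i][d] = min(hi, max(lo, X[i][d] + V[i][d]))  # stay within bounds
            f = rollout(X[i], start, goal, obstacles)  # cost evaluation
            if f < Pf[i]:
                P[i], Pf[i] = X[i][:], f
                if f < Gf:
                    G, Gf = X[i][:], f
    return G, Gf
```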

4.8.2. Convergence Conditions in Dynamic Environments

- Fitness Stability: The algorithm tracks the fitness values of the particles over successive iterations. Convergence is assumed when the global best fitness value stabilizes within a predefined tolerance for a specified number of iterations, even if the environment changes.

- Minimal Distance to Goal: Convergence is achieved when the global best particle reaches a position sufficiently close to the goal, defined by a threshold ($d_{goal}$), while avoiding collisions with obstacles.

- Real-Time Adaptation to Changes: In dynamic environments, obstacles may move or appear/disappear. The algorithm recalculates the fitness function dynamically to reflect these changes. Convergence is maintained if the algorithm finds a feasible path with minimal deviations caused by new obstacle configurations.

- Maximum Iteration: In cases where the environment changes too frequently to allow stabilization, the algorithm halts after a predefined maximum number of iterations (maxI), returning the most feasible path found so far.

4.8.3. Decision Conditions for Replanning in Dynamic Environments

- Proximity to New Obstacles: When a new obstacle emerges within a predefined distance of the robot’s current trajectory, the robot begins replanning to avoid a collision. The closer the obstacle is, or the faster it approaches, the more urgent replanning becomes.

- Significant Path Deviation: If the robot’s existing route strays considerably from the ideal path due to obstacles or other disruptions, it may opt to replan to restore efficiency.

- Target Movement: When the target or goal point shifts, particularly in dynamic assignments, the robot evaluates whether it requires a new route to effectively pursue the moving target.
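The three replanning triggers can be sketched as a single check, assuming the current plan is a polyline of at least two 2D waypoints. The thresholds `d_obs`, `d_dev`, and `d_goal_shift` and the function names are hypothetical. This version uses distances only, so it does not model how quickly an obstacle approaches.

```python
import math

def point_segment_distance(p, a, b):
    """Euclidean distance from point p to the segment [a, b]."""
    px, py = p
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0:
        return math.hypot(px - ax, py - ay)
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len2))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))

def needs_replan(path, robot, obstacles, goal, planned_goal,
                 d_obs=1.0, d_dev=0.5, d_goal_shift=0.5):
    """Return the triggered replanning conditions (an empty list means keep the path).

    path must contain at least two waypoints.
    """
    reasons = []
    segments = list(zip(path, path[1:]))
    # Proximity to new obstacles: any obstacle within d_obs of the planned path.
    if any(point_segment_distance(o, a, b) < d_obs for o in obstacles for a, b in segments):
        reasons.append("obstacle_near_path")
    # Significant path deviation: robot farther than d_dev from the planned path.
    if min(point_segment_distance(robot, a, b) for a, b in segments) > d_dev:
        reasons.append("path_deviation")
    # Target movement: goal has shifted since the path was planned.
    if math.dist(goal, planned_goal) > d_goal_shift:
        reasons.append("target_moved")
    return reasons
```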

4.8.4. Implementation of Dynamic Obstacle Random Generation

- Random Movement Patterns:

- Obstacles are assigned randomized movement trajectories, including linear, circular, and erratic patterns, with variable speeds.

- The trajectories are updated dynamically based on predefined probabilities to simulate unpredictability.

- Obstacle Appearance and Disappearance: Obstacles can randomly appear or disappear within the simulation area, simulating real-world scenarios like vehicles entering or exiting a traffic zone.

- Environment Constraints: The randomization logic respects predefined boundaries and ensures that obstacles do not interfere with goal regions unnecessarily, maintaining feasible simulation scenarios.
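The random obstacle generation can be sketched as below. The pattern names follow the text (linear, circular, erratic). The speed range, turn rate, appearance and disappearance probabilities, and goal clearance radius are hypothetical choices. In this sketch, goal clearance is enforced only when an obstacle spawns, and obstacles that reach the arena boundary are reflected back inside.

```python
import math
import random

def spawn_obstacle(rng, bounds, goal, goal_clearance=1.5):
    """Sample a random obstacle outside the goal region."""
    (xmin, xmax), (ymin, ymax) = bounds
    while True:
        x, y = rng.uniform(xmin, xmax), rng.uniform(ymin, ymax)
        if math.dist((x, y), goal) >= goal_clearance:
            break
    return {"x": x, "y": y,
            "pattern": rng.choice(["linear", "circular", "erratic"]),
            "speed": rng.uniform(0.05, 0.3),
            "heading": rng.uniform(0.0, 2 * math.pi)}

def step_obstacles(obstacles, rng, bounds, goal,
                   p_appear=0.02, p_disappear=0.01, p_turn=0.1):
    """Advance every obstacle one tick; obstacles may also appear or disappear."""
    (xmin, xmax), (ymin, ymax) = bounds
    survivors = []
    for ob in obstacles:
        if rng.random() < p_disappear:
            continue  # obstacle leaves the scene
        if ob["pattern"] == "circular":
            ob["heading"] += 0.1  # constant turn rate traces a circle
        elif ob["pattern"] == "erratic" and rng.random() < p_turn:
            ob["heading"] = rng.uniform(0.0, 2 * math.pi)  # random heading change
        ob["x"] += ob["speed"] * math.cos(ob["heading"])
        ob["y"] += ob["speed"] * math.sin(ob["heading"])
        # Reflect at the arena boundary so obstacles stay inside it.
        if not xmin <= ob["x"] <= xmax:
            ob["x"] = min(xmax, max(xmin, ob["x"]))
            ob["heading"] = math.pi - ob["heading"]
        if not ymin <= ob["y"] <= ymax:
            ob["y"] = min(ymax, max(ymin, ob["y"]))
            ob["heading"] = -ob["heading"]
        survivors.append(ob)
    if rng.random() < p_appear:
        survivors.append(spawn_obstacle(rng, bounds, goal))
    return survivors
```

Calling `step_obstacles` once per simulation tick and recomputing the APF repulsive terms from the returned positions reproduces the update cycle described in Section 4.8.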

5. Simulation and Results

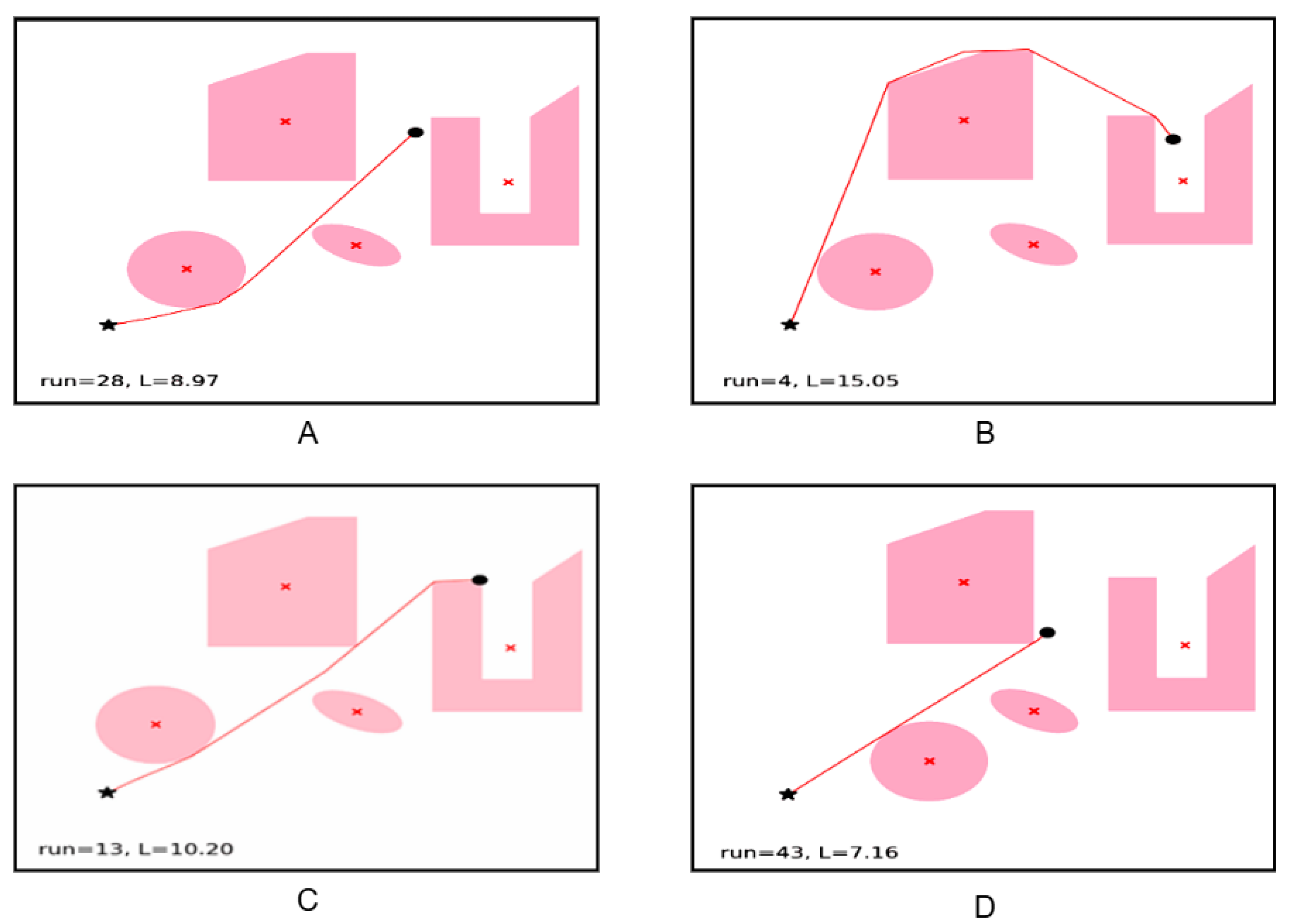

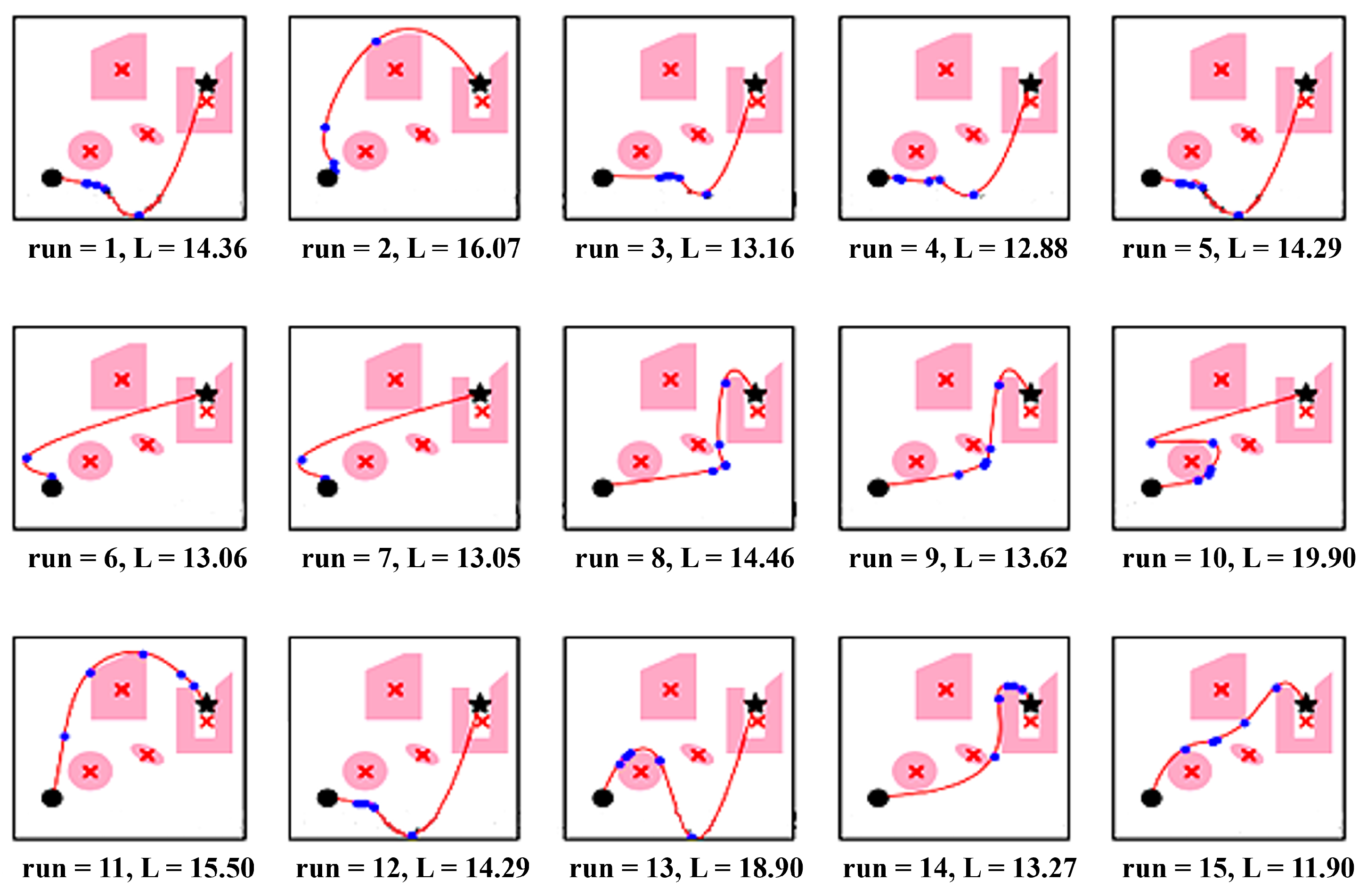

5.1. Results

5.1.1. Path Length Comparison

5.1.2. Computation Time

5.1.3. Success Rate in Dynamic Environments

5.1.4. Obstacle Avoidance Efficiency

5.1.5. Robustness and Flexibility

- PSO-APF amalgamates the exploration capabilities of PSO with APF’s obstacle avoidance proficiency, facilitating efficient exploration of the solution space while exploiting promising regions to pinpoint the shortest path.

- This algorithm maintains a global best-known position for the swarm and a local best-known position for each particle, balancing extensive global exploration with exploitation of the regions around the current best-known positions.

- Suited for real-time path planning tasks like autonomous mobile robotics, PSO-APF swiftly adapts to dynamically shifting environments by continuously updating particle positions and velocities, enabling prompt navigation decisions.

- PSO-APF exhibits flexibility and adaptability across diverse environments and problem domains, capable of handling intricate obstacle layouts and dynamic scenarios, rendering it applicable in robotics, autonomous vehicles, and optimization problems.

- Effective parameter tuning is pivotal for optimizing PSO-APF’s performance. Parameters such as inertia weight (w), cognitive coefficient (c1), and social coefficient (c2) govern the trade-off between exploration and exploitation, significantly impacting convergence speed and solution quality.

- Convergence behavior hinges on chosen stopping criteria, such as reaching a maximum number of iterations, achieving satisfactory solution quality, or detecting stagnation. Selecting appropriate stopping criteria is crucial for balancing computational resources and solution accuracy.

- While proficient for small- to moderate-sized problems, scalability to larger-scale problems may pose challenges due to computational complexity. Addressing this concern may necessitate efficient implementation techniques or parallelization strategies.

- The PSO-APF algorithm offers a robust solution for identifying the shortest path amidst obstacles, leveraging the strengths of both PSO and APF. Nevertheless, careful parameter tuning and scalability considerations are imperative for its effective application in practical scenarios.

5.1.6. Comparison with RL-Integrated Methods

- Training requirements: RL-PSO demands extensive offline training in representative environments. Without sufficient training diversity, its ability to generalize to unseen or changing scenarios is limited.

- Hyperparameter tuning: Both PSO and RL involve numerous parameters (learning rate, exploration factor, reward shaping), which complicates optimization.

- Computational demands: RL-based methods typically need GPU acceleration for training, which may not always be feasible for real-time use on embedded robotic systems.

5.2. Attribution Analysis via Ablation Studies

- Env-1: grid with 13 obstacles.

- Env-2: grid with 120 obstacles.

- Env-3: grid with 1550 obstacles.

- Full PSO-APF (ours): With PSO-driven dynamic APF tuning, repulsion scaling, and bidirectional feedback.

- w/o Dynamic APF Tuning: APF parameters fixed a priori.

- w/o Repulsion Adjustment: Repulsive scaling factor kept constant.

- w/o Feedback Loop: One-way initialization without APF → PSO updates.

- Baseline APF: Classical APF without PSO.

5.3. Discussion

6. Conclusions

- Parameter sensitivity: Although we established reasonable parameter values, we cannot claim that they generalize to all robotic platforms or environments. In physical environments, it may be necessary to define and modify the parameters adaptively, for example using fuzzy logic or meta-learning.

- Sensor noise and latency: In real-world environments, LiDAR and IMU data are subject to noise, occlusion, and latency. These effects will distort the calculated APF forces, potentially causing erratic trajectories and collisions with obstacles.

- Dynamic obstacle prediction: Our method reacts to obstacles but does not predict their movement patterns. Integrating established motion prediction models (e.g., LSTM networks, Kalman filters) would enable proactive replanning, which would be especially useful in crowded spaces such as public pedestrian areas.

- Computational constraints: Although we found PSO-APF to be faster than BFO or RL methods, meeting real-time constraints on embedded systems (e.g., Raspberry Pi, Jetson Nano) may still be difficult. Deployment on such platforms may require simplifying the algorithm and spreading its computation over multiple time steps to enable single-agent execution, or using concurrent execution to cope with other constraints in resource-constrained environments.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Feature | Global Path Planning | Local Path Planning |
|---|---|---|
| Environment | Uses a preloaded map or data. | Adapts to real-time changes and obstacles. |
| Path | Computes the whole path before moving. | Adjusts the path as the robot moves and encounters obstacles. |
| Obstacles | Assumes obstacles are static. | Avoids moving obstacles in real time. |
| Data | Uses a map or model of the environment provided in advance. | Uses live sensor data to navigate. |
| Flexibility | Less flexible in dynamic environments due to reliance on static data. | Highly flexible; adjusts to changes. |
| Use Case | Works best in predictable environments. | Best for dynamic or unknown environments. |

| Step | Description |
|---|---|
| 1. Global Path Initialization (PSO) | PSO generates potential paths toward the goal, exploring the search space to find optimal solutions. |
| 2. APF Parameter Optimization (PSO) | PSO adjusts key APF parameters (weights for attractive and repulsive forces) to minimize path inefficiency and avoid local minima. |
| 3. Real-time Navigation (APF) | With optimized parameters, APF dynamically adjusts the path to avoid obstacles during real-time navigation. |
| 4. Feedback to PSO | Real-time feedback on path quality (smoothness, obstacle avoidance) is provided to PSO for further refinement. |
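
The four steps above can be illustrated with a deliberately small sketch. It assumes a point robot in a 2D plane with a single circular obstacle, encodes each PSO particle as a pair of APF parameters (repulsive scaling `k_rep` and influence distance `d0`, names of our choosing), rolls out an APF trajectory for each particle, and feeds the resulting path length back to PSO as the fitness. The search ranges and the coefficients $w = 0.6$, $c_1 = 1.5$, $c_2 = 2.0$ come from the parameter table; the toy world, the classic Khatib-style potentials, the step size, the 1000-unit failure penalty, and the helper names `apf_rollout`, `fitness`, and `pso_tune` are hypothetical. As a simplification, particles here encode APF parameters rather than full candidate paths, so this is not the authors' implementation.

```python
import math
import random

# Hypothetical toy world: start, goal, and one circular obstacle (x, y, radius).
START = (0.0, 0.0)
GOAL = (10.0, 10.0)
OBSTACLES = [(5.0, 5.8, 1.0)]
# Search ranges for (k_rep, d0), taken from the parameter table.
BOUNDS = [(0.5, 5.0), (1.0, 5.0)]


def apf_rollout(k_rep, d0, k_att=1.0, step=0.1, max_steps=600):
    """Step 3: follow the APF force direction from START.

    Returns (path_length, reached_goal, collided).
    """
    x, y = START
    length = 0.0
    for _ in range(max_steps):
        gx, gy = GOAL[0] - x, GOAL[1] - y
        dist_goal = math.hypot(gx, gy)
        if dist_goal < step:  # final short hop onto the goal
            return length + dist_goal, True, False
        fx, fy = k_att * gx, k_att * gy  # attractive force
        for ox, oy, r in OBSTACLES:
            c = math.hypot(x - ox, y - oy)  # distance to obstacle centre
            d = c - r  # distance to obstacle surface
            if d <= 0.0:
                return length, False, True  # collision
            if d < d0:  # repulsion acts only inside the influence distance
                mag = k_rep * (1.0 / d - 1.0 / d0) / d**2
                fx += mag * (x - ox) / c
                fy += mag * (y - oy) / c
        norm = math.hypot(fx, fy)
        if norm < 1e-9:  # zero net force: the robot has stalled
            break
        x += step * fx / norm  # fixed-length step along the net force
        y += step * fy / norm
        length += step
    return length, False, False


def fitness(params):
    """Step 4: path length fed back to PSO, with a hypothetical failure penalty."""
    length, reached, _ = apf_rollout(*params)
    return length if reached else length + 1000.0


def pso_tune(n_particles=12, iters=20, w=0.6, c1=1.5, c2=2.0, seed=0):
    """Step 2: inertia-weight PSO over (k_rep, d0)."""
    rng = random.Random(seed)
    pos = [[rng.uniform(lo, hi) for lo, hi in BOUNDS] for _ in range(n_particles)]
    vel = [[0.0] * len(BOUNDS) for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_f = [fitness(p) for p in pos]
    g = min(range(n_particles), key=pbest_f.__getitem__)
    gbest, gbest_f = pbest[g][:], pbest_f[g]
    for _ in range(iters):
        for i in range(n_particles):
            for k, (lo, hi) in enumerate(BOUNDS):
                r1, r2 = rng.random(), rng.random()
                vel[i][k] = (w * vel[i][k]
                             + c1 * r1 * (pbest[i][k] - pos[i][k])
                             + c2 * r2 * (gbest[k] - pos[i][k]))
                pos[i][k] = min(max(pos[i][k] + vel[i][k], lo), hi)  # clamp to range
            f = fitness(pos[i])  # APF rollout result is the feedback to PSO
            if f < pbest_f[i]:
                pbest[i], pbest_f[i] = pos[i][:], f
                if f < gbest_f:
                    gbest, gbest_f = pos[i][:], f
    return gbest, gbest_f


if __name__ == "__main__":
    (k_rep, d0), length = pso_tune()
    print(f"k_rep={k_rep:.2f}  d0={d0:.2f}  path length={length:.2f}")
```

A more faithful version would add waypoint-encoded particles for Step 1 and smoothness terms in the fitness; the sketch keeps only path length so that the feedback loop between the APF rollout and the PSO update stays visible.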

| Method/Technique | Advantages | Limitations | Computation Time (ms) | Path (Curvature Index) | Success Rate (%) | Accuracy | Adaptability | Computational Efficiency |
|---|---|---|---|---|---|---|---|---|
| APF | Real-time performance; easy to implement | Local minima issues; oscillatory near obstacles | Low (17–23) | Medium (0.6–0.8) | Moderate (72–81) | Moderate | Low | High |
| PSO | Global search capability; effective in complex environments | Higher computational cost; slower in real-time | Medium (52–65) | Medium (0.75–0.85) | Moderate (84–91) | High | Moderate | Moderate |
| Hybrid APF-PSO (Proposed) | Combines real-time navigation with global optimization; mitigates local minima | Requires parameter tuning; higher cost than APF | Medium (35–45) | High (0.9–1.0) | Very high (95–98) | High | High | Moderate–High |
| BFO-PSO | Exploits BFO exploration with PSO optimization | Slower than PSO; sensitive to tuning | Medium (50–70) | Medium–High (0.8–0.9) | High (88–93) | High | Moderate | Moderate |
| BFO | Good at escaping local minima; bio-inspired search | Very slow convergence; high computational cost | High (70–100) | Medium (0.7–0.8) | Moderate (75–85) | Moderate | Moderate | Low–Moderate |
| GA | Strong global search; good for discrete problems | Convergence time high; risk of premature convergence | High (80–110) | Medium (0.75–0.85) | Moderate–High (85–90) | High | Moderate | Low |
| ANN and QL | Learns from data; adaptive to dynamic environments | Requires large training data; poor generalization in unseen cases | High (100–150) | High (0.9–1.0) | High (90–95) | High | High | Low (training-intensive) |
| A* | Guarantees optimal path in grid-based environments | Computationally expensive in large maps | Low–Medium (20–50) | Medium (0.7–0.85) | High (88–92) | Moderate | Low | High |
| RL-PSO | Learns adaptive strategies with RL while ensuring global search with PSO | Training overhead; computationally more intensive than PSO alone | High (70–120) | High (0.9–1.0) | Very high (93–96) | High | Very high | Moderate |
| Fuzzy Logic Control (FLC) | Simple rule-based decision making; robust in noisy environments | Requires expert-defined rules; scalability limited | Low (15–30) | Medium–High (0.8–0.9) | High (85–92) | Moderate | High | High (lightweight) |
| Adaptive Control | Adjusts online to environment changes | Needs continuous tuning; sensitive to disturbances | Medium (40–60) | High (0.85–0.95) | High (90–94) | High | High | Moderate (training/tuning needed) |
| Event-triggered Consensus | Distributed and scalable; excellent for multi-robot coordination | Communication overhead; complex design | Medium–High (60–90) | High (0.9–1.0) | Very high (93–97) | High | High | High (scalable, efficient) |

| Technique | Advantages | Limitations |
|---|---|---|
| APF | Fast local navigation; real-time obstacle avoidance | Prone to local minima; struggles in complex environments |
| PSO | Global optimization; effective for complex environments | Slow convergence in dynamic scenarios; lacks real-time adaptability |
| Hybrid APF-PSO | Combines local response (APF) with global search (PSO); overcomes local minima; enhanced path smoothness | Higher computational cost; requires careful parameter tuning |
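
The local-minimum weakness of APF listed above is easy to reproduce. The sketch below is our own construction: it uses the classic Khatib-style attractive and repulsive forces with an assumed $k_{att} = 1$ and the illustrative values $k_{rep} = 3.0$ and $d_0 = 2.5$ from the parameter table, and places the robot, a point obstacle, and the goal on one line. Bisection along that line finds the point where attraction and repulsion cancel short of the goal, so a robot approaching head-on stalls there. The names `apf_force` and `stagnation_point` are hypothetical.

```python
import math


def apf_force(x, y, goal, obstacle, k_att=1.0, k_rep=3.0, d0=2.5):
    """Net APF force at (x, y): attraction to the goal plus repulsion from a point obstacle."""
    fx, fy = k_att * (goal[0] - x), k_att * (goal[1] - y)
    dx, dy = x - obstacle[0], y - obstacle[1]
    d = math.hypot(dx, dy)
    if d < d0:  # repulsion only inside the influence distance
        mag = k_rep * (1.0 / d - 1.0 / d0) / d**2
        fx += mag * dx / d
        fy += mag * dy / d
    return fx, fy


# Hypothetical layout: robot on the x-axis, obstacle between it and the goal.
GOAL = (10.0, 0.0)
OBST = (5.0, 0.0)


def stagnation_point(lo=0.0, hi=4.9, tol=1e-10):
    """Bisection for the x where the net x-force changes sign (robot on the axis)."""
    def fx(x):
        return apf_force(x, 0.0, GOAL, OBST)[0]

    assert fx(lo) > 0.0 > fx(hi), "expected a sign change on [lo, hi]"
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if fx(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


x_stall = stagnation_point()
print(f"net force vanishes at x = {x_stall:.3f}; the goal is at x = {GOAL[0]}")
```

With these values the zero-force point lies at roughly $x \approx 4.28$, about 0.72 units in front of the obstacle. For a single point obstacle this equilibrium is unstable sideways (a saddle), so a small perturbation lets the robot slide past; concave or clustered obstacles can produce true local minima, which is the failure mode the PSO layer is meant to mitigate.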

| Parameter | Value Range Tested | Optimal Value | Impact on Path Planning | Rationale for Optimal Selection |
|---|---|---|---|---|
| Inertia Weight ($w$) | [0.2, 0.9] | 0.6 | High $w$: enhances exploration to avoid local minima but may slow convergence and lengthen paths. Low $w$: faster convergence but a risk of suboptimal solutions. | At $w = 0.6$, we observed the best trade-off: 18% shorter paths than APF alone while maintaining a 98.6% convergence rate. Higher values caused oscillations near obstacles, while lower values resulted in 12% more local-minima incidents. |
| Cognitive Factor ($c_1$) | [1.0, 2.5] | 1.5 | High $c_1$: enhances obstacle avoidance via localized search. Low $c_1$: less effective navigation. | $c_1 = 1.5$ provided the best balance between individual particle responsiveness to obstacles (measured by obstacle clearance distance) and swarm coherence. Higher values caused fragmented search patterns, while lower values reduced obstacle-avoidance efficiency by 15%. |
| Social Factor ($c_2$) | [1.0, 2.5] | 2.0 | High $c_2$: promotes smooth paths but may limit diversity. Low $c_2$: less smooth, more diverse, but less optimal paths. | $c_2 = 2.0$ maximized global information sharing without sacrificing diversity. At this value, the path curvature index improved by 23% compared with PSO alone, while maintaining an 85% success rate in dynamic environments. |
| Repulsive Force Scaling | [0.5, 5.0] | 3.0 | High values: more cautious but longer paths. Low values: smoother paths but more collisions. | A value of 3.0 provided the best obstacle clearance, relative to the obstacle radius, without excessive detours. Higher values increased path length by 12%, while lower values resulted in 22% more near-collision incidents. |
| Influence Distance | [1.0, 5.0] | 2.5 | High values: more avoidance but more detours. Low values: better performance in narrow spaces. | A value of 2.5 enabled effective navigation in narrow corridors while preventing unnecessary detours in open spaces. |
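
For reference, the three PSO coefficients in this table enter the standard inertia-weight update shown below, where $x_i^t$ and $v_i^t$ are the position and velocity of particle $i$ at iteration $t$, $p_i$ is its personal best, $g$ is the swarm's global best, and $r_1, r_2 \sim \mathcal{U}(0,1)$ are drawn per dimension. This is the textbook form; it omits the APF adjustment of the particle velocity described in Section 3.1, and the worked numbers are hypothetical.

$$
\begin{aligned}
v_i^{t+1} &= w\,v_i^{t} + c_1 r_1 \left(p_i - x_i^{t}\right) + c_2 r_2 \left(g - x_i^{t}\right),\\
x_i^{t+1} &= x_i^{t} + v_i^{t+1}.
\end{aligned}
$$

With $w = 0.6$, $c_1 = 1.5$, $c_2 = 2.0$ and a one-dimensional particle at $x_i^t = 2.0$, $v_i^t = 0.5$, $p_i = 3.0$, $g = 4.0$, $r_1 = 0.5$, $r_2 = 0.25$:

$$
v_i^{t+1} = 0.6(0.5) + 1.5(0.5)(3.0 - 2.0) + 2.0(0.25)(4.0 - 2.0) = 0.3 + 0.75 + 1.0 = 2.05,
\qquad x_i^{t+1} = 2.0 + 2.05 = 4.05.
$$

The particle slightly overshoots $g$, and the inertia term $w\,v_i^t$ carries that overshoot into the next step, which is why a high $w$ is associated with oscillation.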

| Method | Path Length | Computation Time | Success Rate (Dynamic) | Obstacle Avoidance Efficiency |
|---|---|---|---|---|
| PSO-APF | Shortest | Moderate | 85% | 90% |
| APF-only | Longer | Fastest | 80% | 75% |
| BFO | Moderate | High | 72% | 78% |
| GA | Moderate | Moderate | 78% | 80% |
| PSO/BFO | Moderate | High | 79% | 82% |

| Method | Accuracy | Adaptability | Computational Efficiency |
|---|---|---|---|
| PSO-APF | High (better obstacle avoidance by combining strengths of PSO and APF) | Moderate to high (adaptive but depends on problem complexity) | Moderate (PSO’s global search combined with APF’s local strategies) |
| APF | Moderate to high (may fail in local minima) | Moderate (requires parameter tuning for different scenarios) | High (fast due to simple calculations) |
| BFO/PSO | High (leverages BFO’s exploration and PSO’s convergence) | High (suitable for dynamic environments) | Moderate to low (BFO is computationally intensive) |
| BFO | Moderate (good for exploration but slow convergence can reduce accuracy) | Moderate (adapts well to changing environments) | Low (computationally expensive due to bacteria lifecycle simulations) |
| PSO | High (good convergence for static environments) | Moderate (less effective in dynamic situations) | Moderate (depends on population size and iterations) |
| GA | High (good global optimization capabilities) | High (effective in dynamic and diverse environments) | Moderate to low (computationally intensive) |
| ANN and QL | High (learns from experience and adapts well) | Very high (can handle complex and dynamic environments) | Low (requires significant training time and computational resources) |
| A* | Very high (guaranteed optimal path in static environments) | Low (not adaptive to dynamic changes) | Moderate to high (depends on heuristic design) |

| Technique | Environment | Path Length (PL) | Convergence Rate |
|---|---|---|---|
| PSO-APF | [10 × 10]; Start (1, 1); Goal (10, 10); Obstacles: 13; Best path: 22 | 22 | 100% |
| APF | | 23 | 95.65% |
| BFO/PSO | | 22 | 100% |
| BFO | | 23 | 95.65% |
| PSO | | 22 | 100% |
| GA | | 22 | 100% |
| ANN and QL | | 23 | 95.65% |
| A* | | 22 | 100% |
| RL-PSO | | 22 | 98.50% |
| PSO-APF | [100 × 100]; Start (1, 1); Goal (100, 100); Obstacles: 120; Best path: 212 | 215 | 98.60% |
| APF | | 225 | 94.22% |
| BFO/PSO | | 218 | 97.24% |
| BFO | | 235 | 90.21% |
| PSO | | 225 | 94.22% |
| GA | | 240 | 88.33% |
| ANN and QL | | 238 | 89.07% |
| A* | | 212 | 100% |
| RL-PSO | | 214 | 95.10% |
| PSO-APF | [1000 × 1000]; Start (1, 1); Goal (1000, 1000); Obstacles: 1550; Best path: 1847 | 1977 | 93.42% |
| APF | | 2304 | 80.16% |
| BFO/PSO | | 2155 | 86.96% |
| BFO | | 2237 | 83.77% |
| PSO | | 2201 | 85.14% |
| GA | | 2269 | 80.44% |
| ANN and QL | | 2705 | 68.28% |
| A* | | 1905 | 92.00% |
| RL-PSO | | 1925 | 85.00% |
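
For many rows, the Convergence Rate column matches the ratio of the environment's best-path length to the method's path length (for example, 212/215 ≈ 98.60% for PSO-APF on the 100 × 100 map). This reading is our inference from the numbers rather than a definition stated with the table, and some rows (e.g., RL-PSO) do not follow it. The sketch below only reproduces the arithmetic for a few rows; the dictionary keys and the function name `best_path_ratio` are hypothetical.

```python
# Best-path lengths stated per environment in the table above.
BEST_PATH = {"10x10": 22, "100x100": 212, "1000x1000": 1847}

# Path lengths (PL) for a few rows, copied from the table.
PATH_LENGTH = {
    ("10x10", "APF"): 23,
    ("100x100", "PSO-APF"): 215,
    ("100x100", "GA"): 240,
    ("1000x1000", "PSO-APF"): 1977,
    ("1000x1000", "APF"): 2304,
}


def best_path_ratio(env, method):
    """Best-path length divided by the method's path length, in percent."""
    return 100.0 * BEST_PATH[env] / PATH_LENGTH[(env, method)]


for env, method in PATH_LENGTH:
    print(f"{env:>9}  {method:<8} {best_path_ratio(env, method):6.2f}%")
```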

| Variant | Env-1 Path Length | Env-2 Path Length | Env-3 Path Length | Success Rate | Convergence Rate |
|---|---|---|---|---|---|
| Full PSO-APF (ours) | 22 | 215 | 1977 | 100% | 93.4% |
| w/o Dynamic APF Tuning | 23 | 225 | 2155 | 96% | 86.9% |
| w/o Repulsion Adjustment | 23 | 222 | 2125 | 94% | 88.1% |
| w/o Feedback Loop | 23 | 220 | 2101 | 95% | 87.6% |
| Baseline APF | 23 | 225 | 2304 | 80% | 80.2% |
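
The ablation results can be read more directly as percentage path-length increases relative to the full method. The short sketch below only does this arithmetic on the table's path-length columns; the dictionary layout and the function name `path_increase` are ours.

```python
# Path lengths copied from the ablation table.
FULL = {"Env-1": 22, "Env-2": 215, "Env-3": 1977}
VARIANTS = {
    "w/o Dynamic APF Tuning": {"Env-1": 23, "Env-2": 225, "Env-3": 2155},
    "w/o Repulsion Adjustment": {"Env-1": 23, "Env-2": 222, "Env-3": 2125},
    "w/o Feedback Loop": {"Env-1": 23, "Env-2": 220, "Env-3": 2101},
    "Baseline APF": {"Env-1": 23, "Env-2": 225, "Env-3": 2304},
}


def path_increase(variant, env):
    """Percent increase in path length of a variant over the full PSO-APF."""
    return 100.0 * (VARIANTS[variant][env] - FULL[env]) / FULL[env]


for name in VARIANTS:
    print(f"{name:<26} Env-3: +{path_increase(name, 'Env-3'):.1f}%")
```

On Env-3 this gives increases of about 9.0%, 7.5%, and 6.3% for the three ablated variants and 16.5% for baseline APF, so, measured by path length, dynamic APF tuning is the component whose removal costs the most.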

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Benmachiche, A.; Derdour, M.; Kahil, M.S.; Ghanem, M.C.; Deriche, M. Adaptive Hybrid PSO–APF Algorithm for Advanced Path Planning in Next-Generation Autonomous Robots. Sensors 2025, 25, 5742. https://doi.org/10.3390/s25185742
