MCEM: Multi-Cue Fusion with Clutter Invariant Learning for Real-Time SAR Ship Detection

Abstract

1. Introduction

2. Related Works

2.1. SAR Maritime Target Detection

2.2. Feature Enhancement for Small Targets

2.3. Detection Head Optimization

3. Proposed Method

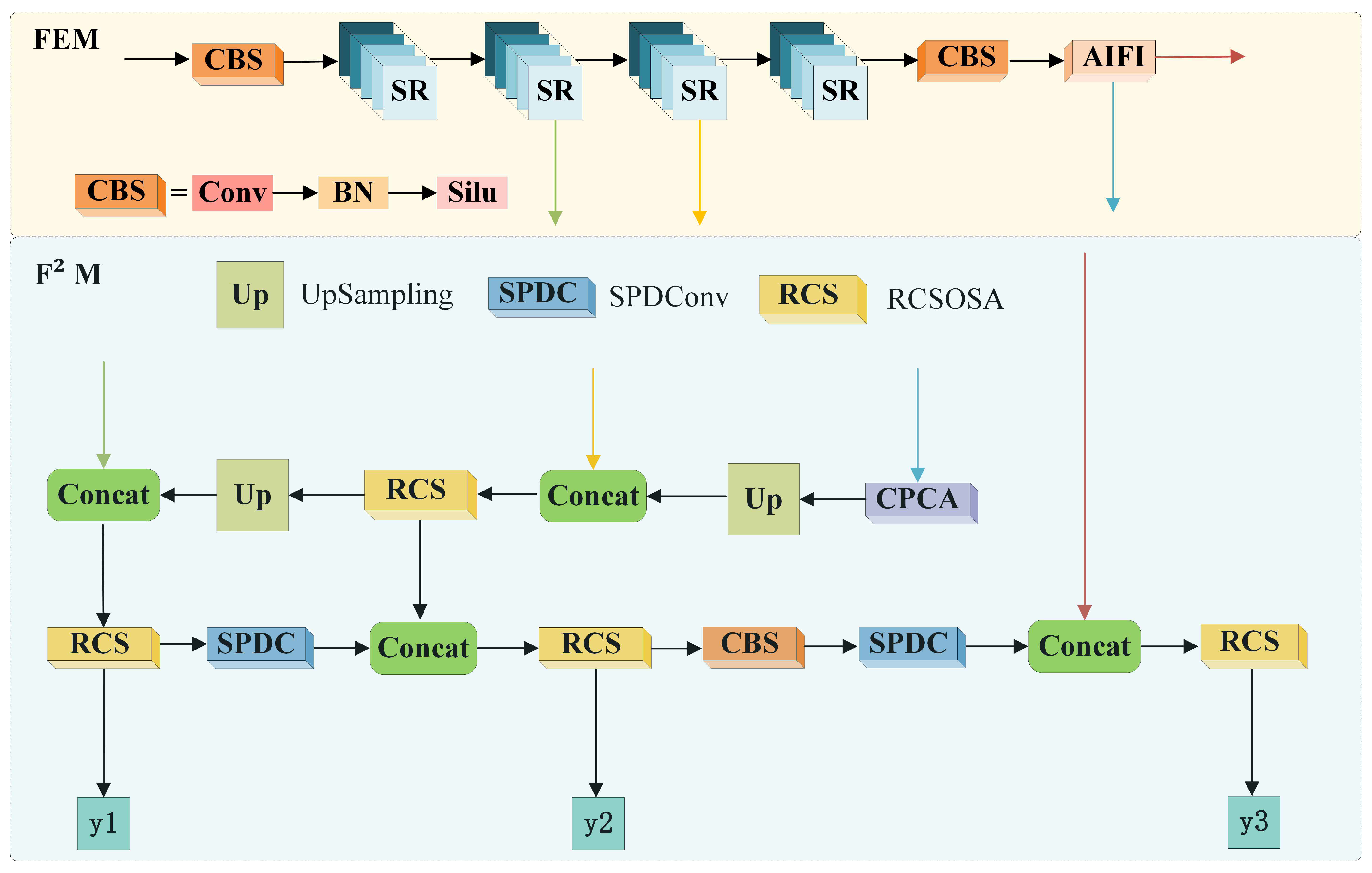

3.1. Feature Extraction Module (FEM)

3.1.1. AIFI Sub-Module

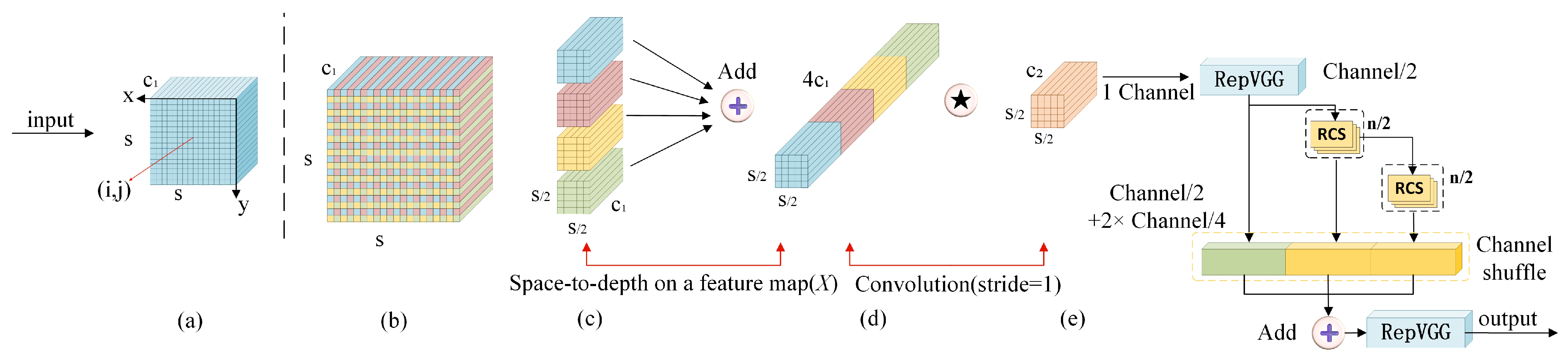

3.1.2. SR Sub-Module

3.2. Feature Fusion Module (F2M)

3.3. Detection Head Module (DHM)

4. Experimental Results

4.1. Datasets and Experimental Setup

4.2. Evaluation Metrics

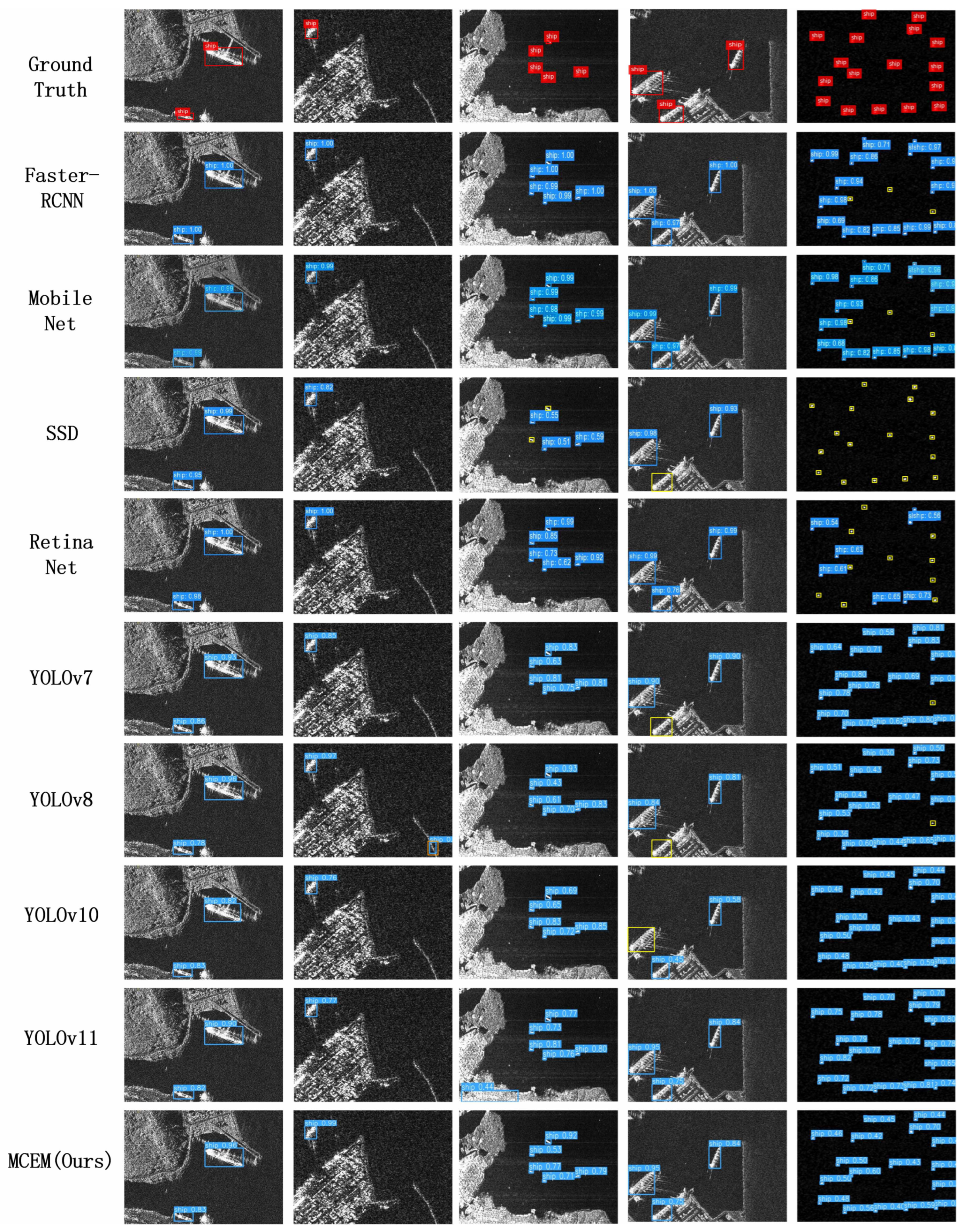

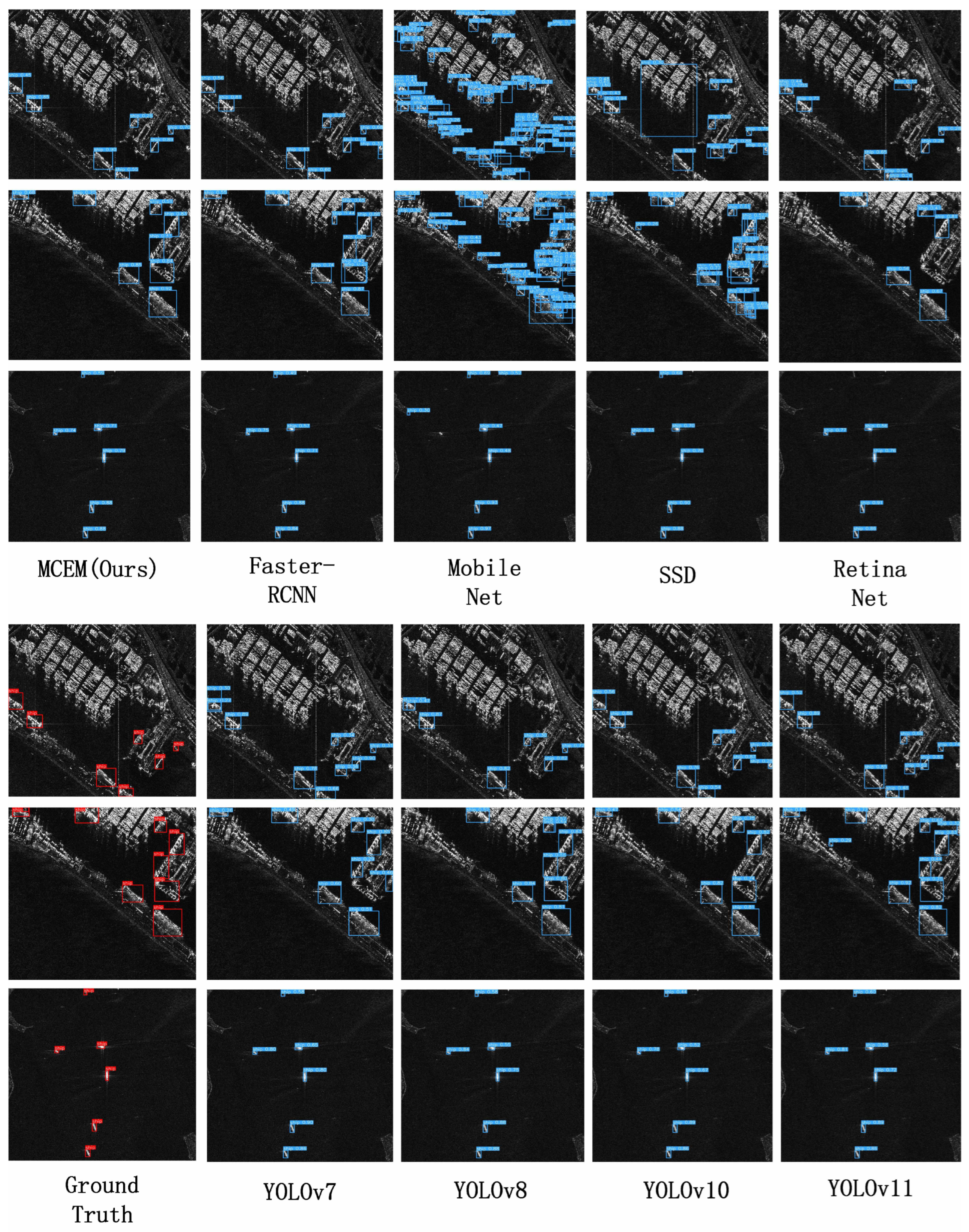

4.3. Comparison with SOTA Methods

4.4. Ablation Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Crisp, D.J. The State-of-the-Art in Ship Detection in Synthetic Aperture RADAR Imagery; Report No. DRDC-TM-2005-243; Department of Defence, DSTO: Canberra, ACT, Australia, 2004.

2. Zhang, T.; Ji, J.; Li, X.; Yu, W.; Xiong, H. Ship detection from PolSAR imagery using the complete polarimetric covariance difference matrix. IEEE Trans. Geosci. Remote Sens. 2019, 57, 2824–2839.

3. Xu, W.; Guo, Z.; Huang, P.; Tan, W.; Gao, Z. Towards Efficient SAR Ship Detection: Multi-Level Feature Fusion and Lightweight Network Design. Remote Sens. 2025, 17, 2588.

4. Zhang, T.; Wang, W.; Yang, Z.; Yin, J.; Yang, J. Ship detection from PolSAR imagery using the hybrid polarimetric covariance matrix. IEEE Geosci. Remote Sens. Lett. 2021, 18, 1575–1579.

5. Zhang, T.; Yang, Z.; Gan, H.; Xiang, D.; Zhu, S.; Yang, J. PolSAR ship detection using the joint polarimetric information. IEEE Trans. Geosci. Remote Sens. 2020, 58, 8225–8241.

6. Tian, Z.; Wang, W.; Zhou, K.; Song, X.; Shen, Y.; Liu, S. Weighted Pseudo-Labels and Bounding Boxes for Semisupervised SAR Target Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 5193–5203.

7. Wang, J.; Quan, S.; Xing, S.; Li, Y.; Wu, H.; Meng, W. PSO-based fine polarimetric decomposition for ship scattering characterization. ISPRS J. Photogramm. Remote Sens. 2025, 220, 18–31.

8. Xie, N.; Zhang, T.; Zhang, L.; Chen, J.; Wei, F.; Yu, W. VLF-SAR: A Novel Vision-Language Framework for Few-shot SAR Target Recognition. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 9530–9544.

9. Robey, F.C.; Fuhrmann, D.R.; Kelly, E.J.; Nitzberg, R. A CFAR adaptive matched filter detector. IEEE Trans. Aerosp. Electron. Syst. 1992, 28, 208–216.

10. Wackerman, C.C.; Friedman, K.S.; Pichel, W.G.; Clemente-Colón, P.; Li, X. Automatic detection of ships in RADARSAT-1 SAR imagery. Can. J. Remote Sens. 2001, 27, 568–577.

11. Liu, T.; Yang, Z.; Yang, J.; Gao, G. CFAR ship detection methods using compact polarimetric SAR in a K-Wishart distribution. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 12, 3737–3745.

12. Chen, J.; Niu, L.; Zhang, J.; Si, J.; Qian, C.; Zhang, L. Amodal instance segmentation via prior-guided expansion. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 313–321.

13. Huang, B.; Zhang, T.; Quan, S.; Wang, W.; Guo, W.; Zhang, Z. Scattering Enhancement and Feature Fusion Network for Aircraft Detection in SAR Images. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 1936–1950.

14. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

15. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; Volume 28, pp. 91–99.

16. Chen, S.; Wang, H.; Xu, F.; Jin, Y.-Q. Target classification using the deep convolutional networks for SAR images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4806–4817.

17. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Computer Vision–ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Cham, Switzerland, 2016; Volume 9905, pp. 21–37.

18. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

19. Chen, J.; Yan, J.; Fang, Y.; Niu, L. Webly supervised fine-grained classification by integrally tackling noises and subtle differences. IEEE Trans. Image Process. 2025, 34, 2641–2653.

20. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

21. Cui, Z.; Wang, X.; Liu, N.; Cao, Z.; Yang, J. Ship Detection in Large-Scale SAR Images Via Spatial Shuffle-Group Enhance Attention. IEEE Trans. Geosci. Remote Sens. 2021, 59, 379–391.

22. Zhu, M.; Hu, G.; Li, S.; Zhou, H.; Wang, S.; Feng, Z. A Novel Anchor-Free Method Based on FCOS + ATSS for Ship Detection in SAR Images. Remote Sens. 2022, 14, 2034.

23. Chen, Y.; Zhu, X.; Li, Y.; Wei, Y.; Ye, L. Enhanced Semantic Feature Pyramid Network for Small Object Detection. Signal Process. Image Commun. 2023, 113, 116919.

24. Wang, Z.; Wang, C.; Pei, J.; Huang, Y.; Zhang, Y.; Yang, H. A Deformable Convolution Neural Network for SAR ATR. In Proceedings of the IGARSS 2020—IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 2639–2642.

25. Chen, P.; Zhou, H.; Li, Y.; Liu, P.; Liu, B. A Novel Deep Learning Network with Deformable Convolution and Attention Mechanisms for Complex Scenes Ship Detection in SAR Images. Remote Sens. 2023, 15, 2589.

26. Panda, S.L.; Sahoo, U.K.; Maiti, S.; Sasmal, P. An Attention U-Net-Based Improved Clutter Suppression in GPR Images. IEEE Trans. Instrum. Meas. 2024, 73, 1–11.

27. Li, Z.; He, H.; Zhou, T.; Zhang, Q.; Han, X.; You, Y. Dual CG-IG Distribution Model for Sea Clutter and Its Parameter Correction Method. J. Syst. Eng. Electron. 2025, 1–11.

28. Li, M.; Lin, S.; Huang, X. SAR Ship Detection Based on Enhanced Attention Mechanism. In Proceedings of the 2021 2nd International Conference on Artificial Intelligence and Computer Engineering (ICAICE), Hangzhou, China, 26–28 November 2021; pp. 759–762.

29. Li, Y.; Liu, J.; Li, X.; Zhang, X.; Wu, Z.; Han, B. A Lightweight Network for Ship Detection in SAR Images Based on Edge Feature Aware and Fusion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 3782–3796.

30. Zhang, Y.; Cai, W.; Guo, J.; Kong, H.; Huang, Y.; Ding, X. Lightweight SAR Ship Detection via Pearson Correlation and Nonlocal Distillation. IEEE Geosci. Remote Sens. Lett. 2025, 22, 1–5.

31. Liu, M.; Xu, J.; Zhou, Y. Real-time processing on airborne platforms. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Vancouver, BC, Canada, 17–24 June 2023; pp. 123–134.

32. Zhang, Y.; Ye, M.; Zhu, G.; Liu, Y.; Guo, P.; Yan, J. FFCA-YOLO for small object detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–15.

33. Kim, K.-H.; Hong, S.; Roh, B.; Cheon, Y.; Park, M. PVANET: Deep but lightweight neural networks for real-time object detection. arXiv 2016, arXiv:1608.08021.

34. He, F.; Wang, C.; Guo, B. SSGY: A Lightweight Neural Network Method for SAR Ship Detection. Remote Sens. 2025, 17, 2868.

35. Sunkara, R.; Luo, T. No More Strided Convolutions or Pooling: A New CNN Building Block for Low-Resolution Images and Small Objects. In Machine Learning and Knowledge Discovery in Databases; Amini, M.-R., Canu, S., Fischer, A., Guns, T., Kralj Novak, P., Tsoumakas, G., Eds.; Springer: Cham, Switzerland, 2023; pp. 443–459.

36. Kang, M.; Ting, C.-M.; Ting, F.F.; Phan, R.C.-W. RCS-YOLO: A Fast and High-Accuracy Object Detector for Brain Tumor Detection. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2023; Greenspan, H., Madabhushi, A., Mousavi, P., Salcudean, S., Duncan, J., Syeda-Mahmood, T., Taylor, R., Eds.; Springer Nature: Cham, Switzerland, 2023; pp. 600–610.

37. Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs beat YOLOs on real-time object detection. arXiv 2024, arXiv:2304.08069.

38. Wei, S.; Zeng, X.; Qu, Q.; Wang, M.; Su, H.; Shi, J. HRSID: A high-resolution SAR images dataset for ship detection and instance segmentation. IEEE Access 2020, 8, 120234–120254.

39. Zhang, T.; Zhang, X.; Li, J.; Xu, X.; Wang, B.; Zhan, X.; Xu, Y.; Ke, X.; Zeng, T.; Su, H.; et al. SAR Ship Detection Dataset (SSDD): Official Release and Comprehensive Data Analysis. Remote Sens. 2021, 13, 3690.

40. Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully convolutional one-stage object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9627–9736.

41. Meng, S.; Ren, K.; Lu, D.; Gu, G.; Chen, Q.; Lu, G. A Novel Ship CFAR Detection Algorithm Based on Adaptive Parameter Enhancement and Wake-Aided Detection in SAR Images. Infrared Phys. Technol. 2018, 89, 263–270.

42. Li, Y.; Zhang, S.; Wang, W.-Q. A lightweight faster R-CNN for ship detection in SAR images. IEEE Geosci. Remote Sens. Lett. 2019, 19, 1–5.

43. Xu, P.; Li, Q.; Zhang, B.; Wu, F.; Zhao, K.; Du, X.; Yang, C.; Zhong, R. On-Board Real-Time Ship Detection in HISEA-1 SAR Images Based on CFAR and Lightweight Deep Learning. Remote Sens. 2021, 13, 1995.

44. Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

45. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768.

46. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10778–10787.

47. Gao, J.; Geng, X.; Zhang, Y.; Wang, R.; Shao, K. Augmented Weighted Bidirectional Feature Pyramid Network for Marine Object Detection. Expert Syst. Appl. 2024, 237, 121688.

48. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. arXiv 2018, arXiv:1807.06521.

49. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

50. Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Computer Vision—ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer: Cham, Switzerland, 2018; pp. 833–851.

51. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

52. Feng, C.; Zhong, Y.; Gao, Y.; Scott, M.R.; Huang, W. TOOD: Task-aligned One-stage Object Detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 3490–3499.

53. Wu, Y.; He, K. Group normalization. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

54. Manning, C.D.; Raghavan, P.; Schütze, H. Introduction to Information Retrieval; Cambridge University Press: Cambridge, UK, 2008.

55. Li, L.; Ma, H.; Zhang, X.; Zhao, X.; Lv, M.; Jia, Z. Synthetic Aperture Radar Image Change Detection Based on Principal Component Analysis and Two-Level Clustering. Remote Sens. 2024, 16, 1861.

56. Qian, Y.; Yan, S.; Lukežič, A.; Kristan, M.; Kämäräinen, J.-K.; Matas, J. DAL: A Deep Depth-Aware Long-term Tracker. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 7825–7832.

57. Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

58. Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision–ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014; pp. 740–755.

59. Zhang, W.; Wang, S.; Thachan, S.; Chen, J.; Qian, Y. Deconv R-CNN for Small Object Detection on Remote Sensing Images. In Proceedings of the 2018 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Valencia, Spain, 22–27 July 2018; pp. 2483–2486.

60. Liu, L.; Pan, Z.; Lei, B. Learning a Rotation Invariant Detector with Rotatable Bounding Box. arXiv 2017, arXiv:1711.09405.

61. Li, Q.; Xiao, D.; Shi, F. A decoupled head and coordinate attention detection method for ship targets in SAR images. IEEE Access 2022, 10, 128562–128578.

62. Yue, T.; Zhang, Y.; Liu, P.; Xu, Y.; Yu, C. A generating-anchor network for small ship detection in SAR images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 7665–7676.

63. Hao, Y.; Zhang, Y. A lightweight convolutional neural network for ship target detection in SAR images. IEEE Trans. Aerosp. Electron. Syst. 2024, 60, 1882–1898.

64. Zhou, L.; Wan, Z.; Zhao, S.; Han, H.; Liu, Y. BFEA: A SAR ship detection model based on attention mechanism and multiscale feature fusion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 11163–11177.

65. Zhao, L.; Ning, F.; Xi, Y.; Liang, G.; He, Z.; Zhang, Y. MSFA-YOLO: A multi-scale SAR ship detection algorithm based on fused attention. IEEE Access 2024, 12, 24554–24568.

66. Wang, Z.; Miao, F.; Huang, Y.; Lu, Z.; Ohtsuki, T.; Gui, G. Object Detection on SAR Images Via YOLOv10 and Integrated ACmix Attention Mechanism. In Proceedings of the 2024 6th International Conference on Robotics, Intelligent Control and Artificial Intelligence (RICAI), Nanjing, China, 6–8 December 2024; pp. 756–760.

67. Bakirci, M.; Bayraktar, I. Assessment of YOLO11 for Ship Detection in SAR Imagery Under Open Ocean and Coastal Challenges. In Proceedings of the 2024 21st International Conference on Electrical Engineering, Computing Science and Automatic Control (CCE), Mexico City, Mexico, 23–25 October 2024; pp. 1–6.

| Metric | Definition | Evaluation Focus |
|---|---|---|
| $AP$ [58] | Average precision at IoU thresholds [0.50:0.05:0.95] | Comprehensive detection accuracy |
| $AP_{50}$ [57] | AP at IoU = 0.50 | Basic localization capability |
| $AP_{75}$ [58] | AP at IoU = 0.75 | Precise localization requirement |
| $AP_S$ [59] | AP for small targets (area < 1024 px²) | Small target performance (sub-32 × 32 px) |
| $AP_M$ [60] | AP for medium targets (1024 ≤ area ≤ 9216 px²) | Medium target detection (32 × 32 to 96 × 96 px) |
| $AP_L$ [60] | AP for large targets (area > 9216 px²) | Oversized target capability |
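
The definitions in the table above rest on three ingredients: the intersection over union (IoU) between a predicted and a ground-truth box, the ten IoU thresholds from 0.50 to 0.95 in steps of 0.05, and the area cut-offs (1024 px² and 9216 px²) that split targets into small, medium, and large. The following is a minimal sketch of those ingredients only, not the evaluation code used in this work. The function names `iou`, `iou_thresholds`, and `size_bucket`, and the `(x_min, y_min, x_max, y_max)` box layout, are illustrative assumptions.

```python
from typing import List, Tuple

# Assumed box layout (not specified in the source): corner coordinates in pixels.
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    # Overlap extent along each axis, clamped at zero for disjoint boxes.
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def iou_thresholds() -> List[float]:
    """The ten thresholds 0.50, 0.55, ..., 0.95 over which AP is averaged."""
    return [round(0.50 + 0.05 * i, 2) for i in range(10)]


def size_bucket(area: float) -> str:
    """Assign a target to a size class using the area limits in the table."""
    if area < 1024:        # below 32 x 32 px
        return "small"
    if area <= 9216:       # up to 96 x 96 px
        return "medium"
    return "large"


if __name__ == "__main__":
    print(iou((0, 0, 10, 10), (5, 0, 15, 10)))  # overlap 50, union 150
    print(iou_thresholds())
    print(size_bucket(32 * 32))
```

A detection counts as a true positive at a given threshold when its IoU with a matched ground-truth box is at least that threshold, so the same set of detections is scored once per entry of `iou_thresholds()` before averaging.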

| Methods | $AP$ (%) | $AP_{50}$ (%) | $AP_{75}$ (%) | $AP_S$ (%) | $AP_M$ (%) | $AP_L$ (%) | P (%) | R (%) | F1 (%) | (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Faster-RCNN [42] | 65.04 | 96.12 | 77.47 | 61.68 | 71.54 | 66.07 | 93.7 | 91.6 | 92.6 | 96.7 |
| MobileNet [63] | 41.88 | 79.89 | 38.97 | 35.65 | 56.90 | 29.09 | 74.2 | 71.5 | 72.8 | 80.4 |
| SSD [62] | 52.94 | 88.07 | 60.08 | 49.00 | 61.26 | 52.50 | 83.6 | 81.1 | 82.3 | 88.6 |
| RetinaNet [61] | 60.76 | 90.90 | 70.46 | 57.32 | 67.62 | 61.77 | 87.6 | 85.4 | 86.5 | 91.5 |
| YOLOv7 [64] | 56.80 | 89.90 | 64.30 | 55.00 | 63.30 | 32.20 | 86.7 | 84.3 | 85.5 | 90.5 |
| YOLOv8 [65] | 68.00 | 97.20 | 81.50 | 66.30 | 72.30 | 63.80 | 95.2 | 93.4 | 94.3 | 97.5 |
| YOLOv10 [66] | 67.00 | 96.20 | 80.30 | 65.20 | 71.60 | 51.20 | 93.5 | 91.4 | 92.4 | 96.8 |
| YOLOv11 [67] | 67.90 | 96.40 | 82.80 | 66.30 | 72.00 | 59.90 | 93.7 | 91.6 | 92.6 | 97.0 |
| MCEM (Ours) | 70.20 | 96.90 | 84.70 | 67.30 | 74.80 | 77.70 | 96.5 | 94.7 | 95.6 | 98.1 |
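
The P, R, and F1 columns in the table above are related by the standard definition of F1 as the harmonic mean of precision and recall. The sketch below assumes the table uses that standard definition, which the surviving text does not state; `f1_score` is an illustrative name, not part of any released code. It recomputes F1 from the reported P and R of the Faster-RCNN row as a sanity check.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both in the same units, e.g. %)."""
    if precision + recall == 0:
        return 0.0  # avoid division by zero when both inputs are zero
    return 2.0 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Faster-RCNN row: P = 93.7 %, R = 91.6 %, reported F1 = 92.6 %.
    print(round(f1_score(93.7, 91.6), 1))  # 92.6
```

Because the harmonic mean is pulled toward the smaller of its two inputs, a method cannot reach a high F1 by trading recall away for precision, or the reverse.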

| Methods | $AP$ (%) | $AP_{50}$ (%) | $AP_{75}$ (%) | $AP_S$ (%) | $AP_M$ (%) | $AP_L$ (%) | P (%) | R (%) | F1 (%) | (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Faster-RCNN [42] | 56.70 | 81.10 | 65.30 | 43.60 | 74.50 | 46.30 | 80.5 | 69.8 | 74.7 | 83.5 |
| MobileNet [63] | 35.40 | 59.10 | 39.00 | 16.10 | 62.00 | 34.60 | 57.6 | 51.4 | 54.3 | 61.2 |
| SSD [62] | 43.50 | 67.10 | 49.80 | 23.30 | 70.40 | 52.70 | 68.2 | 60.7 | 64.2 | 69.5 |
| RetinaNet [61] | 50.70 | 83.10 | 54.00 | 36.20 | 72.10 | 63.20 | 81.8 | 73.1 | 77.2 | 85.6 |
| YOLOv7 [64] | 54.00 | 85.40 | 58.60 | 40.50 | 73.20 | 70.00 | 84.0 | 75.5 | 79.5 | 87.8 |
| YOLOv8 [65] | 57.70 | 83.20 | 65.00 | 42.80 | 77.30 | 59.30 | 87.2 | 75.9 | 82.4 | 86.5 |
| YOLOv10 [66] | 57.30 | 83.80 | 65.10 | 43.80 | 75.80 | 41.70 | 82.5 | 73.9 | 77.9 | 86.2 |
| YOLOv11 [67] | 58.00 | 83.40 | 64.80 | 43.50 | 77.70 | 33.40 | 82.1 | 73.5 | 77.5 | 85.9 |
| MCEM (Ours) | 60.00 | 85.20 | 67.50 | 45.10 | 79.20 | 71.80 | 90.3 | 79.3 | 84.5 | 88.4 |

| Case | FEM | F2M | DHM | P (%) | R (%) | (%) | (%) | F1 (%) | GFLOPs | Speed (ms) |
|---|---|---|---|---|---|---|---|---|---|---|
| Base | × | × | × | 95.2 | 93.4 | 97.5 | 68.8 | 94.3 | 6.5 | 3.4 |
| Case 1 | ✓ | × | × | 94.2 | 93.2 | 97.0 | 69.5 | 93.7 | 6.3 | 5.5 |
| Case 2 | × | ✓ | × | 95.9 | 94.2 | 98.3 | 72.0 | 95.0 | 6.2 | 3.0 |
| Case 3 | × | × | ✓ | 96.6 | 94.7 | 98.5 | 72.5 | 95.6 | 6.4 | 2.6 |
| Case 4 | ✓ | ✓ | × | 97.0 | 94.7 | 98.8 | 72.1 | 95.8 | 6.0 | 3.0 |
| Case 5 | ✓ | ✓ | ✓ | 96.5 | 94.7 | 98.1 | 73.5 | 95.6 | 6.1 | 2.9 |

| Case | $AP$ (%) | $AP_{50}$ (%) | $AP_{75}$ (%) | $AP_S$ (%) | $AP_M$ (%) | $AP_L$ (%) |
|---|---|---|---|---|---|---|
| Base | 68.3 | 96.8 | 80.3 | 65.4 | 73.8 | 66.6 |
| Case 1 | 69.4 | 96.5 | 84.2 | 66.1 | 75.8 | 69.1 |
| Case 2 | 69.7 | 97.2 | 83.4 | 66.5 | 74.6 | 73.8 |
| Case 3 | 70.3 | 97.3 | 84.4 | 67.5 | 74.8 | 76.6 |
| Case 4 | 69.6 | 97.9 | 82.9 | 66.5 | 75.1 | 72.3 |
| Case 5 | 70.2 | 96.9 | 84.7 | 67.3 | 74.8 | 77.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, H.; He, M.; Yang, Z.; Gan, L. MCEM: Multi-Cue Fusion with Clutter Invariant Learning for Real-Time SAR Ship Detection. Sensors 2025, 25, 5736. https://doi.org/10.3390/s25185736