Methodologies for Remote Bridge Inspection—Review

Abstract

1. Introduction

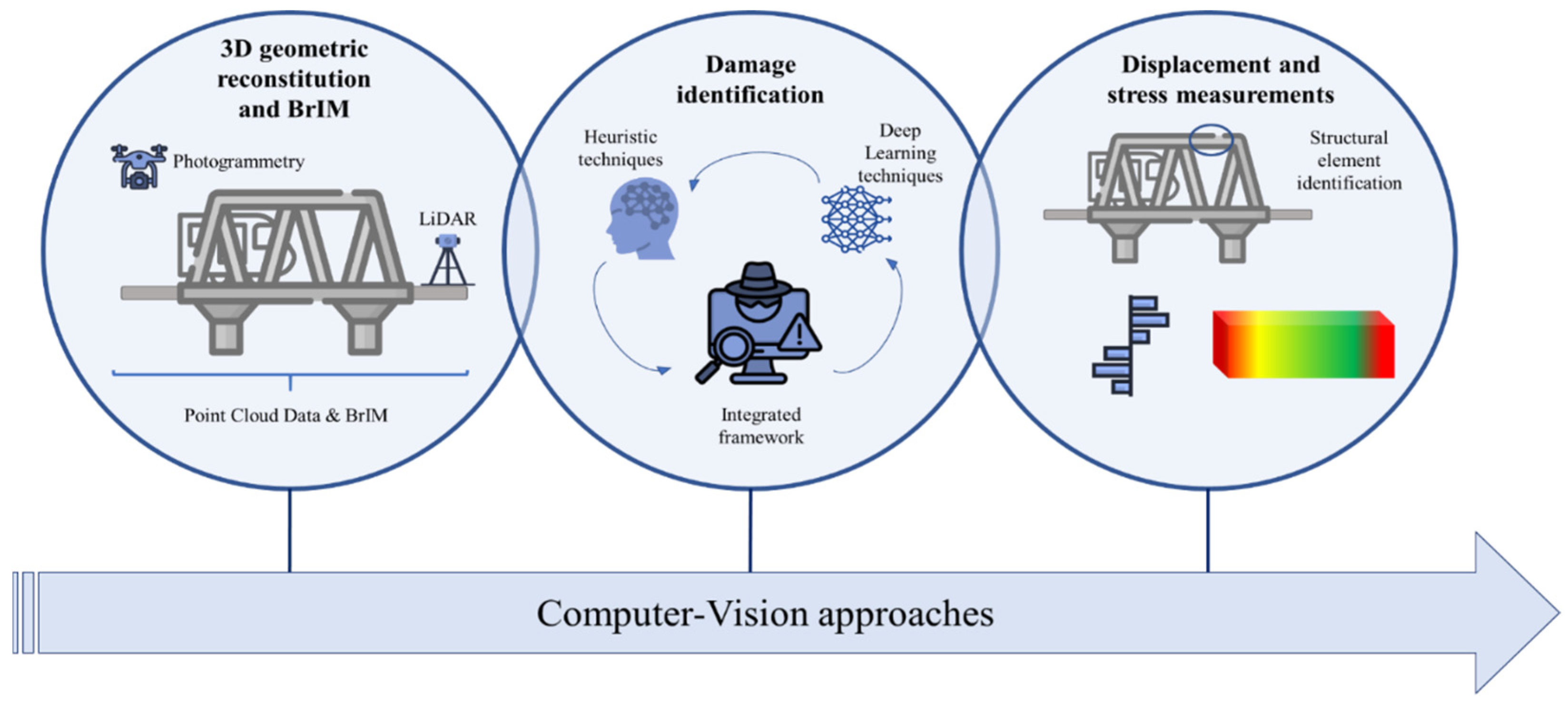

2. Vision-Based Methodologies

2.1. 3D Geometric Reconstitution

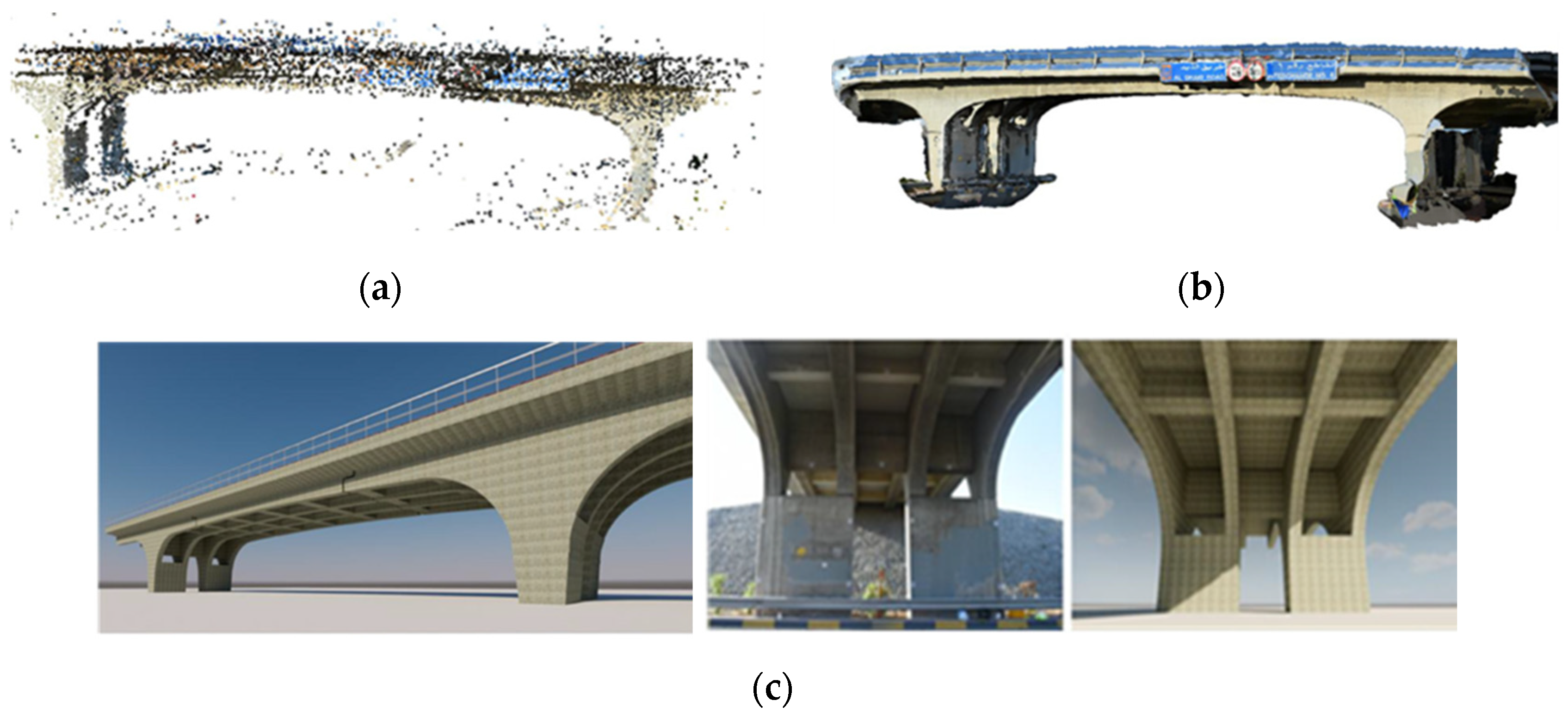

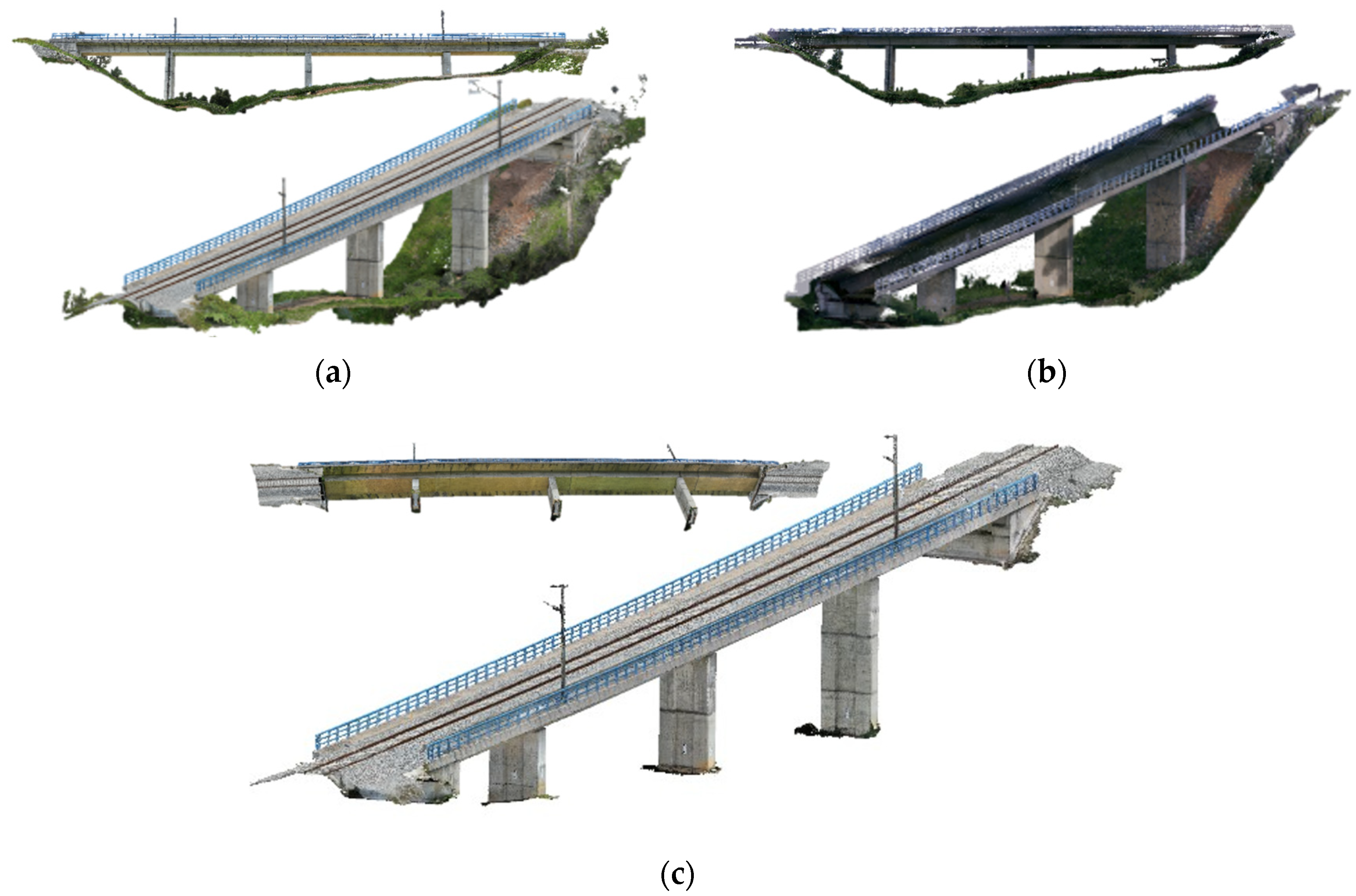

2.1.1. Photogrammetry

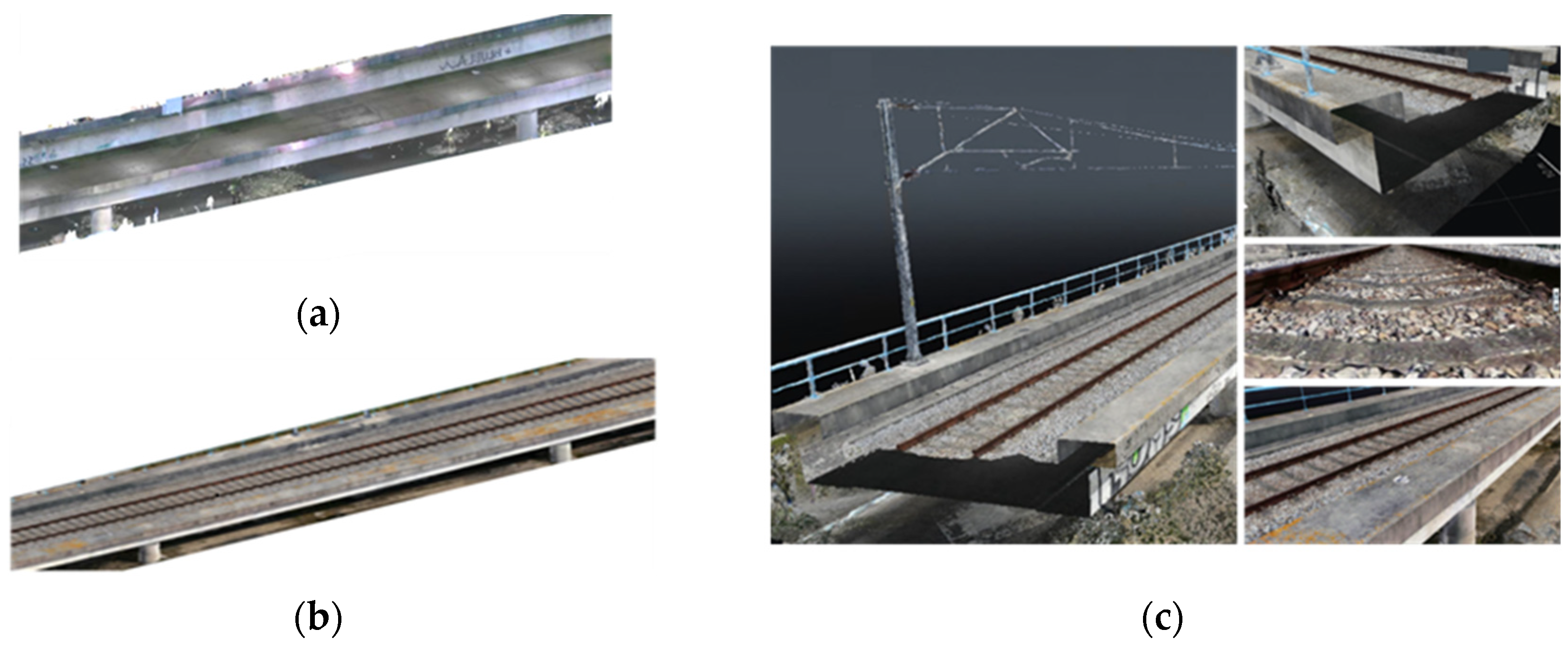

2.1.2. LiDAR

2.1.3. Hybrid Strategies

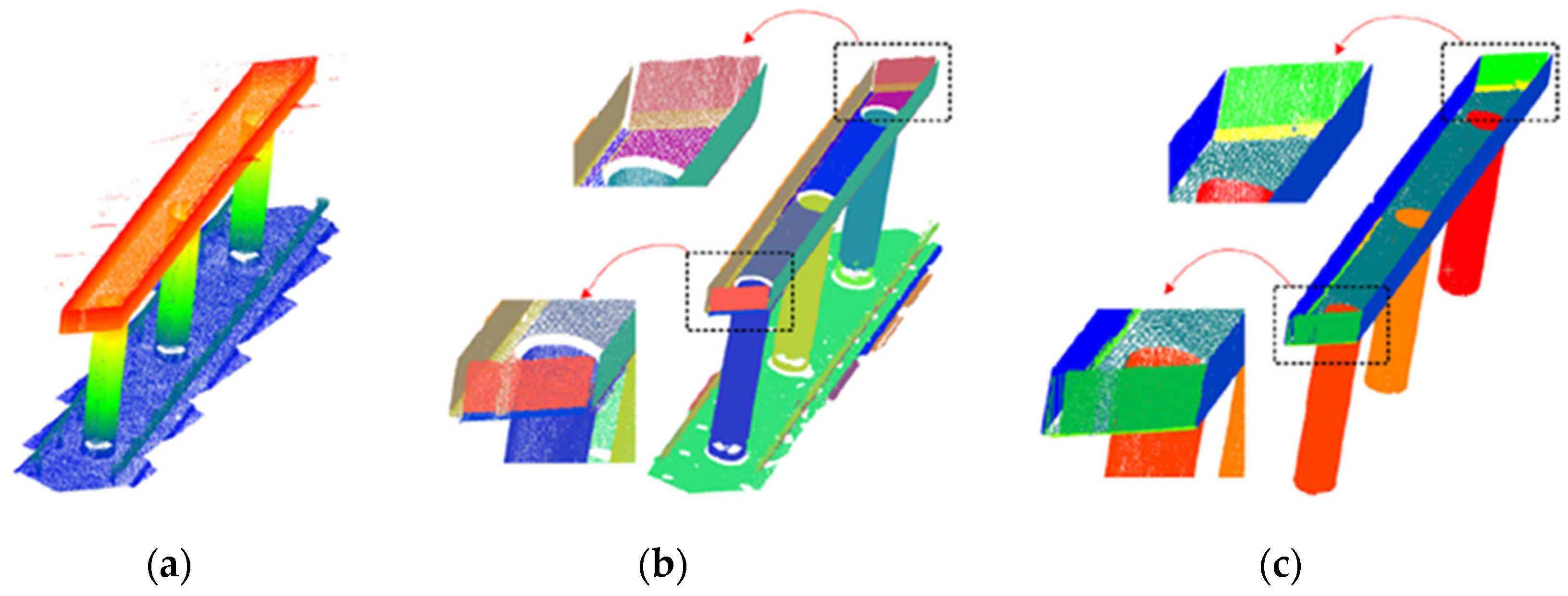

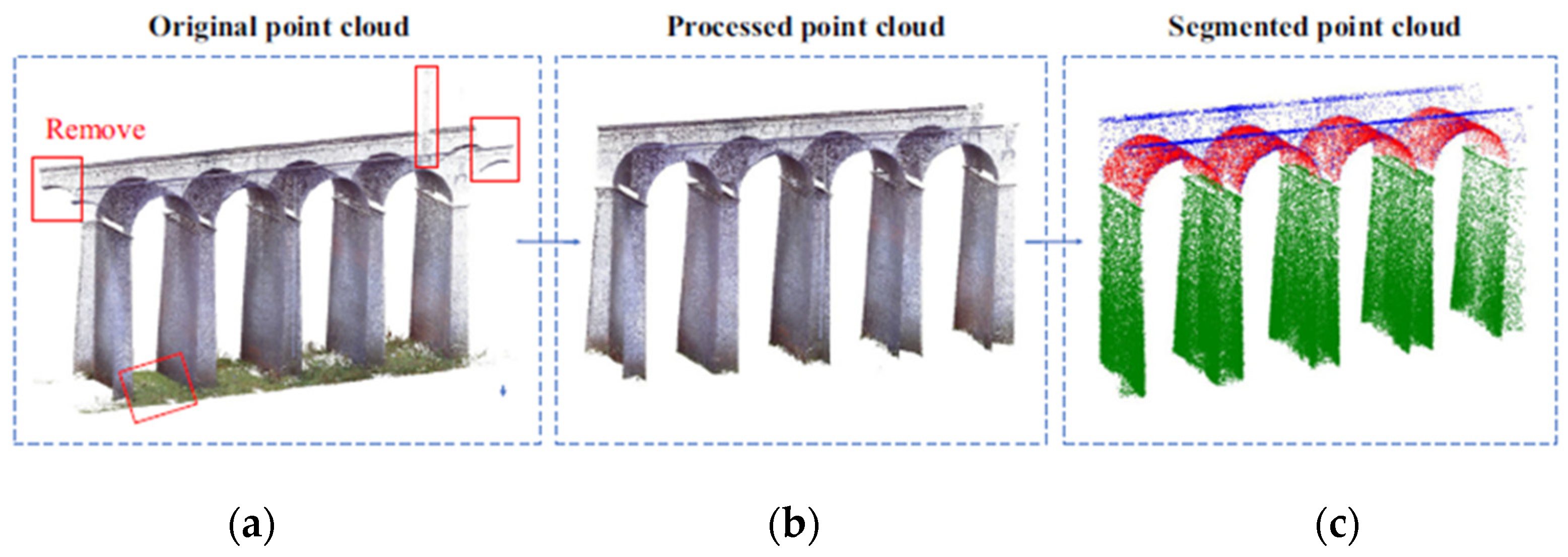

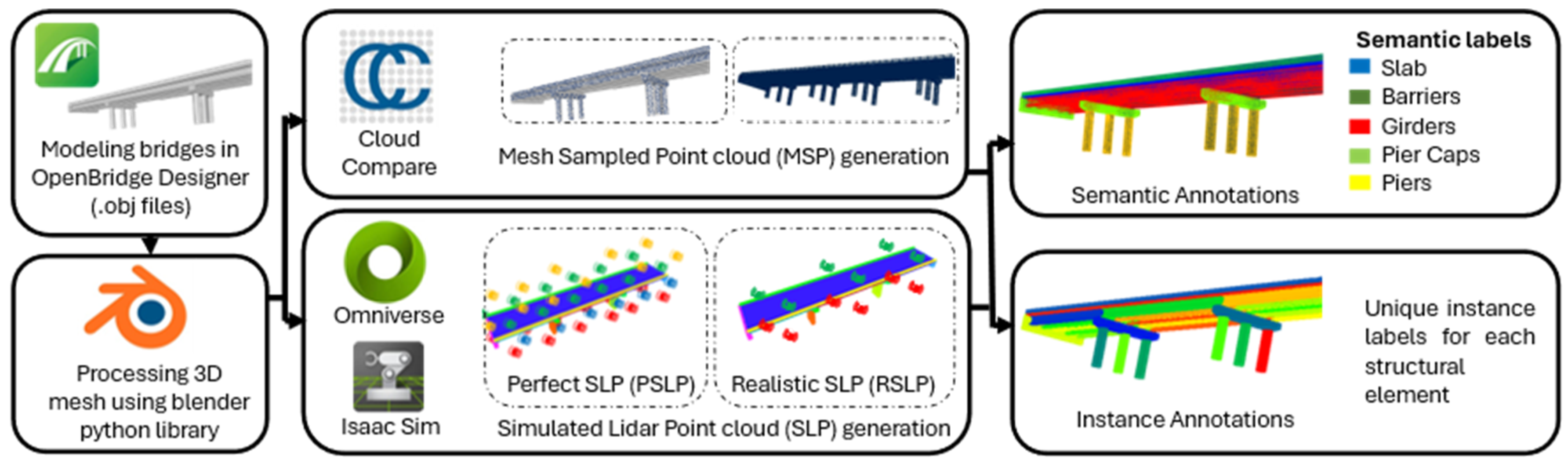

2.1.4. AI-Based Methodologies

2.2. Damage and Component Identification

2.2.1. Heuristic Techniques

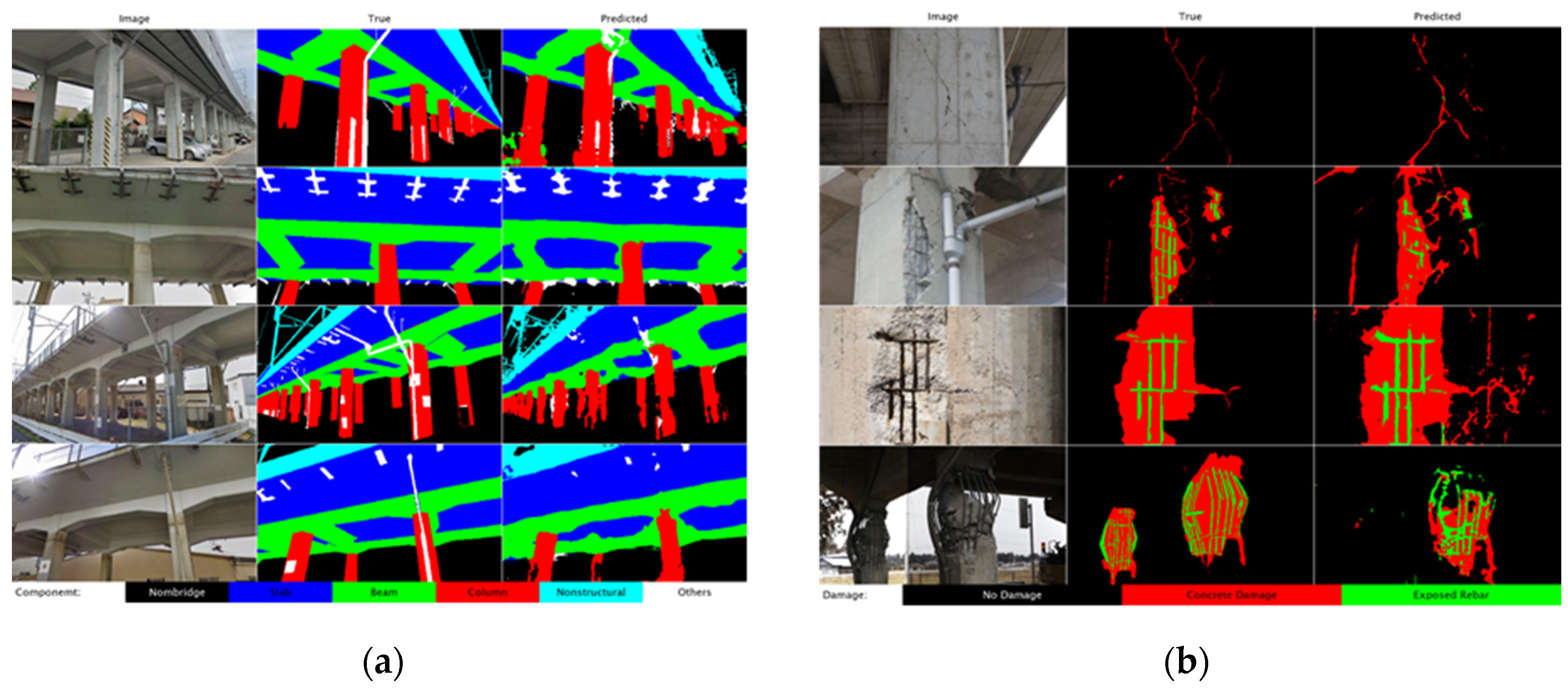

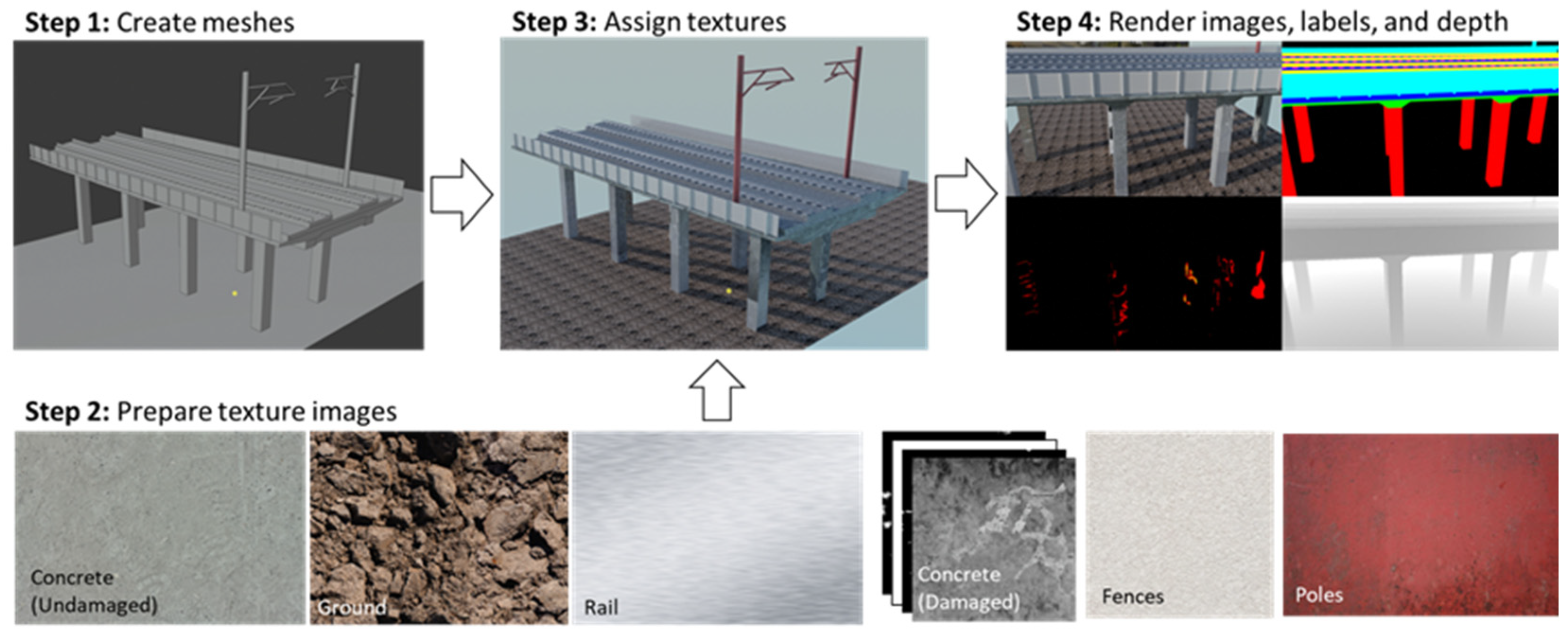

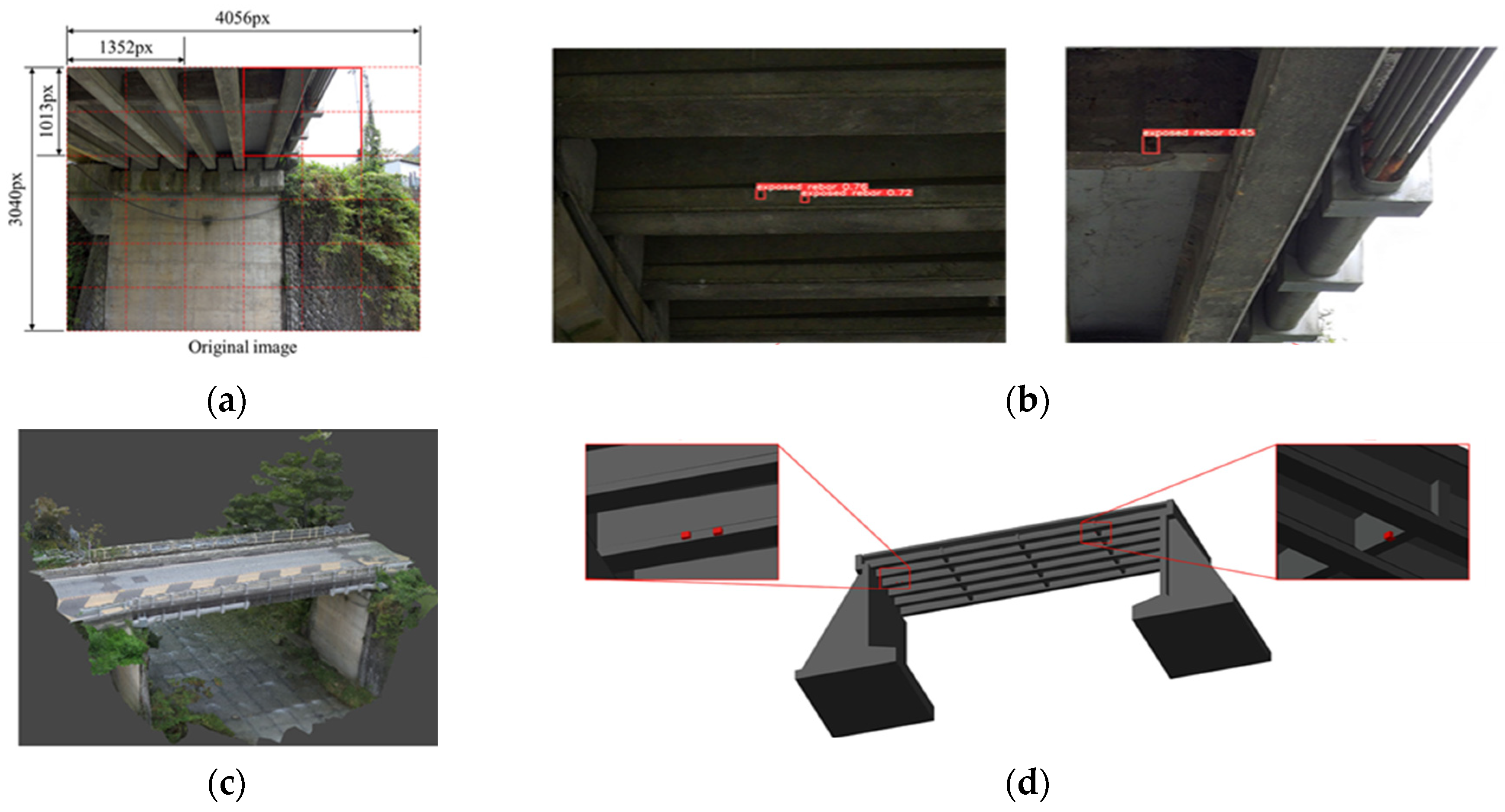

2.2.2. Deep Learning

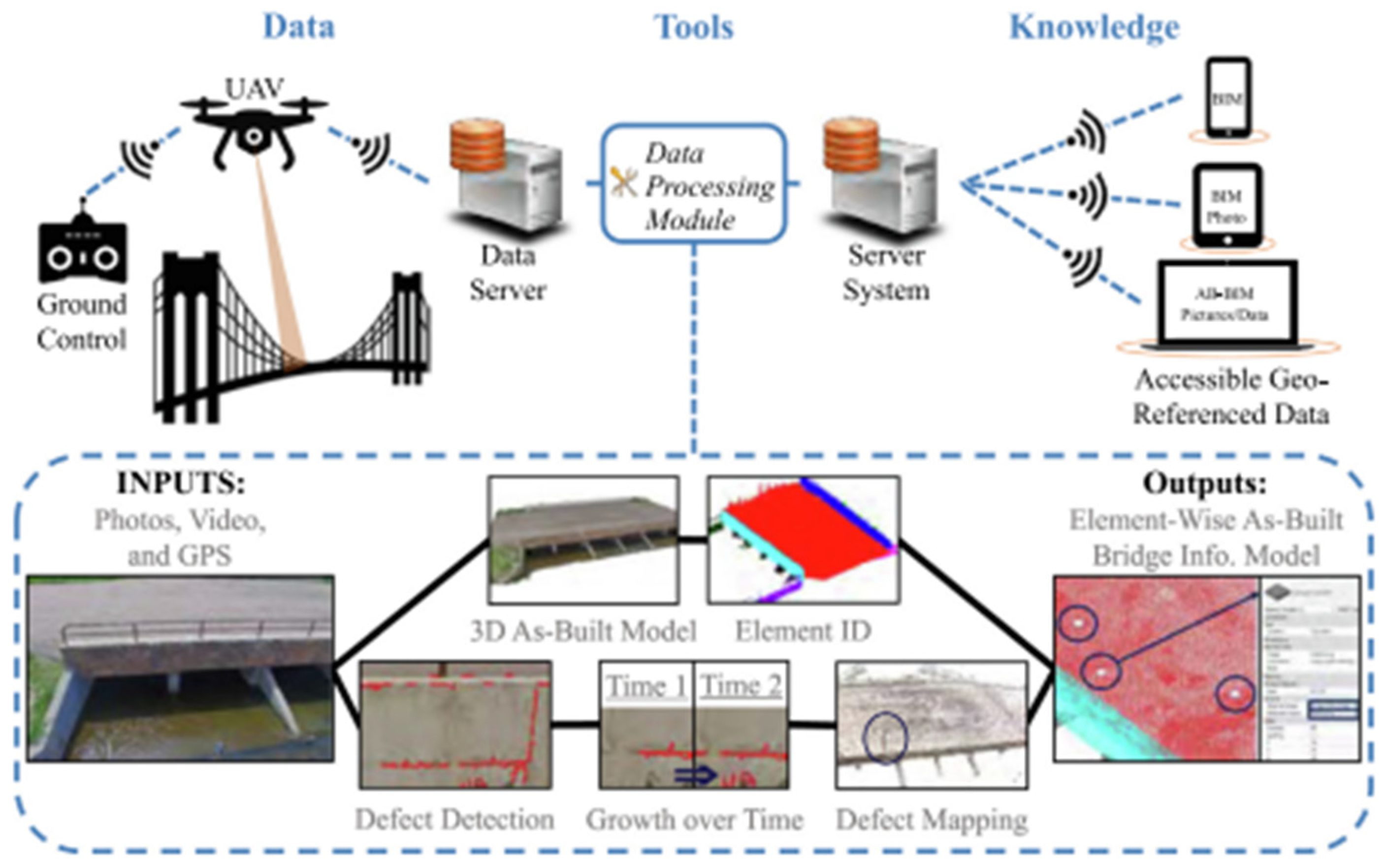

2.2.3. Integrated Frameworks

2.3. Measurement of Structural Performance Parameters

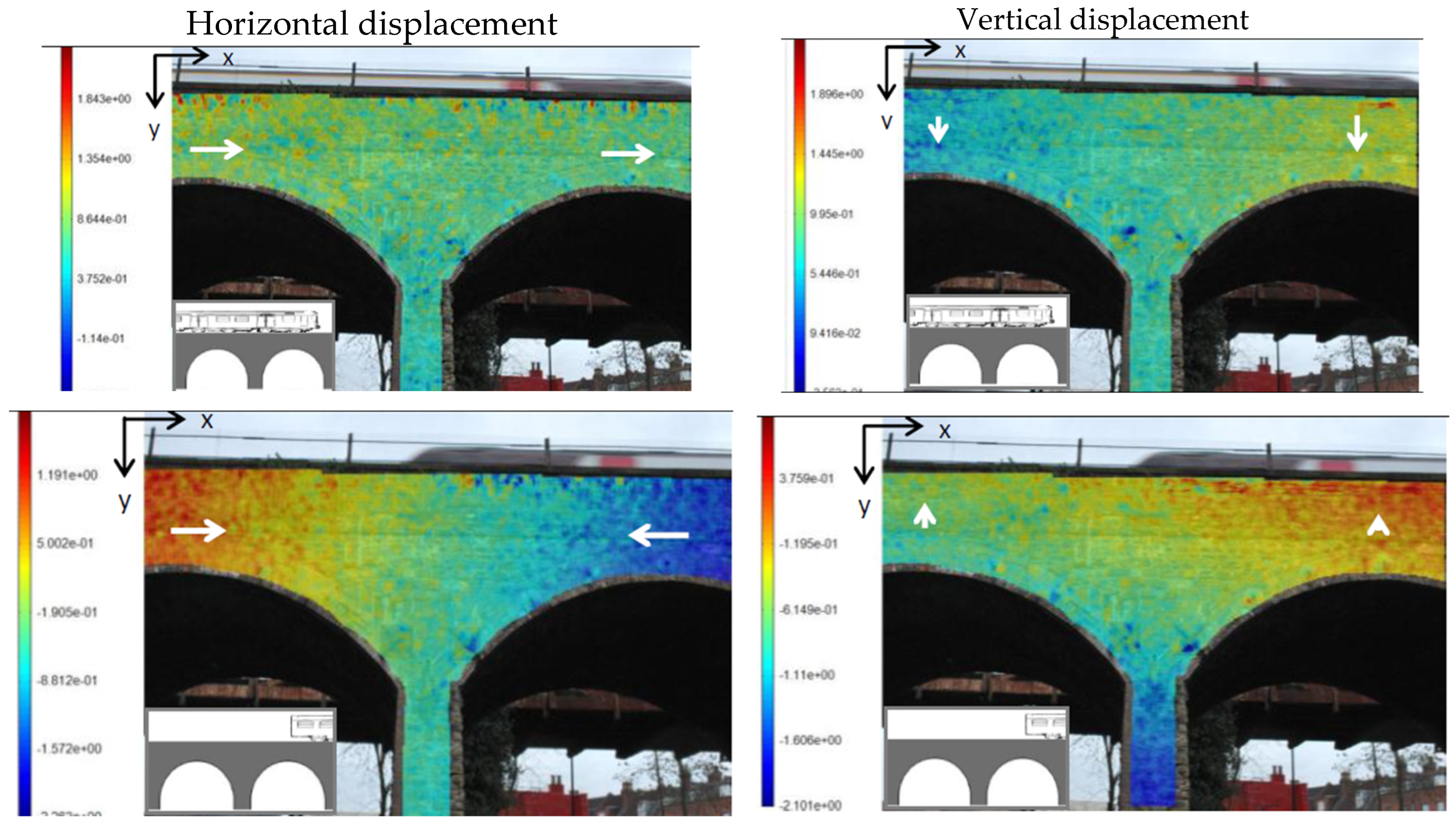

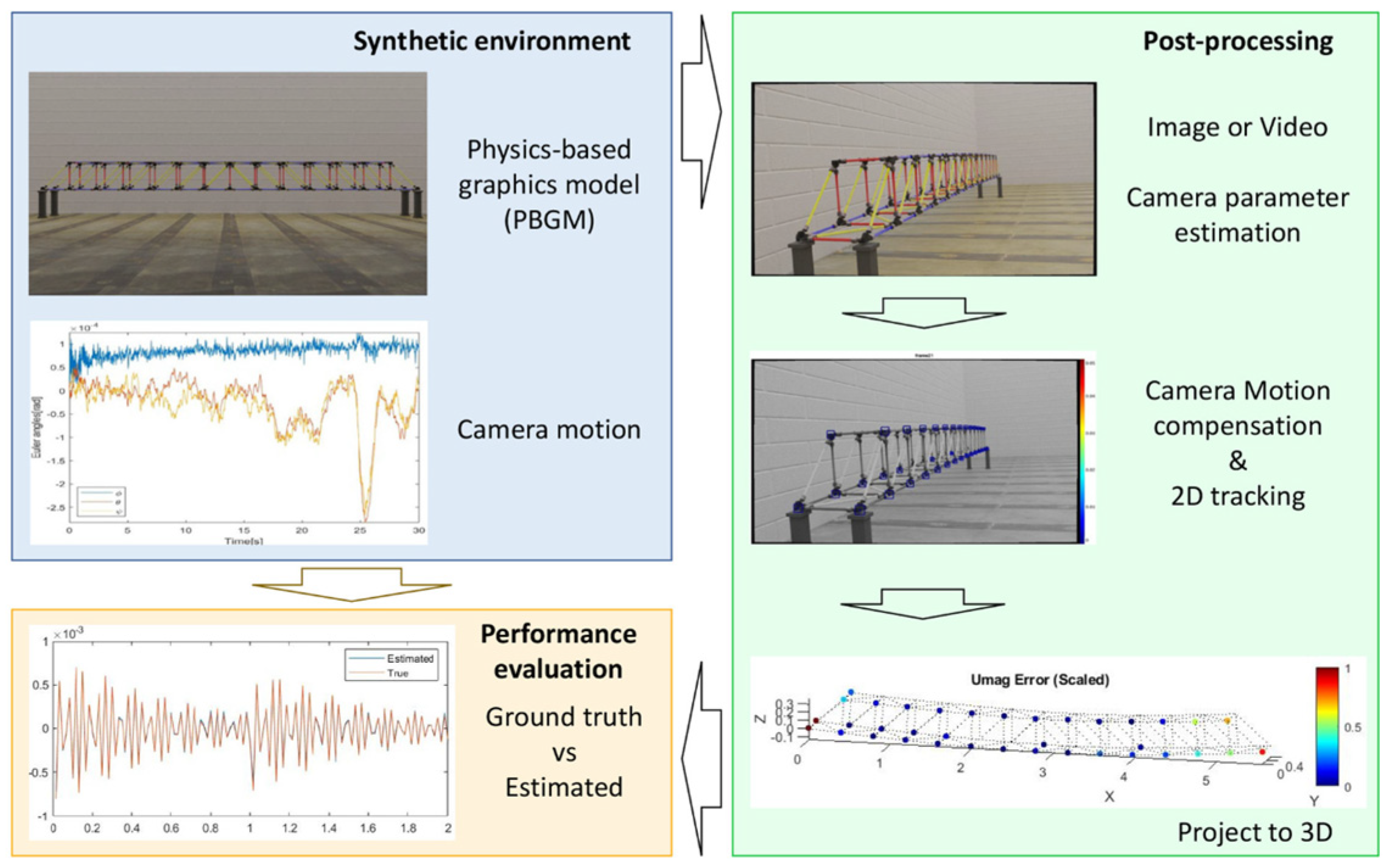

2.3.1. Displacements

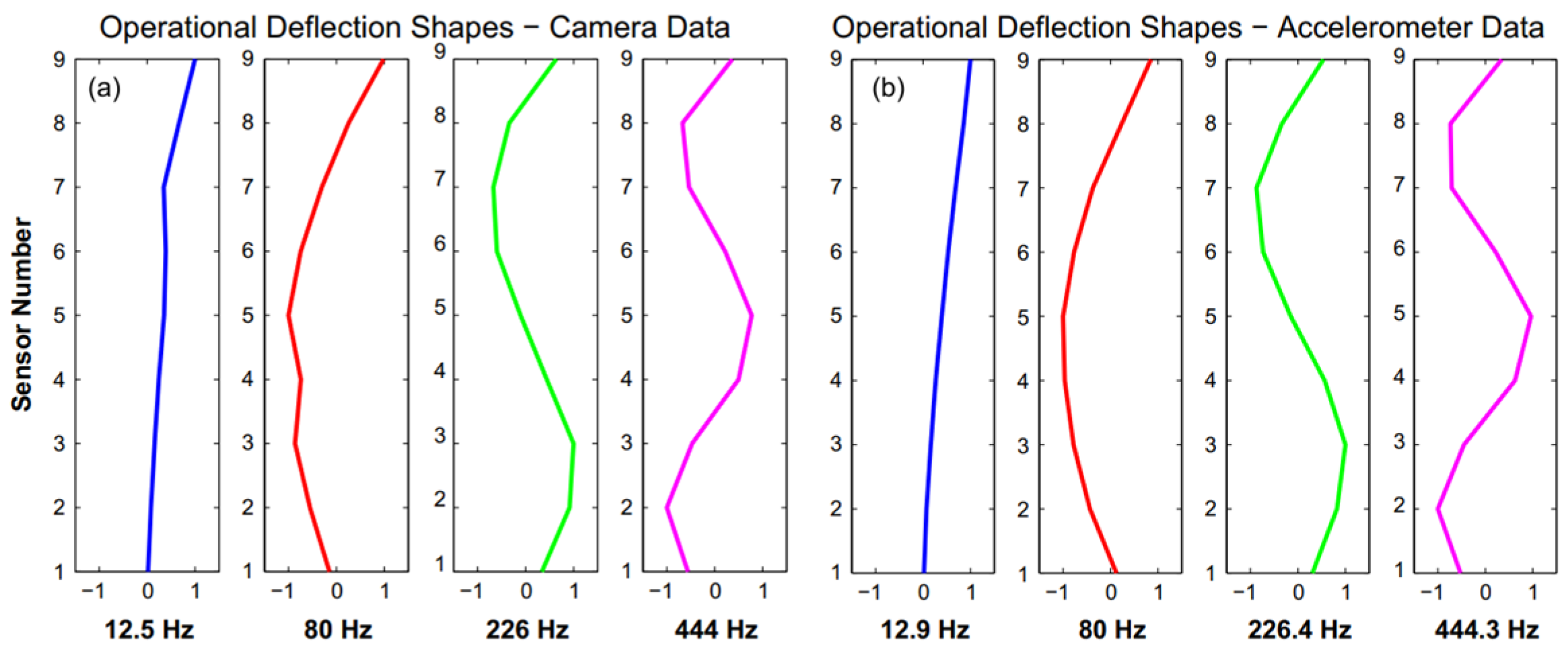

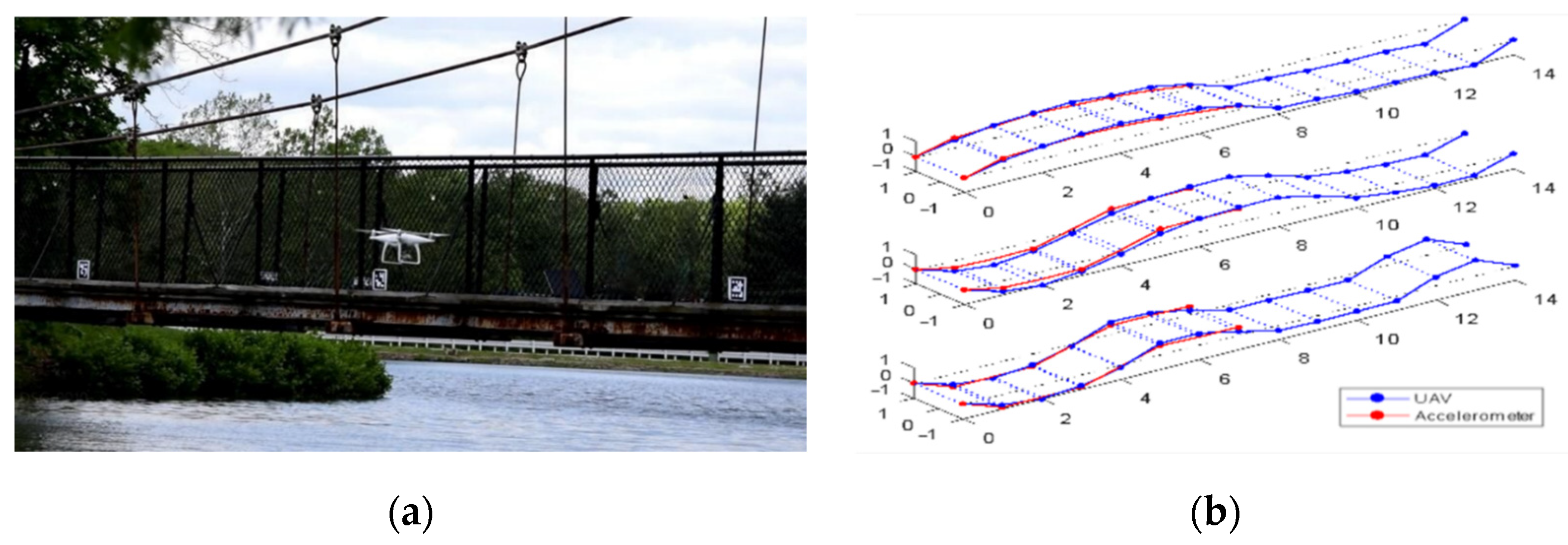

2.3.2. Modal Parameters

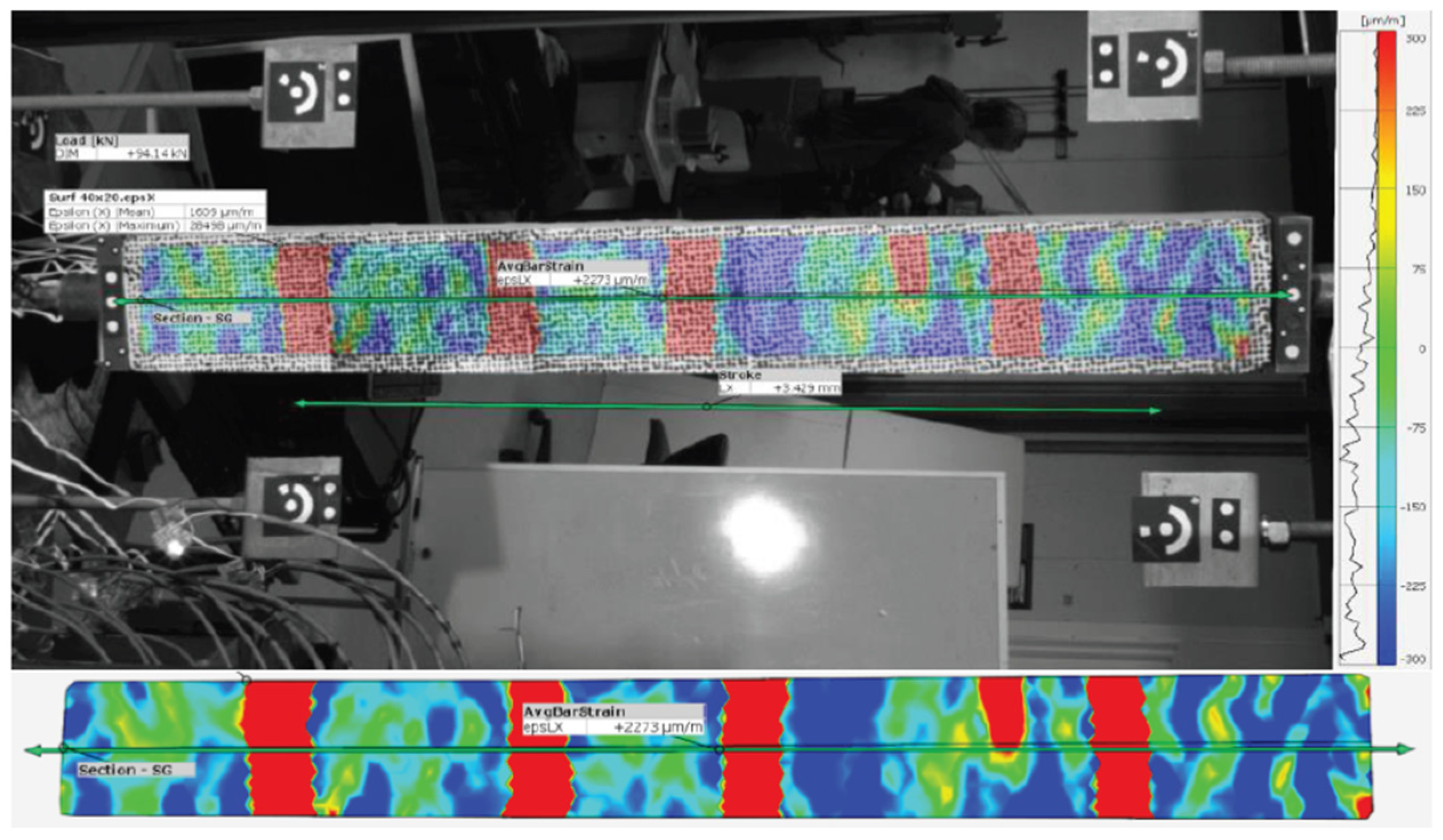

2.3.3. Strain Measurements and Stresses Estimation

3. Big Data

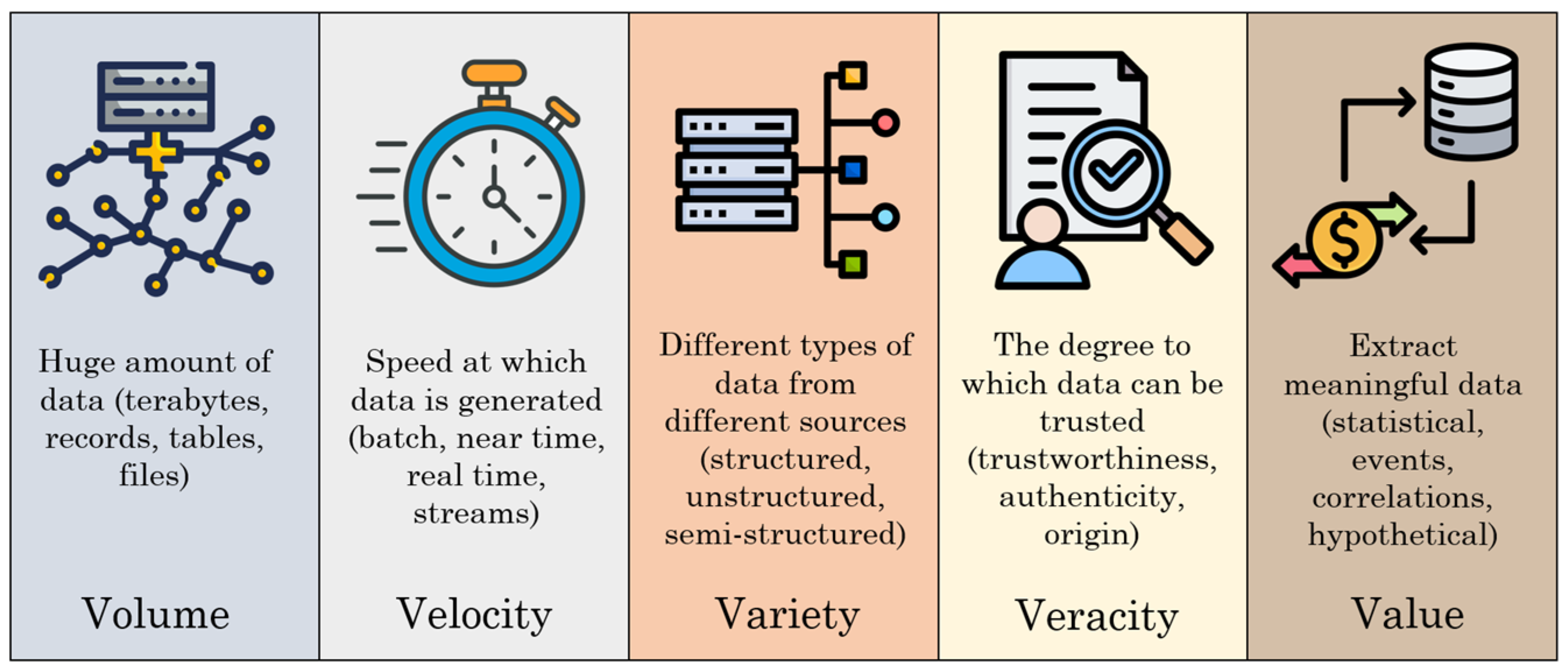

3.1. Main Features

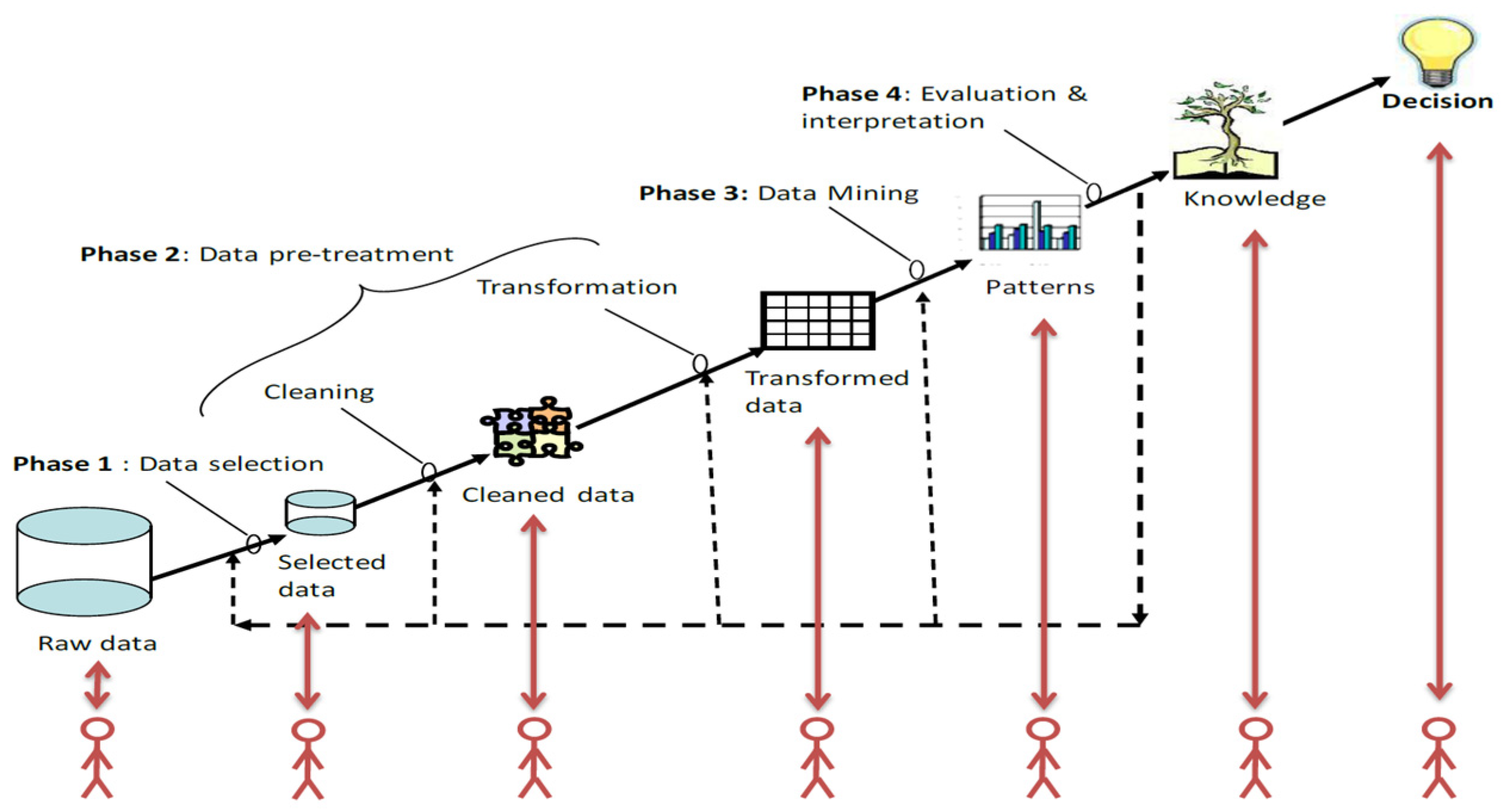

3.2. Analysis Methods

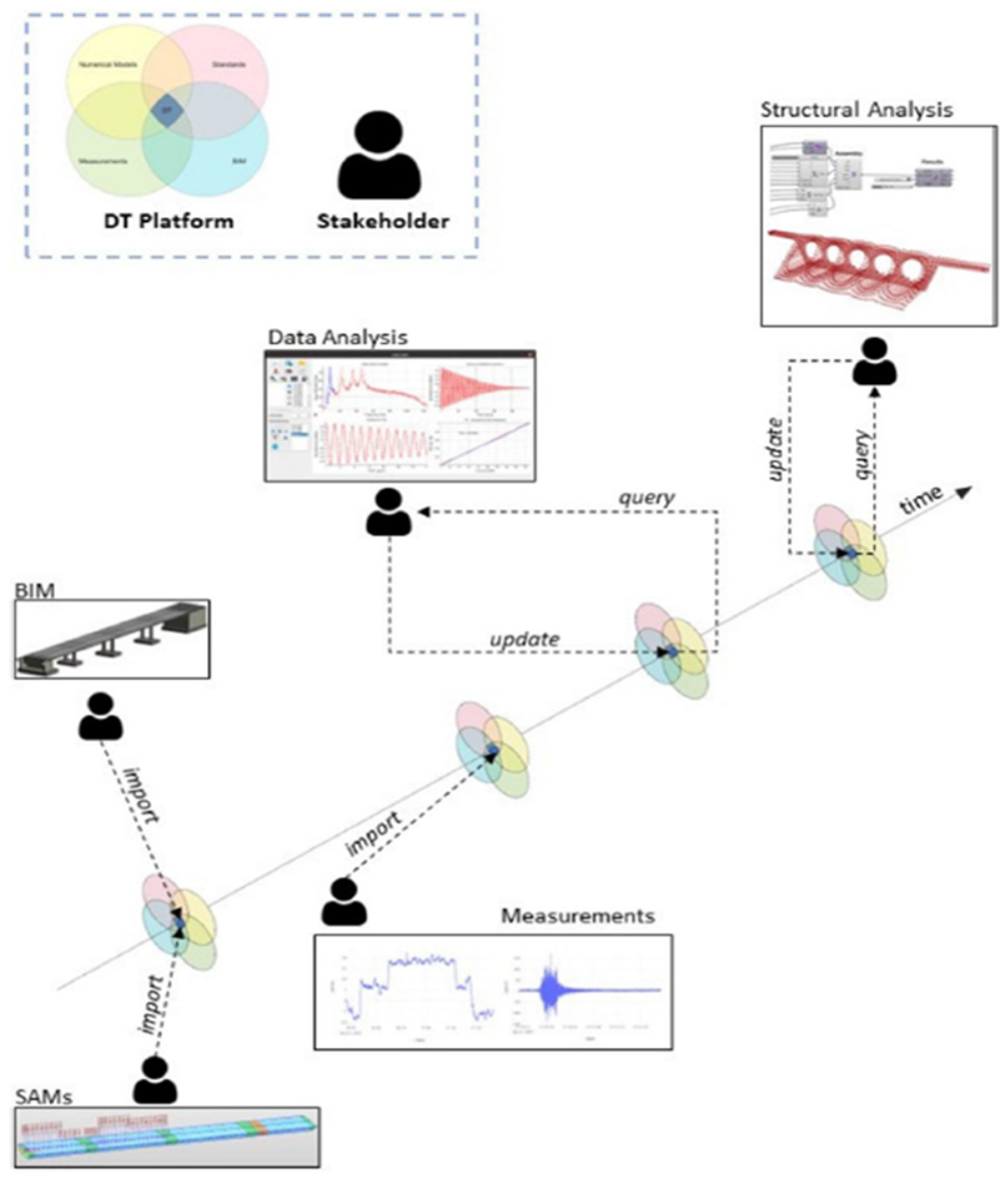

4. Digital Twins

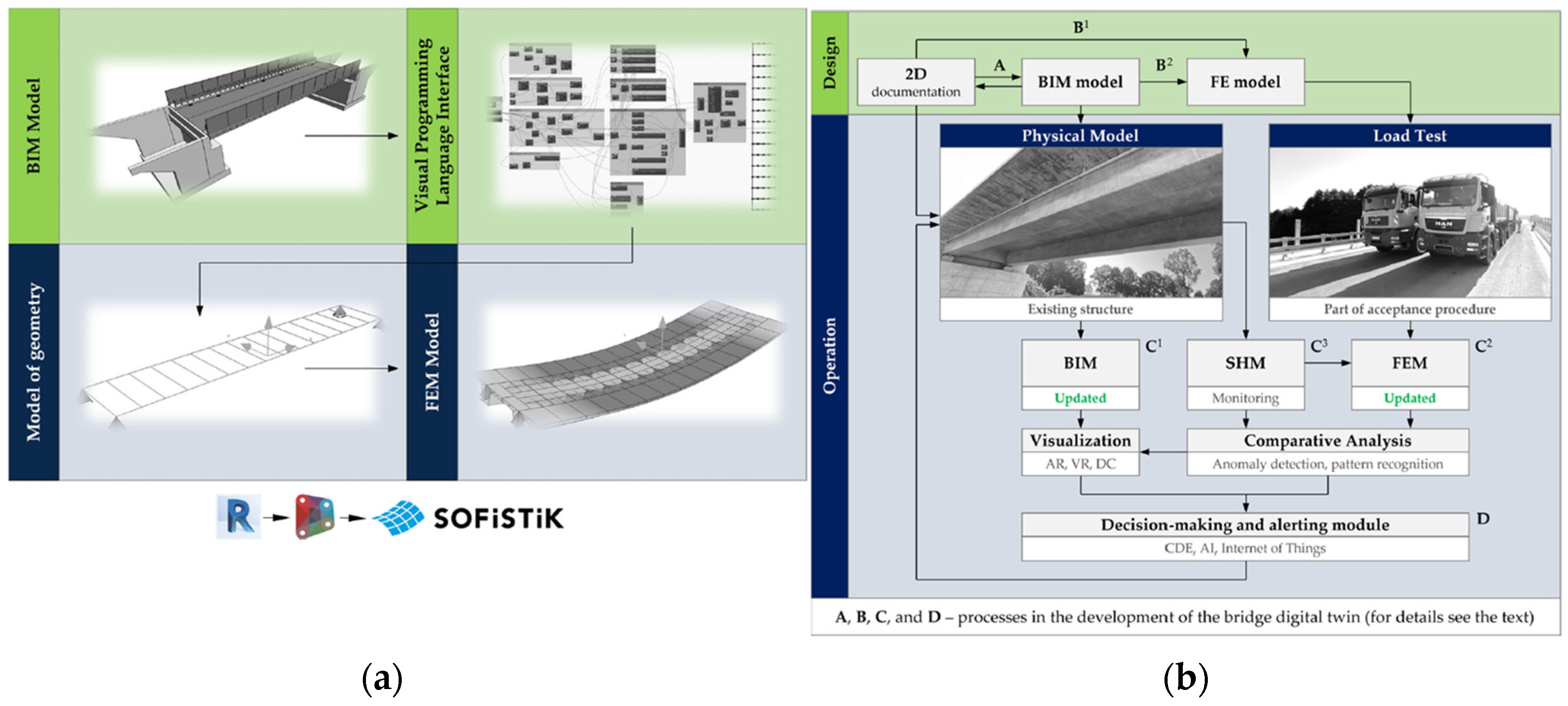

4.1. Definitions and Concepts of Digital Twin

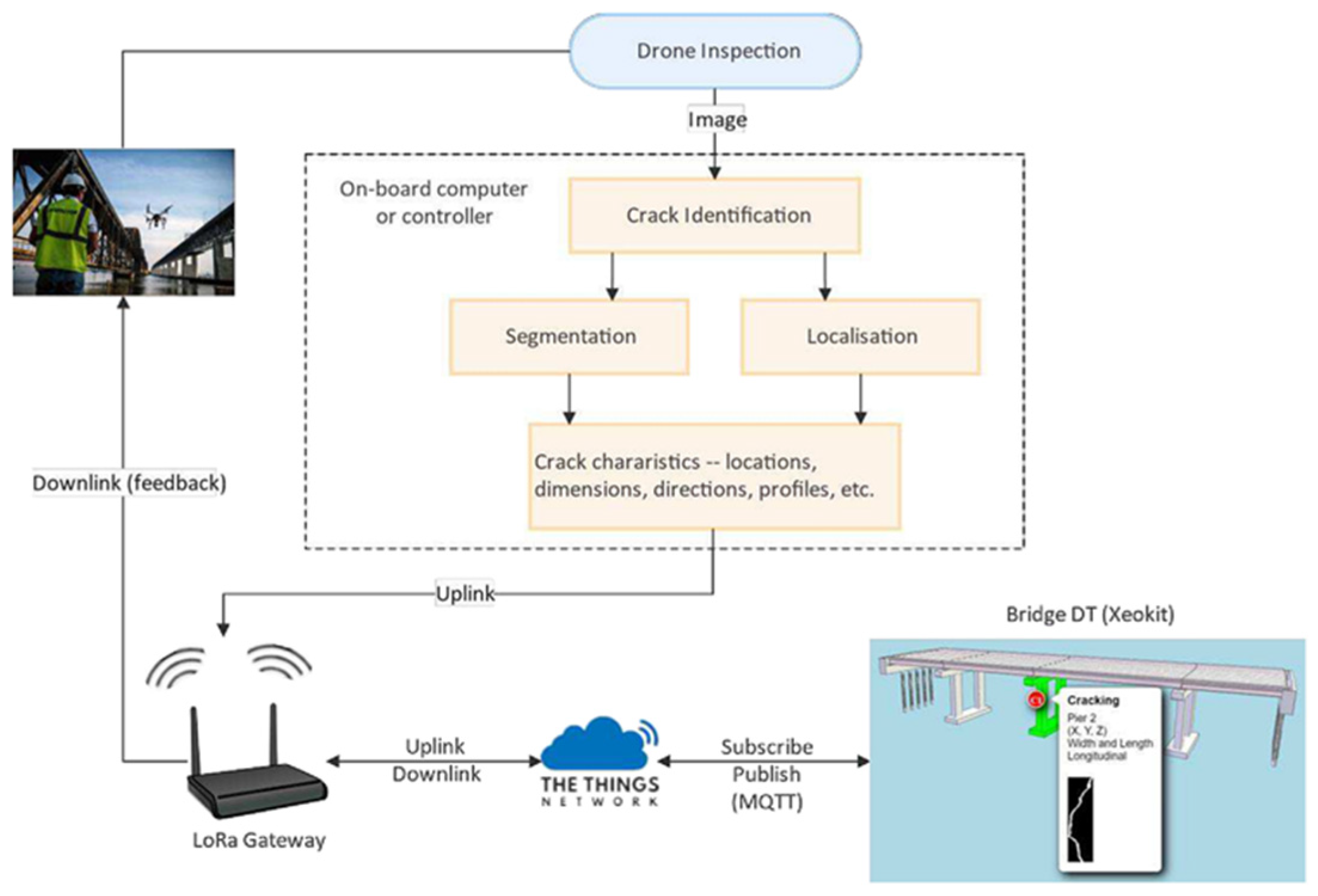

4.2. Digital Twin Architecture: Data Acquisition, Processing, and Visualisation Modules

4.3. Applications of Digital Twins in Bridge Management

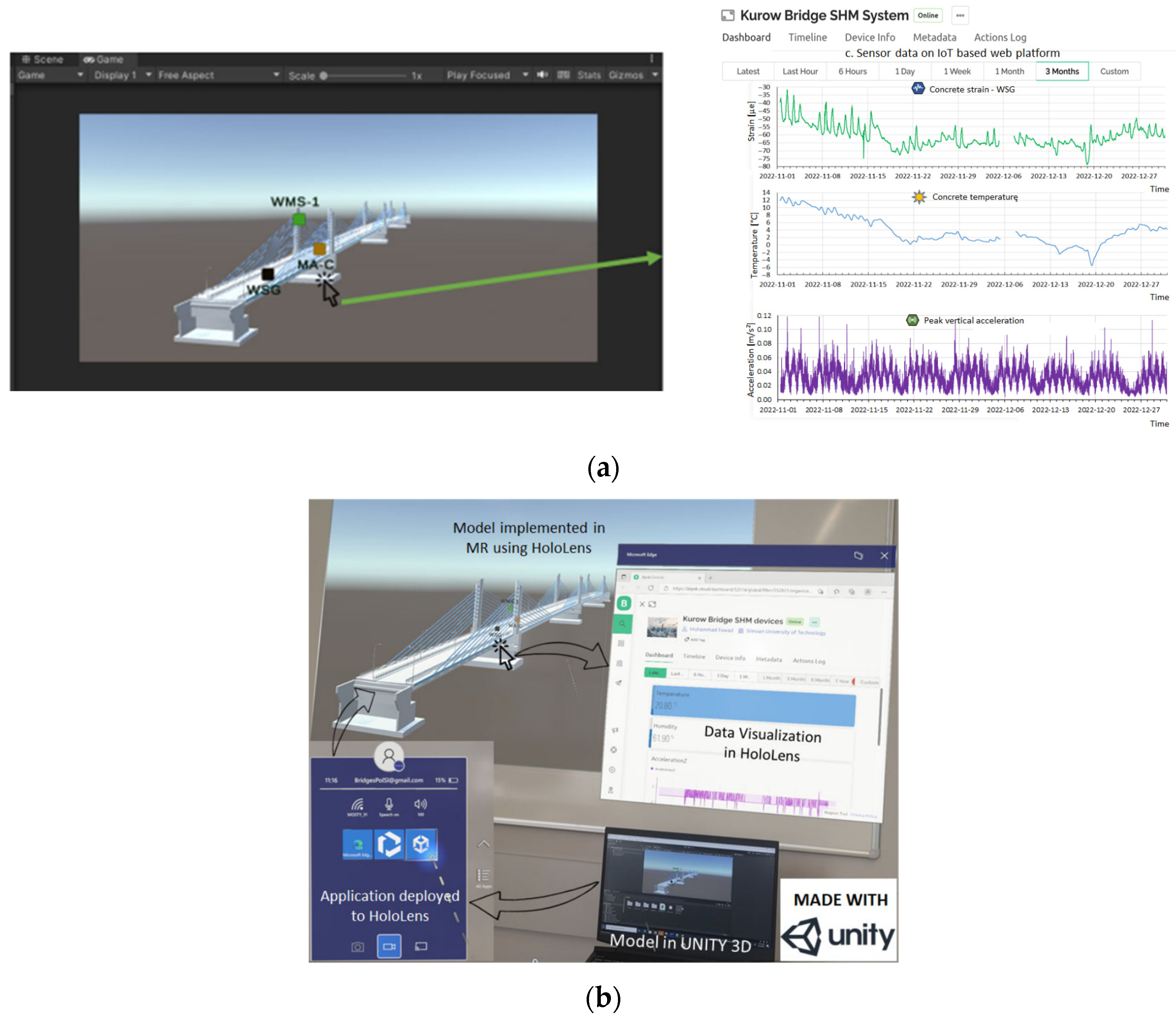

5. Augmented Reality

5.1. Augmented Reality Framework for Bridge Inspection

5.2. Capabilities, Limitations, and Technological Advances in Augmented Reality

5.3. Emerging Applications of Augmented Reality

6. Conclusions and Recommendations

Funding

Acknowledgments

Conflicts of Interest

References

- Cheng, H.; Chai, W.; Hu, J.; Ruan, W.; Shi, M.; Kim, H.; Cao, Y.; Narazaki, Y. Random bridge generator as a platform for developing computer vision-based structural inspection algorithms. J. Infrastruct. Intell. Resil. 2024, 3, 100098. [Google Scholar] [CrossRef]

- Dabous, S.A.; Al-Ruzouq, R.; Llort, D. Three-dimensional modeling and defect quantification of existing concrete bridges based on photogrammetry and computer aided design. Ain Shams Eng. J. 2023, 14, 102231. [Google Scholar] [CrossRef]

- Pepe, M.; Costantino, D.; Crocetto, N.; Garofalo, A.R. 3D Modeling of Roman Bridge by the Integration of Terrestrial and UAV Photogrammetric Survey for Structural Analysis Purpose; International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences—ISPRS Archives: Hannover, Germany, 2019. [Google Scholar] [CrossRef]

- Yu, L.C.; He, S.H.; Liu, X.S.; Ma, M.; Xiang, S.Y. Engineering-oriented bridge multiple-damage detection with damage integrity using modified faster region-based convolutional neural network. Multimed. Tools Appl. 2022, 81, 18279–18304. [Google Scholar] [CrossRef]

- Kao, S.P.; Chang, Y.C.; Wang, F.L. Combining the YOLOv4 Deep Learning Model with UAV Imagery Processing Technology in the Extraction and Quantization of Cracks in Bridges. Sensors 2023, 23, 2572. [Google Scholar] [CrossRef] [PubMed]

- Xu, Y.; Bao, Y.Q.; Chen, J.H.; Zuo, W.M.; Li, H. Surface fatigue crack identification in steel box girder of bridges by a deep fusion convolutional neural network based on consumer-grade camera images. Struct. Health Monit.-Int. J. 2019, 18, 653–674. [Google Scholar] [CrossRef]

- Narazaki, Y.; Hoskere, V.; Eick, B.A.; Smith, M.D.; Spencer, B.F. Vision-based dense displacement and strain estimation of miter gates with the performance evaluation using physics-based graphics models. Smart Struct. Syst. 2019, 24, 709–721. [Google Scholar] [CrossRef]

- Narazaki, Y.; Gomez, F.; Hoskere, V.; Smith, M.D.; Spencer, B.F. Efficient development of vision-based dense three-dimensional displacement measurement algorithms using physics-based graphics models. Struct. Health Monit.-Int. J. 2021, 20, 1841–1863. [Google Scholar] [CrossRef]

- Chen, J.G.; Wadhwa, N.; Cha, Y.J.; Durand, F.; Freeman, W.T.; Buyukozturk, O. Modal identification of simple structures with high-speed video using motion magnification. J. Sound Vib. 2015, 345, 58–71. [Google Scholar] [CrossRef]

- Nguyen, D.H.; Nguyen, Q.B.; Bui-Tien, T.; De Roeck, G.; Wahab, M.A. Damage detection in girder bridges using modal curvatures gapped smoothing method and Convolutional Neural Network: Application to Bo Nghi bridge. Theor. Appl. Fract. Mech. 2020, 109, 102728. [Google Scholar] [CrossRef]

- Wangchuk, S.; Siringoringo, D.M.; Fujino, Y. Modal analysis and tension estimation of stay cables using noncontact vision-based motion magnification method. Struct. Control. Health Monit. 2022, 29, e2957. [Google Scholar] [CrossRef]

- Jana, D.; Nagarajaiah, S.; Yang, Y. Computer vision-based real-time cable tension estimation algorithm using complexity pursuit from video and its application in Fred-Hartman cable-stayed bridge. Struct. Control. Health Monit. 2022, 29, e2985. [Google Scholar] [CrossRef]

- Demchenko, Y.; Grosso, P.; De Laat, C.; Membrey, P. Addressing Big Data Issues in Scientific Data Infrastructure. In Proceedings of the First International Symposium on Big Data and Data Analytics in Collaboration (BDDAC 2013), San Diego, CA, USA, 20–24 May 2013. [Google Scholar]

- Awadallah, O.; Grolinger, K.; Sadhu, A. Remote collaborative framework for real-time structural condition assessment using Augmented Reality. Adv. Eng. Inform. 2024, 62, 102652. [Google Scholar] [CrossRef]

- Hossain, M.; Hanson, J.-W.; Moreu, F. Real-Time Theoretical and Experimental Dynamic Mode Shapes for Structural Analysis Using Augmented Reality. In Topics in Modal Analysis & Testing; Dilworth, B., Mains, M., Eds.; Springer International Publishing: Cham, Switzerland, 2021; Volume 8, pp. 351–356. [Google Scholar]

- Carter, E.; Sakr, M.; Sadhu, A. Augmented Reality-Based Real-Time Visualization for Structural Modal Identification. Sensors 2024, 24, 1609. [Google Scholar] [CrossRef] [PubMed]

- Casas, J.R.; Chacón, R.; Catbas, N.; Riveiro, B.; Tonelli, D. Remote Sensing in Bridge Digitalization: A Review. Remote Sens. 2024, 16, 4438. [Google Scholar] [CrossRef]

- Rakoczy, A.M.; Ribeiro, D.; Hoskere, V.; Narazaki, Y.; Olaszek, P.; Karwowski, W.; Cabral, R.; Guo, Y.; Futai, M.M.; Milillo, P.; et al. Technologies and Platforms for Remote and Autonomous Bridge Inspection—Review. Struct. Eng. Int. 2025, 35, 354–376. [Google Scholar] [CrossRef]

- Hoskere, V.; Narazaki, Y.; Hoang, T.A.; Spencer, B.F. MaDnet: Multi-task semantic segmentation of multiple types of structural materials and damage in images of civil infrastructure. J. Civ. Struct. Health Monit. 2020, 10, 757–773. [Google Scholar] [CrossRef]

- Zhai, G.H.; Narazaki, Y.; Wang, S.; Shajihan, S.A.V.; Spencer, B.F. Synthetic data augmentation for pixel-wise steel fatigue crack identification using fully convolutional networks. Smart Struct. Syst. 2022, 29, 237–250. [Google Scholar] [CrossRef]

- Narazaki, Y.; Hoskere, V.; Yoshida, K.; Spencer, B.F.; Fujino, Y. Synthetic environments for vision-based structural condition assessment of Japanese high-speed railway viaducts. Mech. Syst. Signal Process. 2021, 160, 107850. [Google Scholar] [CrossRef]

- Narazaki, Y.; Hoskere, V.; Hoang, T.A.; Fujino, Y.; Sakurai, A.; Spencer, B.F. Vision-based automated bridge component recognition with high-level scene consistency. Comput. Civ. Infrastruct. Eng. 2020, 35, 465–482. [Google Scholar] [CrossRef]

- Yuan, X.; Smith, A.; Moreu, F.; Sarlo, R.; Lippitt, C.D.; Hojati, M.; Alampalli, S.; Zhang, S. Automatic evaluation of rebar spacing and quality using LiDAR data: Field application for bridge structural assessment. Autom. Constr. 2022, 146, 104708. [Google Scholar] [CrossRef]

- Autodesk. Autodesk Revit, Version 2024; Autodesk Inc.: San Francisco, CA, USA, 2024.

- Truong-Hong, L.; Lindenbergh, R. Automatically extracting surfaces of reinforced concrete bridges from terrestrial laser scanning point clouds. Autom. Constr. 2022, 135, 104127. [Google Scholar] [CrossRef]

- Li, X.; Hu, Y.; Jie, Y.; Zhao, C.; Zhang, Z. Dual-Frequency Lidar for Compressed Sensing 3D Imaging Based on All-Phase Fast Fourier Transform. JOPR 2023, 1, 74–81. [Google Scholar] [CrossRef]

- Tzortzinis, G.; Ai, C.B.; Brena, S.F.; Gerasimidis, S. Using 3D laser scanning for estimating the capacity of corroded steel bridge girders: Experiments, computations and analytical solutions. Eng. Struct. 2022, 265, 114407. [Google Scholar] [CrossRef]

- Cabral, R.; Oliveira, R.; Ribeiro, D.; Rakoczy, A.M.; Santos, R.; Azenha, M.; Correia, J. Railway Bridge Geometry Assessment Supported by Cutting-Edge Reality Capture Technologies and 3D As-Designed Models. Infrastructures 2023, 8, 114. [Google Scholar] [CrossRef]

- Cabral, R.; Santos, R.; Correia, J.; Ribeiro, D. Optimal reconstruction of railway bridges using a machine learning framework based on UAV photogrammetry and LiDAR. Struct. Infrastruct. Eng. 2025, 1–21. [Google Scholar] [CrossRef]

- Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. NeRF: Representing scenes as neural radiance fields for view synthesis. Commun. ACM 2020, 65, 99–106. [Google Scholar] [CrossRef]

- Cui, D.; Wang, W.; Hu, W.; Peng, J.; Zhao, Y.; Zhang, Y.; Wang, J. 3D reconstruction of building structures incorporating neural radiation fields and geometric constraints. Autom. Constr. 2024, 165, 105517. [Google Scholar] [CrossRef]

- Kim, G.; Cha, Y. 3D Pixelwise damage mapping using a deep attention based modified Nerfacto. Autom. Constr. 2024, 168, 105878. [Google Scholar] [CrossRef]

- Kerbl, B.; Kopanas, G.; Leimkuehler, T.; Drettakis, G. 3D Gaussian Splatting for Real-Time Radiance Field Rendering. ACM Trans. Graph. 2023, 42, 139. [Google Scholar] [CrossRef]

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395. [Google Scholar] [CrossRef]

- Blanco, A.C.T. Quantify LiDAR’s Geometry Capturing Capability for Structural and Construction Assessment. Ph.D. Thesis, Rutgers The State University of New Jersey, New Brunswick, NJ, USA, 2020. [Google Scholar]

- Jing, Y.X.; Sheil, B.; Acikgoz, S. Segmentation of large-scale masonry arch bridge point clouds with a synthetic simulator and the BridgeNet neural network. Autom. Constr. 2022, 142, 104459. [Google Scholar] [CrossRef]

- Kim, H.; Yoon, J.; Sim, S.H. Automated bridge component recognition from point clouds using deep learning. Struct. Control. Health Monit. 2020, 27, e2591. [Google Scholar] [CrossRef]

- Bahreini, F.; Hammad, A. Point Cloud Semantic Segmentation of Concrete Surface Defects Using Dynamic Graph CNN. In Proceedings of the International Symposium on Automation and Robotics in Construction, Dubai, United Arab Emirates, 2–4 November 2021. [Google Scholar] [CrossRef]

- Shi, M.; Kim, H.; Narazaki, Y. Development of large-scale synthetic 3D point cloud datasets for vision-based bridge structural condition assessment. Adv. Struct. Eng. 2024, 27, 2901–2928. [Google Scholar] [CrossRef]

- Tareen, S.K.; Saleem, Z. A Comparative Analysis of Sift, Surf, Kaze, Akaze, Orb, and Brisk. In Proceedings of the 2018 International Conference on Computing, Mathematics and Engineering Technologies (iCoMET), Sukkur, Pakistan, 3–4 March 2018. [Google Scholar] [CrossRef]

- Deng, L.; Yuan, X.; Deng, C.; Chen, J.; Cai, Y. Image Stitching Based on Nonrigid Warping for Urban Scene. Sensors 2020, 20, 7050. [Google Scholar] [CrossRef]

- Jahanshahi, M.R.; Kelly, J.S.; Masri, S.F.; Sukhatme, G.S. A survey and evaluation of promising approaches for automatic image-based defect detection of bridge structures. Struct. Infrastruct. Eng. 2009, 5, 455–486. [Google Scholar] [CrossRef]

- Ribeiro, D.; Santos, R.; Shibasaki, A.; Montenegro, P.; Carvalho, H.; Calcada, R. Remote inspection of RC structures using unmanned aerial vehicles and heuristic image processing. Eng. Fail. Anal. 2020, 117, 104813. [Google Scholar] [CrossRef]

- Abdel-Qader, I.; Abudayyeh, O.; Kelly, M. Analysis of Edge-Detection Techniques for Crack Identification in Bridges. J. Comput. Civ. Eng. 2003, 17, 255–263. [Google Scholar] [CrossRef]

- Ribeiro, D.; Santos, R.; Cabral, R.; Shibasaki, A. Remote Inspection and Monitoring of Civil Engineering Structures Based on Unmanned Aerial Vehicles. In Proceedings of the International Conference of the European Association on Quality Control of Bridges and Structures, Padua, Italy, 29 August–1 September 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 695–703. [Google Scholar]

- Wang, B.; Zhao, W.; Gao, P.; Zhang, Y.; Wang, Z. Crack Damage Detection Method via Multiple Visual Features and Efficient Multi-Task Learning Model. Sensors 2018, 18, 1796. [Google Scholar] [CrossRef]

- Moon, H.-G.; Kim, J.-H. Inteligent Crack Detecting Algorithm on the Concrete Crack Image Using Neural Network. In Proceedings of the 28th International Symposium on Automation and Robotics in Construction (ISARC), Seoul, Republic of Korea, 21 June–2 July 2011. [Google Scholar] [CrossRef]

- Rutzinger, M.; Höfle, B.; Vetter, M.; Pfeifer, N. Digital terrain models from airborne laser scanning for the automatic extraction of natural and anthropogenic linear structures. In Geomorphological Mapping: A Professional Handbook of Techniques and Applications; Elsevier: Amsterdam, The Netherlands, 2011; pp. 475–488. [Google Scholar]

- Potenza, F.; Rinaldi, C.; Ottaviano, E.; Gattulli, V. A robotics and computer-aided procedure for defect evaluation in bridge inspection. J. Civ. Struct. Health Monit. 2020, 10, 471–484. [Google Scholar] [CrossRef]

- Zollini, S.; Alicandro, M.; Dominici, D.; Quaresima, R.; Giallonardo, M. UAV Photogrammetry for Concrete Bridge Inspection Using Object-Based Image Analysis (OBIA). Remote Sens. 2020, 12, 3180. [Google Scholar] [CrossRef]

- Agarap, A.F. Deep Learning using Rectified Linear Units (ReLU). arXiv 2018, arXiv:1803.08375. [Google Scholar] [CrossRef]

- Deng, L.; Sun, T.; Yang, L.; Cao, R. Binocular video-based 3D reconstruction and length quantification of cracks in concrete structures. Autom. Constr. 2023, 148, 104743. [Google Scholar] [CrossRef]

- Dung, C.V.; Anh, L.D. Autonomous concrete crack detection using deep fully convolutional neural network. Autom. Constr. 2019, 99, 52–58. [Google Scholar] [CrossRef]

- Alexander, Q.G.; Hoskere, V.; Narazaki, Y.; Maxwell, A.; Spencer, B.F. Fusion of thermal and RGB images for automated deep learning based crack detection in civil infrastructure. AI Civ. Eng. 2022, 1, 3. [Google Scholar] [CrossRef]

- Hu, K.; Chen, Z.; Kang, H.; Tang, Y. 3D vision technologies for a self-developed structural external crack damage recognition robot. Autom. Constr. 2024, 159, 105262. [Google Scholar] [CrossRef]

- Kim, B.; Cho, S. Automated Multiple Concrete Damage Detection Using Instance Segmentation Deep Learning Model. Appl. Sci. 2020, 10, 8008. [Google Scholar] [CrossRef]

- Narazaki, Y.; Hoskere, V.; Chowdhary, G.; Spencer, B.F. Vision-based navigation planning for autonomous post-earthquake inspection of reinforced concrete railway viaducts using unmanned aerial vehicles. Autom. Constr. 2022, 137, 104214. [Google Scholar] [CrossRef]

- Kim, H.; Narazaki, Y.; Spencer, B.F. Automated bridge component recognition using close-range images from unmanned aerial vehicles. Eng. Struct. 2023, 274, 115184. [Google Scholar] [CrossRef]

- Zhang, C.B.; Chang, C.C.; Jamshidi, M. Concrete bridge surface damage detection using a single-stage detector. Comput. Civ. Infrastruct. Eng. 2020, 35, 389–409. [Google Scholar] [CrossRef]

- Sun, S.; Liu, W.; Cui, R. YOLO Based Bridge Surface Defect Detection Using Decoupled Prediction. In Proceedings of the 2022 7th Asia-Pacific Conference on Intelligent Robot Systems (ACIRS), Tianjin, China, 1–3 July 2022; IEEE: Piscataway, NJ, USA, 2022. [Google Scholar] [CrossRef]

- Lee, J.I.; Sim, C.; Detweiler, C.; Barnes, B. Computer-Vision Based UAV Inspection for Steel Bridge Connections. Struct. Health Monit. 2019. [Google Scholar] [CrossRef]

- Hoskere, V.; Narazaki, Y.; Spencer, B.F.; Smith, M.D. Deep Learning-Based Damage Detection of Miter Gates Using Synthetic Imagery from Computer Graphics; DEStech Publications Inc.: Lancaster, PA, USA, 2019. [Google Scholar] [CrossRef]

- Narazaki, Y.; Pang, W.; Wang, G.; Chai, W. Unsupervised Domain Adaptation Approach for Vision-Based Semantic Understanding of Bridge Inspection Scenes without Manual Annotations. J. Bridg. Eng. 2024, 29, 4023118. [Google Scholar] [CrossRef]

- Rahman, A.U.; Hoskere, V. Instance Segmentation of Reinforced Concrete Bridges with Synthetic Point Clouds. arXiv 2024, arXiv:2409.16381. [Google Scholar] [CrossRef]

- Perry, B.J.; Guo, Y.; Mahmoud, H.N. Automated site-specific assessment of steel structures through integrating machine learning and fracture mechanics. Autom. Constr. 2022, 133, 104022. [Google Scholar] [CrossRef]

- Perry, B.; Guo, Y.; Atadero, R.; Lindt, J. Streamlined Bridge Inspection System Utilizing Unmanned Aerial Vehicles (UAVs) and Machine Learning. Measurement 2020, 164, 108048. [Google Scholar] [CrossRef]

- Yamane, T.; Chun, P.; Honda, R. Detecting and localising damage based on image recognition and structure from motion, and reflecting it in a 3D bridge model. Struct. Infrastruct. Eng. 2022, 20, 594–606. [Google Scholar] [CrossRef]

- Stephen, G.A.; Brownjohn, J.M.W.; Taylor, C.A. Measurements of static and dynamic displacement from visual monitoring of the Humber Bridge. Eng. Struct. 1993, 15, 197–208. [Google Scholar] [CrossRef]

- Jie, G.; Zhu, C. Dynamic displacement measurement of large-scale structures based on the Lucas–Kanade template tracking algorithm. Mech. Syst. Signal Process. 2016, 66, 425–436. [Google Scholar] [CrossRef]

- Ye, X.W.; Yi, T.-H.; Dong, C.; Liu, T.; Bai, H. Multi-point displacement monitoring of bridges using a vision-based approach. Wind Struct. 2015, 20, 315–326. [Google Scholar] [CrossRef]

- Du, W.; Lei, D.; Hang, Z.; Ling, Y.; Bai, P.; Zhu, F. Short-distance and long-distance bridge displacement measurement based on template matching and feature detection methods. J. Civ. Struct. Health Monit. 2022, 13, 343–360. [Google Scholar] [CrossRef]

- Wang, M.; Xu, F.; Koo, K.-Y.; Wang, P. Real-time displacement measurement for long-span bridges using a compact vision-based system with speed-optimized template matching. Comput. Civ. Infrastruct. Eng. 2024, 39, 1988–2009. [Google Scholar] [CrossRef]

- Dong, C.-Z.; Celik, O.; Catbas, F.N. Marker-free monitoring of the grandstand structures and modal identification using computer vision methods. Struct. Health Monit. 2018, 18, 1491–1509. [Google Scholar] [CrossRef]

- Javh, J.; Slavič, J.; Boltežar, M. The subpixel resolution of optical-flow-based modal analysis. Mech. Syst. Signal Process. 2017, 88, 89–99. [Google Scholar] [CrossRef]

- Pan, B.; Qian, K.M.; Xie, H.M.; Asundi, A. Two-dimensional digital image correlation for in-plane displacement and strain measurement: A review. Meas. Sci. Technol. 2009, 20, 062001. [Google Scholar] [CrossRef]

- Du, W.K.; Lei, D.; Bai, P.X.; Zhu, F.P.; Huang, Z.T. Dynamic measurement of stay-cable force using digital image techniques. Measurement 2020, 151, 107211. [Google Scholar] [CrossRef]

- Dhanasekar, M.; Prasad, P.; Dorji, J.; Zahra, T. Serviceability Assessment of Masonry Arch Bridges Using Digital Image Correlation. J. Bridg. Eng. 2019, 24, 04018120. [Google Scholar] [CrossRef]

- Wang, Y.; Tumbeva, M.D.; Thrall, A.P.; Zoli, T.P. Pressure-activated adhesive tape pattern for monitoring the structural condition of steel bridges via digital image correlation. Struct. Control. Health Monit. 2019, 26, e2382. [Google Scholar] [CrossRef]

- Yan, Z.; Jin, Z.; Teng, S.; Chen, G.; Bassir, D. Measurement of Bridge Vibration by UAVs Combined with CNN and KLT Optical-Flow Method. Appl. Sci. 2022, 12, 5181. [Google Scholar] [CrossRef]

- Hu, J.; Zhu, Q.; Zhang, Q. Global Vibration Comfort Evaluation of Footbridges Based on Computer Vision. Sensors 2022, 22, 7077. [Google Scholar] [CrossRef]

- Bai, Y.; Demir, A.; Yilmaz, A.; Sezen, H. Assessment and monitoring of bridges using various camera placements and structural analysis. J. Civ. Struct. Health Monit. 2024, 14, 321–337. [Google Scholar] [CrossRef]

- Dong, C.; Bas, S.; Catbas, F.N. Applications of Computer Vision-Based Structural Monitoring on Long-Span Bridges in Turkey. Sensors 2023, 23, 8161. [Google Scholar] [CrossRef]

- Mousa, M.A.; Yussof, M.M.; Udi, U.J.; Nazri, F.M.; Kamarudin, M.K.; Parke, G.A.R.; Assi, L.N.; Ghahari, S.A. Application of Digital Image Correlation in Structural Health Monitoring of Bridge Infrastructures: A Review. Infrastructures 2021, 6, 176. [Google Scholar] [CrossRef]

- Christensen, C.O.; Schmidt, J.W.; Halding, P.S.; Kapoor, M.; Goltermann, P. Digital Image Correlation for Evaluation of Cracks in Reinforced Concrete Bridge Slabs. Infrastructures 2021, 6, 99. [Google Scholar] [CrossRef]

- Yoneyama, S.; Ueda, H. Bridge Deflection Measurement Using Digital Image Correlation with Camera Movement Correction. Mater. Trans. 2012, 53, 285–290. [Google Scholar] [CrossRef]

- Tian, Y.; Zhang, C.; Jiang, S.; Zhang, J.; Duan, W. Noncontact cable force estimation with unmanned aerial vehicle and computer vision. Comput. Civ. Infrastruct. Eng. 2020, 36, 73–88. [Google Scholar] [CrossRef]

- Acikgoz, S.; DeJong, M.J.; Soga, K. Sensing dynamic displacements in masonry rail bridges using 2D digital image correlation. Struct. Control. Health Monit. 2018, 25, e2187. [Google Scholar] [CrossRef]

- Peddle, J.; Goudreau, A.; Carlson, E.; Santini-Bell, E. Bridge displacement measurement through digital image correlation. Bridg. Struct. 2011, 7, 165–173. [Google Scholar] [CrossRef]

- Koltsida, I.; Tomor, A.; Booth, C.A. The use of digital image correlation technique for monitoring masonry arch bridges. In Proceedings of the 7th International Conference on Arch Bridges, Trogir-Split, Croatia, 2–4 October 2013. [Google Scholar]

- Luo, K.; Kong, X.; Zhang, J.; Hu, J.; Li, J.; Tang, H. Computer Vision-Based Bridge Inspection and Monitoring: A Review. Sensors 2023, 23, 7863. [Google Scholar] [CrossRef]

- Ribeiro, D.; Santos, R.; Cabral, R.; Saramago, G.; Montenegro, P.; Carvalho, H.; Correia, J.; Calçada, R. Non-contact structural displacement measurement using Unmanned Aerial Vehicles and video-based systems. Mech. Syst. Signal Process. 2021, 160, 107869. [Google Scholar] [CrossRef]

- Han, Y.; Wu, G.; Feng, D. Vision-based displacement measurement using an unmanned aerial vehicle. Struct. Control. Health Monit. 2022, 29, e3025. [Google Scholar] [CrossRef]

- Yoon, H.; Shin, J.; Spencer, B.F., Jr. Structural Displacement Measurement Using an Unmanned Aerial System. Comput. Civ. Infrastruct. Eng. 2018, 33, 183–192. [Google Scholar] [CrossRef]

- Wadhwa, N.; Rubinstein, M.; Durand, F.; Freeman, W. Phase-Based Video Motion Processing. ACM Trans. Graph. 2013, 32, 1–10. [Google Scholar] [CrossRef]

- Yang, Y.; Dorn, C.; Mancini, T.; Talken, Z.; Kenyon, G.; Farrar, C.; Mascareñas, D. Blind identification of full-field vibration modes from video measurements with phase-based video motion magnification. Mech. Syst. Signal Process. 2017, 85, 567–590. [Google Scholar] [CrossRef]

- Chen, W.; Yan, B.; Jingbo, L.; Luo, L.; Dong, Y. Cable Force Determination Using Phase-Based Video Motion Magnification and Digital Image Correlation. Int. J. Struct. Stab. Dyn. 2021, 22, 2250036. [Google Scholar] [CrossRef]

- Civera, M.; Fragonara, L.Z.; Surace, C. An experimental study of the feasibility of phase-based video magnification for damage detection and localisation in operational deflection shapes. Strain 2020, 56, e12336. [Google Scholar] [CrossRef]

- Chu, X.; Xiang, X.; Zhou, Z. Experimental study of Euler motion amplification algorithm in bridge vibration analysis. J. Highw. Transp. Res. Dev. 2019, 36, 41–47. [Google Scholar]

- Shang, Z.; Shen, Z. Multi-point vibration measurement and mode magnification of civil structures using video-based motion processing. Autom. Constr. 2018, 93, 231–240. [Google Scholar] [CrossRef]

- Hoskere, V.; Park, J.-W.; Yoon, H.; Spencer, B.F., Jr. Vision-based modal survey of civil infrastructure using unmanned aerial vehicles. J. Struct. Eng. 2019, 145, 4019062. [Google Scholar] [CrossRef]

- Guzman-Acevedo, G.M.; Quintana-Rodriguez, J.A.; Vazquez-Becerra, G.E.; Garcia-Armenta, J. A reliable methodology to estimate cable tension force in cable-stayed bridges using Unmanned Aerial Vehicle (UAV). Measurement 2024, 229, 114498. [Google Scholar] [CrossRef]

- Mirzazade, A.; Popescu, C.; Täljsten, B. Prediction of Strain in Embedded Rebars for RC Member, Application of Hybrid Learning Approach. Infrastructures 2023, 8, 71. [Google Scholar] [CrossRef]

- Anuradha, J. A Brief Introduction on Big Data 5Vs Characteristics and Hadoop Technology. Procedia Comput. Sci. 2015, 48, 319–324. [Google Scholar] [CrossRef]

- Fayyad, U.; Stolorz, P. Data mining and KDD: Promise and challenges. Futur. Gener. Comput. Syst. 1997, 13, 99–115. [Google Scholar] [CrossRef]

- Tan, P.-N.; Steinbach, M.; Karpatne, A.; Kumar, V. Introduction to Data Mining, 2nd ed.; Pearson: New York, NY, USA, 2018. [Google Scholar]

- Sun, L.; Shang, Z.; Xia, Y.; Bhowmick, S.; Nagarajaiah, S. Review of Bridge Structural Health Monitoring Aided by Big Data andand Artificial Intelligence: From Condition Assessment to Damage Detection. J. Struct. Eng. 2020, 146, 04020073. [Google Scholar] [CrossRef]

- Radovic, M.; Ghonima, O.; Schumacher, T. Data Mining of Bridge Concrete Deck Parameters in the National Bridge Inventory by Two-Step Cluster Analysis. ASCE-ASME J. Risk Uncertain. Eng. Syst. Part A Civ. Eng. 2017, 3, F4016004. [Google Scholar] [CrossRef]

- Buchheit, R.B.; Garrett, J.H.; Lee, S.R.; Brahme, R. A knowledge discovery framework for civil infrastructure: A case study of the intelligent workplace. Eng. Comput. 2000, 16, 264–274. [Google Scholar] [CrossRef]

- Ng, H.S.; Toukourou, A.; Soibelman, L. Knowledge Discovery in a Facility Condition Assessment Database Using Text Clustering. J. Infrastruct. Syst. 2006, 12, 50–59. [Google Scholar] [CrossRef]

- Ltifi, H.; Kolski, C.; Ayed, M.B.; Alimi, A.M. A human-centred design approach for developing dynamic decision support system based on knowledge discovery in databases. J. Decis. Syst. 2013, 22, 69–96. [Google Scholar] [CrossRef]

- Rakoczy, A.M.; Karwowski, W.; Płudowska-Zagrajek, M.; Pałyga, M. Introducing a new method for assessing short railway bridge conditions using vehicle onboard systems. Arch. Civ. Eng. 2024, 70, 407–423. [Google Scholar] [CrossRef]

- Chuang, Y.-H.; Yau, N.-J.; Tabor, J.M.M. A Big Data Approach for Investigating Bridge Deterioration and Maintenance Strategies in Taiwan. Sustainability 2023, 15, 1697. [Google Scholar] [CrossRef]

- Hehenberger, P.; Bradley, D. Digital twin—The simulation aspect. In Mechatronic Futures; Springer International Publishing: Cham, Switzerland, 2016; pp. 59–74. [Google Scholar]

- Grieves, M.; Vickers, J. Digital twin: Mitigating unpredictable, undesirable emergent behavior in complex systems. In Transdisciplinary Perspectives on Complex Systems; Springer: Cham, Switzerland, 2017; pp. 85–113. [Google Scholar]

- Lu, Q.; Xie, X.; Heaton, J.; Parlikad, A.K.; Schooling, J. From BIM towards digital twin: Strategy and future development for smart asset management. In Service Oriented, Holonic and Multi-Agent Manufacturing Systems for Industry of the Future, Proceedings of SOHOMA, Sonoma, CA, USA, 26 September 2019; Springer: Cham, Switzerland; pp. 392–404.

- Schroeder, G.N.; Steinmetz, C.; Pereira, C.E.; Espindola, D.B. Digital twin data modeling with automationml and a communication methodology for data exchange. IFAC-Pap. 2016, 49, 12–17. [Google Scholar] [CrossRef]

- Zhang, H.; Liu, Q.; Chen, X.; Zhang, D.; Leng, J. A digital twin-based approach for designing and multi-objective optimization of hollow glass production line. IEEE Access 2017, 5, 26901–26911. [Google Scholar] [CrossRef]

- Tao, F.; Cheng, J.; Qi, Q.; Zhang, M.; Zhang, H.; Sui, F. Digital twin-driven product design, manufacturing and service with big data. Int. J. Adv. Manuf. Technol. 2018, 94, 3563–3576. [Google Scholar]

- Söderberg, R.; Wärmefjord, K.; Carlson, J.S.; Lindkvist, L. Toward a Digital Twin for real-time geometry assurance in individualized production. CIRP Ann. 2017, 66, 137–140. [Google Scholar] [CrossRef]

- Miller, A.M.; Alvarez, R.; Hartman, N. Towards an extended model-based definition for the digital twin. Comput.-Aided Des. Appl. 2018, 15, 880–891. [Google Scholar] [CrossRef]

- Min, Q.; Lu, Y.; Liu, Z.; Su, C.; Wang, B. Machine learning based digital twin framework for production optimization in petrochemical industry. Int. J. Inf. Manag. 2019, 49, 502–519. [Google Scholar] [CrossRef]

- El Saddik, A. Digital twins: The convergence of multimedia technologies. IEEE Multimed. 2018, 25, 87–92. [Google Scholar] [CrossRef]

- Gehrmann, C.; Gunnarsson, M. A digital twin based industrial automation and control system security architecture. IEEE Trans. Ind. Inform. 2019, 16, 669–680. [Google Scholar] [CrossRef]

- Madubuike, O.; Anumba, C.; Khallaf, R. A review of digital twin applications in construction. J. Inf. Technol. Constr. 2022, 27, 145–172. [Google Scholar] [CrossRef]

- Elsaid, A.; Seracino, R. Rapid assessment of foundation scour using the dynamic features of bridge superstructure. Constr. Build. Mater. 2014, 50, 42–49. [Google Scholar] [CrossRef]

- Hoskere, V.; Hassanlou, D.; Ur Rahman, A.; Bazrgary, R.; Ali, M.T. Digital Twins of Bridge Structures. Autom. Constr. 2025. [Google Scholar] [CrossRef]

- Boje, C.; Mack, N.; Kubicki, S.; Vidal, A.L.; Sánchez, C.C.; Dugué, A.; Brassier, P. Digital Twin systems for building façade elements testing. In Proceedings of the In EC3 Conference 2023, Heraklion, Greece, 10–12 July 2023. [Google Scholar] [CrossRef]

- Petri, I.; Rezgui, Y.; Ghoroghi, A.; Alzahrani, A. Digital twins for performance management in the built environment. J. Ind. Inf. Integr. 2023, 33, 100445. [Google Scholar] [CrossRef]

- Jasiński, M.; Łaziński, P.; Piotrowski, D. The Concept of Creating Digital Twins of Bridges Using Load Tests. Sensors 2023, 23, 7349. [Google Scholar] [CrossRef]

- Gao, Y.; Li, H.; Xiong, G.; Song, H. AIoT-informed digital twin communication for bridge maintenance. Autom. Constr. 2023, 150, 104835. [Google Scholar] [CrossRef]

- Shim, C.S.; Kang, H.R.; Dang, N.S. Digital Twin Models for Maintenance of Cable-Supported Bridges. In Proceedings of the International Conference on Smart Infrastructure and Construction 2019 (ICSIC) Driving Data-Informed Decision-Making, Cambridge, UK, 8–10 July 2019; ICE Publishing: London, UK, 2019; pp. 737–742. [Google Scholar]

- Chacón, R.; Ramonell, C.; Posada, H.; Sierra, P.; Tomar, R.; de la Rosa, C.M.; Rodriguez, A.; Koulalis, I.; Ioannidis, K.; Wagmeister, S. Digital twinning during load tests of railway bridges—Case study: The high-speed railway network, Extremadura, Spain. Struct. Infrastruct. Eng. 2023, 20, 1105–1119. [Google Scholar] [CrossRef]

- Al-Ansi, A.M.; Jaboob, M.; Garad, A.; Al-Ansi, A. Al-Ansi, Analyzing augmented reality (AR) and virtual reality (VR) recent development in education. Soc. Sci. Humanit. Open 2023, 8, 100532. [Google Scholar] [CrossRef]

- Lueangvilai, E.; Chaisomphob, T. Application of Mobile Mapping System to a Cable-Stayed Bridge in Thailand. Sensors 2022, 22, 9625. [Google Scholar] [CrossRef] [PubMed]

- Azuma, R.T. A Survey of Augmented Reality. Presence Teleoperators Virtual Environ. 1997, 6, 355–385. [Google Scholar] [CrossRef]

- Milgram, P.; Kishino, F. A Taxonomy of Mixed Reality Visual Displays. IEICE Trans. Inf. Syst. 1994, 77, 1321–1329. [Google Scholar]

- Maharjan, D.; Agüero, M.; Mascarenas, D.; Fierro, R.; Moreu, F. Enabling human–infrastructure interfaces for inspection using augmented reality. Struct. Health Monit. 2020, 20, 1980–1996. [Google Scholar] [CrossRef]

- Moreu, F.; Bleck, B.; Vemuganti, S.; Rogers, D.; Mascarenas, D. Augmented Reality Tools for Enhanced Structural Inspection. Struct. Health Monit. 2017. [Google Scholar] [CrossRef]

- Mascareñas, D.D.; Ballor, J.P.; McClain, O.L.; Mellor, M.A.; Shen, C.-Y.; Bleck, B.; Morales, J.; Yeong, L.-M.R.; Narushof, B.; Shelton, P.; et al. Augmented reality for next generation infrastructure inspections. Struct. Health Monit. 2021, 20, 1957–1979. [Google Scholar] [CrossRef]

- Xu, J.; Moreu, F. A Review of Augmented Reality Applications in Civil Infrastructure During the 4th Industrial Revolution. Front. Built Environ. 2021, 7, 640732. [Google Scholar] [CrossRef]

- Xu, J.; Doyle, D.; Moreu, F. State of the art of augmented reality capabilities for civil infrastructure applications. Eng. Rep. 2023, 5, e12602. [Google Scholar] [CrossRef]

- Catbas, N.; Avci, O. A review of latest trends in bridge health monitoring. In Proceedings of the Institution of Civil Engineers—Bridge Engineering, London, UK, 15 November 2021; Volume 176, pp. 76–91. [Google Scholar] [CrossRef]

- Mohammadkhorasani, A.; Malek, K.; Mojidra, R.; Li, J.; Bennett, C.; Collins, W.; Moreu, F. Augmented reality-computer vision combination for automatic fatigue crack detection and localization. Comput. Ind. 2023, 149, 103936. [Google Scholar] [CrossRef]

- Malek, K.; Moreu, F. Realtime conversion of cracks from pixel to engineering scale using Augmented Reality. Autom. Constr. 2022, 143, 104542. [Google Scholar] [CrossRef]

- Malek, K.; Mohammadkhorasani, A.; Moreu, F. Methodology to integrate augmented reality and pattern recognition for crack detection. Comput. Civ. Infrastruct. Eng. 2022, 38, 1000–1019. [Google Scholar] [CrossRef]

- Moreu, F.; Chang, C.-M.; Liu, Y.-T.; Malek, K.; Khorasani, A. Human-centered post-disaster inspection of infrastructure using augmented reality. In Bridge Maintenance, Safety, Management, Digitalization and Sustainability; CRC Press: Boca Raton, FL, USA, 2024; pp. 3352–3358. [Google Scholar] [CrossRef]

- Aguero, M.; Maharjan, D.; Rodriguez, M.d.P.; Mascarenas, D.D.L.; Moreu, F. Design and Implementation of a Connection between Augmented Reality and Sensors. Robotics 2020, 9, 3. [Google Scholar] [CrossRef]

- Wyckoff, E.; Reza, R.; Moreu, F. Human-in-the-loop control of dynamics and robotics using augmented reality. J. Dyn. Disasters 2025, 1, 100005. [Google Scholar] [CrossRef]

- Shin, D.; Dunston, P. Evaluation of Augmented Reality in steel column inspection. Autom. Constr. 2009, 18, 118–129. [Google Scholar] [CrossRef]

- Fawad, M.; Salamak, M.; Hanif, M.U.; Koris, K.; Ahsan, M.; Rahman, H.; Gerges, M.; Salah, M.M. Integration of Bridge Health Monitoring System with Augmented Reality Application Developed Using 3D Game Engine–Case Study. IEEE Access 2024, 12, 16963–16974. [Google Scholar] [CrossRef]

- Cuong, N.; Nguyen, Q.; Jin, R.; Jeon, C.; Shim, C. BIM-based mixed-reality application for bridge inspection and maintenance. Constr. Innov. 2021, 22, 487–503. [Google Scholar] [CrossRef]

| Methodologies | Application | Techniques |

|---|---|---|

| Computer Vision | 3D geometric reconstitution | Photogrammetry (SfM—Structure from Motion, MVS—Multi-View Stereo) LiDAR (ToF—Time-of-Flight) Hybrid strategies (ICP—Iterative Closest Point) AI-Based (NeRF—Neural Radiance Fields, RANSAC, PFM—Plane Fitting Method, Gaussian Splatting, CNN, and Autoencoder) |

| Damage identification and component detection | Heuristic (edge detection filters) Deep learning (CNN, Mask-R-CNN, YOLO) Integrated frameworks | |

| Measurement of strains, displacements, and modal parameters | Template-Matching algorithms Optical Flow methods Digital Image Correlation (DIC) Phase-Based Motion Magnification (PMM) Eulerian Video Motion Amplification (EVMA) NExT-ERA UAV motion subtraction: digital filters, stationary background target, IMU | |

| Big Data | Robust knowledge of the asset’s structural behavior from varied data | Knowledge Discovery in Databases (KDD) Neural networks Bayesian networks Decision Trees |

| Digital Twins | Virtual representation of real behavior of a physical asset | IoT Data structuring techniques Common Data Environment (CDE) Scan-to-BrIM BrIM AI Optimization techniques Simulation (FEM, DEM, others) Predictive analysis |

| Augmented Reality | Interface between drawings and real structures during inspections |

| Reference | Definition of DT |

|---|---|

| [113] | Comprehensive physical and functional description of a component, product, or system, including all potentially useful information across current and subsequent lifecycle phases |

| [114] | A digital informational construct about a physical system, created as a standalone entity and linked to the physical system throughout its lifecycle |

| [115] | A digital model that dynamically represents and mimics an asset’s real-world behavior; built on data |

| [116] | Cyber representation within Cyber-Physical Systems, composed of multiple models and data |

| [117] | Enabled Big Data analytics, faster algorithms, increased computation power, and data availability, allowing for data-enabled real-time control and optimization of products and processes |

| [118] | An integrated, multi-physics, multiscale, and probabilistic simulation of a complex product that uses the best available models, sensor updates, etc., to mirror the life of its corresponding twin |

| [119] | Using a digital copy of the physical system to perform real-time optimization |

| [120] | Composition of disparate digital models which gives rise to a higher fidelity model of a product |

| [121] | Continuous interactive process between the physical manufacturing facility and its digital counterpart |

| [122] | Virtual representations of physical entities |

| [123] | A model where each product is also directly connected with a virtual counterpart |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ribeiro, D.; Rakoczy, A.M.; Cabral, R.; Hoskere, V.; Narazaki, Y.; Santos, R.; Tondo, G.; Gonzalez, L.; Matos, J.C.; Massao Futai, M.; et al. Methodologies for Remote Bridge Inspection—Review. Sensors 2025, 25, 5708. https://doi.org/10.3390/s25185708

Ribeiro D, Rakoczy AM, Cabral R, Hoskere V, Narazaki Y, Santos R, Tondo G, Gonzalez L, Matos JC, Massao Futai M, et al. Methodologies for Remote Bridge Inspection—Review. Sensors. 2025; 25(18):5708. https://doi.org/10.3390/s25185708

Chicago/Turabian StyleRibeiro, Diogo, Anna M. Rakoczy, Rafael Cabral, Vedhus Hoskere, Yasutaka Narazaki, Ricardo Santos, Gledson Tondo, Luis Gonzalez, José Campos Matos, Marcos Massao Futai, and et al. 2025. "Methodologies for Remote Bridge Inspection—Review" Sensors 25, no. 18: 5708. https://doi.org/10.3390/s25185708

APA StyleRibeiro, D., Rakoczy, A. M., Cabral, R., Hoskere, V., Narazaki, Y., Santos, R., Tondo, G., Gonzalez, L., Matos, J. C., Massao Futai, M., Guo, Y., Trias, A., Tinoco, J., Samec, V., Minh, T. Q., Moreu, F., Popescu, C., Mirzazade, A., Jorge, T., ... Fonseca, J. (2025). Methodologies for Remote Bridge Inspection—Review. Sensors, 25(18), 5708. https://doi.org/10.3390/s25185708