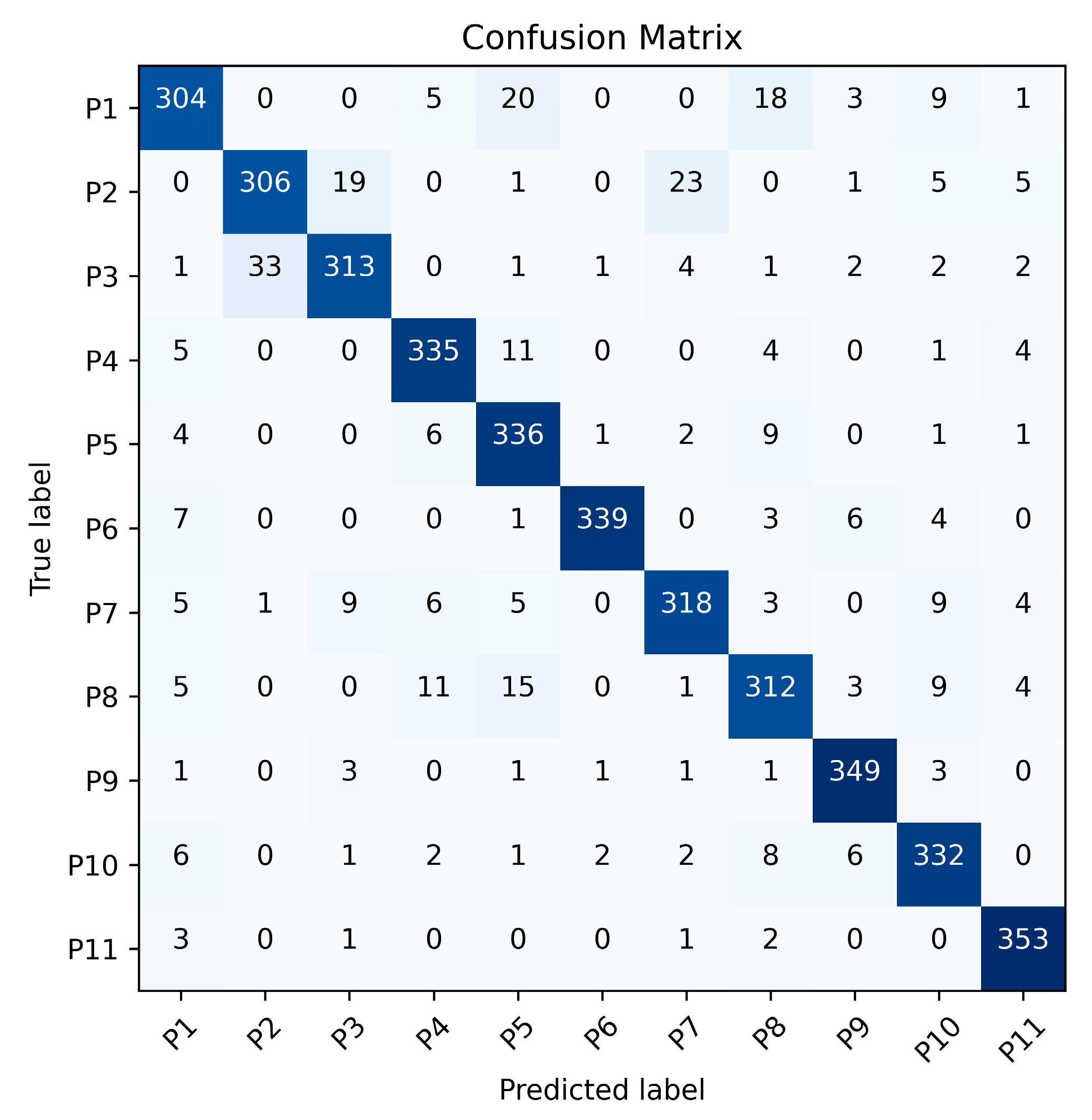

4.3.1. Experiment 1: All Four Arm Movements in Training Set

The training set of Experiment 1 consists of gait data covering all four arm movements. Exp. 1-0 uses 70% of the total samples for training. To evaluate the effect of training set size, Exp. 1-1 uses 45% of the total samples for training, while Exp. 1-2 uses 30%. Exp. 1-1 and Exp. 1-2 use the same test set as Exp. 1-0, namely the remaining 30% of the samples after the 70% training portion has been selected.
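The split described above can be sketched as follows. This is only an illustrative sketch, not the authors' code: the function name `make_splits`, the random shuffling, and the assumption that the 45% and 30% training sets are drawn from inside the 70% training pool (so that they never overlap the shared test set) are ours.

```python
import random

def make_splits(n_samples, seed=0):
    """Return index lists for the shared test set and the three training sets."""
    rng = random.Random(seed)
    indices = list(range(n_samples))
    rng.shuffle(indices)

    n_70 = n_samples * 70 // 100
    n_45 = n_samples * 45 // 100
    n_30 = n_samples * 30 // 100

    train_pool = indices[:n_70]   # Exp. 1-0: 70% of all samples
    test_set = indices[n_70:]     # shared test set: the remaining 30%

    # Assumption: smaller training sets are subsets of the 70% pool,
    # so the test set stays isolated in every experiment.
    return {
        "test": test_set,
        "exp_1_0": train_pool,
        "exp_1_1": train_pool[:n_45],  # 45% of all samples
        "exp_1_2": train_pool[:n_30],  # 30% of all samples
    }

splits = make_splits(1000)
print({name: len(idx) for name, idx in splits.items()})
```

Keeping the test indices fixed across the three runs means that any change in accuracy can be attributed to the training set size rather than to a different evaluation set.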

Table 7 presents the gait recognition results under different training sample sizes. Among the three experiments, Exp. 1-0 achieves the highest gait recognition accuracy with both the Leg Method and the Total Method, and the accuracy decreases as the training sample size decreases. The accuracy was evaluated on a held-out test set, which was kept strictly isolated from training to avoid data leakage. Recognition performance was quantified as the percentage of correctly classified point-cloud samples among all test samples.

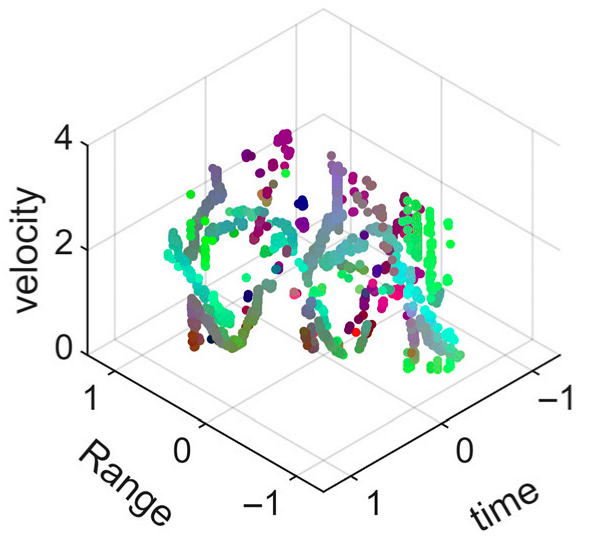

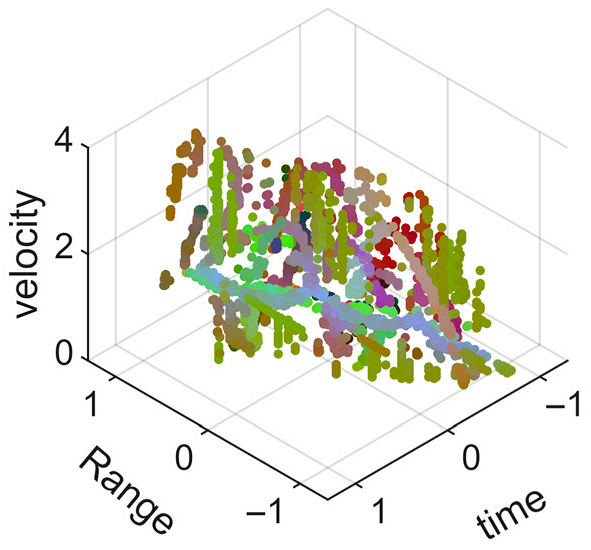

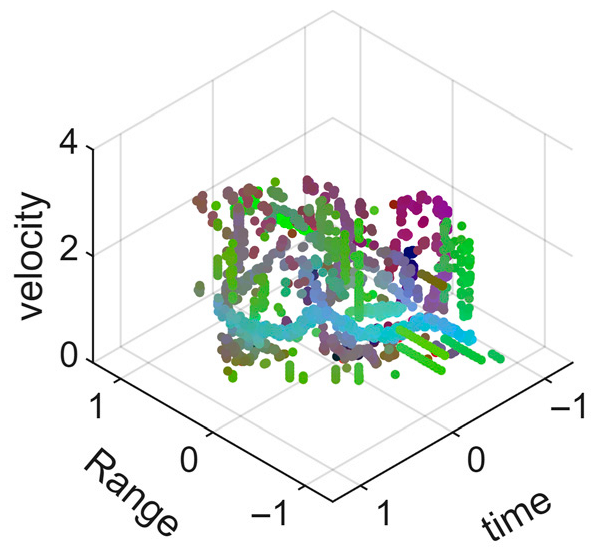

Let us compare the results listed in Table 7 in detail. For the Leg Method, the accuracies of Exp. 1-1 and Exp. 1-2 drop by about 4.3% and 8.6%, respectively, compared with that of Exp. 1-0. For the Total Method, the accuracies drop by about 5.0% and 13%, respectively. The Leg Method therefore exhibits a smaller decline in gait recognition accuracy than the Total Method as the training sample size decreases. This is because the Total Method incorporates the diversity of arm movements, so the point cloud includes not only leg motion characteristics but also various arm motion characteristics. When the training samples are reduced, the model’s ability to extract gait features becomes more susceptible to interference from arm motion features, leading to a larger drop in gait recognition accuracy.

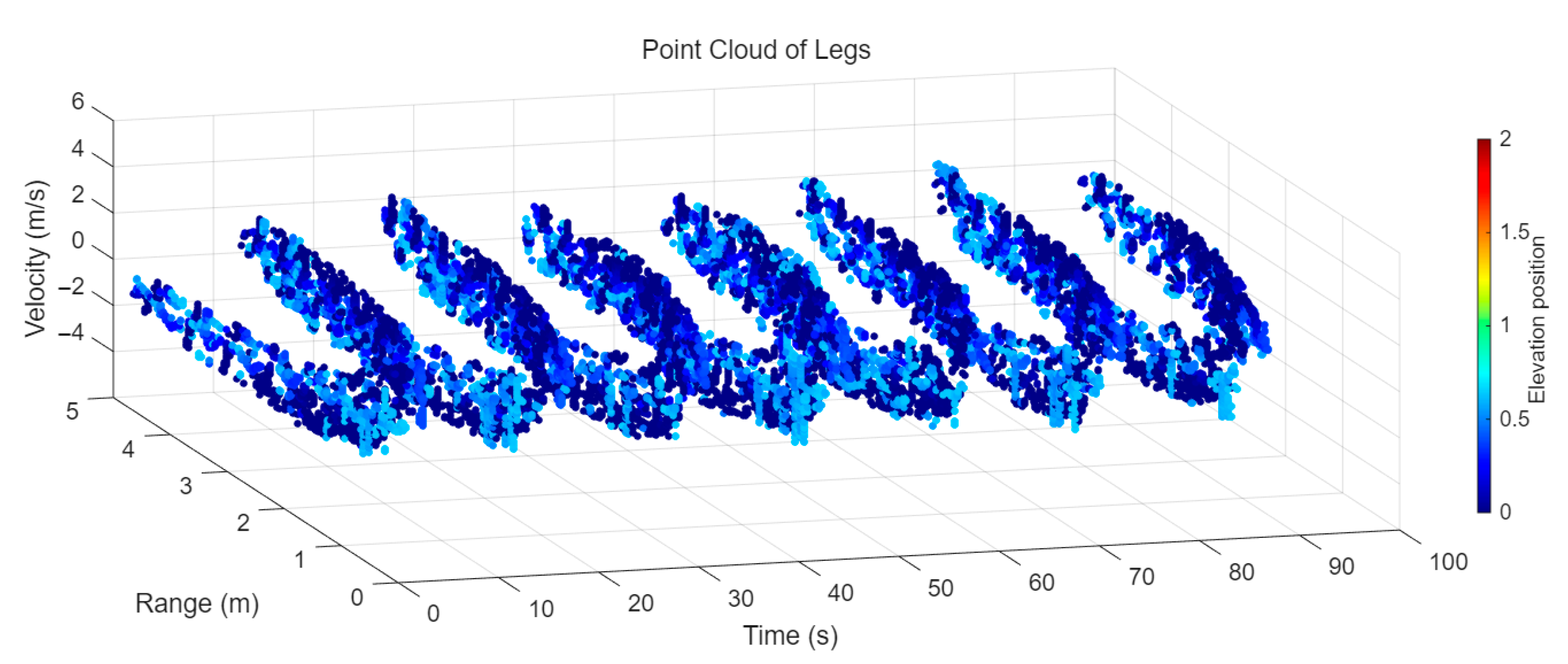

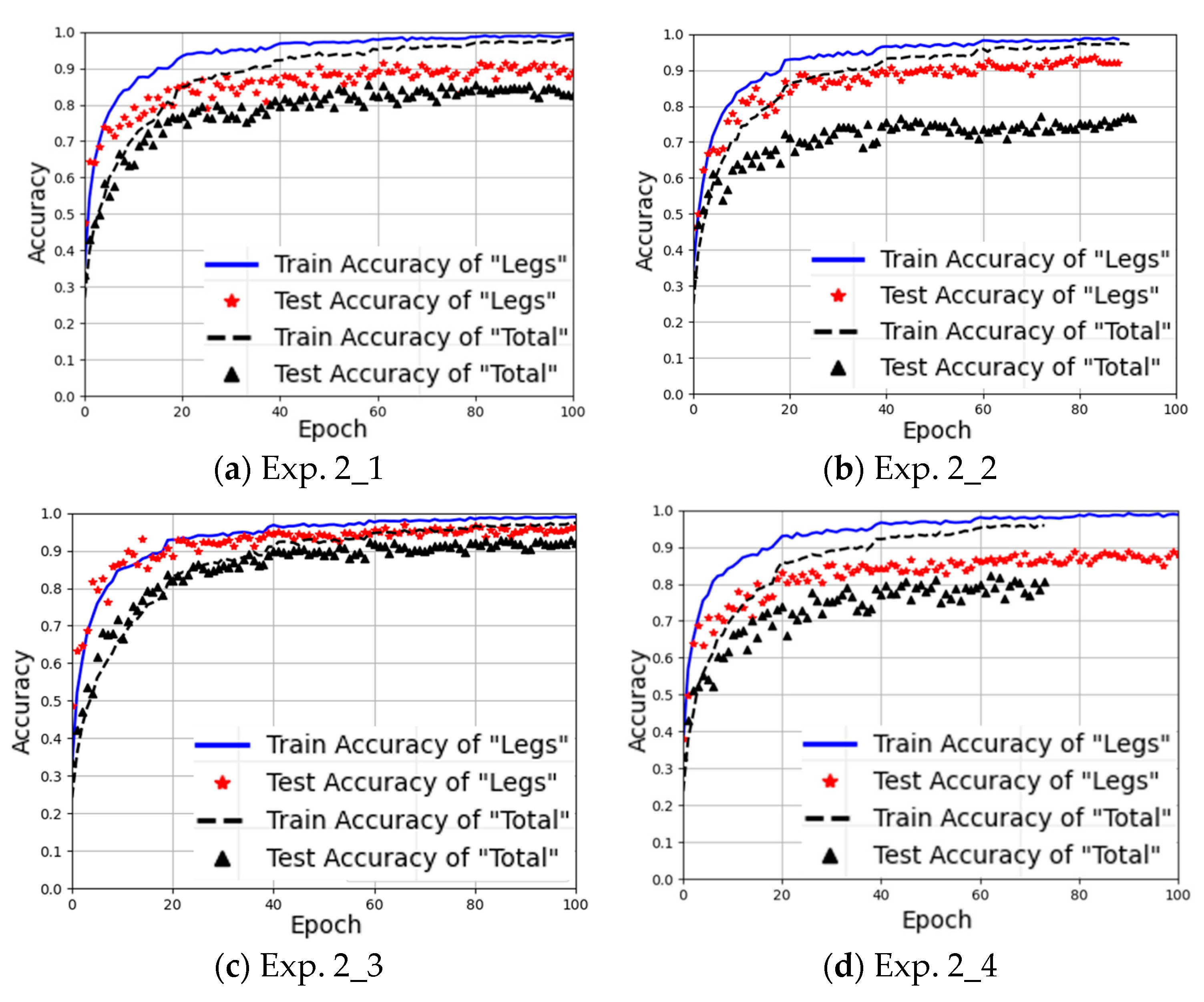

Figure 10 shows the training curves of Experiment 1 using the Leg Method and the Total Method under different training sample sizes. The blue solid line represents the training accuracy of the Leg Method, while the red asterisks denote its testing accuracy. The black dashed line indicates the training accuracy of the Total Method, and the black triangles represent its testing accuracy.

From the testing accuracy results in Figure 10a, it can be observed that after 100 training epochs, the proposed Leg Method achieves a testing accuracy of 95.8%, outperforming the existing Total Method (90.8%) by 5.0 percentage points. Additionally, at epoch 20, the Leg Method already reaches 90% accuracy, whereas the Total Method only attains 80%.

When the training samples are reduced, as shown in Figure 10b,c, the Leg Method maintains a minimum accuracy of above 80% at epoch 20, while the Total Method struggles to exceed 80% even at its peak. Across all sample sizes in Experiment 1, the proposed Leg Method consistently achieves at least 5% higher accuracy than the Total Method. Moreover, as the training samples decrease, the Total Method exhibits a more pronounced decline in gait recognition accuracy and is more prone to overfitting due to insufficient training data.

This indicates that the Leg Method can better learn the gait features of human subjects in training, leading to faster convergence and more robust performance even with limited training samples.

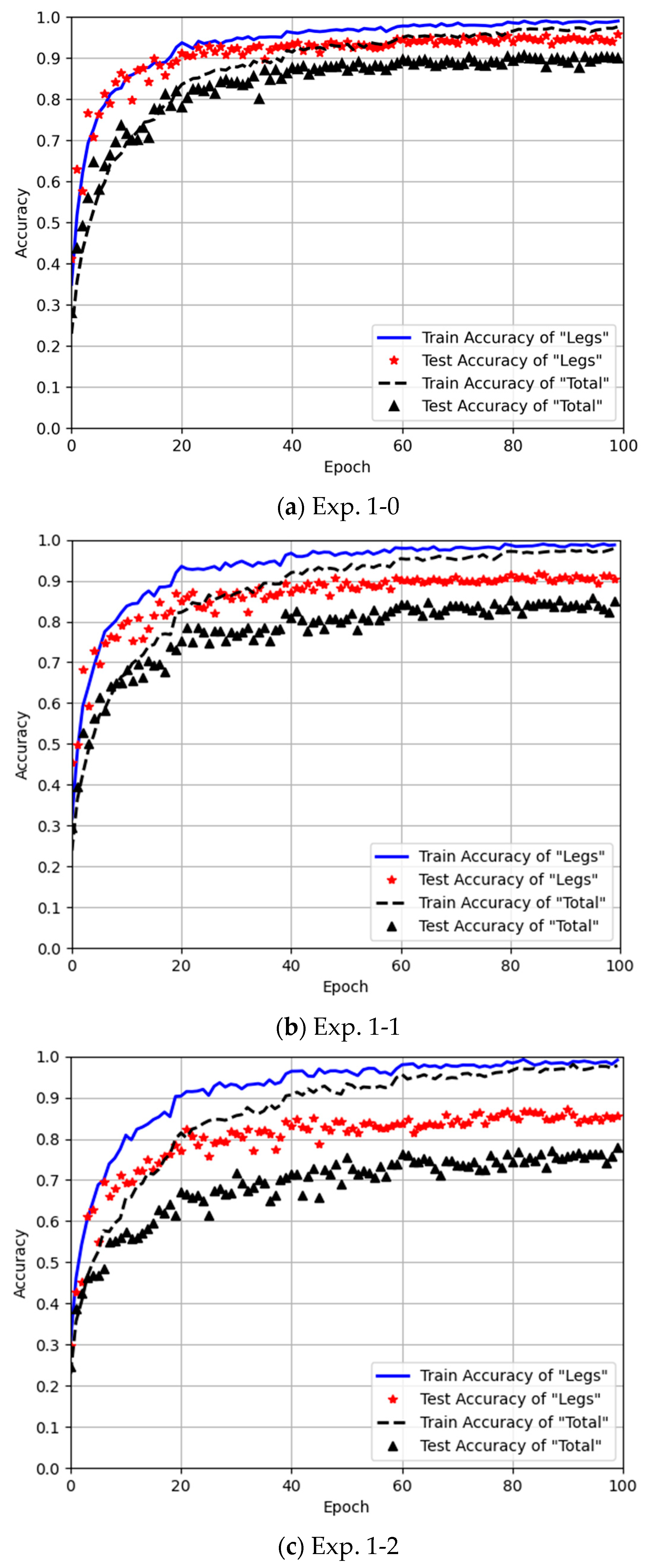

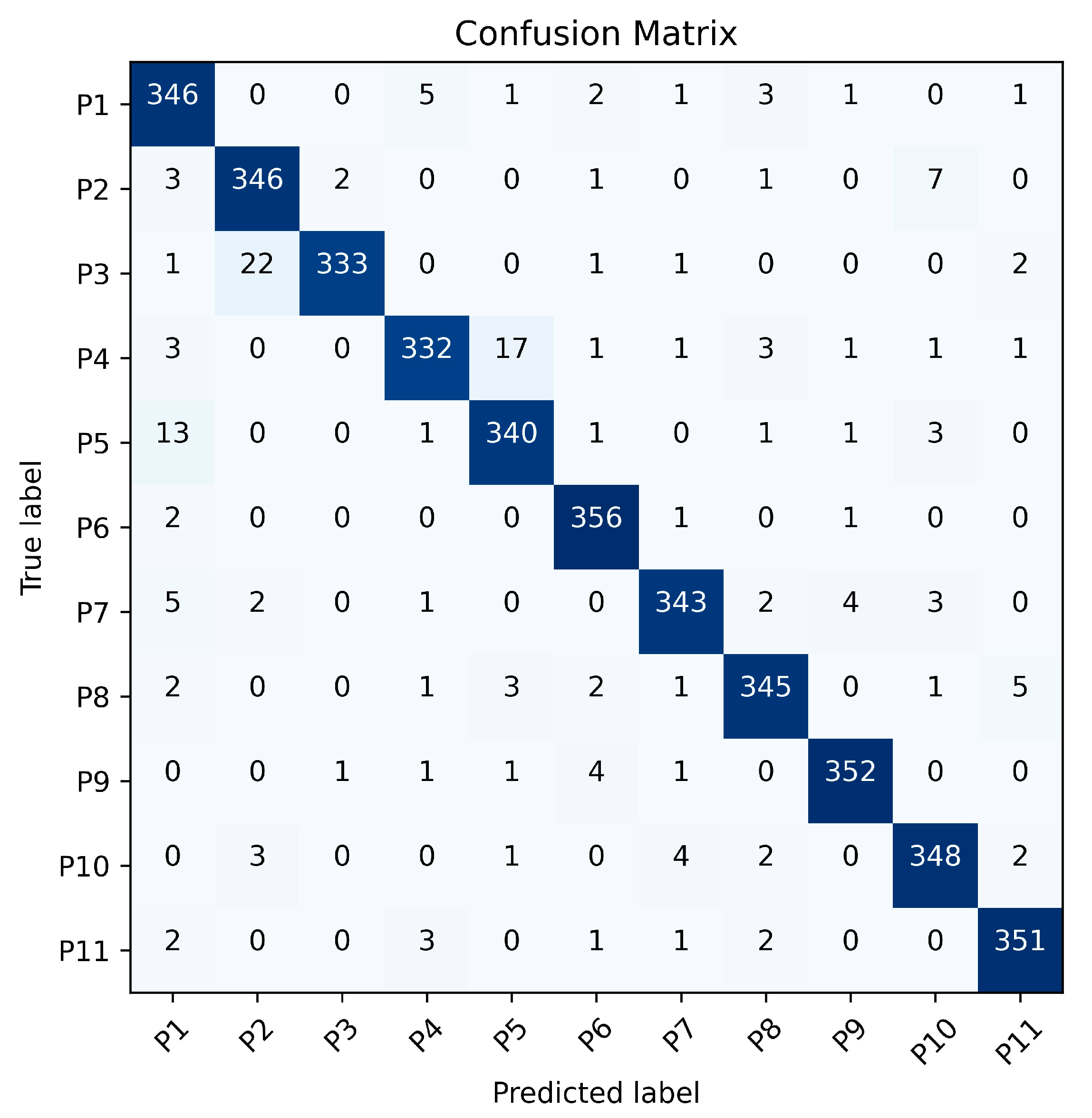

To further analyze the recognition results of the Leg Method and the Total Method, Figure 11 and Figure 12 present the gait recognition confusion matrices for Exp. 1_0 using the Leg Method and the Total Method, respectively. These matrices visualize the classification performance for each of the 11 subjects under the two methods. From them, we can observe the misclassification patterns and also calculate the precision, recall, and F1-score for each person as well as the overall accuracy of each method, which allows a comprehensive evaluation of the two methods in gait recognition.

The results presented in Table 8 demonstrate that the proposed Leg Method consistently outperforms the Total Method across all participants (P1–P11), achieving superior precision (91.8–99.1%), recall (92.2–98.9%), and F1-scores (93.8–97.8%) compared to the Total Method’s precision (85.7–98.6%), recall (84.4–98.1%), and F1-scores (86.6–96.3%). The Leg Method is robust, with all metrics exceeding 90%, confirming its reliability in minimizing false positives while maintaining high detection accuracy. Although the Total Method shows competitive performance in some cases (e.g., P6 and P11), its greater variability highlights the Leg Method’s overall superiority in consistency and effectiveness.
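As a reminder of how such per-person metrics follow from a confusion matrix, a minimal sketch is given below. The function name `per_class_metrics`, the row/column convention (rows are true subjects, columns are predicted subjects), and the 3-class toy matrix are illustrative assumptions and are not taken from Figure 11 or Figure 12.

```python
def per_class_metrics(cm):
    """Compute (precision, recall, F1) per class and the overall accuracy.

    Assumed convention: cm[i][j] counts samples of true class i
    that were predicted as class j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[k][k] for k in range(n))

    metrics = []
    for k in range(n):
        tp = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n))  # column sum
        actual_k = sum(cm[k])                          # row sum
        precision = tp / predicted_k if predicted_k else 0.0
        recall = tp / actual_k if actual_k else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics.append((precision, recall, f1))
    return metrics, correct / total

# Toy 3-subject confusion matrix (hypothetical values).
toy_cm = [
    [8, 1, 1],
    [2, 7, 1],
    [0, 1, 9],
]
metrics, accuracy = per_class_metrics(toy_cm)
for subject, (p, r, f1) in enumerate(metrics, start=1):
    print(f"P{subject}: precision={p:.3f} recall={r:.3f} F1={f1:.3f}")
print(f"overall accuracy={accuracy:.3f}")
```

Precision penalizes samples of other subjects that are wrongly assigned to a given person, while recall penalizes that person's samples being assigned elsewhere, which is why both are reported alongside the overall accuracy.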

4.3.2. Experiment 2: Three Arm Movements in Training Set

We aimed to investigate whether the model can still accurately identify the target individual when gait data with one or more arm movements are absent from training but appear in testing. Experiment 2, as shown in Table 4, uses the gait data containing three arm movements to train the model and the gait data of the remaining arm movement for testing. Exp. 2_1 uses M2, M3, and M4 for training and M1 for testing. Similarly, Exp. 2_2 uses M1, M3, and M4 for training and M2 for testing; Exp. 2_3 uses M1, M2, and M4 for training and M3 for testing; and Exp. 2_4 uses M1, M2, and M3 for training and M4 for testing.
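The four configurations above follow a leave-one-movement-out pattern, which can be sketched as below. The function name `leave_one_movement_out` and the dictionary layout are illustrative assumptions; only the movement labels and the experiment names come from the text.

```python
MOVEMENTS = ["M1", "M2", "M3", "M4"]

def leave_one_movement_out(movements):
    """Build one configuration per held-out arm movement."""
    configs = {}
    for i, held_out in enumerate(movements, start=1):
        train = [m for m in movements if m != held_out]
        configs[f"Exp. 2_{i}"] = {"train": train, "test": [held_out]}
    return configs

exp2_configs = leave_one_movement_out(MOVEMENTS)
for name, cfg in exp2_configs.items():
    print(name, "train:", cfg["train"], "test:", cfg["test"])
```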

The recognition results of Experiment 2 are listed in Table 9, showing that even when trained on the gait data of only three arm movements, the Leg Method still achieves a minimum recognition accuracy of 89.4% and a maximum of 96.9%. In contrast, the Total Method achieves a minimum of 77.6% and a maximum of 93.2%.
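The ranges quoted above, and the per-configuration gap between the two methods, can be reproduced with a few lines of arithmetic. The sketch below uses the Exp. 2_1 to Exp. 2_4 accuracies quoted in the text of this section; the function name `summarize` and the data layout are illustrative assumptions.

```python
# Accuracies (%) for Experiment 2 as quoted in the text of this section.
leg = {"Exp. 2_1": 91.8, "Exp. 2_2": 93.9, "Exp. 2_3": 96.9, "Exp. 2_4": 89.4}
total = {"Exp. 2_1": 86.2, "Exp. 2_2": 77.6, "Exp. 2_3": 93.2, "Exp. 2_4": 82.7}

def summarize(leg_acc, total_acc):
    """Return min/max accuracy per method and the Leg-minus-Total gap."""
    gaps = {name: round(leg_acc[name] - total_acc[name], 1) for name in leg_acc}
    return {
        "leg_range": (min(leg_acc.values()), max(leg_acc.values())),
        "total_range": (min(total_acc.values()), max(total_acc.values())),
        "gaps": gaps,
        "largest_gap": max(gaps, key=gaps.get),
    }

summary = summarize(leg, total)
print(summary)
```

The gaps are expressed in percentage points, and the largest one falls on the configuration that holds out M2.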

From Table 9, two conclusions can be drawn:

1. Different arm movements affect the model training effect differently, thereby influencing the recognition accuracy in case of new arm movements appearing.

- The results of Exp. 2_2 show that, when using M1, M3, and M4 as the training set and M2 as the test set, the Leg Method achieves 93.9% recognition accuracy, while the Total Method reaches only 77.6%. This is because the swinging characteristics of M2 differ significantly from those of M1, M3, and M4, and M2 is absent from training. Since the Total Method includes all limb movements, its gait recognition is severely affected by drastic changes in arm movements. In contrast, the Leg Method uses only the leg movement data, so the impact of arm movement variations is avoided.

- The results of Exp. 2_3 show that, when M1, M2, and M4 are used for training and M3 for testing, both methods achieve high recognition accuracy. This is because the absent M3 involves single-arm swinging, which shares characteristics with the two-arm swinging of M1, allowing the model to leverage the features learned from M1 during training. Owing to this similarity, the recognition results of Exp. 2_3 are comparable to those of Exp. 1_0 and even slightly higher, by approximately 1% for the Leg Method and 3% for the Total Method. For the Leg Method, this can be attributed to its reliance solely on leg data, which reduces the influence of arm movement variations on gait recognition. Compared with Exp. 2_3, Exp. 1_0 introduces one more arm movement, whose features may interfere with the model’s extraction of gait features. Therefore, with a fixed number of training samples, adding one arm movement type can reduce the gait recognition accuracy, and more types of arm movements are likely to require more training samples to achieve better recognition accuracy. Since this experiment involves a limited number of arm movements, further experiments are needed to validate this hypothesis more reliably.

2. The proposed Leg Method maintains high gait recognition accuracy under different scenarios. Across all four experimental groups in Experiment 2, the Leg Method consistently outperforms the Total Method in recognition accuracy, and it remains stable and accurate even when encountering arm movements significantly different from those in training, as in Exp. 2_2.

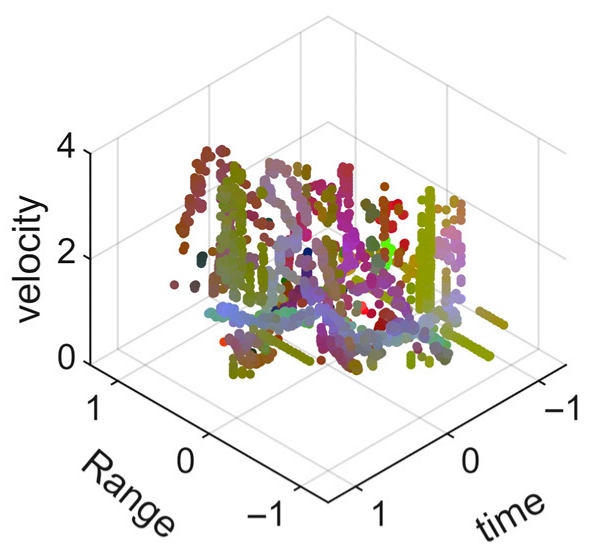

Figure 13 presents the training curves of the four experimental groups in Experiment 2. It can be observed that the Leg Method not only achieves higher recognition accuracy but also converges faster. This further confirms that the proposed Leg Method enables the model to extract gait features more effectively, thereby better maintaining gait recognition accuracy even when the arm movements in testing differ from those in training.

4.3.3. Experiment 3: Two Arm Movements in Training Set

Experiment 3 employs gait data with two arm movements for model training and uses the gait data with the remaining two arm movements as test sets. Let us explain the naming of the different setups by taking Exp. 3_12-3, Exp. 3_12-4, and Exp. 3_12-34 as examples. Exp. 3_12-3 denotes the case with M1 and M2 used for training and M3 for testing; Exp. 3_12-4 denotes the case with M1 and M2 used for training and M4 for testing; and Exp. 3_12-34 denotes the case with M1 and M2 used for training and both M3 and M4 used for testing.
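The naming scheme can be made concrete with a short enumeration. The sketch below is illustrative only: the function name `experiment3_configs` is ours, and it assumes that every training pair is tested on each remaining movement separately and on both together, which the text states explicitly only for the M1 and M2 training pair.

```python
from itertools import combinations

def experiment3_configs(movement_ids=(1, 2, 3, 4)):
    """Enumerate 'Exp. 3_<train ids>-<test ids>' configurations."""
    configs = {}
    for train in combinations(movement_ids, 2):
        rest = tuple(m for m in movement_ids if m not in train)
        # Assumption: test on each remaining movement, then on both.
        for test in [(rest[0],), (rest[1],), rest]:
            name = "Exp. 3_{}-{}".format(
                "".join(str(m) for m in train),
                "".join(str(m) for m in test),
            )
            configs[name] = {
                "train": [f"M{m}" for m in train],
                "test": [f"M{m}" for m in test],
            }
    return configs

exp3_configs = experiment3_configs()
print(len(exp3_configs))
print(exp3_configs["Exp. 3_12-34"])
```

Under this assumption there are six training pairs and three test sets per pair, giving 18 configurations in total.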

Table 10 presents the results of Experiment 3, from which the recognition performances under different scenarios can be analyzed.

Compared to Experiment 1 and Experiment 2, Experiment 3 employs only two arm movements for training; i.e., both the number of arm movement types and the number of training samples are reduced. Consequently, model training in Experiment 3 faces a greater challenge. As shown in Table 10, the gait recognition accuracies in Experiment 3 are lower than those in Experiment 1 (as shown in Figure 10) and Experiment 2 (as listed in Table 9), primarily because of the reduced training variety and sample size.

Table 10 clearly shows that the Leg Method consistently outperforms the Total Method in recognition accuracy, and the largest accuracy difference of 22.90% occurs in Exp. 3_34-2, while the smallest difference of 2.60% appears in Exp. 3_14-3. The reason is analyzed as follows.

Exp. 3_14-3 uses M1 and M4 for training and M3 for testing. Since the arm movement characteristics of M3 resemble those of M1, the model has already learned similar features during training. As a result, both the Total Method and the Leg Method achieve over 90% recognition accuracy when tested on M3. The configuration of Exp. 3_14-3 is similar to that of Exp. 2_3. However, owing to its larger training sample size and variety, Exp. 2_3 achieves slightly higher accuracy: 96.9% and 93.2% with the Leg Method and the Total Method, respectively, compared to 93.0% and 90.4% for Exp. 3_14-3.

Exp. 3_34-2 uses M3 and M4 for training, while M2 is used for testing. Since the training set consists of relatively stable or motionless arm movements, the model fails to learn the highly dynamic arm swing patterns of M2. Consequently, the accuracy of the Total Method drops to as low as 65.9% due to the interference from M2’s arm movements, whereas the Leg Method maintains a high accuracy of 88.8% because it is not affected by arm movement. These results demonstrate that under challenging conditions where test and training samples differ substantially, the performance of the Total Method degrades sharply, while the Leg Method remains robust.

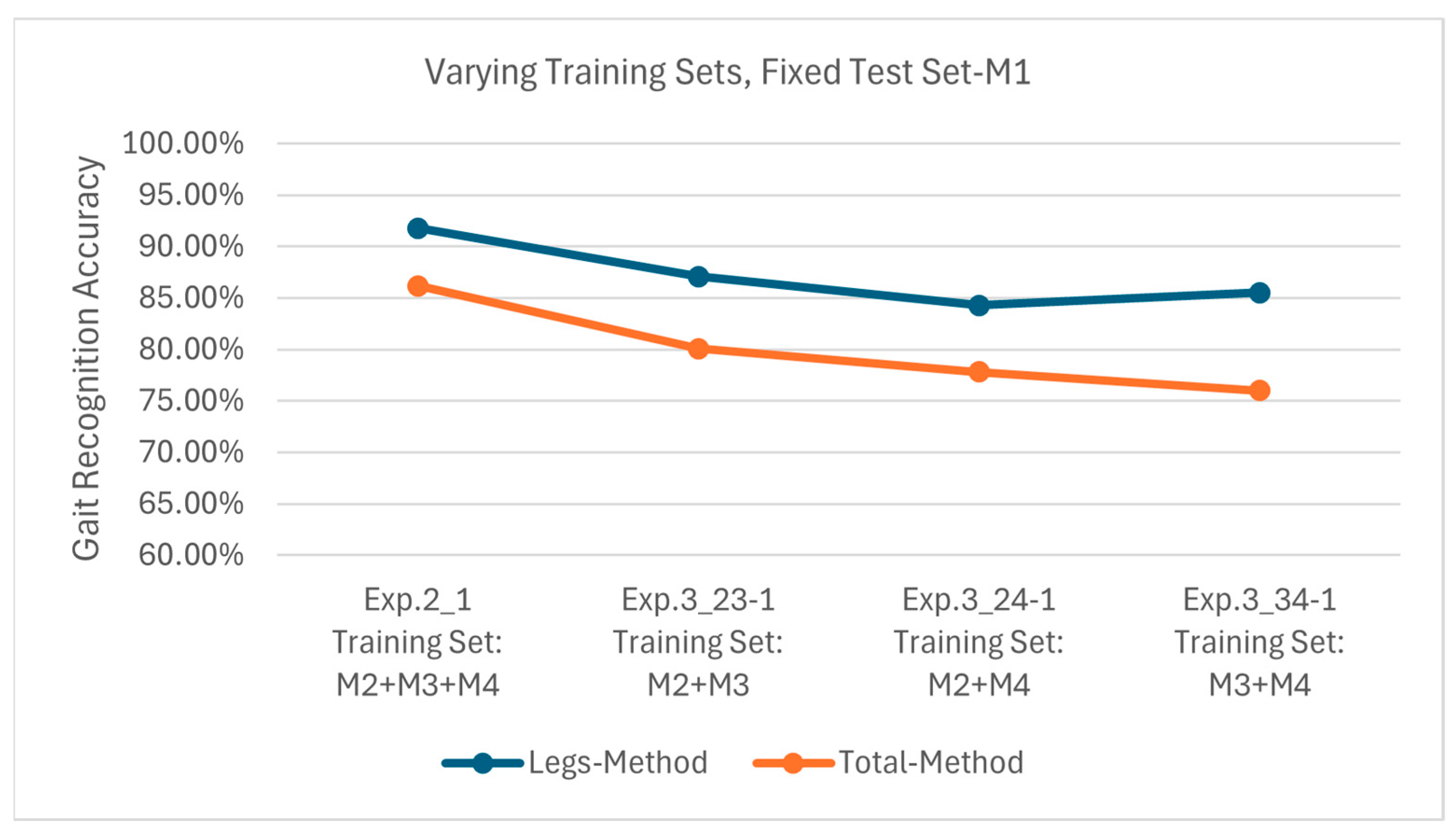

Figure 14 presents the results of Exp. 2_1, 3_23-1, 3_24-1, and 3_34-1, all of which use M1 as the test set. The Leg Method achieves recognition accuracies of 91.8%, 87.1%, 84.3%, and 85.5%, respectively, while the Total Method achieves 86.2%, 80.1%, 77.8%, and 76.0%, respectively. Since M1, M2, and M3 all involve arm movements, and the training sets include M2 or M3, the model has already learned similar motion features in training. Thus, stable recognition accuracy is obtained across different training configurations. Notably, the minimum recognition accuracy of the Leg Method is 84.3%, while the maximum recognition accuracy of the Total Method is only 86.2%, further validating the superiority of the Leg Method in handling arm movement variations.

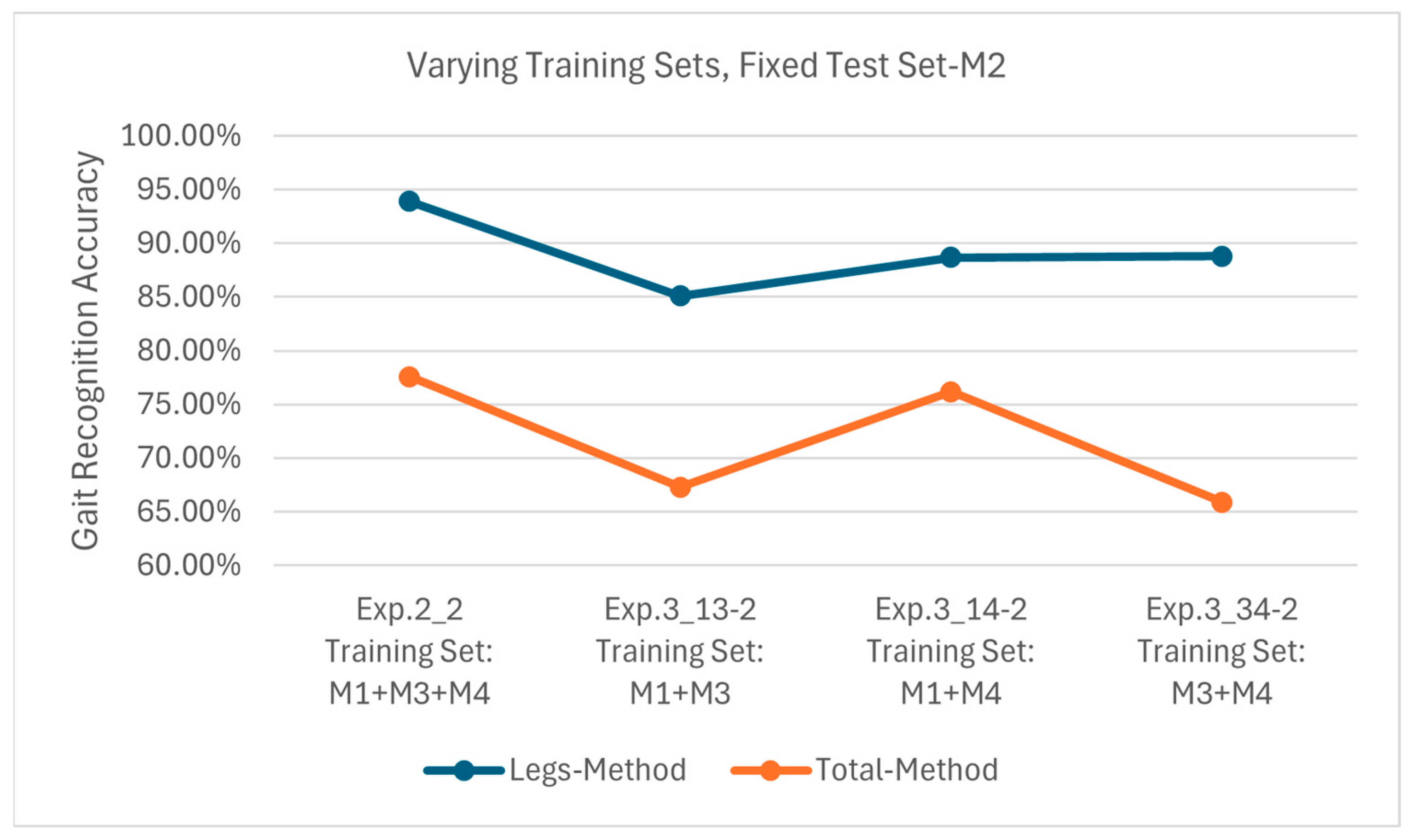

Figure 15 presents the results of Exp. 2_2, 3_13-2, 3_14-2 and 3_34-2, all using M2 as the test set. The Leg Method achieves recognition accuracies of 93.9%, 85.1%, 88.7% and 88.8%, respectively, while they are 77.6%, 65.9%, 76.2% and 67.3%, respectively, for the Total Method. Compared to M1 and M3, M2’s arm movements exhibit greater diversity and complexity. Consequently, when M2 is used for testing with the Total Method, the model suffers from significant accuracy degradation due to arm movement variations; thus, the maximum and minimum accuracies are only about 77.6% and 65.9%, respectively. In contrast, the proposed Leg Method can effectively mitigate the impact of different arm movements and maintain a recognition accuracy above 85%.

Figure 16 shows the results of Exp. 2_3, 3_12-3, 3_14-3 and 3_24-3, all employing M3 as the test set. The Leg Method achieves 96.9%, 91.8%, 93.0% and 92.8% accuracies, respectively, while the Total Method achieves the accuracies of 93.2%, 81.5%, 90.4% and 84.0%, respectively. As previously illustrated for Exp. 2_3, M3’s single-arm swinging shares similarities with M1’s double-arm swinging, and M2’s random swinging also contains some arm movement features. This enables both methods to achieve relatively high accuracy when testing with M3, and at the same time, the Leg Method demonstrates superior stability in maintaining high accuracy.

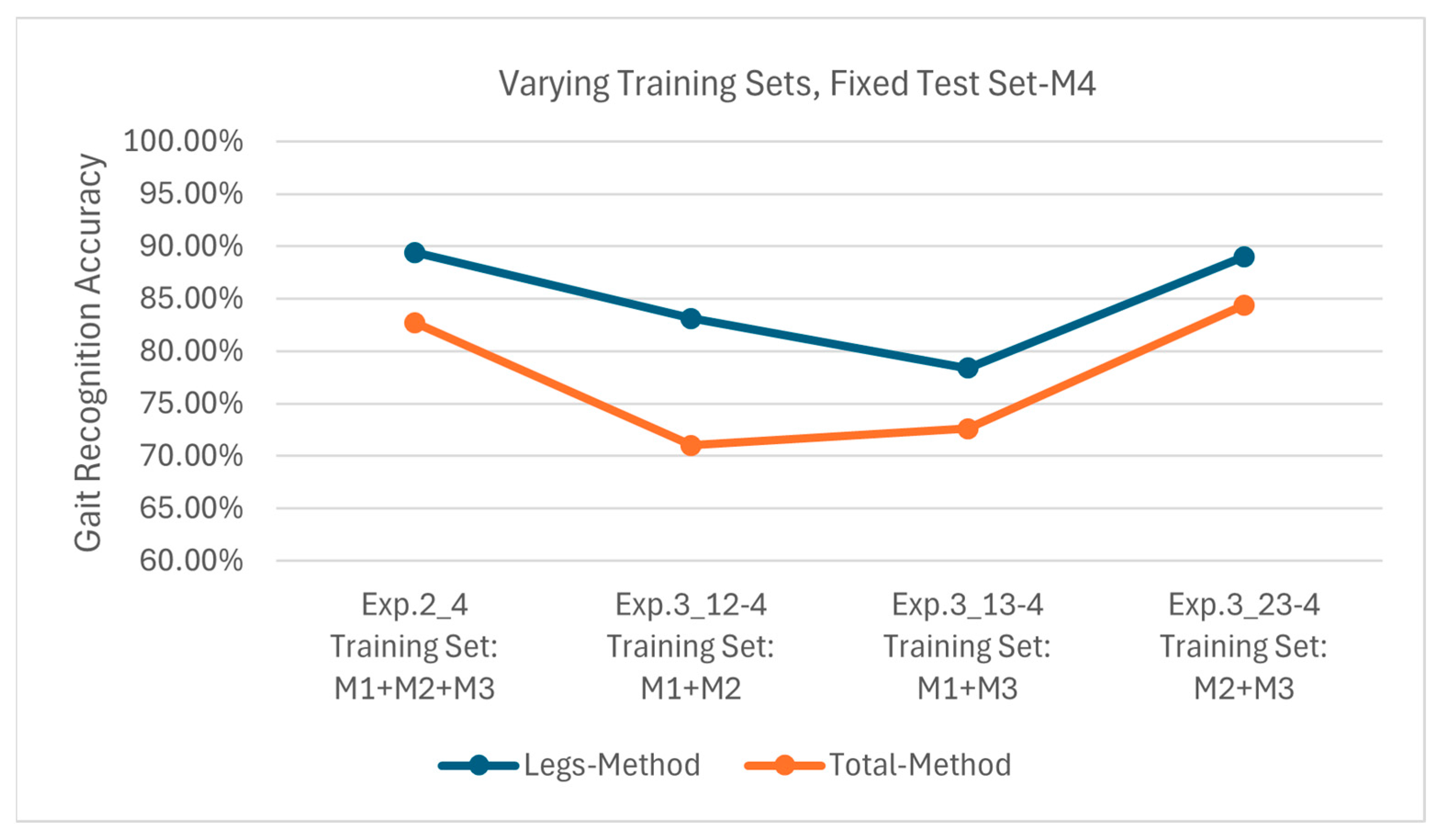

Figure 17 displays the results of Exp. 2_4, 3_12-4, 3_13-4 and 3_23-4, all using M4 as the test set. The Leg Method attained 89.4%, 83.1%, 78.4% and 89.0% accuracies, respectively, and the corresponding accuracies of the Total Method are 82.7%, 71.0%, 72.6% and 84.4%, respectively. Since M4 involves no arm movement while M1–M3 all contain arm motions, there exists significant discrepancy between the training and testing sets regarding arm movement states. This leads to notably lower accuracies for the Total Method when testing with M4; e.g., the maximum is 84.4% and the minimum is 71.0%. Despite the pronounced arm movement differences, the Leg Method still outperforms the Total Method with accuracies ranging from 78.4% to 89.4%.

The above three experiments show that when the model is trained with different sets, the recognition performance on the same test set can differ considerably, highlighting the importance of training sample diversity for gait recognition. In practical applications, dataset construction is costly, making the trade-off between training cost and recognition accuracy a crucial consideration. Compared with the existing Total Method, the proposed Leg Method significantly improves the recognition accuracy without increasing training costs, and it maintains high recognition accuracy even when the testing gait data contain arm movements that are absent from training. These results indicate that the Leg Method is a promising approach for simultaneously reducing training costs and enhancing recognition accuracy.