1. Introduction

ZCG is a critical parameter for evaluating vehicle performance and safety, with its accuracy directly impacting engineering design decisions [

1]. Current

ZCG measurement methods include the axle-lift method, stabilized pendulum method, and tilt-table test [

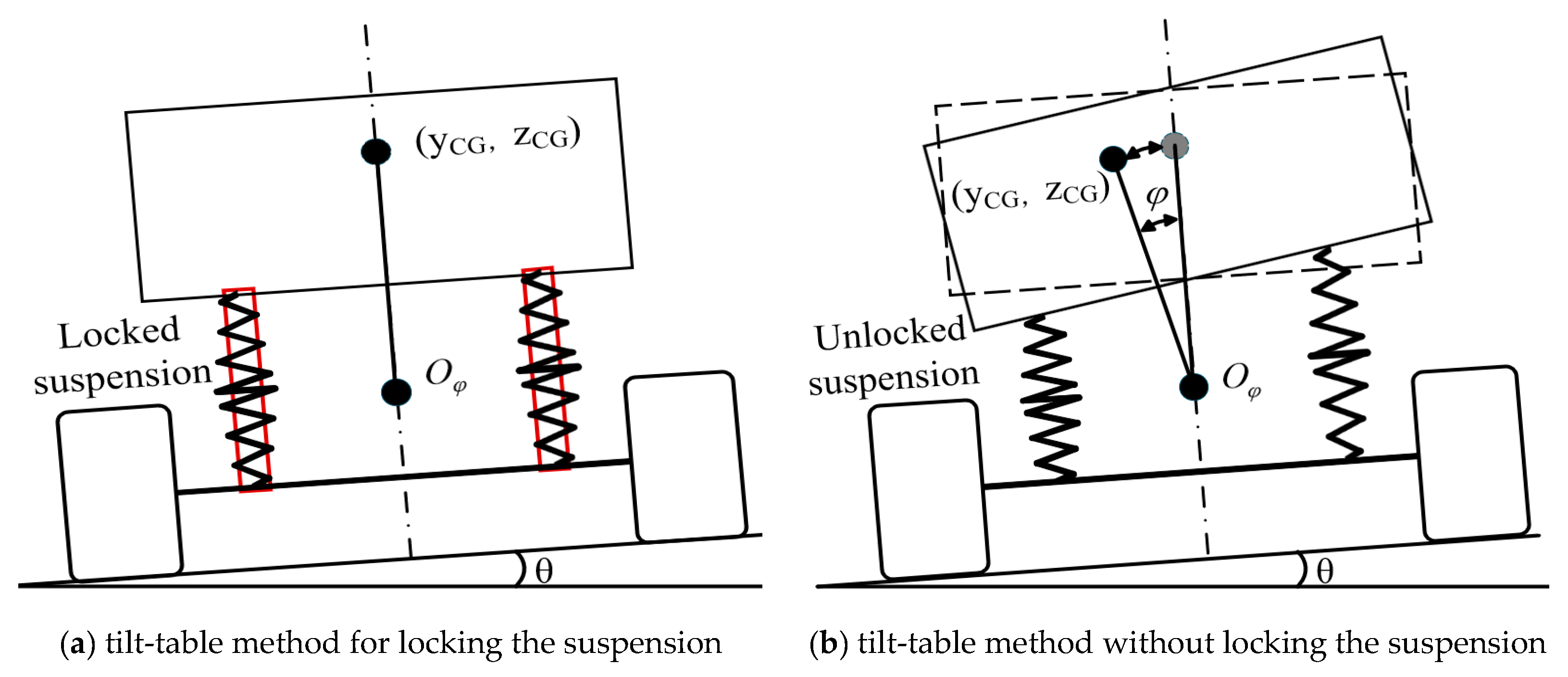

2]. While suitable for small vehicles, the axle-lift method and stabilized pendulum method exhibit limitations when applied to large vehicles such as semi-trailers or full trailers. In the conventional tilt-table method, the vehicle with locked suspension is mounted on a platform, and load transfer variations are recorded at left/right tilt angles of 6–12°. The

ZCG at 0° is then approximated by arithmetic averaging of

ZCG values within this angular range, introducing systematic errors due to angle-dependent extrapolation. Suspension lock procedures present operational complexities and safety risks, motivating research into unlocked-suspension measurement approaches [

3]. When measuring

ZCG without locking the suspension, tilt-induced deformation occurs, violating the rigid-body assumption essential for conventional methods [

4]. Consequently, suspension deformation induces coordinate shifts in the longitudinal position of the center of gravity (

XCG), the lateral position of the center of gravity (

YCG), and

ZCG dimensions. Furthermore, a coupling effect between

YCG and

ZCG makes it challenging to measure either parameter independently with high precision. Aftermarket functional components retrofitted to factory vehicles create variable sprung mass conditions, introducing additional uncertainties. Component heterogeneity and positional variability amplify suspension deformation magnitudes, thereby exacerbating fluctuations in

XCG,

YCG, and

ZCG coordinates. Collectively, three factors compromise

ZCG measurement accuracy:

- (1) angle-dependent extrapolation via arithmetic averaging within 6–12° tilt intervals,
- (2) YCG-ZCG coupling under unlocked suspension impeding independent measurement,
- (3) variable sprung mass exacerbating deformation-induced coordinate shifts.
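To make factors (1) and (2) concrete, the following is a minimal sketch rather than the procedure used in the cited tilt-table references. It assumes a textbook rigid-body moment balance about the low-side tyre contact line. All numeric values (`WEIGHT`, `TRACK`, `Y_CG`, `Z_CG`) and the `lateral_compliance` term are hypothetical. The compliance term is a crude stand-in for unlocked-suspension deformation: the CG is assumed to drift toward the low side in proportion to the sine of the tilt angle.

```python
import math

# Hypothetical vehicle used only for illustration.
WEIGHT = 20000.0  # N, total weight
TRACK = 1.6       # m, track width
Y_CG = 0.02       # m, lateral CG offset toward the high side
Z_CG = 0.75       # m, true CG height


def high_side_load(tilt_deg, lateral_compliance=0.0):
    """Wheel load normal to the platform on the high side.

    Rigid-body moment balance about the low-side contact line.
    lateral_compliance (m) is an assumed deformation term: the CG
    drifts toward the low side by lateral_compliance * sin(tilt).
    """
    theta = math.radians(tilt_deg)
    y_eff = Y_CG - lateral_compliance * math.sin(theta)
    arm = math.cos(theta) * (TRACK / 2 + y_eff) - math.sin(theta) * Z_CG
    return WEIGHT * arm / TRACK


def ycg_from_level(load_level):
    """Lateral CG offset recovered from the level (0 degree) reading."""
    return TRACK * load_level / WEIGHT - TRACK / 2


def zcg_rigid_estimate(load_level, load_tilted, tilt_deg):
    """CG height from one tilt angle, assuming a rigid vehicle."""
    theta = math.radians(tilt_deg)
    transfer = load_level * math.cos(theta) - load_tilted
    return TRACK * transfer / (WEIGHT * math.sin(theta))


def mean_zcg(lateral_compliance, angles=range(6, 13)):
    """Arithmetic mean of the rigid-body estimates over 6 to 12 degrees."""
    load_level = high_side_load(0.0)
    estimates = [
        zcg_rigid_estimate(
            load_level, high_side_load(a, lateral_compliance), a
        )
        for a in angles
    ]
    return sum(estimates) / len(estimates)


rigid_mean = mean_zcg(0.0)   # locked suspension: no deformation
soft_mean = mean_zcg(0.05)   # unlocked: assumed 0.05 m compliance
print(f"rigid mean ZCG: {rigid_mean:.3f} m")
print(f"soft  mean ZCG: {soft_mean:.3f} m")
```

Under this assumed model, the rigid case recovers the true height at every angle, so averaging is harmless. With the compliance term, each single-angle estimate is offset by roughly the compliance times the cosine of the tilt angle, so the lateral drift is read as extra height and the 6–12° mean inherits an angle-dependent bias.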

Conventional approaches to these issues include time-domain analysis [5], frequency-domain analysis [6], and wavelet transforms [7]. Recent studies have integrated conventional techniques with machine learning algorithms, such as combining fast Fourier transform (FFT) with support vector machines (SVMs) [8], short-time Fourier transform (STFT) with a convolutional neural network (CNN) [9], or wavelet transforms with long short-term memory (LSTM) [10]. As a pivotal branch of machine learning, deep learning (DL) has been widely applied across multiple domains [11] due to its strengths in feature extraction, time-series modeling, robustness, and generalization capability. For instance, Hwang et al. implemented a CNN–LSTM–Attention model for monitoring human fatigue [12]. Wang Xingfen et al. proposed a CNN–LSTM–Attention-based approach to improve the accuracy of iron ore futures price prediction [13]. Yang Yong et al. developed an intelligent diagnostic approach using a CNN–LSTM–Attention model, which enhances the accuracy and reliability of track circuit fault diagnosis [14]. Xia K. et al. developed a CNN–BiLSTM–Attention-based model for predicting the aging status of automotive wiring harnesses [15]. Chen Xing et al. developed a CNN–LSTM–Attention-based model for predicting monthly domestic water demand, effectively forecasting water consumption patterns [16]. She Chengxi et al. developed an intelligent fault diagnosis and early warning method for production lines using a CNN–LSTM–Attention model, enabling effective fault prediction through data mining [17]. Zhu Anfeng et al. developed a condition monitoring and health evaluation system for wind turbines using a CNN–LSTM–Attention model [18].

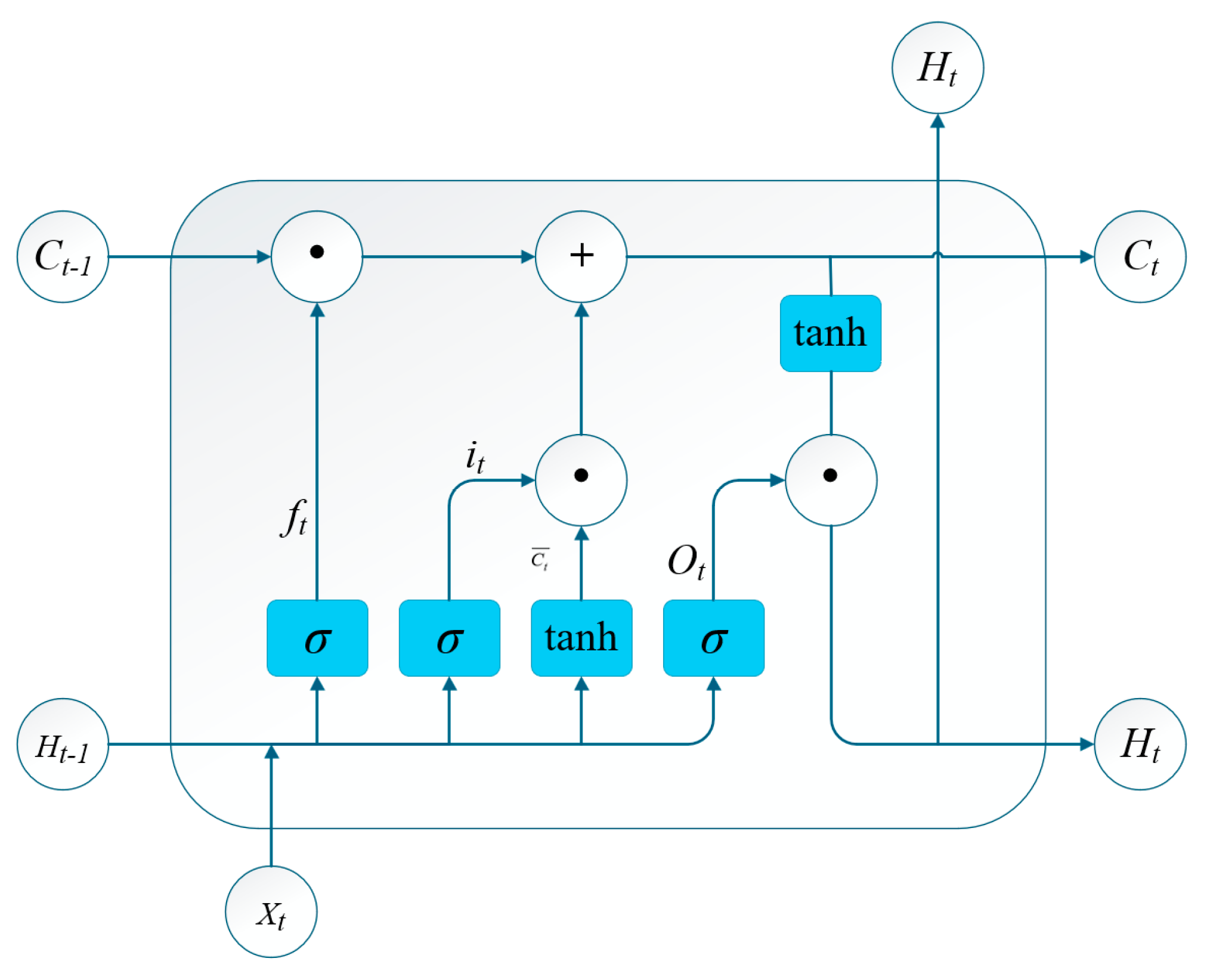

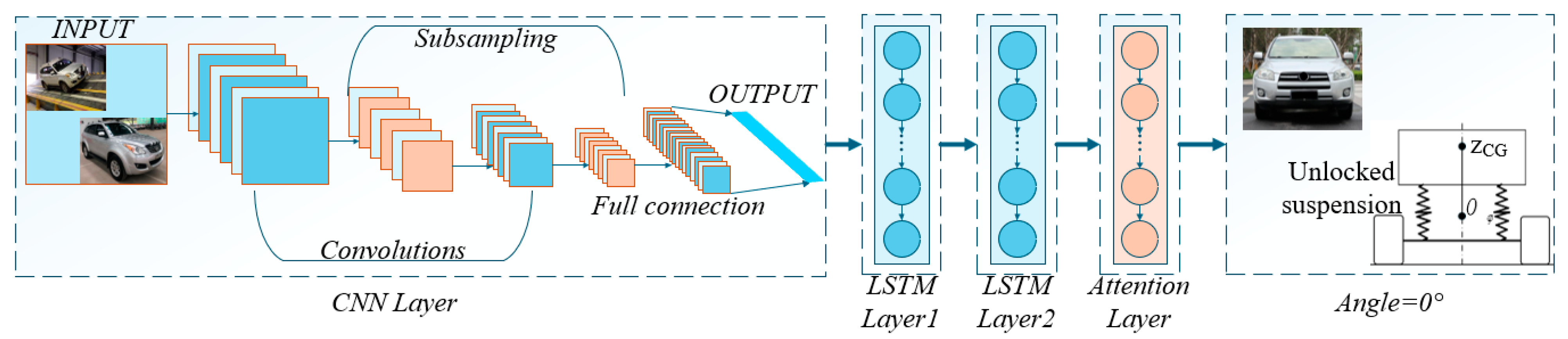

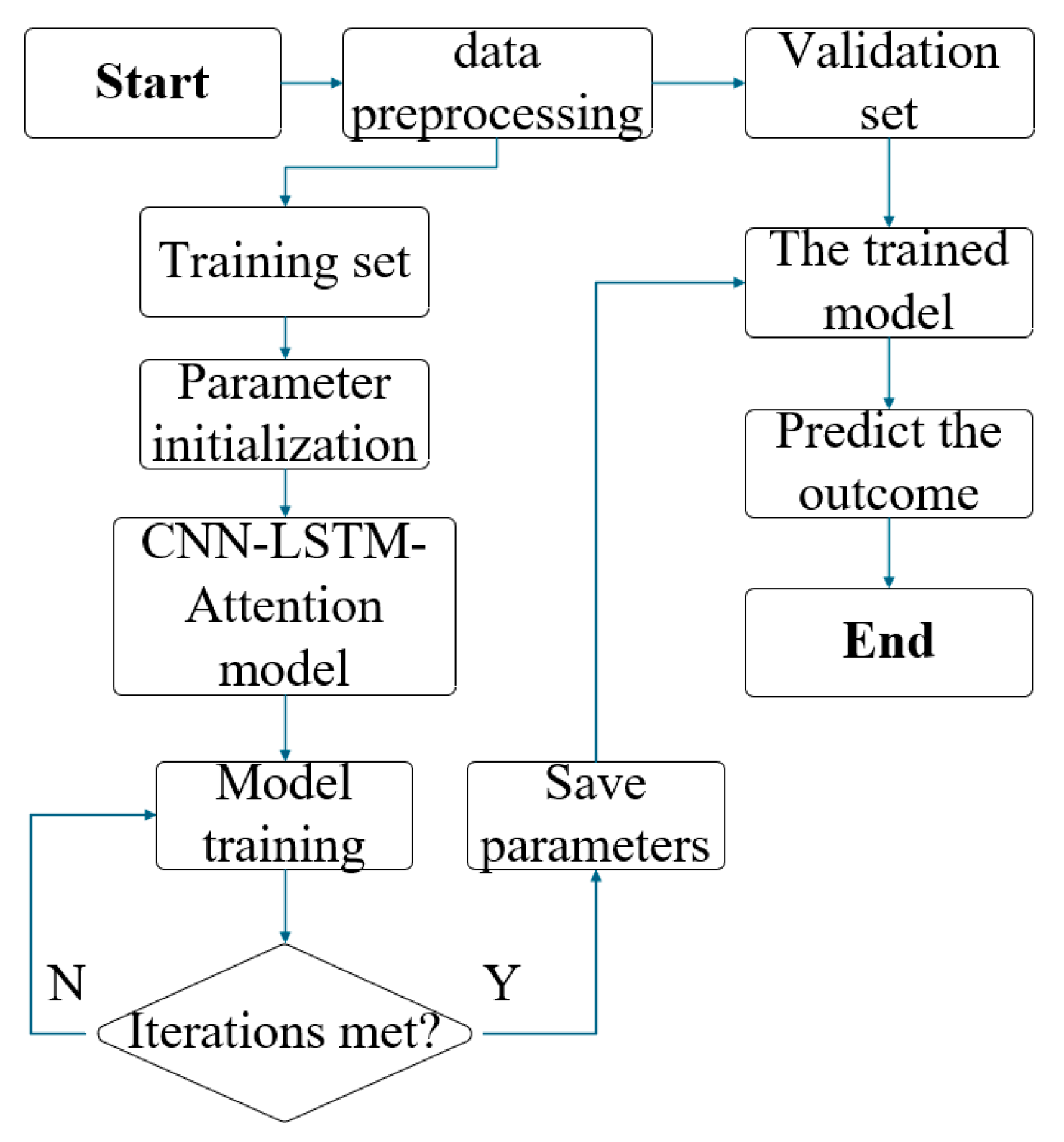

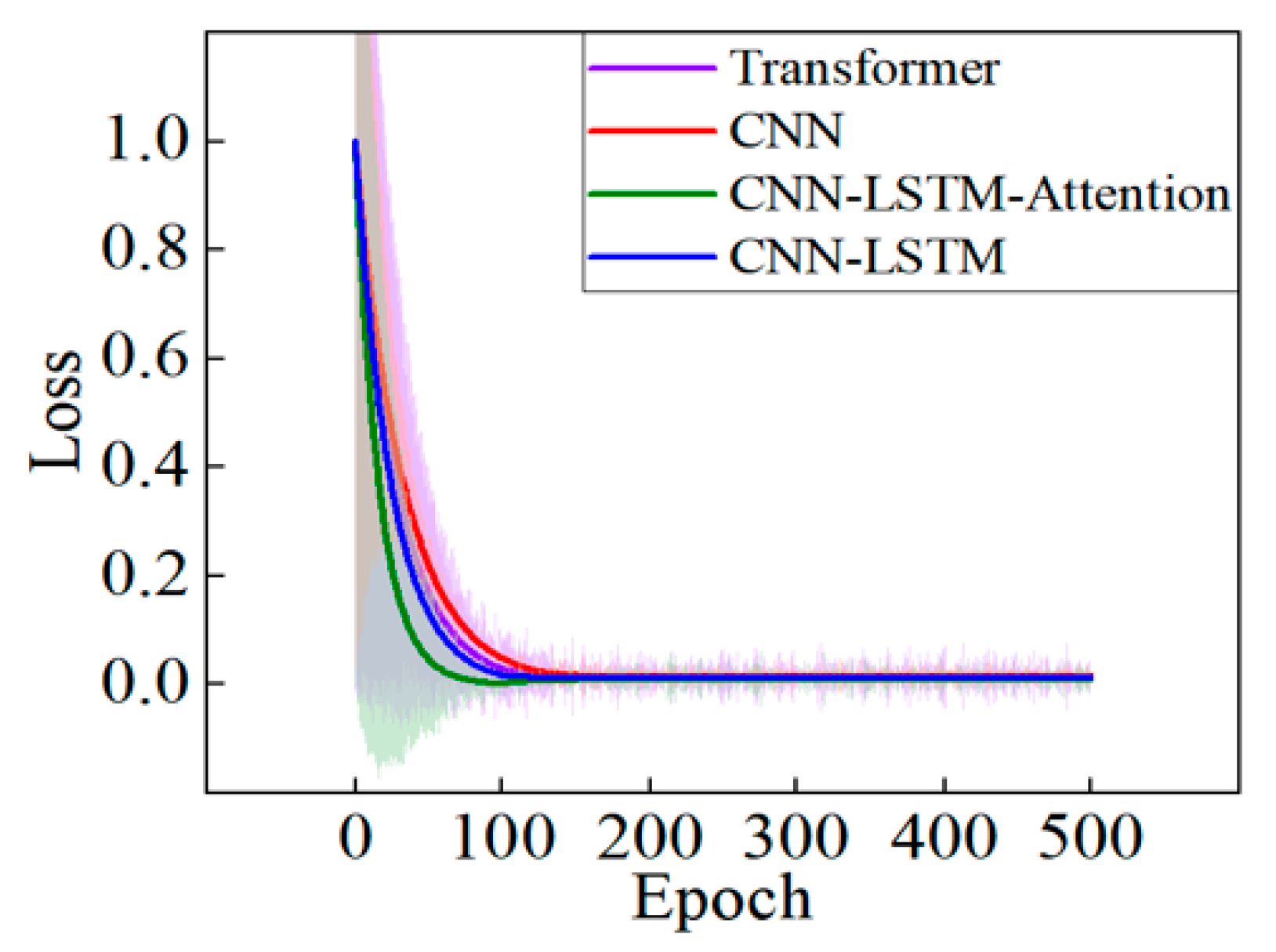

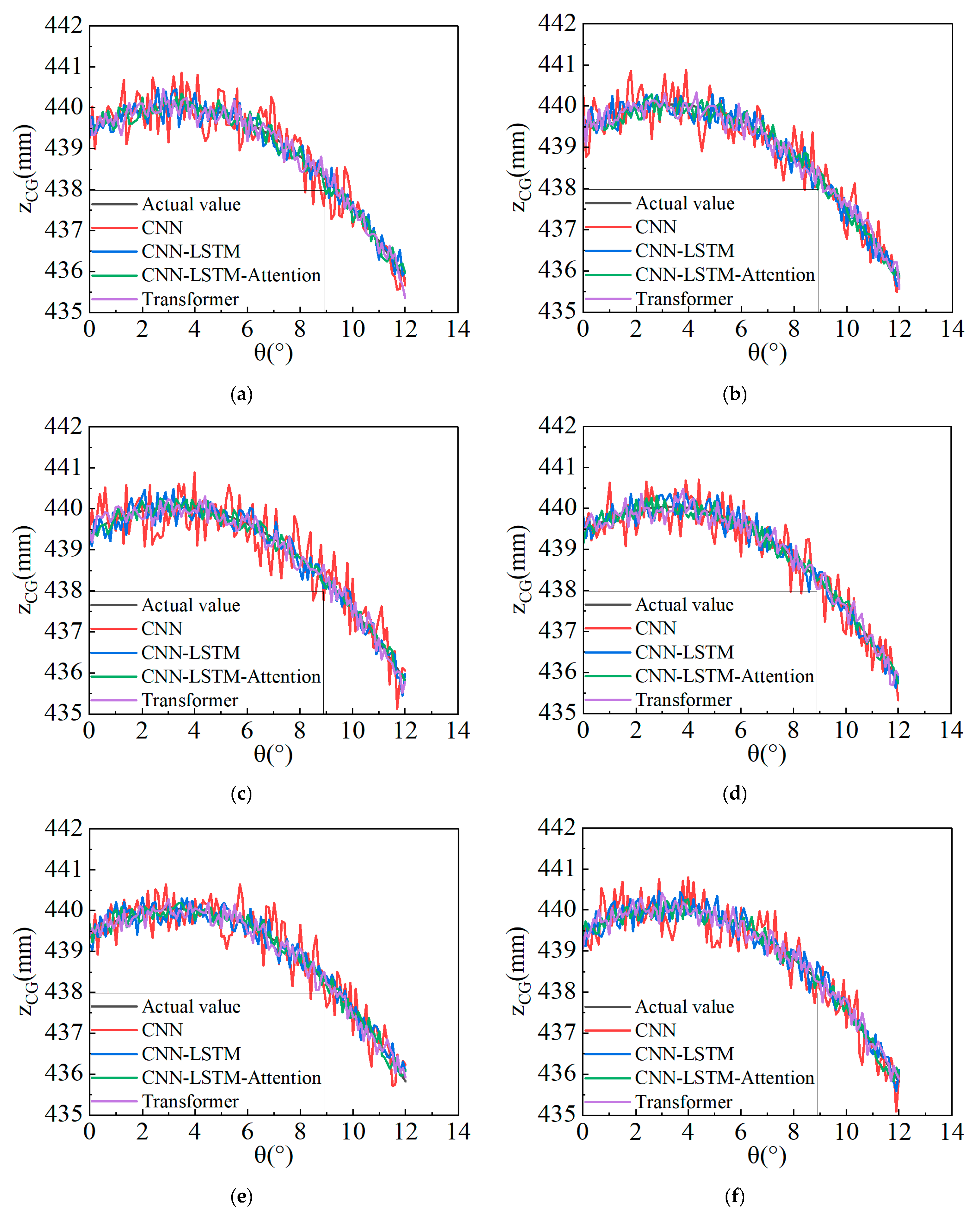

Because the tilt-table test considered here leaves the suspension unlocked, and because variations in sprung mass affect the measured ZCG, this study proposes a CNN–LSTM–Attention model, which integrates CNN, LSTM, and attention mechanisms, to predict the 0° ZCG of a typical two-axle vehicle. The approach builds on the strengths of deep learning and on its successful applications cited above.

5. Conclusions

To mitigate the influence of unlocked suspension and variable sprung mass on ZCG measurements in tilt-table testing, a CNN–LSTM–Attention prediction model is proposed. Specifically, (1) the CNN extracts fine-grained features relating load transfer, suspension stiffness, and roll angle during unlocked suspension articulation; (2) the LSTM captures temporal dependencies in roll-angle sequences; and (3) the attention mechanism dynamically weights features to accommodate variable sprung mass conditions. In experimental validation on production vehicles, the model predicted ZCG more accurately than the benchmark models, and its predictions aligned most closely with the ground-truth values. The framework offers a novel way to locate a vehicle's center of mass from tilt-table tests: the trained model captures the intrinsic relationships among ZCG position, suspension elastic deformation, wheel-load transfer, and roll angle. It therefore enables accurate ZCG prediction under unlocked suspension deformation and variable sprung mass, which is of practical value in vehicle statics and dynamics applications.
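As an illustration of what "dynamically weights features" can mean in step (3), the sketch below implements generic dot-product attention pooling in plain Python. It is not the paper's architecture, whose attention form is not specified in this section. The `hidden_states` list stands in for per-time-step LSTM outputs, and `query` is a hypothetical learned vector; both are made-up values.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden_states, query):
    """Weight each time step by its dot-product score against query.

    Returns the attention weights and the weighted sum (context vector).
    """
    scores = [
        sum(h_i * q_i for h_i, q_i in zip(h, query))
        for h in hidden_states
    ]
    weights = softmax(scores)
    dim = len(hidden_states[0])
    context = [
        sum(w * h[d] for w, h in zip(weights, hidden_states))
        for d in range(dim)
    ]
    return weights, context


# Hypothetical stand-ins for LSTM outputs at three tilt-angle steps.
hidden_states = [[0.1, 0.0], [0.5, 0.2], [2.0, 1.0]]
query = [1.0, 1.0]  # hypothetical learned query vector

weights, context = attention_pool(hidden_states, query)
print("weights:", [round(w, 3) for w in weights])
print("context:", [round(c, 3) for c in context])
```

The weights sum to one and favour the time step whose features align best with the query. In a trained model, the query and the hidden states would both be learned, so the emphasis could shift from sample to sample, for example as the sprung mass changes.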