Integrating UAV-Derived Diameter Estimations and Machine Learning for Precision Cabbage Yield Mapping

Abstract

1. Introduction

2. Materials and Methods

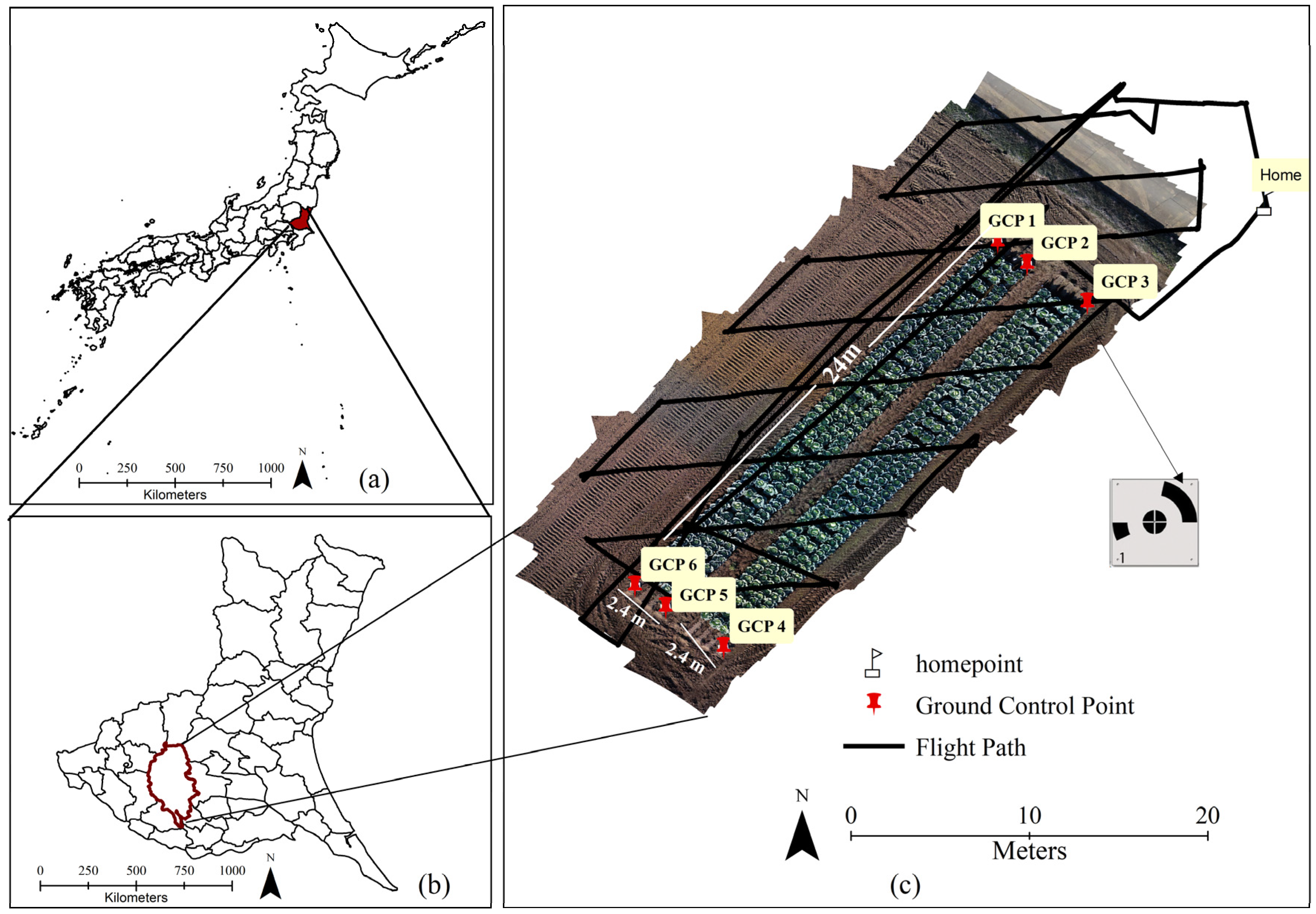

2.1. Experimental Field Summary and Cultivation Method

2.2. Data Acquisition

2.2.1. Synopsis of Drone Images and Data Collection

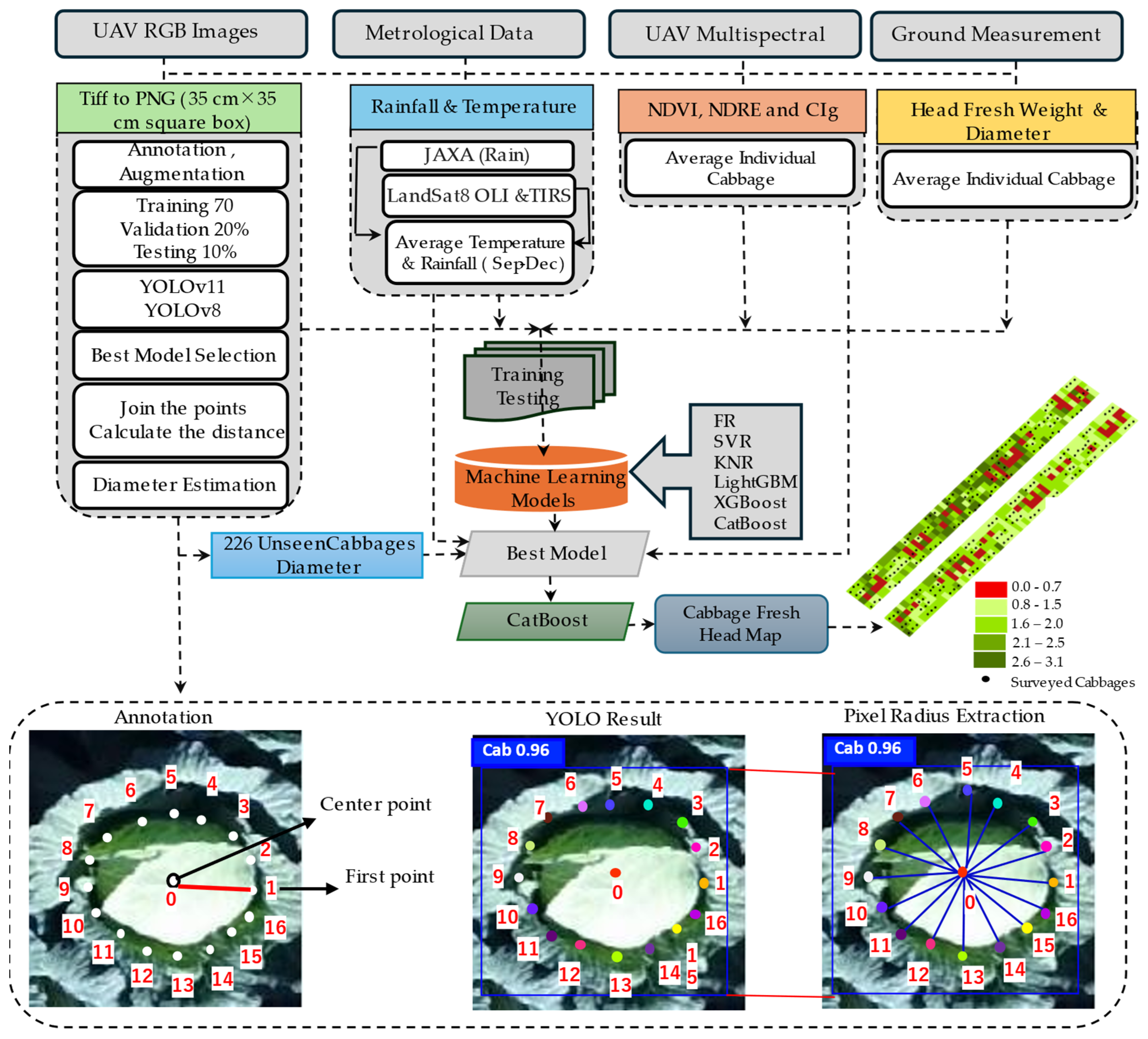

2.2.2. Data Processing and Preparation for Cabbage Head Diameter Estimation Using Pose Estimation Techniques

2.2.3. Climatic Variables
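The abbreviations list land surface temperature (LST) alongside Landsat 8 OLI and TIRS. The sketch below assumes LST was derived with the common single-channel approach from TIRS band 10. It converts digital numbers to brightness temperature and then to emissivity-corrected LST. The radiance rescaling defaults and the emissivity value are assumptions: `ML`, `AL`, `K1` and `K2` should be read from each scene's MTL metadata, and the authors' actual processing chain may differ.

```python
import math

# Landsat 8 TIRS band 10 thermal constants (as listed in scene MTL metadata).
K1_BAND10 = 774.8853    # W / (m^2 sr um)
K2_BAND10 = 1321.0789   # K
WAVELENGTH_UM = 10.895  # effective band-10 wavelength in micrometres
RHO_UM_K = 14388.0      # h * c / k_B (Planck, light speed, Boltzmann), in um*K


def toa_radiance(dn: float, ml: float = 3.342e-4, al: float = 0.1) -> float:
    """Top-of-atmosphere radiance from a band-10 digital number.

    The ml/al defaults are assumed typical values; use RADIANCE_MULT_BAND_10
    and RADIANCE_ADD_BAND_10 from the scene's MTL file instead.
    """
    return ml * dn + al


def brightness_temperature_k(radiance: float) -> float:
    """At-sensor brightness temperature (K) via the inverted Planck relation."""
    return K2_BAND10 / math.log(K1_BAND10 / radiance + 1.0)


def land_surface_temperature_c(bt_k: float, emissivity: float) -> float:
    """Emissivity-corrected LST in degrees Celsius (single-channel formula)."""
    lst_k = bt_k / (1.0 + (WAVELENGTH_UM * bt_k / RHO_UM_K) * math.log(emissivity))
    return lst_k - 273.15


if __name__ == "__main__":
    bt = brightness_temperature_k(toa_radiance(25000))  # hypothetical DN
    print(f"BT = {bt - 273.15:.2f} C, LST = {land_surface_temperature_c(bt, 0.98):.2f} C")
```

With an emissivity below 1 the correction term is negative, so LST comes out slightly warmer than the brightness temperature.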

2.2.4. Canopy Reflectance Indices
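For illustration, the indices named in the abbreviations (NDVI, NDRE, GNDVI, SAVI, MSAVI, CIg and MCARI) can be computed per pixel or per plant. The inputs are the Phantom 4 Multispectral reflectance bands listed in the UAV specification table (G 560 nm, R 650 nm, RE 730 nm, NIR 840 nm). The sketch uses widely published formulas. Two choices are assumptions: the soil factor L = 0.5, and substituting the 730 nm red-edge band into MCARI, which was originally defined at 700 nm. The paper's exact index variants may differ.

```python
import math


def vegetation_indices(g: float, r: float, re: float, nir: float, soil_l: float = 0.5) -> dict:
    """Common canopy indices from surface reflectance values in the 0-1 range."""
    return {
        "NDVI": (nir - r) / (nir + r),
        "NDRE": (nir - re) / (nir + re),
        "GNDVI": (nir - g) / (nir + g),
        # Soil-adjusted index with soil brightness factor L (0.5 is the usual default).
        "SAVI": (1.0 + soil_l) * (nir - r) / (nir + r + soil_l),
        # Self-adjusting MSAVI2 form, which needs no L parameter.
        "MSAVI": (2.0 * nir + 1.0 - math.sqrt((2.0 * nir + 1.0) ** 2 - 8.0 * (nir - r))) / 2.0,
        "CIg": nir / g - 1.0,
        # MCARI with the 730 nm red-edge band standing in for 700 nm (assumption).
        "MCARI": ((re - r) - 0.2 * (re - g)) * (re / r),
    }


print(vegetation_indices(g=0.08, r=0.05, re=0.25, nir=0.45))
```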

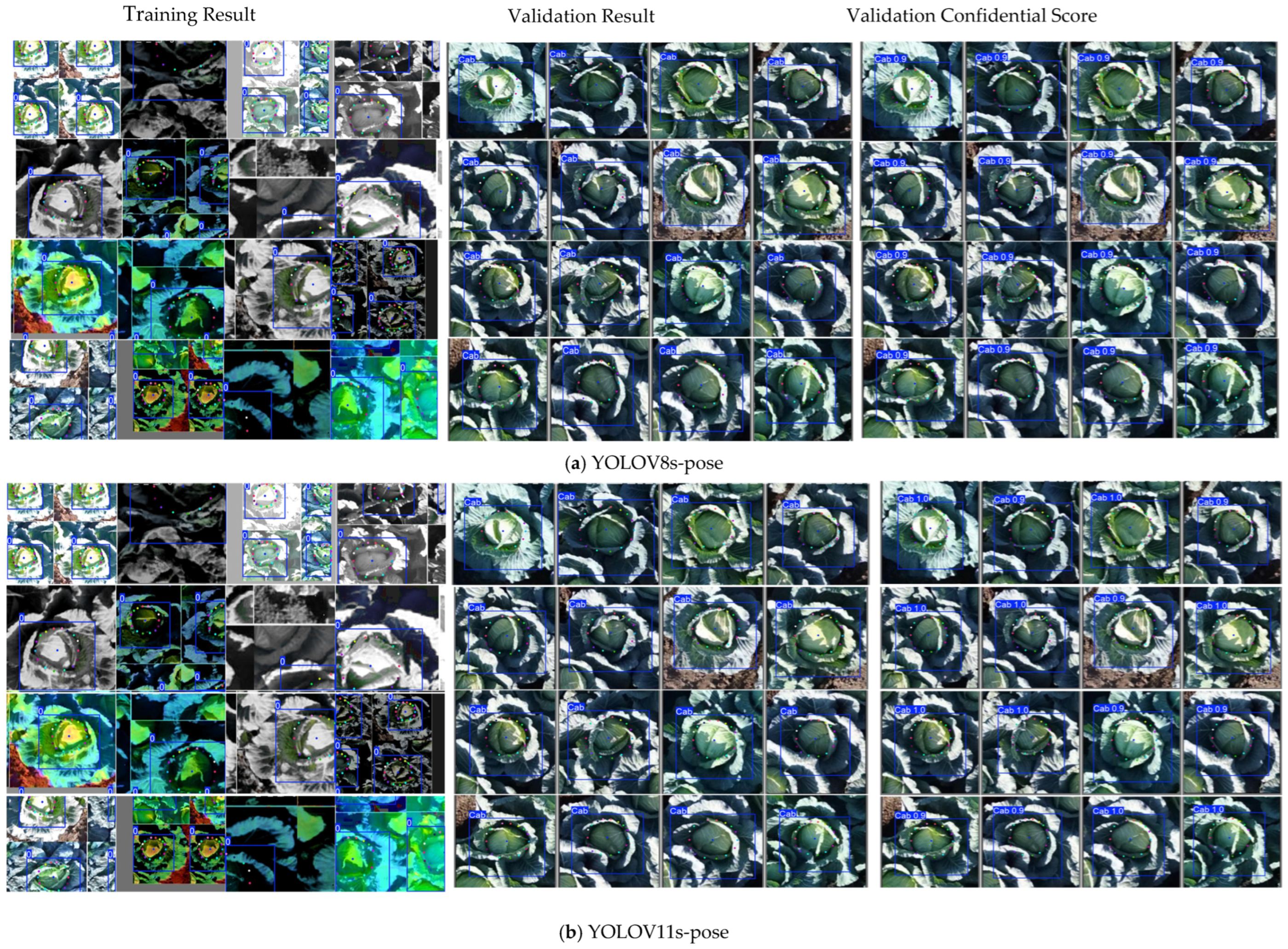

2.3. AI Models for Cabbage Head Diameter Estimation

2.4. Machine Learning Models for Cabbage Head Fresh Weight Prediction

2.5. Accuracy Assessment

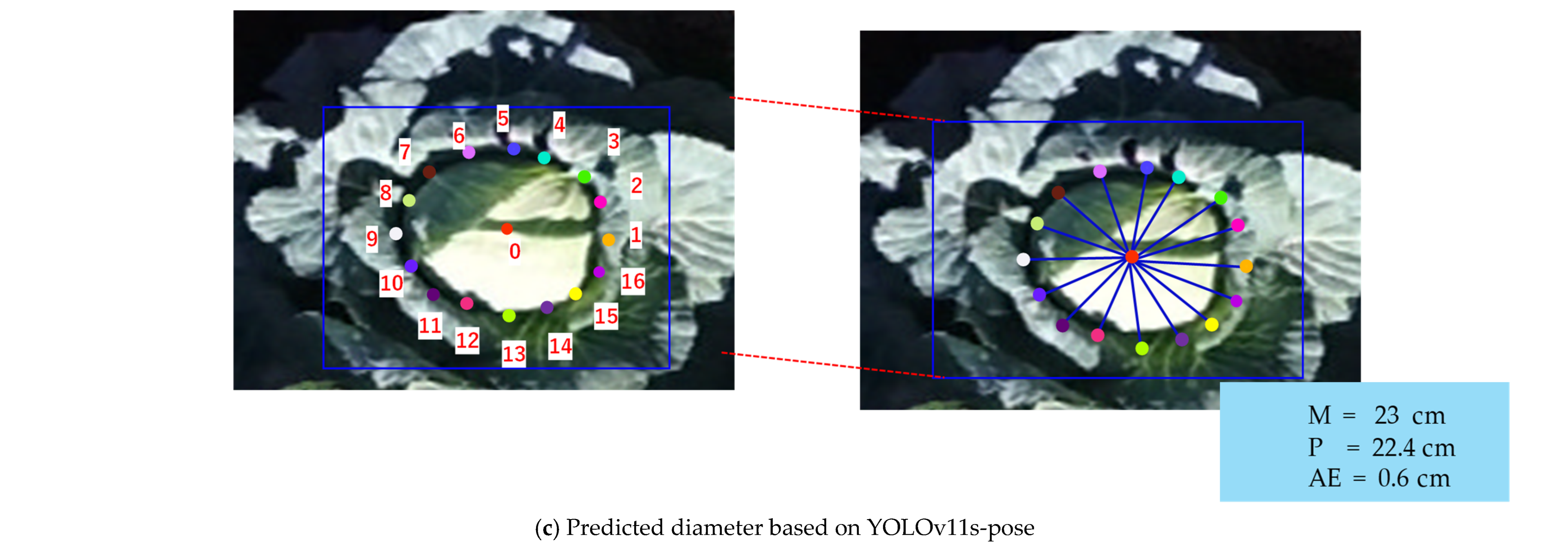

2.5.1. Head Keypoint Accuracy Assessment
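Keypoint accuracy for pose models such as YOLOv8s-pose and YOLOv11s-pose is typically summarized with object keypoint similarity (OKS). Pose mAP@0.5 then counts a predicted head as correct when its OKS is at least 0.5. Below is a minimal sketch of the COCO-style OKS. The per-keypoint constants `kappas` and the use of a pixel area (for example the bounding-box area) as the object scale are assumptions, because the paper's keypoint layout is not given here.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def object_keypoint_similarity(
    pred: Sequence[Point],
    gt: Sequence[Point],
    visible: Sequence[int],
    area: float,
    kappas: Sequence[float],
) -> float:
    """COCO-style OKS: mean of exp(-d^2 / (2 * area * k^2)) over labeled keypoints."""
    num, den = 0.0, 0
    for (px, py), (gx, gy), v, k in zip(pred, gt, visible, kappas):
        if not v:
            continue  # unlabeled keypoints do not contribute
        d2 = (px - gx) ** 2 + (py - gy) ** 2
        num += math.exp(-d2 / (2.0 * area * k ** 2))
        den += 1
    return num / den if den else 0.0
```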

2.5.2. Cabbage Head Diameter and Fresh Weight Accuracy Assessment
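The accuracy measures used for diameter and fresh weight are R², MSE and the mean absolute relative error (MARE), all of which follow standard definitions. A dependency-free sketch is below. Normalizing MARE by the measured value, rather than the predicted one, is an assumption.

```python
from typing import Sequence


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_score(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination, 1 - SS_res / SS_tot (can be negative)."""
    mean_t = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def mare_percent(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute relative error in %, normalized by the measured value (assumed)."""
    return 100.0 * sum(abs(t - p) / abs(t) for t, p in zip(y_true, y_pred)) / len(y_true)
```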

2.5.3. Performance Testing Using Diebold–Mariano Test
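The Diebold–Mariano (DM) test compares the predictive accuracy of two models from their paired errors on the same test samples. The sketch below implements the original statistic under two assumptions: the loss is either squared or absolute error, and the forecast horizon is h, with h = 1 for one-shot predictions such as per-plant fresh weight. It defines d_t = L(e1_t) − L(e2_t), so a positive statistic means model 1 incurs the larger loss. This sign convention is an assumption. The Harvey–Leybourne–Newbold small-sample correction is not applied.

```python
import math
from statistics import NormalDist
from typing import Sequence, Tuple


def diebold_mariano(
    e1: Sequence[float],
    e2: Sequence[float],
    loss: str = "squared",
    h: int = 1,
) -> Tuple[float, float]:
    """Original DM statistic and its two-sided p-value under a standard normal."""
    if len(e1) != len(e2) or len(e1) == 0:
        raise ValueError("error series must be non-empty and of equal length")
    if loss == "squared":
        d = [a * a - b * b for a, b in zip(e1, e2)]
    elif loss == "absolute":
        d = [abs(a) - abs(b) for a, b in zip(e1, e2)]
    else:
        raise ValueError("loss must be 'squared' or 'absolute'")

    n = len(d)
    d_bar = sum(d) / n

    def autocov(k: int) -> float:
        # Sample autocovariance of the loss differential at lag k.
        return sum((d[t] - d_bar) * (d[t - k] - d_bar) for t in range(k, n)) / n

    # Long-run variance; lags 1..h-1 account for serial correlation of h-step forecasts.
    long_run_var = autocov(0) + 2.0 * sum(autocov(k) for k in range(1, h))
    if long_run_var <= 0:
        raise ValueError("degenerate loss differential; DM statistic undefined")
    dm = d_bar / math.sqrt(long_run_var / n)
    p_value = 2.0 * (1.0 - NormalDist().cdf(abs(dm)))
    return dm, p_value
```

With this convention, a significantly negative statistic favours model 1 and a significantly positive one favours model 2.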

2.6. Details of the Experimental Environment

3. Results

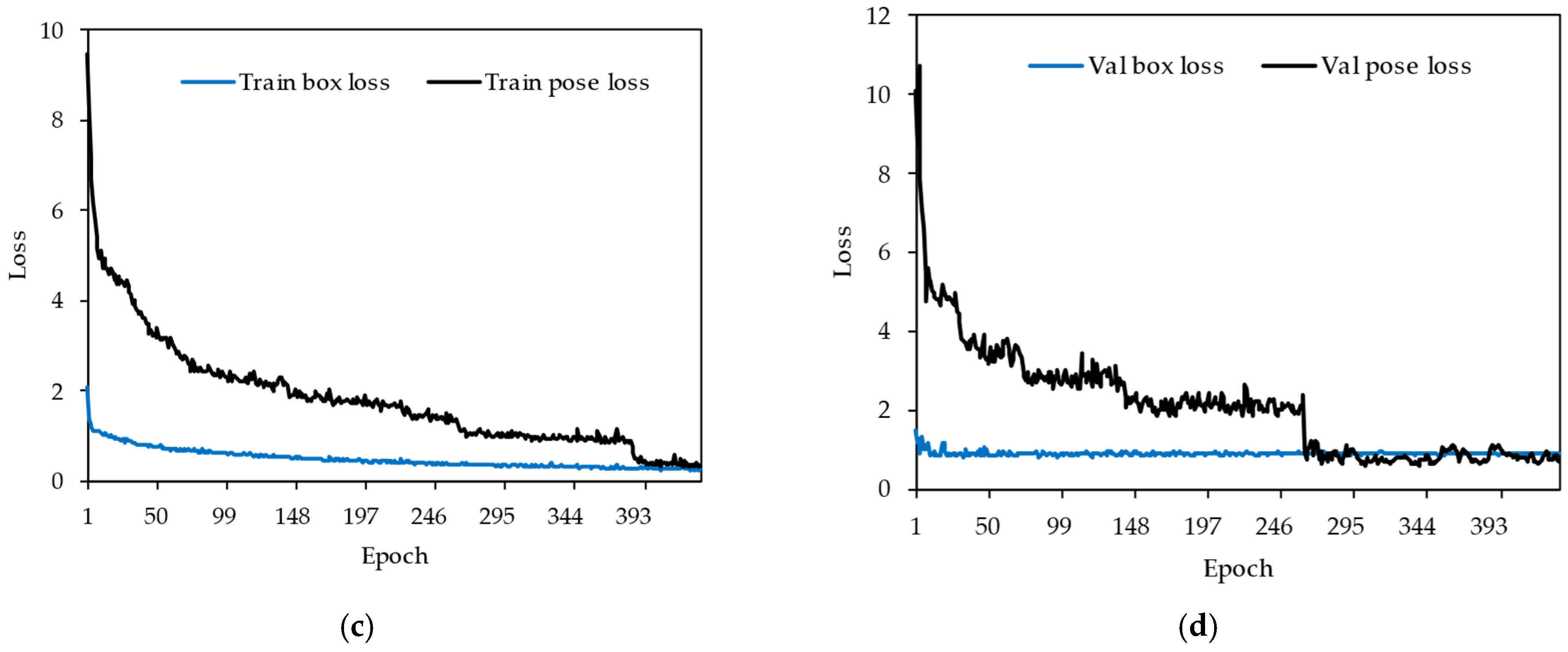

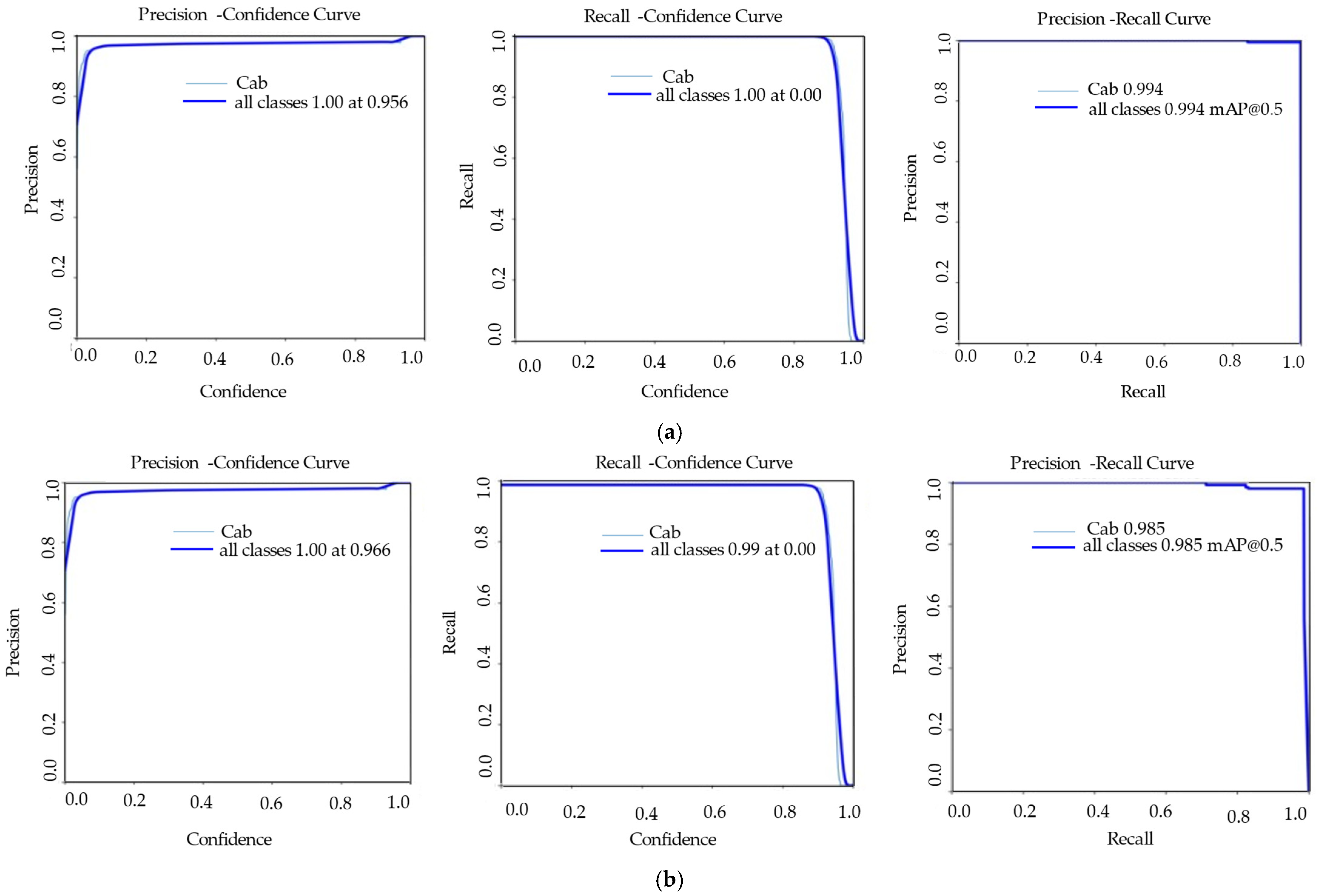

3.1. Cabbage Head Diameter Estimation Using Pose Estimation Model

3.2. Cabbage Head Fresh Weight Prediction Using Machine Learning Models

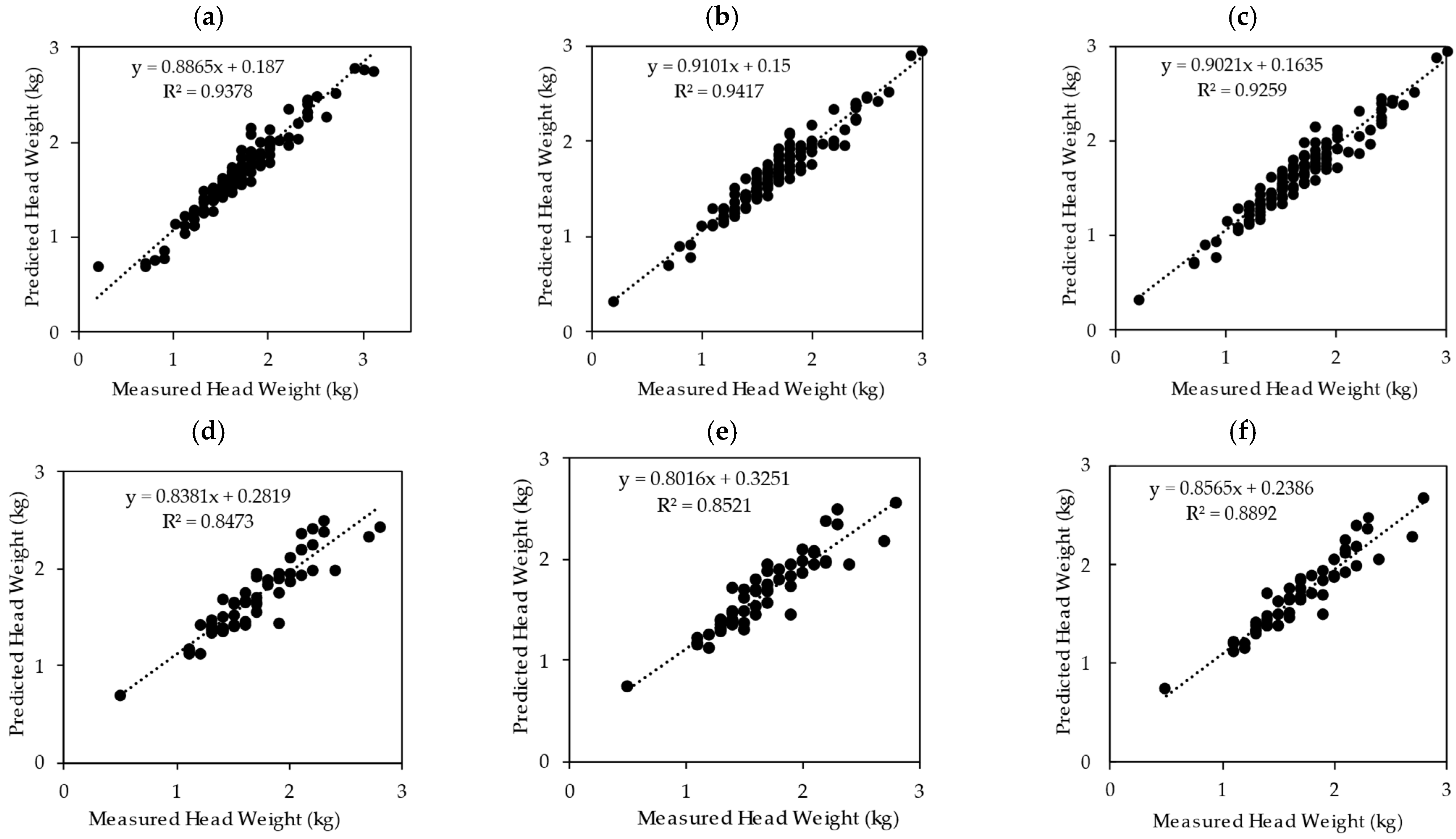

3.2.1. Cabbage Head Fresh Weight Machine Learning Model Performance Evaluation Incorporating R² and MSE

3.2.2. Evaluation of Best Model Performance (Random Forest, Extreme Gradient Boosting and Categorical Boosting) by Diebold–Mariano Statistical Test

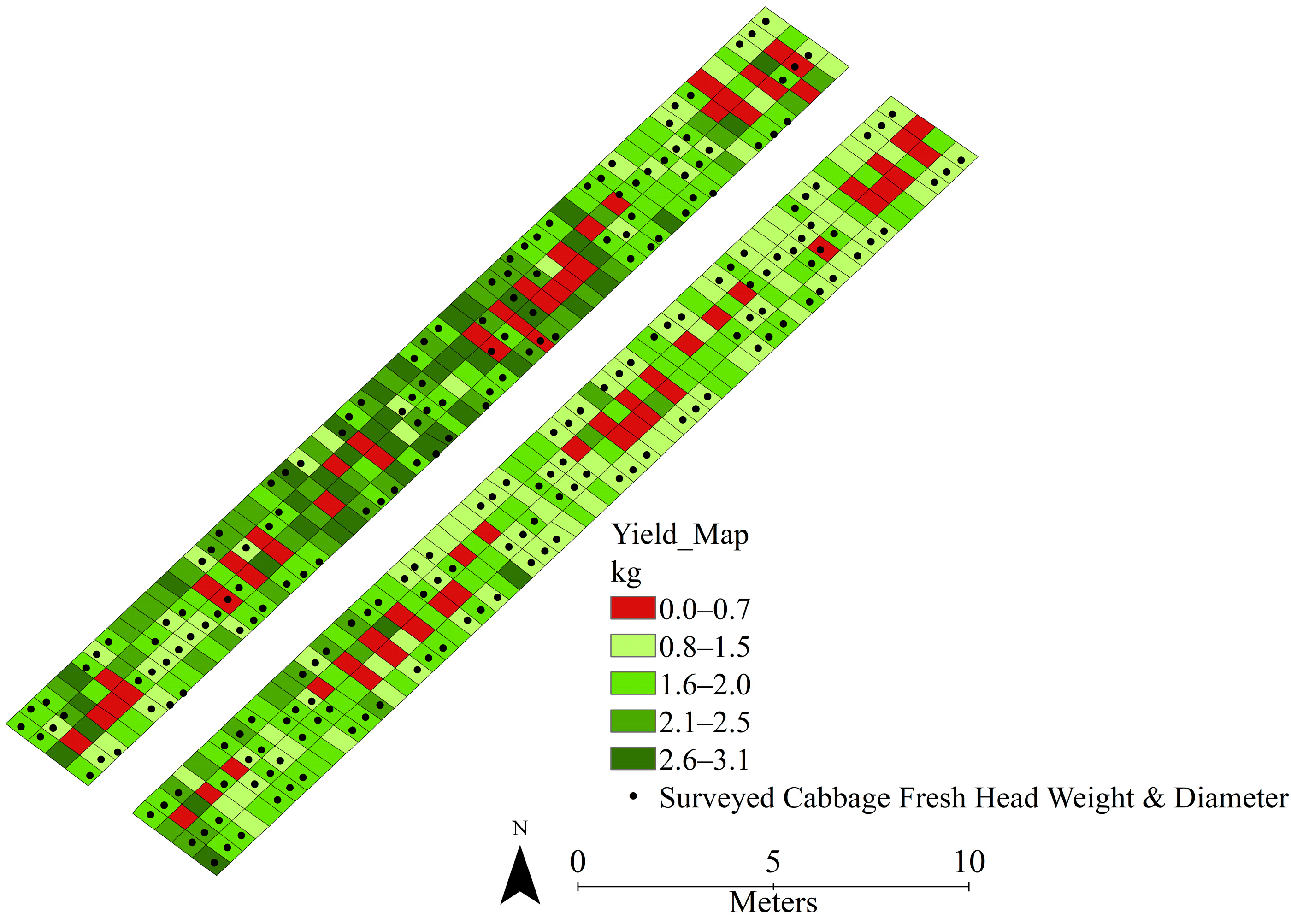

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAV | Unmanned aerial vehicle |
| YOLO | You only look once |
| MARE | Mean absolute relative error |
| NDVI | Normalized difference vegetation index |
| NDRE | Normalized difference red edge index |
| CIg | Green chlorophyll index |
| MLMs | Machine learning models |
| DLT | Deep learning techniques |
| LST | Land surface temperature |
| JAXA | Japan Aerospace Exploration Agency |
| CRP | Calibrated reflectance panel |
| OLI | Operational land imager |
| TIRS | Thermal infrared sensor |
| QA | Quality assessment |
| GNDVI | Green normalized difference vegetation index |
| SAVI | Soil-adjusted vegetation index |
| MCARI | Modified chlorophyll absorption reflectance index |
| MSAVI | Modified soil-adjusted vegetation index |
| RF | Random forest |
| SVR | Support vector regressor |
| KNR | K-neighbors regressor |
| XGBoost | Extreme gradient boosting |
| LightGBM | Light gradient boosting machine |
| CatBoost | Categorical boosting |
| DM | Diebold–Mariano |
| mAP | Mean average precision |
| CHD | Cabbage head diameter |


| Item | Specification |
|---|---|
| UAV type | DJI Phantom 4 Multispectral (P4M) |
| Camera sensor | RGB and multispectral imaging |
| Working frequency | 2.400–2.483 GHz |
| Filters | Blue (B): 450 nm ± 16 nm; Green (G): 560 nm ± 16 nm; Red (R): 650 nm ± 16 nm; Red edge (RE): 730 nm ± 16 nm; Near-infrared (NIR): 840 nm ± 26 nm |
| Lenses | Field of view (FOV): 62.7°; focal length: 5.74 mm (35 mm format equivalent: 40 mm); autofocus set at ∞; aperture: f/2.2 |
| Battery | 6000 mAh LiPo 2S |
| Maximum takeoff weight | 1487 g |
| Maximum flight time | Approx. 27–28 min |

| Model | Hyperparameter | Value |
|---|---|---|
| Random Forest (RF) | n_estimators | 184 |
| | max_depth | 21 |
| | random_state | 42 |
| | min_samples_split | 8 |
| | min_samples_leaf | 2 |
| Support Vector Regressor (SVR) | gamma | scale |
| | kernel | rbf |
| | C | 2 |
| K-Neighbors Regressor (KNR) | n_neighbors | 5 |
| Light Gradient Boosting Machine (LightGBM) | n_estimators | 150 |
| | max_depth | 6 |
| | random_state | 42 |
| | learning_rate | 0.045 |
| Extreme Gradient Boosting (XGBoost) | n_estimators | 375 |
| | max_depth | 1 |
| | random_state | 42 |
| | learning_rate | 0.07 |
| Categorical Boosting (CatBoost) | n_estimators | 400 |
| | max_depth | 1 |
| | random_state | 42 |
| | learning_rate | 0.08 |
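Of the tabulated regressors, the K-neighbors regressor is simple enough to sketch without external libraries. The function below predicts a target, such as head fresh weight, as the unweighted mean of the k = 5 nearest training samples under Euclidean distance. This matches scikit-learn's `KNeighborsRegressor` defaults (`weights="uniform"`, Minkowski metric with `p=2`). The function name `knn_regress` and the feature layout are hypothetical. Features would normally be standardized first, because the distance is scale-sensitive.

```python
import math
from typing import Sequence


def knn_regress(
    train_x: Sequence[Sequence[float]],
    train_y: Sequence[float],
    query: Sequence[float],
    k: int = 5,
) -> float:
    """Mean target of the k nearest training samples (uniform weights, Euclidean)."""
    if k > len(train_x):
        raise ValueError("k cannot exceed the number of training samples")
    # Pair each training sample's distance to the query with its target, nearest first.
    ranked = sorted((math.dist(x, query), y) for x, y in zip(train_x, train_y))
    return sum(y for _, y in ranked[:k]) / k
```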

| Model | Input Image Dimensions (px) | Batch Size | Epochs |
|---|---|---|---|
| YOLOv8 | 640 × 640 | 32 | 800 |
| YOLOv11 | 640 × 640 | 32 | 800 |

| Model | Task | Precision | Recall | Mean Average Precision (mAP@0.5) |
|---|---|---|---|---|
| YOLOv8s | Boxes | 0.888 | 1.00 | 0.995 |
| | Pose | 0.954 | 0.99 | 0.979 |
| YOLOv11s | Boxes | 0.956 | 1.00 | 0.994 |
| | Pose | 0.966 | 0.99 | 0.985 |

| No. | Variety | Measured CHD (cm) | Average Radius (cm) | Predicted CHD (cm) | Absolute Error (cm) | Relative Error (%) |
|---|---|---|---|---|---|---|
| 1 | Tenku | 23.0 | 11.2 | 22.4 | 0.6 | 2.7 |
| 2 | Renbu | 21.2 | 10.89 | 21.78 | 0.58 | 2.6 |
| 3 | Tenku | 21.2 | 11.42 | 22.84 | 1.64 | 7.5 |
| 4 | Mikuni | 20.9 | 10.69 | 21.39 | 0.49 | 2.2 |
| 5 | Renbu | 20.9 | 10.69 | 21.38 | 0.48 | 2.2 |
| 6 | Renbu | 21.2 | 9.92 | 19.84 | 1.36 | 6.2 |
| 7 | Renbu | 20.8 | 11.28 | 22.56 | 1.76 | 8.0 |
| 8 | Renbu | 20.0 | 10.13 | 20.25 | 0.25 | 1.1 |
| 9 | Renbu | 21.6 | 11.64 | 23.27 | 1.67 | 7.6 |
| 10 | Mikuni | 24.05 | 10.69 | 21.38 | 2.67 | 12.1 |
| 11 | Mikuni | 22.45 | 11.14 | 22.28 | 0.17 | 0.8 |
| 12 | Tenku | 23.7 | 10.57 | 21.14 | 2.56 | 11.6 |
| 13 | Renbu | 22.95 | 11.33 | 22.67 | 0.28 | 1.3 |
| 14 | Tenku | 24.3 | 11.96 | 23.92 | 0.38 | 1.7 |
| 15 | Tenku | 22.1 | 12.36 | 24.73 | 2.63 | 12.0 |
| 16 | Tenku | 23.15 | 11.80 | 23.60 | 0.45 | 2.0 |
| 17 | Renbu | 21.55 | 10.89 | 21.77 | 0.22 | 1.0 |
| 18 | Tenku | 22.9 | 11.65 | 23.30 | 0.4 | 1.8 |
| 19 | Renbu | 21.75 | 11.47 | 22.95 | 1.2 | 5.5 |
| 20 | Tenku | 23.5 | 11.52 | 23.03 | 0.47 | 2.1 |
| Mean | | | | | 10.1 | 4.6 |
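In the CHD table, each predicted CHD is twice the average radius (for sample 1, 2 × 11.2 cm = 22.4 cm). The sketch below shows that step under two assumptions: the pose model returns one center keypoint plus several rim keypoints in pixel coordinates, and the orthomosaic's ground sampling distance (GSD, cm per pixel) is known. The keypoint layout, the GSD and the helper `head_diameter_cm` are hypothetical. The sketch also averages the per-sample error columns. The 20 listed absolute errors average to about 1.01 cm, and the relative errors average to 4.6%.

```python
import math
from statistics import mean
from typing import Sequence, Tuple

Point = Tuple[float, float]


def head_diameter_cm(center: Point, rim: Sequence[Point], gsd_cm_per_px: float) -> float:
    """Diameter = 2 x mean center-to-rim distance, converted from pixels to cm."""
    radii_px = [math.dist(center, p) for p in rim]
    return 2.0 * mean(radii_px) * gsd_cm_per_px


# Absolute (cm) and relative (%) errors copied from the 20 rows of the CHD table.
ABS_ERR = [0.6, 0.58, 1.64, 0.49, 0.48, 1.36, 1.76, 0.25, 1.67, 2.67,
           0.17, 2.56, 0.28, 0.38, 2.63, 0.45, 0.22, 0.4, 1.2, 0.47]
REL_ERR = [2.7, 2.6, 7.5, 2.2, 2.2, 6.2, 8.0, 1.1, 7.6, 12.1,
           0.8, 11.6, 1.3, 1.7, 12.0, 2.0, 1.0, 1.8, 5.5, 2.1]

if __name__ == "__main__":
    rim = [(10.0, 0.0), (0.0, 10.0), (-10.0, 0.0), (0.0, -10.0)]  # hypothetical keypoints
    print(round(head_diameter_cm((0.0, 0.0), rim, gsd_cm_per_px=1.12), 2))
    print(f"mean abs error = {mean(ABS_ERR):.2f} cm, mean rel error = {mean(REL_ERR):.1f} %")
```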

| Model | Train R² | Train MSE | Test R² | Test MSE |
|---|---|---|---|---|
| RF | 0.938 | 0.011 | 0.847 | 0.031 |
| SVR | 0.904 | 0.019 | 0.830 | 0.032 |
| KNR | 0.896 | 0.021 | 0.813 | 0.033 |
| LightGBM | 0.884 | 0.022 | 0.751 | 0.046 |
| XGBoost | 0.942 | 0.012 | 0.852 | 0.027 |
| CatBoost | 0.925 | 0.014 | 0.889 | 0.025 |

| Comparison | DM Type | DM Statistic | p-Value | Significance (p < 0.05) | Better Model |
|---|---|---|---|---|---|
| RF vs. XGBoost | Squared Errors | 0.4756 | 0.6344 | Not Significant | - |
| CatBoost vs. RF | Squared Errors | 2.7514 | 0.0059 | Significant | CatBoost |
| CatBoost vs. XGBoost | Squared Errors | 2.66 | 0.0078 | Significant | CatBoost |
| RF vs. XGBoost | Absolute Errors | 1.2266 | 0.22 | Not Significant | - |
| CatBoost vs. RF | Absolute Errors | 2.9753 | 0.0029 | Significant | CatBoost |
| CatBoost vs. XGBoost | Absolute Errors | 2.0725 | 0.0382 | Significant | CatBoost |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Arab, S.T.; Takezaki, A.; Kogoshi, M.; Nakano, Y.; Kikuchi, S.; Tanaka, K.; Hayashi, K. Integrating UAV-Derived Diameter Estimations and Machine Learning for Precision Cabbage Yield Mapping. Sensors 2025, 25, 5652. https://doi.org/10.3390/s25185652

Arab ST, Takezaki A, Kogoshi M, Nakano Y, Kikuchi S, Tanaka K, Hayashi K. Integrating UAV-Derived Diameter Estimations and Machine Learning for Precision Cabbage Yield Mapping. Sensors. 2025; 25(18):5652. https://doi.org/10.3390/s25185652

Chicago/Turabian Style: Arab, Sara Tokhi, Akane Takezaki, Masayuki Kogoshi, Yuka Nakano, Sunao Kikuchi, Kei Tanaka, and Kazunobu Hayashi. 2025. "Integrating UAV-Derived Diameter Estimations and Machine Learning for Precision Cabbage Yield Mapping" Sensors 25, no. 18: 5652. https://doi.org/10.3390/s25185652

APA Style: Arab, S. T., Takezaki, A., Kogoshi, M., Nakano, Y., Kikuchi, S., Tanaka, K., & Hayashi, K. (2025). Integrating UAV-Derived Diameter Estimations and Machine Learning for Precision Cabbage Yield Mapping. Sensors, 25(18), 5652. https://doi.org/10.3390/s25185652