Abstract

Accurately predicting future activities in egocentric (first-person) videos is a challenging yet essential task, requiring robust object recognition and reliable forecasting of action patterns. However, the limited number of observable frames in such videos often lacks critical semantic context, making long-term predictions particularly difficult. Traditional approaches, especially those based on recurrent neural networks, tend to suffer from cumulative error propagation over extended time steps, leading to degraded performance. To address these challenges, this paper introduces a novel framework, Virtual Frame-Augmented Guided Forecasting (VFGF), designed specifically for long-term egocentric activity prediction. The VFGF framework enhances semantic continuity by generating and incorporating virtual frames into the observable sequence. These synthetic frames fill the temporal and contextual gaps caused by rapid changes in activity or environmental conditions. In addition, we propose a Feature Guidance Module that integrates anticipated activity-relevant features into the recursive prediction process, guiding the model toward more accurate and contextually coherent inferences. Extensive experiments on the EPIC-Kitchens dataset demonstrate that VFGF, with its interpolation-based temporal smoothing and feature-guided strategies, significantly improves long-term activity prediction accuracy. Specifically, VFGF achieves a state-of-the-art Top-5 accuracy of 44.11% at a 0.25 s prediction horizon. Moreover, it maintains competitive performance across a range of long-term forecasting intervals, highlighting its robustness and establishing a strong foundation for future research in egocentric activity prediction.

1. Introduction

Driven by emerging applications in smart surveillance, virtual reality (VR), industrial automation, and healthcare monitoring, behavior prediction from first-person perspective videos has become a cornerstone of intelligent systems [1]. Long-term Action Anticipation (LTA) leverages video sequences captured by sensors to infer future behavioral trends, thereby facilitating real-time decision-making and dynamic interactions [2]. For instance, LTA supports various applications [1,2,3,4,5,6], including the detection of anomalous behaviors in smart surveillance systems, the interpretation of user intentions in VR environments, the coordination of human–machine collaboration in industrial settings, and the analysis of patient movements in telemedicine. The emergence of large-scale egocentric video datasets [2,7,8,9,10], such as Ego4D [7] and EPIC-Kitchens [2], has provided a strong foundation for the development of algorithms, significantly enhancing model performance.

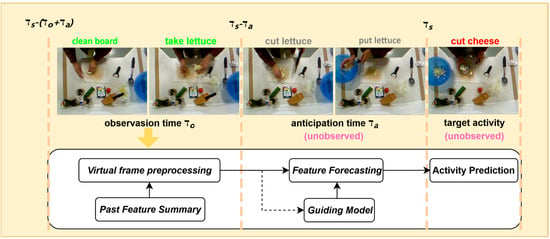

LTA plays a pivotal role in sensor-driven intelligent systems by inferring future behavioral states from limited video sequences [2]. As illustrated in Figure 1, the LTA task analyzes an observable video segment, spanning an initial time to an endpoint, in order to predict the activity state at a later target time; the prediction interval between the end of the observation and the target time is the unseen segment. The complexity of this task stems from multiple factors, including objective challenges such as dynamic environmental contexts and sensor-induced noise, as well as subjective factors such as varied manifestations of the same activity and diverse intentions driven by individual differences.

Figure 1. Illustration of the action anticipation task and our solution.

Despite recent progress, LTA remains a challenging task in complex, real-world scenarios, as existing models often face a trade-off between real-time responsiveness and long-term prediction accuracy. Sensor-derived video data are frequently affected by low frame rates, motion blur, or environmental noise, which result in incomplete and ambiguous semantic information [2]. Fixed-interval frame sampling can further exacerbate semantic loss by missing critical actions. Although Recurrent Neural Networks (RNNs) [11] have shown promising performance in LTA tasks, their recursive operations accumulate errors, which limits long-term prediction accuracy. Several methods attempt to address these challenges by leveraging semantic information from observable data [12,13,14,15]. However, these approaches often rely heavily on historical observations, constraining predictions to specific patterns and making them prone to cumulative errors. Recent studies [16,17,18,19,20,21] have made progress by incorporating multimodal data or pretrained large-scale models, but these methods involve complex multimodal fusion processes, high data acquisition costs, and substantial computational resource demands.

To overcome these limitations, the Virtual Frame-Augmented Guided Forecasting (VFGF) framework is proposed. This framework aims to enhance the accuracy and efficiency of long-term action anticipation in egocentric vision tasks by addressing semantic gaps and error accumulation. The framework employs frame similarity analysis to detect significant differences between adjacent frames and generates transitional frames through linear interpolation, thereby smoothing semantic variations across activity sequences and enhancing the completeness of encoded information. To the best of our knowledge, virtual frame generation is introduced to LTA tasks for the first time, addressing semantic deficiencies in observable frame sequences and providing a richer informational foundation for subsequent encoding. Built upon a Gated Recurrent Unit (GRU) [22] backbone, the Feature Guidance Module (FGM) is proposed to capture latent associations between current semantics and target activities through pretraining, dynamically generating guiding features during recursive prediction to reduce dependence on observable frames and improve the stability and robustness of long-term predictions. Extensive experiments conducted on the EPIC-Kitchens dataset validate that the proposed VFGF framework outperforms state-of-the-art methods in long-term prediction tasks, confirming its effectiveness in egocentric vision applications.

The remainder of this paper is organized as follows: Section 2 reviews related work on egocentric action recognition and video prediction. Section 3 presents the VFGF framework, detailing its virtual frame augmentation and FGM components. Section 4 describes the experimental setup and results, including comparisons with existing methods. Section 5 discusses the implications, limitations, and future directions of the proposed approach. Section 6 concludes this paper, summarizing the key findings and contributions.

2. Related Work

This section reviews the literature on egocentric video understanding, with a focus on identifying methodologies for action recognition and prediction. The review aims to analyze the evolution of approaches, from single-modality classification to complex multimodal fusion and long-term forecasting, and to examine how existing works address inherent challenges such as error accumulation, multimodal fusion, and the exploration of novel architectures.

2.1. Egocentric Action Recognition

Egocentric action recognition is a cornerstone of sensor-driven intelligent systems, focusing on analyzing and predicting human behavior patterns through egocentric video data. Recent advances in deep learning, particularly convolutional neural networks and transformer models, have significantly propelled progress in this field [23,24,25,26,27,28,29,30,31,32,33,34]. Early research on egocentric video recognition focused primarily on activity classification using visual information. For example, Liu et al. [25] introduced a video Transformer with local inductive bias by adapting the Swin Transformer from the image domain, leveraging pretrained image models to achieve a favorable balance between speed and accuracy compared to global self-attention approaches. Building on this foundation, significant progress has been made through multimodal data fusion. Xaviar et al. [30] addressed the issues of continuous data missingness and noise in multimodal sensor data from IoT devices with the Centaur model, which combines a convolutional denoising autoencoder for data cleaning with a deep convolutional neural network incorporating self-attention for multimodal fusion. Trained with random data corruption, Centaur surpassed baseline models across multiple inertial measurement unit datasets. Similarly, Masashi et al. [31] proposed the MM-CDFSL method, which enhances target domain adaptability via multimodal distillation and employs ensemble mask inference to reduce input token counts, thereby improving inference efficiency. More recently, Wang et al. [32] addressed the labor-intensive challenge of action annotation in egocentric videos captured by wearable devices in complex backgrounds by introducing a semi-supervised learning approach based on interaction knowledge distillation. This method utilizes a teacher-student framework, where the teacher network employs a graph neural network to capture spatiotemporal interactions between hands and objects in annotated videos, enabling the student network to learn this knowledge and improve recognition performance.

2.2. Egocentric Video Prediction

The release of large-scale egocentric video datasets, such as EPIC-Kitchens and Ego4D, has spurred extensive research in egocentric video prediction, leading to substantial advancements [2,12,13,14,15,16,17,18,19,20,21,33,34,35,36]. In the domain of feature learning, Furnari et al. [12] introduced the Rolling-Unrolling LSTM architecture, which employs dual LSTMs for historical summarization and future inference, integrates sequence completion pretraining, and utilizes a modality attention mechanism to fuse multimodal features effectively. To address error accumulation in recursive sequence prediction for egocentric activity forecasting, Qi et al. [14] proposed the self-regulated learning (SRL) framework, leveraging contrastive loss to highlight information in current frames, a dynamic reweighting mechanism to capture inter-frame correlations, and multi-task learning to enhance feature representations. Liu et al. [16] addressed error accumulation and semantic deficiencies in egocentric activity prediction through the HRO hybrid framework, which combines memory-augmented recursion with one-shot representation prediction. For long-term action prediction in egocentric videos, Esteve et al. [17] developed a hierarchical architecture utilizing H3M to extract both high- and low-level human information and I-CVAE to generate stable predictions. Zhang et al. [19] focused on object-centric representations for long-term video action prediction, employing visual-language pretrained models to extract task-specific representations via “object prompts” and integrating Transformer architectures to retrieve relevant objects, with effectiveness validated across multiple datasets.

2.3. Summary

Research in egocentric action recognition has evolved from leveraging single visual modalities to incorporating multimodal data, aiming to enhance robustness and contextual understanding. In the closely related task of egocentric video prediction, mainstream approaches have primarily focused on two strategies: (1) designing advanced recurrent architectures to better model historical context and mitigate error propagation in recursive prediction and (2) incorporating external information, such as large-scale pretrained models or multimodal data, to compensate for perceptual uncertainties and semantic deficiencies in observed frames.

Despite these advancements, a significant gap remains in developing a concise and efficient framework that reduces reliance on costly external data acquisition or complex fusion processes. Many methods still face a trade-off between model complexity and generalizability, particularly in recovering missing semantic information due to sensor limitations or rapid activity transitions. To address this gap, our research introduces a solution that focuses on internal frame information augmentation and feature guidance, aiming to smooth semantic variations and reduce long-term dependency without external data.

3. Proposed Method

This study focuses on forecasting activity states at specific future time points by analyzing fixed-length video sequences in the context of egocentric activity prediction. Formally, the observable video segment is sampled at a fixed time interval to obtain a frame sequence in which each element is the frame at the corresponding time point. During the prediction phase, the model takes this observable sequence as input and employs a recurrent prediction mechanism to progressively infer the activity state at the specified target time point. The VFGF framework comprises three core components: visual semantic preprocessing, recurrent sequence prediction, and target activity generation. These components work together to refine semantic sequences and dynamically guide predictions, addressing the challenges of dynamic scenarios and long-term predictions. The design and implementation of each component are detailed below.

3.1. Visual Semantic Preprocessing

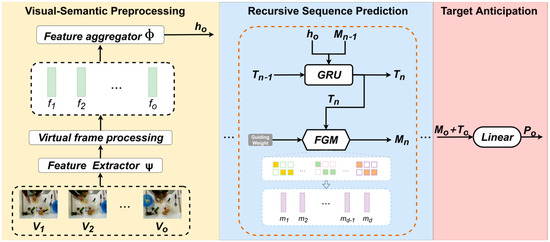

The frame sequence derived from the observable historical video segment serves as the sole input for model forecasting. To effectively encode these frame sequences for long-term activity prediction, this paper proposes a feature extraction and aggregation method enhanced by virtual frame augmentation (VFA). As illustrated in Figure 2, this approach extends the original feature sequence to smooth semantic transitions and integrates spatiotemporal feature extraction with recursive aggregation to construct rich contextual features, thereby establishing a robust foundation for accurate activity predictions.

Figure 2. The VFGF for long-term egocentric activity prediction comprises three core components. The first component extracts visual features, augments them with virtual frames, and fuses the sequential features to enrich the activity context. The second component employs a GRU for recursive prediction, complemented by a Transformer-based encoder–decoder architecture that processes the initial features and generates guiding features, which are subsequently used as input for the next time step in the GRU. The third component performs activity classification on the final predictions to yield anticipated outcomes for future action tasks.

To capture the spatiotemporal dynamics of the sequence, a Temporal Segment Network (TSN) [37] is employed as the feature extractor $\phi$ to extract spatiotemporal features for each frame. Alternatively, other networks, such as I3D [38], can be used to achieve comparable feature extraction. The feature extracted from frame $x_t$ is represented as:

$$f_t = \phi(x_t),$$

where $f_t$ integrates local spatial information with global contextual associations. The TSN is selected for its strong performance in video spatiotemporal modeling, ensuring robust feature representations.

To mitigate semantic loss caused by rapid scene transitions or action changes, virtual frame augmentation is first applied to the observable frame sequence. Let $f_i$ denote the feature of frame $x_i$ in an observable sequence of $T$ frames. The similarity between the features of each pair of adjacent frames is computed to identify pairs with significant differences. A similarity vector $S = (s_1, \dots, s_{T-1})$ is defined to quantify the correlation between the features of adjacent frames within the observable sequence, and cosine similarity is employed to calculate it. For instance, the similarity between frames $x_i$ and $x_{i+1}$, where $1 \le i \le T-1$, is computed as:

$$s_i = \frac{f_i \cdot f_{i+1}}{\lVert f_i \rVert \, \lVert f_{i+1} \rVert},$$

where $\cdot$ denotes the dot product, computed as the sum of element-wise multiplications. Linear interpolation is used to generate virtual frames, as it efficiently produces spatially and temporally coherent sequences [39]. A threshold $\tau$ is then defined as the minimum similarity required for smooth transitions between the features of adjacent frames, as validated in Section 4.5.2. The set of indices requiring insertion is $\mathcal{I} = \{\, i \mid s_i < \tau \,\}$. The total number of inserted features is denoted as $K = |\mathcal{I}|$, so the extended sequence has length $T + K$. The augmented sequence is generated via linear interpolation; the virtual feature inserted between $f_i$ and $f_{i+1}$ for $i \in \mathcal{I}$ is calculated as follows:

$$\tilde{f}_i = \alpha f_i + (1 - \alpha) f_{i+1},$$

where $\alpha$ represents the weight of the original frame feature $f_i$ in the virtual frame feature $\tilde{f}_i$, set to 0.5 by default. The augmented sequence is thus obtained.
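The augmentation step described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `cosine_similarity` and `augment_with_virtual_frames` are hypothetical, features are plain lists of floats rather than TSN outputs, and a zero-norm feature is treated as dissimilar to everything. The default threshold of 0.3 and interpolation weight of 0.5 are the values reported in the paper.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        # Assumption: a zero vector is treated as dissimilar to any other vector.
        return 0.0
    return dot / (norm_a * norm_b)


def augment_with_virtual_frames(features, threshold=0.3, alpha=0.5):
    """Insert an interpolated feature between adjacent features whose
    cosine similarity falls below `threshold`."""
    if not features:
        return []
    augmented = [features[0]]
    for prev, curr in zip(features, features[1:]):
        if cosine_similarity(prev, curr) < threshold:
            # Virtual feature: weighted blend of the two neighbouring features.
            virtual = [alpha * p + (1.0 - alpha) * c for p, c in zip(prev, curr)]
            augmented.append(virtual)
        augmented.append(curr)
    return augmented


# Toy sequence: the first two features are similar, the third differs sharply,
# so one virtual feature is inserted between the second and third.
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
augmented = augment_with_virtual_frames(features)
print(len(features), "->", len(augmented))
```

At most one virtual feature is inserted per adjacent pair, so the extended sequence can never exceed twice the original length minus one.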

To aggregate the dynamic context of the feature sequence, a GRU is used as the recursive aggregator , processing the feature sequence temporally up to the final time step:

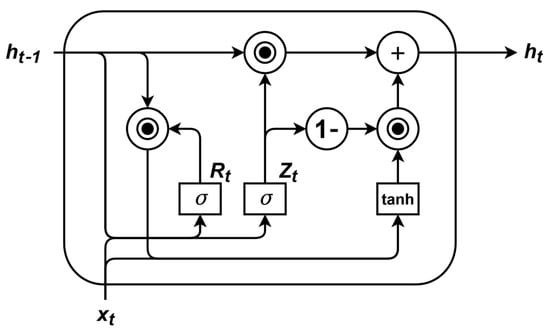

where represents the comprehensive feature over the entire observable time steps. The GRU’s gating mechanism effectively mitigates error accumulation, enhancing the stability of long-term predictions. Through these steps, this study constructs a model that efficiently integrates past video features, providing rich contextual information for subsequent activity prediction. The structure of the GRU is illustrated in Figure 3.

Figure 3. The GRU architecture. The current hidden state is determined jointly by the input at the current time step and the hidden state from the previous time step.
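For reference, the dependence of the current hidden state on the current input and the previous hidden state can be written out using the commonly cited GRU formulation. The symbols below ($x_t$ for the input, $h_t$ for the hidden state, $W$, $U$, $b$ for the learnable parameters) are illustrative and are not taken from the paper; implementations also differ in small conventions, such as which of $z_t$ and $1 - z_t$ weights the previous state.

```latex
\begin{aligned}
z_t &= \sigma\left(W_z x_t + U_z h_{t-1} + b_z\right) && \text{(update gate)} \\
r_t &= \sigma\left(W_r x_t + U_r h_{t-1} + b_r\right) && \text{(reset gate)} \\
\tilde{h}_t &= \tanh\left(W_h x_t + U_h \left(r_t \odot h_{t-1}\right) + b_h\right) && \text{(candidate state)} \\
h_t &= (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t && \text{(new hidden state)}
\end{aligned}
```

Here $\sigma$ is the logistic sigmoid and $\odot$ denotes element-wise multiplication.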

3.2. Recurrent Sequence Prediction

Building on the feature encoding enhanced by virtual frame augmentation, the recursive sequence prediction module iteratively processes contextual features to infer future activity states, serving as a cornerstone for long-term egocentric activity prediction. As illustrated in Figure 2 (Recursive Sequence Prediction), this module takes the comprehensive feature representation generated by the preprocessing module and iteratively predicts the state at each subsequent time step until the target time step is reached.

At each anticipation time step, the model feeds the guidance information and the GRU hidden state from the previous time step into a GRU layer to generate an initial prediction state.

At the onset of the recursive sequence, both the guidance information and the hidden state are initialized from the comprehensive feature produced by the preprocessing module. However, as highlighted in the introduction, the initial prediction state often lacks sufficient accuracy in complex dynamic scenarios. Directly employing it for subsequent recursive predictions can result in cumulative errors, significantly impairing long-term prediction performance.

To overcome this limitation, we introduce an FGM (Feature Guidance Module) that dynamically generates guiding semantic features to refine the recursive prediction process. By capturing latent associations between contextual features and target activities based on the current semantic environment, the FGM produces auxiliary features to steer the prediction direction (see Section 3.3 for implementation details). Unlike traditional recursive models, which heavily rely on observable information, the FGM effectively mitigates error accumulation, ensuring both stability and accuracy in predictions.

By integrating the initial prediction with the guiding features from the FGM, the model iteratively executes the prediction process until the target time step is reached, yielding the final activity state prediction. Owing to the optimized prediction mechanism, this module substantially enhances the robustness of long-term egocentric activity prediction, providing critical support for the framework’s overall performance breakthrough.
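The control flow of this guided recursion can be sketched as follows. It is a schematic under stated assumptions rather than the paper's code: `recursive_anticipation`, `toy_gru_step`, and `toy_guide` are hypothetical names, the recurrent step and the guidance module are passed in as plain callables, and scalars stand in for feature vectors. Initializing both the guidance and the hidden state from the aggregated context follows the description in this section.

```python
def recursive_anticipation(context, gru_step, guide, num_steps):
    """Roll the predictor forward `num_steps` times.

    context   -- aggregated feature of the observed segment
    gru_step  -- callable (guidance, hidden) -> new hidden state
    guide     -- callable (state) -> guiding feature for the next step
    """
    guidance = context  # both inputs start from the aggregated context
    hidden = context
    states = []
    for _ in range(num_steps):
        # Initial prediction state from previous guidance and hidden state.
        hidden = gru_step(guidance, hidden)
        # The guidance module refines what is fed into the next step.
        guidance = guide(hidden)
        states.append(hidden)
    return states, guidance


# Toy stand-ins so the loop can be executed: real modules would be a GRU cell
# and the pretrained (frozen) guidance network operating on feature vectors.
def toy_gru_step(guidance, hidden):
    return 0.5 * (guidance + hidden)


def toy_guide(state):
    return state + 1.0


states, final_guidance = recursive_anticipation(0.0, toy_gru_step, toy_guide, num_steps=3)
print(states, final_guidance)
```

The point of the structure is that the next recurrent input is the guiding feature rather than the raw prediction, so an inaccurate initial state is not fed back unchanged.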

3.3. Feature Guidance Module

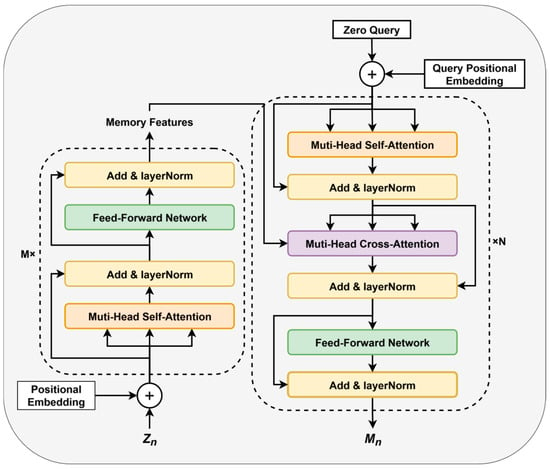

As discussed in Section 2.2, existing methods based on recursive prediction models suffer from significant performance degradation over extended prediction time steps due to error accumulation. To address the limitations and enhance the model’s ability to handle complex activity features in long-term activity prediction tasks, we propose the FGM, as shown in Figure 4.

Figure 4. The FGM is composed of a Transformer-based encoder–decoder architecture, incorporating multi-head self-attention and multi-head cross-attention mechanisms. This design enables efficient capture of latent logical relationships within sequential data, providing effective guiding information for the GRU in recursive prediction tasks.

The core objective of the FGM is to uncover latent relationships between features through pretraining and to leverage the learned knowledge as a guiding mechanism during recursive training, assisting the base recurrent network in effective prediction. The FGM is implemented using a transformer-based encoder–decoder framework, with the following details. First, the predictive features are linearly projected using learnable parameters. Subsequently, learnable positional embeddings are added to the projected input sequence.

The sequence with positional encodings is then passed through transformer blocks. Each transformer block consists of a multi-head self-attention mechanism, residual connections with layer normalization, and a feed-forward neural network. The multi-head self-attention mechanism incorporates learnable parameters, allowing different heads to capture distinct patterns during training. The propagation through each transformer block is defined as:

The transformer preserves the dimensionality of the input feature sequence, ensuring that the output sequence maintains the same shape as the input.

Next, the decoding process begins. The decoder, which is also built from transformer blocks, incorporates an additional multi-head cross-attention module to integrate the encoder’s output with the decoder’s query vectors. The query vectors are obtained in the same way as the encoder inputs, as shown in Figure 4. The cross-attention takes its queries from the decoder and its keys and values from the encoder output.

Finally, the decoder employs feed-forward network (FFN) and layer normalization (LN) layers to produce the guiding features for the next frame. The FGM’s network weights are obtained through pretraining and remain fixed during the prediction process, relying solely on the pretrained knowledge to construct and introduce plausible future features. The experimental setup uses an encoder depth of 2, a decoder depth of 2, and a total of 8 attention heads. For more settings, please refer to the experimental section. This approach leverages available contextual information, reducing the model’s strong dependence on observable data and mitigating error accumulation in recursive prediction sequences.
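As a concrete illustration of the cross-attention step, the sketch below implements single-head scaled dot-product attention in plain Python, with queries standing in for the decoder side and keys and values for the encoder output. It is deliberately simplified: the learned query, key, and value projections, the 8-head split, the residual connections, and layer normalization used in the FGM are all omitted, and the function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Single-head scaled dot-product attention.

    queries -- list of decoder-side vectors
    keys    -- list of encoder-side vectors (same dimension as queries)
    values  -- list of encoder-side vectors, one per key
    """
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dimension).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


# A zero query attends equally to both keys, so the output is the mean value.
print(cross_attention([[0.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]], [[2.0, 0.0], [0.0, 4.0]]))
```

Because the output is a convex combination of encoder-side values, the guiding feature stays within the range spanned by what the encoder has represented.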

3.4. Training Objective Function

After completing recursive sequence prediction, the predictive representation and the final guiding features are obtained at the target prediction time step. The probability distribution of the target activity at the final anticipated time step is computed using a linear layer with a softmax activation function (Equation (11)).

Furthermore, the guiding features at the final anticipated time step are processed through a linear layer with a softmax activation function to compute a second set of prediction results (Equation (12)).

The linear layers have learnable parameters whose shapes are determined by the number of activity categories and the feature dimension of the current modality. Notably, the FGM parameters are fixed during training to ensure stability. The loss function is designed concisely (Equation (13)) as a weighted combination of two standard cross-entropy losses, one for the target activity classification and one for the guiding feature classification, balanced by an adjustable weight coefficient. The FGM is pretrained using all frame information to capture intrinsic inter-frame relationships, enabling robust and high-precision guidance for long-term egocentric activity prediction.
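The objective described here can be sketched as a weighted sum of two cross-entropy terms. Several details are assumptions made for illustration only: the function name `combined_loss`, the choice to apply the weight to the guiding-feature term, the default weight of 0.1, and the use of the same ground-truth label for both classifiers. Classifier outputs are represented as raw logits that are passed through a softmax.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, target):
    """Negative log-likelihood of the target class."""
    return -math.log(probs[target])


def combined_loss(activity_logits, guide_logits, target, guide_weight=0.1):
    """Cross-entropy on the activity prediction plus a weighted
    cross-entropy on the prediction made from the guiding features.

    Assumption: the weight scales the guiding term, and 0.1 is a placeholder.
    """
    activity_loss = cross_entropy(softmax(activity_logits), target)
    guide_loss = cross_entropy(softmax(guide_logits), target)
    return activity_loss + guide_weight * guide_loss


# Three activity classes, ground-truth class 0.
loss = combined_loss([2.0, 0.5, 0.1], [1.0, 1.0, 0.2], target=0)
print(round(loss, 4))
```

With the guidance network frozen, only the recurrent predictor and the classifiers receive gradient from the second term, which is what pushes the predictor toward states that the guidance module can use.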

4. Experimental Results

In this section, we describe the datasets and experimental protocols employed to evaluate the proposed method. Additionally, we present comparisons with state-of-the-art approaches and discuss the experimental results.

4.1. Dataset

To evaluate the effectiveness of VFGF, we conducted experiments on the EPIC-Kitchens dataset for long-term egocentric activity prediction. Collected from 32 participants performing unscripted activities in diverse kitchen environments, this dataset comprises 28,472 activity segments, encompassing 125 distinct verb categories (e.g., cutting, stirring), 331 specific noun categories (e.g., knife, onion), and 2513 unique activity classes (e.g., “cut onion”). Following the experimental protocol in [2], the public training set is split into 23,493 segments for training and 4979 for validation to assess model accuracy. The dataset, recorded using head-mounted cameras, offers a first-person perspective and a naturalistic setting that provide rich temporal and contextual information, making it well suited for evaluating long-term activity prediction. Following [2], the evaluation metrics are Top-5 accuracy, a class-agnostic measure of overall prediction performance, and Mean Top-5 recall, a class-aware metric that accounts for class imbalance.
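The difference between the two metrics can be made concrete with a short sketch. This is a simplified illustration, not the official evaluation script: the function names are hypothetical, and the recall here is averaged over every class present in the labels, whereas the benchmark protocol may restrict the average to a subset of classes.

```python
def top_k_hit(scores, label, k=5):
    """True if `label` is among the k highest-scoring classes."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return label in ranked[:k]


def top_k_accuracy(all_scores, labels, k=5):
    """Class-agnostic: fraction of samples whose label is in the top k."""
    hits = [top_k_hit(s, y, k) for s, y in zip(all_scores, labels)]
    return sum(hits) / len(hits)


def mean_top_k_recall(all_scores, labels, k=5):
    """Class-aware: top-k recall computed per class, then averaged over classes."""
    per_class = {}
    for s, y in zip(all_scores, labels):
        per_class.setdefault(y, []).append(top_k_hit(s, y, k))
    recalls = [sum(h) / len(h) for h in per_class.values()]
    return sum(recalls) / len(recalls)


# Imbalanced toy data (k=1 for readability): class 0 is frequent and always
# predicted, class 1 is rare and always missed.
scores = [[0.9, 0.1], [0.8, 0.2], [0.6, 0.4]]
labels = [0, 0, 1]
print(top_k_accuracy(scores, labels, k=1))
print(mean_top_k_recall(scores, labels, k=1))
```

In the toy data the accuracy is dominated by the frequent class, while the per-class average exposes that the rare class is never recovered.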

4.2. Compared Methods

To evaluate the superiority of our proposed Virtual Frame-Augmented Guided Forecasting (VFGF) method, we compared it with various existing approaches, including direct prediction methods from observed video clips (e.g., DMR [40], ATSN [2], MCE [41], SVM [42], ActionBanks [43], ED [44], FN [45], RL [46], EL [47]), recursive anticipation frameworks (e.g., RU-LSTM [12], LAI [48], SRL [14], HRO [16], SF-RULSTM [49]), and Transformer-based methods for prediction tasks (e.g., AVT [50], IAAM [18], VS-TransGRU:ES [21]). The baseline is a supervised backbone network. For fair comparison, the results were either sourced from the respective papers or reproduced using their published code. Experiments used a fixed random seed, reporting the average results, with all methods employing identical feature extraction and data splits for consistency.

4.3. Experimental Setting

The experiments were implemented in PyTorch 1.10 and run on a single NVIDIA RTX 3090 GPU. The model processes fixed-length 1.5 s video clips as input and predicts activity at multiple future time steps (0.25 s, 0.5 s, 0.75 s, 1 s, 1.25 s, 1.5 s, 1.75 s, and 2 s). The observation time step is set to 6, providing six intervals of observed information, while the anticipation time step is set to 8 to define the prediction horizon. For fair comparison, all methods use identical input features: video segments are sampled at 0.25 s intervals, and only the frames corresponding to these time steps are selected. RGB and optical flow features are extracted with the TSN model pretrained on a large-scale dataset as provided in [2], each modality comprising 1024-dimensional spatiotemporal features, and 352-dimensional object features are adopted from [12], consistent with prior methods. The features from all three modalities are normalized before being input into the prediction model. All models end with a linear layer that outputs a probability distribution over the 2513 actions. The FGM employs a transformer-based encoder–decoder architecture, using self-attention and cross-attention mechanisms to capture long-range temporal and spatial dependencies.
The recursive aggregator is implemented using a GRU, with its hidden state dimension aligned with the input visual feature dimension, and the classifier consists of a linear layer. In the virtual frame augmentation module, a similarity threshold of 0.3 is set to ensure smooth inter-frame transitions, determined through ablation studies reported in Section 4.5.2. The FGM is configured with 8 attention heads, and both the encoder and decoder consist of 2 transformer blocks each. Model parameters are optimized using the stochastic gradient descent optimizer with a learning rate of 0.05, momentum of 0.9, weight decay of , and batch size of 128, of 0.1. The FGM is pretrained on the EPIC-Kitchens dataset with the same train-validation split as VFGF. Pretraining runs for 100 epochs with cross-entropy loss, using early stopping after 10 epochs of no validation loss improvement and a 0.05 dropout rate to prevent overfitting, ensuring robust feature extraction for VFGF’s long-term prediction.
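The settings reported in this subsection can be collected into a single configuration object, which also makes the relation between step counts and durations explicit. The class name `VFGFConfig` and its field names are hypothetical and do not come from the released code; values that are not stated in the text, such as the weight decay, are left unset rather than guessed.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class VFGFConfig:
    # Temporal sampling
    step_seconds: float = 0.25
    observation_steps: int = 6
    anticipation_steps: int = 8
    # Virtual frame augmentation
    similarity_threshold: float = 0.3
    # Feature Guidance Module
    fgm_heads: int = 8
    fgm_encoder_blocks: int = 2
    fgm_decoder_blocks: int = 2
    # Optimization (stochastic gradient descent)
    learning_rate: float = 0.05
    momentum: float = 0.9
    batch_size: int = 128
    weight_decay: Optional[float] = None  # not stated in the text

    def observed_seconds(self) -> float:
        """Length of the observed clip implied by the step settings."""
        return self.observation_steps * self.step_seconds

    def anticipation_horizons(self) -> List[float]:
        """Future time offsets (in seconds) at which predictions are made."""
        return [(i + 1) * self.step_seconds for i in range(self.anticipation_steps)]


config = VFGFConfig()
print(config.observed_seconds())
print(config.anticipation_horizons())
```

In a PyTorch training script, the optimization fields would typically be passed to `torch.optim.SGD` through its `lr`, `momentum`, and `weight_decay` arguments.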

VFGF processes multimodal inputs (RGB, optical flow, and object features) to enhance prediction robustness. Each modality is handled by pretrained branch models, with optimal parameters detailed in our open-source code. Specifically, RGB and optical flow features are extracted using a pretrained TSN model [2], while object features are derived from [12]. Each modality employs a pretrained FGM, followed by VFGF training. Predictions from the three modalities are fused via a three-layer MLP (MATT, dropout rate 0.8) to generate a unified multimodal representation, optimized using cross-entropy loss over 50 epochs.

4.4. Comparison with Other Methods

Table 1 presents the comparative performance of the proposed VFGF against state-of-the-art methods on the EPIC-Kitchens validation set. To comprehensively highlight VFGF’s advantages, we report performance under multimodal inputs (RGB, FLOW, OBJ). In addition to evaluating Top-5 action accuracy across eight anticipation time steps (0.25 s to 2.0 s), we compare the class-agnostic Top-5 accuracy and the class-aware Mean Top-5 recall for actions, verbs, and nouns at a 1 s anticipation time. Bold and underlined values indicate the best and second-best results, respectively. The experimental results demonstrate that VFGF outperforms competing methods across most evaluated anticipation times. Specifically, at a 1 s anticipation time, VFGF achieves a Top-5 action accuracy of 38.53%, surpassing the previous best recursive models, SF-RULSTM [49] and HRO [16], by 2.44 and 1.11 percentage points, respectively, and outperforming the Transformer-only AVT [50] method by 0.93 percentage points. Across all time steps, VFGF achieves an average improvement of 0.89 percentage points over the hybrid Transformer-GRU model VS-TransGRU:ES [21], with a notable 1.56-point average gain in short-term predictions (0.25 s to 1.0 s).

Table 1. Anticipation results of different methods on the EPIC-Kitchens dataset.

In summary, the comparative analysis indicates that VFGF outperforms the other models because it relies less on strongly coupled observable information than traditional models such as SF-RULSTM and HRO, which are based on LSTM [51] and GRU [22]. Compared to the Transformer-only AVT method, VFGF also demonstrates clear advantages, as the memory capabilities inherent to recursive units are better suited for long-term prediction tasks. Furthermore, VFGF maintains a performance edge over VS-TransGRU:ES, which combines Transformer and GRU, underscoring the importance of well-designed integration to leverage the strengths of different modules for long-term prediction.

4.5. Ablation Experiments

Ablation experiments were conducted on the RGB modality of the EPIC-Kitchens [2] dataset, leveraging its rich activity scenarios and diverse multimodal information to comprehensively evaluate performance under different feature combinations.

4.5.1. Module Effectiveness

Baseline: The baseline model (denoted as “Baseline” in Table 2) employs the same observed information encoding as VFGF. In the recursive sequence prediction phase, only a single GRU layer is used to predict feature representations at each anticipation time step, followed by a fully connected layer with a softmax activation function for target activity prediction. The baseline is trained with the Adam optimizer (learning rate of 0.001) and cross-entropy loss for 100 epochs, with early stopping applied to prevent overfitting. According to Table 2, the baseline model achieves a Top-5 action accuracy of 29.18% and a Top-1 action accuracy of 13.47% at a 1 s anticipation time, indicating substantial room for improvement in predictive performance.

Table 2.

The effectiveness of each component on EPIC-Kitchens validation set (RGB).

Baseline + VFA: Incorporating the VFA module (denoted as “+VFA” in Table 2) improves the Top-5 action accuracy from 29.18% to 30.01% at a 1 s anticipation time, demonstrating the effectiveness of VFA. Across all anticipation time steps (0.25 s to 2.0 s), VFA consistently enhances performance in both Top-5 and Top-1 action accuracy results. This improvement stems from VFA’s ability to insert virtual frames, mitigating differences between consecutive frames and smoothing transitions, thereby enriching the semantic quality of observable segments.

Baseline + FGM: This variant integrates the FGM (denoted as “+FGM” in Table 2), pretrained as described in Section 4.3. The FGM loads fixed parameters, receives GRU outputs, and generates guiding features for the next time step, assisting the GRU in long-term prediction tasks. This combination yields a 2.36% improvement in Top-5 action accuracy over the baseline at a 1 s anticipation time. Notably, performance gains are observed across various time steps, with more significant improvements in short-term predictions, such as a 3.73% gain at 0.25 s compared to a 2.53% gain at 2.0 s. This trend is attributed to the increasing error accumulation in the GRU over longer time steps, which degrades the initial prediction quality and leads to less accurate guiding features from the FGM, so that the errors of the two modules reinforce each other.

Baseline + VFA & FGM: Combining VFA and the FGM (denoted as “Baseline + VFA & FGM” in Table 2) results in performance superior to using either module alone. The addition of VFA to “Baseline + FGM” yields more significant improvements than VFA alone, as VFA enhances the quality of observable information, improving GRU prediction accuracy and providing more precise input features for the FGM’s queries.

Baseline + Combination of All Components: As shown in the final row of Table 2, incorporating classification loss on the guiding features generated by the FGM further enhances VFGF’s overall prediction performance, significantly improving both Top-5 and Top-1 predictive accuracy. Since FGM parameters are fixed during training, computing loss on its guiding features encourages the GRU to learn more effective representations, providing the FGM with more accurate initial features.
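One way to read the additional supervision described above is as a second cross-entropy term computed on predictions derived from the guiding features. The sketch below illustrates that reading only. The function names, the weighting factor `guide_weight`, the use of a single target label for all anticipation steps, and the averaging over steps are assumptions; this section does not specify how the two terms are combined.

```python
import math
from typing import Sequence


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Softmax cross-entropy for one sample, computed stably via log-sum-exp."""
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(v - peak) for v in logits))
    return log_sum_exp - logits[target]


def combined_loss(gru_logits: Sequence[Sequence[float]],
                  guide_logits: Sequence[Sequence[float]],
                  target: int,
                  guide_weight: float = 1.0) -> float:
    """Mean over steps of CE(GRU branch) + guide_weight * CE(guiding branch)."""
    if len(gru_logits) != len(guide_logits) or not gru_logits:
        raise ValueError("both branches need the same non-zero number of steps")
    total = 0.0
    for step_gru, step_guide in zip(gru_logits, guide_logits):
        total += cross_entropy(step_gru, target)
        total += guide_weight * cross_entropy(step_guide, target)
    return total / len(gru_logits)


# Two anticipation steps, three classes, true class 1 (toy values).
gru_branch = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
guide_branch = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
print(combined_loss(gru_branch, guide_branch, target=1))
```

With the FGM parameters frozen, the gradient of the second term can only change the GRU, which is consistent with the explanation that this loss encourages the GRU to produce better inputs for the FGM.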

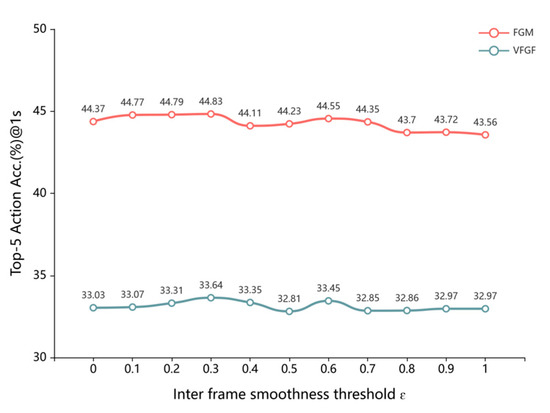

4.5.2. Selection of Virtual Frame Threshold

To determine the optimal similarity threshold for virtual frame insertion, as discussed in Section 3.1, we conducted a grid search, with results illustrated in Figure 5. The results compare the performance of the FGM and VFGF with VFA at various threshold values, including a case without VFA. Both models exhibit similar performance trends across thresholds ranging from 0 to 1. Notably, at thresholds between 0.1 and 0.5, VFA yields significant performance improvements. However, an increase in the threshold beyond 0.5 leads to performance degradation due to excessive insertion of similar frames, introducing redundant information that hinders the generation of high-quality comprehensive feature representations, ultimately reducing prediction accuracy.

Figure 5.

Virtual frame filling threshold selection experiment on the EPIC-Kitchens validation set (RGB).
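The effect of the threshold can be illustrated with a small sketch. It assumes, since Section 3.1 is not reproduced here, that frames are compared by the cosine similarity of their feature vectors and that a single linearly interpolated feature is inserted whenever the similarity of two consecutive frames falls below the threshold. The function names and toy features are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0.0 else 0.0


def insert_virtual_frames(frames: List[Vector], threshold: float) -> List[Vector]:
    """Insert a midpoint feature between consecutive frames whose
    cosine similarity is below `threshold` (assumed insertion rule)."""
    if not frames:
        return []
    augmented = [frames[0]]
    for prev, curr in zip(frames, frames[1:]):
        if cosine_similarity(prev, curr) < threshold:
            # Virtual frame: linear interpolation halfway between neighbours.
            augmented.append([(p + c) / 2.0 for p, c in zip(prev, curr)])
        augmented.append(curr)
    return augmented


# Frames 0 and 1 are nearly identical; frame 2 is an abrupt change.
toy_frames = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
for t in (0.0, 0.5, 1.0):
    print(t, len(insert_virtual_frames(toy_frames, t)))
```

Under this rule, a threshold of 0.5 bridges only the abrupt transition, whereas a threshold of 1.0 also inserts a frame between the two nearly identical frames. That is the kind of redundant insertion the grid search associates with degraded accuracy at high thresholds.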

4.6. Parameters and FLOPs

The VFGF model demonstrates a compelling balance between predictive performance and computational efficiency, as presented in Table 3. With a parameter count of 25.13 M and computational complexity of 74.41 G FLOPs, VFGF achieves a Top-5 accuracy of 33.64%, outperforming alternative models such as AVT (382.81 M parameters, 8573.46 G FLOPs, 28.10% accuracy) and SRL (29.31 M parameters, 2115.91 G FLOPs, 31.68% accuracy). This efficiency arises from the optimized graph-based feature fusion mechanism, which makes VFGF well suited for resource-constrained environments, including edge devices used in real-time video detection tasks. However, VFGF’s parameter count remains higher than that of lighter models like TransGRU (15.96 M parameters, 793.47 G FLOPs, 32.36% Top-5 accuracy), potentially limiting its applicability in extremely resource-scarce scenarios. Moreover, the simplified graph-based fusion may compromise robustness under highly dynamic or noisy inputs, suggesting opportunities for further refinement through advanced regularization or hybrid architectural designs.

Table 3.

The results of parameters and FLOPs.
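To make the trade-offs easier to compare, the figures quoted in the paragraph above can be expressed relative to VFGF. The snippet below uses only those quoted numbers; the dictionary layout and the helper name `relative_cost` are illustrative.

```python
# (parameters in M, FLOPs in G, Top-5 accuracy in %) as quoted in the text.
MODELS = {
    "VFGF": (25.13, 74.41, 33.64),
    "AVT": (382.81, 8573.46, 28.10),
    "SRL": (29.31, 2115.91, 31.68),
    "TransGRU": (15.96, 793.47, 32.36),
}


def relative_cost(name: str, reference: str = "VFGF") -> tuple:
    """Return (parameter ratio, FLOPs ratio) of `name` relative to `reference`."""
    params, flops, _ = MODELS[name]
    ref_params, ref_flops, _ = MODELS[reference]
    return params / ref_params, flops / ref_flops


for model_name, (_, _, top5) in MODELS.items():
    p_ratio, f_ratio = relative_cost(model_name)
    print(f"{model_name:9s} params x{p_ratio:6.2f}  FLOPs x{f_ratio:7.2f}  Top-5 {top5:.2f}%")
```

On these numbers, TransGRU has about 0.64 times the parameters of VFGF but roughly 10.7 times the FLOPs, so the two models are lighter in different senses.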

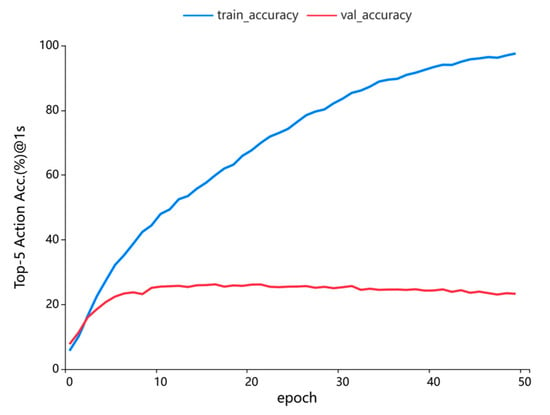

4.7. Generalization Experiments

To rigorously evaluate the generalization capability of the Virtual Frame-Augmented Guided Forecasting (VFGF) framework, comprehensive experiments were conducted on the EPIC-Kitchens dataset using 5-fold cross-validation to ensure robust and reliable train-validation splits, with a training-to-validation ratio of 2:8. The Feature Guidance Module (FGM) was pretrained for 50 epochs using stochastic gradient descent (SGD) with a learning rate of 0.05, momentum of 0.9, weight decay of 0.0001, and a batch size of 128. Subsequently, the VFGF framework was trained for an additional 50 epochs using cross-entropy loss to optimize its predictive performance. Figure 6 illustrates the median performance across five experimental runs, with the blue line representing the convergence of training accuracy and the red line indicating the convergence of validation accuracy. The VFGF model achieved convergence on the validation set by the 16th epoch, attaining a Top-5 accuracy of 26.21%. However, the training accuracy curve indicates overfitting beyond this point, with training performance continuing to improve while validation performance stabilizes or declines. This rapid convergence and competitive Top-5 accuracy underscore the efficacy of virtual frame augmentation and the FGM in enhancing model robustness. These advancements enable VFGF to deliver consistent and high-quality performance across diverse egocentric scenarios in the EPIC-Kitchens dataset, demonstrating its strong generalization potential.

Figure 6.

Convergence of VFGF generalization on the EPIC-Kitchens dataset, with training accuracy (blue) and validation accuracy (red) over epochs.
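For reference, the FGM pretraining hyperparameters reported above (learning rate 0.05, momentum 0.9, weight decay 0.0001) correspond to the following update under one common convention, in which weight decay is added to the gradient before the momentum buffer is updated. Whether the authors' implementation uses exactly this formulation is an assumption, and the function name is hypothetical.

```python
from typing import List, Tuple


def sgd_momentum_step(params: List[float],
                      grads: List[float],
                      velocity: List[float],
                      lr: float = 0.05,
                      momentum: float = 0.9,
                      weight_decay: float = 1e-4) -> Tuple[List[float], List[float]]:
    """One SGD update with momentum and L2 weight decay (no dampening)."""
    new_params, new_velocity = [], []
    for p, g, v in zip(params, grads, velocity):
        g = g + weight_decay * p   # fold L2 weight decay into the gradient
        v = momentum * v + g       # update the momentum buffer
        new_params.append(p - lr * v)
        new_velocity.append(v)
    return new_params, new_velocity


# One parameter with value 1.0 and a constant toy gradient of 0.5.
params, velocity = [1.0], [0.0]
params, velocity = sgd_momentum_step(params, [0.5], velocity)
print(params, velocity)
```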

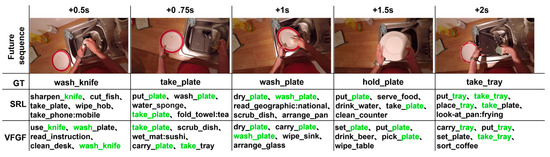

5. Discussion

The Virtual Frame-Augmented Guided Forecasting (VFGF) framework achieves state-of-the-art performance on the EPIC-Kitchens dataset, demonstrating the effectiveness of virtual frame augmentation and the Feature Guidance Module (FGM). Compared to the Self-Regulated Learning (SRL) framework [14], VFGF produces Top-5 predictions that align more closely with ground truth, as shown in Figure 7, where correct predictions for “take_tray” at +0.75 s match the 2 s ground truth (highlighted in green), underscoring FGM’s role in enhancing semantic guidance. VFGF outperforms SRL in short-term predictions but, like SRL, faces performance degradation at +1.5 s due to error accumulation. Against TransGRU [21], a Transformer-based model, VFGF offers competitive accuracy with lower computational complexity due to its hybrid GRU-Transformer architecture. Unlike traditional recursive methods [12,13,14,15], which rely heavily on local data and suffer from error accumulation, and unlike other Transformer-based approaches [16,17,18,19,20,21], VFGF leverages virtual frame augmentation for improved semantic continuity and combines the GRU’s localized temporal processing with the Transformer’s global dependency modeling. This design aims to balance efficiency and robustness, and is motivated by the need to address error accumulation and computational demands in dynamic egocentric scenarios.

Figure 7.

Top-5 prediction accuracy comparison between VFGF and SRL on the EPIC-Kitchens dataset, with correct predictions highlighted in green.

VFGF’s precise short-term predictions enable applications like human–robot interaction and video surveillance, where timely action forecasting is critical, and its efficient GRU-Transformer design enhances suitability for resource-constrained devices, such as wearables. Its robust generalization on EPIC-Kitchens suggests potential for smart homes or assistive technologies. However, VFGF’s large parameter count, akin to other Transformer-based models, poses challenges for edge device deployment. Additionally, performance degradation at longer horizons (e.g., +1.5 s) due to error accumulation limits long-term prediction reliability, and its effectiveness may wane on smaller datasets with limited data diversity. Future work could explore Transformer pruning or knowledge distillation to create lightweight models [52] and develop adaptive multimodal fusion to reduce error accumulation (e.g., combining text and audio [53,54,55,56]), thereby improving scalability and long-term action predictions.

6. Conclusions

This study presents the VFGF framework for long-term egocentric activity prediction, evaluated on the EPIC-Kitchens dataset using multimodal inputs (RGB, FLOW and OBJ). The experimental results demonstrate that VFGF achieves superior performance, particularly in short-term predictions, while maintaining robust Top-5 action accuracy across multiple anticipation intervals ranging from 0.25 s to 2.0 s. Ablation studies focused on the RGB modality confirm the effectiveness of both the VFA and FGM. The VFA improves the quality of observable sequences by addressing semantic gaps caused by abrupt changes in activity or environment. Meanwhile, the FGM enhances prediction accuracy by supplying semantically relevant guiding features during the recursive prediction process. Their combined use, along with a classification loss applied to FGM-generated features, significantly strengthens GRU-based learning and improves long-term inference capabilities. Collectively, these components demonstrate the innovative integration of pretraining, virtual frame augmentation, and a hybrid GRU-Transformer architecture, offering a context-aware solution well-aligned with real-world activity dynamics. The VFGF framework sets a new benchmark in egocentric activity forecasting by improving semantic continuity and predictive stability.

Future work will focus on extending VFGF to incorporate additional modalities such as depth, audio, and inertial sensor data, enriching the semantic representation of egocentric contexts. To integrate these heterogeneous inputs, we will explore multimodal fusion strategies like cross-modal transformers and co-attention mechanisms to improve robustness and adaptability in complex activity scenes. Further improvements will include developing adaptive VFA thresholds, advanced pretraining strategies such as self-supervised or contrastive learning for the FGM, and lightweight architectures for real-time inference on resource-constrained devices. Finally, comprehensive evaluations across broader datasets will validate the framework’s generalizability for applications in human–robot interaction and wearable computing.

Author Contributions

Conceptualization, X.L. and S.W.; methodology, X.L.; software, X.L.; validation, X.L.; formal analysis, X.L., S.W. and Y.C.; investigation, Y.C.; writing—original draft preparation, X.L.; writing—review and editing, X.L., S.W. and Y.C.; project administration, Y.C.; funding acquisition, S.W. and Y.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 61070089 and in part by the Natural Science fund of Tianjin under Grant 15JCYBJC46600 and Grant 19JCZDJC35100 for Shuqin Wang.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used in this study are available at https://github.com/fpv-iplab/rulstm (accessed on 1 June 2025). The source code of VFGF will be released after acceptance at https://github.com/MCLXD/VFGF (accessed on 7 September 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| VFGF | Virtual Frame-Augmented Guided Forecasting |
| LTA | Long-term Action Anticipation |
| FGM | Feature Guidance Module |
| GRU | Gated Recurrent Unit |
| VR | Virtual Reality |
| RGB | Red, Green, Blue |
| LSTM | Long Short-Term Memory |
| H3M | Hierarchical Multitask MLP Mixer Model |
| I-CVAE | Intention-Conditioned Variational Autoencoder |
| CDFSL | Cross-Domain Few-Shot Learning |
| TSN | Temporal Segment Network |
| VFA | Virtual Frame Augmentation |

References

- Koppula, H.S.; Saxena, A. Anticipating Human Activities Using Object Affordances for Reactive Robotic Response. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 14–29.

- Damen, D.; Doughty, H.; Farinella, G.M.; Fidler, S.; Furnari, A.; Kazakos, E.; Moltisanti, D.; Munro, J.; Perrett, T.; Price, W.; et al. Scaling Egocentric Vision: The EPIC-KITCHENS Dataset. arXiv 2018, arXiv:1804.02748.

- Ji, Y.; Yang, Y.; Shen, F.; Shen, H.T.; Li, X. A Survey of Human Action Analysis in HRI Applications. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 2114–2128.

- Xing, Y.; Golodetz, S.; Everitt, A.; Markham, A.; Trigoni, N. Multiscale Human Activity Recognition and Anticipation Network. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 451–465.

- Ma, Y.; Zhu, X.; Zhang, S.; Yang, R.; Wang, W.; Manocha, D. TrafficPredict: Trajectory Prediction for Heterogeneous Traffic-Agents. Proc. Conf. AAAI Artif. Intell. 2019, 33, 6120–6127.

- Rasouli, A.; Kotseruba, I.; Tsotsos, J.K. Pedestrian Action Anticipation Using Contextual Feature Fusion in Stacked RNNs. arXiv 2020, arXiv:2005.06582.

- Grauman, K.; Westbury, A.; Byrne, E.; Cartillier, V.; Chavis, Z.; Furnari, A.; Girdhar, R.; Hamburger, J.; Jiang, H.; Kukreja, D.; et al. Ego4D: Around the World in 3,000 Hours of Egocentric Video. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 1–32.

- Li, Y.; Liu, M.; Rehg, J.M. In the Eye of the Beholder: Gaze and Actions in First Person Video. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 6731–6747.

- Stein, S.; McKenna, S.J. Combining Embedded Accelerometers with Computer Vision for Recognizing Food Preparation Activities. In Proceedings of the 2013 ACM International Joint Conference on Pervasive and Ubiquitous Computing, New York, NY, USA, 8–12 September 2013; pp. 729–738.

- Kuehne, H.; Arslan, A.B.; Serre, T. The Language of Actions: Recovering the Syntax and Semantics of Goal-Directed Human Activities. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 780–787.

- Farha, Y.A.; Richard, A.; Gall, J. When Will You Do What?—Anticipating Temporal Occurrences of Activities. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5343–5352.

- Furnari, A.; Farinella, G.M. Rolling-Unrolling LSTMs for Action Anticipation from First-Person Video. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 4021–4036.

- Tai, T.-M.; Fiameni, G.; Lee, C.-K.; See, S.; Lanz, O. Unified Recurrence Modeling for Video Action Anticipation. In Proceedings of the 26th International Conference on Pattern Recognition (ICPR), Montréal, QC, Canada, 21–25 August 2022; pp. 3273–3279.

- Qi, Z.; Wang, S.; Su, C.; Su, L.; Huang, Q.; Tian, Q. Self-Regulated Learning for Egocentric Video Activity Anticipation. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 6715–6730.

- Moniruzzaman, M.; Yin, Z.; He, Z.; Leu, M.C.; Qin, R. Jointly-Learnt Networks for Future Action Anticipation via Self-Knowledge Distillation and Cycle Consistency. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 3243–3256.

- Liu, T.; Lam, K.-M. A Hybrid Egocentric Activity Anticipation Framework via Memory-Augmented Recurrent and One-Shot Representation Forecasting. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 13894–13903.

- Mascaro, E.V.; Ahn, H.; Lee, D. Intention-Conditioned Long-Term Human Egocentric Action Anticipation. In Proceedings of the 2023 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–7 January 2023; pp. 6037–6046.

- Ma, Z.; Zhang, F.; Nan, Z.; Ge, Y. Intention Action Anticipation Model with Guide-Feedback Loop Mechanism. Knowl. Based Syst. 2024, 292, 111626.

- Zhang, C.; Fu, C.; Wang, S.; Agarwal, N.; Lee, K.; Choi, C.; Sun, C. Object-Centric Video Representation for Long-Term Action Anticipation. In Proceedings of the 2024 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 4–8 January 2024; pp. 6737–6747.

- Kim, S.; Huang, D.; Xian, Y.; Hilliges, O.; Van Gool, L.; Wang, X. PALM: Predicting Actions through Language Models. In Lecture Notes in Computer Science; Springer Nature: Cham, Switzerland, 2025; pp. 140–158.

- Cao, C.; Sun, Z.; Lv, Q.; Min, L.; Zhang, Y. VS-TransGRU: A Novel Transformer-GRU-Based Framework Enhanced by Visual-Semantic Fusion for Egocentric Action Anticipation. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 11605–11618.

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. arXiv 2014, arXiv:1412.3555.

- Spriggs, E.H.; De La Torre, F.; Hebert, M. Temporal Segmentation and Activity Classification from First-Person Sensing. In Proceedings of the 2009 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Miami Beach, FL, USA, 20–25 June 2009; pp. 17–24.

- Weng, J.; Jiang, X.; Zheng, W.-L.; Yuan, J. Early Action Recognition with Category Exclusion Using Policy-Based Reinforcement Learning. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 4626–4638.

- Liu, Z.; Ning, J.; Cao, Y.; Wei, Y.; Zhang, Z.; Lin, S.; Hu, H. Video Swin Transformer. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 3192–3201.

- Ou, Y.; Mi, L.; Chen, Z. Object-Relation Reasoning Graph for Action Recognition. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 20101–20110.

- Wang, X.; Hu, J.-F.; Lai, J.-H.; Zhang, J.; Zheng, W.-S. Progressive Teacher-Student Learning for Early Action Prediction. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 3551–3560.

- Liu, T.; Zhao, R.; Jia, W.; Lam, K.-M.; Kong, J. Holistic-Guided Disentangled Learning with Cross-Video Semantics Mining for Concurrent First-Person and Third-Person Activity Recognition. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 5211–5225.

- Li, H.; Zheng, W.-S.; Zhang, J.; Hu, H.; Lu, J.; Lai, J.-H. Egocentric Action Recognition by Automatic Relation Modeling. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 489–507.

- Xaviar, S.; Yang, X.; Ardakanian, O. Centaur: Robust Multimodal Fusion for Human Activity Recognition. IEEE Sens. J. 2024, 24, 18578–18591.

- Hatano, M.; Hachiuma, R.; Fujii, R.; Saito, H. Multimodal Cross-Domain Few-Shot Learning for Egocentric Action Recognition. In Lecture Notes in Computer Science; Springer Nature: Cham, Switzerland, 2025; pp. 182–199.

- Wang, H.; Yang, J.; Yu, B.; Zhan, Y.; Tao, D.; Ling, H. Distilling Interaction Knowledge for Semi-Supervised Egocentric Action Recognition. Pattern Recognit. 2025, 157, 110927.

- Ke, Q.; Fritz, M.; Schiele, B. Time-Conditioned Action Anticipation in One Shot. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 9917–9926.

- Lee, S.; Kim, H.G.; Hwi Choi, D.; Kim, H.-I.; Ro, Y.M. Video Prediction Recalling Long-Term Motion Context via Memory Alignment Learning. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual Event, 19–25 June 2021; pp. 3053–3062.

- Nawhal, M.; Jyothi, A.A.; Mori, G. Rethinking Learning Approaches for Long-Term Action Anticipation. In Lecture Notes in Computer Science; Springer Nature: Cham, Switzerland, 2022; pp. 558–576.

- Gong, D.; Lee, J.; Kim, M.; Ha, S.J.; Cho, M. Future Transformer for Long-Term Action Anticipation. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 3042–3051.

- Wang, L.; Xiong, Y.; Wang, Z.; Qiao, Y.; Lin, D.; Tang, X.; Van Gool, L. Temporal Segment Networks: Towards Good Practices for Deep Action Recognition. In Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2016; pp. 20–36.

- Carreira, J.; Zisserman, A. Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4724–4733.

- Jiang, H.; Sun, D.; Jampani, V.; Yang, M.-H.; Learned-Miller, E.; Kautz, J. Super SloMo: High Quality Estimation of Multiple Intermediate Frames for Video Interpolation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 9000–9008.

- Vondrick, C.; Pirsiavash, H.; Torralba, A. Anticipating Visual Representations from Unlabeled Video. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 98–106.

- Furnari, A.; Battiato, S.; Farinella, G.M. Leveraging Uncertainty to Rethink Loss Functions and Evaluation Measures for Egocentric Action Anticipation. In Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2019; pp. 389–405.

- Berrada, L.; Zisserman, A.; Kumar, M.P. Smooth Loss Functions for Deep Top-k Classification. arXiv 2018, arXiv:1802.07595.

- Sener, F.; Singhania, D.; Yao, A. Temporal Aggregate Representations for Long-Range Video Understanding. In Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2020; pp. 154–171.

- Gao, J.; Yang, Z.; Nevatia, R. RED: Reinforced Encoder-Decoder Networks for Action Anticipation. In Proceedings of the 2017 British Machine Vision Conference (BMVC), London, UK, 4–7 September 2017; pp. 1–13.

- De Geest, R.; Tuytelaars, T. Modeling Temporal Structure with LSTM for Online Action Detection. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 1549–1557.

- Ma, S.; Sigal, L.; Sclaroff, S. Learning Activity Progression in LSTMs for Activity Detection and Early Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1942–1950.

- Jain, A.; Singh, A.; Koppula, H.S.; Soh, S.; Saxena, A. Recurrent Neural Networks for Driver Activity Anticipation via Sensory-Fusion Architecture. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 3118–3125.

- Wu, Y.; Zhu, L.; Wang, X.; Yang, Y.; Wu, F. Learning to Anticipate Egocentric Actions by Imagination. IEEE Trans. Image Process. 2021, 30, 1143–1152.

- Osman, N.; Camporese, G.; Coscia, P.; Ballan, L. SlowFast Rolling-Unrolling LSTMs for Action Anticipation in Egocentric Videos. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Virtual Event, 11–17 October 2021; pp. 3430–3438.

- Girdhar, R.; Grauman, K. Anticipative Video Transformer. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Virtual Event, 11–17 October 2021; pp. 13485–13495.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Pramanick, S.; Song, Y.; Nag, S.; Lin, K.Q.; Shah, H.; Shou, M.Z.; Chellappa, R.; Zhang, P. EgoVLPv2: Egocentric Video-Language Pre-Training with Fusion in the Backbone. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 5262–5274.

- Akbari, H.; Yuan, L.; Qian, R.; Chuang, W.-H.; Chang, S.-F.; Cui, Y.; Gong, B. VATT: Transformers for Multimodal Self-Supervised Learning from Raw Video, Audio and Text. In Proceedings of the 35th Conference on Neural Information Processing Systems 34, Virtual, 6–14 December 2021; pp. 1–16.

- Li, G.; Chen, Y.; Wu, Y.; Zhao, K.; Pollefeys, M.; Tang, S. EgoM2P: Egocentric Multimodal Multitask Pretraining. arXiv 2025, arXiv:2506.07886.

- Wu, Y.; Zhang, S.; Li, P. Multi-Modal Emotion Recognition in Conversation Based on Prompt Learning with Text-Audio Fusion Features. Sci. Rep. 2025, 15, 8855.

- Assefa, M.; Jiang, W.; Zhan, J.; Gedamu, K.; Yilma, G.; Ayalew, M.; Adhikari, D. Audio-Visual Contrastive and Consistency Learning for Semi-Supervised Action Recognition. IEEE Trans. Multimed. 2024, 26, 3491–3504.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).