1. Introduction

In the current century, the agricultural sector is facing increasing global challenges, such as climate change—towards which it contributes significantly—and the progressive reduction in essential natural resources such as water and soil. At the same time, the growing global population is driving an increasing demand for food, emphasising the urgency of adopting more sustainable crop management strategies from environmental, economic and social standpoints. Among cultivated systems, perennial woody crops—particularly fruit trees—play a dual role, contributing not only to food production but also to long-term carbon sequestration through the accumulation of structural biomass. The estimation of aboveground biomass, in this context, becomes an essential operation to support both agronomic management (irrigation, pruning and fertilisation) and the quantification of carbon storage.

In forestry, aboveground biomass has traditionally been estimated through non-destructive methods such as allometric equations [

1,

2,

3], mathematical models that relate the dry mass of a tree to measurable variables such as diameter and height. Although generalised formulations have been developed for different forest types and species [

4], these equations are generally site-specific and can hardly capture the geometric complexity and morphological variability of real plant structures. In recent years, advanced sensing technologies such as photogrammetry and laser scanning have been increasingly adopted in forest environments for structural analysis of vegetation. These methods, implemented through both terrestrial [

5,

6] and airborne platforms [

7,

8,

9], have demonstrated a strong potential for generating detailed three-dimensional reconstructions of canopy architecture, proving particularly effective for volumetric analysis and the estimation of aboveground biomass [

10].

Building upon these advances, similar approaches have subsequently been applied in agricultural and fruit tree systems, where the integration of 2D and 3D LiDAR technology and drone-based photogrammetry has supported the acquisition of high-resolution structural data of individual plants and entire orchards [

11,

12,

13,

14]. Nevertheless, the widespread adoption of these systems in operational settings remains limited, primarily due to their cost, technical complexity and the requirement for specialised expertise in data acquisition and processing. In this context, the emergence of consumer-grade devices equipped with integrated sensors, such as smartphones featuring LiDAR and high-resolution RGB cameras, represents a promising alternative. These devices rely on augmented reality (AR) frameworks, which integrate information from multiple onboard sensors to create a consistent spatial representation of the surrounding environment. Two main approaches are available: LiDAR-based acquisition, where an integrated laser scanner emits infrared pulses and measures the return time to generate depth maps, which are fused with RGB imagery to produce a georeferenced point cloud in real time; Photogrammetry, a methodology that reconstructs 3D geometry from a set of overlapping RGB images using feature-matching and triangulation algorithms, yielding high-resolution point clouds. The diffusion of AR frameworks in mobile devices has made both methods accessible through user-friendly applications.

Recent studies have demonstrated the technical feasibility of using such devices for various forest inventory tasks, particularly diameter at breast height (DBH) estimation [

15,

16], small-scale forest measurements [

17], using both iPhones and iPads equipped with LiDAR sensor [

18,

19], and improvement to UAV-derived canopy height models [

20,

21]. Beyond forest application, low-cost scanning systems have been tested in urban environments, including the structural analysis of isolated olive trees [

22] and in the context of urban forest mapping [

23,

24]. In the agricultural domain, their use has mainly focused on fruit yield estimation, with applications reported in vineyards [

25], citrus orchards [

26] and kiwifruit plantations [

27], and leaf measurements [

28]. However current evidence remains limited for fruit tree systems: while some applications have been reported, they have mostly addressed fruit yield estimation or specific morphometric traits, without extending to a comprehensive structural characterisation of trees. In contrast, most studies have been carried out in forest and urban contexts, which differ substantially from fruit orchards in terms of tree size, planting density and management practices such as pruning. This highlights a clear scientific gap regarding the applicability and accuracy of consumer-grade scanning devices for structural analysis in fruit tree systems, particularly with respect to the estimation of aboveground biomass and associated carbon sequestration.

The present study aims to evaluate the performance of a consumer-grade device—the iPhone 13 Pro, equipped with an integrated LiDAR sensor and RGB camera—for the three-dimensional reconstruction and structural analysis of fruit trees components. The investigation followed a multi-step approach to assess both accuracy and practical feasibility under controlled and field conditions, including the estimation of morphometric traits of individual leaves. Particular emphasis was placed on the leaf area parameter, given its strong agronomic relevance as an indicator of photosynthetic capacity and transpiration dynamics. It was hypothesised that the two acquisition modes would show differences in accuracy and repeatability when applied to both controlled and field conditions, and that at least one of them would provide measurements with sufficient precision to enable reliable estimation of structural and morphological traits in fruit tree systems.

2. Materials and Methods

2.1. Experimental Setup and Acquisition

The experimental protocol was designed to assess the dimensional measurement capabilities of the iPhone 13 Pro’s LiDAR (Apple Inc., Cupertino, CA, USA), sensor under standardised conditions, with specific reference to applications in structural modelling of fruit tree components. The device running on iOS 15, includes a suite of onboard sensors: a proximity sensor, the True Depth system and a class 1 LiDAR unit. The LiDAR is based on a Direct Time-of-Flight (dToF) system composed of a VCSEL (Vertical-Cavity-Surface-Emitting Laser) emitter and a SPAD NIR CMOS (Single Photon Avalanche Diode Near-Infrared Complementary Metal-Oxide Semiconductor) receiver. Combined with gyroscope, magnetometer and RGB cameras, these components enable three-dimensional reconstructions in the form of point clouds. The iPhone 13 Pro was selected as it represents a low-cost and portable alternative to conventional terrestrial laser scanning systems, which, although highly accurate, are considerably more expensive; in addition, it integrates both LiDAR unit and high-resolution RGB camera within the same consumer-grade platform, enabling the acquisition of LiDAR-based and photogrammetric dataset using identical optical and processing conditions. The model was also chosen for its wide availability and its documented use in previous studies on forestry and urban vegetation studies [

15,

16,

17,

18]. No additional smartphones or tablets were tested, as the aim was to perform an in-depth evaluation of a single representative device for orchard applications. To simulate the geometry of aboveground tree structures, a total of 31 cylindrical models (pipes) with pre-measured diameters and heights were employed (

Figure 1). Reference measurements were obtained manually using a digital calliper (resolution 0.01 mm) and a measuring tape. Acquisitions were performed in an open flat outdoor environment under clear spring weather conditions, characterised by full sunlight, no cloud cover and absence of wind. These stable environmental conditions minimised potential interference from shadows and reflections, ensuring acquisition stability and reproducibility.

The scanning procedure was conducted using the Polycam© app v.2.2.10 (Polycam inc., Staple House, Bedfordshire, UK), selected for its compatibility with both LiDAR and image-based 3D reconstruction workflows and its ability to export point cloud data in standard formats.

The application provides two acquisition modes:

Photo mode, in which multiple images are captured around the object;

LiDAR mode, where a continuous video scan is performed.

Both acquisition modes require a preprocessing step before data export: in photo mode point cloud reconstruction is cloud-based, and the processed output is downloaded to the device; in LiDAR mode preprocessing is performed locally on the smartphone, with user-defined voxel size settings. Point cloud data were processed using CloudCompare (v.2.12.4, EDF R&D, Paris, France) for spatial measurements.

2.2. Accuracy and Precision Tests

A preliminary experimental phase was conducted using eight cylindrical models with known geometric dimensions. For each acquisition mode, scans were performed at four standardised radial distances (1.0 m, 1.5 m, 2.0 m, 3.0 m), maintaining a constant device height of 1.5 m above ground level; each distance-mode configuration was repeated three times. The acquisition distances were selected to represent typical orchard conditions: shorter distances enabled the capture of fine details, whereas increasing the distance reproduced situations where canopy size, planting layout or operational constraints limit proximity to the target.

In LiDAR mode, supplementary acquisitions were also performed by varying the voxel resolution parameter (low, medium, high), as provided by the application settings, which defines the size of the three-dimensional units used to discretise the point cloud.

Accuracy was assessed separately for diameter and height, by calculating the mean deviation from the reference value and the standard deviation of that mean deviation. In addition, a global evaluation was conducted on all data aggregated by acquisition mode. Precision was evaluated by analysing the three repeated measurements obtained for each experimental configuration, defined by acquisition mode, scanning distance and target. For each configuration, the mean, standard deviation and relative standard deviation (RSD) were calculated to quantify measurement repeatability. In a second phase, all repeated measurements associated with each individual target were aggregated across distances and modes: this allowed the computation of target-specific RSD values, providing an overview of how measurement consistency varied with object size. The resulting RSD values were then plotted in relation to the reference dimensions of the targets to investigate precision trends across different scales.

To assess the relationship between accuracy and precision, bivariate scatter plots were constructed, where each point represents a measurement pair. This classification enabled the identification of measurement categories: accurate and precise, accurate but imprecise, imprecise but accurate, and both imprecise and inaccurate, as reported in

Figure 2.

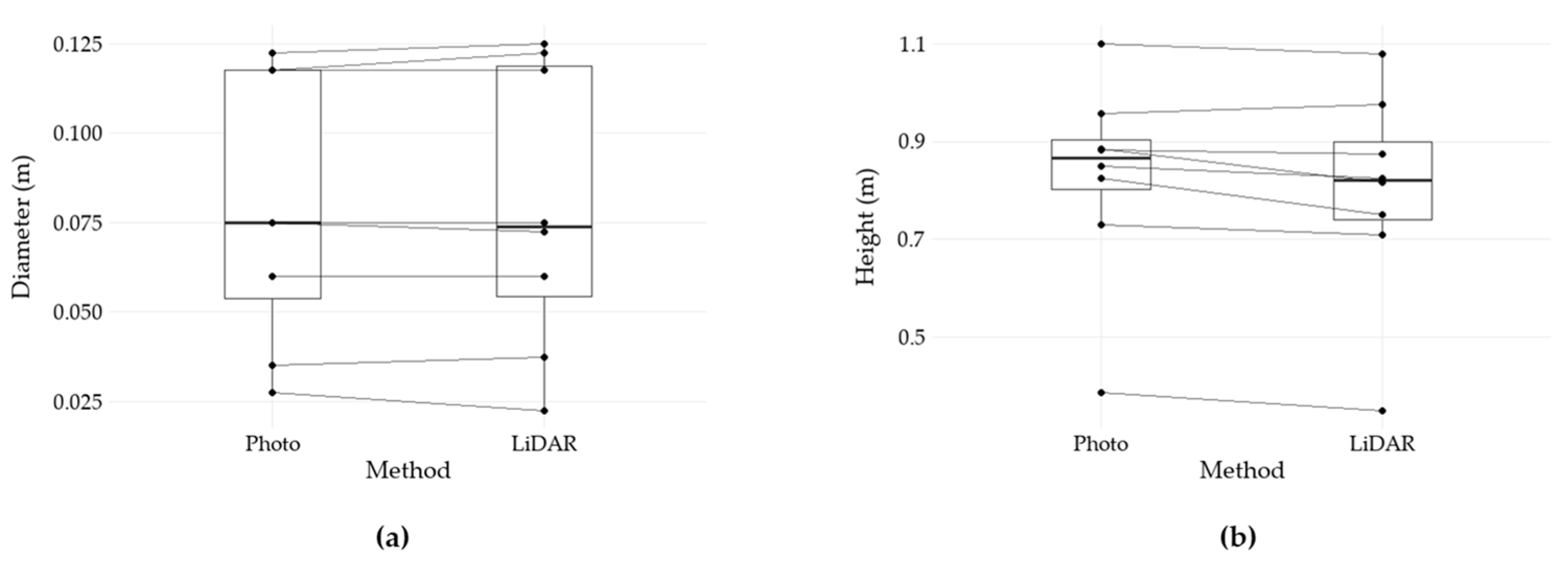

Finally, to complement the assessment of accuracy and precision, a non-parametric Wilcoxon signed-rank test was performed on paired diameter and height measurements from LiDAR and Photo modes, as the data did not conform to the assumption of normality. The statistical analysis was conducted in R software (v 4.4.1; R Core Team, Vienna, Austria) using the wilcox.test() function from the stats package to determine the existence of statistically significant differences between the two acquisition methods.

2.3. Extended Dataset Analysis and Validation

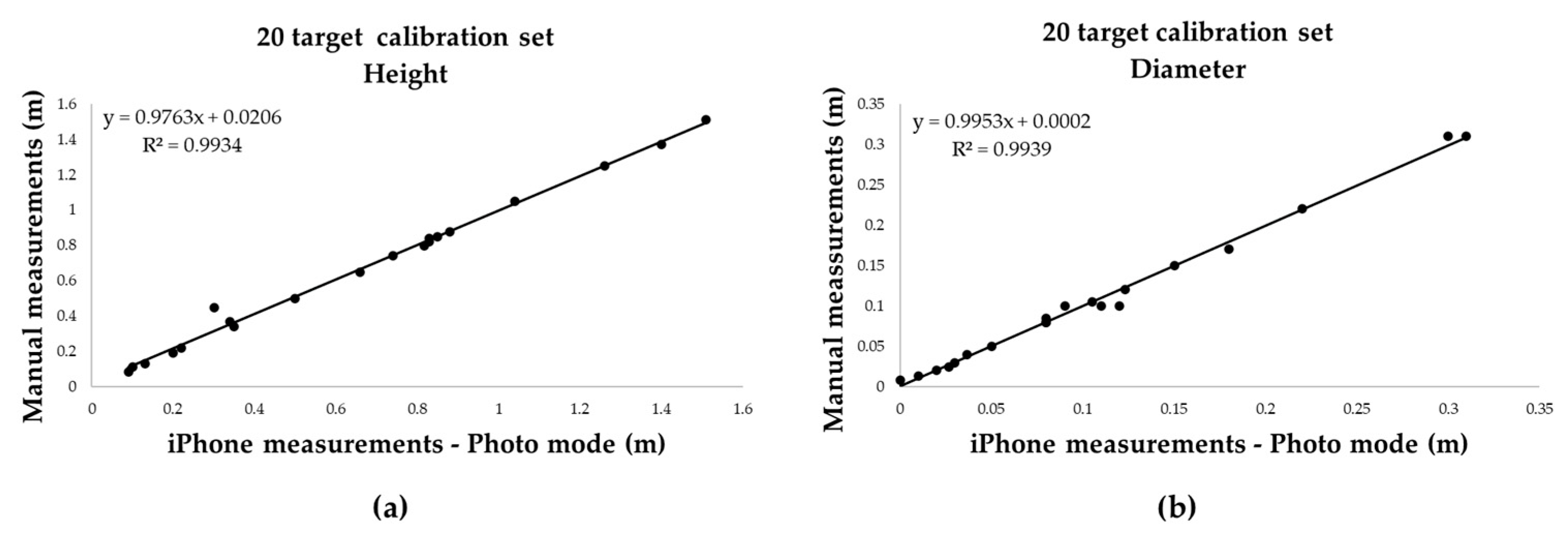

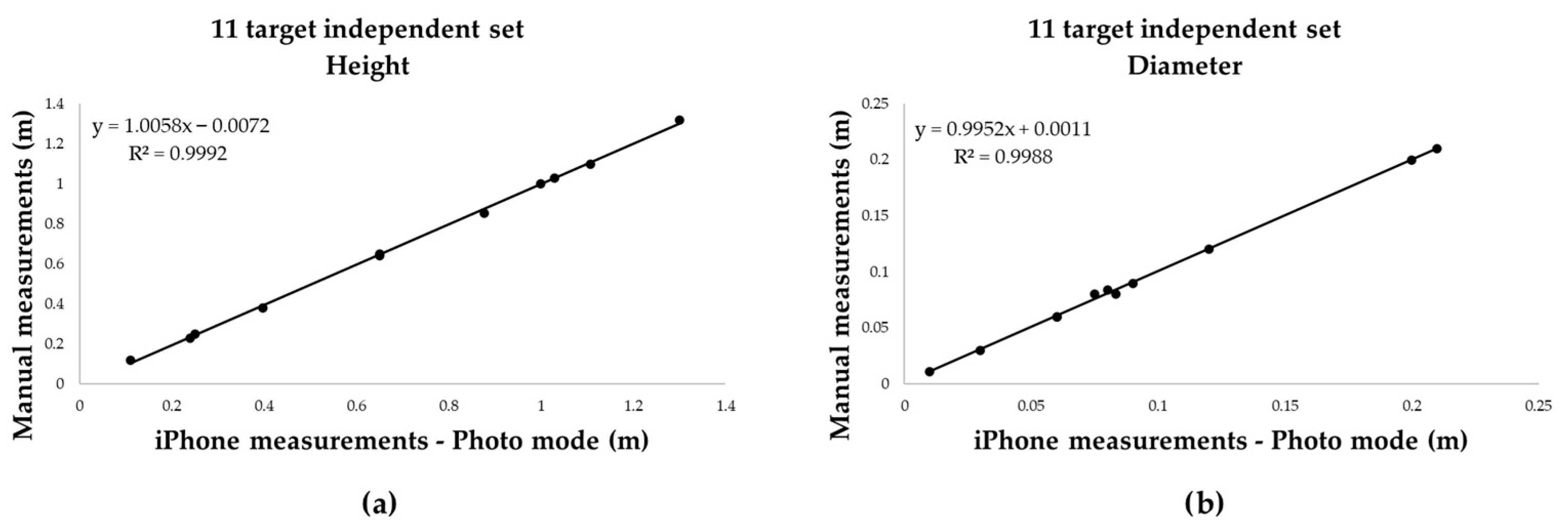

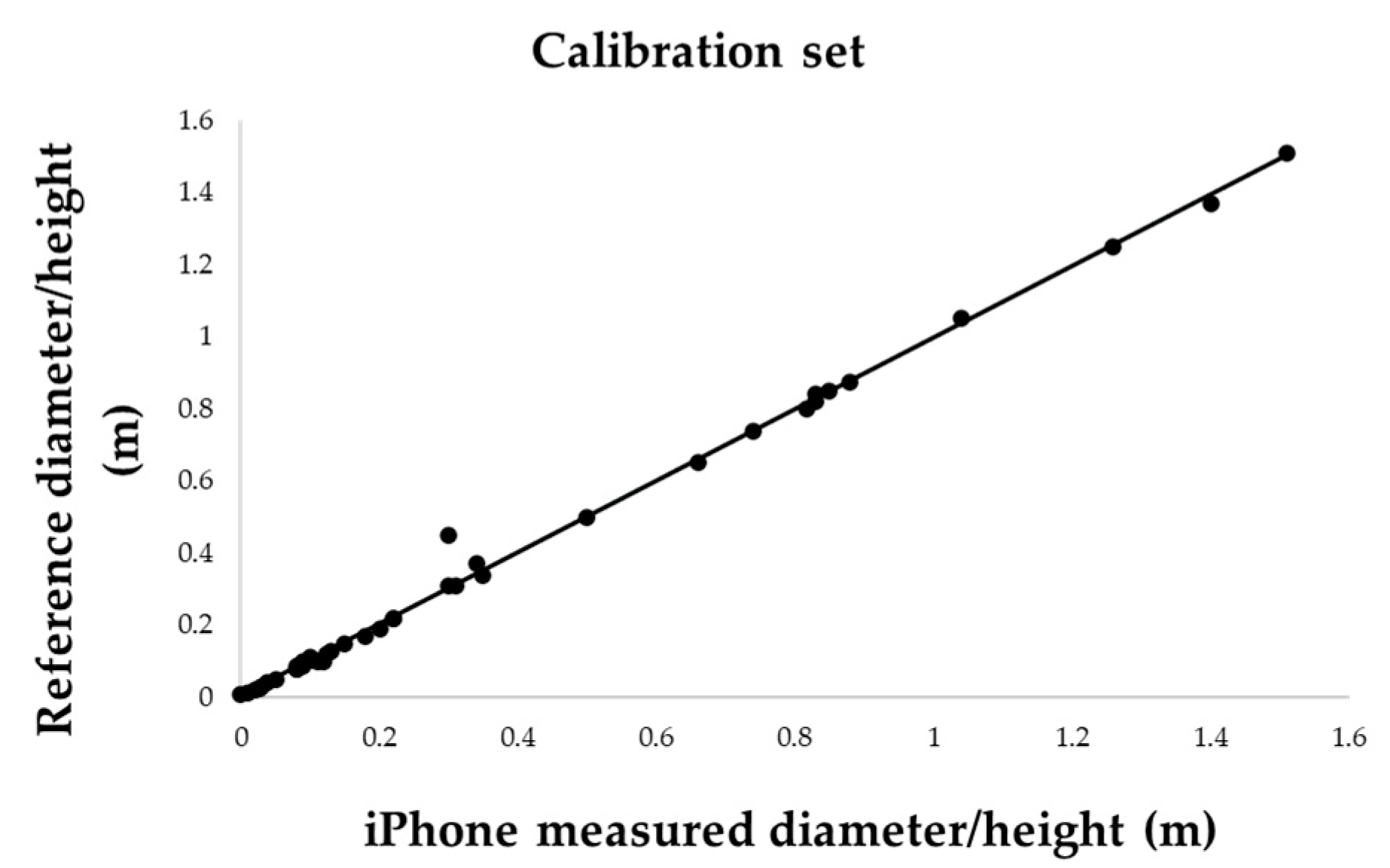

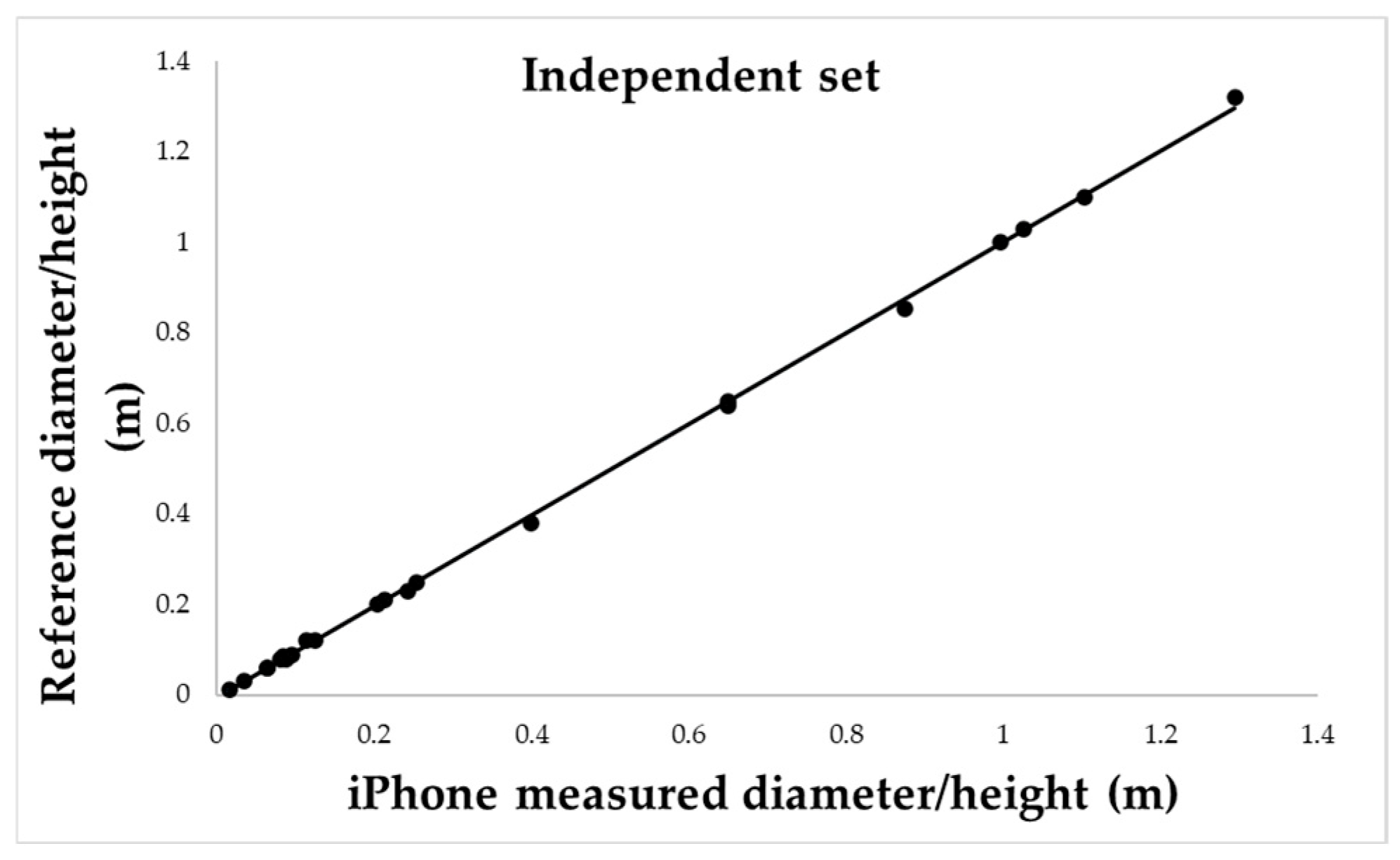

Based on the findings of the preliminary phase, a set of 31 cylindrical targets was acquired entirely in Photo mode, at a standardised scanning distance of 1.5 m, to capture fine-scale details and ensuring full target coverage. Once processed, point clouds were analysed in CloudCompare to extract target height and diameter. Dimensional variables were analysed individually to investigate potential correlations. The full dataset was randomly divided into two subsets: a calibration set (n = 20), and a validation set (n = 11), to enable independent assessment of the instrument’s reliability.

2.4. Study Site

The field application was conducted in a commercial hazelnut orchard located in Filiano (Potenza, Basilicata, Southern Italy; 40°49′50.61″ N, 15°42′13.07″ E), at an elevation of 480 m above sea level, with an average slope of 2% and a north-east orientation (

Figure 3). The orchard covers a total surface of 4.9 ha, with a 4 × 5 m planting layout. The soil, classified as sandy-clay loam (Cambisols—Regosols), shows a neutral to slightly acidic pH (6.8), with a good organic matter content (3%). The area has a Mediterranean climate with continental influences, characterised by cold winters and dry summers. Trees are trained as multi-stemmed bushes, a widely adopted system in the regional production area, and the orchard is managed under conventional practices including mechanical weed control, periodic pruning and drip irrigation system. The site was selected for its homogeneous varietal composition, which minimised confounding effects of genetic or site variability.

2.5. First Application to Fruit Trees

To assess the feasibility of smartphone-based scanning in real-world horticultural conditions, a preliminary application was conducted on hazelnut trees (

Corylus avellana L.) of the cultivar Tonda di Giffoni: this variety, widely recognised as one of the most representative in the Italian hazelnut sector, was selected as the focus of the trial given its presence in the experimental site. A subset of 4-year-old trees, trained as multi-stemmed bush with two main stems per plant (

Figure 4), was selected for this preliminary assessment: this choice allowed the assessment of the methodology while ensuring homogeneity of age and structures. The trial was designed with the specific purpose of evaluating the feasibility and reproducibility of the methodology under orchard conditions, rather than to provide exhaustive biometric data. Scanning was performed using the Polycam© application in Photo mode, selected for its higher accuracy in previous tests. Each tree was scanned maintaining a constant distance of 1.5 m from both the tree and the ground level, to optimise image overlap and ensure complete coverage of the structures: the resulting 3D point clouds, each consisting of an average of 559,402 points, were exported in LAS format and processed in CloudCompare. Geometrical attributes, such as total height and diameter of the stems at 0.30 m height, were calculated.

2.6. Estimation of Leaf Area from 3D Point Clouds

A complementary analysis was carried out to estimate the leaf area of

Corylus avellana L. using a smartphone-based 3D scanning approach. Leaf samples were collected from the same 4-year-old orchard described above: a total of 20 mature, fully expanded and undamaged leaves were collected, then positioned vertically on a rigid support placed on a flat surface to ensure stability during acquisition. Each sample was scanned using Polycam ©application in Photo mode, maintaining 0.5 m between the smartphone and the leaf (

Figure 5).

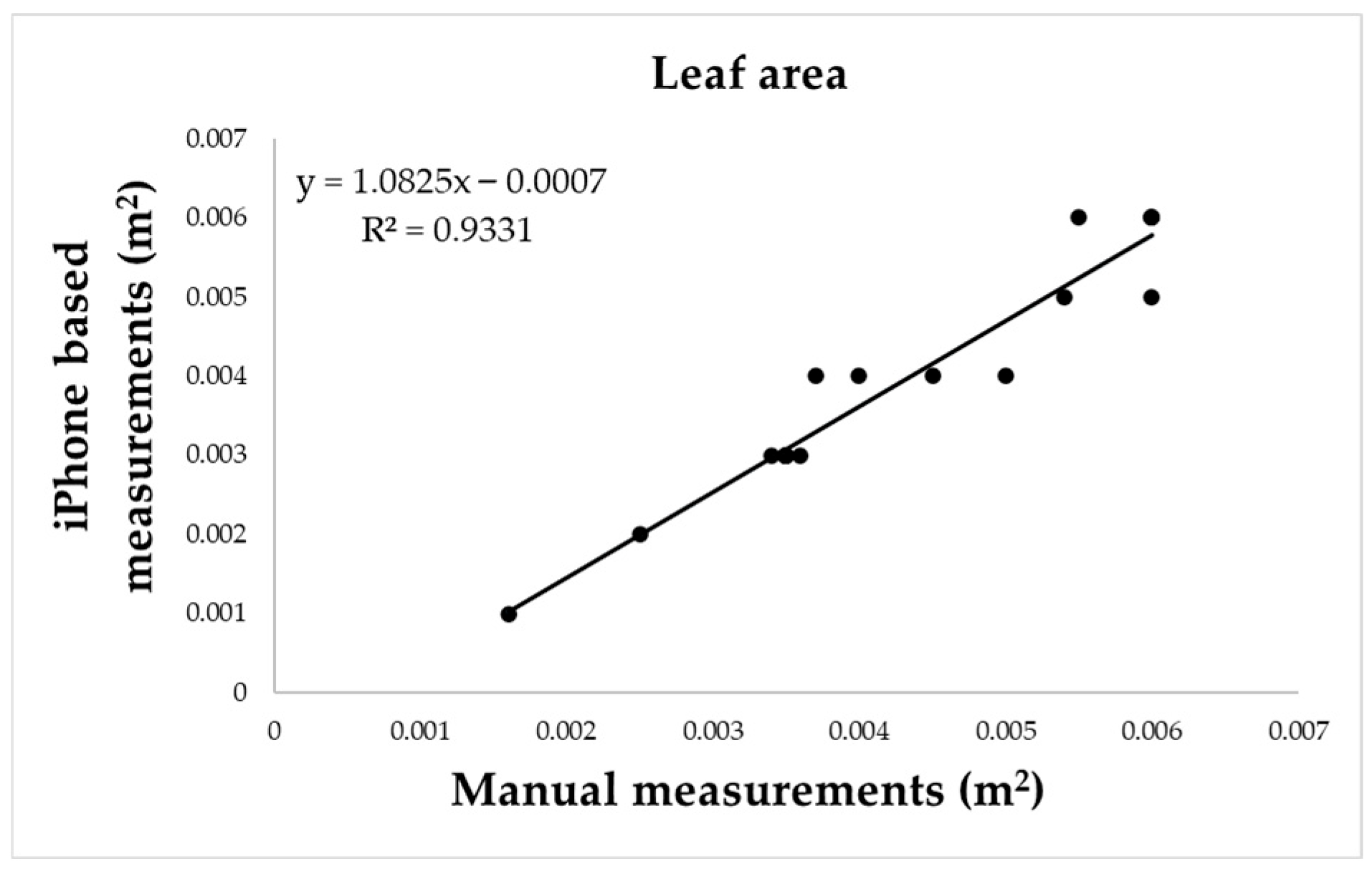

The acquired point clouds, consisting of an average of 552,036 points, were exported in LAS format and processed in CloudCompare software: leaf surface was isolated by manually defining Region of Interest (ROIs) to exclude the petiole and any residual background points. Manual approach was preferred over automatic ROI selection based on colour thresholds, as the latter proved less precise due to the irregular margins of the hazelnut leaves. The isolated point cloud was then used to compute surface area using the 2.5D surface tool of the software. To assess the reliability of this method, results were compared with ImageJ v 1.54 (NIH, Bethesda, MD, USA) measurements: for this method, each leaf was photographed alongside a metric reference scale, images were processed by setting the scale, converting to 8-bit format, and adjusting threshold to isolate the leaf shape. Regions of interest (ROIs) were then manually selected and added to the ROI manager, which automatically returned the area values for each leaf.

4. Discussion

4.1. Performance of Smartphone-Based Scanning

The results of this study confirm the technical feasibility of using a consumer-grade smartphone (iPhone 13 Pro) for 3D reconstruction of fruit tree structures, under both controlled and field conditions. The initial calibration experiment revealed a clear difference between the two acquisition modalities implemented in the Polycam© application: Photo mode consistently exhibited higher accuracy and precision compared to the integrated LiDAR sensor. Separate analysis for diameter and height showed that Photo mode achieved mean absolute deviations below 3 millimetres for both variables, and relative error less than 5%, indicating a high level of measurement fidelity. In contrast, LiDAR mode systematically underestimated height, with a mean deviation of—0.027 m and a relative error of—3.9%, demonstrating that its lower spatial resolution limits reliability for detailed structural measurements at short range. These differences were statistically supported by the paired Wilcoxon signed-rank test, which confirmed the absence of significant deviations for diameter but detected a systematic underestimation of height in LiDAR mode compared with Photo acquisition.

When considering aggregated measurements for both variables, the superiority of Photo mode was confirmed: it yielded a near-zero mean deviation (−0.001 m) and lower variability (σ = 0.016 m) compared to LiDAR (−0.013 m; σ = 0.024 m), confirming that the photogrammetric reconstruction outperformed LiDAR in terms of spatial density and measurement accuracy. Furthermore, the precision assessment across different targets and acquisition distances reinforced these conclusions: Photo mode maintained low relative standard deviations (RSDs), particularly for height where values remained consistently below 5% for all targets; LiDAR mode showed stable precision at larger distances, but its performance was more variable, especially for diameter where RSD values reached up to 19.6%. These results underscore that the performance of smartphone-integrated LiDAR is more sensitive to object geometry and surface reflectance than photogrammetry, especially under close-range conditions, highlighting a critical limitation for the application of smartphone-integrated LiDAR in heterogeneous orchard environments.

The analysis of the relationship between accuracy and precision provided further insights: a moderate correlation (R

2 = 0.44) was observed in Photo mode, an absent relationship in LiDAR mode (R

2 = 0.04). This behaviour confirms previous findings from in forest applications, which reported that smartphone-based LiDAR performed poorly when applied to young plantation trees, showing low accuracy and no consistent relationship between measurement variability and reliability [

18]: such evidence suggests that the constraints of the sensor are inherent to the technology rather than solely dependent on the study context.

Building on the performance observed in controlled conditions, a larger dataset of 31 cylindrical targets was analysed to evaluate the robustness of Photo mode: regression models fitted on 20 targets yielded near-perfect linearity for both height and diameter (R2 = 0.9939), which was maintained when applied to an independent validation set of 11 targets. The combined variable regression using all 62 samples confirmed the strength of the model, with R2 = 0.996 and mean absolute error of just 0.007 m.

4.2. Application to Orchard Systems and Leaf Traits

When applied in an operational orchard context, the smartphone-based method proved capable of capturing the structural characteristics of multi-stemmed hazelnut trees. Height and trunk diameter were successfully extracted from 3D point clouds acquired in Photo mode at a fixed distance of 1.5 m, confirming the potential of this tool for tree-level inventory in perennial fruit crops. These results demonstrate the feasibility of transferring methodologies—already validated in urban forest applications such as DBH estimation and tree mapping [

22,

23]—to the context of commercial orchards, where the geometric complexity and growth architecture differ significantly from typical forest stands due to pruning and agronomic practices. Moreover, the outcomes suggest that consumer-grade 3D scanning may complement more advanced and costly technologies such as terrestrial laser scanning and UAV-based photogrammetry [

20,

21]. In addition to structural measurements, the study investigated the feasibility of estimating leaf area—a parameter of agronomic relevance due to its strong correlation with photosynthetic activity, transpiration dynamics and plant water status—through 3D reconstructions of leaves. Twenty mature hazelnut leaves were scanned under field conditions, and their surface area was calculated from the resulting point clouds using CloudCompare software. These values were then compared against reference measurements obtained through manual image-based analysis with ImageJ, a validated and widely adopted tool for morphometric evaluation in plant science [

29]. The comparison yielded a high coefficient of determination (R

2 = 0.9331), confirming the reliability of the 3D scanning approach for capturing fine-scale leaf morphology. These findings are consistent with the recent work of Bar-Sella et al. [

28], who demonstrated the capability of the iPhone 13’s acquisition to precisely reconstruct individual maize leaves, and extend the methodology to a woody species under field conditions, showing that accuracy can be preserved despite higher variability introduced by environmental factors.

4.3. Methodological Strengths and Limitations

The present study offers a comprehensive evaluation of the capabilities and limitations of a consumer-grade device—specifically the iPhone 13 Pro—for the three-dimensional characterisation of fruit tree structures and leaf morphological traits. The choice of this device was motivated by its integration of both active (LiDAR) and passive (photogrammetry) 3D acquisition modes within a single platform, combined with the maturity and stability of its hardware-software ecosystem. Such configuration made it suitable for a systematic and reproducible performance assessment, minimising variability associated with device heterogeneity. Furthermore, the widespread adoption of iPhone models enhances the transferability and broader applicability of the results, as the technology is readily accessible to a large user base, facilitating replication of the approach and its potential integration into routine agronomic practices. Through a combination of calibration tests, field applications on hazelnut trees and fine-scale reconstruction, we demonstrated that this portable and accessible technology can produce accurate and reliable results under a variety of operational conditions. The results obtained under controlled conditions revealed that, while both LiDAR and photogrammetric acquisition modes can be used for 3D reconstruction, the Photo mode consistently outperformed LiDAR in terms of spatial fidelity, accuracy and repeatability. These findings are consistent with previous studies in forestry [

18], yet the present work extends these observations to the agricultural context, where fruit trees morphology and the variability introduced by agronomic management impose additional challenges. Compared to the forest-based results of Tatsumi et al., [

17] and Oikawa et al., [

19], data suggest that photogrammetry can offer greater robustness in environments where object shape, leaf cover and lighting are less controlled and more variable. When applied in orchard, the morphological complexity introduced by pruning systems and varietal growth patterns, represents a significant barrier to the generalisation of allometric models from forestry to fruit production systems; nevertheless, the approach enabled retrieval of key structural parameters without the need for expensive equipment.

These tools could complement TLS systems [

5,

6] or airborne systems [

11,

12,

13], particularly in contexts requiring high temporal resolution or rapid deployment across multiple sites. Furthermore, the capacity of the method to estimate leaf surface area in situ suggests that smartphone-based scanning could support a more integrative characterisation of plant traits: this is particularly relevant in fruit crops, where both canopy structure and leaf area are key determinants of productivity, water use and resource allocation. The ability to acquire such parameters rapidly and non-destructively opens opportunities for improved monitoring of plant status over time, including under different irrigation or pruning regimes.

Despite its strengths, several limitations emerged: the photogrammetric workflow, while highly accurate at short range, is inherently sensitive to environmental factors such as ambient lighting, operator movement and background clutter. These conditions, typical of field environments, may introduce inconsistencies in point cloud generation or processing. In contrast, LiDAR’s performance, although more stable under variable conditions, remains constrained by low point density and irregular spatial distribution, a limitation that in this study resulted in a systematic underestimation of height measurements on the calibration targets. This bias may become particularly critical when extrapolated to tree-level indices dependent on vertical extent, such as total height or height to diameter ratios. Similarly, in orchard conditions, occlusions from foliage, multi-stemmed structures and variable illumination further contribute to measurement uncertainty. Ensuring adequate angular coverage, performing repeated acquisitions under varying conditions, and adopting a standardised acquisition protocol would represent essential measures to secure reproducibility. Additional limitations deserve mention. The analysis did not stratify trees by DBH classes, since the orchard consisted of coetaneous individuals with highly uniform trunk size: while this design was suitable to test the feasibility of the approach in a controlled field setting, future applications should extend the validation to trees of different stem diameter, as this parameter may influence reconstruction accuracy. Operator variability also remains untested, an essential aspect for assessing robustness in routine agronomic contexts. Finally, scalability represents a limitation, as the tree-by-tree approach constrains applicability in large or high-density orchards. Within this framework, the most appropriate application of smartphone-based scanning is the rapid characterisation of individual trees or the ground-truthing of UAV and TLS products, whereas extensive, high-density surveys remain more consistent with dedicated platforms, although at the cost of greater economic and operational requirements.

4.4. Operational Relevance and Future Perspectives

From an operational standpoint, the simplicity and portability of smartphone-based scanning represent a major advantage in context where rapid assessment, minimal training and low cost are critical requirements. Practically, the method provides a non-destructive approach for the quantitative monitoring of tree structural parameters. These measurements can be incorporated into orchard management frameworks to support evidence-based decisions on pruning, irrigation and nutrient supply. The possibility of deploying the same device across multiple sites further enhances data comparability, facilitating the establishment of consistent time-series datasets and improving the assessment of plant responses to agronomic interventions such as fertilisation or irrigation scheduling.

Finally, this study lays the groundwork for further research on the integration of smartphone-derived 3D data into practical phenotyping workflows. Future efforts should focus on expanding validation across different species, environmental conditions and time points, to evaluate the method’s robustness and scalability in real horticultural systems. Although comparison across different smartphone models was beyond the scope of the work, addressing inter-model variability will be crucial to ensure methodological consistency and broader applicability. In addition, the assessment of operator-related variability is needed to establish reproducibility in routine monitoring, while scalability challenges may be addressed through the integration of smartphone-based approaches with complementary platforms such as UAVs and TLS, enabling multi-scale workflows that combine low-cost, high-frequency tree-level observations with spatially extensive surveys, enhancing both temporal resolution and spatial coverage.

5. Conclusions

This study assessed the accuracy and precision of a consumer-grade smartphone, the iPhone 13 Pro, for the three-dimensional data acquisition, providing a comparison between LiDAR and photogrammetric modes under controlled and field conditions. Controlled tests demonstrated that photogrammetry consistently outperformed LiDAR in terms of spatial fidelity and repeatability, while LiDAR showed a systematic underestimation of the height parameter. Beyond calibration, the workflow was tested on hazelnut trees, allowing retrieval of key structural parameters, and on leaves, where results showed strong correspondence with direct measurements.

These findings confirm the technical reliability of smartphone-based photogrammetry as a practical tool for monitoring in fruit systems. By enabling the extraction of dimensional traits with low-cost and portable equipment, the approach supports applications ranging from tree structural assessment to the estimation of leaf characteristics. The possibility of obtaining reliable three-dimensional data without specialised instruments broadens accessibility to digital technologies, fostering their adoption in precision fruit farming.

Nonetheless, some limitations should be acknowledged, including the sensitivity of photogrammetry to environmental factors, the requirement for tree-by-tree scanning, which limits scalability, and the influence of operator variability. Further research should extend validation across different tree sizes, species and environments, and evaluate multiple smartphone or portable device models, to strengthen robustness and enable broader adoption in orchard management.

In conclusion, by demonstrating accurate structural and morphological data acquisition with a widely accessible device, this work advances the development of low-cost and field-ready technologies for digital fruticulture. While challenges remain in terms of scalability and inter-operator consistency, these findings lay the groundwork for broader integration of consumer-grade devices into digital fruticulture, bridging the gap between research applications and routine orchard management.