Two-Stage Marker Detection–Localization Network for Bridge-Erecting Machine Hoisting Alignment

Abstract

1. Introduction

2. Related Works

3. Methodology

3.1. Overall Architecture

- Stage 1: Lightweight Marker Detection Module for the rapid coarse localization of markers in complex environments.

- Stage 2: Transformer-Based Homography Estimation Module for precise coordinate transformation between markers and hoisting equipment.
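The hand-off between the two stages can be sketched as below. This is a minimal illustration under stated assumptions, not the authors' implementation. The Stage 1 box is taken as `(x, y, w, h)` in image pixels. The relative ROI `margin` is a hypothetical parameter. Stage 2 is assumed to output a 3 × 3 homography `h_roi` that maps marker-template coordinates to ROI pixel coordinates. The detector and the Transformer themselves are not shown.

```python
from typing import List, Tuple

Mat3 = List[List[float]]
Box = Tuple[float, float, float, float]  # (x, y, w, h) in pixels


def expand_box(box: Box, margin: float, img_w: int, img_h: int) -> Box:
    """Grow a Stage 1 box by a relative margin on every side and clip it to the image."""
    x, y, w, h = box
    dx, dy = w * margin, h * margin
    x0, y0 = max(0.0, x - dx), max(0.0, y - dy)
    x1, y1 = min(float(img_w), x + w + dx), min(float(img_h), y + h + dy)
    return (x0, y0, x1 - x0, y1 - y0)


def matmul3(a: Mat3, b: Mat3) -> Mat3:
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def roi_to_image(h_roi: Mat3, roi: Box) -> Mat3:
    """Lift a template->ROI homography to template->image by prepending the ROI offset."""
    t = [[1.0, 0.0, roi[0]], [0.0, 1.0, roi[1]], [0.0, 0.0, 1.0]]
    return matmul3(t, h_roi)


def apply_h(h: Mat3, pt: Tuple[float, float]) -> Tuple[float, float]:
    """Map a 2D point through a homography, including the perspective division."""
    x, y = pt
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Usage: a 40x20 px detection, expanded by 25% per side inside a 640x480 frame.
roi = expand_box((100.0, 50.0, 40.0, 20.0), 0.25, 640, 480)
h_roi = [[2.0, 0.0, 5.0], [0.0, 2.0, 3.0], [0.0, 0.0, 1.0]]  # hypothetical Stage 2 output
print(roi, apply_h(roi_to_image(h_roi, roi), (1.0, 1.0)))
# -> (90.0, 45.0, 60.0, 30.0) (97.0, 50.0)
```

Composing with a pure translation lets Stage 2 reason in small, ROI-local coordinates while the final mapping is still expressed in full-image pixels.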

3.2. Stage 1: Lightweight Marker Detection Module

Dynamic Hybrid Block (DHB)

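- The first branch uses MobileNetV3’s depthwise separable convolutions to extract geometric features (edges, corners). The depthwise convolution operation is defined as $Y = W_{d} \circledast X + b$, where $W_{d} \in \mathbb{R}^{k \times k \times C}$ is the depthwise kernel, $k$ is the kernel size, $C$ is the number of input channels, $X$ and $Y$ are the input and output feature maps, $\circledast$ denotes depthwise convolution, and $b$ is the bias.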

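- The second branch introduces lightweight Transformer blocks with scene-aware attention. Window multi-head self-attention (W-MSA) is computed within each local window as $\mathrm{Attention}(Q, K, V) = \mathrm{Softmax}\left(QK^{\top}/\sqrt{d}\right)V$, where $Q$, $K$, and $V$ are the query, key, and value projections of the window tokens and $d$ is the per-head dimension. A scene prior bias $B$ (encoding the marker aspect-ratio and position distribution) is added to the attention scores: $\mathrm{Attention}(Q, K, V) = \mathrm{Softmax}\left(QK^{\top}/\sqrt{d} + B\right)V$.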
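The two DHB branch operations described in the list above can be illustrated in plain Python. This is a hedged sketch, not the authors' code. The convolution is written as cross-correlation with stride 1 and zero "same" padding, as deep learning frameworks implement it. The attention is a single head over one window, computing Softmax(QKᵀ/√d + B)V. The scene prior `bias` matrix is passed in directly because its construction is not specified here. Window partitioning, the multi-head split, the Q/K/V projection weights and the fusion of the two branches are all omitted.

```python
import math
from typing import List

Grid = List[List[float]]


def depthwise_conv(x: List[Grid], kernels: List[Grid], bias: List[float]) -> List[Grid]:
    """Y_c = W_c * X_c + b_c, applied to every channel c independently (odd kernel size k)."""
    out = []
    for xc, kc, bc in zip(x, kernels, bias):
        h, w, k = len(xc), len(xc[0]), len(kc)
        p = k // 2
        rows = []
        for i in range(h):
            row = []
            for j in range(w):
                acc = bc
                for a in range(k):
                    for b in range(k):
                        ii, jj = i + a - p, j + b - p
                        if 0 <= ii < h and 0 <= jj < w:  # zero padding outside the map
                            acc += kc[a][b] * xc[ii][jj]
                row.append(acc)
            rows.append(row)
        out.append(rows)
    return out


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    e = [math.exp(s - m) for s in scores]
    total = sum(e)
    return [v / total for v in e]


def biased_window_attention(q: Grid, k: Grid, v: Grid, bias: Grid) -> Grid:
    """Single-head attention over the tokens of one window: Softmax(Q K^T / sqrt(d) + B) V."""
    d = len(q[0])
    out = []
    for i, qi in enumerate(q):
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) + bias[i][j]
                  for j, kj in enumerate(k)]
        weights = softmax(scores)
        out.append([sum(weights[j] * v[j][c] for j in range(len(v)))
                     for c in range(len(v[0]))])
    return out


# Usage: a 3x3 box filter on one channel, then a bias that steers token 0 toward key 1.
ones = [[1.0] * 3 for _ in range(3)]
y = depthwise_conv([ones], [ones], [0.0])
att = biased_window_attention([[0.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]],
                              [[10.0], [20.0]], [[0.0, 50.0]])
```

With equal raw scores, a large entry in `B` is enough to move almost all attention weight to one key. This is the mechanism by which a scene prior can favour marker-shaped regions.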

3.3. Stage 2: Transformer-Based Homography Estimation Module

3.3.1. Input Processing

3.3.2. Transformer Encoder

- Absolute positional encoding: Encodes pixel coordinates in the ROI to preserve spatial layout.

- Geometric prior encoding: Encodes marker-specific priors (center coordinates: $(x_c, y_c)$, width: $w$, height: $h$) derived from Stage 1’s coarse bounding box, anchoring attention to the marker geometry.
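The exact encoding functions are not given in this section. The sketch below is therefore hypothetical and rests on three assumptions. First, both encodings use standard sinusoidal features, as in the original Transformer. Second, the Stage 1 box is already expressed in ROI pixels. Third, "fused" is read as element-wise addition. The names `absolute_pe`, `geometric_prior_pe` and `fused_pe` are illustrative only.

```python
import math
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_c, y_c, w, h) in ROI pixels


def sinusoid(value: float, dim: int, base: float = 10000.0) -> List[float]:
    """1D sinusoidal encoding of a scalar into `dim` features (dim must be even)."""
    enc: List[float] = []
    for i in range(dim // 2):
        freq = 1.0 / (base ** (2 * i / dim))
        enc += [math.sin(value * freq), math.cos(value * freq)]
    return enc


def absolute_pe(u: float, v: float, dim: int) -> List[float]:
    """Absolute 2D encoding of ROI pixel (u, v): half the features for u, half for v."""
    return sinusoid(u, dim // 2) + sinusoid(v, dim // 2)


def geometric_prior_pe(box: Box, dim: int) -> List[float]:
    """Encode the Stage 1 box terms (x_c, y_c, w, h) with dim // 4 features each."""
    out: List[float] = []
    for value in box:
        out += sinusoid(value, dim // 4)
    return out


def fused_pe(u: float, v: float, box: Box, dim: int) -> List[float]:
    """Hypothetical fusion: element-wise sum of the absolute and geometric encodings."""
    if dim % 8:
        raise ValueError("dim must be a multiple of 8")
    return [a + g for a, g in zip(absolute_pe(u, v, dim), geometric_prior_pe(box, dim))]
```

In this form the geometric term is identical for every token in the ROI. It therefore acts as a shared offset that conditions all tokens on the detected marker's position and size, while the absolute term still distinguishes individual pixels.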

3.3.3. Homography Decoding

3.4. Multi-Dimensional Data Augmentation

3.4.1. Random Homography Transformation

- Scaling factors for x- and y-axes are perturbed by ±;

- Shearing terms and are perturbed by ±;

- Translation offsets and are limited to ± and ±, respectively.
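The perturbation scheme above, together with the box extraction named in Section 3.4.3, can be sketched as follows. The jitter ranges in this sketch are placeholders, because the actual ranges are not reproduced in this text. Because only scale, shear and translation terms are perturbed here, the sampled matrix is affine: its perspective row stays `[0, 0, 1]`. Extracting the label box as the axis-aligned hull of the warped corners is an assumption about Section 3.4.3.

```python
import random
from typing import List, Tuple

Mat3 = List[List[float]]
Point = Tuple[float, float]


def random_homography(rng: random.Random, scale_jitter: float = 0.1, shear_jitter: float = 0.05,
                      max_tx: float = 20.0, max_ty: float = 20.0) -> Mat3:
    """Perturb scale (h11, h22), shear (h12, h21) and translation (h13, h23).

    All default ranges are hypothetical placeholders, not the paper's values.
    """
    sx = 1.0 + rng.uniform(-scale_jitter, scale_jitter)
    sy = 1.0 + rng.uniform(-scale_jitter, scale_jitter)
    shx = rng.uniform(-shear_jitter, shear_jitter)
    shy = rng.uniform(-shear_jitter, shear_jitter)
    tx = rng.uniform(-max_tx, max_tx)
    ty = rng.uniform(-max_ty, max_ty)
    return [[sx, shx, tx], [shy, sy, ty], [0.0, 0.0, 1.0]]


def warp_points(h: Mat3, pts: List[Point]) -> List[Point]:
    """Map points through H with perspective division."""
    out = []
    for x, y in pts:
        w = h[2][0] * x + h[2][1] * y + h[2][2]
        out.append(((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
                    (h[1][0] * x + h[1][1] * y + h[1][2]) / w))
    return out


def bbox_from_corners(pts: List[Point]) -> Tuple[float, float, float, float]:
    """Axis-aligned (x, y, w, h) box enclosing the warped marker corners."""
    xs, ys = [p[0] for p in pts], [p[1] for p in pts]
    return (min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys))


# Usage: warp a 100x50 px marker template and derive both training targets.
corners = [(0.0, 0.0), (100.0, 0.0), (100.0, 50.0), (0.0, 50.0)]
h = random_homography(random.Random(0))
warped = warp_points(h, corners)   # corner target for Stage 2
box = bbox_from_corners(warped)    # box target for Stage 1
```

Generating the box from the warped corners keeps the detection label and the corner label geometrically consistent by construction.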

3.4.2. Photometric Augmentation

- Color space adjustments: Random brightness and saturation modifications in the HSV space, followed by contrast and brightness offset adjustments in the BGR space;

- Gaussian noise injection: Additive Gaussian noise with variance $\sigma^2$ to simulate sensor noise or low-light conditions.
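The photometric pipeline in the list above can be sketched per pixel with the standard-library `colorsys` module. The sequence is an HSV value and saturation gain, then a contrast/offset step `alpha * x + beta`, then additive Gaussian noise with variance `noise_var`, clipped to [0, 255]. The parameter names and neutral defaults are hypothetical, because the sampled ranges are not reproduced in this text. Hue is left unchanged.

```python
import colorsys
import random
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (B, G, R), 0..255, OpenCV channel order


def clip255(value: float) -> int:
    return max(0, min(255, int(round(value))))


def photometric_augment(pixels: List[Pixel], rng: random.Random, v_gain: float = 1.0,
                        s_gain: float = 1.0, alpha: float = 1.0, beta: float = 0.0,
                        noise_var: float = 0.0) -> List[Pixel]:
    """HSV brightness/saturation gain, then BGR contrast/offset, then Gaussian noise."""
    sigma = noise_var ** 0.5
    out = []
    for b, g, r in pixels:
        # HSV step: colorsys works on RGB floats in [0, 1].
        hue, sat, val = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        sat, val = min(1.0, sat * s_gain), min(1.0, val * v_gain)
        r2, g2, b2 = colorsys.hsv_to_rgb(hue, sat, val)
        channels = []
        for c in (b2, g2, r2):  # back to BGR order
            value = alpha * c * 255.0 + beta  # contrast (alpha) and brightness offset (beta)
            if sigma > 0:
                value += rng.gauss(0.0, sigma)  # additive sensor noise
            channels.append(clip255(value))
        out.append((channels[0], channels[1], channels[2]))
    return out
```

With all gains at 1, `alpha = 1`, `beta = 0` and zero variance, the function returns the input unchanged, which makes it easy to check that each factor only acts when it is sampled.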

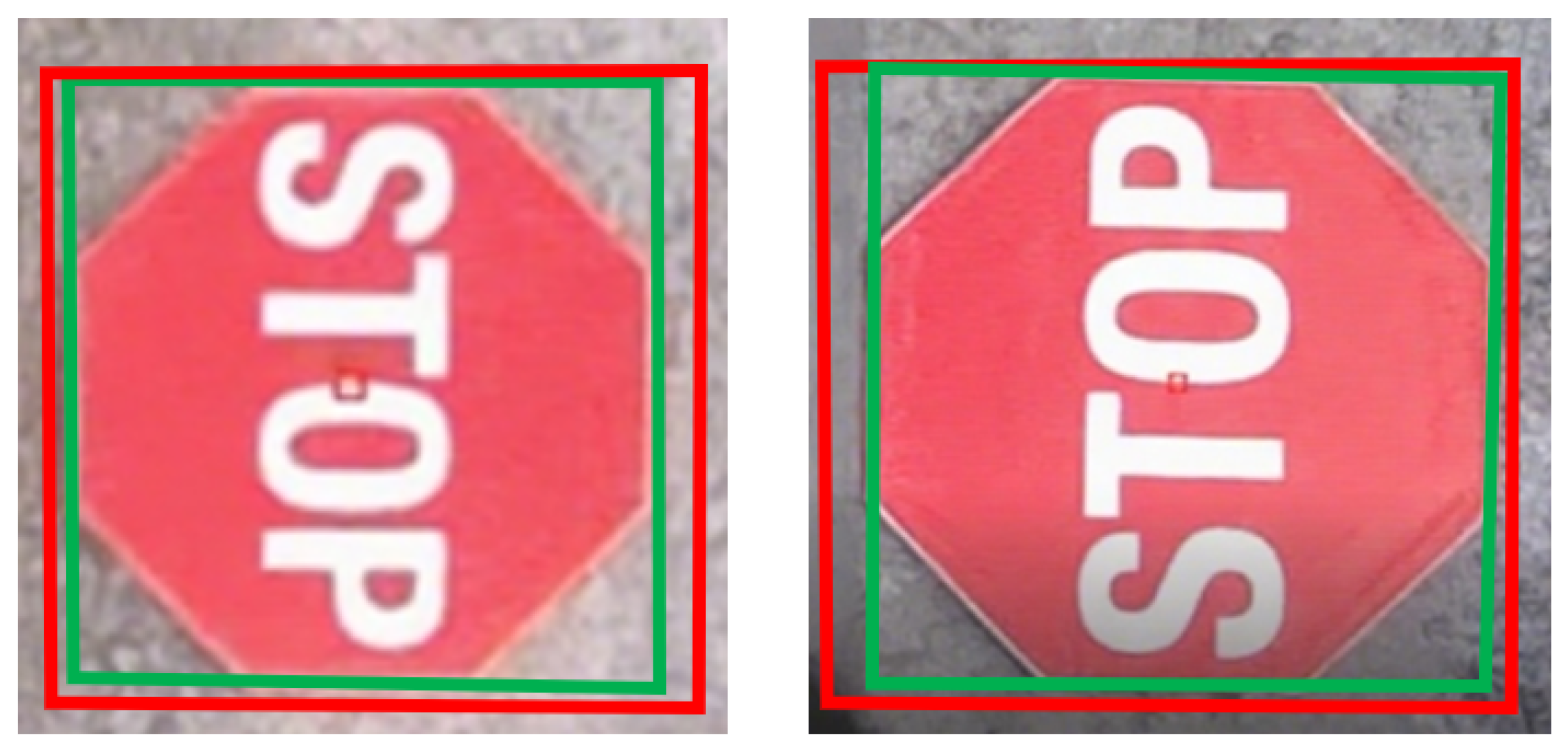

3.4.3. Background Integration and Bounding Box Extraction

3.5. Loss Function

4. Experimental Setup and Result Analysis

4.1. Experimental Setup

4.2. Experimental Datasets and Preprocessing

4.2.1. Real Construction Dataset

- Markers: 5 types of rectangular markers (10–20 cm in size) affixed to precast girders;

- Annotations: Bounding boxes (labeled via LabelImg v1.8.5) and 4 corner coordinates (refined to sub-pixel accuracy with OpenCV 4.0’s cornerSubPix and manually verified);

- Disturbances: 32% of images contain lighting variations (morning/afternoon sun, overcast), 28% partial occlusion (crane arms, worker bodies), and 21% contamination (dust, paint peeling); this distribution is consistent with field observations.

4.2.2. Augmented Dataset

4.3. Evaluation Metrics

Robustness

- Images with lighting variations;

- Images with partial marker occlusion;

- Images with contaminated markers (e.g., dust adhesion, paint peeling).
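Reprojection error is the localization metric reported in the tables. A common definition is the mean Euclidean distance between template corners projected by the predicted homography and the annotated corners, and the sketch below follows that definition. Whether the paper averages over corners in exactly this way is an assumption. The millimetre conversion factor `mm_per_px` is also hypothetical, since the pixel-to-millimetre calibration is not described here.

```python
import math
from typing import List, Tuple

Mat3 = List[List[float]]
Point = Tuple[float, float]


def project(h: Mat3, p: Point) -> Point:
    """Map a template point into the image through H, with perspective division."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


def reprojection_error(h_pred: Mat3, template: List[Point], gt: List[Point],
                       mm_per_px: float = 1.0) -> float:
    """Mean distance between H_pred-projected template corners and ground-truth corners."""
    if not template or len(template) != len(gt):
        raise ValueError("need matching, non-empty corner lists")
    total = 0.0
    for p, (gx, gy) in zip(template, gt):
        u, v = project(h_pred, p)
        total += math.hypot(u - gx, v - gy)
    return mm_per_px * total / len(template)
```

For the robustness evaluation, the same per-image error would simply be averaged separately over the lighting, occlusion and contamination subsets listed above.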

4.4. Comparative Experiments

- Single-stage detection + traditional homography: YOLOv8 (single-stage detector) combined with SIFT feature matching for homography estimation;

- Two-stage CNN network: Faster R-CNN (two-stage detector) followed by a CNN-based homography regression module;

- Proposed method without data augmentation: Identical to the proposed network but trained using only real data (no augmented samples).
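The YOLOv8+SIFT baseline ends in a classical homography fit from matched points. The sketch below shows only the minimal four-point direct linear transform (DLT) with h33 fixed to 1, solved by Gauss–Jordan elimination. It is a generic textbook solver, not the authors' baseline code. In a SIFT pipeline, the many matches would normally go to a robust estimator such as RANSAC; OpenCV's `cv2.findHomography` with `cv2.RANSAC` is one example. RANSAC repeatedly calls a minimal solver like this one.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Gauss-Jordan elimination with partial pivoting for a square system A x = b."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("degenerate correspondences (e.g. three collinear points)")
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= f * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography_from_4(src: Sequence[Point], dst: Sequence[Point]) -> List[List[float]]:
    """DLT: each pair gives u*(h31 x + h32 y + 1) = h11 x + h12 y + h13, and likewise for v."""
    a: List[List[float]] = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def project(h: List[List[float]], p: Point) -> Point:
    """Map a point through H with perspective division."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)
```

The unnormalised DLT is sensitive to noise and to large coordinate values. Production solvers normalise the points first and reject outlier matches, which matters when SIFT matches degrade under occlusion or contamination.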

4.5. Ablation Studies

- Transformer module: Performance comparison between the proposed network and a variant where the Transformer-based homography estimation module was replaced with a CNN regression network.

- Data augmentation: Comparison of and reprojection error with/without using augmented training data.

- Positional encoding: Evaluation of three variants:

  - Using only absolute positional encoding;

  - Using only geometric prior encoding (marker center coordinates and aspect ratio);

  - Using the proposed fused positional encoding (absolute + geometric).

4.6. Result Analysis

4.6.1. Quantitative Results

4.6.2. Ablation Study Results

4.6.3. Scalability Analysis

4.6.4. Qualitative Analysis

4.6.5. Robustness Verification

- Occluded markers: (as shown in Table 1);

- Contaminated markers: reprojection error .

5. Conclusions and Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; British Machine Vision Association (BMVA): London, UK, 1988; pp. 147–151.

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-up robust features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 2564–2571.

- Suárez, I.; Sfeir, G.; Buenaposada, J.M.; Baumela, L. BEBLID: Boosted efficient binary local image descriptor. Pattern Recognit. Lett. 2020, 133, 366–372.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Varghese, R.; Sambath, M. YOLOv8: A novel object detection algorithm with enhanced performance and robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 1137–1149.

- Luo, Y.; Wang, X.; Liao, Y.; Fu, Q.; Shu, C.; Wu, Y.; He, Y. A review of homography estimation: Advances and challenges. Electronics 2023, 12, 4977.

- DeTone, D.; Malisiewicz, T.; Rabinovich, A. Deep image homography estimation. arXiv 2016, arXiv:1606.03798.

- Wang, G.; You, Z.; An, P.; Yu, J.; Chen, Y. Efficient and robust homography estimation using compressed convolutional neural network. In Proceedings of the Digital TV and Multimedia Communication: 15th International Forum, IFTC 2018, Shanghai, China, 20–21 September 2018; Revised Selected Papers 15. Springer: Berlin/Heidelberg, Germany, 2019; pp. 156–168.

- Kang, L.; Wei, Y.; Xie, Y.; Jiang, J.; Guo, Y. Combining convolutional neural network and photometric refinement for accurate homography estimation. IEEE Access 2019, 7, 109460–109473.

- Zhou, H.; Hu, W.; Li, Y.; He, C.; Chen, X. Deep Homography Estimation With Feature Correlation Transformer. In Proceedings of the 2023 IEEE International Conference on Multimedia and Expo (ICME), Brisbane, Australia, 10–14 July 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1397–1402.

- Ye, N.; Wang, C.; Fan, H.; Liu, S. Motion basis learning for unsupervised deep homography estimation with subspace projection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 13117–13125.

- Hong, M.; Lu, Y.; Ye, N.; Lin, C.; Zhao, Q.; Liu, S. Unsupervised homography estimation with coplanarity-aware GAN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 17663–17672.

- Zhang, J.; Wang, C.; Liu, S.; Jia, L.; Ye, N.; Wang, J.; Zhou, J.; Sun, J. Content-aware unsupervised deep homography estimation. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part I 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 653–669.

- Nie, L.; Lin, C.; Liao, K.; Liu, S.; Zhao, Y. Depth-aware multi-grid deep homography estimation with contextual correlation. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 4460–4472.

- Hou, B.; Ren, J.; Yan, W. Unsupervised Multi-Scale-Stage Content-Aware Homography Estimation. Electronics 2023, 12, 1976.

- Fang, Q.; Li, H.; Luo, X.; Ding, L.; Luo, H.; Rose, T.M.; An, W. Detecting non-hardhat-use by a deep learning method from far-field surveillance videos. Autom. Constr. 2018, 85, 1–9.

- Zhu, Z.; Ren, X.; Chen, Z. Visual tracking of construction jobsite workforce and equipment with particle filtering. J. Comput. Civ. Eng. 2016, 30, 04016023.

- Sharif, M.M.; Nahangi, M.; Haas, C.; West, J. Automated model-based finding of 3D objects in cluttered construction point cloud models. Comput.-Aided Civ. Infrastruct. Eng. 2017, 32, 893–908.

- Golparvar-Fard, M.; Heydarian, A.; Niebles, J.C. Vision-based action recognition of earthmoving equipment using spatio-temporal features and support vector machine classifiers. Adv. Eng. Inform. 2013, 27, 652–663.

- Kim, J.; Chi, S.; Seo, J. Interaction analysis for vision-based activity identification of earthmoving excavators and dump trucks. Autom. Constr. 2018, 87, 297–308.

- Fang, W.; Ding, L.; Zhong, B.; Love, P.E.; Luo, H. Automated detection of workers and heavy equipment on construction sites: A convolutional neural network approach. Adv. Eng. Inform. 2018, 37, 139–149.

- Luo, X.; Li, H.; Cao, D.; Yu, Y.; Yang, X.; Huang, T. Towards efficient and objective work sampling: Recognizing workers’ activities in site surveillance videos with two-stream convolutional networks. Autom. Constr. 2018, 94, 360–370.

- Jeelani, I.; Han, K.; Albert, A. Automating and scaling personalized safety training using eye-tracking data. Autom. Constr. 2018, 93, 63–77.

- Irizarry, J.; Costa, D.B. Exploratory study of potential applications of unmanned aerial systems for construction management tasks. J. Manag. Eng. 2016, 32, 05016001.

- Ros, G.; Sellart, L.; Materzynska, J.; Vazquez, D.; Lopez, A.M. The SYNTHIA dataset: A large collection of synthetic images for semantic segmentation of urban scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3234–3243.

- Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 1–48.

| Method | Detection (%) | Localization: Reprojection Error (mm) | Real-Time: FPS | Robustness (%) |
|---|---|---|---|---|
| YOLOv8+SIFT | 90.2 ± 0.8 | 3.5 ± 0.3 | 45 ± 1 | 82.1 ± 1.2 |
| Faster R-CNN+CNN | 93.4 ± 0.6 | 2.3 ± 0.2 | 38 ± 1 | 88.7 ± 0.9 |
| Ours (no augmentation) | 96.1 ± 0.5 | 1.8 ± 0.2 | 32 ± 1 | 92.4 ± 0.7 |
| Proposed Method | 97.8 ± 0.4 | 1.2 ± 0.1 | 32 ± 1 | 95.6 ± 0.5 |

| Variant | Detection (%) | Reprojection Error (mm) | FPS |
|---|---|---|---|
| Full model (proposed) | 97.8 | 1.2 | 32 |
| Without Transformer (CNN regression) | 95.0 | 2.0 | 33 |
| Without data augmentation | 96.1 | 1.8 | 32 |
| Absolute pos. encoding only | 96.5 | 1.6 | 32 |
| Geometric prior encoding only | 96.3 | 1.7 | 32 |

| Real:Synthetic Ratio (images) | Detection (%) | Reprojection Error (mm) | FPS |
|---|---|---|---|
| 1:1 (2000:2000) | 96.9 | 1.4 | 32 |
| 2:5 (2000:5000) | 97.8 | 1.2 | 32 |
| 3:5 (3000:5000) | 97.2 | 1.3 | 32 |

| Stitched Condition (resolution, markers) | Detection (%) | Reprojection Error (mm) | FPS |
|---|---|---|---|
| 1280 × 960, 2 markers | 97.3 | 1.3 | 30 |
| 1920 × 1440, 4–5 markers | 96.5 | 1.5 | 12 |
| 2880 × 2160, 6–8 markers | 96.1 | 1.6 | 4 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, L.; Xiao, Z.; Hu, T. Two-Stage Marker Detection–Localization Network for Bridge-Erecting Machine Hoisting Alignment. Sensors 2025, 25, 5604. https://doi.org/10.3390/s25175604
