FM-Net: A New Method for Detecting Smoke and Flames

Abstract

1. Introduction

- (1) Improving the generalization ability of the model by constructing a dedicated dataset that covers flames, smoke, and complex backgrounds, and by adopting an adversarial filtering augmentation strategy.

- (2) Introducing a Context Guided Convolutional Block that structurally decouples the feature space and progressively reduces its dimensionality; combining it with a Poly Kernel Inception Block to better capture the fine details of detected targets; and using a Manhattan Attention Mechanism Unit to model long-range dependencies between pixels, which addresses the difficulty of characterizing smoke as it diffuses and changes shape.

- (3) Constructing a lightweight network architecture that improves both detection accuracy and real-time performance (in the comparison experiment, mAP50 rises from 0.6156 for YOLOv8 to 0.63367 for FM-Net), providing reliable technical support for early fire warning in complex scenarios.
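The internals of the Manhattan Attention Mechanism Unit in contribution (2) are not given in this text. As a hedged illustration only, the sketch below follows the Manhattan Self-Attention formula written in the RMT paper (Fan et al., in the references), (Softmax(QKᵀ) ⊙ D)V, where D[n][m] = γ^(|x_n − x_m| + |y_n − y_m|): attention weights between two pixels are damped by their Manhattan distance, so nearby pixels dominate while distant ones (for example, a spreading smoke plume) still contribute. The function names, the default γ = 0.9, and the 1/√d score scaling are assumptions for this sketch; FM-Net's actual unit may differ.

```python
import math


def manhattan_decay_mask(height, width, gamma=0.9):
    """D[n][m] = gamma ** (|x_n - x_m| + |y_n - y_m|) over a row-major H x W grid."""
    coords = [(r, c) for r in range(height) for c in range(width)]
    return [
        [gamma ** (abs(r1 - r2) + abs(c1 - c2)) for (r2, c2) in coords]
        for (r1, c1) in coords
    ]


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def manhattan_attention(q, k, v, height, width, gamma=0.9):
    """(softmax(Q K^T / sqrt(d)) * D) V for H*W tokens given as lists of vectors."""
    n = height * width
    if not (len(q) == len(k) == len(v) == n):
        raise ValueError("q, k, v must each hold height * width token vectors")
    scale = 1.0 / math.sqrt(len(q[0]))  # assumed dot-product scaling
    decay = manhattan_decay_mask(height, width, gamma)
    out = []
    for i in range(n):
        scores = [scale * sum(a * b for a, b in zip(q[i], k[j])) for j in range(n)]
        # Element-wise product with the decay row: distant pixels are damped, not cut off.
        weights = [w * decay[i][j] for j, w in enumerate(softmax(scores))]
        out.append([sum(weights[j] * v[j][c] for j in range(n)) for c in range(len(v[0]))])
    return out


if __name__ == "__main__":
    print(manhattan_decay_mask(2, 2, gamma=0.5)[0])  # -> [1.0, 0.5, 0.5, 0.25]
    zeros = [[0.0, 0.0]] * 4
    ones = [[1.0]] * 4
    print(manhattan_attention(zeros, zeros, ones, 2, 2, gamma=0.5))
    # -> [[0.5625], [0.5625], [0.5625], [0.5625]]
```

Because the decay multiplies the softmax weights, each row no longer sums to one. An alternative is to add (Manhattan distance × log γ) to the scores before the softmax, which applies the same relative damping but renormalizes each row.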

2. Related Work

2.1. Fire Detection

2.2. Attention Mechanisms and Manhattan Distance

3. Methodology

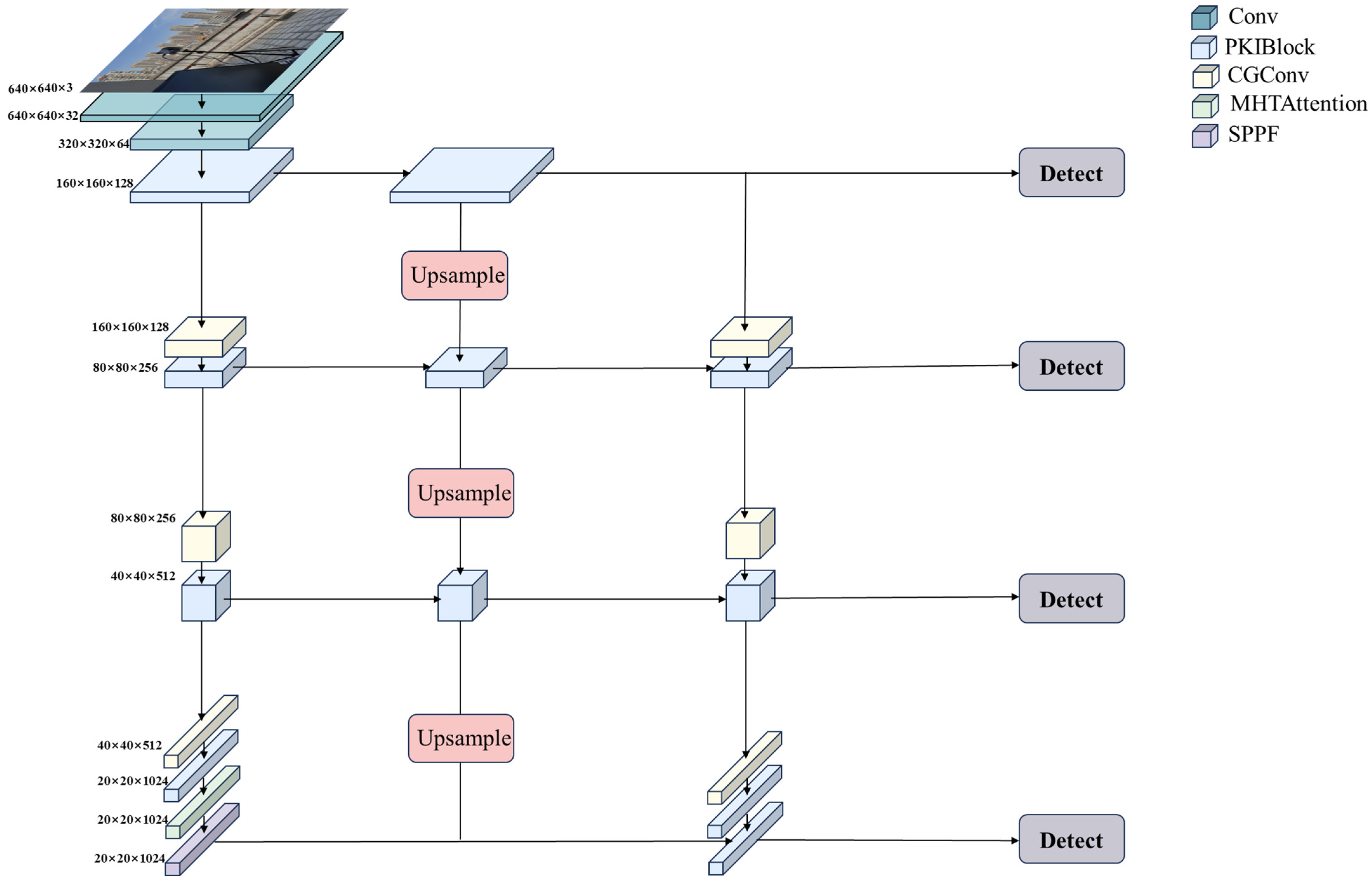

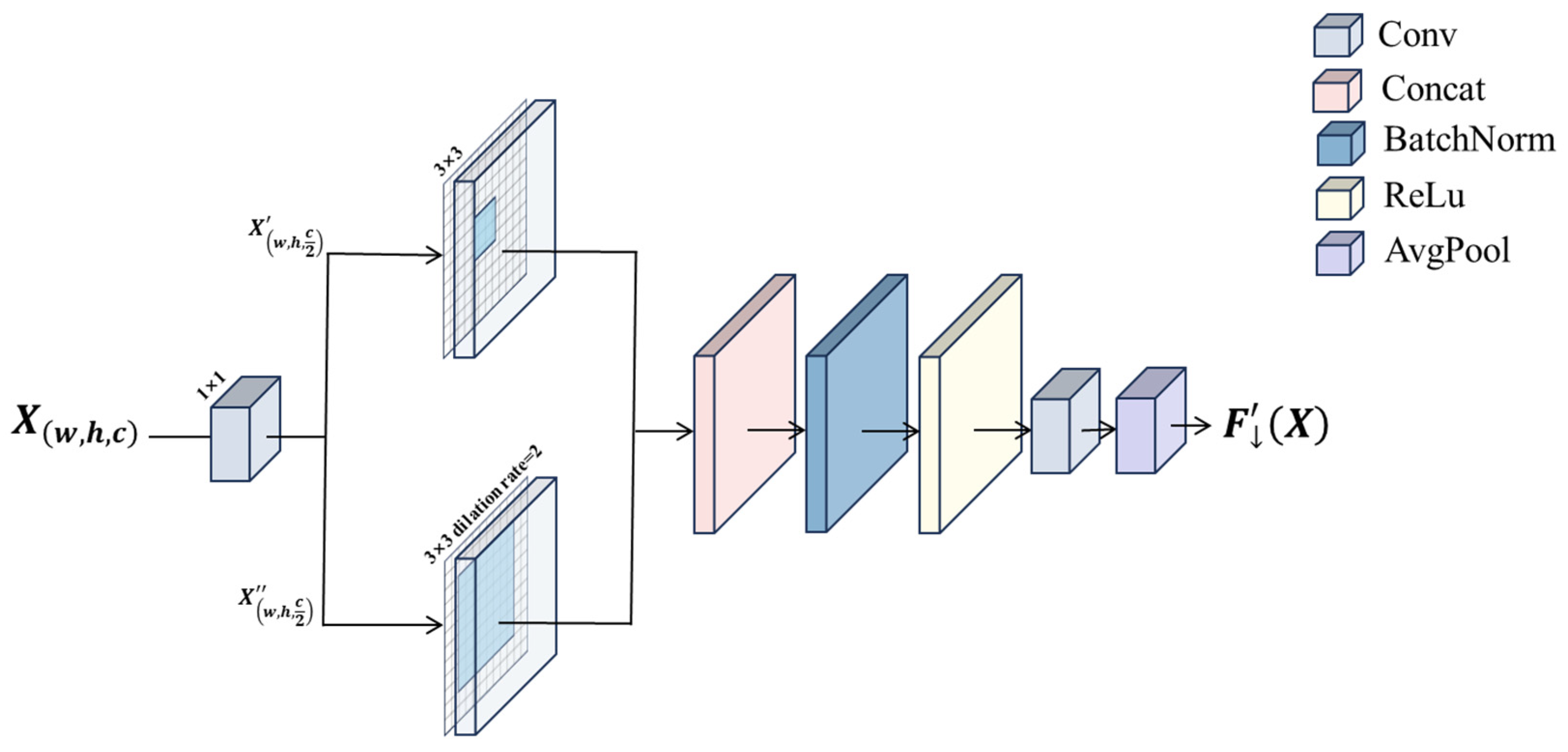

3.1. Context Guided Convolutional Block

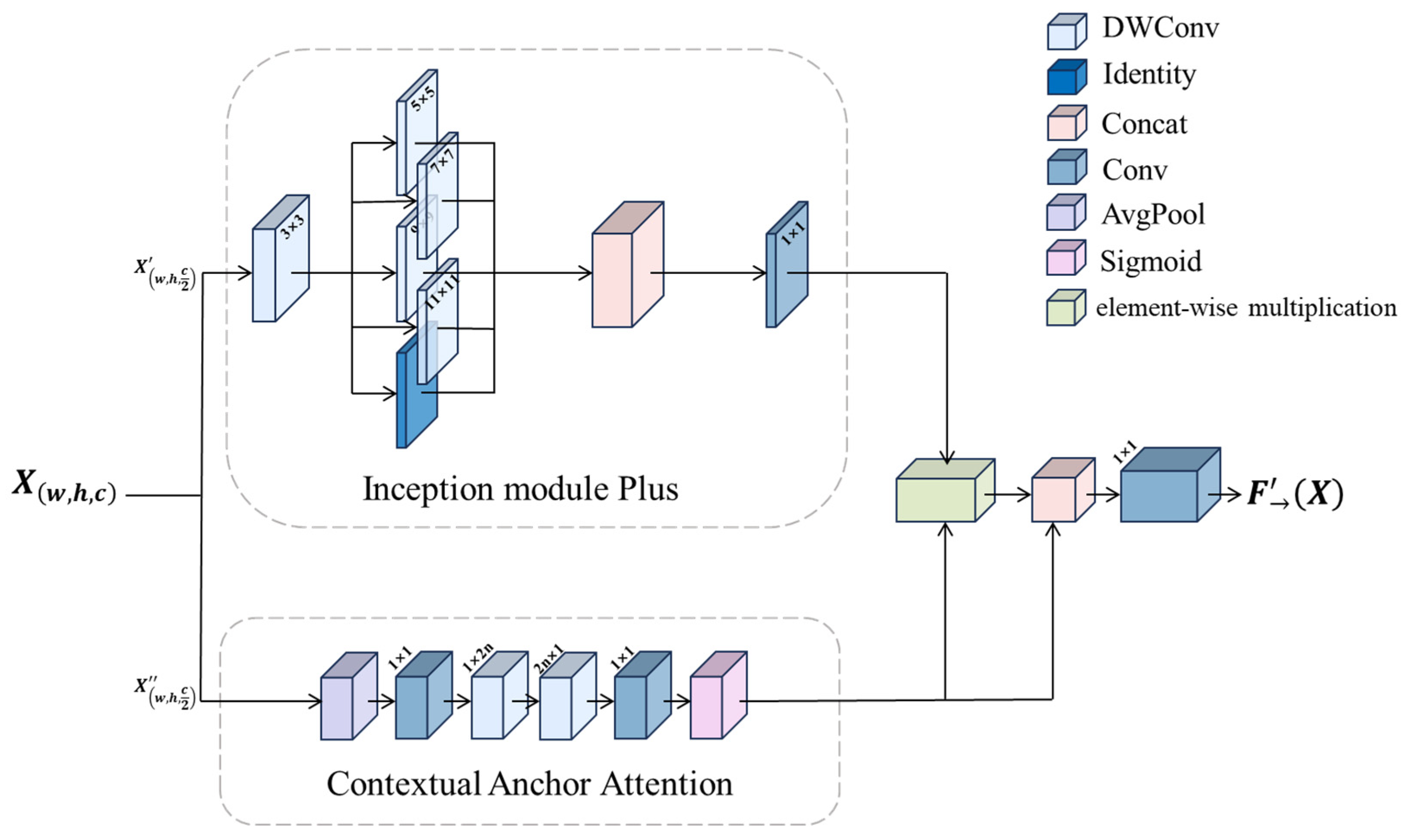

3.2. Poly Kernel Inception Block

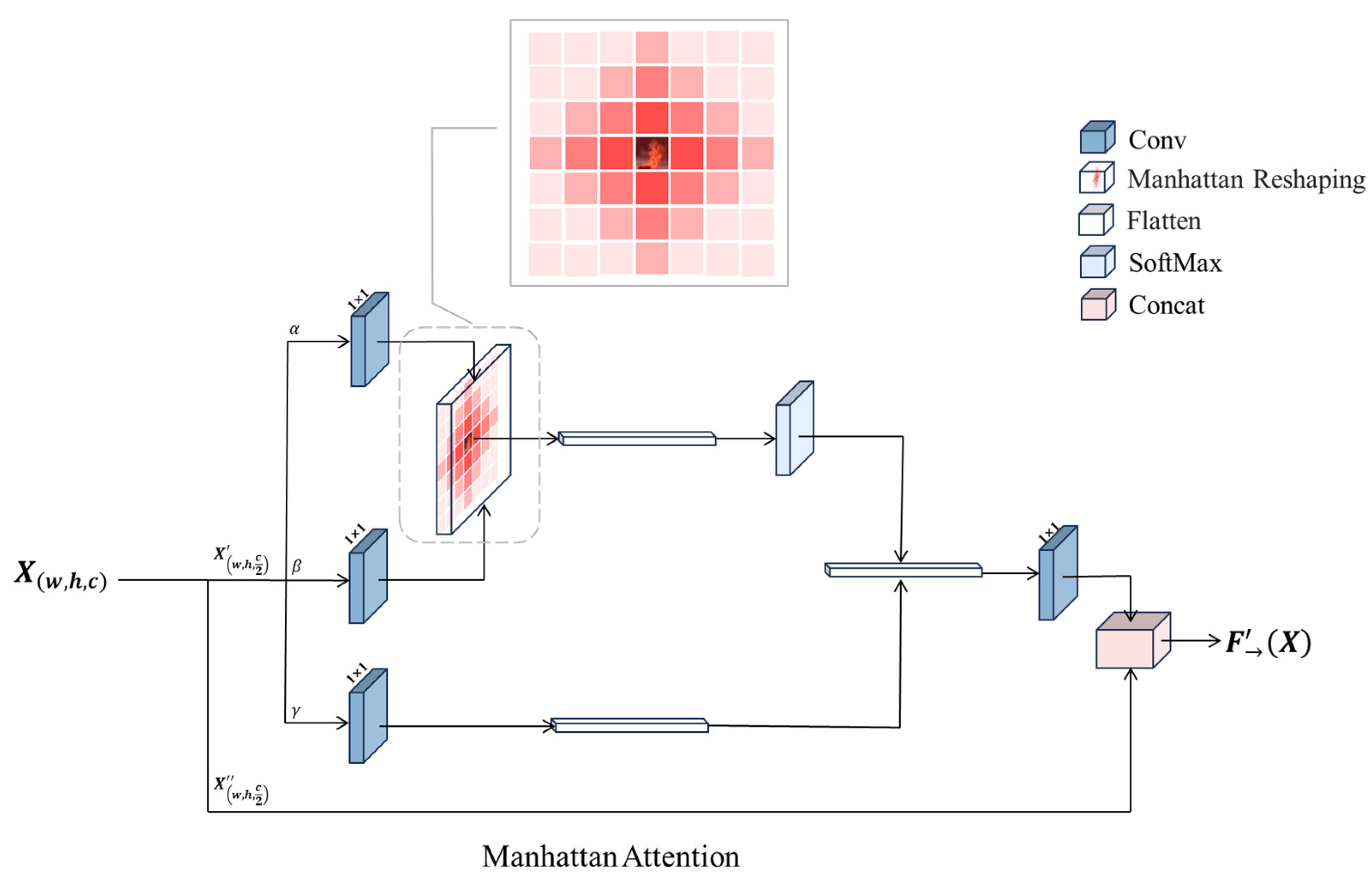

3.3. Manhattan Attention Mechanism

4. Experiment

4.1. Dataset

4.2. Training Environment

4.3. Model Evaluation Metrics

5. Experimental Analysis

5.1. Ablation Experiment

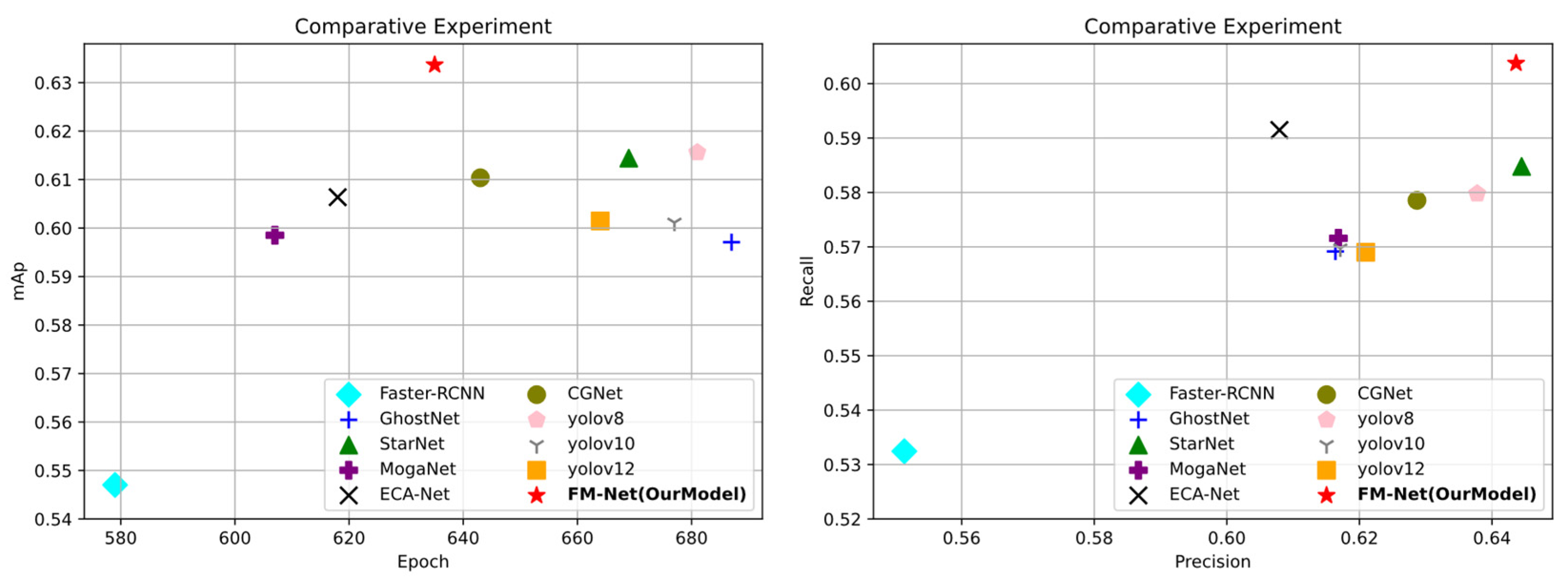

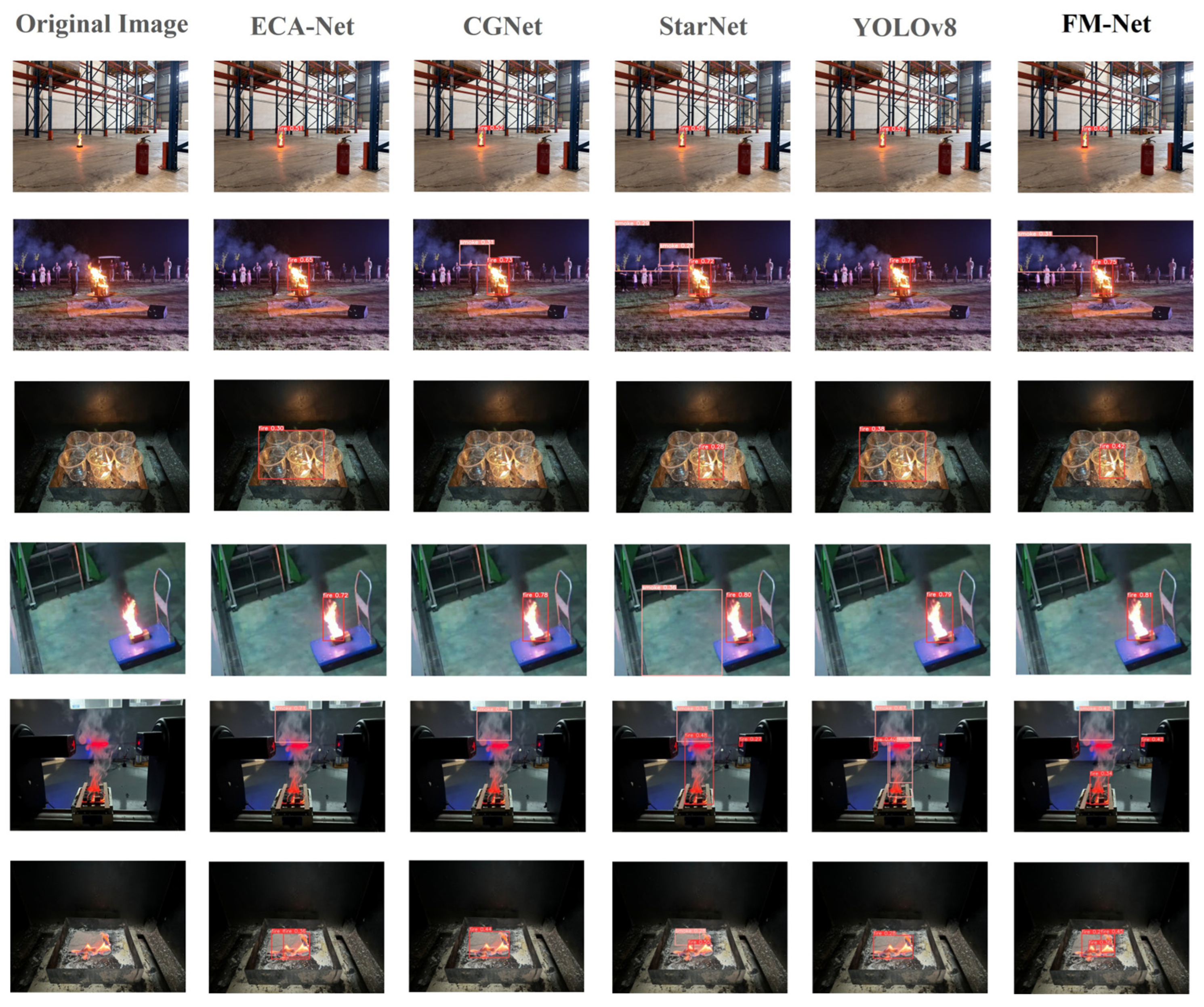

5.2. Comparison Experiment

5.3. Comparison on Fire-Flame-Dataset

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Correction Statement

References

- Li, H.; Xiong, S. Small-sample fire image recognition method based on fuzzy membership of color correlation. Fire Saf. Sci. 2024, 33, 83–94.

- Bai, H.; Niu, S.; Chen, F.; Liang, L. Forest fire prediction based on dataset reconstruction and Logistic regression. Fire Saf. Sci. 2024, 33, 61–67.

- Liang, X.; Wu, M.D.; Zhang, Z. Research on risk probability of urban explosion accidents based on Bayesian network. Fire Saf. Sci. 2023, 32, 116–127.

- Lu, K.; Huang, J.; Li, J.; Zhou, J.; Chen, X.; Liu, Y. MTL-FFDET: A multi-task learning-based model for forest fire detection. Forests 2022, 13, 1448.

- Zhang, W.; Wei, J.J. Improved YOLOv3 fire detection algorithm embedding DenseNet structure and dilated convolution module. J. Tianjin Univ. (Sci. Technol.) 2020, 53, 976–983.

- Dong, C.; Hao, Z.; Guowu, Y.; Lingyu, Y.; Qiuyan, C.; Ying, R.; Yi, M. Wildfire detection algorithm for transmission lines integrating multi-scale features and positional information. Comput. Sci. 2024, 51, 248–254.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6154–6162.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 386–397.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Ghiasi, G.; Cui, Y.; Srinivas, A.; Qian, R.; Lin, T.-Y.; Cubuk, E.D. Simple copy-paste is a strong data augmentation method for instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2918–2928.

- Talaat, F.M.; ZainEldin, H. An improved fire detection approach based on YOLO-v8 for smart cities. Neural Comput. Appl. 2023, 35, 20939–20954.

- Huang, J.; He, Z.; Guan, Y.; Zhang, H. Real-time forest fire detection by ensemble lightweight YOLOX-L and defogging method. Sensors 2023, 23, 1894.

- Zhang, T.; Dick, R.P. A Context-Oriented Multi-Scale Neural Network for Fire Segmentation. In Proceedings of the 2024 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 27–30 October 2024.

- Wang, X.; Li, S.; Yan, P.; Ma, X.; Ni, R.; Wu, Y. Fire Detection Algorithm Based on Improved YOLOv5s. In Proceedings of the 2024 9th International Conference on Intelligent Computing and Signal Processing (ICSP), Xi’an, China, 14–17 October 2024.

- Wang, K.; Zeng, Y.; Zhang, Z.; Tan, Y.; Wen, G. FCSNet: A Frequency-Domain Aware Cross-Feature Fusion Network for Smoke Segmentation. J. Electron. Inf. Technol. 2025, 47, 2320–2333.

- Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-unet: Unet-like pure transformer for medical image segmentation. In Proceedings of the 17th European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 205–218.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Vedaldi, A. Gather-excite: Exploiting feature context in convolutional neural networks. Adv. Neural Inf. Process. Syst. 2018, 31, 9423–9433.

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7794–7803.

- Fan, Q.; Huang, H.; Chen, M.; Liu, H.; He, R. RMT: Retentive Networks Meet Vision Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024.

- Wu, T.; Tang, S.; Zhang, R.; Zhang, Y. CGNet: A Light-weight Context Guided Network for Semantic Segmentation. arXiv 2018, arXiv:1811.08201.

- Cai, X.; Lai, Q.; Wang, Y.; Wang, W.; Sun, Z.; Yao, Y. Poly Kernel Inception Network for Remote Sensing Detection. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024.

- Ultralytics, YOLOv8. Available online: https://github.com/ultralytics/ultralytics (accessed on 11 November 2023).

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Chen, Z.; Xie, L.; Niu, J.; Liu, X.; Wei, L.; Tian, Q. GhostNetV2: Enhance Cheap Operation with Long-Range Attention. Adv. Neural Inf. Process. Syst. 2022, 35, 9969–9982.

- Li, S.; Wang, Z.; Liu, Z.; Tan, C.; Lin, H.; Wu, D.; Chen, Z.; Zheng, J.; Li, S.Z. MogaNet: Multi-order Gated Aggregation Network. arXiv 2024, arXiv:2211.03295.

- Ma, X.; Dai, X.; Bai, Y.; Wang, Y. Rewrite the Stars. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 5694–5703.

- Fire-Smoke-Dataset. Available online: https://github.com/DeepQuestAI/Fire-Smoke-Dataset (accessed on 22 July 2025).

| Image Category | Training Set | Validation Set | Total |
|---|---|---|---|
| Flame Only | 3000 | 500 | 3500 |
| Smoke Only | 3500 | 1125 | 4625 |
| Smoke and Flame | 2500 | 1000 | 3500 |
| Negative | 1000 | 0 | 1000 |
| Total | 10,000 | 2625 | 12,625 |
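The split in the dataset table can be summarized with a quick calculation: of the 12,625 images, about 79% are used for training and about 21% for validation, and all 1000 negative images sit in the training set. The category keys below are shorthand introduced for this sketch, not names used by the authors.

```python
# (training, validation) image counts per category, copied from the dataset table.
SPLITS = {
    "flame_only": (3000, 500),
    "smoke_only": (3500, 1125),
    "smoke_and_flame": (2500, 1000),
    "negative": (1000, 0),
}


def summarize(splits):
    """Return (train_total, val_total, grand_total, validation_fraction)."""
    train_total = sum(train for train, _ in splits.values())
    val_total = sum(val for _, val in splits.values())
    grand_total = train_total + val_total
    return train_total, val_total, grand_total, val_total / grand_total


train_total, val_total, grand_total, val_fraction = summarize(SPLITS)
print(train_total, val_total, grand_total, f"{val_fraction:.1%}")  # -> 10000 2625 12625 20.8%
```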

| No. | Components Enabled (Model A, B, C) | Precision | Recall | mAP50 | F1 | FLOPs |
|---|---|---|---|---|---|---|
| 1 | none (baseline) | 0.63776 | 0.57978 | 0.6156 | 0.607389478 | 8.9G |
| 2 | one (√) | 0.64286 | 0.57555 | 0.61678 | 0.607345759 | 8.2G |
| 3 | one (√) | 0.63924 | 0.58277 | 0.61759 | 0.609700239 | 8.4G |
| 4 | one (√) | 0.6444 | 0.57054 | 0.61442 | 0.60522491 | 8.3G |
| 5 | two (√ √) | 0.63602 | 0.58262 | 0.62456 | 0.608150024 | 8.5G |
| 6 | two (√ √) | 0.64078 | 0.57307 | 0.61441 | 0.605036528 | 8.6G |
| 7 | two (√ √) | 0.63936 | 0.58041 | 0.6245 | 0.608460509 | 8.6G |
| 8 | all three (√ √ √) | 0.64383 | 0.58996 | 0.62923 | 0.615718958 | 8.7G |
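The F1 values in the ablation and comparison tables are consistent with the usual harmonic mean of precision and recall, F1 = 2PR / (P + R). The short check below recomputes F1 from the Precision and Recall columns of ablation rows 1 and 8; it only verifies the table arithmetic and is not the authors' evaluation code.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from ablation rows 1 and 8.
rows = {
    "row 1 (baseline)": (0.63776, 0.57978, 0.607389478),
    "row 8 (all three)": (0.64383, 0.58996, 0.615718958),
}

for name, (p, r, reported) in rows.items():
    recomputed = f1_score(p, r)
    print(f"{name}: recomputed F1 = {recomputed:.6f}, reported = {reported:.6f}")
```

The harmonic mean penalizes imbalance: rows 2 and 4 have higher precision than the baseline but lower recall, so their F1 does not improve.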

| Model | Precision | Recall | mAP50 | F1 | FLOPs |
|---|---|---|---|---|---|
| Faster R-CNN | 0.55135 | 0.53244 | 0.54702 | 0.54173 | 34G |
| GhostNet | 0.61638 | 0.56916 | 0.59709 | 0.59183 | 5.8G |
| StarNet | 0.64447 | 0.58478 | 0.61442 | 0.613176 | 8.9G |
| MogaNet | 0.61683 | 0.57158 | 0.59852 | 0.593344 | 8.6G |
| ECA-Net | 0.60793 | 0.59147 | 0.60637 | 0.599587 | 15.6G |
| CGNet | 0.62868 | 0.57854 | 0.61037 | 0.602569 | 8.6G |
| YOLOv8 | 0.63776 | 0.57978 | 0.6156 | 0.607389 | 8.9G |
| YOLOv10 | 0.61712 | 0.56972 | 0.60113 | 0.592473 | 7.8G |
| YOLOv12 | 0.62096 | 0.56901 | 0.60147 | 0.593851 | 7.3G |
| FM-Net | 0.64363 | 0.60374 | 0.63367 | 0.623047 | 9.2G |

| Model | Dataset | Precision | Recall | mAP50 | F1 |
|---|---|---|---|---|---|
| StarNet | Fire-Flame-Dataset | 0.61888 | 0.58318 | 0.60976 | 0.600499873 |
| ECA-Net | Fire-Flame-Dataset | 0.57213 | 0.5729 | 0.58373 | 0.572514741 |
| CGNet | Fire-Flame-Dataset | 0.58702 | 0.57807 | 0.59294 | 0.582510624 |
| YOLOv12 | Fire-Flame-Dataset | 0.59062 | 0.57458 | 0.59002 | 0.582489598 |
| FM-Net | Fire-Flame-Dataset | 0.63723 | 0.57911 | 0.61546 | 0.606781435 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, J.; Yao, Y.; Huo, Y.; Guan, J. FM-Net: A New Method for Detecting Smoke and Flames. Sensors 2025, 25, 5597. https://doi.org/10.3390/s25175597

Wang J, Yao Y, Huo Y, Guan J. FM-Net: A New Method for Detecting Smoke and Flames. Sensors. 2025; 25(17):5597. https://doi.org/10.3390/s25175597

Chicago/Turabian Style: Wang, Jingwu, Yuan Yao, Yinuo Huo, and Jinfu Guan. 2025. "FM-Net: A New Method for Detecting Smoke and Flames" Sensors 25, no. 17: 5597. https://doi.org/10.3390/s25175597

APA Style: Wang, J., Yao, Y., Huo, Y., & Guan, J. (2025). FM-Net: A New Method for Detecting Smoke and Flames. Sensors, 25(17), 5597. https://doi.org/10.3390/s25175597