SegGen: An Unreal Engine 5 Pipeline for Generating Multimodal Semantic Segmentation Datasets

Abstract

1. Introduction

- A modular UE5 pipeline for procedural scene generation and automated dataset capture, with pixel-perfect ground-truth labels.

- A proof-of-concept multimodal dataset of a heterogeneous forest (1169 samples, 1920 × 1080 resolution).

2. Related Work

2.1. Synthetic Data for Computer Vision

2.2. Procedural Content Generation and Multimodal Approaches

2.3. Domain Adaptation and Beyond Autonomous Driving

2.4. Integration and Gaps in Current Research

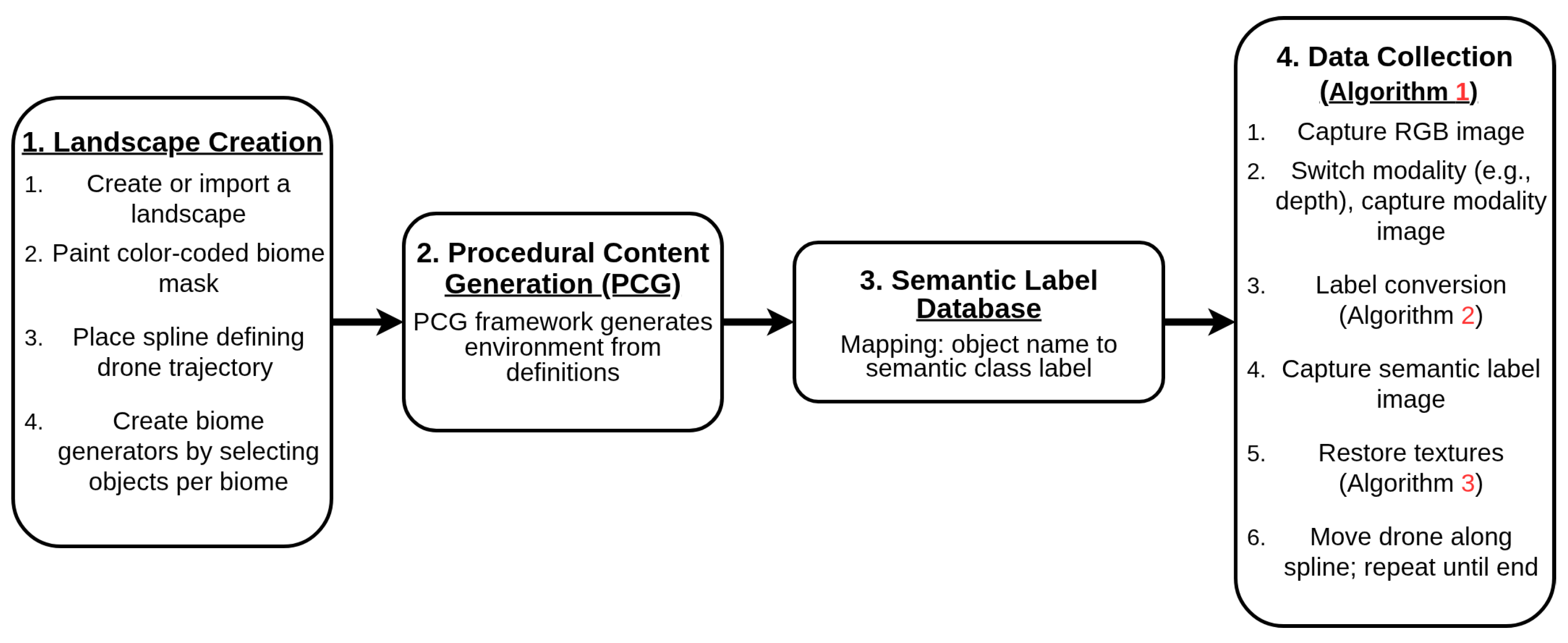

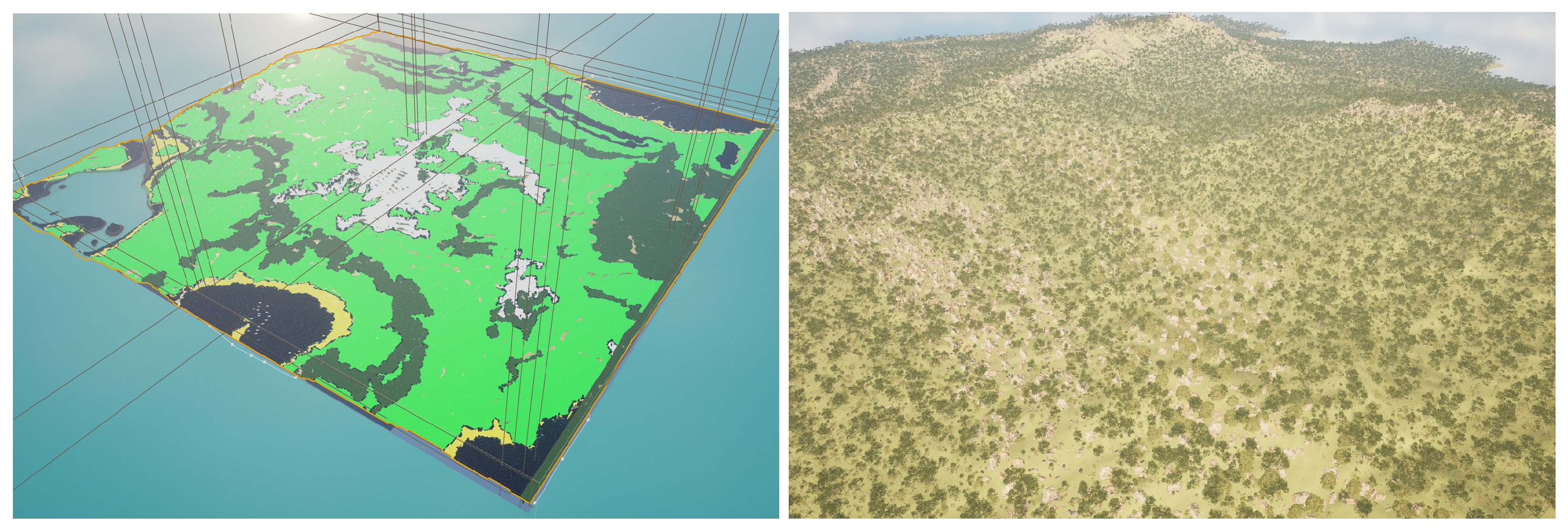

3. Methodology

| Algorithm 1 Spline-Based Multimodal Data Collection |
|---|
| 1: Initialize Drone Actor D at the start of spline S |
| 2: Set camera parameters (focal length f, aperture a) |
| 3: while Drone D has not reached the end of spline S do |
| 4: for each modality m do |
| 5: if m is RGB then |
| 6: Capture RGB image using virtual camera |
| 7: Store RGB image in RGB folder |
| 8: else |
| 9: Activate Modality Switcher for modality m |
| 10: Capture modality image (e.g., depth map) |
| 11: Store image in corresponding modality folder |
| 12: end if |
| 13: end for |
| 14: Activate Semantic Label View |
| 15: Capture and store semantic label image in labels folder |
| 16: Move Drone D to next predetermined sampling location along spline S |
| 17: end while |
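The control flow of Algorithm 1 can be illustrated with a short Python sketch. It is not the UE5 implementation: `CaptureLog`, `collect`, the folder names, and the file-naming pattern are hypothetical stand-ins, and the sketch only records which files would be written at each sampling location instead of rendering images.

```python
from dataclasses import dataclass, field


@dataclass
class CaptureLog:
    """Hypothetical stand-in for image output: records the paths that would be written."""
    files: list = field(default_factory=list)

    def store(self, folder: str, index: int) -> None:
        self.files.append(f"{folder}/{index:05d}.png")


def collect(num_locations: int, modalities: list, log: CaptureLog) -> None:
    """Visit each sampling location, capture every modality, then the label image."""
    for index in range(num_locations):      # one iteration per spline sample
        for m in modalities:
            # RGB would use the default camera view; any other modality would
            # first activate the modality switcher (not modeled in this sketch).
            log.store(m, index)
        log.store("labels", index)          # semantic label view is captured last


log = CaptureLog()
collect(num_locations=2, modalities=["rgb", "depth"], log=log)
print(log.files)
```

Because every modality and the label are captured at the same location before the drone moves, files that share an index belong to one sample.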

3.1. User-Configurable Object–Class Mapping

- Database

  - Populated by the user prior to runtime.

  - Each key is a name fragment (e.g., "alder" to refer to all types of alder trees), and each value is the corresponding semantic label material.

- Texture Store

  - Filled during label conversion to remember each object’s pre-label material.

  - Consumed during restoration to reapply the original materials.

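- In Algorithm 2 (Label Conversion):

  - For each nearby object O, if the name of O contains a key in the database, then the current material of O is saved in the texture store T and O is assigned the label material mapped to that key.

- In Algorithm 3 (Texture Restoration):

  - For each modified object O, retrieve its original material from T and set it as the material of O again.

  - Clear T to prepare for the next capture cycle.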

| Algorithm 2 Semantic Label Conversion Logic |

|

| Algorithm 3 Texture Restoration Procedure |

|
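The interplay between the database and the texture store can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the pipeline's UE5 code: scene objects are modeled as a plain dictionary from object name to material name, the function names and all object and material names are hypothetical, and the case-insensitive substring match is our assumption.

```python
def convert_to_labels(objects, database, texture_store):
    """Swap the material of every matching object for its label material.

    objects:       dict of object name -> current material (stand-in for scene objects)
    database:      dict of name fragment -> semantic label material
    texture_store: dict filled here with each object's pre-label material
    """
    for name, material in list(objects.items()):
        for fragment, label_material in database.items():
            if fragment in name.lower():          # assumed: case-insensitive match
                texture_store[name] = material    # remember the original material
                objects[name] = label_material
                break                             # first matching key wins


def restore_textures(objects, texture_store):
    """Reapply the stored materials and empty the store for the next capture cycle."""
    for name, material in texture_store.items():
        objects[name] = material
    texture_store.clear()


scene = {"SM_Alder_01": "M_AlderBark", "SM_Rock_03": "M_Granite", "SM_Fern_02": "M_Fern"}
database = {"alder": "L_Alder", "rock": "L_Rocks"}
store = {}

convert_to_labels(scene, database, store)
labeled = dict(scene)            # snapshot of the label view
restore_textures(scene, store)
print(labeled, scene, store)
```

Objects whose names match no key (the fern in this example) keep their original material in the label view, so a real setup needs a key for every object that should carry a class.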

3.2. Drone Platform Spline Actor

- RGB Capture: The drone first captures an RGB image using the onboard virtual camera configured with specific aperture and focal length settings (user-defined specifications).

- Modality Switching and Capture: After the RGB image is stored, the drone activates the modality switcher, capturing images from additional sensor modalities (e.g., depth). Each modality is activated sequentially, and its respective image is captured and stored.

- Semantic Label Capture: Finally, the drone captures a semantic segmentation label image.
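The predetermined sampling locations at which this capture sequence runs can be thought of as points spaced evenly along the drone path. The sketch below is an assumption-laden illustration: it approximates the spline by a polyline of control points and walks it by arc length. The function name `sample_polyline` and the spacing value are hypothetical, and a UE5 spline component would evaluate the true curve instead.

```python
import math


def sample_polyline(points, spacing):
    """Return points every `spacing` units of arc length along a polyline."""
    samples = [tuple(points[0])]
    carried = 0.0                      # distance travelled since the last sample
    for a, b in zip(points, points[1:]):
        seg = math.dist(a, b)
        d = spacing - carried          # offset of the next sample within this segment
        while d <= seg:
            t = d / seg
            samples.append(tuple(pa + t * (pb - pa) for pa, pb in zip(a, b)))
            d += spacing
        carried = seg - (d - spacing)  # leftover distance after the last sample
    return samples


path = [(0.0, 0.0), (3.0, 0.0), (3.0, 4.0)]   # hypothetical L-shaped path
locations = sample_polyline(path, spacing=2.0)
print(locations)
```

Carrying the leftover distance across segment boundaries keeps the spacing uniform around corners, so consecutive frames stay equally far apart along the path.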

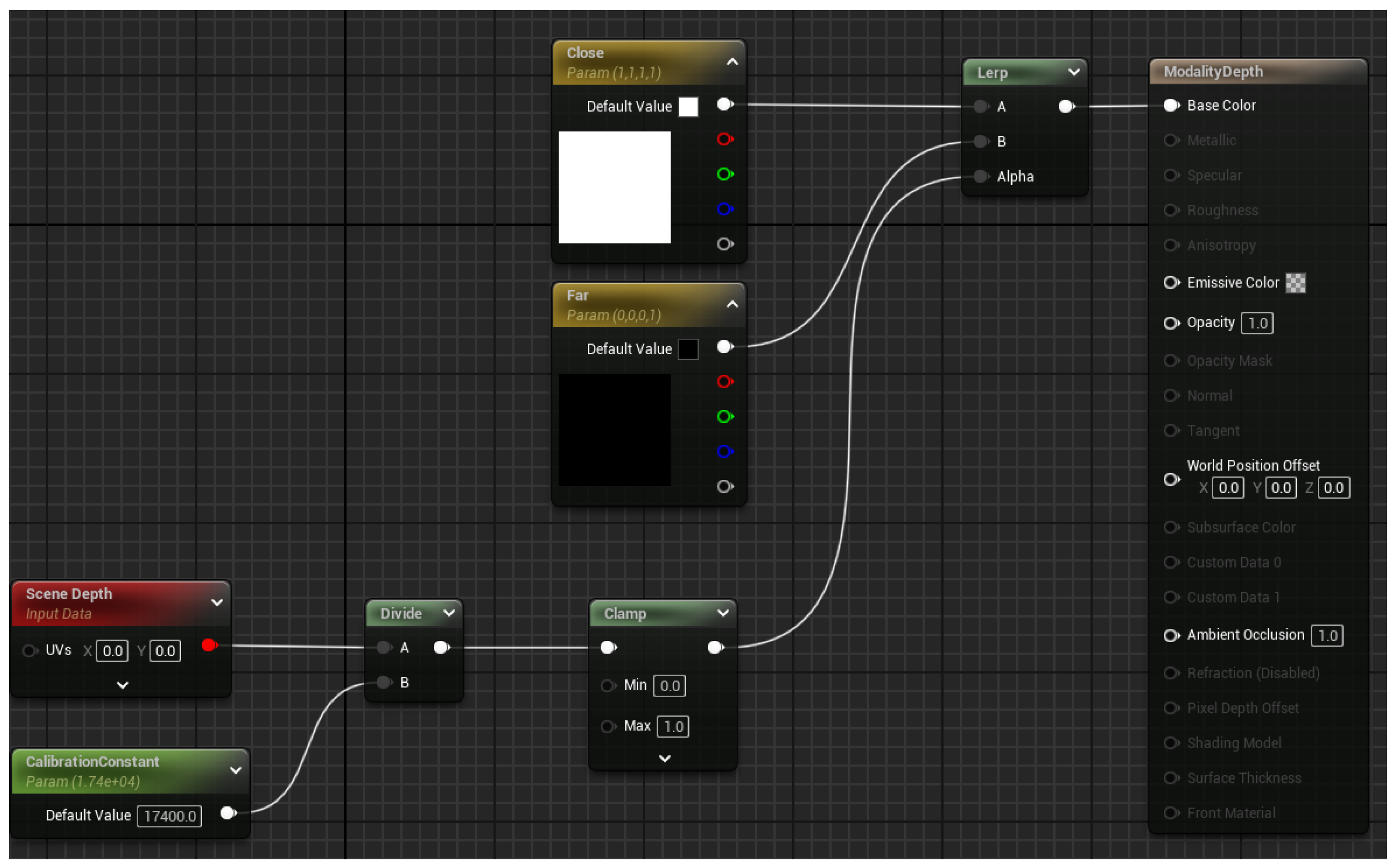

3.3. Modality Switcher

3.4. Label Conversion Logic

- Spatial Filtering: First, the system identifies all landscape streaming actors within a 100 m horizontal radius of the drone’s current position, excluding distant actors to improve computational efficiency.

- Material Replacement: For each identified actor, we iterate through contained objects. Objects whose names contain specific user-defined substrings are recognized and assigned corresponding semantic label materials.

- Texture Restoration: Original textures are stored temporarily, enabling reversion to the original textures after the semantic label image has been captured.
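The spatial filtering step amounts to a distance test that ignores altitude. A minimal Python sketch, assuming actor positions are given as (x, y, z) tuples in metres and using hypothetical actor names, is shown below. Unreal Engine world units are centimetres by default, so an in-engine version would scale the radius accordingly.

```python
import math


def within_horizontal_radius(drone_xy, actors, radius_m=100.0):
    """Return the names of actors whose horizontal distance to the drone is at most radius_m.

    actors: dict of actor name -> (x, y, z) position; z is deliberately ignored.
    """
    dx, dy = drone_xy
    return [
        name
        for name, (x, y, _z) in actors.items()
        if math.hypot(x - dx, y - dy) <= radius_m
    ]


actors = {
    "tile_near": (30.0, 40.0, 500.0),   # 50 m away horizontally, far above the drone
    "tile_far": (80.0, 80.0, 0.0),      # about 113 m away horizontally
}
nearby = within_horizontal_radius((0.0, 0.0), actors)
print(nearby)
```

Only actors that pass this test need to be searched for matching objects, which is where the efficiency gain comes from.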

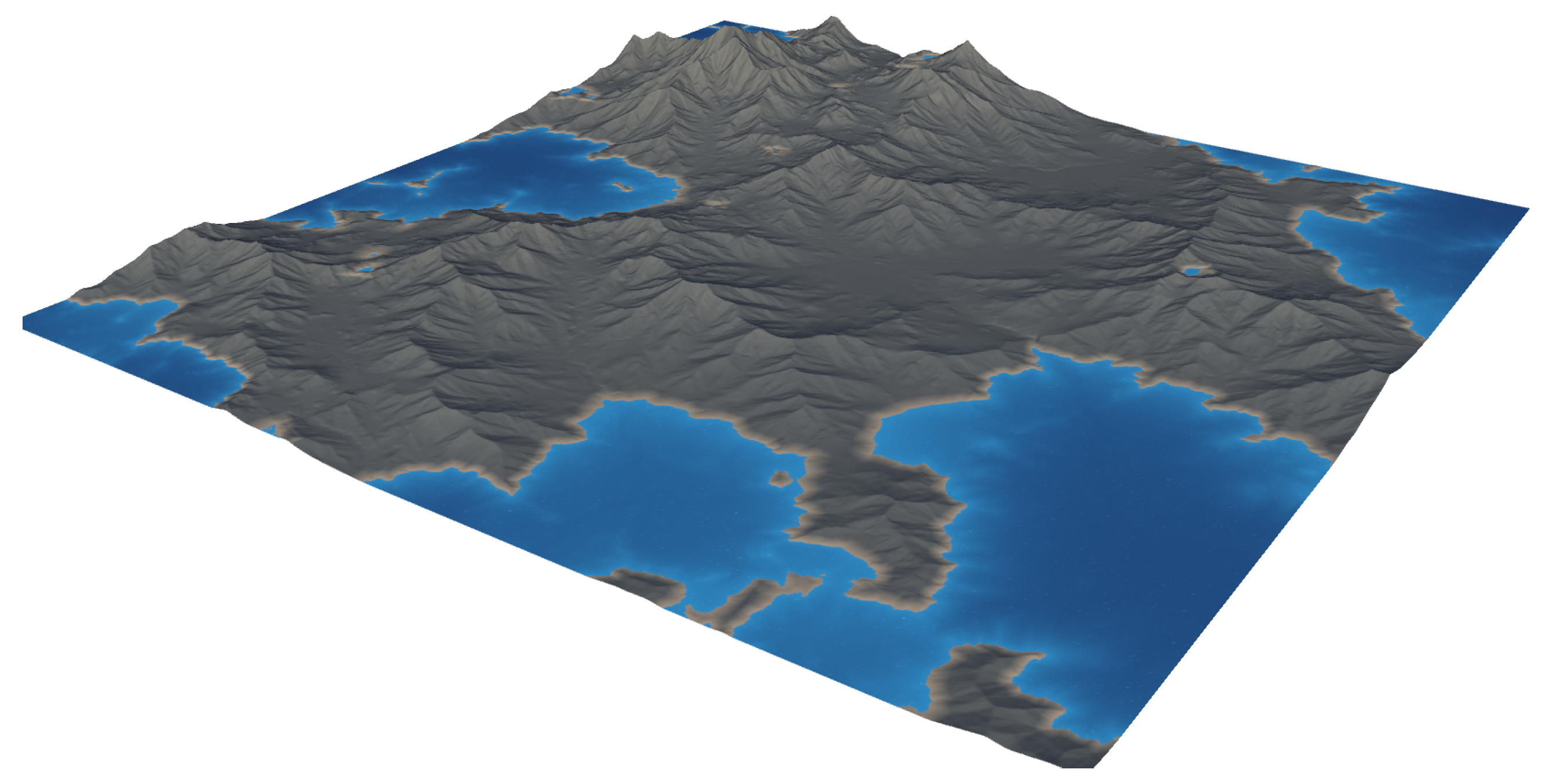

3.5. Creating New Datasets

- Step 1: Environment Setup

- Step 2: Semantic Class Mapping

- Step 3: Drone Trajectory Planning (Optional)

- Step 4: Automated Data Collection (if using a drone)

- Step 5: Data Post-processing (Optional)

3.6. Adding New Data Collection Modalities

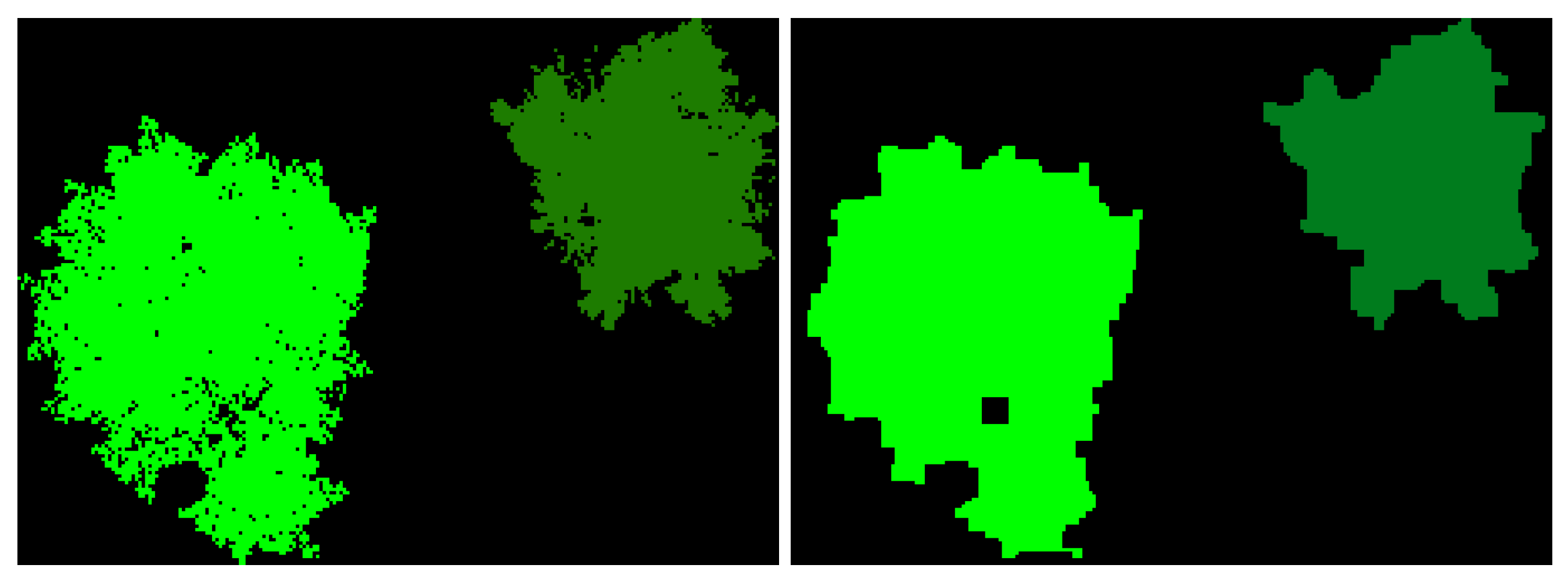

4. Use Case: Fine-Grained Landscape Classification

4.1. Dataset

- Random horizontal flip (),

- Random horizontal rotation ,

- Random scale and crop to with scale factor ,

- Gaussian blur with .
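One practical point about the geometric augmentations is that the same transform has to be applied to the RGB image, the depth map, and the label so that pixels stay aligned. The sketch below illustrates this for the horizontal flip only. Images are modeled as nested lists, the function name is hypothetical, and the flip probability `p` is a free parameter here, not the value used in our experiments.

```python
import random


def paired_horizontal_flip(rgb, depth, label, p, rng):
    """Flip all three arrays together with probability p, or return them unchanged."""
    if rng.random() < p:
        def flip(image):
            return [row[::-1] for row in image]
        return flip(rgb), flip(depth), flip(label)
    return rgb, depth, label


rng = random.Random(0)
rgb = [[10, 20, 30]]
depth = [[1.0, 2.0, 3.0]]
label = [[0, 0, 1]]

flipped = paired_horizontal_flip(rgb, depth, label, p=1.0, rng=rng)
unchanged = paired_horizontal_flip(rgb, depth, label, p=0.0, rng=rng)
print(flipped, unchanged)
```

Photometric operations such as Gaussian blur would, by contrast, typically be applied to the input images only and never to the label.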

4.2. Model Selection

4.3. Training Protocol

- Input size: during training; at inference we use a resolution of .

- Optimizer: AdamW ().

- Epochs: 100 epochs for UNet, SegFormer, and FuseForm, 300 epochs for CMNeXt.

- Learning rate: with a 10-epoch linear warm-up (10% of base LR), followed by polynomial decay ().

- Batch size: 2 image pairs.

- Loss: pixel-wise cross-entropy.
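The shape of the learning-rate schedule can be sketched as a function of the epoch. This is an illustration under assumptions: we read "10% of base LR" as the starting value of the warm-up, and `base_lr` and `power` are placeholder arguments, not the values used in training.

```python
def learning_rate(epoch, base_lr, total_epochs, warmup_epochs=10,
                  warmup_ratio=0.1, power=0.9):
    """Linear warm-up from warmup_ratio * base_lr, then polynomial decay to zero."""
    if epoch < warmup_epochs:
        frac = epoch / warmup_epochs
        return base_lr * (warmup_ratio + (1.0 - warmup_ratio) * frac)
    progress = (epoch - warmup_epochs) / (total_epochs - warmup_epochs)
    return base_lr * (1.0 - progress) ** power


# Placeholder base_lr of 1.0 so the printed values read as fractions of the base rate.
schedule = [learning_rate(e, base_lr=1.0, total_epochs=100) for e in range(101)]
print(schedule[0], schedule[5], schedule[10], schedule[100])
```

With these placeholder settings the rate rises linearly over the first 10 epochs, peaks at the base value at epoch 10, and decays monotonically to zero at the final epoch.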

- Intersection-over-Union (IoU).

- Per-Class Accuracy (CA).

- Overall Accuracy (OA).
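All three metrics can be derived from a single confusion matrix. The sketch below uses the usual definitions, which we assume here: IoU is TP / (TP + FP + FN) per class, class accuracy is TP / (TP + FN) per class, and overall accuracy is the fraction of all pixels that are correctly classified. The two-class matrix is a made-up example, not data from our experiments.

```python
def segmentation_metrics(conf):
    """Compute per-class IoU, per-class accuracy, and overall accuracy.

    conf[i][j] is the number of pixels with true class i predicted as class j.
    """
    n = len(conf)
    total = sum(sum(row) for row in conf)
    iou, class_acc = [], []
    for c in range(n):
        tp = conf[c][c]
        fn = sum(conf[c]) - tp
        fp = sum(conf[r][c] for r in range(n)) - tp
        iou.append(tp / (tp + fp + fn) if tp + fp + fn else float("nan"))
        class_acc.append(tp / (tp + fn) if tp + fn else float("nan"))
    overall_acc = sum(conf[c][c] for c in range(n)) / total
    return iou, class_acc, overall_acc


conf = [[8, 2],
        [1, 9]]
iou, class_acc, overall_acc = segmentation_metrics(conf)
miou = sum(iou) / len(iou)
print(iou, class_acc, overall_acc, miou)
```

Because OA weights every pixel equally, it is dominated by frequent classes, whereas mIoU gives each class the same weight regardless of its pixel share.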

5. Results

5.1. Analysis

5.2. Multimodal Performance Considerations and Limitations of Depth Fusion

On the Possibility of Sensor Misalignment

5.3. Mitigation Strategies

- Late or selective fusion: Introduce depth at coarse resolutions or modulate its contribution via learned attention, rather than concatenating raw features early.

- Modality-aware loss weighting: Downscale depth loss contributions relative to RGB for classes known to be depth-insensitive.

- Ablation sanity check: Train a depth-only model; if mIoU approaches random or background-only accuracy, it confirms that depth is non-discriminative for this task.

- Optical alignment test: Overlay label boundaries on both RGB and depth to visually inspect subpixel displacement. If present, consider re-rendering with animation disabled.
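The optical alignment test can be complemented by a simple numeric check. The sketch below is one possible way to do this, not part of the pipeline: it marks label boundaries and depth discontinuities and reports the fraction of label-boundary pixels that also lie on a depth edge. The function names, the toy arrays, and the `depth_jump` threshold are hypothetical, and the score is only informative where class boundaries actually coincide with depth discontinuities.

```python
def edge_mask(image, differs):
    """Mark pixels that differ from their right or lower neighbour."""
    height, width = len(image), len(image[0])
    mask = [[False] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if x + 1 < width and differs(image[y][x], image[y][x + 1]):
                mask[y][x] = mask[y][x + 1] = True
            if y + 1 < height and differs(image[y][x], image[y + 1][x]):
                mask[y][x] = mask[y + 1][x] = True
    return mask


def boundary_agreement(label, depth, depth_jump):
    """Fraction of label-boundary pixels that are also depth-edge pixels."""
    label_edges = edge_mask(label, lambda a, b: a != b)
    depth_edges = edge_mask(depth, lambda a, b: abs(a - b) > depth_jump)
    on_boundary = [(y, x) for y, row in enumerate(label_edges)
                   for x, is_edge in enumerate(row) if is_edge]
    if not on_boundary:
        return float("nan")
    hits = sum(depth_edges[y][x] for y, x in on_boundary)
    return hits / len(on_boundary)


label = [[0, 0, 1, 1], [0, 0, 1, 1]]
aligned_depth = [[5.0, 5.0, 9.0, 9.0], [5.0, 5.0, 9.0, 9.0]]
shifted_depth = [[5.0, 5.0, 5.0, 9.0], [5.0, 5.0, 5.0, 9.0]]   # displaced by one pixel

aligned_score = boundary_agreement(label, aligned_depth, depth_jump=1.0)
shifted_score = boundary_agreement(label, shifted_depth, depth_jump=1.0)
print(aligned_score, shifted_score)
```

A score that drops noticeably for some frames but not others would point to frame-dependent displacement, such as animated foliage moving between captures.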

5.4. Synthetic-to-Real

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| UE5 | Unreal Engine 5 |

| RGB | Optical Image data |

| ML | Machine Learning |

| LiDAR | Light Detection and Ranging |

| PCG | Procedural Content Generation |

| MiT | Mix-Transformer |

| IoU | Intersection-over-Union |

| OA | Overall Accuracy |

| CA | Class Accuracy |

References

1. Csurka, G.; Volpi, R.; Chidlovskii, B. Semantic Image Segmentation: Two Decades of Research. arXiv 2023, arXiv:2302.06378.

2. Cakir, S.; Gauß, M.; Häppeler, K.; Ounajjar, Y.; Heinle, F.; Marchthaler, R. Semantic Segmentation for Autonomous Driving: Model Evaluation, Dataset Generation, Perspective Comparison, and Real-Time Capability. arXiv 2022, arXiv:2207.12939.

3. Johnson, B.A.; Ma, L. Image Segmentation and Object-Based Image Analysis for Environmental Monitoring: Recent Areas of Interest, Researchers’ Views on the Future Priorities. Remote Sens. 2020, 12, 1772.

4. Rizzoli, G.; Barbato, F.; Zanuttigh, P. Multimodal Semantic Segmentation in Autonomous Driving: A Review of Current Approaches and Future Perspectives. Technologies 2022, 10, 90.

5. Li, J.; Dai, H.; Han, H.; Ding, Y. MSeg3D: Multi-modal 3D Semantic Segmentation for Autonomous Driving. arXiv 2023, arXiv:2303.08600.

6. Song, Z.; He, Z.; Li, X.; Ma, Q.; Ming, R.; Mao, Z.; Pei, H.; Peng, L.; Hu, J.; Yao, D.; et al. Synthetic datasets for autonomous driving: A survey. IEEE Trans. Intell. Veh. 2023, 9, 1847–1864.

7. Liang, Y.; Wakaki, R.; Nobuhara, S.; Nishino, K. Multimodal Material Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 19800–19808.

8. Zhang, J.; Liu, R.; Shi, H.; Yang, K.; Reiß, S.; Peng, K.; Fu, H.; Wang, K.; Stiefelhagen, R. Delivering Arbitrary-Modal Semantic Segmentation. In Proceedings of the CVPR, Vancouver, BC, Canada, 18–22 June 2023.

9. Lu, Y.; Huang, Y.; Sun, S.; Zhang, T.; Zhang, X.; Fei, S.; Chen, V. M2fNet: Multi-Modal Forest Monitoring Network on Large-Scale Virtual Dataset. In Proceedings of the 2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Orlando, FL, USA, 16–21 March 2024; pp. 539–543.

10. Ros, G.; Sellart, L.; Materzynska, J.; Vazquez, D.; Lopez, A.M. The SYNTHIA Dataset: A Large Collection of Synthetic Images for Semantic Segmentation of Urban Scenes. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3234–3243.

11. Ramesh, A.; Correas-Serrano, A.; González-Huici, M.A. SCaRL—A Synthetic Multi-Modal Dataset for Autonomous Driving. In Proceedings of the ICMIM 2024; 7th IEEE MTT Conference, Boppard, Germany, 16–17 April 2024; pp. 103–106.

12. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv 2015, arXiv:1505.04597.

13. Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers. arXiv 2021, arXiv:2105.15203.

14. McMillen, J.; Yilmaz, Y. FuseForm: Multimodal Transformer for Semantic Segmentation. In Proceedings of the Winter Conference on Applications of Computer Vision (WACV) Workshops, Tucson, AZ, USA, 28 February–4 March 2025; pp. 618–627.

15. Richter, S.R.; Vineet, V.; Roth, S.; Koltun, V. Playing for Data: Ground Truth from Computer Games. arXiv 2016, arXiv:1608.02192.

16. Gaidon, A.; Wang, Q.; Cabon, Y.; Vig, E. Virtual Worlds as Proxy for Multi-Object Tracking Analysis. arXiv 2016, arXiv:1605.06457.

17. Cabon, Y.; Murray, N.; Humenberger, M. Virtual KITTI 2. arXiv 2020, arXiv:2001.10773.

18. Clabaut, E.; Foucher, S.; Bouroubi, Y.; Germain, M. Synthetic Data for Sentinel-2 Semantic Segmentation. Remote Sens. 2024, 16, 818.

19. Borkman, S.; Crespi, A.; Dhakad, S.; Ganguly, S.; Hogins, J.; Jhang, Y.; Kamalzadeh, M.; Li, B.; Leal, S.; Parisi, P.; et al. Unity Perception: Generate Synthetic Data for Computer Vision. arXiv 2021, arXiv:2107.04259.

20. Song, J.; Chen, H.; Xuan, W.; Xia, J.; Yokoya, N. SynRS3D: A Synthetic Dataset for Global 3D Semantic Understanding from Monocular Remote Sensing Imagery. In Proceedings of the Thirty-Eighth Conference on Neural Information Processing Systems Datasets and Benchmarks Track, Vancouver, BC, Canada, 10–15 December 2024.

21. Nikolenko, S.I. Synthetic Data for Deep Learning; Springer: Berlin/Heidelberg, Germany, 2021; Volume 174.

22. Richter, S.R.; AlHaija, H.A.; Koltun, V. Enhancing Photorealism Enhancement. arXiv 2021, arXiv:2105.04619.

23. Hendrikx, M.; Meijer, S.; Van Der Velden, J.; Iosup, A. Procedural content generation for games: A survey. ACM Trans. Multimed. Comput. Commun. Appl. 2013, 9, 1–22.

24. Picard, C.; Schiffmann, J.; Ahmed, F. Dated: Guidelines for creating synthetic datasets for engineering design applications. In Proceedings of the International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Boston, MA, USA, 20–23 August 2023; American Society of Mechanical Engineers: New York, NY, USA, 2023; Volume 87301, p. V03AT03A015.

25. Nunes, R.; Ferreira, J.; Peixoto, P. Procedural Generation of Synthetic Forest Environments to Train Machine Learning Algorithms. 2022. Available online: https://irep.ntu.ac.uk/id/eprint/46417/ (accessed on 2 September 2025).

26. Lagos, J.; Lempiö, U.; Rahtu, E. FinnWoodlands Dataset. In Image Analysis; Springer Nature: Cham, Switzerland, 2023; pp. 95–110.

27. Schmitt, M.; Hughes, L.H.; Qiu, C.; Zhu, X.X. SEN12MS—A Curated Dataset of Georeferenced Multi-Spectral Sentinel-1/2 Imagery for Deep Learning and Data Fusion. arXiv 2019, arXiv:1906.07789.

28. Gong, L.; Zhang, Y.; Xia, Y.; Zhang, Y.; Ji, J. SDAC: A Multimodal Synthetic Dataset for Anomaly and Corner Case Detection in Autonomous Driving. Proc. AAAI Conf. Artif. Intell. 2024, 38, 1914–1922.

29. Yang, Y.; Patel, A.; Deitke, M.; Gupta, T.; Weihs, L. Scaling text-rich image understanding via code-guided synthetic multimodal data generation. arXiv 2025, arXiv:2502.14846.

30. Wang, H.; Hu, C.; Zhang, R.; Qian, W. SegForest: A Segmentation Model for Remote Sensing Images. Forests 2023, 14, 1509.

31. Capua, F.R.; Schandin, J.; Cristóforis, P.D. Training point-based deep learning networks for forest segmentation with synthetic data. arXiv 2024, arXiv:2403.14115.

32. Wang, J.; Zheng, Z.; Ma, A.; Lu, X.; Zhong, Y. LoveDA: A Remote Sensing Land-Cover Dataset for Domain Adaptive Semantic Segmentation. In Proceedings of the Neural Information Processing Systems Track on Datasets and Benchmarks, Virtual, 6–12 December 2021; Volume 1.

33. Mullick, K.; Jain, H.; Gupta, S.; Kale, A.A. Domain Adaptation of Synthetic Driving Datasets for Real-World Autonomous Driving. arXiv 2023, arXiv:2302.04149.

34. Feng, Z.; She, Y.; Keshav, S. SPREAD: A large-scale, high-fidelity synthetic dataset for multiple forest vision tasks. Ecol. Inform. 2025, 87, 103085.

| Dataset/Work | Domain | Modalities | Key Contribution |

|---|---|---|---|

| Playing for Data [15] | Gaming/Urban | RGB | Demonstrated video games as a source of semantic labels |

| Virtual KITTI 1/2 [16,17] | Driving | RGB, Depth, Flow | Synthetic driving benchmarks with pixel-level annotations |

| SYNTHIA [10] | Driving | RGB, Depth | Large-scale synthetic driving dataset |

| Sentinel-2 (Clabaut) [18] | Remote sensing | Multispectral | Synthetic Sentinel-2 segmentation |

| Unity Perception [19] | General | RGB, Depth, 3D | Toolkit for generating synthetic datasets with perfect labels |

| Dataset / Work | Domain | Modalities | Key Contribution |

|---|---|---|---|

| Nunes et al. [25] | Forestry | RGB, Depth | PCG-based synthetic forest environments |

| FinnWoodlands [26] | Forestry | RGB, Depth, LiDAR | Multimodal forestry dataset |

| M2fNet [9] | Forestry | RGB, Depth | Multimodal forestry dataset |

| Sen12MS [27] | Remote sensing | Multispectral | Land cover mapping dataset |

| SCaRL [11] | Driving | RGB, LiDAR, Radar | Game engine-based multimodal data |

| SDAC [28] | Driving | RGB, LiDAR, Radar | Anomaly/corner case detection |

| Dataset | Domain | Modalities | Automation | Focus |

|---|---|---|---|---|

| SPREAD | Forestry | RGB, Depth, Tree Params | Semi-automated | Tree segmentation and parameter estimation |

| SegGen (ours) | Remote sensing (forests, urban, land cover, etc.) | RGB, Depth, (extensible) | Fully automated | General multimodal semantic segmentation across diverse environments |

| Class | Pixels (%) | Std. Across Images (%) |

|---|---|---|

| Ground | 39.63 | 9.35 |

| European Beech | 31.50 | 17.22 |

| Rocks | 14.07 | 20.73 |

| Norway Maple | 9.40 | 6.53 |

| Dead Wood | 0.35 | 0.28 |

| Black Alder | 5.05 | 3.14 |

| Method | Modals | mIoU | Ground | Beech | Rocks | Maple | Dead | Alder |

|---|---|---|---|---|---|---|---|---|

| UNet | RGB | 84.87 | 88.88 | 91.88 | 88.49 | 82.21 | 64.08 | 79.66 |

| SegFormer | RGB | 85.54 | 90.67 | 93.33 | 90.03 | 85.59 | 69.95 | 83.70 |

| CMNeXt | RGB | 82.60 | 88.90 | 91.85 | 88.81 | 82.22 | 64.17 | 79.65 |

| FuseForm | RGB | 85.83 | 90.71 | 93.37 | 90.14 | 85.50 | 71.64 | 83.62 |

| UNet | RGB–Depth | 81.99 | 88.75 | 91.86 | 88.28 | 81.95 | 62.22 | 78.89 |

| SegFormer | RGB–Depth | 83.87 | 89.77 | 92.92 | 88.97 | 84.30 | 65.68 | 81.61 |

| CMNeXt | RGB–Depth | 82.15 | 88.76 | 91.87 | 88.49 | 82.08 | 62.33 | 79.38 |

| FuseForm | RGB–Depth | 85.03 | 90.43 | 93.30 | 89.67 | 85.20 | 68.67 | 82.90 |

| Method | Modals | OA | Ground | Beech | Rocks | Maple | Dead | Alder |

|---|---|---|---|---|---|---|---|---|

| UNet | RGB | 93.92 | 93.40 | 96.18 | 96.41 | 89.18 | 72.70 | 87.78 |

| SegFormer | RGB | 95.00 | 93.99 | 96.89 | 97.41 | 93.32 | 80.40 | 90.84 |

| CMNeXt | RGB | 90.39 | 92.99 | 96.37 | 96.47 | 89.25 | 77.86 | 89.38 |

| FuseForm | RGB | 95.02 | 94.25 | 96.84 | 97.01 | 91.76 | 82.04 | 91.49 |

| UNet | RGB–Depth | 93.83 | 92.94 | 96.54 | 96.59 | 88.68 | 70.69 | 87.95 |

| SegFormer | RGB–Depth | 94.51 | 93.13 | 97.08 | 97.04 | 91.38 | 78.46 | 89.52 |

| CMNeXt | RGB–Depth | 89.78 | 93.01 | 96.15 | 96.39 | 89.56 | 74.52 | 89.02 |

| FuseForm | RGB–Depth | 94.88 | 94.08 | 97.17 | 96.81 | 91.35 | 77.62 | 89.48 |

| Method | mIoU (RGB) | mIoU (RGB–D) | OA (RGB) | OA (RGB–D) |
|---|---|---|---|---|
| UNet | 84.87 | 81.99 | 93.92 | 93.83 |
| SegFormer | 85.54 | 83.87 | 95.00 | 94.51 |
| CMNeXt | 82.60 | 82.15 | 90.39 | 89.78 |
| FuseForm | 85.83 | 85.03 | 95.02 | 94.88 |
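The effect of adding depth can be read off the summary table as a per-model difference (RGB–D minus RGB). The short Python snippet below only performs this subtraction on the reported values; the variable names are ours.

```python
# (mIoU RGB, mIoU RGB-D, OA RGB, OA RGB-D), copied from the summary table
results = {
    "UNet": (84.87, 81.99, 93.92, 93.83),
    "SegFormer": (85.54, 83.87, 95.00, 94.51),
    "CMNeXt": (82.60, 82.15, 90.39, 89.78),
    "FuseForm": (85.83, 85.03, 95.02, 94.88),
}

deltas = {}
for method, (miou_rgb, miou_rgbd, oa_rgb, oa_rgbd) in results.items():
    # Negative values mean the RGB-D variant scored lower than RGB-only.
    deltas[method] = (round(miou_rgbd - miou_rgb, 2), round(oa_rgbd - oa_rgb, 2))
    print(f"{method:10s} mIoU change: {deltas[method][0]:+.2f}  OA change: {deltas[method][1]:+.2f}")
```

Every difference is negative: mIoU drops by between 0.45 (CMNeXt) and 2.88 (UNet) points, while OA changes by at most 0.61 points.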

| Failure Mode | Explanation and Typical Symptom |

|---|---|

| Semantic non-discriminativeness | Depth values overlap heavily across species; the optimiser down-weights RGB texture to minimise joint loss, eroding inter-species mIoU while OA (dominated by Ground) remains stable. |

| Gradient conflict | Texture-driven RGB gradients seek to separate foliage types; depth gradients encourage grouping all canopy pixels together. Without a conflict-resolution mechanism (e.g., PCGrad) the network converges to a compromise that is sub-optimal for both. |

| Capacity dilution | Early-fusion layers must now encode an additional, largely redundant channel, reducing effective capacity for fine texture cues—especially harmful to the under-represented Dead and Alder classes. |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


McMillen, J.; Yilmaz, Y. SegGen: An Unreal Engine 5 Pipeline for Generating Multimodal Semantic Segmentation Datasets. Sensors 2025, 25, 5569. https://doi.org/10.3390/s25175569
