Chu-Style Lacquerware Dataset: A Dataset for Digital Preservation and Inheritance of Chu-Style Lacquerware

Abstract

1. Introduction

- Dataset construction: For the first time, this study establishes a high-quality image dataset of Chu-style lacquerware, providing a solid data foundation for AI models to learn Chu-style lacquerware features and to understand its cultural significance.

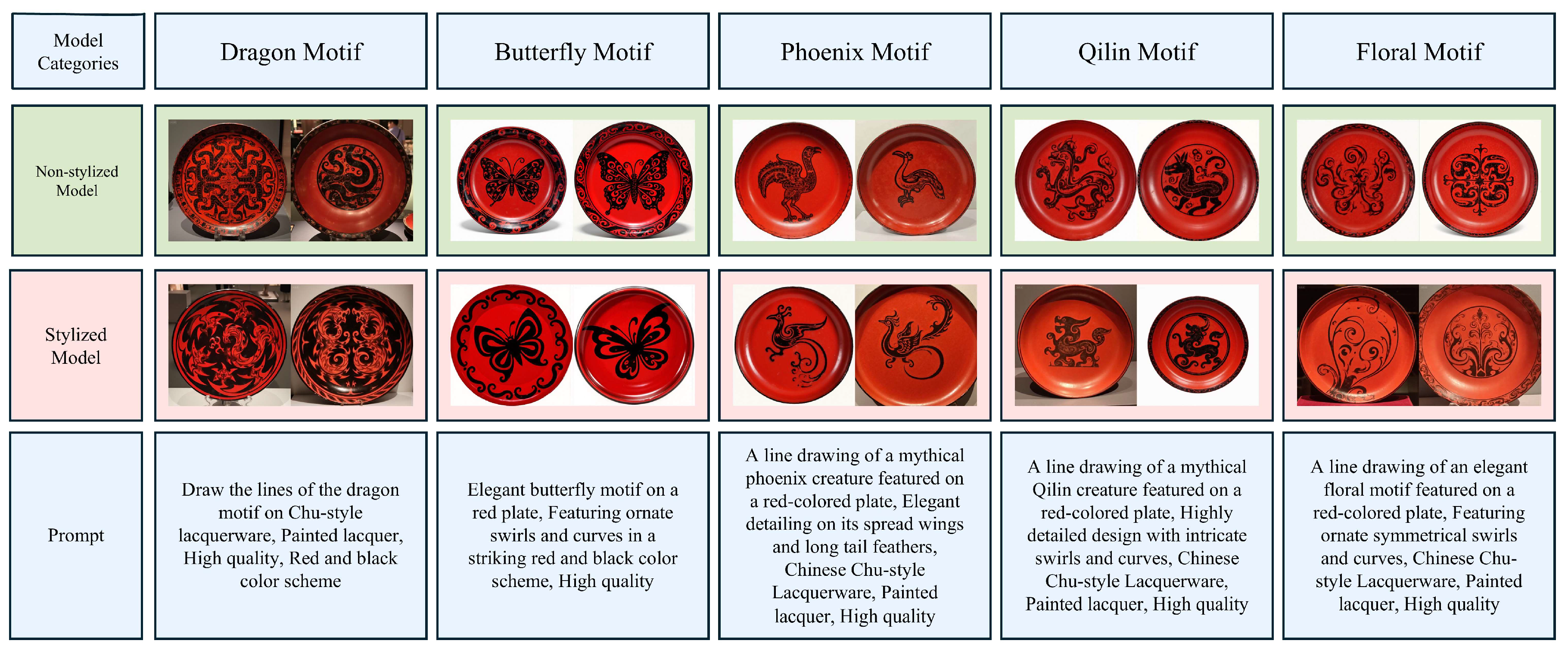

- Model optimization: Based on this dataset, three core models and five style models were successfully trained, enhancing both the accuracy and artistic expressiveness of Chu-style lacquerware image generation.

- Interdisciplinary value: This research provides a reusable technical pathway for the digital preservation and inheritance of intangible cultural heritage, and introduces new avenues for the public dissemination and educational promotion of Chu-style lacquerware culture.

2. Related Work

2.1. Protection Pathways for Intangible Cultural Heritage Handicrafts

2.2. Current Lacquerware Research

2.3. Research on Cultural Relics Datasets

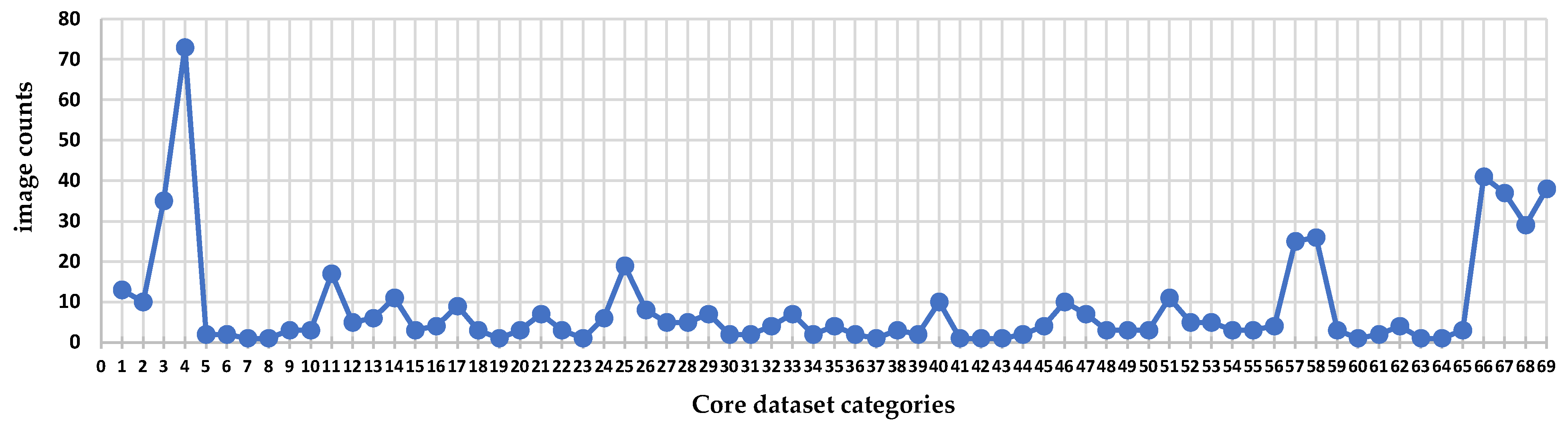

3. Dataset Description

3.1. Dataset Introduction

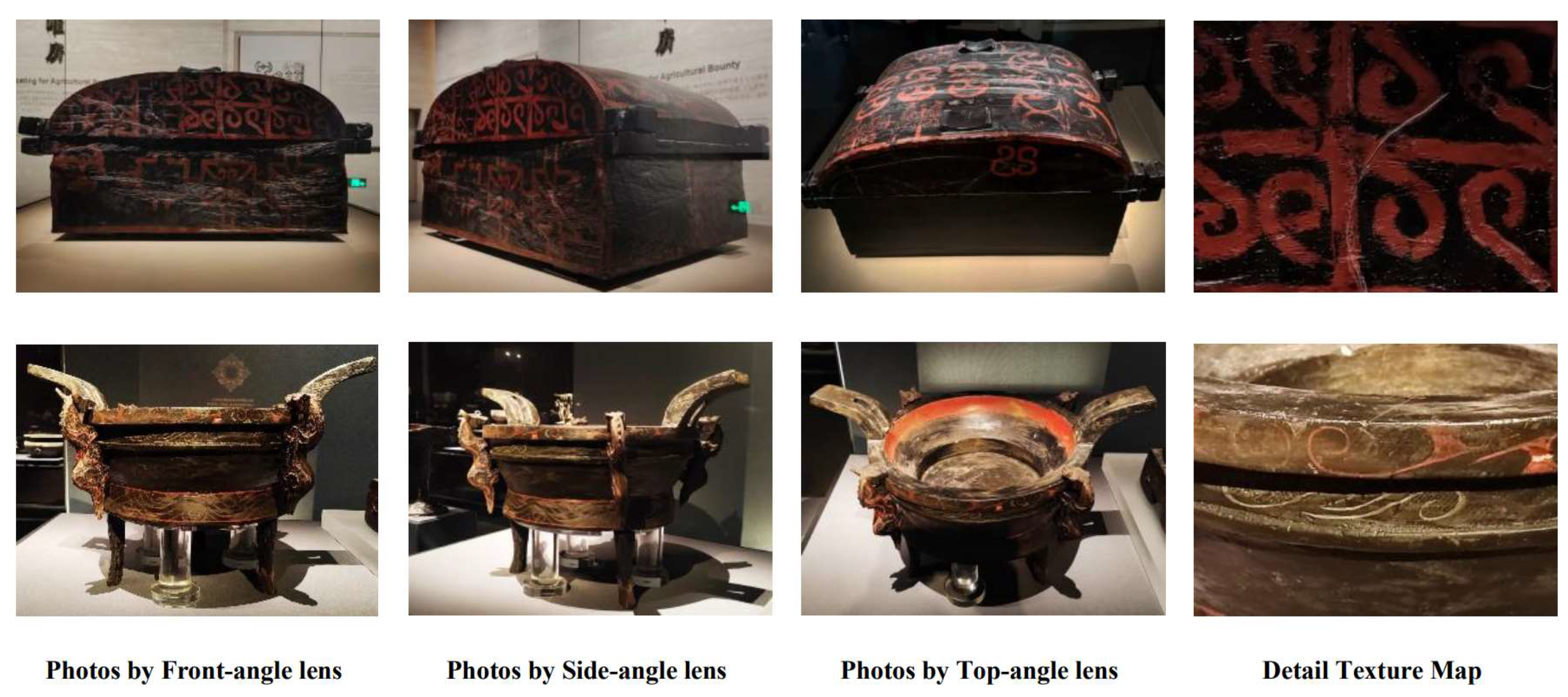

3.2. Data Collection

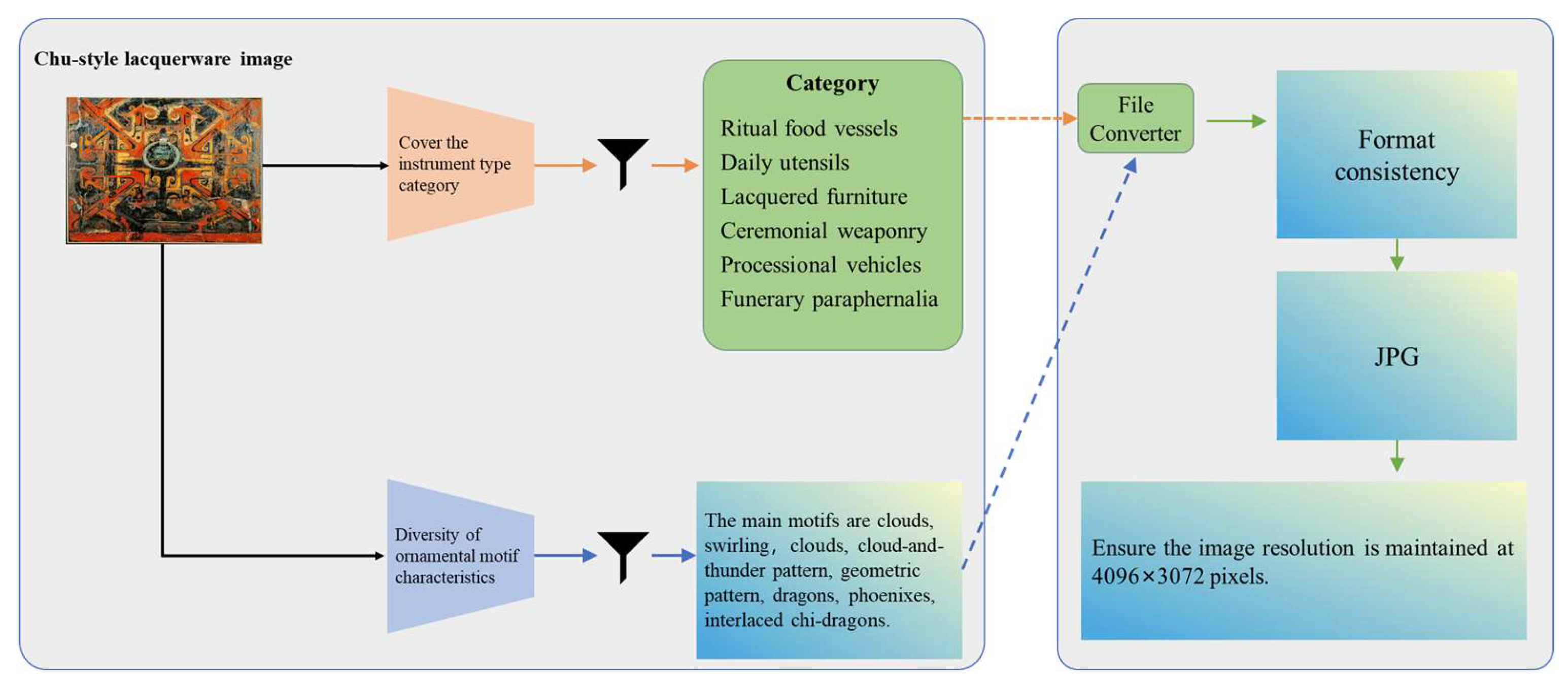

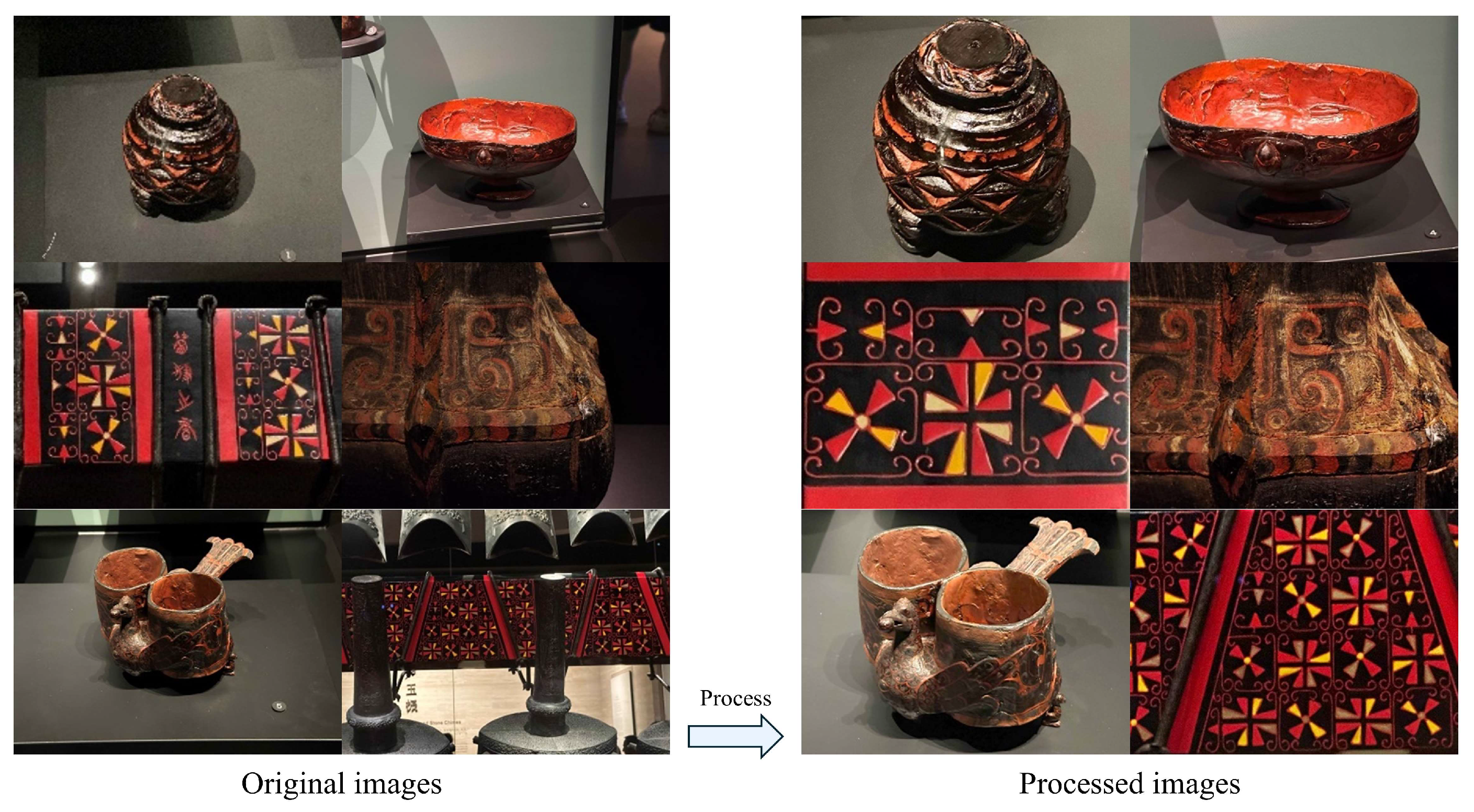

3.3. Core Dataset Processing Workflow for Chu-Style Lacquerware

- Initial screening: Remove low-quality images and apply strict selection criteria for both artifact forms and decorative patterns.

- Standardization: Normalize the format and resolution of the images.

- Editing and correction: Import the screened images into Adobe Photoshop 2024 for cropping, geometric rotation, and corrections of color and lighting.

- Image refinement: Perform image redrawing to enhance overall quality and enrich pattern details.

- Annotation: Apply a combined annotation approach using automatic labeling and manual verification to ensure the quality of textual annotations. The data annotation process is illustrated in Figure 4.
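The screening and standardization steps above can be sketched as a small planning routine. This is a minimal illustration, not the authors' pipeline: the minimum-size threshold `MIN_SHORT_SIDE` and the centered-crop strategy are assumptions introduced here, and only the 4096 × 3072 target comes from the dataset table. The helper names are hypothetical.

```python
from typing import Optional, Tuple

TARGET_W, TARGET_H = 4096, 3072  # core-dataset resolution from the dataset table
MIN_SHORT_SIDE = 1024            # hypothetical screening threshold, not from the paper


def passes_screening(width: int, height: int) -> bool:
    """Initial screening: reject images whose shorter side is too small."""
    return min(width, height) >= MIN_SHORT_SIDE


def center_crop_box(width: int, height: int) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of the largest centered 4:3 crop."""
    # Compare aspect ratios by integer cross-multiplication to avoid float error.
    if width * TARGET_H > height * TARGET_W:
        # Image is wider than 4:3, so trim the sides.
        new_w = height * TARGET_W // TARGET_H
        left = (width - new_w) // 2
        return (left, 0, left + new_w, height)
    # Image is exactly 4:3 or taller, so trim top and bottom.
    new_h = width * TARGET_H // TARGET_W
    top = (height - new_h) // 2
    return (0, top, width, top + new_h)


def plan_standardization(width: int, height: int) -> Optional[dict]:
    """Return crop box and scale factor for one image, or None if screened out."""
    if not passes_screening(width, height):
        return None
    left, top, right, bottom = center_crop_box(width, height)
    return {"crop": (left, top, right, bottom), "scale": TARGET_W / (right - left)}
```

The plan only describes geometry; the actual pixel operations (cropping, resampling, color correction) would still be carried out in an image editor or imaging library.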

3.3.1. Data Screening

3.3.2. Image Standardization

- The 4096 × 3072 resolution preserves the carved details and painted strokes of Chu-style lacquerware patterns.

- It conforms to the 4:3 aspect ratio required by high-resolution display devices.

- It maintains image quality while avoiding the unnecessary storage burden of excessively high resolutions.
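The aspect-ratio and storage points can be checked with simple arithmetic. The sketch below uses the 4096 × 3072 core-dataset resolution from the dataset table and assumes 8-bit RGB (three bytes per pixel), which the source does not state. It reports uncompressed sizes only, so the stored .jpg files would be considerably smaller.

```python
from math import gcd

WIDTH, HEIGHT = 4096, 3072  # core-dataset resolution from the dataset table
CHANNELS = 3                # assumption: 8-bit RGB, one byte per channel


def aspect_ratio(width: int, height: int) -> tuple:
    """Reduce width:height to lowest terms."""
    g = gcd(width, height)
    return (width // g, height // g)


def raw_size_mib(width: int, height: int, channels: int = CHANNELS) -> float:
    """Uncompressed size of one image in MiB."""
    return width * height * channels / (1024 ** 2)


print(aspect_ratio(WIDTH, HEIGHT))           # (4, 3)
print(raw_size_mib(WIDTH, HEIGHT))           # 36.0
print(raw_size_mib(2 * WIDTH, 2 * HEIGHT))   # 144.0
```

Doubling each side quadruples the uncompressed footprint (36 MiB to 144 MiB per image), which illustrates the storage trade-off described in the last bullet.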

3.3.3. Image Editing and Adjustment in Adobe Photoshop

3.3.4. Image Inpainting

3.3.5. Image Annotation

4. Methods

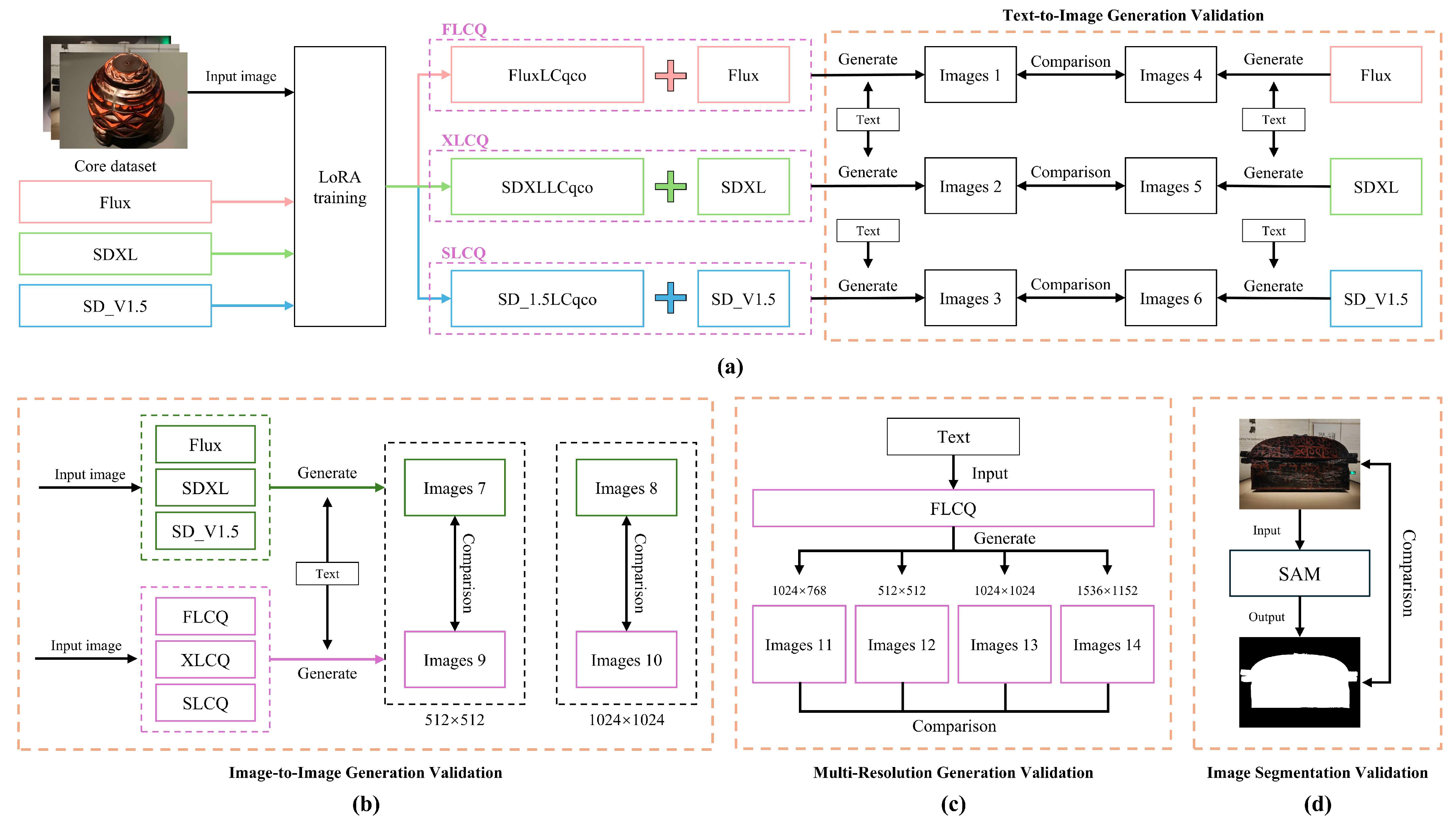

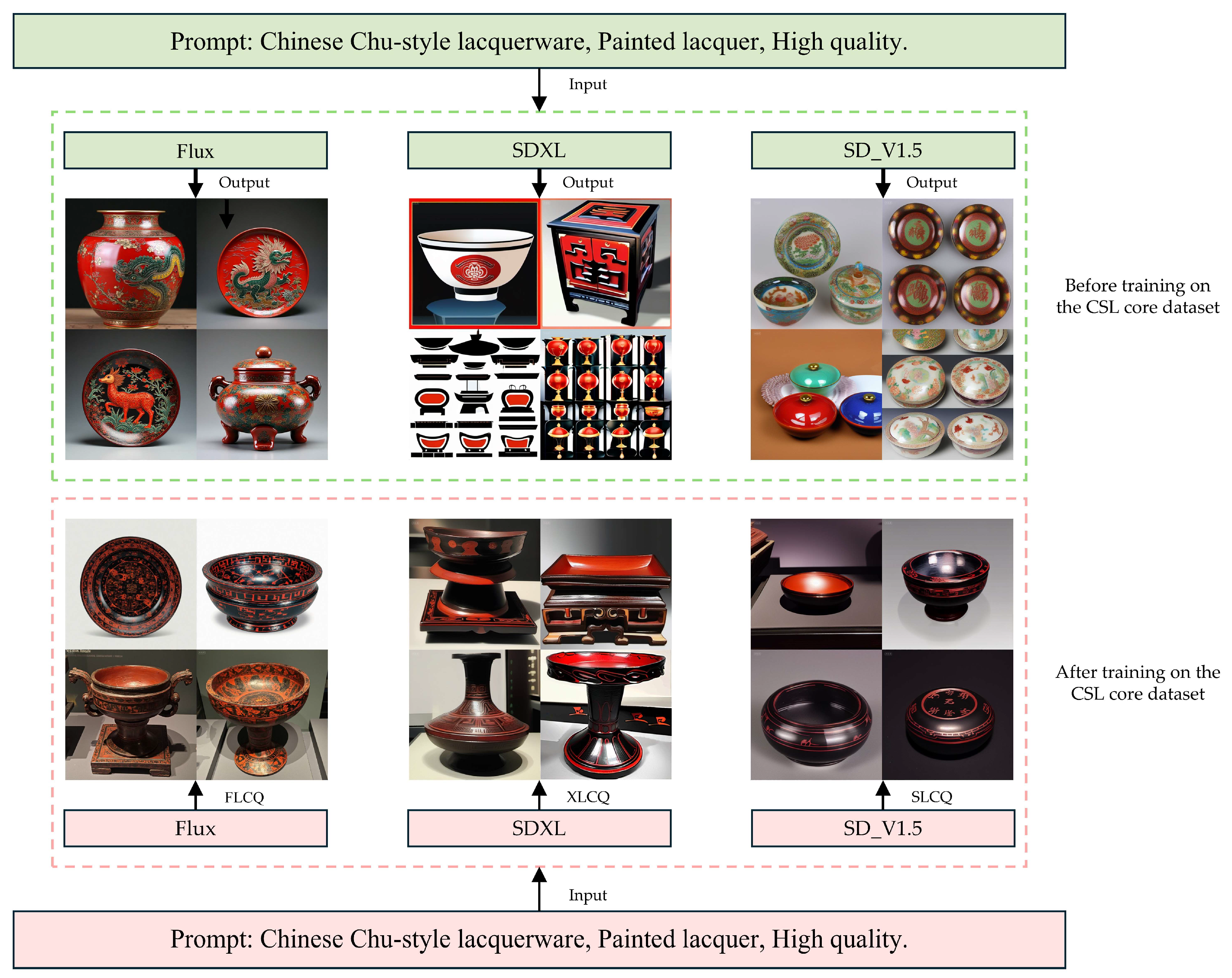

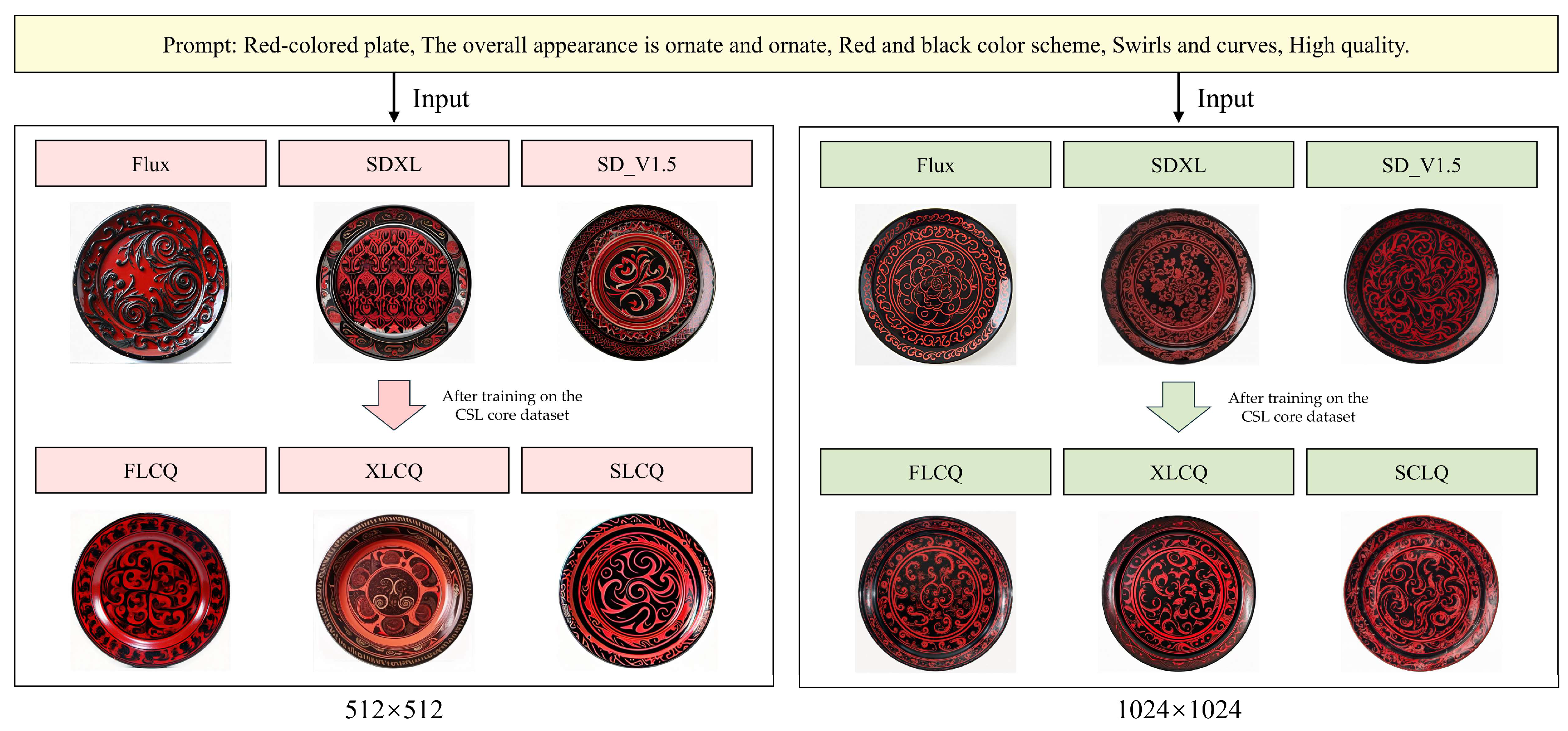

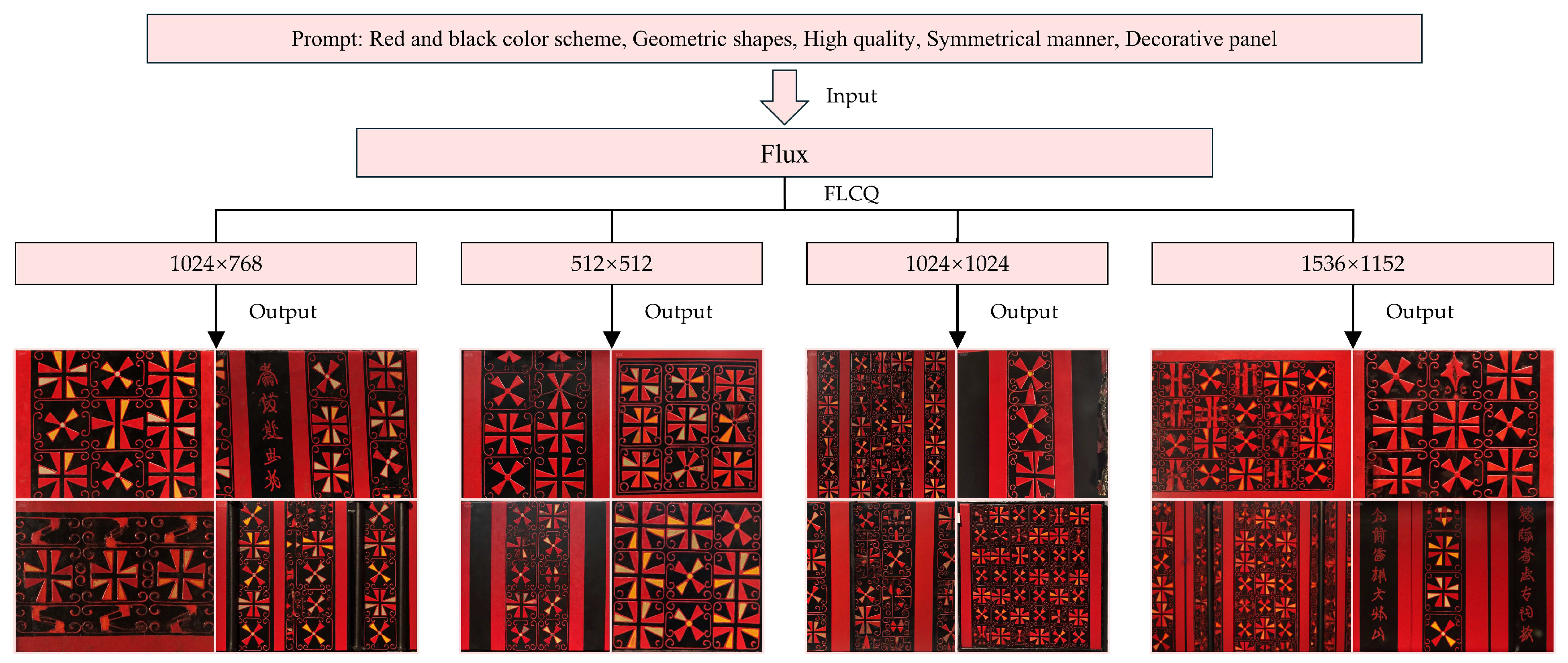

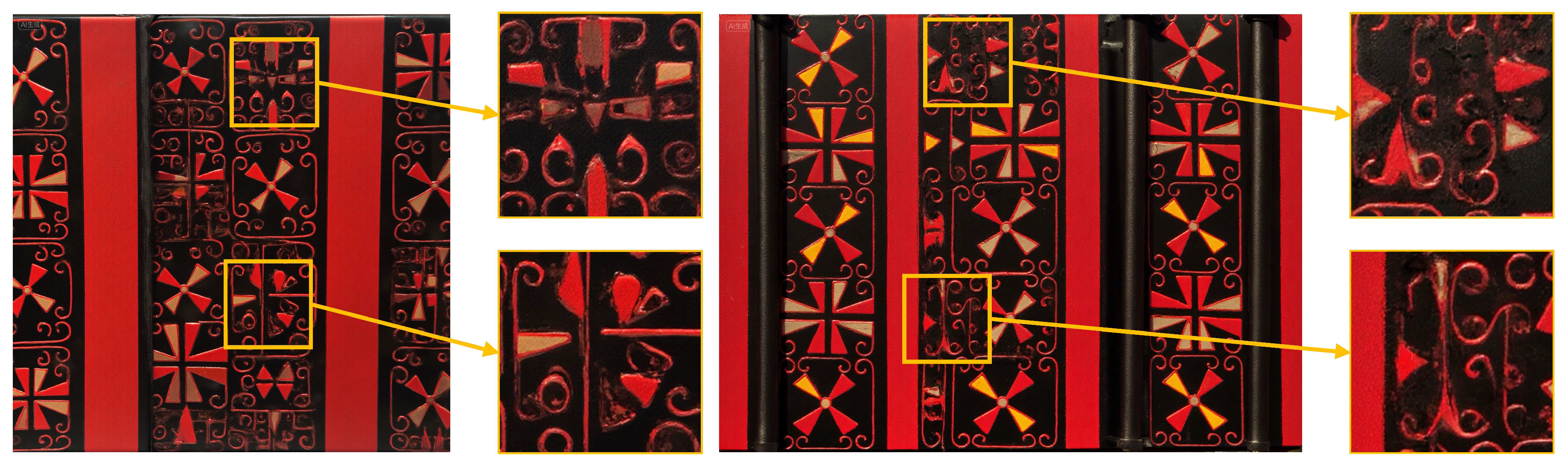

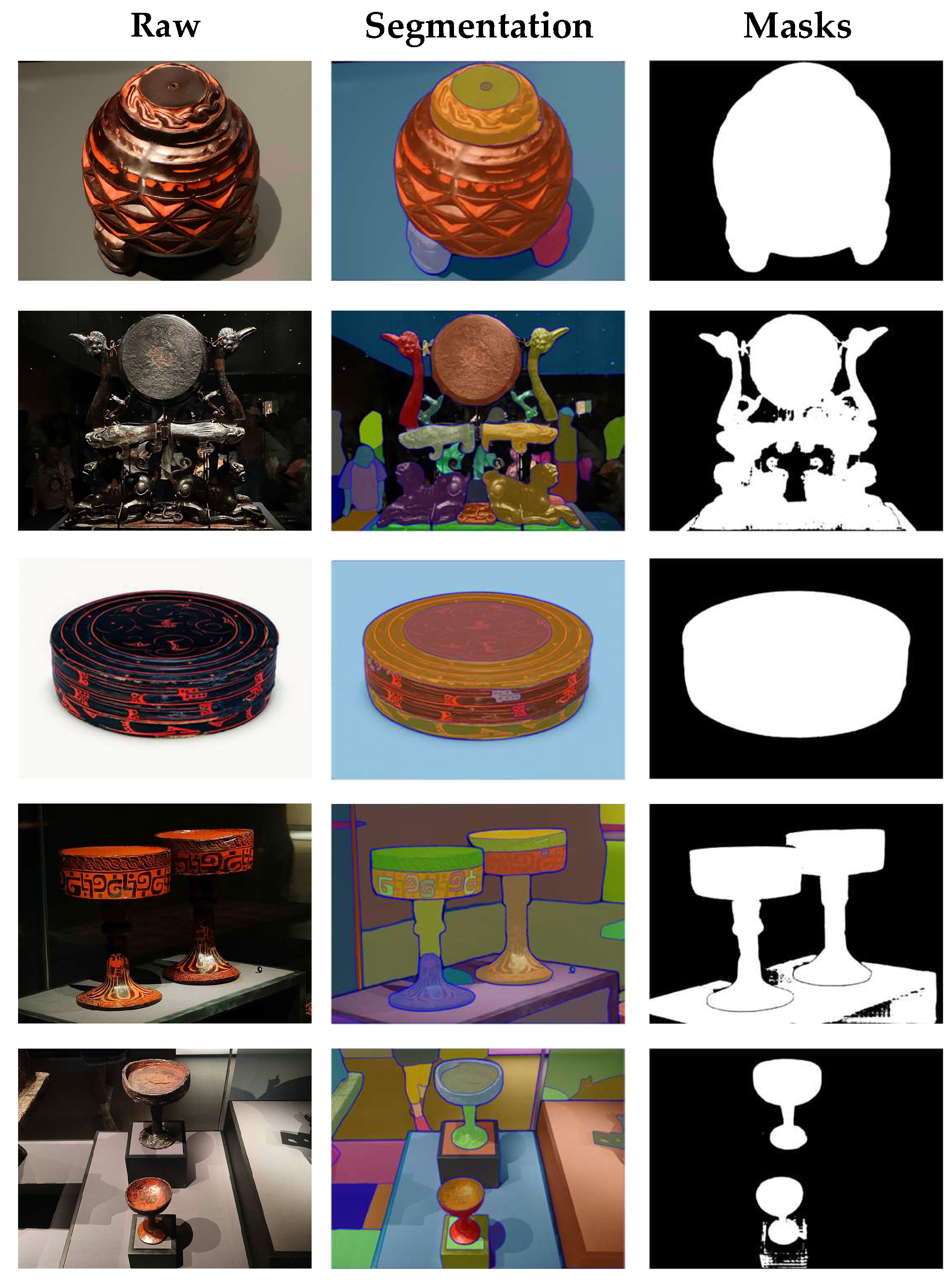

- For the CSL core dataset, validation was conducted from four perspectives: (1) text-to-image generation, (2) image-to-image generation, (3) multi-resolution generation, and (4) image segmentation.

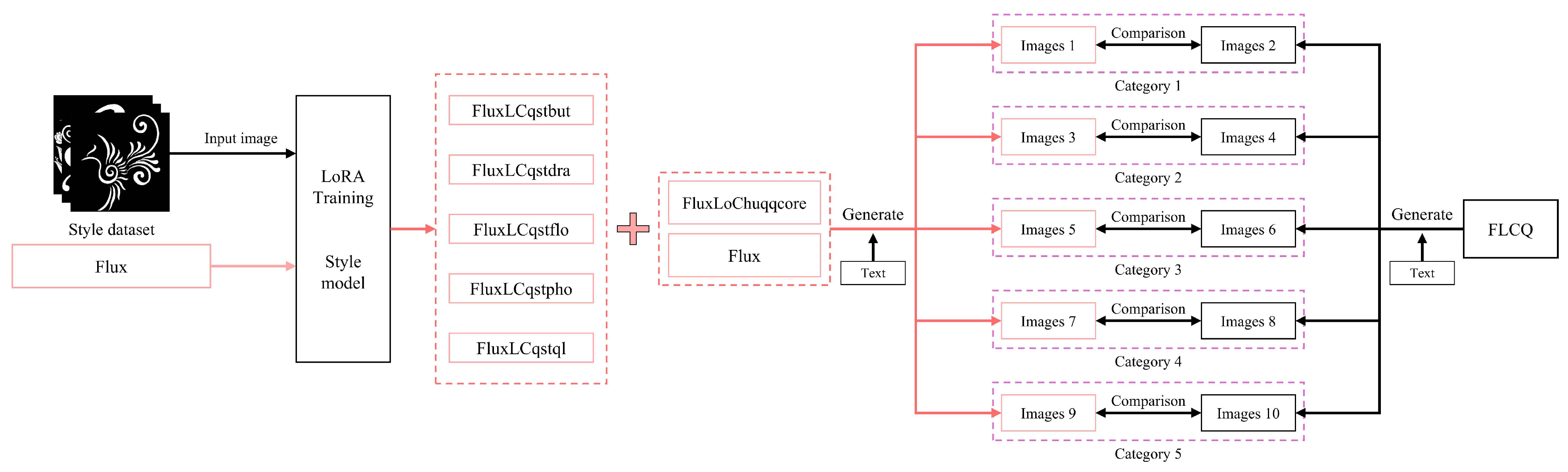

- For the CSL style dataset, validation was conducted through text-to-image generation experiments.

5. Technical Validation

5.1. Validation Results

5.2. Discussion

6. Conclusions and Future Work

6.1. Conclusions

6.2. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CSL | Chu-style Lacquerware Dataset |
| LoRA | Low-Rank Adaptation |
| AI | Artificial Intelligence |
| ICH | Intangible Cultural Heritage |
| SDXL | Stable Diffusion XL |
| SD_V1.5 | Stable Diffusion v1.5 |
| SAM | Segment Anything Model |
| OS | Overall Style |
| LF | Line Features |
| CF | Curvature Features |

References

1. Zhai, K.; Sun, G.; Zheng, Y.; Wu, M.; Zhang, B.; Zhu, L.; Hu, Q. The earliest lacquerwares of China were discovered at Jingtoushan site in the Yangtze River Delta. Archaeometry 2022, 64, 218–226.

2. Zheng, Z.F.; Du, Q.M. Digital protection and development strategies for Chu lacquerware. Packag. Eng. (Art Des. Ed.) 2021, 40, 306–311.

3. Cui, L. Research on Lacquer Display Design in Digital Age. In Design, User Experience, and Usability. HCII 2023. Lecture Notes in Computer Science; Marcus, A., Rosenzweig, E., Soares, M.M., Eds.; Springer: Cham, Switzerland, 2023; Volume 14031, pp. 103–114.

4. Micoli, L.L.; Guidi, G.; Rodríguez-Gonzálvez, P.; González-Aguilera, D. Developing participation through digital reconstruction and communication of lost heritage. In A Research Agenda for Heritage Planning; Edward Elgar Publishing: Cheltenham, UK, 2021; pp. 87–97.

5. Cheng, L. Support Vector Machine for Digitalization of Lacquerware Process. In Application of Intelligent Systems in Multi-modal Information Analytics. MMIA 2021. Advances in Intelligent Systems and Computing; Sugumaran, V., Xu, Z., Zhou, H., Eds.; Springer: Cham, Switzerland, 2021; Volume 1385, pp. 1301–1312.

6. Xue, Y.; Wen, X.; Fu, W.; Yu, S. Application Research on Restoration of Ceramic Cultural Relics Based on 3D Printing. In Proceedings of the 11th International Conference, C&C 2023, Held as Part of the 25th HCI International Conference, HCII 2023, Copenhagen, Denmark, 23–28 July 2023; pp. 88–107.

7. Podell, D.; English, Z.; Lacey, K.; Blattmann, A.; Dockhorn, T.; Müller, J.; Penna, J.; Rombach, R. SDXL: Improving Latent Diffusion Models for High-Resolution Image Synthesis. In Proceedings of the Twelfth International Conference on Learning Representations (ICLR 2024), Vienna, Austria, 7–11 May 2024.

8. DeepFloyd. GitHub-Deep-Floyd/IF. Available online: https://github.com/deep-floyd/IF (accessed on 28 June 2025).

9. Hugging Face. DeepFloyd IF. Available online: https://huggingface.co/docs/diffusers/en/api/pipelines/deepfloyd_if (accessed on 28 June 2025).

10. Vargas-Veleda, Y.; Rodríguez-González, M.M.; Marauri-Castillo, I. Visual Representations in AI: A Study on the Most Discriminatory Algorithmic Biases in Image Generation. J. Media 2025, 6, 110.

11. Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. In Proceedings of the Tenth International Conference on Learning Representations (ICLR 2022), Virtual Event, 25–29 April 2022.

12. Skublewska-Paszkowska, M.; Milosz, M.; Powroznik, P.; Lukasik, E. 3D technologies for intangible cultural heritage preservation—Literature review for selected databases. Herit. Sci. 2022, 10, 3.

13. Quan, H.; Li, Y.; Liu, D.; Zhou, Y. Protection of Guizhou Miao batik culture based on knowledge graph and deep learning. Herit. Sci. 2024, 12, 202.

14. Xu, L.; Shi, S.; Huang, Y.; Yan, F.; Yang, X.; Bao, Y. Corrosion monitoring and assessment of steel under impact loads using discrete and distributed fiber optic sensors. Opt. Laser Technol. 2024, 174, 110553.

15. Xu, Z.; Yang, Y.; Fang, Q.; Chen, W.; Xu, T.; Liu, J.; Wang, Z. A comprehensive dataset for digital restoration of Dunhuang murals. Sci. Data 2024, 11, 955.

16. Zheng, L.; Wang, L.; Zhao, X.; Yang, J.; Zhang, M.; Wang, Y. Characterization of the materials and techniques of a birthday inscribed lacquer plaque of the Qing dynasty. Herit. Sci. 2020, 8, 116.

17. Fu, Y.; Chen, Z.; Zhou, S.; Wei, S. Comparative study of the materials and lacquering techniques of the lacquer objects from Warring States Period China. J. Archaeol. Sci. 2020, 114, 105060.

18. Wu, M.; Zhang, Y.; Zhang, B.; Li, L. Study of colored lacquerwares from Zenghou Yi Tomb in early Warring States. New J. Chem. 2021, 45, 9434–9442.

19. Wang, X.; Hao, X.; Zhao, Y.; Tong, T.; Wu, H.; Ma, L.; Tong, H. Systematic study of the material, structure and lacquering techniques of lacquered wooden coffins from the Eastern Regius Tombs of the Qing Dynasty, China. Microchem. J. 2021, 168, 106369.

20. Han, B.; Fan, X.; Chen, Y.; Gao, J.; Sablier, M. The lacquer crafting of Ba state: Insights from a Warring States lacquer scabbard excavated from Lijiaba site (Chongqing, southwest China). J. Archaeol. Sci. Rep. 2022, 42, 103416.

21. Wang, K.; Liu, C.; Zhou, Y.; Hu, D. pH-dependent warping behaviors of ancient lacquer films excavated in Shanxi, China. Herit. Sci. 2022, 10, 31.

22. Hu, F.; Zhang, Y.; Du, J.; Li, N. Component and structural analysis of lacquerware fragments unearthed from “Nanhai No. 1”. Cult. Relics Conserv. Archaeol. Sci. 2023, 35, 43–52.

23. Gong, Z.; Liu, S.; Jia, M.; Sun, S.; Hu, P.; Pei, J.; Hu, G. Analysis of the manufacturing craft of the painted gold foils applied on the lacquerware of the Jin Yang Western Han Dynasty tomb in Taiyuan, Shanxi, China. Herit. Sci. 2024, 12, 207.

24. Zhu, Z.; Qin, Y.; Guo, Z.; Cai, S.; Lin, P.; Wang, X.; Yang, J. Shedding new light on lacquering crafts from the Northern Wei Dynasty (386–534 CE) by revisiting the lacquer screen from Sima Jinlong’s Tomb. J. Cult. Herit. 2025, 71, 309–319.

25. Chen, J.; Liu, Y.; Yang, Y.; Pang, L.; Han, X. Scientific analysis of lacquered earthen relics unearthed from M9 at Guishan, Luoping, Yunnan. China Lacq. 2025, 44, 57–61.

26. Zhao, J. Content and composition of the Heluo Music Cultural Relics Dataset (Xia, Shang–Ming and Qing Dynasties). J. Glob. Change Data Discov. 2021, 5, 444–452.

27. Xiang, H.; Niu, W.; Huang, X.; Ning, B.; Zhang, F.; Xu, J. Large scale and complex structure grotto digitalization using photogrammetric method: A case study of Cave No. 13 in Yungang Grottoes. ISPRS Ann. Photogramm. Remote Sens. Spatial Inf. Sci. 2024, 10, 231–238.

28. Ge, S. Content and composition of embroidery dataset archived in Luoyang Folk Museum. J. Glob. Change Data Discov. 2021, 5, 470–478.

29. Liu, Y.; Zhang, Q.; Qi, Y.; Wan, T.; Zhang, D.; Li, Y.; Chen, S. DeepJiandu dataset for character detection and recognition on Jiandu manuscript. Sci. Data 2025, 12, 398.

30. Gil-Martín, M.; Luna-Jiménez, C.; Esteban-Romero, S.; Estecha-Garitagoitia, M.; Fernández-Martínez, F.; D’Haro, L.F. A dataset of synthetic art dialogues with ChatGPT. Sci. Data 2024, 11, 825.

31. Obaid, H.S.; Dheyab, S.A.; Sabry, S.S. The impact of data pre-processing techniques and dimensionality reduction on the accuracy of machine learning. In Proceedings of the 2019 9th Annual Information Technology, Electromechanical Engineering and Microelectronics Conference (IEMECON), Jaipur, India, 13–14 March 2019; pp. 279–283.

32. Whang, S.E.; Lee, J.G. Data collection and quality challenges for deep learning. Proc. VLDB Endow. 2020, 13, 3429–3432.

33. Dohmatob, E.; Feng, Y.; Subramonian, A.; Kempe, J. Strong Model Collapse. In Proceedings of the Thirteenth International Conference on Learning Representations (ICLR 2025), Singapore, 25–29 April 2025.

34. Tichau. GitHub—Tichau/FileConverter: File Converter Is a Very Simple Tool Which Allows You to Convert and Compress Files Using the Context Menu in Windows Explorer. Available online: https://github.com/Tichau/FileConverter (accessed on 28 June 2025).

35. ImageMagick. ImageMagick—Mastering Digital Image Alchemy. Available online: https://imagemagick.org/ (accessed on 28 June 2025).

36. Jasper AI. Flux.1-dev ControlNet Upscaler. Available online: https://huggingface.co/jasperai/Flux.1-dev-Controlnet-Upscaler (accessed on 28 June 2025).

37. Cheng, Q.; Zhang, Q.; Fu, P.; Tu, C.; Li, S. A survey and analysis on automatic image annotation. Pattern Recognit. 2018, 79, 242–259.

38. Ye, Q.; Ahmed, M.; Pryzant, R.; Khani, F. Prompt Engineering a Prompt Engineer. In Findings of the Association for Computational Linguistics: ACL 2024, Bangkok, Thailand, 11–16 August 2024; Ku, L.-W., Martins, A., Srikumar, V., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2024; pp. 355–385.

39. Xiao, B.; Wu, H.; Xu, W.; Dai, X.; Hu, H.; Lu, Y.; Yuan, L. Florence-2: Advancing a Unified Representation for a Variety of Vision Tasks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2024), Seattle, WA, USA, 17–21 June 2024; pp. 4818–4829.

40. Black-Forest Labs. Flux. Available online: https://github.com/black-forest-labs/flux (accessed on 28 June 2025).

41. Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-Resolution Image Synthesis with Latent Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 10684–10695.

42. Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Girshick, R. Segment Anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV 2023), Paris, France, 2–6 October 2023; pp. 3992–4003.

43. Meta AI. Segment Anything. Available online: https://segment-anything.com/ (accessed on 28 June 2025).

| Author (Year) | Protection Path | Protection Object | Related Disciplinary Direction |
|---|---|---|---|
| Marek Milosz et al. (2022) [12] | 3D Technology | Intangible Cultural Heritage | Computer Science |
| Huafeng Quan et al. (2024) [13] | Knowledge Graph, Natural Language Processing, Deep Learning Techniques | Miao batik culture in Guizhou Province, China | Interdisciplinary field of Computer Science and Design |
| Ying Huang et al. (2024) [14] | Distributed Optical Fiber Sensor Corrosion Monitoring Technology | Handicraft using steel as material | Interdisciplinary field of Materials Science and Optics |
| Zishan Xu et al. (2024) [15] | Dataset protection | Dunhuang murals | Interdisciplinary field of Computer Science and Design |

| Author (Year) | Research Object | Region | Paper Language | Lacquerware Images Released |
|---|---|---|---|---|
| Liping Zheng et al. (2020) [16] | Lacquerware from the Late Qing period | Bashu area, China | English | × |
| Yingchun Fu et al. (2020) [17] | Lacquerware unearthed from the Chu tombs at Jiuliandun, Hubei, Warring States period | Hubei, China | English | × |
| Meng Wu et al. (2021) [18] | Colored lacquerwares from Zenghou Yi Tomb in Early Warring States | Hubei, China | English | × |
| Xin Wang et al. (2021) [19] | Lacquered wooden coffins from the Eastern Regius Tombs of the Qing Dynasty | Hebei, China | English | × |
| Bin Han et al. (2022) [20] | The Warring States lacquer scabbard unearthed from the Lijiaba site | Chongqing, China | English | × |
| Kai Wang et al. (2022) [21] | Ancient lacquer films of the Western Han Dynasty | Shanxi, China | English | × |
| Danfeng Hu et al. (2023) [22] | Lacquer fragments salvaged from the “Nanhai I” shipwreck | Guangdong, China | Chinese | × |
| Zisang Gong et al. (2024) [23] | Painted gold foils applied to lacquerware of the Late Western Han Dynasty | Shanxi, China | English | × |
| Zhanyun Zhu et al. (2025) [24] | Lacquer screen of the Northern Wei period | Shanxi, China | English | × |
| Jiake Chen et al. (2025) [25] | Han dynasty lacquerware unearthed from M9, Guishan, Luoping, Yunnan | Yunnan, China | Chinese | × |

| Dataset Classification | Type | Count | Resolution |
|---|---|---|---|
| Core Dataset | Image (.jpg) | 582 | 4096 × 3072 |
| Core Dataset | Text (.txt) | 582 | |
| Style Dataset | Image (.jpg) | 37 | 1024 × 1024 |
| Style Dataset | Text (.txt) | 37 | |
| Video Dataset | Video (.mov + .mp4) | 72 (43 .mov + 29 .mp4) | 1920 × 1080 |
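Because the core and style datasets each list the same number of .jpg images and .txt annotations (582 and 37), a simple consistency check can confirm that every image has a caption file. The sketch below assumes that an image and its caption share a filename stem (for example `0001.jpg` and `0001.txt`) and sit in the same directory. That layout is an assumption for illustration, not something the source specifies, and `find_unpaired` is a hypothetical helper.

```python
from pathlib import Path


def find_unpaired(root):
    """Return (images without captions, captions without images) as sorted stems.

    Assumes each image `<stem>.jpg` is annotated by `<stem>.txt` in the same folder.
    """
    root = Path(root)
    images = {p.stem for p in root.glob("*.jpg")}
    captions = {p.stem for p in root.glob("*.txt")}
    return sorted(images - captions), sorted(captions - images)
```

An empty result on both sides, together with the expected file counts, would indicate that the image and text parts of a dataset split line up one to one.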

| Data Source | Image Count | Resolution | Dataset Description | Data Composition | Cultural Attribute Depth | Data Reliability |
|---|---|---|---|---|---|---|
| Heluo Musical Relics_Xia-Qing [26] | 102 | 400 × 300 | Research on musical relics created and preserved from Xia-Shang to Ming-Qing dynasties | Typological classification, cross-cultural terminology | Typological evolution lineage of musical relics | Academically consensual terminology |
| Yungang Grottoes [27] | Not specified | Not specified | 3D scanned point cloud image data of Cave 13 at Yungang Grottoes, Shanxi Province | 3D models, high-definition images, environmental monitoring data | Religious artistic symbol interpretation | High-precision scanning |
| Embroidery Cultural Relics [28] | 261 | 737 × 821; 800 × 726 | Embroidered costumes from the mid-late Qing Dynasty to the Republican era | Image data, statistical information tables | Embroidery technique documentation | Field-measured data |
| DeepJiandu [29] | 7416 | Width: 150–600+ pixels; Height: 100–3200+ pixels | Jiandu detection and recognition | Infrared imaging (BMP); VOC-format annotations | 2242-class ancient script taxonomy | Gaussian-filtered denoising |
| Art_GenEvalGPT [30] | Not specified | Not specified | Art-themed dialogs generated via ChatGPT | Dialog corpora (CSV/JSON formats) | 26 art movements; 378 artist referents | Automated metric evaluation; human-validated affective annotation |
| CSL (Ours) | 582 * | 4096 × 3072 | Collection and organization of Chu-style lacquerware images | Vessel shape and pattern images, vessel videos | The culture of Chu lacquerware | Multi-faceted validation from technology and society |

| Method | Advantages | Limitations |
|---|---|---|
| Online Resources Collection | Strong systematization and wide coverage; provides a relatively complete classification system of Chu-style lacquerware | Some images have low resolution, blurred edges, or insufficient color information, making them unsuitable for training |
| On-site Photography | Ensures comprehensive category coverage; expands sample size within single categories; produces higher-quality images more suitable for deep learning training | Restricted by exhibition hall lighting, reflections, and shooting angles; some images may contain noise, glare, or reduced clarity |

| Stage | Goal | Issues with Online Resources | Issues with On-site Images |
|---|---|---|---|
| Data Processing | Constructing high-quality images suitable for training datasets in deep learning models | Rough labels; unclear images; model collapse | Inconsistencies in format, resolution, and color space; reduced image clarity |

| Model Source | Base Model | LoRA-Based Generation Method | Method Description |
|---|---|---|---|
| Black Forest Labs [40] | Flux | FLCQ | When FLCQ is combined with Flux for generation |
| Podell et al. [7] | SDXL | XLCQ | When XLCQ is combined with SDXL for generation |
| Rombach et al. [41] | SD_V1.5 | SLCQ | When SLCQ is combined with SD_V1.5 for generation |
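For readers unfamiliar with LoRA [11], the idea behind combining a LoRA with a base model can be sketched as follows. A frozen weight matrix W0 of shape d × k is adjusted by a low-rank product B·A, where B is d × r and A is r × k, so only r·(d + k) parameters are trained. The dimensions, rank, and `scale` value used below are hypothetical and are not the settings used for FLCQ, XLCQ, or SLCQ, which the source does not give.

```python
def full_param_count(d: int, k: int) -> int:
    """Parameters in a dense d x k weight matrix."""
    return d * k


def lora_param_count(d: int, k: int, r: int) -> int:
    """Trainable parameters in the rank-r factors B (d x r) and A (r x k)."""
    return r * (d + k)


def merge_lora(w0, b, a, scale=1.0):
    """Return W0 + scale * (B @ A) for small list-of-lists matrices.

    `scale` stands in for the usual alpha / r factor; its value here is illustrative.
    """
    rows, inner, cols = len(b), len(a), len(a[0])
    return [
        [w0[i][j] + scale * sum(b[i][t] * a[t][j] for t in range(inner))
         for j in range(cols)]
        for i in range(rows)
    ]


# Hypothetical layer size and rank, chosen only to show the ratio.
d, k, r = 1024, 1024, 16
print(full_param_count(d, k))     # 1048576
print(lora_param_count(d, k, r))  # 32768
```

In this hypothetical case the adapter holds about 3% of the parameters of the dense layer, which is why one dataset can yield several small LoRA files for different base models.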

| Abbreviation | Dimension | Evaluation Criteria |
|---|---|---|
| OS | Overall Style | Whether the overall image conforms to the Chu-style lacquerware style |
| LF | Line Features | Whether the main patterns are composed of lines |
| CF | Curvature Features | Whether the degree of line curvature is similar to the original image |

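| Model Name | Model Type | Dataset | Base Model | Fine-Tuning Method | Application |
|---|---|---|---|---|---|
| FluxLCqco | Core Model | Core Dataset | Flux | LoRA | Generate images that conform to the morphological and pattern features of Chu-style lacquerware |
| SDXLLCqco | Core Model | Core Dataset | SDXL | LoRA | Generate images that conform to the morphological and pattern features of Chu-style lacquerware |
| SD_1.5LCqco | Core Model | Core Dataset | SD_V1.5 | LoRA | Generate images that conform to the morphological and pattern features of Chu-style lacquerware |
| FluxLCqstbut | Style Model | Style Dataset | Flux | LoRA | Generate innovative Chu-style lacquerware patterns |
| FluxLCqstdra | Style Model | Style Dataset | Flux | LoRA | Generate innovative Chu-style lacquerware patterns |
| FluxLCqstflo | Style Model | Style Dataset | Flux | LoRA | Generate innovative Chu-style lacquerware patterns |
| FluxLCqstpho | Style Model | Style Dataset | Flux | LoRA | Generate innovative Chu-style lacquerware patterns |
| FluxLCqstql | Style Model | Style Dataset | Flux | LoRA | Generate innovative Chu-style lacquerware patterns |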

| Model | Advantages | Limitations |
|---|---|---|
| SD_V1.5 | After training on the CSL dataset, the overall style conforms to Chu-style lacquerware; capable of generating images with certain stylistic features | Morphology tends to be planar and lacks realism; pattern details deviate from Chu-style lacquerware standards and appear less natural and refined |
| SDXL | The basic morphology of artifacts is improved compared with SD_V1.5; lacquerware forms are closer to real objects | Improvements are limited; overall morphological fidelity remains insufficient; stylistic consistency and pattern details still show deviations |
| Flux | Best morphological fidelity with strong image realism; optimal stylistic consistency; natural, rich, and elegant patterns that conform to Chu-style lacquerware characteristics | No significant limitations were observed in the validation of this module |

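| Resolution | Base Model | Criteria | Before Training | After Training | Enhancement Effect |
|---|---|---|---|---|---|
| 512 × 512 | Flux | OS | Deviated | Maintained | OS, CF |
| 512 × 512 | Flux | LF | Yes | Yes | OS, CF |
| 512 × 512 | Flux | CF | Yes | Improved | OS, CF |
| 512 × 512 | SDXL | OS | Deviated | Maintained | OS, LF |
| 512 × 512 | SDXL | LF | No | Yes | OS, LF |
| 512 × 512 | SDXL | CF | No | No | OS, LF |
| 512 × 512 | SD_V1.5 | OS | Deviated | Maintained | OS, LF, CF |
| 512 × 512 | SD_V1.5 | LF | No | Yes | OS, LF, CF |
| 512 × 512 | SD_V1.5 | CF | No | Yes | OS, LF, CF |
| 1024 × 1024 | Flux | OS | Maintained | Improved | OS, CF |
| 1024 × 1024 | Flux | LF | Yes | Yes | OS, CF |
| 1024 × 1024 | Flux | CF | No | Yes | OS, CF |
| 1024 × 1024 | SDXL | OS | Maintained | Improved | OS, LF, CF |
| 1024 × 1024 | SDXL | LF | No | Yes | OS, LF, CF |
| 1024 × 1024 | SDXL | CF | No | Yes | OS, LF, CF |
| 1024 × 1024 | SD_V1.5 | OS | Maintained | Improved | OS, CF |
| 1024 × 1024 | SD_V1.5 | LF | Yes | Yes | OS, CF |
| 1024 × 1024 | SD_V1.5 | CF | Yes | Improved | OS, CF |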

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bi, H.; Chen, Y.; Chen, C.; Shu, L. Chu-Style Lacquerware Dataset: A Dataset for Digital Preservation and Inheritance of Chu-Style Lacquerware. Sensors 2025, 25, 5558. https://doi.org/10.3390/s25175558
