1. Introduction

Drowning constitutes a major global public health crisis that demands urgent attention, with its severity being impossible to overlook. According to the World Health Organization (WHO), the number of global drowning deaths reached a staggering 300,000 in 2021—equivalent to over 34 fatalities per hour [

1]. Focusing on China, data from the China Youth Drowning Prevention Big Data Report 2022 [

2] shows that drowning accounts for 33% of all injury-related deaths among Chinese adolescents. The high incidence of drowning incidents in 2022 further emphasizes the urgency of strengthening prevention and control measures.

Statistics indicate that China currently has a total of 39,700 swimming venues, primarily categorized into outdoor pools, indoor pools, and natural swimming sites. Specifically, outdoor pools (20,600 venues, accounting for 51.95%) and indoor pools (18,200 venues, accounting for 45.68%) together make up 97.63% of the total, while natural swimming sites are far less common (only 940 venues, comprising a mere 2.37%) [

3].

As the most prevalent types of swimming facilities, indoor and outdoor pools pose particularly acute drowning risks. Drowning is characterized by its quiet and rapid progression, and this process is easily obscured by complex environmental factors—such as water surface glare and crowd movements—greatly undermining the effectiveness of manual surveillance [

4]. Conversely, the inherent physical characteristics of these venues (fixed boundaries, clear water quality, and relatively controllable lighting) provide ideal, engineering-friendly conditions for deploying automated detection systems based on AI vision or sensor technology [

5,

6].

Therefore, researching drowning detection technology specifically for indoor and outdoor swimming pools—core scenarios marked by high drowning risks and strong technical feasibility—is of critical significance. It helps address blind spots in manual supervision, shorten rescue response times, and enhance the overall safety prevention and control capabilities of swimming facilities.

In recent years, drowning detection technologies have primarily developed along two technical pathways:

The first category relies on wearable sensing devices. These technologies collect physiological and behavioral data (e.g., swimmers’ heart rate, blood oxygen levels, water pressure, and movement status) to enable real-time behavior monitoring and emergency response [

7]. However, this approach mandates that users wear the devices, which not only may impair the swimming experience but also presents practical challenges—such as inconvenience in use and poor adaptability for infants and young children—hindering its large-scale application [

8].

The second category adopts vision-based deep learning methods, which use cameras to capture image data and leverage object detection algorithms to analyze swimmers’ behaviors [

9]. With the rapid iteration of object detection techniques, single-stage detection models (represented by the YOLO series) have gradually become the mainstream choice for drowning detection. This is attributed to their advantages of strong real-time performance, low deployment costs, and excellent scene generalization capabilities [

10]. Nevertheless, existing solutions still have room for optimization in several key dimensions:

From an engineering implementation perspective: Even lightweight models like YOLO11n impose significant burdens on the storage and computational resources of edge devices, due to their parameter scale, model size, and computational overhead. This makes them difficult to adapt to resource-constrained scenarios (e.g., pool-embedded terminals) [

11]. From a detection performance perspective: in complex aquatic environments, factors such as water surface glare interference and target occlusion can reduce the model’s focus on subtle drowning posture features, thereby weakening the robustness of recognition [

12]. From a multi-scale target adaptation perspective: drowning scenarios often involve targets of varying scales (e.g., nearby swimmers vs. distant drowning individuals). Current models’ multi-scale feature fusion strategies are still inadequate, frequently leading to missed detection of small targets or insufficient feature extraction for large targets [

13,

14].

To address the aforementioned challenges, this paper proposes the YOLO11-LiB model, which is designed to enhance existing object detection algorithms for improved drowning detection performance and provide a more effective solution to the identified problems. Specifically, the model achieves three key optimizations:

It reduces computational complexity through lightweight dynamic convolution and optimized downsampling operations, meeting the requirements for real-time early warning; it incorporates a residual-structured separation attention mechanism to adaptively adjust feature weights, suppress irrelevant interference, enhance sensitivity to drowning victim features, and reduce false positives and false negatives caused by complex backgrounds; and it employs advanced multi-scale feature fusion to capture detailed information of targets at different scales, mitigating the degradation of detection accuracy caused by distance variations or pose changes of drowning individuals, and thus improving detection performance in complex scenarios.

The main contributions of this study are summarized as follows:

Lightweight Feature Extraction Design: We propose the Lightweight Feature Extraction Module (LGCBlock), which integrates the Lightweight Attention Encoding Block (LAE) and effectively combines Ghost Convolution (GhostConv) with Dynamic Convolution (DynamicConv). This module optimizes the downsampling structure and the C3k2 module in the YOLO11n backbone network, significantly reducing model parameters and computational complexity while preserving the integrity of key features.

Enhanced Attention Mechanism for Robust Feature Extraction: We introduce the Cross-Channel Position-aware Spatial Attention Inverted Residual with Spatial-Channel Separate Attention module (C2PSAiSCSA) into the backbone network. This module embeds the Spatial–Channel Separate Attention (SCSA) mechanism within the Inverted Residual Mobile Block (iRMB) framework, enabling more comprehensive and efficient feature extraction. It enhances the perception of subtle drowning postures and effectively suppresses interference from water surface glare and crowd occlusion.

Multi-scale Feature Fusion Architecture: We redesign the neck structure as the Bidirectional Feature Fusion Network (BiFF-Net), which integrates the Bidirectional Feature Pyramid Network (BiFPN) and Frequency-Aware Feature Fusion (FreqFusion). This design optimizes the semantic fusion of multi-scale features through a bidirectional feedback mechanism of dynamic weighting and frequency-domain enhancement, significantly improving detection accuracy for multi-scale targets—particularly small drowning victims.

Comprehensive Performance Evaluation: We establish a multi-dimensional evaluation system and validate the proposed YOLO11-LiB model through comparative experiments with state-of-the-art algorithms and ablation studies. The results demonstrate that our model achieves a drowning class mean average precision (DmAP50) of 94.% with only 2.02 M parameters and a model size of 4.25 MB, effectively balancing accuracy and efficiency for real-time drowning detection in swimming pool scenarios.

3. Method

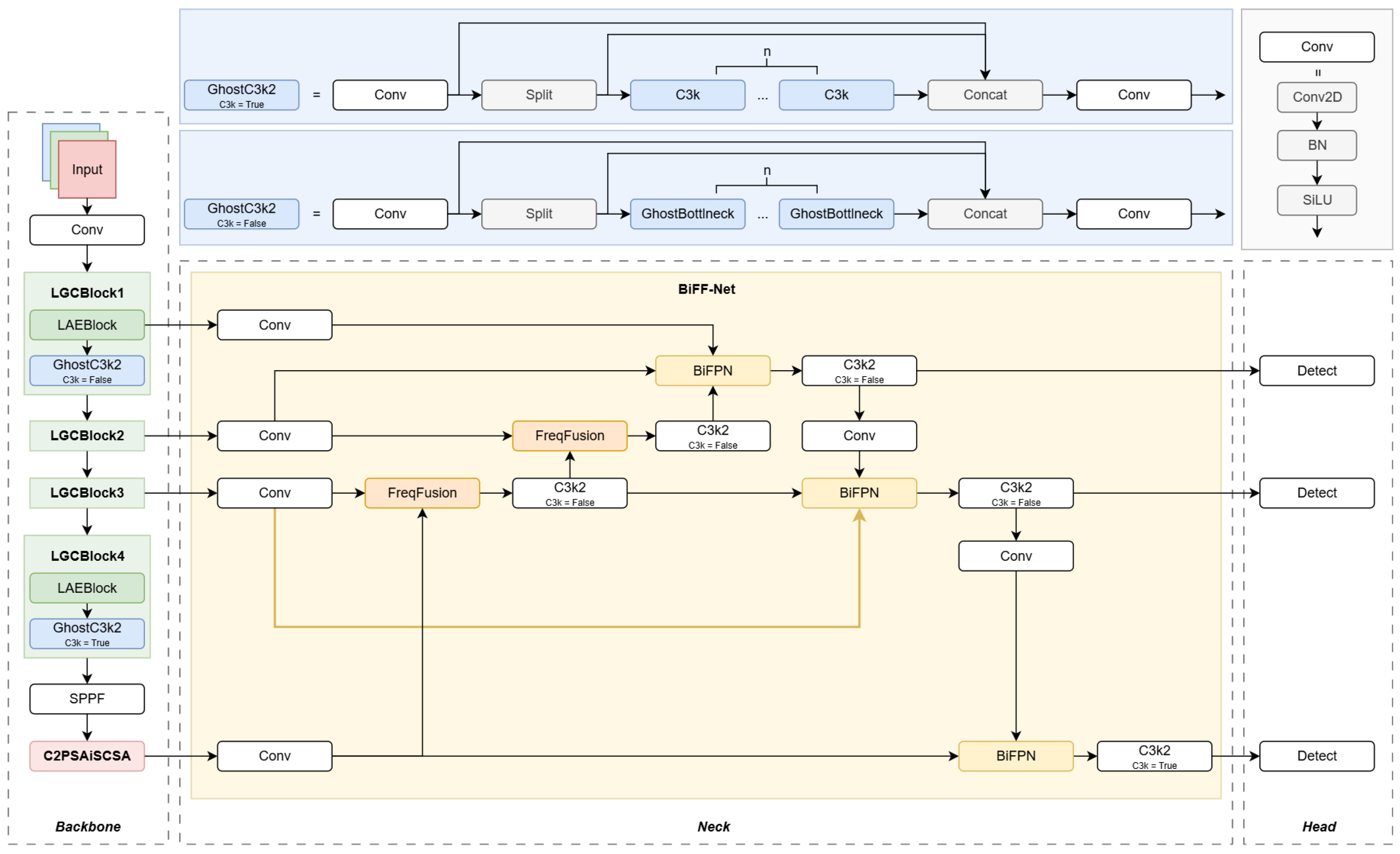

The overall architecture of YOLO11-LiB is illustrated in

Figure 1. Based on the Ultralytics YOLO11n framework, our model introduces targeted enhancements for drowning detection in complex aquatic environments, adopting a multi-scale output structure. Key modifications include:

In the backbone, traditional downsampling is replaced with the lightweight LAE module. Combined with the GhostC3k2 block, this enables efficient feature extraction. An enhanced Inverted Residual module with Spatial–Channel Separate Attention (C2PSAiSCSA) is added at the backbone’s end to boost focus on fine-grained details of small targets.

Within the neck, feature channels from different scales are first standardized. Feature representation is then refined through FreqFusion and multiple C3k2 blocks. Contextual interaction is enhanced using the bidirectionally weighted BiFPN, followed by cross-scale downsampling via strided convolutions. The fused features are finally fed into the detection head for prediction.

This architecture significantly improves detection accuracy and inference efficiency through multi-level attention and frequency domain fusion, meeting the real-time and robustness requirements for drowning detection.

3.1. LGCBlock

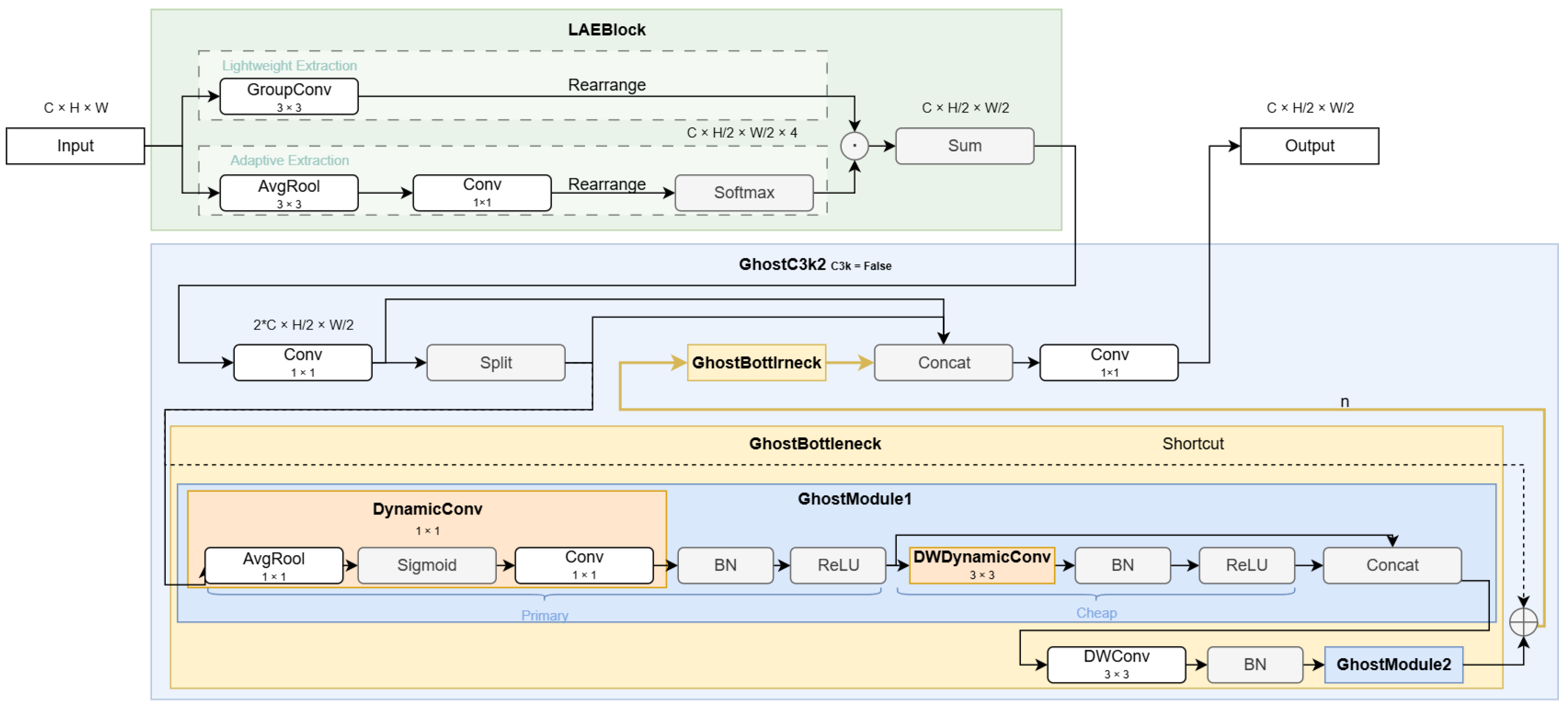

To enhance edge inference efficiency, we propose the LGCBlock, integrating a Lightweight Attention-based Extraction (LAE) module and a GhostC3k2 module (

Figure 2), addressing computational redundancy and information loss in the original YOLO11n backbone.

To address downsampling optimization, this paper introduces the Lightweight Attention-based Extraction (LAE) module (Yu et al., 2024, LSM-YOLO [

29]), which achieves efficient feature compression via dual-branch cooperation (

Figure 2). The attention branch employs 3 × 3 average pooling and 1 × 1 convolution to generate spatial weights for 2 × 2 regions. The downsampling branch uses grouped convolution for 2× reduction. Features are reshaped to

, fused via softmax-normalized weights and weighted summation, reducing computation to 12.5% of standard convolution while preserving critical information.

To reduce C3k2’s complexity, we design a GhostModule using dynamic convolution. Its primary path applies 1 × 1 DynamicConv (with a routing network combining four experts) for channel compression. The secondary path uses 3 × 3 depthwise separable dynamic convolution. Given output channels , the primary branch produces channels (), and the secondary branch , halving computation. This extends to a GhostBottleneck with channel expansion, depthwise convolution (for stride > 1), and channel compression, integrated with adaptive shortcuts and DropPath.

Integrated into the backbone, LAE replaces downsampling layers, while GhostC3k2 modifies C3k2 by dynamically using GhostBottlenecks (c3k = False) or retaining C3k (c3k = True), preserving residual connections.This synergy significantly reduces the computational load of the LGCBlock while maintaining accuracy, enabling efficient edge deployment for drowning detection.

3.2. C2PSAiSCSA

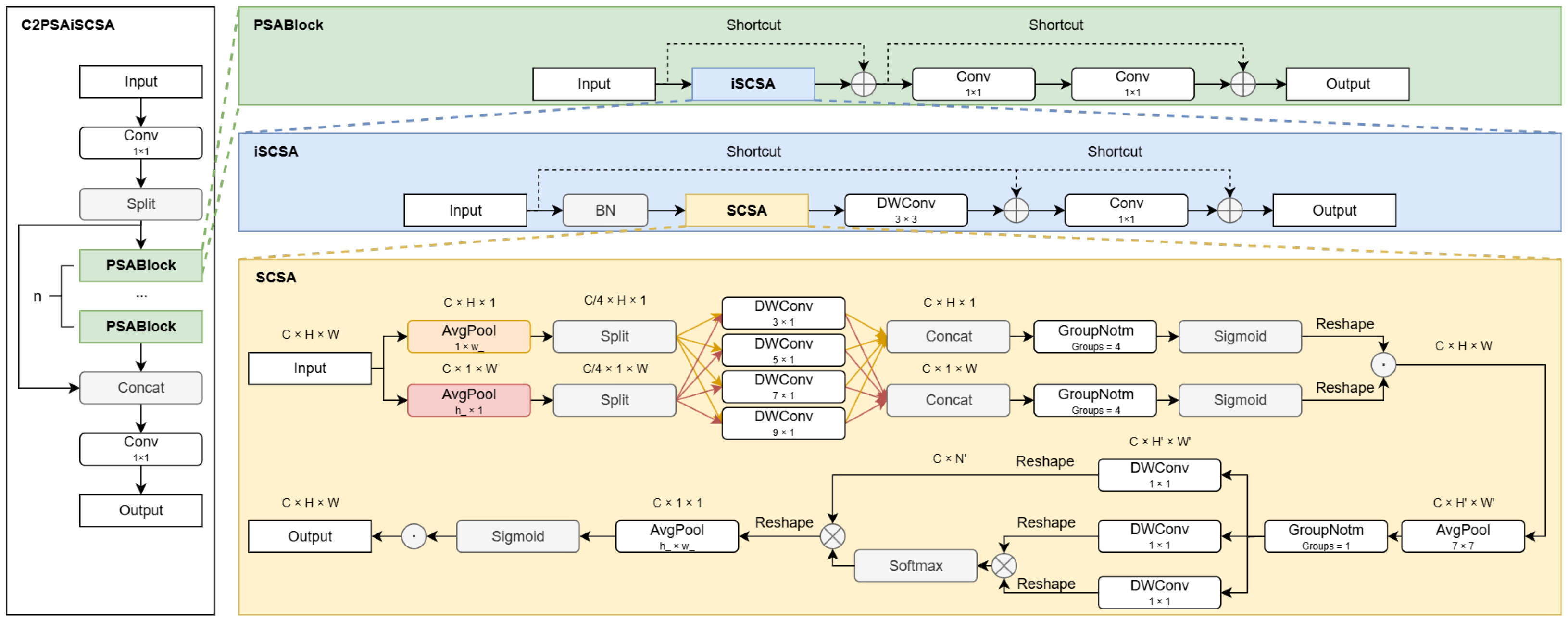

The original C2PSA module in YOLO11n lacks effective spatial modeling and dynamic adaptability, limiting its perception of subtle drowning movements in complex aquatic environments. To address this, we design the C2PSAiSCSA module by integrating an inverted Residual Mobile Block (iRMB) for local context and a Spatial–Channel Separate Attention (SCSA) mechanism for long-range dependencies.

As illustrated in

Figure 3, the core innovation is the iSCSA unit, which embeds SCSA within iRMB’s residual framework. Input features are first normalized and processed by SCSA to generate spatial-channel attention maps. Depthwise convolution then models local context and performs channel recalibration, followed by a projection layer and residual connection.

This iSCSA unit forms the PSABlock, which replaces the attention branch in the original C2PSA. The overall structure retains the dual-path design: one path preserves features via a skip connection, while the other undergoes deep enhancement through stacked PSABlocks. Each PSABlock contains an iSCSA module and a Feedforward Network (FFN). The iSCSA module employs an inverted residual structure: it expands channels, performs spatial modeling with depthwise separable convolution (enhanced by the integrated SCSA), and then compresses channels. The embedded SCSA uses multi-scale depthwise convolution for spatial attention and multi-head attention for channel selection, guiding the model to focus on critical regions.

Finally, features from the enhanced path are concatenated with the skip-connected features and integrated via a convolution. This design significantly improves spatial perception and feature selectivity for small drowning targets under lightweight constraints, enhancing robustness and accuracy in complex backgrounds without substantially increasing computational cost.

3.3. BiFF-Net

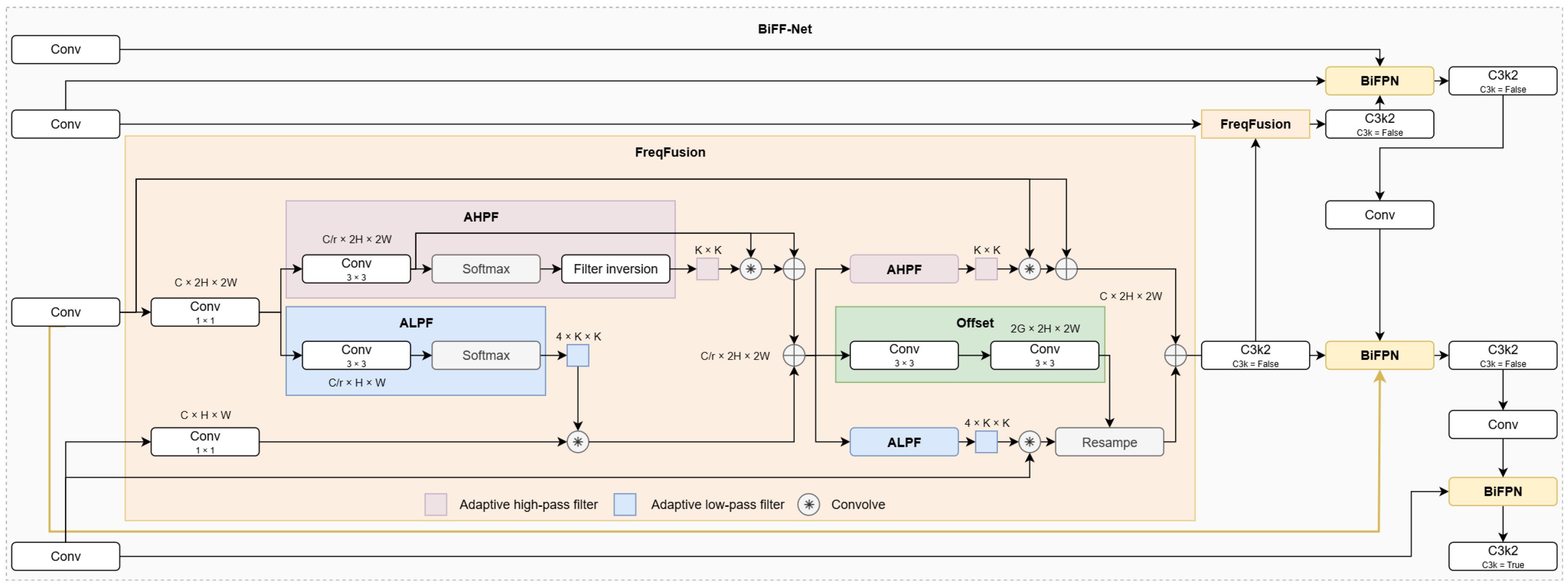

The original YOLO11n neck structure (FPN + PAN) employs a static fusion mechanism, struggling to balance semantic and detail features for small, low-contrast drowning targets amidst complex aquatic interference. To overcome this, we propose the Bidirectional Feature Fusion Network (BiFF-Net), integrating BiFPN and FreqFusion for dynamic, robust multi-scale fusion (

Figure 4).

The BiFPN module introduces learnable weights, normalized via a Swish activation, to dynamically modulate the contribution of input features from different scales. This adaptive weighting enhances critical features (e.g., target edges) while suppressing redundancies (e.g., water ripple noise), significantly improving fusion flexibility and robustness.

The FreqFusion module operates in the frequency domain to compensate for detail loss. It generates content-aware adaptive low-pass (ALPF) and high-pass (AHPF) filters. The low-pass branch upsamples and smooths deeper features using CARAFE with ALPF to emphasize semantic contours. The high-pass branch applies AHPF to shallower features to accentuate high-frequency details (e.g., fine edges, textures). The outputs are summed, producing features rich in both global semantics and local details.

BiFF-Net establishes a synergistic “Frequency Enhancement → Dynamic Weighting” workflow. Multi-scale features are first enhanced by FreqFusion to repair degradation (e.g., blurred contours). BiFPN then dynamically fuses these enhanced features. This mechanism enables dynamic cross-level interaction, effectively suppressing background interference and compensating for detail loss, thereby significantly improving perception and localization accuracy for challenging drowning targets while maintaining efficient inference.

4. Experiments

4.1. Dataset

This study constructs a dedicated drowning detection dataset covering both indoor and outdoor swimming pool environments, comprising a total of 2000 original images. Through extensive data augmentation techniques (including rotation, scaling, brightness adjustment, saturation variation, hue shift, zoom, blur, noise injection, and mosaic augmentation), the dataset was expanded to a total of 4800 images for model training. Data was sourced from the public dataset platform Roboflow (

https://roboflow.com/) and open internet resources (including videos and images), encompassing both above-water and underwater perspectives.

To maximize diversity and real-world applicability, the dataset was meticulously curated across multiple dimensions:

Scenario Diversity: Includes indoor heated pools and outdoor open-air pools, with different lane designs and poolside environments.

Environmental Diversity: Covers various lighting conditions (e.g., bright midday sunlight, low evening light, artificial nighttime lighting, and light refraction underwater), different weather conditions (sunny, cloudy), and varying water clarity.

Subject Diversity: Encompasses individuals of different age groups (children, adults, the elderly), body types, skin tones, and those wearing different swimwear (e.g., dark, light, bikinis, swimsuits).

Behavioral Diversity: Data includes not only swimming and drowning subjects but also rich background activities such as walking poolside, diving, and playing in the water, enhancing the model’s ability to discern targets in complex scenes.

Technical Diversity: Data was obtained from various capture devices (e.g., surveillance cameras, action cameras, smartphones), introducing variations in resolution, viewing angles (overhead, eye-level, oblique), and aspect ratios.

The dataset was partitioned into training, validation, and test sets in a 7:2:1 ratio. All images were meticulously annotated in YOLO format using Roboflow tools. The annotation categories are defined as follows:

Swimming: Represents normal and controlled swimming postures. Characteristics typically include a streamlined, near-horizontal body position; rhythmic and coordinated limb movements (e.g., regular arm strokes and leg kicks); the head is often raised for breathing or submerged rhythmically; and movement direction is purposeful.

Drowning: Represents active or imminent drowning behavior, characterized fundamentally by a loss of voluntary motor control. Specific manifestations include a vertical or upright, tilted body position with an inability to swim effectively; arms may extend laterally or slap the water involuntarily (Instinctive Drowning Response) without providing propulsion; the head may be submerged for extended periods or tilted back with the mouth seeking air, often with a glassy-eyed stare; leg movement is minimal or absent, often leading to a gradual submersion after struggle.

To mitigate overfitting risks due to the extended training of 500 epochs, the aforementioned data augmentation strategies were rigorously applied. Furthermore, the training process was closely monitored. The loss curves for both training and validation sets demonstrated a convergent trend without significant divergence, indicating that overfitting was effectively controlled. The loss curves were analyzed in detail in the subsequent chapters.

Figure 5 showcases representative samples from the dataset, providing a visual comparison of the two behavioral modes. This dataset has been publicly released on the Roboflow platform.

4.2. Evaluation Metrics

This study employs multiple evaluation metrics to comprehensively assess model performance, including Drowning Precision (DP), Drowning Recall (DR), Drowning mean Average Precision (DmAP50), Swimming mean Average Precision (SmAP50), Frame Rate (FPS), Floating Point Operations (FLOPs, in G), Number of Parameters (P/M, in millions), and model size (Size/M, in MB).

Specifically, Drowning Precision measures the accuracy of predictions for the drowning class, representing the proportion of true drowning instances among samples predicted as drowning. Drowning Recall quantifies the detection capability for drowning instances, indicating the proportion of actual drowning cases correctly identified by the model.

DmAP50 and SmAP50 denote the mean average precision for drowning and swimming classes respectively at an Intersection over Union (IoU) threshold of 0.5, reflecting the comprehensive performance of detection precision and recall capability for both target categories. The Frame Rate (FPS) evaluates the model’s real-time inference performance, demonstrating its capability for instantaneous detection.

FLOPs represent the computational complexity of the model, while the Number of Parameters and model size indicate the model’s scale and storage requirements, respectively, assessing its deployment feasibility on resource-constrained devices. Collectively, these metrics comprehensively characterize the proposed model’s overall performance across two dimensions: detection effectiveness and model efficiency.

4.3. Experimental Setup and Convergence Analysis

All experiments in this study were conducted within the PyCharm 2024.3.3 (Professional Edition) integrated development environment. The hardware configuration includes an NVIDIA GeForce RTX 3090 GPU (manufactured by NVIDIA Corporation, Santa Clara, CA, USA) and an Intel(R) Xeon(R) Platinum 8362 CPU (manufactured by Intel Corporation, Santa Clara, CA, USA) (15 cores, 2.80 GHz base frequency), running on the Windows 11 operating system. The software environment utilizes Python 3.10, with PyTorch 2.0.0 as the deep learning framework, supported by CUDA 11.8 acceleration.

Experimental hyperparameters were carefully tuned for the drowning detection task, with key parameters detailed in

Table 1. The configuration follows established practices for YOLO-series models while incorporating adjustments specific to our application scenario. This setup enabled both our proposed model and the baseline models to achieve optimal performance.

The training process and convergence behavior were meticulously analyzed. Extensive data augmentation was employed to mitigate overfitting risks associated with the limited dataset size.

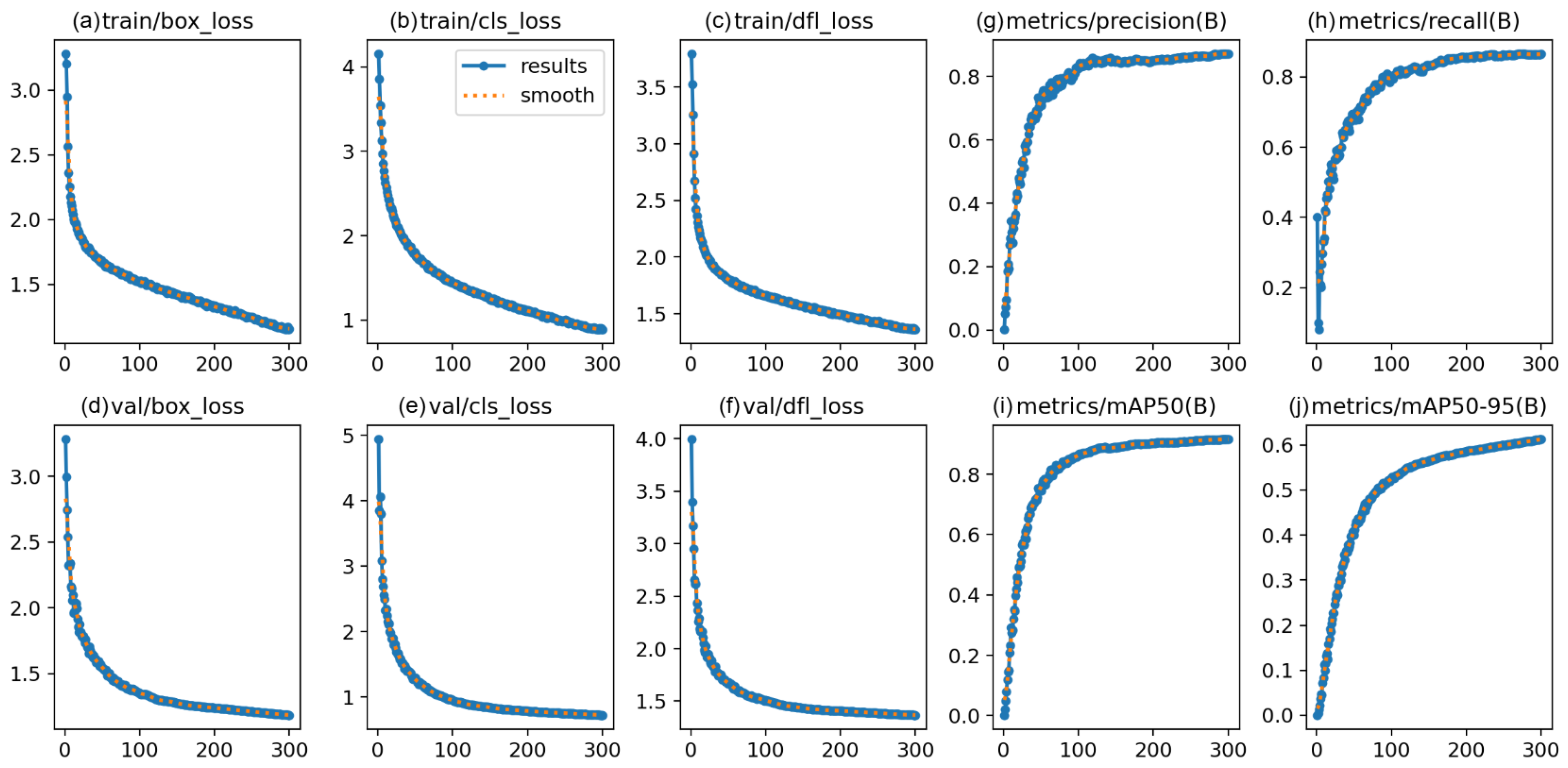

Figure 6 presents the trajectories of the training and validation losses alongside key performance metrics over the 300-epoch training course.

Analysis of the curves reveals a rapid decline in both training and validation losses during the initial 50 epochs, indicating efficient feature learning. The rate of decrease gradually slowed, with losses stabilizing after approximately 200 epochs, suggesting model convergence. Crucially, the validation loss closely tracked the training loss throughout the entire process without significant divergence, demonstrating strong generalization and a lack of severe overfitting. This is further supported by the performance metrics on the validation set. The mAP@50 exhibited a swift increase from near zero, surpassed 0.8 around epoch 100, and eventually plateaued at a high value of 0.941 by epoch 300.

The learning rate scheduling strategy contributed to this stable convergence. The learning rate was gradually reduced from its initial value of 0.01 to a final value of 0.000133, allowing for precise parameter tuning in the later stages of training without introducing instability.

In conclusion, the combination of a carefully chosen experimental setup, extensive data augmentation, and a tailored training regimen resulted in a stable and effective learning process. The model converged reliably over 300 epochs, achieving high performance on the validation set without exhibiting signs of overfitting, thereby validating the chosen experimental parameters and overall approach.

4.4. Comparative Experiments

To validate the effectiveness of the proposed YOLO11n-based improved model, this study selected multiple mainstream YOLO series versions, advanced object detection models, and representative non-YOLO series models for comparative experiments under identical datasets and experimental conditions. The results are presented in

Table 2. To ensure the robustness of the conclusions, all metrics are reported as the mean with a 95% confidence interval (CI) from five independent runs (

n = 5), and statistical significance was assessed using paired

t-tests (

= 0.05).

The improved model demonstrates outstanding performance in drowning detection: Drowning Precision (DP) reaches 88.4% (88.4 ± 0.4%), and Drowning Recall (DR) reaches 89.7% (89.7 ± 0.5%), significantly outperforming the lightweight models YOLO11n (DP: 85.7 ± 0.5%, DR: 88.8 ± 0.6%) and YOLO12n (DP: 80.8 ± 0.8%, DR: 83.3 ± 0.7%) (p < 0.05) while matching the performance of the medium-sized models YOLO11s and YOLO11m, and surpassing traditional one-stage detectors like RetinaNet (DP: 84.9 ± 0.6%, p < 0.05). The drowning mean Average Precision (DmAP50) was 94.1% (94.1 ± 0.3%), which is statistically superior to most comparison models, including YOLO11n (91.8 ± 0.4%, p < 0.01), YOLOv5 (92.9 ± 0.3%, p < 0.05), and Faster R-CNN (92.8 ± 0.5%, p < 0.05). It demonstrates highly competitive performance against the state-of-the-art ViTDet (94.5 ± 0.6%), with the difference being not statistically significant (p > 0.05), which significantly enhances the detection accuracy for critical targets. For swimming detection, the improved model achieves 85.6% (85.6 ± 0.4%) SmAP50, outperforming most baseline models (e.g., vs. YOLO11n: 82.4 ± 0.5%, p < 0.01) and demonstrating robust multi-class recognition capability.

Although the inference speed (FPS) of the improved model is approximately 80.5 (80.5 ± 2.5) frames/second, lower than some models like YOLO11m and YOLO11l, it still meets the real-time requirements for drowning detection scenarios. In terms of model efficiency, the computational complexity (FLOPs) is only 6.2 G (6.2 ± 0.1 G), with approximately 2.02 million parameters and a model size of just 4.25MB, significantly lower than RTDERT-I, large-scale YOLO11 models, Faster R-CNN, ViTDet, etc., facilitating deployment in resource-constrained environments.

Minor declines in certain metrics (such as inference speed and detection accuracy for specific classes) primarily stem from the computational overhead introduced by enhanced feature representation and multi-scale fusion capabilities. Specifically, while multi-layer attention modules, frequency domain feature fusion, and weighted feature pyramid structures significantly enhance recognition of drowning targets in complex scenes, they also introduce additional computational burden, resulting in slightly reduced inference efficiency. Simultaneously, the deepened backbone network and detection head structures, designed to improve detection accuracy, further increase model complexity, leading to trade-offs in some lightweight metrics. Overall, this balance reflects a reasonable compromise between accuracy and efficiency, better aligning with the high precision requirements of practical drowning detection applications.

In summary, the proposed improved model effectively reduces model complexity and storage requirements while maintaining high detection precision and recall, achieving an optimal balance between detection performance and lightweight design. The statistical analysis confirms that the performance improvements are robust and significant, demonstrating broad applicability and promotion value for practical drowning detection applications.

4.5. Ablation Study

To systematically evaluate the impact of each proposed module on the drowning detection performance of YOLO11n, this paper conducts ablation studies: the lightweight module LGCBlock, attention-enhanced module C2PSAiSCSA, and feature fusion module BiFF-Net are incrementally integrated into the baseline model to investigate their individual contributions to detection performance and operational efficiency. The results, presented in

Table 3, are reported as mean ±95% CI from five independent runs, with statistical significance (paired

t-test,

= 0.05) calculated against the baseline (YOLO11n).

First, after introducing the lightweight downsampling feature extraction module (denoted as A), which integrates the attention-enhanced properties of LAE and the efficient convolution design of GhostC3k2, the model’s computational complexity and parameter count are significantly reduced (FLOPs: 5.9 ± 0.1 G vs. 6.3 ± 0.1 G; Params: 2.21 M vs. 2.58 M). This module effectively decreases FLOPs and model size, enhancing lightweight performance. However, due to a trade-off in feature representation capability, a slight decline in precision and recall for the drowning category is observed (DmAP50: 92.0 ± 0.4% vs. 91.8 ± 0.4%, p > 0.05), illustrating the inherent balance between lightweight design and detection performance.

Second, when independently incorporating the inverted residual separated attention module C2PSAiSCSA (denoted as B), its spatial–channel selective attention mechanism strengthens the model’s ability to capture critical features within drowning target regions. This results in a significant improvement in drowning category detection precision and mAP (DmAP50: 93.0 ± 0.3% vs. 91.8 ± 0.4%, p < 0.01). Simultaneously, while maintaining low computational complexity, this module effectively enhances the model’s capability to recognize small targets against complex backgrounds, achieving substantial performance gains.

Third, after independently integrating BiFF-Net (denoted as C), the optimized weighted fusion process for multi-scale features enriches the interaction and propagation of semantic information. This strategy not only further boosts detection recall (DR: 90.1 ± 0.6%) but also plays a crucial role in reducing computational resource consumption and model size, thereby enhancing the model’s practicality and deployment efficiency.

Finally, modules are progressively combined: The YOLO11n+A+B configuration introduces the attention mechanism on the lightweight foundation, achieving a balance between detection accuracy (DmAP50: 92.3 ± 0.4%) and model efficiency. The YOLO11n+A+B+C configuration leverages the synergistic effect of all three modules, yielding optimal performance in drowning category precision, recall, and mAP (DmAP50: 94.1 ± 0.3% vs. baseline 91.8 ± 0.4%, p < 0.001). Although inference speed exhibits minor fluctuations, it remains within application requirements, while computational complexity and parameter size are substantially reduced.

The ablation studies comprehensively validate the synergistic effects of lightweight design, attention mechanisms, and efficient feature fusion. The statistical analysis confirms the significant contribution of each module, demonstrating the targeted contributions of each module in enhancing performance and optimizing efficiency, ultimately achieving an ideal balance between accuracy and efficiency for the drowning detection task.

4.6. Visualization Result Analysis

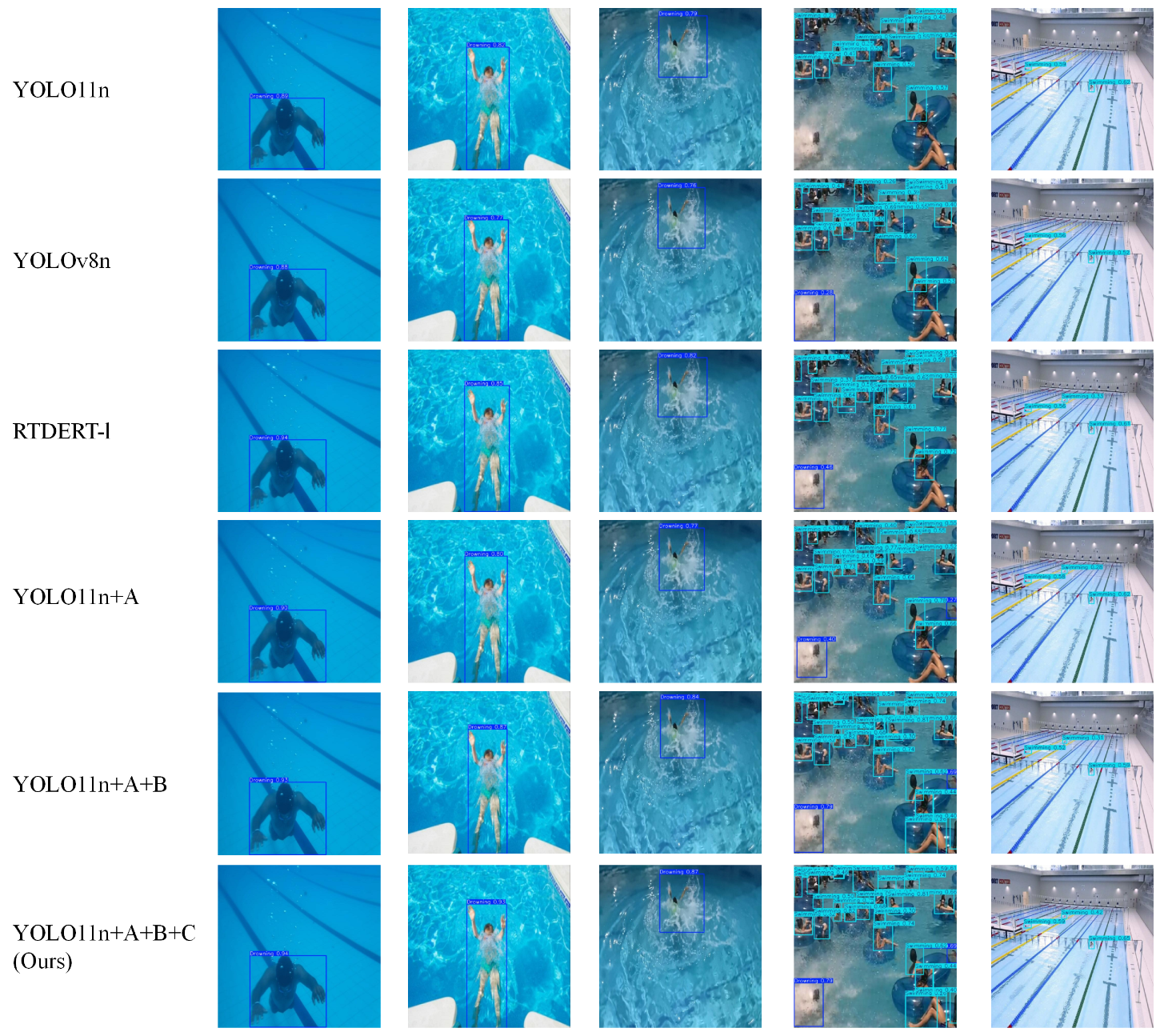

To intuitively demonstrate the advantages of the proposed algorithm in complex real-world scenarios, particularly its capability to address challenges such as water surface glare, crowd occlusion, and small object detection, this section provides a detailed qualitative analysis, as shown in

Figure 7.

As shown in the second example column, under interference from complex bright backgrounds caused by strong water surface glare, the baseline model YOLO11n was able to detect the drowning person but with relatively low confidence. In contrast, our model—leveraging the powerful spatial–channel attention mechanism of the C2PSAiSCSA module—effectively suppresses glare-induced background noise and focuses on authentic target features. This results in more complete and accurate detections without false positives.

As illustrated in the third and fourth example columns, in scenarios with dense crowds and splash occlusion between targets, YOLO11n exhibits low confidence in detecting partially occluded targets due to its limited receptive field and insufficient feature extraction capability. In comparison, the C2PSAiSCSA module in our model enhances the ability to focus on key parts of the targets (such as heads and arms), effectively penetrating visual occlusions. It successfully localizes and identifies occluded drowning targets, significantly improving the model’s robustness.

As demonstrated in the fifth example column, for distant small-scale targets (with bounding boxes occupying less than 32 × 32 pixels), the detection confidence of all comparative models declines. Notably, YOLO11n and YOLOv8n show high missed-detection rates, while other models remain effective in identifying these targets. By bidirectionally integrating deep semantic information with shallow high-resolution features, the proposed BiFF-Net module supplies the detection head with rich, fine-grained details of small targets. As a result, our method achieves the highest and most stable detection performance for small objects, successfully validating the design objective of this module.

In summary, the visualization results strongly corroborate, from a qualitative perspective, the superior performance of the proposed model in addressing critical challenges in practical deployment, especially empowered by its core modules, which aligns with and reinforces the conclusions drawn from the previous quantitative analysis.

6. Conclusions

This paper proposes the YOLO11-LiB model, an improved version based on YOLO11n, to address the practical requirements of drowning detection in swimming pool scenarios. Through lightweight design, attention mechanism optimization, and multi-scale feature fusion, it effectively resolves bottlenecks in existing technologies regarding edge deployment efficiency, robustness in complex environments, and multi-scale target detection.

The main research conclusions are as follows:

Lightweight design significantly enhances deployment adaptability: LGCBlock through synergistic optimization of LAE downsampling and GhostC3k2 structure, reduces downsampling computation by 87.5% and feature extraction overhead by 50% while preserving the integrity of key features. This substantially reduces model parameters and size, meeting deployment requirements in resource-constrained scenarios such as pool-embedded terminals.

Attention mechanism strengthens robustness in complex environments: C2PSAiSCSA enhances perception of subtle drowning postures by combining spatial-channel decoupled attention with local context modeling. It effectively suppresses interference from water surface glare and crowd occlusion, increasing drowning recall rate (DR) to 89.7%.

Fusion improves multi-scale detection accuracy: BiFF-Net optimizes semantic fusion of multi-scale features through its bidirectional feedback mechanism of dynamic weighting and frequency-domain enhancement. It particularly improves detection accuracy for small targets, achieving a DmAP50 of 94.1%, outperforming all comparison models.

Comprehensive performance balance validates solution effectiveness: Comparative and ablation experiments demonstrate that YOLO11-LiB achieves a DmAP50 of 94.1%, representing a 2.3% improvement over the baseline YOLO11n, while reducing model size by 19.2%. This achieves an ideal “accuracy–efficiency” balance, providing reliable technical support for real-time drowning detection.

Current research on aquatic object detection utilizing YOLO architectures demonstrates notable limitations: The visual datasets commonly employed frequently lack comprehensive coverage of complex, variable real-world aquatic environments, including adverse weather, turbid water, and low-light conditions, while exhibiting inadequate robustness under extreme optical circumstances. More critically, as single-frame detectors, YOLO-based systems fundamentally cannot capture temporal features inherent in continuous dynamic processes such as drowning, substantially elevating risks of false alarms and missed detections.

Future work must urgently prioritize constructing diversified datasets encompassing more complex and extreme scenarios, alongside exploring integration of multimodal sensory information to enhance perceptual reliability in challenging environments. Concurrently, transcending the single-frame detection paradigm through incorporation of temporal modeling or object tracking techniques is essential to analyze targets’ continuous motion states and behavioral patterns. This paradigm shift will enable more precise identification of progressive events like drowning. Collectively, these advancements will significantly improve system practicality and generalization capability, facilitating deployment of aquatic safety monitoring technologies across broader, more demanding real-world applications.