Fusing Residual and Cascade Attention Mechanisms in Voxel–RCNN for 3D Object Detection

Abstract

1. Introduction

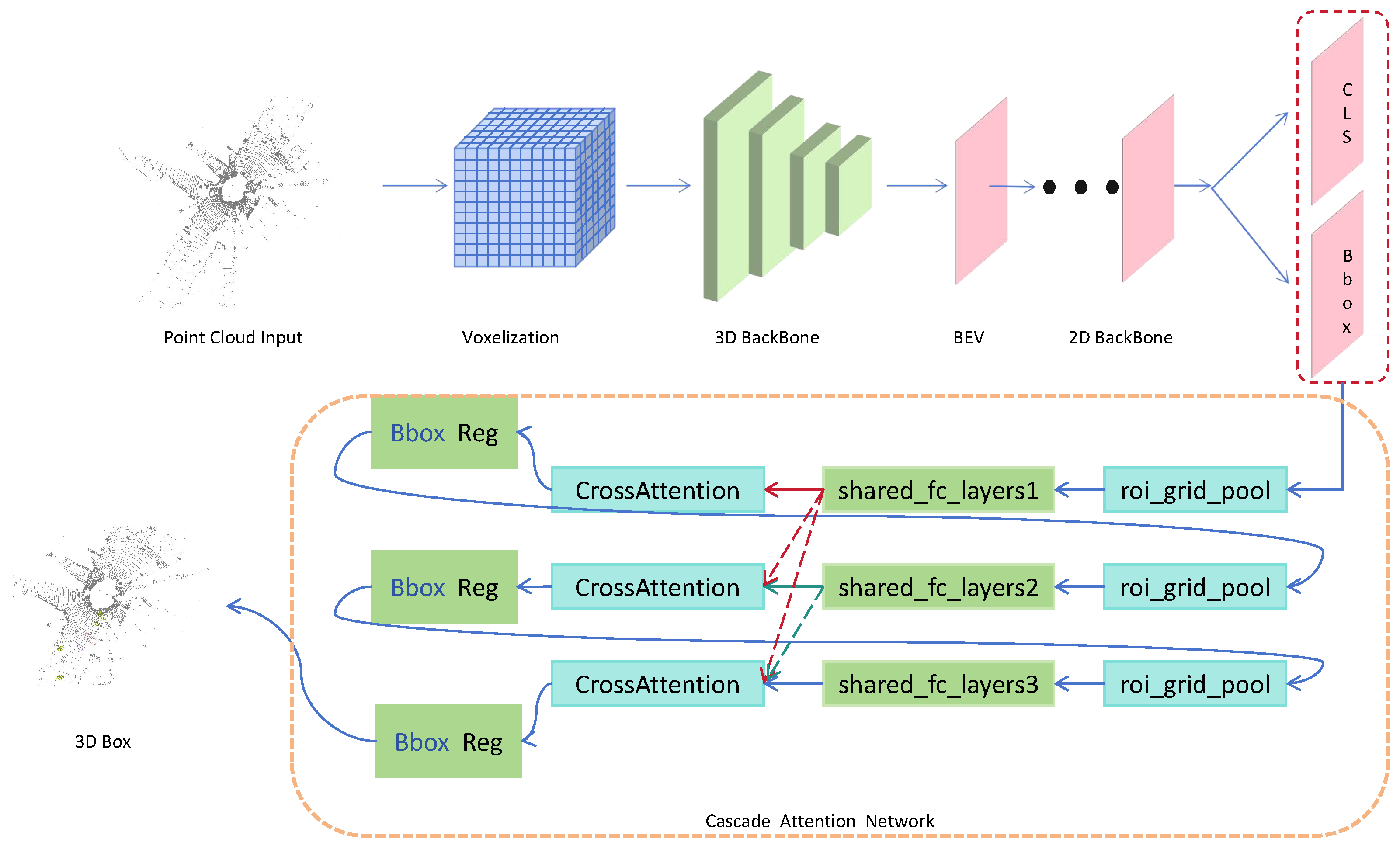

- (1)

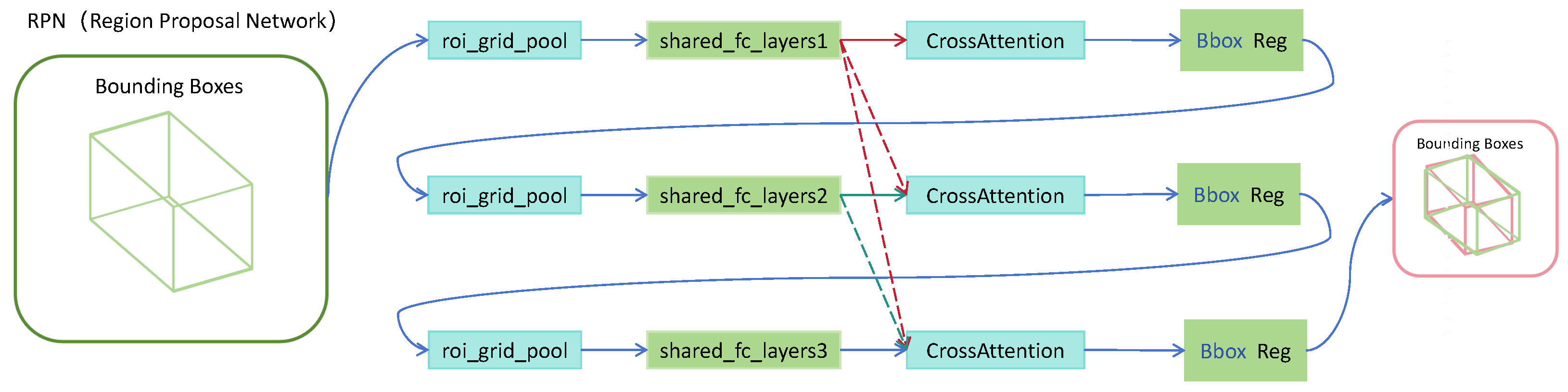

- To address the limited performance of the RPN, a Cascaded Attention Network is designed to iteratively refine region proposals and produce high-quality predictions.

- (2)

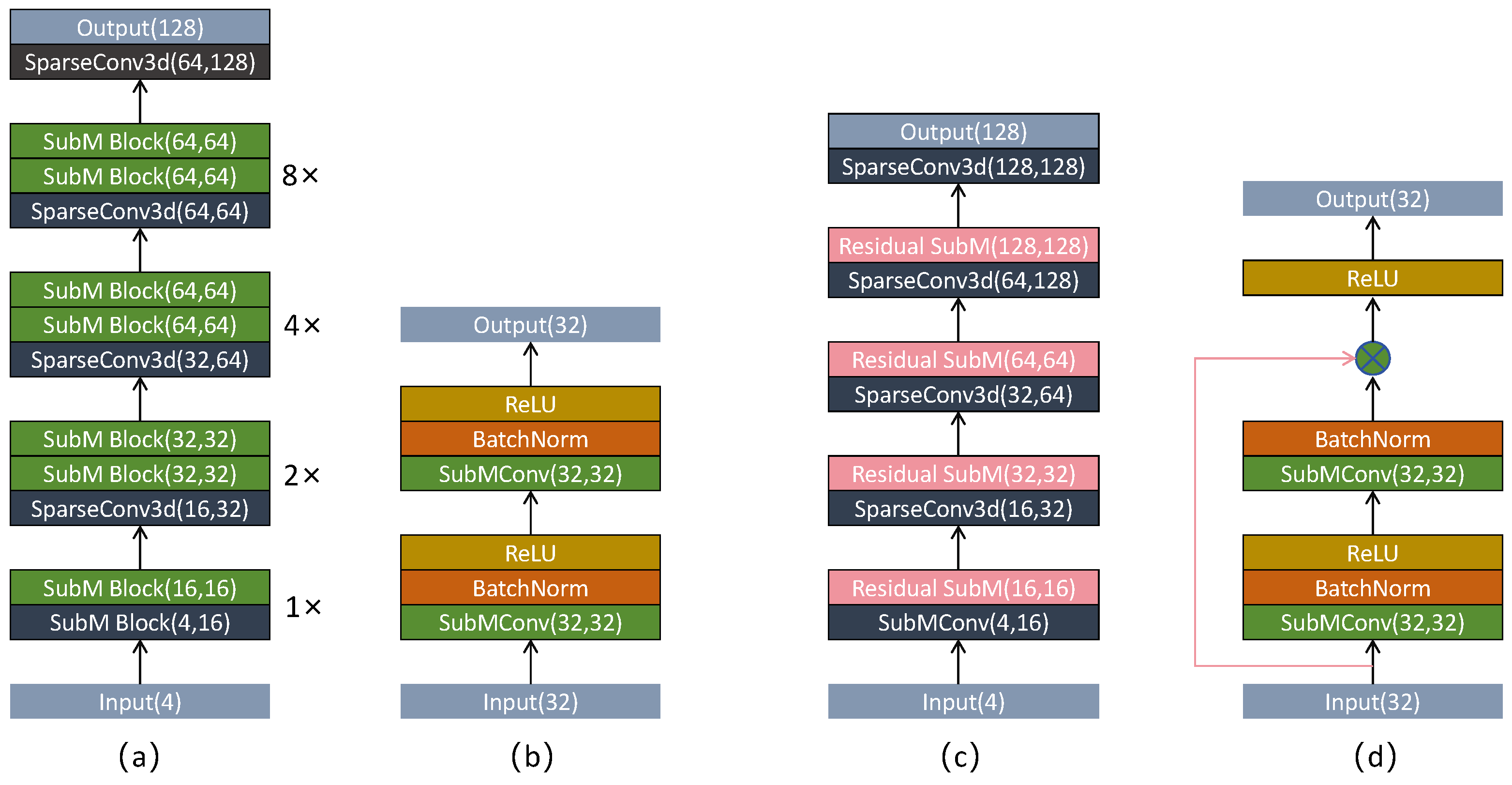

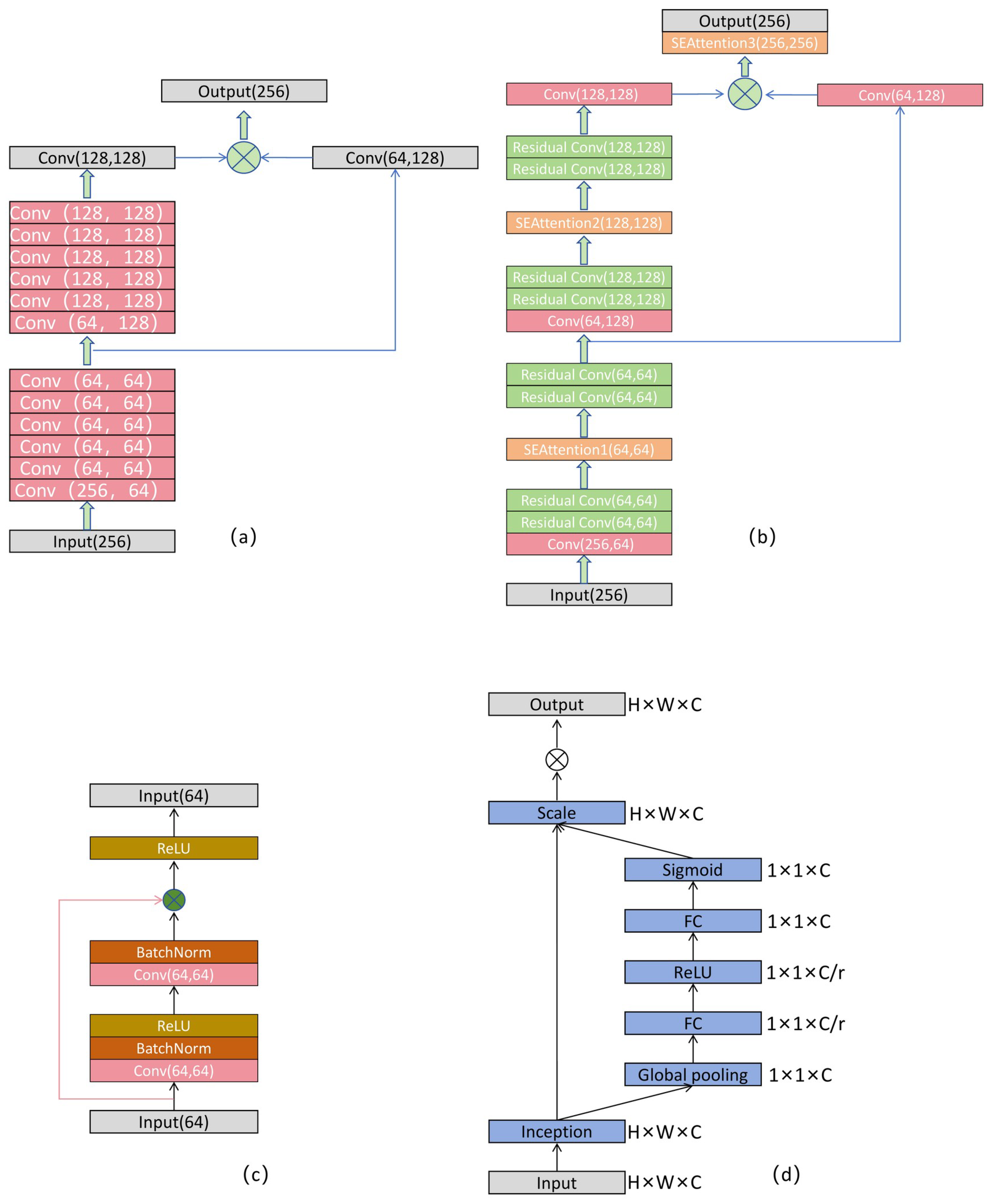

- To mitigate the degradation in feature representation caused by excessively deep convolutional layers in both 3D and 2D backbones, a 3D Residual Network is introduced to enhance feature transmission across layers. Furthermore, a 2D Residual Attention Network is proposed to improve the model’s sensitivity to small-scale objects by incorporating attention-based feature weighting.

- (3) The proposed method is validated on the KITTI dataset. At the hard difficulty level of 3D Average Precision (AP) computed over 40 recall positions (R40), detection accuracy for cars, pedestrians, and cyclists improves over the Voxel–RCNN baseline by 3.34, 10.75, and 4.61 percentage points, respectively.
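The first contribution describes iterative refinement of RPN proposals. As a rough illustration of that general idea, the sketch below runs a cascade in which each stage re-pools features at the current box, attends over the features of all earlier stages, and adds predicted box deltas; it follows the spirit of CasA (Wu et al., in the reference list) and is not the authors' CAN. Assumptions: one feature vector per proposal, dot-product attention without learned projections, additive deltas instead of the usual anchor-normalised encoding, and an output made of the last-stage box plus the mean stage score. `extract_feats`, the stage heads and the toy target are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_stage_attention(history):
    """Fuse one proposal's features from all stages seen so far.

    history: list of equal-length feature vectors, oldest stage first.
    The newest stage is the query; every stage serves as key and value
    (scaled dot-product attention without learned projections).
    """
    query = history[-1]
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([scale * sum(q * k for q, k in zip(query, key)) for key in history])
    return [sum(w * feats[c] for w, feats in zip(weights, history)) for c in range(len(query))]


def cascade_refine(box, extract_feats, heads):
    """Refine one box [x, y, z, l, w, h, yaw] through len(heads) stages.

    extract_feats(box) -> feature vector pooled at the current box (hypothetical).
    head(fused_feats, box) -> (deltas, score) is one stage's regressor/classifier.
    Returns the last-stage box and the mean of the per-stage scores.
    """
    history, scores = [], []
    for head in heads:
        history.append(extract_feats(box))      # re-pool features at the refined box
        fused = cross_stage_attention(history)  # attend over earlier stages
        deltas, score = head(fused, box)
        box = [b + d for b, d in zip(box, deltas)]
        scores.append(score)
    return box, sum(scores) / len(scores)


# Toy usage: a hypothetical head that ignores the features and moves the box
# halfway toward a known target, so the box error halves at every stage.
target = [10.0, 2.0, -1.0, 3.9, 1.6, 1.5, 0.0]
proposal = [11.0, 2.5, -1.2, 3.5, 1.8, 1.4, 0.2]


def toy_feats(box):
    return [b - t for b, t in zip(box, target)]


def toy_head(fused, box):
    return [-0.5 * (b - t) for b, t in zip(box, target)], 0.9


refined, score = cascade_refine(proposal, toy_feats, [toy_head] * 3)
```

Averaging the stage scores is one common choice in cascade detectors; a real head would of course regress deltas from `fused` rather than from a known target.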
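The second contribution relies on residual connections to keep features from degrading in deep backbones. A minimal sketch of why this helps, assuming a basic two-layer residual block reduced to per-voxel linear maps (a real 3D backbone would use sparse 3D convolutions, whose exact configuration here is not given): with the residual branch initialised to zero, a stack of 20 blocks passes a non-negative input through unchanged, while the same layers without the skip connection output zeros. All function names are illustrative.

```python
def matvec(weights, x):
    """Multiply a weight matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def relu(x):
    return [max(0.0, v) for v in x]


def residual_block(x, w1, w2):
    """Basic residual block: y = ReLU(x + F(x)), with F(x) = w2 . ReLU(w1 . x).

    x is one voxel's feature vector; w1 and w2 stand in for the two
    convolutions of a 3D residual block (reduced to 1x1x1, i.e. linear maps).
    """
    f = matvec(w2, relu(matvec(w1, x)))
    return relu([a + b for a, b in zip(x, f)])


def plain_block(x, w1, w2):
    """The same two layers without the skip connection."""
    return relu(matvec(w2, relu(matvec(w1, x))))


x = [1.0, 2.0, 0.5]
zeros = [[0.0] * 3 for _ in range(3)]
deep, plain = x, x
for _ in range(20):
    deep = residual_block(deep, zeros, zeros)   # identity path keeps the signal
    plain = plain_block(plain, zeros, zeros)    # no identity path: signal vanishes
```

The identity path means each block only has to learn a correction F(x) to its input, which is the usual explanation for why residual stacks train more reliably at depth.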
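For the 2D Residual Attention Network, the exact design is not reproduced in this text. The sketch below assumes Squeeze-and-Excitation channel weights (SE appears in the abbreviation list; Hu et al. is in the references) combined in the attention-residual form out = (1 + M) * F from Wang et al.'s Residual Attention Network (also in the references). It operates on a toy BEV feature map stored as C channels of flattened H*W values; the weight shapes and the reduction ratio are assumptions.

```python
import math


def se_channel_weights(fmap, w_reduce, w_expand):
    """Squeeze-and-Excitation channel weights, one value in (0, 1) per channel.

    fmap: C channels, each a flat list of H*W values from a 2D BEV feature map.
    w_reduce: (C/r x C) and w_expand: (C x C/r) fully connected weights.
    """
    squeezed = [sum(ch) / len(ch) for ch in fmap]                       # global average pooling
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w_reduce]
    logits = [sum(w * h for w, h in zip(row, hidden)) for row in w_expand]
    return [1.0 / (1.0 + math.exp(-z)) for z in logits]               # sigmoid


def residual_attention(fmap, w_reduce, w_expand):
    """Attention residual learning: out = (1 + M) * F, with M the SE weights.

    Because M lies in (0, 1), every channel is rescaled by a factor between
    1 and 2: attention can emphasise channels but never shrinks a response
    below its original magnitude.
    """
    m = se_channel_weights(fmap, w_reduce, w_expand)
    return [[(1.0 + mc) * v for v in ch] for mc, ch in zip(m, fmap)]


fmap = [[1.0, 2.0], [0.0, 4.0]]   # toy map: 2 channels, 1x2 spatial grid
eye = [[1.0, 0.0], [0.0, 1.0]]    # hypothetical FC weights with reduction ratio r = 1
weights = se_channel_weights(fmap, eye, eye)
```

With these toy weights the channel with the larger mean activation receives the larger weight; the (1 + M) form is what distinguishes residual attention from plain SE rescaling, which multiplies by M alone and can attenuate weak responses.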
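The reported results use KITTI 3D AP over 40 recall positions (R40). A simplified sketch of that metric, assuming detections have already been matched to ground truth at the class IoU threshold (on KITTI typically 0.7 for cars and 0.5 for pedestrians and cyclists): precision is interpolated as the best precision at any recall at or above each of the positions 1/40, 2/40, ..., 1, and the 40 values are averaged. The official devkit differs in details such as difficulty filtering and DontCare handling, so this is illustrative only.

```python
def average_precision_r40(scores, is_tp, num_gt):
    """Interpolated AP over the 40 recall positions 1/40, ..., 40/40, in percent.

    scores: confidence of each detection; is_tp: True if that detection was
    matched to a ground-truth box (IoU matching is assumed done beforehand);
    num_gt: number of ground-truth boxes of the class.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:                        # sweep the confidence threshold downward
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    total = 0.0
    for k in range(1, 41):
        r = k / 40
        # interpolated precision: best precision at any recall >= r (0 if r is never reached)
        total += max((p for rec, p in zip(recalls, precisions) if rec >= r), default=0.0)
    return 100.0 * total / 40
```

Since AP is already a percentage, a gain such as 80.17 to 83.51 is a difference of 3.34 percentage points rather than a relative increase of 3.34%.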

2. Related Work

3. Methods

3.1. Residual Backbone

3.2. Residual Attention Network

3.3. Cascade Attention Network

3.4. Loss Function

4. Experiment

4.1. Dataset and Evaluation Metrics

4.2. Experiment Details

4.3. Comparison with Other Algorithms

4.4. Ablation Experiment

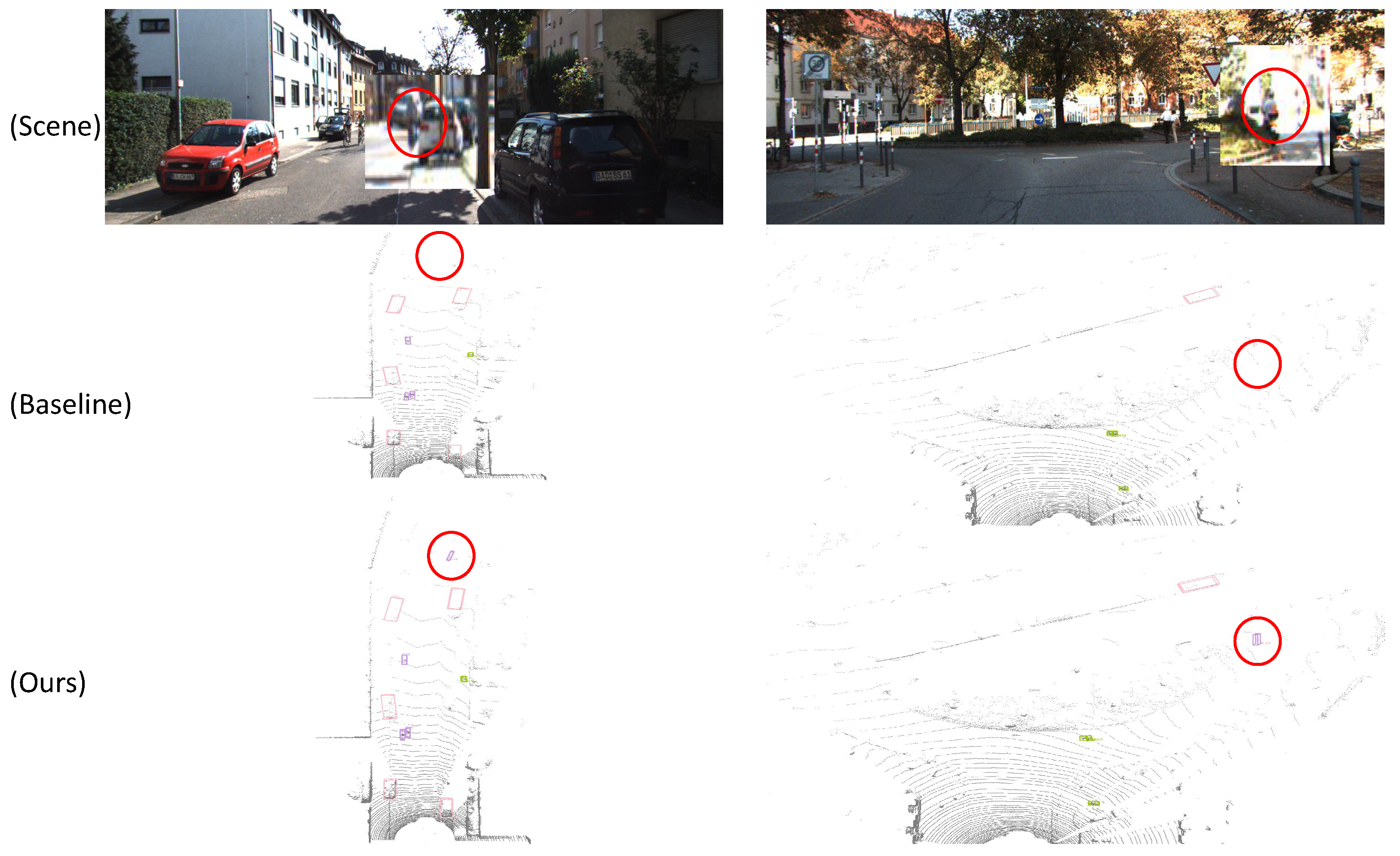

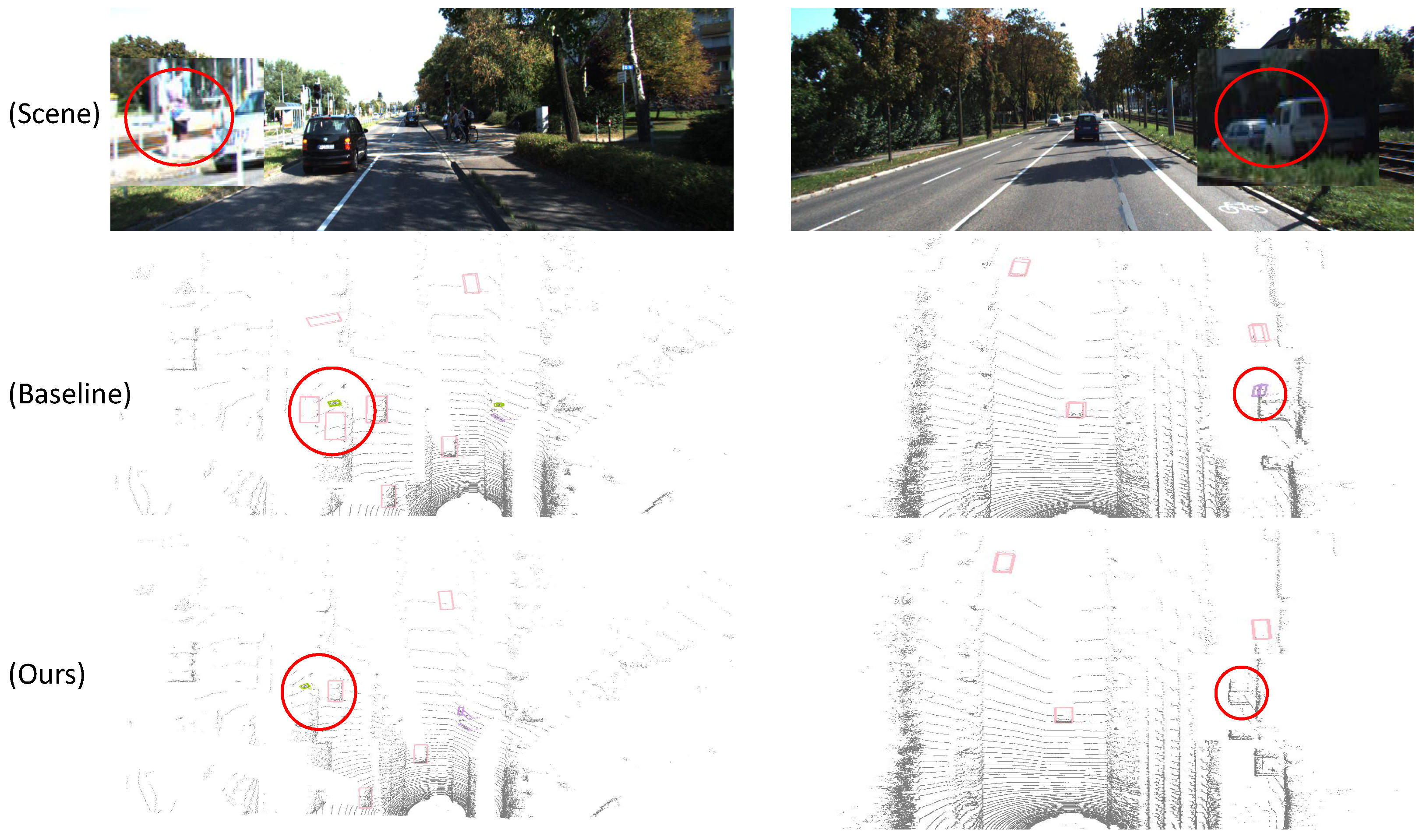

4.5. Visualisation of the Results

5. Discussion

5.1. Limitations

5.2. Improvement Methods

6. Summary

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| RCAVoxel-RCNN | Residual and Cascade Attention Mechanisms in Voxel–RCNN |
| RPN | Region Proposal Network |
| CAN | Cascade Attention Network |
| RAN | Residual Attention Network |
| SE | Squeeze-and-Excitation |
| BEV | Bird’s-Eye View |
| 3D | Three-Dimensional |
| 2D | Two-Dimensional |
| VFE | Voxel Feature Encoding |
| PFN | Pillar Feature Net |
| VSA | Voxel Set Abstraction |
| FC | Fully Connected |

References

- Qian, R.; Lai, X.; Li, X. 3D object detection for autonomous driving: A survey. Pattern Recognit. 2022, 130, 108796.

- Wu, Y.; Wang, Y.; Zhang, S.; Ogai, H. Deep 3D object detection networks using LiDAR data: A review. IEEE Sens. J. 2020, 21, 1152–1171.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. In Advances in Neural Information Processing Systems; NIPS Foundation: La Jolla, CA, USA, 2017; Volume 30.

- Shi, S.; Wang, X.; Li, H. PointRCNN: 3D object proposal generation and detection from point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 770–779.

- Yang, Z.; Sun, Y.; Liu, S.; Jia, J. 3DSSD: Point-based 3D single stage object detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11040–11048.

- Yan, Y.; Mao, Y.; Li, B. Second: Sparsely embedded convolutional detection. Sensors 2018, 18, 3337.

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-End learning for point cloud based 3d object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4490–4499.

- Deng, J.; Shi, S.; Li, P.; Zhou, W.; Zhang, Y.; Li, H. Voxel r-cnn: Towards high performance voxel-based 3d object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual Event, 2–9 February 2021; Volume 35, pp. 1201–1209.

- Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. Pointpillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12697–12705.

- Yin, T.; Zhou, X.; Krahenbuhl, P. Center-based 3D object detection and tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 11784–11793.

- Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X.; Li, H. Pv-rcnn: Point-voxel feature set abstraction for 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10529–10538.

- He, C.; Zeng, H.; Huang, J.; Hua, X.S.; Zhang, L. Structure aware single-stage 3d object detection from point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11873–11882.

- Sheng, H.; Cai, S.; Liu, Y.; Deng, B.; Huang, J.; Hua, X.S.; Zhao, M.J. Improving 3d object detection with channel-wise transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 2743–2752.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Mao, J.; Xue, Y.; Niu, M.; Bai, H.; Feng, J.; Liang, X.; Xu, H.; Xu, C. Voxel transformer for 3d object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3164–3173.

- Kong, T.; Sun, F.; Liu, H.; Jiang, Y.; Li, L.; Shi, J. Foveabox: Beyond anchor-based object detection. IEEE Trans. Image Process. 2020, 29, 7389–7398.

- Fan, L.; Xiong, X.; Wang, F.; Wang, N.; Zhang, Z. RangeDet: In defense of range view for lidar-based 3d object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 2918–2927.

- Xiong, S.; Li, B.; Zhu, S. DCGNN: A single-stage 3D object detection network based on density clustering and graph neural network. Complex Intell. Syst. 2023, 9, 3399–3408.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. In Advances in Neural Information Processing Systems; NIPS Foundation: La Jolla, CA, USA, 2015; Volume 28.

- Gholamalinezhad, H.; Khosravi, H. Pooling methods in deep neural networks, a review. arXiv 2020, arXiv:2009.07485.

- Tang, H.; Liu, Z.; Zhao, S.; Lin, Y.; Lin, J.; Wang, H.; Han, S. Searching efficient 3d architectures with sparse point-voxel convolution. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 685–702.

- Shi, S.; Jiang, L.; Deng, J.; Wang, Z.; Guo, C.; Shi, J.; Wang, X.; Li, H. PV-RCNN++: Point-voxel feature set abstraction with local vector representation for 3D object detection. Int. J. Comput. Vis. 2023, 131, 531–551.

- Xue, Y.; Mao, J.; Niu, M.; Xu, H.; Mi, M.B.; Zhang, W.; Wang, X.; Wang, X. Point2seq: Detecting 3d objects as sequences. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8521–8530.

- Wang, H.; Yu, Y.; Cai, Y.; Chen, X.; Chen, L.; Li, Y. Soft-weighted-average ensemble vehicle detection method based on single-stage and two-stage deep learning models. IEEE Trans. Intell. Veh. 2020, 6, 100–109.

- Wang, R.; Peethambaran, J.; Chen, D. Lidar point clouds to 3-D urban models: A review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 606–627.

- Graham, B.; Van der Maaten, L. Submanifold sparse convolutional networks. arXiv 2017, arXiv:1706.01307.

- Wang, H.; Chen, Z.; Cai, Y.; Chen, L.; Li, Y.; Sotelo, M.A.; Li, Z. Voxel-RCNN-complex: An effective 3-D point cloud object detector for complex traffic conditions. IEEE Trans. Instrum. Meas. 2022, 71, 2507112.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Dollár, P.; Appel, R.; Belongie, S.; Perona, P. Fast feature pyramids for object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1532–1545.

- Liu, Q.; Li, X.; Zhang, X.; Tan, X.; Shi, B. Multi-view joint learning and bev feature-fusion network for 3d object detection. Appl. Sci. 2023, 13, 5274.

- Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual attention network for image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3156–3164.

- Wu, H.; Deng, J.; Wen, C.; Li, X.; Wang, C.; Li, J. CasA: A cascade attention network for 3-D object detection from LiDAR point clouds. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5704511.

- Chen, C.F.R.; Fan, Q.; Panda, R. Crossvit: Cross-attention multi-scale vision transformer for image classification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 357–366.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The kitti dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

- Liao, Z.; Jin, Y.; Ma, H.; Alsumeri, A. Distance Awared: Adaptive Voxel Resolution to help 3D Object Detection Networks See Farther. In Proceedings of the 2023 42nd Chinese Control Conference (CCC), Tianjin, China, 24–26 July 2023; pp. 7995–8000.

- Wu, P.; Wang, Z.; Zheng, B.; Li, H.; Alsaadi, F.E.; Zeng, N. AGGN: Attention-based glioma grading network with multi-scale feature extraction and multi-modal information fusion. Comput. Biol. Med. 2023, 152, 106457.

| Method (3D AP, R40) | Car Easy | Car Mod | Car Hard | Ped Easy | Ped Mod | Ped Hard | Cyc Easy | Cyc Mod | Cyc Hard |
|---|---|---|---|---|---|---|---|---|---|
| PV-RCNN (CVPR) | 92.57 | 84.83 | 82.69 | 64.26 | 56.67 | 51.91 | 88.65 | 71.95 | 66.78 |
| PV-RCNN++ | 92.11 | 85.55 | 82.27 | 67.74 | 60.55 | 55.92 | 88.73 | 73.58 | 69.05 |
| PointPillars (CVPR) | 86.42 | 77.29 | 75.60 | 53.6 | 48.36 | 45.22 | 82.83 | 64.24 | 60.05 |
| SECOND (Sensors) | 90.55 | 81.86 | 78.61 | 55.94 | 51.14 | 46.17 | 82.96 | 66.74 | 65.34 |
| CT3D (ICCV) | 92.85 | 85.82 | 82.86 | 65.73 | 58.56 | 53.04 | 91.99 | 71.6 | 67.34 |
| VoxelNet (CVPR) | 87.88 | 75.58 | 72.77 | 56.46 | 50.97 | 45.65 | 78.18 | 61.74 | 54.68 |
| (TPAMI) | 89.36 | 80.23 | 78.88 | 65.7 | 61.32 | 55.4 | 85.6 | 69.24 | 65.63 |
| PointRCNN (CVPR) | 89.8 | 78.65 | 78.05 | 62.69 | 55.77 | 52.65 | 84.48 | 66.37 | 60.83 |
| Ours | 92.81 | 85.78 | 83.51 | 71.46 | 64.74 | 58.72 | 92.92 | 76.61 | 71.62 |
| Δ | −0.04 | −0.04 | +0.65 | +5.74 | +3.42 | +3.32 | +0.93 | +4.66 | +4.28 |

| Method (3D AP, R40) | Modules used | Car Easy | Car Mod | Car Hard | Ped Easy | Ped Mod | Ped Hard | Cyc Easy | Cyc Mod | Cyc Hard |
|---|---|---|---|---|---|---|---|---|---|---|
| Voxel–RCNN | | 92.34 | 82.71 | 80.17 | 60.75 | 52.93 | 47.97 | 87.31 | 71.94 | 67.43 |
| A | ✓ | 92.47 | 83.85 | 82.18 | 69.80 | 61.53 | 56.88 | 90.82 | 73.53 | 68.88 |
| B | ✓ ✓ | 92.58 | 83.86 | 82.33 | 69.9 | 61.54 | 56.95 | 91.22 | 73.46 | 69.91 |
| C | ✓ ✓ | 92.80 | 84.93 | 82.99 | 70.13 | 62.52 | 57.31 | 91.85 | 74.05 | 69.92 |
| Ours | ✓ ✓ ✓ | 92.81 | 85.78 | 83.51 | 71.46 | 64.74 | 58.72 | 92.92 | 76.61 | 71.62 |
| Improvement | | +0.47 | +3.09 | +3.34 | +10.71 | +11.81 | +10.75 | +5.61 | +4.67 | +4.61 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lu, Y.; Zhang, Y.; Fan, X.; Cai, D.; Gong, R. Fusing Residual and Cascade Attention Mechanisms in Voxel–RCNN for 3D Object Detection. Sensors 2025, 25, 5497. https://doi.org/10.3390/s25175497


Chicago/Turabian style: Lu, You, Yuwei Zhang, Xiangsuo Fan, Dengsheng Cai, and Rui Gong. 2025. "Fusing Residual and Cascade Attention Mechanisms in Voxel–RCNN for 3D Object Detection" Sensors 25, no. 17: 5497. https://doi.org/10.3390/s25175497
