Abstract

To address the insufficient accuracy of traditional single-sensor navigation methods in dense planting environments of pomegranate orchards, this paper proposes a vision and LiDAR fusion-based navigation line extraction method for orchard environments. The proposed method integrates a YOLOv8-ResCBAM trunk detection model, a reverse ray projection fusion algorithm, and geometric constraint-based navigation line fitting techniques. The object detection model enables high-precision real-time detection of pomegranate tree trunks. A reverse ray projection algorithm is proposed to convert pixel coordinates from visual detection into three-dimensional rays and compute their intersections with LiDAR scanning planes, achieving effective association between visual and LiDAR data. Finally, geometric constraints are introduced to improve the RANSAC algorithm for navigation line fitting, combined with Kalman filtering techniques to reduce navigation line fluctuations. Field experiments demonstrate that the proposed fusion-based navigation method improves navigation accuracy over single-sensor methods and semantic-segmentation methods, reducing the average lateral error to 5.2 cm, yielding an average lateral error RMS of 6.6 cm, and achieving a navigation success rate of 95.4%. These results validate the effectiveness of the vision and 2D LiDAR fusion-based approach in complex orchard environments and provide a viable route toward autonomous navigation for orchard robots.

1. Introduction

Pomegranate is an important economic crop whose cultivation has expanded rapidly in many regions [1,2]. However, the level of mechanization in orchard operations lags significantly behind that of other agricultural sectors. In densely planted pomegranate orchards in particular, traditional manual operations are labor-intensive and inefficient, which constrains the development of the pomegranate industry [3].

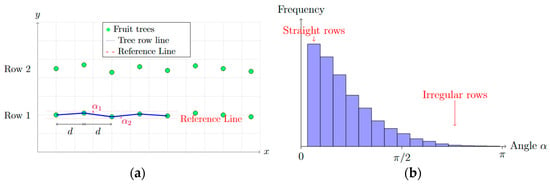

Compared with other orchards, pomegranate orchards exhibit distinct environmental characteristics and operational challenges. At the young-tree stage, trunk diameters are only 5–15 cm, and the planting density is high, with a row spacing of 2–3 m and an in-row spacing of 1–2 m, as shown in Figure 1. Beyond the spatial layout, ambient conditions directly affect both experiments and algorithm performance: fluctuations in temperature and humidity broaden the image luminance dynamic range; strong direct sunlight and dappled canopy shadows cause unstable image contrast and textures, increasing the difficulty of trunk detection; the ground surface contains both bare soil and weeds, and the soil is predominantly sandy loam—under dry conditions dust is raised, whereas under wet conditions water-surface glare and wheel slip further degrade the measurement quality of the camera and LiDAR. These climate, soil, and ground-surface factors, compounded with the high-density planting structure, impose more demanding operating conditions than typical orchards, making autonomous navigation more challenging in object perception, robust fitting, and path tracking [4,5].

Figure 1. Pomegranate orchard environment: (a) Regular planting pattern; (b) Slope covering planting pattern.

Traditional orchard navigation methods rely primarily on Global Navigation Satellite Systems (GNSS). However, in orchards occluded by dense canopies, GNSS signals are susceptible to interference or loss, resulting in significantly degraded positioning accuracy [6]. To overcome this limitation, researchers have developed sensor-based active positioning and navigation technologies, among which Light Detection and Ranging (LiDAR) and machine vision have attracted considerable attention due to their robustness in complex environments [7].

In the field of machine vision navigation, Liu et al. [8] developed a single-stage navigation path extraction network (NPENet) that directly predicts road centerlines through an end-to-end approach, simplifying traditional multi-stage processing workflows. Cao et al. [9] implemented grape trunk detection based on an improved YOLOv8-Trunk network and fitted navigation lines using the least squares method. Yang et al. [10] proposed a visual navigation path extraction method based on neural networks and pixel scanning, improving the segmentation performance for orchard road condition information and background environments. Silva et al. [11] presented a deep learning crop row detection algorithm capable of achieving robust visual navigation under different field conditions. Gai et al. [12] developed a depth camera-based crop row detection system particularly suitable for robot navigation under tall crop canopies such as corn and sorghum, addressing GPS signal occlusion issues. Winterhalter et al. [13] proposed a method using pattern Hough transform to detect small plants, improving the accuracy of crop row detection in early growth stages. Gai et al. [14] introduced a color and depth image fusion method that enhanced crop detection accuracy under high weed density conditions. However, these single vision-based methods experience significant performance degradation under adverse lighting conditions and struggle to directly obtain precise distance information, limiting their application in precision navigation [15].

Jiang et al. [16] developed a 2D LiDAR-based orchard spraying robot navigation system that achieved trunk detection and path planning through DBSCAN clustering and RANSAC algorithms. Li et al. [17] proposed a 3D LiDAR-based autonomous navigation method for orchard mobile robots, optimizing point cloud processing efficiency through octree data structures. Abanay et al. [18] developed a ROS-based 2D LiDAR navigation system for strawberry greenhouse environments. Liu et al. [19] presented a LiDAR-based navigation system for standardized apple trees, but it primarily relied on regularly arranged tree configurations. Malavazi et al. [20] developed an autonomous agricultural robot navigation algorithm based on 2D LiDAR, achieving crop row line extraction through an improved PEARL method that enables robust navigation without prior crop information. Jiang et al. [21] proposed a 3D LiDAR SLAM-based orchard spraying robot navigation system, combining NDT and ICP point cloud registration algorithms to improve positioning accuracy. Jiang et al. [22] developed a 3D LiDAR SLAM-based autonomous navigation system for stacked cage farming, achieving reliable robot localization and mapping. Firkat et al. [23] proposed FGSeg, a LiDAR-based field ground segmentation algorithm for agricultural robots, achieving high-precision ground detection and obstacle recognition through seed ground point extraction. However, these single LiDAR-based methods still face challenges of point cloud processing efficiency and high computational resource consumption in complex agricultural environments, with detection accuracy significantly declining when trunk diameters are small, limiting their widespread application in low-cost agricultural robots [24].

To overcome the limitations of single sensors, multi-sensor fusion technology has gradually become a research hotspot in the field of orchard navigation. Jiang et al. [25] employed thermal cameras and LiDAR as sensors, utilizing YOLACT (You Only Look At CoefficienTs) deep learning for navigation, object detection, and image segmentation, integrating accurate distance data from LiDAR to achieve real-time navigation based on vehicle position. Ban et al. [26] proposed a camera-LiDAR-IMU fusion-based navigation line extraction method for corn fields, achieving precise agricultural robot navigation through feature-level fusion. Kang et al. [27] presented a high-resolution LiDAR and camera fusion method for fruit localization, achieving fusion by projecting point clouds onto image planes. Han et al. [28] developed a LiDAR and vision-based obstacle avoidance and navigation system, using calibration parameters to transform both data types into the same coordinate system. Shalal et al. [29] developed an orchard mapping and mobile robot localization system based on onboard camera and laser scanner data fusion, achieving tree detection and precise robot positioning through geometric transformation registration of laser point clouds with visual images. Xue et al. [30] proposed a trunk detection method based on LiDAR and visual data fusion, employing evidence theory for multi-sensor information fusion and converting laser point clouds and visual images to a unified coordinate system through calibration parameters, improving trunk detection robustness. Yu et al. [31] noted in their review of agricultural 3D reconstruction technology that multi-sensor fusion technology combines LiDAR’s precise distance information with cameras’ rich texture information, providing more comprehensive environmental perception capabilities for precision agriculture. Ji et al. [32] proposed a farmland obstacle detection and recognition method based on fused point cloud data, achieving reliable obstacle detection in complex agricultural environments through multi-sensor data fusion. However, these multi-sensor fusion methods still face challenges in data synchronization, sensor calibration, and real-time processing, requiring further algorithm optimization to reduce computational complexity and improve system practicality [33]. Currently, specialized research on autonomous navigation for pomegranate orchards is relatively limited, with most studies focusing on mature orchard environments such as apple and citrus orchards [34], showing limited adaptability for dense planting scenarios.

In current agricultural robot navigation, many studies adopt conventional algorithm-based navigation line extraction methods, such as RANSAC-based navigation line fitting, simple fusion of sensor data, and SLAM-based global path planning. However, these existing methods often fail to cope effectively with densely planted orchards characterized by complex occlusions and diverse scene changes, especially when tree trunks are thin and the spacing between trunks is narrow. They are susceptible to environmental disturbances, occlusions, and illumination variations, leading to insufficient navigation accuracy and poor adaptability under complex conditions, and they cannot realize real-time orchard autonomous navigation without a prior map. To address these issues, this paper proposes a fusion-based navigation line extraction scheme that combines a YOLOv8-ResCBAM trunk detection model with 2D LiDAR data, aiming to tackle the real-time autonomous navigation challenges in densely planted orchards that current techniques struggle with and to improve navigation accuracy and stability. This research employs a YOLOv8-ResCBAM trunk detection network model based on improved YOLOv8 [35], enhancing target trunk detection accuracy in dense environments through the introduction of residual connections and dual attention mechanisms. A reverse ray projection fusion algorithm is proposed that differs from traditional point cloud-to-image plane projection methods [36], projecting visual detection information into three-dimensional space and computing intersections with LiDAR scanning planes to achieve precise data association. A navigation line fitting algorithm based on geometric constraints and directional confidence is constructed, improving RANSAC algorithm fitting accuracy and stability through analysis of inter-tree geometric relationships and construction of angle histograms. 
The technical contributions of this research provide references for solving autonomous navigation problems in densely planted orchard environments and hold significant practical value for advancing orchard mechanization and intelligent development.

2. Materials and Methods

2.1. Experimental Platform

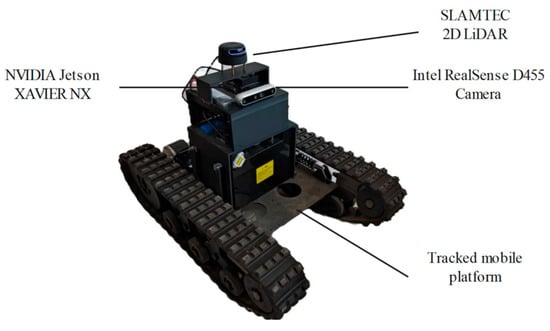

To validate the effectiveness of the proposed navigation system in pomegranate orchard environments, this study constructed an autonomous mobile platform as the experimental setup, as shown in Figure 2. The platform integrates an Intel RealSense D455 camera (Intel Corporation, Santa Clara, CA, USA), a SLAMTEC 2D LiDAR (Slamtec Co., Ltd., Shanghai, China), an NVIDIA Jetson Xavier NX edge-computing module (NVIDIA Corporation, Santa Clara, CA, USA), and other equipment. The parameters of each component in the experimental platform are presented in Table 1.

Figure 2. Experimental platform.

Table 1. Hardware components.

The communication architecture of the entire system is based on the ROS Topics mechanism. Sensor data (camera images and LiDAR point clouds) are published as corresponding topics through their respective ROS drivers. The trunk detection module subscribes to the camera image topic and publishes detection results (bounding box center coordinates and confidence scores) as new ROS topics. The navigation line fitting module simultaneously subscribes to both the visual detection results topic and the LiDAR point cloud topic, and after fusion processing, publishes the fitted navigation line parameters to the path tracking control module. The path tracking control module receives the navigation line information, calculates the required linear and angular velocity control commands, and publishes them to the chassis motion controller through ROS Topics, thereby achieving autonomous navigation control of the mobile platform.

2.2. Monocular Camera and 2D LiDAR Data Fusion

In the complex autonomous navigation process of pomegranate orchards, accurate fruit tree positioning is the key to achieving orchard navigation. Sensor calibration aims to realize data fusion between vision and LiDAR to obtain accurate orchard information. This study conducted camera intrinsic parameter calibration, camera-LiDAR extrinsic parameter calibration, and joint calibration of multiple sensors to the chassis coordinate system, ensuring accurate alignment of different sensor data within a unified coordinate system [37].

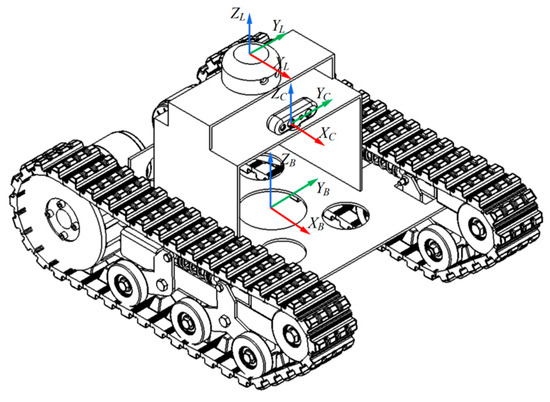

2.2.1. Coordinate System Definition and Establishment

To achieve effective fusion of multi-sensor data, this study established a standardized coordinate system framework. The chassis coordinate system is defined as the reference coordinate system, with its origin located at the chassis center point at a height above the ground. The LiDAR coordinate system has its origin at the LiDAR scanning center at a height above the ground. The camera coordinate system has its origin at the RGB camera optical center at a height above the ground. All proposed coordinate systems follow the convention where the x-axis points toward the vehicle’s forward direction, the y-axis points toward the vehicle’s left side, and the z-axis points vertically upward in accordance with the right-hand rule. The LiDAR is mounted directly above the origin of the chassis coordinate frame, while the camera is spatially offset relative to the LiDAR. The precise relative pose between the two sensors is obtained via joint extrinsic calibration. The arrangement of the mobile chassis and the two sensors is shown in Figure 3.

Figure 3. Positional relationship between chassis and sensors.

2.2.2. Spatial Fusion

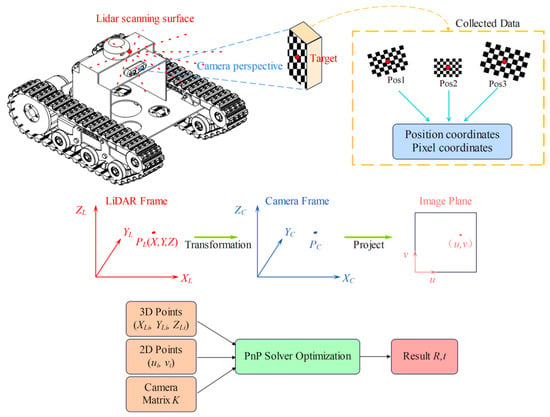

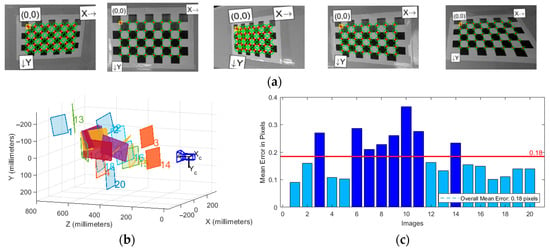

Camera intrinsic parameter calibration was performed using Zhang’s calibration method [38], employing the Camera Calibrator toolbox in MATLAB (2021b). The checkerboard grid used featured 8 × 5 inner corner points, with each square having a side length of 27 mm. As shown in Figure 4, checkerboard images were captured under different poses, and the camera’s focal length, principal point coordinates, radial distortion coefficients, and tangential distortion coefficients were calculated using functions provided by the OpenCV (3.3.1) library. The calibration process was repeated multiple times, and average values were taken to improve accuracy. The camera intrinsic parameter matrix is shown in Equation (1).

Figure 4. Schematic diagram of extrinsic parameter calibration.

Extrinsic parameter calibration between the camera and LiDAR is a crucial step for achieving multi-sensor data fusion. As illustrated in Figure 4, this study proposes an extrinsic calibration method suitable for 2D LiDAR and camera systems. The method first obtains the pixel coordinates of the calibration board’s center point and its coordinates in the LiDAR coordinate system, then employs the PnP (Perspective-n-Point) algorithm to accurately solve the spatial pose relationship of the camera relative to the LiDAR.

The essence of extrinsic calibration is to determine the rigid body transformation relationship between the LiDAR coordinate system and the camera coordinate system. The proposed 2D LiDAR-camera extrinsic calibration method takes pixel coordinates and radar coordinates as inputs, first converting the calibration board center point from the LiDAR coordinate system to the camera coordinate system. The transformation relationship between the two systems is shown in Equation (2).

$$P_c = R\,P_l + t \tag{2}$$

where $R$ is a 3 × 3 rotation matrix, $t$ is a 3 × 1 translation vector, $P_c$ represents the coordinates of the target point in the camera coordinate system, and $P_l$ represents the coordinates of the target point in the radar coordinate system.

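The coordinates of the calibration board center point are converted from the camera coordinate system to the pixel coordinate system through the transformation shown in Equation (3). The relationship between the camera coordinate system and pixel coordinate system has been extensively studied [39,40] and will not be elaborated upon in this paper.

$$s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = K\,P_c \tag{3}$$

The conversion process from the radar coordinate system to the pixel coordinate system is thus expressed as:

$$s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = K \left( R\,P_l + t \right) \tag{4}$$

where $s$ is the scale factor, $K$ is the camera intrinsic parameter matrix, $(u, v)$ are the pixel coordinates of the target point, $P_c$ and $P_l$ are its coordinates in the camera and radar coordinate systems, and $R$ and $t$ are the rotation matrix and translation vector between the two coordinate systems.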

For a given set of n calibration point pairs $\{(p_i, P_i)\}_{i=1}^{n}$, as shown in Equation (5), the objective of the PnP problem is to solve for the optimal rotation matrix $R$ and translation vector $t$.

$$(R^{*}, t^{*}) = \arg\min_{R,\,t} \sum_{i=1}^{n} \left\| p_i - \pi(P_i; R, t) \right\|^{2} \tag{5}$$

where $p_i$ is the i-th pixel point, $P_i$ is the three-dimensional coordinates of the i-th point in the LiDAR coordinate system, and $\pi(P_i; R, t)$ is the function that projects the i-th point to the pixel coordinate system through transformation parameters $(R, t)$.

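The position of the camera in the LiDAR coordinate system is calculated as:

$$C_l = -R^{\top} t$$

where $R$ and $t$ are the rotation matrix and translation vector obtained from the PnP solution.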

Based on the aforementioned mathematical model and algorithm workflow, precise extrinsic calibration between the camera and 2D LiDAR can be achieved. This method establishes a complete coordinate transformation chain from the LiDAR coordinate system to the camera coordinate system and then to the pixel coordinate system. By utilizing the PnP algorithm to solve for the rotation matrix $R$ and translation vector $t$, the relative position of the camera with respect to the 2D LiDAR is computed, thereby achieving precise alignment of data from both sensors within a unified coordinate system.
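The transformation chain described above can be illustrated with a minimal sketch. It assumes the standard pinhole model, a rotation matrix and translation vector that map LiDAR coordinates into the camera optical frame, and made-up numerical values; the function names `lidar_to_pixel` and `camera_position_in_lidar` are hypothetical and are not taken from the authors' implementation.

```python
def mat_vec(m, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def transpose(m):
    """Transpose a 3x3 matrix given as a list of rows."""
    return [[m[j][i] for j in range(3)] for i in range(3)]


def lidar_to_pixel(p_l, R, t, K):
    """Project a LiDAR-frame point to pixel coordinates.

    Applies P_c = R * P_l + t, then s * [u, v, 1]^T = K * P_c.
    """
    p_c = [a + b for a, b in zip(mat_vec(R, p_l), t)]
    uvw = mat_vec(K, p_c)
    s = uvw[2]  # scale factor (depth along the optical axis)
    if s <= 0:
        raise ValueError("point is not in front of the camera")
    return (uvw[0] / s, uvw[1] / s)


def camera_position_in_lidar(R, t):
    """Camera optical center expressed in the LiDAR frame: C = -R^T * t."""
    return [-x for x in mat_vec(transpose(R), t)]


# Illustrative values only (not the calibration results of the paper).
K = [[600.0, 0.0, 320.0], [0.0, 600.0, 240.0], [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [0.0, 0.1, 0.0]
pixel = lidar_to_pixel([0.5, 0.0, 2.0], R, t, K)
camera_center = camera_position_in_lidar(R, t)
```

In practice the extrinsic parameters would come from a PnP solver rather than being written by hand; the sketch only shows how the solved parameters are applied.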

2.2.3. Temporal Fusion

In the camera-LiDAR extrinsic calibration process, ensuring temporal consistency between the two sensor data streams is a critical prerequisite for obtaining accurate calibration results. In multi-sensor fusion systems, temporal alignment strategies are primarily categorized into synchronous fusion and asynchronous fusion [41]. Since pomegranate orchard tree trunks serve as navigation reference targets with fixed positions and the mobile platform experiences minimal positional changes over short time periods, while the navigation system requires rapid response and should avoid excessive data waiting times, an asynchronous fusion strategy is more suitable for pomegranate orchard navigation environments.

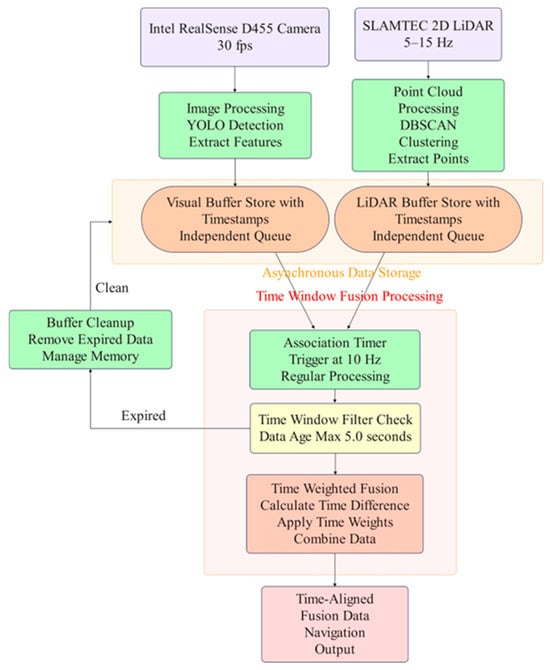

Because the Intel RealSense D455 camera outputs RGB images at 30 fps and the SLAMTEC 2D LiDAR operates at a scanning frequency of 5–15 Hz, their data arrival intervals are 33.3 ms and 67–200 ms, respectively. Without appropriate temporal fusion processing, this mismatch would lead to incorrect calibration point correspondences, thereby affecting the solution accuracy of the PnP algorithm and producing erroneous spatial associations. As shown in Figure 5, the camera data and the LiDAR data are processed independently and in parallel: visual features are extracted by the YOLO detection algorithm, and the point cloud data undergoes DBSCAN clustering. Each sensor data stream is stored in an independent circular buffer that retains timestamp information, eliminating the need for strict synchronization constraints. The system employs a 10 Hz timer-triggered data association mechanism between the two sensors, periodically retrieving data from both buffers within a 5 s time window, which keeps computation efficient while guaranteeing access to fresh data. Data older than the time window are removed automatically, which keeps the stored data consistent and prevents data accumulation.

Figure 5. Temporal fusion model for monocular camera and 2D LiDAR.

Each camera frame is paired with the temporally nearest 2D LiDAR scan. Within each trunk detection bounding box, we compute the nearest distance between the pixel rays and the raw LiDAR point clusters; if both a distance threshold and a directional-consistency check are satisfied, the cluster is accepted and its centroid is projected onto the Z = 0 plane of the mobile chassis coordinate frame B to obtain a trunk point. All trunk points are then fitted with RANSAC to obtain the row-direction navigation line. The method fuses directly on raw pixels and raw measurements, facilitating real-time operation.
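The asynchronous pairing strategy can be sketched as follows. This is a minimal illustration, assuming timestamps in seconds and a 5 s retention window as stated above; the class `StampedBuffer`, the function `pair_latest`, and the `max_gap_s` tolerance are hypothetical names and values introduced only for this example.

```python
from collections import deque


class StampedBuffer:
    """Buffer of (timestamp, data) pairs that drops entries older than a window."""

    def __init__(self, window_s=5.0):
        self.window_s = window_s
        self.items = deque()

    def push(self, stamp, data):
        """Store a new sample and discard samples older than the window."""
        self.items.append((stamp, data))
        self.prune(stamp)

    def prune(self, now):
        """Remove expired samples relative to the time `now`."""
        while self.items and now - self.items[0][0] > self.window_s:
            self.items.popleft()

    def nearest(self, stamp):
        """Return the (timestamp, data) entry closest in time to `stamp`, or None."""
        if not self.items:
            return None
        return min(self.items, key=lambda item: abs(item[0] - stamp))


def pair_latest(camera_buf, lidar_buf, max_gap_s=0.1):
    """Pair the newest camera frame with the temporally nearest LiDAR scan.

    `max_gap_s` is an assumed tolerance: pairs further apart in time are rejected.
    """
    if not camera_buf.items:
        return None
    cam_stamp, cam_data = camera_buf.items[-1]
    match = lidar_buf.nearest(cam_stamp)
    if match is None or abs(match[0] - cam_stamp) > max_gap_s:
        return None
    return cam_data, match[1]
```

A timer running at the association rate (10 Hz in the text) would call `pair_latest` on each tick; the sensor callbacks only need to call `push`.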

2.3. Algorithm Framework

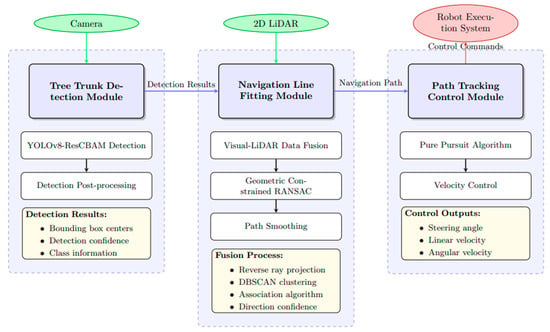

The proposed vision and LiDAR fusion-based orchard navigation system adopts a modular design architecture, primarily comprising three components: trunk detection module, navigation line extraction module, and path tracking control. The overall system architecture is illustrated in Figure 6.

Figure 6. Vision-LiDAR Fusion Orchard Navigation System Architecture.

System architecture showing the data flow between three core modules. The Tree Trunk Detection Module processes camera images and outputs detection results to the Navigation Line Fitting Module, which fuses these with LiDAR data to generate navigation paths. The Path Tracking Control Module then converts these paths into control commands for the robot execution system.

2.4. YOLOv8-ResCBAM-Based Trunk Detection

Trunk detection in pomegranate orchard environments faces several technical challenges. Juvenile pomegranate trees have relatively small trunk diameters and show low contrast against complex backgrounds. In addition, branch and leaf occlusion, weed interference, and varying lighting conditions further increase detection difficulty and can lead to missed or false detections.

2.4.1. YOLOv8-ResCBAM Model Applicability Analysis

To select an appropriate detection method for pomegranate orchard environments, this study conducted comparative analysis of mainstream object detection technologies. Traditional image processing methods, while computationally efficient, lack robustness under complex lighting and occlusion conditions. The deep learning-based YOLOv8 model achieves a good balance between speed and accuracy, but still has limitations in detecting small and occluded targets [42]. Considering the aforementioned issues comprehensively, this study selected the YOLOv8-ResCBAM model proposed by Ju et al. [43] as the fundamental architecture for trunk detection. The model is an efficient enhancement of the YOLOv8 architecture that integrates residual connections and a Convolutional Block Attention Module (CBAM). In orchard environments where trunks are small and planting density is high, traditional object detection methods often fail under background clutter or illumination changes. Leveraging improved feature extraction and attention, YOLOv8-ResCBAM can effectively discriminate fruit-tree trunks from complex backgrounds and remain robust to occlusions and illumination variations. Consequently, the model substantially enhances feature representation while maintaining computational efficiency, thereby providing high-precision trunk detections for the subsequent navigation line extraction.

YOLOv8-ResCBAM effectively mitigates the gradient vanishing problem in deep networks through ResBlock structures, improving the retention capability for small target features. In densely planted pomegranate orchard environments, this characteristic helps accurately identify slender tree trunks and reduces missed detections caused by feature loss. The Convolutional Block Attention Module (CBAM) comprises two sub-modules: channel attention and spatial attention. The channel attention mechanism enhances the model’s perception capability for trunk texture features by learning importance weights of different feature channels, effectively suppressing interference from background noise such as weeds. The spatial attention mechanism improves recognition capability for partially occluded tree trunks by focusing on key regions within feature maps.

2.4.2. YOLOv8-ResCBAM Training and Implementation

Image data were collected in pomegranate orchard environments, totaling 4150 images after data augmentation, with 70% allocated for training, 20% for validation, and 10% for testing. Tree trunks in each image were annotated using the LabelImg (1.7.1) annotation tool. The training parameters were configured as follows:

(1) SGD optimizer with an initial learning rate of $1 \times 10^{-2}$, weight decay of $5 \times 10^{-4}$, and momentum of 0.937.

(2) Input image size of 640 × 640, batch size of 8, training for 200 epochs. To prevent overfitting, a cosine annealing learning rate scheduling strategy was employed.

(3) Data augmentation techniques including random scaling, random rotation, color adjustment (HSV), and horizontal flipping were applied during the training process.
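The cosine annealing schedule mentioned in item (2) can be illustrated with a short sketch. It assumes a single cosine decay over the 200 training epochs from the initial learning rate of 1 × 10⁻² down to a floor `lr_min`; the floor value of 0 and the function name are assumptions for illustration, since the paper does not report the final learning rate.

```python
import math


def cosine_annealing_lr(epoch, total_epochs=200, lr_max=1e-2, lr_min=0.0):
    """Learning rate at a 0-based epoch on one cosine decay from lr_max to lr_min.

    lr_min = 0.0 is an assumed floor, not a value reported in the paper.
    """
    progress = epoch / total_epochs
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))


# Learning rate at the start, middle, and end of training.
schedule = [cosine_annealing_lr(e) for e in (0, 100, 200)]
```

The schedule starts at the initial rate, passes through half of it at the midpoint, and decays smoothly toward the floor, which avoids abrupt step changes late in training.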

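The detection results output by the trained model include bounding box coordinates, confidence scores, and class labels. The center point coordinates of the bounding box are extracted as shown in Equation (6):

$$(u_c, v_c) = \left( \frac{x_1 + x_2}{2},\ \frac{y_1 + y_2}{2} \right) \tag{6}$$

where $(x_1, y_1)$ and $(x_2, y_2)$ are the top-left and bottom-right corners of the bounding box. Detection results with confidence scores above the preset threshold are published as ROS topics for subscription by downstream modules.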
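The post-processing step described above amounts to a center computation followed by a confidence filter. The sketch below assumes corner-format boxes `(x1, y1, x2, y2)` and a dictionary layout for detections; both the layout and the threshold value used in the example are hypothetical, since the paper's threshold is not reproduced here.

```python
def bbox_center(x1, y1, x2, y2):
    """Center of an axis-aligned bounding box given its two corners."""
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def filter_detections(detections, conf_threshold):
    """Keep detections whose confidence exceeds the threshold.

    Each detection is assumed to be a dict with keys "box" and "conf".
    Returns a list of (u_c, v_c, confidence) tuples.
    """
    kept = []
    for det in detections:
        if det["conf"] > conf_threshold:
            u_c, v_c = bbox_center(*det["box"])
            kept.append((u_c, v_c, det["conf"]))
    return kept


# Illustrative input; 0.5 is a placeholder threshold, not the paper's value.
example = [
    {"box": (100, 50, 140, 250), "conf": 0.9},
    {"box": (0, 0, 10, 10), "conf": 0.2},
]
trunk_centers = filter_detections(example, conf_threshold=0.5)
```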

2.5. Vision and LiDAR Fusion-Based Navigation Line Fitting

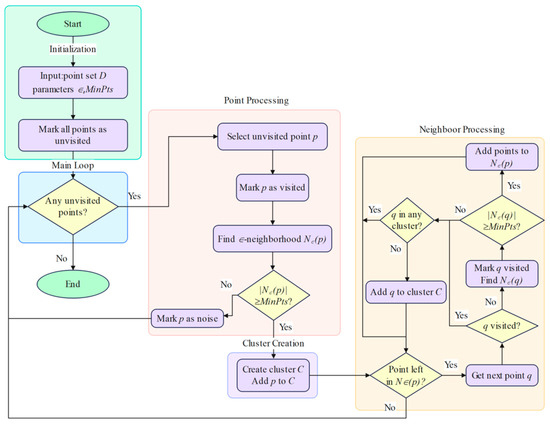

2.5.1. DBSCAN Trunk Clustering Algorithm

In the dense planting environment of pomegranate orchards with trunk diameters of 5–15 cm and plant spacing of 1–2 m, point cloud data acquired by 2D LiDAR exhibits a roughly row-wise arrangement. However, variations in plant spacing and local occlusions may result in incomplete or irregular point clouds. These characteristics render traditional clustering methods based on shape assumptions ineffective, making it difficult to distinguish actual tree trunks from background noise [44]. Therefore, the DBSCAN (Density-Based Spatial Clustering of Applications with Noise) algorithm, which performs clustering based on density connectivity, is employed for LiDAR point cloud clustering. This algorithm handles noisy data and irregularly shaped clusters, making it well suited to trunk detection in orchard environments [45].

Raw measurements are first limited to the [1, 1.5] m range window according to the orchard row spacing, removing near- and far-field non-tree-row interference. Following the scan order, a median filter with window width k = 5 is applied to the point-cloud ranges $r_i$ to obtain the filtered ranges $\tilde{r}_i$. When $|r_i - \tilde{r}_i| > \tau$ holds for a preset threshold $\tau$, the sample is regarded as a spike and replaced with $\tilde{r}_i$. This step effectively suppresses non-tree-row clutter and sporadic outliers, providing a cleaner point set for DBSCAN.
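The preprocessing step can be sketched as range gating followed by median-based spike replacement. The window width k = 5 and the [1, 1.5] m gate follow the text; the spike threshold of 0.1 m and the handling of window edges (shrinking the window at the ends of the scan) are assumptions made for this illustration.

```python
from statistics import median


def gate_ranges(ranges, r_min=1.0, r_max=1.5):
    """Keep (beam index, range) samples inside the range window, in scan order."""
    return [(i, r) for i, r in enumerate(ranges) if r_min <= r <= r_max]


def despike(ranges, k=5, spike_threshold=0.1):
    """Replace samples that deviate from the sliding median by more than the threshold.

    spike_threshold (meters) is an assumed value; the window shrinks at the scan ends.
    """
    half = k // 2
    cleaned = []
    for i, r in enumerate(ranges):
        window = ranges[max(0, i - half): i + half + 1]
        m = median(window)
        cleaned.append(m if abs(r - m) > spike_threshold else r)
    return cleaned


# Illustrative scan fragment with one spike at the third sample.
cleaned = despike([1.20, 1.21, 1.45, 1.22, 1.23])
```

Replacing a spike with the local median, rather than deleting it, keeps the number of samples per scan constant, which simplifies later indexing by beam angle.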

The DBSCAN algorithm workflow for pomegranate orchard environments is illustrated in Figure 7. The algorithm classifies a given point set into three categories:

Figure 7. DBSCAN algorithm flowchart.

Core points: points that have at least $MinPts$ points within their neighborhood radius $\varepsilon$;

Border points: points that have fewer than $MinPts$ points within their neighborhood radius $\varepsilon$ but are within the neighborhood radius of some core point;

Noise points: points that are neither core points nor border points.
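The three point categories can be made concrete with a compact, textbook-style DBSCAN sketch. This is a plain reference implementation written for illustration, with a brute-force neighborhood search that is adequate for the few hundred points of a 2D scan; it is not the authors' code, and the example parameter values are placeholders.

```python
import math

NOISE = -1


def region_query(points, i, eps):
    """Indices of all points within eps of points[i] (including i itself)."""
    return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]


def dbscan(points, eps, min_pts):
    """Return one label per point: 0, 1, ... for clusters, NOISE for noise points."""
    labels = [None] * len(points)
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = NOISE  # may later be relabeled as a border point
            continue
        cluster += 1  # points[i] is a core point: start a new cluster
        labels[i] = cluster
        queue = list(neighbors)
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point reachable from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:  # j is also a core point: keep expanding
                queue.extend(j_neighbors)
    return labels


# Two small trunk-like groups and one isolated return (illustrative values).
scan_points = [
    (1.00, 0.00), (1.01, 0.01), (1.02, 0.00), (1.01, -0.01),
    (1.00, 1.50), (1.01, 1.51), (1.02, 1.50),
    (3.00, 3.00),
]
labels = dbscan(scan_points, eps=0.1, min_pts=3)
```

Trunk positions can then be taken as the centroid of each labeled cluster, while points labeled `NOISE` are discarded.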

The performance of the DBSCAN algorithm is primarily determined by two key parameters: the neighborhood radius $\varepsilon$ and the minimum number of points $MinPts$. Parameter selection must comprehensively consider the geometric characteristics of pomegranate orchards, LiDAR specifications, and clustering accuracy requirements.

The selection of the neighborhood radius $\varepsilon$ requires balancing clustering completeness and accuracy. An excessively small value would cause a single tree to be segmented into multiple clusters, while an excessively large value might merge adjacent trees into one cluster. The parameter $MinPts$ affects the noise resistance and completeness of clustering. The selection of this parameter is based on the number of trunk reflection points that LiDAR can acquire at different distances. Based on the geometric constraints of pomegranate orchards, the theoretical ranges of $\varepsilon$ and $MinPts$ values can be determined through the following analysis:

Juvenile pomegranate trees have trunk diameters of 5–15 cm, with plant spacing of 1–2 m, row spacing of 2–3 m, and a LiDAR angular resolution of 0.225°. To ensure that point clouds from individual tree trunks are not over-segmented, the minimum value of $\varepsilon$ should be greater than 1.5 times the minimum trunk diameter, and the maximum value should be less than half the minimum plant spacing, i.e., $0.075\ \text{m} < \varepsilon < 0.5\ \text{m}$.

Based on LiDAR technical specifications and trunk geometric characteristics, the theoretical point count estimation for a single trunk with 5 cm diameter at 1.5 m detection distance is shown in Equation (8).

where is the trunk diameter, is the radar linear resolution, and is the detection distance.

Considering practical factors such as occlusion and reflection intensity variations, setting can effectively filter noise points while ensuring clustering completeness. Based on the above, the DBSCAN neighborhood radius and the minimum number of points are derived from the LiDAR’s angular resolution and the desired linear resolution corresponding to the expected trunk diameter, selecting a target scale suited to densely planted inter-row spacing. This configuration strikes a balance between noise suppression and cluster integrity.
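The parameter analysis above can be checked numerically with a small sketch. The bounds on the neighborhood radius follow directly from the stated rules (1.5 times the minimum trunk diameter, half the minimum plant spacing). The point-count estimate assumes that adjacent beams at distance d are separated by roughly d·Δθ, with Δθ the angular resolution in radians; this is one plausible reading of the estimate and may differ in form from the paper's Equation (8). Function names are hypothetical.

```python
import math


def eps_bounds(min_trunk_diameter_m, min_plant_spacing_m):
    """Lower and upper bounds on the DBSCAN neighborhood radius, in meters."""
    return (1.5 * min_trunk_diameter_m, 0.5 * min_plant_spacing_m)


def expected_points(trunk_diameter_m, distance_m, angular_resolution_deg):
    """Approximate number of beams hitting a trunk.

    Assumes the spacing between adjacent beams at the given distance is
    distance * angular_resolution (small-angle approximation).
    """
    beam_spacing_m = distance_m * math.radians(angular_resolution_deg)
    return trunk_diameter_m / beam_spacing_m


lower, upper = eps_bounds(0.05, 1.0)          # 5 cm trunks, 1 m plant spacing
n_points = expected_points(0.05, 1.5, 0.225)  # 5 cm trunk at 1.5 m, 0.225 deg
```

Under these assumptions a 5 cm trunk at 1.5 m returns on the order of eight points in the ideal case, so a minimum point count set somewhat below that figure leaves margin for occlusion and weak reflections.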

2.5.2. Vision and LiDAR Data Association

Line-of-Sight and LiDAR Plane Intersection Calculation

This study employs a loosely coupled sensor fusion method to associate and fuse visual detection results with 2D LiDAR data. The vision system provides pixel coordinates of tree trunks, while LiDAR provides accurate distance information. Through joint calibration of multiple sensors to the chassis reference coordinate system, this study performs processing and computation for vision and LiDAR data fusion.

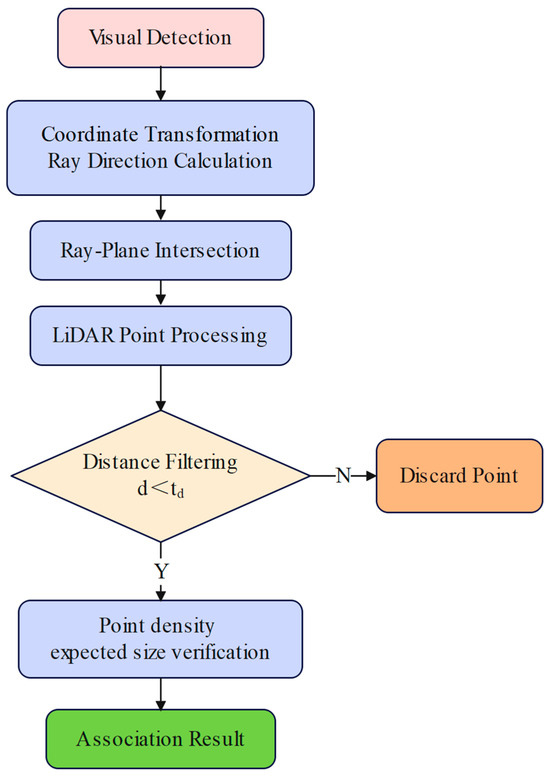

This study proposes a reverse ray projection algorithm that differs from traditional point cloud-to-image plane projection methods. The algorithm projects pixel coordinates from visual detection into three-dimensional rays and computes their intersections with LiDAR scanning planes to achieve data association.

The pixel point from visual detection is converted to a ray direction in the camera coordinate system:

The camera principal point and focal length are obtained through camera intrinsic parameter calibration.

The ray direction in the reference coordinate system is then calculated as:

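The LiDAR plane can be expressed as:

$$\mathbf{n}^{\top} \mathbf{X} = d$$

where $\mathbf{n}$ is the plane normal vector and $d$ is the distance from the plane to the origin.

The intersection point between the ray and plane can be obtained by solving the following equation:

$$\mathbf{n}^{\top} \left( \mathbf{o} + t\,\mathbf{r} \right) = d \quad \Longrightarrow \quad t = \frac{d - \mathbf{n}^{\top} \mathbf{o}}{\mathbf{n}^{\top} \mathbf{r}}$$

where $\mathbf{o}$ is the ray origin and $\mathbf{r}$ is the ray direction.

The intersection coordinates are:

$$\mathbf{X}^{*} = \mathbf{o} + t\,\mathbf{r}$$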
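The reverse ray projection step can be sketched end to end: back-project a pixel to a ray, rotate it into the reference frame, and intersect it with the LiDAR scanning plane. The sketch assumes a pinhole camera whose optical frame has z pointing forward, x right, and y down, a plane written as normal · X = offset with a unit normal, and made-up mounting heights and intrinsics; all names and values are hypothetical.

```python
def dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(x * y for x, y in zip(a, b))


def pixel_to_ray(u, v, fx, fy, cx, cy):
    """Back-project a pixel to a ray direction in the camera optical frame."""
    return ((u - cx) / fx, (v - cy) / fy, 1.0)


def rotate(R, v):
    """Apply a 3x3 rotation matrix (list of rows) to a 3-vector."""
    return tuple(dot(row, v) for row in R)


def ray_plane_intersection(origin, direction, normal, offset, eps=1e-9):
    """Intersect the ray origin + t * direction (t >= 0) with normal . X = offset.

    Returns the intersection point, or None if the ray is parallel to the
    plane or the intersection lies behind the ray origin.
    """
    denom = dot(normal, direction)
    if abs(denom) < eps:
        return None
    t = (offset - dot(normal, origin)) / denom
    if t < 0:
        return None
    return tuple(o + t * d for o, d in zip(origin, direction))


# Assumed optical-to-chassis rotation: x_b = z_c, y_b = -x_c, z_b = -y_c.
R_bc = [[0.0, 0.0, 1.0], [-1.0, 0.0, 0.0], [0.0, -1.0, 0.0]]
camera_origin = (0.0, 0.0, 0.5)  # assumed camera height of 0.5 m
ray_c = pixel_to_ray(320.0, 340.0, fx=600.0, fy=600.0, cx=320.0, cy=240.0)
ray_b = rotate(R_bc, ray_c)
# Assumed horizontal LiDAR scanning plane at z = 0.3 m in the chassis frame.
hit = ray_plane_intersection(camera_origin, ray_b, (0.0, 0.0, 1.0), 0.3)
```

Because only one ray is cast per detection, the cost of this step is constant per trunk, in contrast to projecting every LiDAR point into the image.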

Association Algorithm

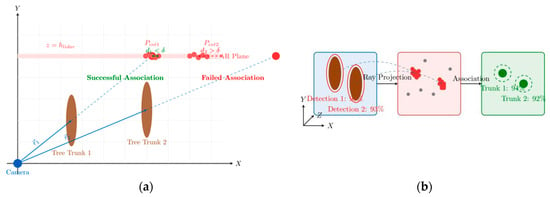

This paper proposes a vision and LiDAR data association algorithm based on reverse ray projection, which matches visually detected trunk points with LiDAR-clustered trunk point clouds. Unlike traditional data association methods that project the point cloud onto the image plane, the algorithm computes the minimum distance between each visual ray and the LiDAR points and accepts an association when that distance falls below a threshold. The algorithm workflow is illustrated in Figure 8.

Figure 8.

Association algorithm flowchart.

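Given a visual ray with origin $\mathbf{o}$ and direction $\mathbf{d}$ (normalized to unit length), and a LiDAR point $\mathbf{p}$, the minimum distance from the point to the ray is:

$$\mathbf{v} = \mathbf{p} - \mathbf{o},\qquad t = \mathbf{v}\cdot\mathbf{d},\qquad \mathbf{v}_{\mathrm{proj}} = t\,\mathbf{d},\qquad d_{\min} = \left\lVert \mathbf{v} - \mathbf{v}_{\mathrm{proj}} \right\rVert$$

where $\mathbf{v}$ is the vector from the ray origin to point $\mathbf{p}$, $t$ is the projection length of $\mathbf{v}$ along the ray direction, and $\mathbf{v}_{\mathrm{proj}}$ is the projection vector.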

The proposed vision and LiDAR data association algorithm back-projects visual detections in the pixel coordinate system into three-dimensional rays in the reference coordinate system through camera intrinsic parameter matrix and distortion correction. Each ray extends from the camera center toward the detected target. The algorithm calculates the minimum distance from point clouds to rays and treats laser points with distances smaller than the preset threshold as matching candidate points. To ensure detection effectiveness, spatial neighborhood point density analysis and consistency verification based on expected trunk dimensions are performed. Finally, the DBSCAN clustering algorithm is employed to merge multiple detections belonging to the same trunk, generating precise trunk positions through centroid calculation.
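The distance test at the core of this association step can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the function names, the sample points, and the threshold value `delta` are hypothetical, and clamping the projection length at zero is an added assumption so that points behind the camera are never matched. The density analysis, trunk-size check, and DBSCAN merging described above are omitted.

```python
import numpy as np

def point_to_ray_distance(points, origin, direction):
    """Distance from each point (N x 3 array) to the ray origin + t * direction, t >= 0."""
    d = direction / np.linalg.norm(direction)  # unit ray direction
    v = points - origin                        # vectors from ray origin to each point
    t = np.clip(v @ d, 0.0, None)              # projection length, clamped to the ray
    proj = np.outer(t, d)                      # projection vectors along the ray
    return np.linalg.norm(v - proj, axis=1)

def associate(points, origin, direction, delta):
    """Indices of LiDAR points whose distance to the ray is below the threshold delta."""
    dist = point_to_ray_distance(points, origin, direction)
    return np.nonzero(dist < delta)[0]

# Hypothetical data: one ray along +x and three LiDAR points.
origin = np.zeros(3)
direction = np.array([1.0, 0.0, 0.0])
lidar_points = np.array([[2.0, 0.05, 0.0],    # close to the ray
                         [3.0, 0.50, 0.0],    # too far sideways
                         [-1.0, 0.00, 0.0]])  # behind the ray origin

dists = point_to_ray_distance(lidar_points, origin, direction)
matched = associate(lidar_points, origin, direction, delta=0.1)
trunk_estimate = lidar_points[matched].mean(axis=0)  # centroid of the matched points
```

Only the first point passes the threshold in this example. In practice the threshold trades missed associations against false matches with neighbouring trunks, so it would need to be tuned against the expected trunk diameter and calibration error.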

As shown in Figure 9, Figure 9a illustrates the geometric principle of the association algorithm: the ray casting method projects visual detections into 3D space, and association succeeds when LiDAR points satisfy the distance threshold and density constraints of the projection geometric boundaries. Figure 9b shows the three stages of vision and LiDAR association: the visual detection stage that generates candidate regions with confidence scores, LiDAR point cloud data acquisition, and the final associated point clusters after processing.

Figure 9.

Schematic diagram of the association algorithm: (a) Geometric principle: the camera ray intersects the LiDAR height plane ($z = h_{\mathrm{lidar}}$); items within the distance threshold $\delta$ are shown in green (successful association), whereas those beyond $\delta$ are in red (failed association). (b) Association process: the blue dashed line denotes the reverse ray projection from the camera optical center through each 2D trunk detection; LiDAR points close to this ray are highlighted in red as candidates, gray dots indicate background returns, and the finally associated trunks are shown in green.

2.5.3. Navigation Line Fitting

Kalman Filter Smoothing

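To reduce navigation line jumps caused by noise, a Kalman filter is employed to smooth the fitting parameters [48]. For each row of fruit trees on the left and right sides, the state vector is $\mathbf{x} = [k, b]^{\mathsf T}$, where $k$ and $b$ are the slope and intercept of the fitted line for that tree row, respectively.

Considering the fixed nature of fruit tree rows in orchard environments, navigation line parameters change slowly over short periods, thus a constant velocity model is adopted:

$$\mathbf{x}_t = \mathbf{A}\,\mathbf{x}_{t-1} + \mathbf{w}_{t-1}$$

The observation equation is:

$$\mathbf{z}_t = \mathbf{H}\,\mathbf{x}_t + \mathbf{v}_t$$

where $\mathbf{A}$ is the state transition matrix, $\mathbf{H}$ is the observation matrix, and $\mathbf{w}$ and $\mathbf{v}$ are the process noise and measurement noise, respectively.

The prediction steps are:

$$\hat{\mathbf{x}}_{t|t-1} = \mathbf{A}\,\hat{\mathbf{x}}_{t-1|t-1},\qquad \mathbf{P}_{t|t-1} = \mathbf{A}\,\mathbf{P}_{t-1|t-1}\,\mathbf{A}^{\mathsf T} + \mathbf{Q}$$

The update steps are:

$$\mathbf{K}_t = \mathbf{P}_{t|t-1}\,\mathbf{H}^{\mathsf T}\left(\mathbf{H}\,\mathbf{P}_{t|t-1}\,\mathbf{H}^{\mathsf T} + \mathbf{R}\right)^{-1}$$

$$\hat{\mathbf{x}}_{t|t} = \hat{\mathbf{x}}_{t|t-1} + \mathbf{K}_t\left(\mathbf{z}_t - \mathbf{H}\,\hat{\mathbf{x}}_{t|t-1}\right),\qquad \mathbf{P}_{t|t} = \left(\mathbf{I} - \mathbf{K}_t\,\mathbf{H}\right)\mathbf{P}_{t|t-1}$$

where $\mathbf{P}$ is the state covariance matrix, $\mathbf{Q}$ is the process noise covariance matrix, $\mathbf{R}$ is the measurement noise covariance matrix, and $\mathbf{K}_t$ is the Kalman gain. According to the characteristics of pomegranate orchard environments, the process noise covariance $\mathbf{Q}$ is chosen to reflect the relative stability of the navigation line parameters, with slope changes smaller than intercept changes. The measurement noise covariance $\mathbf{R}$ is determined based on statistical analysis of RANSAC fitting accuracy.

From the weighted angle histogram described in the section on geometric constraint navigation line fitting, the principal row-direction angle is obtained, and the vehicle’s lateral offset from the row line is computed. The heading and lateral offset, after first-order Kalman filtering, are fed to the Pure Pursuit algorithm to generate linear and angular velocity commands. This interface decouples perception from control and effectively suppresses command jitter under short-term occlusions or abrupt illumination changes.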
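A compact sketch of the predict and update cycle for the slope and intercept of one tree row is given below. It is an illustration under stated assumptions, not the authors' filter: the class name is hypothetical, the state transition and observation matrices are both taken as identity (the text names a constant velocity model but its matrices are not reproduced here), and all covariance values are placeholders chosen only so that the slope noise is smaller than the intercept noise.

```python
import numpy as np

class LineKalman:
    """Kalman filter over the line parameters x = [slope, intercept]."""

    def __init__(self, x0, P0, Q, R):
        self.x = np.asarray(x0, dtype=float)  # state estimate
        self.P = np.asarray(P0, dtype=float)  # state covariance
        self.Q = np.asarray(Q, dtype=float)   # process noise covariance
        self.R = np.asarray(R, dtype=float)   # measurement noise covariance
        self.A = np.eye(2)  # assumption: parameters persist between frames
        self.H = np.eye(2)  # assumption: the line fit observes both parameters directly

    def step(self, z):
        """Run one predict/update cycle with the measurement z = [slope, intercept]."""
        # Prediction
        x_pred = self.A @ self.x
        P_pred = self.A @ self.P @ self.A.T + self.Q
        # Update
        S = self.H @ P_pred @ self.H.T + self.R
        K = P_pred @ self.H.T @ np.linalg.inv(S)  # Kalman gain
        self.x = x_pred + K @ (np.asarray(z, dtype=float) - self.H @ x_pred)
        self.P = (np.eye(2) - K @ self.H) @ P_pred
        return self.x

# Placeholder covariances: slope is treated as more stable than intercept.
kf = LineKalman(x0=[0.0, 1.0],
                P0=np.diag([1e-3, 1e-2]),
                Q=np.diag([1e-4, 1e-3]),
                R=np.diag([1e-2, 1e-2]))

# A single noisy fit that jumps away from the current estimate.
filtered = kf.step([0.5, 2.0])
```

Because the assumed slope variances are small relative to the measurement noise, the filtered slope moves only a small fraction of the way toward the outlying measurement, while the intercept moves about halfway. This is the kind of damping that reduces frame-to-frame jumps in the fitted line.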

2.6. Experimental Methods

To validate the effectiveness and accuracy of the proposed vision and 2D LiDAR fusion-based orchard navigation system, this paper conducted sensor calibration experiments, visual detection performance evaluation experiments, data association verification experiments, geometric constraint navigation line fitting simulation experiments, and navigation line tracking comparison experiments. Experiments were conducted at the Anhui Pomegranate Germplasm Resource Nursery in Bengbu, Anhui Province, China (117°4′15.764880″ E, 32°58′53.022720″ N). The site exhibits typical densely planted pomegranate-orchard characteristics and includes trees at multiple growth stages; this study focused primarily on 1–3-year-old trees with trunk diameters of 5–15 cm, an in-row spacing of 1–2 m, and a row spacing of 2–3 m. The orchard layout of the test area is shown in Figure 11. Representative operating scenarios included straight rows, slight lateral offset, and cross-slope operation. Weather and illumination conditions were sunny, overcast, dusk, strong direct light, and backlit. In each scenario, one operating row was covered; each single-row segment contained 25–32 trees, and every scenario was repeated 10 times to assess stability and repeatability. In total, approximately 1400 trees were recorded. Throughout the experiments, the sensor mounting configuration and algorithm parameters were kept uniform.

Figure 11.

Experimental scenarios.

- (1) The sensor calibration experimental methodology was as follows: Zhang’s calibration method was employed for camera intrinsic parameter calibration, capturing 20 images of different poses using an 8 × 5 checkerboard (27 mm side length); the PnP algorithm was used for camera and 2D LiDAR extrinsic parameter calibration, obtaining multiple sets of calibration board center point positions in the radar coordinate system and pixel coordinates. Evaluation metrics included reprojection error and calibration accuracy.

- (2) The visual detection performance evaluation experimental methodology was as follows: 4150 images were collected under different lighting conditions (sunny, cloudy, dusk, strong light), divided into training, validation, and test sets at a 7:2:1 ratio, and the YOLOv8-ResCBAM model was used for trunk detection training and testing. Evaluation metrics included mAP50, recall rate, precision, and inference time.

- (3) The data association verification experimental methodology was as follows: five groups of 20 m-long inter-row orchard environments were selected, each containing 25–32 fruit trees, with each group experiment repeated 10 times, using the reverse ray projection algorithm for vision and LiDAR data association. Evaluation metrics included association count and association success rate.

- (4) The geometric constraint navigation line fitting simulation experimental methodology was as follows: six groups of simulation comparison experiments were designed with fruit tree point quantities ranging from 19 to 27, employing the proposed GCA and traditional RANSAC algorithm, respectively, for navigation line fitting. Evaluation metrics included fitting accuracy RMSE, inlier ratio, and computation time.

- (5) The navigation-line tracking comparison was designed as follows. Five representative inter-row segments were selected in the orchard. Each segment (20 m) was manually surveyed to obtain the ground-truth centerline and included operating conditions such as straight rows, slight lateral offset, cross-slope, local occlusions, and non-uniform illumination. For each segment, 10 independent navigation trials were conducted at speeds from 0.5 to 2.0 m·s⁻¹. Four navigation line extraction methods were compared: (i) single 2D LiDAR with RANSAC, (ii) single vision detection with RANSAC, (iii) DeepLab v3+ semantic segmentation with RANSAC, and (iv) the proposed fusion-based method. The mobile chassis position was recorded using a “taut-string–centerline-marking–tape-measurement” protocol. Specifically, a cotton string was stretched taut between the midpoints equidistant from the left and right rows at the two ends of the test segment to define the ground-truth centerline, and sampling stations were marked every 1 m along the string. A marker was mounted at the geometric center of the mobile chassis; after the vehicle passed, a measuring tape was used at each station to measure the perpendicular distance from the trajectory to the centerline, yielding the lateral deviation.

For the single 2D LiDAR + RANSAC method, the “2.5.1 DBSCAN trunk clustering algorithm” was used to obtain candidate points for the left and right rows, followed by separate line fittings with RANSAC to produce the two row lines and the center navigation line. For the single vision detection + RANSAC method, key pomegranate-tree targets were detected in images; low-confidence and out-of-range points were removed, and the remaining pixel points were projected onto the ground plane via the extrinsic parameters to form a point set for RANSAC fitting of the center navigation line. For the DeepLab v3+ semantic segmentation + RANSAC method, DeepLab v3+ (Backbone: ResNet-50, input 640 × 640) segmented ground and trunk background in each frame; the road mask underwent morphological opening/closing and Guo–Hall thinning to obtain a skeleton, on which RANSAC fitting was performed. The resulting centerline was then projected into the ground coordinate frame using the camera–LiDAR extrinsics to obtain the center navigation line. Evaluation metrics included the average lateral error, the lateral error RMS, and the navigation success rate (single-sided lateral error ≤ 10 cm).
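The three tracking metrics can be computed from the per-station lateral deviations as sketched below. This is a minimal illustration with made-up deviations: the function name is hypothetical, and treating the success rate as the fraction of sampling stations within the 10 cm limit is an assumption, since the text does not state whether success is counted per station or per trial.

```python
import math

def lateral_error_metrics(deviations_cm, threshold_cm=10.0):
    """Return (mean absolute lateral error, RMS lateral error, success rate).

    deviations_cm: signed perpendicular distances from the trajectory to the
    ground-truth centerline, one per sampling station, in centimetres.
    """
    abs_err = [abs(d) for d in deviations_cm]
    n = len(abs_err)
    mean_err = sum(abs_err) / n
    rms_err = math.sqrt(sum(e * e for e in abs_err) / n)
    # Assumption: a station counts as a success when its error is within the threshold.
    success_rate = sum(e <= threshold_cm for e in abs_err) / n
    return mean_err, rms_err, success_rate

# Made-up deviations for four stations (not measured data).
mean_err, rms_err, success_rate = lateral_error_metrics([3.0, -4.0, 5.0, -12.0])
```

For these four illustrative values the mean error is 6.0 cm and three of the four stations fall within the limit. The RMS is always at least as large as the mean absolute error and penalizes occasional large deviations more heavily, which is why both are reported.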

3. Results

3.1. Calibration Experimental Results

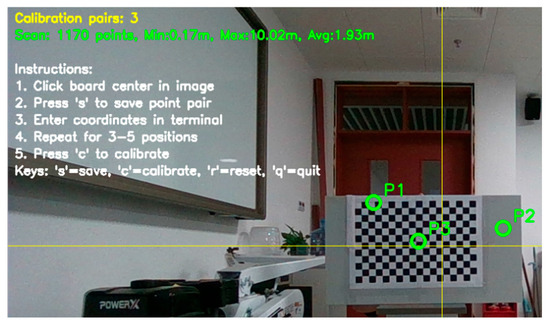

As shown in Figure 12, camera intrinsic parameter calibration was performed according to the monocular camera and 2D LiDAR data fusion method described in Section 2.2.

Figure 12.

Camera intrinsic parameter calibration: (a) Partial images used for calibration; (b) Checkerboard and camera positions; (c) Image errors for calibration.

After calibration, the intrinsic parameter matrix K was obtained as shown in Equation (32), with an average reprojection error of 0.18 pixels.

In addition to camera intrinsic parameter calibration, it is necessary to determine the relative position of the camera on the mobile platform according to the camera and 2D LiDAR extrinsic parameter calibration method described in Section 2.2 to complete data fusion. The mobile platform is placed on a horizontal plane, with the 2D LiDAR coordinate system origin located 250 mm directly above the platform coordinate system origin. As shown in Figure 13, the radar coordinate system position and pixel coordinates of the calibration board center point are obtained.

Figure 13.

Camera and radar extrinsic parameter calibration.

Through calibration calculations on multiple sets of data, the three-dimensional coordinates of the camera relative to the 2D LiDAR were determined to be (0.075, 0.029, −0.096). Combining the results of camera intrinsic parameter calibration, camera and 2D LiDAR extrinsic parameter calibration, and temporal synchronization, the data fusion between camera and 2D LiDAR was completed, providing a consistent spatial reference for subsequent navigation algorithms.

3.2. Visual Detection Performance Evaluation

For different scenarios in pomegranate orchards, the detection results of the YOLOv8-ResCBAM model are shown in Figure 14.

Figure 14.

Tree trunk detection results under different environmental conditions: (a) Sunny; (b) Dusk; (c) Cloudy; (d) Strong light.

As shown in Table 2, this paper conducted comparative analysis of different YOLOv8 model variants (each integrating different attention mechanisms) to evaluate their performance in detecting tree trunks in densely planted pomegranate orchards.

Table 2.

Performance comparison of different detection methods in pomegranate trunk detection.

The results show that the YOLOv8-ResCBAM model outperforms the baseline YOLOv8 and the other attention-enhanced variants overall. It achieves the highest mAP50 and recall, 95.7% and 92.5%, respectively, indicating a strong capability for detecting tree trunks in high-density, occluded environments. Although its precision (91.0%) is slightly lower than that of the best-performing YOLOv8-SA (92.0%), it remains high, and the resulting balance between precision and recall is more favorable for practical applications. The inference time of YOLOv8-ResCBAM is 15.36 ms, which, though longer than that of the baseline YOLOv8 (12.96 ms), remains within the acceptable range for real-time processing in autonomous navigation systems. Taken together, these metrics indicate that YOLOv8-ResCBAM offers the best overall performance, which is attributed to enhanced feature extraction and a stronger focus on salient regions. The high recall is particularly valuable in complex environments with dense planting and weed or branch occlusion, supporting the model’s applicability to practical orchard scenarios.
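The precision and recall trade-off and the real-time claim can be made concrete with a short calculation on the figures quoted above. The F1 score and the frame-rate conversion are standard formulas but are not reported in the text, so the derived numbers are illustrative; the frame rate reflects model inference time only and ignores image capture, pre-processing, and post-processing.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

def frames_per_second(inference_ms):
    """Upper bound on throughput implied by a per-image inference time in milliseconds."""
    return 1000.0 / inference_ms

# Values quoted in the text for YOLOv8-ResCBAM and the YOLOv8 baseline.
f1_rescbam = f1_score(0.910, 0.925)
fps_rescbam = frames_per_second(15.36)
fps_baseline = frames_per_second(12.96)
```

Under these formulas YOLOv8-ResCBAM has an F1 score of roughly 0.917, and its inference time corresponds to about 65 frames per second, compared with about 77 for the baseline.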

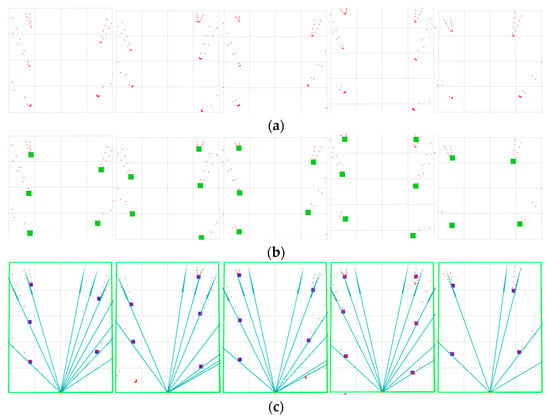

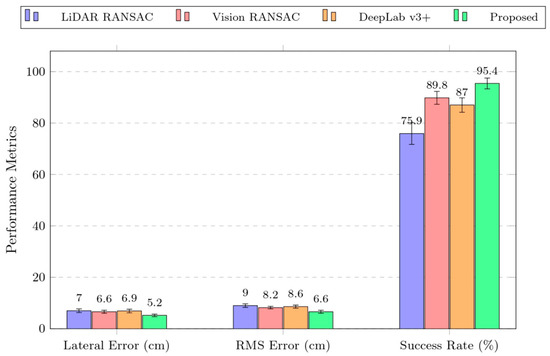

3.3. Data Association Results

The experimental results of LiDAR clustering of pomegranate trees and their association with visual rays are shown in Figure 15. In Figure 15a, the red scatter points represent reflection points detected by LiDAR, and the point cloud distribution reflects the spatial structural characteristics of fruit tree rows. Figure 15b shows the results after clustering processing of LiDAR point clouds, where green points represent the identified fruit tree target center points after clustering, demonstrating that the clustering algorithm effectively extracted the positional information of fruit trees. Figure 15c displays the association effect between visual feature points and LiDAR clustered points, where blue rays represent rays emitted from the camera position toward target points, and blue points represent data points where visual rays successfully associated with laser clusters.

Figure 15.

Data-association results. (a) 2D LiDAR point cloud: red dots are LiDAR returns. (b) LiDAR clustering: green solid squares mark cluster centroids (trunk hypotheses). (c) Visual–LiDAR association: blue solid lines are reverse ray projections from the camera optical center; blue solid squares denote LiDAR clusters that are successfully associated to the rays.

The results of five experimental groups, along with the average number of fruit trees and association counts, are presented in Table 3.

Table 3.

Data association statistics.

The experimental results demonstrate that the average association success rate reached 92.6%, indicating that the proposed vision and LiDAR data association algorithm possesses high accuracy. The association success rates for the five experimental groups under different fruit tree density environments (25–32 trees) consistently remained between 91.9 and 94.3%, with a standard deviation of 0.9%, validating the algorithm’s stability and adaptability, which meets the accuracy requirements for orchard navigation.

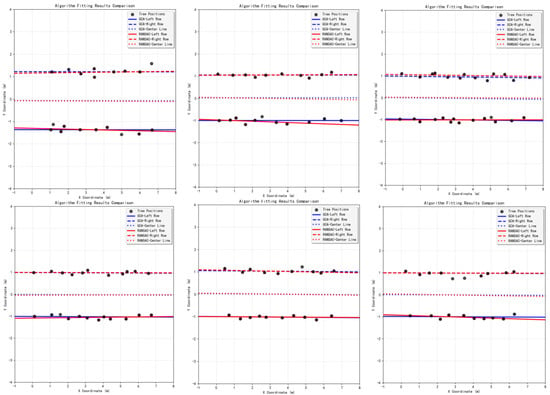

3.4. Geometric Constraint Navigation Line Fitting Simulation Results

Figure 16 compares the fitting results of the two algorithms, where the blue line represents the GCA fit and the red line represents the RANSAC fit.

Figure 16.

Experimental comparison between GCA and RANSAC algorithm.

The detailed performance comparison results of the six experimental groups are shown in Table 4.

Table 4.

Performance Comparison of GCA and RANSAC Algorithm.

The experiments demonstrate that the proposed Geometric Constraint Algorithm exhibits significant advantages in orchard navigation line fitting tasks. Compared to the traditional RANSAC algorithm, GCA achieves an overall improvement of 2.4% in fitting accuracy. The average inlier ratio of GCA is 92.03%, which is 5.1% higher than RANSAC, demonstrating excellent noise resistance capability. GCA improves computational efficiency by 47.06%, meeting the requirements for real-time navigation.

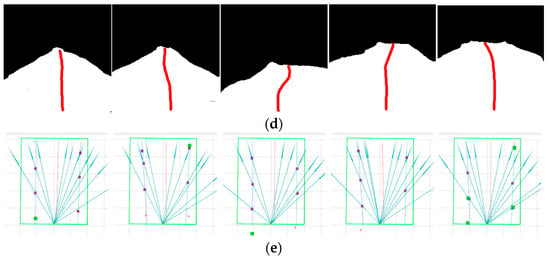

3.5. Navigation Line Tracking Results

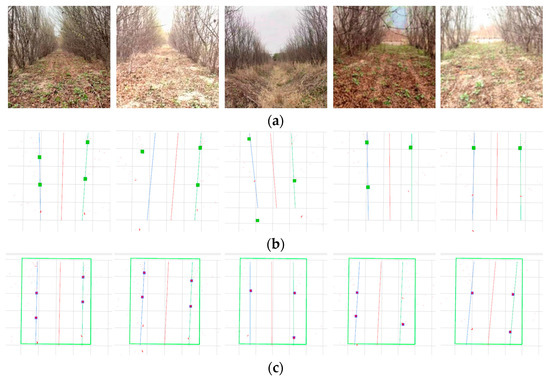

The experimental results are shown in Figure 17, which displays the navigation line fitting results of the four methods under five experimental conditions.

Figure 17.

Experimental results of the four navigation line extraction methods across five scenarios: (a) original camera images; (b) LiDAR-only fitting: red dots denote 2D LiDAR returns, green solid squares indicate LiDAR cluster centroids, and the cyan and red lines are the RANSAC-fitted left/right trunk-row lines and the navigation line; (c) vision-only fitting: blue squares mark 2D trunk detections, the green rectangle indicates the ROI, and the cyan and red lines are the RANSAC-fitted left/right trunk-row lines and the navigation line; (d) DeepLab v3+ semantic segmentation: black is background, white is the segmented ground region, and the red curve is the skeletonized centerline; (e) proposed fusion: blue solid lines with arrows denote reverse ray projections from the camera optical center; blue solid squares indicate LiDAR clusters successfully associated with the rays; green squares are the remaining unassociated clusters within the ROI; the cyan and red lines are as defined above.

The experimental results are presented in Table 5, comparing the evaluation metrics of different navigation line extraction methods.

Table 5.

Performance comparison of different navigation line extraction methods.

As shown in Figure 17, representative operating conditions—including straight rows, slight lateral offset, cross-slope, local occlusions, and non-uniform illumination—are presented together with the navigation-line fitting results of the four algorithms under the corresponding conditions. The results indicate that the proposed vision and 2D LiDAR fusion with a geometric constraint (GCA) improves both lateral deviation and navigation success rate over conventional methods. Although the gains relative to single-sensor and semantic-segmentation approaches are modest, they are crucial given the high occlusion, illumination variability, and small trunk size in orchards, helping ensure stable and efficient navigation for agricultural robots. Specifically, the proposed fusion method achieved the smallest average lateral error of 5.2 cm, corresponding to reductions of 25.7%, 21.2%, and 24.6% compared with the LiDAR method, the vision method, and the semantic-segmentation method, respectively. In addition, it attained the highest navigation success rate of 95.4% and exhibited better stability in the RMS of the lateral error.
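The relative reductions quoted above follow from the usual percentage-reduction formula, which can also be inverted to see what baseline error each percentage implies. The sketch below uses only the 5.2 cm result and the three percentages stated in the text; the baseline values it produces are implied by those percentages and are not read from Table 5.

```python
def relative_reduction(baseline, proposed):
    """Percentage reduction of `proposed` relative to `baseline`."""
    return (baseline - proposed) / baseline * 100.0

def implied_baseline(proposed, reduction_percent):
    """Baseline value implied by a proposed value and a stated percentage reduction."""
    return proposed / (1.0 - reduction_percent / 100.0)

proposed_cm = 5.2
stated_reductions = {"lidar": 25.7, "vision": 21.2, "segmentation": 24.6}

# Baseline lateral errors implied by the stated reductions, rounded to 0.1 cm.
implied = {name: round(implied_baseline(proposed_cm, pct), 1)
           for name, pct in stated_reductions.items()}
```

The implied baselines all land within a couple of centimetres of the proposed method's error, which is consistent with the statement that the gains are modest in absolute terms even though the relative reductions exceed 20%.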

To assess stability from an operational-task perspective, 95% conservative bounds are reported for the key metrics in Table 5: a conservative upper bound for the lateral error and its RMS, and a conservative lower bound for the success rate. Under common approximations, these bounds are equivalent to worst-case robust estimates and can serve as surrogate evidence of long-term or large-scale operational stability [49]. As shown in Table 6 (converted from the values in Table 5), the proposed method yields a conservative upper bound of 6.18 cm for the lateral error, 7.78 cm for the RMS, and a conservative lower bound of 91.28% for the success rate, all superior to the three baseline methods. These results indicate that, even under a conservative regimen, the method maintains a lower error upper bound and a higher success-rate lower bound, demonstrating task-level stability for densely planted inter-row operation.

Table 6.

95% Conservative Bounds at the Task Level.

As shown in Figure 18, a grouped bar chart provides an intuitive comparison of the navigation-line extraction performance of the different methods. The experimental results validate the effectiveness of the proposed vision and 2D LiDAR fusion-based approach in practical applications, offering a more feasible solution for agricultural automation.

Figure 18.

Comparison of different navigation line fusion methods.

4. Discussion

To improve the autonomous navigation capability of robots in densely planted pomegranate orchard environments, this study proposed a vision and 2D LiDAR fusion-based navigation line extraction method. The method integrates three core technical modules: YOLOv8-ResCBAM trunk detection, reverse ray projection data association, and geometric constraint navigation line fitting, achieving high-precision navigation line extraction in complex orchard environments.

In densely planted pomegranate orchards with challenging illumination and occlusions, our system currently achieves a trunk detection precision of 91.0%. To improve performance further, we will act on both dataset collection and processing and model optimization: (i) continuously adding hard cases, such as strong backlighting, shadows, wet-ground glare, slight wind-induced sway, and season-dependent canopy density; (ii) applying targeted data augmentation and rigorous annotation to reduce small-object boundary noise; (iii) modestly improving image sharpness; (iv) jointly selecting, on the same validation set, confidence thresholds and non-maximum suppression (NMS) thresholds that balance recall and precision; and (v) applying simple temporal smoothing to detections across consecutive frames to avoid jitter from frame-wise flicker. These strategies are expected to raise the effective accuracy under complex outdoor conditions while maintaining stability across terrains and seasons, without noticeably increasing computational overhead.

The reverse ray projection algorithm proposed in this study demonstrates significant advantages in vision and LiDAR data association. Unlike traditional methods that project point clouds onto image planes, this algorithm projects visual detection results into three-dimensional rays and computes their intersections with LiDAR scanning planes, achieving more precise spatial correspondence. Experimental results show that in five groups of orchard environments with different densities (25–32 trees), the average association success rate reached 92.6% with a standard deviation of 0.9%, validating the algorithm’s stability and adaptability. The level of association success rate directly affects the accuracy of subsequent navigation line fitting. Association failures typically occur due to the following situations: first, sparse point clouds when LiDAR encounters small trunk diameters at long distances, leading to DBSCAN clustering failure; second, visual detection errors under complex lighting conditions, producing incorrect ray directions; third, irregular obstacles in orchards such as support poles and irrigation equipment interfering with data association.

To address the problem of traditional RANSAC algorithms being susceptible to noise in orchard environments, this study introduced a geometric constraint algorithm that guides the line fitting process by analyzing geometric relationships between fruit trees and constructing angle histograms. Experimental results show that compared to traditional RANSAC algorithms, the geometric constraint algorithm improved fitting accuracy by 2.4%, inlier ratio by 5.1%, and computational efficiency by 47.06%. The primary reason for performance improvement lies in the geometric constraint mechanism’s full utilization of prior knowledge about pomegranate orchards. Fruit tree rows in pomegranate orchards are typically planted along the same direction, and this regularity provides powerful constraints for the algorithm. By constructing direction histograms and extracting principal direction angles, the algorithm can preferentially select candidate lines that conform to orchard planting patterns during the fitting process, thereby improving real-time efficiency and stability of fitting.
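The idea of steering RANSAC with a row-direction prior can be sketched as follows. This is a simplified illustration of a direction-histogram-guided RANSAC, not the authors' GCA: the function names, the length weighting of the histogram, the bin count, the angular tolerance, and the inlier distance are all assumptions, and the sample points are synthetic.

```python
import numpy as np

def principal_direction(points, bins=36):
    """Dominant direction (radians in [0, pi)) from a length-weighted histogram of pairwise angles."""
    pts = np.asarray(points, dtype=float)
    i, j = np.triu_indices(len(pts), k=1)
    diff = pts[j] - pts[i]
    angles = np.mod(np.arctan2(diff[:, 1], diff[:, 0]), np.pi)
    weights = np.linalg.norm(diff, axis=1)  # assumption: longer pairs vote more strongly
    hist, edges = np.histogram(angles, bins=bins, range=(0.0, np.pi), weights=weights)
    k = int(np.argmax(hist))
    return 0.5 * (edges[k] + edges[k + 1])  # centre of the strongest bin

def constrained_ransac(points, theta0, max_dev=np.deg2rad(10.0),
                       inlier_tol=0.1, iters=200, seed=0):
    """RANSAC line fit that skips candidate lines deviating from theta0 by more than max_dev."""
    rng = np.random.default_rng(seed)
    pts = np.asarray(points, dtype=float)
    best = None
    for _ in range(iters):
        a, b = pts[rng.choice(len(pts), size=2, replace=False)]
        d = b - a
        norm = np.linalg.norm(d)
        if norm == 0.0:
            continue
        theta = np.mod(np.arctan2(d[1], d[0]), np.pi)
        dev = abs(theta - theta0)
        dev = min(dev, np.pi - dev)  # directions wrap around at pi
        if dev > max_dev:
            continue  # candidate conflicts with the row-direction prior
        normal = np.array([-d[1], d[0]]) / norm
        inliers = np.abs((pts - a) @ normal) < inlier_tol
        if best is None or inliers.sum() > best.sum():
            best = inliers
    if best is None:
        return None
    # Refit on the inliers by total least squares (first principal axis).
    sel = pts[best]
    centroid = sel.mean(axis=0)
    _, _, vt = np.linalg.svd(sel - centroid)
    return centroid, vt[0], best

# Synthetic row: ten trunks on y = 0.5 x + 1 plus two outliers.
row = [(float(x), 0.5 * x + 1.0) for x in range(10)]
points = np.array(row + [(3.0, 5.0), (6.0, -2.0)])

theta0 = principal_direction(points)
centroid, direction, inliers = constrained_ransac(points, theta0)
fit_angle = np.mod(np.arctan2(direction[1], direction[0]), np.pi)
```

Rejecting candidates before the inlier count is evaluated is where the time saving in such a scheme would come from, since the distance computation over all points is skipped for implausible directions. The histogram bin width limits how precisely the prior direction is known, so the angular tolerance should not be set tighter than one bin.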

In the orchard navigation-line tracking trials, the proposed fusion method delivered strong overall performance: the average lateral error was 5.2 cm, the RMS was 6.6 cm, and the navigation success rate reached 95.4%. Relative to the single-LiDAR method, the average lateral error and RMS decreased by 25.7% and 26.7%, respectively, while the success rate increased by 19.5%. Relative to the single detection-based vision method, the average lateral error and RMS decreased by 21.2% and 19.5%, with a 5.6% gain in success rate. Compared with the DeepLab v3+ semantic-segmentation method, the average lateral error and RMS decreased by 24.6% and 23.3%, and the success rate improved by 8.4%. For single LiDAR, when trunks are thin or the ground has soil cover or glare, near-ground returns become sparse and contain outliers, so the RANSAC-fitted centerline is prone to abrupt jumps. For single vision, strong backlighting and local occlusions easily cause misses/false detections and unstable localization of trees. The DeepLab v3+ method yields a smooth curve under sunny straight-row conditions, but under cross-slope, backlit, and occluded scenes its robustness is lower than the detection-based vision baseline; mask fragmentation and S-shaped skeletons are also common, making the fitting sensitive to noise. By using vision to provide semantic candidates that constrain geometric search, then weighting the fit with LiDAR’s absolute scale and directional consistency—and further applying a directional histogram and Kalman smoothing to suppress transient outliers—the proposed method markedly reduces line jumps and polyline jaggedness. Fusing the two sensors enables stable navigation across varying environmental conditions.

In densely planted inter-row scenarios, the method still exhibits a lower error upper bound and a higher success-rate lower bound under the conservative-bounds regimen, indicating task-level stability. The approach relies on three keys: a geometric prior of row-direction consistency in tree rows, the detectability of trunk-type targets, and the clusterability of sparse LiDAR returns. As shown by the derivation of the number of LiDAR hits on a trunk during navigation in Section 2.5.1 (Equation (8)), the number of points increases when trunks are thicker or the range is smaller (e.g., in apple or citrus orchards). Within reasonable parameter ranges across crops and tree forms, the return density is sufficient to support stable clustering and the subsequent geometrically constrained fitting, thereby providing clear parameter bounds and theoretical feasibility for cross-orchard transferability. To verify transferability, future work will add small-scale cross-scene tests—e.g., one test plot each in an apple and a citrus orchard—using few-shot fine-tuning only for the detection head while keeping the fusion and geometric-constraint fitting modules unchanged. We will also add task-level metrics (long-term navigation stability and obstacle-avoidance success rate) and report them alongside the average lateral error, RMS, and success rate.

Based on our data and field experience, we draw the following conclusions about environmental and meteorological impacts on measurement quality. Preferred conditions: clear days with stable, moderate illumination; no precipitation or fog; dry ground; ambient wind speed ≤ 3 m·s⁻¹. Under these conditions, image illumination and shadow boundaries are most stable, ground-surface glare is minimal, spurious LiDAR returns are markedly reduced, and measurement quality is optimal. Adverse conditions: strong direct sunlight, higher wind speeds, rain, fog, or standing water. Strong direct sunlight produces long, sharp shadows and highlights that cause fluctuations in vision; LiDAR returns become mixed and outliers increase. Higher winds induce sway of branches and slender trunks, creating short-term occlusions and unstable tree localization. Rain/fog can fog the camera lens and LiDAR window, further degrading SNR. Recommended acquisition seasons and times: when compatible with production, prioritize concentrated data collection after pruning and before budbreak or in the early fruit-set stage (sparser canopy, less occlusion), and during dry or low-rainfall periods; avoid extended rainy or foggy periods whenever possible.

The results indicate that the proposed method can improve robot autonomous navigation in complex orchard environments and can support wider adoption of mechanized orchard operations. Future work will extend the application to more orchard types and planting patterns to verify the algorithm’s generalizability and portability, with the aim of providing effective technical solutions for robot navigation in densely planted orchards.

5. Conclusions

This study proposed a vision and LiDAR fusion-based inter-row navigation line extraction method for orchards, addressing the path extraction problem in densely planted pomegranate orchard environments. This research integrated a YOLOv8-ResCBAM trunk detection model, vision and LiDAR data fusion, and navigation line extraction algorithms under geometric constraints. In detection performance evaluation experiments, the YOLOv8-ResCBAM model improved trunk detection accuracy, achieving an mAP50 of 95.7% and a recall rate of 92.5%. The proposed reverse ray projection algorithm achieved precise data association with an association success rate of 92.6%. The proposed geometric constraint algorithm improved fitting accuracy by 2.4%, inlier ratio by 5.1%, and computational efficiency by 47.06% compared to traditional RANSAC algorithms. In the orchard navigation-line tracking trials, the vision and 2D LiDAR fusion-based navigation line extraction method reduced the average lateral error by 25.7%, 21.2%, and 24.6% relative to the single 2D LiDAR, single detection-based vision, and DeepLab v3+ semantic-segmentation methods, respectively, lowering the average lateral error to 5.2 cm and achieving a navigation-line extraction success rate of 95.4%. In addition, 95% conservative bounds were reported: a conservative upper bound of 6.18 cm for the lateral error, 7.78 cm for the lateral-error RMS, and a conservative lower bound of 91.28% for the success rate—all superior to the three baselines—demonstrating task-level stability for densely planted inter-row operation.

This study provides a research approach and a practical solution for autonomous robot navigation in densely planted orchard environments, and offers a reference for advancing orchard mechanization and intelligent operation. As the related technologies continue to improve, the method is expected to find broader application in modern agriculture.

Author Contributions

Methodology, Z.B.; Software, X.Z.; Formal analysis, K.Y.; Investigation, X.D.; Resources, B.Q.; Writing—original draft, Z.S.; Writing—review & editing, X.H.; Visualization, C.J.; Project administration, Q.W. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by the Talent Introduction Project of Anhui Science and Technology University (JXYJ202204) and the 2024 University Research Projects of Anhui Province (Study on the mechanism of plant protection UAV obstacle avoidance on droplet deposition in the obstacle neighborhoods in rice terraces, 2024AH050305).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy and permissions restrictions.

Conflicts of Interest

The authors declare no conflict of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).