1. Introduction

With the rapid development of intelligent shipping technology, the shipbuilding industry is undergoing a profound transformation from traditional manufacturing to intelligent and digitalized production. To guide this change, major shipping nations and international organizations have introduced relevant regulations. For instance, the China Classification Society (CCS) has issued the “Smart Ship Specifications,” and the International Association of Classification Societies (IACS) has successively promulgated corresponding specifications and standards [1]. In this context, equipment health management has become one of the key supporting technologies for smart ships. As core components of the shaft system, rolling bearings have a high failure rate, and their health status directly affects the operational safety of the entire shaft system. A bearing failure may cause irreversible damage to the vessel, leading to serious maritime safety incidents and significant economic losses [2,3,4]. Therefore, research on the health condition monitoring of rolling bearings is not only of theoretical value but also of significant practical importance for ensuring vessel safety and raising the intelligence level of ships.

However, under the influence of factors such as high-speed vessel operation, multi-source noise interference, and multi-fault coupling, the vibration signals of rolling bearings exhibit complex characteristics, which make feature extraction difficult and lower fault diagnosis accuracy [5]. Because multiple bearings are coupled in marine propulsion systems, a fault signal contains not only the features of the faulty bearing itself but also the coupled dynamics of adjacent bearings and the shaft structure, which further aggravates feature masking and makes recognition harder. At the same time, the deployment of on-site detection equipment is subject to practical constraints such as limited space, harsh environmental conditions, and real-time requirements, making it difficult to apply traditional diagnostic methods directly in marine engineering practice.

In the current field of rolling bearing fault diagnosis, extensive research has been conducted on vibration signal processing and feature extraction. Traditional methods primarily focus on a single analysis domain, using time-domain, frequency-domain, or time-frequency signal processing techniques. Time-domain analysis reflects the overall characteristics of a signal through statistical parameters such as the root mean square (RMS) value and the peak (crest) factor; frequency-domain analysis reveals periodic components; and time-frequency analysis combines time and frequency information, making it suitable for non-stationary signals [6]. With the advancement of signal processing technology, methods such as the short-time Fourier transform (STFT) [7], wavelet transform (WT) [8], empirical mode decomposition (EMD) [9], and variational mode decomposition (VMD) [10] have further improved the effectiveness and accuracy of feature extraction. Research on signal processing and feature extraction continues to deepen, with techniques such as VMD parameter optimization [11], blind deconvolution based on candidate fault frequencies [12], and envelope spectrum optimization [13] providing effective support for the extraction and identification of fault signals.
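As a concrete illustration of the single-domain descriptors mentioned above, the following minimal Python sketch computes three common time-domain statistics (RMS, crest/peak factor, and kurtosis) and a one-sided amplitude spectrum as a simple frequency-domain view. The function names and the synthetic 100 Hz test signal are hypothetical; this is not the 21-dimensional feature set used later in this paper.

```python
import numpy as np

def time_domain_features(x):
    """RMS, crest (peak) factor and kurtosis of a 1-D vibration segment."""
    x = np.asarray(x, dtype=float)
    rms = np.sqrt(np.mean(x ** 2))
    crest_factor = np.max(np.abs(x)) / rms  # peak value divided by RMS
    centered = x - np.mean(x)
    # Non-excess kurtosis: about 3 for Gaussian noise, 1.5 for a pure sine
    kurtosis = np.mean(centered ** 4) / np.mean(centered ** 2) ** 2
    return {"rms": rms, "crest_factor": crest_factor, "kurtosis": kurtosis}

def amplitude_spectrum(x, fs):
    """One-sided amplitude spectrum of a real signal sampled at fs Hz."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    spec = np.abs(np.fft.rfft(x)) / n
    # Double every bin except DC (and Nyquist when n is even) to fold in negative frequencies
    if n % 2 == 0:
        spec[1:-1] *= 2
    else:
        spec[1:] *= 2
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    return freqs, spec

# Synthetic example: a unit-amplitude 100 Hz sine sampled at 20 kHz
fs = 20_000
t = np.arange(4000) / fs
x = np.sin(2 * np.pi * 100 * t)
feats = time_domain_features(x)
freqs, spec = amplitude_spectrum(x, fs)
peak_freq = freqs[np.argmax(spec)]
```

For this sine, the RMS value is about 0.707, the crest factor about 1.414, and the spectral peak lies at 100 Hz. Impulsive bearing faults typically raise the crest factor and kurtosis above these values.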

However, studies have shown that single-domain signal processing methods still suffer from incomplete information extraction and poor generalization under complex operating conditions. Consequently, multi-domain feature fusion has become a research hotspot in recent years. By integrating features from different analysis domains, these approaches capture fault characteristics more comprehensively and can significantly improve diagnostic accuracy and robustness. Yan et al. [14] proposed an SVM classification algorithm with multi-domain feature optimization for bearing fault diagnosis. Wang et al. [15] developed the MDF-Relief-Bayes-KNN model, which combines Relief feature selection with Bayesian optimization of a k-nearest neighbors classifier. Li et al. [16] presented a multi-level feature fusion strategy for multi-domain vibration signal analysis. Wang et al. [17] introduced a fault diagnosis method that combines multi-domain features with a whale-optimized support vector machine. These studies demonstrate the effectiveness of multi-domain approaches in improving diagnostic performance.

While multi-domain feature extraction has shown significant promise, using the extracted features for accurate fault classification is another critical challenge. Pattern recognition, the core component of a fault diagnosis system, directly determines classification performance and therefore strongly influences overall diagnostic accuracy. Traditional machine learning methods have been widely applied to bearing fault diagnosis, including classification and regression trees (CARTs) [18], random forests (RFs) [19,20], the AdaBoost algorithm [21], support vector machines (SVMs) [22], and k-nearest neighbor classification (K-NN) [23]. In recent years, deep learning approaches have received considerable attention in this domain. Deep learning models such as convolutional neural networks (CNNs) [24], recurrent neural networks (RNNs) [25], and long short-term memory networks (LSTMs) [26] can automatically extract high-level features and perform very well on large-scale data. However, these methods typically require large amounts of labeled training data and offer limited interpretability.

Although each of the aforementioned methods has its advantages, the complexity of marine equipment and the coupled characteristics of bearing failures place higher demands on algorithm performance [27,28,29]. Support vector machines (SVMs) improve generalization by minimizing structural risk; however, they still suffer from high computational complexity, parameter sensitivity, and insufficient performance with small sample sizes [30]. The least squares support vector machine (LSSVM), an improved version of the SVM, replaces the inequality constraints with equality constraints and a least-squares error term, so that training reduces to solving a set of linear equations instead of a quadratic programming problem. It combines strong generalization with low computational cost, making it particularly suitable for the real-time engineering requirements of ships. Gao et al. [31] proposed a rolling bearing fault diagnosis method based on LSSVMs that achieved high diagnostic performance with a short classification time.
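To make the "linear system instead of quadratic program" point concrete, here is a minimal sketch of a binary LSSVM classifier in the standard Suykens formulation, where training is a single call to a linear solver. The parameter names `gam` (regularization/penalty) and `sig2` (kernel width) follow the naming used later in this paper. The RBF form exp(-||x - z||^2 / sig2), the helper names, and the two-cluster toy data are assumptions for illustration. Multi-class diagnosis over 18 labels would additionally need a one-vs-one or one-vs-all encoding, which is not shown.

```python
import numpy as np

def rbf_kernel(A, B, sig2):
    """RBF kernel exp(-||a - b||^2 / sig2) between the rows of A and B (assumed form)."""
    sq = (np.sum(A ** 2, axis=1)[:, None]
          + np.sum(B ** 2, axis=1)[None, :]
          - 2.0 * A @ B.T)
    return np.exp(-np.maximum(sq, 0.0) / sig2)

def lssvm_fit(X, y, gam, sig2):
    """Train a binary LSSVM classifier (labels in {-1, +1}).

    Solves the linear KKT system
        [0   y^T             ] [b    ]   [0]
        [y   Omega + I / gam ] [alpha] = [1]
    with Omega_kl = y_k * y_l * K(x_k, x_l).
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(y)
    omega = np.outer(y, y) * rbf_kernel(X, X, sig2)
    A = np.zeros((n + 1, n + 1))
    A[0, 1:] = y
    A[1:, 0] = y
    A[1:, 1:] = omega + np.eye(n) / gam
    rhs = np.concatenate(([0.0], np.ones(n)))
    sol = np.linalg.solve(A, rhs)  # one linear solve replaces the QP of a standard SVM
    return {"X": X, "y": y, "b": sol[0], "alpha": sol[1:], "sig2": sig2}

def lssvm_predict(model, X_new):
    """Class = sign(sum_k alpha_k * y_k * K(x, x_k) + b)."""
    K = rbf_kernel(np.asarray(X_new, dtype=float), model["X"], model["sig2"])
    return np.sign(K @ (model["alpha"] * model["y"]) + model["b"])

# Two well-separated synthetic clusters (illustrative data only)
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-2.0, 0.5, size=(20, 2)), rng.normal(2.0, 0.5, size=(20, 2))])
y = np.concatenate([-np.ones(20), np.ones(20)])
model = lssvm_fit(X, y, gam=1.2, sig2=1.5)
train_acc = np.mean(lssvm_predict(model, X) == y)
centre_pred = lssvm_predict(model, np.array([[-2.0, -2.0], [2.0, 2.0]]))
```

Because the classifier depends on `gam` and `sig2` only through this linear system, each candidate parameter pair is cheap to evaluate. This is what makes wrapping the LSSVM in a swarm optimizer practical.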

However, the diagnostic performance of an LSSVM depends heavily on the choice of its penalty parameter and kernel function parameter. Manual tuning is time-consuming and does not yield optimal results under varying operating conditions, so parameter optimization algorithms are needed to tune these parameters automatically. Deng et al. [32] proposed an enhanced particle swarm optimization (PSO) algorithm to optimize LSSVM parameters, thereby constructing an optimal LSSVM classifier for fault classification. Chen et al. [33] introduced a bearing fault diagnosis method that integrates an improved grey wolf optimization (IGWO) algorithm with the LSSVM model. Jin et al. [34] proposed a fault diagnosis technique that uses a modified particle swarm optimization (MPSO) algorithm to enhance the LSSVM. These studies indicate that swarm intelligence optimization algorithms are a viable way to improve diagnostic efficiency and performance.

Recently, numerous scholars have introduced innovative swarm intelligence algorithms. Among these, the hippopotamus optimization algorithm (HO) was proposed by Amiri et al. [35] in 2024. Inspired by the behavior of hippopotamuses, it simulates their position updates, defense strategies, and escape behavior in rivers or ponds. HO is notable for converging rapidly towards the optimal solution while effectively avoiding local minima. However, its performance can still degrade, and it can still become trapped in local optima, on complex problems [36].

This paper addresses two key challenges in rolling bearing fault diagnosis: (1) extracting multi-domain features from raw vibration signals to comprehensively evaluate bearing health status, and (2) developing a high-precision LSSVM fault identification model with adaptive parameter optimization. The main contributions of this work are as follows:

- (1) Time-frequency joint analysis is used for multi-domain feature extraction, comprehensively characterizing the bearing’s health status through statistical indices such as vibration energy and spectral characteristics.

- (2) A novel hippopotamus optimization algorithm improved with logistic chaos mapping (LCM-HO) is proposed. By enhancing population diversity, it strengthens the global search capability of the metaheuristic and its ability to escape local optima. LCM-HO is then applied to adaptively optimize the kernel parameter and penalty factor of the least squares support vector machine (LCM-HO-LSSVM).

- (3) The superiority of LCM-HO is verified through multi-metric comparisons with HO, the grey wolf optimizer (GWO), and SSA on benchmark test functions. Furthermore, the proposed LCM-HO-LSSVM diagnostic method is validated on the Paderborn University (PU) bearing dataset and on data from a self-built marine bearing test platform. Evaluations based on confusion matrices and accuracy confirm its superior diagnostic precision and generalization performance.
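The chaos-mapping idea in contribution (2) can be illustrated with the standard logistic map x_{k+1} = μ·x_k·(1 − x_k), which is chaotic on (0, 1) for μ = 4. The sketch below uses this map to spread an initial population over a search box. The choices μ = 4, the seed x0 = 0.7, the linear scaling onto the bounds, and the bounds themselves are assumptions for illustration. This is not necessarily the exact LCM-HO initialization described in Section 3.

```python
import numpy as np

def logistic_sequence(n, x0=0.7, mu=4.0):
    """First n iterates of the logistic map x_{k+1} = mu * x_k * (1 - x_k).

    With mu = 4 the map is chaotic on (0, 1); x0 must avoid values such as
    0, 0.25, 0.5, 0.75 and 1, which fall onto fixed points.
    """
    seq = np.empty(n)
    x = x0
    for k in range(n):
        x = mu * x * (1.0 - x)
        seq[k] = x
    return seq

def chaotic_population(pop_size, lb, ub, x0=0.7, mu=4.0):
    """Initial population: chaotic values in [0, 1] scaled linearly to [lb, ub]."""
    lb = np.asarray(lb, dtype=float)
    ub = np.asarray(ub, dtype=float)
    chaos = logistic_sequence(pop_size * lb.size, x0, mu).reshape(pop_size, lb.size)
    return lb + chaos * (ub - lb)

# Hypothetical bounds for (gam, sig2); the paper's actual search bounds are not reproduced here
pop = chaotic_population(20, lb=[0.01, 0.01], ub=[100.0, 100.0])
```

Compared with uniform pseudo-random sampling, the logistic map is deterministic given x0 but non-repeating, which is the property usually cited for improving population diversity.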

The structure of this paper is organized as follows:

Section 2 introduces the fundamental principles of HO-LSSVM.

Section 3 describes the LCM-HO algorithm and its LSSVM parameter optimization process.

Section 4 presents the proposed fault diagnosis framework.

Section 5 validates the effectiveness of the proposed method using the PU dataset and self-collected experimental data, comparing performance, feature extraction, and fault identification results.

Section 6 presents the conclusions and future work.

5. Experiment

5.1. Algorithm Performance Test

To verify the performance advantages of the LCM-HO algorithm, this paper adopts the test functions from reference [39] and compares LCM-HO with the original HO algorithm, GWO, and SSA on several performance indicators.

Table 3 summarizes the mathematical expressions, search ranges, and theoretical optimal values of the seven benchmark test functions. Functions F1–F4 are unimodal and are used to assess convergence accuracy and local search (exploitation) capability. Functions F5–F7 are multimodal and are used to evaluate the algorithm’s ability to handle complex problems and the effectiveness of its global search.

Following the performance evaluation methods for multi-strategy metaheuristic optimization algorithms widely used in references [40,41], this study quantitatively evaluates algorithm performance with four core indicators: (1) Min, the best result across all runs, which characterizes the algorithm’s best optimization ability; (2) Avg, the mean result, which reflects the algorithm’s overall ability to escape local optima, with values closer to the theoretical optimum indicating better optimization; (3) Std, the standard deviation, which measures performance variability and approaches 0 for a robust algorithm; and (4) Max, the worst result, which reveals the algorithm’s risk boundary and engineering applicability. Together, the four indicators form an evaluation system covering ideal performance, average performance, stability, and risk control.
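The four indicators can be computed from the best fitness value of each independent run, as in the sketch below. Two assumptions apply. First, Std uses the N − 1 (sample) normalization, which is MATLAB's default for `std`. Second, the runs come from a plain random search on the sphere function, a hypothetical stand-in optimizer and objective rather than HO, LCM-HO, GWO, SSA, or the Table 3 functions.

```python
import numpy as np

def summarize_runs(best_values):
    """Min / Avg / Std / Max of the best fitness values from independent runs.

    Std uses the sample (N - 1) normalisation, matching MATLAB's default std.
    """
    v = np.asarray(best_values, dtype=float)
    return {"Min": v.min(), "Avg": v.mean(), "Std": v.std(ddof=1), "Max": v.max()}

def sphere(x):
    """Unimodal sphere function; theoretical optimum 0 at x = 0."""
    return float(np.sum(np.asarray(x) ** 2))

def random_search(f, dim, lb, ub, n_evals, rng):
    """Stand-in optimizer (plain random search), NOT HO/LCM-HO/GWO/SSA."""
    samples = rng.uniform(lb, ub, size=(n_evals, dim))
    return min(f(s) for s in samples)

# 30 independent runs, each with its own seed, mirroring the protocol in the text
runs = [random_search(sphere, dim=30, lb=-100.0, ub=100.0, n_evals=1000,
                      rng=np.random.default_rng(seed)) for seed in range(30)]
stats = summarize_runs(runs)
```

A Std of exactly 0, as reported for some functions in Table 4, simply means that every run returned the same best value.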

To ensure a fair comparison, all algorithms use the same settings: population size N = 30, problem dimension dim = 30, and maximum number of iterations T = 400. To mitigate random errors, each algorithm is executed independently 30 times. The Min, Avg, Std, and Max results of the four algorithms on each of the seven test functions are listed in Table 4, which forms the basis of the following analysis.

The experimental data show that LCM-HO has clear advantages across all evaluated functions. As shown in Table 4, it reaches the theoretical optimal value of 0 on F1 (unimodal), F6 (multimodal), and F7 (multimodal). Although it does not fully converge on F2–F4, its convergence accuracy (Min) on these functions is 0.5 to 2 orders of magnitude better than that of HO, 0.5 to 22 orders of magnitude better than that of SSA, and 2 to 152 orders of magnitude better than that of GWO. On complex multimodal functions such as F5–F6, LCM-HO retains its accuracy advantage while achieving convergence results comparable to those of HO and SSA.
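An "orders of magnitude" gap between two Min values is the base-10 logarithm of their ratio. The ratio is undefined when one Min is exactly 0, as for LCM-HO on F1, F6, and F7. The values below are hypothetical and are not taken from Table 4.

```python
import math

def orders_of_magnitude(worse_min, better_min):
    """Gap between two positive Min values in decades: log10(worse / better)."""
    if worse_min <= 0 or better_min <= 0:
        raise ValueError("undefined when either Min is 0 (or negative)")
    return math.log10(worse_min / better_min)

# Hypothetical Min values, not taken from Table 4
gap = orders_of_magnitude(3.2e-8, 1.0e-10)  # about 2.5 decades
```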

In terms of stability, LCM-HO performs best on two critical indices, the standard deviation and the mean value. Both are the lowest among the four algorithms on all test functions, and the standard deviation on F1 and F5–F7 is 0, which substantiates its robustness. In terms of risk control, the worst-case value (Max) of LCM-HO is better than that of the next-best algorithm, indicating improved risk control capability.

In conclusion, logistic chaos mapping significantly enhances the HO algorithm, facilitating an optimization process characterized by both high precision convergence and strong resilience against interference. With the optimization algorithm validated, the following experiments evaluate the complete LCM-HO-LSSVM framework for bearing fault diagnosis using two datasets: the Paderborn University dataset for general validation and a self-constructed ship bearing test rig for marine-specific applications.

5.2. Experiment 1—PU Dataset

The Paderborn University bearing dataset covers a wide range of operating conditions. Its signals come from bearings damaged in accelerated life tests, which are more complex than those of artificially damaged bearings and therefore more representative of real bearing faults. This dataset thus provides a better test of the effectiveness of the proposed method. The benchmark dataset, established by Lessmeier et al. [42], was collected on a test rig with 6203 deep groove ball bearings (Figure 5). It covers three failure modes: single-point damage on the inner ring, single-point damage on the outer ring, and composite damage (including pitting, corrosion, and indentations caused by both damage mechanisms). Damage severity is categorized into three levels (Figure 6, Table 5).

The specific data collection settings are as follows:

- (1) Data acquisition: data were obtained from an accelerated life test rig (Figure 7). The bearing states and damage types are detailed in Table 6 and Table 7.

- (2) Operating conditions: four load-speed combinations were set (Table 8).

- (3) Sampling parameters: the vibration signal sampling frequency is 64 kHz, and each recording lasts 4 s.

- (4) Data processing: each sample contains 4096 data points, balancing computational efficiency and analytical accuracy.

Based on these settings, 20 valid samples were extracted for each bearing under each of the four operating conditions (80 samples per bearing, as listed in Tables 6 and 7). The result is a comprehensive multidimensional dataset that spans failure mechanisms, damage locations, and damage severities.
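The sample counts follow from simple arithmetic. A 4 s recording at 64 kHz has 256,000 points, enough for 62 non-overlapping 4096-point segments. Keeping 20 of them per operating condition gives 4 × 20 = 80 samples per bearing. The sketch assumes non-overlapping segments taken from the start of each recording; the text does not state which segments were used, and `split_segments` is a hypothetical helper.

```python
import numpy as np

FS_HZ = 64_000            # PU sampling frequency
RECORD_S = 4              # duration of one recording in seconds
SEG_LEN = 4096            # points per sample
SAMPLES_PER_CONDITION = 20
N_CONDITIONS = 4          # Table 8

def split_segments(signal, seg_len, n_segments):
    """First n_segments non-overlapping segments of length seg_len (assumed scheme)."""
    signal = np.asarray(signal)
    available = len(signal) // seg_len
    if n_segments > available:
        raise ValueError(f"only {available} full segments available")
    return signal[: n_segments * seg_len].reshape(n_segments, seg_len)

record = np.zeros(FS_HZ * RECORD_S)  # placeholder for one 4 s vibration recording
segments = split_segments(record, SEG_LEN, SAMPLES_PER_CONDITION)
max_segments = len(record) // SEG_LEN
samples_per_bearing = SAMPLES_PER_CONDITION * N_CONDITIONS
```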

Figure 5.

Test bed for data testing.


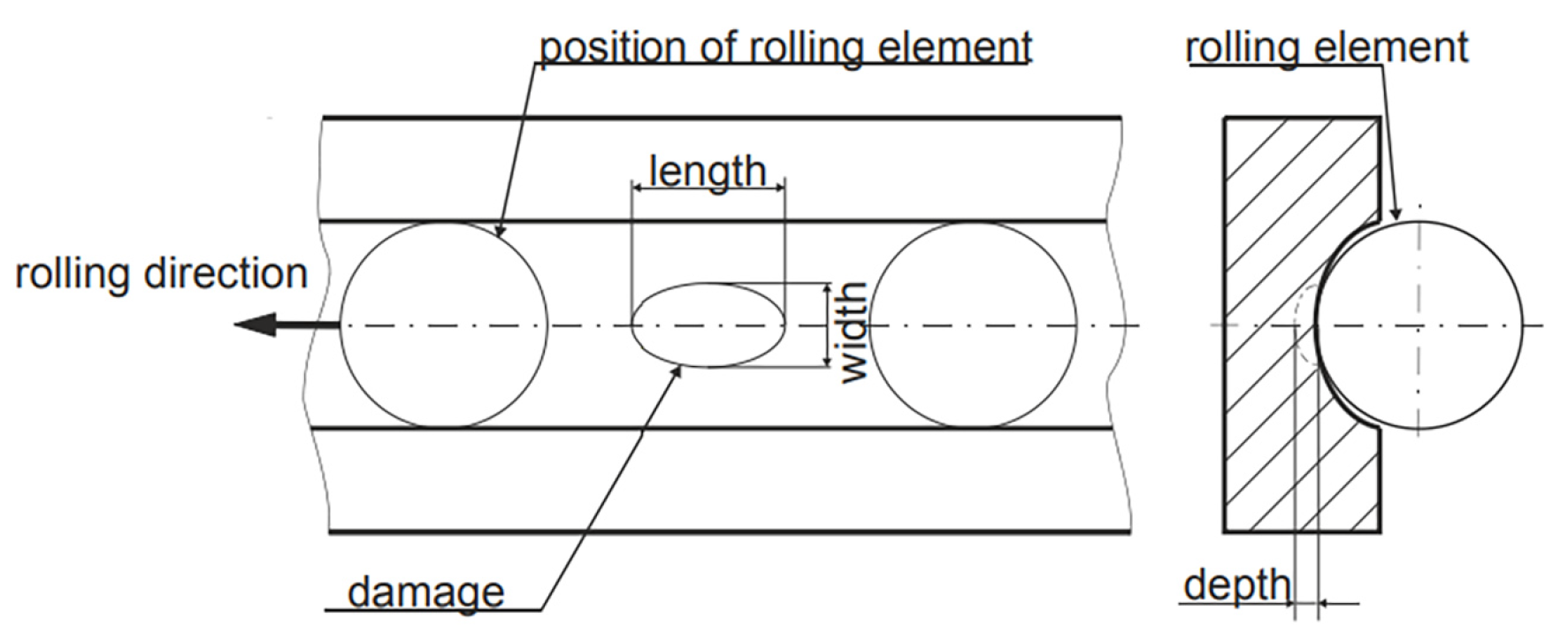

Figure 6.

Damage geometry.


Figure 7.

Accelerated life test rig.


Table 5.

Determination of damage level by degree of damage [42].


| Damage Level | Assigned Percentage Value | 6203 Bearing Limit |
|---|---|---|
| 1 | 0–2% | ≤2 mm |
| 2 | 2–5% | >2 mm |
| 3 | 5–15% | >4.5 mm |

Table 6.

Healthy bearing information and label assignment [42].


| Bearing Status | Bearing Code | Runtime/h | Radial Force/N | Speed/rpm | Sample Size | Label |
|---|---|---|---|---|---|---|
| Healthy | K001 | 50 | 1000–3000 | 1500–2000 | 80 | 1 |
| | K002 | 19 | 3000 | 2900 | 80 | 2 |
| | K003 | 1 | 3000 | 3000 | 80 | 3 |
| | K004 | 5 | 3000 | 3000 | 80 | 4 |
| | K005 | 10 | 3000 | 3000 | 80 | 5 |

Table 7.

Damaged bearing failure types and label assignment [42].


| Bearing Status | Bearing Code | Damage Mode | Combination | Arrangement | Damage Level | Damage Characteristic | Sample Size | Label |
|---|---|---|---|---|---|---|---|---|
| Outer ring (OR) | KA04 | Fatigue pitting | S | No repetition | 1 | Single point | 80 | 6 |
| | KA15 | Indentation | S | No repetition | 1 | Single point | 80 | 7 |
| | KA16 | Fatigue pitting | R | Random | 2 | Single point | 80 | 8 |
| | KA22 | Fatigue pitting | S | No repetition | 1 | Single point | 80 | 9 |
| | KA30 | Indentation | R | Random | 1 | Distributed | 80 | 10 |
| Inner ring (IR) | KI04 | Fatigue pitting | M | No repetition | 1 | Single point | 80 | 11 |
| | KI14 | Fatigue pitting | M | No repetition | 1 | Single point | 80 | 12 |
| | KI16 | Fatigue pitting | S | No repetition | 3 | Single point | 80 | 13 |
| | KI18 | Fatigue pitting | S | No repetition | 2 | Single point | 80 | 14 |
| | KI21 | Fatigue pitting | S | No repetition | 1 | Single point | 80 | 15 |
| Composite damage (C) | KB23 | Fatigue pitting | M | Random | 2 | Single point | 80 | 16 |
| | KB24 | Fatigue pitting | M | No repetition | 3 | Distributed | 80 | 17 |
| | KB27 | Indentation | M | Random | 1 | Distributed | 80 | 18 |

Table 8.

Four operating conditions [42].


| No. | Rotational Speed/rpm | Load Torque/N·m | Radial Force/N | Setting Name |
|---|---|---|---|---|
| 1 | 1500 | 0.7 | 1000 | N15_M07_F10 |
| 2 | 900 | 0.7 | 1000 | N09_M07_F10 |
| 3 | 1500 | 0.1 | 1000 | N15_M01_F10 |
| 4 | 1500 | 0.7 | 400 | N15_M07_F04 |
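The setting names in Table 8 encode the operating condition, as can be read off the table. N gives the speed in hundreds of rpm, M the load torque in tenths of N·m, and F the radial force in hundreds of N. The helper below (hypothetical, not part of the dataset's tooling) decodes them. This kind of helper is handy when grouping recordings by condition.

```python
def parse_setting(name):
    """Decode a PU setting name such as 'N15_M07_F10'.

    N: speed in hundreds of rpm, M: load torque in tenths of N*m,
    F: radial force in hundreds of N (as read off Table 8).
    """
    speed_code, torque_code, force_code = name.split("_")
    return {
        "speed_rpm": int(speed_code[1:]) * 100,
        "torque_nm": int(torque_code[1:]) / 10,
        "radial_force_n": int(force_code[1:]) * 100,
    }

conditions = [parse_setting(s) for s in
              ("N15_M07_F10", "N09_M07_F10", "N15_M01_F10", "N15_M07_F04")]
```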

5.2.1. Fault Identification Results 1

All computational experiments were conducted on a computer with the following specifications: 13th Gen Intel(R) Core(TM) i9-13900H processor (Intel Corporation, Santa Clara, CA, USA) @ 2.60 GHz, 32.0 GB RAM (Micron Technology, Boise, ID, USA), Windows 11 operating system, and MATLAB R2023a environment.

The raw signals collected from the test rig were processed to extract multi-domain features, yielding the feature datasets illustrated in Table 9. These feature datasets were then fed into three classifiers: LSSVM, HO-LSSVM, and LCM-HO-LSSVM. For HO-LSSVM and LCM-HO-LSSVM, the hippopotamus population size was set to 20, the maximum number of iterations to 30, and the search range to Lowerbound = [ , ] and Upperbound = [ , ]. The penalty (regularization) parameter gam and the kernel parameter sig2 were arbitrarily set to gam = 1.2 and sig2 = 1.5.

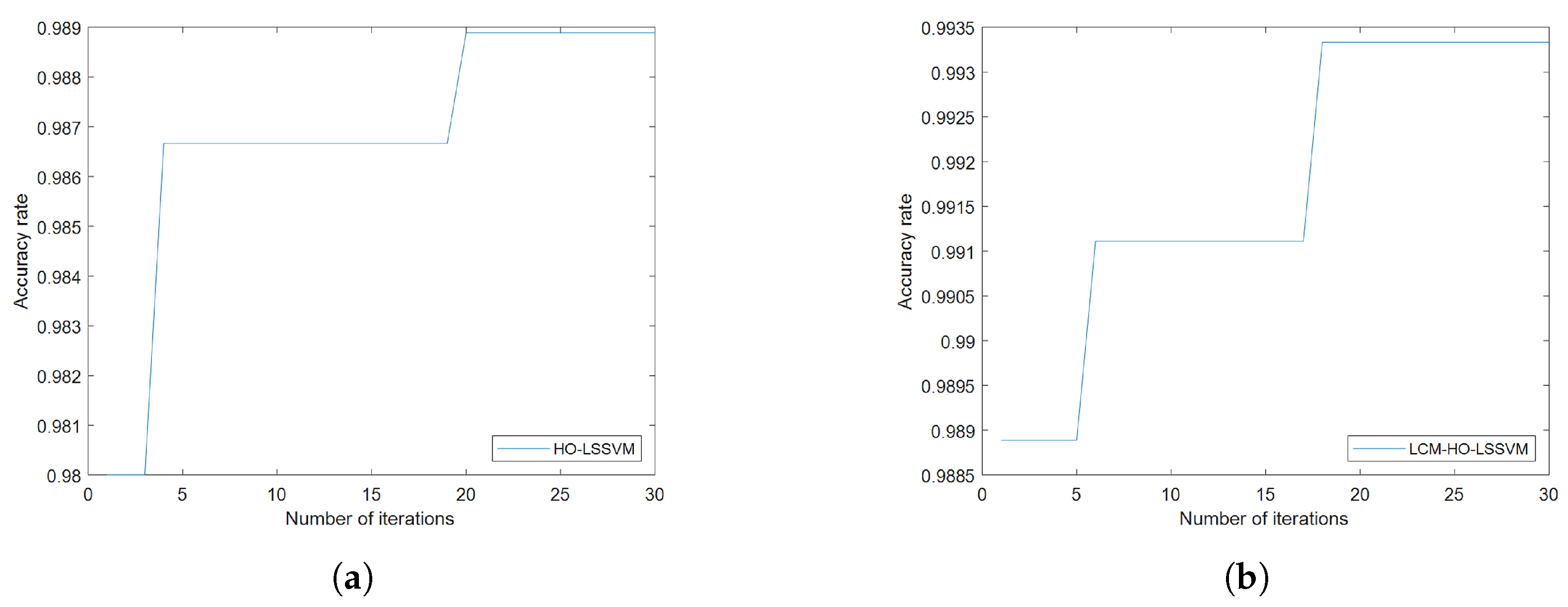

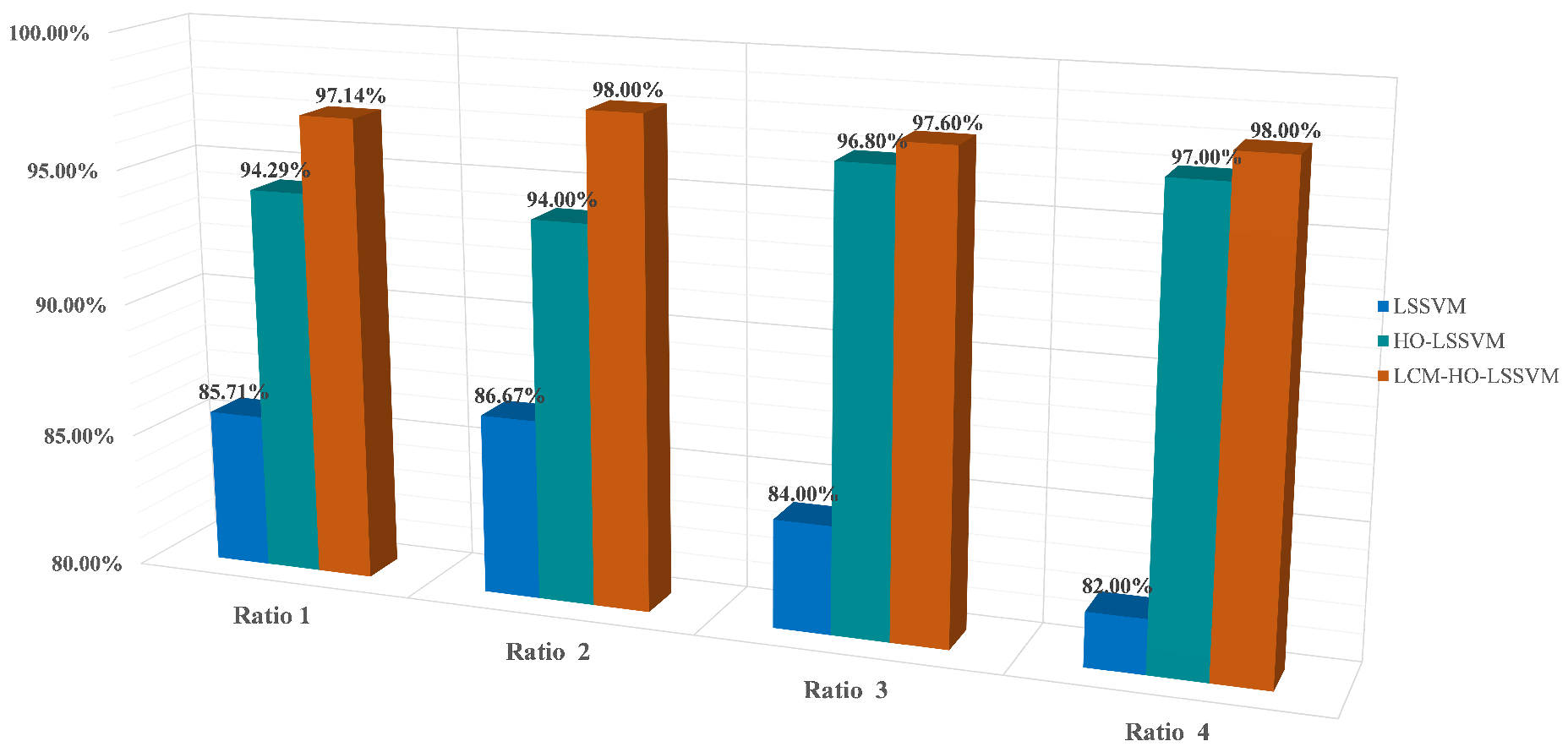

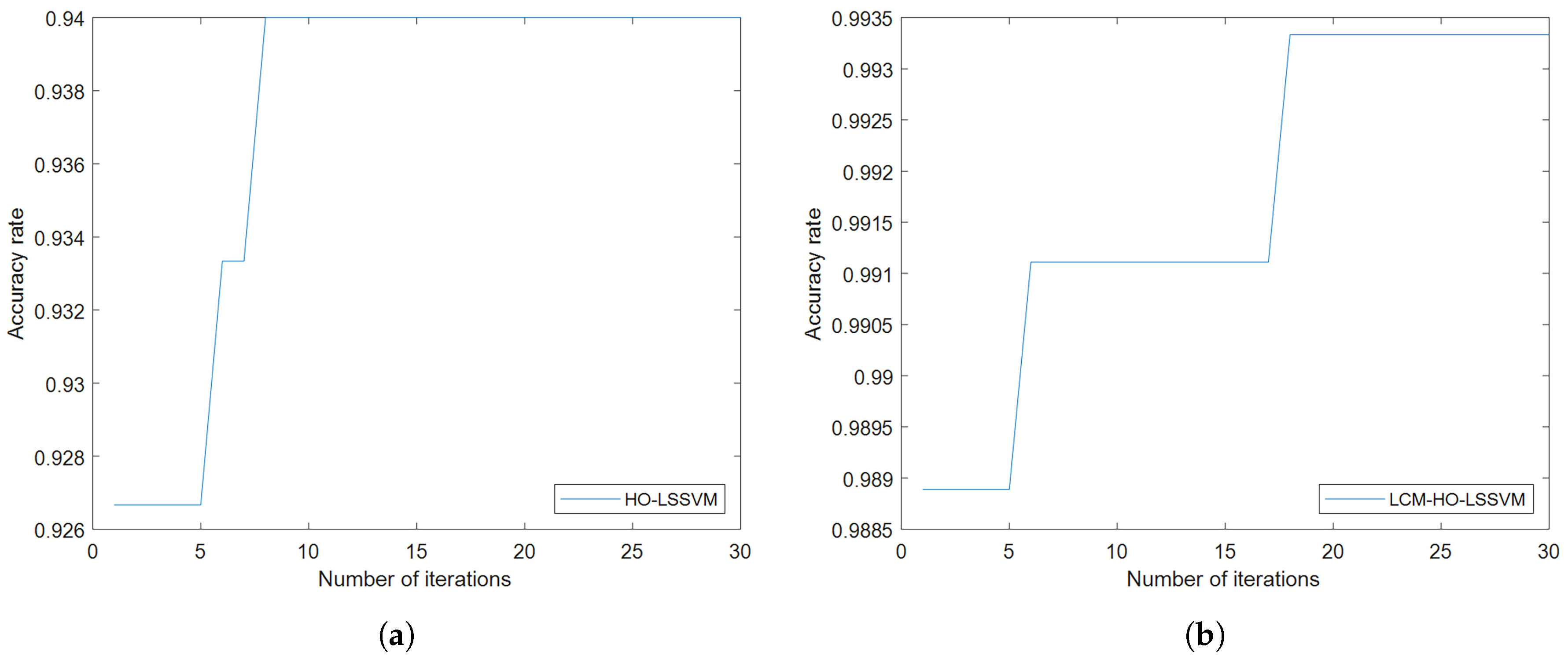

To further assess classifier performance, experiments were conducted with different ratios of training to test samples. Figure 8 shows the convergence behavior of the optimization algorithms under ratio 2, that is, the evolution of accuracy over 30 iterations for HO-LSSVM and LCM-HO-LSSVM. LCM-HO-LSSVM achieves higher accuracy and more stable convergence, indicating that the chaotic mapping strategy effectively enhances the optimization process. The classification results are presented in Figure 9, and the statistical results in Table 10 and Figure 10.

Table 9.

Partial multi-domain feature dataset.


| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | … | 21 |
|---|---|---|---|---|---|---|---|---|---|
| 0.7214 | 0.6775 | 0.4502 | 0.1728 | 0.6954 | 0.5994 | 0.5054 | 0.3623 | … | 0.7424 |
| 0.4291 | 0.3703 | 0.4522 | 0.3805 | 0.8489 | 0.7715 | 0.4140 | 0.5551 | … | 0.2023 |
| 0.6410 | 0.5867 | 0.3585 | 0.1887 | 0.7056 | 0.5797 | 0.2781 | 0.2988 | … | 0.5399 |
| 0.4126 | 0.3572 | 0.6395 | 0.4223 | 0.9915 | 0.9850 | 0.4101 | 0.8188 | … | 0.0993 |
| 0.3847 | 0.3312 | 0.2568 | 0.4199 | 0.6238 | 0.5513 | 0.2947 | 0.3229 | … | 0.2437 |
| 0.3947 | 0.3413 | 0.2598 | 0.3370 | 0.6045 | 0.5262 | 0.1845 | 0.3206 | … | 0.2955 |

Figure 8.

Convergence curves of HO-LSSVM and LCM-HO-LSSVM over 30 iterations. (a) HO-LSSVM iteration curve; (b) LCM-HO-LSSVM iteration curve.


Table 10.

Accuracy of each classifier.


| Ratio (Training:Testing) | LSSVM | HO-LSSVM | LCM-HO-LSSVM |
|---|---|---|---|
| Ratio 1 (60:20) | 78.610% | 98.889% | 98.889% |
| Ratio 2 (55:25) | 79.110% | 98.670% | 99.110% |
| Ratio 3 (50:30) | 77.410% | 98.333% | 98.703% |
| Ratio 4 (40:40) | 50.280% | 88.472% | 91.111% |
| Ratio 5 (30:50) | 45.670% | 79.778% | 81.333% |

Validation on the multi-condition complex damage dataset shows that LCM-HO-LSSVM matches or outperforms the comparison algorithms at every training/testing ratio. The experimental data reveal the following:

With the larger training sets (N = 60, 55, and 50), both LCM-HO-LSSVM and HO-LSSVM exceed 98% accuracy, confirming the inherent advantages of the HO framework; at N = 55, LCM-HO-LSSVM reaches 99.11%.

At N = 55 and N = 50, the accuracy of LCM-HO-LSSVM remains between 98.70% and 99.11%, higher than that of HO-LSSVM (98.67% and 98.33%) and of LSSVM.

When the training samples are further reduced to N = 40 and N = 30, LCM-HO-LSSVM improves on the unoptimized LSSVM by 40.831 and 35.663 percentage points, respectively.

This performance advantage arises from the synergy between multi-domain feature engineering and chaos optimization. When ample samples are available, the search capability of the HO algorithm is fully exploited. When samples are limited, the perturbation mechanism of logistic chaos mapping effectively mitigates overfitting, allowing the model to maintain stable classification performance despite the smaller sample count and the 21-dimensional feature space.
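Because Table 10 reports accuracies in percent, the gains discussed above are differences in percentage points. The short sketch below recomputes them directly from Table 10. The dictionary layout and helper name are illustrative.

```python
# Accuracies (%) from Table 10: ratio -> (LSSVM, HO-LSSVM, LCM-HO-LSSVM)
TABLE_10 = {
    "Ratio 1 (60:20)": (78.610, 98.889, 98.889),
    "Ratio 2 (55:25)": (79.110, 98.670, 99.110),
    "Ratio 3 (50:30)": (77.410, 98.333, 98.703),
    "Ratio 4 (40:40)": (50.280, 88.472, 91.111),
    "Ratio 5 (30:50)": (45.670, 79.778, 81.333),
}

def gains_pp(table):
    """Percentage-point gains of LCM-HO-LSSVM over LSSVM and over HO-LSSVM."""
    return {
        ratio: {"vs_LSSVM": round(lcm - base, 3), "vs_HO": round(lcm - ho, 3)}
        for ratio, (base, ho, lcm) in table.items()
    }

gains = gains_pp(TABLE_10)
```

At ratios 4 and 5, the gains over LSSVM are 40.831 and 35.663 percentage points, and the gains over HO-LSSVM are 2.639 and 1.555 points.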

Figure 9.

Accuracy rate of three classifiers at different ratios. (a) Ratio 1. (b) Ratio 2. (c) Ratio 3. (d) Ratio 4. (e) Ratio 5.


Figure 10.

Accuracy of each classifier at different ratios.


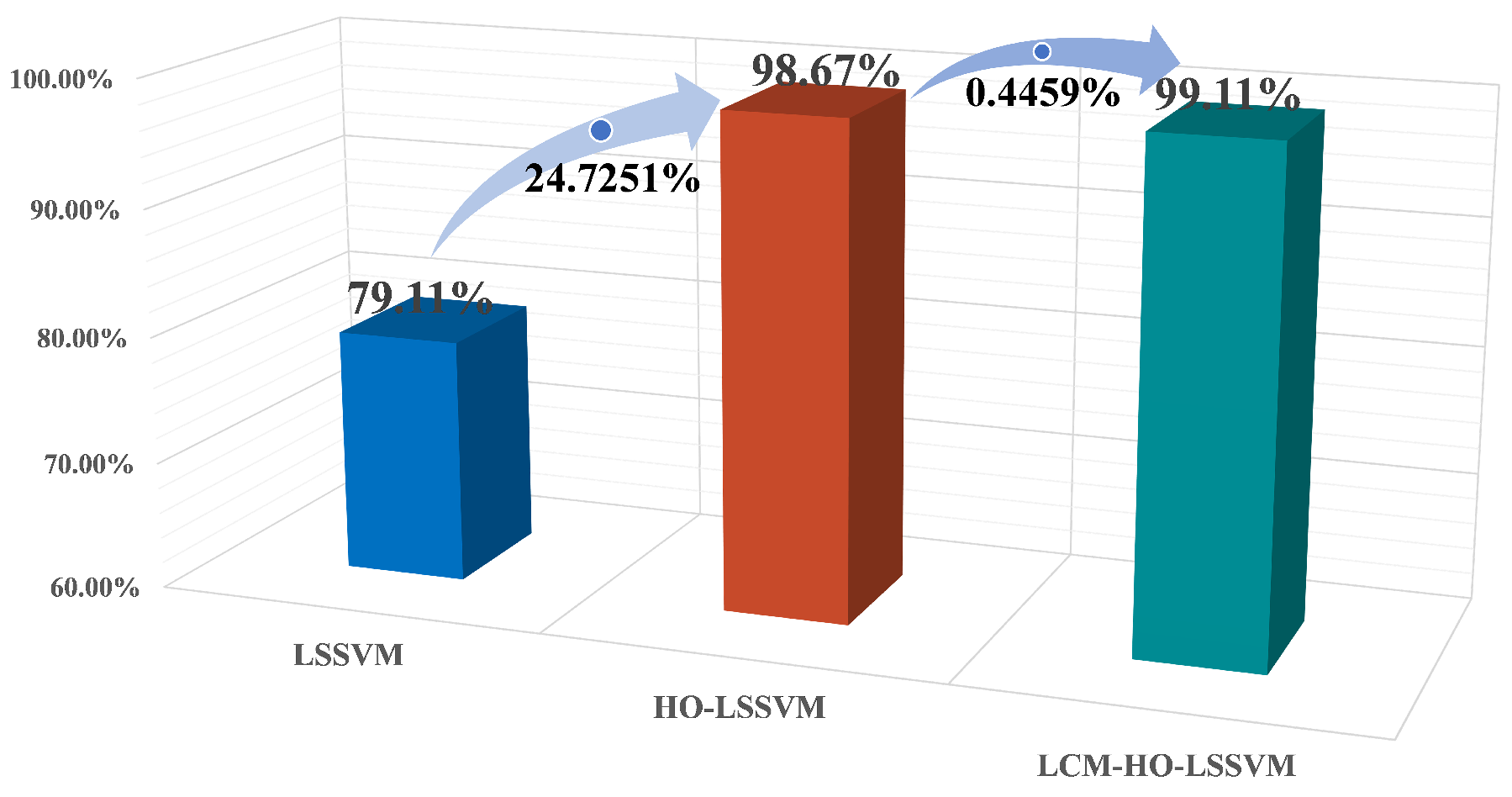

The experimental data show that LCM-HO-LSSVM has considerable advantages at ratio 2:

At ratio 2, the unoptimized LSSVM reaches 79.11%, its highest accuracy among the five ratios. In contrast, the optimized HO-LSSVM achieves 98.67%, and LCM-HO-LSSVM further improves to 99.11%.

Compared with the original LSSVM, LCM-HO-LSSVM improves accuracy by 20 percentage points in absolute terms. Relative to LSSVM, HO-LSSVM improves accuracy by 24.7251%, and relative to HO-LSSVM, LCM-HO-LSSVM improves it by a further 0.4459%. These improvements are illustrated in Figure 11.
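The absolute and relative figures above use two different formulas: an absolute gain is new − old (in percentage points), while a relative gain is (new − old) / old × 100%. A minimal check with the ratio 2 accuracies:

```python
def relative_improvement(new, old):
    """Relative gain in percent: (new - old) / old * 100."""
    return (new - old) / old * 100.0

lssvm, ho, lcm = 79.11, 98.67, 99.11   # ratio 2 accuracies (%) from Table 10
abs_gain_pp = lcm - lssvm               # absolute gain, in percentage points
rel_ho_vs_lssvm = relative_improvement(ho, lssvm)
rel_lcm_vs_ho = relative_improvement(lcm, ho)
```

This gives about 20.00 points absolute, 24.7251% relative for HO-LSSVM over LSSVM, and 0.4459% relative for LCM-HO-LSSVM over HO-LSSVM.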

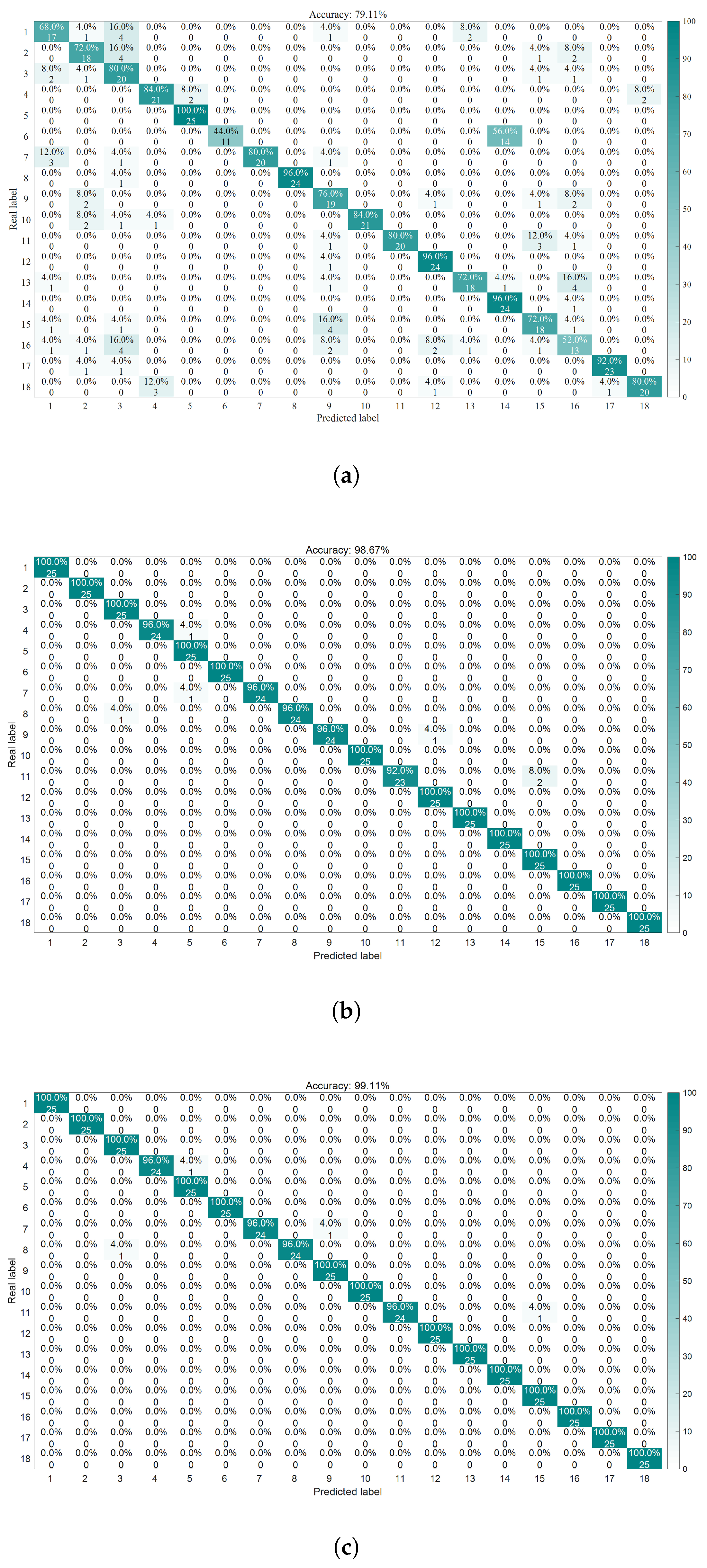

As shown by the confusion matrices and accuracy rates in Figure 12, the findings can be summarized as follows:

For LSSVM, the average accuracy is 80.8% for healthy bearings, with label 1 reaching only 68%; 76% for outer-ring damage, with label 6 reaching only 44%; 83.2% for inner-ring damage, with label 13 reaching only 72%; and 74.67% for composite damage, with label 16 reaching only 52%.

For HO-LSSVM, the average accuracy is 99.2% for healthy bearings, 97.6% for outer-ring damage, 98.4% for inner-ring damage, and 100% for composite damage.

For LCM-HO-LSSVM, the average accuracy is also 99.2% for healthy bearings, 98.4% for outer-ring damage, 99.2% for inner-ring damage, and again 100% for composite damage.

In conclusion, LCM-HO-LSSVM clearly improves on LSSVM in all four categories. Compared with HO-LSSVM, it improves accuracy by 0.8 percentage points for both outer-ring and inner-ring damage, indicating good generalization and robustness to interference in complex damage scenarios.
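The group accuracies can be cross-checked against Table 10. At ratio 2, each label has 80 samples split 55:25, so it contributes 25 test samples. The healthy, outer-ring, inner-ring, and composite groups contain 5, 5, 5, and 3 labels (Tables 6 and 7), giving 450 test samples in total. Converting each group accuracy back to a count of correct samples and pooling them reproduces the ratio 2 accuracies in Table 10 (79.11%, 98.67%, 99.11%). The helper names below are illustrative.

```python
PER_LABEL_TEST = 25  # 80 samples per label, split 55:25 at ratio 2
GROUP_LABELS = {"healthy": 5, "outer_ring": 5, "inner_ring": 5, "composite": 3}

# Group-average accuracies (%) reported for Figure 12
GROUP_ACC = {
    "LSSVM":        {"healthy": 80.8, "outer_ring": 76.0, "inner_ring": 83.2, "composite": 74.67},
    "HO-LSSVM":     {"healthy": 99.2, "outer_ring": 97.6, "inner_ring": 98.4, "composite": 100.0},
    "LCM-HO-LSSVM": {"healthy": 99.2, "outer_ring": 98.4, "inner_ring": 99.2, "composite": 100.0},
}

def overall_accuracy(group_acc):
    """Pool group accuracies into one overall accuracy (%) via correct-sample counts."""
    correct = total = 0
    for group, n_labels in GROUP_LABELS.items():
        n_test = n_labels * PER_LABEL_TEST
        correct += round(group_acc[group] / 100 * n_test)  # back to an integer count
        total += n_test
    return 100 * correct / total

overall = {name: overall_accuracy(acc) for name, acc in GROUP_ACC.items()}
```

The pooled counts are 356, 444, and 446 correct out of 450, that is, 79.11%, 98.67%, and 99.11%.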

Figure 12.

Confusion matrix diagram for three classifiers. (a) LSSVM confusion matrix. (b) HO-LSSVM confusion matrix. (c) LCM-HO-LSSVM confusion matrix.


5.2.2. Validation of the Effectiveness of Multi-Domain Feature Sets

This section also carries out feature selection comparison tests to confirm the necessity and validity of the extracted 21-dimensional multi-domain feature set. Ratio 2 (training set:test set = 55:25), which gave the highest recognition accuracy, is used as the benchmark condition. Reduced-dimension feature subsets are created with several feature selection techniques, and their diagnostic performance is compared with that of the original 21-dimensional feature set.

(1) A feature discriminative ability assessment based on Euclidean distance is used to eliminate feature redundancy and retain the features with the best discriminative ability. The approach evaluates the discriminative power of each feature by computing its separability across the fault categories. For the i-th feature, its discriminative ability $D_i$ is calculated with Equation (11),

where $x_{c,k,i}$ is the i-th feature value of the k-th sample of the c-th class, $\bar{x}_{j,i}$ is the mean of the i-th feature over the j-th class, $C$ is the number of categories, and $N$ is the total sample size.

The discriminative ability values are then normalized:

$$\tilde{D}_i = \frac{D_i}{\max_k D_k} \qquad (12)$$

where $\tilde{D}_i$ is the normalized discriminative ability value and k indexes all features.

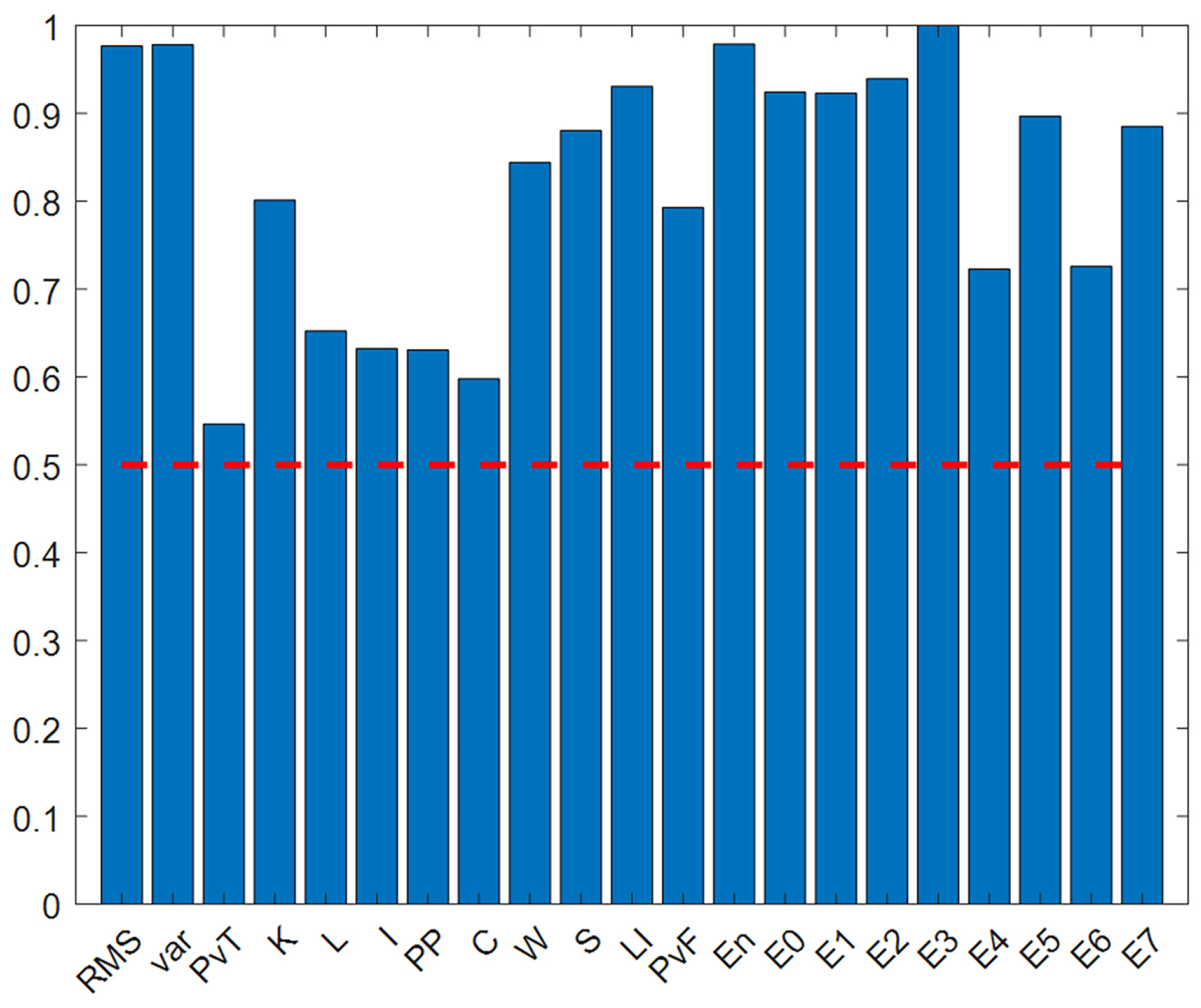

The results in Figure 13 show that the Euclidean distance discrimination values of all 21 features are greater than 0.5, indicating that all features have good discrimination capability. To further analyze feature redundancy, Euclidean distance thresholds of 0.6, 0.7, 0.8, and 0.9 were set to construct four feature subsets. The values obtained for each feature are listed in Table 11.
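The threshold-based subset construction can be sketched as follows, under two assumptions. First, normalization divides by the largest score (the form assumed for Equation (12) above). Second, a feature is kept when its normalized value is greater than or equal to the threshold. Equation (11) itself is not reproduced, so the raw scores below are hypothetical stand-ins, not Table 11 data.

```python
import numpy as np

def normalize_by_max(scores):
    """Scale scores so that the largest equals 1 (form assumed for Equation (12))."""
    scores = np.asarray(scores, dtype=float)
    return scores / scores.max()

def threshold_subsets(norm_scores, thresholds):
    """Indices of features whose normalized score is >= each threshold."""
    return {t: np.flatnonzero(norm_scores >= t).tolist() for t in thresholds}

# Hypothetical raw discriminative-ability values for five features (not Table 11 data)
raw = np.array([2.0, 1.7, 1.5, 1.3, 1.1])
norm = normalize_by_max(raw)          # [1.0, 0.85, 0.75, 0.65, 0.55]
subsets = threshold_subsets(norm, (0.6, 0.7, 0.8, 0.9))
```

Raising the threshold can only shrink the subset, which is why the 0.9 threshold yields the smallest feature set.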

(2) The out-of-bag (OOB) importance score of each feature was calculated with the random forest algorithm. To facilitate comparison, the original importance scores were normalized to the [0, 1] interval using Equation (13):

$$\tilde{I}_i = \frac{I_i}{I_{\max}} \qquad (13)$$

where $\tilde{I}_i$ is the normalized importance of the i-th feature, $I_i$ is its original OOB importance score, and $I_{\max}$ is the maximum importance value among all features.

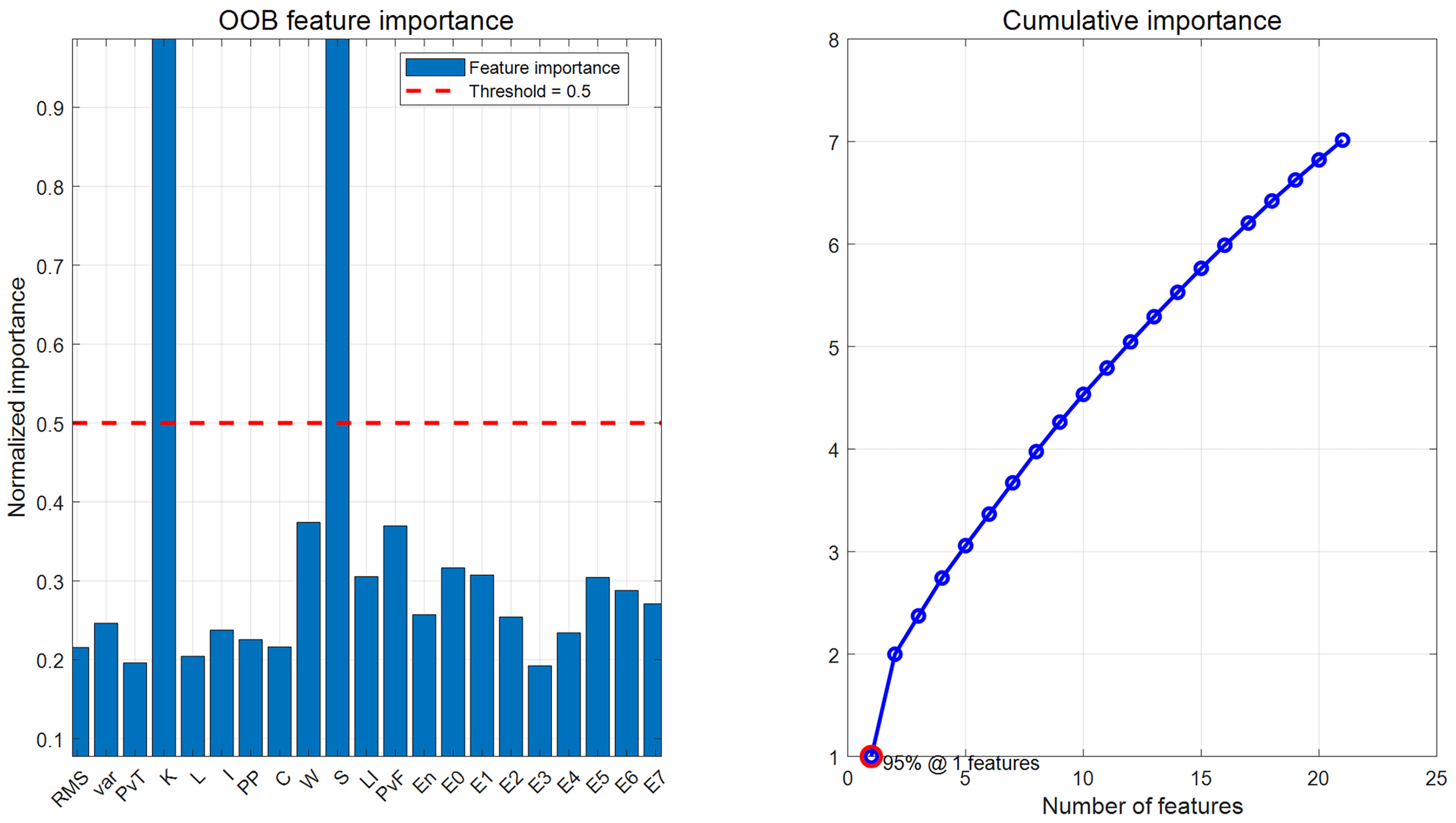

The OOB importance scores are shown in Figure 14 and Table 12. Based on these results, a TOP-K strategy was adopted: the TOP-8, TOP-10, and TOP-12 feature subsets were selected according to the importance ranking. In addition, because the OOB importance scores are unevenly distributed, a hybrid strategy was designed. The two most important features were selected first, and then one or two representative features were added from each of the time, frequency, and time-frequency domains, giving a 7-dimensional hybrid feature set.
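Both selection strategies can be sketched on top of the normalized scores of Equation (13). Several details are hypothetical: the OOB scores, the domain assignment of the ten features, and the 2/2/1 split across the time, frequency, and time-frequency domains (the text only says "1–2" per domain). The sketch takes normalized scores as given and does not compute OOB importance itself.

```python
import numpy as np

def top_k(importance, k):
    """Indices of the k most important features (descending importance)."""
    order = np.argsort(-np.asarray(importance, dtype=float), kind="stable")
    return [int(i) for i in order[:k]]

def hybrid_subset(importance, domains, n_top, per_domain):
    """Top-n_top features overall, then the best remaining features of each domain."""
    importance = np.asarray(importance, dtype=float)
    order = [int(i) for i in np.argsort(-importance, kind="stable")]
    chosen = order[:n_top]
    for domain, count in per_domain.items():
        extra = [i for i in order if domains[i] == domain and i not in chosen]
        chosen += extra[:count]
    return chosen

# Hypothetical OOB scores and domain labels for 10 features (not Table 12 data)
oob = np.array([0.9, 0.2, 0.5, 0.6, 1.0, 0.3, 0.7, 0.1, 0.4, 0.8])
norm = oob / oob.max()                       # Equation (13)
domains = ["time"] * 4 + ["freq"] * 3 + ["tf"] * 3
subset_top3 = top_k(norm, 3)
subset_hybrid = hybrid_subset(norm, domains, n_top=2,
                              per_domain={"time": 2, "freq": 2, "tf": 1})
```

With these inputs, the hybrid rule returns 2 + 2 + 2 + 1 = 7 features, matching the size of the paper's hybrid set.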

(3) The feature subsets generated by the above methods were input into the LCM-HO-LSSVM model for fault diagnosis. The accuracies are shown in Figure 15, and the results are summarized in Table 13.

- (1) As the Euclidean distance threshold increases, the number of features decreases and diagnostic accuracy declines to varying degrees. At a threshold of 0.9, the decline is most pronounced: accuracy decreases by 14.89% compared with the original 21-dimensional feature set.

- (2) Feature selection based on random forest importance also causes accuracy loss. The diagnostic accuracies of the TOP-8, TOP-10, and TOP-12 feature sets are 92.66%, 93.33%, and 96%, respectively, all lower than the 99.11% of the original feature set.

- (3) Although the hybrid feature set balances features across domains, its 7 dimensions still cannot fully represent complex fault information, resulting in a diagnostic accuracy of 92.44%.

Validation experiments with several mainstream feature selection methods showed that the diagnostic accuracy of every reduced-dimension feature subset decreased, whether the subset was obtained by Euclidean distance screening or by random forest importance ranking. This result confirms the effectiveness of the original 21-dimensional multi-domain feature set and shows that none of the tested reduced subsets can replace it.

5.3. Experiment 2—Self-Constructed Marine Bearing Test Platform

Addressing the unique characteristics of multi-bearing coupled vibrations in ship propulsion systems, this study overcomes the limitations of traditional single-bearing diagnostic methods by tackling the technical challenges of identifying rolling bearing failures in multi-bearing systems. Theoretical analysis indicates that in multi-bearing systems composed of rolling bearings and journal bearings, when a rolling bearing fails, its vibration signals not only contain the bearing’s own fault characteristics but are also influenced by coupling effects from other bearings in the system and the shaft structure, leading to nonlinear coupling phenomena such as modal mixing and transmission path modulation. These factors significantly reduce the average identification rate of traditional single-fault diagnosis methods.

To address this challenge, this study developed an experimental platform for simulating multi-bearing faults in ship propulsion shaft systems, which is innovative in several aspects:

Realistic operating conditions: reproduces typical variable-speed operating conditions in ship propulsion systems.

Flexible fault configuration: supports various combinations of inner ring, outer ring, rolling element, and composite faults in rolling bearings.

Signal integrity: enables synchronous acquisition of multi-directional vibration signals.

The experimental system is shown in Figure 16. It consists of a three-phase asynchronous motor (1200 r/min), a shaft, rolling bearings, sliding bearings, a dynamometer assembly, and several sensor assemblies. The rolling bearing model is 6214-ZZ, with structural parameters listed in Table 14. The sensor model is DH105E, with a response frequency range of 0.1 Hz to 1000 Hz, and the signal acquisition device is a DH5960.

The rolling bearing faults were introduced as follows. Inner and outer ring faults were machined by wire electrical discharge machining (EDM), with a depth of 2.5 mm and a width of 0.5 mm. Rolling element faults were machined by EDM to a depth of 0.3 mm. Composite faults are combinations of these single fault modes.

Data acquisition setup: Radial vibration acceleration signals were collected from the rolling bearings on the test bench at a sampling rate of 20 kHz, with each record lasting 2 s.

The dataset includes five signal categories: normal (N), rolling element failure (B), inner ring failure (IR), outer ring failure (OR), and composite failure (C). Analysis of the collected vibration signals demonstrates that the improved HO-LSSVM method retains significant advantages under complex ship operating conditions.

Compared with the Paderborn University (PU) bearing dataset from Germany, this experiment introduces three significant improvements:

Expansion of fault types: a rolling element fault type has been added, and four damage modes (B, IR, OR, and C) plus the normal state (N) have been constructed.

Working condition design: a single operating condition with a fixed rotational speed of 1200 r/min is used to evaluate the robustness of the method under stable operating conditions.

Data preprocessing: the raw signal is segmented with a sliding window (w = 1600), so that each sample contains s = 4000 data points and each fault type has m = 100 standardized samples.
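The segmentation step can be sketched as below. This is a minimal illustration, not the authors' code: it assumes that w = 1600 is the window step (hop) and s = 4000 the window length, and that "standardized" means z-scoring each window; the text does not define these precisely, and the function names are hypothetical. A synthetic signal stands in for a measured record.

```python
import numpy as np

FS_HZ = 20_000      # sampling rate stated in the text
RECORD_S = 2.0      # record duration stated in the text

def segment_signal(x, length=4000, step=1600):
    """Split a 1-D signal into overlapping windows.

    Assumption: `length` plays the role of s (points per sample) and
    `step` the role of w (window hop).
    """
    x = np.asarray(x)
    if x.ndim != 1 or x.size < length:
        raise ValueError("need a 1-D signal at least `length` points long")
    n_windows = (x.size - length) // step + 1
    # Row i holds x[i*step : i*step + length]
    idx = step * np.arange(n_windows)[:, None] + np.arange(length)[None, :]
    return x[idx]

def standardize(segments):
    """Z-score each window (one possible reading of 'standardized')."""
    mu = segments.mean(axis=1, keepdims=True)
    sd = segments.std(axis=1, keepdims=True)
    return (segments - mu) / np.where(sd == 0, 1.0, sd)

# Synthetic stand-in for one 2 s record (not measured data)
record = np.random.default_rng(0).standard_normal(int(FS_HZ * RECORD_S))
samples = standardize(segment_signal(record))
print(record.size, samples.shape)
```

Under this reading, one 40,000-point record yields 23 windows, so reaching m = 100 samples per class would require several records or a smaller step; the text does not state which was used.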

Table 15 lists the complete set of experimental data parameters. Together with the multi-condition PU dataset, this dataset provides a complementary framework for validating the method.

5.3.1. Fault Identification Results 2

The same configuration as in

Section 5.2.1 was used for the calculations. First, the multi-domain features of the signals collected by the self-built test rig were computed, resulting in a multi-domain feature dataset, with a portion of the dataset shown in

Table 16. These obtained feature datasets were then, respectively, imported into the LSSVM, HO-LSSVM, and LCM-HO-LSSVM classifiers. The parameter configurations for HO and LCM-HO were consistent with those in the PU experiment. The convergence curves of the HO-LSSVM and LCM-HO-LSSVM after 30 iterations are shown in

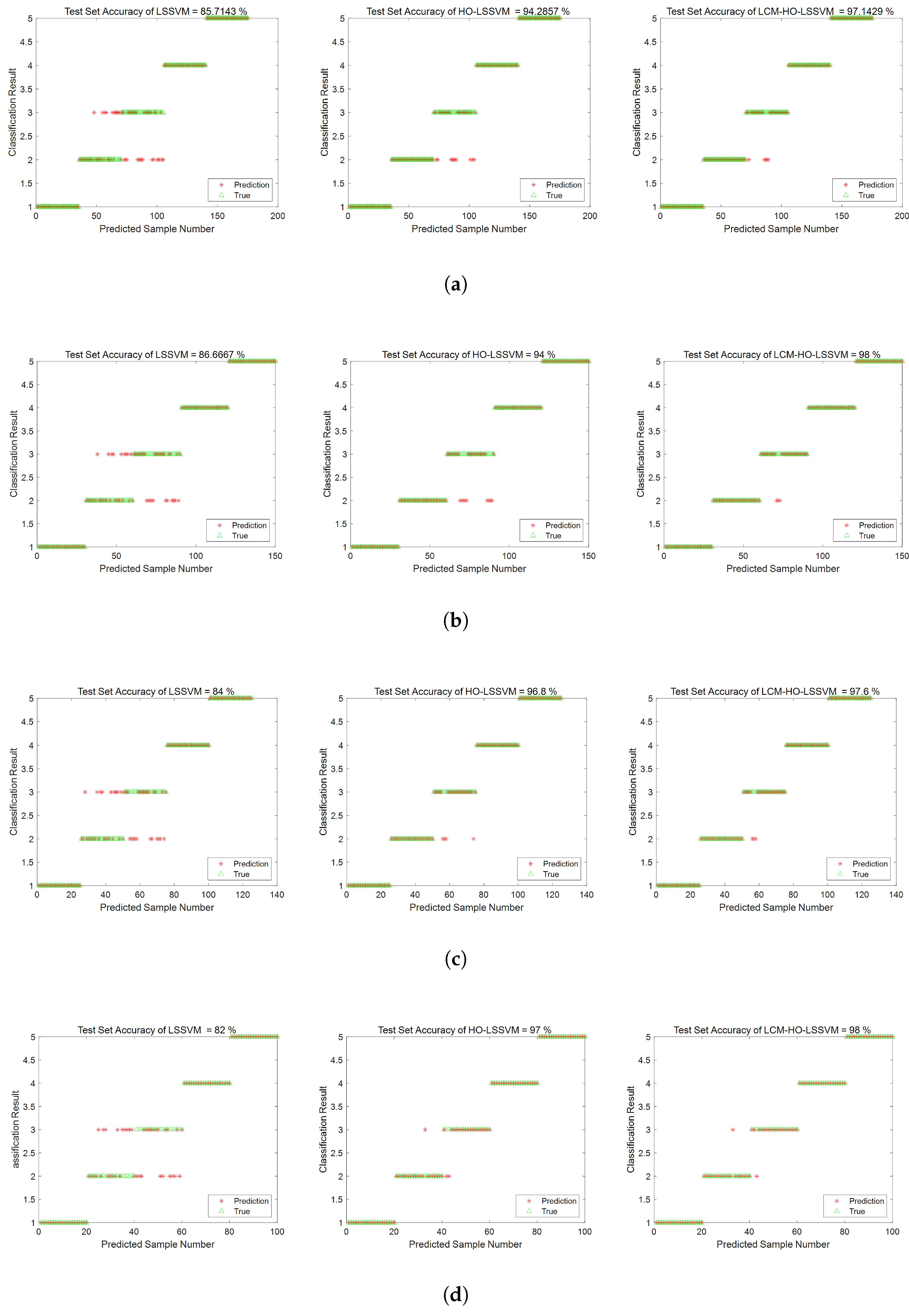

Figure 17. To evaluate the performance of the classifiers, experiments were conducted with different ratios of training and testing samples. The results are shown in

Figure 18, with statistical results presented in

Table 17.
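For readers unfamiliar with the base classifier, LSSVM replaces the quadratic program of a standard SVM with a single linear system. The sketch below is the standard binary LSSVM formulation (Suykens style) with an RBF kernel, written in NumPy. We assume that γ (regularization) and σ (kernel width) are the hyperparameters tuned by the HO and LCM-HO optimizers; that search, and the multi-class scheme (for example one-vs-rest) needed for the five classes here, are not shown. Function names are hypothetical.

```python
import numpy as np

def rbf_kernel(A, B, sigma):
    """Gaussian (RBF) kernel matrix between the rows of A and B."""
    d2 = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2 * A @ B.T
    return np.exp(-np.maximum(d2, 0.0) / (2 * sigma**2))

def lssvm_fit(X, y, gamma=10.0, sigma=1.0):
    """Binary LSSVM (labels +1/-1): solve one linear KKT system.

    [0   y^T          ] [b    ]   [0]
    [y   Omega + I/gam] [alpha] = [1]
    with Omega_ij = y_i y_j K(x_i, x_j).
    """
    n = len(y)
    omega = np.outer(y, y) * rbf_kernel(X, X, sigma)
    A = np.zeros((n + 1, n + 1))
    A[0, 1:] = y
    A[1:, 0] = y
    A[1:, 1:] = omega + np.eye(n) / gamma
    rhs = np.concatenate(([0.0], np.ones(n)))
    sol = np.linalg.solve(A, rhs)
    return sol[0], sol[1:]  # bias b, Lagrange multipliers alpha

def lssvm_predict(X_train, y_train, b, alpha, X_new, sigma=1.0):
    """Decision rule: sign(sum_i alpha_i y_i K(x, x_i) + b)."""
    k = rbf_kernel(X_new, X_train, sigma)
    return np.sign(k @ (alpha * y_train) + b)

# Toy usage: two well-separated synthetic clusters (not bearing data)
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-2, 0.5, (20, 2)), rng.normal(2, 0.5, (20, 2))])
y = np.array([-1.0] * 20 + [1.0] * 20)
b, alpha = lssvm_fit(X, y)
pred = lssvm_predict(X, y, b, alpha, X)
print((pred == y).mean())
```

Because training reduces to `np.linalg.solve`, each fitness evaluation inside a metaheuristic such as HO is cheap for datasets of this size, which is one reason LSSVM pairs naturally with population-based hyperparameter search.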

Validation on the dedicated experimental platform for marine propulsion shaft systems shows that the LCM-HO-LSSVM model performs best in the single-condition, multi-fault scenario. The experimental design covers four typical bearing fault types (IR, OR, B, and C) and evaluates four training ratios. The results are summarized as follows:

Figure 19 compares the algorithms across the training set ratios. The accuracy of LCM-HO-LSSVM exceeds that of both HO-LSSVM and LSSVM at all four ratios, indicating good stability, generalization, and recognition capability of the proposed method.

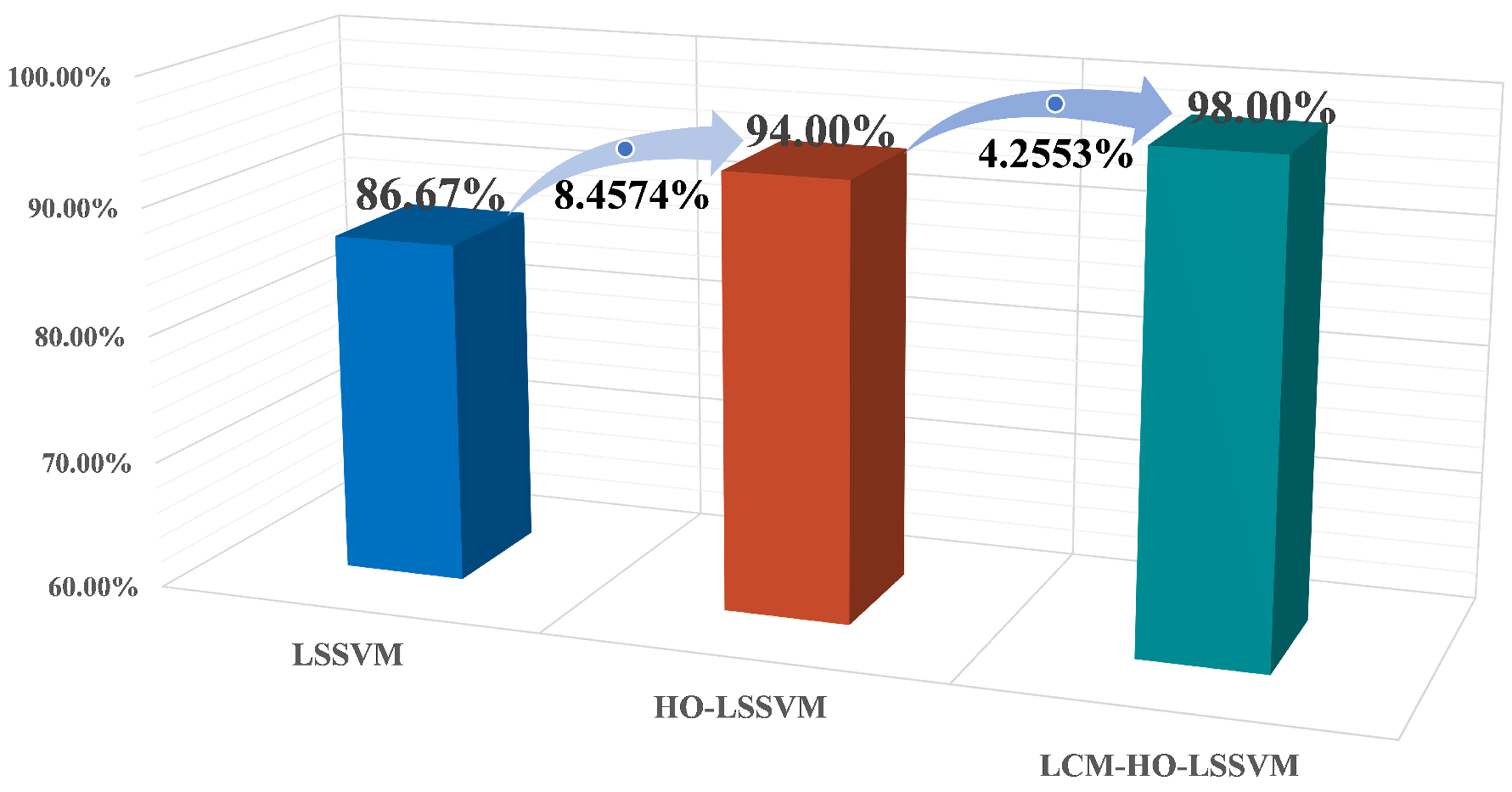

The maximum accuracy of the original LSSVM is 86.67% (at ratio 2); HO-LSSVM raises this to 94.0%, and LCM-HO-LSSVM reaches 98.0%. In relative terms, HO-LSSVM improves on LSSVM by 8.4574%, LCM-HO-LSSVM improves on HO-LSSVM by 4.2553%, and LCM-HO-LSSVM improves on the original LSSVM by 13.0726%, as depicted in Figure 20.
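The improvement percentages above are relative gains, (new − base) / base × 100, rather than differences in percentage points. The short check below reproduces them from the three ratio-2 accuracies quoted in the text; the helper name is illustrative.

```python
def relative_improvement(base, new):
    """Relative accuracy gain of `new` over `base`, in percent."""
    return (new - base) / base * 100

# Ratio-2 accuracies (%) quoted in the text
acc = {"LSSVM": 86.67, "HO-LSSVM": 94.0, "LCM-HO-LSSVM": 98.0}
pairs = [("LSSVM", "HO-LSSVM"), ("HO-LSSVM", "LCM-HO-LSSVM"), ("LSSVM", "LCM-HO-LSSVM")]
for base, new in pairs:
    gain = relative_improvement(acc[base], acc[new])
    print(f"{new} vs {base}: {gain:.4f}% relative, {acc[new] - acc[base]:.2f} points absolute")
```

For comparison, the corresponding absolute gains are 7.33, 4.00, and 11.33 percentage points.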

The confusion matrices of the three classifiers at ratio 2 are presented in Figure 21. The identification accuracy for the normal state (N) is 100% for all three methods. For rolling element failure (B), LSSVM achieves only 70%, whereas HO-LSSVM and LCM-HO-LSSVM both attain 100%, an absolute improvement of 30 percentage points. For composite failure (C), LSSVM records the lowest identification accuracy at 63.3%, while HO-LSSVM and LCM-HO-LSSVM achieve 70% and 90%, respectively; LCM-HO-LSSVM thus improves on LSSVM by 26.7 percentage points and on HO-LSSVM by 20 percentage points.
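Per-class identification accuracy is read from a confusion matrix as the diagonal entry divided by the row total (the number of test samples of that true class). The sketch below assumes rows are true classes and columns are predicted classes; the matrix is a hypothetical three-class example, not the data in Figure 21. The last lines show that the "absolute" gains quoted above are differences in percentage points.

```python
import numpy as np

def per_class_accuracy(cm):
    """Recognition rate of each class: correct predictions / true-class count.

    Assumes rows = true classes and columns = predicted classes.
    """
    cm = np.asarray(cm, dtype=float)
    return np.diag(cm) / cm.sum(axis=1)

def overall_accuracy(cm):
    """Fraction of all test samples on the diagonal."""
    cm = np.asarray(cm, dtype=float)
    return np.trace(cm) / cm.sum()

# Hypothetical 3-class matrix, 30 test samples per class (illustration only)
cm = [[30, 0, 0],
      [0, 27, 3],
      [0, 9, 21]]
print(per_class_accuracy(cm), overall_accuracy(cm))

# Absolute gain for class C in percentage points, using the rates quoted above
gain_pp = round(90.0 - 63.3, 1)
print(gain_pp)
```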

The experimental data indicate three advantages of the proposed LCM-HO-LSSVM method. (1) Stability: results are highly consistent across training ratios, with minimal fluctuation in accuracy (a standard deviation of only 0.35%), reflecting the robustness of the algorithm. (2) Multi-fault recognition: the method distinguishes well between fault types, including inner ring, outer ring, rolling element, and composite faults. (3) Engineering adaptability: the method maintains a stable, high overall recognition rate (>97%) under the noise and interference of the ship propulsion test platform, and it markedly improves recognition of the challenging composite faults (90% versus 63.3% for LSSVM at ratio 2).

To further demonstrate the advantages of the proposed method, we compared it with a study by members of the same research group on the same experimental platform [43]. That study combined COA-VMD signal processing, GCMMPFE feature extraction, and SVM-based fault recognition. However, it addressed only four conditions (healthy, inner ring, outer ring, and rolling element faults), could not recognize composite faults, and achieved a maximum diagnostic accuracy of 97.5%. In contrast, the model proposed in this study handles more complex fault types, including composite faults, and achieves a higher accuracy of 98.0%. Although the improvement is only 0.5 percentage points, the diagnostic process of our method is more concise and efficient: multi-domain features are extracted directly from the raw signals to complete fault recognition, eliminating cumbersome signal processing steps and making the method more suitable for practical engineering applications. This comparison indicates that, under similar experimental conditions, our method offers more comprehensive fault recognition, higher diagnostic precision, and greater engineering applicability.

Figure 18.

Accuracy rate of three classifiers at different ratios. (a) Ratio 1. (b) Ratio 2. (c) Ratio 3. (d) Ratio 4.

Figure 19.

Accuracy of the three classifiers at different training ratios.

Figure 20.

Accuracy improvement ratio of the three classifiers at ratio 2.

Figure 21.

Confusion matrix diagram for three classifiers. (a) LSSVM confusion matrix. (b) HO-LSSVM confusion matrix. (c) LCM-HO-LSSVM confusion matrix.

5.3.2. Validation of the Effectiveness of Multi-Domain Feature Sets

Similarly, feature selection comparison experiments were conducted to verify the validity and necessity of the extracted 21-dimensional multi-domain feature set on the ship dataset. Ratio 2 (training set:test set = 70:30), which gave the highest recognition accuracy, was selected as the benchmark condition. Several feature selection strategies were used to construct reduced-dimension feature subsets, whose diagnostic performance was compared with that of the original 21-dimensional feature set.

(1) Euclidean distance is used again, following the same formulation as in Section 5.2.2.

Figure 22 and Table 18 show the normalized feature discrimination values for all features.

As these results show, the Euclidean distance discrimination values are evenly distributed, with most exceeding 0.5: 16 features exceed 0.5 and 16 exceed 0.6. Accordingly, thresholds of 0.5, 0.7, and 0.9 were adopted.
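The formula from Section 5.2.2 is not reproduced in this section, so the sketch below uses an assumed, commonly used distance-based separability criterion to illustrate the workflow: for each feature, the mean Euclidean distance between class centres divided by the mean within-class distance, min-max normalized to [0, 1], then thresholded. It should be read as an illustration of threshold screening, not as the authors' exact score; the function names and the synthetic data are hypothetical.

```python
import numpy as np
from itertools import combinations

def distance_discrimination(X, labels):
    """Per-feature separability score (assumed criterion, illustration only).

    between = mean |mu_a - mu_b| over all class pairs (1-D Euclidean distance)
    within  = mean |x - mu_class| averaged over classes
    The ratio between / within is min-max normalized to [0, 1].
    """
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    classes = np.unique(labels)
    means = np.array([X[labels == c].mean(axis=0) for c in classes])
    between = np.mean([np.abs(means[i] - means[j])
                       for i, j in combinations(range(len(classes)), 2)], axis=0)
    within = np.mean([np.abs(X[labels == c] - means[k]).mean(axis=0)
                      for k, c in enumerate(classes)], axis=0)
    score = between / (within + 1e-12)
    return (score - score.min()) / (score.max() - score.min() + 1e-12)

def select_by_threshold(scores, threshold):
    """Indices of features whose normalized score exceeds the threshold."""
    return np.flatnonzero(scores > threshold)

# Synthetic example: one class-dependent feature and one pure-noise feature
rng = np.random.default_rng(1)
labels = np.repeat([0, 1, 2], 50)
informative = labels * 3.0 + rng.normal(0, 0.5, 150)
noise = rng.normal(0, 1.0, 150)
scores = distance_discrimination(np.column_stack([informative, noise]), labels)
for t in (0.5, 0.7, 0.9):
    print(t, select_by_threshold(scores, t))
```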

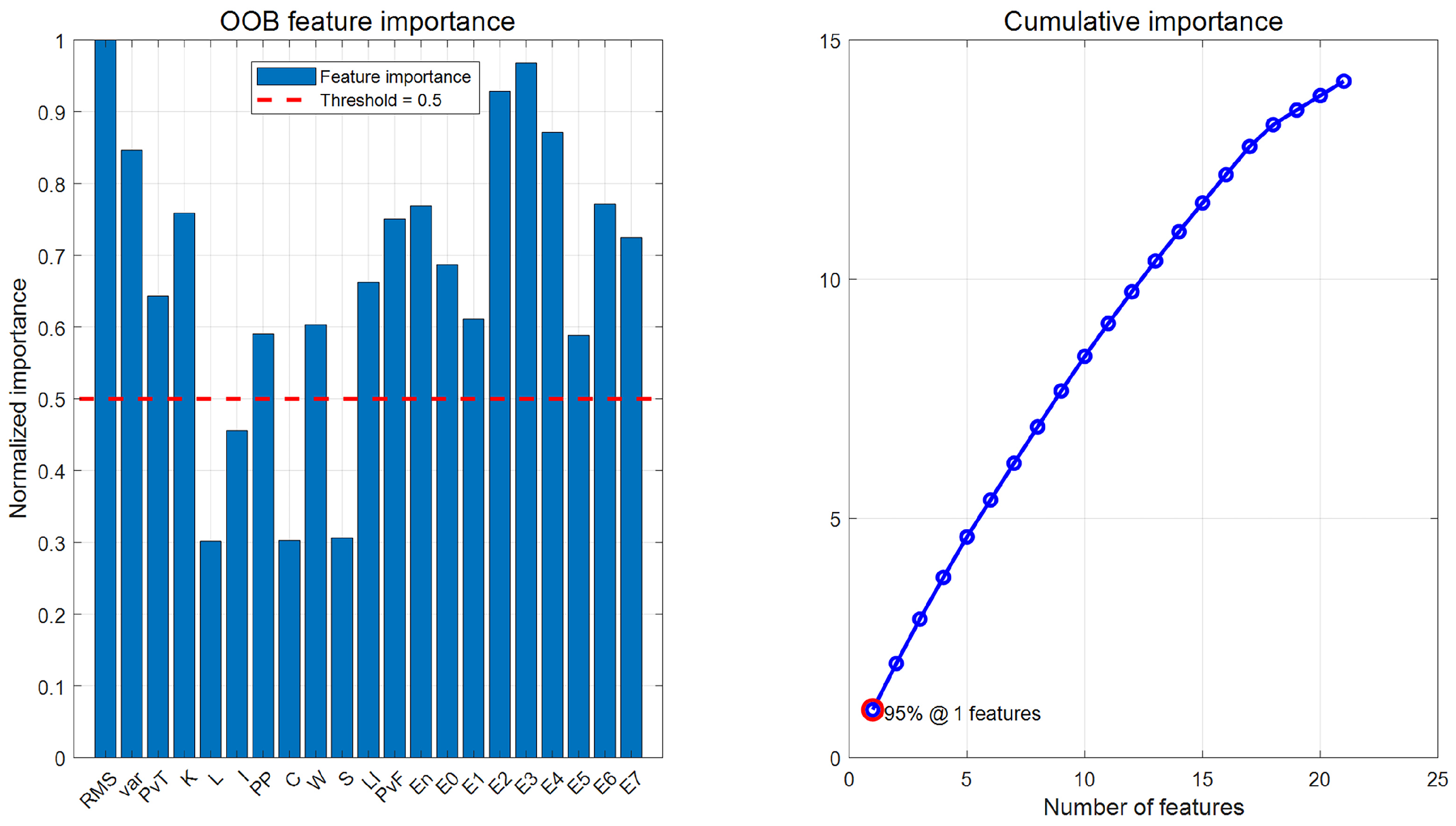

(2) Similarly, the OOB importance scores calculated with the random forest algorithm are shown in Figure 23. Because the OOB importance distribution is uniform, a TOP-K strategy is adopted directly: the features with the TOP-8, TOP-10, and TOP-12 importance scores are retained as three screening schemes, each generating a new feature dataset.

Table 19 lists the normalized OOB importance scores of all features.
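Once an importance score is available for each of the 21 features, the TOP-K screening reduces to sorting. The sketch below takes the importance vector as given; computing the OOB importance itself is not shown. The scores and feature matrix are random placeholders rather than the values in Table 19, and the helper name is hypothetical.

```python
import numpy as np

def top_k_features(importance, k):
    """Indices of the k most important features, highest score first."""
    importance = np.asarray(importance, dtype=float)
    if not 1 <= k <= importance.size:
        raise ValueError("k must be between 1 and the number of features")
    # Stable sort on negated scores: highest first, ties keep original order
    return np.argsort(-importance, kind="stable")[:k]

rng = np.random.default_rng(2)
importance = rng.random(21)           # placeholder scores, not Table 19 values
X = rng.standard_normal((150, 21))    # placeholder 21-dimensional feature matrix
subsets = {k: X[:, top_k_features(importance, k)] for k in (8, 10, 12)}
print({k: v.shape for k, v in subsets.items()})
```

Because the subsets are nested (TOP-8 ⊂ TOP-10 ⊂ TOP-12), comparing their accuracies shows how much is lost as lower-ranked features are dropped.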

(3) The feature datasets obtained with the above screening methods, together with the unscreened dataset, were fed into the LCM-HO-LSSVM classifier using training ratio 2. The resulting fault classification accuracies are shown in Figure 24 and summarized in Table 20.

In summary, Euclidean distance screening at all three thresholds yielded lower fault identification accuracy than the original 21-dimensional feature set, and feature selection based on random forest importance also reduced accuracy: the feature datasets generated by the TOP-8, TOP-10, and TOP-12 strategies reached approximately 93.33%, lower than the 99.11% achieved with the original feature set. This result further supports the effectiveness of the original 21-dimensional multi-domain feature set and the necessity of retaining each feature dimension.