1. Introduction

With modern people spending up to 90% of their time in enclosed spaces, indoor air quality (IAQ) represents a critical concern for public health, with exposure to poor IAQ linked to a substantial health burden. Each year, the WHO reports over 3.2 million premature deaths and the Global Burden of Disease estimates more than 8.09 million deaths worldwide attributable to poor IAQ [1]. Poor IAQ also has adverse effects on well-being and productivity [2,3]. Recent research evidence has revealed that indoor air pollution consistently exceeds outdoor levels by 2 to 10 times, and occasionally by up to 100 times [4,5].

Carbon dioxide (CO2) is a prevalent indoor air pollutant and a critical determinant for characterizing IAQ. Human respiration constitutes a main indoor CO2 emissions source, while significant contributors also include combustion and fuel-burning processes such as heating with solid or fossil fuels, cooking, and smoking [6]. Due to insufficient ventilation in indoor spaces, CO2 emissions infiltrating from outdoors further deteriorate IAQ, with fossil fuel combustion in transportation being the main CO2 emissions driver in urban areas [7].

The indoor air is contaminated by several substances and compounds, with the most critical being carbon oxides (COx), particulate matter (PM), nitrogen oxides (NOx), ozone (O3), volatile organic compounds (VOCs), radon, and toxic metals [8]. Health organizations and regulatory bodies have established thresholds and exposure durations for pollutants based on their health implications. The American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) recommends that indoor CO2 levels should remain below 1000 ppm, with the indoor–outdoor differential not exceeding 700 ppm [9]. This threshold is also reflected in 10 more guidelines, while 800 ppm and 1500 ppm frequently appear as upper boundaries in regional regulations [10]. Under typical conditions, indoor CO2 levels range between 400 and 1000 ppm.

Recent advancements in Artificial Intelligence (AI), Machine Learning (ML), big data analytics, and sensors have enabled proactive and informed IAQ management strategies to support healthier indoor spaces and promote energy efficiency [11]. Heating, ventilation, and air-conditioning (HVAC) systems are integral parts of IAQ management; however, their operation is associated with increased energy demands [12]. Leveraging predictive analytics, pattern recognition, and anomaly detection in IAQ, actionable insights are produced for HVAC optimization with the dual objective to minimize energy costs and maintain a healthy indoor environment [13,14].

The persistent need for accurate IAQ predictions motivated this study, which set out to evaluate Long Short-Term Memory (LSTM) neural networks for forecasting indoor CO2 concentrations over short temporal horizons, spanning from 15 min up to 3 h. The rationale behind this architectural selection was grounded in their strong capacity to learn intricate temporal patterns. This study implemented both univariate and multivariate LSTMs to investigate the temporal dependencies inherent in CO2 as well as the interconnected temporal associations between CO2, climatic conditions, air pollution, and occupancy.

A literature review was conducted on prior research studies that employed ML models for short-term CO2 forecasting indoors. Deep learning (DL) approaches were also identified, allowing a fairer comparison with the LSTM models developed in the present study. Predictive performance was reported using two standard regression error metrics: Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE).

J. Kallio et al. [15] evaluated ML and DL models in short-term CO2 forecasting within office settings using a one-year data collection. Decision Tree and Random Forest regression models maintained an optimal balance between accuracy and computational efficiency for CO2 predictions up to 15 min ahead, utilizing a 10-min lookback window. Human presence was identified as the most influential predictor. Multi-Layer Perceptrons (MLPs) did not outperform the tree-based models despite their capacity to capture more intricate dependencies. Under a similar setup in office settings, N. Kapoor et al. [16] compared several ML models for instant CO2 forecasts, including linear, decision tree, support vector machines, MLP, and Gaussian process regression (GPR). Among the models examined, optimized GPR achieved the lowest MAE of 3.35 ppm with an RMSE of 4.20 ppm. Apart from the linear model, all the fine-tuned model variants produced reasonably accurate forecasts, underscoring the potential of ML in CO2 forecasting. Y. Zhu et al. [17] proposed a low-cost, IoT-enabled IAQ monitoring system that leveraged LSTM models to predict CO2 levels 1 min ahead. Uni- and bi-directional LSTM architectures with single and stacked layers were developed, using 10-min lookback windows. The bi-directional LSTM attained the lowest MAE of 8.95 ppm with an RMSE of 16.77 ppm.

K.U. Ahn et al. [18] introduced a novel hybrid approach that coupled white-box, physics-based models with black-box, data-driven modeling to produce short-term CO2 forecasts. A Bayesian Neural Network (BNN) was utilized to address the uncertainty of the mass balance equation model by estimating the model’s unknown or hard-to-measure input variables, such as aggregated ventilation rates, infiltration, and interzonal airflow. The study examined forecasting horizons of 5 min, 10 min, and 15 min ahead using a 27-day data collection from residential settings. The results were quite promising, with the hybrid model’s deterioration over increased forecasting horizons being less pronounced, achieving a Mean Bias Error (MBE) of −0.01% and −1.28% for the living room and the bedroom, respectively. P. Garcia-Pinilla et al. [19] conducted extensive experiments across 15 schools in Spain, comparing the predictive gains in CO2 forecasting from statistical, ML, and DL models, examining future horizons ranging from 10 min up to 4 h. For horizons up to 10 min, ML and DL models exhibited marginal gains compared to simpler statistical models, while DL models demonstrated robust predictive performance with increasing forecasting horizons. Temporal Convolutional Networks (TCN) achieved the lowest MAE of 29.19 ppm for near-term horizons and N-HiTS achieved the lowest MAE of 36.72 ppm for longer ones. S. Taheri and A. Razban [20] implemented ML and DL models to predict indoor CO2 concentrations in university classrooms for 1 h, 6 h, and 24 h ahead. MLP achieved the lowest MAE of 30.16 ppm and 55.19 ppm for 1 h and 24 h ahead, respectively.

Aiming to optimize ventilation systems, E. Athanasakis et al. [21] employed LSTM and Convolutional Neural Network (CNN) models to predict indoor CO2 concentrations 5 min ahead, with a dilated CNN resulting in the lowest MAE of 4.56 ppm. To enable fast deployment in real-world applications, G. Segala et al. [22] implemented a low-complexity CNN using air temperature, relative humidity, and CO2 as predictors. This CNN achieved an RMSE of around 15 ppm at a 15-min future horizon, highlighting that even low-complexity DL approaches could deliver substantial performance gains.

The remainder of this study is structured as follows. Section 2 describes the core methods and materials employed in this study. Section 3 details the LSTM variant evaluation.

2. Methods and Materials

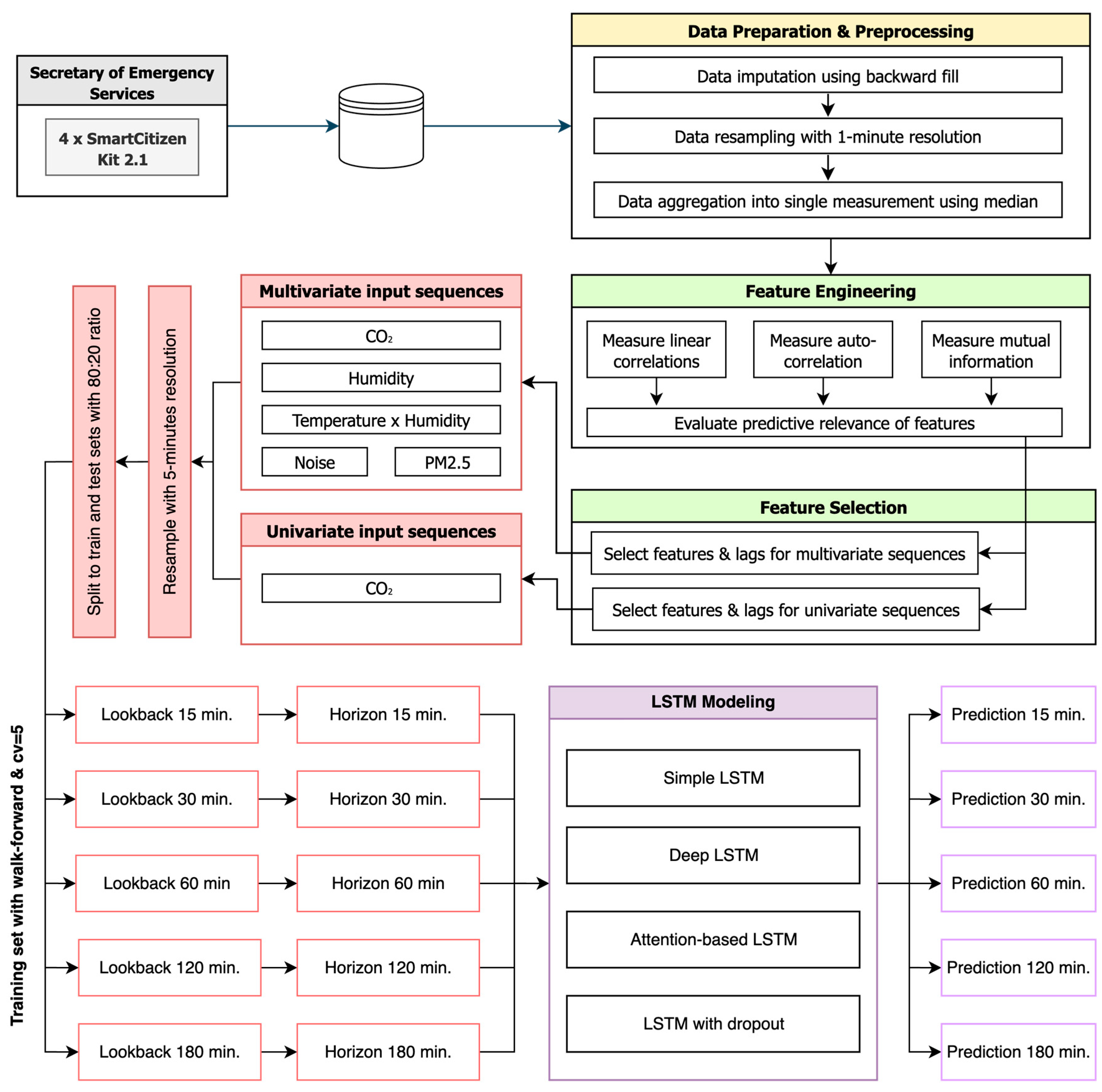

Figure 1 illustrates the high-level methodological framework employed in this study to forecast indoor CO2 concentrations at future horizons of 15 min, 30 min, 60 min, 120 min, and 180 min. The time-series data collected from the four (4) sensing devices installed within the hospital environment were preprocessed by imputing missing values, resampling to a 1-min resolution, and aggregating into single measurements per time step using the median. Subsequently, feature engineering was performed to compute linear correlations, autocorrelations inherent in CO2, and mutual information, assessing the predictive relevance of the available features. Using the most informative features, input sequences were constructed for univariate and multivariate LSTM models, resampled to a 5-min resolution, and split into training and testing sets with an 80:20 ratio. Using equal-length lookback windows and forecasting horizons, predictions for each horizon were generated with multiple LSTM variants, including simple, deep, attention-based, and dropout-regularized architectures.

2.1. Data Collection and Description

For the present study, a dataset comprising air quality and climatic time-series measurements was sourced from the indoor settings of the General Hospital Thriassio (GHT) located at Elefsina, Attica, Greece, and specifically, from the Secretary of Emergency Services zone. This zone serves as the primary hospital’s one-stop shop for the incoming patients and visitors to emergency services, and as a result, it experiences considerable and persistent occupation throughout the working days. Elevated occupancy patterns in this zone are also observed during the on-call duty days of GHT, although they do not follow any consistent, recurring temporal pattern. To gather data, a small-scale network of four (4) wall-mounted sensor units was installed in this hospital zone, each mounted on one of the four walls delineating the space, and was built using the open-source, modular SmartCitizen Kit (SCK) 2.1 platform (Fab Lab Barcelona, Institute for Advanced Architecture of Catalonia, Barcelona, Spain).

The monitoring period comprised two distinct one-month intervals, from 21 September 2024 to 21 October 2024 and from 22 January 2025 to 22 February 2025, with measurements recorded at 1-min resolution. The dataset corresponding to the first monthly interval comprised a total of 43,629 time-series measurements for each sensor unit, while the dataset corresponding to the second interval comprised a total of 40,109 measurements. Across both datasets, time-series measurements were collected for the air pollutants CO2, PM2.5, and TVOC, for the climatic conditions of air temperature, relative humidity, and barometric pressure, and for noise and luminosity levels, which served as indirect occupancy indicators for this zone (Table 1). These variables were treated as the candidate predictors for the target variable, namely the CO2 concentration at each specific time step.

2.2. Data Preparation and Preprocessing

The data preparation and preprocessing phase commenced with the exploration of both datasets for missing values, as the sequential models require continuous and valid measurements. Missing values probably resulted from invalid payload transmissions, payload decoding errors, and occasional sensor malfunctions, since the data collection process was not interrupted at any time. Given the 1-min time interval between successive measurements, the backward imputation strategy was employed as a robust approach to replace the missing values in the dataset. This simple yet effective strategy for measurements sampled at short intervals replaces each missing value with the next known one. A total of 129 missing values were imputed for the predictor variables, along with 12 missing values associated with the target variable of CO2 concentration.

Under the assumption that the indoor space experienced uniform environmental conditions, a sensor fusion process was performed to aggregate the time-series measurements collected from the four sensor units into a single, representative measurement for each 1-min time interval. The median was used as the aggregation function to ensure that each single measurement is not affected by transient disturbances, sensor-specific anomalies, and local perturbations.
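The two preprocessing steps described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the study's actual pipeline: the function names `backward_fill` and `fuse_sensors`, the use of `None` for missing values, and the sample readings are all hypothetical.

```python
from statistics import median


def backward_fill(values):
    """Replace each missing value (None) with the next known value."""
    filled = list(values)
    next_known = None
    # Walk from the end so the "next known" value is always available.
    for i in range(len(filled) - 1, -1, -1):
        if filled[i] is None:
            filled[i] = next_known  # stays None if nothing follows
        else:
            next_known = filled[i]
    return filled


def fuse_sensors(per_sensor_series):
    """Take the median across sensors at each time step."""
    return [median(step) for step in zip(*per_sensor_series)]


# Hypothetical CO2 readings (ppm) from four units over four 1-min steps.
readings = [
    [510, None, 530, 540],
    [512, 518, None, 538],
    [900, 520, 528, 542],  # transient spike at the first step
    [508, None, 532, 536],
]
filled = [backward_fill(series) for series in readings]
fused = fuse_sensors(filled)
print(fused)
```

In this toy input the 900 ppm spike from a single unit does not dominate the fused value at the first step, which is the motivation given for choosing the median over the mean.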

2.3. Dataset Exploration

The data exploration phase involved computing descriptive statistics for the dataset’s variables across the two monitoring periods. Central tendency and dispersion measures were calculated to provide a detailed presentation of their principal distributional characteristics and patterns.

As presented in Figure 2, the CO2 concentration distributions were broadly comparable and consistent across the two monitoring periods, where the first and second datasets exhibited means of 516.2 ppm and 520.3 ppm, and standard deviations of 122.7 ppm and 120.4 ppm, respectively. These considerable variations indicated pronounced CO2 fluctuations around a nearly identical average in the two monitoring periods. Both medians were lower by 7% compared to the corresponding means, indicating slightly right-skewed CO2 distributions. Further examining distributional characteristics, the 75th percentiles in the first and second datasets were nearly identical at 565 ppm and 566 ppm, respectively, while the 95th percentile was higher in the second dataset by 3.45%, reflecting a marginal prevalence of elevated CO2 concentrations during the latter period. On the whole, the underlying distributional characteristics of the CO2 concentration across the two monitoring periods substantiate the assumption of stable prevailing conditions in the examined hospital settings.

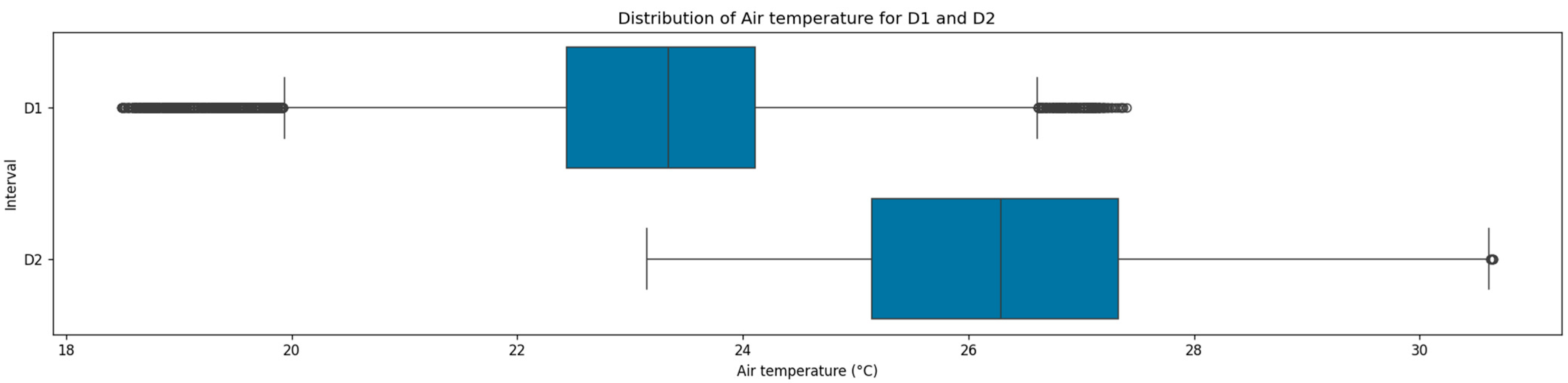

The air temperature distributions demonstrated notable differences across the two monitoring periods, as presented in Figure 3. Both datasets showed close agreement between their central tendency measures, with a consistent variability of 1.4 °C. In particular, the first dataset showed a mean of 23.2 °C with a median higher by 0.1 °C, whereas the second dataset exhibited 26.3 °C for both measures. The pronounced differences in central tendency were probably attributable to the thermal regulation of the indoor environment through HVAC systems, and the relatively low variability in temperatures indicated stable thermal conditions. For both datasets, the IQRs were also similar in width, ranging from 22.4 °C to 24.1 °C in the first dataset, and from 25.1 °C to 27.3 °C in the second.

The relative humidity distributions exhibited substantial variation between the two monitoring intervals, as presented in Figure 4, reflecting the interplay between outdoor climate, building usage, and indoor air regulation. During the first monthly interval, the mean and median relative humidity were 56.0% and 56.5%, respectively, with the IQR ranging from 49.9% to 61.9%. This indicated a generally humid indoor environment, with values frequently clustering in the upper half of the observed range. In stark contrast, the second monthly interval showed a marked decrease in relative humidity, with the mean dropping to 29.95%, the median to 32.0%, and the IQR shifting down to between 24.0% and 35.0%, pointing to a much drier indoor environment during the second interval. This reduction in both central tendency and spread may be attributed to the operation of heating systems during the winter months, which commonly leads to reduced indoor relative humidity.

The PM2.5 concentration distribution showed a significant reduction in the second monitoring interval, accompanied by a marked variance reduction, as illustrated in Figure 5. During the first interval, the mean was 10.9 µg/m³ with a high standard deviation of 11.9 µg/m³ and an IQR ranging between 0.0 µg/m³ and 16.0 µg/m³. In contrast, the mean decreased by 53.3% during the second interval, with a lower standard deviation of 6.5 µg/m³ and an IQR ranging between 0.0 µg/m³ and 7.0 µg/m³. The zero PM2.5 concentrations recorded during these two monitoring periods indicated excellent IAQ, with concentrations frequently below the sensors’ detection thresholds.

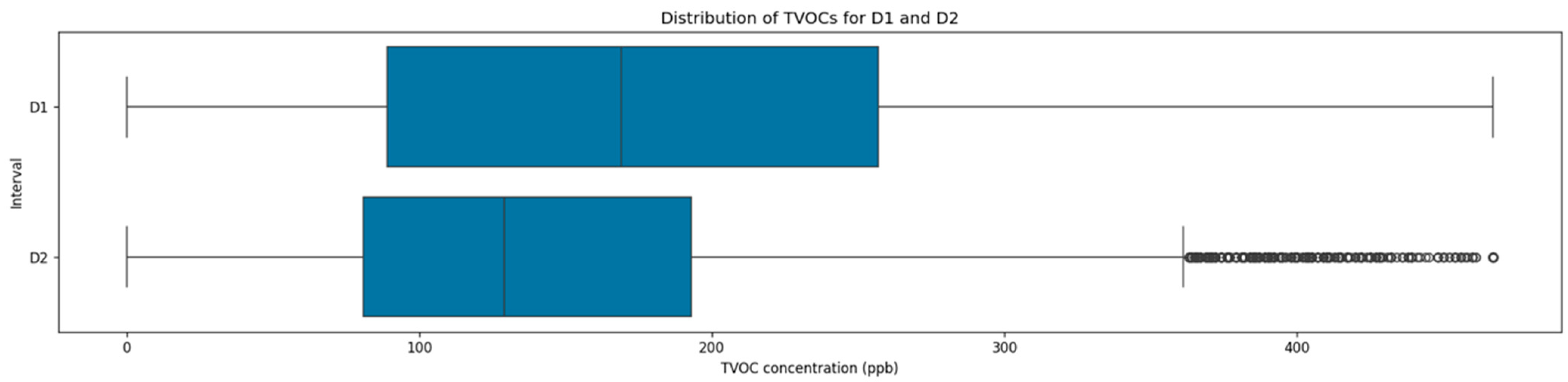

Similarly to PM2.5, TVOC concentrations also demonstrated a noticeable decrease in central tendency and variability measures in the second interval, as presented in Figure 6. During the first interval, TVOC had a mean of 185.9 ppb with a standard deviation of 145.3 ppb and an IQR ranging from 89.0 ppb to 257.0 ppb, reflecting elevated indoor TVOC levels with increased variance. During the second interval, the TVOC mean dropped to 147.42 ppb, representing an approximate 20.7% reduction, while the standard deviation decreased to 85.33 ppb, corresponding to a reduction of 41.3%. In this interval, the IQR was narrower, between 81.0 ppb and 193.0 ppb, indicating an overall IAQ improvement and a substantial reduction in TVOC fluctuations.

The distribution of barometric pressure remained remarkably stable across the two monitoring intervals and did not yield any notable findings. The mean values were 101.1 kPa and 101.7 kPa for the first and second intervals, respectively, with low corresponding variabilities (0.57 kPa in the first interval). These small variations are attributable to normal atmospheric fluctuations rather than any meaningful shift.

2.4. Feature Engineering

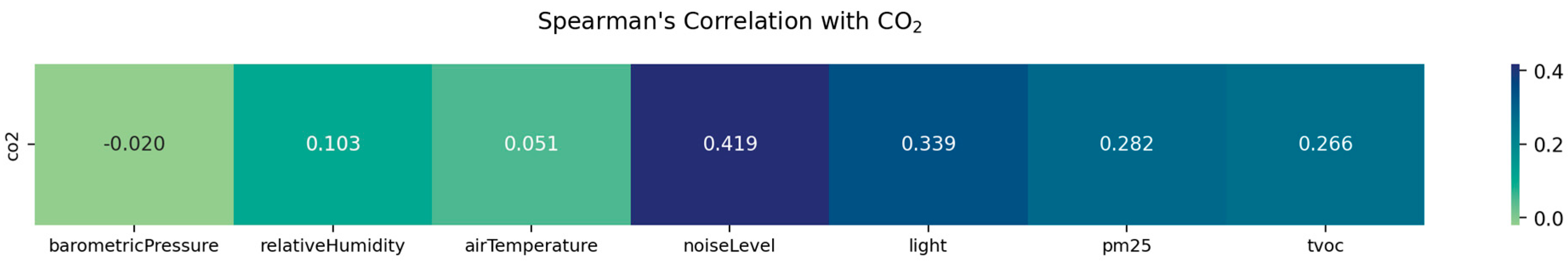

2.4.1. Exploring Linear and Monotonic Relationships with CO2

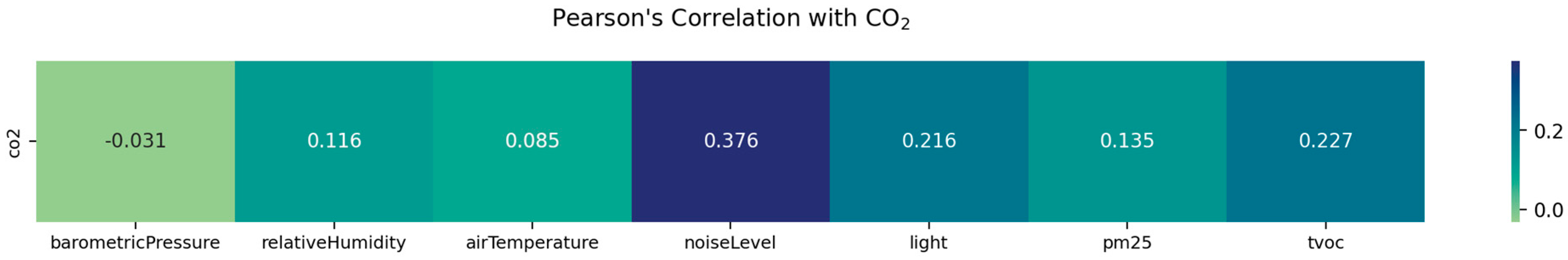

Adhering to the principle of parsimony, Pearson’s $r$ and Spearman’s $\rho$ correlation coefficients were computed to explore linear and monotonic relationships between the candidate predictors and the CO2 concentration. Figure 7 displays the results from Pearson’s correlation and Figure 8 the results from Spearman’s correlation. Following the established interpretation guidelines for Pearson’s correlation, no candidate predictor reached even the lower threshold that denotes a moderate linear relationship with CO2 concentration. The indirect occupancy indicators emerged as the most influential predictors, with noise levels exhibiting the highest Pearson’s and Spearman’s coefficients, followed by light levels. The consistently higher Spearman’s coefficients for the indirect occupancy indicators suggested the presence of non-linear monotonic relationships with CO2 concentration, indicating that the occupancy effects may follow threshold or saturation patterns rather than simple linear increases. Among the air pollutants, TVOC showed a weak to moderate linear correlation with CO2 concentration, consistent with its mutual dependence on human occupancy patterns, while PM2.5 exhibited a weak linear relationship with CO2 concentration. Climatic conditions, namely barometric pressure, air temperature, and relative humidity, demonstrated negligible linear correlations. These initial findings on Pearson’s and Spearman’s correlation magnitudes underscored that CO2 concentration is probably governed by more complex, non-linear interactions with candidate predictors across the occupancy indicators, climatic conditions, and air pollutants.
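To illustrate why a Spearman coefficient that exceeds the Pearson coefficient points to a monotonic but non-linear relationship, the following sketch computes both on a synthetic saturating curve. The data and the helper names (`pearson`, `ranks`, `spearman`) are hypothetical and are not taken from the study; Spearman is computed here as Pearson on average ranks.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def ranks(values):
    """1-based ranks; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


# Synthetic example: CO2 rises with a noise-level proxy but saturates.
noise = [1, 2, 3, 4, 5, 6]
co2 = [400 + 300 * (1 - 2.0 ** -n) for n in noise]

print(round(pearson(noise, co2), 3), round(spearman(noise, co2), 3))
```

Because the synthetic curve is strictly increasing, the rank correlation is perfect while the linear correlation is visibly lower, which mirrors the saturation interpretation given above.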

2.4.2. Autocorrelation of CO2

While Pearson’s correlation coefficient can adequately capture linear dependencies between candidate predictors and CO2 concentration, the inclusion of lagged CO2 concentrations themselves could also reveal strong linear associations, thereby uncovering autocorrelation within specific lookback windows. To reduce the high-frequency noise of the 1-min resolution in CO2 measurements, a 5-min aggregation using the median was applied, resulting in 24 lags spanning a 120-min temporal window. The autocorrelation function (ACF) exhibited statistically significant values for all 24 lags, following a smooth, monotonic decay pattern throughout this temporal window without any oscillatory behavior. An ACF value of 0.99 at lag 1 indicated near-perfect linear correlation between current CO2 concentrations and those measured 5 min earlier, demonstrating remarkable short-term persistence. At 30 min, the ACF value remained considerably high at over 0.85, while at 60 min it decreased to 0.69, still maintaining substantial autocorrelation. Even at lag 24, the ACF value sustained a meaningful level of 0.44, confirming that CO2 retains forecasting utility over 120-min periods. The Partial ACF (PACF) revealed that CO2 exhibits primary dependence on lag 1 and a secondary negative correlation at lag 2, with PACF values of 0.99 and −0.38, respectively, while contributions beyond lag 2 remained negligible. The observed negative PACF at lag 2 aligns with the gradually decaying ACF, supporting the presence of short-term persistence in CO2 concentrations, followed by a mild correction effect at longer lags, which is a pattern characteristic of autoregressive processes with direct dependencies primarily at lags 1 and 2.
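The aggregation and autocorrelation steps can be sketched as follows. This is an illustrative example on a synthetic, slowly varying signal, not the study's data; `aggregate_median` and `acf` are hypothetical helper names, and the ACF uses the common biased estimator (normalizing every lag by the lag-0 sum of squares).

```python
from math import pi, sin
from statistics import median


def aggregate_median(series, window=5):
    """Collapse consecutive non-overlapping windows into their median."""
    return [
        median(series[i:i + window])
        for i in range(0, len(series) - window + 1, window)
    ]


def acf(series, max_lag):
    """Sample autocorrelation for lags 0..max_lag (biased estimator)."""
    n = len(series)
    mean = sum(series) / n
    deviations = [value - mean for value in series]
    denominator = sum(d * d for d in deviations)
    return [
        sum(deviations[t] * deviations[t - k] for t in range(k, n)) / denominator
        for k in range(max_lag + 1)
    ]


# Synthetic 1-min CO2 signal: two days with a smooth daily cycle.
minute_co2 = [500 + 100 * sin(2 * pi * t / 1440) for t in range(2880)]

five_min = aggregate_median(minute_co2)  # 5-min resolution
rho = acf(five_min, max_lag=24)          # 24 lags = 120-min window
print([round(rho[k], 3) for k in (1, 6, 12, 24)])
```

With 5-min steps, lags 6, 12, and 24 correspond to the 30-min, 60-min, and 120-min points discussed above; the synthetic values themselves carry no meaning beyond showing the decay pattern.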

2.4.3. Granger Causality Analysis

After examining whether the current values of candidate predictors exhibit linear correlation with CO2 concentration, as well as the autocorrelation of CO2 itself, the Granger causality test was employed to determine whether lagged values of the candidate predictors could enhance the predictability of future CO2 concentrations. Notably, predictors related to indoor climatic conditions were found to significantly enhance the prediction of CO2 concentrations, notwithstanding their previously limited linear associations. Noise level, air temperature, relative humidity, and PM2.5 exhibited highly significant Granger causality across all 24 time lags, indicating sustained predictive strength for CO2 concentration throughout the entire 120-min temporal window. Barometric pressure also showed highly significant Granger causality between lag 4 and lag 11, moderate significance between lag 12 and lag 16, and marginal significance from lag 17 onwards. This diminishing significance at higher lags indicated that the predictive relationship of barometric pressure weakens as the temporal gap increases. Light level demonstrated marginal predictive capacity for up to 40 min, with an optimal forecasting performance at lag 4, which suggested a localized and time-sensitive relationship with CO2 concentrations. Lastly, TVOC displayed significant Granger causality exclusively at lag 1, indicating that its predictive effect on CO2 concentration is immediate and short-lived, rather than delayed.

2.4.4. Mutual Information (MI)

To explore complex, non-linear dependencies between the candidate predictors and CO2 concentrations, the mutual information measure was utilized. As a measure of association, MI captures the total shared information between variables, providing sensitivity to both linear and non-linear dependencies. All the candidate predictors related to climatic conditions resulted in relatively higher MI scores than those related to occupancy status and air pollutants, despite exhibiting weaker linear correlations with CO2 concentration. Based on the MI measure, barometric pressure emerged as the most informative predictor, with its negligible linear correlation with CO2 highlighting the presence of a pronounced non-linear dependency. Relative humidity and air temperature also showed substantial shared information content with CO2, which contradicted their weak linear correlations and likewise highlighted the presence of non-linear dependencies. Notably, noise level exhibited a relatively low MI score in contrast to its stronger linear correlation with CO2, indicating a predominantly linear relationship. Light levels and TVOC maintained similar strengths of association under both linear and non-linear evaluation, whereas PM2.5 provided a moderate amount of additional non-linear information.
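The contrast between a negligible linear correlation and a high MI score can be reproduced on a toy example. The sketch below uses a simple equal-width histogram estimator, which is an assumption made for illustration; the source does not state which MI estimator was used, and `mutual_information` is a hypothetical helper.

```python
from collections import Counter
from math import log


def mutual_information(x, y, bins=4):
    """Histogram-based MI estimate in nats, using equal-width bins."""

    def discretize(values):
        low, high = min(values), max(values)
        width = (high - low) / bins or 1.0  # guard against constant input
        return [min(int((v - low) / width), bins - 1) for v in values]

    binned_x, binned_y = discretize(x), discretize(y)
    n = len(x)
    joint = Counter(zip(binned_x, binned_y))
    marginal_x, marginal_y = Counter(binned_x), Counter(binned_y)
    # Sum of p(i, j) * log(p(i, j) / (p(i) * p(j))) over occupied cells.
    return sum(
        (count / n) * log(count * n / (marginal_x[i] * marginal_y[j]))
        for (i, j), count in joint.items()
    )


# Symmetric quadratic dependence: zero covariance, yet y is fully
# determined by x.
x = list(range(-20, 21))
y = [value * value for value in x]

covariance_sum = sum(a * b for a, b in zip(x, y))  # mean of x is zero
mi = mutual_information(x, y)
print(covariance_sum, round(mi, 3))
```

The covariance term is exactly zero here while the MI estimate is clearly positive, which is the same qualitative pattern reported for the climatic predictors.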

2.4.5. Polynomial and Interaction Terms

Recognizing the potential for synergistic effects among candidate predictors, polynomial and interaction terms were generated to evaluate underlying dependencies with CO2 concentrations that may not be represented by the original predictors alone. All the second-degree polynomial terms demonstrated MI scores close to those of their corresponding linear terms; this held for the second-degree barometric pressure, relative humidity, and air temperature terms. Therefore, incorporating higher-degree terms did not offer any significant predictive advantage. Interaction terms revealed significant synergistic relationships between candidate climatic predictors. The product of barometric pressure and relative humidity achieved the highest interaction score; however, it closely approximated that of relative humidity alone. Notably, air temperature interactions with barometric pressure and relative humidity yielded higher mutual information scores than air temperature individually, indicating that incorporating these interactions enhances predictive capacity relative to using air temperature alone. From an aggregated perspective, the MI achieved by the original candidate predictors was marginally superior in terms of both maximum and average values compared to their polynomial counterparts. Overall, despite their lower individual peak, interaction terms demonstrated the most consistent performance.

2.5. Modeling

2.5.1. Naïve Baseline

In the present study, the Last Observation Carried Forward (LOCF) method was used to establish a naïve baseline against which to evaluate the implemented LSTM variants. LOCF is a non-parametric persistence method, which propagates the latest observed measurement across the desired future time horizon. Under this zero-change assumption, LOCF serves as the minimum benchmark that more complex and sophisticated time-series forecasting approaches must outperform, particularly for time series exhibiting low temporal variance and irregular fluctuation patterns that deviate from smooth monotonic trends. Typically, the CO2 concentration in indoor spaces demonstrates such erratic temporal behavior, particularly in this study, where human occupancy serves as the main source of CO2 emissions, without interference from other significant sources. Let $y_t$ represent the concentration of CO2 at a specific time step $t$; then the LOCF method can be mathematically described by Equation (1), where $h$ denotes the forecasting horizon.

$$\hat{y}_{t+h} = y_t \tag{1}$$
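As a minimal sketch of the persistence baseline, the snippet below pairs each observation with the value recorded a fixed number of steps later, so that the observation itself acts as the forecast. The function name `locf_forecast` and the sample values are hypothetical; with 5-min steps, a horizon of 3 steps corresponds to 15 min ahead.

```python
def locf_forecast(series, horizon_steps):
    """Return (prediction, actual) pairs for a persistence forecast.

    The value observed at step t is carried forward unchanged as the
    prediction for step t + horizon_steps.
    """
    return [
        (series[t], series[t + horizon_steps])
        for t in range(len(series) - horizon_steps)
    ]


# Hypothetical CO2 series (ppm) at 5-min resolution.
co2 = [500, 510, 530, 520, 515, 540]

pairs = locf_forecast(co2, horizon_steps=3)
mae = sum(abs(pred - actual) for pred, actual in pairs) / len(pairs)
print(pairs, round(mae, 2))
```

Any learned model is expected to produce a lower error than this baseline at the same horizon; otherwise its added complexity is hard to justify.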

2.5.2. LSTM Architectures

As already mentioned, this study implemented four (4) LSTM architectures with progressive structural complexity. The rationale behind this incremental approach is twofold: to identify the LSTM architecture with optimal predictive performance, while at the same time accounting for the computational overhead introduced by the specific architecture, acknowledging that increased complexity often entails diminishing marginal gains that may not justify the associated overhead. For each architecture, both univariate and multivariate LSTM modeling paradigms were implemented, thus exploring the predictive capacity of both autoregressive CO2 dependencies and exogenous variables in short-term CO2 concentration forecasting, in accordance with the feature engineering insights. The LSTM models produce single-step predictions for 15 min, 30 min, 60 min, 120 min, and 180 min ahead, using equal-length lookback windows. This proportional architectural design for the lookback windows’ length ensured alignment between the temporal context of the input sequences and output, thereby maintaining a balanced retention of relevant historical information. Given that CO2 concentration demonstrates stable dynamics with minimal variability over successive 1-min periods, the input sequences employed 5-min sampling intervals to attenuate noise artifacts.
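The equal-length lookback and horizon design can be sketched as a windowing routine. This is an illustrative example only: `make_windows` and `chronological_split` are hypothetical names, the toy rows hold two made-up features, and splitting the samples in time order is an assumption, since the source states only the 80:20 ratio.

```python
def make_windows(rows, target_index, steps):
    """Build (lookback window, target) samples.

    Each window holds `steps` consecutive rows, and the target is the
    value of column `target_index` exactly `steps` rows after the last
    row of the window, so lookback length equals forecasting horizon.
    """
    samples = []
    for end in range(steps, len(rows) - steps + 1):
        window = rows[end - steps:end]
        target = rows[end - 1 + steps][target_index]
        samples.append((window, target))
    return samples


def chronological_split(samples, train_fraction=0.8):
    """Split samples in time order (assumed; no shuffling)."""
    cut = int(len(samples) * train_fraction)
    return samples[:cut], samples[cut:]


# Toy multivariate rows at 5-min resolution: [CO2 ppm, temperature].
rows = [[400 + i, 20.0 + 0.1 * i] for i in range(12)]

# steps=3 means a 15-min lookback and a 15-min-ahead target.
samples = make_windows(rows, target_index=0, steps=3)
train, test = chronological_split(samples)
print(len(samples), samples[0][1], len(train), len(test))
```

For the univariate variant, each row would hold only the CO2 value; the windowing logic is unchanged.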

To fine-tune the hyperparameters for each LSTM architecture, a grid search with 3-fold cross-validation was performed, resulting in the configurations described in Equations (2)–(7). Given the increased demands for computational resources for this optimization process, the present study did not conduct an exhaustive grid search. The search space encompassed 8, 16, 32, and 64 units per layer and dropout rates between 0.1 and 0.4 in increments of 0.1, subject to the constraint that dropout rates between consecutive layers must differ by 0.1. During the hyperparameter tuning, two batch sizes of 32 and 64 samples were considered, along with two candidate learning rates. To ensure effective model convergence and mitigate the risk of overfitting, early stopping with a patience of 3 epochs was applied while monitoring the validation loss.
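The shape of this search space, including the dropout constraint, can be sketched as below. This enumerates the full candidate space for illustration, whereas the study explicitly did not run an exhaustive search. The learning-rate entries are placeholder labels because the actual values are not reproduced here, and `dropout_schedules` and `grid` are hypothetical helpers.

```python
from itertools import product

UNITS = (8, 16, 32, 64)
DROPOUT_TENTHS = (1, 2, 3, 4)            # 0.1 .. 0.4, kept as integers
BATCH_SIZES = (32, 64)
LEARNING_RATES = ("lr_low", "lr_high")   # placeholders, values not given


def dropout_schedules(n_layers):
    """Dropout sequences whose consecutive rates differ by exactly 0.1."""
    return [
        tuple(tenths / 10 for tenths in combo)
        for combo in product(DROPOUT_TENTHS, repeat=n_layers)
        if all(abs(a - b) == 1 for a, b in zip(combo, combo[1:]))
    ]


def grid(n_layers):
    """Yield every candidate configuration for an n-layer model."""
    layer_units = product(UNITS, repeat=n_layers)
    for units, rates, batch, rate_label in product(
        layer_units, dropout_schedules(n_layers), BATCH_SIZES, LEARNING_RATES
    ):
        yield {
            "units": units,
            "dropout": rates,
            "batch_size": batch,
            "learning_rate": rate_label,
        }


schedules = dropout_schedules(4)
print(len(schedules), (0.1, 0.2, 0.3, 0.4) in schedules)
print(sum(1 for _ in grid(3)))
```

Working in integer tenths avoids floating-point comparisons when checking the 0.1 difference between consecutive layers. The size of the full space also shows why a non-exhaustive search is a practical choice.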

The first LSTM model implemented a three-layer sequential architecture: an LSTM layer with 64 hidden units configured to process the entire input sequence and return only the final hidden state, a dense intermediate layer with 16 neurons using the ReLU activation function for non-linear feature transformation, and a single-neuron linear output layer for direct CO2 concentration regression. The training of this shallow model used batches of 32 input sequences, as selected by the optimization, together with the tuned learning rate, resulting in relatively rapid convergence within 9 epochs. By employing conservative architectural complexity, this model established an additional baseline, complementary to the naïve one, which demonstrated the minimal computational overhead for capturing temporal dependencies in CO2 concentration using DL.

Building upon the previous model, the potential of deeper LSTM architectures was explored. Thus, the second LSTM model stacked three layers with a progressively reduced dimensionality of 64, 32, and 16 units. The cascading structure allowed the first two LSTM components to retain sequential outputs for continued temporal modeling, whereas the terminal layer compressed the entire sequence into a unified representation. This hierarchical temporal processing was complemented by an 8-neuron dense layer with ReLU activation for feature refinement, terminating in a single-neuron output layer for CO2 concentration forecasting. Using a batch size of 32 and the tuned learning rate, the training process for this model reached convergence at epoch 11. Relative to the first LSTM approach, this model demonstrated considerably higher complexity with increased depth and parameter count, thus enabling more nuanced temporal pattern learning at the expense of computational resources.

Attention mechanisms constitute a popular approach for dynamic weighting in RNNs, allowing for selective focus on historically relevant periods with patterns or significant external events, rather than being constrained by the limited information capacity of the final hidden state. To overcome this limitation, the third LSTM model was augmented with such a mechanism, employing an architecture consisting of a 64-unit LSTM layer that processed the entire input sequence while maintaining time step-level outputs, followed by a custom attention layer that computes temporal relevance scores for each time step based on learned query vectors. The attention mechanism applied softmax normalization to generate attention weights that sum to unity and produced a context-aware representation by computing the weighted sum of LSTM hidden states, thereby enabling the model to dynamically focus on historical periods with similar CO2 concentration patterns. The architecture concluded with a 16-neuron dense layer with ReLU activation for additional feature transformation and a single-neuron output layer for CO2 concentration forecasting. Regarding the training process, this model used a batch size of 64 and the tuned learning rate, with slow yet stable convergence at epoch 16.
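The softmax weighting and weighted sum described above can be sketched in plain Python. This is a simplified illustration, not the study's custom layer: scoring each time step by a dot product with a single query vector is an assumption, the query here is fixed rather than learned, and `attention_pool` is a hypothetical name.

```python
from math import exp


def attention_pool(hidden_states, query):
    """Softmax-weighted sum of per-step hidden states.

    Scores are dot products between each hidden state and the query
    (an assumed scoring function); weights sum to one.
    """
    scores = [
        sum(h_i * q_i for h_i, q_i in zip(state, query))
        for state in hidden_states
    ]
    peak = max(scores)  # subtract the max for numerical stability
    exponentials = [exp(score - peak) for score in scores]
    total = sum(exponentials)
    weights = [value / total for value in exponentials]
    size = len(hidden_states[0])
    context = [
        sum(w * state[d] for w, state in zip(weights, hidden_states))
        for d in range(size)
    ]
    return weights, context


# Three time steps with 2-dimensional hidden states (toy values).
hidden_states = [[0.1, 0.0], [0.9, 0.2], [0.4, 0.4]]
query = [2.0, 1.0]

weights, context = attention_pool(hidden_states, query)
print([round(w, 3) for w in weights], [round(c, 3) for c in context])
```

The context vector replaces the final hidden state as the input to the dense layers, so time steps with higher relevance scores contribute more to the forecast.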

Dropout regularization is one of the most common techniques used in RNNs to prevent over-reliance on specific temporal patterns by zeroing out a small fraction of neuron outputs during training. Accordingly, this technique was applied in the fourth LSTM model, incorporating three stacked LSTM layers with 64, 32, and 16 hidden units, respectively. Progressively increasing dropout rates of 0.1, 0.2, and 0.3 were applied, adhering to the results from the hyperparameter configuration process. The architecture was completed with a 16-neuron dense layer followed by a dropout rate of 0.4 (the highest rate, applied to mitigate the substantial overfitting risk inherent in fully connected layers) and concluded with a single-neuron output layer for CO2 concentration forecasting. With a batch size of 64, this model achieved the slowest convergence at 22 epochs.

For clarity and to facilitate methodological validation, the mathematical formulations of the LSTM models are presented. The input is a sequence of a given length in a feature space of a given dimension, and the output is the predicted CO2 concentration at a given future horizon. Equations (2) and (3) describe the formulation of the first and the second LSTM models, respectively, each including the ReLU activation function and the corresponding bias term.
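The mapping from an input sequence to a target at a future horizon can be sketched as a sliding-window construction. This is a minimal illustration under stated assumptions: the helper `make_windows` and its parameters `lookback`, `horizon`, and `target_index` are hypothetical names, the horizon is expressed in time steps rather than minutes, and CO2 is assumed to be stored in column 0 of each observation.

```python
def make_windows(series, lookback, horizon, target_index=0):
    """Build (input window, target) pairs from a multivariate time series.

    series: list of observations, each a list of feature values.
    lookback: number of past time steps in each input window.
    horizon: number of steps ahead of the window's last step to predict.
    target_index: column holding the forecast variable (assumed to be CO2).
    """
    inputs, targets = [], []
    last_start = len(series) - lookback - horizon
    for start in range(last_start + 1):
        window = series[start:start + lookback]
        # Target lies `horizon` steps after the final step of the window.
        target_row = series[start + lookback - 1 + horizon]
        inputs.append(window)
        targets.append(target_row[target_index])
    return inputs, targets


# Toy univariate series of 10 readings (ppm values are illustrative only).
toy_series = [[400.0 + i] for i in range(10)]
X, y = make_windows(toy_series, lookback=4, horizon=2)
```

If, for example, samples were recorded every 15 min (an assumption, not a detail taken from this section), `horizon=2` would correspond to a 30-min-ahead forecast.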

The third LSTM model is mathematically formulated through Equations (4) and (5), which involve the attention weights and the hidden states of the 32-unit LSTM layer.

The fourth LSTM model is mathematically formulated through Equations (6) and (7), which include the dropout rate.

2.6. Performance Evaluation

To evaluate the performance, two standard error metrics used in regression problems were employed: the Mean Absolute Error (MAE), which averages the absolute differences between predicted and actual values regardless of the error direction, and the Root Mean Squared Error (RMSE), which uses a quadratic error term to penalize significant deviations from the actual values.
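Both metrics follow their standard definitions and can be computed as in the minimal sketch below. The function names and the sample values are illustrative and are not taken from the study's data.

```python
import math


def mae(actual, predicted):
    """Mean Absolute Error: average magnitude of the errors."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root Mean Squared Error: squaring weights large errors more heavily."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_sq = sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)
    return math.sqrt(mean_sq)


# Illustrative CO2 readings in ppm (not real measurements).
actual_ppm = [600.0, 650.0, 700.0, 800.0]
predicted_ppm = [610.0, 640.0, 700.0, 760.0]
mae_value = mae(actual_ppm, predicted_ppm)    # 15.0
rmse_value = rmse(actual_ppm, predicted_ppm)  # sqrt(450), about 21.21
```

In this toy case a single 40 ppm miss raises the RMSE well above the MAE, which shows how the quadratic term emphasizes large deviations.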

4. Conclusions

This study evaluated univariate and multivariate LSTM models of progressively increasing architectural complexity for indoor CO2 forecasting across horizons from 15 min to 180 min ahead, leveraging two months of data collected from the Secretary of Emergency Services of the GHT.

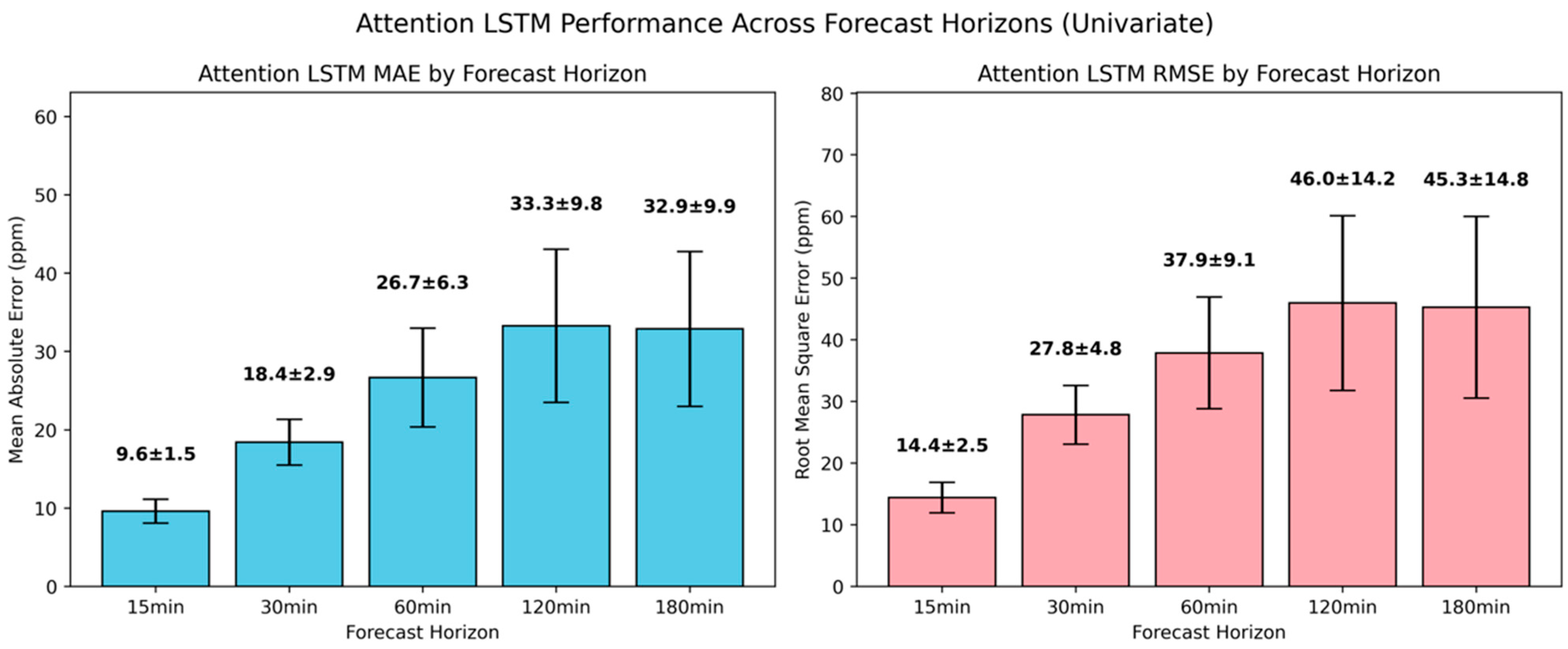

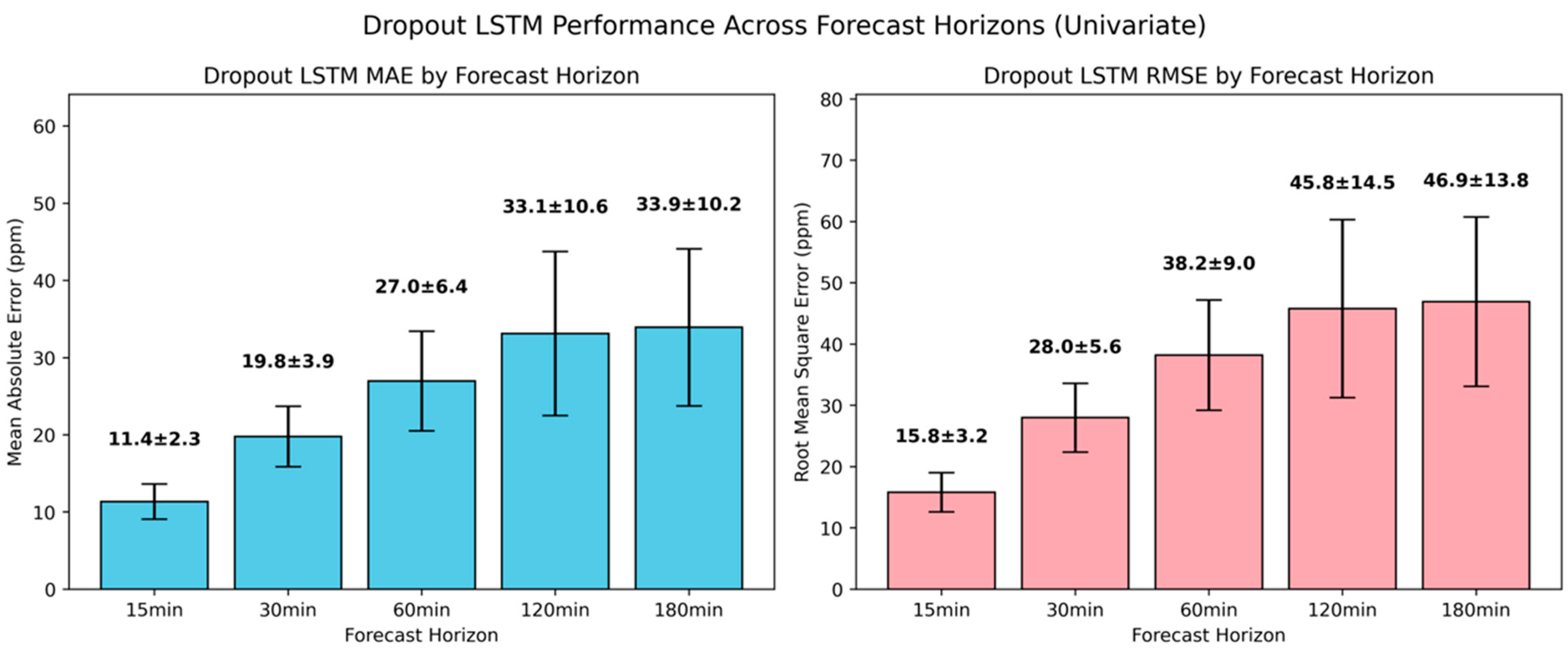

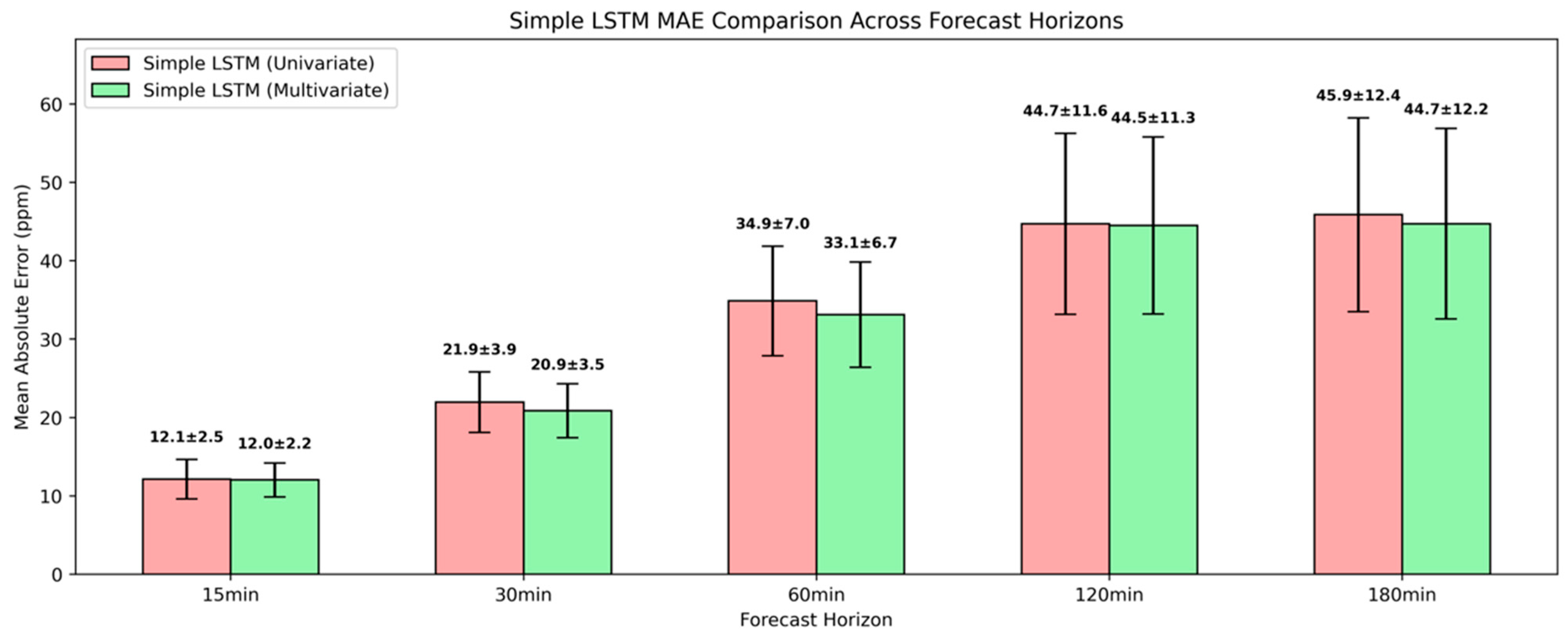

The ACF analysis revealed that CO2 exhibited strong temporal persistence at lags of up to 30 min and retained moderate autocorrelation at lags of up to 1 h. The Granger causality testing indicated statistically significant temporal dependencies between CO2 and additional predictors, with the most salient ones observed for noise level, air temperature, relative humidity, and PM2.5 over a 120-min temporal window. Considerable shared information was identified between CO2 and air temperature, barometric pressure, and relative humidity, underscoring the performance gains from incorporating these climatic conditions into the input sequences. The indirect occupancy indicators of light and noise levels demonstrated the highest Pearson’s correlation coefficients despite their relatively low MI scores, probably indicating an underlying linear relationship with CO2.

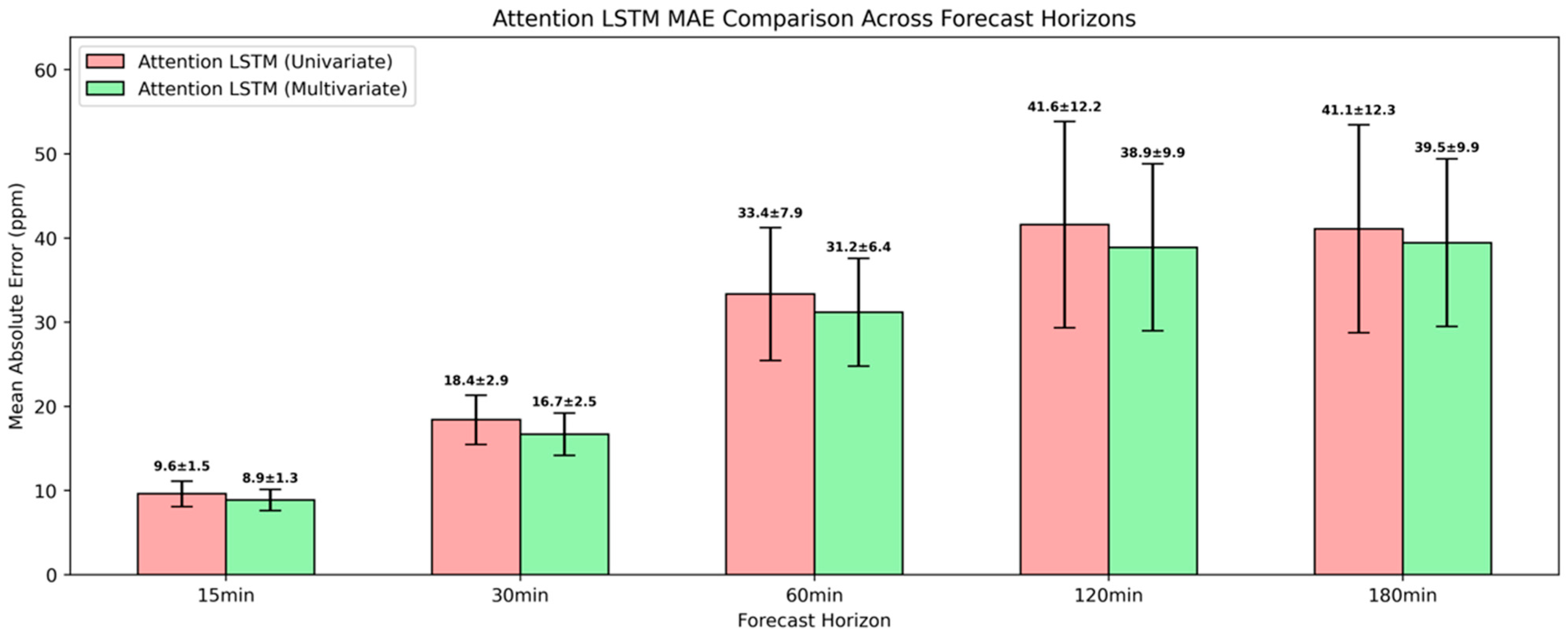

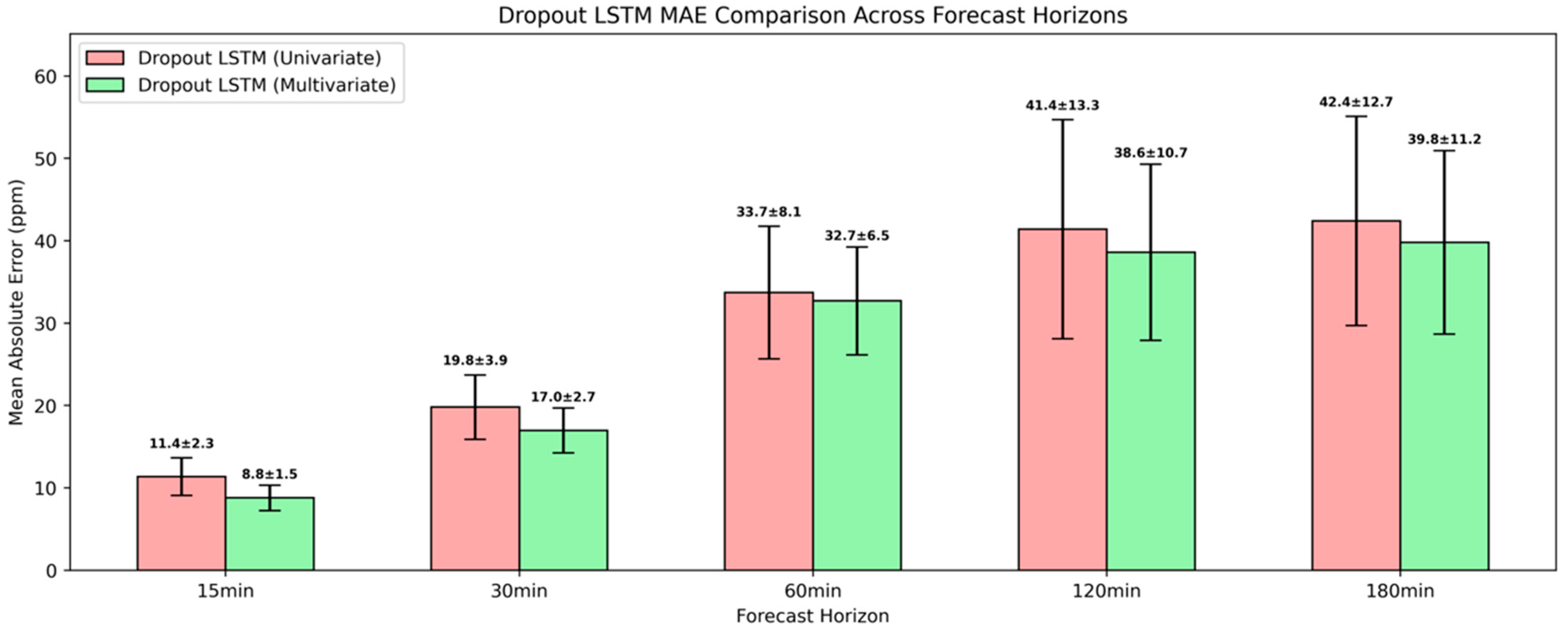

All the univariate LSTM models outperformed the naïve baseline across the examined forecasting horizons within the studied environment. A shallow univariate LSTM architecture achieved MAE reductions of 59.9% and 52.4% for 15 min and 30 min ahead, respectively, while the attention-based and regularized univariate LSTM architectures resulted in further error reduction. Considering the computational overhead associated with dropout, the attention-based LSTM offered the best balance between computational efficiency and predictive accuracy and was therefore adopted as the evaluation benchmark against multivariate approaches. For 15 min and 30 min ahead, the univariate attention-based LSTM attained MAEs of 9.6 ppm and 18.4 ppm, respectively, while for 60 min ahead, the MAE was 26.7 ppm. For 120 min and 180 min ahead, it yielded nearly identical MAEs of 33.3 ppm and 32.9 ppm.

Among the examined configurations and within the experiment’s context, the most substantial predictive gains in CO2 forecasting were achieved with a multivariate attention-based LSTM architecture. This architecture’s relatively superior performance reflected the multifaceted interplay among climatic conditions, air pollution, and occupancy governing indoor CO2, and underscored the attention mechanism’s capability to prioritize the most informative time steps. For 15 min, 30 min, and 60 min ahead, this attention-based architecture achieved MAEs of 8.9 ppm, 16.7 ppm, and 33.4 ppm, respectively. Even for longer forecasting horizons, the MAE remained below 40 ppm, with comparable MAEs of 38.9 ppm and 39.5 ppm for 120 min and 180 min ahead.

Compared to the predictive outcomes from related research works, the proposed multivariate attention-based LSTM attained competitive or superior performance over most forecasting horizons within the studied hospital environment. It should be stressed that each study explored datasets with diverse contextual, seasonal, and building characteristics, and incorporated different predictors, including CO2, climatic conditions, and occupancy patterns; therefore, generalizability beyond the studied environment remains to be demonstrated.

At 15 min ahead, the proposed multivariate attention-based LSTM achieved an MAE of 8.9 ppm, comparable to the MAE of 8.95 ppm that was reported by Y. Zhu et al. [17] using a bidirectional LSTM at a considerably shorter 1-min horizon. By contrast, E. Athanasakis et al. [21] reported a lower MAE of 4.56 ppm using a dilated CNN, examining also a considerably shorter 5-min horizon. At 30 min ahead, the proposed multivariate attention-based LSTM achieved an MAE of 16.7 ppm, outperforming the TCN of García-Pinilla et al. [19] that attained an MAE of 29.19 ppm. At 60 min ahead, the MAE was 33.4 ppm, which is comparable to the 30.16 ppm achieved by the MLP of Taheri and Razban [20]. Even for longer horizons of 120 min and 180 min, the proposed multivariate attention-based LSTM maintained an MAE below 40 ppm, yielding accuracy on par with the best multi-hour results reported by García-Pinilla et al. [19], and substantially outperformed the 24-h ahead forecasts with an MAE of 55.19 ppm in Taheri and Razban [20].

5. Future Work

This study demonstrates the practical value of attention-based LSTM models for short-term CO2 forecasting in indoor environments. By integrating climatic conditions, air pollutants, and indirect occupancy indicators, the proposed approach yields accurate and reliable predictions that go beyond simple monitoring. Such forecasts can support energy-efficient operation of portable embedded devices, allowing them to adjust sensing schedules dynamically, reduce redundant measurements, and extend battery life. They also enable proactive IAQ management: instead of relying on reactive ventilation, which is limited by slow system response times and mixing dynamics, predictive insights allow timely interventions before CO2 thresholds are exceeded. This can promote healthier indoor conditions, reduce occupant exposure, and avoid the energy-intensive boosts often required by late corrective actions. Such predictive control is valuable across diverse settings (including offices, classrooms, healthcare facilities, and homes), where differences in room size, occupancy density, and HVAC responsiveness can otherwise result in prolonged periods of elevated CO2. By anticipating concentrations 15–180 min in advance, the proposed model can act as a safeguard that simultaneously enhances comfort, compliance, and energy efficiency.

Four main directions warrant further investigation. First, this study relied on a single-site dataset with a specific sensor configuration and monitoring window, which may introduce instrumentation and temporal-coverage biases; external validation on datasets collected from diverse buildings, climates, and occupancy regimes is necessary to assess generalization. Second, future research should prioritize the direct incorporation of occupancy, given its substantial influence on CO2 dynamics. The reliance on noise and luminosity levels to infer occupancy status represents a methodological limitation, and addressing this constraint could yield significant improvement in predictive performance. Third, predictive models should be integrated into digital solutions such as digital twins and virtual sensing to confirm their practicality in real-world applications. Fourth, future studies should focus on identifying the dominant physical and environmental factors driving variations in CO2, PM2.5, and VOC concentrations, in order to complement the forecasting models with a stronger causal understanding and support more effective mitigation strategies.