TMU-Net: A Transformer-Based Multimodal Framework with Uncertainty Quantification for Driver Fatigue Detection

Abstract

1. Introduction

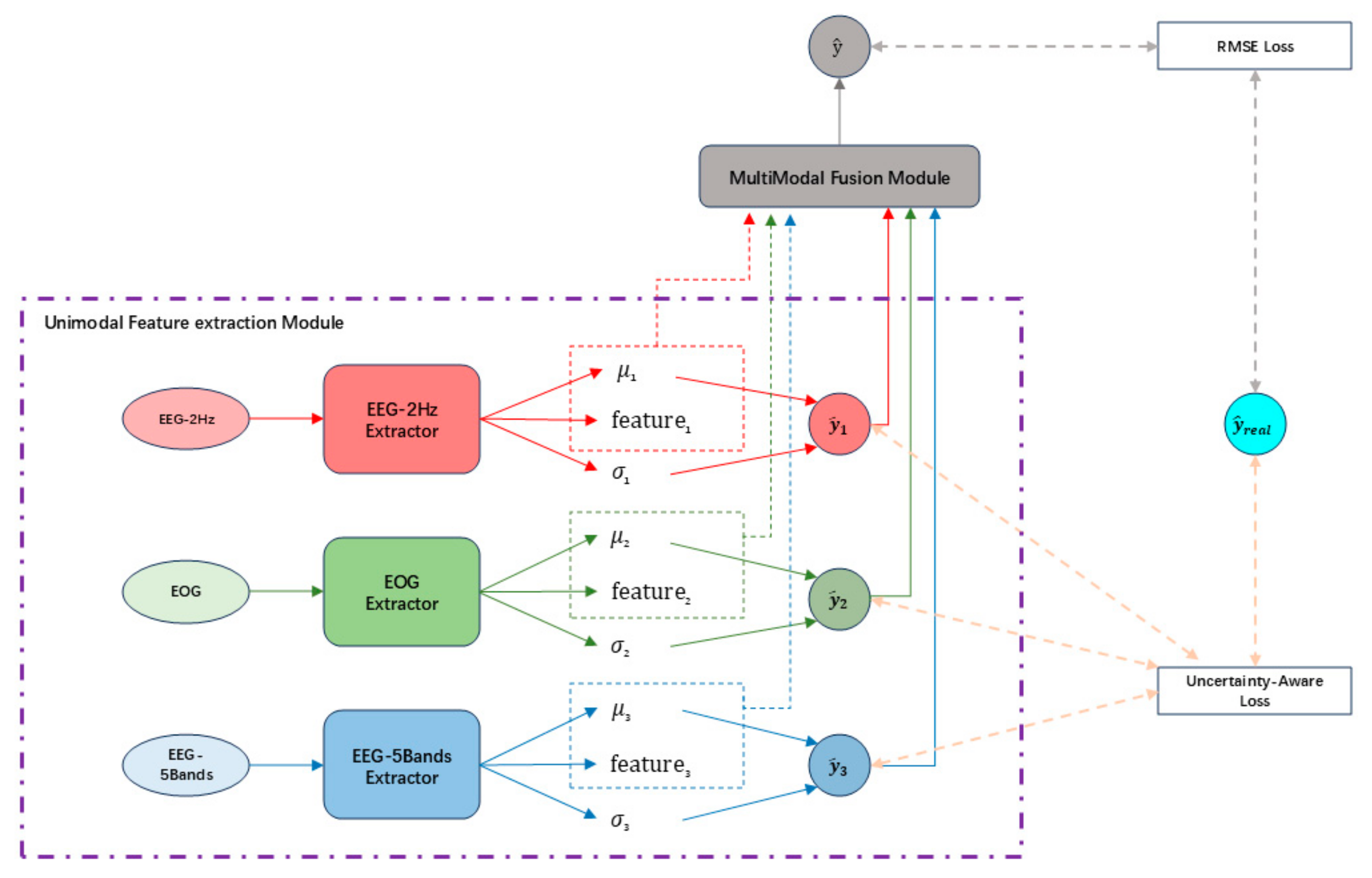

- We propose TMU-Net, a novel framework that, for the first time, integrates EEG signals (both full-band features at 2 Hz frequency resolution and five-band features) with EOG signals and incorporates uncertainty modeling to improve the accuracy and robustness of driver fatigue detection.

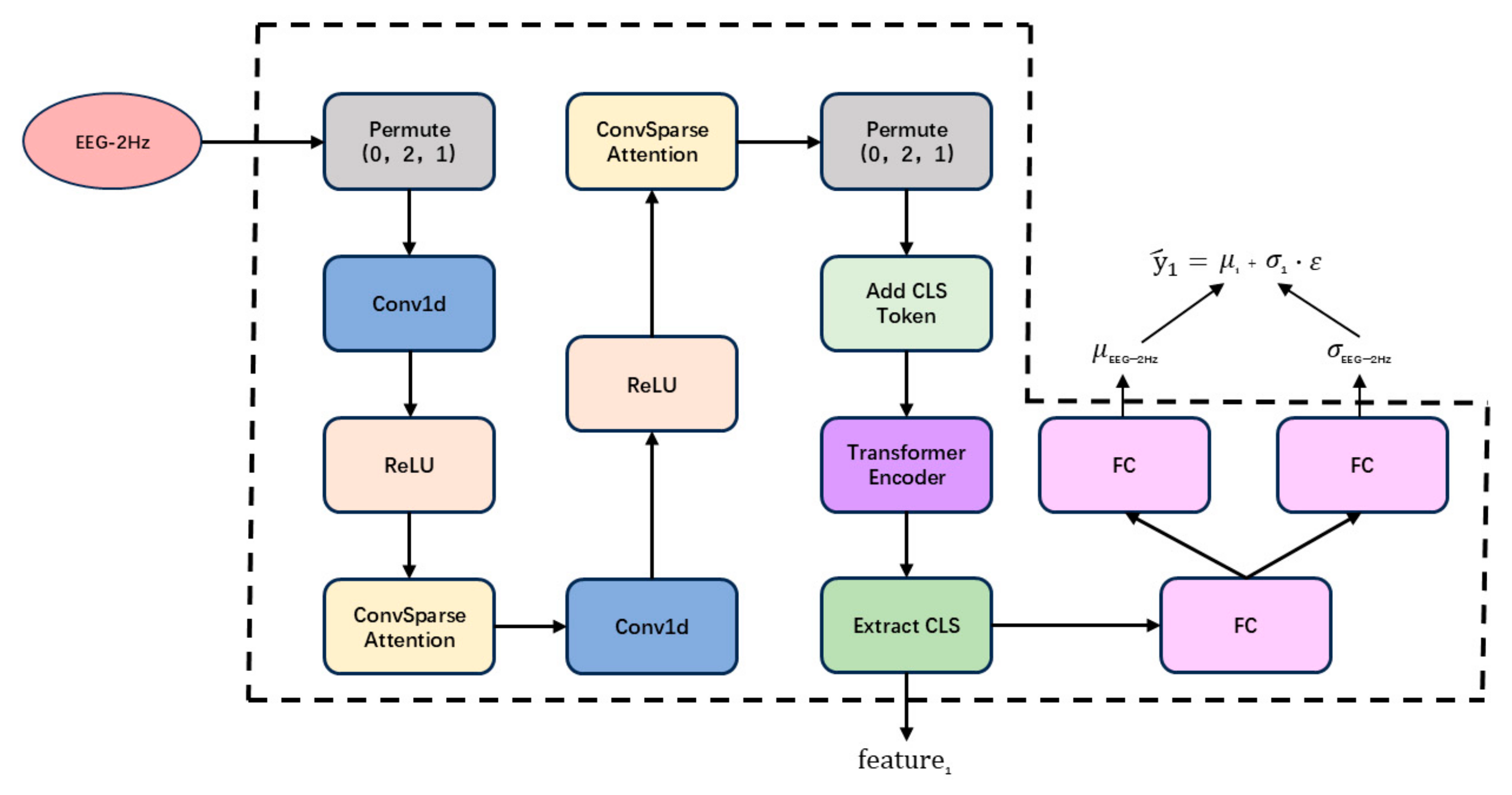

- We design a unimodal feature extraction module that employs causal convolution, ConvSparseAttention, and Transformer encoders to capture spatiotemporal features, while integrating uncertainty modeling to improve prediction reliability.

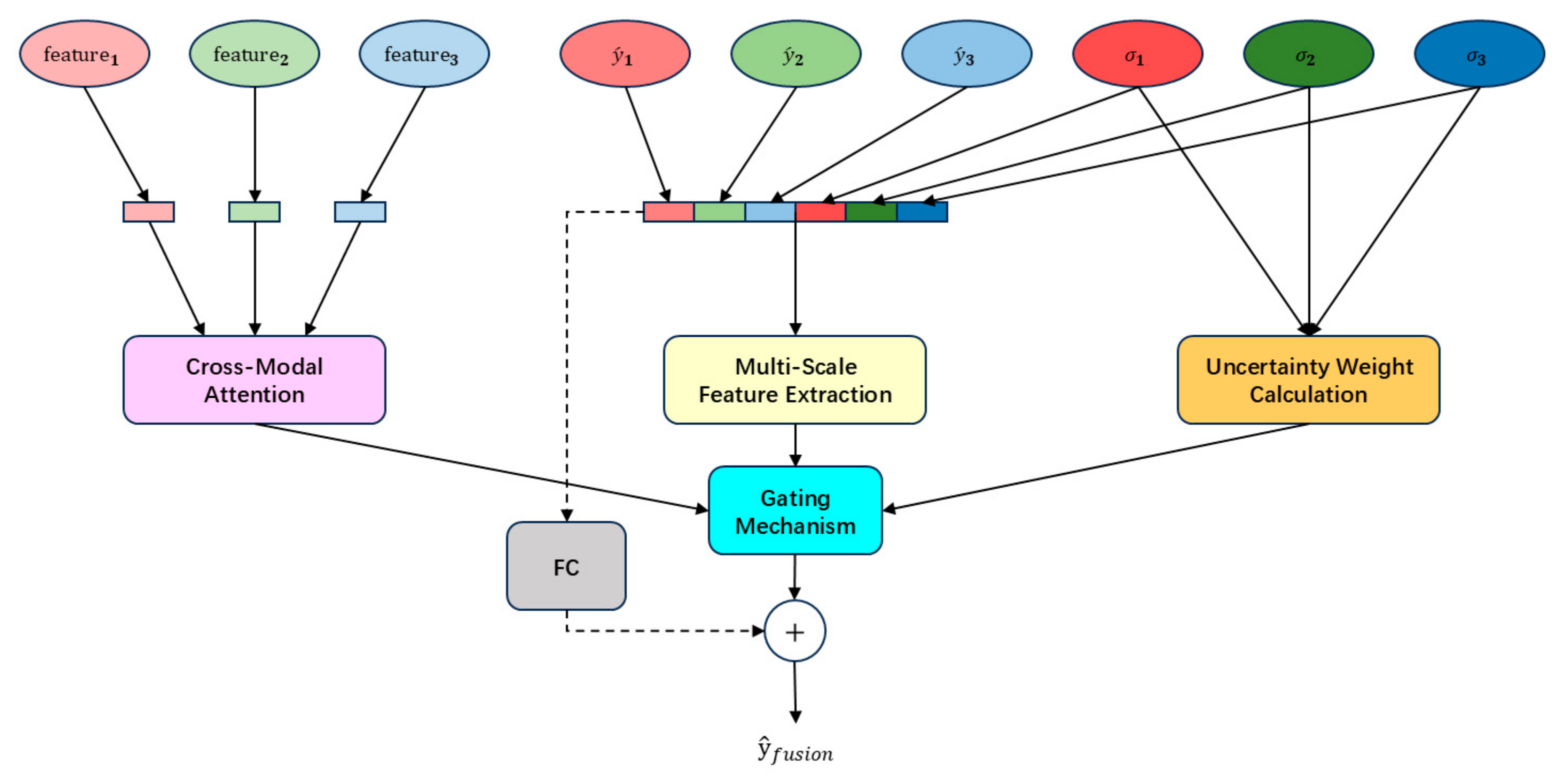

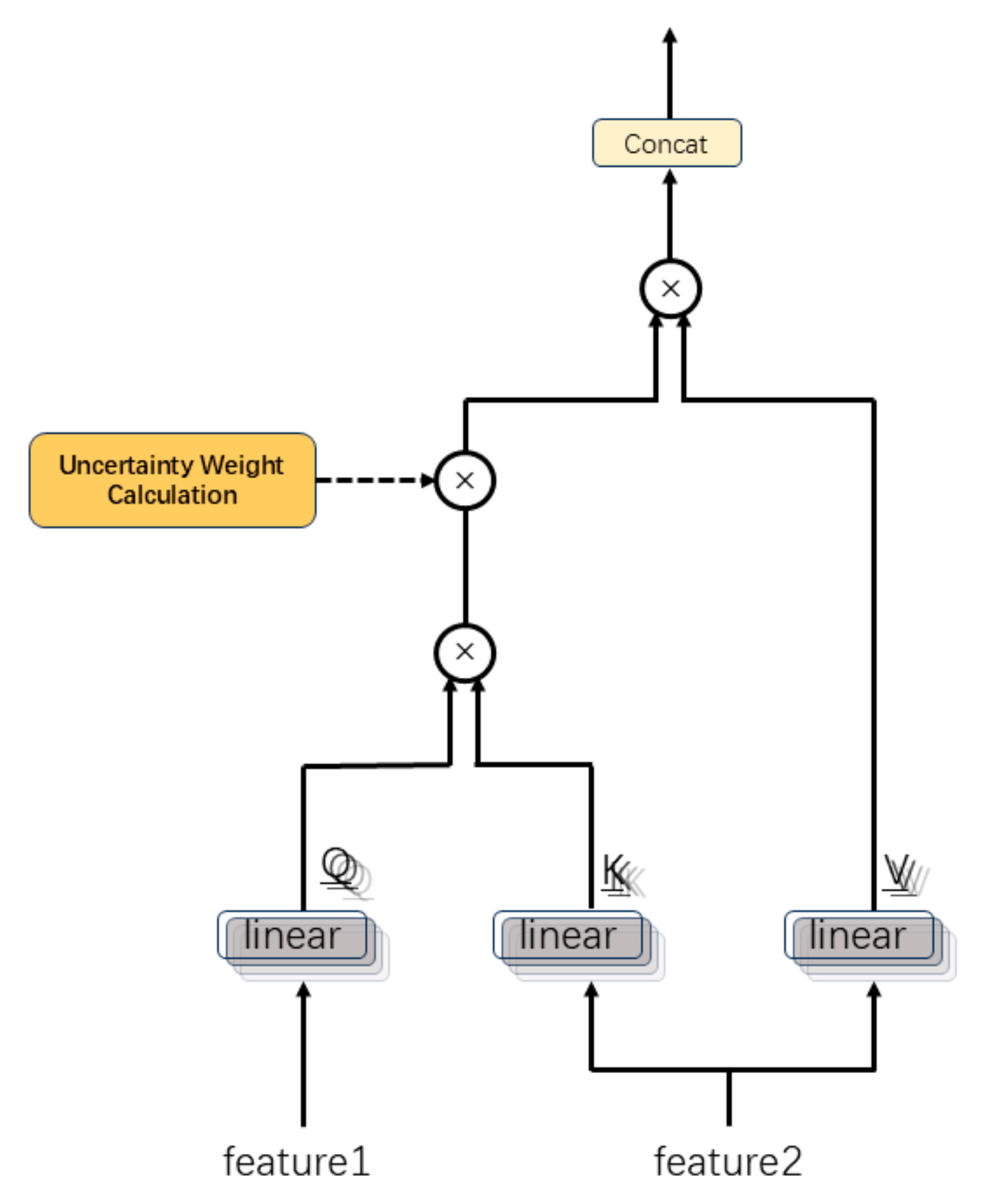

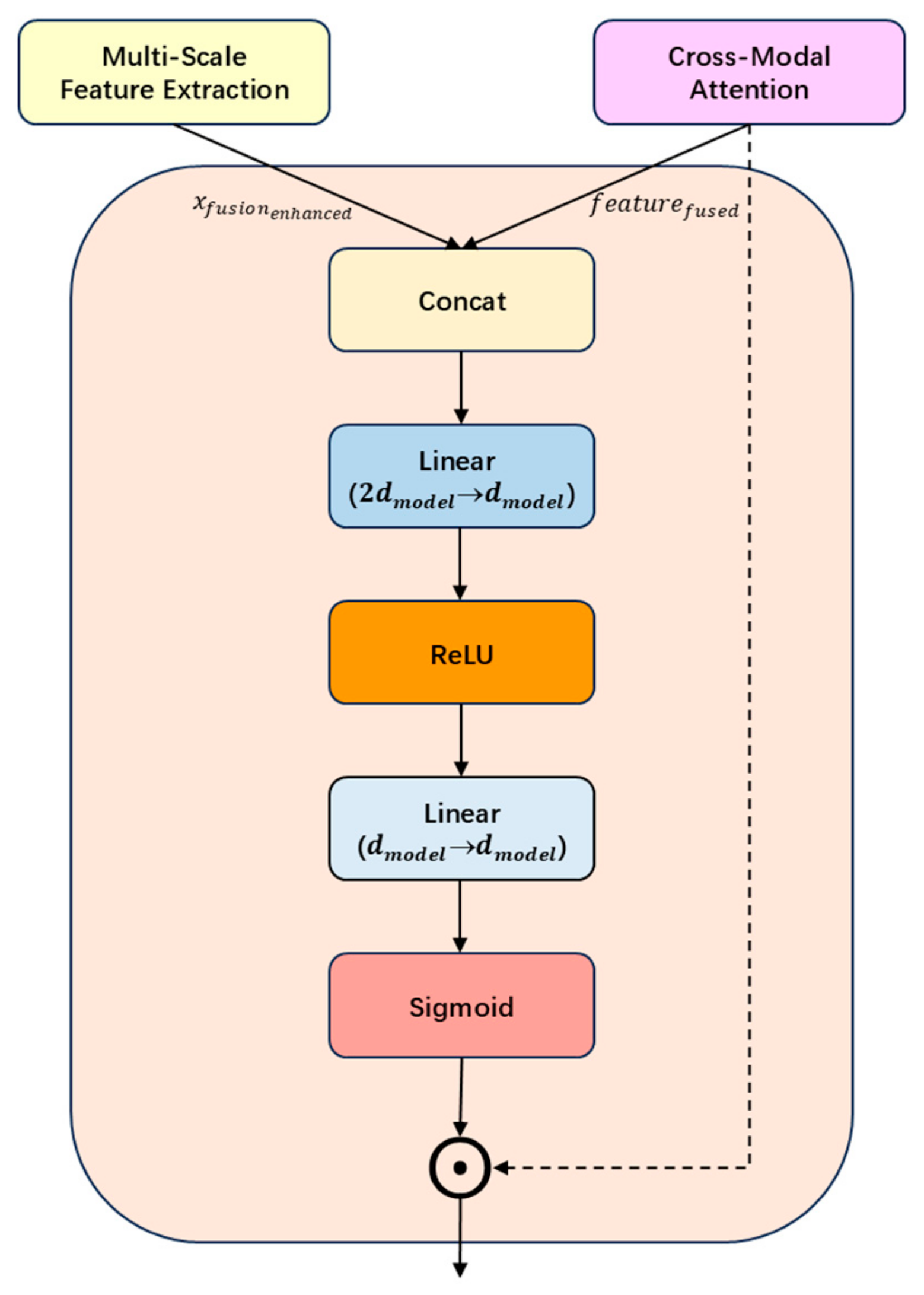

- We fuse the features of the different modalities through a weighted gating mechanism that dynamically adjusts each modality's contribution, adaptively improving fatigue detection performance.
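The unimodal feature extraction module listed among the contributions above starts with causal convolution. The minimal pure-Python sketch below shows only the defining property of that operation: each output sample depends on the current and past inputs, never on future ones. The function name `causal_conv1d`, the `dilation` parameter and the zero left-padding are illustrative assumptions, not the authors' implementation, which would operate on multichannel EEG/EOG feature sequences inside a deep-learning framework.

```python
from typing import List, Sequence


def causal_conv1d(x: Sequence[float], kernel: Sequence[float], dilation: int = 1) -> List[float]:
    """Hypothetical 1-D causal convolution over a single-channel sequence.

    out[t] = sum_i kernel[i] * x[t - i * dilation], where samples before the
    start of the sequence are treated as zeros (implicit left padding).
    The output has the same length as the input, and out[t] never depends
    on any x[t'] with t' > t.
    """
    if dilation < 1:
        raise ValueError("dilation must be a positive integer")
    out: List[float] = []
    for t in range(len(x)):
        acc = 0.0
        for i, w in enumerate(kernel):
            j = t - i * dilation  # index of the past sample weighted by kernel[i]
            if j >= 0:  # j < 0 falls in the zero padding and contributes nothing
                acc += w * x[j]
        out.append(acc)
    return out
```

For example, `causal_conv1d([1, 2, 3, 4], [0.5, 0.5])` returns `[0.5, 1.5, 2.5, 3.5]`: every output averages the current sample with the previous one. Stacking such layers with growing dilation widens the receptive field while keeping outputs aligned in time, which is the usual motivation for causal convolution in temporal models.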
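The weighted gating fusion and the uncertainty modeling named in the contributions above can be illustrated with a hypothetical scheme. The sketch assumes that each modality branch emits a feature vector, a scalar gate logit and a predicted log-variance (the aleatoric-uncertainty parameterization of Kendall and Gal [24]); the exact gating formula used in TMU-Net is not given here, so the function `uncertainty_gated_fusion` and its inputs are assumptions for illustration only.

```python
import math
from typing import Dict, List, Tuple


def uncertainty_gated_fusion(
    features: Dict[str, List[float]],
    gate_logits: Dict[str, float],
    log_vars: Dict[str, float],
) -> Tuple[List[float], Dict[str, float]]:
    """Hypothetical uncertainty-aware gated fusion of modality features.

    Each modality's score is its gate logit minus its predicted log-variance,
    so a modality the model is less certain about receives a smaller weight.
    The weights come from a softmax over modalities (non-negative, sum to 1),
    and the fused vector is the weighted sum of the modality features.
    """
    if not features:
        raise ValueError("at least one modality is required")
    names = list(features)
    dim = len(features[names[0]])
    if any(len(features[n]) != dim for n in names):
        raise ValueError("all modality features must have the same dimension")

    scores = [gate_logits[n] - log_vars[n] for n in names]
    peak = max(scores)  # subtract the max score for a numerically stable softmax
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = {n: e / total for n, e in zip(names, exps)}

    fused = [sum(weights[n] * features[n][i] for n in names) for i in range(dim)]
    return fused, weights
```

Because exp(g − log σ²) = exp(g)/σ², subtracting the log-variance inside the softmax amounts to inverse-variance weighting of the gate scores before normalization. Modality names such as `EEG_2HZ`, `EEG_5BANDS` and `EOG` can serve as the dictionary keys.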

2. Methodology

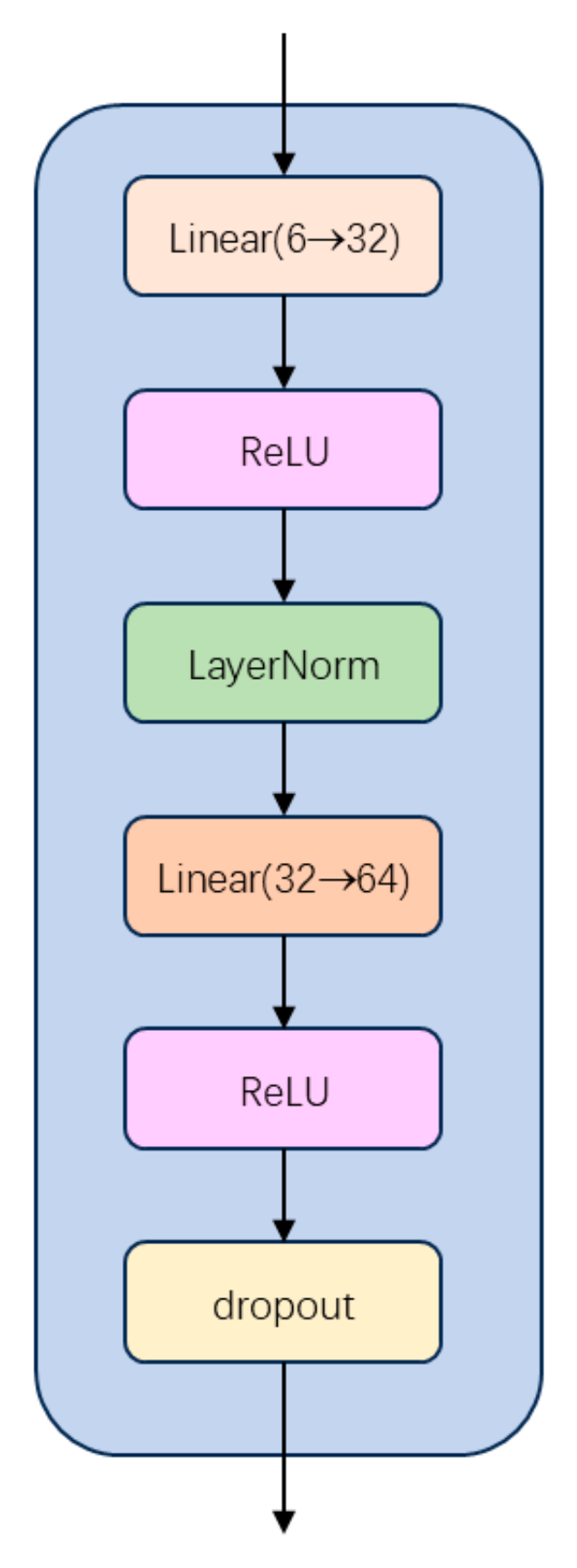

2.1. Unimodal Feature Extraction Module

2.2. Multimodal Feature Fusion Module

2.3. Design of the Model Loss Function

3. Experiments

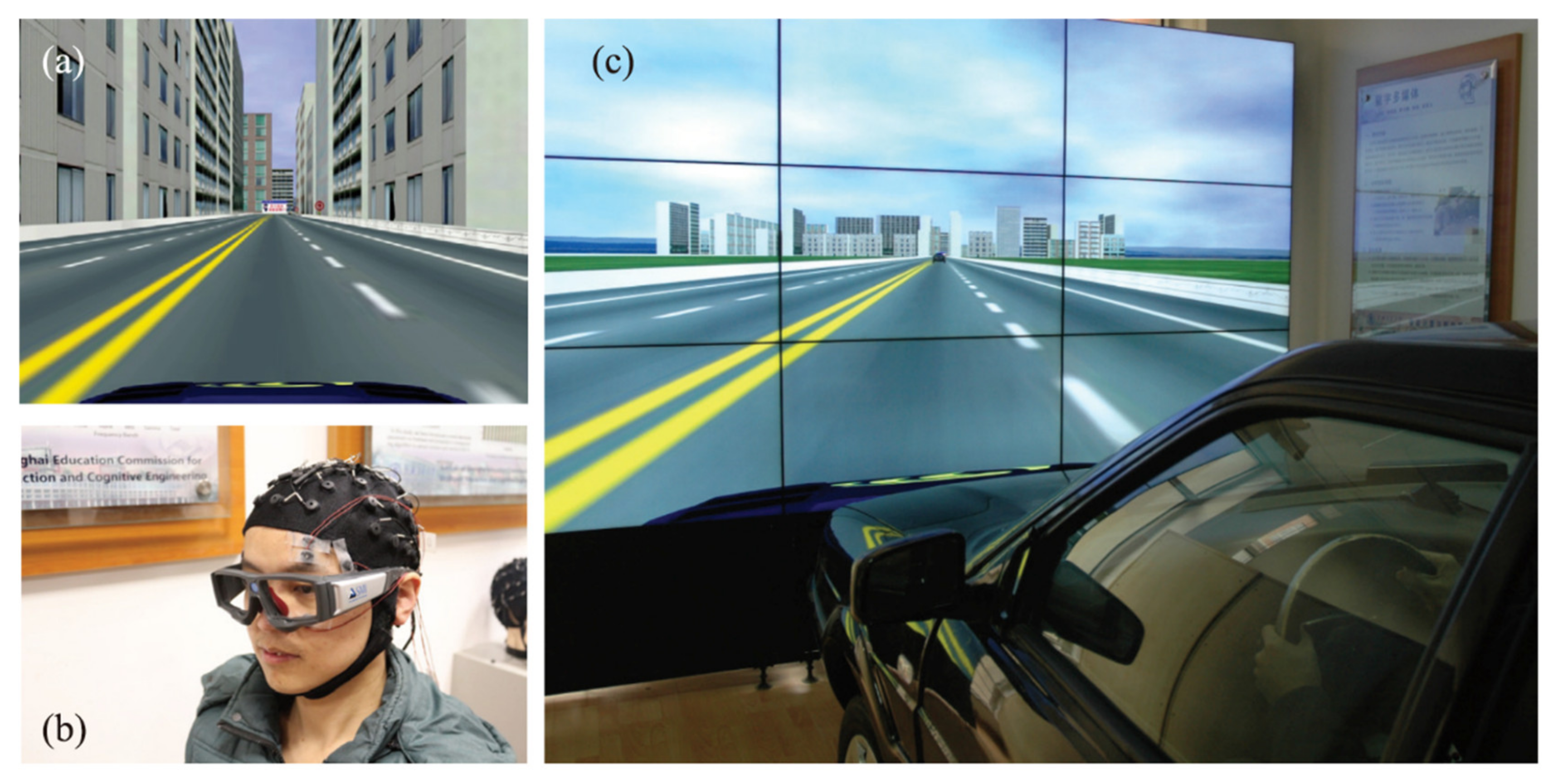

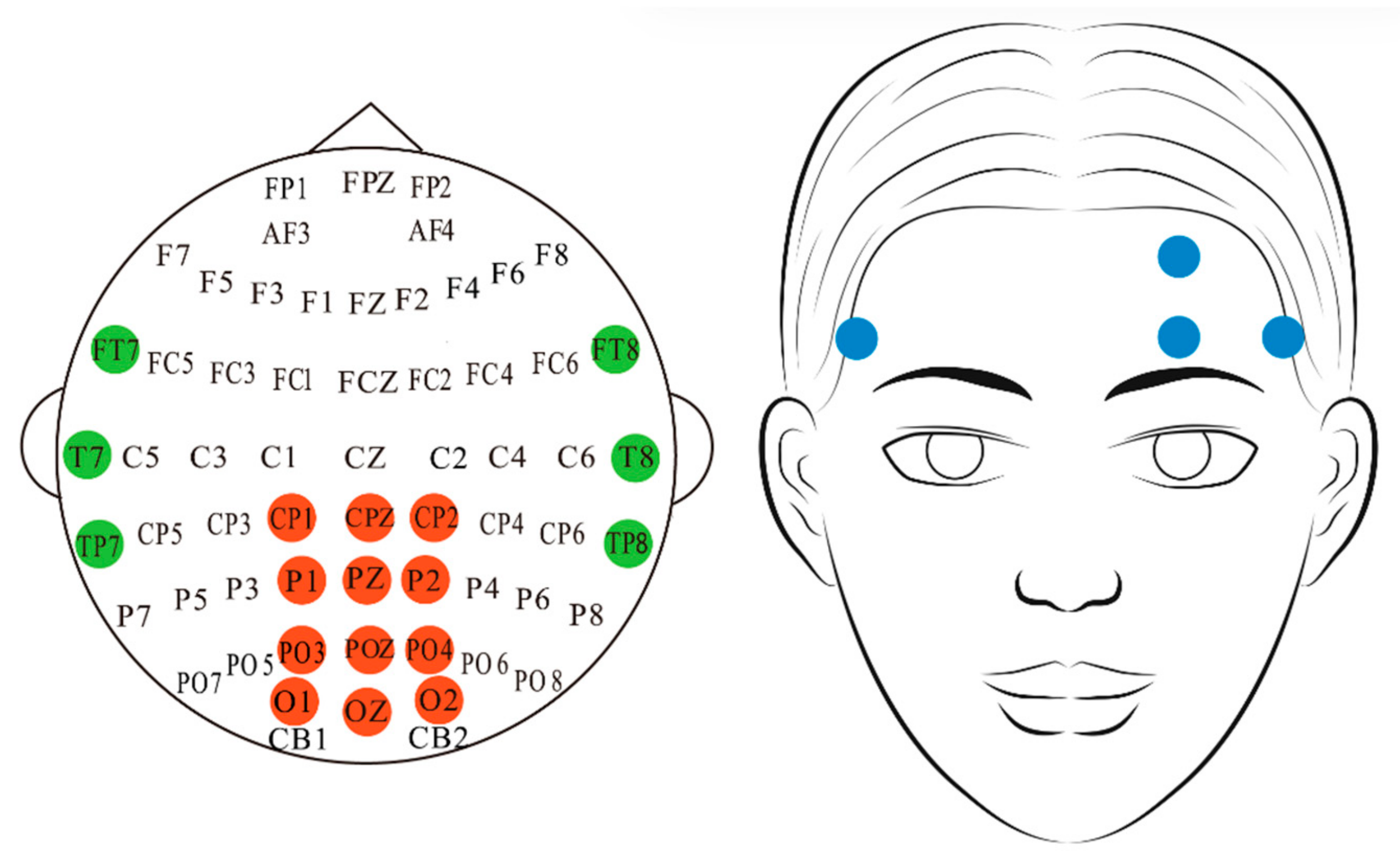

3.1. Dataset and Preprocessing

3.2. Parameters

3.3. Experimental Results

3.3.1. Fatigue Detection Performance

3.3.2. Performance Comparison

3.3.3. Comparison Between Unimodal and Multimodal Approaches

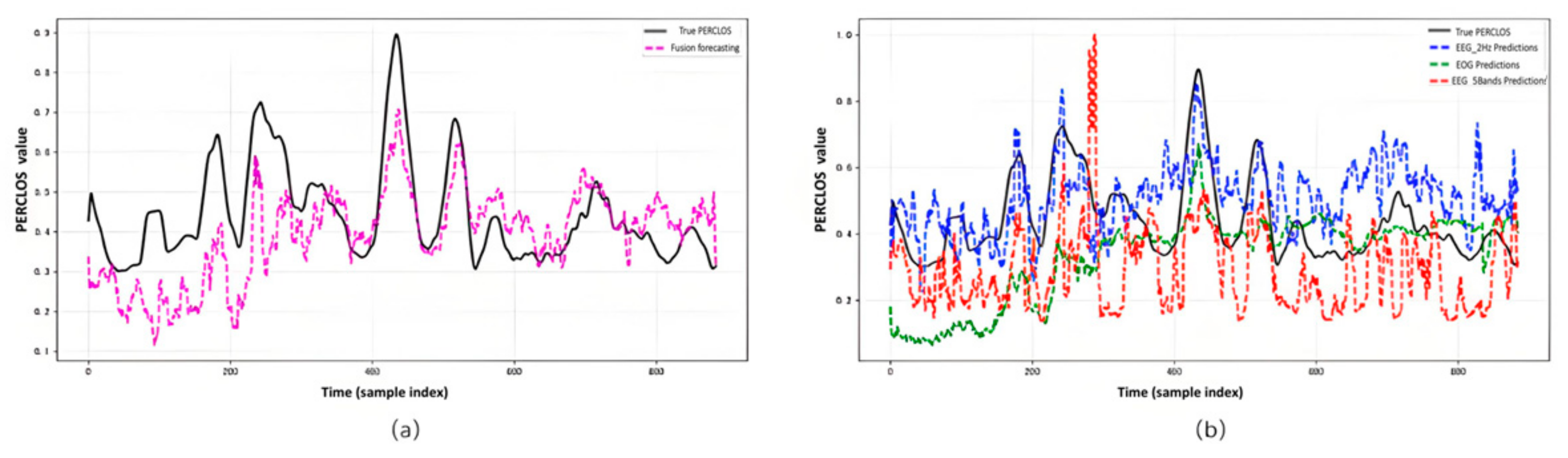

3.3.4. Temporal Prediction Results

3.3.5. Comparative Study on PERCLOS Prediction

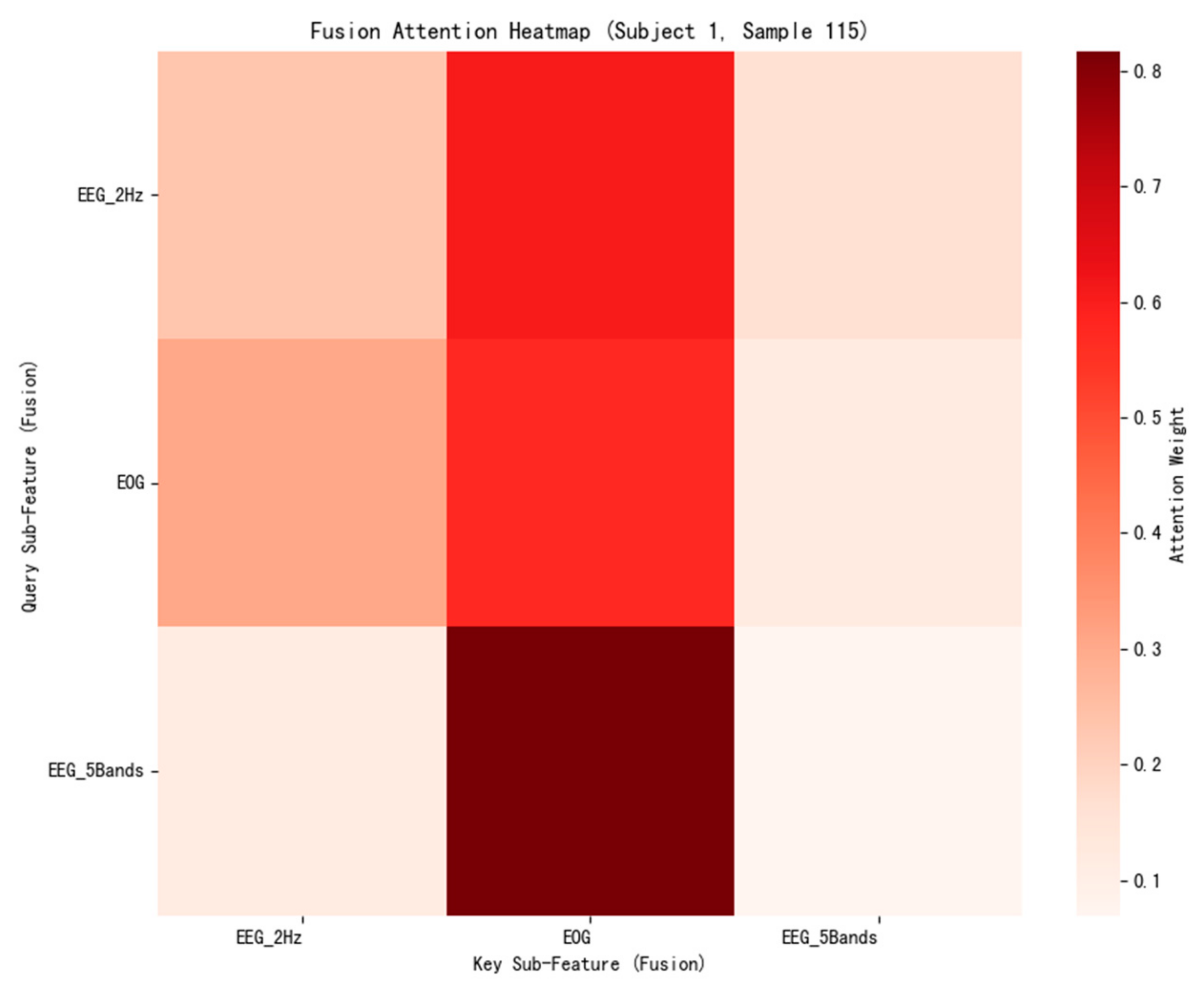

3.3.6. Attention Mechanism Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. World Health Organization. Global Status Report on Road Safety 2018; World Health Organization: Geneva, Switzerland, 2019.
2. Jia, Z.; Xiao, H.; Ji, P. End-to-end fatigue driving EEG signal detection model based on improved temporal-graph convolution network. Comput. Biol. Med. 2023, 152, 106431.
3. Lv, C.; Nian, J.; Xu, Y.; Song, B. Compact vehicle driver fatigue recognition technology based on EEG signal. IEEE Trans. Intell. Transp. Syst. 2022, 23, 19753–19759.
4. Gao, D.; Wang, K.; Wang, M.; Zhou, J.; Zhang, Y. SFT-Net: A network for detecting fatigue from EEG signals by combining 4D feature flow and attention mechanism. IEEE J. Biomed. Health Inform. 2024, 28, 4444–4455.
5. Yang, L.; Guan, W.; Ma, R.; Li, X. Comparison among driving state prediction models for car-following condition based on EEG and driving features. Accident Anal. Prev. 2019, 133, 105296.
6. Qu, Y.; Hu, H.; Liu, J.; Zhang, Z.; Li, Y.; Ge, X. Driver state monitoring technology for conditionally automated vehicles: Review and future prospects. IEEE Trans. Instrum. Meas. 2023, 72, 3000920.
7. Duchowski, A.T. Eye Tracking Methodology: Theory and Practice, 2nd ed.; Springer: London, UK, 2017.
8. Quddus, A.; Zandi, A.S.; Prest, L.; Comeau, F.J.E. Using long short term memory and convolutional neural networks for driver drowsiness detection. Accident Anal. Prev. 2021, 156, 106107.
9. Knapik, M.; Cyganek, B. Driver’s fatigue recognition based on yawn detection in thermal images. Neurocomputing 2019, 338, 274–292.
10. Min, J.; Cai, M.; Gou, C.; Xiong, C.; Yao, X. Fusion of forehead EEG with machine vision for real-time fatigue detection in an automatic processing pipeline. Neural Comput. Appl. 2023, 35, 8859–8872.
11. Dinges, D.F.; Grace, R. PERCLOS: A Valid Psychophysiological Measure of Alertness as Assessed by Psychomotor Vigilance. 1998. Available online: https://rosap.ntl.bts.gov/view/dot/113 (accessed on 30 July 2025).
12. Wierwille, W.W.; Ellsworth, L.A. Evaluation of driver drowsiness by trained raters. Accident Anal. Prev. 1994, 26, 571–581.
13. Bergasa, L.M.; Nuevo, J.; Sotelo, M.A.; Barea, R.; Lopez, M.E. Real-time system for monitoring driver vigilance. IEEE Trans. Intell. Transp. Syst. 2006, 7, 63–77.
14. Ji, Q.; Zhu, Z.; Lan, P. Real-time nonintrusive monitoring and prediction of driver fatigue. IEEE Trans. Veh. Technol. 2004, 53, 1052–1068.
15. Garcia, I.; Bronte, S.; Bergasa, L.M.; Almazán, J.; Yebes, J. Vision-based drowsiness detection for real driving conditions. In Proceedings of the 2012 IEEE Intelligent Vehicles Symposium, Madrid, Spain, 3–7 June 2012; pp. 618–623.
16. Shahbakhti, M.; Beiramvand, M.; Rejer, I.; Augustyniak, P.; Broniec-Wójcik, A.; Wierzchon, M.; Marozas, V. Simultaneous eye blink characterization and elimination from low-channel prefrontal EEG signals enhances driver drowsiness detection. IEEE J. Biomed. Health Inform. 2022, 26, 1001–1012.
17. Zheng, W.L.; Lu, B.L. A multimodal approach to estimating vigilance using EEG and forehead EOG. J. Neural Eng. 2017, 14, 026017.
18. Zheng, W.L.; Zhu, J.W.; Lu, B.L. Multimodal vigilance estimation using deep learning. IEEE Trans. Cybern. 2022, 52, 5296–5308.
19. Jiang, W.B.; Liu, X.H.; Zheng, W.L.; Lu, B.L. Multimodal adaptive emotion transformer with flexible modality inputs on a novel dataset with continuous labels. In Proceedings of the 31st ACM International Conference on Multimedia, New York, NY, USA, 29 October–3 November 2023; pp. 5975–5984.
20. Luo, Y.; Lu, B.L. Wasserstein-distance-based multi-source adversarial domain adaptation for emotion recognition and vigilance estimation. In Proceedings of the 2021 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Houston, TX, USA, 9–12 December 2021; pp. 1424–1428.
21. Cheng, X.; Wei, W.; Du, C.; Qiu, S.; Tian, S.; Ma, X.; He, H. VigilanceNet: Decouple intra- and inter-modality learning for multimodal vigilance estimation. In Proceedings of the 30th ACM International Conference on Multimedia, New York, NY, USA, 10–14 October 2022; pp. 209–217.
22. Jiang, H.; Lu, Z. Visual Grounding for Object-Level Generalization in Reinforcement Learning. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 55–72.
23. Huo, X.; Zheng, W.L.; Lu, B.L. Real-time driver fatigue detection using multi-modal physiological signals. IEEE Trans. Intell. Transp. Syst. 2020, 21, 3356–3368.
24. Kendall, A.; Gal, Y. What uncertainties do we need in Bayesian deep learning for computer vision? Adv. Neural Inf. Process. Syst. 2017, 30, 5574–5584.
25. Ma, B.Q.; Li, H.; Luo, Y.; Lu, B.L. Depersonalized cross-subject vigilance estimation with adversarial domain generalization. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; pp. 1–8.
26. Ko, W.; Oh, K.; Jeon, E.; Suk, H. VIGNet: A deep convolutional neural network for EEG-based driver vigilance estimation. In Proceedings of the 2020 8th International Winter Conference on Brain-Computer Interface (BCI), Gangwon, Republic of Korea, 26–28 February 2020; pp. 1–3.
27. Ganin, Y.; Ustinova, E.; Ajakan, H.; Germain, P.; Larochelle, H.; Laviolette, F.; Marchand, M.; Lempitsky, V. Domain-adversarial training of neural networks. J. Mach. Learn. Res. 2016, 17, 1–35.
28. Zhu, Y.; Zhuang, F.; Wang, D. Aligning domain-specific distribution and classifier for cross-domain classification from multiple sources. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 5989–5996.
29. Gou, M.; Yin, H.L.; Lu, B.L.; Zheng, W.L. Multi-modal adversarial regressive transformer for cross-subject fatigue detection. In Proceedings of the 2024 46th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 15–19 July 2024; pp. 1–4.
30. Boqun, M. Cross-Subject Emotion Recognition and Fatigue Detection Based on Adversarial Domain Generalization. Master’s Thesis, Shanghai Jiao Tong University, Shanghai, China, 2019.
31. Dinges, D.F.; Mallis, M.M.; Maislin, G.; Powell, J.W. Evaluation of Techniques for Ocular Measurement as an Index of Fatigue and as the Basis for Alertness Management. 1998. Available online: https://rosap.ntl.bts.gov/view/dot/2518 (accessed on 30 July 2025).
32. Shi, J.X.; Wang, K. Fatigue driving detection method based on time-space-frequency features of multimodal signals. Biomed. Signal Process. Control 2023, 84, 85–93.
33. Alghanim, M.; Alotaibi, B.; Ahmad, I.; Dias, S.B.; Althobaiti, T.; Alzahrani, M.; Almutairi, B. A hybrid deep neural network approach to recognize driving fatigue based on EEG signals. Int. J. Intell. Syst. 2024, 2024, 4721863.
34. Nemcová, A.; Slaninová, K.; Justrová, A.; Šimková, K.; Smíšek, R.; Vítek, M. Optimized driver fatigue detection method using multimodal neural networks. Sci. Rep. 2025, 15, 55723.
35. Brooks, J.O.; Gangadharaiah, R.; Rosopa, E.B.; Pool, R.; Jenkins, C.; Rosopa, P.J.; Mims, L.; Schwambach, B.; Melnrick, K. Using the functional object detection—Advanced driving simulator scenario to examine task combinations and age-based performance differences: A case study. Appl. Sci. 2024, 14, 11892.

| Subject | RMSE | MAE |
|---|---|---|
| 1 | 0.118939 | 0.096812 |
| 2 | 0.110041 | 0.089023 |
| 3 | 0.230304 | 0.191571 |
| 4 | 0.169818 | 0.143578 |
| 5 | 0.101254 | 0.076963 |
| 6 | 0.128247 | 0.098166 |
| 7 | 0.116746 | 0.087638 |
| 8 | 0.161194 | 0.114130 |
| 9 | 0.097768 | 0.076226 |
| 10 | 0.046472 | 0.033936 |
| 11 | 0.194315 | 0.165127 |
| 12 | 0.255315 | 0.222368 |
| 13 | 0.361604 | 0.330456 |
| 14 | 0.157339 | 0.103876 |
| 15 | 0.066419 | 0.048681 |
| 16 | 0.149974 | 0.126567 |
| 17 | 0.198523 | 0.168296 |
| 18 | 0.138114 | 0.121762 |
| 19 | 0.073681 | 0.057760 |
| 20 | 0.140626 | 0.112973 |
| 21 | 0.135539 | 0.121048 |
| 22 | 0.180230 | 0.159634 |
| 23 | 0.131308 | 0.100847 |
| Avg | 0.150599 | 0.123802 |
| Std | 0.067982 | 0.063950 |
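The Avg and Std rows can be recomputed from the 23 per-subject values. The short sketch below assumes the Std row is the sample standard deviation (n − 1 denominator); with the population formula (n denominator) both spreads come out slightly smaller, so the table appears to use the sample form. The values are transcribed from the table, and the helper name `summarize` is illustrative.

```python
import statistics

# Per-subject values transcribed from the table above (subjects 1-23, in order).
RMSE = [
    0.118939, 0.110041, 0.230304, 0.169818, 0.101254, 0.128247, 0.116746, 0.161194,
    0.097768, 0.046472, 0.194315, 0.255315, 0.361604, 0.157339, 0.066419, 0.149974,
    0.198523, 0.138114, 0.073681, 0.140626, 0.135539, 0.180230, 0.131308,
]
MAE = [
    0.096812, 0.089023, 0.191571, 0.143578, 0.076963, 0.098166, 0.087638, 0.114130,
    0.076226, 0.033936, 0.165127, 0.222368, 0.330456, 0.103876, 0.048681, 0.126567,
    0.168296, 0.121762, 0.057760, 0.112973, 0.121048, 0.159634, 0.100847,
]


def summarize(values):
    """Return the mean and the sample standard deviation (n - 1 denominator)."""
    return statistics.mean(values), statistics.stdev(values)


summary = {name: summarize(vals) for name, vals in (("RMSE", RMSE), ("MAE", MAE))}
for name, (mean, sd) in summary.items():
    print(f"{name}: {mean:.6f} +/- {sd:.6f}")
```

Run as is, the script should reproduce the Avg and Std rows to within rounding of the last printed digit.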

| Methods | RMSE |
|---|---|
| SVR | 0.2071 ± 0.2076 |
| MLP | 0.1942 ± 0.1822 |
| VIGNet [26] | 0.1876 ± 0.1903 |
| DG-DANN [27] | 0.1543 ± 0.1490 |
| MFSAN [28] | 0.1780 ± 0.1559 |
| MART (w/o Domain Generalization) [29] | 0.1556 ± 0.1311 |
| DResNet [30] | 0.1569 ± 0.0735 |
| TMU-Net | 0.1506 ± 0.0680 |

| Modality | RMSE | MAE |
|---|---|---|
| EEG_2HZ | 0.226376 ± 0.106922 | 0.184766 ± 0.098614 |
| EOG | 0.158179 ± 0.082630 | 0.122523 ± 0.070814 |
| EEG_5BANDS | 0.267976 ± 0.131726 | 0.224698 ± 0.119818 |
| EEG_2HZ + EOG | 0.154578 ± 0.072935 | 0.124668 ± 0.066955 |
| EEG_2HZ + EEG_5BANDS | 0.221911 ± 0.089878 | 0.188159 ± 0.083861 |
| EOG + EEG_5BANDS | 0.156201 ± 0.070616 | 0.126112 ± 0.068813 |
| All Modalities | 0.150599 ± 0.067982 | 0.123802 ± 0.063950 |

| Model Configuration | RMSE | MAE |
|---|---|---|
| No Cross-Modal Attention | 0.157 ± 0.073 | 0.129 ± 0.069 |
| No ConvSparseAttention | 0.179 ± 0.126 | 0.145 ± 0.112 |
| No Multi-Scale Feature Extraction | 0.154 ± 0.067 | 0.126 ± 0.062 |
| No Uncertainty Weight Calculation | 0.155 ± 0.075 | 0.127 ± 0.070 |
| Full Model | 0.151 ± 0.068 | 0.124 ± 0.064 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, Y.; Xu, X.; Du, Y.; Zhang, N. TMU-Net: A Transformer-Based Multimodal Framework with Uncertainty Quantification for Driver Fatigue Detection. Sensors 2025, 25, 5364. https://doi.org/10.3390/s25175364

Zhang Y, Xu X, Du Y, Zhang N. TMU-Net: A Transformer-Based Multimodal Framework with Uncertainty Quantification for Driver Fatigue Detection. Sensors. 2025; 25(17):5364. https://doi.org/10.3390/s25175364

Chicago/Turabian Style: Zhang, Yaxin, Xuegang Xu, Yuetao Du, and Ningchao Zhang. 2025. "TMU-Net: A Transformer-Based Multimodal Framework with Uncertainty Quantification for Driver Fatigue Detection" Sensors 25, no. 17: 5364. https://doi.org/10.3390/s25175364

APA Style: Zhang, Y., Xu, X., Du, Y., & Zhang, N. (2025). TMU-Net: A Transformer-Based Multimodal Framework with Uncertainty Quantification for Driver Fatigue Detection. Sensors, 25(17), 5364. https://doi.org/10.3390/s25175364