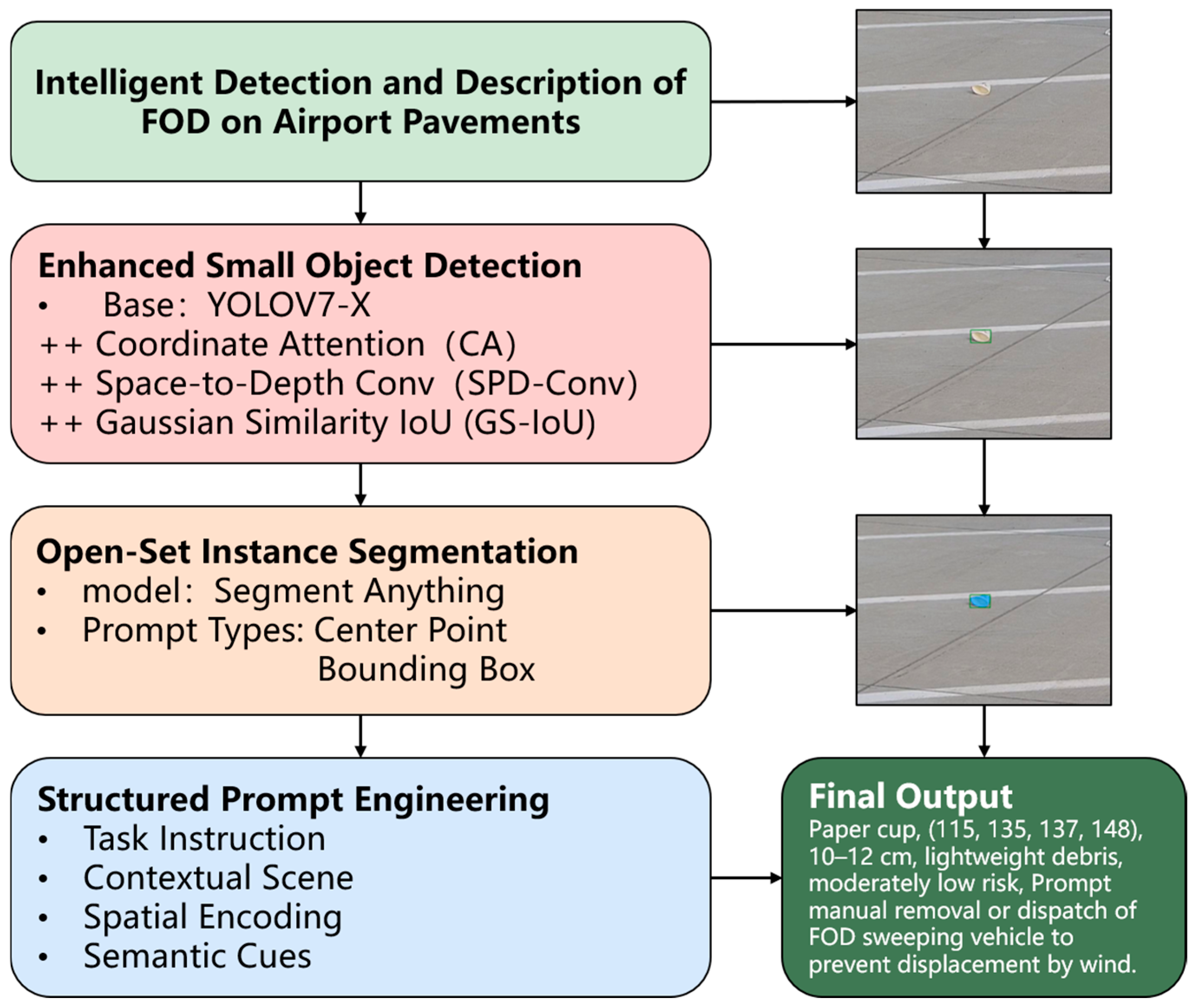

4.2.1. YOLO-SAM Segmentation Results

Figure 7 presents the segmentation results of the SAM when using YOLO-detected center points as prompt inputs. As shown in Figure 7a, when the image is divided into smaller sub-images, the center points of foreign objects are detected with higher accuracy. Under such conditions, as illustrated in Figure 7b, the SAM successfully segments all foreign objects with well-defined boundaries. However, some runway markings are mistakenly labeled as foreground, indicating partial misclassification. In contrast, as observed in Figure 7c, segmentation performance in large-scale scenes deteriorates despite accurate center point prompts. This decline is primarily due to the high density of foreign objects and the excessive number of prompt points, which cause the SAM to generate a large number of overlapping masks. These results suggest that inputting cropped sub-images with reduced object density allows the model to capture more detailed features, thereby improving segmentation accuracy. Therefore, when using center points as prompts for the SAM, it is advisable to preprocess input images by dividing them into appropriately sized sub-images to enhance segmentation precision.
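As an illustration of the sub-image preprocessing recommended here, the following minimal Python sketch tiles a large image into overlapping sub-images and maps a detected center point back to full-image coordinates. The tile size, the overlap, and the helper names `iter_tiles` and `to_global` are illustrative assumptions; the tiling parameters used in the experiments are not stated in this section.

```python
from typing import Iterator, List, Tuple

import numpy as np


def _starts(length: int, tile: int, step: int) -> List[int]:
    """Start offsets along one axis so that the tiles cover [0, length)."""
    if length <= tile:
        return [0]
    starts = list(range(0, length - tile, step))
    starts.append(length - tile)  # last tile sits flush with the image border
    return starts


def iter_tiles(
    image: np.ndarray, tile: int = 512, overlap: int = 64
) -> Iterator[Tuple[int, int, np.ndarray]]:
    """Yield (x0, y0, sub_image) for overlapping tiles covering the image.

    `tile` and `overlap` are assumed values, not settings from the paper.
    """
    if not 0 <= overlap < tile:
        raise ValueError("overlap must satisfy 0 <= overlap < tile")
    h, w = image.shape[:2]
    step = tile - overlap
    for y0 in _starts(h, tile, step):
        for x0 in _starts(w, tile, step):
            yield x0, y0, image[y0:y0 + tile, x0:x0 + tile]


def to_global(point: Tuple[float, float], x0: int, y0: int) -> Tuple[float, float]:
    """Map a center point detected inside a tile back to full-image coordinates."""
    px, py = point
    return px + x0, py + y0
```

Each sub-image can then be passed to the detector and the SAM separately, with the resulting center points shifted back by the tile origin before the masks are merged.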

Figure 8 illustrates the segmentation results of the SAM when using YOLO-detected bounding boxes as prompt inputs. As shown in Figure 8a, when the image is divided into smaller patches, the detected bounding boxes of foreign objects are relatively accurate. With precise bounding box prompts, the SAM is able to segment all foreign objects with clear and complete boundaries, as depicted in Figure 8b, yielding better results than center point prompts. When inaccurate bounding boxes are used as inputs, the segmentation performance varies depending on the size of the bounding boxes. As seen in Figure 8c, if the bounding box is too small, the SAM performs segmentation strictly within the box, leaving foreign objects outside the box undetected. However, when a larger bounding box is provided, the model still manages to accurately segment the target. These observations suggest that when the bounding box location is uncertain, applying moderate enlargement can effectively compensate for localization errors and improve segmentation reliability. Therefore, it is recommended to appropriately enlarge the bounding boxes before inputting them into the SAM to ensure accurate segmentation of foreign objects.

The outputs of the YOLO model do not necessarily correspond to the precise center points and anchor boxes of the targets. To further investigate the influence of center point displacement and anchor box scale on the segmentation performance of the SAM, the YOLO-detected results were transformed by applying center point offsets of 0%, 20%, 40%, and 80%, as well as anchor box scaling factors of 50%, 100%, 150%, and 200%. These combinations of offset and scaled anchor boxes were then used as the prompts input to the SAM for foreign object segmentation. The segmentation performance of the SAM under different combinations was quantitatively evaluated using MPA, MIoU, and F1 Score metrics. The results are summarized in Table 7.
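The perturbation protocol can be sketched as follows. This is a minimal illustration rather than the exact procedure used in the experiments: it assumes that the offset is expressed as a fraction of the box width and height, that the shift is applied along a fixed direction, and that the perturbed box is clipped to the image. The function name `perturb_box` and its parameters are hypothetical.

```python
from itertools import product
from typing import Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def perturb_box(
    box: Box,
    offset_frac: float = 0.0,
    scale: float = 1.0,
    img_w: Optional[float] = None,
    img_h: Optional[float] = None,
    direction: Tuple[float, float] = (1.0, 0.0),
) -> Box:
    """Shift the box center by a fraction of the box size, then rescale the box.

    Assumption: the offset is relative to the box width/height and is applied
    along `direction`; the paper does not specify how the offset is defined.
    """
    x1, y1, x2, y2 = box
    w, h = x2 - x1, y2 - y1
    cx = (x1 + x2) / 2 + offset_frac * w * direction[0]
    cy = (y1 + y2) / 2 + offset_frac * h * direction[1]
    half_w, half_h = scale * w / 2, scale * h / 2
    nx1, ny1, nx2, ny2 = cx - half_w, cy - half_h, cx + half_w, cy + half_h
    if img_w is not None:  # keep the prompt inside the image horizontally
        nx1, nx2 = max(0.0, nx1), min(float(img_w), nx2)
    if img_h is not None:  # keep the prompt inside the image vertically
        ny1, ny2 = max(0.0, ny1), min(float(img_h), ny2)
    return nx1, ny1, nx2, ny2


# The 4 x 4 grid of offset and scale settings described in the text.
OFFSETS = (0.0, 0.2, 0.4, 0.8)
SCALES = (0.5, 1.0, 1.5, 2.0)
GRID = list(product(OFFSETS, SCALES))
```

For example, `perturb_box((100, 100, 200, 200), offset_frac=0.0, scale=1.5)` enlarges a 100 × 100 box to 150 × 150 around the same center, which corresponds to the 150% setting.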

When the center point offset rate is 0%, anchor box scaling at 100% and 150% yields high segmentation performance with MPA of 96.85, MIoU of 0.982, and F1 Score of 0.983, showing nearly identical results for both scales. However, at 50% scaling, MPA sharply drops to 36.85, MIoU to 0.583, and F1 Score to 0.602, likely due to smaller anchor boxes failing to adequately cover the target under accurate center positioning.

With a 20% center offset, performance declines moderately (MPA 85.24, MIoU 0.875, F1 Score 0.884), while a 150% anchor scaling achieves relatively better results (MPA 88.35, MIoU 0.912, F1 Score 0.932), indicating that increasing anchor size partially compensates for minor center deviations.

At 40% offset, all metrics decrease further, yet 150% scaling still performs best (MPA 82.63, MIoU 0.852, F1 Score 0.865), suggesting larger anchors better adapt to moderate displacement. When offset reaches 80%, performance deteriorates significantly, with the smallest scale (50%) yielding the poorest results (MPA 23.75, MIoU 0.435, F1 Score 0.495). In contrast, 200% scaling improves metrics (MPA 81.84, MIoU 0.832, F1 Score 0.854), as larger anchors more effectively encompass targets despite large offsets.

Overall, segmentation performance declines as the center point offset increases. However, appropriately increasing the anchor box scale can mitigate the negative impact of center displacement. Given the uncertainty of center point accuracy in practice, enlarging YOLO-detected anchor boxes to 150% is recommended as the input prompts to the SAM to enhance segmentation performance.
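For reference, the three metrics can be computed from a predicted and a ground-truth binary mask as sketched below. The definitions are the commonly used ones (class-averaged pixel accuracy and IoU over foreground and background, and foreground F1) and are an assumption here, since the exact formulas are not given in this section. MPA is returned as a percentage and MIoU and F1 as fractions, matching the scales reported above.

```python
from typing import Dict

import numpy as np


def segmentation_metrics(pred: np.ndarray, gt: np.ndarray) -> Dict[str, float]:
    """Compute MPA (%), MIoU, and F1 for a binary foreground/background mask."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    tp = int(np.sum(pred & gt))    # foreground predicted as foreground
    fp = int(np.sum(pred & ~gt))   # background predicted as foreground
    fn = int(np.sum(~pred & gt))   # foreground predicted as background
    tn = int(np.sum(~pred & ~gt))  # background predicted as background

    def safe(num: float, den: float) -> float:
        return num / den if den > 0 else float("nan")

    # Per-class pixel accuracy and IoU, averaged over the classes present.
    acc = [safe(tp, tp + fn), safe(tn, tn + fp)]
    iou = [safe(tp, tp + fp + fn), safe(tn, tn + fp + fn)]
    return {
        "MPA": 100.0 * float(np.nanmean(acc)),
        "MIoU": float(np.nanmean(iou)),
        "F1": safe(2 * tp, 2 * tp + fp + fn),
    }
```

Averaging these values over all test images for each offset and scale setting would give one row of a table such as Table 7.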

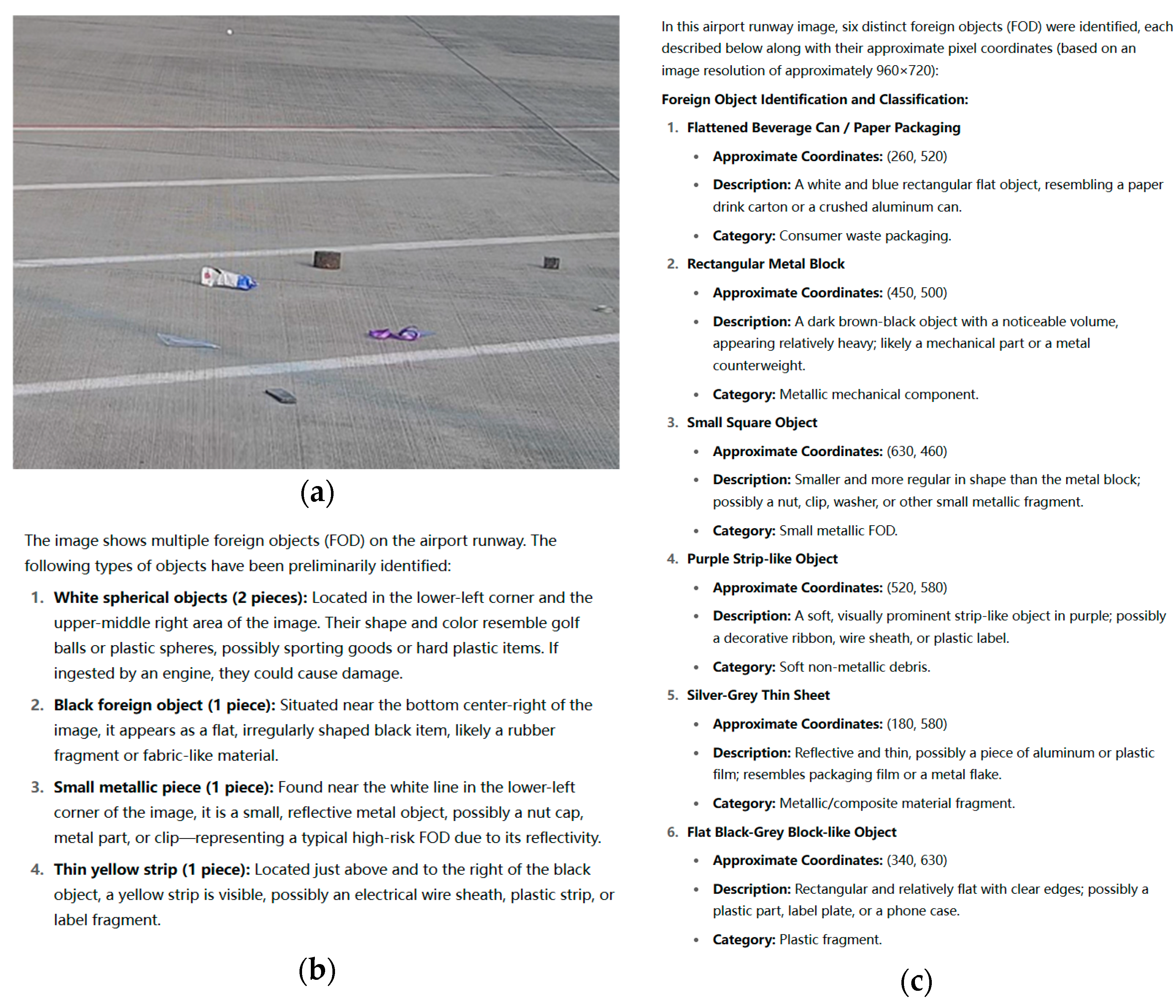

4.2.2. GPT-Based Description Accuracy

The image dataset used in this study originates from the operational image repository of the airport’s foreign object detection system. To ensure realistic applicability and representativeness, 500 images were selected as the test set, each containing 1 to 5 foreign objects of varying categories with their environmental context fully preserved. These images cover diverse typical airport scenarios, such as runway edges, taxiway intersections, and aprons.

Each image is accompanied by manually annotated environmental descriptions, prepared following the Civil Airport Operation Safety Management Regulations (CCAR-140) and the Foreign Object Debris Prevention Management Measures for Transport Airports (AP-140-CA-2022-05). The annotations include information on object location, ground material, lighting conditions, and adjacent facilities, serving as reference standards for subsequent accuracy evaluation.

For prompt setting, a standardized base prompt—“Please describe the environmental information in the image, including ground material, lighting conditions, spatial location, and surrounding facilities”—was used to ensure prompt controllability and evaluation consistency, testing the models’ responsiveness to semantic cues.

Regarding the generation strategy, one complete natural language description was generated per image and compared semantically to its corresponding manual reference. Five evaluation metrics were calculated: description accuracy, detail richness, language fluency, prompt controllability, and average inference time, as shown in Table 8.

The experiments demonstrate that BLIP-2 achieves the best overall performance, excelling in description accuracy and prompt controllability. This indicates its strong capability to accurately comprehend image environmental semantics while flexibly generating target content based on given prompts. BLIP-2 also maintains high language fluency and moderate inference time, making it well suited for high-precision semantic generation tasks. GIT performs best in language fluency and detail richness, producing naturally structured and information-rich texts, which is advantageous for description tasks emphasizing expressive quality, although its inference speed is relatively slower. MiniGPT-4 leads in inference efficiency at 0.9 s per image, making it suitable for applications requiring real-time performance, but its weaker prompt controllability can cause generated content to deviate from the intended context. PaLI shows moderate performance across metrics, balancing language expression and environmental understanding, and is appropriate for deployment in multitask and multilingual scenarios.

Table 9 details the descriptive performance of these four models on foreign object images across varying environments. BLIP-2’s superior accuracy and controllability make it the preferred model for this application, while other models may be selected based on specific trade-offs between performance and efficiency.

4.2.3. Prompt Engineering Ablation

In airport operational environments, foreign objects are typically scattered across runways, taxiways, or adjacent areas with spatial distributions that are inherently uncertain. Without clear ordering and positional encoding, language models may suffer from referential ambiguity, redundant descriptions, or omission errors. Effectively organizing the spatial location information of each detected object is thus a crucial step for generating accurate descriptions and enabling interactive reasoning in multi-object detection and semantic generation tasks. To address this, we propose a spatially ordered foreign object numbering and positional encoding strategy designed to enhance the model’s structural awareness of multiple targets within an image, thereby improving the systematicity and logical clarity of the generated descriptions.

This strategy is based on human visual reading habits, emulating a left-to-right, top-to-bottom ordering to assign unique identifiers to each detected foreign object. Such ordering facilitates the generation of spatially oriented natural language descriptions, such as “the first foreign object,” “the fragment in the upper left corner,” or “the metal object at the far right.” The specific steps include the following:

- (a) Mask bounding box extraction: From the mask results produced by the YOLO-SAM, extract the bounding rectangle for each object, obtaining its upper-left and lower-right pixel coordinates.

- (b) Ordering rule: Sort all detected objects primarily by their $x$ (horizontal) coordinate and secondarily by their $y$ (vertical) coordinate, achieving a left-to-right, top-to-bottom sequence and assigning each object a unique label.

- (c) Position normalization: Normalize pixel coordinates relative to image dimensions as $x' = x / W$, $y' = y / H$, where $W$ and $H$ denote the image width and height, ensuring spatial information consistency across varying image sizes to facilitate model learning and cross-sample alignment.

- (d) Data structure generation: Each foreign object in the image is represented by a triplet consisting of its identifier, spatial location (normalized bounding box), and mask region (image crop or semantic segmentation map). Together, these form a structured spatial prompt accessible to language models.
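Steps (a) to (d) can be sketched in Python as follows. This is a minimal illustration under stated assumptions: masks are binary arrays of the full image size, labels start at 1, and the names `FodObject`, `mask_to_box`, and `encode_objects` are hypothetical rather than taken from the original implementation.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

import numpy as np


@dataclass
class FodObject:
    """Triplet from step (d): identifier, normalized box, and mask region."""
    label: int
    box_norm: Tuple[float, float, float, float]  # (x1, y1, x2, y2) in [0, 1]
    mask: np.ndarray


def mask_to_box(mask: np.ndarray) -> Tuple[int, int, int, int]:
    """Step (a): bounding rectangle (x1, y1, x2, y2) of a binary mask."""
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        raise ValueError("mask contains no foreground pixels")
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())


def encode_objects(masks: Sequence[np.ndarray]) -> List[FodObject]:
    """Steps (b) to (d): order, normalize, and package the detected objects."""
    boxed = [(mask_to_box(m), m) for m in masks]
    # Step (b): sort by the upper-left x coordinate first, then by y.
    boxed.sort(key=lambda item: (item[0][0], item[0][1]))
    objects = []
    for label, ((x1, y1, x2, y2), mask) in enumerate(boxed, start=1):
        h, w = mask.shape[:2]
        # Step (c): divide by the image width and height.
        box_norm = (x1 / w, y1 / h, x2 / w, y2 / h)
        objects.append(FodObject(label, box_norm, mask))
    return objects
```

The resulting list can be serialized (for example, label plus normalized box) and placed in the spatial part of the prompt, while the mask or its crop is passed alongside as the visual input.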

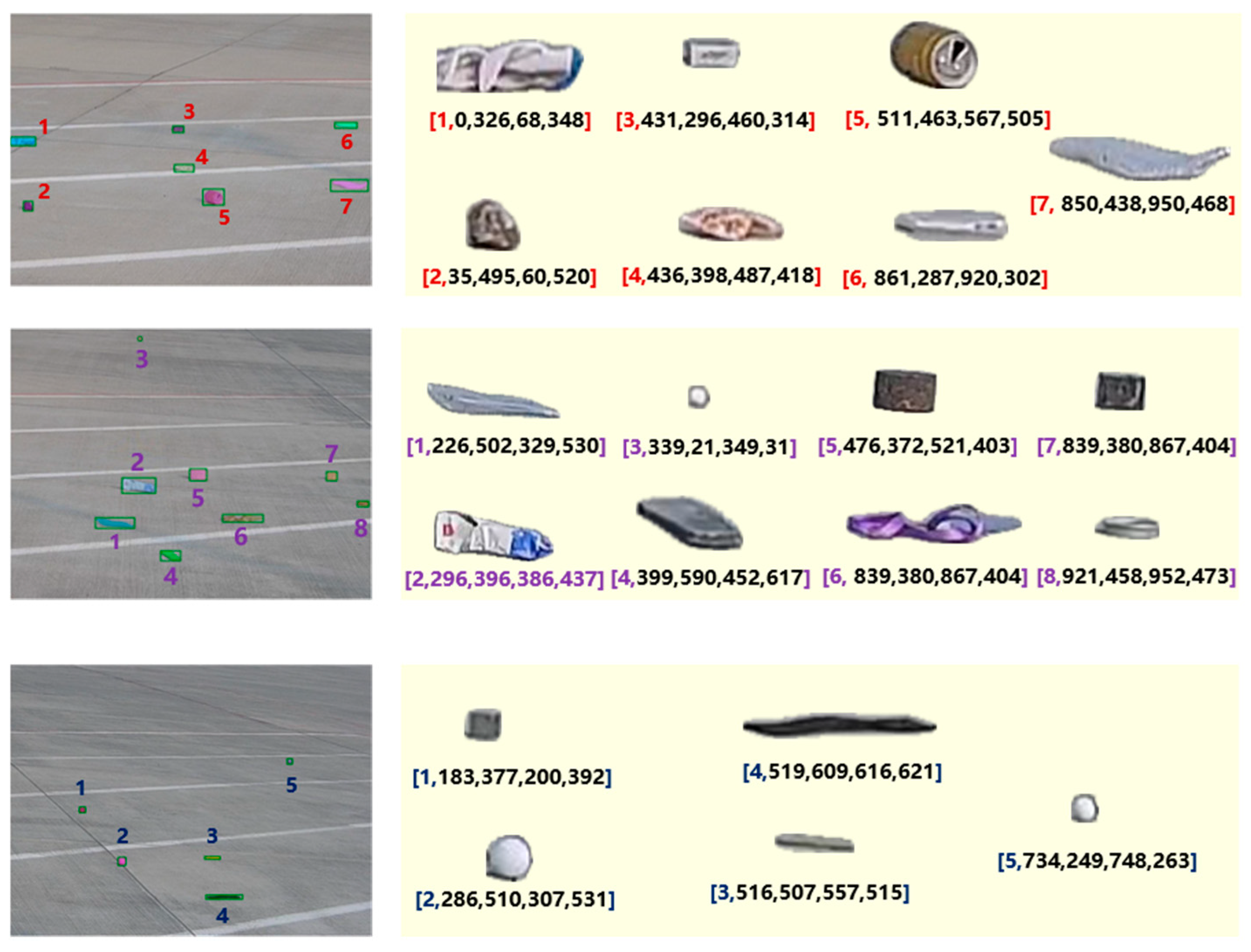

Figure 9 presents detection results for three sample images. The left side shows the numbering and spatial annotations of foreign objects within the original images, while the right side displays the cropped images of each object along with their corresponding spatial encoding information. As illustrated, the model successfully extracts the boundary information of each target and assigns consistent identifiers following the left-to-right, top-to-bottom numbering rule. This provides clear semantic guidance for subsequent multi-object descriptions.

After completing the basic environmental description and spatial information encoding of foreign objects, this study further designs a knowledge-integrated prompt engineering module tailored for the airport domain. The goal is to guide large language models (LLMs) to generate more professional, precise, and operationally instructive semantic descriptions of foreign objects through carefully designed natural language instructions. This approach comprises three components: a modular structured prompt design, the construction of a semantic cue lexicon and alignment mechanism, and a prompt generation workflow with model interface integration, forming a closed-loop system from knowledge acquisition through prompt construction to language generation.

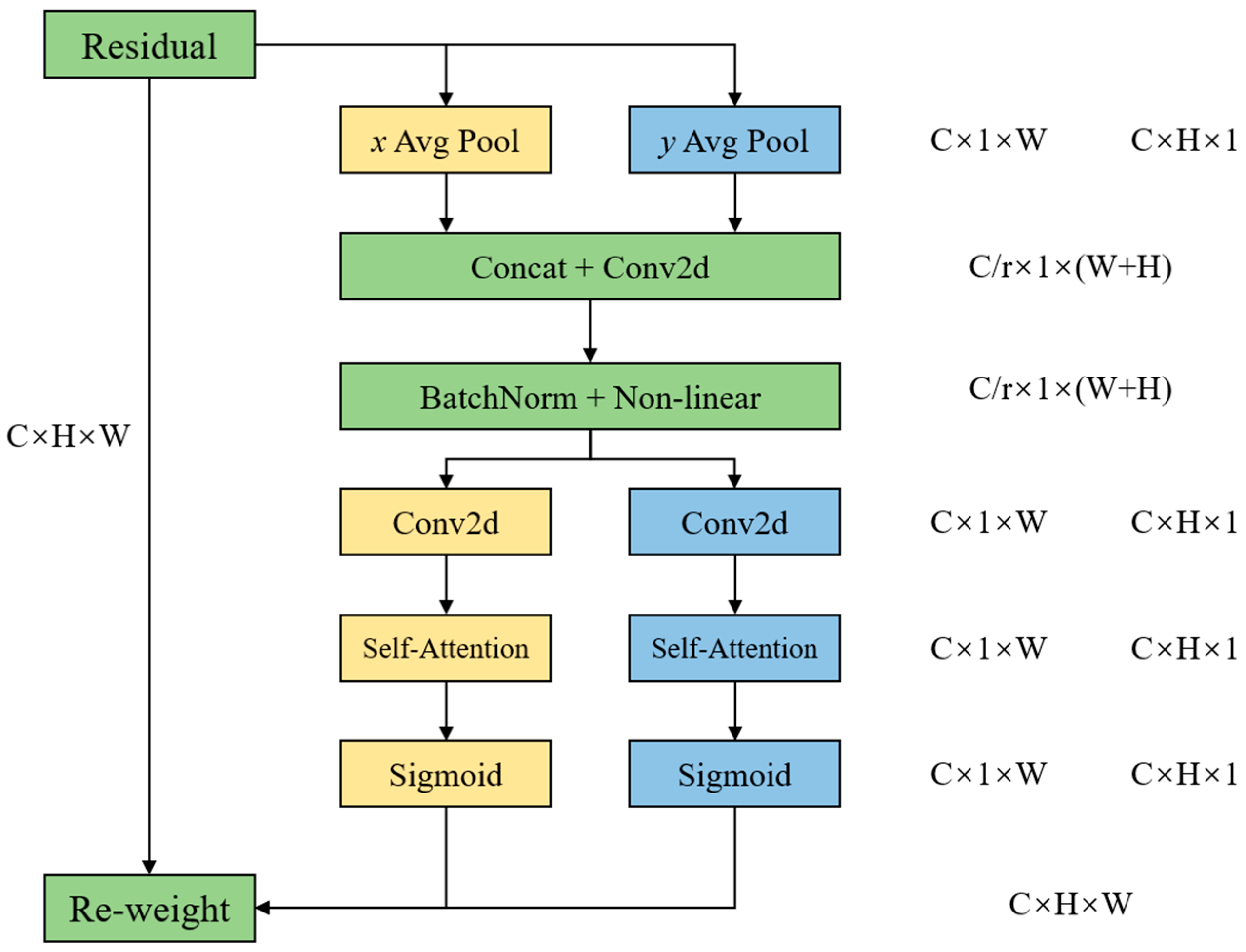

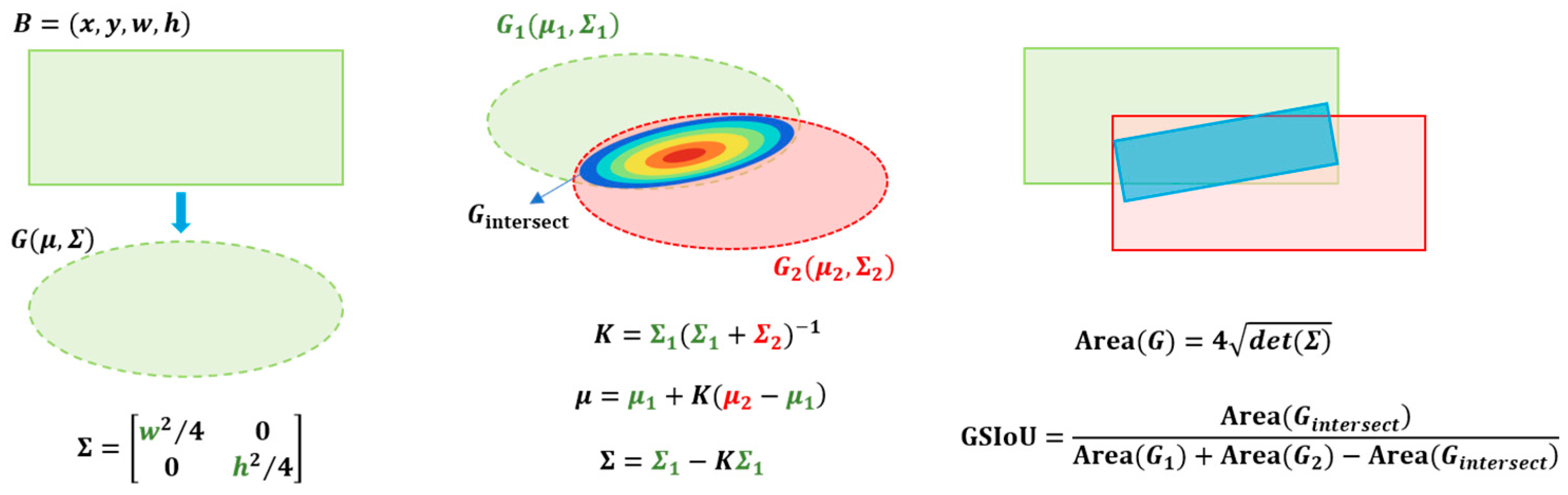

First, to meet the controllability requirements of semantic organization and generation objectives, a four-layer nested structured prompt template is proposed, consisting of four core modules: “Task Instruction,” “Contextual Scene,” “Spatial Encoding,” and “Semantic Cues.” The Task Instruction explicitly defines the generation target, e.g., “Please describe foreign object number N.” The Contextual Scene module extracts overall scene information from images using multi-modal vision–language models, such as BLIP-2, including ground material, lighting conditions, and area identification. Spatial Encoding combines YOLO-generated bounding box coordinates and object mask maps to achieve precise localization within the image. Semantic Cues embed airport foreign object prior knowledge tags, such as typical shapes, material types, and potential source paths. This modular prompt design enhances contextual completeness during language generation and supports rapid prompt reconfiguration for different task types, offering strong generalizability and adaptability.

At the knowledge supply layer, a multi-dimensional semantic cue lexicon is constructed to serve as the foundational resource for the semantic cues module. This lexicon integrates airport operational and maintenance regulations, a historical FOD case database, and expert knowledge from airport operations, organizing keywords into multi-level, multi-dimensional semantic structures. Specifically, it includes 50 typical foreign object categories (e.g., “screw,” “cable head,” “fabric piece”), 12 material attributes (e.g., “metal,” “plastic,” “textile”), 8 spatial risk zones (e.g., “runway centerline area,” “taxiway edge zone”), and 6 maintenance recommendation tags (e.g., “immediate removal required,” “risk controllable”).

In the prompt generation and model application stage, a template-engine-based prompt generation system is developed to automatically assemble structured prompt content according to the visual parsing results of input images, generating natural language instructions compliant with the language model’s input format. The prompts adopt an “instruction-driven + knowledge-constrained” paradigm and are input into GPT-series large language models. Together with image content, object numbering, and mask data, they form a multi-modal input that enables semantic reasoning and accurate description of foreign object scenes.
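A template-based assembly of the four prompt modules might look like the sketch below. It is an illustration only: the section headers, the `build_prompt` function, and the small `LEXICON` excerpt (populated with the example entries quoted in the text) are assumptions, and the call to the language model itself is deliberately omitted.

```python
from typing import Dict, Sequence, Tuple

# Hypothetical excerpt of the semantic cue lexicon; the entries are the
# examples quoted in the text, not the full 50/12/8/6-item lexicon.
LEXICON: Dict[str, Sequence[str]] = {
    "category": ("screw", "cable head", "fabric piece"),
    "material": ("metal", "plastic", "textile"),
    "risk zone": ("runway centerline area", "taxiway edge zone"),
    "recommendation": ("immediate removal required", "risk controllable"),
}

# Assumed layout: one labeled section per prompt module.
TEMPLATE = (
    "[Task Instruction]\n{task}\n\n"
    "[Contextual Scene]\n{scene}\n\n"
    "[Spatial Encoding]\n{spatial}\n\n"
    "[Semantic Cues]\n{cues}"
)


def build_prompt(
    object_id: int,
    scene: str,
    box_norm: Tuple[float, float, float, float],
    lexicon: Dict[str, Sequence[str]] = LEXICON,
) -> str:
    """Assemble the four modules into a single structured text prompt."""
    task = f"Please describe foreign object number {object_id}."
    x1, y1, x2, y2 = box_norm
    spatial = (
        f"Object {object_id}: normalized bounding box "
        f"(x1={x1:.3f}, y1={y1:.3f}, x2={x2:.3f}, y2={y2:.3f})."
    )
    cues = "\n".join(
        f"- {name}: {', '.join(values)}" for name, values in lexicon.items()
    )
    return TEMPLATE.format(task=task, scene=scene, spatial=spatial, cues=cues)
```

Here `scene` would hold the environmental description produced by the vision–language model and `box_norm` the normalized coordinates from the spatial encoding step; passing a reduced `lexicon` gives a simple way to switch individual cue dimensions on or off.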

Comparative experiments were conducted to evaluate the practical effectiveness of the proposed structured prompt engineering framework, covering baseline model performance, module ablation effects, and the impact of semantic enhancement on language generation quality. The experimental setup includes three groups: Group A uses a baseline model with generic natural language prompts without guidance; Group B introduces spatial location information by incorporating YOLO bounding box coordinates and mask maps as structured inputs; and Group C further integrates the multi-dimensional semantic cue lexicon developed herein, realizing a fully structured prompt input strategy. All groups employ the same language model architecture fine-tuned under identical image and object numbering inputs to ensure comparability.

Table 10 presents the quantitative results of three experimental groups on two key metrics: description accuracy and prompt consistency. The results demonstrate that incorporating spatial localization information increases the description accuracy from 76.4% to 83.7% and improves prompt consistency to 74.8%, indicating that explicit target positioning plays a crucial contextual guidance role during language generation. Furthermore, when combined with the knowledge cue module, Group C achieves a description accuracy of 91.3% and prompt consistency of 89.6%, representing improvements of 14.9 and 28.4 percentage points over the baseline, respectively.
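The reported gains can be checked with simple arithmetic, assuming that "improvement over the baseline" denotes a difference in percentage points. The Group A prompt consistency is not quoted in the text, so the value below is only the figure implied by the stated 28.4-point gain and should be read against Table 10.

$$
\begin{aligned}
\text{Accuracy gain, C over A:} \quad & 91.3 - 76.4 = 14.9 \\
\text{Accuracy gain, B over A:} \quad & 83.7 - 76.4 = 7.3 \\
\text{Implied Group A consistency:} \quad & 89.6 - 28.4 = 61.2 \\
\text{Implied consistency gain, B over A:} \quad & 74.8 - 61.2 = 13.6
\end{aligned}
$$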

To further investigate the contribution of each semantic element within the structured prompt, we conducted a dimension-specific ablation study. The structured semantic cues used in our prompt design consist of three major dimensions:

S: Spatial location information (e.g., “at the edge of the runway”, “upper-left corner”);

M: Material type (e.g., “metallic debris”, “plastic wrap”);

R: Risk level tag (e.g., “immediate removal required”, “non-critical”).

As shown in Table 11, we observed consistent improvements in both description accuracy and prompt consistency as more semantic dimensions were included. Spatial encoding (S) alone significantly improved consistency by over 8%. The addition of material (M) further enhanced semantic richness, while the inclusion of risk-level indicators (R) contributed the most to domain-specific expressiveness and task relevance. This analysis confirms the effectiveness of the proposed semantic cue lexicon and highlights the dimension-wise contribution of each cue to language generation quality.

Figure 10 illustrates the comparison of generated responses before and after the integration of spatial location and knowledge guidance. These findings strongly validate the effectiveness of the structured prompt strategy in enhancing language generation quality, particularly in the normative use of terminology, completeness of scene semantics, and explicit expression of risk information. The proposed structured prompt engineering framework significantly improves the accuracy and semantic consistency of foreign object descriptions.

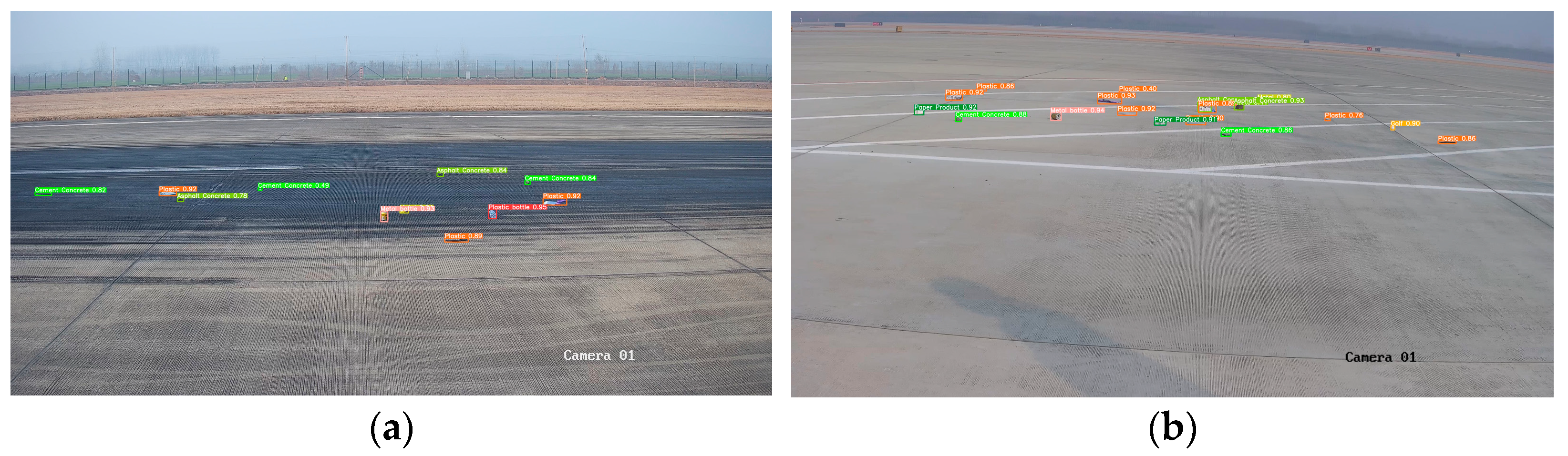

4.2.5. Robustness and Generalization Under Complex Conditions

To evaluate the robustness and generalization capability of the proposed YOLO-SAM-GPT framework in challenging airport environments, we conducted additional qualitative experiments under four representative complex conditions: complete darkness, low-light nighttime, high-glare nighttime, and rainy weather. As shown in Figure 11, the model exhibits adaptability across these diverse scenarios.

In complete darkness, where the background is nearly invisible to human observers, the enhanced YOLO module successfully detects high-contrast FOD using learned structural and contextual priors; however, extremely low illumination occasionally leads to missed detections of small or low-reflectivity debris. In low-light nighttime scenes with minimal ambient illumination, the system maintains reliable detection and segmentation performance, leveraging texture-level cues. Under strong artificial lighting at night—commonly found near aprons or hangars—the model effectively handles intense glare and reflections, correctly localizing and describing small metallic debris. In rainy conditions, despite the SAM segmentation remaining robust for most objects, water-induced blur, reduced contrast, and motion artifacts occasionally cause false positives or inaccurate attribute descriptions. These examples highlight both the strengths and current limitations of the framework, confirming its deployment potential in low-visibility and weather-degraded environments while indicating areas for further improvement.

Future work should focus on improving robustness against detection errors through iterative refinement, feedback-based detection–segmentation loops, and complementary region proposals. Incorporating multi-modal inputs may mitigate failures in complete darkness or severe weather, while model compression and lightweight design could enable deployment in low-resource airport environments and on edge devices.