A Comprehensive Hybrid Approach for Indoor Scene Recognition Combining CNNs and Text-Based Features

Abstract

Highlights

- Proposed an innovative two-channel hybrid model by integrating convolutional neural networks (CNNs) with a text-based classifier.

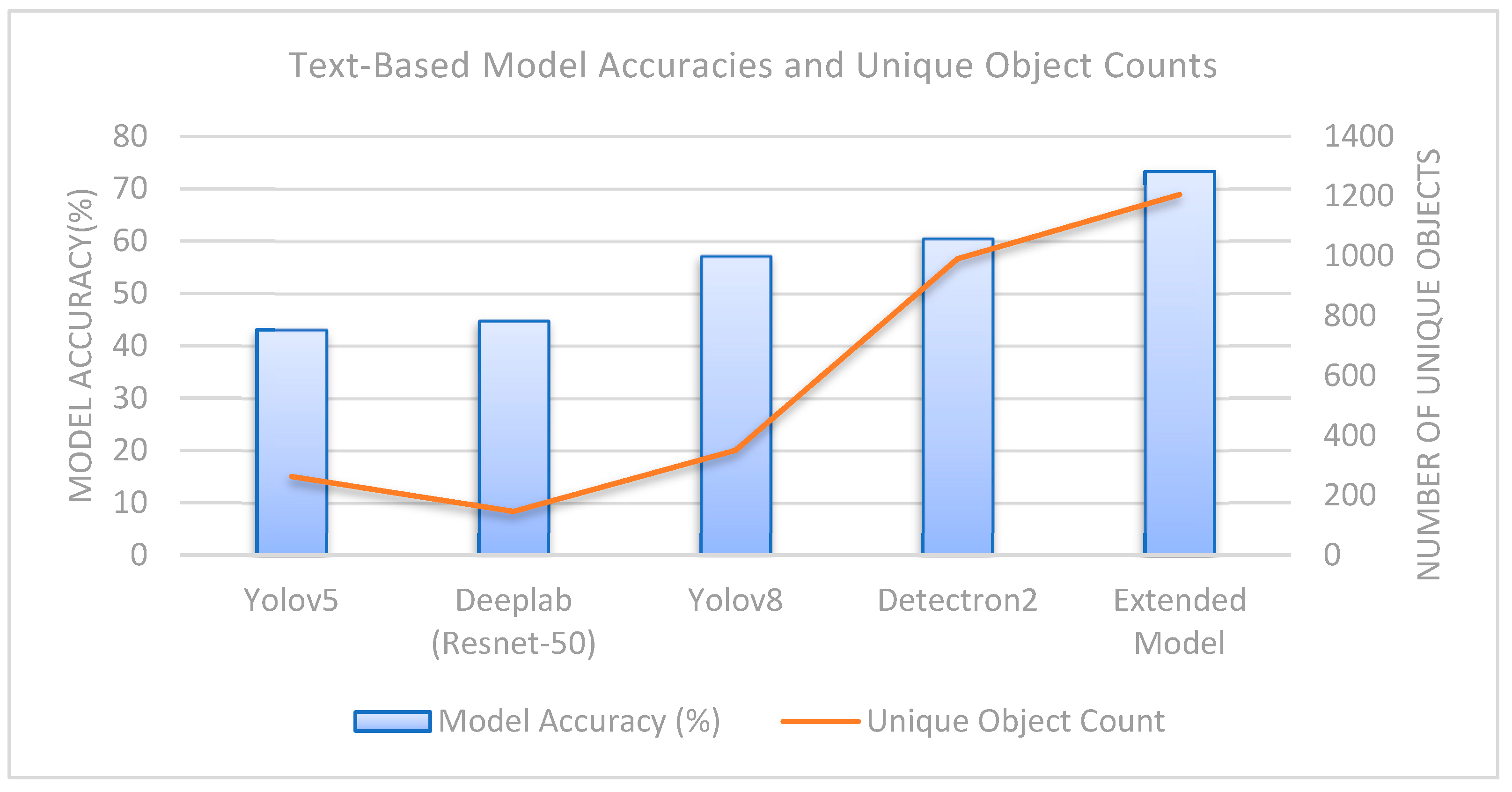

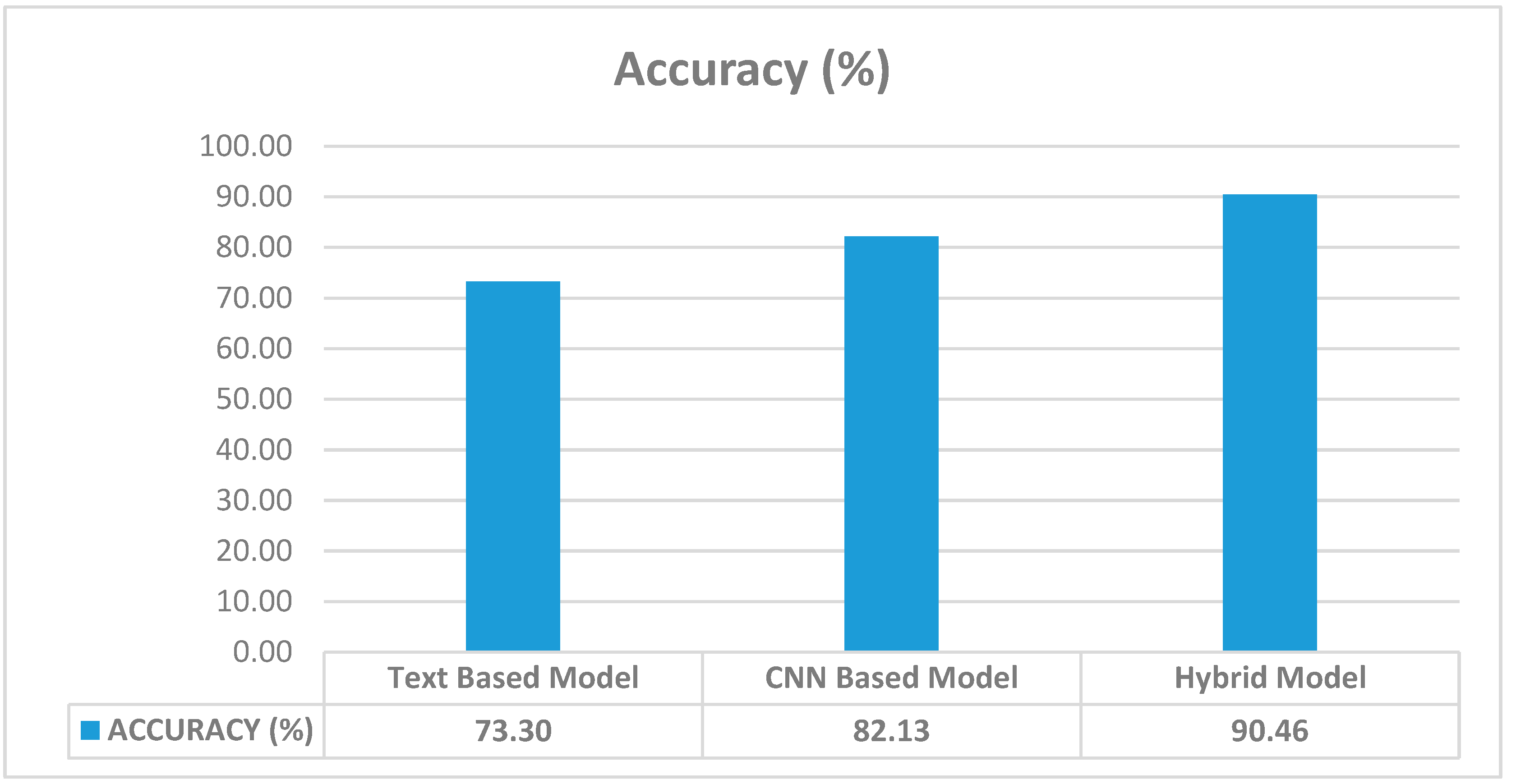

- Leveraged an extended dataset derived from multiple object recognition models, increasing input data diversity and achieving a text-based classifier accuracy of 73.30%.

- Achieved an accuracy of 90.46% with the hybrid model, an improvement of 8.33 percentage points over CNN-only models.

- Efficient and Scalable Methodology: Utilized EfficientNet for CNN-based feature extraction and Bag-of-Words for text representation, ensuring computational efficiency and scalability.

- Application Potential: Addressed challenges in indoor scene recognition, such as complex backgrounds and object diversity, demonstrating significant potential for applications in robotics, intelligent surveillance, and assistive systems.

1. Introduction

1.1. Contribution and Novelty

- Hybrid Approach for Indoor Scene Recognition: An innovative two-channel hybrid model was proposed by integrating convolutional neural networks (CNNs) with a text-based classification pipeline to capture both visual and contextual semantics.

- Enhanced Data Diversity: The model leveraged an enriched input set derived from multiple object recognition algorithms, which expanded the vocabulary and contributed to achieving a text-based classifier accuracy of 73.30%.

- Improved Model Performance: The proposed hybrid framework reached an overall accuracy of 90.46% on the MIT Indoor-67 dataset, 8.33 percentage points above the CNN-only baseline (82.13%).

- Efficient and Scalable Design: EfficientNet was employed for deep visual feature extraction, while a basic Bag-of-Words representation was used for textual inputs, keeping the method computationally efficient, scalable, and easy to implement.

- Application Potential: By addressing core challenges in indoor scene recognition—including complex backgrounds and high object variability—the proposed hybrid model demonstrates strong applicability in domains such as service robotics, intelligent video surveillance, and context-aware assistive technologies.
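
The text channel described in the contributions above can be illustrated with a minimal sketch: object labels from several detectors are merged into one "document" per image and turned into a Bag-of-Words count vector. The detector names, labels, and helper functions below are hypothetical and chosen only for illustration; the source does not specify the exact preprocessing.

```python
from collections import Counter


def merge_detections(per_detector_labels):
    """Concatenate the label lists produced by several detectors for one image."""
    merged = []
    for labels in per_detector_labels.values():
        # Normalize labels so "Dining Table" and "dining_table" share one token.
        merged.extend(label.lower().replace(" ", "_") for label in labels)
    return merged


def build_vocabulary(documents, max_features=None):
    """Keep the most frequent tokens and map them to column indices."""
    counts = Counter(token for doc in documents for token in doc)
    tokens = sorted(token for token, _ in counts.most_common(max_features))
    return {token: idx for idx, token in enumerate(tokens)}


def bag_of_words(doc, vocabulary):
    """Count how often each vocabulary token occurs in one document."""
    vector = [0] * len(vocabulary)
    for token, n in Counter(doc).items():
        if token in vocabulary:
            vector[vocabulary[token]] = n
    return vector


# Hypothetical detections for a single kitchen image (not from the paper).
image_detections = {
    "detector_a": ["oven", "sink", "chair"],
    "detector_b": ["sink", "cabinet"],
    "detector_c": ["Dining Table", "chair", "chair"],
}
doc = merge_detections(image_detections)
vocab = build_vocabulary([doc])
vector = bag_of_words(doc, vocab)
print(vocab)
print(vector)
```

Merging the outputs of several detectors enlarges the vocabulary and the counts per image, which is the sense in which the extended input set is more diverse than any single detector's output.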

1.2. Related Work

2. Background

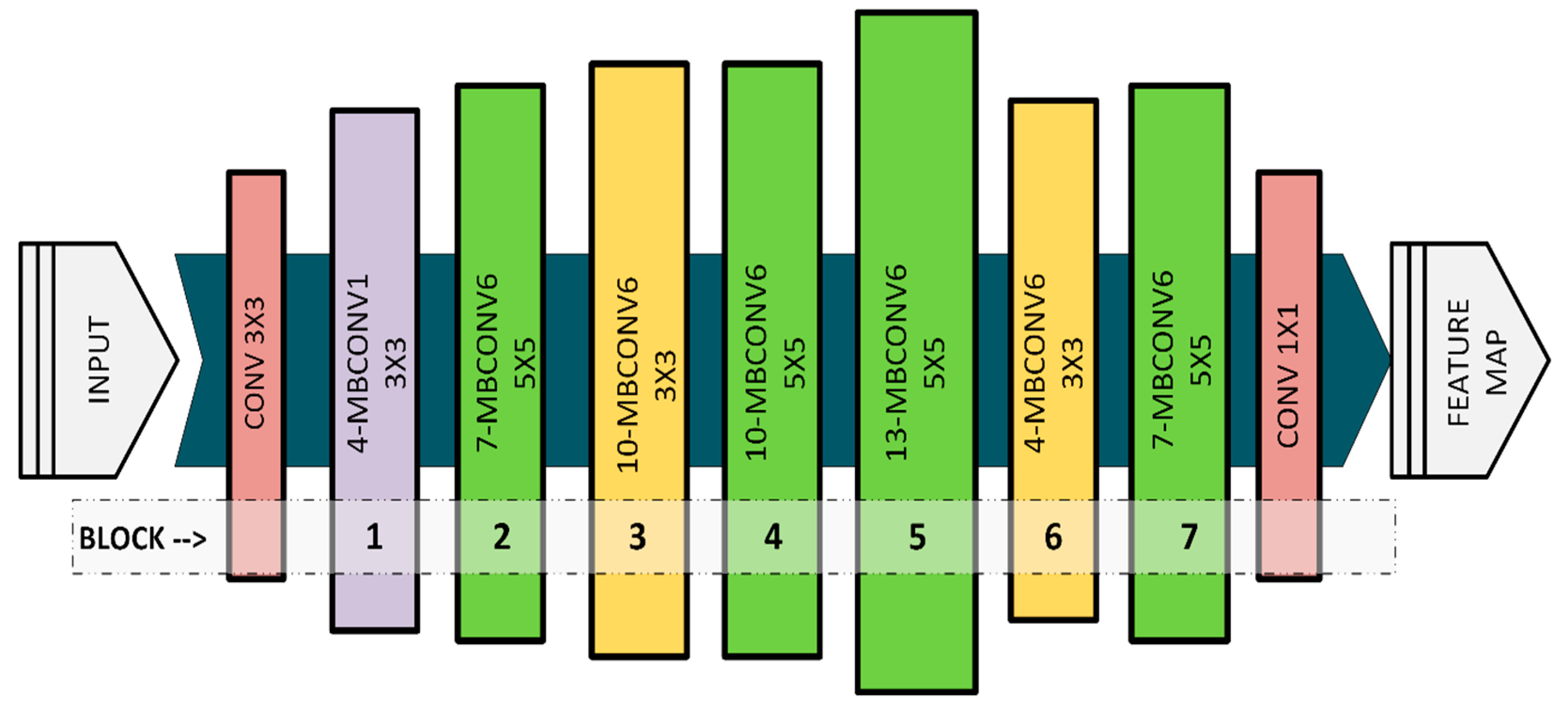

2.1. Convolutional Neural Networks—EfficientNet

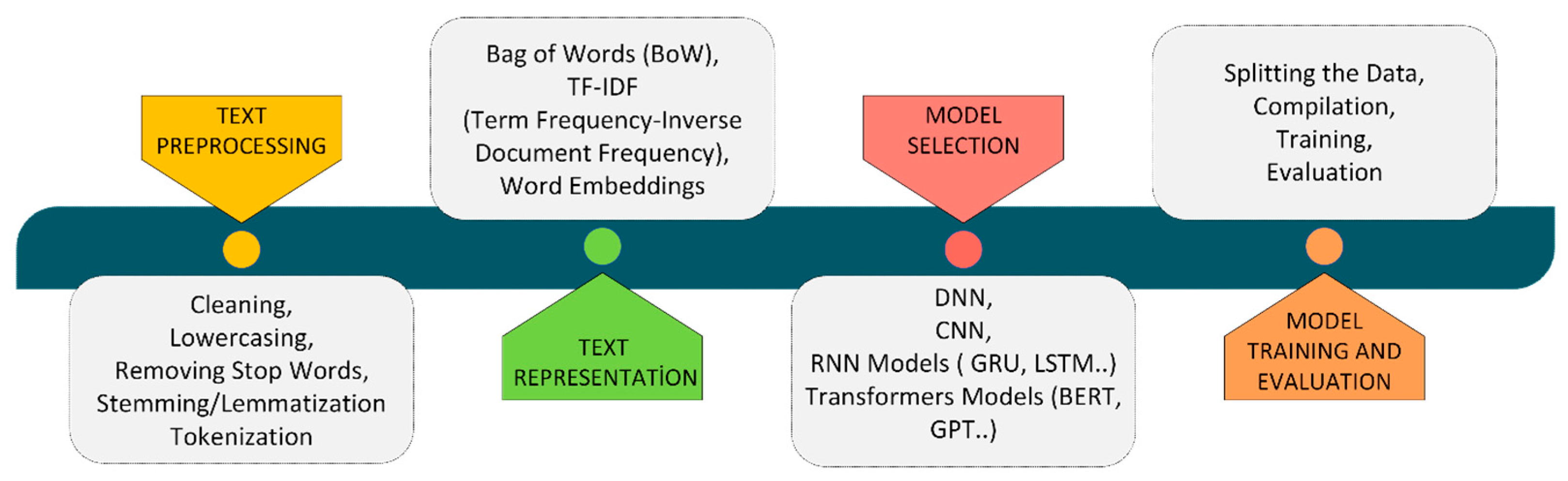

2.2. Text Classification

3. Proposed Approach

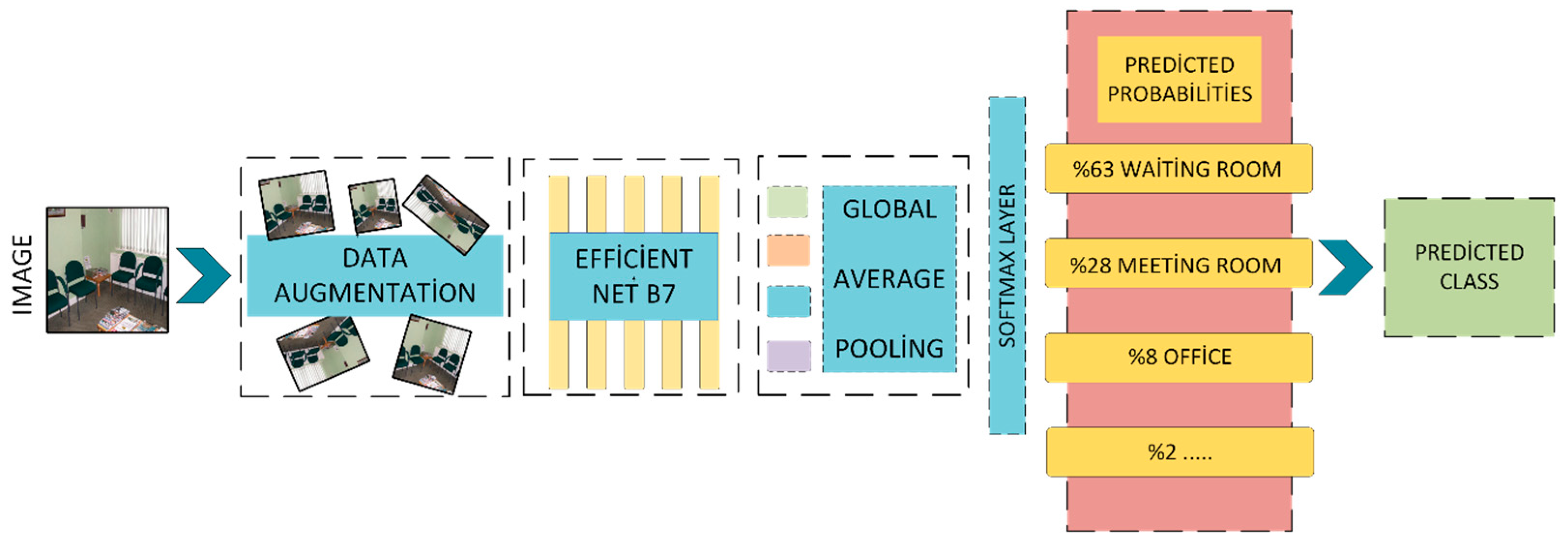

3.1. CNN-Based Scene Classification

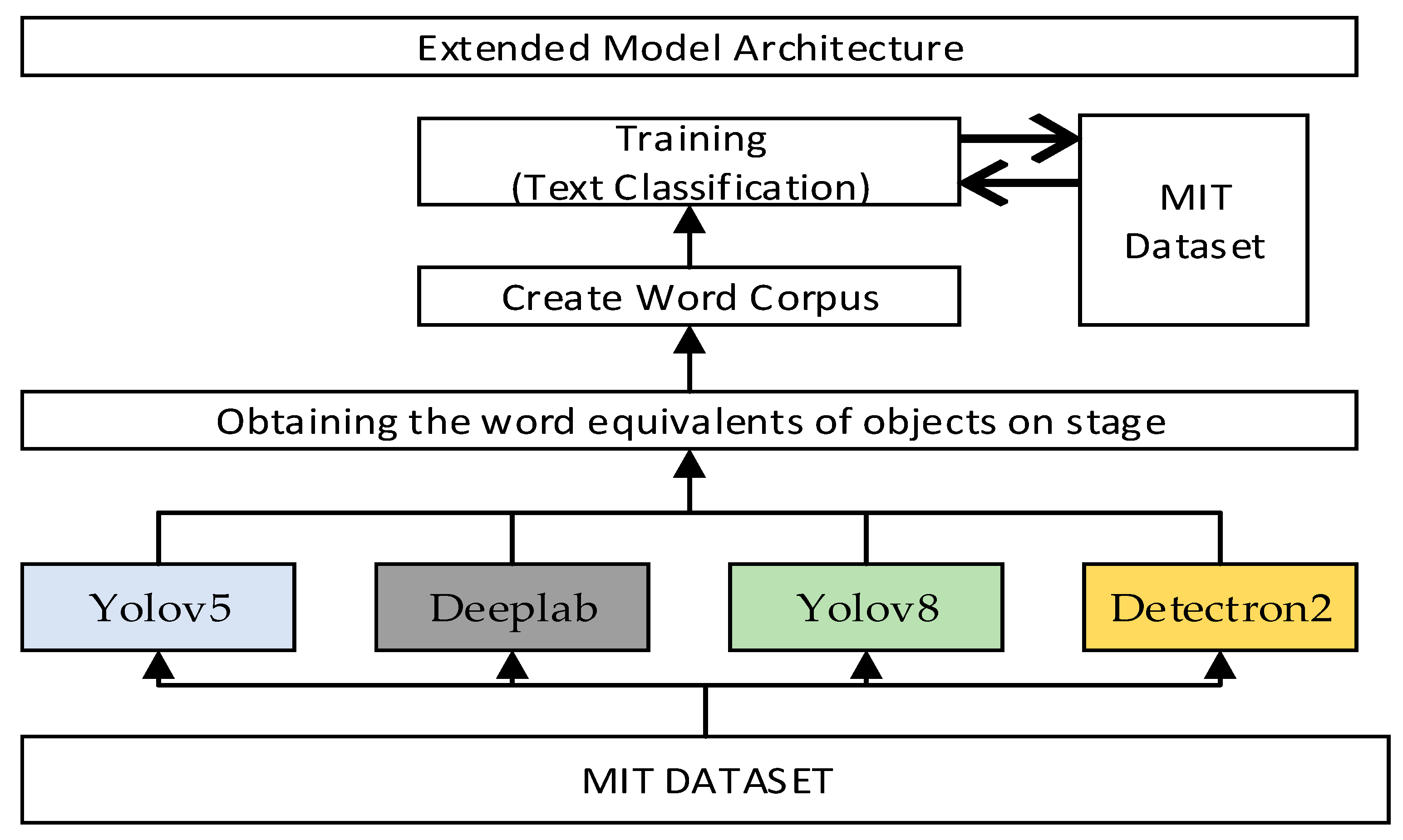

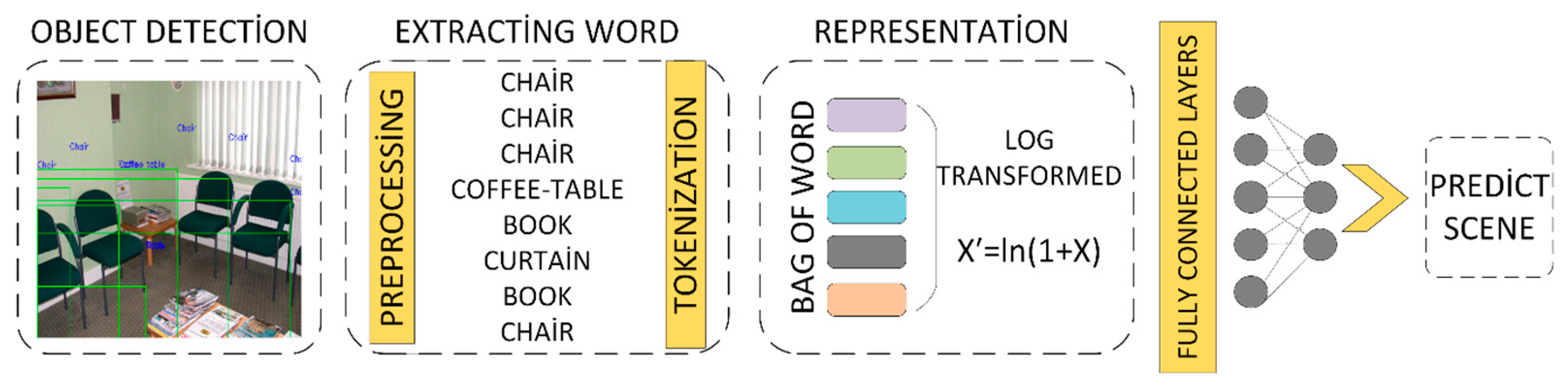

3.2. Text-Based Scene Classification

Algorithm 1. Pseudocode of the text-based scene classifier.

Input:

- I: RGB image input of size (224 × 224 × 3)
- T: Scene description or class-level text annotation

Output:

- ŷ: Predicted scene class label

Procedure:

1. Visual Feature Extraction: CNN_feat ← CNN_Extractor(I)
   - Example architecture: DenseNet121 (pretrained on ImageNet)
   - Output dimension: ℝ^(2560)
2. Text Feature Extraction: Text_feat ← Vectorizer(T)
   - Preprocess: tokenize, stop-word removal, lowercase
   - Text vector size = 15,000 (selected via max_features hyperparameter)
   - Output dimension: ℝ^(15,000)
3. Normalization (optional but recommended for balanced fusion):
   - CNN_feat ← Normalize(CNN_feat) (L2 or Min-Max scaling)
   - Text_feat ← Normalize(Text_feat)
4. Feature Fusion: Fused ← Concatenate(CNN_feat, Text_feat)
   - Final shape: ℝ^(2560 + 15,000) = ℝ^(17,560)
   - Concatenation performed along the feature axis
5. Fully Connected Classifier:
   - h1 ← ReLU(FC1(Fused)), with FC1: ℝ^(17,560) → ℝ^(4096)
   - h2 ← ReLU(FC2(h1)), with FC2: ℝ^(4096) → ℝ^(1024)
   - logits ← FC3(h2), with FC3: ℝ^(1024) → ℝ^(C), where C = number of classes
   - ŷ ← Softmax(logits)
6. Return ŷ
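
The data flow of Algorithm 1 (normalize, concatenate, three fully connected layers, softmax) can be traced with a small standard-library sketch. It is not the authors' implementation: the layer sizes are toy stand-ins for 2560, 15,000, 4096 and 1024, the weights are random and untrained, and all helper names are assumptions made for this example.

```python
import math
import random


def l2_normalize(vec):
    """Scale a vector to unit L2 norm (left unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def dense(vec, weights, bias):
    """Fully connected layer; `weights` holds one row per output unit."""
    return [sum(w * x for w, x in zip(row, vec)) + b for row, b in zip(weights, bias)]


def relu(vec):
    return [max(0.0, x) for x in vec]


def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def random_layer(rng, n_in, n_out):
    """Untrained layer with small random weights and zero bias (illustration only)."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


# Toy sizes standing in for 2560 (CNN), 15,000 (text), 4096 and 1024 (hidden).
CNN_DIM, TEXT_DIM, H1, H2, NUM_CLASSES = 8, 12, 6, 4, 3

rng = random.Random(0)
cnn_feat = [rng.random() for _ in range(CNN_DIM)]                 # stand-in for CNN_Extractor(I)
text_feat = [float(rng.randint(0, 3)) for _ in range(TEXT_DIM)]   # stand-in for Vectorizer(T)

# Steps 3 and 4: normalize each channel, then concatenate along the feature axis.
fused = l2_normalize(cnn_feat) + l2_normalize(text_feat)

# Step 5: FC1 -> ReLU -> FC2 -> ReLU -> FC3 -> softmax.
fc1 = random_layer(rng, len(fused), H1)
fc2 = random_layer(rng, H1, H2)
fc3 = random_layer(rng, H2, NUM_CLASSES)
h1 = relu(dense(fused, *fc1))
h2 = relu(dense(h1, *fc2))
probs = softmax(dense(h2, *fc3))
predicted_class = max(range(NUM_CLASSES), key=probs.__getitem__)
print(len(fused), predicted_class)
```

Normalizing each channel before concatenation keeps the much longer text vector from dominating the fused representation purely through its scale, which is the stated motivation for step 3.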

3.3. Two-Channel Hybrid Model

4. Experimental Studies

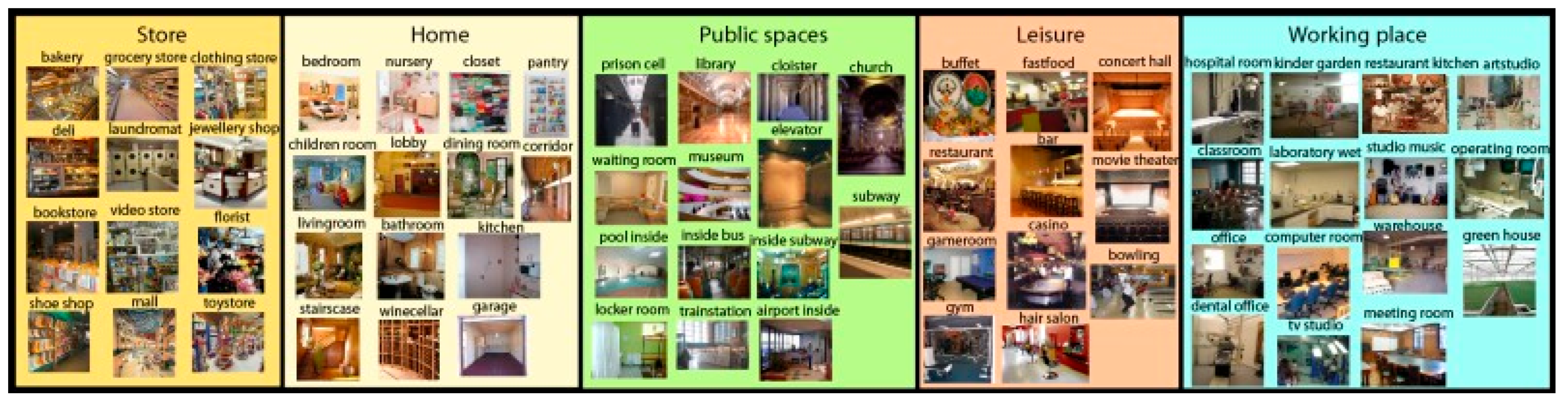

4.1. Dataset

4.2. Evaluation Metrics

4.3. Results and Discussion

- The visual channel is dominant in 72.4% of incorrect classifications.

- In 27.6% of misclassifications, the textual channel is dominant.
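
One way to obtain such shares is to compare, for every misclassified sample, the score each channel assigned to the predicted class and count which channel was larger. The sketch below assumes exactly that rule; the tie-breaking choice and the score pairs are hypothetical and serve only to illustrate the arithmetic.

```python
from collections import Counter


def dominant_channel(cnn_score, text_score):
    """Return the channel with the larger score (ties go to CNN: an assumption)."""
    return "CNN" if cnn_score >= text_score else "Text"


def channel_shares(errors):
    """Percentage of misclassified samples dominated by each channel."""
    if not errors:
        raise ValueError("no misclassified samples given")
    counts = Counter(dominant_channel(c, t) for c, t in errors)
    total = sum(counts.values())
    return {channel: 100.0 * counts[channel] / total for channel in ("CNN", "Text")}


# Hypothetical (cnn_score, text_score) pairs for four misclassified samples.
errors = [(0.0025, 0.0018), (0.0024, 0.0018), (0.0018, 0.0019), (0.0023, 0.0018)]
shares = channel_shares(errors)
print(shares)
```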

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Xie, L.; Lee, F.; Liu, L.; Kotani, K.; Chen, Q. Scene Recognition: A Comprehensive Survey. Pattern Recognit. 2020, 102, 107205.
2. Surendran, R.; Chihi, I.; Anitha, J.; Hemanth, D.J. Indoor Scene Recognition: An Attention-Based Approach Using Feature Selection-Based Transfer Learning and Deep Liquid State Machine. Algorithms 2023, 16, 430.
3. Khan, S.D.; Othman, K.M. Indoor Scene Classification through Dual-Stream Deep Learning: A Framework for Improved Scene Understanding in Robotics. Computers 2024, 13, 121.
4. Heikel, E.; Espinosa-Leal, L. Indoor Scene Recognition via Object Detection and TF-IDF. J. Imaging 2022, 8, 209.
5. Quattoni, A.; Torralba, A. Recognizing Indoor Scenes. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: New York, NY, USA, 2009; pp. 413–420.
6. Vailaya, A.; Jain, A.; Zhang, H.J. On Image Classification: City vs. Landscape. In Proceedings of the IEEE Workshop on Content-Based Access of Image and Video Libraries, Santa Barbara, CA, USA, 17 June 1998; IEEE: New York, NY, USA, 1998; pp. 3–8.
7. Yang, J.; Jiang, Y.-G.; Hauptmann, A.G.; Ngo, C.-W. Evaluating Bag-of-Visual-Words Representations in Scene Classification. In Proceedings of the International Workshop on Multimedia Information Retrieval, Augsburg, Germany, 24–29 September 2007; pp. 197–206.
8. Zhao, X.; Wang, L.; Zhang, Y.; Han, X.; Deveci, M.; Parmar, M. A Review of Convolutional Neural Networks in Computer Vision. Artif. Intell. Rev. 2024, 57, 99.
9. Zhou, B.; Khosla, A.; Lapedriza, À.; Oliva, A. Object Detectors Emerge in Deep Scene CNNs. arXiv 2014, arXiv:1412.6856.
10. Chen, B.X.; Sahdev, R.; Wu, D.; Zhao, X.; Papagelis, M.; Tsotsos, J.K. Scene Classification in Indoor Environments for Robots Using Context Based Word Embeddings. arXiv 2019, arXiv:1908.06422.
11. Miao, B.; Zhou, L.; Mian, A.; Lam, T.L.; Xu, Y. Object-to-Scene: Learning to Transfer Object Knowledge to Indoor Scene Recognition. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September 2021; IEEE: New York, NY, USA, 2021; pp. 7684–7690.
12. Nagarajan, K.; Thanabal, M.S. Automatic Indoor Scene Recognition Based on Mandatory and Desirable Objects with a Simple Coding Scheme. J. Electron. Imaging 2021, 30, 053002.
13. Basu, A.; Kaewrak, K.; Petropoulakis, L.; Di Caterina, G.; Soraghan, J.J. Indoor Home Scene Recognition through Instance Segmentation Using a Combination of Neural Networks. In Proceedings of the 2022 IEEE World Conference on Applied Intelligence and Computing (AIC), Salamanca, Spain, 17–19 June 2022; IEEE: New York, NY, USA, 2022; pp. 1–6.
14. Ismail, A.; Seifelnasr, M.; Guo, H. Understanding Indoor Scene: Spatial Layout Estimation, Scene Classification, and Object Detection. In Proceedings of the 3rd International Conference on Multimedia Systems and Signal Processing, Amsterdam, The Netherlands, 28–30 May 2018; pp. 23–27.
15. Khan, S.H.; Hayat, M.; Bennamoun, M.; Togneri, R.; Sohel, F.A. A Discriminative Representation of Convolutional Features for Indoor Scene Recognition. IEEE Trans. Image Process. 2016, 25, 3372–3383.
16. Sun, N.; Zhu, X.; Liu, J.; Han, G. Indoor Scene Recognition Based on Deep Learning and Sparse Representation. In Proceedings of the 2017 13th International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery (ICNC-FSKD), Guilin, China, 29–31 July 2017; IEEE: New York, NY, USA, 2017; pp. 408–413.
17. Espinace, P.; Kollar, T.; Soto, A.; Roy, N. Indoor Scene Recognition through Object Detection. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; IEEE: New York, NY, USA, 2010; pp. 5115–5120.
18. Li, J.W.; Yan, G.W.; Jiang, J.W.; Cao, Z.; Zhang, X.; Song, B. Construction of a Multiscale Feature Fusion Model for Indoor Scene Recognition and Semantic Segmentation. Sci. Rep. 2025, 15, 14701.
19. Wang, C.; Wei, J.; Pan, X. Joint CNN and Vision Transformer for Indoor Scene Recognition. 2025. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5254880 (accessed on 1 August 2025).
20. Kundur, N.C.; Mallikarjuna, P. Insect Pest Image Detection and Classification Using Deep Learning. Int. J. Adv. Comput. Sci. Appl. 2022, 13, 9.
21. Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the International Conference on Machine Learning (ICML), Long Beach, CA, USA, 9–15 June 2019; PMLR: New York, NY, USA, 2019; pp. 6105–6114.
22. Aggarwal, C.C.; Zhai, C. A Survey of Text Classification Algorithms. In Mining Text Data; Springer: Boston, MA, USA, 2012; pp. 163–222.
23. Young, T.; Hazarika, D.; Poria, S.; Cambria, E. Recent Trends in Deep Learning Based Natural Language Processing. IEEE Comput. Intell. Mag. 2018, 13, 55–75.
24. Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object Detection in 20 Years: A Survey. Proc. IEEE 2023, 111, 257–276.
25. Jurafsky, D.; Martin, J.H. Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition with Language Models, 3rd ed.; Pearson: London, UK, 2025; Available online: https://web.stanford.edu/~jurafsky/slp3 (accessed on 3 August 2025).
26. Jocher, G.; Chaurasia, A.; Stoken, A.; Borovec, J.; Codeo, N.; Kwon, Y.; Michael, K.; TaoXie; Fang, J.; Imyhxy; et al. ultralytics/yolov5: v7.0 - yolov5 SOTA Realtime Instance Segmentation. Zenodo. 2022. Available online: https://zenodo.org/records/7347926 (accessed on 5 June 2025).
27. Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLOv8. Available online: https://github.com/ultralytics/ultralytics (accessed on 3 August 2025).
28. Weber, M.; Wang, H.; Qiao, S.; Xie, J.; Collins, M.D.; Zhu, Y.; Yuan, L.; Kim, D.; Yu, Q.; Cremers, D. DeepLab2: A TensorFlow Library for Deep Labeling. arXiv 2021, arXiv:2106.09748.
29. Wu, Y.; Kirillov, A.; Massa, F.; Lo, W.Y.; Girshick, R. Detectron2. Available online: https://github.com/facebookresearch/detectron2 (accessed on 3 August 2025).
30. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V. Scikit-Learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.
31. Chen, G.; Song, X.; Zeng, H.; Jiang, S. Scene Recognition with Prototype-Agnostic Scene Layout. IEEE Trans. Image Process. 2020, 29, 5877–5888.
32. Laranjeira, C.; Lacerda, A.; Nascimento, E.R. On Modeling Context from Objects with a Long Short-Term Memory for Indoor Scene Recognition. In Proceedings of the 2019 32nd SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Rio de Janeiro, Brazil, 28–31 October 2019; IEEE: New York, NY, USA, 2019; pp. 410–417.
33. López-Cifuentes, A.; Escudero-Viñolo, M.; Bescós, J.; García-Martín, Á. Semantic-Aware Scene Recognition. Pattern Recognit. 2020, 102, 107256.
34. Seong, H.; Hyun, J.; Kim, E. FOSNet: An End-to-End Trainable Deep Neural Network for Scene Recognition. IEEE Access 2020, 8, 82066–82077.
35. Song, C.; Wu, H.; Ma, X. Inter-Object Discriminative Graph Modeling for Indoor Scene Recognition. Knowl. Based Syst. 2024, 302, 112371.
36. Song, C.; Ma, X. SRRM: Semantic Region Relation Model for Indoor Scene Recognition. In Proceedings of the 2023 International Joint Conference on Neural Networks (IJCNN), Gold Coast, Australia, 18–23 June 2023; IEEE: New York, NY, USA, 2023; pp. 1–8.
37. Xie, Y.; Yan, J.; Kang, L.; Guo, Y.; Zhang, J.; Luan, X. FCT: Fusing CNN and Transformer for Scene Classification. Int. J. Multimed. Inf. Retr. 2022, 11, 611–618.
38. Zeng, H.; Song, X.; Chen, G.; Jiang, S. Amorphous Region Context Modeling for Scene Recognition. IEEE Trans. Multimed. 2020, 24, 141–151.

| Model | Year | Architecture | Modalities | Uses Transformer? | Accuracy (MIT-67, %) | Parameter Count | Remarks |
|---|---|---|---|---|---|---|---|
| JCVT (Chen Wang et al. [19]) | 2025 | CNN + ViT (LEVTM) | Visual | Yes | 98.2 | High | Deep hybrid with ViT-based local attention |
| Li et al. [18] (Scientific Reports) | 2025 | ResNet50 + SE + Transformer | Visual | Yes | 98.4 | High | Multiscale fusion with dual-task mechanism |
| Proposed Model (This Study) | 2025 | DenseNet121 + Text Classification | Visual + Text | No | 90.46 | Low | Lightweight, interpretable and modular structure |

| Parameter | Value |
|---|---|
| Input Width × Height (px) | 600 × 600 |
| Train split | 80% |
| Validation split | 10% |
| Test split | 10% |
| Batch size | 64 |
| Epoch number | 15 |
| Accuracy | 82.13% |
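
The 80/10/10 partition listed above can be reproduced with a simple shuffled index split. This is a sketch under assumptions: the source does not say whether the split was random or stratified, and the function name, seed, and sample count are hypothetical.

```python
import random


def split_indices(n_samples, train=0.8, val=0.1, seed=42):
    """Shuffle sample indices and cut them into train/validation/test parts."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # fixed seed for reproducibility
    n_train = int(n_samples * train)
    n_val = int(n_samples * val)
    train_idx = indices[:n_train]
    val_idx = indices[n_train:n_train + n_val]
    test_idx = indices[n_train + n_val:]  # remainder goes to the test set
    return train_idx, val_idx, test_idx


# Hypothetical dataset size, used only to show the resulting proportions.
train_idx, val_idx, test_idx = split_indices(1000)
print(len(train_idx), len(val_idx), len(test_idx))
```

A stratified variant that applies the same cut per class would keep class proportions equal across the three parts, which may matter for a 67-class dataset with uneven class sizes.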

| Model | Dataset | Number of Classes | Total Number of Objects | Average Number of Objects | Number of Unique Objects | Model Test Accuracy (%) |
|---|---|---|---|---|---|---|
| YOLOv5 | Objects365 | 365 | 320,260 | 20 | 262 | 43.08 |
| DeepLab (ResNet-50) | COCO-Stuff | 182 | 194,190 | 12 | 146 | 44.81 |
| YOLOv8 | Open Images (V7) | 600 | 251,612 | 16 | 350 | 57.17 |
| Detectron2 | LVIS | 1200 | 922,909 | 59 | 991 | 60.53 |
| Extended Model | - | - | 1,681,971 | 107 | 1206 | 73.30 |

| Evaluation on Test Data | Model Without Frequency Sequence Processing | Model with Frequency Sequence Processing |
|---|---|---|
| Accuracy (%) | 70.1 | 73.30 |
| Loss | 1.3246 | 0.9178 |

| Metric | Value |
|---|---|
| Accuracy (%) | 90.46 |
| Loss | 35.75 |
| Precision (%) | 90.85 |
| Recall (%) | 90.46 |
| F1 score (%) | 90.37 |
| Weighted Accuracy (%) | 89.82 |
| Weighted Loss | 0.3703 |

| Year | Model | MIT Indoor 67 ACC (%) |
|---|---|---|
| 2020 | Semantic-aware [33] | 87.10 |
| 2020 | ARG-Net [38] | 88.13 |
| 2019 | Context modelling with BiLSTM [10] | 88.25 |
| 2019 | LGN [31] | 85.37 |
| 2023 | CSSRM [36] | 88.73 |
| 2020 | FOSNet [34] | 90.30 |
| 2024 | DGN [35] | 90.37 |
| -- | Our hybrid model | 90.46 |

| Actual Class | Predicted Class | CNN Score | Text Score | Dominant Channel |
|---|---|---|---|---|
| lobby | tv_studio | 0.0025 | 0.0018 | CNN |
| bowling | church_inside | 0.0024 | 0.0018 | CNN |
| office | tv_studio | 0.0018 | 0.0018 | CNN |
| jewelleryshop | mall | 0.0018 | 0.0019 | Text |
| pantry | corridor | 0.0020 | 0.0020 | Text |
| warehouse | garage | 0.0023 | 0.0018 | CNN |
| operating_room | tv_studio | 0.0019 | 0.0018 | CNN |

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| CNN-based Model | 82.13 ± 0.74 | 82.25 ± 0.71 | 82.13 ± 0.76 | 82.10 ± 0.73 |
| Text-based Model | 73.30 ± 0.81 | 73.45 ± 0.78 | 73.30 ± 0.79 | 73.18 ± 0.82 |
| Hybrid Model | 90.46 ± 0.65 | 90.75 ± 0.62 | 90.64 ± 0.60 | 90.48 ± 0.66 |
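
For the CNN-based and text-based rows above, recall equals accuracy, which is what support-weighted averaging over classes produces. The following sketch computes accuracy and support-weighted precision, recall, and F1 for a multi-class problem. Whether the authors used weighted averaging is an assumption, and the labels below are hypothetical toy data, not results from the paper.

```python
from collections import Counter


def weighted_metrics(y_true, y_pred):
    """Accuracy plus support-weighted precision, recall and F1."""
    n = len(y_true)
    support = Counter(y_true)
    precision = recall = f1 = 0.0
    for cls, count in support.items():
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
        predicted = sum(1 for p in y_pred if p == cls)
        p_c = tp / predicted if predicted else 0.0   # per-class precision
        r_c = tp / count                             # per-class recall
        f_c = 2 * p_c * r_c / (p_c + r_c) if (p_c + r_c) else 0.0
        weight = count / n                           # class share of the test set
        precision += weight * p_c
        recall += weight * r_c
        f1 += weight * f_c
    accuracy = sum(1 for t, p in zip(y_true, y_pred) if t == p) / n
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical labels for six test images.
y_true = ["kitchen", "kitchen", "office", "office", "lobby", "lobby"]
y_pred = ["kitchen", "office", "office", "office", "lobby", "kitchen"]
metrics = weighted_metrics(y_true, y_pred)
print(metrics)
```

With support weights, each class's recall is multiplied by its share of the test set, so the weighted sum reduces to the number of correct predictions divided by the number of samples, that is, accuracy.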

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Uckan, T.; Aslan, C.; Hark, C. A Comprehensive Hybrid Approach for Indoor Scene Recognition Combining CNNs and Text-Based Features. Sensors 2025, 25, 5350. https://doi.org/10.3390/s25175350
