1. Introduction

Gas-Insulated Switchgear (GIS), characterized by its compact design, high integration level, stable performance, and low maintenance costs, has been widely adopted in modern power systems [1]. Because GIS units serve as critical nodes in the power grid, their safe and stable operation directly affects the reliability of the entire power system. However, internal defects such as metallic particles, sharp protrusions on conductors, bubbles or cracks within insulating components, and foreign objects can arise in GIS during manufacturing, transportation, installation, and long-term operation [1,2]. If not detected promptly, these latent defects can escalate into severe faults that jeopardize grid security. Therefore, regular and effective monitoring of the internal condition of GIS, particularly the early identification and diagnosis of defects, is essential for preventive maintenance and power grid safety.

Multiple diagnostic methods are currently available for assessing the internal state of GIS, including Ultra-High Frequency (UHF) partial discharge (PD) detection, ultrasonic detection, and gas decomposition product detection [2]. While these methods are effective in detecting discharge-related insulation defects, their capability is limited for physical defects that do not produce significant discharge signals, such as minor structural deformations, non-discharging foreign objects, or incipient cracks. In contrast, X-ray digital imaging modalities, such as Computed Radiography (CR) and Digital Radiography (DR), serve as non-contact, non-destructive inspection tools that can penetrate the metallic GIS enclosure and directly visualize physical defects, regardless of whether they generate discharges [3]. This gives X-ray inspection unique advantages and substantial application potential in GIS defect diagnosis [2]. Nevertheless, automated defect recognition in X-ray images poses three major challenges. First, because critical defects (e.g., gas voids, micro-cracks, or metallic particles) are minute and morphologically diverse, they may span only a few pixels [4]. Second, the minimal difference in X-ray absorption between defects and the surrounding materials produces low contrast and blurred feature boundaries. Third, the intricate internal architecture of GIS introduces overlapping projections and complex background noise, which may occlude or mimic defect signatures. The interpretation of X-ray images currently relies heavily on manual expertise, which is inefficient, costly, and subjective [2]; this creates an urgent need for automated, intelligent detection technologies.

Traditional image-processing-based defect-detection algorithms, such as threshold segmentation, morphological filtering, and edge detection [5,6], are applicable in certain specific scenarios but are generally highly sensitive to image quality and parameter settings. Other recent approaches have also explored novel paradigms, such as training-free learning for GIS image analysis [7]. However, these methods can be inadequate for handling the full complexity and diversity of GIS X-ray images. In recent years, deep learning, particularly Convolutional Neural Networks (CNNs), has become the mainstream approach to object detection [4]. Single-stage detectors, notably the You Only Look Once (YOLO) series [4], have attracted significant attention for their effective balance between detection speed and accuracy. From the original YOLOv1 [8] and YOLOv3 [9], through YOLOv4 [10] and YOLOv5 [11], which incorporated CSPNet [12] and PANet [13], to YOLOv8 [14], YOLOv9 [15], YOLOv10 [16], and, most recently, YOLOv11 [17] and YOLOv12 [18], the YOLO framework has evolved continuously with steadily improving performance [4]. Previous research has applied YOLOv5 to the recognition of GIS phase-resolved partial discharge (PRPD) patterns [1], demonstrating the potential of the YOLO framework in this domain. However, directly applying general-purpose YOLO models to raw X-ray images for defect detection still runs into the challenges described above: standard loss functions are insensitive to minuscule targets [19]; fixed network architectures may not optimally extract low-contrast, detail-rich X-ray features; and standard multi-scale fusion mechanisms (e.g., FPN [20] and PANet [13]) may be suboptimal for GIS defects, which exhibit significant size variations [21]. Therefore, in-depth optimization of YOLO models tailored to the characteristics of GIS X-ray images is essential.

To address these issues, this paper adopts the lightweight, high-performance YOLOv10n as its baseline model. This choice reflects the technological context at the time the experimental design and model development were finalized, when YOLOv10n offered a favorable trade-off among accuracy, speed, and computational efficiency for our specialized task. Although YOLOv11 [17] and YOLOv12 [18] have since been released, integrating them into this study would require completely re-tuning the training pipeline, hyperparameters, and improvement modules, which lies beyond the scope of this work. Our focus here is to demonstrate the effectiveness of the proposed improvement strategies on a stable, well-established baseline; extending and evaluating these strategies on the latest YOLO architectures is an important direction for future research. Building on this baseline, we propose a series of synergistic improvements aimed at constructing an enhanced model better suited to defect detection in GIS X-ray images. The key enhancements are: adopting the Normalized Wasserstein Distance (NWD) loss function to strengthen the localization of small targets; introducing Monte Carlo Attention (MCAttn) [22] and Parallelized Patch-Aware Attention (PPA) [23] into the backbone network to enhance feature discriminability and robustness against complex backgrounds; and designing a novel neck structure inspired by the enhanced multi-scale interaction of the Generalized Feature Pyramid Network (GFPN) [21]. By integrating these modules, we aim to achieve accurate and efficient detection of various internal GIS defects, particularly for challenging samples.

The main contributions of this paper can be summarized as follows:

An improved object-detection model based on YOLOv10n is proposed, specifically tailored to the characteristics of GIS X-ray images.

The NWD loss function is applied to the task of GIS X-ray defect detection, and its effectiveness in enhancing the localization accuracy of minute defects is validated.

Two advanced attention mechanisms, MCAttn and PPA, are integrated into the backbone network of YOLOv10, exploring and demonstrating their potential in enhancing feature representation for X-ray images.

A neck network structure inspired by the GFPN concept is designed and implemented to improve the fusion of multi-scale defect features.

Comprehensive experimental validation is conducted on a GIS X-ray dataset collected from real-world industrial scenarios, with results indicating that the proposed model significantly outperforms the baseline and several other state-of-the-art models of comparable size.

The remainder of this paper is organized as follows:

Section 2 details the proposed model architecture and its key improved components.

Section 3 describes the dataset used for experiments, evaluation metrics, and specific implementation details. It also provides an in-depth analysis of the experimental results, including ablation studies and performance comparisons with existing advanced methods.

Section 4 summarizes the entire work and outlines future research directions.

2. Proposed Method

To address the challenges in detecting defects in GIS X-ray images, which are inherently characterized by small target sizes, low contrast, and complex backgrounds, this paper proposes an enhanced defect-detection method based on the YOLOv10n framework. To improve the model’s performance on this specific task, we have systematically enhanced the baseline model (YOLOv10n), focusing on three core aspects:

1. Loss Function: At the loss function level, the NWD loss is adopted to replace traditional bounding box losses based on Intersection over Union (IoU), to better address the localization issues of small targets.

2. Backbone Network: At the backbone network level, the MCAttn mechanism is incorporated into the core C2f feature extraction modules, replacing the original C2f modules at the 2nd, 4th, and 6th layers, and PPA is used to replace the C2f module at the 8th layer. This aims to enhance the network’s ability to extract and perceive critical defect features.

3. Neck Structure: At the neck network level, a new multi-scale feature fusion structure is designed, inspired by the principles of GFPN, to improve adaptability to defects of varying sizes.

The overall network architecture of the proposed method is illustrated in Figure 1. This architecture integrates all the aforementioned improvements. The subsequent subsections elaborate on the application of the NWD loss function (Section 2.1), the attention mechanism enhancements in the backbone network (Sections 2.2 and 2.3), and the GFPN-inspired neck network structure (Section 2.4).

2.1. NWD Loss

Common IoU-based bounding box regression losses, such as GIoU, DIoU, and CIoU, perform well in general object-detection tasks. However, they have clear limitations for the small defect targets commonly found in GIS X-ray images, such as those in this study. Specifically, the IoU of a small target is highly sensitive to positional offsets: a deviation of only a few pixels can cause a drastic drop in IoU, down to zero once the boxes no longer overlap. This instability complicates model training and hinders the precise regression of small-target bounding boxes.
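To make this sensitivity concrete, the following minimal Python sketch (standard library only) computes the IoU between a box and a copy of it shifted horizontally by 0, 2, 4, and 6 pixels, once for a 6 × 6 box and once for a 60 × 60 box. The box sizes and the (cx, cy, w, h) box format are illustrative assumptions, not values taken from the GIS dataset.

```python
def iou_xywh(a, b):
    """IoU of two axis-aligned boxes given as (cx, cy, w, h)."""
    ax1, ay1, ax2, ay2 = a[0] - a[2] / 2, a[1] - a[3] / 2, a[0] + a[2] / 2, a[1] + a[3] / 2
    bx1, by1, bx2, by2 = b[0] - b[2] / 2, b[1] - b[3] / 2, b[0] + b[2] / 2, b[1] + b[3] / 2
    inter = max(0.0, min(ax2, bx2) - max(ax1, bx1)) * max(0.0, min(ay2, by2) - max(ay1, by1))
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


# Shift a copy of the box right by dx pixels and report the IoU.
for size in (6, 60):  # tiny vs. larger box (illustrative sizes)
    gt = (100.0, 100.0, size, size)
    ious = [round(iou_xywh(gt, (100.0 + dx, 100.0, size, size)), 3) for dx in (0, 2, 4, 6)]
    print(f"{size}x{size}: {ious}")
# 6x6: [1.0, 0.5, 0.2, 0.0]
# 60x60: [1.0, 0.935, 0.875, 0.818]
```

The same 6-pixel offset that leaves the large box at an IoU above 0.8 drives the small box to zero overlap, at which point an IoU-based loss no longer indicates in which direction the prediction should move.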

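To overcome this challenge, this paper introduces NWD [19] as both a metric and a loss function for bounding boxes. The core idea of NWD is to model a bounding box $R = (c_x, c_y, w, h)$ (where $(c_x, c_y)$ are the center coordinates, and $w$ and $h$ are the width and height, respectively) and its internal pixel weight distribution as a two-dimensional Gaussian distribution $\mathcal{N}(\boldsymbol{\mu}, \boldsymbol{\Sigma})$. The mean is $\boldsymbol{\mu} = [c_x, c_y]^{\mathsf{T}}$, and the covariance matrix is $\boldsymbol{\Sigma} = \operatorname{diag}\!\left(w^2/4,\ h^2/4\right)$. Instead of directly calculating the overlapping area, NWD employs the Wasserstein distance (also known as the Earth Mover’s distance) to measure the similarity between the Gaussian distributions $\mathcal{N}_p$ and $\mathcal{N}_g$ corresponding to two bounding boxes (e.g., a predicted box $p$ and a ground-truth box $g$). The squared second-order Wasserstein distance between them, $W_2^2(\mathcal{N}_p, \mathcal{N}_g)$, can be efficiently computed as:

$$W_2^2(\mathcal{N}_p, \mathcal{N}_g) = \left\lVert \mathbf{c}_p - \mathbf{c}_g \right\rVert_2^2 + \left\lVert \left[\tfrac{w_p}{2}, \tfrac{h_p}{2}\right]^{\mathsf{T}} - \left[\tfrac{w_g}{2}, \tfrac{h_g}{2}\right]^{\mathsf{T}} \right\rVert_2^2 \tag{1}$$

where $\mathbf{c}_p$ and $\mathbf{c}_g$ are the center coordinate vectors of the predicted box and the ground-truth box, respectively; $(w_p, h_p)$ and $(w_g, h_g)$ are their corresponding widths and heights; and $\lVert \cdot \rVert_2^2$ denotes the squared Euclidean distance. As shown in Equation (1), this distance accounts for both the deviation between the center points and the differences in the bounding box dimensions (width and height).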

Compared to IoU-based losses, NWD offers significant advantages, particularly in small object detection. (1) Even if two bounding boxes do not overlap at all (where the IoU gradient would be zero), NWD can still provide smooth and informative gradients, thereby facilitating continuous model optimization. (2) NWD is insensitive to object scale and provides a more principled way to measure the similarity between small targets, exhibiting greater robustness to positional and size deviations of minute objects. These characteristics make NWD highly suitable for enhancing the localization accuracy of small defects in GIS X-ray images.

Based on the Wasserstein distance, the NWD similarity is defined as:

$$\mathrm{NWD}(\mathcal{N}_p, \mathcal{N}_g) = \exp\!\left(-\frac{\sqrt{W_2^2(\mathcal{N}_p, \mathcal{N}_g)}}{C}\right) \tag{2}$$

where $\mathcal{N}_p$ and $\mathcal{N}_g$ represent the Gaussian distributions corresponding to the predicted and ground-truth boxes, $W_2^2(\mathcal{N}_p, \mathcal{N}_g)$ is the squared Wasserstein distance from Equation (1), and $C$ is a constant used to scale the distance, typically set based on the statistics of the dataset (e.g., the average target size). The NWD similarity lies in $(0, 1]$, where a value closer to 1 indicates greater similarity between the two boxes. Finally, the NWD loss function $\mathcal{L}_{\mathrm{NWD}}$ (where $\mathcal{L}$ denotes loss) adopted in this paper is calculated as follows:

$$\mathcal{L}_{\mathrm{NWD}} = 1 - \mathrm{NWD}(\mathcal{N}_p, \mathcal{N}_g) \tag{3}$$

where $\mathcal{L}_{\mathrm{NWD}}$ is the final NWD loss, and $\mathrm{NWD}(\mathcal{N}_p, \mathcal{N}_g)$ is the similarity metric defined in Equation (2). The range of $\mathcal{L}_{\mathrm{NWD}}$ is $[0, 1)$; a higher NWD similarity results in a smaller loss value. In the proposed model, we replace the original bounding box regression loss branch of YOLOv10n with the $\mathcal{L}_{\mathrm{NWD}}$ loss (see Equation (3)), aiming to achieve superior detection performance for minute defects.
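Equations (1) to (3) can be sketched in a few lines of standard-library Python. Boxes are assumed to be given as (cx, cy, w, h) in pixels, and C = 12.8 is a hypothetical placeholder; the constant actually used for the GIS dataset is not stated in this section. A training implementation would operate on framework tensors so that gradients flow; this scalar version only illustrates the arithmetic.

```python
import math


def wasserstein2_sq(p, g):
    """Eq. (1): squared 2-Wasserstein distance between boxes p and g, each (cx, cy, w, h).

    With N(mu=[cx, cy], Sigma=diag(w^2/4, h^2/4)), it reduces to the squared
    Euclidean distance between the vectors [cx, cy, w/2, h/2].
    """
    center = (p[0] - g[0]) ** 2 + (p[1] - g[1]) ** 2
    shape = (p[2] / 2 - g[2] / 2) ** 2 + (p[3] / 2 - g[3] / 2) ** 2
    return center + shape


def nwd(p, g, C):
    """Eq. (2): NWD similarity in (0, 1]; C scales the distance."""
    return math.exp(-math.sqrt(wasserstein2_sq(p, g)) / C)


def nwd_loss(p, g, C):
    """Eq. (3): L_NWD = 1 - NWD, in [0, 1)."""
    return 1.0 - nwd(p, g, C)


C = 12.8  # hypothetical value for illustration; set from dataset statistics in practice
gt = (100.0, 100.0, 6.0, 6.0)
for dx in (0, 2, 4, 6, 8):
    pred = (100.0 + dx, 100.0, 6.0, 6.0)
    print(dx, round(nwd_loss(pred, gt, C), 3))
```

For these 6 × 6 boxes the IoU is already zero at shifts of 6 and 8 pixels, yet the NWD loss keeps increasing smoothly with the offset, which is the property that keeps the regression signal informative for tiny, non-overlapping predictions.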

2.2. C2f_MCAttn Module

To further enhance the model’s capability to extract subtle defect features from complex GIS X-ray backgrounds, this paper improves the core C2f modules (CSP bottleneck with two convolutions) within the YOLOv10n backbone network. Although standard C2f modules balance efficiency and performance, their feature extraction ability still has room for improvement on X-ray images with low signal-to-noise ratios and poor feature discriminability, where they may overlook critical defect details or be distracted by background textures.

For this purpose, we embed the MCAttn mechanism [22] within the C2f modules used at the P2, P3, and P4 feature levels of the backbone network (corresponding to the 2nd, 4th, and 6th layers in the overall architecture shown in Figure 1). This forms the C2f_MCAttn unit, whose structure is shown on the left side of Figure 2. Unlike the baseline C2f, the C2f_MCAttn unit applies the MCAttn module after the final 1 × 1 convolutional layer, performing dynamic, data-driven recalibration of the module’s high-level output features $\mathbf{X}$.

The core innovation of the MCAttn mechanism [22] lies in its use of randomization strategies and multi-scale information aggregation to generate attention weights that are more robust and less sensitive to scale variations. Its key operational principles can be broken down into the following steps, as illustrated in Figure 2:

Multi-Scale Contextual Feature Extraction: As depicted in the right panel of Figure 2, the process begins with multi-scale feature extraction. The input features $\mathbf{X}$ are passed through parallel adaptive average pooling operations with different output spatial resolutions (e.g., a preset set of output sizes $\mathcal{S}$). This step, represented by the “Pooling” block in the diagram, captures statistical summaries of the features, $\{\mathbf{P}_s\}_{s \in \mathcal{S}}$, under different receptive fields.

Monte Carlo Attention Sampling: During the model training phase, one or a combination of feature representations is randomly sampled from the aforementioned set of multi-scale feature summaries. This stochastic sampling enhances the model’s robustness and prevents overfitting to specific patterns in the training data.

Channel Attention Generation and Application: The sampled features $\tilde{\mathbf{P}}$ are then fed into a lightweight attention generation network. This network, represented by the subsequent series of operations in the diagram, is typically a Multi-Layer Perceptron (MLP) inspired by the Squeeze-and-Excitation (SE) [24] structure. It comprises two linear layers with non-linear activations that learn the importance of each channel, ultimately outputting a channel attention vector $\mathbf{A}$. This vector $\mathbf{A}$ is then applied to the original features $\mathbf{X}$ via element-wise multiplication (denoted by ⊗ in the final step of the diagram) to achieve adaptive channel recalibration:

$$\mathbf{Y} = \mathbf{X} \otimes \mathbf{A}$$

where $\mathbf{Y}$ is the final output feature map, $\mathbf{X}$ is the input feature map from the C2f module, $\mathbf{A}$ is the computed channel attention vector, and ⊗ denotes element-wise multiplication, with $\mathbf{A}$ broadcast over the spatial dimensions.
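The three MCAttn steps above can be illustrated with a small, dependency-free Python sketch that stores a feature map as nested lists (C × H × W). Several details are assumptions rather than the exact rule of [22]: the pool sizes (1, 2, 3); drawing one random pooling size and one random cell of the pooled summary as each channel’s descriptor during training; using global average pooling at inference; the reduction ratio r = 2; and bias-free linear layers. A practical implementation would use a deep-learning framework instead of Python loops.

```python
import math
import random


def adaptive_avg_pool2d(fmap, s):
    """Average-pool an H x W map (nested lists) to s x s with PyTorch-style bin edges."""
    H, W = len(fmap), len(fmap[0])
    out = []
    for i in range(s):
        r0, r1 = (i * H) // s, -((-(i + 1) * H) // s)  # floor(i*H/s), ceil((i+1)*H/s)
        row = []
        for j in range(s):
            c0, c1 = (j * W) // s, -((-(j + 1) * W) // s)
            cells = [fmap[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(cells) / len(cells))
        out.append(row)
    return out


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def channel_descriptor(X, pool_sizes, training, rng):
    """One value per channel (assumed sampling rule).

    Training: draw a random pooling size s and a random cell of the s x s summary.
    Inference: global average pooling (s = 1).
    """
    if training:
        s = rng.choice(pool_sizes)
        i, j = rng.randrange(s), rng.randrange(s)
    else:
        s, i, j = 1, 0, 0
    return [adaptive_avg_pool2d(ch, s)[i][j] for ch in X]


def se_mlp(d, W1, W2):
    """SE-style bottleneck without biases: Linear -> ReLU -> Linear -> Sigmoid."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, d))) for row in W1]
    return [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in W2]


def mc_attention(X, W1, W2, pool_sizes=(1, 2, 3), training=True, rng=None):
    """Y = X (x) A: scale every channel of X by its attention weight in (0, 1)."""
    if rng is None:
        rng = random.Random(0)
    A = se_mlp(channel_descriptor(X, pool_sizes, training, rng), W1, W2)
    Y = [[[a * v for v in row] for row in ch] for ch, a in zip(X, A)]
    return Y, A


# Toy example: C = 4 channels of 6 x 6, reduction ratio r = 2 (all values hypothetical).
rng = random.Random(42)
C, r, H, W = 4, 2, 6, 6
X = [[[rng.random() for _ in range(W)] for _ in range(H)] for _ in range(C)]
W1 = [[rng.uniform(-1, 1) for _ in range(C)] for _ in range(C // r)]  # C -> C/r
W2 = [[rng.uniform(-1, 1) for _ in range(C // r)] for _ in range(C)]  # C/r -> C
Y_train, A_train = mc_attention(X, W1, W2, training=True, rng=rng)
Y_eval, A_eval = mc_attention(X, W1, W2, training=False)
print([round(a, 3) for a in A_eval])
```

Because the training-time descriptor changes from step to step, the MLP cannot rely on one fixed pooling scale, which is one plausible way to obtain the robustness described above.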

Integrating MCAttn into the C2f modules aims to improve the quality of the network’s feature representations. We anticipate that this enhancement will: (1) strengthen the model’s ability to discriminate defect targets in GIS X-ray images that vary widely in size, morphology, and signal-to-noise ratio; (2) improve the model’s generalization and robustness to interference such as noise by introducing randomness during training; and (3) suppress task-irrelevant background features more effectively, enabling subsequent network layers to focus on information more pertinent to defect classification and localization.

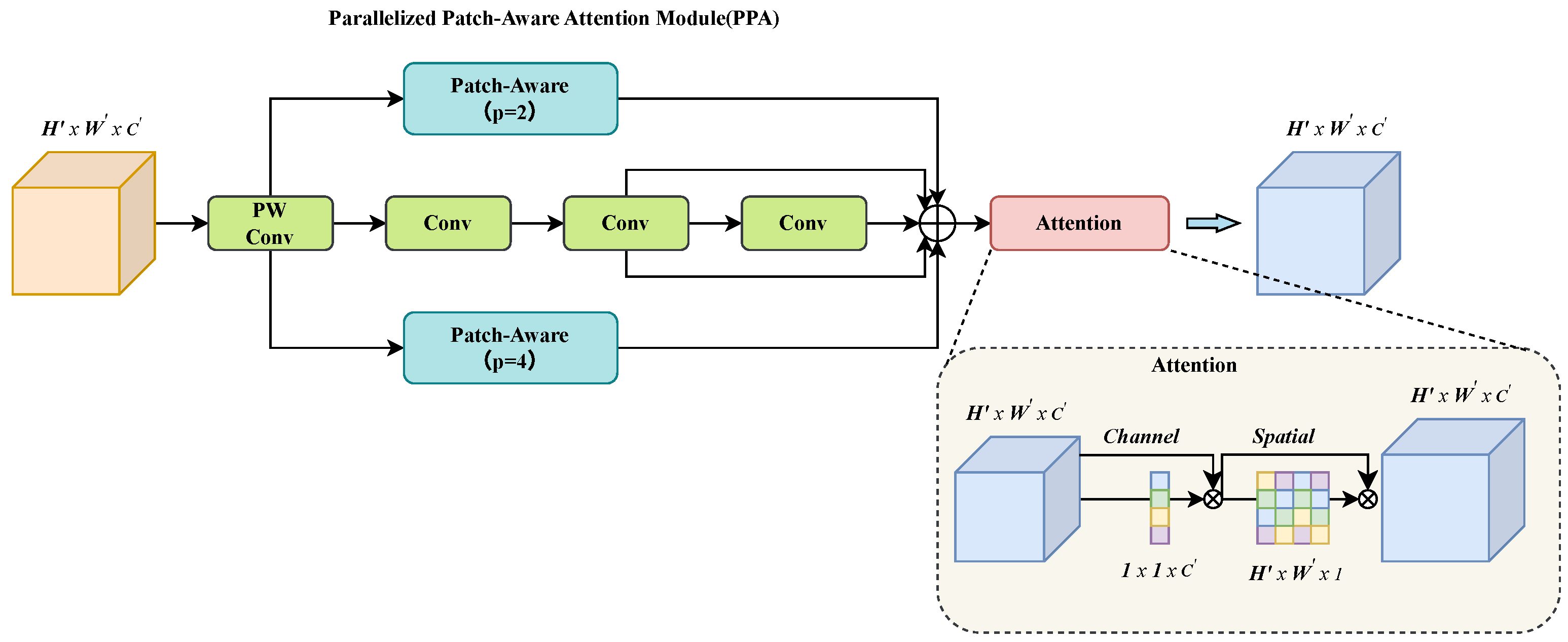

2.3. PPA Module

At deeper backbone stages (e.g., the P5 stage, Layer 8 in Figure 1), repeated downsampling causes feature maps to progressively lose the high-frequency spatial details essential for identifying minute defects, even as they gain semantic richness. Standard C2f modules often fail to balance the aggregation of this semantic context with the preservation of fine-grained features. To overcome this limitation, this paper replaces the C2f module at this stage with the Parallelized Patch-Aware Attention (PPA) module [23]. Originally designed for infrared small target detection, PPA uses a parallel multi-branch architecture to capture features at multiple scales, which are then refined by subsequent attention mechanisms.

The overall structural design of the PPA module is illustrated in Figure 3 and primarily consists of two core stages:

(1) Parallel Multi-Branch Feature Extraction: For an input feature $\mathbf{F}'$ (typically obtained after channel adjustment via an initial 1 × 1 convolution), PPA employs three parallel branches to capture information at different levels and scales:

Local Perception Branch (Patch-Aware, patch size $p_1$): This branch divides the input feature map into $p_1 \times p_1$ local patches and independently learns and interacts with the embedding representation of each patch (e.g., through a Feed-Forward Network (FFN) and task-correlation-based feature selection mechanisms [23,25]). It ultimately reconstructs a feature map $\mathbf{F}_{\text{local}}$ that focuses on local details.

Global Perception Branch (Patch-Aware, patch size $p_2$): Employing a similar mechanism but with larger patches ($p_2 > p_1$), this branch captures broader contextual dependencies and outputs a feature map $\mathbf{F}_{\text{global}}$. This patch-aware processing allows the model to perform adaptive feature aggregation across different spatial extents.

Serial Convolution Branch: This branch captures classic local features with strong translation invariance, complementing the patch-based processing of the other two branches. It consists of a stack of several standard convolutional layers, each typically followed by Batch Normalization (BN) and a ReLU activation function. This design extracts robust, low-level feature patterns such as edges and textures, which are then fused with the multi-scale contextual information from the parallel branches to form a more comprehensive feature representation, yielding $\mathbf{F}_{\text{conv}}$.

The selection of the patch sizes $p_1$ and $p_2$ is a deliberate design choice to establish a multi-scale perception mechanism. The smaller patches enable the model to focus on fine-grained local features, which is critical for identifying the subtle textures and edges of small defects. Conversely, the larger patches provide a broader receptive field, allowing the model to capture more extensive contextual information and better distinguish defects from the complex surrounding background structures. By processing these two scales in parallel, the PPA module can simultaneously perceive both detailed and contextual information, which is highly beneficial for the GIS defect-detection task. The outputs of the three branches are subsequently fused to obtain the aggregated feature $\tilde{\mathbf{F}}$.

(2) Attention-based Feature Enhancement: As illustrated in the latter stage of Figure 3, the aggregated feature $\tilde{\mathbf{F}}$ is fed into a cascaded attention module for further refinement. This two-stage process first determines “what” features are important and then identifies “where” they are located.

Channel Attention: First, the features pass through an Efficient Channel Attention (ECA-Net) [26] module. ECA-Net adaptively recalibrates the importance of each channel by learning local cross-channel interactions with a 1D convolution, without dimensionality reduction, thereby highlighting the feature channels most relevant to the defect-detection task. This yields a channel-refined feature map $\mathbf{F}_c = \mathbf{M}_c(\tilde{\mathbf{F}}) \otimes \tilde{\mathbf{F}}$, where $\mathbf{M}_c(\cdot)$ is the channel attention map.

Spatial Attention: Subsequently, the channel-refined feature map $\mathbf{F}_c$ is processed by a spatial attention mechanism similar to the one used in CBAM [27]. This module generates a 2D spatial attention map that emphasizes the most informative regions within the feature map, guiding the model to focus on the precise locations of potential defects while suppressing background noise. The final output is produced by $\mathbf{F}_{\text{out}} = \mathbf{M}_s(\mathbf{F}_c) \otimes \mathbf{F}_c$, where $\mathbf{M}_s(\cdot)$ is the spatial attention map.

Here, ⊗ denotes element-wise multiplication, with $\mathbf{M}_c$ broadcast over the spatial dimensions and $\mathbf{M}_s$ broadcast over the channel dimension.
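The cascaded channel-then-spatial refinement can be sketched in plain Python as follows. The channel part follows the ECA-Net recipe (global average pooling, a 1D convolution across channels whose odd kernel size is chosen adaptively with γ = 2 and b = 1, then a sigmoid), and the spatial part follows the CBAM recipe (channel-wise mean and max maps, a 7 × 7 convolution, then a sigmoid). Whether PPA uses exactly these kernel sizes is an assumption; the random weights and tensor sizes are placeholders, and biases are omitted.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def eca_kernel_size(C, gamma=2, b=1):
    """ECA-Net rule: k = |(log2(C) + b) / gamma|, rounded to an odd integer."""
    t = int(abs((math.log2(C) + b) / gamma))
    return t if t % 2 else t + 1


def eca(F, w1d):
    """Channel attention: global average pool -> 1D conv over channels -> sigmoid."""
    z = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in F]
    C, k = len(z), len(w1d)
    pad = k // 2
    Mc = [sigmoid(sum(w1d[i] * z[c + i - pad] for i in range(k) if 0 <= c + i - pad < C))
          for c in range(C)]
    return [[[m * v for v in row] for row in ch] for ch, m in zip(F, Mc)], Mc


def spatial_attention(F, w_avg, w_max):
    """CBAM-style: [mean, max] over channels -> k x k conv (zero padding) -> sigmoid."""
    C, H, W = len(F), len(F[0]), len(F[0][0])
    avg = [[sum(F[c][r][q] for c in range(C)) / C for q in range(W)] for r in range(H)]
    mx = [[max(F[c][r][q] for c in range(C)) for q in range(W)] for r in range(H)]
    k = len(w_avg)
    pad = k // 2
    Ms = [[0.0] * W for _ in range(H)]
    for r in range(H):
        for q in range(W):
            acc = 0.0
            for i in range(k):
                for j in range(k):
                    rr, qq = r + i - pad, q + j - pad
                    if 0 <= rr < H and 0 <= qq < W:
                        acc += w_avg[i][j] * avg[rr][qq] + w_max[i][j] * mx[rr][qq]
            Ms[r][q] = sigmoid(acc)
    F_out = [[[Ms[r][q] * ch[r][q] for q in range(W)] for r in range(H)] for ch in F]
    return F_out, Ms


# Toy aggregated feature: C = 8 channels of 5 x 5 with random placeholder weights.
rng = random.Random(0)
C, H, W = 8, 5, 5
F_agg = [[[rng.random() for _ in range(W)] for _ in range(H)] for _ in range(C)]
w1d = [rng.uniform(-1, 1) for _ in range(eca_kernel_size(C))]  # k = 3 for C = 8
w_avg = [[rng.uniform(-0.1, 0.1) for _ in range(7)] for _ in range(7)]  # 7 x 7 as in CBAM
w_max = [[rng.uniform(-0.1, 0.1) for _ in range(7)] for _ in range(7)]
F_c, Mc = eca(F_agg, w1d)                          # "what": channel refinement
F_out, Ms = spatial_attention(F_c, w_avg, w_max)  # "where": spatial refinement
```

Applying the channel step first means the spatial map is computed from features whose irrelevant channels have already been damped, which is the ordering the text describes.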

In the context of the GIS X-ray defect-detection task in this paper, replacing the C2f module in the deeper layers of the backbone with the PPA module offers several key advantages. (1) The multi-branch parallel processing can effectively capture both local detail features of defects (such as edges and textures) and their global background structural information simultaneously. (2) The Patch-Aware mechanism provides the model with the ability to learn and interact with features at different spatial granularities, which is beneficial for handling defects of varying sizes. (3) The subsequent dual channel and spatial attention mechanisms can further refine features, amplifying defect-related signals while suppressing irrelevant information common in X-ray images, such as noise or artifacts. Therefore, the introduction of PPA is expected to equip the backbone network with stronger and more robust feature extraction capabilities, thereby improving performance in subsequent detection stages.
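The two patch-aware branches of PPA begin by splitting the feature map into non-overlapping patches of a smaller (local) and a larger (global) size. The sketch below shows only this partitioning step plus a crude per-patch mean summary; the FFN and feature-selection operations that follow in [23] are not reproduced. The 20 × 20 map assumes a 640 × 640 input at stride 32, and the patch sizes 2 and 4 are illustrative values, not values confirmed by this paper.

```python
def split_into_patches(fmap, p):
    """Split an H x W map (nested lists) into non-overlapping p x p patches, row-major."""
    H, W = len(fmap), len(fmap[0])
    if H % p or W % p:
        raise ValueError("H and W must be divisible by the patch size p")
    return [[row[c0:c0 + p] for row in fmap[r0:r0 + p]]
            for r0 in range(0, H, p) for c0 in range(0, W, p)]


def patch_means(fmap, p):
    """Crude per-patch summary (mean value); stands in for a learned patch embedding."""
    return [sum(map(sum, patch)) / (p * p) for patch in split_into_patches(fmap, p)]


# 20 x 20 map (assumes a 640 x 640 input at stride 32); patch sizes are illustrative.
fmap = [[float(r * 20 + c) for c in range(20)] for r in range(20)]
for p in (2, 4):  # smaller "local" vs. larger "global" patches
    print(p, len(split_into_patches(fmap, p)), patch_means(fmap, p)[0])
# 2 100 10.5
# 4 25 31.5
```

Doubling the patch size quarters the number of patches, so the global branch reasons over fewer, larger regions while the local branch keeps a finer grid.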

2.4. Enhanced Feature Pyramid Neck

In object detection, effectively fusing features from different backbone levels is crucial for identifying targets across various scales. Over the years, several feature pyramid network architectures have been proposed to address this challenge; their evolution is illustrated in Figure 4. The classic Feature Pyramid Network (FPN) [20] (Figure 4a) established a top-down pathway to merge high-level semantic features with low-level spatial details. Subsequently, the Path Aggregation Network (PANet) [13] (Figure 4b) introduced an additional bottom-up pathway, creating a more effective bidirectional information flow. More advanced structures, such as BiFPN [28] (Figure 4c) and GFPN [21] (Figure 4d), further optimized the fusion process.

Our proposed neck network draws on the strengths of these established architectures. In terms of path topology, we adopt the proven bidirectional architecture of PANet (Figure 4b) to ensure robust two-way fusion of semantic and spatial information. However, our core design philosophy is inspired by the “heavy-neck” paradigm emphasized in GFPN (Figure 4d). The central idea of GFPN is that investing more computational resources and complexity in the neck network’s fusion nodes yields more powerful and discriminative multi-scale feature representations.

In line with this philosophy, instead of using simple convolutional layers for fusion, our neck employs more powerful CSPStage modules within both the top-down and bottom-up pathways (as detailed in Figure 1). Using these complex feature processing units at every fusion stage is our key implementation of the “heavy-neck” concept. The specific pathways are as follows:

Top-down Pathway: Deep, high-level semantic features are progressively upsampled and fused with shallower features from the backbone. Each fused feature map is then processed by a CSPStage module to refine the representation.

Bottom-up Pathway: Subsequently, the refined, semantically rich feature maps from the top-down path are progressively downsampled and fused with features from higher levels. These are also processed by CSPStage modules to enrich deeper feature maps with precise localization cues.

By combining a PANet-like bidirectional path structure with the GFPN-inspired “heavy-neck” design, our enhanced neck network achieves a more thorough and effective fusion of multi-scale features, significantly improving the model’s performance on detecting GIS defects.
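The fusion order of the PANet-style top-down and bottom-up pathways can be traced with a short Python sketch. It assumes a 640 × 640 input, three detection levels (P3 to P5 at strides 8, 16, and 32, as in YOLOv10n), and concatenation as the fusion operation; channel widths and the internals of CSPStage are omitted, so the sketch shows only where each resize, concatenation, and CSPStage refinement occurs.

```python
def trace_neck(img_size=640):
    """Trace feature-map sizes through a PANet-style neck (P3-P5, strides 8/16/32).

    Every fusion node = resize -> concatenate -> CSPStage (the "heavy" part).
    Only spatial sizes are tracked; channel widths are omitted.
    """
    backbone = {"P3": img_size // 8, "P4": img_size // 16, "P5": img_size // 32}
    steps = []

    # Top-down: upsample the deeper map x2 and fuse it with the lateral backbone map.
    td = {"P5": backbone["P5"]}
    for deep, shallow in (("P5", "P4"), ("P4", "P3")):
        up = td[deep] * 2
        if up != backbone[shallow]:
            raise ValueError(f"size mismatch at {shallow}")
        td[shallow] = up
        steps.append(f"top-down  {deep}->{shallow}: upsample to {up}, concat, CSPStage")

    # Bottom-up: downsample x2 (stride-2 conv) and fuse with the top-down map.
    out = {"P3": td["P3"]}
    for shallow, deep in (("P3", "P4"), ("P4", "P5")):
        down = out[shallow] // 2
        if down != td[deep]:
            raise ValueError(f"size mismatch at {deep}")
        out[deep] = down
        steps.append(f"bottom-up {shallow}->{deep}: downsample to {down}, concat, CSPStage")
    return out, steps


outputs, steps = trace_neck()
print("\n".join(steps))
print(outputs)  # {'P3': 80, 'P4': 40, 'P5': 20}
```

The trace makes the “heavy-neck” cost visible: under these assumptions, four fusion nodes each carry a CSPStage instead of a single convolution.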

3. Experiments and Results

3.1. Dataset

All experiments in this paper were conducted on a custom-built X-ray image dataset for internal defects in Gas-Insulated Switchgear (GIS) equipment, provided by the State Grid Ningxia Electric Power Co., Ltd. (Yinchuan, Ningxia, China). This dataset, reflecting real-world industrial application scenarios, comprises a total of 718 X-ray images covering various equipment models and imaging conditions.

To support supervised learning, all images were annotated by professional engineers using bounding boxes. This study focuses on five representative types of internal GIS defects. A detailed description of their visual characteristics, along with the distribution of annotated instances for each category, is presented in Table 1. The dataset exhibits a natural class imbalance, which is typical of real-world industrial data where some defect types occur more frequently than others.

The collected 718 annotated images were randomly partitioned into a Training Set, a Validation Set, and a Test Set according to an 8:1:1 ratio. Example images illustrating each of these defect types are shown in Figure 5.
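Because 718 is not divisible by 10, an 8:1:1 split needs a rounding rule. The sketch below assumes one such rule (10% each, rounded down, for validation and test, with the remainder for training) and a fixed random seed; the exact per-split counts used in the paper are not given in this section.

```python
import random


def split_8_1_1(items, seed=0):
    """Randomly partition items into train/val/test at roughly 8:1:1.

    Rounding rule (an assumption): val and test each get floor(10%),
    and training receives the remainder.
    """
    items = list(items)
    random.Random(seed).shuffle(items)
    n = len(items)
    n_val = n_test = n // 10
    n_train = n - n_val - n_test
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


train, val, test = split_8_1_1(range(718))  # image indices 0..717
print(len(train), len(val), len(test))  # 576 71 71 under this rounding rule
```

Fixing the seed makes the partition reproducible, so every model in the comparison is trained and evaluated on exactly the same images.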

3.2. Experimental Environment

All models in this paper were trained under the PyTorch 2.3.0 framework. Stochastic Gradient Descent (SGD) was employed as the optimizer, with an initial learning rate set to

and a batch size of 32. All models were uniformly trained for 300 epochs. For other training hyperparameters, the default configuration of YOLOv10n was adopted. The specific hardware and software environment configurations for the experiments are detailed in Table 2.

Recognizing that overfitting is a primary challenge when training on specialized datasets of limited size, we implemented a multi-layered strategy to ensure the model’s generalization ability. This strategy consisted of three key components:

Transfer Learning: All models were initialized with weights pre-trained on the large-scale COCO dataset. This provides the network with a robust foundation of general visual features, preventing it from having to learn these from scratch and significantly reducing the risk of fitting to noise in our specific dataset.

Extensive Data Augmentation: We utilized a rich set of online data augmentation techniques, including mosaic augmentation, random affine transformations (rotation, scaling, translation), and color space adjustments (HSV). This effectively creates a larger and more diverse “virtual” dataset, forcing the model to learn invariant and robust features rather than memorizing the training examples.

Regularization: A standard weight decay of was applied during optimization. This technique penalizes large weights in the network, thereby constraining model complexity and discouraging it from learning overly complex patterns that are specific only to the training data.

The effectiveness of this strategy is supported by the strong performance of our model on the unseen, held-out test set. A model that had substantially overfit would be expected to perform poorly on such data; the high accuracy reported in our results therefore indicates that overfitting was effectively mitigated, at least for images drawn from the same data source.

The inference speed, measured in Frames Per Second (FPS), was evaluated on the same NVIDIA GeForce RTX 4090 GPU with a batch size of 1 to reflect real-world deployment performance.
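Batch-size-1 FPS is usually measured as the number of timed frames divided by the wall-clock time, after a short warm-up. A minimal, framework-agnostic sketch follows; the warm-up length is an assumption, and whether the reported FPS includes pre- and post-processing is not specified here. When timing a CUDA model in PyTorch, passing `torch.cuda.synchronize` as `sync` makes the measurement include asynchronously queued GPU work.

```python
import time


def measure_fps(infer, inputs, warmup=10, sync=None):
    """Frames per second at batch size 1: timed frames / wall-clock seconds.

    infer: callable that processes one input; sync: optional callable that blocks
    until queued device work has finished (e.g. torch.cuda.synchronize).
    """
    if len(inputs) <= warmup:
        raise ValueError("need more inputs than warm-up iterations")
    for x in inputs[:warmup]:  # warm-up: lazy initialization, caches, etc.
        infer(x)
    if sync is not None:
        sync()
    start = time.perf_counter()
    for x in inputs[warmup:]:
        infer(x)
    if sync is not None:
        sync()
    return (len(inputs) - warmup) / (time.perf_counter() - start)


# Stand-in "model" for illustration only; a real run would call the detector on one image.
fps = measure_fps(lambda x: sum(i * i for i in range(2000)), list(range(110)))
print(f"{fps:.1f} FPS")
```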

3.3. Experimental Metrics

To comprehensively and quantitatively evaluate the performance of the proposed model and the comparative methods on the GIS X-ray defect-detection task, we adopted standard evaluation metrics from the object-detection field. These metrics are based on the following fundamental statistics: True Positives (TP), False Positives (FP), and False Negatives (FN); True Negatives (TN) are rarely used in object detection because the number of possible background regions is effectively unbounded. In this task:

TP: Correctly detected defects (the IoU between the predicted bounding box and the ground-truth bounding box exceeds a set threshold $\tau$, and the predicted class matches the true class).

FP: Incorrect detections (the IoU between the predicted box and every ground-truth box is below $\tau$, the predicted class is incorrect, or background is misidentified as a defect).

FN: Missed defects (a ground-truth defect box exists, but no predicted box of the correct class matches it with an IoU above $\tau$).

IoU is a key metric for measuring the degree of overlap between a predicted bounding box $B_p$ and a ground-truth bounding box $B_g$. It is calculated as:

$$\mathrm{IoU} = \frac{|B_p \cap B_g|}{|B_p \cup B_g|}$$

The value range of IoU is $[0, 1]$, where a higher value indicates better overlap between the predicted and ground-truth boxes.
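The TP/FP/FN definitions above can be made operational with a greedy per-image matching sketch: predictions are visited in descending confidence, and each is matched to the unmatched ground-truth box of the same class with the highest IoU above the threshold (`tau`, 0.5 by default here). Counting a second detection of an already-matched ground truth as a false positive follows the usual convention and is an assumption here; the class names and boxes in the example are hypothetical.

```python
def iou(a, b):
    """IoU of boxes (x1, y1, x2, y2): |A ∩ B| / |A ∪ B|, in [0, 1]."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_tp_fp_fn(preds, gts, tau=0.5):
    """Greedy matching for one image.

    preds: list of (score, cls, box); gts: list of (cls, box).
    Each ground truth can be matched at most once; a match needs IoU > tau.
    """
    matched = set()
    tp = fp = 0
    for score, cls, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best, best_iou = None, tau
        for k, (g_cls, g_box) in enumerate(gts):
            if k in matched or g_cls != cls:
                continue
            v = iou(box, g_box)
            if v > best_iou:
                best, best_iou = k, v
        if best is None:
            fp += 1  # no overlap, wrong class, or duplicate of a matched defect
        else:
            matched.add(best)
            tp += 1
    return tp, fp, len(gts) - len(matched)


gts = [("crack", (10, 10, 30, 14)), ("particle", (50, 50, 56, 56))]
preds = [(0.9, "crack", (11, 10, 31, 14)),      # overlaps the crack well
         (0.8, "crack", (10, 10, 30, 14)),      # duplicate of the same crack
         (0.6, "particle", (70, 70, 76, 76))]   # misses the particle
print(count_tp_fp_fn(preds, gts))  # (1, 2, 1)
```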

Based on the above fundamental definitions, we selected the following core metrics for model evaluation:

Through a comprehensive analysis of these metrics, we can thoroughly assess the model’s accuracy, recall capability, and localization precision in the GIS defect-detection task.
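The mAP values reported in the ablation and comparison tables aggregate per-class average precision (AP). The sketch below assumes all-point interpolated AP (the area under the monotone precision envelope) and the common definition of mAP@0.5:0.95 as the mean AP over the ten IoU thresholds 0.50, 0.55, ..., 0.95. YOLO tooling may instead use a 101-point interpolation, so its values can differ slightly from this sketch; the AP table in the example is hypothetical.

```python
def average_precision(tp_flags, n_gt):
    """All-point interpolated AP for one class at one IoU threshold.

    tp_flags: 1/0 per detection, already sorted by descending confidence.
    n_gt: number of ground-truth boxes of this class.
    """
    if n_gt == 0:
        return 0.0
    tp = fp = 0
    recalls, precisions = [0.0], [1.0]
    for flag in tp_flags:
        tp += flag
        fp += 1 - flag
        recalls.append(tp / n_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    return sum((recalls[i] - recalls[i - 1]) * precisions[i] for i in range(1, len(recalls)))


IOU_THRESHOLDS = [0.5 + 0.05 * i for i in range(10)]  # 0.50, 0.55, ..., 0.95


def summarize(ap_table):
    """ap_table[c][t] = AP of class c at IOU_THRESHOLDS[t] -> (mAP@0.5, mAP@0.5:0.95)."""
    n = len(ap_table)
    map50 = sum(row[0] for row in ap_table) / n
    map5095 = sum(sum(row) / len(row) for row in ap_table) / n
    return map50, map5095


print(average_precision([1, 0, 1, 1, 0], n_gt=4))  # 0.625
print(summarize([[0.9] * 10, [0.7] * 10]))  # hypothetical APs for two classes
```

Because mAP@0.5:0.95 also rewards tight boxes at high IoU thresholds, it is the metric most directly affected by localization improvements such as the NWD loss.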

3.4. Ablation Experiments and Results

To systematically validate our design choices and quantify the contributions of the proposed components, we conducted two sets of ablation studies on the custom-built GIS-Xray dataset.

First, we performed a progressive ablation study starting from the lightweight YOLOv10n baseline, incrementally introducing and combining our four key enhancements: (1) the NWD loss function; (2) the MCAttn mechanism; (3) the PPA module; and (4) the GFPN-inspired neck. All experiments were conducted under identical training configurations. The detailed results are summarized in Table 3.

The experimental results in Table 3 demonstrate the positive impact of each improved component on model performance. First, comparing Experiment 2 with Experiment 1 (BASE), the standalone introduction of the NWD loss function, without increasing the parameter count or computational load, improved mAP@0.5 by

and mAP@0.5:0.95 by

. This supports the advantage of the NWD loss function in localizing minute defect targets. Second, regarding the backbone enhancements, the C2f_MCAttn module (Experiment 3) and the PPA module (Experiment 4) both improved performance, with PPA yielding slightly higher gains at the cost of more parameters. Next, the GFPN-inspired neck network (Experiment 5) increased mAP@0.5:0.95 by

, indicating the effectiveness of the improved fusion strategy. Finally, as the components were progressively combined (Experiments 6, 7, and 8), the model’s performance improved steadily, with our final model achieving a

improvement in mAP@0.5:0.95 over the baseline.

Furthermore, to compare our chosen modules with other mainstream alternatives, we conducted a second set of comparative experiments, with the results presented in Table 4. Our C2f_MCAttn module achieves a higher mAP@0.5:0.95 (0.653) than both SE (0.631) and CBAM (0.635). Similarly, our GFPN-inspired neck (0.661) outperforms the popular BiFPN structure (0.654). These comparisons support our specific design choices.

In summary, both sets of ablation experiments show that the NWD loss function, the MCAttn- and PPA-based backbone enhancements, and the GFPN-inspired neck each contribute to the final performance. The components are effective in combination, and the attention and neck designs outperform the common alternatives tested in Table 4 for the GIS X-ray defect-detection task. These gains are accompanied by a moderate increase in model complexity, reflecting a trade-off between accuracy and efficiency in practical applications.

3.5. Model Comparison and Visualization Analysis

To comprehensively evaluate the proposed model, we conducted both an in-depth per-category analysis against the baseline and a broad performance comparison against other mainstream lightweight models.

First, to examine the specific contributions of our improvements in more detail, we analyzed the per-category performance gains of our final model over the baseline, as detailed in Table 5. Our model improves consistently across all defect categories. Most notably, AP for the “Crack” class increases by 9.7 percentage points. This finding is particularly important because cracks are typically slender, elongated, and low in contrast, making them one of the most challenging defect types to detect. This substantial improvement suggests that our key enhancements are effective in addressing the core difficulties of this detection task: the NWD loss function, aimed at small objects, and the attention mechanisms (MCAttn and PPA), which strengthen feature extraction.
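To show how the per-class AP in Table 5 relates to the mAP@0.5 and mAP@0.5:0.95 figures reported elsewhere, the sketch below first computes all-point interpolated AP from one class's precision-recall points. It then averages over classes and, for mAP@0.5:0.95, over the ten IoU thresholds 0.50:0.05:0.95. This is a generic illustration under these assumptions. The evaluator used for the GIS-Xray experiments is not specified in this section, and common evaluators differ in their interpolation (for example, the COCO evaluator samples precision at 101 recall points). The function names are hypothetical.

```python
from typing import Dict, List, Sequence

# COCO-style IoU thresholds 0.50, 0.55, ..., 0.95 used for mAP@0.5:0.95
IOU_THRESHOLDS: List[float] = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def average_precision(recall: Sequence[float], precision: Sequence[float]) -> float:
    """All-point interpolated AP for one class at one IoU threshold.

    `recall`/`precision` are cumulative values obtained by ranking that class's
    detections by confidence (recall ascending).
    """
    r = [0.0] + list(recall) + [1.0]
    p = [0.0] + list(precision) + [0.0]
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Area under the step-shaped envelope (zero-width steps contribute nothing).
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_ap(ap_table: Dict[str, Dict[float, float]], thresholds: Sequence[float]) -> float:
    """Average AP over classes; each class AP is first averaged over `thresholds`."""
    per_class = [sum(aps[t] for t in thresholds) / len(thresholds) for aps in ap_table.values()]
    return sum(per_class) / len(per_class)


if __name__ == "__main__":
    # Hypothetical precision-recall points for one class (not data from this paper)
    print(average_precision([0.5, 1.0], [1.0, 0.5]))  # 0.75
```

Calling `mean_ap(table, [0.5])` gives mAP@0.5, and `mean_ap(table, IOU_THRESHOLDS)` gives mAP@0.5:0.95. The second metric penalizes imprecise localization at the stricter thresholds. This is why a localization-oriented change such as the NWD loss is expected to affect it most.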

Next, to further validate the effectiveness of our complete model, we conducted a comprehensive performance comparison on the GIS-Xray test set against several other mainstream lightweight real-time object-detection models: YOLOv3-tiny [9], YOLOv5n [11], YOLOv8n [14], and YOLOv9s [15]. The main quantitative results are summarized in Table 6.

The comparison results in Table 6 show that the proposed model surpasses the baseline and the other compared models in accuracy. Specifically, compared with the direct baseline YOLOv10n, our model improved both mAP@0.5 and mAP@0.5:0.95 (Table 6). This accuracy gain came with a moderate increase in model complexity (from 8.2 to 10.2 GFLOPs) and a corresponding reduction in inference speed (from 103 to 85 FPS). This represents a favorable trade-off between accuracy and real-time performance, as our model retains an inference speed well suited to practical applications.
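Simple arithmetic on the GFLOPs and FPS figures quoted above makes the cost side of this trade-off concrete. The `tradeoff` helper below is a hypothetical convenience function, not part of the authors' tooling. It converts the figures into relative changes and into mean per-image latency, assuming that FPS denotes single-image throughput.

```python
def tradeoff(gflops_base: float, gflops_new: float, fps_base: float, fps_new: float) -> dict:
    """Summarize the cost side of an accuracy gain from GFLOPs and FPS figures."""
    return {
        "gflops_increase_pct": (gflops_new - gflops_base) / gflops_base * 100,
        "fps_drop_pct": (fps_base - fps_new) / fps_base * 100,
        "latency_base_ms": 1000.0 / fps_base,  # mean time per image at the reported FPS
        "latency_new_ms": 1000.0 / fps_new,
    }


if __name__ == "__main__":
    # Figures quoted in the text: YOLOv10n baseline vs. proposed model
    t = tradeoff(8.2, 10.2, 103, 85)
    print(f"GFLOPs +{t['gflops_increase_pct']:.1f}%, FPS -{t['fps_drop_pct']:.1f}%, "
          f"latency {t['latency_base_ms']:.2f} ms -> {t['latency_new_ms']:.2f} ms")
```

Under that assumption, the proposed model requires roughly 24% more computation and adds about 2 ms of latency per image (about 9.7 ms versus 11.8 ms). The hardware, batch size, and whether pre- and post-processing are included in the FPS measurement are not stated in this section.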

The advantages of our method are more pronounced when compared with other representative models. For instance, while YOLOv8n and YOLOv10n offer higher speeds, our model provides a substantial lead in accuracy (e.g., +7.3% mAP@0.5:0.95 over YOLOv8n), making it a more compelling choice for high-precision applications. Compared with bulkier models such as YOLOv3-tiny and YOLOv9s, our model is not only significantly more accurate but also more than twice as fast, further highlighting the efficiency of our design. Overall, for the GIS X-ray defect-detection task studied here, our model achieves the best balance between detection accuracy and inference speed among the compared models.

Beyond the quantitative metrics evaluated above, we also compared the models' behavior in realistic detection scenarios more intuitively. To do so, we selected typical GIS X-ray image samples covering all five defect categories and visually analyzed their detection results.

Figure 6 shows a comparison of the specific detection outputs of our proposed model (Ours) against the baseline YOLOv10n and four other advanced lightweight detectors (YOLOv3-tiny, YOLOv5n, YOLOv8n, YOLOv9s) on these samples.

Careful comparison of the detection results in Figure 6 reveals the advantages of our proposed model in handling a range of complex situations:

The minute bubble defect in Figure 6a has faint features, making detection extremely difficult. Interestingly, YOLOv3-tiny, with its larger parameter count, detects this defect relatively well, whereas newer models such as YOLOv5n and YOLOv8n miss it. This suggests that YOLOv3-tiny handles certain small defects adequately. In contrast, our proposed model (Ours) not only detects this minute bubble reliably but also does so with high prediction confidence (as indicated by the confidence score on its bounding box). This example visually indicates that our model handles such challenging minute, low-contrast defects better than most of the other lightweight models compared.

The model’s advantage on challenging defects is particularly evident for the concealed hairline crack in Figure 6b. Because its features are extremely subtle, most competing models failed to detect it. Notably, apart from our proposed model, only the considerably larger YOLOv3-tiny (10.3 M parameters) and YOLOv9s (7.3 M parameters) identified this defect. This result highlights the favorable accuracy-efficiency trade-off of our architecture, showing that it can match considerably larger models on such critical, fine-grained defects while remaining lightweight.

Most models successfully detected the “foreign body (metal fitting)” targets in Figure 6c. However, misdetections remain a concern; for instance, YOLOv8n incorrectly identified background as a “bubble” in this sample. Similarly, for the “foreign body (metal suspension)” sample (Figure 6d), other models also showed possible misdetections. It should be acknowledged that, although our model generally performs stably, it may occasionally produce false positives in certain situations (e.g., Figure 6d), indicating room for further improvement in suppressing misdetections.
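One common lever for residual false positives such as these is the confidence threshold applied at inference. The sketch below uses hypothetical detections, already matched to ground truth, to show how raising the threshold removes false positives at the cost of recall. The threshold used to render Figure 6 is not stated in this section, and `filter_stats` is an illustrative name.

```python
from typing import List, Tuple


def filter_stats(detections: List[Tuple[float, bool]], num_gt: int,
                 conf_thr: float) -> Tuple[float, float, int]:
    """Precision, recall, and false-positive count after confidence filtering.

    `detections` are (confidence, is_true_positive) pairs already matched to ground
    truth (e.g., at IoU >= 0.5); `num_gt` is the number of ground-truth defects.
    """
    kept = [is_tp for score, is_tp in detections if score >= conf_thr]
    tp = sum(kept)
    fp = len(kept) - tp
    # With no kept detections there are no false positives; precision is 1.0 by convention here.
    precision = tp / len(kept) if kept else 1.0
    recall = tp / num_gt if num_gt > 0 else 0.0
    return precision, recall, fp


if __name__ == "__main__":
    # Hypothetical detections on one image with 4 ground-truth defects (not data from this paper)
    dets = [(0.92, True), (0.85, True), (0.60, False), (0.55, True), (0.30, False), (0.25, False)]
    for thr in (0.2, 0.5, 0.7):
        p, r, fp = filter_stats(dets, num_gt=4, conf_thr=thr)
        print(f"conf >= {thr}: precision={p:.2f} recall={r:.2f} false positives={fp}")
```

In this toy case, raising the threshold from 0.2 to 0.7 eliminates all three false positives but lowers recall from 0.75 to 0.50. Threshold tuning alone is therefore unlikely to suppress misdetections without also losing genuine defects.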

Finally, for the “foreign body (tool)” defect in Figure 6e, which is larger and has more distinct features, all compared models performed well, accurately detecting and localizing it, as expected.

Overall, these examples indicate that our model copes more effectively with the varied and complex conditions found in GIS X-ray images. This results from the combined optimization of the loss function, the backbone attention mechanisms, and the neck feature-fusion structure. Its advantages are most evident for minute, low-contrast, and slender defects, supporting the effectiveness of the proposed method.

4. Conclusions

This research addresses the critical challenge of detecting micro-defects in GIS X-ray images. Such defects are characterized by submillimeter scales and low contrast, and they appear against cluttered backgrounds. The aim was to develop an automated detection method with higher precision and robustness.

To this end, we proposed an improved model based on the YOLOv10n framework. It enhances detection performance through several complementary optimizations: (1) adopting the NWD loss function improved the localization of small targets; (2) embedding MCAttn into the C2f modules of the backbone and using PPA to replace deeper modules enhanced the extraction and discrimination of key features; and (3) a new neck network built on the design principles of GFPN improved the efficiency and effectiveness of multi-scale feature fusion.

A series of experiments on a real-world GIS X-ray image dataset validated the effectiveness of our method. These included detailed ablation studies and comparative analyses with several advanced lightweight detectors (YOLOv3-tiny, YOLOv5n, YOLOv8n, and YOLOv9s). The results showed that the complete proposed model outperformed the baseline YOLOv10n and the other compared models on the accuracy metrics. In particular, it achieved the highest mAP@0.5:0.95 among the compared models, with a clear improvement over YOLOv10n. Qualitative analysis also illustrated the model’s advantages on challenging samples, especially minute and slender defects.

The proposed improvements and experimental results demonstrate that the performance of object-detection models in specific industrial X-ray imaging scenarios can be effectively boosted by three measures: targeted optimization of the loss function, the introduction of advanced attention mechanisms, and enhancement of the feature-fusion structure. This work provides a useful reference for advancing intelligent GIS condition assessment. It also has potential applications in improving the efficiency of power grid operation and maintenance and in ensuring the safe and reliable operation of power systems.

At the same time, we recognize that although our method improves accuracy, it also increases the model’s parameter count and computational complexity. Furthermore, occasional misdetections against certain complex backgrounds suggest that the model’s feature discrimination and background suppression can still be improved.

Future research will aim to build upon and broaden the findings of this study. Key directions include: (1) validating and further improving the model on larger and more diverse GIS X-ray datasets as they become available through our ongoing industrial collaboration, which will further enhance the model’s robustness and generalization ability within its primary application domain; (2) comparing and adapting our proposed enhancements to newer architectures like YOLOv11 and YOLOv12 to stay at the forefront of the field; (3) further exploring model lightweighting techniques and researching strategies to reduce the false alarm rate, thereby enhancing the model’s practical deployment value.