Classification of Different Motor Imagery Tasks with the Same Limb Using Electroencephalographic Signals

Abstract

1. Introduction

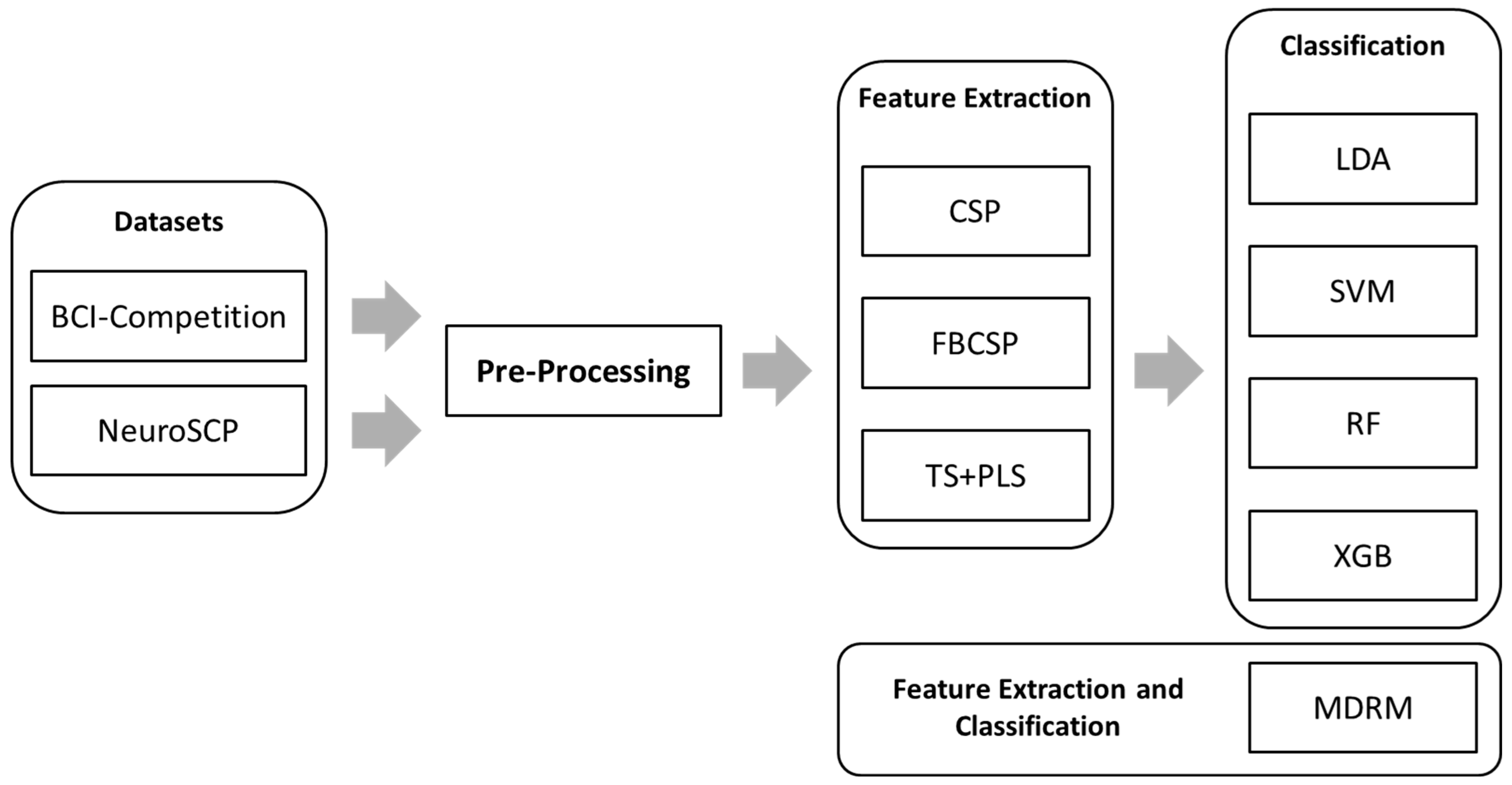

2. Materials and Methods

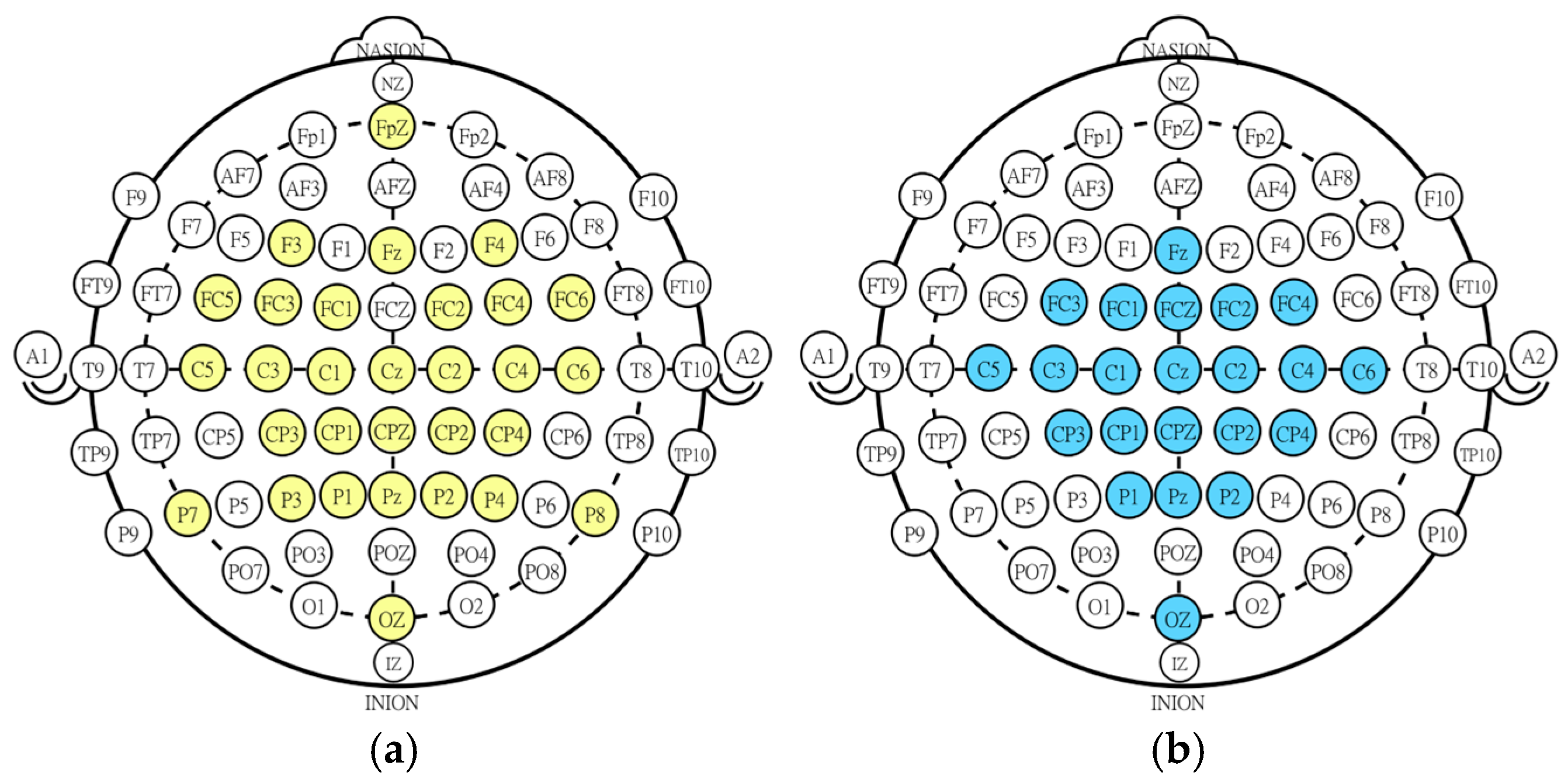

2.1. BCI Competition Dataset

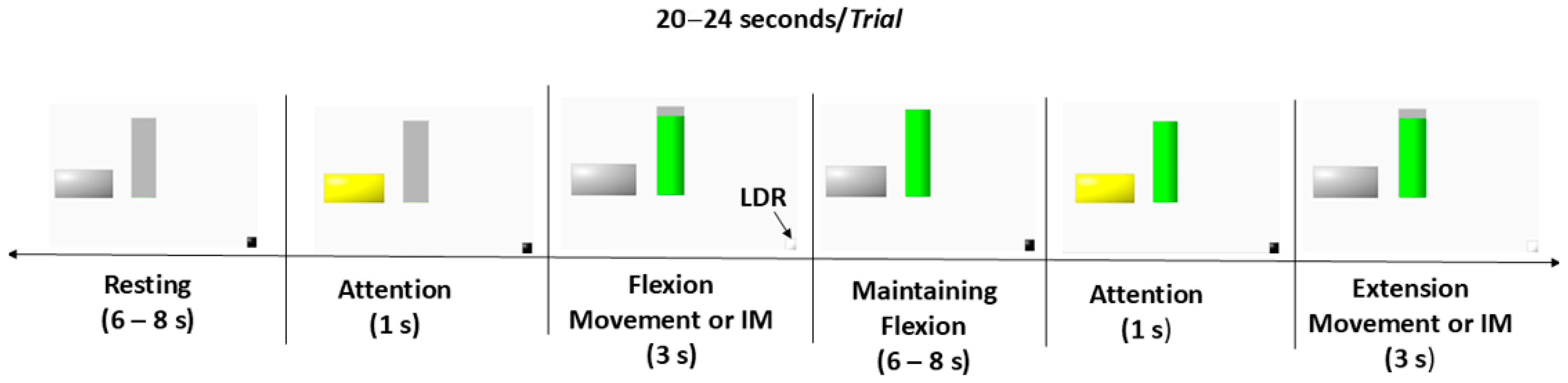

2.2. NeuroSCP Dataset

2.3. Data Preprocessing

2.4. Feature Extraction Methods

2.4.1. Common Spatial Patterns (CSP)
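
For orientation, the two-class CSP idea introduced by Koles et al. and Ramoser et al. (both in the reference list) can be sketched in a few lines of NumPy: spatial filters are chosen so that the filtered signal has high variance for one class and low variance for the other, and the log of the normalized variances serves as the feature vector. This is a minimal sketch under stated assumptions, not the pipeline used in this study. The function names, the trace normalization, the number of filter pairs and the synthetic data are all illustrative choices.

```python
import numpy as np


def mean_normalized_cov(trials):
    """Average trace-normalized spatial covariance over trials.

    trials: array of shape (n_trials, n_channels, n_samples).
    """
    covs = []
    for x in trials:
        x = x - x.mean(axis=1, keepdims=True)  # remove per-channel offset
        c = x @ x.T
        covs.append(c / np.trace(c))
    return np.mean(covs, axis=0)


def csp_filters(trials_a, trials_b, n_pairs=1):
    """Return 2 * n_pairs spatial filters (as rows) for a two-class problem."""
    cov_a = mean_normalized_cov(trials_a)
    cov_b = mean_normalized_cov(trials_b)
    # Whiten the composite covariance so that cov_a + cov_b maps to identity.
    evals, evecs = np.linalg.eigh(cov_a + cov_b)
    whiten = (evecs / np.sqrt(evals)) @ evecs.T
    # In the whitened space the class eigenvalues sum to 1, so directions
    # with the largest variance for class A have the smallest for class B.
    d, v = np.linalg.eigh(whiten @ cov_a @ whiten.T)
    order = np.argsort(d)[::-1]
    filters = v[:, order].T @ whiten
    picks = np.r_[0:n_pairs, -n_pairs:0]  # first and last n_pairs filters
    return filters[picks]


def csp_features(trials, filters):
    """Log of the normalized variance of each spatially filtered signal."""
    feats = []
    for x in trials:
        var = (filters @ x).var(axis=1)
        feats.append(np.log(var / var.sum()))
    return np.array(feats)


# Synthetic demo (hypothetical data): class A is strong on channel 0,
# class B is strong on channel 3.
rng = np.random.default_rng(0)
scale_a = np.array([3.0, 1.0, 1.0, 1.0])[:, None]
scale_b = np.array([1.0, 1.0, 1.0, 3.0])[:, None]
trials_a = rng.standard_normal((20, 4, 200)) * scale_a
trials_b = rng.standard_normal((20, 4, 200)) * scale_b

W = csp_filters(trials_a, trials_b, n_pairs=1)
feats_a = csp_features(trials_a, W)
feats_b = csp_features(trials_b, W)
```

The resulting feature matrices can be passed to any of the classifiers named in Section 2.5. For problems with more than two classes, a one-versus-rest or pairwise scheme is a common extension, which this sketch does not cover.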

2.4.2. Filter Bank Common Spatial Patterns (FB-CSP)

2.4.3. Minimum Distance to Riemannian Mean (MDRM)

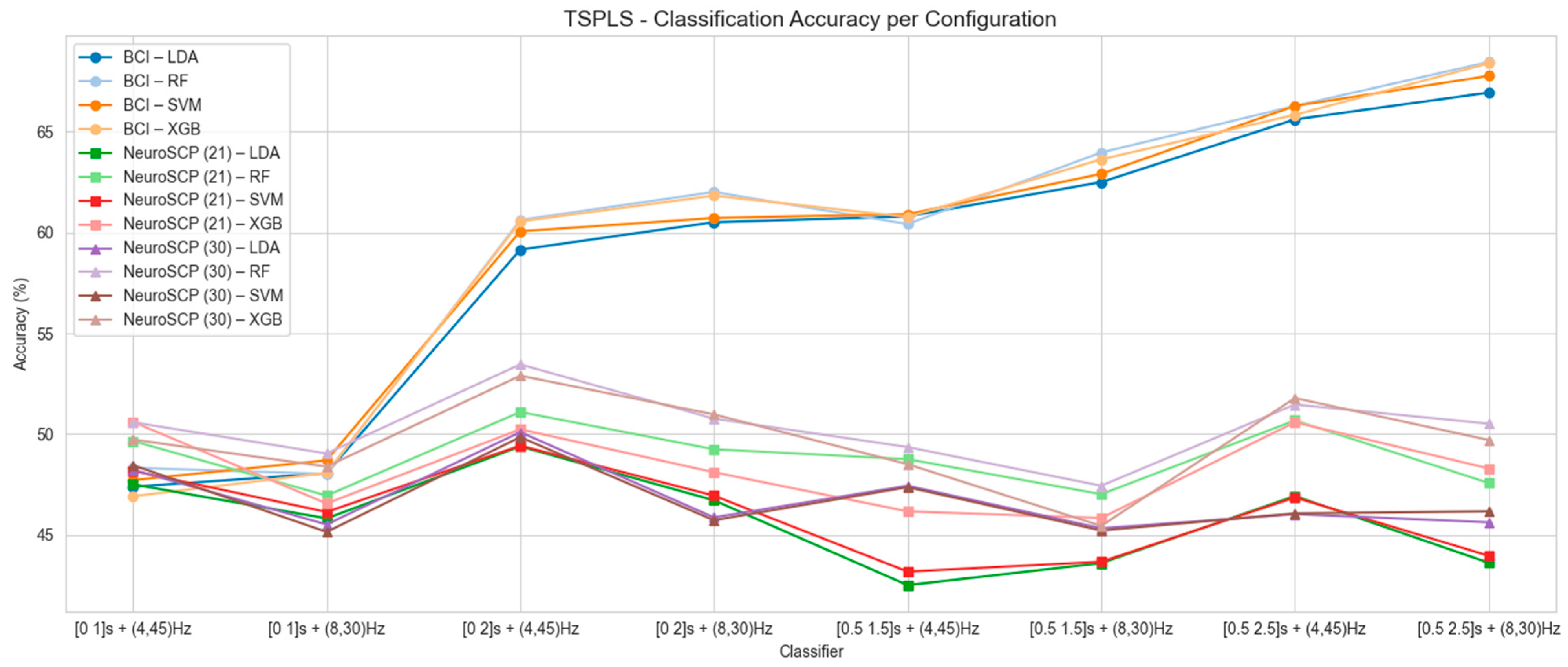
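
As an illustration of the MDRM principle described by Barachant et al. (see the reference list), the sketch below assigns each trial covariance matrix to the class whose Riemannian mean is closest under the affine-invariant distance. It is a minimal NumPy sketch under stated assumptions, not the implementation used in this study. The helper names, the fixed-point iteration for the mean, the plain sample covariance (no shrinkage) and the synthetic data are all illustrative.

```python
import numpy as np


def _spd_func(mat, func):
    """Apply a scalar function to the eigenvalues of a symmetric matrix."""
    vals, vecs = np.linalg.eigh(mat)
    return (vecs * func(vals)) @ vecs.T


def riemann_distance(a, b):
    """Affine-invariant distance: sqrt(sum_i log^2 lambda_i(a^-1/2 b a^-1/2))."""
    inv_sqrt_a = _spd_func(a, lambda v: v ** -0.5)
    vals = np.linalg.eigvalsh(inv_sqrt_a @ b @ inv_sqrt_a)
    return np.sqrt(np.sum(np.log(vals) ** 2))


def riemann_mean(covs, n_iter=50, tol=1e-8):
    """Riemannian (geometric) mean via a fixed-point iteration."""
    mean = np.mean(covs, axis=0)  # start from the arithmetic mean
    for _ in range(n_iter):
        sqrt_m = _spd_func(mean, np.sqrt)
        inv_sqrt_m = _spd_func(mean, lambda v: v ** -0.5)
        # Average of the matrices mapped to the tangent space at `mean`.
        tangent = np.mean(
            [_spd_func(inv_sqrt_m @ c @ inv_sqrt_m, np.log) for c in covs],
            axis=0,
        )
        mean = sqrt_m @ _spd_func(tangent, np.exp) @ sqrt_m
        if np.linalg.norm(tangent) < tol:
            break
    return mean


def mdrm_fit(covs, labels):
    """One Riemannian mean per class."""
    return {c: riemann_mean(covs[labels == c]) for c in np.unique(labels)}


def mdrm_predict(covs, means):
    """Assign each covariance matrix to the class with the nearest mean."""
    classes = list(means)
    dists = np.array(
        [[riemann_distance(c, means[k]) for k in classes] for c in covs]
    )
    return np.array(classes)[dists.argmin(axis=1)]


# Synthetic demo (hypothetical data): two classes with different channel power.
rng = np.random.default_rng(0)


def simulate_covs(scales, n_trials, n_samples=200):
    x = rng.standard_normal((n_trials, len(scales), n_samples))
    x = x * np.asarray(scales, dtype=float)[:, None]
    return x @ x.transpose(0, 2, 1) / n_samples  # sample covariance per trial


train_covs = np.concatenate([simulate_covs([3, 1, 1], 20), simulate_covs([1, 1, 3], 20)])
train_labels = np.repeat([0, 1], 20)
test_covs = np.concatenate([simulate_covs([3, 1, 1], 10), simulate_covs([1, 1, 3], 10)])
test_labels = np.repeat([0, 1], 10)

class_means = mdrm_fit(train_covs, train_labels)
predictions = mdrm_predict(test_covs, class_means)
accuracy = np.mean(predictions == test_labels)
```

With few samples per trial or many channels, the sample covariance can become ill-conditioned. A shrinkage estimator such as the one by Ledoit and Wolf (also in the reference list) is one way to keep the matrices positive definite.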

2.4.4. Riemannian Tangent Space (TS) + Partial Least Square (PLS)

2.5. Classification Methods

2.6. Transfer Learning Methods

3. Results

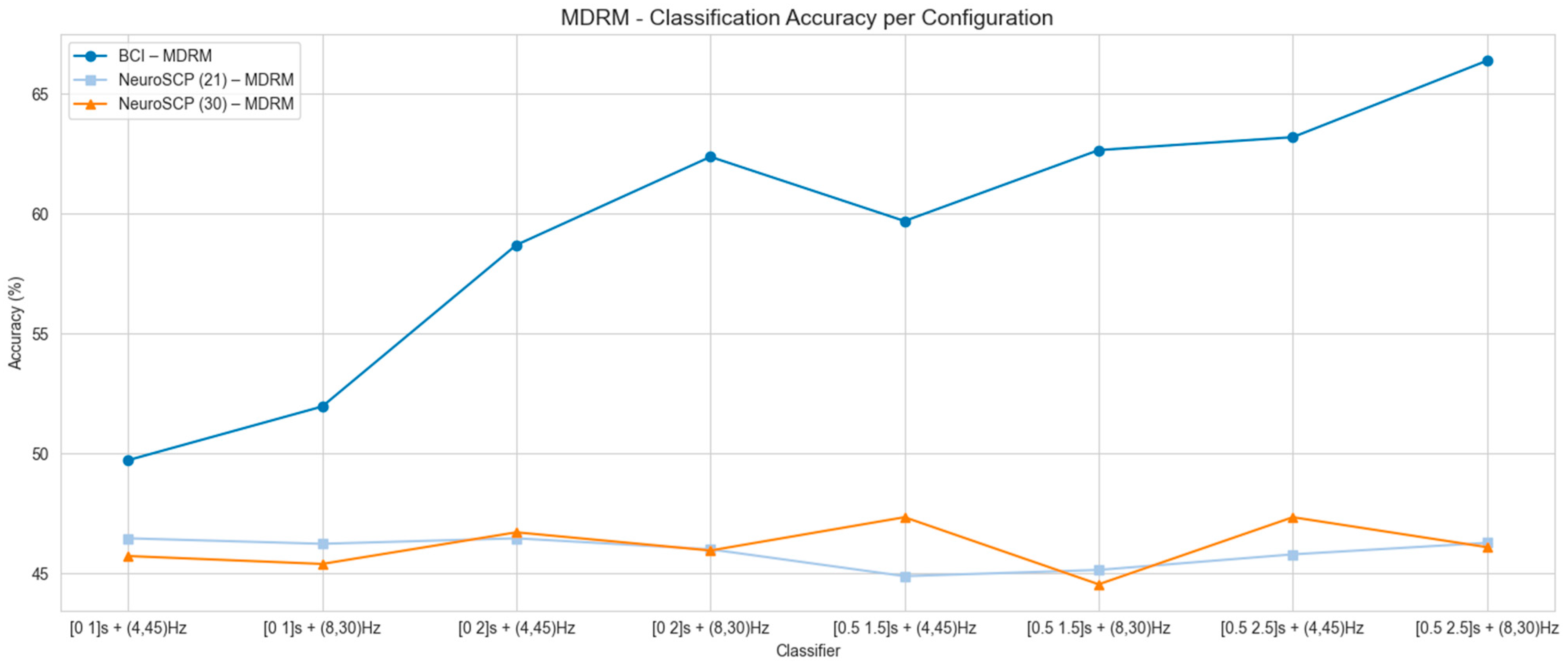

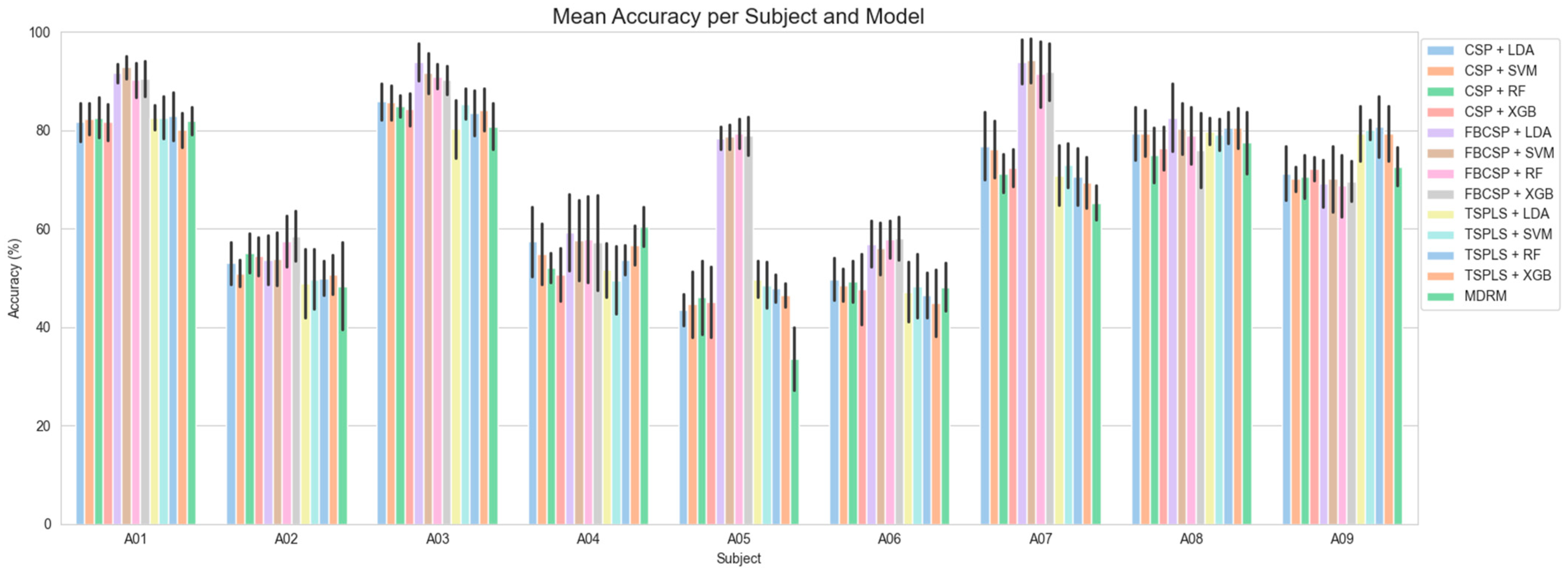

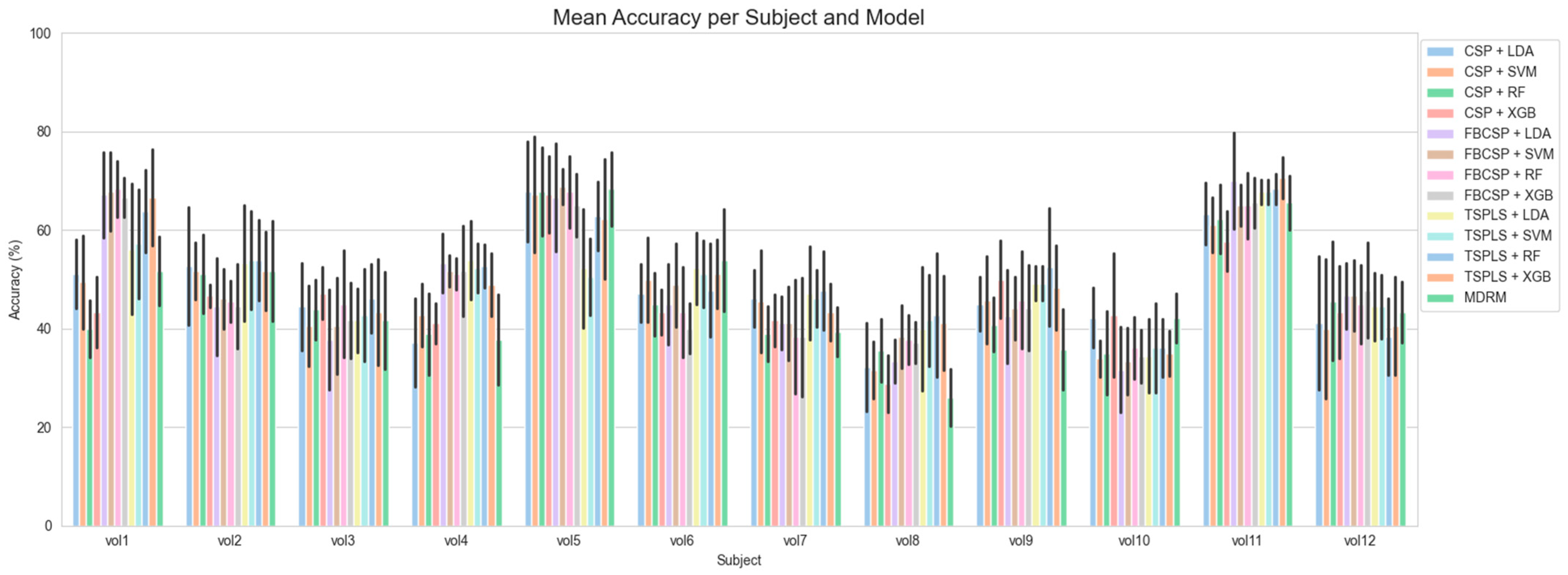

3.1. Mean Classification Accuracy for Each Feature Extraction Technique

3.2. Subject-Wise Classification Accuracy Results

3.3. Transfer Learning for the NeuroSCP Dataset

4. Discussion

4.1. BCI-C Classification

4.2. NeuroSCP Classification

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

1. Murphy, S.J.; Werring, D.J. Stroke: Causes and Clinical Features. Medicine 2020, 48, 561–566.

2. Johnson, C.O.; Nguyen, M.; Roth, G.A.; Nichols, E.; Alam, T.; Abate, D.; Abd-Allah, F.; Abdelalim, A.; Abraha, H.N.; Abu-Rmeileh, N.M.; et al. Global, Regional, and National Burden of Stroke, 1990–2016: A Systematic Analysis for the Global Burden of Disease Study 2016. Lancet Neurol. 2019, 18, 439–458.

3. Tadi, P.; Lui, F. Acute Stroke. In StatPearls; StatPearls Publishing: Treasure Island, FL, USA, 2024.

4. Zhao, L.J.; Jiang, L.H.; Zhang, H.; Li, Y.; Sun, P.; Liu, Y.; Qi, R. Effects of Motor Imagery Training for Lower Limb Dysfunction in Patients With Stroke: A Systematic Review and Meta-Analysis of Randomized Controlled Trials. Am. J. Phys. Med. Rehabil. 2023, 102, 409–418.

5. Liu, W.; Cheng, X.; Rao, J.; Yu, J.; Lin, Z.; Wang, Y.; Wang, L.; Li, D.; Liu, L.; Gao, R. Motor Imagery Therapy Improved Upper Limb Motor Function in Stroke Patients with Hemiplegia by Increasing Functional Connectivity of Sensorimotor and Cognitive Networks. Front. Hum. Neurosci. 2024, 18, 1295859.

6. Kahraman, T.; Kaya, D.O.; Isik, T.; Gultekin, S.C.; Seebacher, B. Feasibility of Motor Imagery and Effects of Activating and Relaxing Practice on Autonomic Functions in Healthy Young Adults: A Randomised, Controlled, Assessor-Blinded, Pilot Trial. PLoS ONE 2021, 16, e0254666.

7. Santos-Couto-Paz, C.C.; Teixeira-Salmela, L.F.; Tierra-Criollo, C.J. The Addition of Functional Task-Oriented Mental Practice to Conventional Physical Therapy Improves Motor Skills in Daily Functions after Stroke. Braz. J. Phys. Ther. 2013, 17, 564–571.

8. Cunha, R.G.; Da-Silva, P.J.G.; Dos Santos Couto Paz, C.C.; da Silva Ferreira, A.C.; Tierra-Criollo, C.J. Influence of Functional Task-Oriented Mental Practice on the Gait of Transtibial Amputees: A Randomized, Clinical Trial. J. Neuroeng. Rehabil. 2017, 14, 28.

9. Jeannerod, M. Neural Simulation of Action: A Unifying Mechanism for Motor Cognition. NeuroImage 2001, 14, S103–S109.

10. Wang, H.; Xu, G.; Wang, X.; Sun, C.; Zhu, B.; Fan, M.; Jia, J.; Guo, X.; Sun, L. The Reorganization of Resting-State Brain Networks Associated With Motor Imagery Training in Chronic Stroke Patients. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 2237–2245.

11. Xu, Y.; Li, Y.L.; Yu, G.; Ou, Z.; Yao, S.; Li, Y.; Huang, Y.; Chen, J.; Ding, Q. Effect of Brain Computer Interface Training on Frontoparietal Network Function for Young People: A Functional Near-Infrared Spectroscopy Study. CNS Neurosci. Ther. 2025, 31, e70400.

12. Van der Lubbe, R.H.J.; Sobierajewicz, J.; Jongsma, M.L.A.; Verwey, W.B.; Przekoracka-Krawczyk, A. Frontal Brain Areas Are More Involved during Motor Imagery than during Motor Execution/Preparation of a Response Sequence. Int. J. Psychophysiol. 2021, 164, 71–86.

13. Wolpaw, J.R.; Birbaumer, N.; Heetderks, W.J.; McFarland, D.J.; Peckham, P.H.; Schalk, G.; Donchin, E.; Quatrano, L.A.; Robinson, C.J.; Vaughan, T.M. Brain-Computer Interface Technology: A Review of the First International Meeting. IEEE Trans. Rehabil. Eng. 2000, 8, 164–173.

14. Frolov, A.A.; Mokienko, O.; Lyukmanov, R.; Biryukova, E.; Kotov, S.; Turbina, L.; Nadareyshvily, G.; Bushkova, Y. Post-Stroke Rehabilitation Training with a Motor-Imagery-Based Brain-Computer Interface (BCI)-Controlled Hand Exoskeleton: A Randomized Controlled Multicenter Trial. Front. Neurosci. 2017, 11, 400.

15. de Zanona, A.F.; Piscitelli, D.; Seixas, V.M.; Scipioni, K.R.D.d.S.; Bastos, M.S.C.; de Sá, L.C.K.; Monte-Silva, K.; Bolivar, M.; Solnik, S.; De Souza, R.F. Brain-Computer Interface Combined with Mental Practice and Occupational Therapy Enhances Upper Limb Motor Recovery, Activities of Daily Living, and Participation in Subacute Stroke. Front. Neurol. 2022, 13, 1041978.

16. Abiri, R.; Borhani, S.; Sellers, E.W.; Jiang, Y.; Zhao, X. A Comprehensive Review of EEG-Based Brain–Computer Interface Paradigms. J. Neural Eng. 2019, 16, 011001.

17. Portillo-Lara, R.; Tahirbegi, B.; Chapman, C.A.R.; Goding, J.A.; Green, R.A. Mind the Gap: State-of-the-Art Technologies and Applications for EEG-Based Brain-Computer Interfaces. APL Bioeng. 2021, 5, 031507.

18. Koles, Z.J.; Lazar, M.S.; Zhou, S.Z. Spatial Patterns Underlying Population Differences in the Background EEG. Brain Topogr. 1990, 2, 275–284.

19. Ramoser, H.; Muller-Gerking, J.; Pfurtscheller, G. Optimal Spatial Filtering of Single Trial EEG during Imagined Hand Movement. IEEE Trans. Rehabil. Eng. 2000, 8, 441–446.

20. Alizadeh, D.; Omranpour, H. EM-CSP: An Efficient Multiclass Common Spatial Pattern Feature Method for Speech Imagery EEG Signals Recognition. Biomed. Signal Process. Control 2023, 84, 104933.

21. Ang, K.K.; Chin, Z.Y.; Zhang, H.; Guan, C. Filter Bank Common Spatial Pattern (FBCSP) in Brain-Computer Interface. In Proceedings of the 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence), Hong Kong, China, 8 June 2008; pp. 2390–2397.

22. Tibermacine, I.E.; Russo, S.; Tibermacine, A.; Rabehi, A.; Nail, B.; Kadri, K.; Napoli, C. Riemannian Geometry-Based EEG Approaches: A Literature Review. arXiv 2024, arXiv:2407.20250.

23. Pichandi, S.; Balasubramanian, G.; Chakrapani, V. Hybrid Deep Models for Parallel Feature Extraction and Enhanced Emotion State Classification. Sci. Rep. 2024, 14, 24957.

24. Zou, Y.; Zhao, X.; Chu, Y.; Xu, W.; Han, J.; Li, W. A Supervised Independent Component Analysis Algorithm for Motion Imagery-Based Brain Computer Interface. Biomed. Signal Process. Control 2022, 75, 103576.

25. Zhang, W.; Liang, Z.; Liu, Z.; Gao, J. Feature Extraction of Motor Imagination EEG Signals in AR Model Based on VMD. In Proceedings of the 2021 International Conference on Electrical, Computer and Energy Technologies (ICECET), Cape Town, South Africa, 9–10 December 2021; pp. 1–5.

26. Sherwani, F.; Shanta, S.; Ibrahim, B.S.K.K.; Huq, M.S. Wavelet Based Feature Extraction for Classification of Motor Imagery Signals. In Proceedings of the 2016 IEEE EMBS Conference on Biomedical Engineering and Sciences (IECBES), Kuala Lumpur, Malaysia, 4–8 December 2016; pp. 360–364.

27. Achanccaray, D.; Hayashibe, M. Decoding Hand Motor Imagery Tasks Within the Same Limb From EEG Signals Using Deep Learning. IEEE Trans. Med. Robot. Bionics 2020, 2, 692–699.

28. Krishnamoorthy, K.; Loganathan, A.K. Deciphering Motor Imagery EEG Signals of Unilateral Upper Limb Movement Using EEGNet. Acta Scientiarum. Technol. 2025, 47, e69697.

29. Yong, X.; Menon, C. EEG Classification of Different Imaginary Movements within the Same Limb. PLoS ONE 2015, 10, e0121896.

30. Ma, X.; Qiu, S.; Wei, W.; Wang, S.; He, H. Deep Channel-Correlation Network for Motor Imagery Decoding From the Same Limb. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 297–306.

31. Guan, S.; Li, J.; Wang, F.; Yuan, Z.; Kang, X.; Lu, B. Discriminating Three Motor Imagery States of the Same Joint for Brain-Computer Interface. PeerJ 2021, 9, e12027.

32. Zhang, M.; Huang, J.; Ni, S. Recognition of Motor Intentions from EEGs of the Same Upper Limb by Signal Traceability and Riemannian Geometry Features. Front. Neurosci. 2023, 17, 1270785.

33. Zhang, C.; Kim, Y.-K.; Eskandarian, A. EEG-Inception: An Accurate and Robust End-to-End Neural Network for EEG-Based Motor Imagery Classification. J. Neural Eng. 2021, 18, 046014.

34. Brunner, C.; Leeb, R.; Muller-Putz, G.R.; Schlogl, A. BCI Competition 2008 – Graz Data Set A. Inst. Knowl. Discov. Graz Univ. Technol. 2008, 16, 34.

35. Cevallos-Larrea, P.; Guambaña-Calle, L.; Molina-Vidal, D.A.; Castillo-Guerrero, M.; d’Affonsêca Netto, A.; Tierra-Criollo, C.J. Prototype of a Multimodal and Multichannel Electro-Physiological and General-Purpose Signal Capture System: Evaluation in Sleep-Research-like Scenario. Sensors 2025, 25, 2816.

36. Oldfield, R.C. The Assessment and Analysis of Handedness: The Edinburgh Inventory. Neuropsychologia 1971, 9, 97–113.

37. Hall, C.R.; Martin, K.A. Measuring Movement Imagery Abilities: A Revision of the Movement Imagery Questionnaire. J. Ment. Imag. 1997, 21, 143–154.

38. Barachant, A.; Bonnet, S.; Congedo, M.; Jutten, C. Multiclass Brain-Computer Interface Classification by Riemannian Geometry. IEEE Trans. Biomed. Eng. 2012, 59, 920–928.

39. Ledoit, O.; Wolf, M. A Well-Conditioned Estimator for Large-Dimensional Covariance Matrices. J. Multivar. Anal. 2004, 88, 365–411.

40. Fisher, R.A. The Use of Multiple Measurements in Taxonomic Problems. Ann. Eugen. 1936, 7, 179–188.

41. Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297.

42. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

43. Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13 August 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 785–794.

44. Khazem, S.; Chevallier, S.; Barthélemy, Q.; Haroun, K.; Noûs, C. Minimizing Subject-Dependent Calibration for BCI with Riemannian Transfer Learning. In Proceedings of the 2021 10th International IEEE/EMBS Conference on Neural Engineering (NER), Online, 4 May 2021; pp. 523–526.

45. Kalunga, E.K.; Chevallier, S.; Barthelemy, Q. Transfer Learning for SSVEP-Based BCI Using Riemannian Similarities Between Users. In Proceedings of the 2018 26th European Signal Processing Conference (EUSIPCO), Rome, Italy, 3 September 2018; IEEE: Rome, Italy, 2018; pp. 1685–1689.

46. Bleuzé, A.; Mattout, J.; Congedo, M. Tangent Space Alignment: Transfer Learning for Brain-Computer Interface. Front. Hum. Neurosci. 2022, 16, 1049985.

47. Shuaibu, Z.; Qi, L. Optimized DNN Classification Framework Based on Filter Bank Common Spatial Pattern (FBCSP) for Motor-Imagery-Based BCI. IJCA 2020, 175, 16–25.

48. Ang, K.K.; Chin, Z.Y.; Wang, C.; Guan, C.; Zhang, H. Filter Bank Common Spatial Pattern Algorithm on BCI Competition IV Datasets 2a and 2b. Front. Neurosci. 2012, 6, 39.

49. Chu, Y.; Zhao, X.; Zou, Y.; Xu, W.; Song, G.; Han, J.; Zhao, Y. Decoding Multiclass Motor Imagery EEG from the Same Upper Limb by Combining Riemannian Geometry Features and Partial Least Squares Regression. J. Neural Eng. 2020, 17, 046029.

50. Shuqfa, Z.; Belkacem, A.N.; Lakas, A. Decoding Multi-Class Motor Imagery and Motor Execution Tasks Using Riemannian Geometry Algorithms on Large EEG Datasets. Sensors 2023, 23, 5051.

51. Tavakolan, M.; Frehlick, Z.; Yong, X.; Menon, C. Classifying Three Imaginary States of the Same Upper Extremity Using Time-Domain Features. PLoS ONE 2017, 12, e0174161.

52. Miladinović, A.; Accardo, A.; Jarmolowska, J.; Marusic, U.; Ajčević, M. Optimizing Real-Time MI-BCI Performance in Post-Stroke Patients: Impact of Time Window Duration on Classification Accuracy and Responsiveness. Sensors 2024, 24, 6125.

53. Didoné, D.D.; Oppitz, S.J.; Gonçalves, M.S.; Garcia, M.V. Long-Latency Auditory Evoked Potentials: Normalization of Protocol Applied to Normal Adults. Arch. Otolaryngol. Rhinol. 2019, 5, 069–073.

54. Jiang, X.; Bian, G.-B.; Tian, Z. Removal of Artifacts from EEG Signals: A Review. Sensors 2019, 19, 987.

55. Baccalá, L.A.; Sameshima, K. Partial Directed Coherence: A New Concept in Neural Structure Determination. Biol. Cybern. 2001, 84, 463–474.

56. Ma, P.; Dong, C.; Lin, R.; Liu, H.; Lei, D.; Chen, X.; Liu, H. A Brain Functional Network Feature Extraction Method Based on Directed Transfer Function and Graph Theory for MI-BCI Decoding Tasks. Front. Neurosci. 2024, 18, 1306283.

57. Zhan, G.; Chen, S.; Ji, Y.; Xu, Y.; Song, Z.; Wang, J.; Niu, L.; Bin, J.; Kang, X.; Jia, J. EEG-Based Brain Network Analysis of Chronic Stroke Patients After BCI Rehabilitation Training. Front. Hum. Neurosci. 2022, 16, 909610.

| Year | Title | Feature Extraction | Classifiers | No. of Volunteers | Classes | Accuracy |
|---|---|---|---|---|---|---|
| 2015 | EEG Classification of Different Imaginary Movements within the Same Limb [29] | BP, CSP, FB-CSP | LDA, LR, SVM | 12 | 3-Classes: Rest, Grasp, Elbow | 56.2% (8.5) |
| | | | | | 3-Classes: Rest, Grasp, Elbow (on Goal) | 60.7% (8.4) |
| 2019 | Deep Channel-Correlation Network for Motor Imagery Decoding From the Same Limb [30] | Correlation, MSC | Channel Correlation CNN | 25 | 3-Classes: Rest, Hand, Elbow | 87% |
| 2020 | Decoding Hand Motor Imagery Tasks Within the Same Limb from EEG Signals Using Deep Learning [27] | CNN | CNN | 20 | 2-Classes: Flexion, Extension | 78.46% (12.5%) |
| | | | | | 3-Classes: Flexion, Extension, Grasping | 76.7% (11.7%) |
| 2021 | Discriminating Three Motor Imagery States of the Same Joint for Brain–Computer Interface [31] | TDP, CSP, FB-CSP, EMD-CSP, LMD-CSP | LDA, ELM, KNN, SVM, LS-SVM, MOGWO-TWSVM | 7 | 3-Classes: Abduction, Flexion, Extension of the shoulder | 91.6% |
| 2021 | EEG-Inception: An Accurate and Robust End-to-End Neural Network for EEG-based Motor Imagery Classification [33] | CNN | CNN | 9 | BCI-C IV-2a: Right and left hands, both Feet, and Tongue | 88.4% (7) |
| | | | | 9 | BCI-C IV-2b: Right and left hands | 88.6% (5.5) |
| 2023 | Recognition of Motor Intentions from EEGs of the Same Upper Limb by Signal Traceability and Riemannian Geometry Features [32] | FB-CSP, Riemannian geometry | SVM | 15 | 6-Classes: Grasping and holding of the palm, Flexion and Extension of the elbow, Abduction/Adduction of the shoulder | 22.5% (3) |

| Dataset | Classifier | 4–45 Hz, [0 1] | 4–45 Hz, [0 2] | 4–45 Hz, [0.5 1.5] | 4–45 Hz, [0.5 2.5] | 8–30 Hz, [0 1] | 8–30 Hz, [0 2] | 8–30 Hz, [0.5 1.5] | 8–30 Hz, [0.5 2.5] |
|---|---|---|---|---|---|---|---|---|---|
| BCI-C | LDA | 46.51 (8.95) | 58.29 (10.69) | 62.99 (14.01) | 66.54 (15.72) | 49.92 (7.41) | 60.39 (11.75) | 63.71 (15.78) | 67.44 (16.69) |
| | RF | 45.29 (8.45) | 57.95 (11.06) | 60.42 (12.30) | 65.23 (14.79) | 47.43 (8.66) | 58.82 (12.44) | 62.65 (14.92) | 66.05 (17.12) |
| | SVM | 46.89 (8.75) | 59.31 (10.75) | 62.78 (13.26) | 65.88 (16.06) | 49.71 (7.55) | 60.70 (11.50) | 63.86 (15.75) | 67.83 (15.82) |
| | XGB | 45.24 (8.35) | 57.41 (11.53) | 59.52 (13.66) | 64.99 (15.41) | 48.17 (8.44) | 58.15 (12.81) | 61.39 (15.83) | 65.95 (15.61) |
| NeuroSCP (21 channels) | LDA | 47.25 (11.96) | 47.55 (10.13) | 47.20 (9.09) | 48.61 (9.17) | 46.06 (12.01) | 47.08 (10.62) | 47.11 (9.76) | 47.08 (9.74) |
| | RF | 44.84 (9.00) | 45.39 (10.26) | 45.60 (8.70) | 45.97 (7.47) | 45.37 (10.76) | 47.06 (9.91) | 45.16 (9.57) | 43.82 (8.76) |
| | SVM | 46.41 (11.67) | 46.64 (10.28) | 46.34 (9.93) | 48.17 (9.42) | 45.46 (11.38) | 46.09 (10.64) | 46.46 (9.80) | 44.56 (10.95) |
| | XGB | 44.44 (10.69) | 46.11 (9.41) | 44.81 (8.10) | 45.58 (7.44) | 45.19 (9.54) | 45.83 (9.59) | 43.98 (9.50) | 43.61 (9.32) |
| NeuroSCP (30 channels) | LDA | 47.13 (9.30) | 50.51 (9.24) | 49.54 (7.26) | 51.53 (8.35) | 47.92 (10.81) | 50.35 (9.50) | 46.06 (7.43) | 49.68 (8.25) |
| | RF | 47.25 (9.91) | 49.40 (9.59) | 46.55 (6.05) | 51.16 (10.79) | 45.97 (9.72) | 49.24 (8.06) | 45.28 (7.70) | 48.29 (7.89) |
| | SVM | 47.64 (9.79) | 49.63 (10.36) | 47.96 (7.01) | 51.69 (9.29) | 47.20 (8.78) | 49.54 (9.34) | 46.41 (8.11) | 49.12 (8.21) |
| | XGB | 44.98 (9.94) | 47.92 (9.03) | 47.57 (5.71) | 50.69 (8.70) | 45.88 (9.07) | 49.21 (7.04) | 45.02 (7.85) | 48.56 (8.66) |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kauati-Saito, E.; Pereira, A.d.S.; Fontana, A.P.; de Sá, A.M.F.L.M.; Soares, J.G.M.; Tierra-Criollo, C.J. Classification of Different Motor Imagery Tasks with the Same Limb Using Electroencephalographic Signals. Sensors 2025, 25, 5291. https://doi.org/10.3390/s25175291
