Abstract

Fog computing is increasingly preferred over cloud computing for processing tasks from Internet of Things (IoT) devices with limited resources. However, placing tasks and allocating resources in distributed and dynamic fog environments remains a major challenge, especially when trying to meet strict Quality of Service (QoS) requirements. Deep reinforcement learning (DRL) has emerged as a promising solution to these challenges, offering adaptive, data-driven decision-making in real-time and uncertain conditions. While several surveys have explored DRL in fog computing, most focus on traditional centralized offloading approaches or emphasize reinforcement learning (RL) with limited integration of deep learning. To address this gap, this paper presents a comprehensive and focused survey on the full-scale application of DRL to the task offloading problem in fog computing environments involving multiple user devices and multiple fog nodes. We systematically analyze and classify the literature based on architecture, resource allocation methods, QoS objectives, offloading topology and control, optimization strategies, DRL techniques used, and application scenarios. We also introduce a taxonomy of DRL-based task offloading models and highlight key challenges, open issues, and future research directions. This survey serves as a valuable resource for researchers by identifying unexplored areas and suggesting new directions for advancing DRL-based solutions in fog computing. For practitioners, it provides insights into selecting suitable DRL techniques and system designs to implement scalable, efficient, and QoS-aware fog computing applications in real-world environments.

1. Introduction

IoT devices, including consumer electronics (CE), TinyML-enabled systems, and unmanned aerial vehicles (UAVs), have seen widespread adoption across a range of industries. These network-connected devices are capable of sensing, processing, and communicating data. However, because they have limited computing power, they often need to offload tasks to more powerful systems to meet QoS requirements [1]. Task offloading refers to the process of transferring computational workloads from resource-limited devices to more powerful computing systems, based on a machine-to-machine collaboration model [2,3].

Traditionally, IoT devices offload tasks to cloud environments. However, device-to-cloud offloading introduces significant latency and bandwidth overhead, as well as potential privacy concerns [4], making it unsuitable for delay-sensitive applications. To overcome these limitations, fog computing was introduced as a decentralized approach that brings computational resources closer to edge devices, allowing localized task execution and storage [5]. This proximity significantly reduces latency and enhances real-time processing efficiency [6]. By handling tasks near the data source, fog computing also improves the responsiveness of latency-sensitive applications and optimizes both communication and computation costs in line with QoS requirements [7].

Task offloading and resource allocation in distributed fog computing environments are inherently challenging due to the loosely coupled, highly dynamic, heterogeneous, and failure-prone nature of fog nodes [8]. In contrast to centralized cloud systems that operate within controlled and uniform infrastructures, fog computing must orchestrate diverse, geographically distributed resources while adhering to stringent QoS requirements. This complexity is further compounded by variable resource availability, user mobility, and the diverse performance needs of latency-sensitive applications. Achieving minimal communication and computation delays is crucial for enabling real-time analytics and mission-critical IoT operations. Moreover, the decentralized nature of fog computing introduces heightened concerns around data security, privacy protection, and effective data management, all of which are essential for maintaining trust and ensuring regulatory compliance [9]. Overcoming these challenges requires the development of intelligent, adaptive, and resource-aware task offloading strategies capable of optimizing performance while ensuring resilience against failures and accommodating heterogeneity in both hardware and network environments.

DRL has gained significant traction as a promising approach for addressing the complex challenges of task offloading and resource allocation in fog computing environments [10]. By enabling adaptive, real-time decision-making under uncertain and dynamic conditions, DRL offers considerable potential to enhance the efficiency and responsiveness of fog-based systems. The growing use of DRL in this domain calls for a comprehensive and systematic review of state-of-the-art DRL-based task offloading techniques, with particular attention to their role in decentralized, latency-sensitive fog computing environments. Although several surveys have investigated task offloading in fog computing, most existing reviews primarily highlight conventional ML [11], RL [12], and DL [1] approaches. Surveys that do cover DRL usually limit its scope to general resource management [13] or to computation offloading in edge-centric architectures [14]. Moreover, much of the literature still emphasizes centralized edge or mobile edge computing (MEC) models, overlooking the decentralized, heterogeneous, and latency-sensitive characteristics that define fog computing environments [1]. This points to the absence of a dedicated, systematic survey that reviews and synthesizes the application of DRL to task offloading and resource allocation in fog computing environments. A focused review is therefore needed to consolidate recent developments, categorize DRL-based techniques, and identify open challenges and future research directions for fog-based systems.

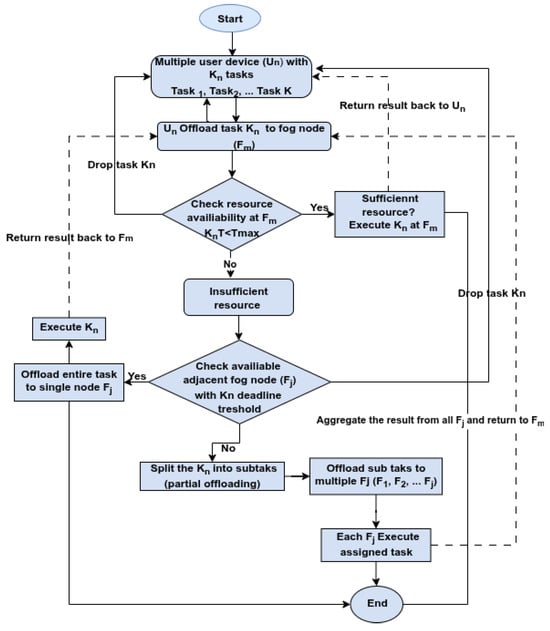

This study addresses the aforementioned gap by exploring how DRL is used in many-to-many task offloading and resource allocation between IoT devices and fog nodes, with a focus on real-time, latency-sensitive applications. We present a comprehensive literature survey on task offloading across different layers, including IoT devices, edge, and fog nodes, as well as inter-fog node offloading. A key focus of this survey is the exploration of DRL-based offloading approaches and the growing role of smart devices in enabling real-time service provisioning. The study proposes a conceptual task offloading model and a supporting offloading architecture, and examines how emerging technologies can be leveraged to meet QoS requirements for latency-sensitive applications. It also highlights the potential of knowledge-driven DRL frameworks in intelligent systems. Existing DRL-based offloading studies, however, often overlook challenges related to continuous action spaces, convergence, and real-time adaptation. The main contributions of this survey are summarized as follows:

- Comprehensive Analysis of Task Offloading and Resource Allocation: We provide a detailed account of task offloading strategies and resource allocation mechanisms in fog computing, outlining the core concepts, standards, and techniques used to meet application-level QoS requirements.

- Topological Models and Control Architectures: We examine task offloading topology models and their patterns, focusing on resource management and control architectures across mixed, mobile, and fixed device-fog interaction categories.

- DRL-Based Approaches in Fog Environments: We explore the application of DRL for task offloading and resource allocation in dynamic fog and edge environments, highlighting current practices and future opportunities to enhance intelligent, adaptive systems.

- Real-World Use Cases and Emerging Strategies: Through a synthesis of cutting-edge research and practical applications, we demonstrate how DRL-based offloading can drive innovation in smart device and fog resource management.

- Research Gaps and Future Directions: We identify open challenges and future research directions in DRL-based task offloading within the fog computing paradigm.

The remainder of this paper is organized as follows: Section 2 provides background information and reviews related work. Section 3 outlines the research methodology. Section 4 presents the findings and analysis. Section 5 discusses the DRL-based task offloading architecture in fog computing environments; its subsections extend the discussion to the DL, RL, and DRL taxonomy, DRL algorithms, task execution models, and applications of DRL-based task offloading in fog computing. Section 6 discusses DRL approaches integrated with QoS optimization strategies. Section 7 highlights future research directions and open issues. Section 8 concludes the paper.

2. Background and Related Work

In this section, we present a four-layered task offloading architecture, followed by a comprehensive review of related work.

2.1. Device-Edge-Fog-Cloud Architecture

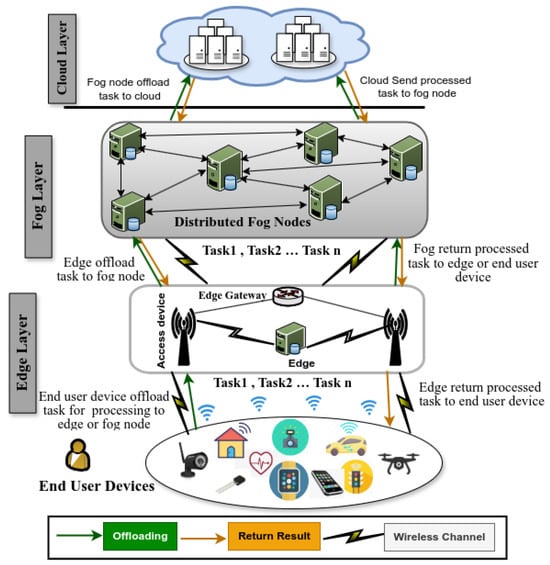

Figure 1 shows a high-level task offloading architecture consisting of four layers: end-user devices, edge, fog, and cloud. The first layer includes various end-user devices with limited computing power. The second layer consists of edge nodes that offer immediate, low-latency support for task offloading and often operate closely with the end-user devices. The third layer, the primary focus of this article, is the fog layer, which provides distributed, intermediate computing resources located near the data source. Finally, the fourth layer comprises centralized cloud data centers, suited for large-scale processing tasks that are not time-sensitive.

Figure 1.

Edge–fog–cloud continuum architecture.

Edge computing is a distributed computing platform in which computation, storage, and networking resources are deployed at or near the network’s edge, close to where data are generated. This infrastructure can include gateways, base stations, or local edge servers, enabling low-latency processing without relying solely on centralized cloud resources [15]. As illustrated in Figure 1, this proximity-based model places access nodes alongside the end-user devices. Edge computing is inherently device-centric and localized, with performance highly dependent on the strategic placement of edge servers, particularly in dynamic platforms such as the Internet of Vehicles (IoV) [16].

In contrast, fog computing introduces an additional intermediate layer between edge devices and the cloud, comprising a distributed network of fog nodes capable of more complex, scalable, and context-aware processing tasks [4]. It adopts a collaborative and horizontally scalable architecture, enabling multiple nodes to share resources, orchestrate services, and perform real-time analytics across wider geographic areas. This makes it especially well-suited for large-scale, latency-sensitive applications, such as smart transportation and industrial IoT, that require rapid decision-making without relying solely on centralized cloud infrastructure.

In summary, fog computing and edge computing share the goal of bringing computation closer to data sources to reduce latency and bandwidth usage, yet they differ in architecture, scope, management, scalability, security, and functionality [15]. Singh et al. [17] and Laroui et al. [18] note that both paradigms follow distributed architectural principles but characterize fog computing as decentralized, flat, and hierarchical, with horizontal node collaboration enabling scalable and flexible service deployment.
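The latency argument behind this layering can be made concrete with the simplified delay model commonly used in offloading studies: completion time = transmission delay (input size over uplink rate) + propagation delay + computation delay (required CPU cycles over the CPU frequency allotted to the task). The sketch below is illustrative only; the `Layer` type, `completion_time`, `best_site`, and every numeric parameter are hypothetical assumptions, and queuing and result-return delays are ignored.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Layer:
    """An execution site; every numeric value used below is hypothetical."""
    name: str
    uplink_bps: Optional[float]  # device-to-site uplink rate in bits/s (None = run on the device)
    cpu_hz: float                # CPU cycles per second allotted to the task
    propagation_s: float         # propagation/backhaul delay to reach the site, in seconds


LAYERS = [
    Layer("device", None, 1e9, 0.0),
    Layer("edge", 50e6, 5e9, 0.002),
    Layer("fog", 40e6, 20e9, 0.005),
    Layer("cloud", 20e6, 100e9, 0.050),
]


def completion_time(data_bits: float, cycles: float, layer: Layer) -> float:
    """Transmission + propagation + computation delay; queuing and result return are ignored."""
    transmission = 0.0 if layer.uplink_bps is None else data_bits / layer.uplink_bps
    return transmission + layer.propagation_s + cycles / layer.cpu_hz


def best_site(data_bits: float, cycles: float) -> str:
    """Name of the layer with the lowest estimated completion time."""
    return min(LAYERS, key=lambda layer: completion_time(data_bits, cycles, layer)).name


print(best_site(1e6, 0.5e9))  # light, latency-sensitive task -> fog
print(best_site(2e6, 40e9))   # compute-heavy task -> cloud
```

With these assumed numbers the fog node wins for the light task (about 55 ms versus 105 ms in the cloud), whereas the compute-heavy task finishes fastest in the cloud (0.55 s versus about 2.06 s on the fog node), mirroring the division of labor between fog and cloud described above.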

2.2. Previous Surveys

Task offloading has emerged as a significant research focus in the context of fog computing, and numerous survey studies have explored this topic in depth. Table 1 presents a consolidated overview of key surveys related to fog-based task offloading, summarizing the various problem-solving approaches adopted in each work.

Lahmar and Boukadi [19] focus on heuristic, meta-heuristic, and exact matching optimization techniques for resource allocation in fog computing, aiming to enhance QoS for latency-sensitive applications. Hong and Varghese [20] provide a comprehensive classification framework encompassing fog computing architectures, infrastructures, and underlying algorithms. Similarly, Salaht et al. [21] examine the challenges of service placement and resource management in both edge and fog computing environments. Kar et al. [22] investigate task offloading in federated systems, offering a comparative classification of edge, fog, and cloud computing layers, along with a roadmap of offloading strategies tailored to different federated scenarios. Finally, Mukherjee et al. [23] discuss the use of cloudlets for mobile offloading, addressing both mobile and non-mobile application contexts. Most of these studies employ conventional centralized approaches for task offloading and resource allocation, where multiple smart user devices offload tasks to a central edge node for decision-making. While this model simplifies coordination, it limits the flexibility and scalability of task offloading in distributed fog computing environments.

Table 1.

Summary of related surveys.


| Reference | Approaches | Focus | Architectural Scope |
|---|---|---|---|
| [24] | Distributed ML (DML) | Distributed computation | IoT–Edge computing |
| [6] | ML | Resource allocation | Fog computing |
| [13] | DRL | Resource management | IoT–Fog computing |
| [14] | DRL | Computation offloading | Edge computing |
| [19] | ML | Resource allocation | Fog-to-Cloud and Fog-to-Fog |
| [25] | ML | Communication and computation | IoT–Edge-to-Cloud computing |
| [26] | ML, RL | Mobile-based computation offloading | Smart user device–MEC |
| [11] | ML | Resource management | IoT–Fog |
| [22] | Traditional/ML | Task offloading in federated system | Edge–Cloud and Fog–Fog |
| [27] | FRL | Offloading performance in RL | IoT–Edge–Fog–Cloud |
| [12] | RL | Resource allocation for task offloading | IoT–Fog–Cloud |
| [1] | RL | Computation offloading | IoT–Edge |
| [28] | ML | Computation offloading | Edge and Fog |
| Our Work | DRL | Task offloading and resource allocation | IoT–Edge–Fog |

Salaht et al. [21] conducted a comprehensive survey on the taxonomy of service placement problems, focusing on two primary scenarios: (i) assigning services in a way that satisfies system requirements while optimizing a specific objective, and (ii) minimizing latency while ensuring acceptable QoS during service deployment. Their work primarily addresses the critical question: “Where should a service be deployed and executed to best meet QoS objectives?” Building on this foundation, the current survey extends the discussion to DRL approaches for task offloading within smart computing environments, specifically between smart user devices, the edge, and the fog layer. This shift in perspective emphasizes the dynamic and adaptive nature of DRL in supporting real-time service demands and complex offloading decisions. To support the development of a DRL-based task offloading and resource allocation framework, Section 5 presents the task computation model and relevant application scenarios in detail, forming the basis for modeling intelligent offloading strategies in distributed fog computing environments.

The potential of DRL to enhance task offloading efficiency across various architectural settings has been recognized. Fahimullah et al. [11] examined different machine learning (ML) approaches for resource management in dynamic fog computing environments. Their findings indicate that Q-learning and DRL are among the most commonly applied techniques; however, their work does not focus specifically on task offloading. Among the studies that do target offloading, Shakarami et al. [29] provide a comprehensive review of computation offloading in IoT–MEC ecosystems using supervised, unsupervised, and RL methods. Similarly, Rodrigues et al. [25] explore ML techniques while highlighting the challenges posed by high-dimensional data in making offloading decisions. However, neither study addresses the dynamic status of resource availability or the inter-server communication required for real-time updates in distributed environments. To address this, Hortelano et al. [1] apply RL techniques to the task offloading problem in IoT–Edge networks. Nonetheless, their proposed architecture primarily follows a many-to-one offloading model, in which multiple user devices offload tasks to a single edge node, limiting its applicability to fully distributed fog architectures. Further advancing this field, Tran-Dang et al. [12] present a survey on the use of reinforcement learning for fog resource allocation, aiming to optimize task execution performance. However, their review also reveals that a key challenge remains: effectively deciding where to offload tasks in dynamic and distributed fog environments has yet to be fully addressed.

Several studies have surveyed fog computing from a range of perspectives, including resource allocation, infrastructure, algorithms, architecture, management models, and computation offloading [19,22,30,31]. While many of these works explore task offloading in fog environments, there is still limited focus on the opportunities and challenges presented by DL, RL, DRL, and federated learning (FL), particularly in the context of distributed fog node architectures. These gaps highlight the need for further investigation into adaptive task offloading strategies capable of operating in dynamic and heterogeneous fog computing ecosystems. To address this, the current study explores the application of AI/ML techniques, specifically, DL, RL, and DRL, for many-to-many task offloading and resource allocation. The scenario considered involves multiple smart user devices offloading tasks to multiple distributed fog nodes, aiming to meet the demands of real-time, latency-critical applications. In particular, the study focuses on how DRL can improve offloading decisions and optimize resource utilization between user devices and fog environments. It also provides insights into knowledge-driven multi-agent DRL approaches and their potential applications in emerging intelligent systems.

3. Research Methodology and Materials

This section explains the methodology used in conducting the study. The structure of the survey, including the research questions, keyword selection, and literature search strategy, is detailed in the following subsections.

3.1. Methodology

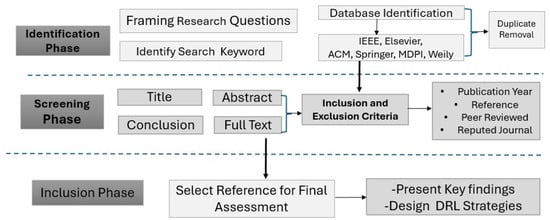

This survey covers research from 2017 to June 2025 on recent advances and emerging trends in DRL-based task offloading and resource allocation in fog computing. To ensure a rigorous and reproducible review process, we adopted a systematic methodology based on the widely recognized guidelines proposed by Kitchenham et al. [32] for conducting systematic literature reviews in software engineering. This structured approach enables the identification, evaluation, and synthesis of relevant studies in a transparent and methodical manner. As illustrated in Figure 2, the methodology is organized into three main phases, each designed to support a clear progression from research question formulation to literature selection, data extraction, and synthesis of the findings [33].

Figure 2.

Steps of a comprehensive literature review process [33].

3.2. Formulation of Research Questions

We began by formulating key research questions aimed at exploring DRL-based task offloading approaches across various scenarios relevant to business applications. These foundational questions were carefully constructed to guide the scope of the literature review, identify knowledge gaps, and examine how DRL can be applied to optimize offloading decisions, improve resource allocation, and meet performance requirements in dynamic and distributed fog computing environments. Table 2 presents the research questions alongside their underlying motivations, helping to narrow the focus of the study.

Table 2.

RQs with their motivation.

3.3. Study Selection

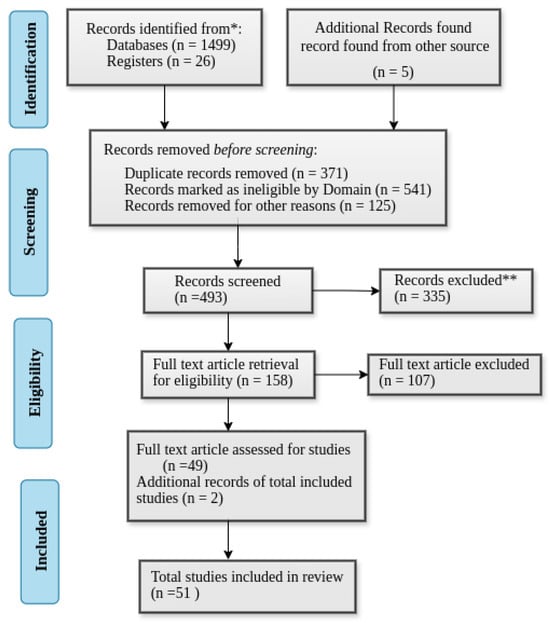

We followed the PRISMA 2020 framework. Figure 3 shows the resulting flow diagram for the records collected from research databases and other sources, documenting every step we followed to organize and select our records.

Figure 3.

Paper selection flow diagram (* indicates the number of records identified from each database and register, and ** indicates the number of records excluded by automation tools, and manually by human review).

3.3.1. Eligibility Criteria

The inclusion criteria encompass research focused on task offloading and resource allocation between user devices, edge computing, and fog computing. Eligible works include articles published in peer-reviewed journals that address task offloading problems using DL, RL, and DRL techniques. The review also considers studies that propose and evaluate ML-based approaches for task offloading and resource allocation in end-user device–fog computing scenarios. Furthermore, selected research must examine performance metrics such as energy efficiency, latency, throughput, resource utilization, and channel allocation. Only full-text articles published in journals indexed in the Web of Science, Scopus, or Science Citation Index (SCI) databases are included.

The exclusion criteria eliminate studies that do not specifically address DRL-based task offloading and resource allocation, including those primarily focused on unrelated IoT–fog topics. Non-peer-reviewed materials such as book chapters, conference abstracts, and other grey literature are excluded. Papers lacking sufficient methodological detail, such as case reports without clear procedures, are also omitted. Additionally, studies that do not involve task offloading between the user device and the fog layer—such as those focusing solely on cloud computing or edge computing without considering the fog—are excluded.

3.3.2. Search Strategy and Data Collection Process

We combined search terms with the Boolean operators AND and OR to query scientific databases. After refining the terms, we used the following search query to retrieve candidate research works: [(“Task offloading” OR “Resource allocation” OR “Machine learning” OR “DL and RL-based task offloading” OR “DRL based task offloading”) AND (“fog computing” OR “fog nodes” OR “distributed computations”)].
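Keeping the same term lists across databases is easier when the query is assembled programmatically. The sketch below uses two hypothetical helpers (`or_group` and `build_query` are illustrative names, not a database API) that reproduce the structure of the query above as a string; each database's advanced-search syntax (field tags, quoting rules) differs, so the output is a starting point rather than a ready-to-paste query.

```python
from typing import List


def or_group(terms: List[str]) -> str:
    """Quote each phrase and join the group with OR inside parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(topic_terms: List[str], context_terms: List[str]) -> str:
    """AND together the topic group and the fog-context group."""
    return f"{or_group(topic_terms)} AND {or_group(context_terms)}"


TOPIC_TERMS = ["Task offloading", "Resource allocation", "Machine learning",
               "DL and RL-based task offloading", "DRL based task offloading"]
CONTEXT_TERMS = ["fog computing", "fog nodes", "distributed computations"]

print(build_query(TOPIC_TERMS, CONTEXT_TERMS))
```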

We designed a data extraction form based on QoS metrics such as energy consumption, latency, throughput, resource utilization, and fault tolerance to collect information from articles. Additionally, to capture relevant information about included studies, the data extraction form considers the year of publication, journal impact factors, study design, fog architecture, ML methods and algorithms, task characteristics, and evaluation methodology.
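A data extraction form of this kind maps naturally onto a record type. The sketch below is a hypothetical encoding (`ExtractionRecord`, `metric_counts`, the field names, and the placeholder study IDs are our assumptions, not a published schema) showing how per-study entries could be tallied by QoS metric.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set

# QoS metrics captured by the extraction form, as listed in the text.
QOS_METRICS = ("energy consumption", "latency", "throughput",
               "resource utilization", "fault tolerance")


@dataclass
class ExtractionRecord:
    """One study's entry in the data extraction form (hypothetical schema)."""
    study_id: str
    year: int
    journal_impact_factor: Optional[float]
    study_design: str
    fog_architecture: str
    ml_methods: List[str] = field(default_factory=list)
    task_characteristics: str = ""
    evaluation_method: str = ""
    qos_metrics: Set[str] = field(default_factory=set)


def metric_counts(records: List[ExtractionRecord]) -> Dict[str, int]:
    """Number of studies reporting each QoS metric (metric names outside the form are ignored)."""
    return {m: sum(m in r.qos_metrics for r in records) for m in QOS_METRICS}


records = [
    ExtractionRecord("S1", 2023, None, "simulation", "device-fog", ["DQN"],
                     qos_metrics={"latency", "energy consumption"}),
    ExtractionRecord("S2", 2024, None, "simulation", "device-edge-fog", ["TD3"],
                     qos_metrics={"latency"}),
]
print(metric_counts(records)["latency"])  # 2
```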

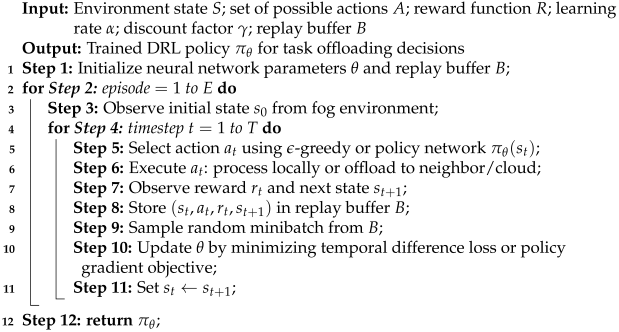

3.4. Synthesis of Common DRL Workflow

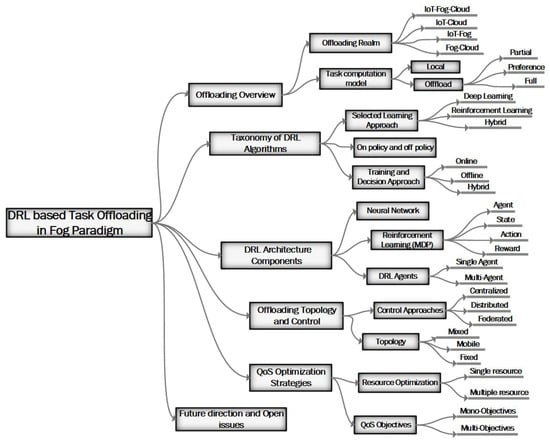

While the primary focus of this survey is on summarizing existing works, Algorithm 1 provides generalized pseudocode that synthesizes the DRL-based task offloading process commonly reported in the literature [34,35,36,37,38]. This pseudocode is not a novel algorithm but an illustrative abstraction of the workflow typically reported across the reviewed studies. Figure 4 presents the detailed literature survey plan, structured as a taxonomy framework that categorizes key aspects of task offloading and execution in the fog computing paradigm.

Algorithm 1: Generalized DRL Workflow for Task Offloading in Fog Computing

Figure 4.

Overall survey structure.
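DRL offloading workflows in the reviewed literature typically share one loop: observe the system state, choose an offloading action, receive a QoS-based reward, store the experience, and update the policy from replayed experience. The sketch below runs that loop on a hypothetical toy environment and is not a reproduction of Algorithm 1 or of any surveyed method. Assumptions: the state is the task size plus the load of three fog nodes; action 0 executes locally and action i offloads to fog node i; the reward is the negative of an illustrative delay. To stay dependency-free, a linear Q-function over hand-crafted features stands in for the deep network and no target network is used; `gamma` defaults to 0 because in this toy setting the next task does not depend on the current action. All class names, delay coefficients, and hyperparameters are assumptions.

```python
import random

N_FOG = 3                # hypothetical number of fog nodes
ACTIONS = N_FOG + 1      # action 0 = execute locally, action i = offload to fog node i


class ToyFogEnv:
    """Hypothetical environment: each step brings one task; state = [size, load_1..load_N]."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.size, self.loads = 1.0, [0.0] * N_FOG

    def observe(self):
        self.size = self.rng.uniform(0.5, 2.0)                  # task size (arbitrary units)
        self.loads = [self.rng.random() for _ in range(N_FOG)]  # fog-node utilization in [0, 1)
        return [self.size] + self.loads

    def step(self, action):
        """Reward = -delay under an illustrative delay model (not from any surveyed study)."""
        if action == 0:
            delay = 1.0 * self.size                                 # slow local CPU
        else:
            load = self.loads[action - 1]
            delay = 0.1 * self.size + 0.3 * self.size * (1.0 + 2.0 * load)  # uplink + loaded fog CPU
        return -delay


def features(state):
    """Hand-crafted features so that a linear model can represent the toy delays."""
    size, loads = state[0], state[1:]
    return [1.0, size] + [size * load for load in loads]


class LinearQ:
    """Q(s, a) = w_a . phi(s); a stand-in for the deep Q-network of a real DRL agent."""

    def __init__(self, dim, lr=0.02):
        self.w = [[0.0] * dim for _ in range(ACTIONS)]
        self.lr = lr

    def q(self, phi, a):
        return sum(w * x for w, x in zip(self.w[a], phi))

    def update(self, phi, a, target):
        err = target - self.q(phi, a)                               # TD error
        self.w[a] = [w + self.lr * err * x for w, x in zip(self.w[a], phi)]

    def greedy(self, phi):
        return max(range(ACTIONS), key=lambda a: self.q(phi, a))


def train(steps=2000, gamma=0.0, epsilon=0.1, batch=32, seed=0):
    env, rng = ToyFogEnv(seed), random.Random(seed + 1)
    agent = LinearQ(dim=2 + N_FOG)
    replay = []                                                     # experience replay buffer
    phi = features(env.observe())
    for _ in range(steps):
        # 1) Epsilon-greedy offloading decision.
        a = rng.randrange(ACTIONS) if rng.random() < epsilon else agent.greedy(phi)
        # 2) Execute the decision, then observe the reward and the next task.
        r = env.step(a)
        phi_next = features(env.observe())
        replay.append((phi, a, r, phi_next))
        # 3) Replay a minibatch (sampled with replacement) and regress toward TD targets.
        for _ in range(batch):
            p, pa, pr, pn = replay[rng.randrange(len(replay))]
            target = pr + gamma * max(agent.q(pn, b) for b in range(ACTIONS))
            agent.update(p, pa, target)
        phi = phi_next
    return agent


agent = train()
# A large task with fog node 2 idle and nodes 1 and 3 saturated.
print(agent.greedy(features([2.0, 1.0, 0.0, 1.0])))
```

After training, the greedy policy in this toy setting is expected to favor the least-loaded fog node. Replacing `LinearQ` with a neural network, adding a target network, and letting actions change future node loads (with `gamma > 0`) would move the sketch closer to the full DQN-style agents discussed in the surveyed studies.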

4. Findings and Analysis

This section presents the key findings derived from the analysis of selected studies and evaluates their implications within the context of DRL-based task offloading and resource allocation in fog computing environments. This section interprets the outcomes in relation to the research objectives, highlighting performance trends, the comparative strengths and weaknesses of existing approaches, and their alignment with identified evaluation metrics. Furthermore, it explores how the results contribute to advancing the field, addresses potential limitations, and discusses the practical relevance of the findings in real-world fog computing scenarios.

4.1. Trend Analysis

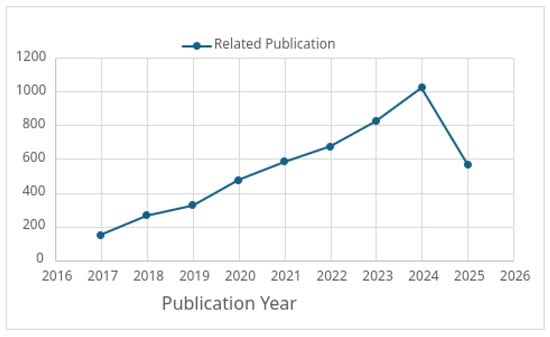

Figure 5, based on the Web of Science (WoS) research trend analysis tool, highlights the growing importance and increasing volume of research that integrates fog computing with machine learning (ML) techniques to address task offloading and resource allocation challenges. Notably, advanced methods such as deep learning (DL), RL, DRL, and hybrid approaches are increasingly applied to support real-time application requirements in dynamic environments.

Figure 5.

Distribution of the publications from 2017 to 2025.

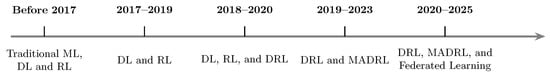

Figure 6 illustrates the progression of the learning algorithms used in the computation offloading and resource allocation in fog computing environments. Initially, traditional ML, DL, and RL techniques were applied in centralized device-to-cloud offloading models. This was followed by the adoption of decentralized offloading in device-to-edge-to-cloud systems, device-to-MEC, and device–fog–cloud, and the emergence of MADRL for multi-UAV and multi-agent fog coordination. More recently, the field has shifted toward decentralized and federated MADRL in fog and edge environments.

Figure 6.

Timeline of learning algorithms for task offloading and resource allocation in fog computing environments.

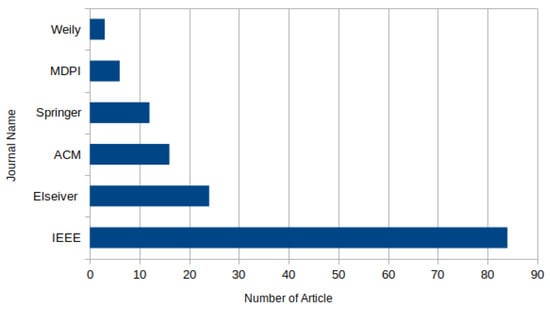

4.2. Bibliometric Analysis

Figure 7 illustrates the distribution of published articles on DRL-based task offloading in fog computing across major academic journals and publishers. To deepen the bibliometric analysis of DRL applications in fog computing, we also consider publication-year trends and the split between conference and journal publications, which offer valuable context on how the field has evolved. Research activity has risen noticeably over the past decade, particularly after 2018. This rise follows the maturation of fog computing as a fundamental infrastructure for low-latency, distributed IoT services and the wider use of DRL methods across disciplines.

Figure 7.

Journal database.

Between 2016 and 2018, research on fog-based resource management mostly used either DL alone or conventional RL. Starting in 2019, however, the number of DRL-specific publications grew dramatically. This shift was driven by growing recognition that DRL, by combining perception (DL) with sequential decision-making (RL), is well suited to the complexity and dynamism of fog environments. More sophisticated paradigms, including multi-agent DRL (MADRL) and federated DRL, began to attract research attention in 2022, addressing scalability and decentralization more effectively. These patterns suggest that the field is growing not only in volume but also in methodological sophistication and practical relevance.

Regarding dissemination channels, there is a significant divide between conference and journal publications. Conference proceedings account for the majority, approximately 60%, of the research output, with IEEE-sponsored venues such as ICC, INFOCOM, and Globecom being the most prominent. These platforms are particularly preferred for the rapid dissemination of novel ideas and early-stage prototypes, often showcasing new DRL-based algorithms or architectures for task offloading. Journal publications, on the other hand—which make up roughly 40% of the literature—usually show up in high-impact venues like IEEE Access, ACM Transactions, and Elsevier’s Future Generation Computer Systems. These works tend to provide more comprehensive evaluations, real-world use cases, and longitudinal performance assessments, often addressing challenges like energy efficiency, service latency, and resource utilization in depth.

In summary, the bibliometric trends in DRL-based fog computing research reflect both growing interest and advancing maturity. The steady year-over-year increase in publications underlines the field’s relevance, and the balance between conference and journal outputs indicates a healthy research pipeline, from early invention to applied validation. These insights show how DRL has developed from an emerging technology into a strategic tool for managing complex, distributed fog computing systems.

4.3. Empirical Distribution of DRL Algorithms

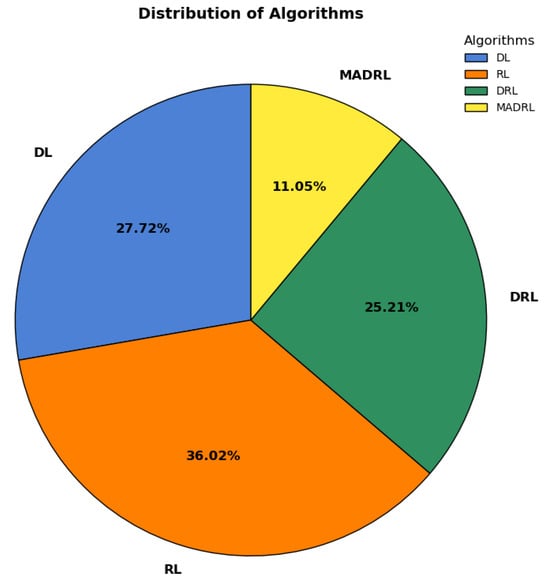

This section presents a comparative analysis of DRL in relation to DL and RL, followed by a discussion of the empirical distribution of various DRL algorithms. The pie chart in Figure 8, extracted from our database, shows that RL techniques are the most commonly used approach (36.02%) for solving the joint task offloading and resource allocation decision problem, followed by DL (27.72%), DRL (25.21%), and MADRL (11.05%). Taken together, DRL and MADRL account for 36.26% of the studies, slightly more than RL alone, indicating substantial adoption of DRL algorithms for task offloading and resource allocation in fog environments.
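The combined DRL and MADRL share of 36.26% follows directly from the Figure 8 percentages. The short check below restates the reported values (the dictionary is only a transcription of the figure) and confirms that the four categories cover all studies and that the combined DRL family narrowly exceeds RL.

```python
# Shares (%) of surveyed studies per approach, as reported for Figure 8.
shares = {"RL": 36.02, "DL": 27.72, "DRL": 25.21, "MADRL": 11.05}

total = round(sum(shares.values()), 2)                  # the four categories cover all studies
drl_family = round(shares["DRL"] + shares["MADRL"], 2)  # DRL and MADRL combined

print(total, drl_family, drl_family > shares["RL"])     # 100.0 36.26 True
```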

Figure 8.

Research work distribution using DL, RL, DRL, and MADRL algorithms.

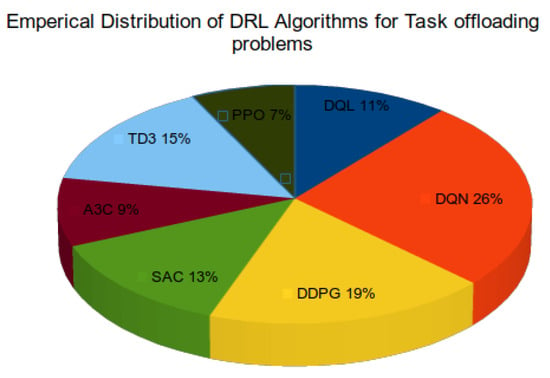

Figure 9 illustrates the empirical distribution of various DRL algorithms used to address task offloading problems in fog computing environments. Among the surveyed studies, Deep Q-Network (DQN) emerges as the most widely adopted algorithm, accounting for 26% of the implementations. DQN’s popularity can be attributed to its relatively simple architecture and strong performance in discrete action spaces, making it well suited for decision-making tasks like binary offloading or server selection.
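For a discrete action set such as {execute locally, offload to fog node 1, ..., offload to fog node N}, the two core DQN computations are short: picking the action with the highest Q-value, and forming the regression target y = r + γ·max_a′ Q_target(s′, a′) from a separate, periodically synchronized target network, with no bootstrapping at terminal states. In the sketch below, the function names and the action encoding (index 0 = local execution) are hypothetical, and the Q-values are given as plain lists; in a full DQN they are produced by neural networks.

```python
from typing import Sequence


def greedy_server(q_values: Sequence[float]) -> int:
    """Index of the highest-valued action (0 = execute locally, i = offload to fog node i)."""
    return max(range(len(q_values)), key=lambda a: q_values[a])


def dqn_target(reward: float, next_q_target: Sequence[float], gamma: float, done: bool) -> float:
    """DQN regression target y = r + gamma * max_a' Q_target(s', a'); no bootstrap when done."""
    return reward if done else reward + gamma * max(next_q_target)


print(greedy_server([-0.9, -0.4, -0.7]))  # 1 -> offload to fog node 1
print(dqn_target(-0.4, [-1.0, -0.5, -0.8], gamma=0.9, done=False))
```

The max over next-state actions is what makes DQN natural for server selection, and also the source of the overestimation bias that later actor–critic methods such as TD3 explicitly counter.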

Figure 9.

Empirical distribution of DRL algorithms.

Deep Deterministic Policy Gradient (DDPG) follows DQN with the second-highest share (19%). It is particularly effective in continuous action spaces, which are prevalent in fog computing scenarios requiring fine-grained resource allocation decisions. Twin Delayed Deep Deterministic Policy Gradient (TD3) and Soft Actor–Critic (SAC) follow with 15% and 13%, respectively, reflecting rising interest in off-policy actor–critic techniques that offer more stable training and better exploration. More recent research, however, shows a growing preference for TD3 because of its stronger performance across several aspects. Studies such as Wakgra et al. [37], Ali et al. [38], Wakgra et al. [39], and Chen et al. [40] report faster convergence, lower latency, lower energy consumption, and better cooperative decision-making in distributed settings with TD3. Furthermore, as emphasized by Tadele et al. [41] and Ali et al. [38], TD3 effectively handles large action spaces and supports decentralized decision-making. TD3 improves on earlier algorithms such as DDPG through clipped double-Q learning, delayed policy updates, and target policy smoothing, which together stabilize training and reduce overestimation bias. These characteristics make TD3 particularly well suited to complex, dynamic, and decentralized fog computing settings, especially when implemented in multi-agent systems.
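The three TD3 mechanisms named above show up in how the critic target and the actor update schedule are computed. The sketch below assumes a scalar state and a scalar continuous action in [0, 1] (for instance, a hypothetical fraction of a task to offload) and uses plain Python callables in place of the target actor and the twin target critics. All names are hypothetical; the defaults (noise σ = 0.2, noise clip 0.5, policy delay 2) are values commonly used with TD3, not values taken from the surveyed studies.

```python
import random
from typing import Callable, Optional

Actor = Callable[[float], float]          # state -> continuous action
Critic = Callable[[float, float], float]  # (state, action) -> Q-value


def clip(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def td3_target(reward: float, next_state: float, done: bool, gamma: float,
               actor_targ: Actor, critic1_targ: Critic, critic2_targ: Critic,
               noise_std: float = 0.2, noise_clip: float = 0.5,
               a_low: float = 0.0, a_high: float = 1.0,
               rng: Optional[random.Random] = None) -> float:
    """Critic target y for TD3; both critics regress toward this same y."""
    if done:
        return reward
    rng = rng or random.Random()
    # Target policy smoothing: add clipped Gaussian noise to the target actor's action.
    noise = clip(rng.gauss(0.0, noise_std), -noise_clip, noise_clip)
    a_next = clip(actor_targ(next_state) + noise, a_low, a_high)
    # Clipped double-Q: take the smaller of the two target critics to curb overestimation.
    q_next = min(critic1_targ(next_state, a_next), critic2_targ(next_state, a_next))
    return reward + gamma * q_next


def actor_update_due(step: int, policy_delay: int = 2) -> bool:
    """Delayed policy updates: refresh the actor and target networks every `policy_delay` critic steps."""
    return step % policy_delay == 0


# Toy stand-ins for the target networks (hypothetical, for illustration only).
def actor(s: float) -> float:
    return 0.5


def q1(s: float, a: float) -> float:
    return -1.0 - a


def q2(s: float, a: float) -> float:
    return -0.8 - a


y = td3_target(-0.2, 0.0, False, 0.9, actor, q1, q2, noise_std=0.0, rng=random.Random(0))
print(round(y, 4))                               # -1.55
print([actor_update_due(t) for t in range(4)])   # [True, False, True, False]
```

With zero noise the target reduces to r + γ·min(Q1′, Q2′) evaluated at the target actor's action; taking the pessimistic minimum is what counters the overestimation that a single critic, as in DDPG, tends to accumulate.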

The Asynchronous Advantage Actor–Critic (A3C) algorithm accounts for 9%, indicating moderate usage due to its ability to handle asynchronous updates and parallel agents, which align well with distributed fog environments. Deep Q-Learning (DQL), a classic variant of DQN, is used in 11% of the studies, while Proximal Policy Optimization (PPO) comprises the smallest share at 7%, despite its robust policy optimization characteristics.
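Much of PPO's robustness comes from its clipped surrogate objective, which limits how far a single update can move the policy away from the one that collected the data. The minimal sketch below computes the mean PPO-Clip objective from per-sample log-probabilities under the new and old policies and from advantage estimates; the function name and inputs are hypothetical, and computing the advantages themselves (for example, with generalized advantage estimation) is left out.

```python
import math
from typing import Sequence


def ppo_clip_objective(new_logp: Sequence[float], old_logp: Sequence[float],
                       advantages: Sequence[float], clip_eps: float = 0.2) -> float:
    """Mean PPO-Clip surrogate E[min(r*A, clip(r, 1-eps, 1+eps)*A)], to be maximized."""
    total = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(lp_new - lp_old)                     # pi_new(a|s) / pi_old(a|s)
        clipped = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
        # The min is pessimistic: moving the ratio outside [1-eps, 1+eps] earns no extra credit.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


# A sample whose probability doubled: the gain is capped at (1 + eps) * A when A > 0,
# while the full penalty is kept when A < 0.
print(ppo_clip_objective([math.log(2.0)], [0.0], [1.0]))
print(ppo_clip_objective([math.log(2.0)], [0.0], [-1.0]))
```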

Overall, the distribution highlights a clear preference for value-based techniques such as DQN, as well as growing acceptance of hybrid actor–critic systems like DDPG and TD3. These algorithms, well suited for task offloading in dynamic and resource-constrained fog computing scenarios, strike a pragmatic balance between decision-making performance and implementation complexity. Notably, recent research shows a clear shift from conventional value-based approaches toward more sophisticated actor–critic methods, including SAC and TD3. This transition reflects the increasing need for flexibility, training stability, and scalability to handle the heterogeneous and distributed nature of contemporary fog-based systems.

4.4. Performance Metrics

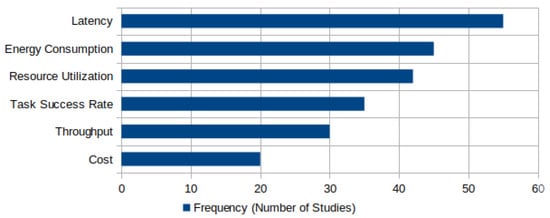

Figure 10 summarizes the key performance metrics commonly used in DRL-based task offloading studies within fog computing environments. The x-axis represents the frequency, i.e., the number of studies that use each metric, while the y-axis lists the evaluation metrics themselves.

Figure 10.

Key performance metrics used in DRL-based task offloading studies in fog computing environments.

Among the key performance metrics, latency emerges as the most frequently used metric, appearing in over 50 studies. This underscores the critical importance of minimizing task execution delays, particularly for latency-sensitive applications such as real-time monitoring, autonomous systems, and intelligent IoT services. Energy consumption follows closely, reflecting the widespread need to optimize power usage in resource-constrained devices and fog nodes. Resource utilization is also a prominent metric, used to evaluate how effectively computational and network resources are allocated across the distributed fog infrastructure. In addition, task success rate, which measures the percentage of offloaded tasks completed within QoS constraints, serves as an indicator of system reliability and robustness. Throughput, defined as the number of tasks completed per unit time, is commonly used to assess the efficiency of the DRL agent in managing dynamic workloads. Finally, the cost of a system, in terms of computation, communication, or monetary expenditure, is less frequently evaluated but remains an important consideration for large-scale, real-world deployments. In general, this distribution of metrics reflects the multi-objective nature of DRL-based optimization in fog computing, where trade-offs between latency, energy, and system performance must be carefully balanced.
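As a rough illustration of how these metrics are typically computed from an evaluation run, the sketch below summarizes a log of offloaded tasks. The record fields and the exact definitions (success means completed within the task's deadline; throughput counts completed tasks over the observation window) are assumptions chosen to match the descriptions above, not a format used by any particular study.

```python
from dataclasses import dataclass


@dataclass
class TaskRecord:
    # Hypothetical per-task log entry
    latency_ms: float   # end-to-end delay from submission to result
    energy_j: float     # energy spent on the device and fog node for this task
    deadline_ms: float  # QoS deadline attached to the task
    completed: bool     # whether the task finished at all


def summarize(records, window_s):
    n = len(records)
    # Task success: completed AND within the QoS deadline
    on_time = [r for r in records if r.completed and r.latency_ms <= r.deadline_ms]
    return {
        "avg_latency_ms": sum(r.latency_ms for r in records) / n,
        "avg_energy_j": sum(r.energy_j for r in records) / n,
        "task_success_rate": len(on_time) / n,
        # Throughput: completed tasks per second over the observation window
        "throughput_tasks_per_s": sum(r.completed for r in records) / window_s,
    }


log = [TaskRecord(20, 0.5, 50, True), TaskRecord(80, 1.5, 50, True),
       TaskRecord(30, 1.0, 50, False), TaskRecord(10, 1.0, 50, True)]
print(summarize(log, window_s=2.0))
```

Note that a task can count toward throughput yet still fail the success criterion (the 80 ms task above), which is one reason studies often report both.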

4.5. Comparative Analysis of DRL Algorithms

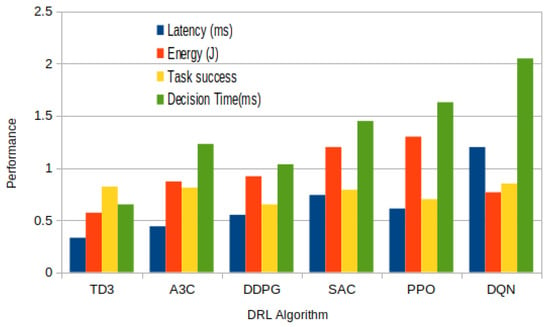

Figure 11 presents a comparative analysis of six prominent DRL algorithms—TD3, A3C, DDPG, SAC, PPO, and DQN—based on four performance metrics: latency (ms), energy consumption (J), task success rate, and decision time (ms). This comparison provides valuable insights into the trade-offs and suitability of each algorithm for task offloading in fog computing environments.

Figure 11.

DRL algorithm performance comparison.

Among the algorithms evaluated, TD3 consistently demonstrates the most favorable performance in all four metrics. It achieves the lowest latency and energy consumption, while also maintaining high task success rates and minimal decision time. This suggests that TD3 is well suited for real-time, resource-constrained fog systems, offering both efficiency and responsiveness. In contrast, DQN, despite being the most frequently used algorithm in the literature, performs the worst in terms of latency and decision time, with values peaking above all other methods. While DQN still exhibits a decent task success rate, its high latency and computational overhead indicate that it may not be optimal for highly dynamic or time-sensitive fog scenarios.

PPO and SAC show moderate performance, with PPO slightly outperforming SAC in energy efficiency and task success, though both suffer from relatively high decision times. These algorithms may offer more stable learning due to their policy optimization mechanisms but are potentially less efficient during real-time inference. DDPG and A3C fall in the mid-range across all metrics. DDPG provides a balanced trade-off between latency and task success, while A3C exhibits slightly higher energy consumption. These results highlight their viability for fog systems that require asynchronous training or continuous action spaces, though not necessarily under tight time constraints.

Overall, the analysis underscores TD3's advantage in balancing all key performance indicators (low latency, energy efficiency, fast decision-making, and high task success), making it particularly well suited for real-time, distributed fog computing applications. Meanwhile, DQN's high computational cost reveals a gap between its popularity in the literature and its practical suitability.

4.6. Single-Agent vs. Multi-Agent Performance

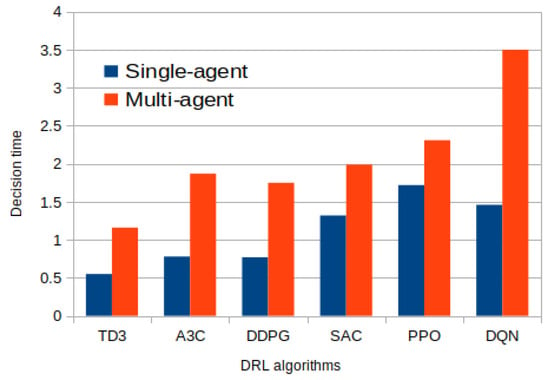

Figure 12 illustrates a comparative analysis of decision time between single-agent and multi-agent deep reinforcement learning (DRL) algorithms within the context of task offloading in fog computing environments. The results clearly indicate that single-agent DRL algorithms generally exhibit a lower decision time compared to their multi-agent counterparts. This performance difference can be attributed to the simpler decision-making process in single-agent systems, where only one agent interacts with the environment and makes task offloading decisions without the need for coordination or communication with other agents.

Figure 12.

Decision time: single-agent vs. multi-agent DRL.

Among all evaluated algorithms, TD3 consistently outperforms the others in both single-agent and multi-agent settings. TD3 achieves the lowest decision latency, making it highly suitable for real-time applications in fog computing, where rapid responsiveness is critical to meet strict QoS requirements. Its efficient decision-making can be credited to its core algorithmic design: delayed policy updates, clipped double Q-learning, and target policy smoothing stabilize training, while at inference time the learned deterministic actor selects an action with a single forward pass.

On the other end of the spectrum, DQN has the highest decision time in both configurations. This drawback highlights the limitations of value-based methods like DQN in dynamic, resource-constrained environments where swift adaptation is essential. The relatively high computational complexity and sequential decision-making structure of DQN likely contribute to its slower performance.

The comparison emphasizes a crucial trade-off: While multi-agent DRL (MADRL) systems are advantageous for distributed learning and scalability in fog networks, they introduce additional communication overhead and coordination complexity, which can negatively impact decision time. Therefore, for time-sensitive applications, single-agent DRL, particularly TD3, offers a more efficient and practical solution, whereas MADRL frameworks may be better suited for scenarios requiring decentralized coordination and long-term global optimization.

4.7. Task Offloading Decision Time

The task offloading decision time can be further analyzed by categorizing the agent's action space into discrete, continuous, and hybrid types, as summarized in Table 3. This classification provides insight into how the nature of the action space directly influences the complexity and speed of the decision-making process in DRL-based offloading systems.

Table 3.

Categorizing task offloading approach based on agent action space.

Discrete action spaces typically involve a limited set of predefined choices, such as selecting whether to offload a task or determining which fog node to send the task to. DRL algorithms operating in discrete spaces (e.g., DQN) are generally easier to implement but may face scalability limitations in complex environments, and they often incur higher decision latency as the number of actions increases. Continuous action spaces, on the other hand, allow for fine-grained control over decisions such as adjusting bandwidth allocation or CPU utilization levels. Algorithms such as DDPG, TD3, and SAC are well suited for continuous spaces, enabling more nuanced and adaptive offloading strategies. These models often demonstrate improved efficiency and faster decision times compared to discrete approaches, especially in dynamic fog environments where resource conditions vary continuously. Hybrid action spaces combine both discrete and continuous elements, allowing agents to perform complex multi-dimensional decisions, for example, choosing a target node (discrete) and allocating a specific amount of CPU resources (continuous). While offering greater flexibility and decision accuracy, hybrid models also introduce higher computational complexity, which can impact decision time unless optimized carefully.
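The three action-space types can be sketched as follows, assuming the network outputs are already available as plain numbers. The mapping (index 0 means local execution, indices 1..N mean fog nodes, and a tanh-squashed output gives a CPU share) and the parameterized treatment of the hybrid case are illustrative assumptions; hybrid action spaces can be handled in other ways as well.

```python
import math


def discrete_action(q_values):
    # Discrete space: choose the option (0 = local, 1..N = fog node) with the highest Q-value
    return max(range(len(q_values)), key=lambda i: q_values[i])


def continuous_action(pre_activation, low=0.0, high=1.0):
    # Continuous space: squash an unbounded actor output with tanh, then rescale to [low, high]
    return low + (math.tanh(pre_activation) + 1.0) * 0.5 * (high - low)


def hybrid_action(node_q_values, cpu_pre_activations):
    # Hybrid space: a discrete target node plus a continuous CPU share for that node
    node = discrete_action(node_q_values)
    return node, continuous_action(cpu_pre_activations[node])


print(discrete_action([0.1, 0.7, 0.3]))        # fog node 1
print(continuous_action(0.0, 0.0, 2000.0))     # e.g., CPU frequency in MHz
print(hybrid_action([0.2, 0.9], [5.0, 0.0]))   # (node, CPU share)
```

The discrete case requires scoring every option, so its cost grows with the number of nodes, whereas the continuous case produces its value directly from one output.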

By categorizing action spaces in this manner, researchers and practitioners can better select appropriate DRL models based on application requirements, system constraints, and performance goals—particularly with respect to decision latency in real-time fog computing scenarios.

5. Discussion

In this section, we critically analyze and interpret the findings presented in the previous sections, linking them to the overarching objectives of this study. We present the taxonomy of DL-, RL-, and DRL-based task offloading in fog computing. After that, we thoroughly examine how the reviewed approaches, frameworks, and methodologies contribute to advancing DRL-based task offloading and resource allocation in fog computing environments.

5.1. Taxonomy of DRL-Based Task Offloading in Fog Computing

Recently, AI/ML has been used to support task offloading decisions and resource allocation between smart user devices and fog computing environments. This intelligence-based decision approach helps the user device choose an offloading target from the available fog nodes, improving resource allocation and reducing task execution delay from the end device to the fog node. Computational tasks and resources are two fundamental elements to consider in fog-based task offloading approaches. In addition, whether an application is delay-tolerant or delay-sensitive affects decision performance. AI/ML currently plays a significant role in optimizing the task offloading decision process by learning an optimal policy. The integration of DL and RL, known as DRL, is used to advance the decision process, and hybrid learning techniques are also used to solve offloading decision problems.

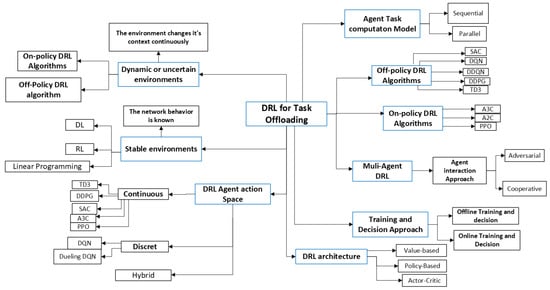

Figure 13 depicts the taxonomy of DL-, RL-, and DRL-based task offloading for stable and unstable fog computing environments. This work identifies secure offloading as an area for future work that has so far received little attention.

Figure 13.

Taxonomy of DL-, RL-, and DRL-based offloading.

The key is to exploit knowledge of fog node resources and task requirements for efficient resource allocation. Recently, learning algorithms have been used to extract such knowledge from networks. In this regard, Cho and Xiao [54] discussed a learning approach that captures the relationship between task resource demand and the current workload status of active fog nodes in a volatile vehicular computing network. In addition, Qi et al. [55] proposed a knowledge-driven service offloading decision scheme for heterogeneous computing resources using DRL, considering the access network, user mobility, and data dependency. Wang et al. [56] formulated task offloading as a joint task scheduling and resource allocation problem and designed an optimal policy using deep Q-learning for NOMA-based fog computing networks. The following subsections discuss the DL, RL, and hybrid learning approaches underlying DRL for addressing the task offloading problem while optimizing multi-objective QoS requirements.

5.1.1. Deep Learning (DL) Approaches

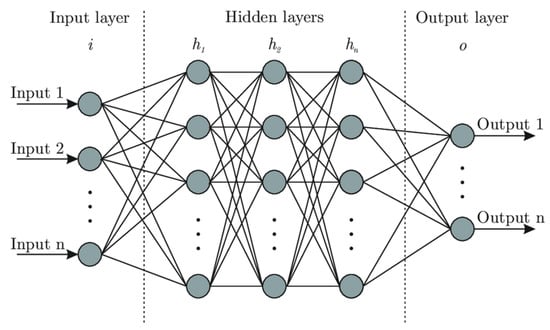

DL is a subset of machine learning that uses artificial neural networks (ANNs) with deep architectures (i.e., multiple hidden layers) to automatically learn hierarchical feature representations from data. As shown in Figure 14, a typical DL architecture consists of three main components: an input layer, hidden layers, and an output layer. The input layer maps raw features into the network; the hidden layers process the weighted feature inputs through activation functions to detect complex patterns; and the output layer produces final predictions based on the learning objective. Training relies on backpropagation, which computes the gradient of a loss function measuring the difference between predicted and actual outputs with respect to every weight; an optimizer such as gradient descent then uses these gradients to adjust the weights.

Figure 14.

Classical neural network architecture.
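The input/hidden/output structure and backpropagation described above can be sketched in plain Python as a network with one tanh hidden layer trained by stochastic gradient descent. The feature choice (normalized task size and fog-node load), the synthetic delay target, and all hyperparameters are hypothetical and serve only to show the mechanics.

```python
import math
import random


class TinyMLP:
    """Input layer -> one hidden tanh layer -> linear output, trained with backpropagation."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        # Hidden layer: weighted sum of the input features passed through tanh
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        # Output layer: linear combination of the hidden activations
        y = sum(w * hj for w, hj in zip(self.w2, h)) + self.b2
        return h, y

    def train_step(self, x, target, lr=0.05):
        h, y = self.forward(x)
        err = y - target  # dL/dy for the squared-error loss L = 0.5 * (y - target)^2
        for j, hj in enumerate(h):
            # Chain rule through the output weight and the tanh derivative (1 - h^2)
            grad_pre = err * self.w2[j] * (1.0 - hj * hj)
            self.w2[j] -= lr * err * hj
            self.b1[j] -= lr * grad_pre
            self.w1[j] = [w - lr * grad_pre * xi for w, xi in zip(self.w1[j], x)]
        self.b2 -= lr * err
        return 0.5 * err * err


# Hypothetical data: (normalized task size, fog-node load) -> observed delay
data = [((size, load), size * (1.0 + load)) for size in (0.2, 0.5, 0.8) for load in (0.0, 0.5, 1.0)]
net = TinyMLP(n_in=2, n_hidden=8)


def mean_loss():
    return sum(0.5 * (net.forward(x)[1] - t) ** 2 for x, t in data) / len(data)


before = mean_loss()
for _ in range(500):
    for x, t in data:
        net.train_step(x, t)
after = mean_loss()
print(f"loss before={before:.4f} after={after:.4f}")
```

Real DL models would use a framework with automatic differentiation; writing the gradients by hand here only makes each backpropagation step visible.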

DL models in fog computing environments, particularly when pre-trained, can significantly enhance network intelligence by supporting efficient task offloading and resource allocation. These models often leverage historical offloading data, such as device behavior, fog node availability, and resource usage patterns, to reduce computational overhead during inference. To improve adaptability in dynamic contexts, lightweight online training can be employed to fine-tune models for specific tasks, enabling situation-aware and real-time decision-making. By capturing complex patterns in the input data, DL facilitates collaborative processing between fog nodes and end-user devices, ultimately optimizing system performance under fluctuating conditions.

Several studies have demonstrated the practical utility of DL in latency-sensitive and mission-critical fog computing applications [49,57,58,59]. Despite these promising developments, the application of DL in highly dynamic fog environments remains limited. Task offloading in such contexts is often stochastic and is commonly modeled as a Markov decision process (MDP) [60], where task arrivals and system states vary across time and location. Pre-trained DL models, while effective in static settings, often fail to generalize under these dynamic conditions without frequent updates. Maintaining QoS thus requires continual model retraining, which can introduce significant computational overhead. Moreover, intermittent fog node availability, particularly in mobile or vehicular networks such as the Internet of Vehicles (IoV), further complicates consistent model deployment and real-time responsiveness.

5.1.2. Reinforcement Learning (RL) Approaches

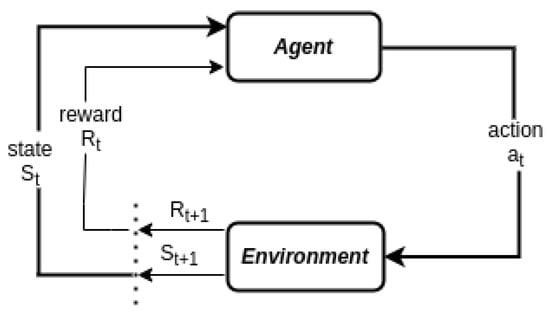

RL is a subfield of machine learning in which an agent learns to make decisions by continuously interacting with its environment. Through trial and error, the agent receives feedback in the form of rewards or penalties, which guide it in learning an optimal policy, a mapping from environmental states to actions, that maximizes the cumulative reward over time [16]. An RL model is typically defined by six key components: the agent, environment, state, action, reward, and state transition. In the context of fog computing, the environment represents the operational space involving user devices, edge servers, and fog nodes. The state is defined by the contextual information observed from the environment, such as the available computational resources of fog nodes, network bandwidth, latency conditions, and energy levels. The agent—typically a learning algorithm deployed on a user device or controller—perceives this state information and makes decisions about whether or not to offload a computational task.

When the agent takes an action (e.g., offloading to a specific fog node or choosing local execution), it observes the consequences of that action through reward signals. These rewards may be positive (e.g., low latency, efficient resource use) or negative (e.g., task failure, long execution time, high energy consumption). Over time, the agent refines its policy through a balance of exploration (trying new actions to discover their effects) and exploitation (choosing known actions that yield high rewards). The system then transitions to a new state based on the action taken and the environmental dynamics—this is referred to as the state transition.
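The agent-environment loop, reward feedback, exploration/exploitation trade-off, and state transitions described above can be sketched with tabular Q-learning and epsilon-greedy exploration. The two-state environment (low or high load on the nearest fog node), its reward values, and the random load transitions are hypothetical simplifications.

```python
import random

# Hypothetical toy environment. State: load of the nearest fog node (0 = low, 1 = high).
# Action: 0 = execute locally, 1 = offload. Rewards are negative delay costs (arbitrary units).
REWARD = {(0, 0): -3.0, (0, 1): -1.0, (1, 0): -3.0, (1, 1): -5.0}


def env_step(state, action, rng):
    reward = REWARD[(state, action)]
    next_state = rng.randint(0, 1)  # state transition: load changes randomly in this toy model
    return reward, next_state


def q_learning(steps=5000, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in (0, 1) for a in (0, 1)}
    state = 0
    for _ in range(steps):
        # Exploration vs. exploitation (epsilon-greedy)
        if rng.random() < epsilon:
            action = rng.randint(0, 1)
        else:
            action = max((0, 1), key=lambda a: q[(state, a)])
        reward, next_state = env_step(state, action, rng)
        # Learn from the reward signal: move Q(s, a) toward r + gamma * max_a' Q(s', a')
        best_next = max(q[(next_state, a)] for a in (0, 1))
        q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
        state = next_state
    return q


def greedy_policy(q):
    return {s: max((0, 1), key=lambda a: q[(s, a)]) for s in (0, 1)}


policy = greedy_policy(q_learning())
print(policy)
```

In this toy setting the learned policy should offload when the fog node is lightly loaded and execute locally when it is heavily loaded.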

In RL-based systems, the learning process can be implemented through a variety of training strategies, each offering distinct advantages depending on the complexity of the environment and the problem being addressed. One of the most widely used approaches is the value-based method, such as Q-learning, where the agent learns to estimate the expected utility or “value” of taking a specific action in a given state. This estimation is used to guide the agent toward actions that yield the highest long-term rewards. Alternatively, policy-based methods allow the agent to directly learn and optimize the policy function—a mapping from states to actions—without explicitly computing value functions. These methods are particularly useful in environments with large or continuous action spaces, where value estimation becomes computationally infeasible or less effective. A hybrid of the two, known as the actor–critic method, combines both value estimation and policy optimization to leverage the strengths of each.
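For contrast with the value-based example, the sketch below shows a one-step actor–critic: a softmax policy (the actor) is updated by a policy-gradient step, and a tabular state-value estimate (the critic) supplies the temporal-difference (TD) error that scores each action. The two-state environment and the learning rates are the same kind of hypothetical toy assumptions as before.

```python
import math
import random

# Hypothetical toy setting: state 0/1 = low/high fog-node load, action 0/1 = local/offload
REWARD = {(0, 0): -3.0, (0, 1): -1.0, (1, 0): -3.0, (1, 1): -5.0}


def softmax(prefs):
    m = max(prefs)  # subtract the max for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def actor_critic(steps=20000, alpha_actor=0.05, alpha_critic=0.1, gamma=0.9, seed=0):
    rng = random.Random(seed)
    theta = [[0.0, 0.0], [0.0, 0.0]]  # actor: action preferences per state
    v = [0.0, 0.0]                     # critic: state-value estimates
    state = 0
    for _ in range(steps):
        probs = softmax(theta[state])
        action = 0 if rng.random() < probs[0] else 1  # sample from the stochastic policy
        reward = REWARD[(state, action)]
        next_state = rng.randint(0, 1)
        # Critic: TD error measures how much better the outcome was than expected
        td_error = reward + gamma * v[next_state] - v[state]
        v[state] += alpha_critic * td_error
        # Actor: gradient of log pi(a|s) for a softmax policy is 1[a == action] - pi(a|s)
        for a in (0, 1):
            grad_log = (1.0 if a == action else 0.0) - probs[a]
            theta[state][a] += alpha_actor * td_error * grad_log
        state = next_state
    return [softmax(t) for t in theta], v


action_probs, values = actor_critic()
print(action_probs)
```

The actor never computes an explicit arg max over actions, which is why this family extends naturally to continuous action spaces, while the critic keeps the policy-gradient updates low-variance.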

RL systems may also be categorized based on whether they rely on an internal model of the environment. Model-based RL approaches involve learning or utilizing a predictive model of the environment’s dynamics to simulate future states and rewards, enabling the agent to plan its actions ahead of time. In contrast, model-free RL does not rely on any explicit modeling of the environment, learning policies or value functions directly from interactions with the environment. While model-free methods are generally more flexible and easier to implement, model-based approaches often require fewer samples and can be more efficient in complex, structured environments.
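A model-based agent first needs an estimate of the environment's dynamics. The following sketch shows one simple, assumed way to obtain it: count-based estimates of the transition probabilities and the mean reward from logged (state, action, reward, next state) tuples. The state and action labels are hypothetical.

```python
from collections import defaultdict


def estimate_model(transitions):
    """Count-based estimates of P(s' | s, a) and mean reward R(s, a) from (s, a, r, s') tuples."""
    counts = defaultdict(lambda: defaultdict(int))
    reward_sum = defaultdict(float)
    visits = defaultdict(int)
    for s, a, r, s_next in transitions:
        counts[(s, a)][s_next] += 1
        reward_sum[(s, a)] += r
        visits[(s, a)] += 1
    # Empirical transition probabilities and average rewards per (state, action) pair
    p_hat = {sa: {s2: c / visits[sa] for s2, c in nxt.items()} for sa, nxt in counts.items()}
    r_hat = {sa: reward_sum[sa] / visits[sa] for sa in visits}
    return p_hat, r_hat


experience = [("low", "offload", -1.0, "low"), ("low", "offload", -1.0, "high"),
              ("low", "offload", -2.0, "high"), ("high", "local", -3.0, "high")]
p_hat, r_hat = estimate_model(experience)
print(p_hat[("low", "offload")], r_hat[("low", "offload")])
```

Once such a model exists, the agent can plan by simulating transitions instead of interacting with the real fog network, which is where the sample-efficiency advantage of model-based RL comes from; a model-free agent skips this step and updates its values or policy directly from each tuple.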

In the context of fog computing, RL techniques have shown great promise for improving task offloading and resource allocation, particularly in dynamic and unpredictable conditions. The core objective in these scenarios is to train intelligent agents capable of making adaptive, real-time decisions about where tasks should be executed and how computational resources should be managed. RL agents can learn optimal strategies for offloading tasks from user devices to fog nodes or cloud servers by evaluating current system states—such as resource availability, network latency, and energy consumption—and selecting actions that maximize long-term performance. Specifically, RL has been applied in fog environments to minimize end-to-end latency, ensuring that delay-sensitive applications (e.g., real-time health monitoring or autonomous vehicle control) receive timely processing. It also plays a vital role in reducing energy consumption, both on resource-constrained user devices and fog nodes. Furthermore, RL enhances overall system performance by improving task throughput and success rates, and by balancing computational loads across multiple fog nodes, thereby avoiding bottlenecks and increasing the scalability of the fog infrastructure. By continuously learning from experience and adapting to changing network conditions, RL-based task offloading systems contribute to more robust, efficient, and autonomous fog computing architectures.

5.1.3. Hybrid Approaches

Conventional offloading approaches, such as rule-based methods, linear programming, game theory, heuristics, meta-heuristics, and matching theory, have been applied between user devices and fog nodes to address task offloading decisions and resource allocation problems. In addition, several studies explore hybrid algorithms that combine learning algorithms with conventional approaches to tackle task offloading decisions in fog computing environments. Representative works include RL with heuristics [61], Lyapunov-guided RL [43], federated RL [27], and DRL with FL [62,63]. Recently, more attention has been given to DRL, which combines DL with RL [9,51], and to algorithms combining FL with RL [10,27,63] for offloading problems.

However, the constantly changing formation of the fog and unpredictable service updates make it difficult for learning algorithms to converge quickly. To deal with this convergence problem, different learning algorithms and optimization approaches are combined to improve offloading decisions and resource allocation. Lee et al. [61] combine RL and heuristic approaches for resource management and resource allocation, respectively. Cao and Cai [64] used a non-cooperative game approach to handle communication and computation costs, together with machine learning to support distributed offloading decisions under dynamic conditions. Most of these approaches focus on global maxima and the immediate performance of the network. However, incorporating collective local and global knowledge can enhance the decision-making process. In conclusion, DRL algorithms become increasingly applicable to task offloading in such stochastic fog environments, where the action space continuously grows.

5.2. DRL-Based Task Offloading Architecture in Fog Computing

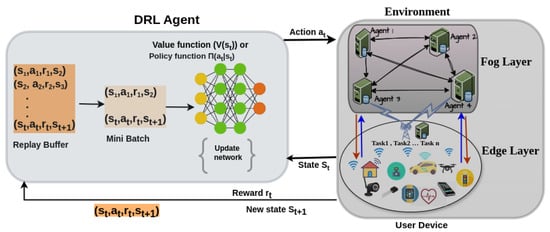

DRL has become a promising approach for managing task offloading in dynamic and heterogeneous fog computing environments. By integrating the perceptual strengths of DL with the adaptive decision-making of RL, DRL enables agents to learn optimal offloading strategies in real time, even under uncertainty. However, applying DRL in fog computing introduces challenges not encountered in standard DRL applications such as games or robotics, which typically assume centralized and fully observable environments. Fog environments, by contrast, are partially observable, distributed, and resource-limited. Each DRL agent is usually deployed at a fog node and makes decisions based on limited local information while adapting to dynamic workloads, node mobility, and resource heterogeneity. This necessitates decentralized multi-agent DRL (MADRL) architectures, in which agents act independently but may cooperate to improve overall system performance. The action spaces and reward structures in fog systems are also more complex, often encompassing multiple objectives such as minimizing latency, energy usage, and operational cost. Furthermore, due to limited computation and communication resources, DRL models in fog networks must be lightweight and scalable, leveraging techniques such as online learning or federated updates. Figure 15 illustrates the interaction between DRL agents and the fog environment, where agents continuously monitor task and resource statuses to make informed offloading decisions.

Figure 15.

DRL-based offloading architecture.

In DRL-based task offloading frameworks, intelligent agents are typically deployed at the fog node level to observe dynamic network states and task characteristics such as workload size, task deadlines, available bandwidth, and energy levels. Based on these observations, the agent makes real-time decisions about whether to execute tasks locally or offload them to neighboring fog nodes. This decision-making process is guided by RL principles, where the agent learns an optimal policy by interacting with the environment and maximizing cumulative rewards over time. As demonstrated in the works of Seid et al. [34] and Xu et al. [35], DRL agents adaptively learn offloading strategies that optimize key performance metrics such as latency, energy consumption, and resource utilization.

5.2.1. Architectural Elements of DRL

In the MDP-based architecture, the task offloading and resource allocation problem in fog computing is formulated as a Markov decision process (MDP). This framework provides a robust foundation for modeling sequential decision-making in dynamic, uncertain, and partially observable environments. Within the context of fog computing, the MDP addresses two key challenges: (1) managing state transitions that reflect the stochastic nature of resource availability, and (2) supporting the continuous training and adaptation of reinforcement learning agents under fluctuating system conditions. The MDP model is characterized by essential components such as the transition probabilities $P$, the reward function $r$, the initial state $s$ of each fog node, and the next state $s'$ reached after executing a given action $a$. This formulation enables DRL agents to iteratively interact with their environment, learn from observed outcomes, and gradually develop optimal policies for intelligent task offloading and efficient resource utilization.

Figure 16 models the learning dynamics, formally framed as the MDP tuple $(S, A, P, r)$, where:

- $S$ is a set of states, and $s_t \in S$ is the state at step $t$.

- $A$ is a set of actions, and $a_t \in A$ is the action at step $t$.

- $P(s' \mid s, a)$ is the state transition function, giving the probability that state $s'$ occurs after action $a$ is taken in state $s$.

- $r(s, a, s')$ is the reward when action $a$ is taken in state $s$ and the next state is $s'$.

Figure 16.

DRL modeling in MDP architecture.

State: The state represents the current context or situation of the environment as perceived by the agent. It includes all relevant information needed to make a decision. In fog computing, a state may include variables such as CPU usage, bandwidth availability, energy level, task queue length, and network latency at a fog node.

DRL Agent: The agent is the decision-making entity in a DRL system that interacts with the environment. It observes the current state, selects actions based on a learned policy, and updates its strategy over time to maximize long-term cumulative rewards. In fog computing, the agent is typically deployed at the fog node, where it learns when and where to offload tasks or allocate resources. In multi-agent environments, the agent interaction models are described as cooperative, adversarial, mixed (cooperative and competitive), hierarchical, learning-based and communication-based [24,65].

Action: In DRL-based task offloading in fog computing, the action is defined as executing the task locally and/or offloading it to fog nodes. The action is concrete: fog nodes are selected and resources are allocated according to the agent's policy within the fog environment. The agents first learn about the network through their interactions, based on resource status and task QoS requirements. Then, the decision is made either to execute locally or to offload to fog nodes. In the second stage, if the user device decides to offload its task to the nearest local fog node, the task's QoS requirements are attached to the task as metadata. The autonomous fog node then decides whether to execute the task locally or offload it to other fog nodes. When the decision is to offload, the fog node holding the task searches for resources such as bandwidth, computing power, and memory to process the offloaded task [66]. This exhaustive search over resources causes the search space to grow exponentially and increases run-time processing as task sizes increase in distributed fog computing. In support of this, Baek and Kaddoum [42] used a Deep Recurrent Q-Network (DRQN), and Liu et al. [46] used Deep Q-learning (DQL), to address offloading of computation-intensive tasks. They report that continuous updating raises a performance issue for task offloading: it focuses mainly on immediate performance, leading to performance degradation in the long run. Likewise, the mobility of fog nodes makes fog computing environments stochastic, creating uncertain connectivity between nodes and making state transitions difficult to manage. This highlights how the MDP can effectively model the dynamic nature of the network and address the challenges of task offloading.
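One simple way to see the exponential growth of an exhaustive search is to count joint offloading decisions. The sketch assumes, for illustration only, that each of $K$ tasks is independently assigned either to local execution or to one of $N$ fog nodes, giving $(N+1)^K$ joint assignments; real resource searches (bandwidth, CPU, memory) are larger still.

```python
from itertools import product


def joint_assignments(num_tasks, num_fog_nodes):
    """Enumerate every joint decision: each task runs locally (0) or on fog node 1..N."""
    return product(range(num_fog_nodes + 1), repeat=num_tasks)


def count_assignments(num_tasks, num_fog_nodes):
    # Closed form for the size of the joint search space
    return (num_fog_nodes + 1) ** num_tasks


for k in (2, 5, 10, 20):
    print(k, count_assignments(k, num_fog_nodes=4))
```

With only four fog nodes, 20 tasks already yield about $10^{14}$ joint assignments, which is why learned policies that map a state directly to an action are attractive compared with enumerating the space at run time.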

Reward function: In DRL, the reward can be positive or negative depending on the agent's decisions. The goal is to maximize the positive reward by minimizing task completion time and improving resource utilization during the execution of offloaded tasks. Negative rewards reflect high resource costs, such as energy consumption and latency. The agent uses the value function and the policy function to estimate the expected reward from its present observation and the transition probability distribution of the chosen action. The agent's goal is to maximize the cumulative reward by choosing, in each state at every iteration, the action that maximizes its current value estimate.
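A reward of this kind is often written as a negative weighted cost. The sketch below is one hypothetical form: latency normalized by the task deadline, energy in joules, equal weights, and a fixed penalty for missing the deadline. The weights, the normalization, and the penalty value are assumptions for illustration, not the reward used by any cited study.

```python
def offloading_reward(latency_ms, energy_j, deadline_ms,
                      w_latency=0.5, w_energy=0.5, deadline_penalty=10.0):
    """Negative weighted cost: lower latency and energy give a higher (less negative) reward."""
    # Normalize latency by the task's QoS deadline so it is comparable across tasks
    cost = w_latency * latency_ms / deadline_ms + w_energy * energy_j
    if latency_ms > deadline_ms:
        # Missing the QoS deadline is treated as a task failure and penalized heavily
        cost += deadline_penalty
    return -cost


print(offloading_reward(50, 2.0, 100))   # deadline met
print(offloading_reward(150, 2.0, 100))  # deadline missed
```

The weights encode the multi-objective trade-off directly, so changing them changes which offloading behavior the agent learns to prefer.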

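However, in this MDP-based learning process, the agent does not know in which direction to proceed to collect the maximum reward. This is where the Bellman equation, Equation (1), applies in RL: it relates the value of a state to the values of its successor states, giving the agent information carried over from the transitions of the MDP.

$$\pi^*(s) = \arg\max_{a \in A} \sum_{s' \in S} P(s' \mid s, a)\left[ r(s, a, s') + \gamma V^*(s') \right] \tag{1}$$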

Therefore, Equation (1) states that the optimal policy $\pi^*$ for a given state $s$ selects the action that maximizes the sum of the immediate reward and the discounted value of the next state, considering all possible next states $s'$ and their associated transition probabilities $P(s' \mid s, a)$. By applying Equation (1) recursively to each state in the environment, we obtain the optimal policy for the entire environment. The reward function presented by Liu et al. [46] relies on iterative computation of the optimal value function $V^*(s)$, which represents the maximum expected cumulative reward that an agent can receive from a given state $s$ under an optimal policy $\pi^*$. This value function is computed by value iteration over all states. It starts by initializing the value function to 0 for all states $s$. Then, at each iteration $i$, the algorithm updates the value of each state $s$ to the maximum expected cumulative reward over all possible actions that can be taken from that state. In the reward function, the hyperparameters $\gamma$ and $\alpha$ are used, respectively, to weight how much future rewards count toward the agent's goal (the discount factor) and to govern the pace at which the value parameters are updated (the learning rate).
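The value iteration procedure and the greedy policy of Equation (1) can be sketched for a small, fully known MDP. The two load states, the two actions, the transition probabilities, and the rewards below are hypothetical; in this toy model $r(s, a, s')$ does not depend on $s'$.

```python
STATES = ("low", "high")
ACTIONS = ("local", "offload")
# Hypothetical model: P[(s, a)] maps each next state s' to P(s' | s, a)
P = {
    ("low", "local"): {"low": 0.8, "high": 0.2},
    ("low", "offload"): {"low": 0.4, "high": 0.6},
    ("high", "local"): {"low": 0.5, "high": 0.5},
    ("high", "offload"): {"low": 0.3, "high": 0.7},
}
# Hypothetical rewards (negative costs); r(s, a, s') ignores s' in this toy model
R = {("low", "local"): -3.0, ("low", "offload"): -1.0,
     ("high", "local"): -3.0, ("high", "offload"): -5.0}


def r(s, a, s_next):
    return R[(s, a)]


def backup(V, s, a, gamma):
    # sum over s' of P(s'|s,a) * [ r(s,a,s') + gamma * V(s') ]
    return sum(p * (r(s, a, s2) + gamma * V[s2]) for s2, p in P[(s, a)].items())


def value_iteration(gamma=0.9, theta=1e-8):
    V = {s: 0.0 for s in STATES}  # V_0(s) = 0 for all states
    while True:
        # V_{i+1}(s) = max over a of the expected one-step backup
        V_new = {s: max(backup(V, s, a, gamma) for a in ACTIONS) for s in STATES}
        delta = max(abs(V_new[s] - V[s]) for s in STATES)
        V = V_new
        if delta < theta:
            break
    # Greedy policy extraction, as in Equation (1)
    policy = {s: max(ACTIONS, key=lambda a: backup(V, s, a, gamma)) for s in STATES}
    return V, policy


V, policy = value_iteration()
print(V, policy)
```

Value iteration needs the full model $P$ and $r$; model-free DRL agents approximate the same fixed point from sampled transitions instead.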

5.2.2. Single-Agent vs. Multi-Agent DRL

Fog computing is inherently distributed, relying on decentralized management for low-latency and scalable service delivery. In such architectures, users can execute their applications on one or more fog nodes. To support intelligent offloading and efficient resource allocation, AI/ML methods are increasingly adopted. However, when task and resource management rely on a single agent, performance can suffer in decentralized fog environments where nodes operate autonomously. Multi-agent learning (MAL) has emerged as a robust alternative, enabling fog nodes to coordinate their decisions. This cooperation helps reduce computational delays and improves overall resource utilization across the system. The challenge here is how to coordinate agent policies and inter-agent communication. Two MAL settings are considered in RL-based task processing. The first is the cooperative setting, in which fog nodes (DRL agents) collaborate toward common objectives and share information to achieve the best result for the team. The second is the adversarial setting, in which agents compete to achieve their individual goals. Recently, DRL-enabled MAL has been applied to various problem domains. MADRL has been used in MEC-enabled Industrial Internet of Things (IIoT) systems for multi-channel access and task offloading [67]. Lu et al. [68] designed a multi-agent DRL approach with independently learning agents to cope with stochastic, large-scale changes in end-user requirements and computational resources. Chen et al. [40] introduced cooperative MADRL for dynamic multi-device, multi-cloud networks with real-time computing requirements over varying wireless channels, using a centralized training and distributed execution strategy.
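The cooperative setting can be illustrated with a deliberately small example: two agents, each choosing which of two fog nodes receives its task, learn independently but share one team reward that is high only when they avoid sending tasks to the same node. This is a stateless, independent-learner sketch under assumed rewards and hyperparameters, not a full MADRL method.

```python
import random


def team_reward(a1, a2):
    # Shared (cooperative) reward: 1 if the two tasks go to different fog nodes, 0 if they collide
    return 1.0 if a1 != a2 else 0.0


def train_independent_learners(steps=2000, alpha=0.1, epsilon=0.1, seed=1):
    rng = random.Random(seed)
    q = [[0.0, 0.0], [0.0, 0.0]]  # q[agent][fog_node]: each agent's own value estimates

    def choose(agent):
        # Each agent explores and exploits using only its own estimates
        if rng.random() < epsilon:
            return rng.randint(0, 1)
        return max((0, 1), key=lambda a: q[agent][a])

    for _ in range(steps):
        a1, a2 = choose(0), choose(1)
        r = team_reward(a1, a2)
        # Independent updates, but both agents receive the same team reward
        q[0][a1] += alpha * (r - q[0][a1])
        q[1][a2] += alpha * (r - q[1][a2])
    return q


q = train_independent_learners()
greedy = [max((0, 1), key=lambda a: q[i][a]) for i in (0, 1)]
print(greedy)  # the two agents settle on different fog nodes
```

Even this minimal case shows the coordination problem mentioned above: which of the two equally good splits the agents settle on depends on their exploration history, and richer settings typically add communication or centralized training to steer that choice.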

5.3. Task Offloading Architecture

The architectural scope of task offloading in fog computing defines the structural arrangement and interaction between user devices, fog nodes, and cloud computing infrastructure. These architectures determine where and how computational tasks are offloaded and processed, which directly affects latency, energy efficiency, bandwidth utilization, and QoS. They also influence how learning-based offloading decisions are designed, specifically, how models explore and exploit task-resource coordination in distributed environments. In the user device–to-fog setup, tasks are offloaded directly from IoT devices to nearby fog nodes, which provide low-latency processing capabilities closer to the edge. In contrast, the user device–to-fog–to-cloud architecture introduces a hierarchical processing framework, where tasks are first offloaded to fog nodes and, if resource constraints are encountered, further offloaded to the cloud. The user device–to-cloud model bypasses intermediate fog layers, sending tasks directly to the cloud, which may result in higher latency but offers greater computational power. Lastly, the fog–to-cloud architecture supports inter-layer communication between fog and cloud for load balancing, resource sharing, and overflow handling.
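The latency trade-off between fog and cloud targets can be sketched with a common simplified delay model: transmission time plus computation time, plus an extra backhaul delay when the task travels on to the cloud. All numbers below (1 Mbit task, 20 Mbps uplink, a 5 GHz fog node, a 50 GHz effective cloud rate, 100 ms of wide-area delay) are hypothetical.

```python
def offload_latency_ms(task_bits, cpu_cycles, uplink_bps, cpu_hz, extra_hop_ms=0.0):
    """Simplified end-to-end delay: transmission + computation (+ any extra backhaul delay)."""
    transmit_ms = task_bits / uplink_bps * 1000.0
    compute_ms = cpu_cycles / cpu_hz * 1000.0
    return transmit_ms + compute_ms + extra_hop_ms


for label, cycles in (("light task", 1e8), ("heavy task", 1e9)):
    fog = offload_latency_ms(1e6, cycles, 20e6, 5e9)
    cloud = offload_latency_ms(1e6, cycles, 20e6, 50e9, extra_hop_ms=100.0)
    print(f"{label}: fog={fog:.0f} ms, cloud={cloud:.0f} ms")
```

Under these assumptions the fog node wins for the light task (70 ms vs. 152 ms), while the cloud's greater computational power outweighs its extra network delay for the heavy task (250 ms vs. 170 ms), which is the kind of trade-off an offloading policy has to learn.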

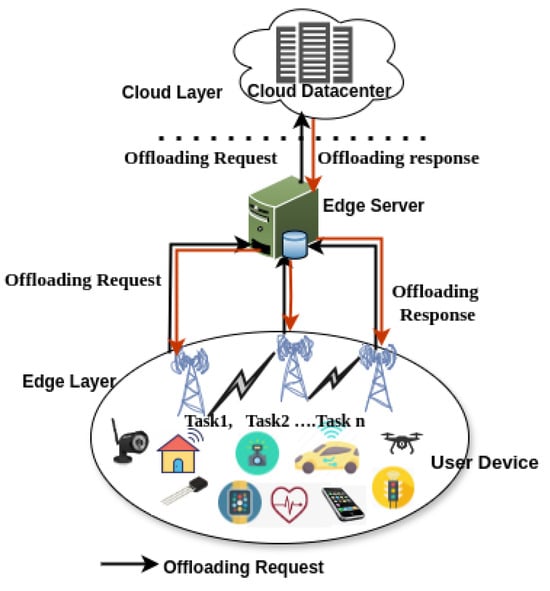

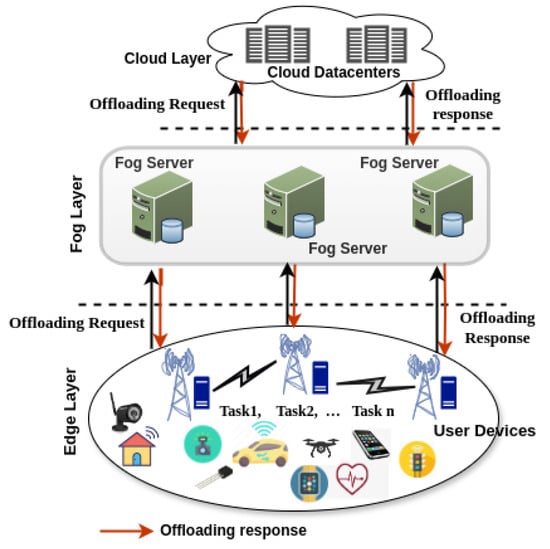

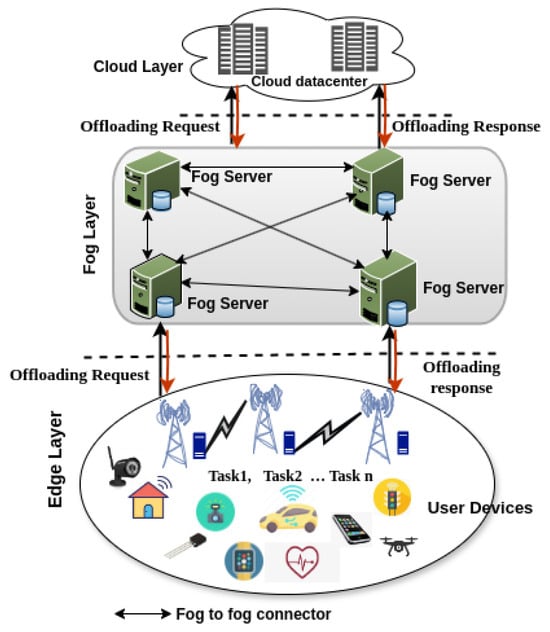

The current task offloading scenarios in fog computing are illustrated through the three architectures shown in Figure 17 (many-to-one), Figure 18 (many-to-many without fog-to-fog), and Figure 19 (many-to-many with fog-to-fog), where user devices offload computational tasks to fog nodes for processing.

Figure 17.

Task offloading architecture where multiple user devices offload their tasks to a single central fog server.

Figure 18.

Task offloading architecture where multiple user devices offload their tasks to multiple fog nodes, but there is no cooperation between fog nodes.

Figure 19.

Task offloading architecture where multiple user devices offload their tasks to multiple cooperating fog nodes.

Figure 17 illustrates a many-to-one offloading approach, presented by Hortelano et al. [1], in which multiple end-user devices offload their tasks to a single edge server. This configuration primarily represents an edge computing scenario, as it involves only one computing node above the user devices; a true fog architecture, in contrast, should involve multiple interconnected computing nodes within the fog layer to support distributed processing. Figure 18, adapted from Zabihi et al. [69], depicts a many-to-many task offloading model in which multiple user devices offload tasks to multiple fog servers using various communication protocols. However, this architecture does not address inter-fog node communication, which is essential for dynamic task migration and resource balancing across the network. Figure 19, based on the work of Tran-Dang et al. [12], presents another many-to-many architecture that introduces communication between fog nodes. However, it does not explicitly detail the fog-to-fog communication mechanisms, particularly how fog nodes interact and collaborate.

Overall, the architectural scope plays a critical role in the effectiveness of learning-based task offloading frameworks. A robust decision-making model must align with the operational characteristics of the deployed architecture, whether flat (e.g., device–fog) or hierarchical (e.g., device–fog–cloud), to perform well in distributed fog computing environments. Based on this comparative analysis, this study recommends the extended many-to-many task offloading architecture (Figure 19) as the most suitable model for applying DRL in fog computing environments. Unlike the architectures in Figures 17 and 18, it incorporates inter-fog node communication, which is essential for collaborative decision-making, dynamic task migration, and efficient resource balancing. This architecture best aligns with the decentralized and latency-sensitive nature of fog computing and offers the structural flexibility needed for DRL-based task offloading in dynamic IoT systems [38].

5.4. Agent Controller Strategies

The literature describes three approaches to coordinating agent communication between the node that owns a task and the node that executes it: centralized, distributed, and federated. In the centralized model, a central controller makes all task offloading decisions. It gathers information from the fog nodes to assign tasks and monitor their execution across the system, and both model training and decision-making take place at the central server. While this architecture simplifies coordination, it scales poorly and introduces a single point of failure. Moreover, it is generally unsuitable for dynamic, latency-sensitive applications or for scenarios that require real-time, on-the-fly processing of raw data. The model is most effective when computational resources are managed by a single entity or institution [70].
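A centralized controller can be sketched as a single function that holds a global snapshot of every fog node and assigns each task in turn. In the sketch below, a greedy earliest-finish-time rule stands in for a learned policy, and the node fields `cpu_ghz` and `busy_until` are hypothetical. A DRL-based controller would replace the `min(...)` rule with its policy network, but the structure, with all state flowing through one place, is what creates the single point of failure described for this model.

```python
def central_assign(tasks, nodes):
    """
    Centralized controller sketch.
    tasks: list of (task_id, gigacycles)
    nodes: {node_id: {"cpu_ghz": float, "busy_until": float}}  # hypothetical fields
    Returns {task_id: node_id}.
    """
    state = {n: dict(info) for n, info in nodes.items()}  # controller's global snapshot

    def finish_time(node_id, cycles):
        return state[node_id]["busy_until"] + cycles / state[node_id]["cpu_ghz"]

    plan = {}
    for task_id, cycles in tasks:
        # Greedy earliest-finish-time rule standing in for a learned policy.
        best = min(state, key=lambda n: finish_time(n, cycles))
        state[best]["busy_until"] = finish_time(best, cycles)
        plan[task_id] = best
    return plan

nodes = {"F1": {"cpu_ghz": 2.0, "busy_until": 0.0},
         "F2": {"cpu_ghz": 1.0, "busy_until": 0.0}}
print(central_assign([("t1", 2.0), ("t2", 1.0), ("t3", 2.0)], nodes))
# {'t1': 'F1', 't2': 'F2', 't3': 'F1'}
```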

The distributed architecture typically follows one of two approaches. In the first, task computation is delegated to multiple fog nodes, while offloading decisions are still made centrally. In the second, both task computation and offloading decisions are handled in a fully distributed manner. In the latter case, distributed learning techniques support the offloading process by sharing communication and execution information across nodes, and each fog node manages resource collaboration and executes tasks, thereby decentralizing control of the offloading process [10,71,72]. However, this model introduces certain challenges. Offloading private data to unknown or untrusted fog nodes raises security and privacy concerns, and frequent task transmissions can increase network traffic, potentially degrading performance across the system.
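In the fully distributed variant, each fog node decides on its own. The sketch below assumes a simple rule that is not taken from the cited works: a node executes a task if its queue has room and otherwise forwards it to the neighbor it believes is least loaded, with a hop limit to prevent endless forwarding. For brevity it reads neighbors' load directly; in a real deployment that value would come from the last status message exchanged between nodes.

```python
from dataclasses import dataclass, field

@dataclass
class FogNode:
    name: str
    capacity: int                                   # max queued tasks (assumed)
    queue: list = field(default_factory=list)
    neighbors: list = field(default_factory=list)   # other FogNode objects

    def load(self) -> float:
        return len(self.queue) / self.capacity

    def handle(self, task, hops_left: int = 2):
        """Decide locally: run here if there is room, else forward to the least-loaded neighbor."""
        if len(self.queue) < self.capacity:
            self.queue.append(task)
            return self.name
        if hops_left == 0 or not self.neighbors:
            return None  # rejected; could fall back to the cloud or retry later
        # In practice this load would be the neighbor's last advertised status.
        target = min(self.neighbors, key=lambda n: n.load())
        return target.handle(task, hops_left - 1)
```

For example, with `F1` (capacity 1) connected to `F2` (capacity 2) and `F3` (capacity 1), five tasks submitted to `F1` land on `F1`, `F2`, `F3`, and `F2`, and the fifth is rejected (`None`) because every reachable node is full.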

Traditional centralized and distributed learning approaches typically involve frequent offloading of locally generated data from user devices to edge, fog, or cloud servers for training and decision-making. Wahab et al. [73] introduced federated learning as an alternative offloading strategy to address critical issues such as network traffic, data privacy, and security. In federated architectures, model training is performed locally on user devices or within a heterogeneous network environment, avoiding the need to transmit raw data to fog nodes. Instead, only the trained model or its updates are shared with distributed nodes, where decision-making can also occur. This approach significantly enhances data privacy and security while reducing communication overhead, making it well-suited for latency-sensitive and privacy-critical applications.
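The federated pattern can be illustrated with federated averaging (FedAvg), a widely used aggregation scheme; the specific scheme of Wahab et al. [73] may differ. In this minimal sketch, each device fits a hypothetical one-parameter linear model on its private data, and only the updated weight is sent for aggregation, weighted by each device's sample count.

```python
def local_update(w, data, lr=0.1, epochs=5):
    """Gradient descent on mean squared error for y = w * x; raw data stays on the device."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def fed_avg(global_w, device_datasets, rounds=20):
    """Each round: devices train locally, then weights are averaged by sample count."""
    n_total = sum(len(d) for d in device_datasets)
    for _ in range(rounds):
        local_ws = [local_update(global_w, d) for d in device_datasets]  # only weights leave devices
        global_w = sum(w * len(d) for w, d in zip(local_ws, device_datasets)) / n_total
    return global_w

devices = [[(1.0, 3.0), (2.0, 6.0)], [(1.0, 3.0)]]  # private samples of y = 3x
print(round(fed_avg(0.0, devices), 6))  # 3.0
```

Neither device shares its raw (x, y) pairs, yet the aggregated weight converges to the slope both datasets share.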

5.5. Coordination Mechanisms

Task offloading architectures are typically classified according to the coordination mechanisms among nodes that provide computational resources for executing tasks. These architectures fall into three main topological categories: (i) static (fixed) topology, (ii) dynamic topology characterized by node mobility, and (iii) hybrid topology, which combines features of both static and dynamic configurations. This section examines each of these architectural models, discussing their practical application scenarios, the unique challenges they present, and the solutions proposed in the existing literature.

Static Model: In this architectural model, the status and placement of both fog nodes and client user devices remain unchanged over time. The architecture is therefore controlled, since the task-offloading nodes and the receiving fog nodes are known. Representative application scenarios include manufacturing industries [74], where fog nodes and IoT devices such as cameras and sensors are deployed at fixed locations. Smart traffic monitoring with stationary traffic nodes is another example of a fixed topology, since both the cameras and the traffic light sensors are installed at constant locations [75]. Smart city utilities such as public safety and optimized service delivery [76] can also be designed with this type of architecture. Resource management in this model is less complex than in dynamic and hybrid models [20]; however, resources may be underutilized, and the model offers little flexibility for allocating them to collaborative tasks involving other devices.

Dynamic Model: In this architectural model, the status of both servers and clients changes constantly [77]. This dynamism reflects both the resource status of the participating nodes and their mobility as they join or leave the network, as in vehicular fog computing. Consequently, a node’s workload, location, and resource availability are unpredictable. Mobility-aware fog computing networks consider key features such as dynamic resource allocation, node mobility prediction, seamless offloading of tasks and data, and context-aware computing [78]. In particular, they aim to ensure service continuity and resource utilization among dynamic fog nodes as devices move from one coverage area to another, by leveraging location and contextual information to optimize task processing and decision-making at the respective nodes. Tan et al. [79] examined task offloading in dynamic fog computing networks with non-stationary topologies and variable computing resources, comparing stationary and non-stationary contexts. While their model assumes a constant unit-task offloading delay under static or slowly changing conditions, real-world node mobility, characterized by unpredictable speed, distance, and duration within coverage, requires intelligent handling. In multi-hop ad hoc fog environments, such mobility can significantly affect application performance. Similar concepts have been applied in UAV-based fog computing for the Internet of Medical Things (IoMT) in rural, resource-limited areas [80] and in vehicular fog computing [61], where both task-requesting and task-executing nodes are in motion.
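The concern about duration within coverage can be made concrete with a simple geometric check: before offloading, estimate how long a moving node will remain within a fog node's coverage and offload only if the task is expected to finish well within that window. The sketch assumes constant straight-line velocity, circular coverage, and a hypothetical safety `margin`; a learning-based mobility predictor would replace the constant-velocity assumption in practice.

```python
import math

def residence_time_s(pos, vel, fog_pos, radius_m):
    """
    Seconds until a node moving at constant velocity `vel` (m/s) leaves a circular
    coverage area of radius `radius_m` centered at `fog_pos`. Positions are (x, y) in meters.
    Solves |p + v t|^2 = r^2 for the non-negative root t.
    """
    px, py = pos[0] - fog_pos[0], pos[1] - fog_pos[1]
    vx, vy = vel
    c = px * px + py * py - radius_m ** 2
    if c > 0:
        return 0.0            # already outside coverage
    a = vx * vx + vy * vy
    if a == 0:
        return math.inf       # stationary node inside coverage never leaves
    b = 2 * (px * vx + py * vy)
    disc = b * b - 4 * a * c  # non-negative because c <= 0
    return (-b + math.sqrt(disc)) / (2 * a)

def can_offload(expected_completion_s, pos, vel, fog_pos, radius_m, margin=0.8):
    """Offload only if the task should finish within `margin` of the residence time."""
    return expected_completion_s <= margin * residence_time_s(pos, vel, fog_pos, radius_m)
```

A vehicle at the center of a 100 m coverage area moving at 20 m/s remains in coverage for 5 s, so with a 0.8 margin a task expected to take 3 s is offloaded, while one expected to take 4.5 s is not.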

Hybrid Model: In the hybrid model, the network combines dynamic and static elements. In conventional hybrid fog architectures, the fog nodes are typically fixed devices, whereas the user devices are mobile [81]. When dynamic and static settings are mixed in this way, the architecture becomes more dynamic and requires additional considerations. In this category, fog nodes may have fixed locations while clients are mobile, or vice versa, depending on priority settings [82]. Application scenarios for this model include traffic monitoring and surveillance [83], environmental monitoring [84], and the UAV-based service provisioning mechanism presented in [85] for rural areas without a stable network connection, in which the UAV acts as a server that provides an access point for local end devices. The same approach can also provide communication services for emergency response in disaster scenarios. Hazra et al. [81] describe a health monitoring use case in which fog nodes are fixed but the IoT devices monitoring patient status are mobile. The mixed architectural model offers separation of layers, which simplifies resource management, as well as improved security and data privacy through local processing in each context. A challenge of this architecture is potentially higher upfront cost compared with other approaches.

In dynamic and hybrid architectural models, managing the complexity of mobile fog nodes requires advanced control mechanisms. These nodes often have limited battery life, making efficient task processing and communication protocols essential for optimization. Their unstable nature can lead to task failures, disrupting dependent tasks offloaded to other nodes. Ensuring secure communication and data privacy in such dynamic environments is also a key challenge. To address these issues, learning-based mechanisms that predict node mobility patterns and network characteristics—such as long-term connectivity and stable resource availability—are increasingly necessary. Recent research proposes distributed, decentralized control strategies to adapt to changing network conditions, with emerging focus areas including self-organizing networks and AI-driven resource management.

An important aspect in these models is awareness of fog node and resource status for effective task offloading. Pu et al. [86] introduced a device-to-device (D2D) communication approach to enable collaboration between edge devices, supported by an incentive scheme similar to peer-to-peer networks. Similarly, Al-Khafajiy [87] proposed collaborative strategies between fog nodes for shared workload processing. Through D2D communication, IoT devices can exchange status information with nearby devices to identify idle resources, enabling adjacent devices and fog nodes to coordinate via a wireless access point. In fixed-to-fixed architectures, device routing tables advertise and update resource status for all connected nodes. In both cases, the collected knowledge supports informed offloading decisions. Future work should explore DRL-based self-organizing network settings for smart user devices and fog computing environments that integrate both fixed and dynamic architectures.
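The status advertisements described above can be sketched as a small table, loosely analogous to a routing table, in which neighbors (fog nodes or D2D peers) advertise their idle capacity and stale entries expire. The class name, the `max_age_s` expiry rule, and the idle-CPU metric are hypothetical choices for illustration; an injectable clock makes the behavior easy to test.

```python
import time

class ResourceTable:
    """
    Hypothetical resource-status table, loosely analogous to a routing table:
    neighbors advertise their idle CPU capacity, and entries older than
    `max_age_s` seconds are treated as stale and ignored.
    """

    def __init__(self, max_age_s=5.0, clock=time.monotonic):
        self.max_age_s = max_age_s
        self.clock = clock          # injectable clock, e.g. for testing
        self.entries = {}           # node_id -> (idle_cpu_ghz, timestamp)

    def advertise(self, node_id, idle_cpu_ghz):
        """Record or overwrite the latest status advertisement from node_id."""
        self.entries[node_id] = (idle_cpu_ghz, self.clock())

    def best_idle(self, min_cpu_ghz):
        """Among fresh entries with enough idle CPU, return the node with the most; else None."""
        now = self.clock()
        fresh = {n: cpu for n, (cpu, ts) in self.entries.items()
                 if now - ts <= self.max_age_s and cpu >= min_cpu_ghz}
        return max(fresh, key=fresh.get) if fresh else None
```

An offloading agent would consult `best_idle` before choosing a target, so that decisions rest only on recently confirmed resource status rather than on outdated advertisements.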

5.6. DRL Algorithms

To provide context, RL is broadly classified into model-based and model-free approaches. Model-based RL leverages knowledge of the environment’s dynamics, typically represented as an MDP with known transition probabilities, and uses planning methods such as policy iteration and value iteration to guide decision-making in stochastic fog computing environments. In contrast, model-free RL does not assume prior knowledge of the environment’s dynamics. Instead, it learns optimal policies through interaction, using methods such as policy gradient optimization and temporal-difference (TD) learning, which includes off-policy algorithms like Q-learning and on-policy algorithms like SARSA. These approaches are widely used for task offloading and resource management in dynamic fog environments.
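The model-based versus model-free distinction can be seen on a hypothetical two-state offloading MDP (fog node idle or busy; process locally or offload). Value iteration plans directly with the known transition model, while tabular Q-learning only observes sampled transitions. The states, rewards, and hyperparameters below are illustrative assumptions.

```python
import random

# Hypothetical MDP. State 0: fog node idle, 1: fog node busy.
# Action 0: process locally, 1: offload to the fog node. Rewards are negative delays.
# P[s][a] = list of (probability, next_state, reward)
P = {
    0: {0: [(1.0, 0, -5.0)], 1: [(0.5, 1, -1.0), (0.5, 0, -1.0)]},
    1: {0: [(1.0, 0, -5.0)], 1: [(1.0, 1, -8.0)]},
}
GAMMA = 0.9

def value_iteration(tol=1e-9):
    """Model-based: plans with the known transition model P."""
    V = {s: 0.0 for s in P}
    while True:
        Q = {s: {a: sum(p * (r + GAMMA * V[s2]) for p, s2, r in P[s][a]) for a in P[s]}
             for s in P}
        new_V = {s: max(Q[s].values()) for s in P}
        if max(abs(new_V[s] - V[s]) for s in P) < tol:
            return {s: max(Q[s], key=Q[s].get) for s in P}  # greedy policy
        V = new_V

def step(s, a, rng):
    """Environment simulator: the learner sees only sampled (next_state, reward) pairs."""
    u, acc = rng.random(), 0.0
    for p, s2, r in P[s][a]:
        acc += p
        if u < acc:
            return s2, r
    return P[s][a][-1][1], P[s][a][-1][2]

def q_learning(steps=50_000, alpha=0.1, epsilon=0.2, seed=0):
    """Model-free, off-policy TD control with epsilon-greedy exploration."""
    rng = random.Random(seed)
    Q = {s: {a: 0.0 for a in P[s]} for s in P}
    s = 0
    for _ in range(steps):
        explore = rng.random() < epsilon
        a = rng.choice(list(Q[s])) if explore else max(Q[s], key=Q[s].get)
        s2, r = step(s, a, rng)
        # Off-policy target: bootstrap from the best next action, not the one taken.
        Q[s][a] += alpha * (r + GAMMA * max(Q[s2].values()) - Q[s][a])
        s = s2
    return {s: max(Q[s], key=Q[s].get) for s in P}

print(value_iteration(), q_learning())  # {0: 1, 1: 0} {0: 1, 1: 0}
```

With these assumed rewards, both methods recover the same policy: offload when the fog node is idle (state 0 → action 1) and process locally when it is busy (state 1 → action 0). Bootstrapping from the action the agent actually takes next in `s2`, instead of `max(Q[s2].values())`, would turn the update into on-policy SARSA.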

DRL extends both paradigms by integrating deep neural networks to approximate policies or value functions, enabling scalability to high-dimensional state and action spaces. Examples include Deep Q-Networks (DQN), which are model-free, and Actor–Critic (AC) methods, which combine policy and value learning. Some DRL frameworks also incorporate model-based components for planning and prediction. This hybridization improves adaptability and efficiency in complex, dynamic systems such as fog computing.

Several notable DRL algorithms have been applied to the task offloading problem in fog computing, as summarized in Table 4. These algorithms can be categorized into Value-Based (VB), Policy-Based (PB), and Actor–Critic (AC) methods. VB algorithms estimate the value of being in a particular state or of taking a specific action in that state. A prominent example is the Deep Q-Network (DQN), which combines Q-learning with deep neural networks. DQN uses experience replay and target networks to stabilize training, and because it selects actions by maximizing over per-action Q-values, it is well suited to environments with discrete action spaces.
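The two DQN stabilization mechanisms can be sketched without a deep-learning framework. Below, a linear approximator (one weight vector per discrete action) stands in for the neural network, an assumption made to keep the example dependency-free. The replay buffer decorrelates training samples, and the target weights, refreshed only by periodic hard copies, keep the TD targets from shifting at every update.

```python
import random
from collections import deque

class ReplayBuffer:
    """Fixed-size FIFO store of (s, a, r, s_next, done); random sampling breaks temporal correlation."""
    def __init__(self, capacity, seed=0):
        self.buf = deque(maxlen=capacity)  # oldest transitions are evicted first
        self.rng = random.Random(seed)

    def push(self, *transition):
        self.buf.append(transition)

    def sample(self, batch_size):
        return self.rng.sample(list(self.buf), batch_size)

    def __len__(self):
        return len(self.buf)

def q_values(weights, state):
    """Linear stand-in for the Q-network: one weight vector per discrete action."""
    return [sum(w * x for w, x in zip(w_a, state)) for w_a in weights]

def td_targets(batch, target_weights, gamma=0.99):
    """y = r if terminal, else r + gamma * max_a' Q_target(s', a')."""
    return [r if done else r + gamma * max(q_values(target_weights, s2))
            for _, _, r, s2, done in batch]

def sgd_step(weights, batch, targets, lr=0.01):
    """One squared-error gradient step on the online weights for the actions taken."""
    for (s, a, _, _, _), y in zip(batch, targets):
        error = q_values(weights, s)[a] - y
        weights[a] = [w - lr * error * x for w, x in zip(weights[a], s)]

def sync_target(weights):
    """Hard update: copy the online weights into a separate target network."""
    return [list(w_a) for w_a in weights]
```

A training loop would push each observed transition, sample a minibatch once the buffer holds enough entries, compute `td_targets` with the target weights, apply `sgd_step` to the online weights, and call `sync_target` every fixed number of steps.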