Digital Cardiovascular Twins, AI Agents, and Sensor Data: A Narrative Review from System Architecture to Proactive Heart Health

Abstract

1. Introduction

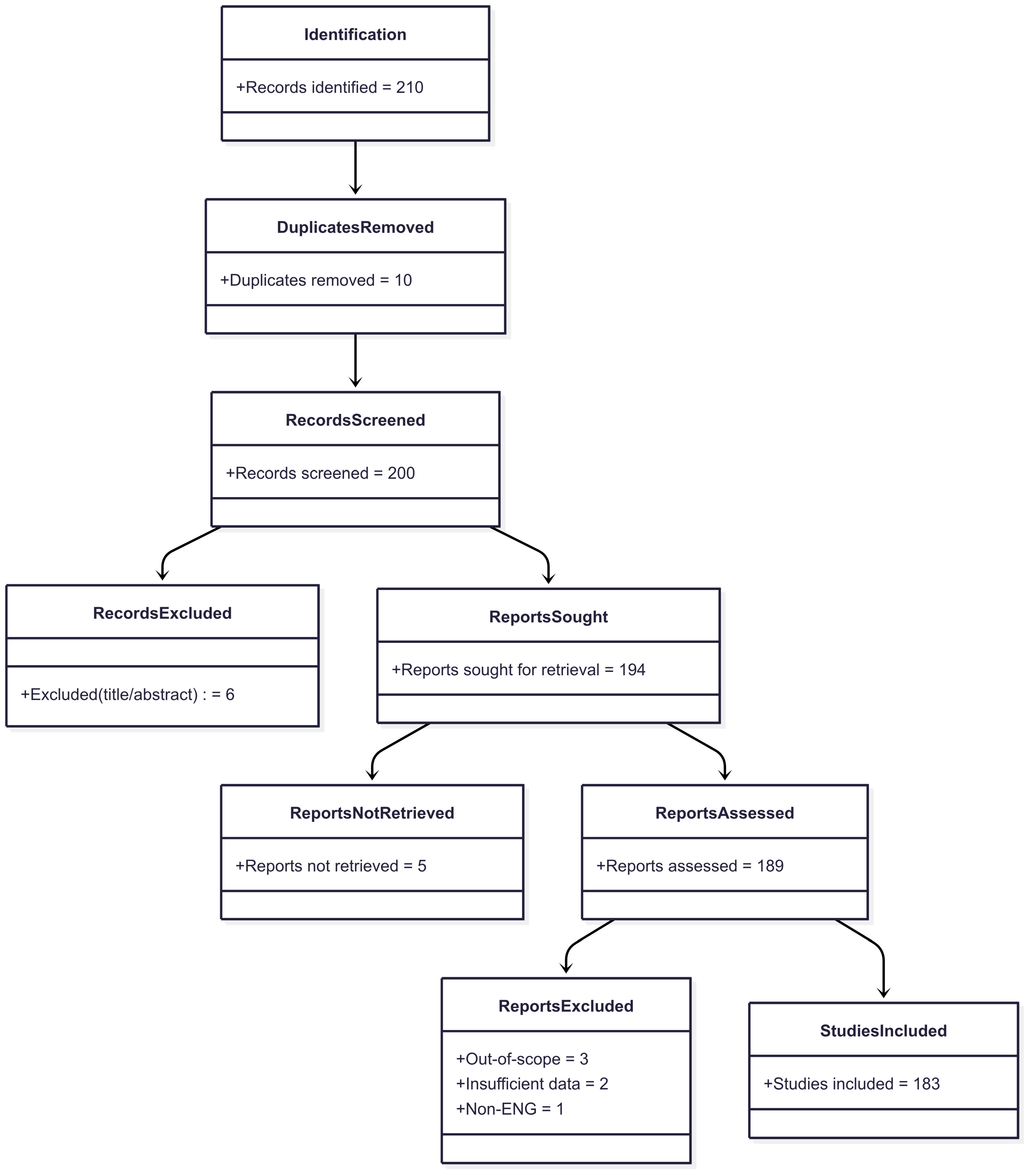

2. Methodology

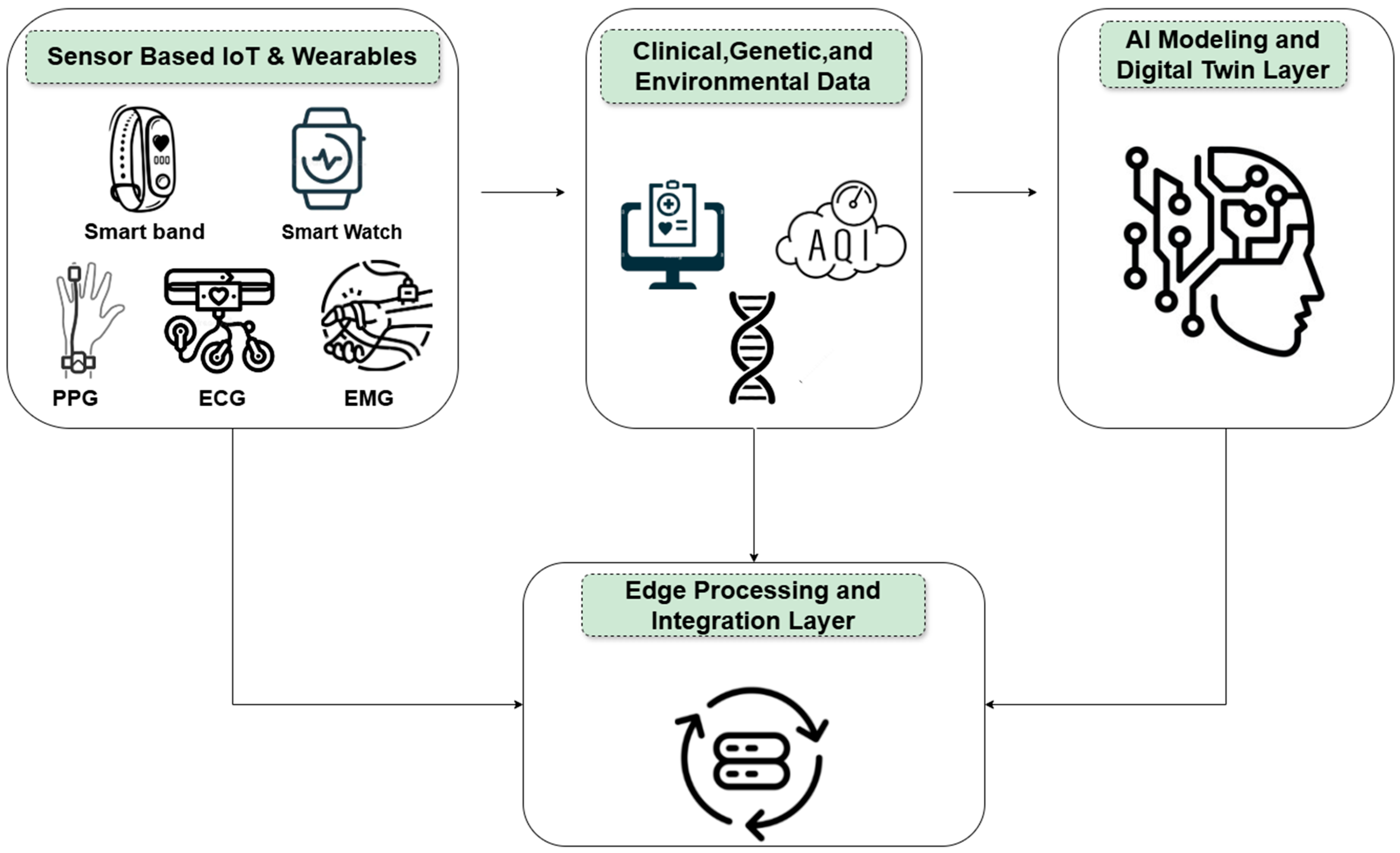

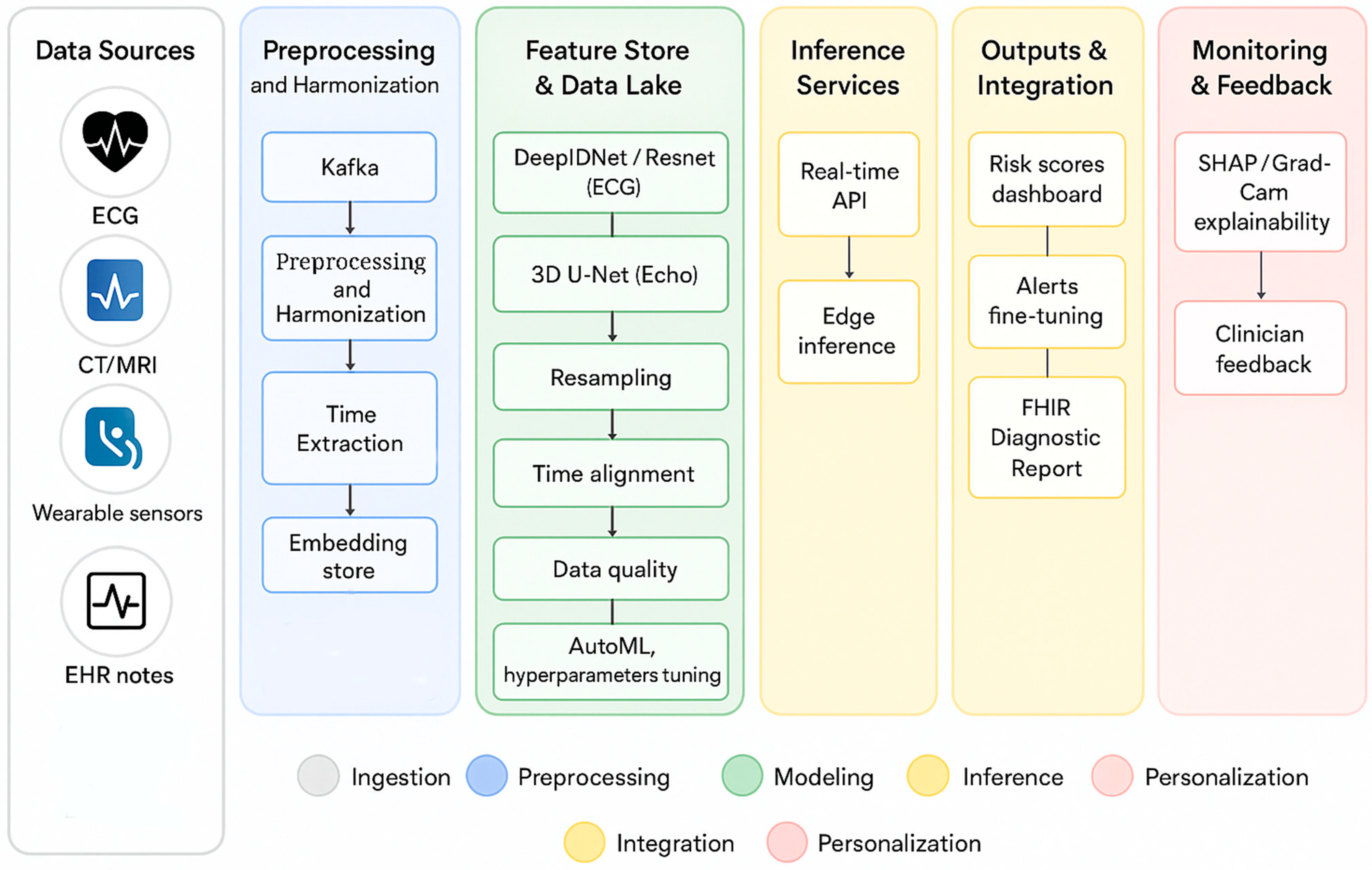

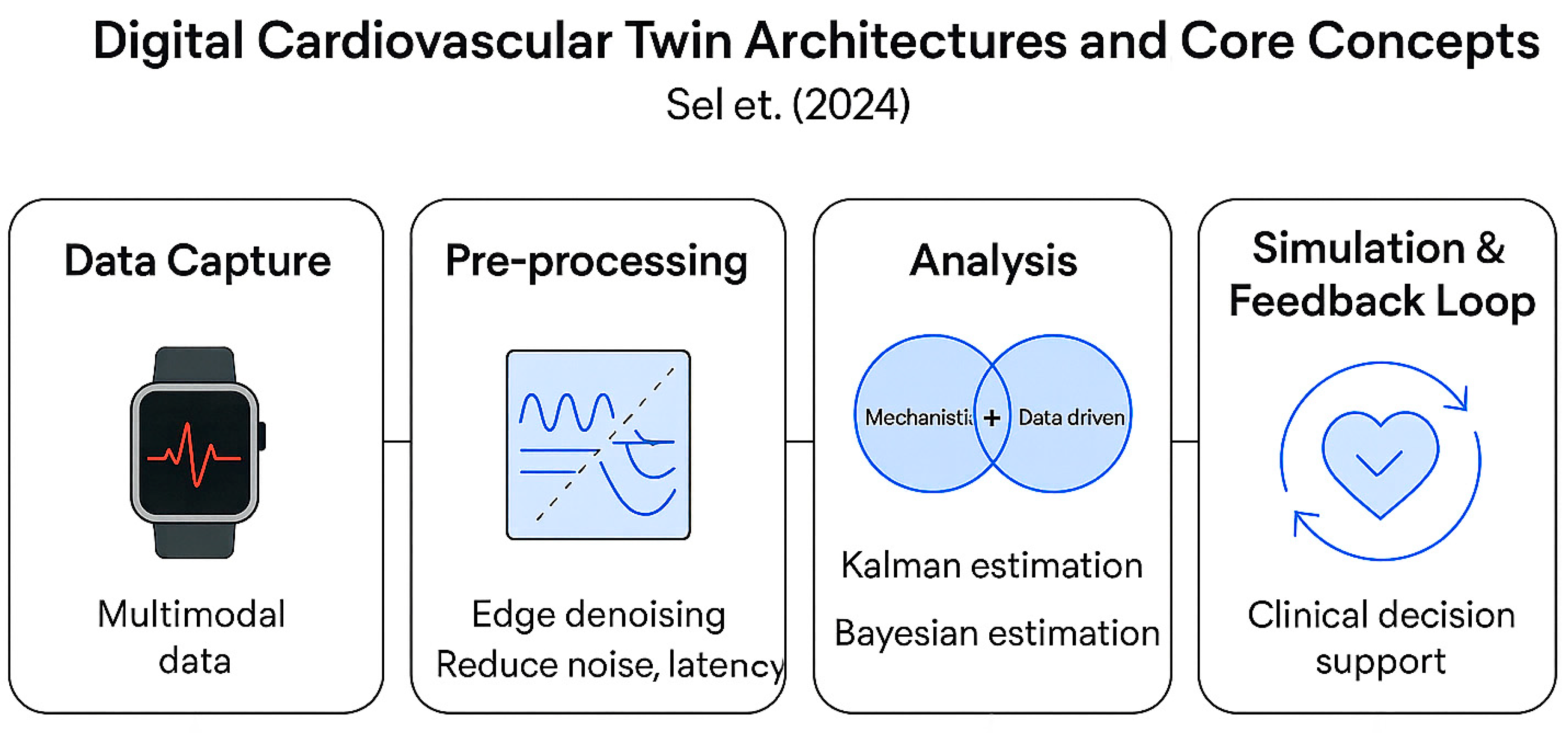

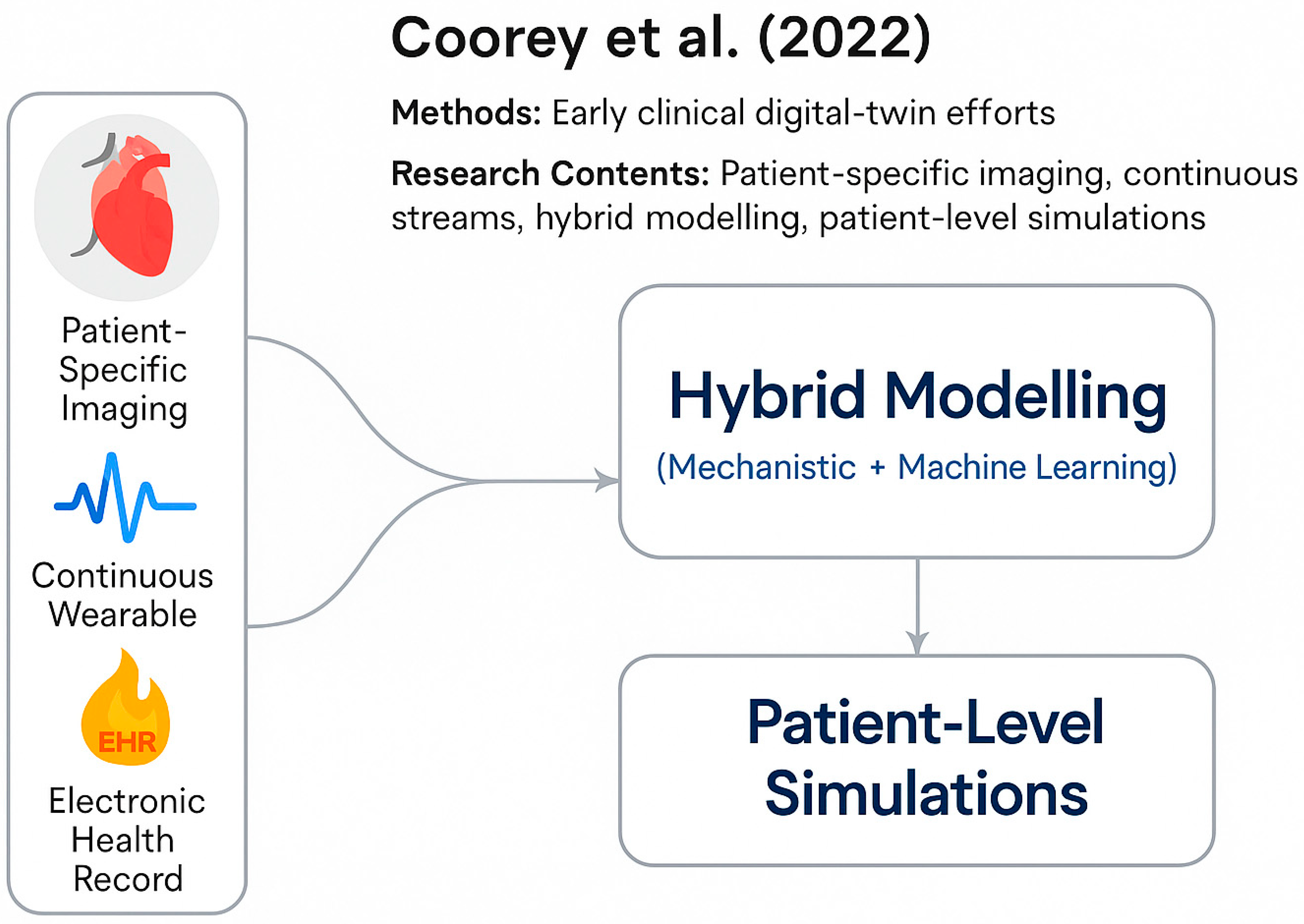

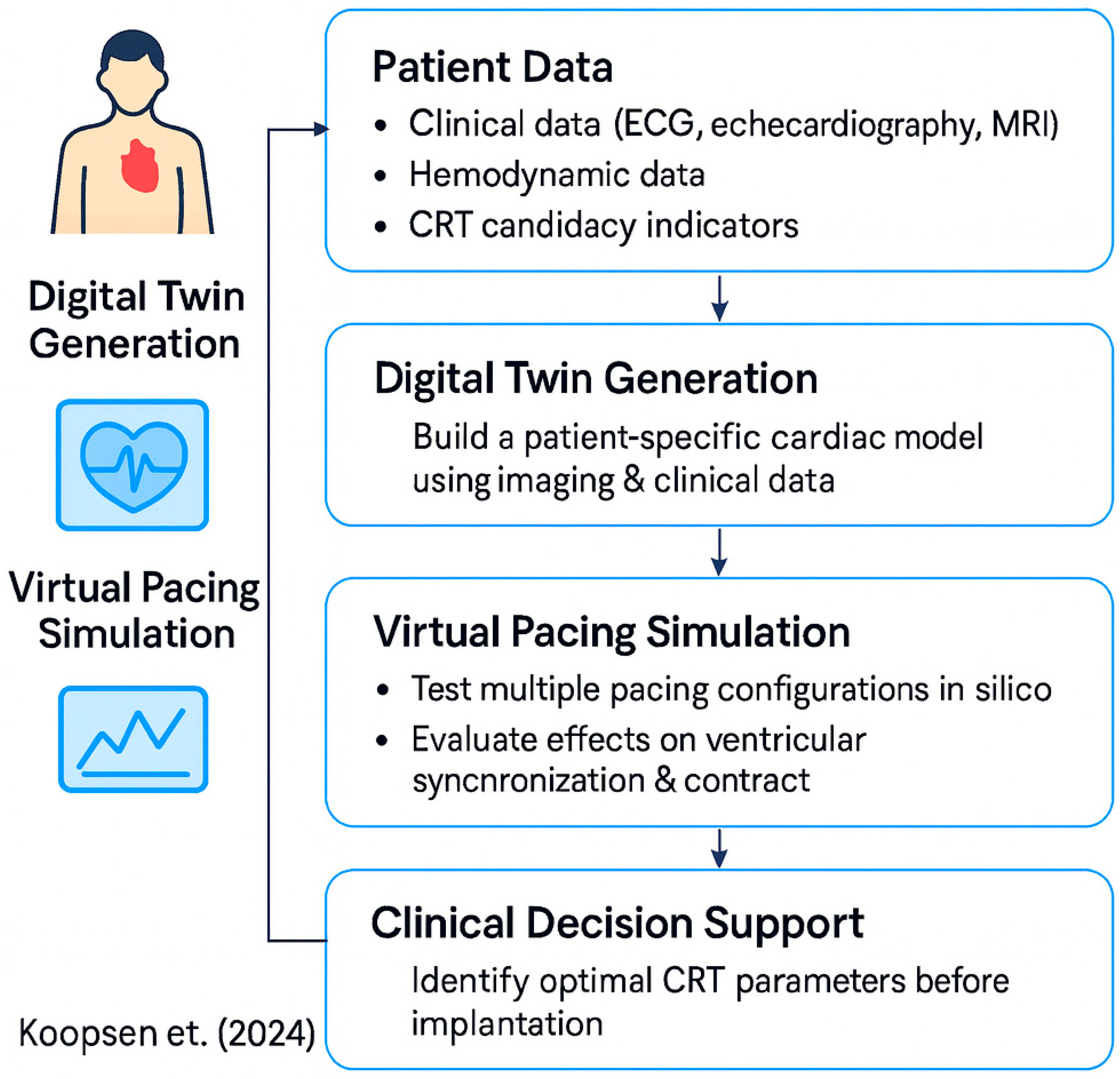

3. Components of the Digital Cardiovascular Twin

3.1. Sensory Layer

3.2. Machine Learning and Deep Learning for Data Interpretation and Prediction

| Task (Cardiac Monitoring) | Method Class | Data (Brief) | Key Performance (Metric) | Where It Excels (Comparative) | Limitations/Caveats in CVD Use | Best-Fit Scenarios (Applicability) | References |
|---|---|---|---|---|---|---|---|
| LV systolic dysfunction screening (LVEF ≤ 40%) from 12-lead ECG | Transformer (foundation; pretrain → fine-tune) | Pretrained on 8.5 M ECGs; fine-tuned for LVEF/other tasks | AUROC 0.86 (internal with 1% labels); 0.87 external; boosts low-label tasks | Captures long-range temporal patterns; excellent label efficiency; strong multi-task transfer | Requires massive pretraining compute; deployment still needs careful calibration/interpretability | Population-scale ECG pre-screen → triage to echocardiography; low-label health-system settings | [57] |
| Near-term AF risk (≤14 days) from patch single-lead ECG | Deep learning (attention/temporal; multimodal) | 459,889 ambulatory single-lead recordings (10 min–24 h) | AUC 0.80 (1-day horizon, “All features” model) | Early warning on AF-free ECG; integrates HRV + rhythm + demographics | Retrospective, device-specific; risk of distribution shift across wearables; prospective validation needed | Wearable AF surveillance and early-warning gating for patch/consumer ECG programs | [58] |
| Arrhythmia classification (12-lead; Chapman) | Graph Neural Network (lead-graph) | 10,646 subjects; 12 leads; 7 classes | Accuracy 99.82%, Specificity 99.97% (GCN-WMI) | Encodes inter-lead relations; strong multi-lead performance | Limited for single-lead wearables; sensitive to lead configuration | In-clinic 12-lead analysis; multi-lead Holter/offline QA where inter-lead coupling matters | [59] |
| Coronary CTA: vessel extraction and anatomical labeling | GCN on vascular graphs | 104 CCTA; 10 segment classes (AHA) | Tree-extraction 0.85; overall labeling 0.74 | Preserves tree topology; better anatomical consistency than CNN-only | Dependent on reliable centerline/segmentation; calcifications/gaps still problematic | Pre-procedural planning; CAD quantification pipelines with human oversight | [60] |
| HF drug-response prediction/trajectory modeling (EHR) | Spatiotemporal GNN + Transformer (patient-visit graphs) | 11,627 HF patients (Mayo Clinic EHR) | Outperformed baselines across 5 drug classes; best RMSE 0.0043 (NT-proBNP) | Learns longitudinal + relational patterns; subgrouping improves prediction and interpretability | Site-specific coding/practice → transferability/harmonization needed; privacy/PII governance | Hospital CDSS for HF titration; digital-twin personalization of therapy trajectories | [61] |
| Cardiac MRI segmentation (quality-aware automation) | Bayesian deep learning (uncertainty quantification) | Multi-center CMR; benchmarked Bayesian vs. non-Bayesian UQ | UQ triage cuts “poor” segmentations to 5%; only 31–48% cases require review | Safety guardrails; robust to OOD noise/blur (method-dependent) | Extra compute; needs workflow integration for human-in-the-loop review | Semi-automated CMR pipelines where safe triage and QC trump raw speed | [62] |
| Cuff-less blood pressure from wearables (PPG/ECG) | Transformer-hybrid (CNN+Transformer) | Two large wearables datasets: CAS-BP and Aurora-BP | CAS-BP: DBP 0.9 ± 6.5, SBP 0.7 ± 8.3 mmHg; Aurora-BP: DBP −0.4 ± 7.0, SBP −0.4 ± 8.6 mmHg; MAE below SOTA | Learns global temporal dependencies; fuses handcrafted + learned features | Domain/calibration drift across devices/skin tones/contexts; prospective ambulatory validation needed | Ambulatory BP trending and coaching with periodic calibration; patient-facing wearables | [63] |
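The cuffless blood pressure row above reports errors in the form "x ± y mmHg" and also refers to MAE. As a minimal sketch, assuming those entries denote mean error ± standard deviation of the error against a reference measurement (the table does not spell this out), the three summary statistics could be computed as follows. The function name `bp_error_summary` and the sample readings are hypothetical and are not taken from the cited study [63].

```python
from statistics import mean, stdev


def bp_error_summary(reference, estimate):
    """Return (mean error, SD of error, mean absolute error), all in mmHg.

    Errors are defined as estimate minus reference. The SD is the sample
    standard deviation; whether a given study uses the sample or population
    form is an assumption to check against its methods section.
    """
    if len(reference) != len(estimate) or len(reference) < 2:
        raise ValueError("need two equal-length sequences with at least 2 readings")
    errors = [e - r for r, e in zip(reference, estimate)]
    mean_error = mean(errors)
    sd_error = stdev(errors)
    mean_abs_error = mean(abs(x) for x in errors)
    return mean_error, sd_error, mean_abs_error


# Hypothetical paired systolic readings (mmHg): cuff reference vs. wearable estimate.
cuff = [120.0, 135.0, 110.0, 142.0]
wearable = [123.0, 131.0, 112.0, 141.0]

me, sd, mae = bp_error_summary(cuff, wearable)
print(f"ME = {me:.2f} mmHg, SD = {sd:.2f} mmHg, MAE = {mae:.2f} mmHg")
```

A near-zero mean error can hide large individual deviations, which is why the spread (SD) and the MAE are typically read alongside it.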

4. AI Agents and Medical LLMs for Personalized Intervention

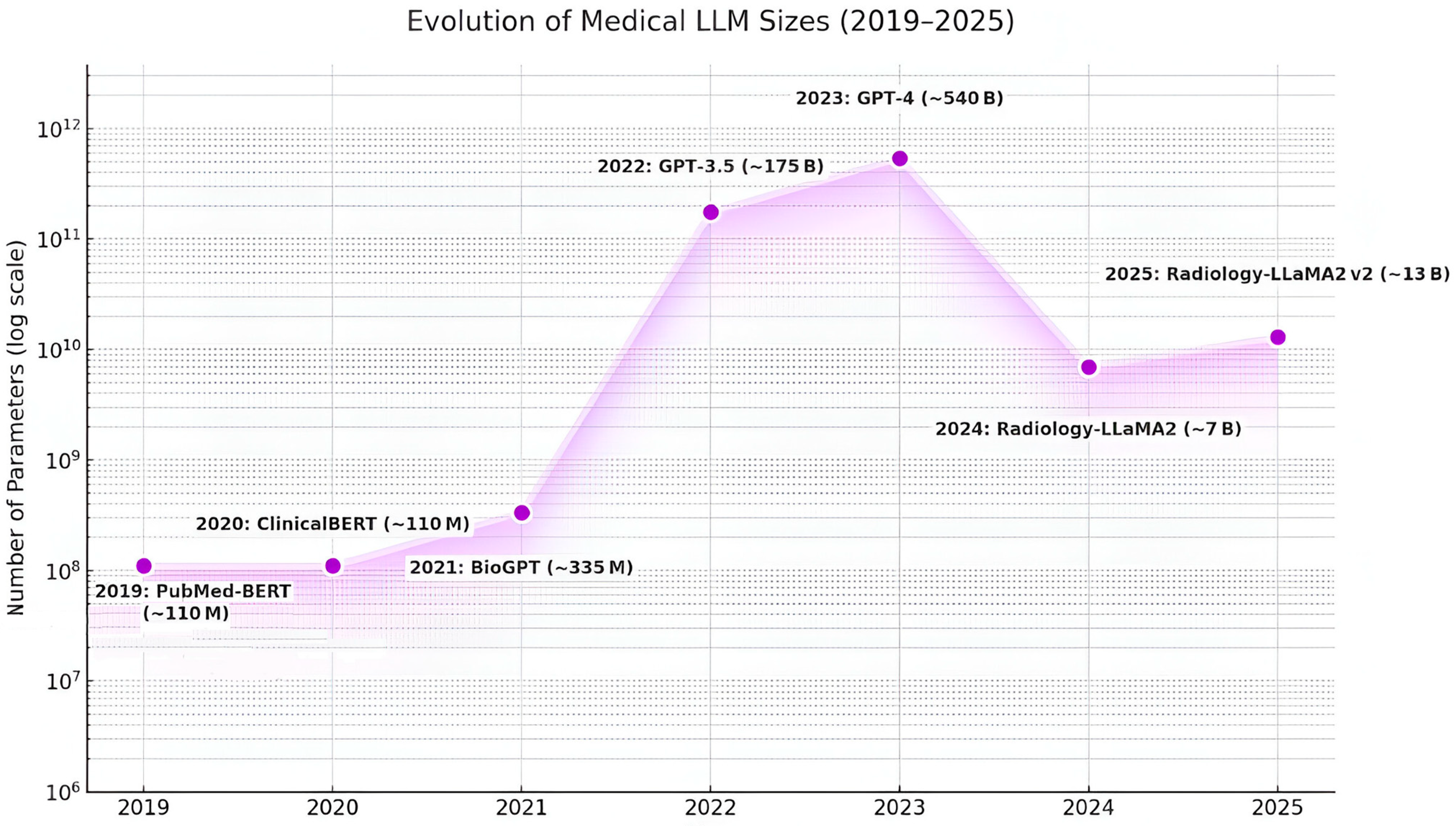

4.1. Generative AI and Medical LLMs

4.2. Proactive AI Agents

5. Discussion

6. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AMI | Ambient Intelligence |
| AUC | Area Under the Curve |
| BLE | Bluetooth Low Energy |
| CNN | Convolutional Neural Network |
| CT | Computed Tomography |
| CVD | Cardiovascular Disease |
| DL | Deep Learning |
| DLT | Distributed Ledger Technology |
| ECG | Electrocardiography |
| EDA | Electrodermal Activity |
| EHR | Electronic Health Record |
| EMG | Electromyography |
| GNN | Graph Neural Network |
| HR | Heart Rate |
| HRV | Heart Rate Variability |
| ICU | Intensive Care Unit |
| IoMT | Internet of Medical Things |
| IoT | Internet of Things |
| LLM | Large Language Model |
| LoRA | Low-Rank Adaptation |
| LSTM | Long Short-Term Memory |
| MAE | Mean Absolute Error |
| ML | Machine Learning |
| MRI | Magnetic Resonance Imaging |
| NLP | Natural Language Processing |
| PPG | Photoplethysmography |
| PRS | Polygenic Risk Score |
| RR | R-R Interval |
| SDNN | Standard Deviation of NN Intervals |
| SHAP | Shapley Additive Explanations |
| SVM | Support Vector Machine |
| VQA | Visual Question Answering |
| GDPR | General Data Protection Regulation |
| HL7 | Health Level 7 |
| FHIR | Fast Healthcare Interoperability Resources |

References

- Katz, J.N.; Minder, M.; Olenchock, B.; Price, S.; Goldfarb, M.; Washam, J.B.; Barnett, C.F.; Newby, L.K.; van Diepen, S. The Genesis, Maturation, and Future of Critical Care Cardiology. J. Am. Coll. Cardiol. 2016, 68, 67–79.

- Lourida, K.G.; Louridas, G.E. Constraints in Clinical Cardiology and Personalized Medicine: Interrelated Concepts in Clinical Cardiology. Cardiogenetics 2021, 11, 50–67.

- Shapiro, M.D.; Maron, D.J.; Morris, P.B.; Kosiborod, M.; Sandesara, P.B.; Virani, S.S.; Khera, A.; Ballantyne, C.M.; Baum, S.J.; Sperling, L.S.; et al. Preventive Cardiology as a Subspecialty of Cardiovascular Medicine: JACC Council Perspectives. J. Am. Coll. Cardiol. 2019, 74, 1926–1942.

- McCarthy, C.P.; Raber, I.; Chapman, A.R.; Sandoval, Y.; Apple, F.S.; Mills, N.L.; Januzzi, J.L. Myocardial Injury in the Era of High-Sensitivity Cardiac Troponin Assays: A Practical Approach for Clinicians. JAMA Cardiol. 2019, 4, 1034–1042.

- Chow, E.J.; Leger, K.J.; Bhatt, N.S.; Mulrooney, D.A.; Ross, C.J.; Aggarwal, S.; Bansal, N.; Ehrhardt, M.J.; Armenian, S.H.; Scott, J.M.; et al. Paediatric Cardio-Oncology: Epidemiology, Screening, Prevention, and Treatment. Cardiovasc. Res. 2019, 115, 922–934.

- Groenewegen, A.; Zwartkruis, V.W.; Rienstra, M.; Zuithoff, N.P.A.; Hollander, M.; Koffijberg, H.; Oude Wolcherink, M.; Cramer, M.J.; van der Schouw, Y.T.; Hoes, A.W.; et al. Diagnostic Yield of a Proactive Strategy for Early Detection of Cardiovascular Disease versus Usual Care in Adults with Type 2 Diabetes or Chronic Obstructive Pulmonary Disease in Primary Care in the Netherlands (RED-CVD): A Multicentre, Pragmatic, Cluster-Randomised, Controlled Trial. Lancet Public Health 2024, 9, e88–e99.

- Bhaltadak, V.; Ghewade, B.; Yelne, S. A Comprehensive Review on Advancements in Wearable Technologies: Revolutionizing Cardiovascular Medicine. Cureus 2024, 16, e61312.

- Coorey, G.; Figtree, G.A.; Fletcher, D.F.; Tao, J.; Rizos, C.V.; Caputi, P.; Harrison, C.; Athan, D.; Chidiac, C.; Chow, C.K. The Health Digital Twin to Tackle Cardiovascular Disease—A Review of an Emerging Interdisciplinary Field. NPJ Digit. Med. 2022, 5, 126.

- Martinez-Velazquez, R.; Gamez, R.; El Saddik, A. Cardio Twin: A Digital Twin of the Human Heart Running on the Edge. In Proceedings of the 2019 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Istanbul, Turkey, 26–28 June 2019; pp. 1–6.

- Johnson, Z.; Saikia, M.J. Digital Twins for Healthcare Using Wearables. Bioengineering 2024, 11, 606.

- Sel, K.; Osman, D.; Zare, F.; Masoumi Shahrbabak, S.; Brattain, L.; Hahn, J.O.; Inan, O.T.; Mukkamala, R.; Palmer, J.; Paydarfar, D.; et al. Building Digital Twins for Cardiovascular Health: From Principles to Clinical Impact. J. Am. Heart Assoc. 2024, 13, e031981.

- Karakasis, P.; Antoniadis, A.P.; Theofilis, P.; Vlachakis, P.K.; Milaras, N.; Patoulias, D.; Karamitsos, T.; Fragakis, N. Digital Twin Models in Atrial Fibrillation: Charting the Future of Precision Therapy? J. Pers. Med. 2025, 15, 256.

- Bruynseels, K.; Santoni de Sio, F.; van den Hoven, J. Digital Twins in Health Care: Ethical Implications of an Emerging Engineering Paradigm. Front. Genet. 2018, 9, 31.

- Armeni, P.; Polat, I.; De Rossi, L.M.; Diaferia, L.; Meregalli, S.; Gatti, A. Digital Twins in Healthcare: Is It the Beginning of a New Era of Evidence-Based Medicine? A Critical Review. J. Pers. Med. 2022, 12, 1255.

- Liu, Y.; Zhang, L.; Yang, Y.; Zhou, L.; Ren, L.; Wang, F.; Liu, R.; Pang, Z.; Deen, M.J.; Chen, X. A Novel Cloud-Based Framework for the Elderly Healthcare Services Using Digital Twin. IEEE Access 2019, 7, 49088–49101.

- de Lepper, A.G.W.; Buck, C.M.A.; van ’t Veer, M.; Huberts, W.; van de Vosse, F.N.; Dekker, L.R.C. From Evidence-Based Medicine to Digital Twin Technology for Predicting Ventricular Tachycardia in Ischaemic Cardiomyopathy. J. R. Soc. Interface 2022, 19, 20220317.

- Barbiero, P.; Viñas Torné, R.; Lió, P. Graph Representation Forecasting of Patient’s Medical Conditions: Toward a Digital Twin. Front. Genet. 2021, 12, 652907.

- Sun, T.; He, X.; Song, X.; Shu, L.; Li, Z. The Digital Twin in Medicine: A Key to the Future of Healthcare? Front. Med. 2022, 9, 907066.

- Venkatesh, K.P.; Raza, M.M.; Kvedar, J.C. Health Digital Twins as Tools for Precision Medicine: Considerations for Computation, Implementation, and Regulation. NPJ Digit. Med. 2022, 5, 150.

- Koopsen, T.; Gerrits, W.; van Osta, N.; van Loon, T.; Wouters, P.; Prinzen, F.W.; Vernooy, K.; Delhaas, T.; Teske, A.J.; Meine, M.; et al. Virtual Pacing of a Patient’s Digital Twin to Predict Left Ventricular Reverse Remodelling after Cardiac Resynchronization Therapy. Europace 2024, 26, euae009.

- Trayanova, N.A.; Prakosa, A. Up Digital and Personal: How Heart Digital Twins Can Transform Heart Patient Care. Heart Rhythm 2024, 21, 89–99.

- Kamel Boulos, M.N.; Zhang, P. Digital Twins: From Personalised Medicine to Precision Public Health. J. Pers. Med. 2021, 11, 745.

- Xu, X.; Li, J.; Zhu, Z.; Zhao, L.; Wang, H.; Song, C.; Chen, Y.; Zhao, Q.; Yang, J.; Pei, Y. A Comprehensive Review on Synergy of Multi-Modal Data and AI Technologies in Medical Diagnosis. Bioengineering 2024, 11, 219.

- Yammouri, G.; Ait Lahcen, A. AI-Reinforced Wearable Sensors and Intelligent Point-of-Care Tests. J. Pers. Med. 2024, 14, 1088.

- Avanzato, R.; Beritelli, F.; Lombardo, A.; Ricci, C. Lung-DT: An AI-Powered Digital Twin Framework for Thoracic Health Monitoring and Diagnosis. Sensors 2024, 24, 958.

- Rudnicka, Z.; Proniewska, K.; Perkins, M.; Pregowska, A. Cardiac Healthcare Digital Twins Supported by Artificial Intelligence-Based Algorithms and Extended Reality—A Systematic Review. Electronics 2024, 13, 866.

- Cascarano, A.; Mur-Petit, J.; Hernández-González, J.; Camacho, M.; Eadie, N.T.; Gkontra, P.; Gil-Ortiz, R.; Gomez-Cabrero, D.; Gori, I.; Sanz, J.M.; et al. Machine and Deep Learning for Longitudinal Biomedical Data: A Review of Methods and Applications. Artif. Intell. Rev. 2023, 56, S1711–S1771.

- Armoundas, A.A.; Narayan, S.M.; Arnett, D.K.; Spector-Bagdady, K.; Bennett, D.A.; Celi, L.A.; Chaitman, B.R.; DeMaria, A.N.; Estes, N.A.M.; Goldberger, J.J.; et al. Use of Artificial Intelligence in Improving Outcomes in Heart Disease: A Scientific Statement from the American Heart Association. Circulation 2024, 149, e1028–e1050.

- Gomes, B.; Singh, A.; O’Sullivan, J.; Schnurr, T.; Goddard, P.; Loong, S.; Mahadevan, R.; Okholm, S.; Nielsen, J.B.; Pihl, C.; et al. Genetic Architecture of Cardiac Dynamic Flow Volumes. Nat. Genet. 2023, 56, 245–257.

- Padmanabhan, S.; Tran, T.Q.B.; Dominiczak, A.F. Artificial Intelligence in Hypertension: Seeing Through a Glass Darkly. Circ. Res. 2021, 128, 1100–1118.

- Adibi, S.; Rajabifard, A.; Shojaei, D.; Wickramasinghe, N. Enhancing Healthcare through Sensor-Enabled Digital Twins in Smart Environments: A Comprehensive Analysis. Sensors 2024, 24, 2793.

- Foraker, R.E.; Benziger, C.P.; DeBarmore, B.M.; Cené, C.W.; Loustalot, F.; Khan, Y.; Lambert, C.; Lloyd-Jones, D.M.; Ritchey, M.D.; Sims, M.; et al. Achieving Optimal Population Cardiovascular Health Requires an Interdisciplinary Team and a Learning Healthcare System. Circulation 2021, 143, e9–e18.

- Lovisotto, G.; Turner, H.; Eberz, S.; Martinovic, I. Seeing Red: PPG Biometrics Using Smartphone Cameras. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 397–405.

- Yu, X.; Laurentius, T.; Bollheimer, C.; Leonhardt, S.; Hoog Antink, C. Noncontact Monitoring of Heart Rate and Heart Rate Variability in Geriatric Patients Using Photoplethysmography Imaging. IEEE J. Biomed. Health Inform. 2020, 25, 1781–1792.

- Araki, T.; Yoshimoto, S.; Uemura, T.; Miyazaki, A.; Kurihira, N.; Kasai, Y.; Harada, Y.; Nezu, T.; Iida, H.; Sandbrook, J.; et al. Skin-Like Transparent Sensor Sheet for Remote Healthcare Using Electroencephalography and Photoplethysmography. Adv. Mater. Technol. 2022, 7, 2200362.

- Zhang, G.; Zhang, S.; Dai, Y.; Shi, B. Using Rear Smartphone Cameras as Sensors for Measuring Heart Rate Variability. IEEE Access 2021, 9, 16675–16684.

- Hnoohom, N.; Mekruksavanich, S.; Jitpattanakul, A. Physical Activity Recognition Based on Deep Learning Using Photoplethysmography and Wearable Inertial Sensors. Electronics 2023, 12, 693.

- Park, J.; Seok, H.S.; Kim, S.S.; Shin, H. Photoplethysmogram Analysis and Applications: An Integrative Review. Front. Physiol. 2022, 12, 808451.

- Tang, Q.; Chen, Z.; Guo, Y.; Liang, Y.; Ward, R.; Menon, C.; Elgendi, M. Robust Reconstruction of Electrocardiogram Using Photoplethysmography: A Subject-Based Model. Front. Physiol. 2022, 13, 859763.

- Botina-Monsalve, D.; Benezeth, Y.; Miteran, J. Performance Analysis of Remote Photoplethysmography Deep Filtering Using Long Short-Term Memory Neural Network. Biomed. Eng. Online 2022, 21, 69.

- Schäfer, A.; Vagedes, J. How Accurate Is Pulse Rate Variability as an Estimate of Heart Rate Variability? A Review on Studies Comparing Photoplethysmographic Technology with an Electrocardiogram. Int. J. Cardiol. 2013, 166, 15–29.

- Berndt, D.J.; Clifford, J. Using Dynamic Time Warping to Find Patterns in Time Series. In Proceedings of the 3rd International Conference on Knowledge Discovery and Data Mining, Seattle, WA, USA, 31 July–1 August 1994; AAAI Press: Menlo Park, CA, USA, 1994; pp. 359–370.

- Peng, H.; Long, F.; Ding, C. Feature Selection Based on Mutual Information Criteria of Max-Dependency, Max-Relevance, and Min-Redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1226–1238.

- Estevez, P.A.; Tesmer, M.; Perez, C.A.; Zurada, J.M. Normalized Mutual Information Feature Selection. IEEE Trans. Neural Netw. 2009, 20, 189–201.

- Addison, P.S. Wavelet Transforms and the ECG: A Review. Physiol. Meas. 2005, 26, R155–R199.

- Rilling, G.; Flandrin, P.; Goncalves, P. On Empirical Mode Decomposition and Its Algorithms. In Proceedings of the IEEE-EURASIP Workshop on Nonlinear Signal and Image Processing, Grado, Italy, 8–11 June 2003.

- Zhang, Z. Photoplethysmography-Based Heart Rate Monitoring in Physical Activities via Joint Sparse Spectrum Reconstruction. IEEE Trans. Biomed. Eng. 2015, 62, 1902–1910.

- Mincholé, A.; Camps, J.; Lyon, A.; Rodríguez, B. Machine Learning in the Electrocardiogram. J. Electrocardiol. 2019, 57, S61–S64.

- Hong, S.; Zhou, Y.; Shang, J. Deep Learning in ECG Diagnosis: A Review. Knowl.-Based Syst. 2021, 227, 107187.

- Attia, Z.I.; Harmon, D.M.; Behr, E.R.; Friedman, P.A. Application of Artificial Intelligence to the Electrocardiogram. Eur. Heart J. 2021, 42, 4717–4730.

- Avanzato, R.; Beritelli, F. Automatic ECG Diagnosis Using Convolutional Neural Network. Electronics 2020, 9, 951.

- Feng, K.; Pi, X.; Liu, H.; Sun, K. Myocardial Infarction Classification Based on Convolutional Neural Network and Recurrent Neural Network. Appl. Sci. 2019, 9, 1879.

- Che, C.; Zhang, P.; Zhu, M.; Qu, Y.; Jin, B. Constrained Transformer Network for ECG Signal Processing and Arrhythmia Classification. BMC Med. Inform. Decis. Mak. 2021, 21, 184.

- Xu, Z.; Lee, Y.; Zhao, J.; Wang, X.; Chen, Y.; Zhang, H.; Zhang, Y. Personalized Federated Learning with Mixtures of Global and Local Models. In Advances in Neural Information Processing Systems (NeurIPS 2024); Curran Associates: Red Hook, NY, USA, 2024; p. 1097.

- Tomašev, N.; Gligorijević, V.; Blankenberg, R.; Wu, P.; Xu, J.; Ward, W.; White, E.; White, W.; Wong, E.; Woo, J.; et al. Development and Validation of a Personalized Model with Transfer Learning for Acute Kidney Injury Risk Estimation Using Electronic Health Records. JAMA Netw. Open 2022, 5, e2219776.

- Salah, H.; Srinivas, S. Explainable Machine Learning Framework for Predicting Long-Term Cardiovascular Disease Risk among Adolescents. Sci. Rep. 2022, 12, 21905.

- Vaid, A.; Somani, S.; Bikdeli, B.; Mlodzinski, E.; Wang, Z.; Chen, R.; Razavi, A.C.; Johnson, K.W.; Al’Aref, S.J.; Argulian, E.; et al. A Foundational Vision Transformer Improves Diagnostic Performance for Electrocardiograms. NPJ Digit. Med. 2023, 6, 173.

- Gadaleta, M.; Schiavone, M.; Khurshid, S.; O’Shea, A.; Sinha, A.; Garg, A.; Weng, L.-C.; Ng, C.Y.; Ellinor, P.T.; Picard, M.H.; et al. Prediction of Atrial Fibrillation from At-Home Single-Lead ECG Signals without Arrhythmias. NPJ Digit. Med. 2023, 6, 196.

- Andayeshgar, B.; Abdali-Mohammadi, F.; Sepahvand, M.; Kalhori, S.R.N.; Sheikhzadeh, F.; Khalilzadeh, O. Arrhythmia Detection by the Graph Convolution Network and a Proposed Structure for Communication between Cardiac Leads. BMC Med. Res. Methodol. 2024, 24, 96.

- Hampe, N.; Knoll, C.; Busse, A.; Rajkumar, R.; Hennemuth, A.; Preim, B.; Preim, U.; Botha, C.P. Graph Neural Networks for Automatic Extraction and Labeling of the Coronary Artery Tree Using Deep Learning. J. Med. Imaging 2024, 11, 044002.

- Chowdhury, S.; Rahman, M.M.; Dang, T.H.; Hoque, E.; Kim, M.; Khan, S.S.; Yoon, S. Stratifying Heart-Failure Patients with Graph Neural Network and Transformer Using Electronic Health Records to Optimize Drug Response Prediction. J. Am. Med. Inform. Assoc. 2024, 31, 1458–1468.

- Ng, M.; Guo, F.; Biswas, L.; Petersen, S.E.; Piechnik, S.K.; Neubauer, S. Estimating Uncertainty in Neural Networks for Cardiac MRI Segmentation: A Benchmark Study. IEEE Trans. Biomed. Eng. 2023, 70, 1955–1966.

- Liu, Z.-D.; Chen, X.; Wang, L.; Zhang, Y.; Xu, H.; Zhao, Q.; Zhou, T. HGCTNet: Handcrafted Feature-Guided CNN and Transformer Network for Wearable Cuffless Blood Pressure Measurement. IEEE J. Biomed. Health Inform. 2024, 28, 3882–3894.

- Chen, Q.; Lee, B.G. Deep Learning Models for Stress Analysis in University Students: A Sudoku-Based Study. Sensors 2023, 23, 6099.

- Ravi, D.; Khemchandani, R.; Caceres, C.; Singh, S.; Lane, N.D. In-Device Personalization of Deep Activity Recognizers via Progressive Fine-Tuning. In Proceedings of the ACM International Joint Conference on Pervasive and Ubiquitous Computing (UbiComp 2020), Cancún, Mexico, 12–17 September 2020; ACM: New York, NY, USA, 2020; pp. 671–674.

- Poterucha, T.J.; Jing, L.; Ricart, R.P.; Sanchez, J.M.; Lopez-Jimenez, F.; Attia, Z.I.; Noseworthy, P.A.; Friedman, P.A.; Ackerman, M.J.; Pellikka, P.A.; et al. Detecting Structural Heart Disease from Electrocardiograms Using AI. Nature 2025, 644, 221–230.

- Alasmari, S.; AlGhamdi, R.; Tejani, G.G.; Sharma, S.K.; Mousavirad, S.J. Federated Learning-Based Multimodal Approach for Early Detection and Personalized Care in Cardiac Disease. Front. Physiol. 2025, 16, 1563185.

- El-Hajj, C.; Kyriacou, P.A. A Review of Machine Learning Techniques in Photoplethysmography for the Non-Invasive Cuffless Measurement of Blood Pressure. Biomed. Signal Process. Control 2020, 58, 101870.

- Wang, J.; Spicher, N.; Warnecke, J.M.; Haghi, M.; Schwartze, J.; Deserno, T.M. Unobtrusive Health Monitoring in Private Spaces: The Smart Home. Sensors 2021, 21, 864.

- Zhou, Z.-B.; Cui, T.-R.; Li, D.; Jian, J.-M.; Li, Z.; Ji, S.-R.; Li, X.; Xu, J.-D.; Liu, H.-F.; Yang, Y.; et al. Wearable Continuous Blood Pressure Monitoring Devices Based on Pulse Wave Transit Time and Pulse Arrival Time: A Review. Materials 2023, 16, 2133.

- Khoo, L.S.; Lim, M.K.; Chong, C.Y.; McNaney, R. Machine Learning for Multimodal Mental Health Detection: A Systematic Review of Passive Sensing Approaches. Sensors 2024, 24, 348.

- Tagne Poupi, T.A.; Nfor, K.A.; Kim, J.-I.; Kim, H.-C. Applications of Artificial Intelligence, Machine Learning, and Deep Learning in Nutrition: A Systematic Review. Nutrients 2024, 16, 1073.

- Santosh Kumar, B.; Mishra, S. AGC for Distributed Generation. In Proceedings of the IEEE International Conference on Sustainable Energy Technologies (ICSET 2008), Singapore, 24–27 November 2008; IEEE: Singapore, 2008; pp. 1–6.

- Santosh Kumar, B.; Mishra, S.; Bhende, C.N.; Chauhan, M.S. PI Control-Based Frequency Regulator for Distributed Generation. In Proceedings of the IEEE Region 10 Conference (TENCON 2008), Hyderabad, India, 19–21 November 2008; IEEE: Hyderabad, India, 2008; pp. 1–5.

- Bach, T.A.; Kristiansen, J.K.; Babic, A.; Jacovi, A. Unpacking Human-AI Interaction in Safety-Critical Industries: A Systematic Literature Review. IEEE Access 2024, 12, 106385–106414.

- Mavridou, E.; Vrochidou, E.; Kalampokas, T.; Venetis, K.; Kanakaris, G.; Papakostas, G.A. AI-Powered Software Development: A Systematic Review of Recommender Systems for Programmers. Computers 2025, 14, 119.

- Jiang, W.; Zhang, Y.; Mo, H.; Wang, M.; Zhang, W. Learning and Mapping Academic Topic Evolution—Evolving Topics in the Australian National Disability Insurance Scheme. In Advances in Data Mining and Applications (ADMA 2024); Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2024; Volume 15387, pp. 131–145.

- Cheng, Y.; Wang, H.; Bao, Y.; Lu, F. Appearance-Based Gaze Estimation with Deep Learning: A Review and Benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 7509–7528.

- Koren, U.W.; Alfnes, A. Fine-Tuning Large Language Models: Assessing Memorization and Redaction of Personally Identifiable Information. Master’s Thesis, BI Norwegian Business School, Oslo, Norway, 2024. Available online: https://hdl.handle.net/11250/3169319 (accessed on 16 July 2025).

- Day, M.-Y.; Tsai, C.-T. CMSI: Carbon Market Sentiment Index with AI Text Analytics. In Proceedings of the 2023 International Conference on Advances in Social Networks Analysis and Mining (ASONAM), Kusadasi, Turkey, 6–9 November 2023; ACM: New York, NY, USA, 2023; pp. 719–726.

- Sushma, B.; Chinniah, P.; Ramesh, P.S. An ECG Signal Processing and Cardiac Disease Prediction Approach for IoT-Based Health Monitoring System Using Optimized Epistemic Neural Network. J. Med. Biol. Eng. 2025, 53, 93–106.

- Varghese, J.; Chapiro, J. ChatGPT: The Transformative Influence of Generative AI on Science and Healthcare. J. Hepatol. 2023, 80, 977–980.

- Rashidi, H.H.; Sharma, G.; Bravata, D.M.; Pierce, J.T.; Myers, L.J.; Akpinar-Elci, M. Introduction to Artificial Intelligence and Machine Learning in Pathology and Medicine: Generative and Nongenerative Artificial Intelligence Basics. Mod. Pathol. 2025, 38, 100688.

- Zhang, Q.; Liu, X.; Wong, T.Y.; Ting, D.S.W. A Large Language Model for Ophthalmology: A Review of Applications, Opportunities, and Challenges. Ophthalmol. Sci. 2023, 3, 100318.

- Chia, P.Y.; Tan, S.H.; Teo, J.W.P.; Ng, O.T.; Marimuthu, K. Evaluating Multilingual Large Language Models in the Context of Infectious Diseases: A Comparative Study of ChatGPT and GPT-4. BMC Infect. Dis. 2024, 24, 799.

- Patel, N.R.; Lacher, C.R.; Huang, A.Y.; Park, S.M.; Wu, E.C.; Gee, J.; Hubbard, J.B. Evaluating the Application of Artificial Intelligence and Ambient Listening to Generate Medical Notes in Vitreoretinal Clinic Encounters. Clin. Ophthalmol. 2025, 19, 1763–1769.

- Singh, R.; Wang, J.; Li, X.; Brown, P.; Zhao, L. Advancements in Generative AI: A Comprehensive Review of GANs, GPT, Autoencoders, Diffusion Models, and Transformers. IEEE Access 2024, 12, 69812–69837.

- Chamola, V. Generative AI for Transformative Healthcare: A Comprehensive Study of Emerging Models, Applications, Case Studies, and Limitations. IEEE Access 2024, 12, 31078–31106.

- Yu, K.; Nguyen, T.; Li, J.; Liu, M.; Langlotz, C.; Lungren, M.P.; Ng, A.Y.; Rajpurkar, P. Radiology-Llama2: Best-in-Class Large Language Model for Radiology. arXiv 2023, arXiv:2309.06419.

- Nassiri, K.; Akhloufi, M.A. Recent Advances in Large Language Models for Healthcare. BioMedInformatics 2024, 4, 1097–1143.

- Yu, P.; Xu, H.; Hu, X.; Deng, C. Leveraging Generative AI and Large Language Models: A Comprehensive Roadmap for Healthcare Integration. Healthcare 2023, 11, 2776.

- Tuan, N.T.; Moore, P.; Thanh, D.H.V.; Pham, H.V. A Generative Artificial Intelligence Using Multilingual Large Language Models for ChatGPT Applications. Appl. Sci. 2024, 14, 3036.

- Panagoulias, D.P.; Virvou, M.; Tsihrintzis, G.A. Augmenting Large Language Models with Rules for Enhanced Domain-Specific Interactions: The Case of Medical Diagnosis. Electronics 2024, 13, 320.

- Singh, A.; Patel, R.; Kumar, V.; Zhao, H. Mitigating Hallucinations in Large Language Models: A Comprehensive Review and Future Directions. Future Internet 2024, 16, 462.

- Vrdoljak, J.; Boban, Z.; Vilović, M.; Kumrić, M.; Božić, J. A Review of Large Language Models in Medical Education, Clinical Decision Support, and Healthcare Administration. Healthcare 2025, 13, 603.

- Ghebrehiwet, I.; Zaki, N.; Damseh, R.; Mohamad, M.S. Revolutionizing Personalized Medicine with Generative AI: A Systematic Review. Artif. Intell. Rev. 2024, 57, 128.

- Feretzakis, G.; Papaspyridis, K.; Gkoulalas-Divanis, A.; Verykios, V.S. Privacy-Preserving Techniques in Generative AI and Large Language Models: A Narrative Review. Information 2024, 15, 697.

- Balas, V.E.; Semwal, V.B.; Khandare, A. (Eds.) Intelligent Computing and Networking: Proceedings of IC-ICN 2023; Lecture Notes in Networks and Systems; Springer: Singapore, 2023; Volume 699.

- Maharjan, J.; Garikipati, A.; Singh, N.P.; Cyrus, L.; Sharma, M.; Ciobanu, M.; Barnes, G.; Thapa, R.; Mao, Q.; Das, R. OpenMedLM: Prompt Engineering Can Out-Perform Fine-Tuning in Medical Question-Answering with Open-Source Large Language Models. Sci. Rep. 2024, 14, 14156.

- Dong, W.; Shen, S.; Han, Y.; Tan, T.; Wu, J.; Xu, H. Generative Models in Medical Visual Question Answering: A Survey. Appl. Sci. 2025, 15, 2983.

- Hu, Y.; Natesan, H.; Liu, Z.; Li, F.; Fei, X.; Chang, D.; Torous, J. A Unified Taxonomy for Evaluating Large Language Model-Powered Chatbots in Mental Healthcare. Nat. Med. 2025, 8, 230.

- Wu, Y.; Liu, Y.; Eichstaedt, J.C.; Smith, S.W. Clinical Large Language Models for Mental Healthcare. Nat. Med. 2024, 3, 12.

- Qin, H.; Tong, Y. Opportunities and Challenges for Large Language Models in Primary Health Care. J. Prim. Care Community Health 2025, 16, 21501319241312571.

- Yadav, G.S.; Longhurst, C.A. Will AI Make the Electronic Health Record More Efficient for Clinicians? NEJM AI 2025, 1, e2500020.

- Halamka, J.D.; Kirsh, S.R.; Liu, V.X.; Simon, L. Applications of Artificial Intelligence in Medicine: An Expert Panel Discussion. Perm. J. 2024, 28, 3–12.

- Spencer, E.-J.; Economou-Zavlanos, N.J.; van Genderen, M.E. What If We Do, but What If We Don’t? The Opportunity Cost of Artificial-Intelligence Hesitancy in the Intensive Care Unit. Intensive Care Med. 2024, 50, 113–120.

- Jain, B.; Doshi, R.; Nundy, S. Leveraging Artificial Intelligence to Advance Health Equity in America’s Safety Net. J. Gen. Intern. Med. 2025, 40, 133–142.

- Binkley, C.E.; Bouslov, D.; Zaidi, A.; Goldhaber-Fiebert, J.D.; Sharp, R.R.; Pfeffer, M.A.; Chokshi, D.A. An Early Pipeline Framework for Assessing Vendor AI Solutions to Support Return on Investment. NPJ Digit. Med. 2025, 8, 156–192.

- Nabla. Enjoy Care Again—The Most Advanced AI Assistant, Restoring the Human Connection at the Heart of Healthcare. 2025. Available online: https://www.nabla.com/ (accessed on 5 July 2025).

- Oracle. Oracle Health Clinical AI Agent Listens So Physicians Can Too—Press Release. 2025. Available online: https://www.oracle.com/news/announcement/physicians-reduce-documentation-time-with-oracle-health-clinical-ai-agent-2025-03-04/ (accessed on 5 July 2025).

- Columbia University Irving Medical Center. Can AI Detect Hidden Heart Disease? 16 July 2025. Available online: https://www.cuimc.columbia.edu/news/can-ai-detect-hidden-heart-disease (accessed on 12 August 2025).

- NewYork-Presbyterian Advances. Study Shows AI Screening Tool Developed at NewYork-Presbyterian and Columbia Can Detect Structural Heart Disease Using Electrocardiogram Data. Available online: https://www.nyp.org/advances/article/cardiology/study-shows-ai-screening-tool-developed-at-newyork-presbyterian-and-columbia-can-detect-structural-heart-disease-using-electrocardiogram-data (accessed on 16 July 2025).

- Chao, C.-J.; Banerjee, I.; Arsanjani, R.; Ayoub, C.; Tseng, A.; Delbrouck, J.-B.; Chen, H.; Lee, A.; Lin, S.; Rahimi, S.; et al. EchoGPT: A Large Language Model for Echocardiography Report Summarization. medRxiv 2024, 18, v1. [Google Scholar] [CrossRef]

- Calegari, R.; Ciatto, G.; Mascardi, V.; Omicini, A. Logic-Based Technologies for Multi-Agent Systems: A Systematic Literature Review. Auton. Agent. Multi-Agent. Syst. 2021, 35, 1. [Google Scholar] [CrossRef]

- Cardoso, R.C.; Ferrando, A. A Review of Agent-Based Programming for Multi-Agent Systems. Computers 2021, 10, 16. [Google Scholar] [CrossRef]

- Deng, Z.; Guo, Y.; Han, C.; Ma, W.; Xiong, J.; Wen, S.; Xiang, Y. AI Agents Under Threat: A Survey of Key Security Challenges and Future Pathways. ACM Comput. Surv. 2025, 57, 182. [Google Scholar] [CrossRef]

- Lieto, A.; Bhatt, M.; Oltramari, A.; Vernon, D. The Role of Cognitive Architectures in General Artificial Intelligence. Cogn. Syst. Res. 2018, 48, 1–3. [Google Scholar] [CrossRef]

- Junaid, S.B.; Imam, A.A.; Shuaibu, A.N.; Ahmad, A.; Ali, A.; Khan, W. Artificial Intelligence, Sensors and Vital Health Signs: A Review. Appl. Sci. 2022, 12, 11475. [Google Scholar] [CrossRef]

- Chowdhury, M.E.H.; Khandakar, A.; Alzoubi, K.; Mansoor, S.; Abouhasera, R.; Koubaa, S.; Ahmed, R.; Mohammed, M.; Al-Emadi, N.; Al-Maadeed, S.; et al. Real-Time Smart-Digital Stethoscope System for Heart Diseases Monitoring. Sensors 2019, 19, 2781. [Google Scholar] [CrossRef] [PubMed]

- Chakrabarti, S.; Biswas, N.; Jones, L.D.; Kesari, S.; Ashili, S. Smart Consumer Wearables as Digital Diagnostic Tools: A Review. Diagnostics 2022, 12, 2110. [Google Scholar] [CrossRef] [PubMed]

- El-Rashidy, N.; El-Sappagh, S.; Islam, S.M.R.; Abouelmehdi, K.; Abdelrazek, S.; Abdel-Basset, M. Mobile Health in Remote Patient Monitoring for Chronic Diseases: Principles, Trends, and Challenges. Diagnostics 2021, 11, 607. [Google Scholar] [CrossRef]

- Wang, W.-H.; Hsu, W.-S. Integrating Artificial Intelligence and Wearable IoT System in Long-Term Care Environments. Sensors 2023, 23, 5913. [Google Scholar] [CrossRef]

- Kwan, H.Y.; Shell, J.; Fahy, C.; Yang, S.; Xing, Y. Integrating Large Language Models into Medication Management in Remote Healthcare: Current Applications, Challenges, and Future Prospects. Systems 2025, 13, 281. [Google Scholar] [CrossRef]

- Ashrafzadeh, S.; Hamdy, O. Patient-Driven Diabetes Care of the Future in the Technology Era. Cell Metab. 2019, 29, 564–575. [Google Scholar] [CrossRef]

- Car, J.; Tan, W.S.; Huang, Z.; Sloot, P.; Franklin, B.D.; Wyatt, J.C.; Car, L.T. eHealth in the Future of Medications Management: Personalisation, Monitoring and Adherence. BMC Med. 2017, 15, 73. [Google Scholar] [CrossRef]

- Coman, L.-I.; Ianculescu, M.; Paraschiv, E.-A.; Olteanu, R.L.; Bădicu, G.; Iordache, S.; Alexandru, C.-P. Smart Solutions for Diet-Related Disease Management: Connected Care, Remote Health Monitoring Systems, and Integrated Insights for Advanced Evaluation. Appl. Sci. 2024, 14, 2351. [Google Scholar] [CrossRef]

- Lu, T.; Lin, Q.; Yu, B.; Wang, H.; Zhou, M.; Liu, Y.; Wu, Y. A Systematic Review of Strategies in Digital Technologies for Motivating Adherence to Chronic Illness Self-Care. NPJ Health Syst. 2025, 2, 13. [Google Scholar] [CrossRef]

- Jenko, S.; Papadopoulou, E.; Kumar, V.; Manolopoulos, Y.; Musto, C.; Tzitzikas, Y. Artificial Intelligence in Healthcare: How to Develop and Implement Safe, Ethical and Trustworthy AI Systems. AI 2025, 6, 116. [Google Scholar] [CrossRef]

- Hemdan, E.E.-D.; Sayed, A. Smart and Secure Healthcare with Digital Twins: A Deep Dive into Blockchain, Federated Learning, and Future Innovations. Algorithms 2025, 18, 401. [Google Scholar] [CrossRef]

- Morjaria, L.; Gandhi, B.; Haider, N.; Mehmood, R.; Khokhar, M.S. Applications of Generative Artificial Intelligence in Electronic Medical Records: A Scoping Review. Information 2025, 16, 284. [Google Scholar] [CrossRef]

- Pavitra, K.H.; Agnihotri, A. Artificial Intelligence in Corporate Learning and Development: Current Trends and Future Possibilities. In Proceedings of the 2nd International Conference on Smart Technologies for Smart Nation (SmartTechCon 2023), Singapore, 18–19 August 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 688–693. [Google Scholar] [CrossRef]

- Oracle. Generative AI Agents. Available online: https://www.oracle.com/artificial-intelligence/generative-ai/agents/ (accessed on 2 April 2025).

- Bond, R.R.; Mulvenna, M.D.; Potts, C.; Finlay, D.; Donnelly, M. Digital Transformation of Mental Health Services. NPJ Ment. Health Res. 2023, 2, 13. [Google Scholar] [CrossRef]

- Sarkar, M.; Lee, T.-H.; Sahoo, P.K. Smart Healthcare: Exploring the Internet of Medical Things with Ambient Intelligence. Electronics 2024, 13, 2309. [Google Scholar] [CrossRef]

- Karthick, G.S.; Pankajavalli, P.B. Ambient Intelligence for Patient-Centric Healthcare Delivery: Technologies, Framework, and Applications. Des. Fram. Wirel. Netw. 2020, 82, 223–254. [Google Scholar]

- Spoladore, D.; Mondellini, M.; Mahroo, A.; Beller, J.; Rizzo, A.; Calabrò, P.; De Masi, S. Smart Waiting Room: A Systematic Literature Review and a Proposal. Electronics 2024, 13, 388. [Google Scholar] [CrossRef]

- Kirubakaran, S.J.; Gunasekaran, A.; Dolly, D.R.J.; Jagannath, D.J.; Peter, J.D. A Feasible Approach to Smart Remote Health Monitoring: Subscription-Based Model. Front. Public Health 2023, 11, 1150455. [Google Scholar] [CrossRef]

- Alabdaljabar, M.S.; Hasan, B.; Noseworthy, P.A.; Maalouf, J.F.; Ammash, N.M.; Hashmi, S.K. Machine Learning in Cardiology: A Potential Real-World Solution in Low- and Middle-Income Countries. J. Multidiscip. Healthc. 2023, 16, 285–295. [Google Scholar] [CrossRef]

- Patel, D.; Raut, G.; Cheetirala, S.N.; Glicksberg, B.; Levin, M.A.; Nadkarni, G.; Freeman, R.; Klang, E.; Timsina, P. AI Agents in Modern Healthcare: From Foundation to Pioneer—A Comprehensive Review and Implementation Roadmap for Impact and Integration in Clinical Settings. Preprints 2025. [Google Scholar] [CrossRef]

- Khera, R.; Oikonomou, E.; Nadkarni, G.; Antoniades, C.; Narula, J.; Fuster, V. Transforming Cardiovascular Care with Artificial Intelligence: From Discovery to Practice. J. Am. Coll. Cardiol. 2024, 84, 97–114. [Google Scholar] [CrossRef] [PubMed]

- Biondi-Zoccai, G.; D’Ascenzo, F.; Giordano, S.; Mirzoyev, U.; Erol, Ç.; Cenciarelli, S.; Leone, P.; Versaci, F. Artificial Intelligence in Cardiology: General Perspectives and Focus on Interventional Cardiology. Anatol. J. Cardiol. 2025, 29, 152–163. [Google Scholar] [CrossRef] [PubMed]

- Bhagirath, P.; Strocchi, M.; Bishop, M.J.; Boyle, P.M.; Plank, G. From Bits to Bedside: Entering the Age of Digital Twins in Cardiac Electrophysiology. Europace 2024, 26, euae295. [Google Scholar] [CrossRef]

- Corral-Acero, J.; Margara, F.; Marciniak, M.; Rodero, C.; Loncaric, F.; Feng, Y.; Gilbert, A.; Fernandes, J.F.; Bukhari, H.A.; Wajdan, A.; et al. The ‘Digital Twin’ to Enable the Vision of Precision Cardiology. Eur. Heart J. 2020, 41, 4556–4564. [Google Scholar] [CrossRef]

- Ugurlu, D.; Qian, S.; Fairweather, E.; Mauger, C.; Ruijsink, B.; Toso, L.D.; Deng, Y.; Strocchi, M.; Razavi, R.; Young, A.; et al. Cardiac Digital Twins at Scale from MRI: Open Tools and Representative Models from ~55,000 UK Biobank Participants. PLoS ONE 2025, 20, e0327158. [Google Scholar] [CrossRef]

- Conforti, R. Informatics in Emergency Medicine: A Literature Review. Emerg. Care Med. 2025, 2, 2. [Google Scholar] [CrossRef]

- Ahuja, A.; Agrawal, S.; Acharya, S.; Batra, N.; Daiya, V. Advancements in Wearable Digital Health Technology: A Review of Epilepsy Management. Cureus 2024, 16, e57037. [Google Scholar] [CrossRef]

- Ferreira, J.C.; Elvas, L.B.; Correia, R.; Mascarenhas, M. Enhancing EHR Interoperability and Security through Distributed Ledger Technology: A Review. Healthcare 2024, 12, 1967. [Google Scholar] [CrossRef]

- Saberi, M.A.; Mcheick, H.; Adda, M. From Data Silos to Health Records Without Borders: A Systematic Survey on Patient-Centered Data Interoperability. Information 2025, 16, 106. [Google Scholar] [CrossRef]

- Lazarova, E.; Mora, S.; Maggi, N.; Ruggiero, C.; Vitale, A.C.; Rubartelli, P.; Giacomini, M. An Interoperable Electronic Health Record System for Clinical Cardiology. Informatics 2022, 9, 47. [Google Scholar] [CrossRef]

- Mavrogiorgou, A.; Kiourtis, A.; Perakis, K.; Pitsios, S.; Kyriazis, D. IoT in Healthcare: Achieving Interoperability of High-Quality Data Acquired by IoT Medical Devices. Sensors 2019, 19, 1978. [Google Scholar] [CrossRef]

- Ademola, A.; George, C.; Mapp, G. Addressing the Interoperability of Electronic Health Records: The Technical and Semantic Interoperability, Preserving Privacy and Security Framework. Appl. Syst. Innov. 2024, 7, 116. [Google Scholar] [CrossRef]

- Machorro-Cano, I.; Olmedo-Aguirre, J.O.; Alor-Hernández, G.; Rodríguez-Mazahua, L.; Sánchez-Morales, L.N.; Pérez-Castro, N. Cloud-Based Platforms for Health Monitoring: A Review. Informatics 2024, 11, 2. [Google Scholar] [CrossRef]

- De Arriba-Pérez, F.; Caeiro-Rodríguez, M.; Santos-Gago, J.M. Collection and Processing of Data from Wrist Wearable Devices in Heterogeneous and Multiple-User Scenarios. Sensors 2016, 16, 1538. [Google Scholar] [CrossRef] [PubMed]

- Shah, Q.A.; Shafi, I.; Ahmad, J.; Alfarhood, S.; Safran, M.; Ashraf, I. A Meta Modeling-Based Interoperability and Integration Testing Platform for IoT Systems. Sensors 2023, 23, 8730. [Google Scholar] [CrossRef]

- Ghadessi, M.; Di, J.; Wang, C.; Toyoizumi, K.; Shao, N.; Mei, C.; Demanuele, C.; Tang, R.; McMillan, G.; Beckman, R.A. Decentralized Clinical Trials and Rare Diseases: A DIA-IDSWG Perspective. Orphanet J. Rare Dis. 2023, 18, 79. [Google Scholar] [CrossRef]

- Chen, J.; Di, J.; Daizadeh, N.; Lu, Y.; Wang, H.; Shen, Y.L.; Kirk, J.; Rockhold, F.W.; Pang, H.; Zhao, J.; et al. Decentralized Clinical Trials in the Era of Real-World Evidence: A Statistical Perspective. Clin. Transl. Sci. 2025, 18, 2. [Google Scholar] [CrossRef] [PubMed]

- Apostolaros, M.; Babaian, D.; Corneli, A.; Forrest, A.; Hamre, G.; Hewett, J.; Podolsky, L.; Popat, V.; Randall, P. Legal, Regulatory, and Practical Issues to Consider When Adopting Decentralized Clinical Trials. Ther. Innov. Regul. Sci. 2020, 54, 779–787. [Google Scholar] [CrossRef]

- Koller, C.; Blanchard, M.; Hügle, T. Assessment of Digital Therapeutics in Decentralized Clinical Trials: A Scoping Review. PLOS Digit. Health 2025, 4, e0000905. [Google Scholar] [CrossRef]

- Park, J.; Huh, K.Y.; Chung, W.K.; Yu, K. The Landscape of Decentralized Clinical Trials (DCTs): Focusing on the FDA and EMA Guidance. Transl. Clin. Pharmacol. 2024, 32, 41. [Google Scholar] [CrossRef] [PubMed]

- Mennella, C.; Maniscalco, U.; De Pietro, G.; Esposito, M. Ethical and Regulatory Challenges of AI Technologies in Healthcare: A Narrative Review. Heliyon 2024, 10, e26297. [Google Scholar] [CrossRef] [PubMed]

- Alowais, S.A.; Alghamdi, S.S.; Alsuhebany, N.; Alqahtani, T.; Alshaya, A.I.; Almohareb, S.N.; Aldairem, A.; Alrashed, M.; Bin Saleh, K.; Badreldin, H.A.; et al. Revolutionizing Healthcare: The Role of Artificial Intelligence in Clinical Practice. BMC Med. Educ. 2023, 23, 1. [Google Scholar] [CrossRef] [PubMed]

- Marques, M.; Almeida, A.; Pereira, H. The Medicine Revolution Through Artificial Intelligence: Ethical Challenges of Machine Learning Algorithms in Decision-Making. Cureus 2024, 16, e69405. [Google Scholar] [CrossRef]

- Amini, M.M.; Jesus, M.; Fanaei Sheikholeslami, D.; Alves, P.; Hassanzadeh Benam, A.; Hariri, F. Artificial Intelligence Ethics and Challenges in Healthcare Applications: A Comprehensive Review in the Context of the European GDPR Mandate. Mach. Learn. Knowl. Extr. 2023, 5, 1023–1035. [Google Scholar] [CrossRef]

- Bekbolatova, M.; Mayer, J.; Ong, C.W.; Toma, M. Transformative Potential of AI in Healthcare: Definitions, Applications, and Navigating the Ethical Landscape and Public Perspectives. Healthcare 2024, 12, 125. [Google Scholar] [CrossRef]

- Goktas, P.; Grzybowski, A. Shaping the Future of Healthcare: Ethical Clinical Challenges and Pathways to Trustworthy AI. J. Clin. Med. 2025, 14, 1605. [Google Scholar] [CrossRef]

- Jeyaraman, M.; Balaji, S.; Jeyaraman, N.; Yadav, S. Unraveling the Ethical Enigma: Artificial Intelligence in Healthcare. Cureus 2023, 15, e43262. [Google Scholar] [CrossRef]

- Jung, K. Large Language Models in Medicine: Clinical Applications, Technical Challenges, and Ethical Considerations. Healthc. Inform. Res. 2025, 31, 114–124. [Google Scholar] [CrossRef]

- Basubrin, O. Current Status and Future of Artificial Intelligence in Medicine. Cureus 2025, 17, e77561. [Google Scholar] [CrossRef]

- Quinn, T.P.; Senadeera, M.; Jacobs, S.; Coghlan, S.; Le, V. Trust and Medical AI: The Challenges We Face and the Expertise Needed to Overcome Them. J. Am. Med. Inform. Assoc. 2020, 28, 890–894. [Google Scholar] [CrossRef]

- Meijer, C.; Uh, H.-W.; el Bouhaddani, S. Digital Twins in Healthcare: Methodological Challenges and Opportunities. J. Pers. Med. 2023, 13, 1522. [Google Scholar] [CrossRef] [PubMed]

- Lüscher, T.F.; Wenzl, F.A.; D’Ascenzo, F.; Friedman, P.A.; Antoniades, C. Artificial Intelligence in Cardiovascular Medicine: Clinical Applications. Eur. Heart J. 2024, 45, 4291–4304. [Google Scholar] [CrossRef] [PubMed]

- Haltaufderheide, J.; Ranisch, R. The Ethics of ChatGPT in Medicine and Healthcare: A Systematic Review on Large Language Models (LLMs). NPJ Digit. Med. 2024, 7, 183. [Google Scholar] [CrossRef]

- Lautrup, A.D.; Hyrup, T.; Schneider-Kamp, A.; Dahl, M.; Lindholt, J.S.; Schneider-Kamp, P. Heart-to-Heart with ChatGPT: The Impact of Patients Consulting AI for Cardiovascular Health Advice. Open Heart 2023, 10, e002455. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; Fu, T.; Xu, Y.; Ma, Z.; Xu, H.; Du, B.; Lu, Y.; Gao, H.; Wu, J.; Chen, J. TWIN-GPT: Digital Twins for Clinical Trials via Large Language Model. ACM Trans. Multimed. Comput. Commun. Appl. 2024. [Google Scholar] [CrossRef]

- Shah, K.; Xu, A.Y.; Sharma, Y.; Daher, M.; McDonald, C.; Diebo, B.G.; Daniels, A.H. Large Language Model Prompting Techniques for Advancement in Clinical Medicine. J. Clin. Med. 2024, 13, 5101. [Google Scholar] [CrossRef]

- Chen, Z.; Matoba, K.; Salvi, F.; Pagliardini, M.; Fan, S.; Mohtashami, A.; Sallinen, A.; Sakhaeirad, A.; Hernández Cano, A.; Romanou, A.; et al. MEDITRON-70B: Scaling Medical Pretraining for Large Language Models. arXiv 2023, arXiv:2311.16079. [Google Scholar] [CrossRef]

| Device | Sensor Type | Measured Parameters | Connectivity | Edge Capabilities | Role in Architecture | References |

|---|---|---|---|---|---|---|

| Zephyr BioHarness | ECG, Accelerometer | HR, HRV, RR intervals, posture | Bluetooth | RR detection, motion filtering | Wearable + Edge | [23] |

| Empatica E4 | PPG, EDA, Temp, ACC | HR, SpO2, GSR, skin temp | Bluetooth, USB | On-device preprocessing | Wearable Layer | [24] |

| Polar H10 | ECG | HR, RR intervals | Bluetooth | Onboard HRV analysis | Sensor + Edge Layer | [24,26] |

| Xiaomi Smart Band 7 | PPG, Accelerometer | HR, SpO2, sleep, steps | BLE | Basic HR tracking | Low-power Wearable | [23] |

| Shimmer3 GSR+ | PPG, GSR | HR, GSR, skin conductance | Bluetooth | Raw signal logging | Research-grade Sensor Layer | [25] |

| Hexoskin Smart Shirt | ECG, Respiration, ACC | HR, respiration rate, HRV | Bluetooth | On-board buffering | Multimodal Wearable | [27] |

| Raspberry Pi + MAX30102 | PPG | HR, SpO2, RR, RMSSD, SDNN | I2C, Wi-Fi | Python 3.12-based HRV computation | Edge + AI Input | [28] |

| Custom EMG on ESP32 | EMG (Digital) | RMS, MAV, ZC, SSC (muscle fatigue) | GPIO, UART | Real-time signal classification | Sensor + Edge Layer | [23,26] |

| Winsen ZPHS01B | Gas, Temp, Humidity | CO2, Temp, RH | UART/I2C | Digital signal output | Environmental Layer | [31] |

| Genomic API | Digital API | SNPs, PRS, gene markers | REST API | Cloud analytics | Genetic Input Layer | [29,30,31] |
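The HRV metrics listed in the table (RMSSD and SDNN, computed on the edge in Python for the Raspberry Pi + MAX30102 setup) can be illustrated with a minimal sketch. It assumes the RR intervals have already been extracted from the PPG or ECG signal and are given in milliseconds. The function name `hrv_time_domain` and the sample values are hypothetical and are not taken from the cited implementation [28].

```python
import math


def hrv_time_domain(rr_ms):
    """Return (mean_hr_bpm, sdnn_ms, rmssd_ms) for RR intervals in milliseconds.

    Illustrative helper only; peak detection and artifact rejection
    are assumed to have been done upstream.
    """
    if len(rr_ms) < 3:
        raise ValueError("need at least three RR intervals")

    n = len(rr_ms)
    mean_rr = sum(rr_ms) / n

    # SDNN: sample standard deviation of the RR intervals.
    sdnn = math.sqrt(sum((rr - mean_rr) ** 2 for rr in rr_ms) / (n - 1))

    # RMSSD: root mean square of successive RR differences.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    rmssd = math.sqrt(sum(d * d for d in diffs) / len(diffs))

    # Mean heart rate in beats per minute (60,000 ms per minute).
    mean_hr = 60000.0 / mean_rr
    return mean_hr, sdnn, rmssd


# Hypothetical RR series, for illustration only.
mean_hr, sdnn, rmssd = hrv_time_domain([800, 810, 790, 800])
```

SDNN reflects overall variability across the window, whereas RMSSD emphasizes beat-to-beat changes. Both are cheap enough to compute on a low-power edge device before any data leave the sensor layer.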

| Model Name | Input Data | Base Architecture | Fine-Tuning Method | Target Disease | Reported Accuracy | Reference(s) |

|---|---|---|---|---|---|---|

| Heart Sense Transformer | ECG Image + IoT Sensor Data | Transformer | Custom ECG dataset fine-tuning | Heart Disease | 92.6% | [61] |

| ECG-DL13 | ECG Signal | CNN + Transfer Learning | Small ECG datasets | 13 Heart Conditions | 94.2% | [62] |

| EchoMed-CNN | Echocardiogram | CNN | Pretrained CNN layers fine-tuned | Endocarditis | 90.4% | [63] |

| Foundation ECG | Single-lead ECG | Transformer (Echo-FM) | Per clinical label fine-tuning | Multiple CVD conditions | 88.9% | [64] |

| Deep Ensemble ECG | ECG Image | Ensemble CNN models | 8 models fine-tuned on private ECG dataset | Arrhythmia and related CVD | 95.1% | [65] |

| IoT-ENN Hybrid | Wearable ECG sensor signals | Epistemic Neural Network | Optimized with Boosted Sooty Tern Algorithm | Cardiac Arrhythmias | 91.3% | [66] |

| Model or Topic | Application Area | Architecture | Notable Features | References |

|---|---|---|---|---|

| ChatGPT in Science and Healthcare | Clinical communication, research | Transformer (Decoder-only) | Conversational assistant, summarization | [67] |

| AI/ML in Pathology and Medicine | Pathology, diagnostics, education | General overview (including transformers) | Foundational concepts for generative/nongenerative AI | [68] |

| LLMs in Ophthalmology | Ophthalmology | BERT, GPT-4, PubMed-BERT, ClinicalBERT | Assessment in exams, clinical notes | [69] |

| Overview of Generative Models | NLP, vision, general AI | GANs, Autoencoders, Diffusion, Transformer | Technical evolution of generative AI | [70] |

| Generative AI in Healthcare | Clinical documentation, diagnostics | BioGPT, GatorTronGPT, ClinicalBERT | Multimodal applications, LLM integration | [71] |

| ICN Conference Papers | Image classification, EEG-based detection | CNN, SVM, Decision Trees | Use of medical datasets like HAM10000 | [72] |

| Multilingual LLMs with LoRA | Chatbot, smart cities | BLOOM-7B1 with LoRA + DeepSpeed | Synthetic dataset creation with prompting | [73] |

| Medical VQA with Generative Models | Visual Question Answering | Transformer-based generative models | Image-text integration in medical domain | [74,82] |

| Recent Advances in Medical LLMs | Summarization, clinical assistant | BERT, GPT-3, PaLM, LLaMA | Pretraining on MIMIC-III and other datasets | [75] |

| Clinical LLMs in Mental Health | Psychiatry, psychotherapy support | ChatGPT, BERT-based | Taxonomy of chatbot evaluation, AI-in-the-loop therapy | [83,84] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tasmurzayev, N.; Amangeldy, B.; Imanbek, B.; Baigarayeva, Z.; Imankulov, T.; Dikhanbayeva, G.; Amangeldi, I.; Sharipova, S. Digital Cardiovascular Twins, AI Agents, and Sensor Data: A Narrative Review from System Architecture to Proactive Heart Health. Sensors 2025, 25, 5272. https://doi.org/10.3390/s25175272