1. Introduction

Cardiovascular diseases (CVDs) are the leading cause of death globally, accounting for approximately 32% of all deaths worldwide. Their high prevalence, disability rate, and associated medical burden pose a significant challenge to public health systems [1,2]. Consequently, there has been growing emphasis in recent years on the early detection and diagnosis of CVDs. Among various diagnostic methods, physiological signals play a critical role, particularly the electrocardiogram (ECG) [3,4,5,6,7] and the phonocardiogram (PCG) [8,9,10]. These signals accurately reflect the state of the heart from complementary perspectives and can be acquired easily and non-invasively, making them well-suited for large-scale population screening and long-term monitoring [11].

Most studies on the early detection of CVDs rely on unimodal signal analysis, using either ECG or PCG data. These approaches can be broadly divided into traditional feature engineering combined with machine learning, and end-to-end deep learning methods. For example, Zhu et al. [12] extracted morphological features (e.g., amplitude and duration) from the P-QRS-T waveforms of ECG signals, applied Principal Component Analysis (PCA) for dimensionality reduction and Dynamic Time Warping (DTW) for temporal feature extraction, and fed the results into a Support Vector Machine (SVM) classifier. Kui et al. [13], focusing on PCG signals, employed an improved Duration-Dependent Hidden Markov Model (DD-HMM) to segment cardiac cycles and extracted Mel-Frequency Spectral Coefficient (MFSC) features, which were then classified using a Convolutional Neural Network (CNN). Deep learning methods, in contrast, have shifted toward end-to-end architectures. For instance, Kusuma et al. [14] proposed a hybrid Deep Convolutional Neural Network–Long Short-Term Memory (DCNN-LSTM) model for diagnosing Congestive Heart Failure (CHF), in which CNNs extract spatial features from ECG signals and LSTMs model their temporal dynamics. Alkhodari et al. [15] employed a Convolutional Neural Network–Bidirectional Long Short-Term Memory (CNN-BiLSTM) model to detect cardiovascular abnormalities and diagnose valvular diseases from PCG signals, exploiting the enhanced context modeling of the bidirectional LSTM.

Although these unimodal ECG- or PCG-based approaches have made notable progress in CVD detection, they are inherently limited by the different physiological origins of the signals: PCG reflects the mechanical activity of the heart, whereas ECG captures its electrical activity. As a result, single-modality analysis is often insufficient for a comprehensive evaluation of cardiac function, and multimodal fusion has emerged as a promising direction in recent research. For example, Li et al. [16] extracted features from both ECG and PCG signals using a traditional artificial neural network, applied a genetic algorithm to select optimal feature subsets, and employed an SVM classifier for final prediction. Zhu et al. [17] proposed DDR-Net, a dual-domain representation network that integrates multiscale low-level features from ECG and PCG signals through a dual-scale feature aggregation module, followed by SVM-RFECV-based feature selection and SVM classification, enabling efficient and accurate CVD detection. These studies indicate that multimodal fusion strategies can substantially enhance the comprehensiveness and robustness of CVD detection systems by providing a more holistic representation of cardiac function.

However, most existing multimodal studies rely on conventional artificial neural networks (ANNs), which have several limitations. Simple architectures consume less energy but often yield suboptimal performance, whereas more complex ANN models improve performance at the cost of significantly higher energy consumption. Furthermore, as data volumes and network scales continue to grow, ANN-based models demand substantial computational resources and power, which slows inference. This is a particular challenge for wearable and edge devices, where efficient real-time deployment is critical [18]. It is therefore important to explore methods that balance limited computational resources against acceptable model performance.

Based on the above analysis, this study proposes a CVD detection framework that leverages Spiking Neural Networks (SNNs) and combines signal-level and decision-level fusion of multimodal ECG and PCG signals. From a network perspective, SNNs can consume significantly less energy than conventional ANNs owing to their inherent sparsity and event-driven processing [19,20,21]. In terms of fusion strategy, this work adopts a hybrid approach that combines signal-level and decision-level fusion to balance detection performance and computational efficiency. Compared with conventional feature-level fusion, this approach substantially reduces computational overhead while maintaining classification accuracy. SNNs have already been explored in a preliminary way for CVD detection; for example, Rana et al. [22] combined an SNN with attention mechanisms to enhance feature extraction from ECG signals, exploiting both the efficiency of SNNs and the precision of attention modules to improve cardiac signal analysis. However, existing studies remain largely focused on unimodal signals, and SNN-based multimodal signal fusion for effective and efficient CVD detection still requires further investigation.
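To make the energy argument concrete, the sketch below uses a back-of-envelope estimate that is common in the SNN literature. It is not necessarily the estimate used in this study. Under this estimate, an ANN pays one multiply-accumulate (MAC) per synaptic operation. An SNN instead pays one cheaper accumulate (AC), and only when a spike arrives, so its cost scales with the number of time steps T and the firing rate. The per-operation energies (4.6 pJ per MAC and 0.9 pJ per AC, widely quoted 45 nm CMOS estimates) are assumptions, as are the operation count, T, and firing rate in the example.

```python
# Hedged back-of-envelope energy comparison between an ANN and an SNN.
# Assumed constants: 45 nm CMOS estimates commonly quoted in the SNN literature.
E_MAC = 4.6e-12  # joules per multiply-accumulate (dense, real-valued ANN operation)
E_AC = 0.9e-12   # joules per accumulate (SNN operation triggered by a spike)


def ann_energy(flops: float) -> float:
    """Every synaptic operation is a MAC and is executed for every input."""
    return flops * E_MAC


def snn_energy(flops: float, timesteps: int, firing_rate: float) -> float:
    """Synaptic operations scale with time steps and the fraction of neurons that spike."""
    synaptic_ops = flops * timesteps * firing_rate
    return synaptic_ops * E_AC


if __name__ == "__main__":
    flops = 1e9  # hypothetical operation count of one forward pass
    e_ann = ann_energy(flops)
    e_snn = snn_energy(flops, timesteps=4, firing_rate=0.15)
    print(f"ANN: {e_ann * 1e3:.2f} mJ, SNN: {e_snn * 1e3:.2f} mJ, ratio: {e_ann / e_snn:.1f}x")
```

With these hypothetical numbers, the SNN estimate is about 0.54 mJ versus 4.6 mJ for the ANN. The advantage shrinks as T or the firing rate grows, which is why firing rates are usually reported alongside energy figures.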

Specifically, this study first performs signal-level fusion of the ECG and PCG signals and then applies the Adaptive Superlets Transform (ASLT) to obtain high-resolution time–frequency spectrograms. The transformed signals are fed separately into SNN models for training. Finally, the outputs of the trained models are combined by decision-level fusion to classify and detect CVDs. The framework is designed to balance model energy consumption against classification performance, and it offers insights for the development of low-power, multimodal medical diagnostic systems.

2. Materials and Methods

2.1. Framework

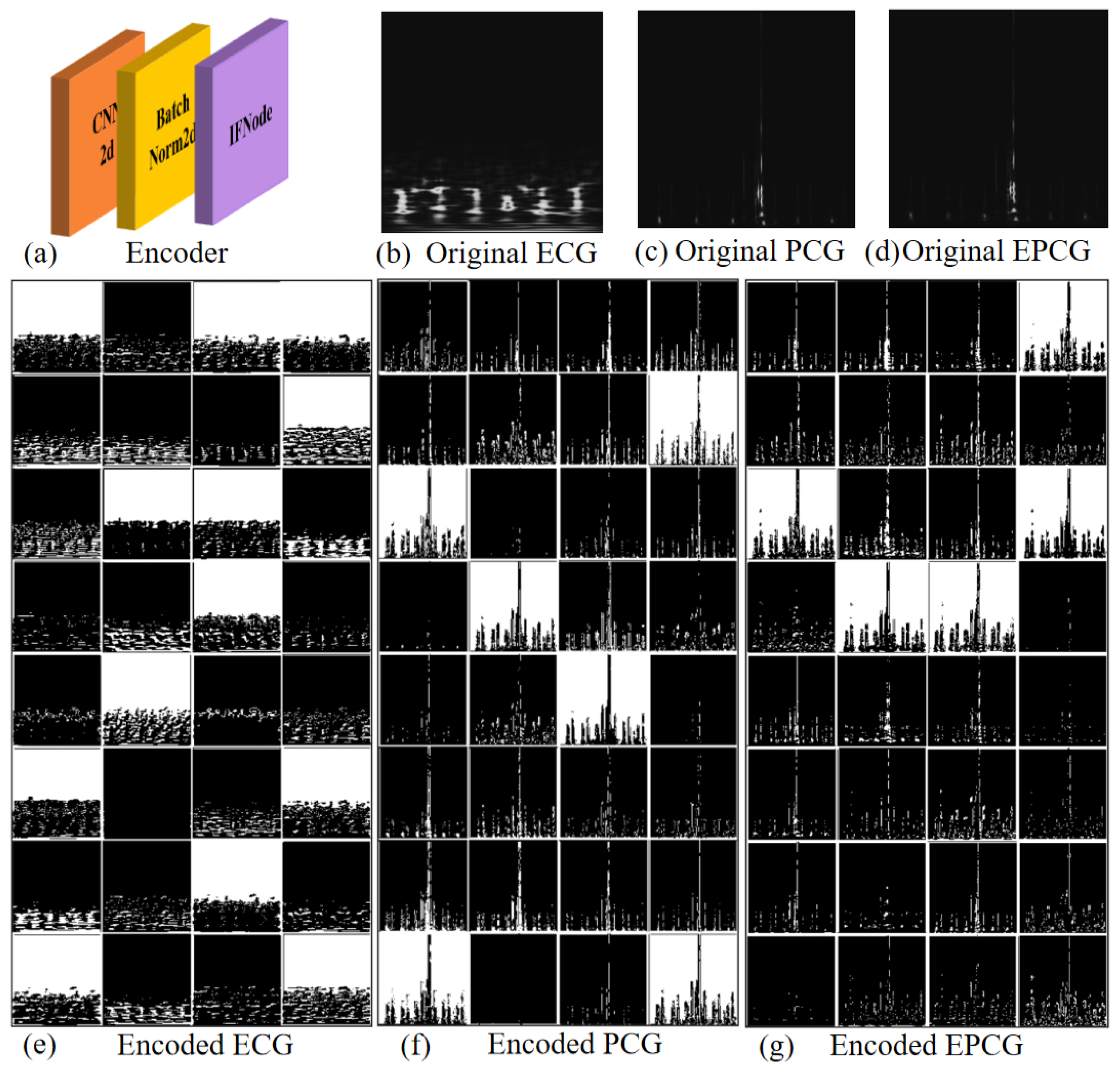

Figure 1 illustrates the architecture of the proposed model, which predicts CVDs by integrating original ECG signals and signal-level fused ECG-PCG signals (referred to as EPCG). The framework consists of four main stages: signal segmentation, time–frequency transformation, network training, and final decision-level fusion for classification. Specifically, after data segmentation and preprocessing, the ASLT is applied to convert the time-series signals into time–frequency spectrograms. These spectrograms, derived from the ECG and EPCG signals in the training set, are then used to independently train two Spiking Convolutional Neural Networks (SCNNs) using five-fold cross-validation. After training, the corresponding ECG and EPCG spectrograms from the test set are input into the two trained models for inference. Finally, a decision-level fusion module combines the outputs of the two models to produce the final classification result.

2.2. Datasets

The dataset used in this study is derived from the PhysioNet/CinC Challenge 2016 [23], which was contributed by multiple international institutions. Based on the source institutions, the dataset is divided into six subsets, labeled “training-a” to “training-f”. Among them, the “training-a” subset contains simultaneous ECG and PCG recordings collected from the same subjects. Both ECG and PCG signals are sampled at 2000 Hz.

Specifically, the “training-a” subset includes a total of 409 recordings, of which 405 contain both ECG and PCG signals. Of these 405 recordings, 117 are labeled as negative (normal controls). The remaining 288 are labeled as positive, corresponding to patients diagnosed with mitral valve prolapse (MVP), benign aortic disease (AD), or other miscellaneous pathological conditions (MPCs). Recording durations range from 9.27 s to 36.5 s. Because severe noise contamination made their classification unreliable, 17 recordings were excluded from further analysis [24], leaving 388 usable recordings.

To enable signal-level fusion of ECG and PCG, both signals had to be of equal length. We therefore selected the 382 of the remaining 388 recordings that contained more than 40,000 data points, which ensures sufficient signal duration without significantly reducing the dataset size. For each selected recording, we extracted a 36,000-point segment (18 s at 2000 Hz) from the 4000th to the 40,000th sample. This skips the unstable signal portions that typically occur at the beginning of data acquisition.
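The selection and cropping step can be sketched in a few lines of Python with NumPy. The function name is hypothetical, and each recording is assumed to be available as a synchronous pair of 1-D arrays. Loading the files from “training-a” is omitted, and the slice uses zero-based indices.

```python
import numpy as np

MIN_LEN = 40_000              # keep recordings with more than 40,000 samples
START, STOP = 4_000, 40_000   # zero-based slice: 36,000 points (18 s at 2000 Hz)


def select_and_crop(recordings):
    """Keep long-enough ECG/PCG pairs and crop both signals to the same window.

    `recordings` is assumed to be an iterable of (ecg, pcg) 1-D arrays acquired
    synchronously, so identical indices refer to the same instant.
    """
    kept = []
    for ecg, pcg in recordings:
        ecg, pcg = np.asarray(ecg), np.asarray(pcg)
        if min(len(ecg), len(pcg)) > MIN_LEN:
            kept.append((ecg[START:STOP], pcg[START:STOP]))
    return kept


# Toy usage: a 21 s pair is kept, a 15 s pair is dropped.
pairs = [(np.zeros(42_000), np.zeros(42_000)), (np.zeros(30_000), np.zeros(30_000))]
kept = select_and_crop(pairs)
print(len(kept), kept[0][0].shape)
```

Cropping ECG and PCG with the same indices keeps them aligned sample by sample, which the point-wise fusion step relies on.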

Finally, to address the limited number of samples and the class imbalance, we applied a data augmentation strategy [25]. Specifically, each signal was segmented into 6-second windows using a sliding window. A step size of 2 s was used for normal samples and 6 s for abnormal samples, so that overlapping windows yield more samples from the smaller normal class. The 6-second duration was chosen for three reasons: it includes multiple complete cardiac cycles, it divides each recording exactly into whole windows, and it generates a sufficient number of samples for model training. This process resulted in a total of 1554 samples, as summarized in Table 1.
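The class-dependent sliding window can be sketched as follows, assuming the 36,000-sample segments described above and a 2000 Hz sampling rate. The function and dictionary names are hypothetical.

```python
import numpy as np

FS = 2000                    # sampling rate (Hz)
WIN = 6 * FS                 # 6 s window = 12,000 samples
STEP = {
    "normal": 2 * FS,        # 2 s step: overlapping windows for the smaller class
    "abnormal": 6 * FS,      # 6 s step: non-overlapping windows
}


def sliding_windows(signal, label):
    """Cut a 1-D signal into 6 s windows using the step assigned to its class."""
    step = STEP[label]
    starts = range(0, len(signal) - WIN + 1, step)
    return np.stack([signal[s:s + WIN] for s in starts])


segment = np.zeros(36_000)   # one cropped 18 s recording
print(sliding_windows(segment, "normal").shape)
print(sliding_windows(segment, "abnormal").shape)
```

Under these assumptions, an 18 s recording yields 7 windows if normal and 3 if abnormal. In a paired setting, the same window start positions would be applied to the ECG and EPCG versions of a recording so that the two models see aligned inputs.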

2.3. Adaptive Superlets Transform (ASLT)

Adaptive Superlets Transform (ASLT) [26] enhances time–frequency resolution by adjusting the number of wavelets with frequency, achieving an adaptive trade-off between time and frequency localization. Traditional methods use a single fixed wavelet per frequency, whereas ASLT builds on the idea of adaptive time–frequency super-resolution. An Adaptive Superlet (ASL) combines a set of wavelets that share the same center frequency but span increasing numbers of cycles, and the number of wavelets is adjusted according to the frequency. Its general form is given by

$$\mathrm{ASL}_{f} = \left\{ \psi_{f,c} \mid c = c_1, c_2, \ldots, c_{a(f)} \right\}$$

where $\psi_{f,c}$ is a wavelet with center frequency $f$ and $c$ cycles, and $a(f)$ is a function of the frequency $f$ that determines the number of wavelets used at each frequency. In practical applications, this function is typically defined as

$$a(f) = o_{\min} + \left[ \left( o_{\max} - o_{\min} \right) \frac{f - f_{\min}}{f_{\max} - f_{\min}} \right]$$

where $[\cdot]$ denotes rounding to the nearest integer. Here $o_{\min}$ and $o_{\max}$ represent the orders corresponding to the lowest and highest center frequencies, respectively, while $f_{\min}$ and $f_{\max}$ define the lower and upper bounds of the frequency range under analysis.
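The adaptive order is a linear map from frequency to order, rounded to an integer. The NumPy sketch below assumes that linear form, with order o_min at f_min rising to o_max at f_max. It also shows the continuous variant that fractional superlets use. The frequency band and orders in the usage lines are hypothetical, since this section does not state the values used in the study.

```python
import numpy as np


def adaptive_order(f, f_min, f_max, o_min, o_max, fractional=False):
    """Superlet order a(f): linear in f, from o_min at f_min to o_max at f_max.

    With fractional=False the order is rounded to the nearest integer, which
    produces step-like "banding" across frequency; fractional=True keeps the
    continuous value used by fractional superlets.
    """
    f = np.asarray(f, dtype=float)
    order = o_min + (o_max - o_min) * (f - f_min) / (f_max - f_min)
    return order if fractional else np.rint(order).astype(int)


freqs = np.linspace(10, 200, 5)   # hypothetical analysis band (Hz)
print(adaptive_order(freqs, 10, 200, 1, 30))
print(adaptive_order(freqs, 10, 200, 1, 30, fractional=True))
```

The rounded version changes in whole-wavelet jumps between neighboring frequencies. These jumps cause the banding that the fractional formulation removes.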

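To address the "banding effect" caused by discrete jumps in the wavelet order, fractional superlets introduce a weighted geometric mean that allows the order to vary continuously. Writing the unrounded order as $o = a(f) = n + \alpha$, with $n = \lfloor o \rfloor$ and $0 \le \alpha < 1$, the response is computed as follows:

$$R\left[\mathrm{ASL}_{f}\right] = \left( R\left[\psi_{f,c_{n+1}}\right]^{\alpha} \prod_{i=1}^{n} R\left[\psi_{f,c_i}\right] \right)^{1/o}$$

where $R\left[\psi_{f,c_i}\right]$ denotes the response of a single wavelet, which is computed from the signal $x$ via complex convolution:

$$R\left[\psi_{f,c}\right] = \sqrt{2}\,\left| x * \psi_{f,c} \right|$$

This enhancement enables the Fractional Adaptive Superlet Transform (FASLT) to provide a smooth representation across the entire frequency domain.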
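A minimal NumPy sketch of the fractional superlet response at a single frequency follows. For an order o = n + α, the first n wavelet responses enter a geometric mean with weight 1 and wavelet n + 1 enters with weight α. Several choices follow the original superlet work by Moca et al. and are assumptions, not settings confirmed for this study: complex Morlet wavelets, multiplicative cycle counts c_i = i · c1 with c1 = 3, and an envelope width parameter K_SD = 5. A full ASLT would repeat this computation for every analysis frequency f with order a(f).

```python
import numpy as np

K_SD = 5.0  # assumption: envelope SD of a c-cycle wavelet at f is c / (K_SD * f)


def morlet(f, cycles, fs):
    """Complex Morlet wavelet with `cycles` cycles at centre frequency f (Hz)."""
    sd = cycles / (K_SD * f)                          # envelope SD in seconds
    t = np.arange(-3 * sd, 3 * sd + 1 / fs, 1 / fs)   # truncate at +/- 3 SD
    envelope = np.exp(-t**2 / (2 * sd**2)) / (sd * np.sqrt(2 * np.pi))
    return envelope * np.exp(2j * np.pi * f * t)


def wavelet_response(x, f, cycles, fs):
    """R[psi_{f,c}] = sqrt(2) * |x * psi_{f,c}|, via complex convolution."""
    psi = morlet(f, cycles, fs) / fs                  # 1/fs approximates the integral
    if len(psi) > len(x):
        raise ValueError("signal is shorter than the wavelet support")
    return np.sqrt(2) * np.abs(np.convolve(x, psi, mode="same"))


def fractional_superlet(x, f, order, fs, c1=3):
    """Weighted geometric mean over wavelets with c1, 2*c1, ... cycles.

    For order = n + alpha, wavelets 1..n get weight 1 and wavelet n+1 gets
    weight alpha, so the response changes smoothly as the order varies.
    """
    n = int(np.floor(order))
    alpha = order - n
    log_sum = np.zeros(len(x))
    for i in range(1, n + 1):
        log_sum += np.log(wavelet_response(x, f, c1 * i, fs) + 1e-12)
    if alpha > 0:
        log_sum += alpha * np.log(wavelet_response(x, f, c1 * (n + 1), fs) + 1e-12)
    return np.exp(log_sum / order)


fs = 2000
t = np.arange(0, 1, 1 / fs)
x = np.cos(2 * np.pi * 50 * t)                        # 1 s test tone at 50 Hz
on = fractional_superlet(x, 50, 2.5, fs)[len(x) // 2]
off = fractional_superlet(x, 200, 2.5, fs)[len(x) // 2]
print(f"response at 50 Hz: {on:.3f}, at 200 Hz: {off:.3g}")
```

With this normalization, a unit-amplitude tone produces a response close to √2/2 at its own frequency. At distant frequencies the response is small, because the many-cycle wavelets in the geometric mean are narrow in frequency.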

A key advantage of ASLT is its ability to maintain an approximately constant absolute bandwidth. Because the wavelet order is coupled to frequency, ASLT achieves consistent resolution across a wide frequency band, whereas a standard wavelet with a fixed number of cycles has a bandwidth that grows with frequency. Compared with traditional methods such as the Short-Time Fourier Transform (STFT) and the Continuous Wavelet Transform (CWT), ASLT therefore offers superior resolution, particularly for complex, non-stationary time–frequency data.

2.4. Spiking Convolutional Neural Network (SCNN)

The network architecture used in this study is illustrated in

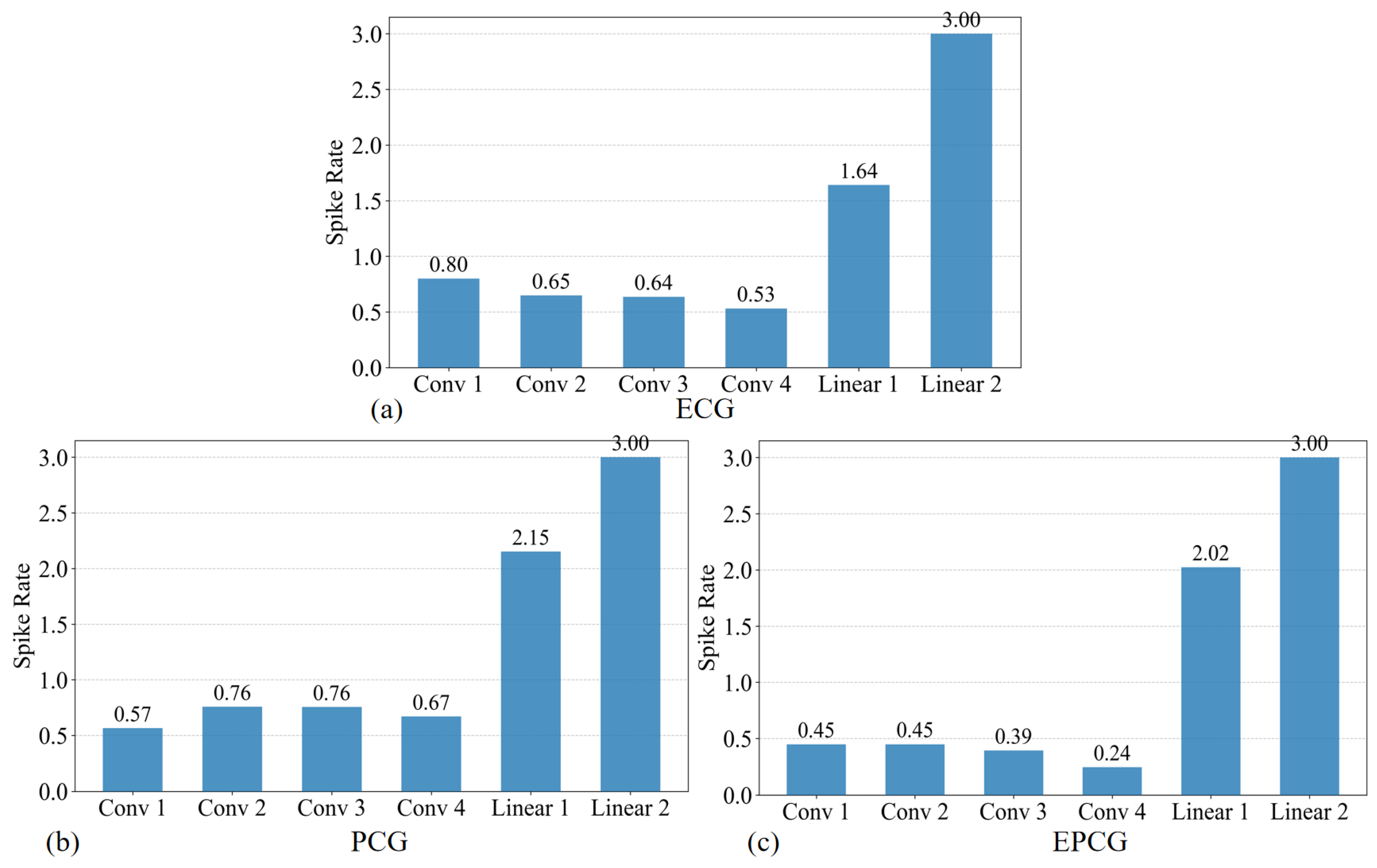

Figure 2. It is a spatiotemporal feature extraction model based on SNN, with the core innovation of converting the continuous-valued operations in conventional ANN into discrete spike-based computations. This event-driven paradigm enables highly efficient inference.

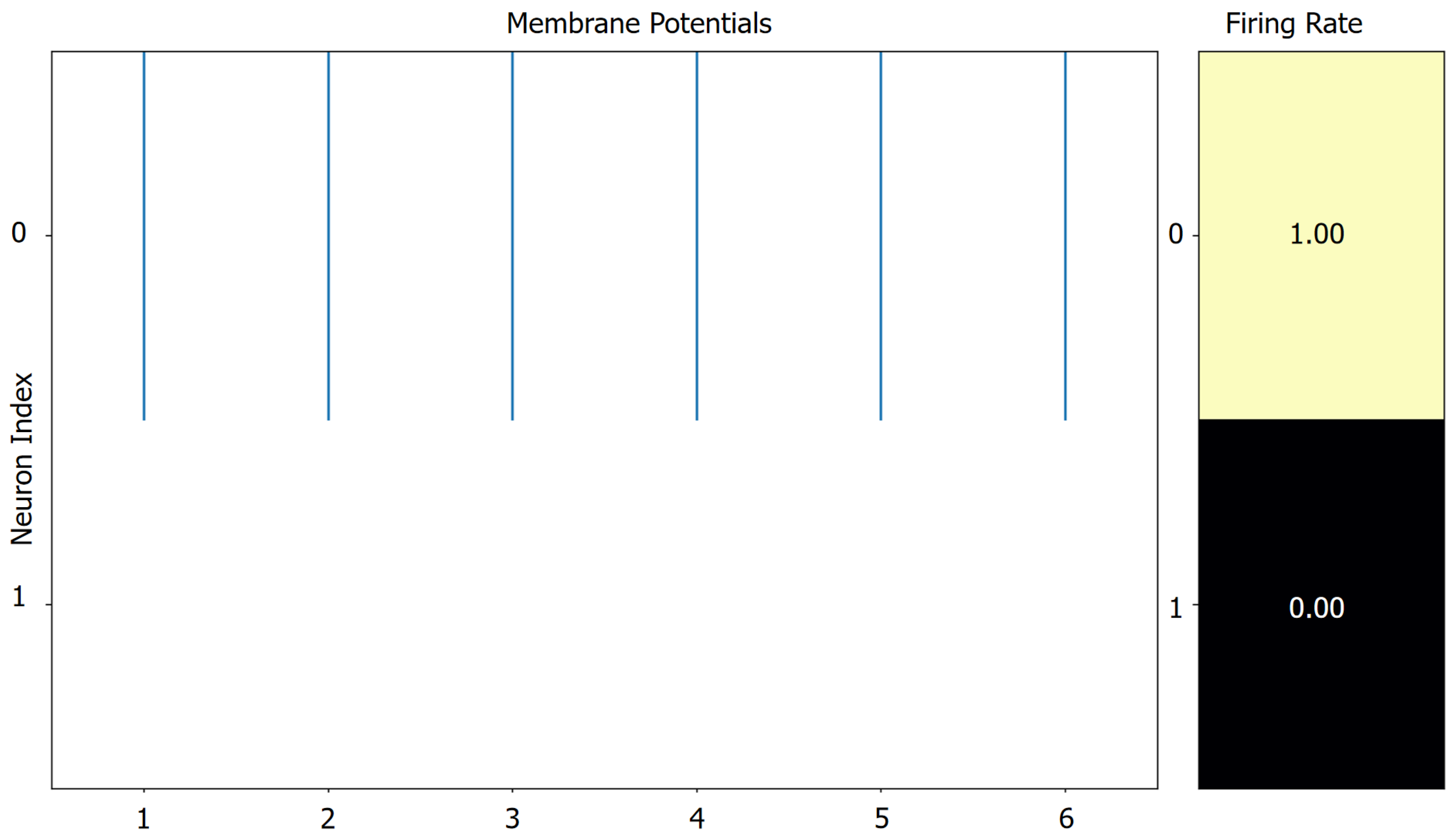

Specifically, the model consists of four sequential Convolutional Spiking Blocks, followed by two fully connected spiking layers that form the classifier. The input is repeated T times along a new temporal dimension so that the network processes it over T time steps. The resulting output spike sequences are then aggregated by temporal mean pooling to produce the final classification output.

The spiking units in the network are implemented as Integrate-and-Fire (IF) neurons. Because spike generation is non-differentiable, its gradient is approximated during backpropagation using the arctangent function (ATan) as a surrogate function [27]. This combination maintains biological plausibility while enabling effective gradient backpropagation. The subthreshold neural dynamics of the Integrate-and-Fire Node (IFNode) are defined by the following equation:

$$\frac{\mathrm{d}V(t)}{\mathrm{d}t} = X(t)$$

From the perspective of discrete modeling, the subthreshold membrane dynamics of the IF neuron can be expressed as

$$V[t] = V[t-1] + X[t]$$

where $V[t]$ denotes the membrane potential of the neuron at time step $t$, $V[t-1]$ represents the membrane potential at the previous time step $t-1$, and $X[t]$ corresponds to the external input to the neuron at time step $t$. When $V[t]$ reaches the firing threshold, the neuron emits a spike and its membrane potential is reset.
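The discrete IF dynamics, V[t] = V[t-1] + X[t], can be illustrated with a small NumPy simulation. The same simulation also shows the repeated input and temporal mean pooling of the SCNN. The firing threshold of 1.0 and the hard reset to 0 are assumptions that match common SNN library defaults, since this section does not specify them.

```python
import numpy as np


def if_neuron(inputs, v_threshold=1.0, v_reset=0.0):
    """Simulate a layer of IF neurons; `inputs` has shape (T, *neurons)."""
    v = np.full(inputs.shape[1:], v_reset, dtype=float)
    spikes = np.zeros(inputs.shape, dtype=float)
    for t in range(inputs.shape[0]):
        v = v + inputs[t]                   # charge: V[t] = V[t-1] + X[t]
        fired = v >= v_threshold            # fire when the threshold is reached
        spikes[t] = fired
        v = np.where(fired, v_reset, v)     # hard reset of the neurons that fired
    return spikes


T = 8
x = np.array([0.3, 0.6, 1.2])               # constant input currents
inputs = np.repeat(x[None, :], T, axis=0)   # the same input at every time step
spikes = if_neuron(inputs)
firing_rate = spikes.mean(axis=0)           # temporal mean over the T steps
print(firing_rate)
```

Larger inputs reach the threshold sooner and therefore fire more often. Averaging over T converts the binary spike trains into graded values that can be compared across output neurons.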

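The ATan surrogate function can be defined as follows:

$$g(x) = \frac{1}{\pi} \arctan\left( \frac{\pi}{2} \alpha x \right) + \frac{1}{2}$$

where $x$ denotes the input to the spike function (the membrane potential relative to the firing threshold), and $\alpha$ is a tunable scaling factor that regulates the slope or smoothness of the surrogate function. During backpropagation, the derivative of the spike function is replaced by

$$g'(x) = \frac{\alpha}{2\left[1 + \left( \frac{\pi}{2} \alpha x \right)^{2}\right]}$$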
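Surrogate training uses the exact Heaviside step in the forward pass. In the backward pass, it replaces the step's derivative with that of g(x) = (1/π)·arctan(π α x / 2) + 1/2. The NumPy sketch below writes both out explicitly; in practice a deep learning framework wires them into automatic differentiation. Taking x as the membrane potential minus the threshold and α = 2 as a default are assumptions.

```python
import numpy as np


def heaviside_spike(x):
    """Forward pass: emit a spike (1) when x = V - V_th is non-negative."""
    return (x >= 0).astype(float)


def atan_surrogate(x, alpha=2.0):
    """Smooth stand-in g(x) for the step function."""
    return np.arctan(np.pi / 2 * alpha * x) / np.pi + 0.5


def atan_surrogate_grad(x, alpha=2.0):
    """Backward pass: dg/dx = alpha / (2 * (1 + (pi/2 * alpha * x)^2))."""
    return alpha / (2 * (1 + (np.pi / 2 * alpha * x) ** 2))


x = np.linspace(-1.0, 1.0, 5)
print(heaviside_spike(x))       # hard spikes used in the forward pass
print(atan_surrogate_grad(x))   # gradient peaks at x = 0 and decays with |x|
```

Increasing α makes g closer to the step function and concentrates the gradient more tightly around the threshold.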

In the four convolutional blocks, the first convolutional layer is a 7 × 7 two-dimensional convolution that expands the number of channels from 1 to 32 and uses a stride of 2 for initial spatial downsampling. The subsequent three convolutional layers progressively increase the number of channels to 2×, 4×, and 8× the base width of 32 (i.e., 64, 128, and 256 channels), respectively. Each of these layers uses a 3 × 3 kernel with one pixel of padding to preserve the spatial resolution of the feature maps. Each convolutional layer is followed by a batch normalization layer, an IFNode, and a max-pooling layer. These respectively stabilize training, emulate the nonlinear spiking behavior of biological neurons, and compress the spatial dimensions while suppressing local noise.

After the convolutional stage, the output feature maps are flattened into a one-dimensional vector and fed into the fully connected layers. The first fully connected layer maps the feature dimension from channels × 8 × 7 × 7 to channels × 4 × 4 (12,544 to 512 for channels = 32), followed by an IFNode activation. The second fully connected layer produces a two-dimensional output representing the final classification decision and is likewise paired with an IFNode to maintain the temporal spiking structure of the model.
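The flattened size channels × 8 × 7 × 7 can be checked with simple shape arithmetic. The sketch makes three assumptions that the text does not state: a 224 × 224 single-channel spectrogram, a padding of 3 for the first 7 × 7 convolution, and 2 × 2 max-pooling with stride 2 after every block. Together, these assumptions reproduce the 7 × 7 spatial size given in the text.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length of a convolution or pooling along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def scnn_shapes(height=224, width=224, channels=32):
    """Trace feature-map shapes through the four convolutional spiking blocks."""
    blocks = [  # (out_channels, kernel, stride, padding); paddings are assumptions
        (channels, 7, 2, 3),
        (channels * 2, 3, 1, 1),
        (channels * 4, 3, 1, 1),
        (channels * 8, 3, 1, 1),
    ]
    c, h, w = 1, height, width
    for out_c, k, s, p in blocks:
        h, w = conv_out(h, k, s, p), conv_out(w, k, s, p)   # convolution
        h, w = conv_out(h, 2, 2), conv_out(w, 2, 2)         # 2x2 max-pool, stride 2
        c = out_c
    flat = c * h * w              # input size of the first fully connected layer
    hidden = channels * 4 * 4     # its output size (channels x 4 x 4)
    return (c, h, w), flat, hidden


print(scnn_shapes())  # ((256, 7, 7), 12544, 512)
```

A different input resolution would change the 7 × 7 term and, with it, the input size of the first fully connected layer.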

2.5. Fusion Method

2.5.1. Signal-Level Fusion

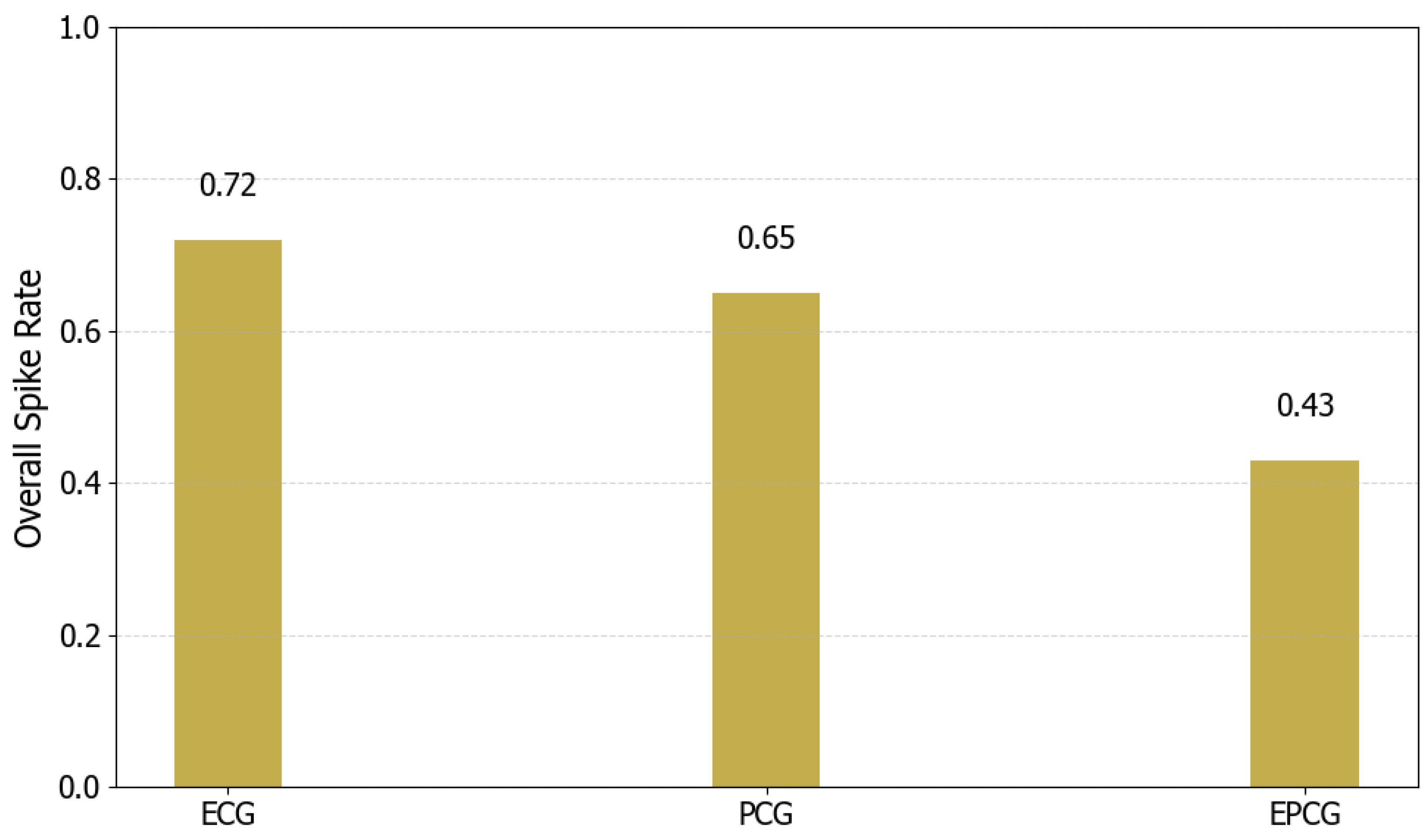

This study proposes a signal-level differential fusion method for multimodal physiological signals as a simple approach to multi-source information integration. Specifically, a fused signal termed EPCG is constructed by point-wise subtraction of synchronously acquired ECG and PCG signals. Because the ECG and PCG signals are strongly time-synchronized, any noise that appears identically in both channels cancels in the difference. The differential operation can therefore effectively suppress background noise shared by the two signals. This follows the common-mode noise suppression principle of classical signal processing, in which differential circuits or differential operations are key means of improving the common-mode rejection ratio (CMRR). Furthermore, the differential operation requires only one subtraction per sample, making it a low-complexity operation. Considering both computational load and noise suppression, we therefore adopt this differential fusion to reduce background noise in the fused EPCG signal.
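Point-wise differencing and its common-mode rejection can be demonstrated on synthetic data. The sketch assumes the two channels are already synchronous, of equal length, and on a comparable amplitude scale; this section does not say whether any rescaling precedes the subtraction. The toy signals and noise are hypothetical stand-ins, not real ECG or PCG.

```python
import numpy as np


def differential_fusion(ecg, pcg):
    """EPCG[n] = ECG[n] - PCG[n] for synchronously sampled, equal-length signals."""
    ecg, pcg = np.asarray(ecg, dtype=float), np.asarray(pcg, dtype=float)
    if ecg.shape != pcg.shape:
        raise ValueError("ECG and PCG must have the same length")
    return ecg - pcg


rng = np.random.default_rng(0)
fs = 2000
t = np.arange(0, 2, 1 / fs)
ecg_clean = np.sin(2 * np.pi * 1.2 * t)             # toy stand-in for ECG
pcg_clean = 0.5 * np.sin(2 * np.pi * 40 * t)        # toy stand-in for PCG
common = 0.3 * rng.standard_normal(t.size)          # noise shared by both channels

epcg = differential_fusion(ecg_clean + common, pcg_clean + common)
print(np.max(np.abs(epcg - (ecg_clean - pcg_clean))))  # common-mode noise cancels
```

Only the shared component cancels. Noise that differs between the channels, such as PCG-specific artifacts, passes into EPCG unchanged, which is the caveat raised in the following paragraph.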

It is worth noting that, although PCG signals hold significant clinical value for cardiac disease detection, their raw forms are generally more susceptible to noise interference and inter-individual variability, which limits their diagnostic performance in real-world applications. In contrast, ECG signals show greater stability and robustness in both temporal and morphological characteristics. Consequently, relative to the ECG signal alone, the differential processing may introduce unstable information from the PCG and thus potentially degrade the performance of the fused result. Relative to the PCG signal alone, however, the differential processing both suppresses certain non-stationary noise components in the PCG and incorporates temporal features from the ECG. As a result, the constructed EPCG signal shows higher sensitivity and discriminative capability in pathological recognition tasks than the original PCG.

This method provides an effective multimodal signal fusion mechanism without significantly increasing computational complexity. It also lays the foundation for the subsequent decision-level fusion, because it prevents large discrepancies between the two input signals from adversely affecting the final fusion outcome.

2.5.2. Decision-Level Fusion

In terms of decision-level fusion, this study designs and implements a confidence-based dynamic decision (CDD) fusion strategy to integrate the classification outputs from two signal-specific models. Specifically, the strategy prioritizes the ECG-based model (Model 1), which demonstrates greater stability and robustness, and determines whether to directly adopt its prediction or incorporate the EPCG-based model (Model 2) for corrective fusion based on prediction confidence.

The strategy begins by calculating the prediction confidence of Model 1 for each sample, defined as the maximum probability from its softmax output. If this confidence exceeds a predefined threshold, the prediction from Model 1 is accepted directly, which enhances fusion efficiency and avoids unnecessary computation. When Model 1 shows insufficient confidence in its prediction, Model 2 is introduced to assist. In such cases, a normalized weighted average of the predicted probabilities from both models is computed to form a fused result, thereby improving classification robustness for ambiguous or challenging samples. The final predicted class is determined by the category with the highest value in the weighted probability distribution.

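In this way, the strategy dynamically exploits the complementary strengths of the two models. The more stable ECG model decides on its own when it is confident, and the EPCG model contributes only for uncertain samples. The structure is simple and can improve the robustness of the overall system.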
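The confidence-based dynamic decision rule for two models can be sketched as follows. The threshold of 0.9 and the equal fusion weights are hypothetical placeholders, since this section does not give the values used. Both models are assumed to output one logit per class.

```python
import numpy as np


def softmax(logits):
    z = logits - logits.max(axis=1, keepdims=True)    # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def cdd_fusion(logits_ecg, logits_epcg, threshold=0.9, w_ecg=0.5, w_epcg=0.5):
    """Confidence-based dynamic decision fusion of Model 1 (ECG) and Model 2 (EPCG).

    Returns the predicted classes and a mask of the samples that needed Model 2.
    """
    p_ecg = softmax(np.asarray(logits_ecg, dtype=float))
    fused = p_ecg.copy()
    uncertain = p_ecg.max(axis=1) <= threshold         # Model 1 not confident enough
    if uncertain.any():
        # Only uncertain samples use Model 2; in deployment it could run on these alone.
        p_epcg = softmax(np.asarray(logits_epcg, dtype=float)[uncertain])
        fused[uncertain] = (w_ecg * p_ecg[uncertain] + w_epcg * p_epcg) / (w_ecg + w_epcg)
    return fused.argmax(axis=1), uncertain


pred, used_model2 = cdd_fusion([[4.0, 0.0], [0.1, 0.0]], [[0.0, 4.0], [0.0, 3.0]])
print(pred, used_model2)   # predictions and which samples needed Model 2
```

In the example, the first sample is confidently classified by Model 1 and kept as is. The second sample is nearly 50/50 under Model 1, so the fused probabilities are dominated by Model 2's confident vote. Because the uncertainty test uses `<=`, Model 1's prediction is accepted only when its confidence strictly exceeds the threshold.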

5. Conclusions

In this study, a multimodal CVD detection framework based on SNNs is proposed, which integrates ECG and PCG signals through collaborative modeling at both the signal and decision levels. A differential mechanism fuses the ECG and PCG signals at the signal level to generate a composite EPCG signal. The ASLT is then applied to extract high-resolution time–frequency representations, enabling fine-grained characterization of both temporal and spectral features. During model training, two SCNNs are trained separately on the ECG and EPCG inputs. To enhance overall classification robustness, a CDD fusion strategy is introduced at the decision level. Experiments on the “training-a” subset of the PhysioNet/CinC Challenge 2016 dataset demonstrate that the proposed framework achieves better performance and stability than single-modality detection methods. Further analysis shows that both the convolutional depth of the model and the type of input image (grayscale vs. RGB) influence classification outcomes, supporting the rationale behind the proposed model design. Moreover, a systematic evaluation of spike firing rates and energy consumption shows that the proposed method maintains competitive classification accuracy while significantly reducing energy usage, underscoring its potential for deployment in low-power medical diagnostic applications.

In future work, we aim to further optimize the model architecture and fusion strategy, focusing on the optimal trade-off between energy efficiency and detection performance. In addition, because only the “training-a” subset was used, the generalizability and reliability of this study are limited. We therefore plan to evaluate the framework on additional datasets to further strengthen the robustness and persuasiveness of our findings.