Abstract

Reconstruction-based unsupervised detection methods have shown strong generalization in tablet anomaly detection, but they still suffer from poor reconstruction quality and inaccurate localization of anomalous regions. To address these problems, this paper proposes an unsupervised Diffusion-based Tablet Defect Detection (DTDD) method. The method uses an Assisted Reconstruction (AR) network that feeds information from the original image into the reconstruction of anomalous regions, which improves the reconstruction quality of the diffusion model. It also uses a Scale Fusion (SF) network and an improved anomaly measure to localize anomalous regions more accurately. The method is evaluated on a tablet dataset. The experimental results show that it outperforms comparable methods, improving both detection accuracy and anomaly localization accuracy on the tablet defect detection task.

1. Introduction

Drug quality bears directly on the health and safety of patients and on the reputation of pharmaceutical companies. In recent years, drug safety incidents [1,2,3] have occurred frequently, and their number continues to rise. Surface defects [4,5] are an important class of these problems: they are a key quality issue that must be detected during production and the most visible sign that product quality has been compromised. Surface defect detection is therefore of great significance for ensuring drug quality, improving drug safety, and reducing the frequency of incidents [6,7,8].

Tablets are the most common dosage form of medicine. Tablet defect detection generally targets scratches, breakage, foreign-matter occlusion, color contamination, holes, and other defects on the tablet surface [9]. Manual visual inspection is inefficient and highly subjective, and it struggles to meet the accuracy and real-time requirements of high-speed production lines. Traditional image processing recognizes anomalies through low-dimensional feature extraction and hand-designed rules, but it generalizes poorly to defects with complex textures and is easily affected by illumination changes and background interference. In recent years, deep learning [10,11,12,13] has significantly improved the accuracy and robustness of tablet defect detection through its powerful feature learning ability and has gradually become the mainstream approach. Supervised methods, represented by Siamese networks [14], Fast R-CNN [15], and YOLO [16], have demonstrated high accuracy and good adaptability. TT Mac et al. [17] combined pill image processing with an improved VGG16 convolutional neural network trained with the Adam optimizer to achieve high accuracy in defect detection and classification. Xue Q et al. [18] applied YOLOv5 with an attention mechanism and multi-scale feature fusion to drug inspection, providing a high-precision, one-stage, real-time defect detection system. S Kim et al. [19] proposed a pipeline consisting of a pill detection module and an autoencoder-based defect detection module to detect defective pills in pill packaging. However, supervised methods require large amounts of annotated defect data, which makes them ill-suited to tablet production, where defect samples are scarce and defect forms are diverse. 
Unsupervised methods, represented by DRAEM [20], PatchCore [21], and LDM [22], are better suited to this setting, and reconstruction-based methods are a major research focus among them [23]. The core idea of reconstruction-based methods is that the model learns the feature distribution of normal images only during training; at test time, the trained model reconstructs an anomalous image as a normal one, and the anomaly is located by comparing the reconstruction with the input image. Autoencoders [24], variational autoencoders [25], and generative adversarial networks [26] all belong to this category. In recent years, diffusion models, which have shown outstanding generative performance, have been widely studied and applied.

In 2015, Jascha Sohl-Dickstein et al. [27] proposed a diffusion model based on principles from non-equilibrium thermodynamics. The idea is to systematically and slowly destroy the structure of the data distribution through an iterative forward diffusion process, turning it into a simple, tractable distribution, and then to learn a reverse diffusion process that restores the structure, yielding a highly flexible and tractable generative model. In 2020, Ho J et al. [28] proposed the denoising diffusion probabilistic model (DDPM) for image generation, which has since been widely used. In 2021, Robin Rombach et al. proposed the latent diffusion model (LDM) [22], which greatly reduces computational cost and achieves better results by running the diffusion process in a latent representation space. J. Wang et al. [29] proposed a defect detection model based on a classifier-guided conditional diffusion model that detects defects and accurately identifies defective regions; Hang Yao et al. [30] proposed a global and local adaptive diffusion model that achieves anomaly-free reconstruction while preserving as much normal information as possible. He H et al. [31] proposed a diffusion-based architecture for multi-class anomaly detection. Zhang X et al. [32] proposed RealNet, a feature reconstruction network with realistic synthetic anomalies and adaptive feature selection, which synthesizes realistic and diverse anomaly samples. In addition, to address the limitations of feature extraction in anomaly detection, Z He et al. [33] introduced a cross-modal recurrent enhancement module and a frequency-aware alternating fusion module. These modules suppress interference from low-quality depth maps and facilitate the aggregation of cross-modal features. 
By overcoming the similarity between foreground and background in the frequency domain, this approach enables robust cross-scale fusion of the integrated features. S Woo et al. [34] proposed the CBAM attention mechanism, which infers attention maps along two independent dimensions, channel and spatial, and is widely used to improve the representational ability of networks. J Chen et al. [35] proposed a bidirectional cross-scale feature fusion network with a direct information connection layer and a shallow information fusion layer to address the difficulty and low accuracy of small-object detection. Although these methods have achieved good results, they still face several challenges in tablet defect detection: (1) Small defects such as tiny scratches and pits on the tablet surface occupy very few pixels and carry extremely weak semantic information. Existing algorithms struggle to capture their features accurately, which leads to large localization errors and makes it hard to design an adaptive loss function to optimize detection. (2) Tablet defect morphology varies greatly, and feature fusion networks struggle to balance feature extraction across scales: shallow networks cannot fully capture the details of small defects, while deep networks tend to lose the overall structural information of large defects, which limits detection accuracy. (3) Some tablets have complex backgrounds and textures around defects, which degrades the reconstruction quality of existing algorithms. To address these issues, this paper proposes DTDD, an unsupervised tablet defect detection method based on a diffusion model.

The contributions of this paper are as follows:

(1) An Assisted Reconstruction (AR) network is proposed to improve image reconstruction quality. The network injects semantic information from the original image into the denoising network of the diffusion model, keeping the semantics consistent and helping the diffusion model reconstruct anomalous images correctly.

(2) A semantically enhanced lightweight attention (SELA) module is designed to strengthen the semantic expression and detail perception of target features, improving the AR network's ability to understand global semantic information and local detail textures.

(3) A Scale Fusion (SF) network is proposed to localize anomalous regions accurately. It extracts features at different scales hierarchically through explicit task division, performs anomaly measurement and adaptive weighted fusion on the features of each layer, avoids the computational overhead of cross-layer fusion, and lets the model account for both fine details and global information.

2. Background

2.1. Denoising Diffusion Probabilistic Model

DDPM models data generation as a reverse Markov chain with two stages: noise addition (the forward process) and data generation (the reverse process). In the forward diffusion process, the model starts from the data and gradually adds Gaussian noise until the signal is completely drowned out. Let $x_0$ be the original data with density $q(x_0)$, $t$ the diffusion time step, $\beta_t$ the preset variance schedule that determines how much noise is added at each step, $I$ the identity matrix of the same dimension as the input image $x_0$, and $\epsilon \sim \mathcal{N}(0, I)$ noise sampled from the standard Gaussian distribution. Defining $\alpha_t = 1 - \beta_t$ and $\bar{\alpha}_t = \prod_{s=1}^{t} \alpha_s$, the entire forward process can be expressed as follows:

$$q(x_t \mid x_{t-1}) = \mathcal{N}\big(x_t; \sqrt{1-\beta_t}\, x_{t-1}, \beta_t I\big), \qquad q(x_{1:T} \mid x_0) = \prod_{t=1}^{T} q(x_t \mid x_{t-1})$$

$$x_t = \sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1-\bar{\alpha}_t}\, \epsilon$$
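The closed-form forward step can be checked numerically. The sketch below is a minimal pure-Python illustration, assuming the linear schedule from $\beta_1 = 10^{-4}$ to $\beta_T = 0.02$ with $T = 1000$ used in the original DDPM paper; this paper does not state its own schedule, and the helper names are hypothetical.

```python
import math
import random


def linear_beta_schedule(T, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced variances beta_1..beta_T (assumed; the DDPM default schedule)."""
    return [beta_start + (beta_end - beta_start) * i / (T - 1) for i in range(T)]


def cumulative_alpha_bar(betas):
    """bar_alpha_t = prod_{s=1}^{t} (1 - beta_s), returned for t = 1..T."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def q_sample(x0, t, alpha_bar, noise):
    """Closed-form forward step x_t = sqrt(abar_t) x0 + sqrt(1 - abar_t) eps, with 1-based t."""
    a = alpha_bar[t - 1]
    return [math.sqrt(a) * xi + math.sqrt(1.0 - a) * ei for xi, ei in zip(x0, noise)]


T = 1000
betas = linear_beta_schedule(T)
alpha_bar = cumulative_alpha_bar(betas)

rng = random.Random(0)
x0 = [0.5, -0.2, 0.9]                     # toy "image" with three pixel values
eps = [rng.gauss(0.0, 1.0) for _ in x0]   # eps ~ N(0, I)
x_T = q_sample(x0, T, alpha_bar, eps)     # nearly indistinguishable from eps
print(f"abar_1 = {alpha_bar[0]:.4f}, abar_T = {alpha_bar[-1]:.1e}")
```

With this schedule $\bar{\alpha}_T$ is on the order of $10^{-5}$, so $x_T$ is almost pure noise, which is what allows the reverse process to start from $\mathcal{N}(0, I)$.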

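In the reverse denoising process, the model learns to gradually recover the original noise-free data from the noisy data. Let $p_\theta$ denote a parameterized Gaussian distribution; the learned reverse process can be expressed as follows:

$$p_\theta(x_{t-1} \mid x_t) = \mathcal{N}\big(x_{t-1}; \mu_\theta(x_t, t), \Sigma_\theta(x_t, t)\big), \qquad p_\theta(x_{0:T}) = p(x_T) \prod_{t=1}^{T} p_\theta(x_{t-1} \mid x_t)$$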

$p_\theta(x_{t-1} \mid x_t)$ is the distribution of the data at the current time step given the data at the next time step; it is Gaussian, with mean and covariance determined by the model parameters. $p_\theta(x_{0:T})$ is the joint distribution over the whole chain, from the last time step back to the first. To train the network, the model minimizes a variational bound on the negative log-likelihood, and stochastic gradient descent is applied to randomly sampled terms of this bound:

$$\mathbb{E}\big[-\log p_\theta(x_0)\big] \le \mathbb{E}_q\Big[-\log \frac{p_\theta(x_{0:T})}{q(x_{1:T} \mid x_0)}\Big] = \mathbb{E}_q\Big[-\log p(x_T) - \sum_{t \ge 1} \log \frac{p_\theta(x_{t-1} \mid x_t)}{q(x_t \mid x_{t-1})}\Big]$$

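Setting the covariance to a fixed value, $\Sigma_\theta(x_t, t) = \sigma_t^2 I$, the mean is computed as follows:

$$\mu_\theta(x_t, t) = \frac{1}{\sqrt{\alpha_t}}\Big(x_t - \frac{\beta_t}{\sqrt{1-\bar{\alpha}_t}}\, \epsilon_\theta(x_t, t)\Big)$$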

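Here, $\epsilon_\theta$ is a neural network that predicts the noise $\epsilon$ from $x_t$. This parameterization enables the model to reconstruct the original data from noisy data more accurately, and the loss function simplifies to the following:

$$L_{\text{simple}} = \mathbb{E}_{t, x_0, \epsilon}\Big[\big\| \epsilon - \epsilon_\theta\big(\sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1-\bar{\alpha}_t}\, \epsilon, t\big) \big\|^2\Big]$$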
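To make the mean parameterization concrete, the sketch below evaluates $\mu_\theta$ and $L_{\text{simple}}$ for a single toy sample. It assumes a linear schedule from $10^{-4}$ to 0.02 over 1000 steps and replaces $\epsilon_\theta$ with an oracle that returns the true noise, so it illustrates the formulas rather than a trained model; all function names are hypothetical.

```python
import math


def linear_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Return (betas, alpha_bars) for a linear variance schedule (an assumed choice)."""
    betas = [beta_start + (beta_end - beta_start) * i / (T - 1) for i in range(T)]
    alpha_bars, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        alpha_bars.append(prod)
    return betas, alpha_bars


def reverse_mean(x_t, eps_pred, t, betas, alpha_bars):
    """mu_theta(x_t, t) = (x_t - beta_t / sqrt(1 - abar_t) * eps_pred) / sqrt(alpha_t), 1-based t."""
    beta_t, abar_t = betas[t - 1], alpha_bars[t - 1]
    coef = beta_t / math.sqrt(1.0 - abar_t)
    return [(x - coef * e) / math.sqrt(1.0 - beta_t) for x, e in zip(x_t, eps_pred)]


def simple_loss(eps, eps_pred):
    """Squared error between true and predicted noise, averaged over elements
    (a rescaled ||eps - eps_theta||^2, as most implementations compute it)."""
    return sum((a - b) ** 2 for a, b in zip(eps, eps_pred)) / len(eps)


betas, alpha_bars = linear_schedule()
x0, eps, t = [0.3, -0.7], [1.2, -0.4], 1
abar = alpha_bars[t - 1]
x_t = [math.sqrt(abar) * a + math.sqrt(1.0 - abar) * e for a, e in zip(x0, eps)]

oracle_eps = eps   # stands in for a perfectly trained eps_theta(x_t, t)
mu = reverse_mean(x_t, oracle_eps, t, betas, alpha_bars)
print(mu, simple_loss(eps, oracle_eps))
```

At $t = 1$ an exact noise prediction makes $\mu_\theta$ recover $x_0$, because $\beta_1 / \sqrt{1-\bar{\alpha}_1} = \sqrt{\beta_1}$ cancels the noise term exactly.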

2.2. Latent Diffusion Model

LDM first uses a pre-trained variational autoencoder to encode high-dimensional image data into a low-dimensional latent space. The representation in this latent space is more compact and computationally efficient than the original image space. The encoder $\mathcal{E}$ maps an image $x$ to a latent representation $z_0 = \mathcal{E}(x)$, on which LDM applies the diffusion model. The diffusion model transforms the data from the original distribution into a Gaussian distribution by gradually adding noise and then learns to reverse this process. Finally, the decoder $\mathcal{D}$ reconstructs the image $\tilde{x} = \mathcal{D}(z_0)$ from the latent space. The forward diffusion and reverse denoising processes are given in Equations (8) and (9):

$$z_t = \sqrt{\bar{\alpha}_t}\, z_0 + \sqrt{1-\bar{\alpha}_t}\, \epsilon \tag{8}$$

$$p_\theta(z_{t-1} \mid z_t) = \mathcal{N}\big(z_{t-1}; \mu_\theta(z_t, t), \Sigma_\theta(z_t, t)\big) \tag{9}$$

Because LDM supports conditional generation, conditioning information $c$, such as a text description or a class label, can be introduced into the reverse diffusion process through a cross-attention mechanism. Its loss function can therefore be expressed as follows:

$$L_{LDM} = \mathbb{E}_{\mathcal{E}(x), c, \epsilon \sim \mathcal{N}(0, I), t}\Big[\big\| \epsilon - \epsilon_\theta(z_t, t, c) \big\|_2^2\Big]$$

2.3. Tablet Surface Defect Types

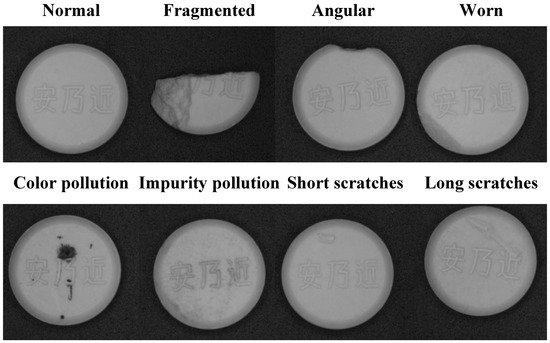

The main types of tablet surface defects, shown in Figure 1, are as follows.

Figure 1.

Tablet defect types.

Tablet defect. The basic outline of the tablet is incomplete or part of its structure is altered; this category includes cracks, missing corners, and wear. Cracks appear as the tablet splitting from the top or bottom into one or more fragments; missing corners are mostly caused by collisions with external objects; wear is local material loss caused by friction and similar factors, resulting in an uneven tablet surface or a change in shape.

Tablet contamination. Discoloration, stains, or impurities on the tablet surface, mainly color contamination and impurity contamination.

Tablet surface scratches. These defects are generally caused by uneven molds or by scratching with sharp objects. The damage to the tablet structure varies with the depth and length of the scratch.

3. Method

The DTDD network proposed in this paper consists of a latent diffusion model guided by the AR network (LDM-AR) and an SF network for feature extraction. The LDM-AR network generates high-quality reconstructed images, while the SF network extracts multi-scale features from the input and reconstructed images, compares them, and outputs accurate anomaly scores. For input data, we combined tablet images from open-source datasets such as the MVTec anomaly detection dataset (MVTec AD) [36] to build a tablet defect detection dataset containing multi-scale defects and complex backgrounds. Together with field images collected on a self-built test bench, this dataset was used to test the model's detection performance under field conditions.

3.1. Network Architecture

In the image reconstruction task, this paper adopts LDM as the core framework to balance computational efficiency and reconstruction quality. The input image $x$ is first encoded by a pre-trained encoder into a latent vector $z_0 = \mathcal{E}(x)$. Noise is then added to this latent vector step by step until it becomes a random Gaussian noise vector $z_T$, completing the forward diffusion process of the diffusion model. The output of the forward diffusion process can be expressed as follows:

$$z_T = \sqrt{\bar{\alpha}_T}\, z_0 + \sqrt{1-\bar{\alpha}_T}\, \epsilon$$

To further enhance the model's understanding of the semantic information in the original image, this paper designs an AR network for the reverse denoising stage that guides the denoising network toward high-quality reconstructions. In this stage, the random Gaussian noise vector $z_T$ is fed into the denoising network of the diffusion model. At the same time, the AR network processes the original image together with $z_T$ and fuses the results with the intermediate outputs of the denoising network. Through this collaborative mechanism, the LDM is guided to generate reconstructed images with richer details and more accurate semantics.

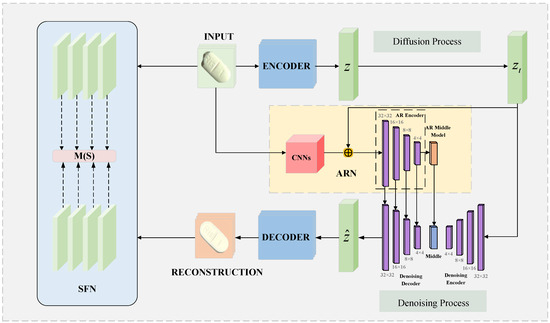

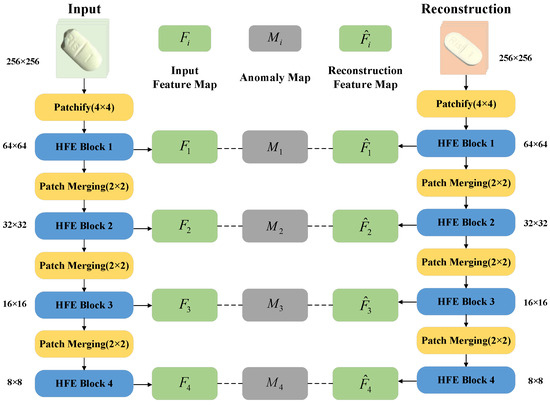

For detecting and localizing anomalous regions, we compare the two images in feature space. After the reconstructed image $\hat{x}$ is obtained, it is fed into the SF network together with the input image $x$. A multi-scale feature extraction strategy captures differences in texture, shape, and structure at multiple scales, improving the recognition of defects such as subtle scratches, tiny breakages, and complex contamination. Taking the characteristics of tablet defects into account, this paper combines Euclidean distance and cosine similarity to design the anomaly score. Comparing the features of $x$ and $\hat{x}$ on feature maps of different scales yields the anomalous regions, which are finally visualized as heatmaps to produce the detection result. The DTDD network architecture is shown in Figure 2.

Figure 2.

Model framework.

3.2. Assisted Reconstruction Network

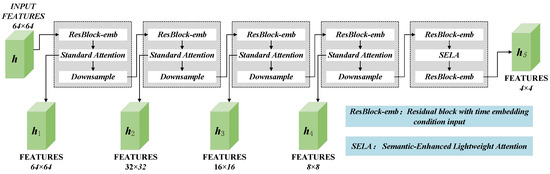

To address the detail loss and semantic deviation that traditional diffusion models exhibit when reconstructing images, this paper designs an Assisted Reconstruction network. The AR network takes two inputs: the input image $x$ and the random Gaussian noise vector $z_T$. The input image is first reduced by convolution to the same dimensions as $z_T$, and the sum of the two is fed into the encoding blocks for successive downsampling. Finally, the Assisted Reconstruction Middle (ARM) block extracts contextual information, progressively refining features and exploring deep relationships between them so that the model better understands the image content. The structure of the four encoding blocks and the ARM is shown in Figure 3.

Figure 3.

Implementation details of the encoder in the AR network.

Each encoding block consists mainly of a residual block with time-embedding conditional input, a standard attention mechanism, and a downsampling block. The features extracted at the current scale by each encoding block are saved for transfer to the stable diffusion (SD) denoising network and are also passed to the downsampling block. The ARM consists mainly of two residual layers and a semantically enhanced lightweight attention (SELA) module. The double residual layer helps extract deep features, and SELA efficiently strengthens the model's ability to capture key semantic features.

The ARM output is added to the output of the middle block of the denoising network, and the output of each encoding block is concatenated, through skip connections, with the output of the denoising network's decoding block at the corresponding scale, guiding the SD network from both global and local perspectives. As a result, the reconstructed output combines latent-space features with the original semantic features, attends to multi-scale information, and yields an accurate reconstruction of the tablet image.
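The two fusion paths described above (addition at the middle block, concatenation at each decoder scale) can be sketched with toy feature maps. This is a minimal illustration assuming features stored as `[channels][pixels]` lists; `fuse_ar_guidance` and its arguments are hypothetical names, and the real networks would operate on tensors produced by convolutional blocks.

```python
from typing import List, Tuple

Feature = List[List[float]]   # [channels][pixels]: a flattened toy feature map


def add_features(a: Feature, b: Feature) -> Feature:
    """Element-wise sum (ARM output added to the denoiser's middle-block output)."""
    assert len(a) == len(b) and all(len(ca) == len(cb) for ca, cb in zip(a, b))
    return [[u + v for u, v in zip(ca, cb)] for ca, cb in zip(a, b)]


def concat_channels(a: Feature, b: Feature) -> Feature:
    """Channel-wise concatenation (AR skip features joined to a decoder block's output)."""
    assert all(len(ch) == len(a[0]) for ch in a + b), "spatial sizes must match"
    return a + b


def fuse_ar_guidance(unet_mid: Feature, arm_out: Feature,
                     unet_dec: List[Feature],
                     ar_skips: List[Feature]) -> Tuple[Feature, List[Feature]]:
    """Hypothetical fusion: add at the middle block, concatenate at each decoder scale."""
    mid = add_features(unet_mid, arm_out)
    decoded = [concat_channels(d, s) for d, s in zip(unet_dec, ar_skips)]
    return mid, decoded


mid, dec = fuse_ar_guidance(
    unet_mid=[[1.0, 2.0], [3.0, 4.0]],
    arm_out=[[0.5, 0.5], [0.0, -1.0]],
    unet_dec=[[[1.0, 1.0, 1.0, 1.0]]],                         # one scale: 1 channel x 4 pixels
    ar_skips=[[[0.2, 0.2, 0.2, 0.2], [0.1, 0.1, 0.1, 0.1]]],   # same scale: 2 channels
)
print(mid, [len(d) for d in dec])
```

Addition keeps the channel count of the middle block unchanged, whereas concatenation grows the decoder input (here from 1 to 3 channels), so a decoder fed by skip concatenation must be built for the wider input.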

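After the denoising process is complete, the reconstructed latent output is decoded into the reconstructed image $\hat{x}$ by the pre-trained decoder, and the loss function can be expressed as follows:

$$L_{AR} = \mathbb{E}_{\mathcal{E}(x), \epsilon \sim \mathcal{N}(0, I), t}\Big[\big\| \epsilon - \epsilon_\theta(z_t, t, c_{AR}) \big\|_2^2\Big]$$

where $c_{AR}$ represents the auxiliary reconstruction information generated by the AR network.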

3.3. Semantic-Enhanced Lightweight Attention

To make the AR network attend to global semantic information while accurately capturing local detail textures, this paper designs a semantically enhanced lightweight attention (SELA) module that strengthens the semantic expression and detail perception of target features. The module structure and processing flow are shown in Figure 4. SELA adopts a parallel dual-branch structure: the semantic channel branch (left) and the spatial detail branch (right) enhance feature expression along different dimensions, and their outputs are finally fused into a semantically enhanced feature map. The semantic channel branch computes channel-level statistics by average pooling and max pooling over the spatial dimensions and then produces the semantic channel (SC) attention weights through lightweight convolutions, focusing on semantically significant channels. The spatial detail branch mines associations among the channels within each group through grouped convolution and enhances spatial detail features with depthwise separable convolution, producing spatial detail (SD) attention weights that highlight the spatial regions where semantic information is located. The weights from the two branches are each applied to the original feature map, and the two enhanced results are fused by concatenation followed by a 1 × 1 convolution.

Figure 4.

Schematic diagram of SELA.

Define the input feature map as $X \in \mathbb{R}^{C \times H \times W}$, and let $F_{max}$ and $F_{avg}$ denote its global max-pooling and global average-pooling results. The SC attention weight $W_{SC}$ generated by the semantic channel branch is then expressed as follows:

where $\sigma$ is the Sigmoid function, $\delta$ is the ReLU activation function, $f^{1\times1}_{k}$ is a 1 × 1 convolution with k output channels, $[\cdot\,;\cdot]$ denotes concatenation of the pooling results, and r is the channel compression ratio, which is set to 4 in this paper.
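The semantic channel branch can be sketched as follows. This is one plausible reading of the description, not the paper's exact formula: it assumes the max- and average-pooled descriptors are concatenated into a $2C$ vector, reduced to $C/r$ channels by a 1 × 1 convolution with ReLU, and expanded back to $C$ channels followed by a Sigmoid (that is, k = C/r for the first convolution and k = C for the second). The weights are random placeholders, and all names are hypothetical.

```python
import math
import random
from typing import List


def matvec(w: List[List[float]], v: List[float]) -> List[float]:
    """A 1 x 1 convolution on a 1 x 1 spatial map reduces to a matrix-vector product."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def sc_weights(x: List[List[float]], w_reduce, w_expand) -> List[float]:
    """Hypothetical SC branch: sigmoid(W2 . relu(W1 . [maxpool(x); avgpool(x)])).

    x: C channels, each a flat list of H*W values.
    w_reduce: (C // r) x 2C matrix; w_expand: C x (C // r) matrix.
    """
    f_max = [max(ch) for ch in x]               # global max pooling per channel
    f_avg = [sum(ch) / len(ch) for ch in x]     # global average pooling per channel
    hidden = [max(0.0, h) for h in matvec(w_reduce, f_max + f_avg)]        # ReLU
    return [1.0 / (1.0 + math.exp(-z)) for z in matvec(w_expand, hidden)]   # Sigmoid


C, r, HW = 8, 4, 16          # r = 4 as in the text; C and HW are toy sizes
rng = random.Random(0)
x = [[rng.gauss(0.0, 1.0) for _ in range(HW)] for _ in range(C)]
w1 = [[rng.gauss(0.0, 0.1) for _ in range(2 * C)] for _ in range(C // r)]   # placeholder weights
w2 = [[rng.gauss(0.0, 0.1) for _ in range(C // r)] for _ in range(C)]
w_sc = sc_weights(x, w1, w2)
x_sc = [[w * v for v in ch] for w, ch in zip(w_sc, x)]   # re-weight each channel of x
print([round(w, 3) for w in w_sc])
```

The compression to $C/r$ channels is what keeps the branch lightweight: the two 1 × 1 convolutions hold $2C \cdot C/r + C \cdot C/r$ weights instead of the $2C^2$ a single full projection would need.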

Let $\mathrm{GConv}(X)$ denote the grouped convolution of $X$ with its channels divided into $G$ groups, $X = [X_1, X_2, \ldots, X_G]$, and let $\mathrm{DWConv}(\cdot)$ denote the depthwise separable convolution. The SD attention weight $W_{SD}$ computed by the spatial detail branch is then expressed as follows:

The output of the SELA module is expressed as follows:
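A matching sketch of the spatial detail branch and the final fusion is given below, again as an assumption-laden illustration rather than the authors' code. It assumes a grouped 1 × 1 convolution, a per-channel 3 × 3 depthwise convolution, a channel mean followed by a Sigmoid to obtain an $H \times W$ weight map, and a 1 × 1 convolution that maps the concatenated $2C$ channels back to $C$. To keep it short, the depthwise separable convolution is reduced to its depthwise 3 × 3 part, with channel mixing left to the grouped convolution; all names are hypothetical.

```python
import math
from typing import List

Map = List[List[float]]      # H x W
Tensor = List[Map]           # C x H x W


def grouped_pointwise(x: Tensor, groups: int, w: List[List[List[float]]]) -> Tensor:
    """Grouped 1 x 1 conv: channels mix only within their group; w[g] is a (C/G x C/G) matrix."""
    cg = len(x) // groups
    H, W = len(x[0]), len(x[0][0])
    out = []
    for g in range(groups):
        block = x[g * cg:(g + 1) * cg]
        for o in range(cg):
            out.append([[sum(w[g][o][i] * block[i][h][q] for i in range(cg))
                         for q in range(W)] for h in range(H)])
    return out


def depthwise3x3(x: Tensor, kernels: List[Map]) -> Tensor:
    """One 3 x 3 kernel per channel, stride 1, zero padding."""
    H, W = len(x[0]), len(x[0][0])
    return [[[sum(k[dy + 1][dx + 1] * ch[h + dy][q + dx]
                  for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                  if 0 <= h + dy < H and 0 <= q + dx < W)
              for q in range(W)] for h in range(H)]
            for ch, k in zip(x, kernels)]


def sd_weights(x: Tensor, groups: int, w_group, dw_kernels) -> Map:
    """Hypothetical SD branch: sigmoid of the channel mean of DWConv(GConv(x)), an H x W map."""
    y = depthwise3x3(grouped_pointwise(x, groups, w_group), dw_kernels)
    C, H, W = len(y), len(y[0]), len(y[0][0])
    return [[1.0 / (1.0 + math.exp(-sum(y[c][h][q] for c in range(C)) / C))
             for q in range(W)] for h in range(H)]


def sela_fuse(x: Tensor, w_sc: List[float], w_sd: Map, w_out: List[List[float]]) -> Tensor:
    """Weight x by each branch, concatenate to 2C channels, then 1 x 1 conv back to C."""
    a = [[[w_sc[c] * v for v in row] for row in ch] for c, ch in enumerate(x)]
    b = [[[w_sd[h][q] * v for q, v in enumerate(row)] for h, row in enumerate(ch)] for ch in x]
    cat = a + b
    H, W = len(x[0]), len(x[0][0])
    return [[[sum(wo[i] * cat[i][h][q] for i in range(len(cat))) for q in range(W)]
             for h in range(H)] for wo in w_out]


# Sanity check with identity kernels on an all-ones input (C=4 channels, G=2 groups, 3x3 map).
C, G, H, W = 4, 2, 3, 3
x = [[[1.0] * W for _ in range(H)] for _ in range(C)]
eye = [[1.0 if i == j else 0.0 for j in range(C // G)] for i in range(C // G)]
center = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]   # identity 3x3 kernel
w_sd = sd_weights(x, G, [eye] * G, [center] * C)
w_out = [[1.0 if i == o else 0.0 for i in range(2 * C)] for o in range(C)]   # keep the SC half
y = sela_fuse(x, [1.0] * C, w_sd, w_out)
print(w_sd[1][1], y == x)
```

With identity kernels and an all-ones input, the SD map equals $\sigma(1)$ everywhere and the output equals the input, which is a quick check that the shapes and the concatenation order are consistent.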

3.4. Scale Fusion Network

We feed the reconstructed image $\hat{x}$ and the input image $x$ into the Scale Fusion (SF) network for feature extraction, then compute the anomaly score and locate the anomalous region from the feature differences between the two images.

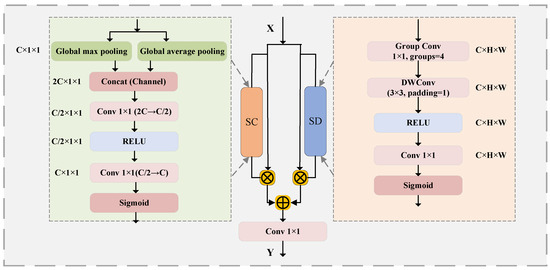

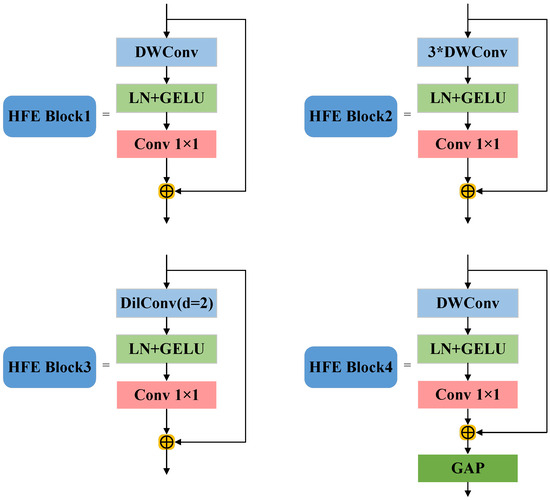

For tablet anomaly detection, the proposed SF network uses four hierarchical feature extraction blocks (HFE Blocks). They extract features at different scales hierarchically through explicit task division, avoiding the computational overhead of cross-layer fusion. The network framework is shown in Figure 5.

Figure 5.

Schematic diagram of SF network.

HFE Block1 applies a 4 × 4 Patchify operation to the tablet image and then extracts initial features with a 7 × 7 depthwise separable convolution to retain the macro texture of the tablet surface; HFE Block2 downsamples through Patch Merging and stacks three 3 × 3 depthwise separable convolutions to increase sensitivity to subtle defects; HFE Block3 introduces dilated convolution to enlarge the receptive field and adapt to defects of different sizes; HFE Block4 models the global semantics of the overall tablet shape through downsampling, a 7 × 7 depthwise separable convolution, and global average pooling. Taking the input image $x$ as an example, its multi-scale feature maps are computed as follows:

Here, the operators denote, in turn, a convolution, a stack of three depthwise separable convolutions, a dilated convolution (dilation rate d = 2), a 2 × 2 downsampling operation, layer normalization (LN), the Gaussian error linear unit (GELU) activation function, a 1 × 1 pointwise convolution, and global average pooling (GAP). The structure of the four blocks is shown in Figure 6.
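Two quantities behind these design choices are easy to compute: the parameter saving of a depthwise separable convolution over a standard one, and the span covered by a dilated kernel. The sketch below assumes 96 channels purely for illustration (the paper does not report channel widths) and ignores biases; the 1-D dilated convolution shows how d = 2 spreads three taps over five positions.

```python
def conv_params(c_in, c_out, k):
    """Weight count of a standard k x k convolution (biases ignored)."""
    return c_in * c_out * k * k


def dw_separable_params(c_in, c_out, k):
    """Depthwise k x k conv (one filter per input channel) plus a 1 x 1 pointwise conv."""
    return c_in * k * k + c_in * c_out


def dilated_extent(k, d):
    """Number of input positions spanned by a k-tap kernel with dilation d."""
    return k + (k - 1) * (d - 1)


def dilated_conv1d(signal, kernel, d):
    """1-D dilated cross-correlation with zero padding and 'same' output length."""
    c = len(kernel) // 2
    n = len(signal)
    return [sum(w * signal[i + (j - c) * d]
                for j, w in enumerate(kernel)
                if 0 <= i + (j - c) * d < n)
            for i in range(n)]


C, k = 96, 7   # hypothetical channel width; the paper does not report it
print(conv_params(C, C, k), dw_separable_params(C, C, k))
print(dilated_extent(3, 2))
print(dilated_conv1d([0, 0, 0, 1, 0, 0, 0], [1, 1, 1], d=2))
```

For 96 channels and a 7 × 7 kernel, the standard convolution needs 451,584 weights and the depthwise separable one 13,920, roughly 32 times fewer, which is why large 7 × 7 kernels remain affordable in Blocks 1 and 4.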

Figure 6.

Schematic diagram of HFE blocks.

The feature maps output at each stage differ in resolution and semantic content. We measure the anomaly distance between the input-image and reconstructed-image feature maps at each scale to obtain anomaly maps at different scales. An adaptive weighted fusion strategy then upsamples these maps and fuses them with learnable weights, so that the model accounts for both fine details and global information. Compared with traditional models, the SF network can both capture tiny defects and handle anomalies in the overall tablet shape, achieving a balance between accuracy and speed.
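The adaptive weighted fusion can be sketched as upsampling each per-scale anomaly map to a common resolution and taking a weighted sum. This sketch assumes nearest-neighbour upsampling and models the learnable weights as a softmax over free parameters (`logits`) so that they stay positive and sum to one; both choices are assumptions, and during training the logits would be updated by gradient descent.

```python
import math
from typing import List

Map = List[List[float]]


def upsample_nearest(m: Map, s: int) -> Map:
    """Nearest-neighbour upsampling of an H x W map by an integer factor s."""
    return [[m[h // s][w // s] for w in range(len(m[0]) * s)] for h in range(len(m) * s)]


def softmax(v: List[float]) -> List[float]:
    top = max(v)                      # subtract the max for numerical stability
    e = [math.exp(x - top) for x in v]
    total = sum(e)
    return [x / total for x in e]


def fuse_scales(maps: List[Map], factors: List[int], logits: List[float]) -> Map:
    """Weighted sum of per-scale anomaly maps after upsampling to a common size.
    The learnable weights are modelled as softmax(logits), an assumption."""
    weights = softmax(logits)
    ups = [upsample_nearest(m, s) for m, s in zip(maps, factors)]
    H, W = len(ups[0]), len(ups[0][0])
    assert all(len(u) == H and len(u[0]) == W for u in ups), "maps must align after upsampling"
    return [[sum(wt * u[h][w] for wt, u in zip(weights, ups)) for w in range(W)]
            for h in range(H)]


fine = [[1.0, 0.0, 0.0, 0.0]] + [[0.0] * 4 for _ in range(3)]   # 4x4, small defect top-left
coarse = [[0.0, 0.0], [0.0, 1.0]]                               # 2x2, large defect bottom-right
fused = fuse_scales([fine, coarse], factors=[1, 2], logits=[0.0, 0.0])
for row in fused:
    print(row)
```

The fused map keeps the single-pixel response from the fine scale and the 2 × 2 block from the coarse scale, which is the behaviour the text attributes to combining detail and global information.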

3.5. Anomaly Scores and Visualization

Cosine similarity, Mahalanobis distance, and Euclidean distance are the most commonly used measures in anomaly score design. Euclidean distance measures the straight-line distance between two points in space, which suits differences in geometric features of tablet images such as shape and size. Cosine similarity measures the directional similarity of two vectors, which suits features such as texture and color distribution. The two target different kinds of defect features, and both are cheaper to compute than Mahalanobis distance, which improves computation speed. Given the complex surface texture of tablets, the many defect types, and the small size of individual tablets, we construct the anomaly score from a weighted combination of cosine similarity and Euclidean distance. Anomaly scores are computed between the input-image and reconstructed-image features at the different scales extracted by the SF network; the Euclidean distance at the i-th scale is

$$d_E^i(h, w) = \big\| F_x^i(h, w) - F_{\hat{x}}^i(h, w) \big\|_2$$

where $F_x^i$ and $F_{\hat{x}}^i$ denote the i-th-scale feature maps of $x$ and $\hat{x}$, and $(h, w)$ indexes spatial position.

To weight cosine similarity together with Euclidean distance, cosine similarity is converted into the cosine distance $d_{cos}^i$; the cosine distance at the i-th scale is

$$d_{cos}^i(h, w) = 1 - \frac{F_x^i(h, w) \cdot F_{\hat{x}}^i(h, w)}{\big\|F_x^i(h, w)\big\|_2 \, \big\|F_{\hat{x}}^i(h, w)\big\|_2}$$
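The per-location distances can be sketched for one scale as follows. The sketch assumes a single convex weight `alpha` between the Euclidean and cosine distances; the paper's exact weighting is not reproduced here, and the function names are hypothetical.

```python
import math
from typing import List

Vec = List[float]


def euclidean(u: Vec, v: Vec) -> float:
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def cosine_distance(u: Vec, v: Vec, eps: float = 1e-8) -> float:
    """1 - cosine similarity; eps guards against zero-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (nu * nv + eps)


def anomaly_map(feat_x, feat_rec, alpha: float = 0.5):
    """Per-location alpha * d_E + (1 - alpha) * d_cos for one scale.
    feat_x, feat_rec: H x W grids of C-dimensional feature vectors."""
    return [[alpha * euclidean(fx, fr) + (1.0 - alpha) * cosine_distance(fx, fr)
             for fx, fr in zip(row_x, row_r)]
            for row_x, row_r in zip(feat_x, feat_rec)]


feat_x = [[[1.0, 0.0], [1.0, 0.0], [1.0, 0.0]]]     # 1 x 3 grid of 2-D features
feat_rec = [[[1.0, 0.0], [0.0, 1.0], [2.0, 0.0]]]   # identical, rotated, scaled
m = anomaly_map(feat_x, feat_rec)
print(m)
```

The three locations show the complementary behaviour: a rotated feature is penalized by both terms, while a scaled copy ($2F$) has a cosine distance near zero and a Euclidean distance of $\|F\|$, so the combined score reacts to both direction and magnitude changes.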

Then, the anomaly map $M_i$ of the i-th scale and the anomaly score $S$ are expressed as follows:

where $\mathrm{Up}(\cdot)$ is the upsampling operation, $s_i$ is the upsampling factor of the i-th scale, n is the number of feature maps involved in the fusion, and $\alpha$ and $\beta$ are the weight coefficients of the distance metric and the multi-scale feature maps, respectively. To present the anomaly scores concisely and localize defect regions visually, we convert the anomaly scores into an anomaly map, then normalize it and apply a color map to obtain a readable heatmap. After similar processing of the input image, the heatmap and the original image are superimposed with weights of 0.6 and 0.4, respectively. The resulting visualization shows the anomalous region while retaining the original image information.
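The normalization and overlay step can be sketched without any imaging library. The two-colour map below is a deliberately minimal stand-in for a standard colour map such as JET, and the 0.6/0.4 weights follow the text (heatmap first, original image second); the function names are hypothetical.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def min_max_normalize(m: List[List[float]], eps: float = 1e-12) -> List[List[float]]:
    """Rescale a score map to [0, 1]; eps avoids division by zero on a constant map."""
    lo = min(min(row) for row in m)
    hi = max(max(row) for row in m)
    return [[(v - lo) / (hi - lo + eps) for v in row] for row in m]


def blue_to_red(v: float) -> RGB:
    """Minimal stand-in colour map: 0 -> pure blue, 1 -> pure red."""
    return (int(round(255 * v)), 0, int(round(255 * (1.0 - v))))


def overlay(image: List[List[RGB]], scores: List[List[float]],
            w_heat: float = 0.6, w_img: float = 0.4) -> List[List[RGB]]:
    """Blend colour-mapped, normalized scores with the image: w_heat * heat + w_img * image."""
    norm = min_max_normalize(scores)
    return [[tuple(int(round(w_heat * h + w_img * p)) for h, p in zip(blue_to_red(s), px))
             for px, s in zip(img_row, s_row)]
            for img_row, s_row in zip(image, norm)]


image = [[(100, 100, 100), (100, 100, 100)]]   # 1 x 2 grey toy image
scores = [[0.0, 2.0]]                           # raw anomaly scores for the two pixels
print(overlay(image, scores))
```

In an OpenCV pipeline the same steps are usually `cv2.applyColorMap` on the 8-bit normalized map followed by `cv2.addWeighted(heatmap, 0.6, image, 0.4, 0)`.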

4. Experiment

4.1. Experimental Dataset

This study used multiple open-source datasets, including MVTec (https://www.mvtec.com/company/research/datasets/mvtec-ad, accessed on 19 August 2025) and PILL (https://aistudio.baidu.com/datasetdetail/121965, accessed on 19 August 2025). The combined dataset contains 3623 images of normal tablets and 1344 images of tablets with defects such as scratches, breakage, stains, mold, bumps, and adhesions, mimicking actual industrial production scenarios. Of the 4967 tablet images, the training set consists of 3586 normal images, and the test set consists of the remaining 1381 images, including both normal and anomalous samples.
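The split sizes can be checked with a few lines of arithmetic. Because the training set contains only normal images and the two splits together cover all 4967 images, the class composition of the test set follows directly from the stated counts.

```python
normal_total, defective_total = 3623, 1344
train_normal = 3586                          # the training set holds normal images only

total = normal_total + defective_total       # all images in the dataset
test_total = total - train_normal            # images left for testing
test_normal = normal_total - train_normal    # normal images not used for training
test_defective = test_total - test_normal    # every defective image is in the test set
print(total, test_total, test_normal, test_defective)
```

The test set therefore contains 37 normal and 1344 defective images, so image-level metrics on this split rest on comparatively few normal samples.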

4.2. Implementation Details

We conducted experiments on a Linux deep learning server running Ubuntu 20.04, with a 14-vCPU Intel(R) Xeon(R) Platinum 8362 CPU @ 2.80 GHz, an RTX 3090 GPU, 48 GB of video memory, and 64 GB of RAM. All models were implemented in Python 3.9 with the PyTorch 1.12.1 framework and CUDA 11.3. Images were uniformly resized to 256 × 256, models were trained for 500 epochs with a batch size of 16, and all parameters were kept consistent across experiments. All experimental results in this paper are reported as the mean ± standard deviation of five independent runs, and the significance of the improvements was verified with a t-test.
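The paper does not specify which t-test was used. As an illustration only, the sketch below computes Welch's unequal-variance t statistic and its degrees of freedom for two sets of five runs; the scores are hypothetical and are not results from this paper.

```python
import math
import statistics


def welch_t(a, b):
    """Welch's two-sample t statistic and Welch-Satterthwaite degrees of freedom."""
    va = statistics.variance(a) / len(a)    # sample variance (n - 1) over n
    vb = statistics.variance(b) / len(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Hypothetical metric values from five runs of two models (not results from this paper).
ours = [0.90, 0.91, 0.89, 0.92, 0.90]
baseline = [0.85, 0.86, 0.84, 0.85, 0.86]
t, df = welch_t(ours, baseline)
print(f"t = {t:.2f}, df = {df:.2f}")
```

`scipy.stats.ttest_ind(a, b, equal_var=False)` computes the same statistic together with a p-value; if runs are matched (for example, by random seed), a paired test such as `scipy.stats.ttest_rel` is the alternative.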

4.3. Evaluation Metrics

Anomaly detection is usually evaluated with recall, precision, F1 score, AUROC, average precision (AP), and the per-region overlap (PRO) score, which measure detection efficiency and accuracy. Unsupervised anomaly detection is characterized by scarce anomalous samples, a high proportion of normal samples, class imbalance, and ambiguous definitions of anomalies, and the task requires both classification and localization. Evaluation metrics must therefore be robust to imbalanced data, measure discriminative ability, and support region-level evaluation.

No single metric meets all of these requirements, so this paper uses a combination of AUROC, PRO, F1, and AP as its evaluation metrics. AUROC is insensitive to class imbalance, requires no manual threshold, and measures overall discriminative ability. AP is a comprehensive metric that combines ranking and localization but is sensitive to the ratio of positive to negative samples. F1 balances precision and recall, assessing overall performance at a practical threshold. PRO is a key metric for region-level anomaly localization and complements the other three metrics, which ignore region-level detail. The formulas are given below.

AUROC, given in Formula (24), evaluates the model's overall ability to distinguish normal from anomalous samples across thresholds. The closer the value is to 1, the better the model separates positive and negative samples.

$$\mathrm{AUROC} = \int_0^1 \mathrm{TPR} \, d(\mathrm{FPR}), \qquad \mathrm{TPR} = \frac{TP}{TP + FN}, \qquad \mathrm{FPR} = \frac{FP}{FP + TN} \tag{24}$$
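AUROC has an equivalent rank interpretation: it is the probability that a randomly chosen anomalous sample receives a higher score than a randomly chosen normal one, with ties counted as one half. The quadratic-time sketch below uses that identity for illustration; a library routine such as scikit-learn's `roc_auc_score` is the practical choice.

```python
def auroc(scores, labels):
    """P(score of a random anomalous sample > score of a random normal sample), ties = 1/2.
    This equals the area under the ROC curve."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need both normal (0) and anomalous (1) samples")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


print(auroc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]))
```

Because only the ordering of scores matters, AUROC needs no threshold and is unaffected by any monotonic rescaling of the anomaly scores.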

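The PRO score, shown in Formula (25), evaluates the overlap between the anomalous regions predicted by the model and the ground-truth anomalous regions, measuring how accurately anomalies are localized.

$$\mathrm{PRO} = \frac{1}{N} \sum_{k=1}^{N} \frac{|P \cap C_k|}{|C_k|} \tag{25}$$

where $C_k$ is the k-th connected ground-truth anomalous region, N is the number of such regions, and $P$ is the set of pixels predicted as anomalous at a given threshold.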
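The per-region overlap at a single threshold can be sketched with a small flood fill over the ground-truth mask. This assumes 4-connectivity for ground-truth regions; the PRO values commonly reported on MVTec AD additionally integrate this quantity over thresholds up to a false-positive rate of 0.3, which is not shown here. Function names are hypothetical.

```python
from collections import deque


def connected_components(mask):
    """Label 4-connected regions of nonzero pixels; returns a list of coordinate lists."""
    H, W = len(mask), len(mask[0])
    seen = [[False] * W for _ in range(H)]
    regions = []
    for i in range(H):
        for j in range(W):
            if mask[i][j] and not seen[i][j]:
                queue, region = deque([(i, j)]), []
                seen[i][j] = True
                while queue:
                    y, x = queue.popleft()
                    region.append((y, x))
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < H and 0 <= nx < W and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                regions.append(region)
    return regions


def pro_at_threshold(score_map, gt_mask, threshold):
    """Mean over ground-truth regions of the fraction of each region predicted anomalous."""
    regions = connected_components(gt_mask)
    if not regions:
        raise ValueError("ground truth has no anomalous region")
    overlaps = [sum(score_map[y][x] >= threshold for y, x in r) / len(r) for r in regions]
    return sum(overlaps) / len(overlaps)


gt = [[1, 1, 0, 0],
      [0, 0, 0, 1]]                 # two regions: 2 pixels and 1 pixel
scores = [[0.9, 0.2, 0.0, 0.0],
          [0.0, 0.0, 0.0, 0.8]]
print(pro_at_threshold(scores, gt, threshold=0.5))
```

Averaging per region, rather than per pixel, is what keeps a single large defect from dominating the score: here the half-detected two-pixel region and the fully detected one-pixel region count equally.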

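The $F_1$ score, shown in Formula (26), combines the precision and recall of anomaly detection into a single measure, giving a more complete view of the algorithm's accuracy.

$$F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}, \qquad \mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN} \tag{26}$$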

AP measures the model's detection accuracy on anomalous samples by averaging precision over recall levels, as shown in Formula (27).

$$\mathrm{AP} = \sum_{n} (R_n - R_{n-1}) \, P_n \tag{27}$$

where $P_n$ and $R_n$ are the precision and recall at the n-th threshold.
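AP and F1 can be sketched directly from Formulas (26) and (27) for image-level scores. The AP routine sweeps the threshold through the distinct scores in descending order, treating tied scores as one threshold, and the F1 routine evaluates one fixed threshold; both are illustrative, and the names are hypothetical.

```python
def average_precision(scores, labels):
    """AP = sum_n (R_n - R_{n-1}) * P_n over thresholds at each distinct score, high to low."""
    n_pos = sum(labels)
    if n_pos == 0:
        raise ValueError("AP is undefined without anomalous samples")
    pairs = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    tp = fp = 0
    ap, prev_recall, i = 0.0, 0.0, 0
    while i < len(pairs):
        s = pairs[i][0]
        while i < len(pairs) and pairs[i][0] == s:   # tied scores share one threshold
            tp += pairs[i][1]
            fp += 1 - pairs[i][1]
            i += 1
        recall, precision = tp / n_pos, tp / (tp + fp)
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


def f1_at_threshold(scores, labels, threshold):
    """F1 = 2PR / (P + R) when samples with score >= threshold are flagged anomalous."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    if tp == 0:
        return 0.0
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


scores, labels = [0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]
print(average_precision(scores, labels), f1_at_threshold(scores, labels, 0.35))
```

Unlike AUROC, both values depend on how many normal samples rank above the anomalous ones, which is why AP is sensitive to the positive-to-negative ratio mentioned above.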

4.4. Experimental Results

4.4.1. Comparison with Baseline Model

The improvements to the image reconstruction part of the framework are targeted at the LDM pipeline and at the requirements of tablet defect detection. We therefore first compare the improved algorithm with LDM to verify the effectiveness of the AR network. The experimental results are shown in Table 1.

Table 1.

Comparative experiment with baseline model.

As the table shows, the proposed algorithm performs well on all metrics. A t-test confirms that every metric improves significantly over the LDM model: AP increases by 11.15%, F1 by 9.61%, AUROC by 14.61%, and PRO by 15.73%. This indicates that the AR network plays an important role in improving the overall accuracy of the model and in guiding image reconstruction.

4.4.2. Comparative Experiment

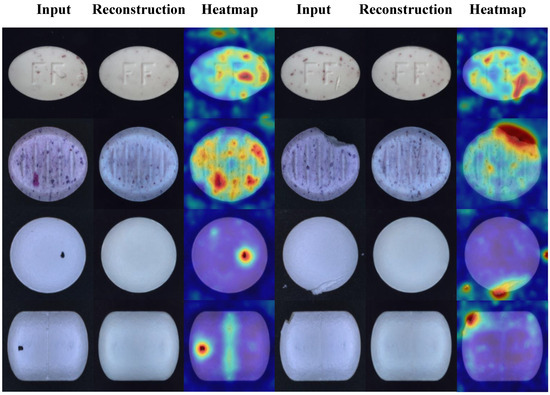

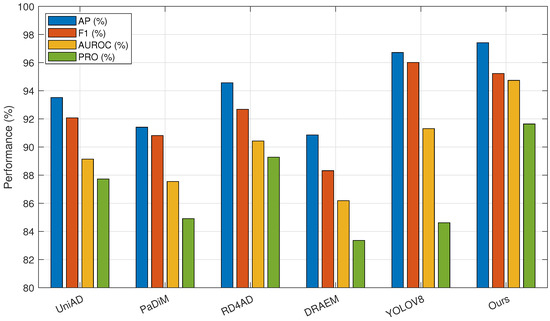

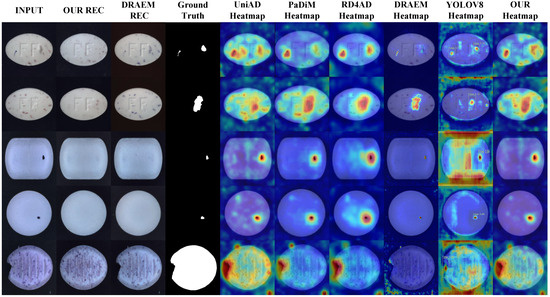

The improved algorithm is designed specifically for tablet defect detection and copes with diverse defect types and scarce samples. The test visualizations are shown in Figure 7. To further verify its advantages in tablet defect detection, the algorithm is compared with five algorithms: UniAD [37], PaDiM [38], RD4AD [39], DRAEM [20], and YOLOv8. The experimental results are shown in Table 2.

Figure 7.

Visualization result.

Table 2.

Quantitative results on Tablet dataset.

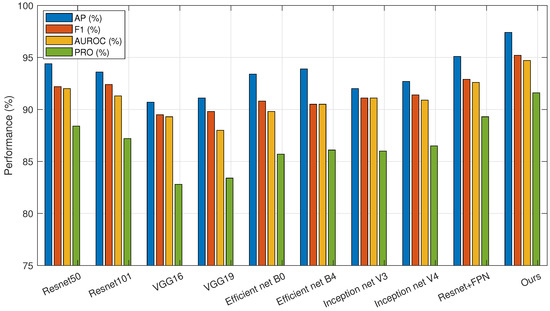

As shown in the table, the proposed algorithm achieves the best detection performance. Compared with the supervised YOLOv8, our method gives comparable AP and F1 values but substantially better AUROC and PRO. Compared with the best results among the other unsupervised algorithms, AP, F1, AUROC, and PRO improve by 2.85%, 2.55%, 4.31%, and 2.36%, respectively. Overall, our algorithm therefore outperforms UniAD, PaDiM, RD4AD, DRAEM, and YOLOv8 on the tablet defect detection task. For a more intuitive comparison, the results are presented as bar charts in Figure 8. Figure 9 shows the performance of each algorithm on different tablet detection tasks in the dataset: the proposed algorithm exhibits better reconstruction and higher sensitivity to anomalous regions, enabling precise detection of small defects of various types and shapes.

Figure 8.

Comparative experimental results bar graph.

Figure 9.

Qualitative illustration on Tablet dataset.

4.4.3. Ablation Experiment

Beyond the AR network, this paper's main improvements lie in three aspects: the attention mechanism, the feature extraction network, and the anomaly score measure. Ablation experiments were therefore conducted on these three aspects to verify the effectiveness of each improvement.

For the attention mechanism, this paper proposes the SELA attention mechanism to efficiently enhance the model's ability to capture key semantic features. SELA is compared with three commonly used attention mechanisms: Self-Attention [40], CBAM, and ECA-Net [41]. The model was trained with each of the four attention mechanisms under identical parameter settings and then evaluated; the results are listed in Table 3.

Table 3.

Ablation studies of SELA.

As Table 3 shows, the proposed SELA attention performs best overall: compared with the other attention mechanisms, it improves AP, AUROC, and PRO by 2.14%, 3.96%, and 2.01%, respectively. SELA is slightly worse than ECA in F1 score, which can be attributed to the strong ability of ECA's local cross-channel interaction structure to capture morphological texture features. Despite this small gap, SELA is better at distinguishing boundary samples and multiple defect classes.
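The explanation above credits ECA's F1 advantage to its local cross-channel interaction. For readers unfamiliar with ECA-Net [41], the NumPy sketch below shows that structure: global average pooling per channel, a 1D convolution across neighboring channels with the adaptive odd kernel size k = |(log2(C) + b) / γ|_odd (γ = 2, b = 1 in ECA-Net), and a sigmoid gate that rescales each channel. It illustrates ECA only, not SELA, whose internals are not described in this section; the kernel weights passed in are placeholders rather than trained values, and the function names are hypothetical.

```python
import math
import numpy as np

def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive odd kernel size used by ECA-Net."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1

def eca_forward(x, weights):
    """ECA-style channel attention on a feature map x of shape (C, H, W).

    weights: 1D kernel of odd length k shared by all channels
    (placeholder values in this sketch, learned in a real network).
    """
    c = x.shape[0]
    k = len(weights)
    pooled = x.mean(axis=(1, 2))                  # global average pooling -> (C,)
    padded = np.pad(pooled, k // 2)               # zero padding keeps C outputs
    # 1D cross-correlation over the channel axis: each channel sees only k neighbors.
    mixed = np.array([padded[i:i + k] @ weights for i in range(c)])
    gate = 1.0 / (1.0 + np.exp(-mixed))           # sigmoid -> per-channel weight in (0, 1)
    return x * gate[:, None, None], gate
```

Because each gate depends only on k neighboring channels, a response confined to one channel changes the gates of that channel and its immediate neighbors while leaving the rest at the neutral value 0.5. This locality is what distinguishes ECA from the fully connected channel mixing used in squeeze-and-excitation-style blocks.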

For feature extraction, this paper adopts the SF network, which offers stronger feature extraction and better handling of multi-scale targets. To verify its effectiveness, the SF network was replaced with different feature extraction networks, and each combination was evaluated separately. The replacements were representative models from the ResNet, VGG, EfficientNet, and Inception families; a combined ResNet50+FPN structure was also added to make the comparison more convincing. The ablation results are shown in Table 4 and plotted as a bar chart in Figure 10.

Table 4.

Ablation studies of SFN.

Figure 10.

Ablation experiment results bar graph.

As Table 4 shows, although the ResNet50+FPN combination improves on the other backbone networks, its computational complexity is higher, so it consumes considerably more computing resources. The SF network used in this paper not only has lower computational complexity than ResNet50+FPN but also outperforms the other feature extraction networks on every metric, giving better tablet defect detection results. Compared with the best result among the other networks, AP, F1, AUROC, and PRO are higher by 2.28%, 2.34%, 2.10%, and 2.33%, respectively, which further demonstrates the advantages of the proposed algorithm for tablet defect detection.

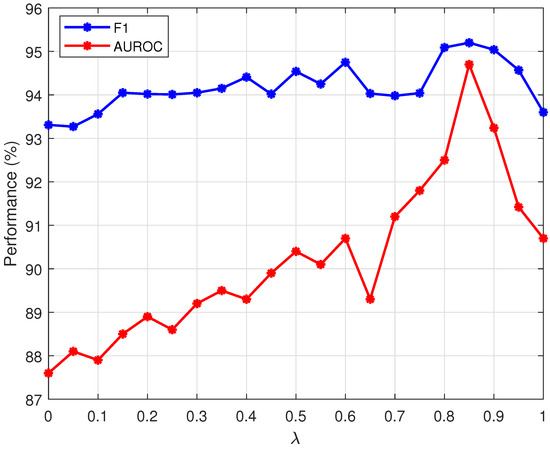

The anomaly score in this paper combines the Euclidean distance and the cosine distance in a weighted sum, which suits the tablet defect detection task better than either measure alone. In the anomaly score formula, the weights of the anomaly maps at different scales are learned during network training, whereas the weighting between the Euclidean and cosine distances is determined by grid search. Table 5 lists the results with the Euclidean weight set to 0 (cosine measure only), 1 (Euclidean measure only), and 0.85 (the optimum found by grid search); Figure 11 plots how AUROC and F1 change as this weight varies, illustrating the grid search. The best value obtained in this experiment is 0.85.
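The weighted anomaly score and the grid search described above can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: input and reconstruction features are given per scale as (C, H, W) arrays that already share one H×W grid (coarser maps would be upsampled first); the per-pixel score at each scale is alpha · d_Euc + (1 − alpha) · d_cos, with alpha the Euclidean weight (alpha = 1 is the pure Euclidean measure and alpha = 0 the pure cosine measure, matching the endpoints in Table 5); the scale weights are passed in rather than learned; no normalization between the two distances is applied; and the image-level score is taken as the maximum of the anomaly map. All names (`alpha`, `scale_weights`, the helper functions) are hypothetical.

```python
import numpy as np

def pixel_distances(f_in, f_rec, eps=1e-8):
    """Per-pixel Euclidean and cosine distances between two (C, H, W) feature maps."""
    diff = f_in - f_rec
    d_euc = np.sqrt((diff ** 2).sum(axis=0))                        # (H, W)
    dot = (f_in * f_rec).sum(axis=0)
    norms = np.linalg.norm(f_in, axis=0) * np.linalg.norm(f_rec, axis=0)
    d_cos = 1.0 - dot / (norms + eps)                               # (H, W), in [0, 2]
    return d_euc, d_cos

def anomaly_map(feats_in, feats_rec, scale_weights, alpha):
    """Hypothetical form: sum over scales of w_s * (alpha * d_euc + (1 - alpha) * d_cos)."""
    total = None
    for f_in, f_rec, w in zip(feats_in, feats_rec, scale_weights):
        d_euc, d_cos = pixel_distances(f_in, f_rec)
        m = w * (alpha * d_euc + (1.0 - alpha) * d_cos)
        total = m if total is None else total + m
    return total

def grid_search_alpha(samples, labels, scale_weights, metric, grid=None):
    """Pick the alpha that maximizes metric(image_scores, labels).

    samples: list of (feats_in, feats_rec) pairs, each a list of per-scale maps.
    metric: any callable (scores, labels) -> float, e.g. an AUROC function.
    Image score = max of the anomaly map (an assumption of this sketch).
    """
    grid = np.round(np.arange(0.0, 1.0001, 0.05), 2) if grid is None else grid
    labels = np.asarray(labels)
    best_alpha, best_value = None, -np.inf
    for alpha in grid:
        scores = np.array([anomaly_map(fi, fr, scale_weights, alpha).max()
                           for fi, fr in samples])
        value = metric(scores, labels)
        if value > best_value:                    # strict: keeps the first best alpha
            best_alpha, best_value = float(alpha), value
    return best_alpha, best_value
```

In a full pipeline the metric would be evaluated on held-out data, and a second metric such as F1 could be tracked alongside AUROC to produce a trend plot like Figure 11.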

Table 5.

Ablation studies of anomaly score.

Figure 11.

Line graph of AUROC and F1 as the distance weight changes.

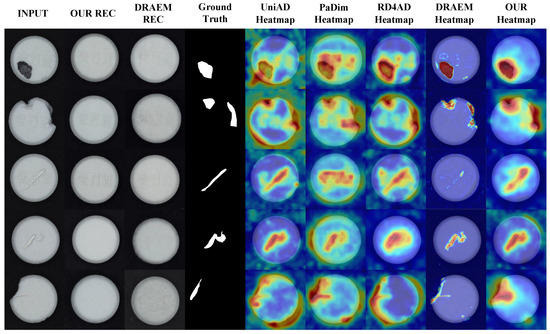

4.4.4. Physical Verification

To verify the effectiveness of the algorithm in real production scenarios, a complete data acquisition system was built from an MV-CS050-10UC camera, various LED light sources, and an XCY-ICS400-V2 line-scan test rig. This system was used to construct a comprehensive tablet defect detection dataset containing normal, defective, contaminated, and scratched tablets. The dataset follows the MVTec AD format and contains 1000 images of normal tablets and more than 1500 images of defective tablets. On this dataset, the proposed algorithm is compared with four algorithms (UniAD, PaDiM, RD4AD, and DRAEM) to verify its performance on tablet defect detection. The quantitative results are shown in Table 6, and the visualization results are shown in Figure 12.
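The MVTec AD format mentioned above fixes a directory convention: for each category, `train/good/` holds defect-free training images, `test/<defect_type>/` holds test images (with `good` for normal ones), and `ground_truth/<defect_type>/` holds binary masks named `<image stem>_mask.png`. The sketch below indexes such a folder into (image, label, defect type, mask) records. The category name `tablet` and the defect folder name `scratch` used in the usage note are hypothetical; the section does not give the dataset's actual folder names.

```python
from pathlib import Path
from typing import NamedTuple, Optional

class Sample(NamedTuple):
    image: Path
    label: int              # 0 = normal, 1 = defective
    defect_type: str        # folder name, "good" for normal images
    mask: Optional[Path]    # None for normal images or when no mask file exists

def index_split(root, category, split):
    """List the samples of one split ('train' or 'test') of an MVTec-AD-style category."""
    base = Path(root) / category
    samples = []
    for defect_dir in sorted(p for p in (base / split).iterdir() if p.is_dir()):
        defect_type = defect_dir.name
        for img in sorted(defect_dir.glob("*.png")):
            if defect_type == "good":
                samples.append(Sample(img, 0, defect_type, None))
            else:
                # MVTec AD pairs test/<type>/000.png with ground_truth/<type>/000_mask.png.
                mask = base / "ground_truth" / defect_type / f"{img.stem}_mask.png"
                samples.append(Sample(img, 1, defect_type, mask if mask.exists() else None))
    return samples
```

For example, `index_split("data", "tablet", "test")` would return normal test images with label 0 followed by defective ones with label 1 and their mask paths, which is the form most unsupervised anomaly detection training and evaluation loops expect.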

Table 6.

Quantitative comparisons on physical verification dataset.

Figure 12.

Qualitative illustration on physical verification dataset.

As shown in Table 6, on the physical verification dataset the proposed algorithm still outperforms the other four algorithms on every metric; compared with the best competing results, AP, AUROC, F1, and PRO improve by 1.54%, 3.26%, 5.60%, and 2.78%, respectively. Figure 12 further shows that, when faced with interference such as complex tablet textures and diverse defect shapes, DRAEM misses some defects, represents anomalous regions too coarsely, and is less robust overall. UniAD and the other methods show, to varying degrees, insufficient heat-map contrast between background and defects and are prone to misjudgment in complex defect scenes. In contrast, the heat maps of the proposed method have a clean background and responses concentrated on the anomalous regions with clear boundaries, giving better anomaly detection.

5. Conclusions

To address diverse defect types, scarce defect samples, the poor reconstruction quality of reconstruction-based unsupervised methods, and the inaccurate localization of anomalous regions in tablet production, this paper proposes DTDD, an unsupervised tablet defect detection method based on a diffusion model. We propose an Assisted Reconstruction (AR) network and Semantic-Enhanced Lightweight Attention (SELA) to help the diffusion model achieve better reconstruction, and we use the Scale Fusion (SF) network in the feature matching stage to improve matching accuracy. Finally, this paper designs an anomaly score and visualization heat maps tailored to tablet defect detection to evaluate and display the detection results comprehensively. Extensive experiments on tablet defect datasets show that the proposed algorithm outperforms advanced methods in the same field, with substantial improvements in both localization and detection performance. In future work, we will train the model on more data and extend it to more types of pharmaceutical products so that it can play a greater role in pharmaceutical inspection.

Author Contributions

Conceptualization, M.Z., W.L. and L.H.; methodology, W.L.; software, M.Z.; validation, M.Z. and D.W.; formal analysis, M.Z.; data curation, M.Z.; writing—original draft preparation, M.Z.; writing—review and editing, W.L. and L.H.; visualization, W.L.; supervision, W.L.; project administration, W.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Shaanxi Qinchuangyuan “Scientist + Engineer” Team Building (2023KXJ-118) and the NSFC (62376147).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Dataset available on request from the authors.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Jose, J.; Cox, A.R.; Bate, A. Introduction to Drug Safety and Pharmacovigilance. In Principles and Practice of Pharmacovigilance and Drug Safety; Springer: Berlin/Heidelberg, Germany, 2024; pp. 3–30.

- Mishra, H.P.; Gupta, R. Leveraging Generative AI for Drug Safety and Pharmacovigilance. Curr. Rev. Clin. Exp. Pharmacol. 2025, 20, 89–97.

- Trifirò, G.; Crisafulli, S. A new era of pharmacovigilance: Future challenges and opportunities. Front. Drug Saf. Regul. 2022, 2, 866898.

- Zhou, J.; Huang, J.; Liu, J.; Liu, J. Improved U2Net-Based Surface Defect Detection Method for Blister Tablets. Algorithms 2024, 17, 429.

- Chen, Y.; Ding, Y.; Zhao, F.; Zhang, E.; Wu, Z.; Shao, L. Surface defect detection methods for industrial products: A review. Appl. Sci. 2021, 11, 7657.

- Bihan, K.; Lebrun-Vignes, B.; Funck-Brentano, C.; Salem, J.E. Uses of pharmacovigilance databases: An overview. Therapies 2020, 75, 591–598.

- Sharma, L.; Prakash, A.; Medhi, B. Ensuring medication and patient safety for better quality healthcare. Indian J. Pharmacol. 2024, 56, 375–378.

- Al-Worafi, Y.M. Quality indicators for medications safety. In Drug safety in developing countries; Elsevier: Amsterdam, The Netherlands, 2020; pp. 229–242.

- Ma, X.; Kittikunakorn, N.; Sorman, B.; Xi, H.; Chen, A.; Marsh, M.; Mongeau, A.; Piché, N.; Williams, R.O., III; Skomski, D. Application of deep learning convolutional neural networks for internal tablet defect detection: High accuracy, throughput, and adaptability. J. Pharm. Sci. 2020, 109, 1547–1557.

- Ma, X.; Kittikunakorn, N.; Sorman, B.; Xi, H.; Chen, A.; Marsh, M.; Mongeau, A.; Piché, N.; Williams, R., III; Skomski, D. Deep learning convolutional neural networks for pharmaceutical tablet defect detection. Microsc. Microanal. 2020, 26, 1606–1609.

- Quan, H.T.; Huy, D.D.; Hoan, N.T.; Duc, N.T. Deep learning-based automatic detection of defective tablets in pharmaceutical manufacturing. In Proceedings of the 8th International Conference on the Development of Biomedical Engineering in Vietnam: Proceedings of BME 8, 2020, Vietnam: Healthcare Technology for Smart City in Low-and Middle-Income Countries; Springer: Berlin/Heidelberg, Germany, 2022; pp. 789–801.

- Ettalibi, A.; Elouadi, A.; Mansour, A. AI and computer vision-based real-time quality control: A review of industrial applications. Procedia Comput. Sci. 2024, 231, 212–220.

- Kwon, H.J.; Kim, H.G.; Lee, S.H. Pill detection model for medicine inspection based on deep learning. Chemosensors 2021, 10, 4.

- LeCun, Y.; Jackel, L.; Bottou, L.; Brunot, A.; Cortes, C.; Denker, J.; Drucker, H.; Guyon, I.; Muller, U.; Sackinger, E.; et al. Comparison of learning algorithms for handwritten digit recognition. In Proceedings of the International Conference on Artificial Neural Networks, Perth, Australia, 27 November–1 December 1995; Volume 60, pp. 53–60.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE Computer Society: Washington, DC, USA, 2016; pp. 779–788.

- Mac, T.T. Pill Defect Detection Using an Improved Convolutional Neural Network. In Proceedings of the International Conference on Mechatronics and Control Engineering, Virtual, 26–28 July 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 55–60.

- Xue, Q.; Jun, L.; Qiu, T.; Xiaoyu, Q. Real-time detection method of pill surface defects based on YOLOV5. Inf. Technol. Netw. Secur. Jishu Wangluo Anquan 2021, 40.

- Kim, S.; Jo, Y.; Cho, J.; Song, J.; Lee, Y.; Lee, M. Spatially variant convolutional autoencoder based on patch division for pill defect detection. IEEE Access 2020, 8, 216781–216792.

- Zavrtanik, V.; Kristan, M.; Skočaj, D. DRAEM: A discriminatively trained reconstruction embedding for surface anomaly detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 8330–8339.

- Roth, K.; Pemula, L.; Zepeda, J.; Schölkopf, B.; Brox, T.; Gehler, P. Towards total recall in industrial anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 14318–14328.

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10684–10695.

- Park, H.; Noh, J.; Ham, B. Learning memory-guided normality for anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 14372–14381.

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507.

- Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. Stat 2014, 1050, 1.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

- Sohl-Dickstein, J.; Weiss, E.; Maheswaranathan, N.; Ganguli, S. Deep unsupervised learning using nonequilibrium thermodynamics. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 2256–2265.

- Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

- Wang, J.; Zhu, Z. A Surface Defect Detection Method Based on Image Guided Conditional Diffusion Model. In Proceedings of the 2024 43rd Chinese Control Conference (CCC), Kunming, China, 28–31 July 2024; pp. 8541–8546.

- Yao, H.; Liu, M.; Yin, Z.; Yan, Z.; Hong, X.; Zuo, W. GLAD: Towards better reconstruction with global and local adaptive diffusion models for unsupervised anomaly detection. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 1–17.

- He, H.; Zhang, J.; Chen, H.; Chen, X.; Li, Z.; Chen, X.; Wang, Y.; Wang, C.; Xie, L. A diffusion-based framework for multi-class anomaly detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 26–27 February 2024; Volume 38, pp. 8472–8480.

- Zhang, X.; Xu, M.; Zhou, X. RealNet: A feature selection network with realistic synthetic anomaly for anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 16699–16708.

- He, Z.; Li, G.; Liu, Z. RGB-D Rail Surface Defect Inspection Driven by Conditional Diffusion Architecture and Frequency Knowledge. IEEE Sens. J. 2025, 25, 18334–18343.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 3–19.

- Chen, J.; Mai, H.; Luo, L.; Chen, X.; Wu, K. Effective feature fusion network in BIFPN for small object detection. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 699–703.

- Bergmann, P.; Fauser, M.; Sattlegger, D.; Steger, C. MVTec AD: A comprehensive real-world dataset for unsupervised anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 9592–9600.

- You, Z.; Cui, L.; Shen, Y.; Yang, K.; Lu, X.; Zheng, Y.; Le, X. A unified model for multi-class anomaly detection. Adv. Neural Inf. Process. Syst. 2022, 35, 4571–4584.

- Defard, T.; Setkov, A.; Loesch, A.; Audigier, R. PaDiM: A patch distribution modeling framework for anomaly detection and localization. In Proceedings of the International Conference on Pattern Recognition, Xiamen, China, 24–26 September 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 475–489.

- Deng, H.; Li, X. Anomaly detection via reverse distillation from one-class embedding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 9737–9746.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11534–11542.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).