A Simulation Framework for Zoom-Aided Coverage Path Planning with UAV-Mounted PTZ Cameras

Abstract

1. Introduction

1.1. Motivation

1.2. Literature Review

1.3. Main Contributions

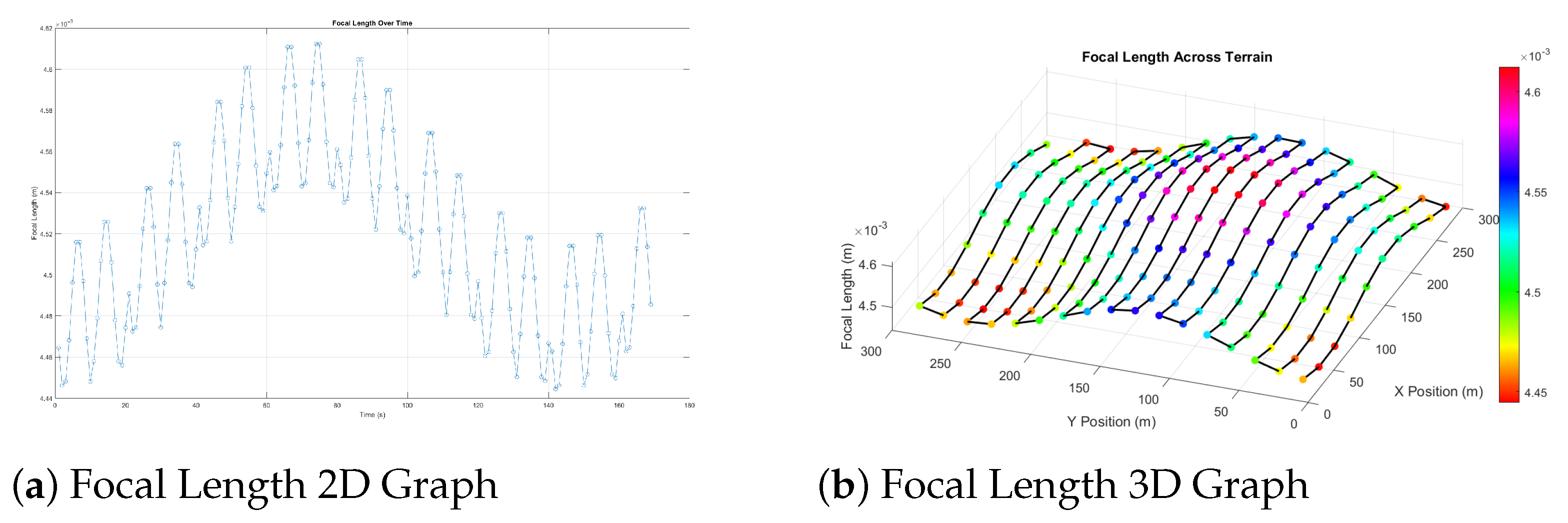

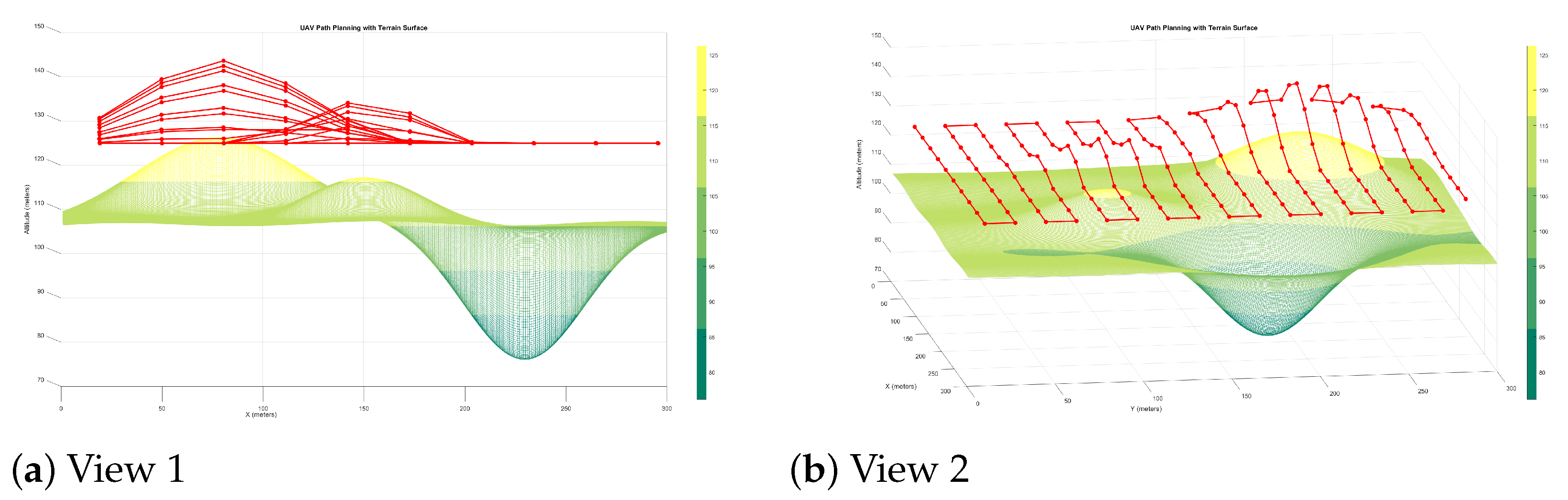

- Zoom-integrated CPP algorithm: A novel method that dynamically adjusts the focal length according to the terrain to maintain constant ground resolution without altitude changes.

- Energy-efficient path planning: Demonstration that zoom-based compensation substantially reduces flight time, with a smaller reduction in path length, compared to altitude-only approaches.

- Hybrid strategy for constrained zoom systems: An adaptable model that integrates altitude changes only when zoom limits are exceeded, enabling the use of lower-end PTZ cameras.

- Comprehensive evaluation: Simulations on synthetic terrains of varying complexity demonstrate robustness, resolution consistency, and operational gains over existing CPP strategies.

2. Materials and Methods

2.1. Boustrophedon Path: Optimised Sweep Path for Camera Coverage

- Straight sweep segments: These linear trajectories represent the portions of the path during which the vehicle acquires imagery. The primary objective during these segments is to achieve comprehensive coverage with a predefined image overlap [12].

- Turning manoeuvres: These manoeuvres occur at the transition points between consecutive sweep lines. The design and execution of these turns have a direct impact on overall mission duration and energy consumption [12].

- Camera specifications: The horizontal field of view (FOV), together with the distance to the ground, determines the ground width captured in each image; the pixel resolution determines how finely that width is sampled.

- Ground sample distance (GSD): Defined as the ground distance represented by a single image pixel (typically in meters per pixel), the GSD depends on the sensor size and pixel resolution, the focal length, and the distance to the ground.

- Image side lap: This parameter defines the percentage of overlap between images on adjacent sweep lines, i.e., across the direction of flight. Sufficient side lap is essential to ensure continuous coverage and to support subsequent image processing or stitching.

- Ideal path adherence: It is presumed that the vehicle follows the planned trajectory precisely, thereby streamlining the modelling process.

- Uniform airspeed: The vehicle is assumed to maintain a constant velocity throughout the mission, typically governed by an autopilot system.
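The sweep geometry implied by these parameters can be sketched in a few lines. The Python example below is a minimal illustration, not the paper's implementation. It assumes a nadir-pointing pinhole camera over flat ground, a rectangular area swept parallel to its y-axis, side lap given as a fraction, turns modelled as straight transitions, and the constant airspeed stated above; all function names are hypothetical.

```python
import math


def footprint_width(distance_m: float, hfov_deg: float) -> float:
    """Ground width imaged across the horizontal FOV (nadir view, flat ground)."""
    return 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)


def ground_sample_distance(distance_m: float, hfov_deg: float, width_px: int) -> float:
    """GSD in meters per pixel: footprint width divided by the pixel count across it."""
    return footprint_width(distance_m, hfov_deg) / width_px


def sweep_spacing(distance_m: float, hfov_deg: float, side_lap: float) -> float:
    """Distance between adjacent sweep lines for a side-lap fraction in [0, 1)."""
    if not 0.0 <= side_lap < 1.0:
        raise ValueError("side_lap must be in [0, 1)")
    return footprint_width(distance_m, hfov_deg) * (1.0 - side_lap)


def boustrophedon_waypoints(x_len: float, y_len: float,
                            spacing: float) -> list[tuple[float, float]]:
    """Back-and-forth sweep lines parallel to y, the first half a spacing from x = 0.

    The last line may lie slightly beyond x_len so that the far boundary is covered.
    """
    n_lines = max(1, math.ceil(x_len / spacing))
    waypoints: list[tuple[float, float]] = []
    for i in range(n_lines):
        x = spacing / 2.0 + i * spacing
        # Reverse the sweep direction on every other line.
        ends = [(x, 0.0), (x, y_len)] if i % 2 == 0 else [(x, y_len), (x, 0.0)]
        waypoints.extend(ends)
    return waypoints


def path_length(waypoints: list[tuple[float, float]]) -> float:
    """Sum of straight segments: sweep legs plus straight-line turn transitions."""
    return sum(math.dist(a, b) for a, b in zip(waypoints, waypoints[1:]))


def mission_time(length_m: float, airspeed_mps: float) -> float:
    """Flight time under the uniform-airspeed assumption (turn dynamics ignored)."""
    return length_m / airspeed_mps
```

For example, at a 100 m ground distance with a 90° horizontal FOV and 30% side lap, the footprint is 200 m wide and the sweep spacing is 140 m, so a 400 m × 300 m area needs three sweep lines and a path of 3 × 300 + 2 × 140 = 1180 m. Real turning manoeuvres are longer than these straight transitions.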

2.2. Boustrophedon Path: Optimised Sweep Path for Camera Coverage with Zoom Implementation

- To achieve the desired ground resolution, the system first attempts to adjust the camera focal length (f) within its mechanical limits.

- Focal length adjustment and limits: The camera zoom system works by adjusting f, which is constrained by the mechanical limits of the camera: f must stay within the range $f_{\min} < f < f_{\max}$. If f remains within this range, the UAV maintains its current altitude and achieves the target resolution through zoom adjustments alone. If f falls outside these limits, altitude adjustment becomes necessary.

- Altitude adjustment: If the camera cannot achieve the required resolution solely by changing f, the UAV must adjust its altitude according to the GSD, the real-world distance represented by a single pixel in the captured image. The required UAV altitude (H) is derived from the field-of-view equation (as shown in Line 4 of Algorithm 1) plus the terrain elevation. This ensures that the UAV changes altitude only when the zoom limits are exceeded.
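For a nadir-pointing pinhole camera, the quantities in the list above are linked by the standard field-of-view relations below. This is a sketch of the geometry in our own notation, not a reproduction of the paper's equations: $S$ is the sensor width, $N$ the number of pixels across that width, $d$ the UAV-to-ground distance, $h$ the terrain elevation and $H$ the UAV altitude.

```latex
\begin{aligned}
\mathrm{FOV} &= 2\arctan\!\left(\frac{S}{2f}\right), \qquad
W = 2d\tan\!\left(\frac{\mathrm{FOV}}{2}\right) = \frac{S\,d}{f}, \qquad
\mathrm{GSD} = \frac{W}{N} = \frac{S\,d}{f\,N},\\[4pt]
f_{\mathrm{req}} &= \frac{S\,d}{\mathrm{GSD}\,N}
\quad \text{(zoom only, altitude held)},\\[4pt]
f &= \min\!\bigl(\max(f_{\mathrm{req}},\, f_{\min}),\, f_{\max}\bigr), \qquad
d_{\mathrm{req}} = \frac{\mathrm{GSD}\,f\,N}{S}, \qquad
H = d_{\mathrm{req}} + h
\quad \text{(zoom limit exceeded)}.
\end{aligned}
```

Because $d_{\mathrm{req}}$ is proportional to $f$, a fixed target GSD can be held by zoom alone only while $d$ stays between $\mathrm{GSD}\,f_{\min}N/S$ and $\mathrm{GSD}\,f_{\max}N/S$, a band whose ratio of upper to lower bound equals the optical zoom ratio $f_{\max}/f_{\min}$.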

Algorithm 1. Dynamic altitude adjustment for valid focal length (annotated steps: initial UAV altitude; distance to ground; focal length is within limits).
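The zoom-first logic described above can be sketched as follows. This Python function is a hypothetical reconstruction from the prose, not the authors' Algorithm 1. It assumes the pinhole relation GSD = S·d/(f·N), SI units throughout (focal length and sensor width in meters), and treats the focal-length limits as inclusive, which gives the same result at the boundary because the required distance then equals the current one. The class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ZoomCamera:
    sensor_width_m: float  # S: sensor width
    width_px: int          # N: pixels across the sensor width
    f_min_m: float         # minimum focal length
    f_max_m: float         # maximum focal length


@dataclass(frozen=True)
class Setpoint:
    altitude_m: float       # commanded UAV altitude H
    focal_length_m: float   # commanded focal length f
    altitude_changed: bool  # True when zoom alone could not reach the target GSD


def zoom_first_setpoint(cam: ZoomCamera, target_gsd_m: float,
                        altitude_m: float, terrain_elev_m: float) -> Setpoint:
    """Hold altitude and zoom if possible; otherwise saturate f and move vertically."""
    d = altitude_m - terrain_elev_m  # distance to ground
    if d <= 0.0:
        raise ValueError("UAV must be above the terrain")
    # Focal length that yields the target GSD at the current distance.
    f_req = cam.sensor_width_m * d / (target_gsd_m * cam.width_px)
    if cam.f_min_m <= f_req <= cam.f_max_m:
        # Focal length is within limits: zoom only, altitude unchanged.
        return Setpoint(altitude_m, f_req, False)
    # Zoom limit exceeded: clamp f and solve the GSD relation for the distance.
    f = min(max(f_req, cam.f_min_m), cam.f_max_m)
    d_req = target_gsd_m * f * cam.width_px / cam.sensor_width_m
    return Setpoint(terrain_elev_m + d_req, f, True)
```

With a hypothetical 6 mm sensor, 1920 pixels, a 4–120 mm zoom and a 5 cm/px target, a ground distance of 100 m is handled by zoom alone (f = 6.25 mm), whereas flying 2 m above the terrain forces f to 4 mm and a climb to 64 m above ground.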

2.3. UAV Velocity Model

2.4. Simulation Data

2.4.1. Camera Data

2.4.2. PTZ Integration and Zoom Control

3. Results and Discussion

3.1. Boustrophedon CPP: Comparison Between Altitude Adjustment and Zoom Compensation for Uniform Camera Coverage

- Terrain Type 1—smooth elevation profile;

- Terrain Type 2—moderate undulations.
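The difference between the two strategies can be illustrated on a one-dimensional terrain profile. In this hypothetical Python sketch, which uses neither the paper's terrains nor its simulation code, both strategies hold the same GSD: the altitude-based one keeps f fixed and follows the terrain at a constant distance, while the zoom-based one keeps the altitude fixed and scales f in proportion to the distance to ground, since GSD = S·d/(f·N). Zoom limits are ignored here.

```python
def altitude_strategy(terrain_m: list[float], ref_distance_m: float) -> list[float]:
    """Fixed focal length: fly at a constant distance above each terrain sample."""
    return [h + ref_distance_m for h in terrain_m]


def zoom_strategy(terrain_m: list[float], base_altitude_m: float,
                  ref_distance_m: float, f_ref_m: float) -> list[float]:
    """Fixed altitude: scale focal length with distance so that f/d (hence GSD) is constant."""
    return [f_ref_m * (base_altitude_m - h) / ref_distance_m for h in terrain_m]


def vertical_travel(altitudes_m: list[float]) -> float:
    """Total climb plus descent between consecutive samples."""
    return sum(abs(b - a) for a, b in zip(altitudes_m, altitudes_m[1:]))


# Hypothetical elevation samples (m) along one sweep line.
terrain = [0.0, 10.0, 30.0, 10.0, 0.0]
alt = altitude_strategy(terrain, ref_distance_m=100.0)
focal = zoom_strategy(terrain, base_altitude_m=100.0, ref_distance_m=100.0, f_ref_m=0.010)
```

On this profile the altitude-based trajectory climbs and descends 60 m in total, while the zoom-based one stays at 100 m and varies f between 7 mm and 10 mm.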

3.2. Performance Evaluation of Zoom-Based vs. Altitude-Based CPP

3.3. Extended Terrain Analysis: Mixed Elevation Terrain with Zoom-Based CPP

3.4. Evaluating Model Performance with Reduced Maximum Focal Length

3.5. Practical Considerations and Limitations

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Symbol/Abbreviation | Definition |
|---|---|
| CPP | coverage path planning |
| d | UAV-to-ground vertical distance |
| f | focal length |
| $f_{\min}$ | minimum focal length |
| $f_{\max}$ | maximum focal length |
| FOV | field of view |
| GSD | ground sampling distance |
| | UAV base altitude |
| | terrain elevation |
| | improved back and forth |
| PTZ | pan–tilt–zoom |
| | camera vertical resolution in pixels |
| RL | reinforcement learning |
| ROI | region of interest |
| SAR | search and rescue missions |
| UAV | unmanned aerial vehicle |
| | camera sensor width (m) |
| Z | UAV altitude |

References

1. Fevgas, G.; Lagkas, T.; Argyriou, V.; Sarigiannidis, P. Coverage Path Planning Methods Focusing on Energy Efficient and Cooperative Strategies for Unmanned Aerial Vehicles. Sensors 2022, 22, 1235.

2. Ai, B.; Jia, M.; Xu, H.; Xu, J.; Wen, Z.; Li, B.; Zhang, D. Coverage path planning for maritime search and rescue using reinforcement learning. Ocean Eng. 2021, 241, 110098.

3. Zhang, X.; Wang, J.; Wang, S.; Wang, M.; Wang, T.; Feng, Z.; Zhu, S.; Zheng, E. FAEM: Fast Autonomous Exploration for UAV in Large-Scale Unknown Environments Using LiDAR-Based Mapping. Drones 2025, 9, 423.

4. Yue, X.; Zhang, Y.; He, M. LiDAR-based SLAM for robotic mapping: State of the art and new frontiers. arXiv 2023, arXiv:2311.00276.

5. Kazemdehbashi, S.; Liu, Y. An exact coverage path planning algorithm for UAV-based search and rescue operations. arXiv 2024, arXiv:2405.11399.

6. Alpdemir, M.N.; Sezgin, M. A reinforcement learning (RL)-based hybrid method for ground penetrating radar (GPR)-driven buried object detection. Neural Comput. Appl. 2024, 36, 8199–8219.

7. Mu, X.; Gao, W.; Li, X.; Li, G. Coverage Path Planning for UAV Based on Improved Back-and-Forth Mode. IEEE Access 2023, 11, 114840–114854.

8. Heydari, J.; Saha, O.; Ganapathy, V. Reinforcement Learning-Based Coverage Path Planning with Implicit Cellular Decomposition. arXiv 2021, arXiv:2110.09018v1.

9. Fontenla-Carrera, G.; Aldao, E.; Veiga, F.; González-Jorge, H. A Benchmarking of Commercial Small Fixed-Wing Electric UAVs and RGB Cameras for Photogrammetry Monitoring in Intertidal Multi-Regions. Drones 2023, 7, 642.

10. Yang, Z.; Gao, S.; Chen, Q.; Wu, B.; Xu, Q.; Gong, L.; Yang, L. Airborne Constant Ground Resolution Imaging Optical System Design. Photonics 2025, 12, 390.

11. Vargas, M.; Vivas, C.; Alamo, T. Optimal Positioning Strategy for Multi-Camera Zooming Drones. IEEE/CAA J. Autom. Sin. 2024, 11, 1802–1818.

12. Coombes, M.; Chen, W.H.; Liu, C. Boustrophedon coverage path planning for UAV aerial surveys in wind. In Proceedings of the 2017 International Conference on Unmanned Aircraft Systems, ICUAS 2017, Miami, FL, USA, 13–16 June 2017; pp. 1563–1571.

13. Eslami, M.A.; Rzasa, J.R.; Milner, S.D.; Davis, C.C. Dual Dynamic PTZ Tracking Using Cooperating Cameras. Intell. Control Autom. 2015, 6, 45–54.

14. Li, T.; Zhang, B.; Xiao, W.; Cheng, X.; Li, Z.; Zhao, J. UAV-Based photogrammetry and LiDAR for the characterization of ice morphology evolution. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 4188–4199.

15. Chasie, M.; Theophilus, P.K.; Mishra, A.K.; Ghosh, S.; Das, S.K. Application of GNSS-supported static terrestrial lidar in mapping landslide processes in the Himalaya. Curr. Sci. 2022, 123, 844.

16. Wang, D.; Shu, H. Accuracy Analysis of Three-Dimensional Modeling of a Multi-Level UAV without Control Points. Buildings 2022, 12, 592.

17. Duan, T.; Hu, P.C.; Sang, L.Z. Research on route planning of aerial photography of UAV in highway greening monitoring. J. Phys. Conf. Ser. 2019, 1187, 052082.

18. Rios, N.C.; Mondal, S.; Tsourdos, A. Gimbal Control of an Airborne pan-tilt-zoom Camera for Visual Search. In Proceedings of the AIAA Science and Technology Forum and Exposition, AIAA SciTech Forum 2025, Orlando, FL, USA, 6–10 January 2025.

19. U30T 30x Optical Zoom Object Tracking Gimbal Camera Data Sheet. Available online: https://www.viewprotech.com/upfile/2021/09/20210908202901_556.pdf (accessed on 18 August 2025).

| Parameter | Value |
|---|---|
| Resolution | 1920 × 1080 pixels |
| FOV (Horizontal) | 63.7° |
| Zoom Capability | 30× optical |
| Sensor Width | Assumed from resolution and FOV |
| Command response latency | <50 ms |
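The table entries can be turned into ground-coverage figures with a short calculation. Beyond what the table states, this Python sketch assumes that the 63.7° horizontal FOV is the wide-angle end, that the 30× optical zoom equals the ratio f_max/f_min, and that the lens behaves as an ideal rectilinear (pinhole) lens. Under these assumptions tan(FOV/2) = S/(2f) scales inversely with focal length, so the sensor width never has to be known explicitly.

```python
import math

HFOV_WIDE_DEG = 63.7  # table value, assumed to be the wide-angle end
ZOOM_RATIO = 30.0     # 30x optical, assumed equal to f_max / f_min
WIDTH_PX = 1920       # horizontal resolution from the table


def hfov_at_zoom(zoom: float) -> float:
    """Horizontal FOV (deg) at a zoom factor relative to the wide end; tan(FOV/2) ∝ 1/f."""
    half = math.atan(math.tan(math.radians(HFOV_WIDE_DEG) / 2.0) / zoom)
    return math.degrees(2.0 * half)


def gsd_at(distance_m: float, zoom: float) -> float:
    """GSD (m/pixel) across the image width for a nadir view at the given distance."""
    width_m = 2.0 * distance_m * math.tan(math.radians(hfov_at_zoom(zoom)) / 2.0)
    return width_m / WIDTH_PX


wide = gsd_at(100.0, 1.0)
tele = gsd_at(100.0, ZOOM_RATIO)
```

At 100 m this gives roughly 6.5 cm/px at the wide end and a GSD about 30 times finer at full zoom, where the horizontal FOV narrows to about 2.4°.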

| Implementation | Total Distance (m) | Total Flight Time (s) |
|---|---|---|
| Altitude-Based CPP [18] | 4976.66 | 228.09 |
| Zoom-Based CPP | 4945.92 | 169.00 |
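The relative gains can be read off the table directly. This small Python calculation uses only the two rows above; the mean speed is derived here as distance divided by time and is not a value reported in the table.

```python
RESULTS = {
    # (total distance in m, total flight time in s), copied from the table
    "Altitude-Based CPP": (4976.66, 228.09),
    "Zoom-Based CPP": (4945.92, 169.00),
}


def percent_reduction(baseline: float, value: float) -> float:
    """Reduction of value relative to baseline, in percent."""
    return 100.0 * (baseline - value) / baseline


base_dist, base_time = RESULTS["Altitude-Based CPP"]
zoom_dist, zoom_time = RESULTS["Zoom-Based CPP"]
dist_saving = percent_reduction(base_dist, zoom_dist)
time_saving = percent_reduction(base_time, zoom_time)
# Implied mean speed (distance / time), derived here rather than reported.
mean_speed = {name: d / t for name, (d, t) in RESULTS.items()}
```

This gives a flight-time reduction of about 25.9% against a path-length reduction of about 0.6%, with implied mean speeds of about 21.8 m/s and 29.3 m/s respectively.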

| Parameter | Value |
|---|---|
| Minimum UAV-ground distance | 17.39 m |
| Maximum UAV-ground distance | 521.80 m |
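These two distances appear consistent with the camera's zoom range. From GSD = S·d/(f·N), holding a fixed GSD by zoom alone requires d/f to stay constant, so the usable distance band scales exactly with f_max/f_min. Assuming (our reading, not stated in the table) that the values above are the limits of that band, their ratio should match the 30× optical zoom:

```python
D_MIN_M = 17.39    # minimum UAV-ground distance from the table
D_MAX_M = 521.80   # maximum UAV-ground distance from the table
ZOOM_RATIO = 30.0  # 30x optical zoom from the camera table

# At constant GSD, d is proportional to f, so d_max / d_min should equal f_max / f_min.
distance_ratio = D_MAX_M / D_MIN_M
relative_error = abs(distance_ratio - ZOOM_RATIO) / ZOOM_RATIO
```

The ratio is about 30.006, within 0.02% of the zoom ratio.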

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rios, N.C.; Mondal, S.; Tsourdos, A. A Simulation Framework for Zoom-Aided Coverage Path Planning with UAV-Mounted PTZ Cameras. Sensors 2025, 25, 5220. https://doi.org/10.3390/s25175220