1. Introduction

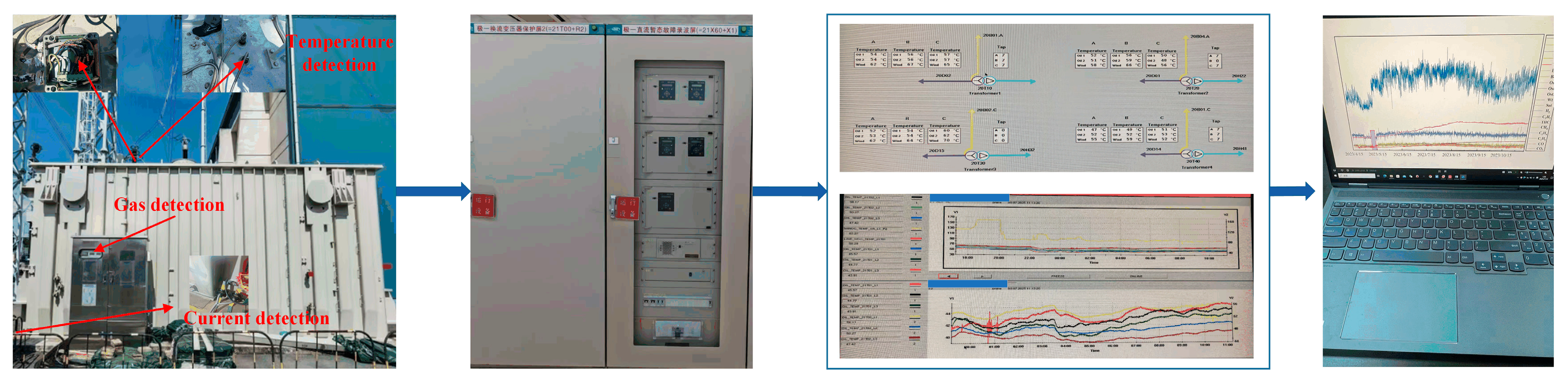

Converter transformers serve as critical components in Ultra-High Voltage Direct Current (UHVDC) transmission systems, enabling efficient long-distance power transmission and interconnection of asynchronous power grids [1]. With the widespread deployment of online monitoring systems in modern power infrastructure, these transformers generate massive volumes of multivariate time-series data encompassing electrical parameters, temperature measurements, and dissolved gas analysis (DGA) results [2]. The quality of this monitoring data directly influences the accuracy of equipment condition assessment, maintenance scheduling, and operational decision-making, making data reliability a paramount concern for power system operators and maintenance personnel [3].

Despite advances in sensor technology and data acquisition systems, outliers remain pervasive in converter transformer monitoring data due to factors including sensor malfunctions, communication network errors, electromagnetic interference, and extreme environmental conditions [4]. Physical factors such as sensor placement can also significantly influence signal quality and error patterns, as demonstrated by Kent et al., who used support vector machines to detect desk illuminance sensor blockage in closed-loop daylight harvesting systems [5]. Zhang et al. demonstrated that communication network problems alone cause 15–20% of transformer monitoring data to contain outliers or missing values [6].

These data quality issues have profound implications for condition monitoring, fault diagnosis, and predictive maintenance: outliers can distort trend analysis, leading to false alarms or missed warnings; interfere with fault diagnosis algorithms, resulting in misclassification of transformer health states; and degrade the performance of predictive maintenance models [7]. The complexity of converter transformer monitoring data, which involves multiple parameter categories, high dimensionality, and long time series, further exacerbates these challenges [8]. Traditional outlier detection methods face significant limitations when applied to such complex data: they typically adopt single-perspective analysis, rely on static thresholds that cannot adapt to changing operational conditions, and cannot capture intricate temporal and cross-parameter dependencies [9].

Existing outlier detection approaches can be broadly categorized into statistical, distance-based, clustering-based, and machine learning methods. Statistical methods such as the three-sigma rule and boxplot analysis assume that data follow specific distributions, typically Gaussian, rendering them ineffective for the non-Gaussian, multimodal distributions commonly observed in transformer monitoring data [10]. Zimek and Filzmoser comprehensively reviewed the theoretical foundations and practical limitations of statistical outlier detection, highlighting the breakdown of normality assumptions in high-dimensional spaces [11]. Recent studies provide quantitative evidence of these limitations. Esmaeili et al. [12] evaluated 17 outlier detection algorithms on time-series data, reporting that traditional statistical methods achieve accuracies of 65–82% with precision of 7–77%, whereas ML-based techniques achieve accuracies of 83–99% with precision of 83–98%. For k-NN-based approaches, the cost of naive neighbor search grows quadratically with the number of samples (and linearly with dimensionality), making real-time monitoring of long, high-dimensional transformer records challenging.

Distance-based methods including k-nearest neighbors (k-NN) and Local Outlier Factor (LOF) suffer from high computational complexity, scale poorly with data dimensionality, and are highly sensitive to parameter selection [13,14]. Variants such as Influenced Outlierness (INFLO) and Local Outlier Probability (LoOP) address some of these limitations, but the fundamental curse of dimensionality remains [15]. Clustering approaches like Density-Based Spatial Clustering of Applications with Noise (DBSCAN) and K-means require careful hyper-parameter tuning and struggle to handle multiple outlier patterns simultaneously, particularly when outliers form small clusters rather than isolated points [16]. Traditional machine learning methods such as Isolation Forest (iForest) and One-Class Support Vector Machine (OC-SVM), while more flexible than statistical approaches, employ single models that cannot comprehensively capture the diverse outlier patterns present in multivariate time series [17,18]. Industry standards provide guidance on setting such thresholds. IEC 60599:2022 [19] recommends using 90th-percentile values to establish normal operating ranges, while encouraging utilities to develop their own thresholds based on operational data. Duval and dePablo [20] demonstrated that conventional ratio methods fail to diagnose 15–33% of cases because the ratio combinations fall outside the defined codes, highlighting the need for more robust approaches.

Recent advances in deep learning have shown remarkable promise for time-series anomaly detection and have substantially reshaped the field. Long Short-Term Memory (LSTM)-based architectures pioneered by Malhotra et al. can effectively model temporal dependencies, and subsequent methods such as the LSTM–Variational Autoencoder (LSTM-VAE) combine recurrent networks with probabilistic frameworks to achieve high detection accuracy on multivariate sensor data [21,22]. Autoencoder variants have evolved significantly: unsupervised anomaly detection (USAD) employs adversarial training for robust reconstruction-based detection, achieving fast training while maintaining competitive performance [23]. OmniAnomaly introduces stochastic recurrent neural networks with planar normalizing flows to handle non-Gaussian distributions in multivariate time series [24]. Generative Adversarial Network (GAN)-based approaches have also emerged; MAD-GAN uses LSTM-RNN generators and discriminators to capture temporal correlations and introduces the DR-score, which combines discrimination and reconstruction [25]. Transformer architectures have been particularly impactful: Anomaly Transformer introduces an association discrepancy mechanism that models both prior association and series association to distinguish normal from abnormal patterns, outperforming 15 baseline methods on multiple benchmarks [26]. TranAD further advances this approach with adversarially trained transformers that increase F1-scores by up to 17% while reducing training time by up to 99% [27]. Graph Neural Network (GNN) approaches such as the Graph Deviation Network (GDN) explicitly model inter-sensor relationships, predicting sensor behavior from attention-weighted neighbor embeddings [28]. However, even these sophisticated approaches typically adopt a single-view perspective that may miss outliers visible only through specific data representations. The fundamental limitation shared by existing methods is that they do not examine multivariate time-series characteristics from multiple complementary viewpoints, leading to incomplete outlier detection [29]. Recent transformer-focused studies also quantify performance–complexity trade-offs. Chen et al. [30] reported average F1-scores exceeding 98% using transformer-based approaches. However, Xin et al. [31] noted that while deep ensemble methods achieve F1-scores above 80% for 4 min prediction windows, their computational requirements remain prohibitive for real-time deployment in many industrial settings.

Beyond computational complexity, these advanced methods face more fundamental challenges in industrial deployment. For example, Zhao et al. [32] developed MTAD-GAT, which uses graph attention networks to model inter-sensor relationships; it demonstrates superior performance on benchmark datasets but assumes labeled training data or data drawn predominantly from healthy operational states. Yue et al. [33] proposed TS2Vec for universal time-series representation through contrastive learning, which still presupposes that nominal operating patterns can be distinguished from anomalous behaviors during training. These assumptions are particularly problematic for converter transformer monitoring, where (1) equipment may operate with undetected latent defects from commissioning onward, (2) ground-truth labels for healthy operational states are unavailable, and (3) operational data inherently contain mixed conditions without clear boundaries between normal and abnormal behaviors. This gap between algorithmic assumptions and industrial reality motivates our unsupervised MVCOD framework, which operates directly on unlabeled, heterogeneous operational data without requiring a training phase or assumptions about baseline operational conditions.

The multi-view learning approach offers a promising solution to these limitations. Zhao and Fu’s seminal work on Dual-Regularized Multi-View Outlier Detection reported a 26% performance improvement by simultaneously detecting class outliers and attribute outliers [34]. More recent advances include Multi-view Outlier Detection via Graphs Denoising (MODGD), which constructs multiple graphs for each view and learns a consensus through ensemble denoising, avoiding restrictive clustering assumptions [35]. Li et al. proposed Latent Discriminant Subspace Representations (LDSR), which identifies three types of outliers simultaneously through global low-rank and view-specific residual representations [36]. These multi-view methods have consistently outperformed single-view methods, particularly in complex industrial monitoring scenarios where data naturally present multiple perspectives. Traditional multivariate time-series models such as Vector Autoregression (VAR) and state-space models, while powerful for forecasting and causal analysis, are not directly applicable to unsupervised anomaly detection, as they rely on different assumptions about data structure and label availability; our review therefore focuses on methods specifically designed for outlier detection in unlabeled data. Recent work presented at AAAI has further advanced multi-view anomaly detection. For instance, the IDIF (Intra-view Decoupling and Inter-view Fusion) framework [37] introduces modules for extracting features common to all views and fusing multi-view information. Additionally, partial multi-view outlier detection [38] and neighborhood-based multi-view anomaly detection [39] explore strategies for handling incomplete views and preserving local structure. While these approaches are effective in general multi-view scenarios, they primarily focus on visual data with geometric relationships between views. In contrast, our MVCOD framework specifically addresses the challenges of industrial time-series data, where views represent different temporal, statistical, and topological perspectives rather than spatial viewpoints.

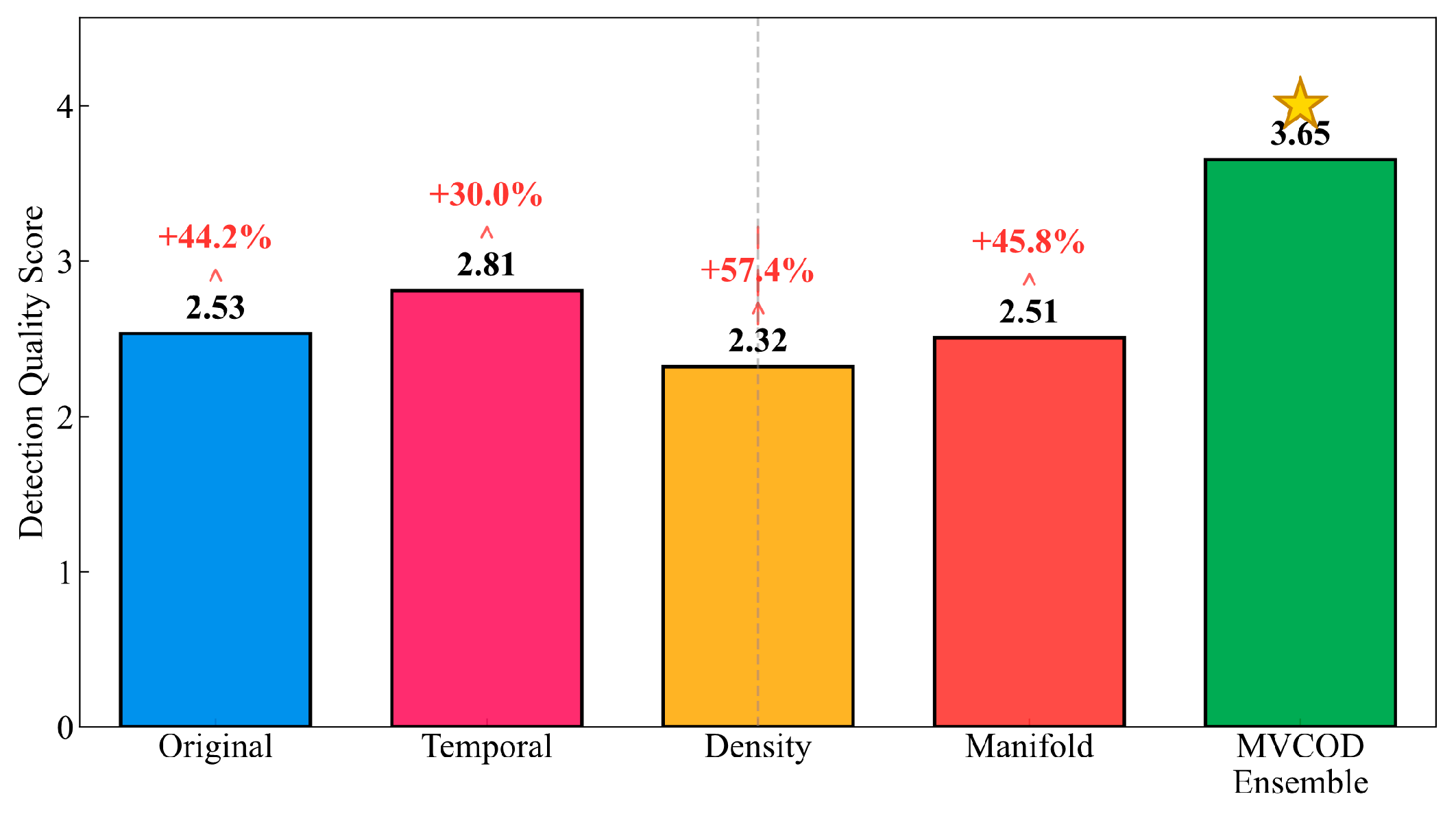

Motivated by these challenges and opportunities, this paper proposes a Multi-View Clustering-based Outlier Detection (MVCOD) framework that integrates multi-view data representation, multi-algorithm detection, and adaptive fusion to achieve robust and comprehensive outlier identification. The key insight is that different data views reveal different outlier patterns: raw measurements capture value deviations, temporal views identify dynamic anomalies, density views discover local outliers, and manifold views detect structural anomalies. By constructing four complementary data views and applying multiple detection algorithms to each view, the framework captures diverse outlier types that single-view methods miss. The main contributions of this work are as follows: (1) a systematic multi-view feature construction approach that generates four complementary representations tailored to the characteristics of transformer monitoring data; (2) an ensemble detection strategy integrating K-means, Hierarchical Density-Based Spatial Clustering of Applications with Noise (HDBSCAN), Ordering Points To Identify the Clustering Structure (OPTICS), and Isolation Forest to enhance detection robustness across different outlier patterns; (3) an adaptive fusion mechanism that dynamically weights detection results based on view quality and complementarity metrics; and (4) a feature-level anomaly localization method that provides interpretable results by identifying the specific parameters responsible for detected outliers, which is crucial for practical maintenance.

Through extensive experiments on real-world converter transformer data, our approach demonstrates superior performance compared to state-of-the-art methods.

Section 5 concludes the paper with key findings and future research directions. While this work focuses on a specific industrial application, the proposed MVCOD framework contributes to the broader field of multivariate time-series anomaly detection. The multi-view approach and adaptive fusion mechanism can be generalized to other domains where unlabeled time-series data requires outlier identification without ground-truth labels.

2. Preliminary Data Exploration and Challenges

This section explores the characteristics of converter transformer monitoring data to identify key challenges that motivate our methodological design.

2.1. Data Characteristics and Statistical Analysis

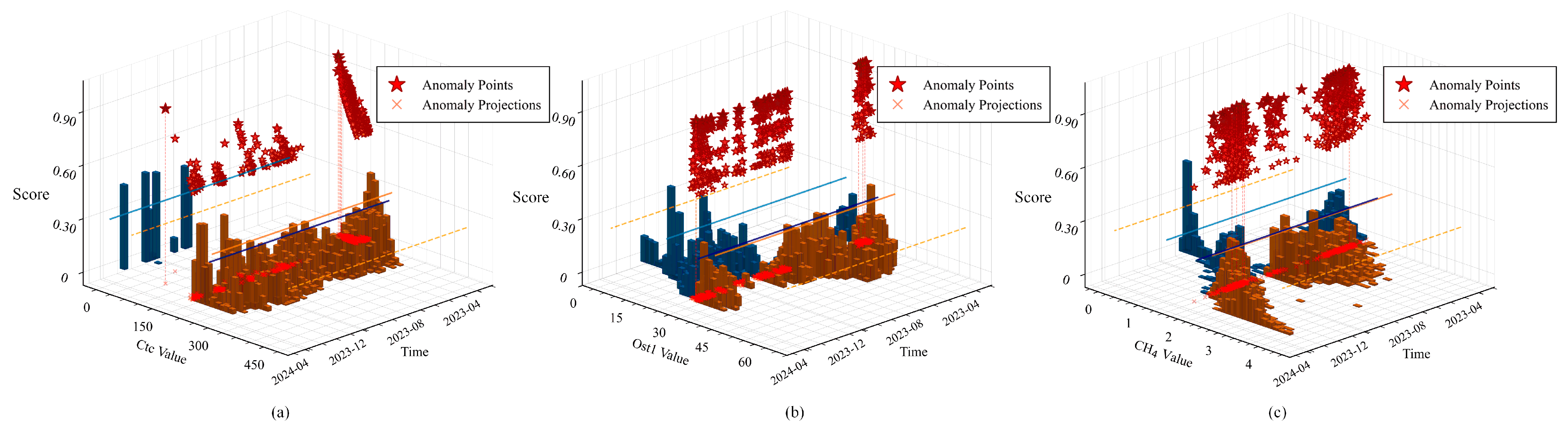

This study analyzes operating data from an 800 kV converter transformer sampled at 1 h intervals, comprising 19 state parameters. These include the electrical state parameters core total current (Ctc) and core fundamental current (Cfc); the temperature state parameters top oil temperature 1 (Tot1), top oil temperature 2 (Tot2), bottom oil temperature 1 (Bot1), bottom oil temperature 2 (Bot2), oil surface temperature 1 (Ost1), oil surface temperature 2 (Ost2), oil surface temperature 3 (Ost3), winding temperature (Wt), and switch oil temperature (Sot); and the gas state parameters hydrogen (H₂), acetylene (C₂H₂), total hydrocarbons (THC), methane (CH₄), ethylene (C₂H₄), ethane (C₂H₆), carbon monoxide (CO), carbon dioxide (CO₂), and total combustible gas (TCG).

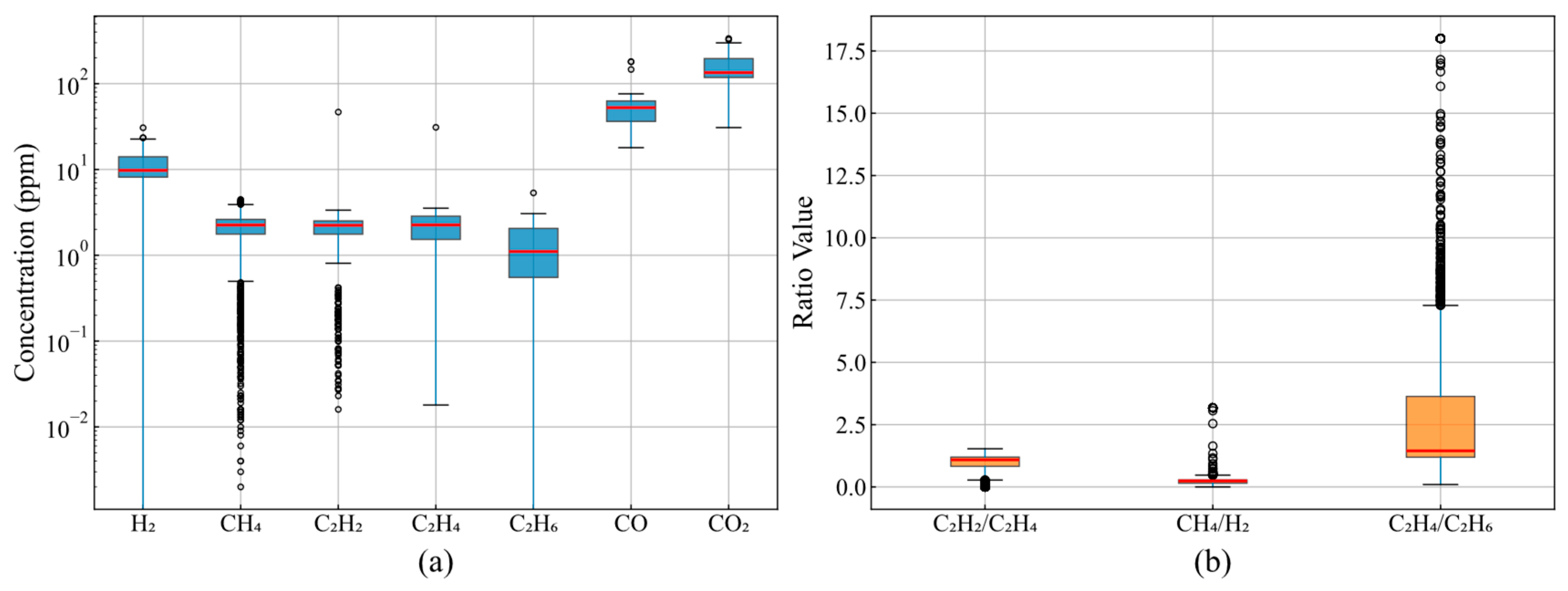

The statistical distribution characteristics of dissolved gas components directly influence anomaly detection algorithm design. The concentration distributions shown in Figure 1a indicate that gas component concentrations span multiple orders of magnitude: CO₂ has a median concentration of 100 ppm and an interquartile range of 20–300 ppm, while the median C₂H₂ concentration is only 1 ppm. More importantly, the H₂ concentration distribution is severely right-skewed, with a median of approximately 10 ppm but outliers exceeding 30 ppm and maximum values reaching five times the upper box boundary. Outliers in CH₄ and C₂H₄ account for 4.7% and 3.8% of the total samples, respectively. Such asymmetric distributions render detection methods based on normality assumptions ineffective.

Diagnostic gas ratios provide the physical basis for fault type identification. As shown in Figure 1b, 90% of C₂H₂/C₂H₄ ratio samples are concentrated within the 0.1–1.5 interval, conforming to the normal operating range defined by the IEC 60599 standard. However, the C₂H₄/C₂H₆ ratio exhibits an abnormally wide distribution, extending from 0.5 to 18, with 3.7% of samples exceeding 10, indicating potential high-temperature overheating faults. This variability in ratio distributions requires anomaly detection systems capable of distinguishing normal fluctuations from genuine fault indicators.

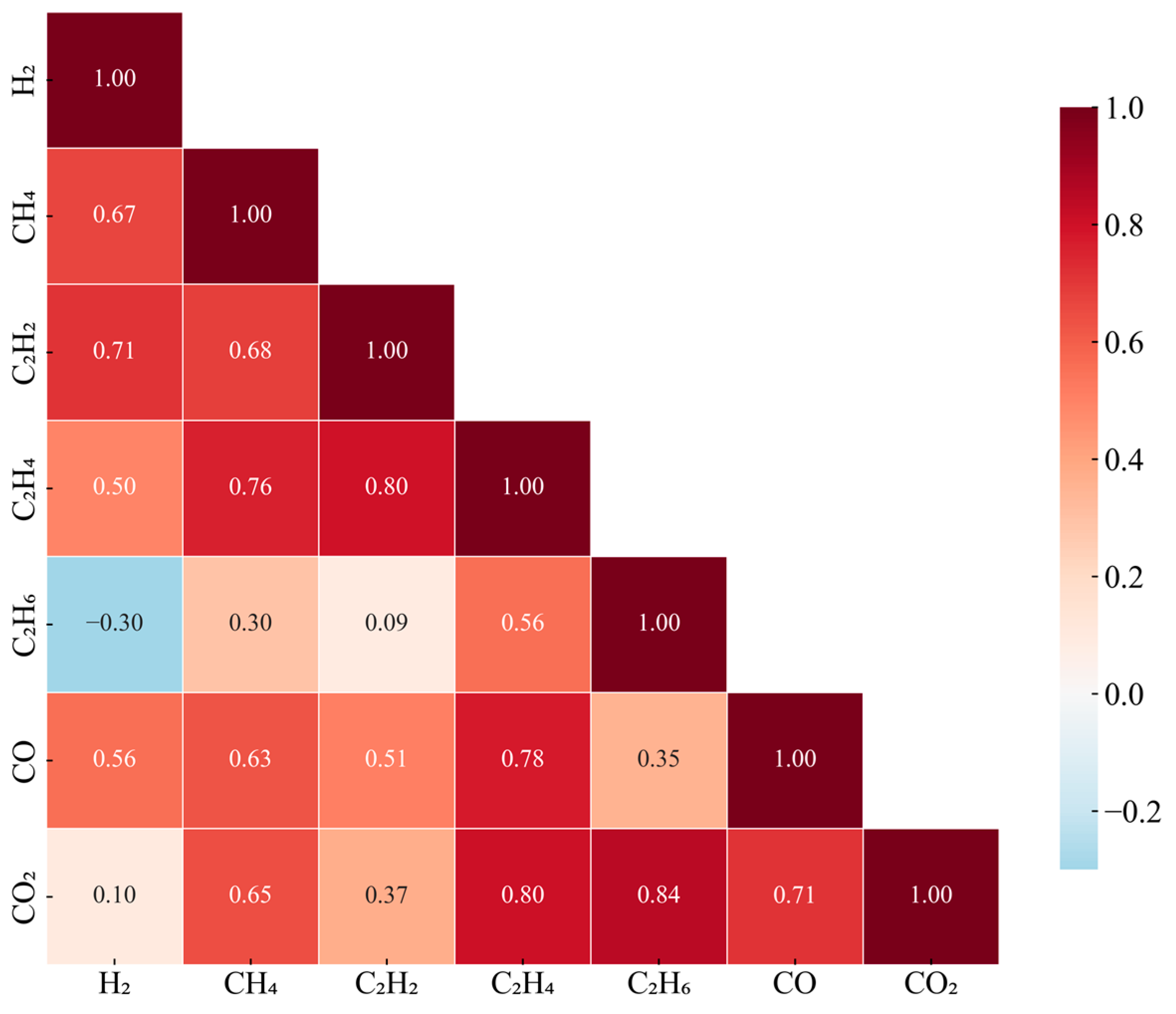

Inter-parameter correlation structures reflect physical coupling mechanisms within the transformer. The correlation matrix in Figure 2 presents a clear hierarchical structure: the hydrocarbon gases C₂H₄ and C₂H₆ show a correlation coefficient as high as 0.84, and C₂H₄ and CH₄ a coefficient of 0.80, indicating common oil cracking processes; the correlation of 0.71 between CO₂ and CO reflects synchronized cellulose insulation aging; and the negative correlation of −0.30 between H₂ and C₂H₆ suggests mutual inhibition between partial discharge and thermal decomposition processes. Because of this complex correlation structure, univariate detection methods cannot capture system-level anomalies.

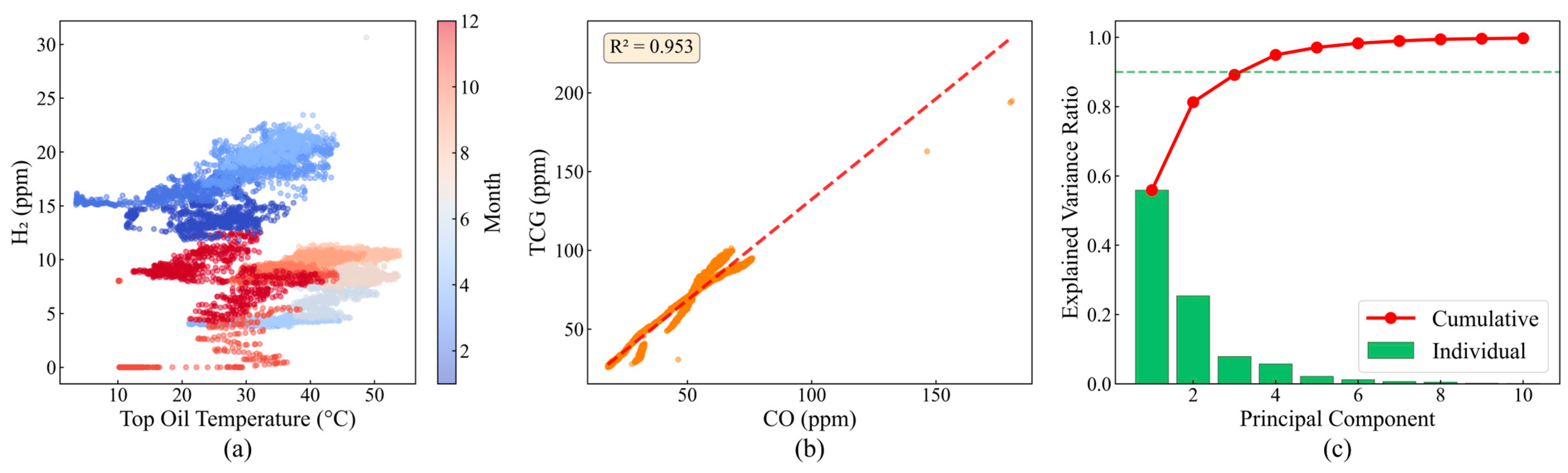

To further investigate nonlinear parameter relationships, Figure 3a analyzes the seasonal dependency between H₂ concentration and temperature. The mean summer H₂ concentration of 12.3 ppm is well above the winter mean of 8.7 ppm, and H₂ concentration increases by an average of 1.8 ppm per 10 °C temperature rise. However, the high residual standard deviation of 3.2 ppm indicates that temperature alone has limited explanatory power, so the combined influence of load, humidity, and other factors must also be considered. The CO versus TCG relationship analysis (Figure 3b) yields a linear regression slope of 0.92 with a coefficient of determination R² = 0.953, confirming that CO’s proportion in TCG remains stable within the 85–92% range and providing an empirical basis for simplified monitoring indicators.
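The slope and R² quoted for the CO–TCG relationship come from an ordinary least-squares fit. A minimal NumPy sketch of that computation follows, assuming CO is regressed on TCG; the function name `linear_fit_r2` and the sample readings are illustrative, not data from this study.

```python
import numpy as np

def linear_fit_r2(x, y):
    """Ordinary least-squares fit y = slope * x + intercept; returns (slope, intercept, R^2)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    slope, intercept = np.polyfit(x, y, deg=1)
    residuals = y - (slope * x + intercept)
    ss_res = np.sum(residuals ** 2)          # residual sum of squares
    ss_tot = np.sum((y - y.mean()) ** 2)     # total sum of squares
    return slope, intercept, 1.0 - ss_res / ss_tot

# Illustrative readings in ppm (hypothetical, not measurements from this study).
tcg = np.array([100.0, 150.0, 200.0, 250.0, 300.0])
co = np.array([90.0, 137.0, 185.0, 230.0, 276.0])
slope, intercept, r2 = linear_fit_r2(tcg, co)
co_share = co / tcg                          # per-sample CO proportion of TCG
```

A stable `co_share` across samples is what makes a single-ratio indicator plausible; the regression slope summarizes the same relationship over the whole record.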

Principal component analysis results (Figure 3c) quantify data dimensionality characteristics. PCA was performed on the standardized 19-dimensional parameter space using the correlation matrix, to ensure equal weighting across parameters with different units and scales. The eigenvalue decomposition revealed a clear dimensional hierarchy: the first principal component (PC1), with eigenvalue λ₁ = 9.04, captures temperature-driven variations, including oil and winding temperatures, reflecting the thermal state of the transformer. The second component (PC2), with λ₂ = 5.15, primarily represents gas generation dynamics, with high loadings on H₂, CH₄, and C₂H₄, indicating oil decomposition processes. Components PC3–PC6 capture mixed effects of electrical parameters and environmental factors.
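Because PCA here uses the correlation matrix of 19 standardized parameters, the eigenvalues sum to the trace of that matrix, 19, so each component’s share of variance is λ/19: PC1 explains about 9.04/19 ≈ 47.6% and PC2 about 5.15/19 ≈ 27.1%, roughly 74.7% together. The sketch below reproduces this bookkeeping with NumPy; the helper names are hypothetical.

```python
import numpy as np

def pca_on_correlation(X):
    """PCA via eigen-decomposition of the correlation matrix (equivalent to PCA on z-scored data).

    Returns eigenvalues in descending order, the matching eigenvectors (columns),
    and each component's explained-variance ratio.
    """
    R = np.corrcoef(np.asarray(X, dtype=float), rowvar=False)  # p x p, trace(R) = p
    eigvals, eigvecs = np.linalg.eigh(R)                       # eigh returns ascending order
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    return eigvals, eigvecs, eigvals / eigvals.sum()

def n_components_for(explained, threshold=0.9):
    """Smallest number of leading components whose cumulative explained ratio reaches threshold."""
    k = int(np.searchsorted(np.cumsum(explained), threshold)) + 1
    return min(k, len(explained))

# Worked check with the eigenvalues reported in the text (p = 19):
print(round(9.04 / 19 * 100, 1), round(5.15 / 19 * 100, 1))  # 47.6 27.1
```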

The significance of this dimensional reduction extends beyond simple data compression. First, the 90.2% variance retention with just six components confirms substantial redundancy in the original 19 parameters, supporting our multi-view approach that exploits different data perspectives. Second, the clear separation between the thermal PC1 and gas-related PC2 supports our decision to construct separate temperature and gas monitoring views in the MVCOD framework. Third, the PCA results guide feature selection for the manifold view construction in Section 3.3.4, where we set the UMAP embedding dimension to 20 to preserve subtle patterns that aggressive PCA truncation could lose.

Importantly, while PCA provides valuable insights into linear correlations, transformer fault mechanisms often involve nonlinear interactions. Therefore, we employ PCA primarily for exploratory analysis and dimensionality assessment rather than direct feature extraction, complementing it with nonlinear methods like UMAP in the actual MVCOD implementation.

The above analysis demonstrates that non-Gaussian distributions, nonlinear correlations, and high-dimensional redundancy of converter transformer operating data constitute the main technical challenges for anomaly detection.

2.2. Temporal Dynamics and Anomaly Patterns

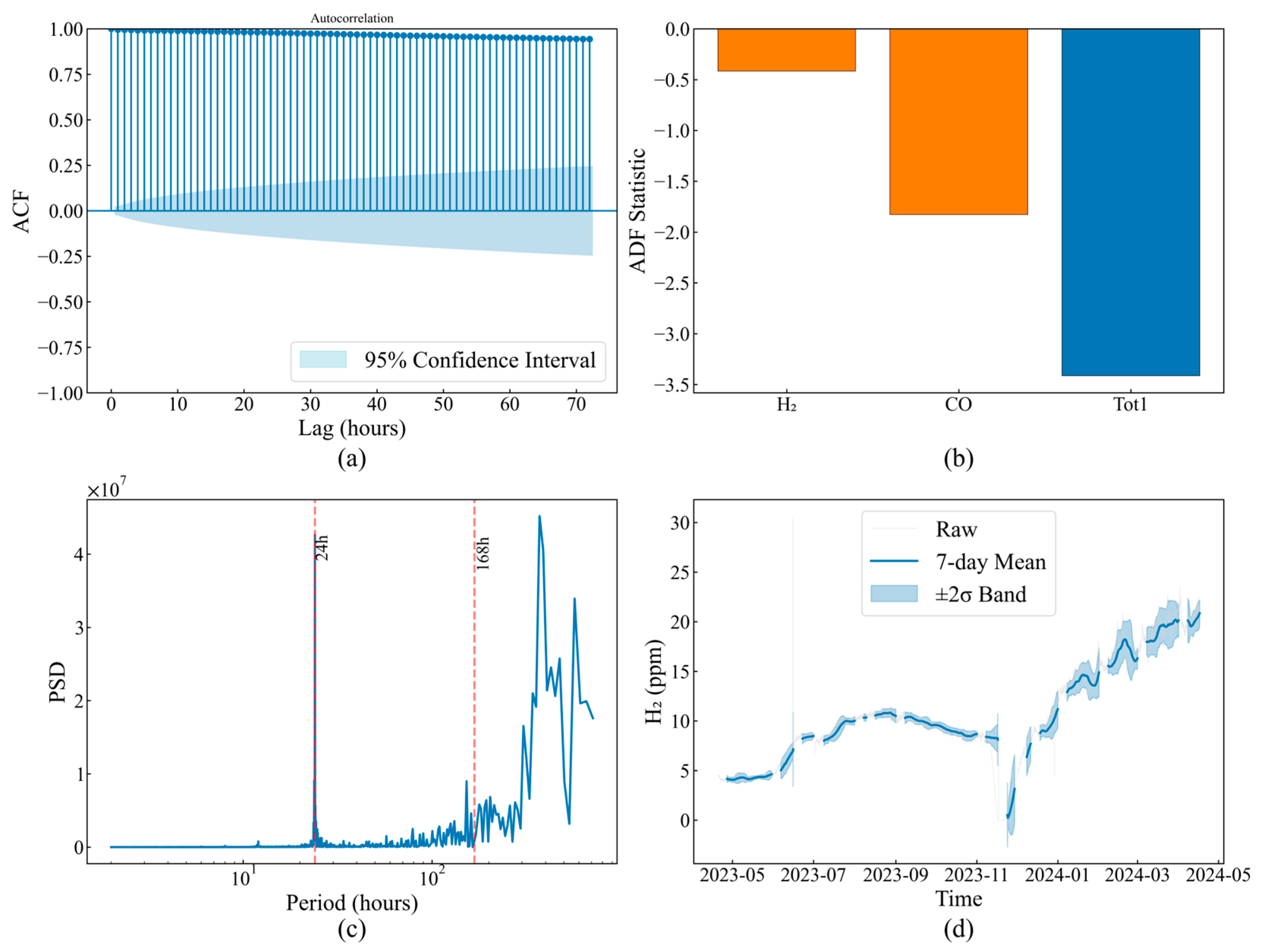

The temporal evolution characteristics of operating parameters determine time window selection and feature extraction strategies for anomaly detection. The H₂ concentration autocorrelation analysis (Figure 4a) shows a correlation coefficient as high as 0.95 at a 1 h lag, still 0.65 after 72 h, and a Hurst exponent of 0.78, indicating a long-memory process. This strong temporal dependency implies that point-wise anomaly decisions are prone to false alarms; historical information must be integrated into the judgment.
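The lag-1 and lag-72 values above are points on the sample autocorrelation function. A short NumPy sketch of the standard (biased) ACF estimator is shown below; it does not estimate the Hurst exponent, and the function name is illustrative.

```python
import numpy as np

def autocorrelation(x, max_lag):
    """Sample autocorrelation r_k for k = 0..max_lag (biased estimator used in ACF plots)."""
    x = np.asarray(x, dtype=float)
    xc = x - x.mean()
    denom = np.dot(xc, xc)                   # lag-0 autocovariance times n
    n = len(x)
    return np.array([np.dot(xc[: n - k], xc[k:]) / denom for k in range(max_lag + 1)])
```

For an hourly series, `autocorrelation(h2, 72)[1]` and `[72]` would correspond to the 1 h and 72 h values discussed above (`h2` is a hypothetical array name).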

Stationarity test results (Figure 4b) classify parameters into two categories. The temperature parameter Tot1, with an augmented Dickey–Fuller (ADF) statistic of −3.2, rejects the unit root hypothesis and exhibits mean-reverting stationary behavior, whereas the gas parameters H₂, CO, and TCG, with ADF statistics of −1.7, −1.8, and −1.9, respectively, fail to reject it, consistent with non-stationary behavior. This differentiation calls for distinct preprocessing and modeling strategies for different parameter types.
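The ADF statistics can be interpreted with a simplified Dickey–Fuller regression. The sketch below is an illustration under stated assumptions: it omits the lagged-difference terms of the augmented test (statsmodels’ `adfuller` includes them), and its t-statistic must be compared with Dickey–Fuller critical values (about −2.86 at the 5% level with a constant), under which −3.2 rejects a unit root while −1.7 to −1.9 do not. The function name is hypothetical.

```python
import numpy as np

def dickey_fuller_stat(y):
    """Non-augmented Dickey-Fuller t-statistic (regression with constant).

    Fits dy_t = alpha + gamma * y_{t-1} + e_t by OLS and returns gamma_hat / se(gamma_hat).
    Compare with Dickey-Fuller critical values, not Student-t quantiles.
    """
    y = np.asarray(y, dtype=float)
    dy = np.diff(y)
    Z = np.column_stack([np.ones(len(dy)), y[:-1]])   # [constant, lagged level]
    beta, *_ = np.linalg.lstsq(Z, dy, rcond=None)
    resid = dy - Z @ beta
    sigma2 = resid @ resid / (len(dy) - Z.shape[1])   # residual variance
    cov = sigma2 * np.linalg.inv(Z.T @ Z)             # OLS coefficient covariance
    return beta[1] / np.sqrt(cov[1, 1])
```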

Frequency-domain analysis (Figure 4c) identifies 24 h and 168 h periodic components with power spectrum peaks of 2.4 × 10⁷ and 1.68 × 10⁷ and signal-to-noise ratios of 18.3 dB and 15.7 dB, respectively, reflecting regular load scheduling. While these periodic components belong to normal operating patterns, their amplitude variations may mask genuine anomalies and must be accounted for in detection algorithms.
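Periodic components such as the 24 h and 168 h cycles appear as peaks in the periodogram of an hourly series. A minimal NumPy sketch that ranks periodogram peaks is given below; the function name is hypothetical, and the SNR figures in the text depend on a noise-floor definition that this sketch does not attempt to reproduce.

```python
import numpy as np

def dominant_periods(x, dt_hours=1.0, top=2):
    """Return the `top` periods (hours) with the largest periodogram power, and their powers.

    Uses the one-sided FFT periodogram |X_k|^2 and skips the zero-frequency (mean) bin.
    """
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(len(x), d=dt_hours)       # cycles per hour
    order = np.argsort(power[1:])[::-1][:top] + 1     # +1 maps back past the DC bin
    return 1.0 / freqs[order], power[order]
```

Choosing a record length that is a whole number of weeks keeps both the daily and weekly cycles on exact frequency bins, which avoids spectral leakage between them.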

Long-term trend analysis (Figure 4d) captures gradual system state evolution. The H₂ concentration remained stable at 8–10 ppm before November 2023 and then increased rapidly from January 2024 to a peak of 22 ppm in March, representing 120% growth. Concurrently, the 2σ confidence band of the 7-day moving average expanded from ±1.2 ppm to ±3.8 ppm, indicating not only a mean shift but also enhanced volatility. This evolution pattern typically reflects cumulative insulation degradation effects.

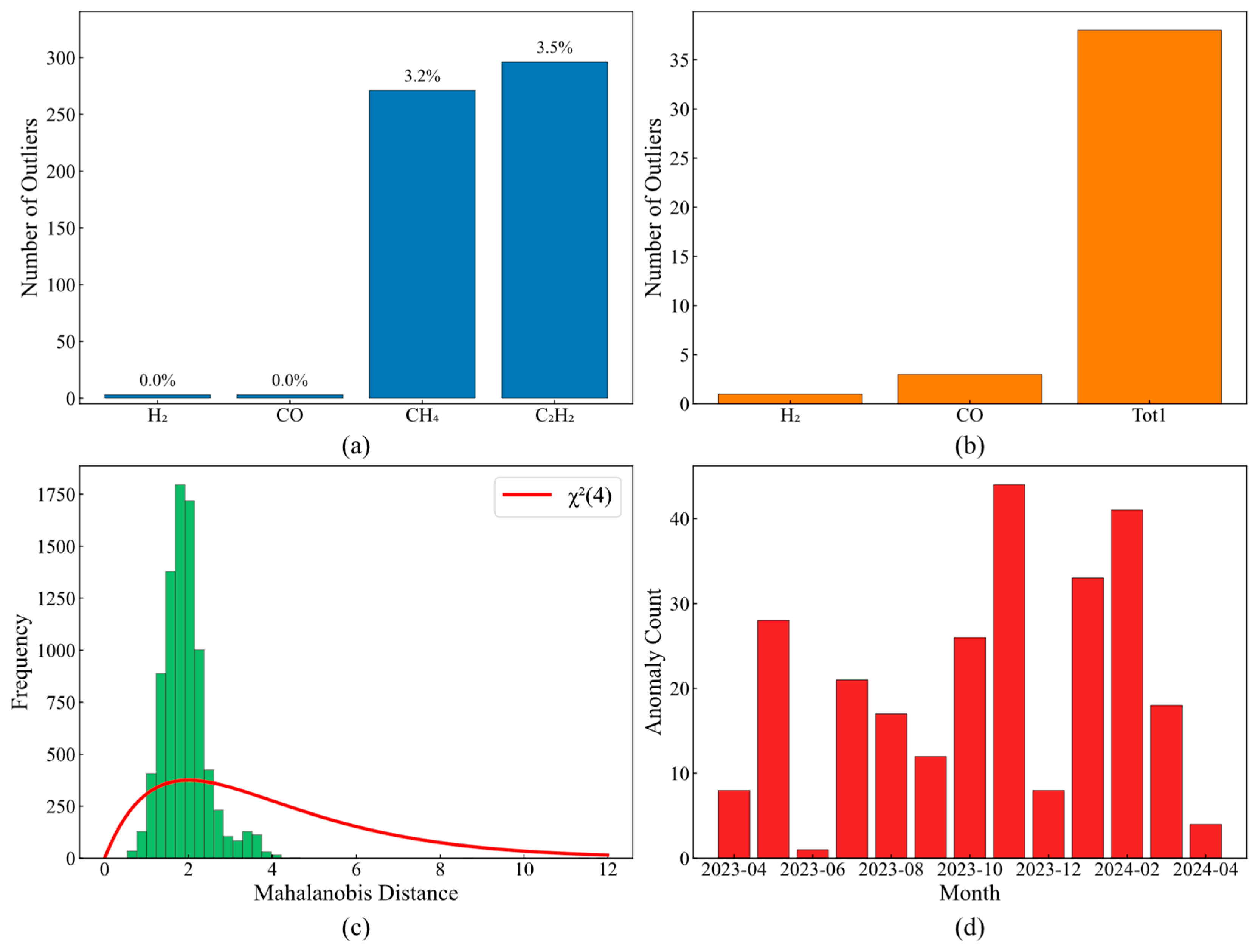

Comparative analysis of different detection methods reveals the criticality of method selection. Interquartile range-based methods (Figure 5a) completely fail for H₂ and CO, with a 0% detection rate, while achieving 3.2% and 3.5% detection rates for CH₄ and C₂H₂, respectively. The 3σ criterion (Figure 5b) exhibits even larger differences in sensitivity across parameters: 38 anomalies are detected in Tot1 versus only 1 in H₂, demonstrating the inapplicability of its distributional assumptions.
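The contrast between the IQR and 3σ rules can be reproduced on a toy sample. In the sketch below (illustrative values, not transformer data), a single extreme reading inflates the mean and standard deviation so much that the 3σ rule fails to flag it, a masking effect, while the quartile-based boxplot fences are barely affected. Function names are hypothetical.

```python
import numpy as np

def iqr_outliers(x, c=1.5):
    """Boxplot (Tukey) rule: flag values outside [Q1 - c*IQR, Q3 + c*IQR]."""
    x = np.asarray(x, dtype=float)
    q1, q3 = np.percentile(x, [25, 75])
    iqr = q3 - q1
    return (x < q1 - c * iqr) | (x > q3 + c * iqr)

def three_sigma_outliers(x):
    """3-sigma rule: flag |x - mean| > 3 * std (population std)."""
    x = np.asarray(x, dtype=float)
    return np.abs(x - x.mean()) > 3 * x.std()

x = np.array([1, 2, 3, 4, 5, 6, 7, 8, 9, 100], dtype=float)
print(np.flatnonzero(iqr_outliers(x)))          # [9]
print(np.flatnonzero(three_sigma_outliers(x)))  # []
```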

The limitations of multivariate methods are quantified through Mahalanobis distance analysis (Figure 5c). At the 95% quantile of the theoretical χ²(4) distribution, the empirical cumulative probability is only 88%. This 12% misclassification rate originates from the non-Gaussian character of the data: the actual distribution kurtosis of 4.8 far exceeds the Gaussian value of 3.0. Temporal clustering is evident in the monthly anomaly distribution (Figure 5d), with peaks of 44 anomalies in December 2023 and 41 in February 2024; these peaks coincide with equipment maintenance records, supporting the physical significance of the detection results.
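The 88% coverage figure compares squared Mahalanobis distances with the 95% quantile of the χ²(4) distribution (≈ 9.488). The NumPy sketch below computes that coverage and the (non-excess) kurtosis used in the text; it assumes exactly four monitored parameters and hard-codes the quantile, which `scipy.stats.chi2.ppf(0.95, 4)` would return if SciPy is available. Under Gaussian data the coverage is close to 95% and the kurtosis close to 3. Function names are hypothetical.

```python
import numpy as np

CHI2_4_Q95 = 9.487729  # 95% quantile of the chi-square distribution with 4 degrees of freedom

def mahalanobis_sq(X):
    """Squared Mahalanobis distance of each row from the sample mean (sample covariance)."""
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)
    inv_cov = np.linalg.inv(np.cov(X, rowvar=False))
    return np.einsum("ij,jk,ik->i", Xc, inv_cov, Xc)

def pearson_kurtosis(x):
    """Non-excess kurtosis E[(x - mu)^4] / sigma^4; equals 3 for a Gaussian."""
    x = np.asarray(x, dtype=float)
    xc = x - x.mean()
    return np.mean(xc ** 4) / np.mean(xc ** 2) ** 2

def coverage_at_chi2_q95(X):
    """Share of samples whose D^2 falls at or below the chi2(4) 95% quantile."""
    X = np.asarray(X, dtype=float)
    if X.shape[1] != 4:
        raise ValueError("the hard-coded quantile assumes 4 variables")
    return float(np.mean(mahalanobis_sq(X) <= CHI2_4_Q95))
```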

Temporal analysis results emphasize the necessity of dynamic adaptive detection frameworks, as static thresholds and parametric methods cannot cope with non-stationary evolution and multi-scale dynamics.

Operating data status analysis systematically reveals core challenges facing converter transformer anomaly detection. Skewed gas concentration distributions (skewness coefficients 2.3–10.5) negate the applicability of the normality assumption; multi-scale temporal correlations spanning 1–168 h require detection algorithms with multi-resolution analysis capabilities; parameter correlations ranging from −0.30 to 0.84 reflect complex physical coupling, with univariate method detection rate differences reaching two orders of magnitude, confirming the necessity of multivariate analysis; 57.9% of gas parameters exhibit non-stationary characteristics, with baseline drift amplitude reaching 120%, while traditional static methods’ 12% misclassification rate highlights the importance of adaptive mechanisms. These preliminary observations reveal the need for more sophisticated detection approaches, which we address in the following section.

3. The Proposed Method

3.1. Overview of the Multi-View Clustering-Based Outlier Detection Framework

To address the intrinsic challenges of outlier detection in converter transformer operational data—including multi-scale temporal dependencies, non-Gaussian distributions, and complex inter-parameter correlations—this study proposes a Multi-View Clustering-based Outlier Detection (MVCOD) framework. The framework leverages complementary data representations and ensemble learning to achieve robust outlier identification at both the temporal and feature levels.

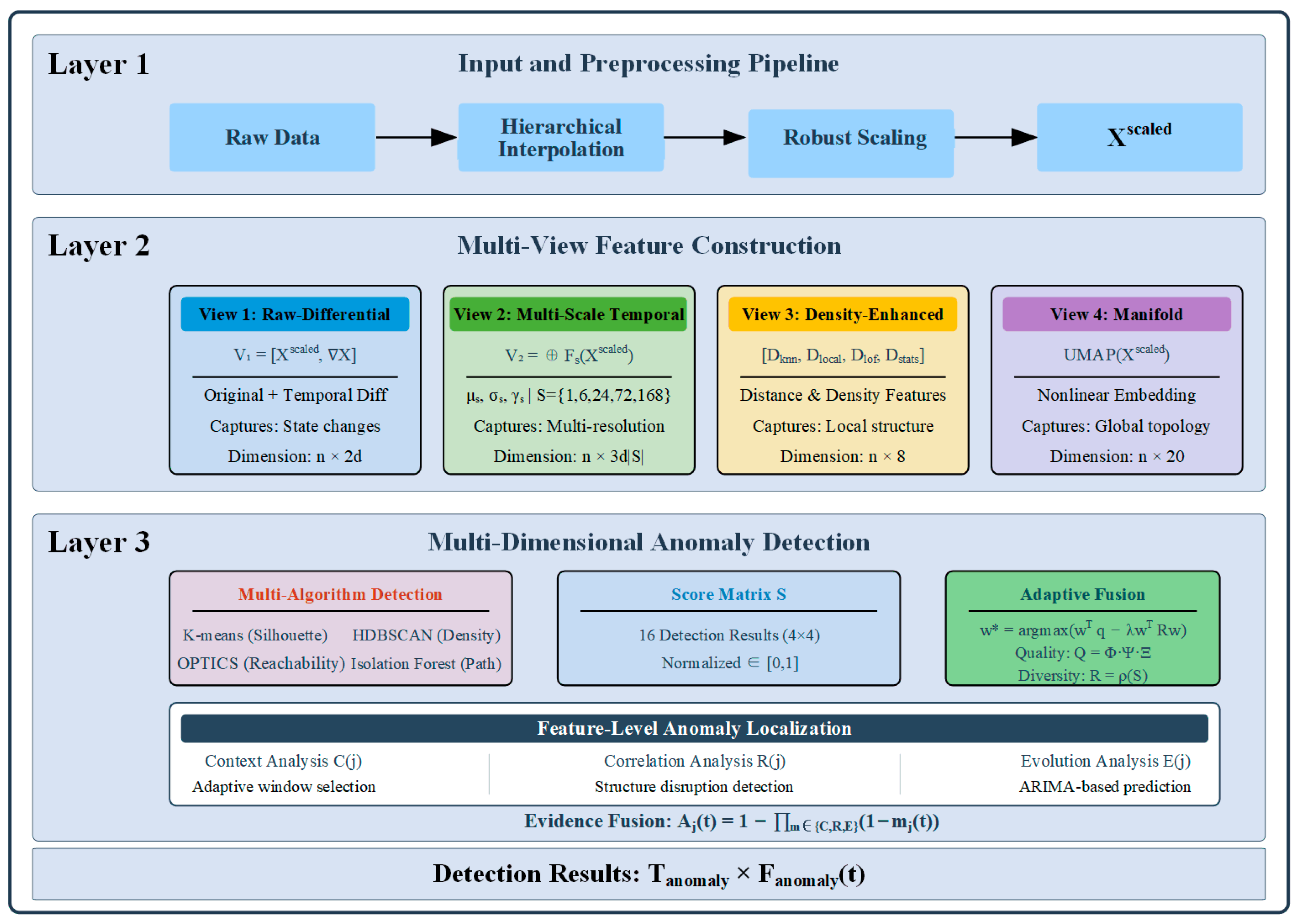

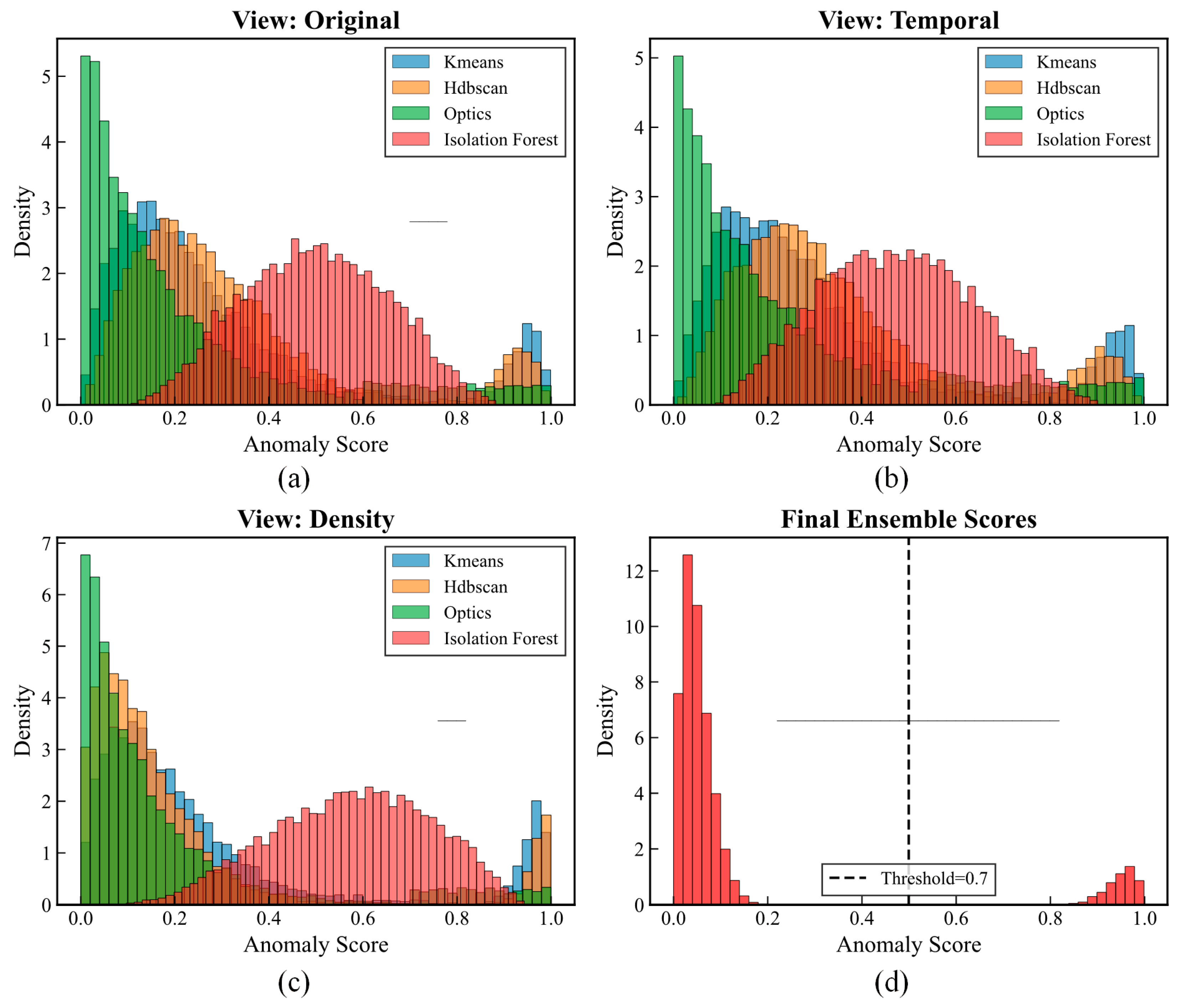

As shown in

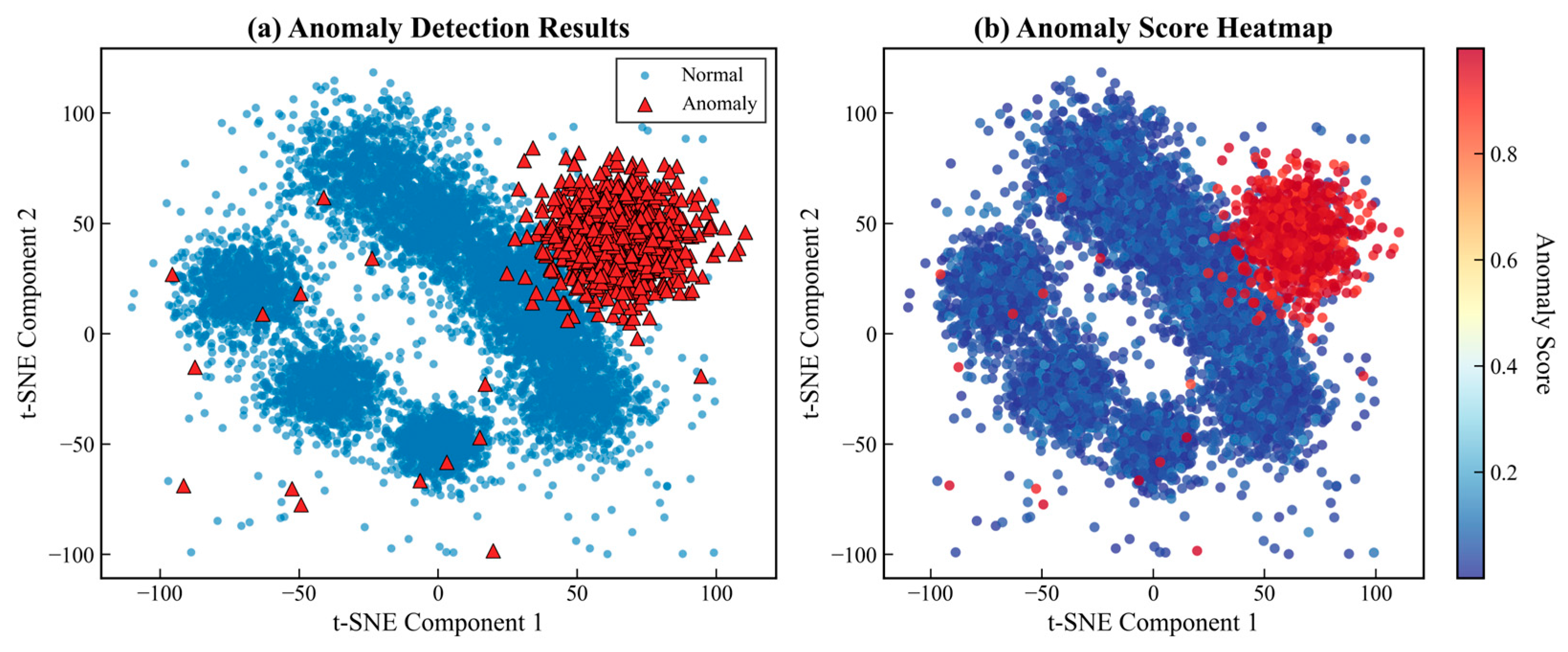

Figure 6, the MVCOD framework comprises four core modules: data preprocessing, multi-view feature construction, multi-clustering algorithm detection, and adaptive fusion. The data preprocessing module employs a hierarchical interpolation strategy to handle missing values and eliminates dimensional effects through robust standardization. The multi-view feature construction module generates four complementary representations: the raw-differential view captures states and their rates of change, the multi-scale temporal view extracts statistical features at different time granularities, the density view quantifies local distribution characteristics, and the manifold view reveals the intrinsic geometric structure of the data. The multi-clustering algorithm detection module applies four algorithms—K-means, Hierarchical Density-Based Spatial Clustering of Applications with Noise (HDBSCAN), Ordering Points To Identify the Clustering Structure (OPTICS), and Isolation Forest—to each view, producing 16 detection results. The adaptive fusion module dynamically determines weights based on detection quality and complementarity, achieving feature-level anomaly localization through context analysis, correlation analysis, and temporal evolution analysis.

The framework overcomes the limitations of a single data representation through multi-view representation and enhances detection capability for different anomaly types through multi-algorithm fusion. K-means identifies global anomalies based on distances to cluster centroids, HDBSCAN captures density anomalies, OPTICS discovers outliers in hierarchical density structures, and Isolation Forest detects anomalies that are isolated by few random partitions. This design allows the framework to detect a wide range of anomaly types.

3.2. Data Preprocessing

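Converter transformer parameters span three physical domains (electrical, temperature, and gas), with significant differences in missing patterns and numerical distributions among parameters, necessitating targeted preprocessing strategies. Let the original multivariate time series be $\mathbf{X} \in \mathbb{R}^{T \times p}$, with $T$ time steps and $p$ parameters, and let the missing value mask matrix be $\mathbf{M} \in \{0,1\}^{T \times p}$, where $m_{t,j} = 1$ indicates that $x_{t,j}$ is missing.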

3.2.1. Hierarchical Missing Value Interpolation

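Different interpolation strategies are adopted based on the missing rate $r_j$ of each feature $j$, i.e., the fraction of its samples that are missing:

$$
\hat{\mathbf{x}}_{j} =
\begin{cases}
f_{\text{lin}}(\mathbf{x}_{j}), & r_j \le \tau_1,\\
f_{\text{poly}}(\mathbf{x}_{j}), & \tau_1 < r_j \le \tau_2,\\
f_{\text{MA}}(\mathbf{x}_{j}; w), & r_j > \tau_2,
\end{cases}
$$

where $\tau_1 < \tau_2$ are missing-rate thresholds; $f_{\text{lin}}$ is linear interpolation, suitable for low missing rates; $f_{\text{poly}}$ is second-order polynomial interpolation, capable of capturing local nonlinear trends; and $f_{\text{MA}}$ is moving-average interpolation, with the window size $w$ adaptively adjusted based on data length.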
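The tiered interpolation can be sketched as follows. The thresholds `TAU_LOW`/`TAU_HIGH`, the six-point local quadratic fit, and the window rule `max(3, T // 100)` are hypothetical placeholders chosen for illustration, since the exact settings are not recoverable here; only the ordering linear, then quadratic, then moving average follows the description above.

```python
import numpy as np

# Hypothetical missing-rate thresholds (placeholders, not the paper's values).
TAU_LOW, TAU_HIGH = 0.05, 0.20

def _linear(t_obs, x_obs, t_mis):
    """Linear interpolation between the nearest observed samples."""
    return np.interp(t_mis, t_obs, x_obs)

def _poly2(t_obs, x_obs, t_mis, n_points=6):
    """Local quadratic fit to the n_points observed samples nearest each gap."""
    out = np.empty(len(t_mis))
    for i, t in enumerate(t_mis):
        near = np.argsort(np.abs(t_obs - t))[:n_points]
        # Fit in offsets relative to t, so the constant term is the value at t.
        coeffs = np.polyfit(t_obs[near] - t, x_obs[near], deg=2)
        out[i] = coeffs[-1]
    return out

def _moving_average(t_obs, x_obs, t_mis, window):
    """Mean of observed samples within +/- window // 2 steps; linear fallback if none."""
    out = _linear(t_obs, x_obs, t_mis)
    half = window // 2
    for i, t in enumerate(t_mis):
        in_window = np.abs(t_obs - t) <= half
        if in_window.any():
            out[i] = x_obs[in_window].mean()
    return out

def hierarchical_impute(X):
    """Fill NaNs column by column, choosing the method from each column's missing rate."""
    X = np.array(X, dtype=float)                 # work on a copy
    T = X.shape[0]
    window = max(3, T // 100)                    # hypothetical length-dependent window
    t_all = np.arange(T)
    for j in range(X.shape[1]):
        miss = np.isnan(X[:, j])
        if not miss.any() or miss.all():         # nothing to fill, or nothing to fill from
            continue
        t_obs, x_obs, t_mis = t_all[~miss], X[~miss, j], t_all[miss]
        rate = miss.mean()
        if rate <= TAU_LOW:
            X[miss, j] = _linear(t_obs, x_obs, t_mis)
        elif rate <= TAU_HIGH and len(t_obs) >= 3:
            X[miss, j] = _poly2(t_obs, x_obs, t_mis)
        else:
            X[miss, j] = _moving_average(t_obs, x_obs, t_mis, window)
    return X
```

Columns that are entirely missing are left as NaN in this sketch; how such sensors are handled would need a separate rule.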

3.2.2. Robust Standardization

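Considering the right-skewed distribution characteristics of gas concentration data, robust standardization based on the median and interquartile range is adopted:

$$
\tilde{x}_{t,j} = \frac{\hat{x}_{t,j} - \operatorname{med}(\hat{\mathbf{x}}_{j})}{Q_3(\hat{\mathbf{x}}_{j}) - Q_1(\hat{\mathbf{x}}_{j})},
$$

where $\hat{x}_{t,j}$ is the interpolated value of feature $j$ at time $t$, and $\operatorname{med}(\cdot)$, $Q_1(\cdot)$, and $Q_3(\cdot)$ denote the median and the first and third quartiles of that feature. This transformation is robust to outliers while preserving the relative magnitude relationships required for subsequent clustering analysis.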
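Median/IQR scaling is the same transformation as scikit-learn’s `RobustScaler` with its default 25–75 quantile range. A dependency-free NumPy sketch is shown below; the function name is illustrative, and guarding zero-IQR columns is our own assumption for constant signals.

```python
import numpy as np

def robust_standardize(X):
    """Column-wise (x - median) / IQR scaling; zero-IQR columns are only median-centred."""
    X = np.asarray(X, dtype=float)
    med = np.median(X, axis=0)
    q1, q3 = np.percentile(X, [25, 75], axis=0)
    iqr = q3 - q1
    iqr = np.where(iqr > 0, iqr, 1.0)   # avoid division by zero for constant columns
    return (X - med) / iqr
```

Unlike z-scoring, a single extreme gas reading barely moves the median and quartiles, so the scaled values of ordinary samples stay put.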

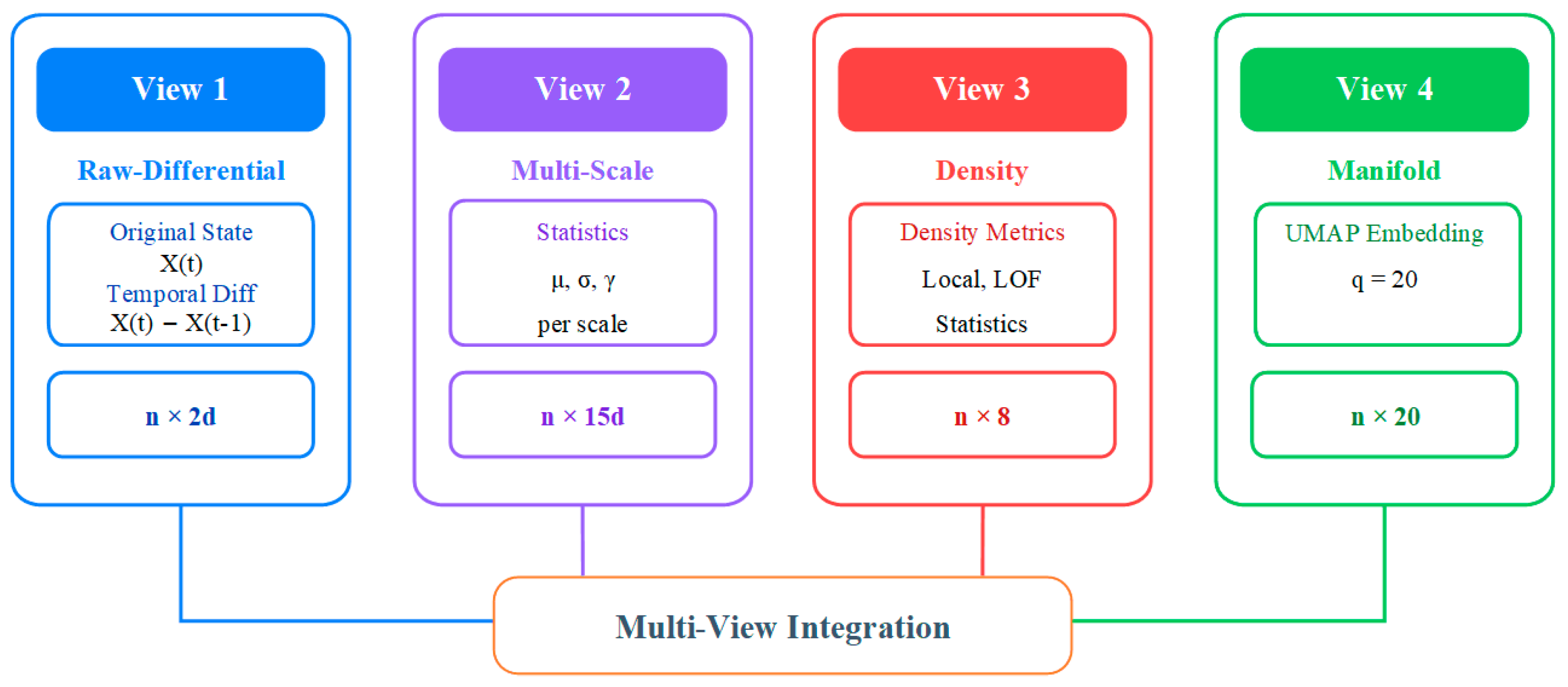

3.3. Multi-View Feature Construction

The preprocessed data matrix $\tilde{\mathbf{X}}$ is free of missing values and dimensional effects, laying the foundation for subsequent analysis. However, anomaly patterns in converter transformers are diverse: gas concentrations may show instantaneous spikes, temperature parameters may drift slowly, and electrical parameters may exhibit anomalous periodic fluctuations. This heterogeneity necessitates examining the data from different perspectives to achieve comprehensive anomaly detection. This section constructs four complementary data views. The overall architecture of multi-view feature construction is illustrated in Figure 7, where the preprocessed data are transformed into four distinct representations, each optimized for a specific type of anomaly pattern.

3.3.1. View 1: Raw-Differential Representation

Transient anomalies often manifest as sudden changes in parameter values rather than as anomalous absolute values. To capture such dynamic features, the first view combines the standardized data with their temporal differences:

$$
\mathbf{V}^{(1)} = \tilde{\mathbf{X}} \oplus \Delta\tilde{\mathbf{X}},
$$

where $\oplus$ denotes feature (column-wise) concatenation and the difference operator is defined on the rows $\tilde{\mathbf{x}}_t$ of $\tilde{\mathbf{X}}$ as follows:

$$
\Delta\tilde{\mathbf{x}}_{t} = \tilde{\mathbf{x}}_{t} - \tilde{\mathbf{x}}_{t-1}, \quad t = 2, \ldots, T.
$$

For the first time point, set $\Delta\tilde{\mathbf{x}}_{1} = \mathbf{0}$. The advantage of this representation is twofold: the raw features preserve state information, enabling identification of static anomalies such as value exceedances, while the differential features highlight change information and are sensitive to dynamic anomalies such as gas concentration surges and sudden temperature changes. Combining both allows this view to detect steady-state and transient anomalies simultaneously.
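The raw-differential view amounts to stacking the standardized matrix with its first differences. A minimal NumPy sketch, assuming rows are time steps and columns are parameters (the function name is illustrative):

```python
import numpy as np

def raw_differential_view(X_std):
    """Concatenate standardized data with first differences; the first difference row is zero."""
    X_std = np.asarray(X_std, dtype=float)
    dX = np.zeros_like(X_std)
    dX[1:] = X_std[1:] - X_std[:-1]     # delta x_t = x_t - x_{t-1} for t >= 2
    return np.hstack([X_std, dX])       # shape (T, 2p)
```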

3.3.2. View 2: Multi-Scale Temporal Representation

The analysis in Section 2 indicates that converter transformer data exhibit multi-scale temporal correlations ranging from hourly to weekly. Feature extraction with a single time window cannot adequately characterize this hierarchical temporal structure. Therefore, the second view constructs temporal features through multi-resolution analysis:

$$
\mathbf{V}^{(2)} = \bigoplus_{w \in \mathcal{W}} \phi_{w}(\tilde{\mathbf{X}}),
$$

where $\mathcal{W} = \{1, 6, 24, 72, 168\}$ hours corresponds to the hourly, quarter-daily, daily, 3-daily, and weekly scales, respectively, and $\bigoplus$ denotes feature concatenation. These scales span the 1–168 h range of temporal dependencies identified in Section 2, including the dominant 24 h and 168 h periodicities found in the spectral analysis (Figure 4c). For each scale $w$, the feature extraction function $\phi_w$ computes three types of statistics for each parameter over a window of length $w$:

$$
\phi_{w}(\tilde{\mathbf{X}}) = \boldsymbol{\mu}^{(w)} \oplus \boldsymbol{\sigma}^{(w)} \oplus \boldsymbol{\delta}^{(w)},
$$

where $\boldsymbol{\mu}^{(w)}$ is the local mean, $\boldsymbol{\sigma}^{(w)}$ is the local standard deviation, and $\boldsymbol{\delta}^{(w)}$ is the window change rate.

This multi-scale representation enables effective capture of both short-term fluctuation anomalies and long-term evolution anomalies.
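A vectorized sketch of the multi-scale statistics is given below. It assumes trailing (causal) windows that are truncated at the start of the series, and it defines the change rate as (x_t − x_{t−w})/w; both are assumptions, since the exact window alignment and change-rate formula are not specified above. With hourly sampling, window lengths in samples equal hours. Function names are hypothetical.

```python
import numpy as np

def window_stats(X, w):
    """Trailing-window mean, std and change rate for every time step and column.

    Windows are truncated at the series start. The change rate (x_t - x_{t-w}) / w
    (with a shorter lag near the start) is an assumed definition for illustration.
    """
    X = np.asarray(X, dtype=float)
    T = X.shape[0]
    idx = np.arange(T)
    start = np.maximum(0, idx - w + 1)
    count = (idx - start + 1)[:, None]                 # samples in each window
    zeros = np.zeros((1, X.shape[1]))
    cs = np.vstack([zeros, np.cumsum(X, axis=0)])      # prefix sums
    cs2 = np.vstack([zeros, np.cumsum(X ** 2, axis=0)])
    mean = (cs[idx + 1] - cs[start]) / count
    var = (cs2[idx + 1] - cs2[start]) / count - mean ** 2
    std = np.sqrt(np.clip(var, 0.0, None))             # clip tiny negative round-off
    lag = np.maximum(0, idx - w)
    rate = (X - X[lag]) / np.maximum(idx - lag, 1)[:, None]
    return np.hstack([mean, std, rate])

def multiscale_view(X, windows=(1, 6, 24, 72, 168)):
    """Concatenate window statistics over all scales: shape (T, 3 * p * len(windows))."""
    return np.hstack([window_stats(X, w) for w in windows])
```

Prefix sums keep the cost linear in T for every window length; for very long records with large values, a two-pass or Welford-style variance would be numerically safer.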

3.3.3. View 3: Density-Enhanced Representation

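The non-Gaussian distributions and outlier patterns revealed in Section 2 indicate the importance of distance-based anomaly detection. The third view enhances local structure information through several density-related features:

$$
\mathbf{V}^{(3)} = \mathbf{F}_{\text{kNN}} \oplus \boldsymbol{\rho} \oplus \mathbf{LOF} \oplus \mathbf{F}_{\text{dist}},
$$

where $\oplus$ denotes feature concatenation. The k-nearest neighbor distance features $\mathbf{F}_{\text{kNN}}$ include

$$
\mathbf{F}_{\text{kNN}}(\tilde{\mathbf{x}}_t) = \left[ \frac{1}{k}\sum_{i=1}^{k} d_i(\tilde{\mathbf{x}}_t),\; \max_{1 \le i \le k} d_i(\tilde{\mathbf{x}}_t) \right],
$$

where $d_i(\tilde{\mathbf{x}}_t)$ denotes the Euclidean distance from point $\tilde{\mathbf{x}}_t$ to its $i$-th nearest neighbor. The neighborhood size $k$ was set empirically to approximately 0.34% of the dataset size, within the recommended range of 0.1–2% for local density estimation in anomaly detection tasks. The average distance reflects local density, while the maximum distance captures boundary effects.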
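The k-NN distance features can be computed by brute force for moderate sample sizes. The sketch below leaves k as an argument (the text specifies it only as roughly 0.34% of the dataset size); for a year of hourly data, a tree-based search such as scikit-learn’s `NearestNeighbors` would be preferable to the O(T²) distance matrix used here. Function names are illustrative.

```python
import numpy as np

def knn_distances(X, k):
    """Sorted distances from each row to its k nearest other rows (brute force, O(T^2) memory)."""
    X = np.asarray(X, dtype=float)
    sq = np.sum(X ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * X @ X.T   # squared Euclidean distances
    np.fill_diagonal(d2, np.inf)                     # exclude each point itself
    d = np.sqrt(np.clip(d2, 0.0, None))
    idx = np.argsort(d, axis=1)[:, :k]
    return np.take_along_axis(d, idx, axis=1), idx

def knn_distance_features(X, k):
    """[mean distance to the k nearest neighbours, distance to the k-th neighbour]."""
    dk, _ = knn_distances(X, k)
    return np.column_stack([dk.mean(axis=1), dk[:, -1]])
```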

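Local density $\rho(\tilde{\mathbf{x}}_t)$ is estimated with a Gaussian kernel whose bandwidth parameter $h$ is determined adaptively, and the Local Outlier Factor quantifies relative outlierness:

$$
\mathrm{LOF}_k(\tilde{\mathbf{x}}_t) = \frac{1}{k} \sum_{\mathbf{o} \in N_k(\tilde{\mathbf{x}}_t)} \frac{\mathrm{lrd}_k(\mathbf{o})}{\mathrm{lrd}_k(\tilde{\mathbf{x}}_t)},
$$

where $N_k(\cdot)$ is the set of $k$ nearest neighbors and the local reachability density is

$$
\mathrm{lrd}_k(\mathbf{x}) = \left( \frac{1}{k} \sum_{\mathbf{o} \in N_k(\mathbf{x})} \max\left\{ d_k(\mathbf{o}),\, \lVert \mathbf{x} - \mathbf{o} \rVert \right\} \right)^{-1},
$$

with $d_k(\mathbf{o})$ the distance from $\mathbf{o}$ to its $k$-th nearest neighbor. Distance statistical features $\mathbf{F}_{\text{dist}}$ provide distributional information:

$$
\mathbf{F}_{\text{dist}}(\tilde{\mathbf{x}}_t) = \left[ \operatorname{mean}_i\, d_i(\tilde{\mathbf{x}}_t),\; \operatorname{std}_i\, d_i(\tilde{\mathbf{x}}_t),\; \operatorname{median}_i\, d_i(\tilde{\mathbf{x}}_t),\; d_1(\tilde{\mathbf{x}}_t) \right], \quad i = 1, \ldots, k,
$$

representing the mean, standard deviation, and median of the k-nearest neighbor distances and the nearest neighbor distance, respectively.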
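The LOF and distance-statistic features follow directly from the k-NN distances. The NumPy sketch below implements the standard LOF definition; the Gaussian-kernel density is an assumed form (kernel averaged over the k neighbors, bandwidth set to the median k-distance), since the exact kernel normalization and bandwidth rule are not given. All names are hypothetical.

```python
import numpy as np

def _knn(X, k):
    """Sorted k-NN distances and neighbour indices (brute force, self excluded)."""
    X = np.asarray(X, dtype=float)
    sq = np.sum(X ** 2, axis=1)
    d = np.sqrt(np.clip(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0, None))
    np.fill_diagonal(d, np.inf)
    idx = np.argsort(d, axis=1)[:, :k]
    return np.take_along_axis(d, idx, axis=1), idx

def local_outlier_factor(X, k):
    """LOF with reach-dist_k(p, o) = max(k-dist(o), d(p, o)); values near 1 are inliers."""
    dk, idx = _knn(X, k)
    k_dist = dk[:, -1]                              # distance to the k-th neighbour
    reach = np.maximum(k_dist[idx], dk)             # reachability distances to neighbours
    lrd = 1.0 / np.maximum(reach.mean(axis=1), 1e-12)
    return lrd[idx].mean(axis=1) / lrd

def distance_stats(X, k):
    """Mean, std and median of the k-NN distances, plus the nearest-neighbour distance."""
    dk, _ = _knn(X, k)
    return np.column_stack([dk.mean(1), dk.std(1), np.median(dk, axis=1), dk[:, 0]])

def gaussian_knn_density(X, k):
    """Assumed form: mean Gaussian kernel over the k neighbours, bandwidth = median k-dist."""
    dk, _ = _knn(X, k)
    h = max(np.median(dk[:, -1]), 1e-12)
    return np.exp(-dk ** 2 / (2.0 * h ** 2)).mean(axis=1)
```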

3.3.4. View 4: Manifold Representation

Complex nonlinear relationships exist among converter transformer parameters, forming specific manifold structures in high-dimensional space. Anomalous samples typically deviate from this manifold. The fourth view employs Uniform Manifold Approximation and Projection (UMAP) to extract manifold features:

where

is the embedding dimension, and the hyper-parameter set

includes the following:

: Defines the neighborhood size for local structure;

: Controls the compactness of embedded points;

metric = Euclidean: Distance metric.

These parameter settings balance local structure preservation with global topology and were chosen on the basis of preliminary experiments showing stable manifold representations across them. UMAP achieves dimensionality reduction by minimizing the following cross-entropy objective:

$$C = \sum_{i \ne j} \left[ w_{ij} \log \frac{w_{ij}}{v_{ij}} + \left(1 - w_{ij}\right) \log \frac{1 - w_{ij}}{1 - v_{ij}} \right],$$

where $w_{ij}$ and $v_{ij}$ are edge weights in the high-dimensional and low-dimensional spaces, respectively. This nonlinear mapping preserves both local and global topological structures of the data, making points that deviate from the manifold easier to identify in the low-dimensional space.
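To make the objective concrete, the sketch below evaluates the fuzzy cross-entropy for small dense weight matrices. It assumes the low-dimensional weights take the usual UMAP form $v_{ij} = (1 + a\lVert\mathbf{y}_i - \mathbf{y}_j\rVert^{2b})^{-1}$, and the helper names are hypothetical. It does not optimize the embedding; in practice the umap-learn package (`umap.UMAP` with `n_neighbors`, `min_dist`, and `metric="euclidean"`) performs that optimization by stochastic gradient descent and fits $a$ and $b$ from `min_dist`.

```python
import numpy as np

def low_dim_similarity(Y, a, b):
    """UMAP-style low-dimensional edge weights v_ij = 1 / (1 + a * ||y_i - y_j||^(2b))."""
    d2 = ((Y[:, None, :] - Y[None, :, :]) ** 2).sum(axis=-1)  # squared distances
    v = 1.0 / (1.0 + a * d2 ** b)                             # d2 ** b == ||.||^(2b)
    np.fill_diagonal(v, 0.0)                                  # no self-edges
    return v

def fuzzy_cross_entropy(W, V, eps=1e-12):
    """Cross-entropy between high-dim weights W and low-dim weights V over pairs i != j."""
    W = np.clip(W, eps, 1.0 - eps)   # avoid log(0)
    V = np.clip(V, eps, 1.0 - eps)
    ce = W * np.log(W / V) + (1.0 - W) * np.log((1.0 - W) / (1.0 - V))
    mask = ~np.eye(W.shape[0], dtype=bool)
    return float(ce[mask].sum())
```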

By constructing these four views, the MVCOD framework achieves comprehensive coverage of anomaly patterns: the first view captures dynamic anomalies, the second identifies multi-scale pattern deviations, the third discovers density anomalies, and the fourth detects manifold structure disruptions. This multi-view strategy enhances the completeness and robustness of anomaly detection.

3.4. Multi-Clustering Algorithm Outlier Detection

The four constructed data views characterize converter transformer operational states from different perspectives, yet anomaly patterns within each view still exhibit diversity. For instance, in the density view, anomalies may manifest as isolated points, small clusters, or low-density regions; in the manifold view, anomalies may be located at manifold boundaries or completely deviate from the manifold structure. A single clustering algorithm cannot comprehensively capture these heterogeneous anomalies. Therefore, this section applies four clustering algorithms with different theoretical foundations to each view, achieving comprehensive detection through algorithmic complementarity.

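Let the data of the $v$-th view be $\mathbf{X}^{(v)} \in \mathbb{R}^{n \times d_v}$, where $d_v$ is the feature dimension of that view. For each clustering algorithm $m \in \{1, 2, 3, 4\}$, define the detection function $f_m: \mathbb{R}^{n \times d_v} \to \mathbb{R}^{n}$, whose output is an anomaly score vector. This forms a detection matrix $\mathbf{S} \in \mathbb{R}^{n \times 16}$, where $s_i^{(m,v)}$ represents the anomaly score of the $i$-th sample obtained through algorithm $m$ under view $v$.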

3.4.1. K-Means Anomaly Detection Based on Silhouette Coefficient

The K-means algorithm partitions data by minimizing the within-cluster sum of squares. It favors spherical cluster structures and is suitable for detecting global anomalies that deviate from the major data clusters. For view $v$, first determine the optimal number of clusters $K_v^*$:

$$K_v^* = \arg\max_{2 \le K \le K_{\max}} \bar{S}(K),$$

where the average Silhouette Coefficient is

$$\bar{S}(K) = \frac{1}{n}\sum_{i=1}^{n} S_i, \qquad S_i = \frac{b_i - a_i}{\max(a_i, b_i)},$$

where $a_i$ is the average distance from sample $\mathbf{x}_i$ to the other points in the same cluster, and $b_i$ is the average distance to the points of the nearest neighboring cluster. The upper bound $K_{\max}$ of the search range prevents excessive segmentation.

After obtaining the clustering results $\{C_1, \dots, C_{K_v^*}\}$, anomaly identification is based on the distribution of the Silhouette Coefficients. Calculate the first quartile $Q_1$ and interquartile range IQR of the Silhouette Coefficient vector, and set an adaptive threshold:

$$\tau_v = Q_1 - 1.5 \times \mathrm{IQR}.$$

The anomaly score for sample $\mathbf{x}_i$ is defined as follows:

This design enables samples located at cluster boundaries or between clusters to receive higher anomaly scores.
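A compact NumPy sketch of this procedure (silhouette-based selection of $K$, then Tukey's lower fence on the per-sample Silhouette Coefficients) is shown below. Plain Lloyd iterations with a fixed seed stand in for a production K-means, all function names are hypothetical, and the sketch returns boundary flags rather than the anomaly-score mapping, whose exact form is not reproduced here.

```python
import numpy as np

def kmeans(X, k, n_iter=100, seed=0):
    """Plain Lloyd iterations; initial centres are k distinct data points."""
    rng = np.random.default_rng(seed)
    centres = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        labels = np.argmin(((X[:, None, :] - centres[None]) ** 2).sum(-1), axis=1)
        # Empty clusters keep their previous centre.
        new = np.array([X[labels == c].mean(0) if np.any(labels == c) else centres[c]
                        for c in range(k)])
        if np.allclose(new, centres):
            break
        centres = new
    return labels

def silhouette(X, labels):
    """Per-sample Silhouette Coefficient s_i = (b_i - a_i) / max(a_i, b_i)."""
    D = np.sqrt(((X[:, None, :] - X[None]) ** 2).sum(-1))
    s = np.zeros(len(X))
    clusters = np.unique(labels)
    if len(clusters) < 2:
        return s                                  # undefined with a single cluster
    for i in range(len(X)):
        same = labels == labels[i]
        if same.sum() <= 1:
            continue                              # singleton: s_i = 0 by convention
        a = D[i, same].sum() / (same.sum() - 1)   # n-1 excludes the zero self-distance
        b = min(D[i, labels == c].mean() for c in clusters if c != labels[i])
        s[i] = (b - a) / max(a, b)
    return s

def kmeans_silhouette_flags(X, k_max=8):
    """Choose K by mean silhouette, then flag samples below Tukey's lower fence."""
    best_k = max(range(2, k_max + 1), key=lambda k: silhouette(X, kmeans(X, k)).mean())
    s = silhouette(X, kmeans(X, best_k))
    q1, q3 = np.percentile(s, [25, 75])
    tau = q1 - 1.5 * (q3 - q1)                    # Tukey inner fence
    return best_k, s, s < tau
```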

3.4.2. HDBSCAN Density Clustering Detection

HDBSCAN identifies clusters of arbitrary shapes and noise points by constructing density hierarchy trees, particularly suitable for handling data with uneven density. The core parameters min_cluster_size and min_samples of the algorithm need to be adaptively determined based on data characteristics.

The minimum cluster size is set as follows:

The Silhouette threshold $\tau_v$ of Section 3.4.1 is based on Tukey’s fence method [40], commonly used in boxplot construction for outlier detection. The factor of 1.5 × IQR corresponds to Tukey’s “inner fence”, which identifies mild outliers while remaining robust to extreme values. In the K-means clustering context, samples with Silhouette Coefficients below this threshold are likely to be poorly clustered points at cluster boundaries or in overlapping regions.

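To ensure that identified clusters have statistical significance, the minimum sample number $m_s$ is determined through stability analysis:

$$m_s^* = \arg\max_{m_s} \sum_{C \in \mathcal{C}(m_s)} \mathrm{Stab}(C),$$

where $\mathcal{C}(m_s)$ is the clustering result under parameter $m_s$, and cluster stability is defined as follows:

$$\mathrm{Stab}(C) = \sum_{e \in C} \left(\lambda_{\text{death}}(e) - \lambda_{\text{birth}}(e)\right),$$

where $\lambda_{\text{birth}}(e)$ and $\lambda_{\text{death}}(e)$ are the density levels at which edge $e$ appears and disappears in the hierarchy tree, respectively.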

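HDBSCAN outputs the cluster label $\ell_i$ and outlier score $o_i \in [0, 1]$ for each sample. The anomaly score is defined as follows:

$$s_i^{\mathrm{HDB}} = \begin{cases} 1, & \ell_i = -1, \\ o_i, & \text{otherwise}. \end{cases}$$

Noise points ($\ell_i = -1$) are directly marked as anomalies, while other points are assigned values based on their outlier scores.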
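Once an HDBSCAN implementation has produced labels, outlier scores, and condensed-tree edges, the scoring rule and the stability-based choice of `min_samples` reduce to a few lines. In the sketch below, the inputs are assumed to come from such an implementation (for example, the `hdbscan` package exposes `labels_` and `outlier_scores_`); the function names and the layout of cluster edges as `(birth, death)` pairs are hypothetical.

```python
import numpy as np

def cluster_stability(edges):
    """Stability of one cluster: sum of (lambda_death - lambda_birth) over its edges."""
    return float(sum(death - birth for birth, death in edges))

def select_min_samples(candidates, clusterings):
    """Pick the min_samples value whose clustering has the largest total stability.
    clusterings[m] is a list of clusters; each cluster is a list of (birth, death) pairs."""
    return max(candidates,
               key=lambda m: sum(cluster_stability(c) for c in clusterings[m]))

def hdbscan_anomaly_score(labels, outlier_scores):
    """Noise points (label -1) get score 1; other points keep their outlier score."""
    labels = np.asarray(labels)
    return np.where(labels == -1, 1.0, np.asarray(outlier_scores, dtype=float))
```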

3.4.3. OPTICS Hierarchical Density Analysis

OPTICS generates reachability plots, revealing the density structure of data. Unlike HDBSCAN’s automatic clustering, OPTICS provides a continuous view of density changes, suitable for detecting anomalies in density gradients.

The core parameter $\varepsilon$ is determined through k-distance graph analysis. Calculate the distance from each point to its $k$-th nearest neighbor, sort these distances, and set $\varepsilon$ at the “elbow point” of the resulting curve:
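One common way to locate the elbow is to take the point of the sorted k-distance curve farthest from the straight line joining its endpoints. The sketch below uses that heuristic, which is an assumption rather than a rule stated in this section; the resulting value could then be passed, for instance, as `max_eps` to scikit-learn's `OPTICS`. Function names are hypothetical.

```python
import numpy as np

def k_distance_curve(X, k):
    """Sorted distances from each point to its k-th nearest neighbour (self excluded)."""
    D = np.sqrt(((X[:, None, :] - X[None]) ** 2).sum(-1))
    np.fill_diagonal(D, np.inf)
    return np.sort(np.sort(D, axis=1)[:, k - 1])

def elbow_eps(curve):
    """Return (eps, index) at the point farthest from the chord joining the endpoints."""
    n = len(curve)
    x = np.linspace(0.0, 1.0, n)
    y = (curve - curve[0]) / (curve[-1] - curve[0])  # normalise both axes to [0, 1]
    # After normalisation the chord is y = x, so distance to it is proportional to |x - y|.
    idx = int(np.argmax(np.abs(x - y)))
    return float(curve[idx]), idx
```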

3.4.4. Isolation Forest Isolation Detection

Isolation Forest is based on the intuition that “anomalous samples are easier to isolate,” constructing isolation trees through random partitioning. This algorithm does not rely on distance or density concepts and is particularly effective for high-dimensional data and local anomalies.

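For view $v$, construct $T$ isolation trees, each using a subsample of size $\psi$. During construction, randomly select features and split points to recursively partition the data until samples are isolated or the depth limit $l_{\max}$ is reached.

The path length of sample $\mathbf{x}_i$ in the $t$-th tree is denoted as $h_t(\mathbf{x}_i)$, with average path length

$$E[h(\mathbf{x}_i)] = \frac{1}{T}\sum_{t=1}^{T} h_t(\mathbf{x}_i).$$

Anomaly scores are obtained by comparison with the expected path length of binary search trees:

$$s(\mathbf{x}_i, \psi) = 2^{-E[h(\mathbf{x}_i)]/c(\psi)},$$

where $c(\psi) = 2H(\psi - 1) - 2(\psi - 1)/\psi$, and $H(i)$ is the harmonic number, approximately $\ln(i) + 0.5772156649$. Scores close to 1 indicate anomalies, whereas scores well below 0.5 indicate normal samples.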
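The isolation procedure and scoring formula can be sketched from scratch in NumPy. The defaults `n_trees=100` and `psi=256` and the depth limit $\lceil \log_2 \psi \rceil$ follow the original Isolation Forest proposal and are assumptions here; leaves that stop at the depth limit add $c(n)$ to the path length, as in that proposal. Function names are hypothetical. In practice, scikit-learn's `IsolationForest` (parameters `n_estimators` and `max_samples`) is the usual choice; its `score_samples` returns the negated score.

```python
import numpy as np

EULER_GAMMA = 0.5772156649

def c(n):
    """Average path length of an unsuccessful BST search among n points:
    c(n) = 2H(n-1) - 2(n-1)/n, with H(i) ~= ln(i) + Euler's constant."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (np.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n

def build_tree(X, depth, max_depth, rng):
    """Recursively split on a random feature at a random value within its range."""
    if depth >= max_depth or len(X) <= 1:
        return ("leaf", len(X))
    q = rng.integers(X.shape[1])
    lo, hi = X[:, q].min(), X[:, q].max()
    if lo == hi:                                   # all values equal: cannot split
        return ("leaf", len(X))
    p = rng.uniform(lo, hi)
    mask = X[:, q] < p
    return ("node", q, p,
            build_tree(X[mask], depth + 1, max_depth, rng),
            build_tree(X[~mask], depth + 1, max_depth, rng))

def path_length(x, node, depth=0):
    """Edges traversed to reach a leaf, plus c(size) for the unbuilt subtree."""
    if node[0] == "leaf":
        return depth + c(node[1])
    _, q, p, left, right = node
    return path_length(x, left if x[q] < p else right, depth + 1)

def iforest_scores(X, n_trees=100, psi=256, seed=0):
    """Isolation Forest anomaly scores s(x, psi) = 2 ** (-E[h(x)] / c(psi))."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    psi = min(psi, len(X))
    max_depth = int(np.ceil(np.log2(psi)))
    trees = [build_tree(X[rng.choice(len(X), psi, replace=False)], 0, max_depth, rng)
             for _ in range(n_trees)]
    mean_h = np.array([np.mean([path_length(x, t) for t in trees]) for x in X])
    return 2.0 ** (-mean_h / c(psi))
```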

3.4.5. Detection Matrix Construction

Applying four algorithms to four views yields 16 anomaly scores per sample. To ensure comparability, normalize the output of each algorithm–view combination:

The final detection matrix provides comprehensive anomaly evidence for subsequent adaptive fusion.
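Assembling the detection matrix can be sketched as below. The normalization formula is not reproduced in this section, so the sketch assumes per-column min-max scaling to $[0, 1]$; the `scores` dictionary layout and the function name are hypothetical.

```python
import numpy as np

def build_detection_matrix(scores):
    """Stack per-(view, algorithm) score vectors into an n x 16 matrix and min-max
    normalise each column to [0, 1]. `scores` maps (view, algorithm) -> length-n array."""
    keys = sorted(scores)                          # fixed, reproducible column order
    S = np.column_stack([np.asarray(scores[k], dtype=float) for k in keys])
    lo, hi = S.min(axis=0), S.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)         # constant columns map to 0
    return (S - lo) / span, keys
```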

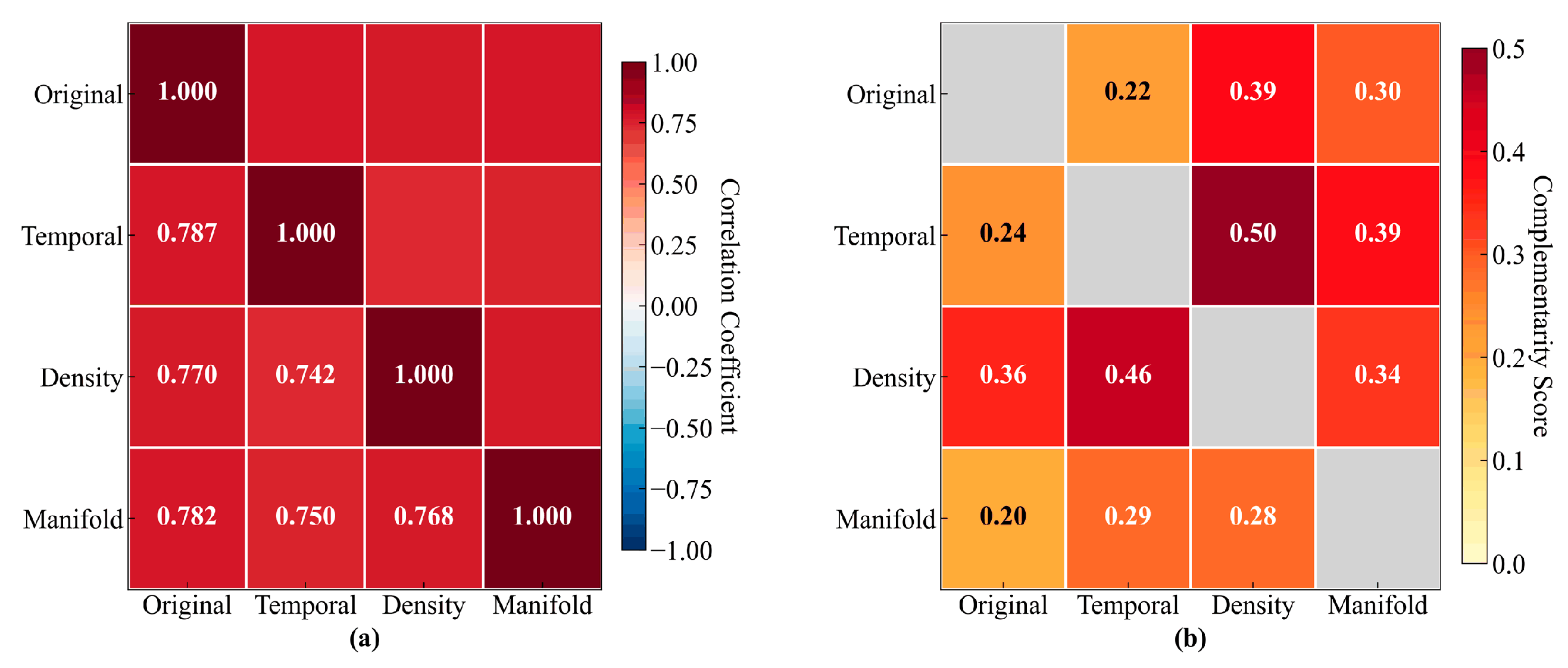

3.4.6. Adaptive Fusion Strategy

The 16 column vectors of the detection matrix represent detection results from different algorithm–view combinations. These results are integrated through weighted fusion to obtain the final anomaly scores. The fusion weights are determined by two criteria: detection quality and complementarity.

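Detection quality is evaluated through the statistical properties of the anomaly score distributions. Define the quality indicator $Q_j$ of the $j$-th column as follows:

where $\gamma_j$, $\beta_j$, and $\delta_j$ are the skewness, kurtosis, and separation degree of its score distribution, respectively. Complementarity is measured through the Spearman rank correlation matrix $\mathbf{R} \in \mathbb{R}^{16 \times 16}$ of the 16 columns. The weight optimization problem is formalized as follows:

$$\max_{\mathbf{w}} \; \mathbf{q}^\top \mathbf{w} - \lambda\, \mathbf{w}^\top \mathbf{R}\, \mathbf{w}$$

subject to the constraints $\sum_{j=1}^{16} w_j = 1$ and $w_j \ge 0$, where $\mathbf{q} = [Q_1, \dots, Q_{16}]^\top$ is the quality vector, and $\lambda > 0$ balances quality and diversity.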

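After solving through the projected gradient method, the final anomaly score is

$$a_i = \sum_{j=1}^{16} w_j^* \, s_{ij},$$

where $s_{ij}$ is the normalized score of sample $i$ in the $j$-th column of the detection matrix. Anomaly determination employs distribution-based adaptive thresholding:

$$\hat{y}_i = \begin{cases} 1, & a_i > \mu_a + \eta\, \sigma_a, \\ 0, & \text{otherwise}, \end{cases}$$

where $\mu_a$ and $\sigma_a$ are the mean and standard deviation of $\{a_i\}_{i=1}^{n}$, respectively, and $\eta$ is determined based on the target false positive rate.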
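A sketch of the weight optimization follows, assuming the quality vector $\mathbf{q}$ has already been computed and the objective takes the quadratic form $\mathbf{q}^\top\mathbf{w} - \lambda\,\mathbf{w}^\top\mathbf{R}\,\mathbf{w}$ on the probability simplex. The step size, iteration count, $\lambda$, and $\eta$ are illustrative values, ties in the Spearman ranks are broken by order rather than averaged, and function names are hypothetical. The simplex projection is the standard sort-based Euclidean projection.

```python
import numpy as np

def spearman_matrix(S):
    """Spearman correlation as Pearson correlation of column ranks (ties broken by order)."""
    ranks = np.argsort(np.argsort(S, axis=0), axis=0).astype(float)
    return np.corrcoef(ranks, rowvar=False)

def project_to_simplex(v):
    """Euclidean projection of v onto {w : w >= 0, sum(w) = 1} (sort-based method)."""
    u = np.sort(v)[::-1]
    css = np.cumsum(u)
    rho = np.nonzero(u * np.arange(1, len(v) + 1) > css - 1.0)[0][-1]
    theta = (css[rho] - 1.0) / (rho + 1.0)
    return np.maximum(v - theta, 0.0)

def fuse(S, q, lam=1.0, lr=0.05, n_iter=500, eta=2.0):
    """Projected gradient ascent on q.w - lam * w.R.w over the simplex, then
    fused scores a = S w and the decision a > mean(a) + eta * std(a)."""
    R = spearman_matrix(S)
    w = np.full(S.shape[1], 1.0 / S.shape[1])    # start from uniform weights
    for _ in range(n_iter):
        grad = q - 2.0 * lam * (R @ w)           # gradient of the concave objective
        w = project_to_simplex(w + lr * grad)
    a = S @ w
    return w, a, a > a.mean() + eta * a.std()
```

Because $\mathbf{R}$ is positive semidefinite, the objective is concave, so projected gradient ascent with a small step converges to the global optimum on the simplex.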

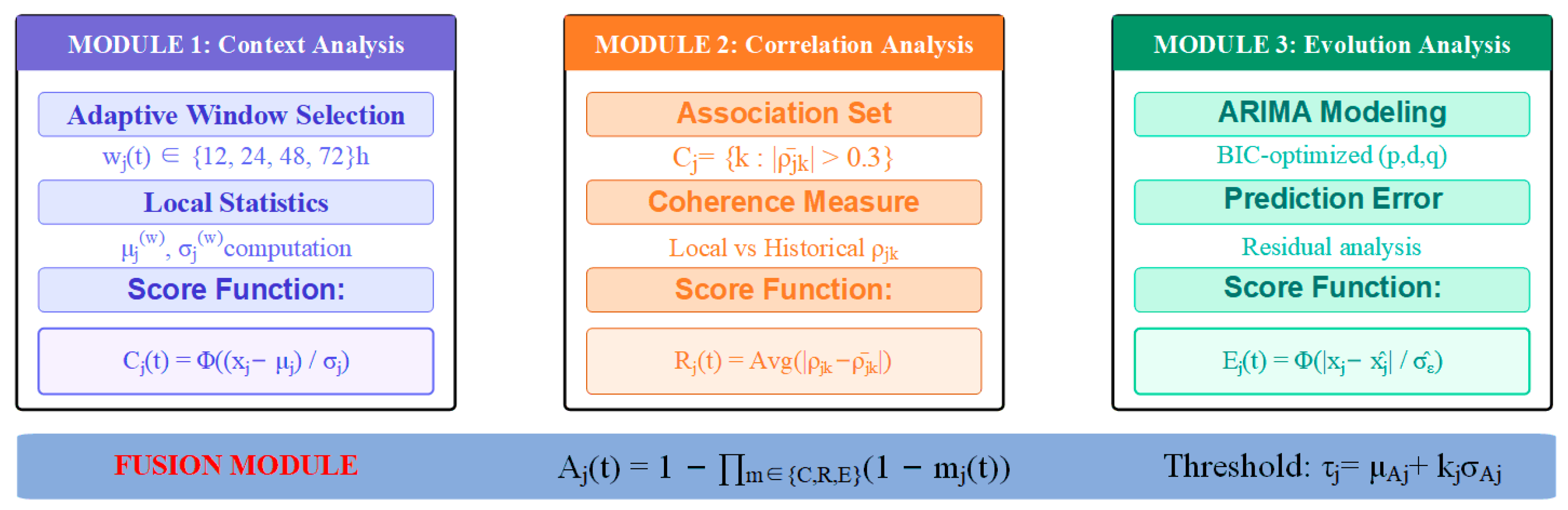

3.5. Feature-Level Anomaly Localization

Multi-clustering algorithm fusion determines the set of anomalous time points $\mathcal{T}_a$. However, a time point being identified as anomalous does not imply that all features are anomalous. In practical operations, anomalies are often triggered by a few key parameters, such as sudden increases in hydrogen concentration indicating partial discharge, or synchronous rises in temperature parameters reflecting overheating faults. Directly checking whether feature values at anomalous moments exceed static normal ranges has obvious limitations: the normal range of each feature changes dynamically with operating conditions, and because of the coupling among features, individual features are often normal while their combination is anomalous. Advancing from anomalous time points to specific anomalous features therefore requires more refined analytical methods.

Figure 8 illustrates the feature-level anomaly localization process. Three complementary mechanisms evaluate the anomaly degree of each feature from the perspectives of temporal context, feature correlation, and dynamic evolution. For an anomalous moment $t \in \mathcal{T}_a$, the truly anomalous feature subset $\mathcal{F}_t \subseteq \{1, \dots, D\}$ must be identified from the $D$ features.

- (1) Context-Based Anomaly Localization

The contextual anomaly degree of feature $j$ at time $t$ is defined as its deviation from local historical patterns. Considering the non-stationarity of converter transformer data, an adaptive window strategy is adopted:

where $\mathcal{W}$ is the candidate window set (in hours), and $\mathrm{CV}_j^{(w)}(t)$ is the coefficient of variation within window $w$. This design uses long windows during stable periods for improved estimation accuracy and short windows during fluctuating periods for rapid adaptation.

Based on the selected window, the contextual anomaly score $A_j^{\mathrm{ctx}}(t)$ is calculated as follows:

where $\mu_j(t)$ and $\sigma_j(t)$ are recursive estimates of the mean and standard deviation within the window:

The mapping function converts standardized deviations to anomaly scores in the range $[0, 1]$, where $\Phi(\cdot)$ is the standard normal cumulative distribution function.
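The sketch below illustrates one possible reading of the adaptive-window contextual score for a single feature. The candidate windows, the window-selection rule (longest window whose coefficient of variation stays below `cv_max`), and the mapping $2\Phi(|z|) - 1$ are assumptions, plain window means and standard deviations stand in for the recursive estimates, and the function name is hypothetical.

```python
import math
import numpy as np

def contextual_score(x, t, windows=(6, 12, 24, 48), cv_max=0.1):
    """Hypothetical adaptive-window contextual score for feature series x at index t.
    Returns (score in [0, 1], chosen window length)."""
    x = np.asarray(x, dtype=float)
    # Assumed rule: use the longest window whose coefficient of variation is at
    # most cv_max (stable period); otherwise fall back to the shortest window.
    chosen = min(windows)
    for w in sorted(windows, reverse=True):
        seg = x[max(0, t - w):t]
        m = seg.mean() if len(seg) >= 2 else 0.0
        if m != 0.0 and seg.std() / abs(m) <= cv_max:
            chosen = w
            break
    seg = x[max(0, t - chosen):t]                  # history only; x[t] is excluded
    z = abs(x[t] - seg.mean()) / (seg.std() + 1e-12)
    phi = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))   # standard normal CDF at |z|
    return 2.0 * phi - 1.0, chosen
```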

- (2) Correlation Structure-Based Anomaly Localization

Stable physical coupling relationships exist among converter transformer parameters. The correlation anomaly degree of feature $j$ is measured by how strongly its coherence with associated features is disrupted. First, identify the stable association set $\mathcal{N}_j$ of feature $j$:

where $\bar{\rho}_{jk}$ is the historical average correlation coefficient, $\theta_\rho$ is the correlation strength threshold, and a second condition on the temporal variation of the correlation ensures its stability.

The correlation anomaly score $A_j^{\mathrm{cor}}(t)$ is defined as follows:

where $\rho_{jk}^{(w)}(t)$ is the local correlation coefficient within window $w$, with $w = 24$. The denominator serves as normalization, enabling effective capture of minor changes in strongly correlated features.
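The following sketch shows how the stable association set and a normalized correlation-deviation score might be computed. The thresholds, the use of non-overlapping 24-sample windows for the historical correlations, the stabilizing constant `eps`, the denominator $1 - |\bar{\rho}_{jk}| + \epsilon$, and the clipping to $[0, 1]$ are all assumptions chosen to match the behavior described in the text, not the paper's exact formula; function names are hypothetical.

```python
import numpy as np

def stable_partners(X, j, theta_rho=0.7, theta_sd=0.1, win=24):
    """Features k whose correlation with feature j is strong on average (|mean| >
    theta_rho) and stable (std < theta_sd) across non-overlapping windows of X (T x D)."""
    T = X.shape[0]
    partners, mean_rho = [], {}
    for k in range(X.shape[1]):
        if k == j:
            continue
        r = np.array([np.corrcoef(X[s:s + win, j], X[s:s + win, k])[0, 1]
                      for s in range(0, T - win + 1, win)])
        if abs(r.mean()) > theta_rho and r.std() < theta_sd:
            partners.append(k)
            mean_rho[k] = r.mean()
    return partners, mean_rho

def correlation_score(X, j, t, partners, mean_rho, win=24, eps=0.05):
    """Mean deviation of the local correlation (window ending at t) from its historical
    mean, divided by 1 - |mean_rho| + eps and clipped to [0, 1] (assumed form)."""
    if not partners:
        return 0.0
    seg = X[t - win + 1:t + 1]
    devs = [abs(np.corrcoef(seg[:, j], seg[:, k])[0, 1] - mean_rho[k])
            / (1.0 - abs(mean_rho[k]) + eps) for k in partners]
    return min(1.0, float(np.mean(devs)))
```

The small denominator for strongly coupled pairs is what amplifies minor correlation breaks, which matches the normalization role described above.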

- (3) Evolution Model-Based Anomaly Localization

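Temporal evolution anomalies are identified through residual analysis of prediction models. An Autoregressive Integrated Moving Average (ARIMA) model is fitted for each feature:

$$\phi_j(B)\,(1 - B)^{d}\, x_j(t) = \theta_j(B)\,\varepsilon_j(t),$$

where $B$ is the backshift operator, $\phi_j(B) = 1 - \sum_{i=1}^{p} \phi_{j,i} B^i$ and $\theta_j(B) = 1 + \sum_{i=1}^{q} \theta_{j,i} B^i$ are the autoregressive and moving-average polynomials, and $\varepsilon_j(t)$ is white noise. Model orders $(p, d, q)$ are determined by minimizing the Bayesian Information Criterion (BIC) to balance goodness of fit and model complexity. The evolution anomaly score $A_j^{\mathrm{evo}}(t)$ is based on standardized prediction residuals:

where $\hat{x}_j(t \mid t-1)$ is the one-step-ahead prediction, and $\hat{\sigma}_{e,j}(t)$ is the recursive estimate of the residual standard deviation:

$$\hat{\sigma}_{e,j}^2(t) = \alpha\, \hat{\sigma}_{e,j}^2(t-1) + (1 - \alpha)\left[x_j(t-1) - \hat{x}_j(t-1 \mid t-2)\right]^2.$$

The exponential smoothing parameter $\alpha$ ensures robustness to anomalies.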
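A simplified illustration of residual-based scoring follows. To stay self-contained, it swaps the BIC-selected ARIMA model for a least-squares AR($p$) fit on an initial training segment; in practice a library such as statsmodels would fit the ARIMA model. The exponentially smoothed variance update mirrors the recursive residual standard deviation estimate, while the mapping $2\Phi(|z|) - 1 = \operatorname{erf}(|z|/\sqrt{2})$ and all parameter defaults are assumptions. Function names are hypothetical.

```python
import math
import numpy as np

def lag_matrix(x, p):
    """Design matrix [1, x(t-1), ..., x(t-p)] for targets x(p), ..., x(len-1)."""
    return np.column_stack([np.ones(len(x) - p)] +
                           [x[p - i:len(x) - i] for i in range(1, p + 1)])

def evolution_scores(x, p=2, alpha=0.95, n_train=100):
    """Scores for t >= n_train from one-step AR(p) residuals (a stand-in for ARIMA),
    standardised by an exponentially smoothed residual variance."""
    x = np.asarray(x, dtype=float)
    train = x[:n_train]
    coef, *_ = np.linalg.lstsq(lag_matrix(train, p), train[p:], rcond=None)
    var = np.var(train[p:] - lag_matrix(train, p) @ coef)   # initial residual variance
    scores = np.zeros(len(x))
    for t in range(n_train, len(x)):
        pred = coef[0] + coef[1:] @ x[t - p:t][::-1]        # uses x(t-1), ..., x(t-p)
        e = x[t] - pred
        z = abs(e) / math.sqrt(var)
        scores[t] = math.erf(z / math.sqrt(2.0))            # equals 2 * Phi(|z|) - 1
        var = alpha * var + (1.0 - alpha) * e ** 2          # update only after scoring
    return scores
```

A value of `alpha` close to 1 makes a single large residual inflate the variance estimate only gradually, which is the robustness property the text attributes to the smoothing parameter.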

- (4) Integrated Anomaly Localization Decision

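The three anomaly scores capture anomaly patterns from different dimensions. The final feature anomaly determination employs an evidence fusion strategy:

$$A_j(t) = 1 - \left(1 - A_j^{\mathrm{ctx}}(t)\right)\left(1 - A_j^{\mathrm{cor}}(t)\right)\left(1 - A_j^{\mathrm{evo}}(t)\right),$$

where $A_j^{\mathrm{ctx}}(t)$, $A_j^{\mathrm{cor}}(t)$, and $A_j^{\mathrm{evo}}(t)$ are the context-, correlation-, and evolution-based scores of feature $j$ at time $t$. This probabilistic form ensures that strong anomaly evidence from any dimension can trigger detection. Feature $j$ at time $t$ is localized as anomalous if and only if

$$A_j(t) > \mu_j + \kappa_j\, \sigma_j,$$

where $\mu_j$ and $\sigma_j$ are the mean and standard deviation of historical anomaly indicators for feature $j$, respectively, and $\kappa_j$ is set differently for each feature according to its importance.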
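The fusion and decision steps amount to a noisy-OR combination followed by a per-feature threshold, sketched below. The per-feature multipliers `kappa` are inputs because the importance-based setting is not specified; the function names are hypothetical.

```python
import numpy as np

def fuse_feature_scores(a_ctx, a_cor, a_evo):
    """Noisy-OR evidence fusion, elementwise: 1 - (1 - a_ctx)(1 - a_cor)(1 - a_evo)."""
    return 1.0 - (1.0 - a_ctx) * (1.0 - a_cor) * (1.0 - a_evo)

def localize(a_now, history, kappa):
    """Flag feature j if its fused score exceeds mean_j + kappa_j * std_j of its
    historical fused scores. history has shape (T, D); a_now and kappa have shape (D,)."""
    mu, sd = history.mean(axis=0), history.std(axis=0)
    return a_now > mu + kappa * sd
```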

Through the above mechanisms, the MVCOD framework maps the anomalous time points $\mathcal{T}_a$ to the anomalous feature sets $\{\mathcal{F}_t\}_{t \in \mathcal{T}_a}$, completing the full outlier detection process. This two-stage design (first detecting anomalous moments, then localizing anomalous features) ensures comprehensive detection while providing interpretable anomaly analysis results.