Preliminary Analysis and Proof-of-Concept Validation of a Neuronally Controlled Visual Assistive Device Integrating Computer Vision with EEG-Based Binary Control

Abstract

1. Introduction

1.1. Literature Review on Related Work

1.2. Objective and Design of the Current Study

- Use of a non-invasive 32-electrode EEG headset to develop a fully integrated BCI and a machine learning-based classification algorithm that detects user-generated selection commands by analyzing concurrent physiological activity.

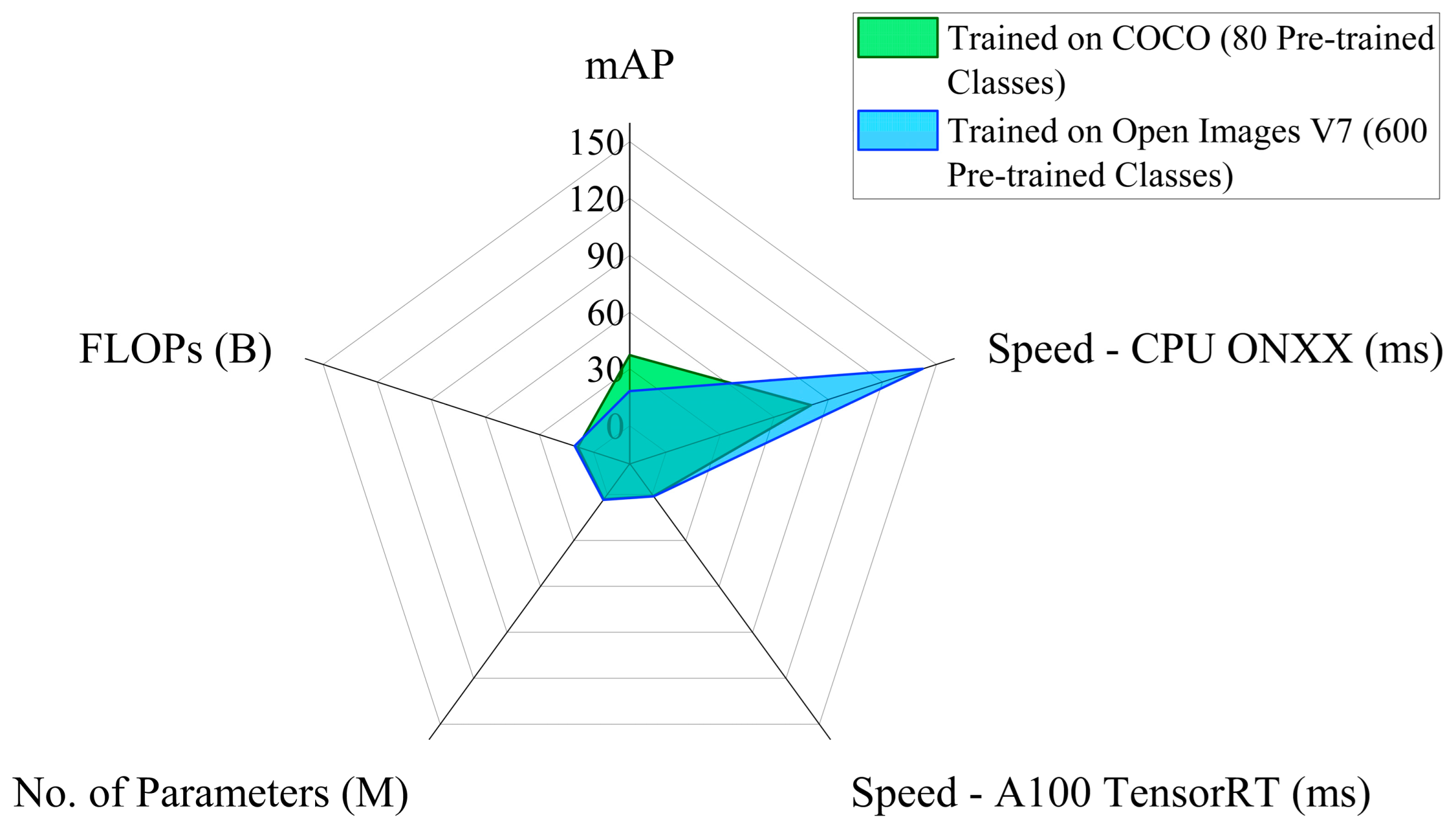

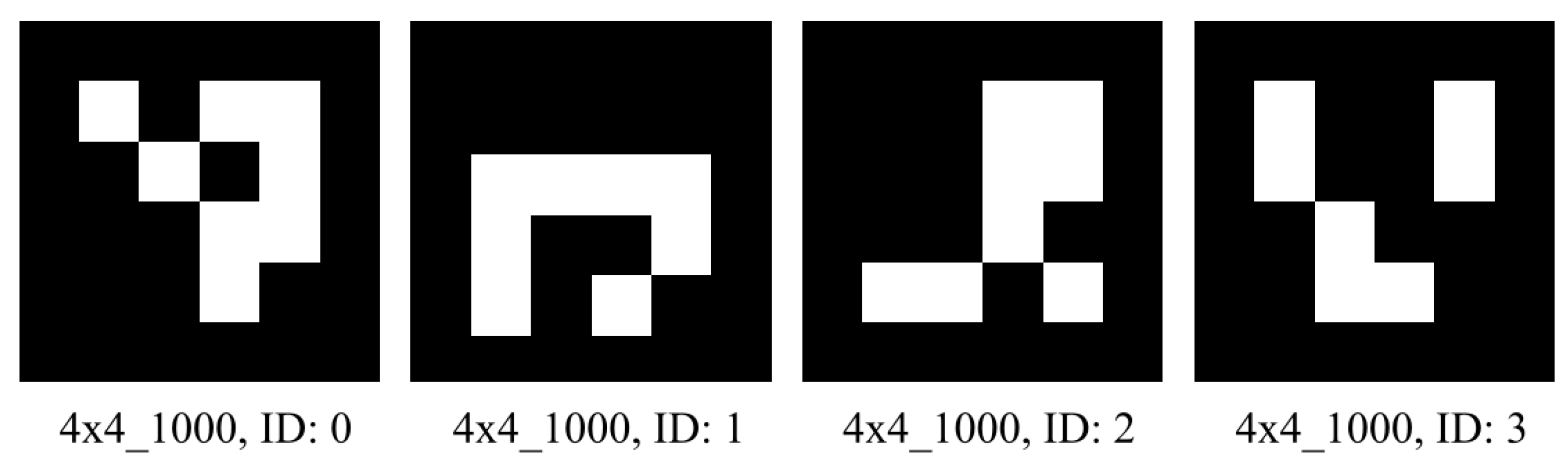

- Implementation of the YOLOv8n object detection algorithm together with multiple ArUco marker-based spatial transformations to identify targets and derive their real-world positional coordinates across the varied environments of daily use.

- Path planning optimization for the robotic manipulator using a gradient descent (GD) technique with adjustable learning rate and error threshold values, intended to improve the efficiency and flexibility of the robot controller compared with conventional control algorithms.

- Incorporation of modular system development methods to ensure future scalability and user-specific calibrations of the NCVAD system.

- Evaluation of the system’s usability through real-world experimental validation and human performance assessment, including temporal and behavioral analysis.
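The gradient-descent objective listed above can be illustrated with a minimal sketch. This is not the authors' controller: it minimizes the sum of squared error (SSE) between the end-effector position and a target for a hypothetical planar two-link arm. The link lengths, starting angles, learning rate, error threshold, and function names are all assumptions chosen for illustration.

```python
import math


def forward(theta, l1=1.0, l2=1.0):
    """End-effector (x, y) of a planar two-link arm (hypothetical geometry)."""
    t1, t2 = theta
    x = l1 * math.cos(t1) + l2 * math.cos(t1 + t2)
    y = l1 * math.sin(t1) + l2 * math.sin(t1 + t2)
    return x, y


def gd_inverse_kinematics(target, theta0, lr=0.05, threshold=1e-8,
                          max_iters=5000, l1=1.0, l2=1.0):
    """Gradient descent on SSE = ex^2 + ey^2.

    lr (learning rate) and threshold (error threshold) are the two
    adjustable values; both defaults are illustrative assumptions.
    Returns (theta, sse, iterations_used).
    """
    t1, t2 = theta0
    for iteration in range(max_iters):
        x, y = forward((t1, t2), l1, l2)
        ex, ey = x - target[0], y - target[1]
        sse = ex * ex + ey * ey
        if sse < threshold:
            return (t1, t2), sse, iteration
        # Jacobian of (x, y) with respect to (t1, t2).
        j11 = -l1 * math.sin(t1) - l2 * math.sin(t1 + t2)
        j12 = -l2 * math.sin(t1 + t2)
        j21 = l1 * math.cos(t1) + l2 * math.cos(t1 + t2)
        j22 = l2 * math.cos(t1 + t2)
        # Gradient of the SSE: 2 * J^T * e.
        g1 = 2.0 * (j11 * ex + j21 * ey)
        g2 = 2.0 * (j12 * ex + j22 * ey)
        t1 -= lr * g1
        t2 -= lr * g2
    x, y = forward((t1, t2), l1, l2)
    sse = (x - target[0]) ** 2 + (y - target[1]) ** 2
    return (t1, t2), sse, max_iters


theta, sse, iterations = gd_inverse_kinematics(target=(1.0, 1.0), theta0=(0.3, 0.3))
print(theta, sse, iterations)
```

Raising the learning rate shortens the iteration count up to the point where the updates overshoot, and loosening the threshold trades positional accuracy for speed, which is the kind of tuning the objective refers to.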

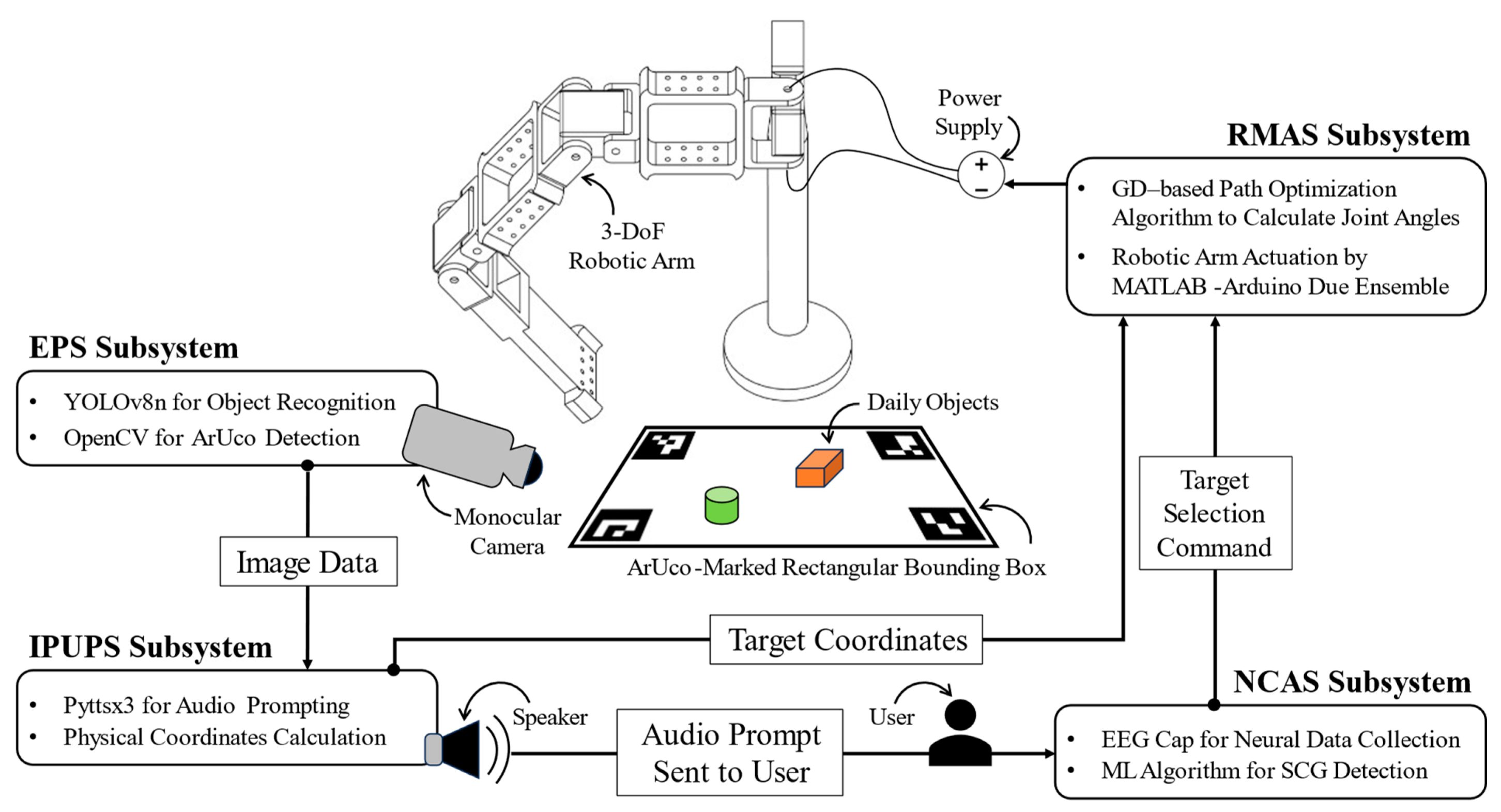

2. Materials and Methods to Describe the System Architecture

2.1. EPS for Image Data Extraction

2.2. IPUPS for Data Processing and Communication

2.3. NCAS for Selection Command Identification

2.3.1. Equipment for Data Acquisition and Processing

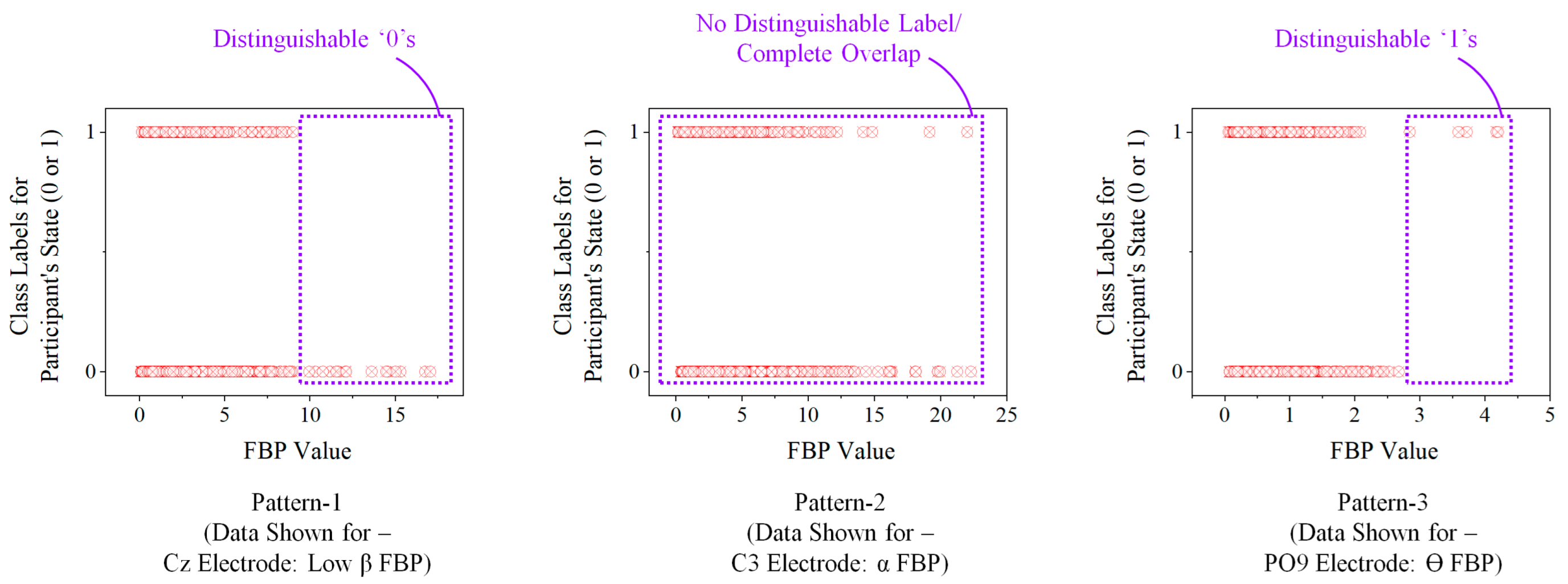

2.3.2. Data Collection and Analysis for Algorithm Development

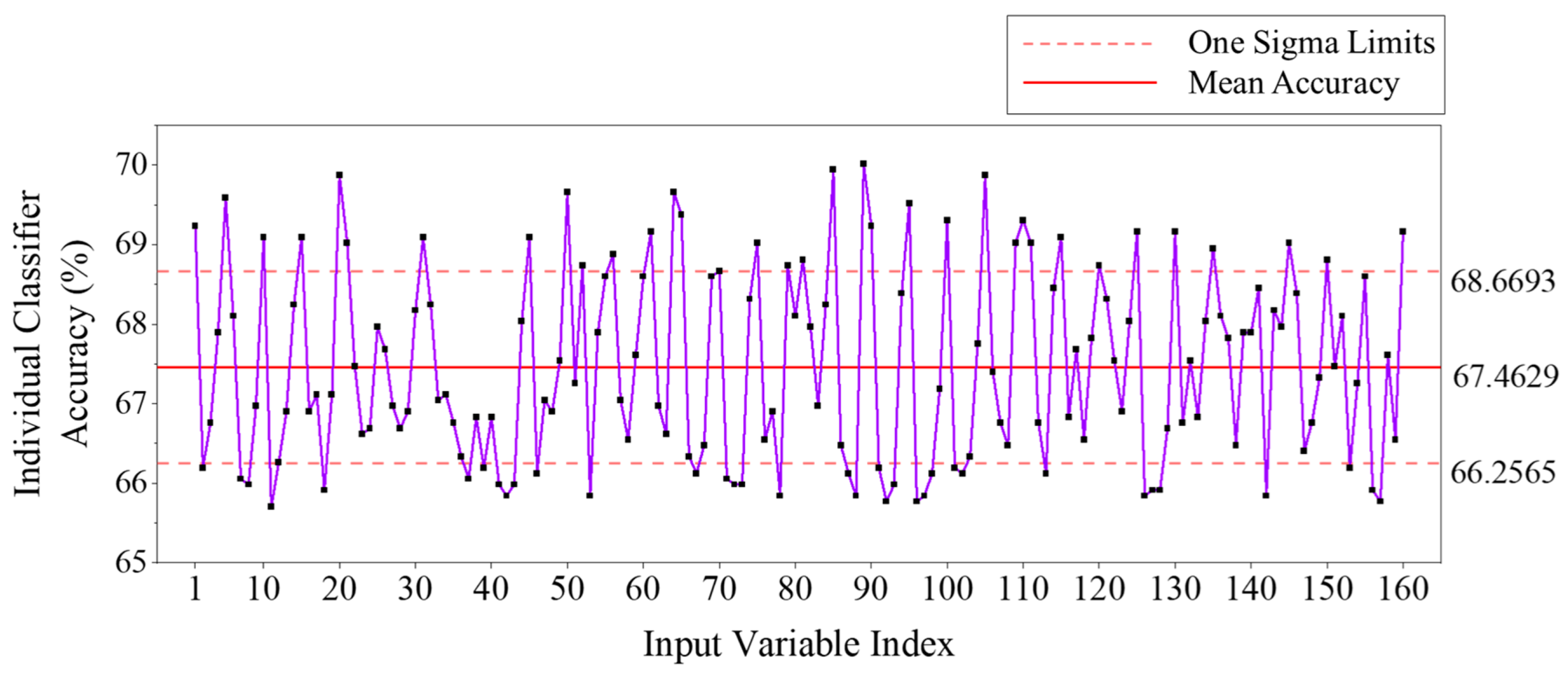

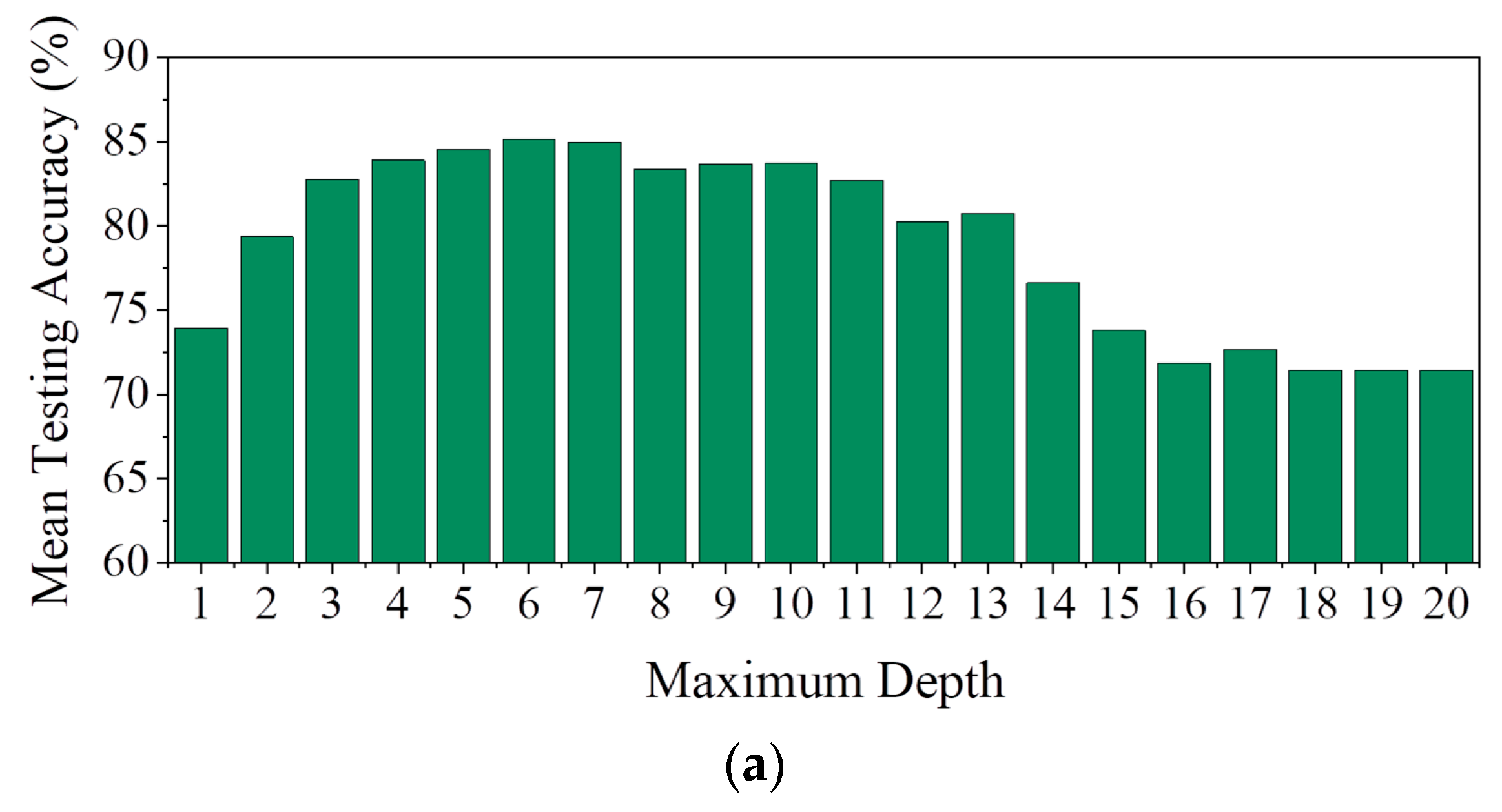

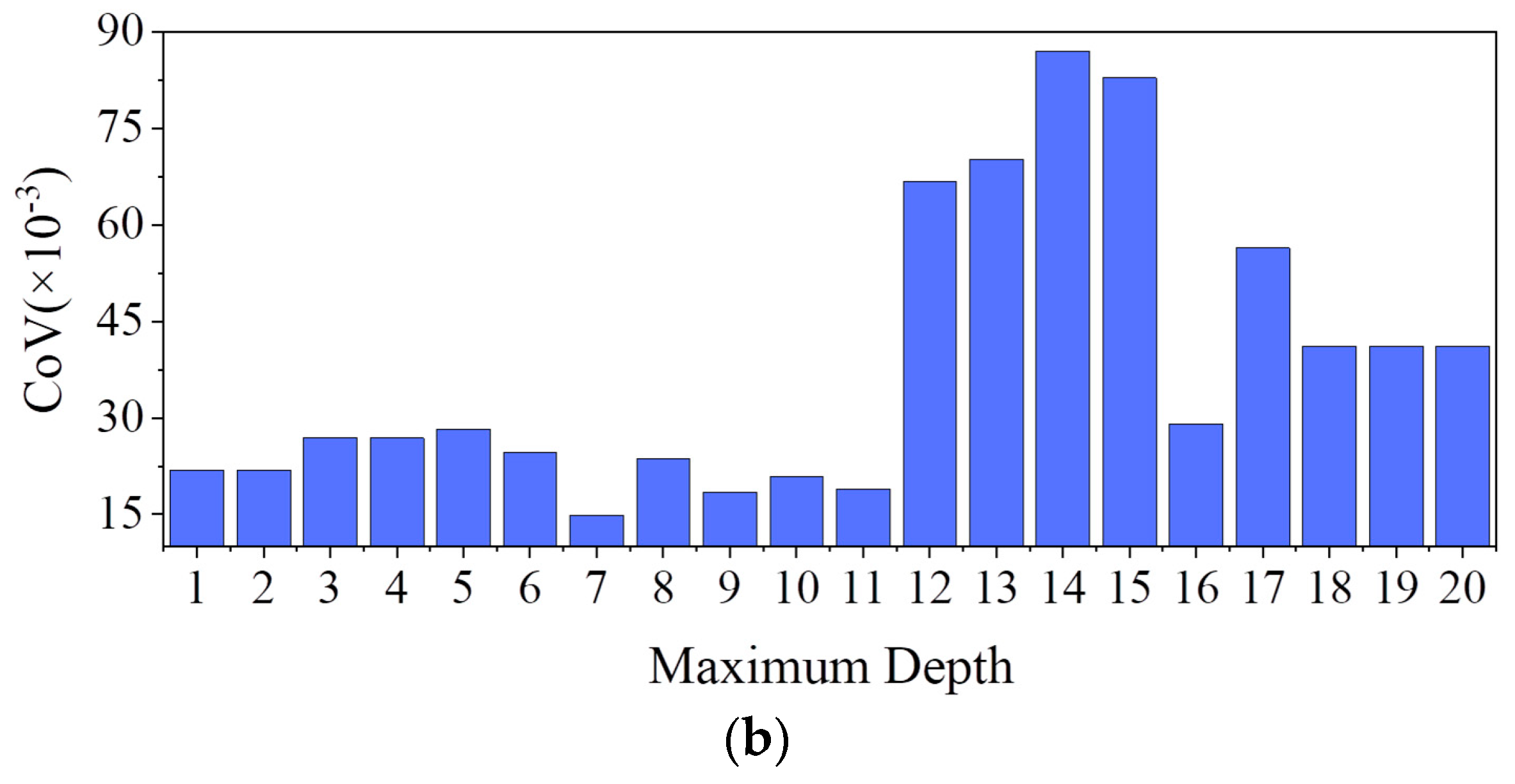

2.3.3. Ensemble Learning for SCDC Development

2.3.4. Algorithm Performance and Future Improvements

2.3.5. Computation Speed of the SCDC Model

2.4. RMAS for Robot Path Planning and Actuation

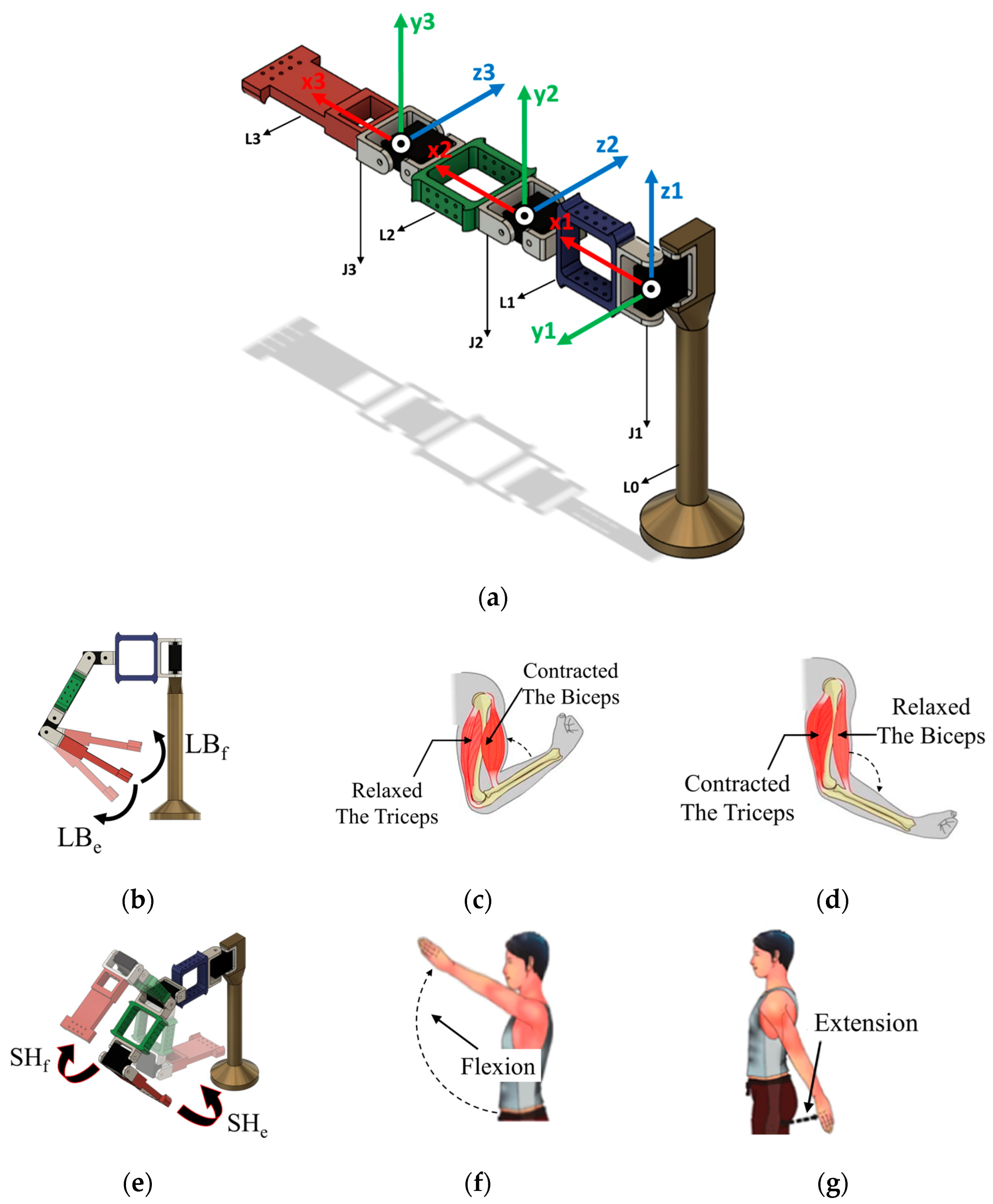

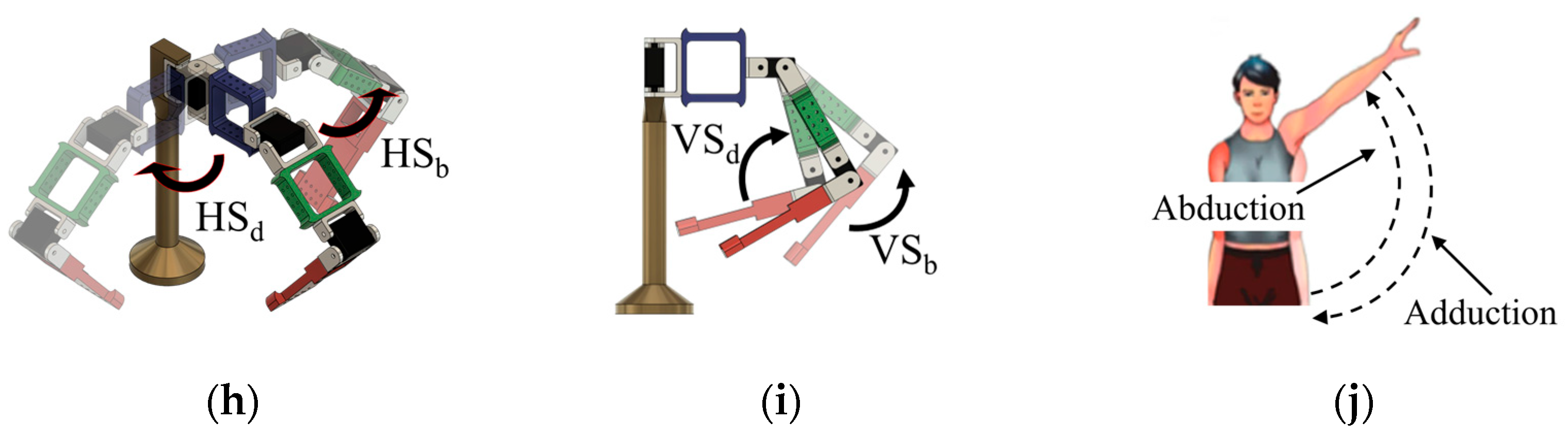

2.4.1. Robotic Arm Design

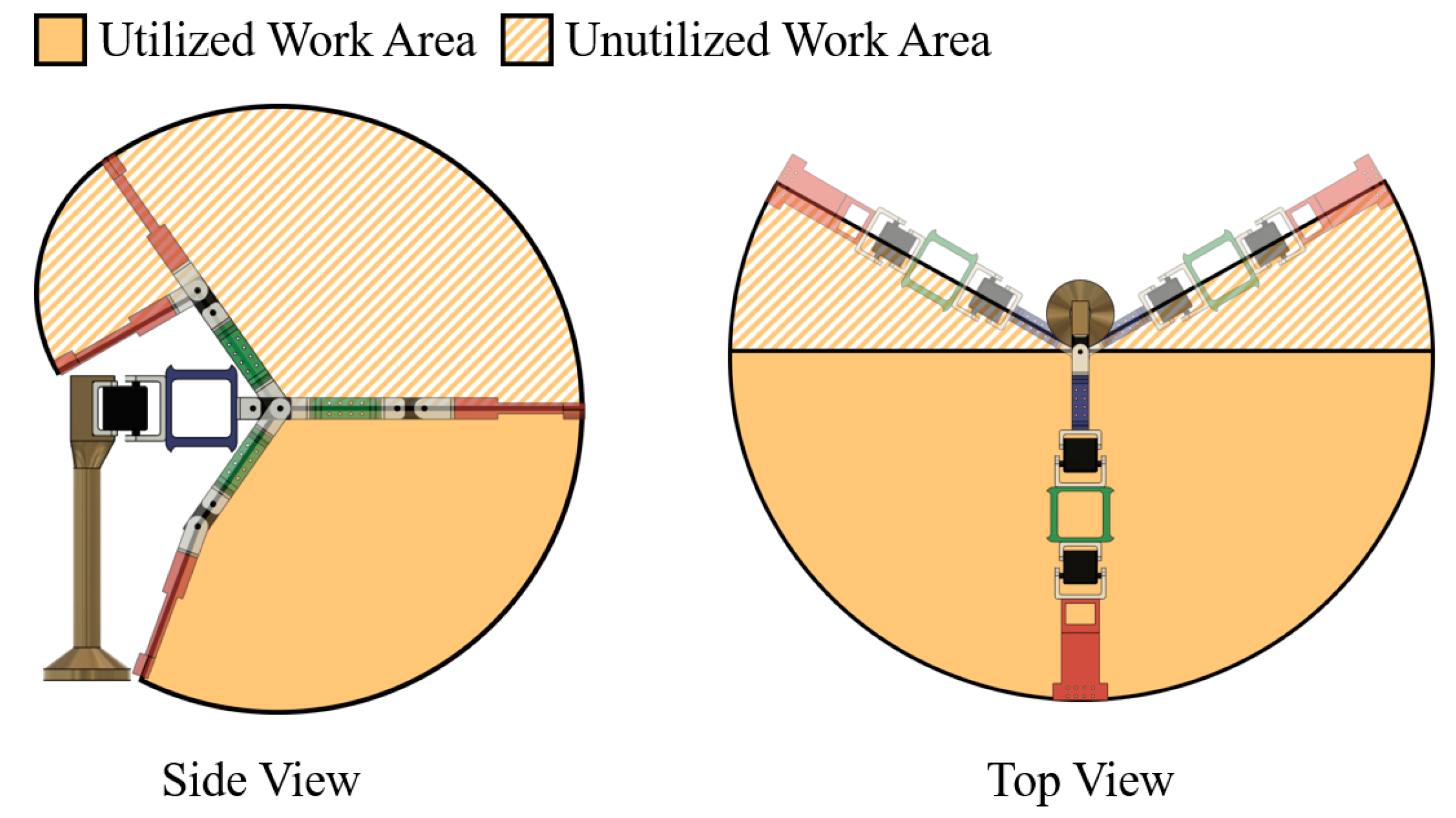

2.4.2. Forward Kinematics and Control Algorithm

2.4.3. Accuracy Assessment

2.4.4. Actuation Time to Different Points in the Work Zones

2.4.5. Latency Introduced by the Path Optimization Algorithm

2.5. System Calibration Procedures and Safety Protocols

2.5.1. Camera Calibration and ArUco Marker Placement

2.5.2. Robotic Arm Calibration and EEG-to-Actuation Synchronization

2.5.3. Safety Protocols for Error Handling

- EEG data misclassification.

- ArUco marker occlusion and visual obstruction.

- Servo actuation mismatch.

- Emergency override.

3. Results of the Experimental Validation of the Developed Physical NCVAD System as a Whole

3.1. Experiment Design for Physical System Validation

3.2. Validation of Results and Comparison with Similar Work in the Field of Study

4. Latency Results on Human Performance Evaluation

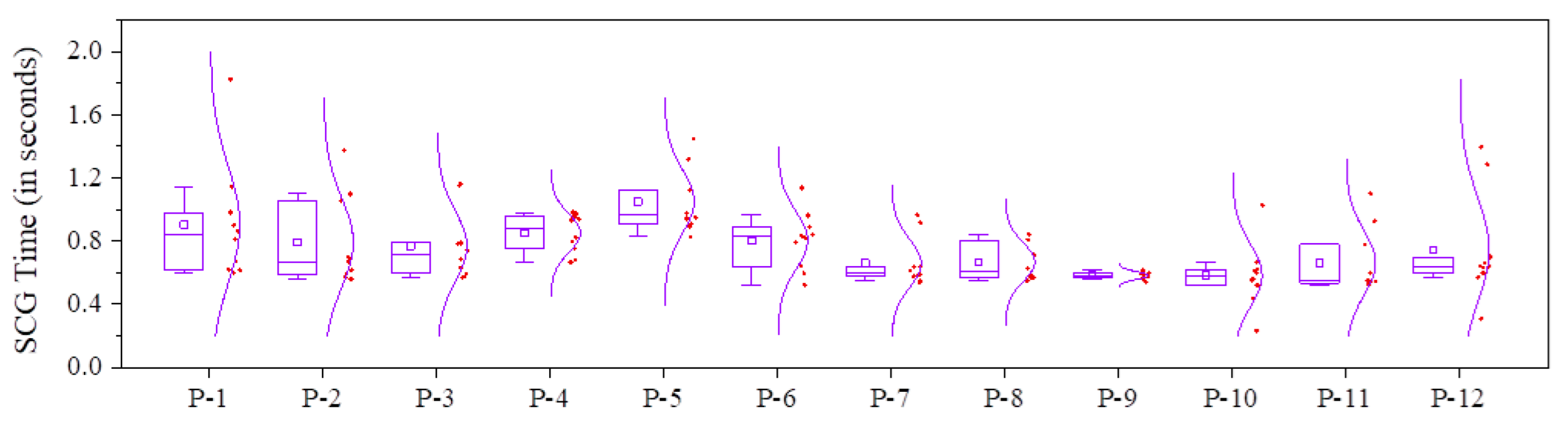

4.1. Experiment-1: Selection Command Generation and Registration

4.1.1. Experiment Design for Experiment-1

4.1.2. Participants and Experiment Results for Experiment-1

4.1.3. Behavioral Analysis for Experiment-1

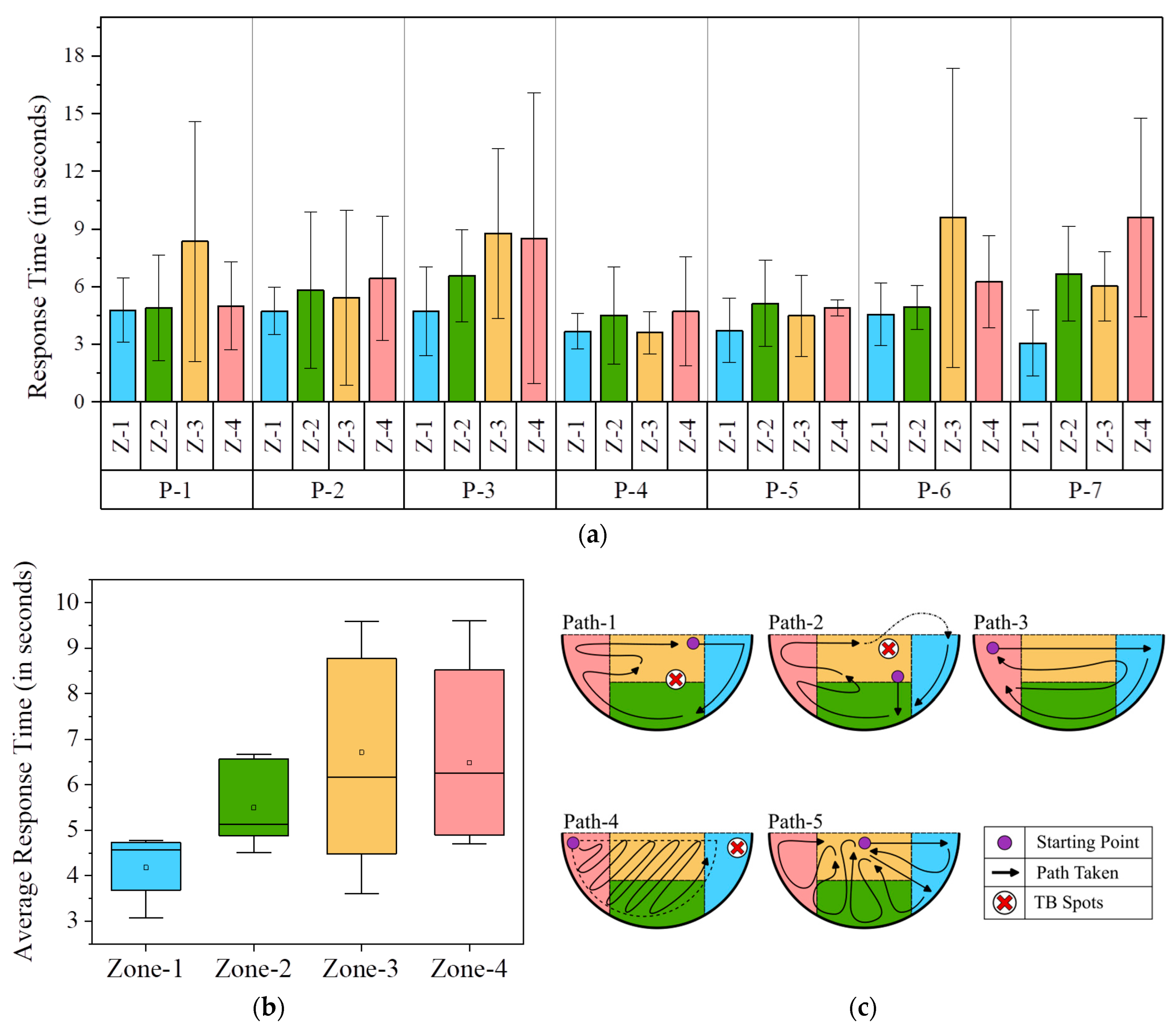

4.2. Experiment-2: Blindfolded Reach Task with Target and Decoys

4.2.1. Experiment Design for Experiment-2

4.2.2. Participants and Experiment Results for Experiment-2

4.2.3. Behavioral Analysis for Experiment-2

5. Discussions on Human Performance in Comparison to the Robotic Arm Actuation

6. Usability Standard and Benchmarks

7. Conclusions and Future Scope

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| VAD | Visual Assistive Device |
| NCVAD | Neuronally Controlled Visual Assistive Device |
| EEG | Electroencephalogram |
| BCI | Brain–Computer Interface |
| ERP | Event Related Potential |
| SSVEP | Steady State Visually Evoked Potential |
| YOLO | You Only Look Once |
| YOLOv8n | You Only Look Once, version 8, nano variant |
| mAP | Mean Average Precision |
| ArUco | Augmented Reality University of Cordoba |
| MATLAB | Matrix Laboratory |
| GD | Gradient Descent |
| POA | Path Optimization Algorithm |
| CV | Computer Vision |
| EPS | Environmental Perception Subsystem |
| IPUPS | Information Processing and User Prompting Subsystem |
| NCAS | Neural Command Acquisition Subsystem |
| RMAS | Robotic Manipulator Actuation Subsystem |
| CPU | Central Processing Unit |
| GPU | Graphics Processing Unit |
| ONNX | Open Neural Network Exchange |
| TensorRT | Tensor Runtime |
| FLOPs | Floating Point Operations |
| COCO | Common Objects in Context |
| TTS | Text-to-Speech |
| WPM | Words per Minute |
| PnP | Perspective-n-Point |
| FBP | Frequency Band Power |
| LSL | Lab Streaming Layer |
| SPS | Samples per Second |
| CMS | Common Mode Sense |
| DRL | Driven Right Leg |
| SNR | Signal-to-Noise Ratio |
| EMG | Electromyogram |
| EOG | Electro-oculogram |
| ICA | Independent Component Analysis |
| SCG | Selection Command Generation |
| SSD | Selection Statement Dictation |
| SCDC | Selection Command Detection Classifier |
| IID | Independently and Identically Distributed |
| CoV | Coefficient of Variation |
| AdaBoost | Adaptive Boosting |
| LR | Learning Rate |
| TP | True Positive |
| TN | True Negative |
| FP | False Positive |
| FN | False Negative |
| ANN | Artificial Neural Network |
| ReLU | Rectified Linear Unit |
| DoF | Degrees of Freedom |
| VDC | Volts Direct Current |
| EE | End Effector |
| IK | Inverse Kinematics |
| DH | Denavit-Hartenberg |
| SSE | Sum of Squared Error |
| Z-i | Zone-i |
| P-i | Participant-i |
| CI | Confidence Interval |
| IQR | Inter-Quartile Range |
| TBS | Tactile Blind Spots |
| TLX | Task Load Index |
| SUS | System Usability Scale |

References

- Elmannai, W.; Elleithy, K. Sensor-Based Assistive Devices for Visually-Impaired People: Current Status, Challenges, and Future Directions. Sensors 2017, 17, 565.

- Tapu, R.; Mocanu, B.; Tapu, E. A Survey on Wearable Devices Used to Assist the Visual Impaired User Navigation in Outdoor Environments. In Proceedings of the 2014 11th International Symposium on Electronics and Telecommunications (ISETC), Timisoara, Romania, 14–15 November 2014; pp. 1–4.

- Patel, K.; Parmar, B. Assistive Device Using Computer Vision and Image Processing for Visually Impaired; Review and Current Status. Disabil. Rehabil. Assist. Technol. 2022, 17, 290–297.

- Dahlin-Ivanoff, S.; Sonn, U. Use of Assistive Devices in Daily Activities among 85-Year-Olds Living at Home Focusing Especially on the Visually Impaired. Disabil. Rehabil. 2004, 26, 1423–1430.

- Zhang, X.; Huang, X.; Ding, Y.; Long, L.; Li, W.; Xu, X. Advancements in Smart Wearable Mobility Aids for Visual Impairments: A Bibliometric Narrative Review. Sensors 2024, 24, 7986.

- Okolo, G.I.; Althobaiti, T.; Ramzan, N. Assistive Systems for Visually Impaired Persons: Challenges and Opportunities for Navigation Assistance. Sensors 2024, 24, 3572.

- Panazan, C.-E.; Dulf, E.-H. Intelligent Cane for Assisting the Visually Impaired. Technologies 2024, 12, 75.

- Alimović, S. Benefits and Challenges of Using Assistive Technology in the Education and Rehabilitation of Individuals with Visual Impairments. Disabil. Rehabil. Assist. Technol. 2024, 19, 3063–3070.

- Liang, I.; Spencer, B.; Scheller, M.; Proulx, M.J.; Petrini, K. Assessing People with Visual Impairments’ Access to Information, Awareness and Satisfaction with High-Tech Assistive Technology. Br. J. Vis. Impair. 2024, 42, 149–163.

- Li, Y.; Kim, K.; Erickson, A.; Norouzi, N.; Jules, J.; Bruder, G.; Welch, G.F. A Scoping Review of Assistance and Therapy with Head-Mounted Displays for People Who Are Visually Impaired. ACM Trans. Access. Comput. 2022, 15, 1–28.

- Khuntia, P.K.; Manivannan, P.V. Review of Neural Interfaces: Means for Establishing Brain–Machine Communication. SN Comput. Sci. 2023, 4, 672.

- Zhang, J.; Zhang, Y.; Zhang, X.; Xu, B.; Zhao, H.; Sun, T.; Wang, J.; Lu, S.; Shen, X. A High-Performance General Computer Cursor Control Scheme Based on a Hybrid BCI Combining Motor Imagery and Eye-Tracking. iScience 2024, 27, 110164.

- Tang, J.; Liu, Y.; Hu, D.; Zhou, Z. Towards BCI-Actuated Smart Wheelchair System. BioMed. Eng. Online 2018, 17, 111.

- Ai, J.; Meng, J.; Mai, X.; Zhu, X. BCI Control of a Robotic Arm Based on SSVEP With Moving Stimuli for Reach and Grasp Tasks. IEEE J. Biomed. Health Inform. 2023, 27, 3818–3829.

- Normann, R.A.; Greger, B.A.; House, P.; Romero, S.F.; Pelayo, F.; Fernandez, E. Toward the Development of a Cortically Based Visual Neuroprosthesis. J. Neural Eng. 2009, 6, 035001.

- Fernandez, E. Development of Visual Neuroprostheses: Trends and Challenges. Bioelectron. Med. 2018, 4, 12.

- Ienca, M.; Valle, G.; Raspopovic, S. Clinical Trials for Implantable Neural Prostheses: Understanding the Ethical and Technical Requirements. Lancet Digit. Health 2025, 7, e216–e224.

- Wang, A.; Tian, X.; Jiang, D.; Yang, C.; Xu, Q.; Zhang, Y.; Zhao, S.; Zhang, X.; Jing, J.; Wei, N.; et al. Rehabilitation with Brain-Computer Interface and Upper Limb Motor Function in Ischemic Stroke: A Randomized Controlled Trial. Med 2024, 5, 559–569.e4.

- Chen, W.; Wang, R.; Yan, B.; Li, Y. EEG Brainwave Controlled Robotic Arm for Neurorehabilitation Training. CEAS 2023, 1, 1–8.

- Zhang, Y.; Li, Z.; Xu, H.; Song, Z.; Xie, P.; Wei, P.; Zhao, G. Neural Mass Modeling in the Cortical Motor Area and the Mechanism of Alpha Rhythm Changes. Sensors 2024, 25, 56.

- Zhu, H.Y.; Chen, H.-T.; Lin, C.-T. Understanding the Effects of Stress on the P300 Response during Naturalistic Simulation of Heights Exposure. PLoS ONE 2024, 19, e0301052.

- Rabbani, M.; Sabith, N.U.S.; Parida, A.; Iqbal, I.; Mamun, S.M.; Khan, R.A.; Ahmed, F.; Ahamed, S.I. EEG Based Real Time Classification of Consecutive Two Eye Blinks for Brain Computer Interface Applications. Sci. Rep. 2025, 15, 21007.

- Sciaraffa, N.; Borghini, G.; Di Flumeri, G.; Cincotti, F.; Babiloni, F.; Aricò, P. Joint Analysis of Eye Blinks and Brain Activity to Investigate Attentional Demand during a Visual Search Task. Brain Sci. 2021, 11, 562.

- Chapter 3 Eye and Eyelid Movements during Blinking: An Eye Blink Centre? In Supplements to Clinical Neurophysiology; Elsevier: Amsterdam, The Netherlands, 2006; pp. 16–25. ISBN 978-0-444-52071-5.

- Allami Sanjani, M.; Tahami, E.; Veisi, G. A Review of Surface Electromyography Applications for the Jaw Muscles Characterization in Rehabilitation and Disorders Diagnosis. Med. Nov. Technol. Devices 2023, 20, 100261.

- Lin, X.; Takaoka, R.; Moriguchi, D.; Morioka, S.; Ueda, Y.; Yamamoto, R.; Ono, E.; Ishigaki, S. Electromyographic Evaluation of Masseteric Activity during Maximum Opening in Patients with Temporomandibular Disorders and Limited Mouth Opening. Sci. Rep. 2025, 15, 12743.

- Vélez, L.; Kemper, G. Algorithm for Detection of Raising Eyebrows and Jaw Clenching Artifacts in EEG Signals Using Neurosky Mindwave Headset. In Smart Innovation, Systems and Technologies, Proceedings of the 5th Brazilian Technology Symposium, Campinas, Brazil, 16–18 October 2024; Iano, Y., Arthur, R., Saotome, O., Kemper, G., Borges Monteiro, A.C., Eds.; Springer International Publishing: Cham, Switzerland, 2021; Volume 202, pp. 99–110. ISBN 978-3-030-57565-6.

- Khoshnam, M.; Kuatsjah, E.; Zhang, X.; Menon, C. Hands-Free EEG-Based Control of a Computer Interface Based on Online Detection of Clenching of Jaw. In Bioinformatics and Biomedical Engineering; Rojas, I., Ortuño, F., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2017; Volume 10208, pp. 497–507. ISBN 978-3-319-56147-9.

- Bhuvan, H.S.; Manjudarshan, G.N.; Aditya, S.M.; Kulkarni, K. Neuro Controlled Object Retrieval System. Int. J. Sci. Res. Comput. Sci. Eng. Inf. Technol. 2024, 10, 862–867.

- Shin, K.; Lee, M.; Chang, M.; Bae, Y.M.; Chang, W.; Kim, Y.-J. Enhancing Visual Perception for People with Blindness: A Feasibility Study of a 12-Channel Forehead ElectroTactile Stimulator with a Stereo Camera. 2024.

- Ultralytics YOLOv8. Available online: https://docs.ultralytics.com/models/yolov8 (accessed on 21 February 2025).

- Sohan, M.; Sai Ram, T.; Rami Reddy, C.V. A Review on YOLOv8 and Its Advancements. In Data Intelligence and Cognitive Informatics; Jacob, I.J., Piramuthu, S., Falkowski-Gilski, P., Eds.; Algorithms for Intelligent Systems; Springer Nature: Singapore, 2024; pp. 529–545. ISBN 978-981-99-7999-8.

- Varghese, R.; Sambath, M. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; IEEE: New York, NY, USA, 2024; pp. 1–6.

- Bakirci, M. Utilizing YOLOv8 for Enhanced Traffic Monitoring in Intelligent Transportation Systems (ITS) Applications. Digit. Signal Process. 2024, 152, 104594.

- Barlybayev, A.; Amangeldy, N.; Kurmetbek, B.; Krak, I.; Razakhova, B.; Tursynova, N.; Turebayeva, R. Personal Protective Equipment Detection Using YOLOv8 Architecture on Object Detection Benchmark Datasets: A Comparative Study. Cogent Eng. 2024, 11, 2333209.

- Zhang, S.; Li, A.; Ren, J.; Li, X. An Enhanced YOLOv8n Object Detector for Synthetic Diamond Quality Evaluation. Sci. Rep. 2024, 14, 28035.

- Xu, L.; Zhao, Y.; Zhai, Y.; Huang, L.; Ruan, C. Small Object Detection in UAV Images Based on YOLOv8n. Int. J. Comput. Intell. Syst. 2024, 17, 223.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Lecture Notes in Computer Science, Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; Volume 8693, pp. 740–755. ISBN 978-3-319-10601-4.

- COCO—Common Objects in Context. Available online: https://cocodataset.org/#home (accessed on 21 February 2025).

- Xu, Z.; Haroutunian, M.; Murphy, A.J.; Neasham, J.; Norman, R. An Underwater Visual Navigation Method Based on Multiple ArUco Markers. JMSE 2021, 9, 1432.

- Čepon, G.; Ocepek, D.; Kodrič, M.; Demšar, M.; Bregar, T.; Boltežar, M. Impact-Pose Estimation Using ArUco Markers in Structural Dynamics. Exp. Tech. 2024, 48, 369–380.

- Van Putten, A.; Giersberg, M.F.; Rodenburg, T.B. Tracking Laying Hens with ArUco Marker Backpacks. Smart Agric. Technol. 2025, 10, 100703.

- Tocci, T.; Capponi, L.; Rossi, G. ArUco Marker-Based Displacement Measurement Technique: Uncertainty Analysis. Eng. Res. Express 2021, 3, 035032.

- Smith, T.J.; Smith, T.R.; Faruk, F.; Bendea, M.; Tirumala Kumara, S.; Capadona, J.R.; Hernandez-Reynoso, A.G.; Pancrazio, J.J. Real-Time Assessment of Rodent Engagement Using ArUco Markers: A Scalable and Accessible Approach for Scoring Behavior in a Nose-Poking Go/No-Go Task. eNeuro 2024, 11, ENEURO.0500-23.2024.

- Sosnik, R.; Zheng, L. Reconstruction of Hand, Elbow and Shoulder Actual and Imagined Trajectories in 3D Space Using EEG Current Source Dipoles. J. Neural Eng. 2021, 18, 056011.

- Wang, J.; Bi, L.; Fei, W.; Tian, K. EEG-Based Continuous Hand Movement Decoding Using Improved Center-Out Paradigm. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 2845–2855.

- Robinson, N.; Guan, C.; Vinod, A.P. Adaptive Estimation of Hand Movement Trajectory in an EEG Based Brain–Computer Interface System. J. Neural Eng. 2015, 12, 066019.

- Li, C.; He, Z.; Lu, K.; Fang, C. Bird Species Detection Net: Bird Species Detection Based on the Extraction of Local Details and Global Information Using a Dual-Feature Mixer. Sensors 2025, 25, 291.

- Wang, C.; Verma, A.K.; Guragain, B.; Xiong, X.; Liu, C. Classification of Bruxism Based on Time-Frequency and Nonlinear Features of Single Channel EEG. BMC Oral Health 2024, 24, 81.

- Bin Heyat, M.B.; Akhtar, F.; Khan, A.; Noor, A.; Benjdira, B.; Qamar, Y.; Abbas, S.J.; Lai, D. A Novel Hybrid Machine Learning Classification for the Detection of Bruxism Patients Using Physiological Signals. Appl. Sci. 2020, 10, 7410.

- Tripathi, P.; Ansari, M.A.; Gandhi, T.K.; Albalwy, F.; Mehrotra, R.; Mishra, D. Computational Ensemble Expert System Classification for the Recognition of Bruxism Using Physiological Signals. Heliyon 2024, 10, e25958.

- Zhang, Z.; Chai, X.; Guan, K.; Liu, T.; Xu, J.; Fan, Y.; Niu, H. Detections of Steady-State Visual Evoked Potential and Simultaneous Jaw Clench Action from Identical Occipital Electrodes: A Hybrid Brain-Computer Interface Study. J. Med. Biol. Eng. 2021, 41, 914–923.

- Chio, N.; Quiles-Cucarella, E. A Bibliometric Review of Brain–Computer Interfaces in Motor Imagery and Steady-State Visually Evoked Potentials for Applications in Rehabilitation and Robotics. Sensors 2024, 25, 154.

- Cai, Y.; Meng, Z.; Huang, D. DHCT-GAN: Improving EEG Signal Quality with a Dual-Branch Hybrid CNN–Transformer Network. Sensors 2025, 25, 231.

- Özkahraman, A.; Ölmez, T.; Dokur, Z. Performance Improvement with Reduced Number of Channels in Motor Imagery BCI System. Sensors 2024, 25, 120.

- Zhang, S.; Cao, D.; Hou, B.; Li, S.; Min, H.; Zhang, X. Analysis on Variable Stiffness of a Cable-Driven Parallel–Series Hybrid Joint toward Wheelchair-Mounted Robotic Manipulator. Adv. Mech. Eng. 2019, 11, 1687814019846289.

- Guan, S.; Yuan, Z.; Wang, F.; Li, J.; Kang, X.; Lu, B. Multi-Class Motor Imagery Recognition of Single Joint in Upper Limb Based on Multi-Domain Feature Fusion. Neural Process. Lett. 2023, 55, 8927–8945.

- Denavit, J.; Hartenberg, R.S. A Kinematic Notation for Lower-Pair Mechanisms Based on Matrices. J. Appl. Mech. 1955, 22, 215–221.

- Khuntia, P.K.; Manivannan, P.V. Comparative Analysis of Bio-Inspired and Numerical Control Paradigms for a Visual Assistive Device. SN Comput. Sci. 2025, 6, 486.

- Mirzaei, A.; Khaligh-Razavi, S.-M.; Ghodrati, M.; Zabbah, S.; Ebrahimpour, R. Predicting the Human Reaction Time Based on Natural Image Statistics in a Rapid Categorization Task. Vis. Res. 2013, 81, 36–44.

- Wong, A.L.; Goldsmith, J.; Forrence, A.D.; Haith, A.M.; Krakauer, J.W. Reaction Times Can Reflect Habits Rather than Computations. eLife 2017, 6, e28075.

- Thorpe, S.; Fize, D.; Marlot, C. Speed of Processing in the Human Visual System. Nature 1996, 381, 520–522.

- Masud, U.; Baig, M.I.; Akram, F.; Kim, T.-S. A P300 Brain Computer Interface Based Intelligent Home Control System Using a Random Forest Classifier. In Proceedings of the 2017 IEEE Symposium Series on Computational Intelligence (SSCI), Honolulu, HI, USA, 27 November–1 December 2017; IEEE: New York, NY, USA; pp. 1–5.

- Akram, F.; Alwakeel, A.; Alwakeel, M.; Hijji, M.; Masud, U. A Symbols Based BCI Paradigm for Intelligent Home Control Using P300 Event-Related Potentials. Sensors 2022, 22, 10000.

- ISO 9241-11:2018; International Organization for Standardization—Ergonomics of Human–System Interaction—Part 11: Usability—Definitions and Concepts. International Organization for Standardization: Geneva, Switzerland, 2019.

- ISO 9241-210:2019; International Organization for Standardization—Ergonomics of Human–System Interaction—Part 210: Human-Centred Design for Interactive Systems. International Organization for Standardization: Geneva, Switzerland, 2019.

- Zander, T.O.; Kothe, C. Towards Passive Brain–Computer Interfaces: Applying Brain–Computer Interface Technology to Human–Machine Systems in General. J. Neural Eng. 2011, 8, 025005.

- Frey, J.; Daniel, M.; Castet, J.; Hachet, M.; Lotte, F. Framework for Electroencephalography-Based Evaluation of User Experience. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; ACM: New York, NY, USA, 2016; pp. 2283–2294.

- Hart, S.G. Nasa-Task Load Index (NASA-TLX); 20 Years Later. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2006, 50, 904–908.

- Brooke, J. SUS: A Quick and Dirty Usability Scale. Usability Eval. Ind. 1995, 189, 4–7.

- Bangor, A.; Kortum, P.T.; Miller, J.T. An Empirical Evaluation of the System Usability Scale. Int. J. Hum.-Comput. Interact. 2008, 24, 574–594.

- Klug, B. An Overview of the System Usability Scale in Library Website and System Usability Testing. Weav. J. Libr. User Exp. 2017, 1.

- Self-Reported Metrics. In Measuring the User Experience; Elsevier: Amsterdam, The Netherlands, 2013; pp. 121–161. ISBN 978-0-12-415781-1.

| Hardware Component | Respective Activity | Parent Subsystem | Technical Specification |
|---|---|---|---|
| Monocular Camera | Image Capturing | EPS | Make: Logitech C270 HD; Resolution: 720p at 30 FPS; Sensor: 0.9 MP CMOS; Field of View: 55–60°; Interface: USB-A 2.0; Dimensions: 72.9 × 31.9 × 66.6 mm; Compatibility: Windows 7+, USB-A; Type: Monocular Vision System |
| Speaker System | Audio Prompting | IPUPS | Make: Realtek ALC3287; Power Rating: 2 W; Type: Stereo Sound System |
| EEG Headset | Neural Data Acquisition | NCAS | Make: Emotiv EPOC Flex EEG Saline Kit; No. of Channels: 32; Referencing: CMS/DRL; Configuration: 10–20 international system of placement; Resolution: 16-bit ADC; Filter: Digital fifth order sinc filter; Notching: 50 Hz; Bandwidth: 0.2–45 Hz; Sampling Rate: 2048 Hz (internal), 128 SPS (EEG stream), 8 SPS (FBP stream); Connectivity: 2.4 GHz USB receiver |
| Servo Motors | Joint Actuations of the Robotic Arm | RMAS | Name: RDS3115-MG; Current: Direct Current (DC); Rotation Angle: 0–270°; Operating Voltage: 4.8–7.2 V; Torque Rating: 13.5–17 kg-cm; Current Drawn: 4–5 mA (idle), 1.8–2.2 A (stall); Dimensions: 40 × 20 × 40 mm |
| Power Supply Unit | Power the Servo Motors for Joint Actuation | RMAS | Make: Agilent E3634A; DC Output Range: 0–25 V, up to 7 A; Power Rating: 175–200 W; Voltage Accuracy: ±(0.05% + 10 mV); Current Accuracy: ±(0.2% + 10 mA); Noise: <500 µVrms/3 mVp-p, <2 mArms; Interfaces: GPIB, RS-232; Protection: Over Current (OC) and Over Voltage (OV) protection; Form Factor: 213 × 133 × 348 mm, 9.5 kg |
| Embedded Controller | Send Control Signal to the Servo Motors | RMAS | Make: Arduino Due; Microcontroller: Atmel SAM3X8E ARM Cortex-M3; Microcontroller Spec: 32-bit, 84 MHz; Flash Memory: 512 KB; SRAM: 96 KB (64 + 32 KB); Operating Voltage: 3.3 V; Digital I/O Pins: 54; Serial Ports: 4 × UART; Total I/O Output Current: 130 mA; Board Size: 101.5 × 53.3 mm |
| Laptop | Algorithms Deployment and Overall Task Control | All Subsystems | OS: Microsoft Windows 11; Version: 10.0.26100; System Type: x64-based PC; Processor: i7-13650HX, 2600 MHz; Cores: 14; RAM: 24.0 GB; Interface: Intel(R) USB 3.20 |

| Joint | θ | α | a | d |
|---|---|---|---|---|
| J1 | θ1 * | π/2 | a1 | a0 |
| J2 | θ2 * | 0 | a2 | 0 |
| J3 | θ3 * | 0 | a3 | 0 |
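As a hedged illustration of how the Denavit-Hartenberg rows above translate into an end-effector position, the sketch below chains one homogeneous transform per joint. It assumes the standard (distal) DH convention, which this section does not confirm, and the link lengths passed in at the bottom are hypothetical placeholders rather than the arm's real dimensions.

```python
import math


def dh_transform(theta, alpha, a, d):
    """4x4 homogeneous transform for one joint (standard DH convention, assumed)."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(A, B):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def end_effector_position(thetas, a0, a1, a2, a3):
    """Chain the three table rows and return the end-effector (x, y, z)."""
    rows = [
        (thetas[0], math.pi / 2, a1, a0),  # J1: alpha = pi/2, a = a1, d = a0
        (thetas[1], 0.0, a2, 0.0),         # J2: alpha = 0,    a = a2, d = 0
        (thetas[2], 0.0, a3, 0.0),         # J3: alpha = 0,    a = a3, d = 0
    ]
    T = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for theta, alpha, a, d in rows:
        T = matmul(T, dh_transform(theta, alpha, a, d))
    return T[0][3], T[1][3], T[2][3]


# Hypothetical link lengths in metres, for illustration only.
print(end_effector_position((0.0, math.pi / 2, 0.0), a0=0.10, a1=0.05, a2=0.20, a3=0.15))
```

With all joint angles at zero the arm lies stretched along the base x-axis at height a0; rotating the second joint by π/2 swings the last two links vertical, which gives a quick sanity check of the parameter assignment.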

| Zone | Average Time Taken (in Seconds) | Standard Deviation (in Seconds) | Maximum Time Needed with 95% CI (in Seconds) |
|---|---|---|---|
| Zone-1 | 0.556 | 0.071274 | 0.600176 |
| Zone-2 | 0.414 | 0.036469 | 0.436604 |
| Zone-3 | 0.480 | 0.053385 | 0.513089 |
| Zone-4 | 0.620 | 0.035355 | 0.641913 |
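The "maximum time needed with 95% CI" values in the table above can be reproduced, to within rounding, as the upper bound of a normal-approximation confidence interval, mean + 1.96 · SD / √n. The sketch below assumes n = 10 trials per zone; that sample size is not stated in this section and is inferred only because it matches the tabulated numbers.

```python
import math

Z_95 = 1.96      # two-sided 95% quantile of the standard normal distribution
N_TRIALS = 10    # assumption: inferred from the tabulated values, not stated in the text

# (mean, standard deviation) in seconds, copied from the table.
zones = {
    "Zone-1": (0.556, 0.071274),
    "Zone-2": (0.414, 0.036469),
    "Zone-3": (0.480, 0.053385),
    "Zone-4": (0.620, 0.035355),
}


def ci_upper(mean, sd, n=N_TRIALS, z=Z_95):
    """Upper bound of the confidence interval for the mean."""
    return mean + z * sd / math.sqrt(n)


upper_bounds = {zone: ci_upper(mean, sd) for zone, (mean, sd) in zones.items()}
for zone, bound in upper_bounds.items():
    print(f"{zone}: {bound:.6f} s")
```

The same formula with n = 30 appears to reproduce the millisecond-scale table that follows, again as an inference from the numbers rather than a documented sample size.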

| Zone | Average Time Taken (in Milliseconds) | Standard Deviation (in Milliseconds) | Maximum Time Needed with 95% CI (in Milliseconds) |
|---|---|---|---|
| Zone-1 | 12.4704 | 0.6921 | 12.7181 |
| Zone-2 | 12.9060 | 1.3820 | 13.4005 |
| Zone-3 | 11.7888 | 0.0449 | 11.8048 |
| Zone-4 | 11.9322 | 0.2958 | 12.0381 |

| Activity Description | Responsible Subsystem | Latency Introduced (in Seconds) |
|---|---|---|
| ArUco Detection and Object Identification | Environmental Perception Subsystem (EPS) | 80.400 × 10⁻³ |
| Audio Prompting (with 1500 ms delay) and Coordinate Calculation | Information Processing and User Prompting Subsystem (IPUPS) | 25.301 |
| EEG Data Acquisition and Jaw-Clenching Classification | Neural Command Acquisition Subsystem (NCAS) | 0.368 |
| GD-based POA for Coordinate Calculation | Robotic Manipulator Actuation Subsystem (RMAS) | 0.424 × 10⁻³ |
| Robotic Arm Actuation for Reach Task | Robotic Manipulator Actuation Subsystem (RMAS) | 0.548 |
| Overall Time Taken for All Activities | The Neuronally Controlled Visual Assistive Device (NCVAD) | 26.297 * |
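The overall figure in the last row is the sum of the five stage latencies. A short sketch makes the budget explicit and shows how much of it each subsystem consumes; the dictionary keys are shortened labels introduced here for illustration, while the values are those of the table.

```python
# Stage latencies in seconds, taken from the table above.
latencies_s = {
    "EPS: ArUco detection and object identification": 80.400e-3,
    "IPUPS: audio prompting and coordinate calculation": 25.301,
    "NCAS: EEG acquisition and jaw-clench classification": 0.368,
    "RMAS: GD-based POA": 0.424e-3,
    "RMAS: robotic arm actuation": 0.548,
}

total_s = sum(latencies_s.values())
shares = {stage: value / total_s for stage, value in latencies_s.items()}

print(f"Total: {total_s:.3f} s")
for stage, share in sorted(shares.items(), key=lambda item: item[1], reverse=True):
    print(f"{stage}: {100 * share:.2f}%")
```

Laid out this way, the audio prompting stage accounts for roughly 96% of the end-to-end time, so the perception, classification, and actuation stages together contribute only about one second.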

| Author Names | Benchmark: Methods Used/Targets Achieved | Benchmark: Obtained Performance Parameters | Current Study: Methods Used/Targets Achieved | Current Study: Obtained Performance Parameters |
|---|---|---|---|---|
| Vélez et al. [27] | Neurosky Mindwave headset used for jaw-clenching detection | 18 out of 20 correct detections | Emotiv EPOC Flex Headset used for jaw-clenching detection | 26 out of 30 correct detections |
| Masud et al. [63] | P300 event related potential-based EEG data classification | Accuracy achieved: 87.5% | Jaw-clenching trigger-based EEG data classification | Accuracy achieved: 86.67% |
| Khoshnam et al. [28] | EEG-based control for movement in virtual environment using jaw-clenching trigger | Sensitivity achieved: 80% | EEG-based control for real-time actuation of robotic arm using jaw-clenching trigger | Sensitivity achieved: 96.29% |
| Akram et al. [64] | P300 event-related potential-based paradigm for intelligent home control | Time taken for selection process: 31.5 s | Jaw-clenching-based EEG paradigm for assistive robotic arm control | Average time taken for all processes: 26.3 s |
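For readers who want to relate the accuracy and sensitivity figures in the table to raw counts, the sketch below uses the textbook definitions. The accuracy input (26 correct out of 30) comes from the table; the true-positive and false-negative counts passed to `sensitivity` are purely hypothetical, picked because they land close to the reported 96.29%, and the actual confusion matrix is not given in this section.

```python
def accuracy(correct, total):
    """Fraction of all trials that were classified correctly."""
    return correct / total


def sensitivity(true_positive, false_negative):
    """True-positive rate: TP / (TP + FN)."""
    return true_positive / (true_positive + false_negative)


# 26 of 30 correct detections, as reported in the table.
current_accuracy = accuracy(26, 30)

# Hypothetical counts (assumption): 26 detected commands, 1 missed command.
illustrative_sensitivity = sensitivity(true_positive=26, false_negative=1)

print(f"Accuracy: {100 * current_accuracy:.2f}%")
print(f"Sensitivity (hypothetical counts): {100 * illustrative_sensitivity:.2f}%")
```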

| User Evaluation Metrics | Definition as per ISO 9241-11 | Corresponding Implementation in the Current Study |
|---|---|---|
| Effectiveness | Accuracy and completeness in accomplishing tasks | Selection accuracies and percentage calculations per trial |
| Efficiency | Resources expended relative to task goals | Task completion time and reaction latency evaluation |
| Satisfaction | User comfort and acceptability | Post-session questionnaires for user feedback on system efficacy |

| S. No. | Subscale | Raw Score by Participant (0–20) | Scaled Score (0–100) | Notes and Remarks |
|---|---|---|---|---|
| 1 | Mental Demand: How mentally demanding was the task? | 8 | 40 | Somewhat low mental demand |
| 2 | Physical Demand: How physically demanding was the task? | 2 | 10 | Very low physical demand |
| 3 | Temporal Demand: How hurried or rushed was the pace of the task? | 11 | 55 | Moderate temporal demand |
| 4 | * Performance: How successful were you in accomplishing what you were asked to do? | 3 | 15 | Participants perceive high success rate |
| 5 | Effort: How hard did you have to work to accomplish your level of performance? | 4 | 20 | Low effort by participant |
| 6 | Frustration: How insecure, discouraged, irritated, stressed, and annoyed were you? | 1 | 10 | Very low frustration reported by the participant (except for mild under confidence during the first trial of the experiment) |
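One common way to condense the six subscales into a single workload figure is the unweighted "Raw TLX", the plain average of the scaled scores. The sketch below applies it to the scaled column exactly as printed in the table; whether the study used this unweighted form or the pairwise-weighted NASA-TLX procedure is not stated in this section, so the choice is an assumption.

```python
# Scaled subscale scores (0-100), copied from the table as printed.
scaled_scores = {
    "Mental Demand": 40,
    "Physical Demand": 10,
    "Temporal Demand": 55,
    "Performance": 15,
    "Effort": 20,
    "Frustration": 10,
}


def raw_tlx_score(scores):
    """Unweighted Raw TLX: arithmetic mean of the six scaled subscales."""
    return sum(scores.values()) / len(scores)


raw_tlx = raw_tlx_score(scaled_scores)
print(f"Raw TLX: {raw_tlx:.1f} / 100")
```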

| S. No. | Questionnaire | Strongly Disagree (1) | Disagree (2) | Neutral (3) | Agree (4) | Strongly Agree (5) |
|---|---|---|---|---|---|---|
| 1 | I think that I would like to use this system frequently. | × | | | | |
| 2 | I found the system unnecessarily complex. | × | | | | |
| 3 | I thought the system was easy to use. | × | | | | |
| 4 | I think that I would need the support of a technical person to be able to use this system. | × | | | | |
| 5 | I found the various functions in this system were well integrated. | × | | | | |
| 6 | I thought there was too much inconsistency in this system. | × | | | | |
| 7 | I would imagine that most people would learn to use this system very quickly. | × | | | | |
| 8 | I found the system very cumbersome to use. | × | | | | |
| 9 | I felt very confident using the system. | × | | | | |
| 10 | I needed to learn a lot of things before I could start working with this system. | × | | | | |
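The ten statements above follow the standard System Usability Scale wording, for which the conventional scoring rule is: odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a 0–100 score. The sketch below implements that rule; the example responses are hypothetical and are not the participant's answers.

```python
def sus_score(responses):
    """Standard SUS scoring for ten responses on a 1-5 scale (item 1 first)."""
    if len(responses) != 10:
        raise ValueError("SUS needs exactly ten responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1   # positively worded item
        else:
            total += 5 - response   # negatively worded item
    return total * 2.5


# Hypothetical responses (assumption): agree with positive items, disagree with negative ones.
example_responses = [4, 2, 4, 2, 4, 2, 4, 2, 4, 2]
print(sus_score(example_responses))
```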

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Khuntia, P.K.; Bhide, P.S.; Manivannan, P.V. Preliminary Analysis and Proof-of-Concept Validation of a Neuronally Controlled Visual Assistive Device Integrating Computer Vision with EEG-Based Binary Control. Sensors 2025, 25, 5187. https://doi.org/10.3390/s25165187
