1. Introduction

Machinery fault diagnosis plays a critical role in ensuring operational reliability, offering guidance for timely mechanical maintenance and preventing unnecessary economic losses. Traditional fault diagnosis methods rely heavily on diagnosticians, who make diagnostic decisions by analyzing the monitored signals; such processes are labor-intensive, and diagnosticians often struggle to promptly process large volumes of monitored signals [1]. Currently, intelligent fault diagnosis approaches are gaining increasing attention; they replace human diagnosticians with artificial intelligence techniques to rapidly process large amounts of collected signals [2].

The general framework of intelligent fault diagnosis includes data acquisition, feature extraction, feature selection, and fault classification [3]. In such a framework, a large number of mechanical signals, which provide the most intrinsic information about machinery faults, are first acquired. These signals can be collected by installed sensors, such as accelerometers, or can be simulated according to the principles of mechanical dynamics. As stated in [4], the simulated data can be used to clearly detect failures in physical bearing measurements. Subsequently, the acquired signals are processed to extract representative features, among which the most salient features are then selected. Lastly, a supervised classifier is employed to classify different mechanical faults based on the selected features. Among these stages, feature selection is crucial, because the extracted features may have a large dimensionality, which may increase the computational cost of the subsequent classifier and degrade its classification accuracy [5]. Feature selection helps mitigate these issues by removing irrelevant or redundant features and improves the classification accuracy by retaining the most discriminative features [6].

In general, feature selection methods can be broadly categorized as filters and wrappers [7]. Filter approaches score each feature based on certain evaluation criteria and select the top-ranked features, while wrapper approaches couple feature selection with a classifier and select the feature subset that maximizes its classification accuracy. Compared to wrapper approaches, filter approaches are more time-efficient, making them more applicable in the field of mechanical fault diagnosis. In earlier research, Lei et al. [5] applied the distance evaluation technique (DET) for the fault diagnosis of a heavy oil catalytic cracking unit, where the diagnostic accuracy increased from 86.18% to 100% after feature selection. Zheng et al. [8] selected five features from twenty original features for identifying rolling bearing faults using the Laplacian score (LS), thus achieving 100% testing accuracy. Recently, Vakharia et al. [9] employed ReliefF for the fault diagnosis of bearings, resulting in an accuracy improvement of 6.45% after feature selection. Li et al. [10] utilized minimal redundancy–maximal relevance (mRMR) to select four sensitive features from twenty features for the fault diagnosis of planetary gearboxes; a diagnostic accuracy of 96.94% was achieved. Additionally, Wei et al. [11] and Wang et al. [12] applied self-weight (SW) to the fault diagnosis of rolling bearings. This literature review highlights the successful application of filters in machine fault diagnosis. However, the aforementioned studies share a common limitation: only a single filter (e.g., LS, DET, ReliefF, SW, or mRMR) is adopted. Meanwhile, each filter utilizes a different metric (e.g., entropy, distance, or locality), which may lead to variable performance across datasets. Specifically, a filter that performs well on one dataset may perform poorly on another [13].

Ensemble learning holds the potential to overcome the aforementioned weakness, and an ensemble of filters has been investigated in data classification [14]. The generalization capability of ensemble learning is theoretically supported by statistics: reducing model complexity and enhancing base learner diversity help minimize the generalization error [15]. In [14], several filters and a specific classifier are adopted. Each filter is integrated with the classifier to create a base model. In this model, the filter selects a unique subset of features used to train and test the classifier. The outputs of all base models are combined using a simple voting strategy. Experimental results demonstrate that the ensemble of filters outperforms each individual filter in most cases and achieves good performance independently of the dataset. Despite the improved performance achieved by studies using ensemble filters, two drawbacks remain. First, each filter in the ensemble selects the same number of features for classification, which is predefined by users. This may fail to yield the optimal result because different filters have distinct characteristics; to achieve the same result, one filter may need to select many features, whereas another may need only a few. Second, the voting strategy has the following three limitations: (1) It is difficult to make a correct decision when two classes acquire the same maximum number of votes. (2) Base models are prone to arbitrary decisions when two classes are extremely similar. (3) The voting strategy requires that most base models perform well, but this cannot always be guaranteed.

Moreover, the classifier also plays an indispensable role in an ensemble model, as it recognizes different categories based on the selected features. Over the past few decades, various classifiers have been developed, with the sparse representation classifier (SRC) [16] offering a novel approach to classification. It has been extensively applied in many fields, including face recognition [17], anomaly detection [18], and fault diagnosis [19], with excellent classification accuracies reported. Nonetheless, the standard SRC utilizes all training samples to represent the testing sample and solves the representation coefficients through greedy algorithms, which makes it computationally intensive.

To resolve the above-mentioned problems, this paper proposes an ensemble approach for the intelligent fault diagnosis of machinery. In this approach, six filters are each combined with an improved sparse representation classifier (ISRC) to form six base models. For each base model, binary particle swarm optimization (BPSO) is employed to optimize its two hyper-parameters, which relate to the filter and the ISRC, respectively. After optimization, each filter selects a feature subset, which is then used to train and test the ISRC. Finally, the outputs of the six ISRCs are aggregated by the cumulative reconstruction residual (CRR) strategy instead of voting. The effectiveness of the proposed approach is verified using six mechanical datasets on bearings and gears.

In summary, the contributions of this paper are as follows.

(1) To overcome the drawback of the SRC, we propose the ISRC, which inherits the high classification accuracy of the standard SRC while being less time-consuming.

(2) To overcome the limitations of the voting strategy, CRR is proposed, which is capable of making more accurate and reliable decisions than the commonly used voting approach.

(3) To enhance the generalization ability of the diagnosis model, an ensemble approach is presented by integrating the base models; meanwhile, BPSO is adopted to optimize the two hyper-parameters in each base model to achieve better performance.

The remainder of this paper is organized as follows. Relevant findings from the literature are briefly reviewed in Section 2. The proposed ISRC is presented in Section 3. Detailed descriptions of the proposed ensemble approach for the intelligent fault diagnosis of machines are provided in Section 4. In Section 5, several comparative experiments are conducted to validate the effectiveness of the proposed approach. Finally, Section 6 concludes this paper.

3. The Improved Sparse Representation Classifier

The fundamental rationale of the SRC is to first represent a testing sample as a linear combination of the training samples with sparse representation coefficients. Subsequently, the class of the testing sample is determined by the reconstruction residual.

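Suppose $X = \{x_i^c \in \mathbb{R}^D \mid c = 1, \dots, C;\ i = 1, \dots, N_c\}$ represents a training set containing N samples belonging to C categories, where $x_i^c$ denotes the i-th sample of the c-th class, and D is the dimension. $N_c$ is the number of samples in the c-th class. For convenience, the samples of the same class are listed together in the training set, which comprises a matrix, $X = [X_1, X_2, \dots, X_C] \in \mathbb{R}^{D \times N}$. Given a testing sample, $y \in \mathbb{R}^D$, the SRC aims to solve the following problem:

$$\hat{\alpha} = \arg\min_{\alpha} \|\alpha\|_0 \quad \text{s.t.} \quad \|y - X\alpha\|_2 \le \varepsilon \tag{3}$$

where $\varepsilon$ is a small error constant, $\alpha$ denotes the representation coefficients, and $\|\cdot\|_0$ counts the nonzero entries of $\alpha$. In general, the problem in (3) can be solved by orthogonal matching pursuit (OMP) [25] to acquire an optimal sparse solution, $\hat{\alpha}$. Assuming that $\hat{\alpha}_c$ corresponds to the representation coefficients of $X_c$, the reconstruction residual of the c-th class samples is defined as

$$e_c(y) = \|y - X_c \hat{\alpha}_c\|_2 \tag{4}$$

Based on Equation (4), the label of $y$ corresponds to the class with the minimum reconstruction residual, i.e., $\mathrm{label}(y) = \arg\min_{c} e_c(y)$.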

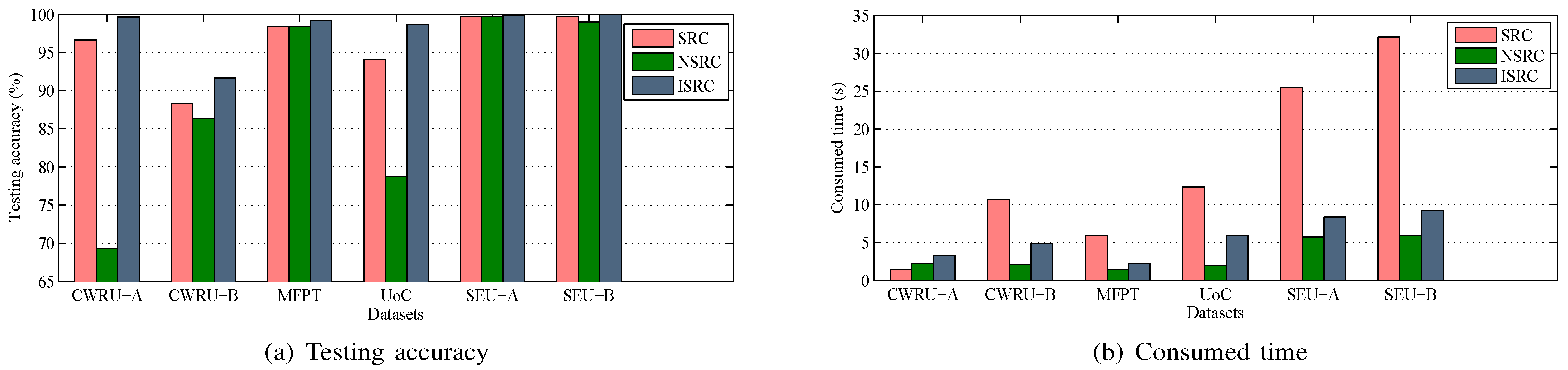

The SRC has the advantages of high classification accuracy and few tunable hyper-parameters; nevertheless, it is considerably time-consuming because it solves the representation coefficients using all the training samples and depends on an iterative algorithm to acquire a solution [26].
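The SRC decision rule described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names `omp` and `src_predict`, the column-wise data layout, and the stopping tolerance `eps` are assumptions made for illustration, and the greedy loop is a plain textbook form of OMP.

```python
import numpy as np

def omp(X, y, eps=1e-6, max_atoms=None):
    """Greedy OMP sketch for: min ||a||_0  s.t.  ||y - X a||_2 <= eps.
    X is (D, N) with one training sample per column (assumed layout)."""
    n_atoms = X.shape[1]
    max_atoms = n_atoms if max_atoms is None else max_atoms
    norms = np.linalg.norm(X, axis=0)
    norms[norms == 0] = 1.0                      # guard against all-zero columns
    support, coef = [], np.zeros(0)
    residual = y.astype(float).copy()
    while np.linalg.norm(residual) > eps and len(support) < max_atoms:
        corr = np.abs(X.T @ residual) / norms    # correlation with each column
        if support:
            corr[support] = -np.inf              # never pick a column twice
        support.append(int(np.argmax(corr)))
        # Re-fit all selected columns jointly by least squares.
        coef, *_ = np.linalg.lstsq(X[:, support], y, rcond=None)
        residual = y - X[:, support] @ coef
    alpha = np.zeros(n_atoms)
    alpha[support] = coef
    return alpha

def src_predict(X, labels, y, eps=1e-6):
    """Return (predicted class, class-wise reconstruction residuals)."""
    alpha = omp(X, y, eps)
    classes = np.unique(labels)
    residuals = np.array([
        np.linalg.norm(y - X[:, labels == c] @ alpha[labels == c])
        for c in classes
    ])
    return classes[int(np.argmin(residuals))], residuals

# Toy usage: two classes, two training samples each, in three dimensions.
X = np.array([[1.0, 0.9, 0.0, 0.0],
              [0.0, 0.1, 1.0, 0.9],
              [0.0, 0.0, 0.0, 0.1]])
labels = np.array([0, 0, 1, 1])
y = np.array([1.0, 0.02, 0.0])
label, residuals = src_predict(X, labels, y)
print(label, residuals)
```

Every test sample triggers an iterative search over all N columns, which is the cost the text attributes to the SRC.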

To alleviate the aforementioned problems, Xu et al. [26] put forward a simple and fast modified SRC. The basic idea of their method is to select only the nearest training sample of the testing sample from each class and then express the testing sample as a linear combination of the selected training samples, where the representation coefficients can be easily solved by the least squares algorithm; we call this approach the NSRC. After obtaining the representation coefficients, each selected training sample is employed to reconstruct the testing sample, as expressed in Equation (4), and the predicted label of the testing sample corresponds to the category with the minimum reconstruction residual. In comparison with the SRC, the NSRC represents the testing sample as a linear combination of the selected C samples rather than all training samples. Additionally, the NSRC uses a least squares approach instead of iterative algorithms such as OMP. As a result, the NSRC is inherently faster and less computationally demanding than the SRC. However, the NSRC may lose classification accuracy because it relies on only one training sample from each class to represent the testing sample, which limits its representation capability and increases the risk of misclassification.

Aiming to resolve the above issues, this paper develops an improved sparse-representation-based classifier (ISRC), and its basic steps are as follows.

(1) Select the k nearest training samples of the testing sample, $y$, from every class based on Euclidean distance, which are denoted as $\{\tilde{x}_i^c \mid c = 1, \dots, C;\ i = 1, \dots, k\}$. Here, $\tilde{x}_i^c$ denotes the i-th selected training sample in the c-th class.

(2) Use the selected samples to represent $y$, i.e., $y \approx \sum_{c=1}^{C} \sum_{i=1}^{k} \beta_i^c \tilde{x}_i^c$, where $\beta_i^c$ denotes the representation coefficient corresponding to $\tilde{x}_i^c$, and these representation coefficients can be solved efficiently through the least squares approach.

(3) Calculate the reconstruction residual of the c-th class, which is a normalized value:

where

. Here,

can measure the deviation degree of

from the samples in the

c-th class; the smaller it is, the more likely

is to be from the corresponding class.

(4) Determine the predicted label of the testing sample, which is the class with the minimum reconstruction residual.

Some analyses of the proposed ISRC are presented here. First, the ISRC can be viewed as a special case of the SRC. Indeed, if the linear combination of the ISRC is rewritten as a linear combination of all the training samples, the representation coefficients of the unselected samples are simply set to zero. In the ISRC, only kC training samples (k from each of the C classes) instead of all training samples are selected to represent the testing sample, and the representation coefficients are solved using the least squares approach rather than an iterative algorithm. Hence, the ISRC is naturally less time-consuming than the SRC, thereby inheriting the computational efficiency advantage of the NSRC. Second, the ISRC is a generalized version of the NSRC, since the former can adjust k to pursue better classification performance, while the latter is a fixed classifier with k = 1. Thus, with a proper choice of k, the ISRC has sufficient capability to represent the testing sample, resulting in higher classification accuracy than the NSRC.
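The four ISRC steps can be sketched in a few lines of NumPy. This is an illustrative sketch rather than the authors' code: the function name `isrc_predict` and the column-wise layout are assumptions, and, because the exact normalization of the residual is not reproduced here, the sketch assumes each class residual is divided by the sum of all class residuals.

```python
import numpy as np

def isrc_predict(X, labels, y, k=3):
    """ISRC sketch. X: (D, N) training matrix, one sample per column;
    labels: (N,) class of each column; y: (D,) testing sample."""
    classes = np.unique(labels)
    selected, owner = [], []
    for c in classes:
        Xc = X[:, labels == c]
        # Step 1: k nearest training samples of y within class c (Euclidean).
        dist = np.linalg.norm(Xc - y[:, None], axis=0)
        nearest = np.argsort(dist)[:k]
        selected.append(Xc[:, nearest])
        owner.extend([c] * len(nearest))
    A = np.hstack(selected)            # at most k*C selected samples
    owner = np.array(owner)
    # Step 2: representation coefficients by ordinary least squares.
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    # Step 3: class-wise reconstruction residuals.
    raw = np.array([
        np.linalg.norm(y - A[:, owner == c] @ beta[owner == c])
        for c in classes
    ])
    total = raw.sum()
    # ASSUMPTION: normalize by the sum of residuals so the values sum to 1.
    residuals = raw / total if total > 0 else raw
    # Step 4: the class with the minimum residual is the predicted label.
    return classes[int(np.argmin(residuals))], residuals

# Toy usage: four dimensions, two classes, three training samples per class.
X = np.array([[1.0, 0.0, 5.0, 0.0, 0.0, -5.0],
              [0.0, 0.0, 5.0, 1.0, 0.0, -5.0],
              [0.0, 1.0, 5.0, 0.0, 0.0, -5.0],
              [0.0, 0.0, 5.0, 0.0, 1.0, -5.0]])
labels = np.array([0, 0, 0, 1, 1, 1])
y = np.array([0.8, 0.1, 0.5, 0.0])
label, residuals = isrc_predict(X, labels, y, k=2)
print(label, residuals)
```

Setting `k=1` in this sketch reduces it to the NSRC behavior described above, which is one way to see the ISRC as its generalization.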

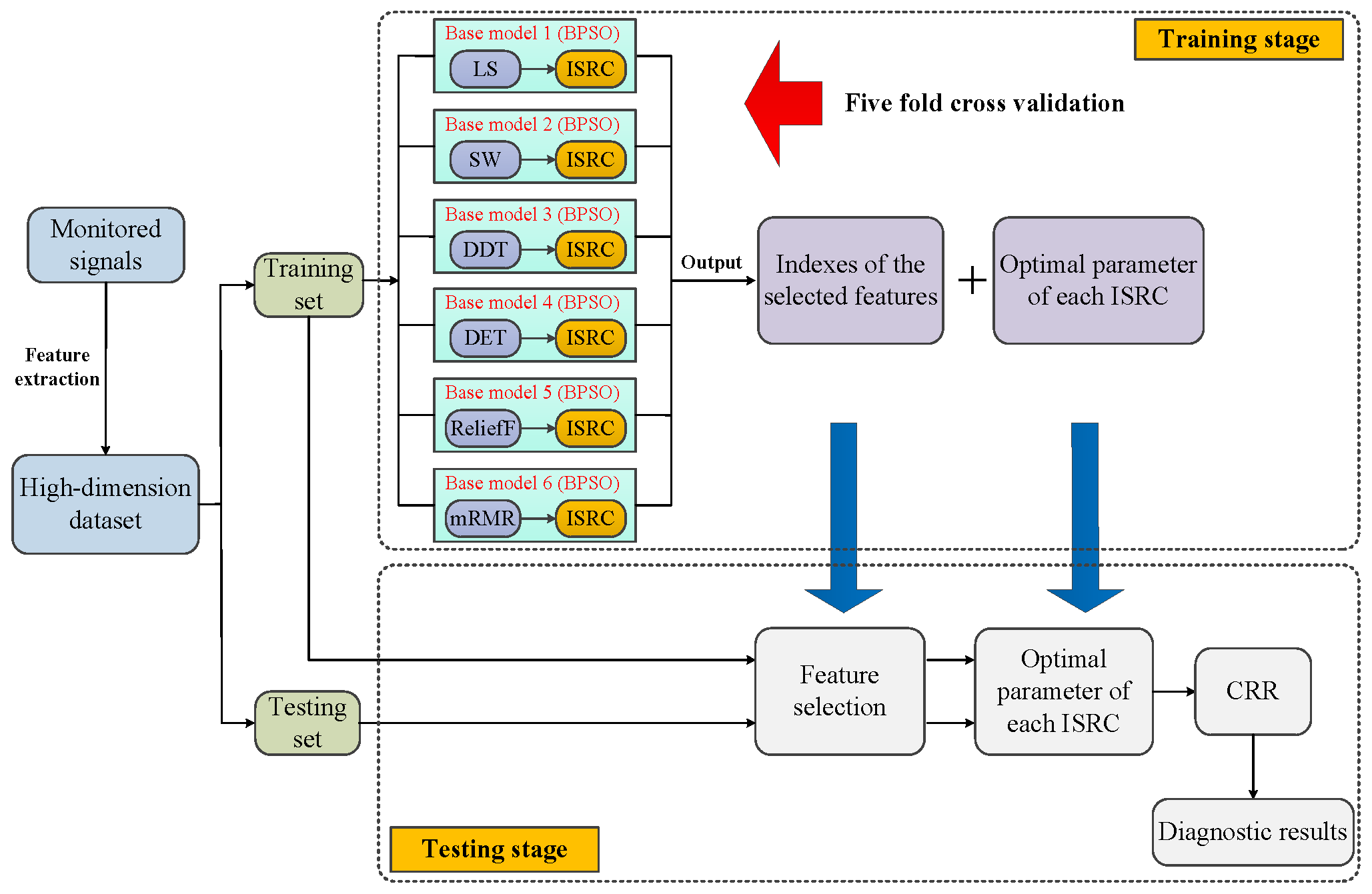

4. The Proposed Ensemble Approach for Fault Diagnosis of Machinery

Based on six filters, BPSO, and the ISRC, we propose an ensemble approach that aggregates six base models. Each base model consists of a filter and the ISRC, and the base models are numbered according to the order of the filters, as discussed in Section 2.1. The proposed method for mechanical fault diagnosis is illustrated in Figure 1, and a detailed description is presented as follows.

First, the monitored signals of machinery are collected, and these are then processed to extract representative features that are indicative of mechanical health conditions. Here, it is necessary to point out that feature extraction is not the focus of this work; thus, existing advanced feature extraction methods are directly employed. After feature extraction, a high-dimensional mechanical dataset is obtained, which is then divided into a training set and a testing set. For the convenience of description, we denote $X = \{x_i^c \mid c = 1, \dots, C;\ i = 1, \dots, N_c\}$ as the training set, containing N samples coming from C health conditions, where $x_i^c$ refers to the i-th sample of the c-th class, which has D features, and $N_c$ is the number of samples in the c-th class. Without loss of generality, we assume that $y$ is a testing sample.

Subsequently, the following two stages are carried out sequentially, i.e., the training stage and the testing stage.

4.1. Training Stage

This stage only makes use of the training set, with the task of determining the indexes of the features to be selected and acquiring the optimal k of each ISRC. To achieve this, the following procedures are conducted sequentially.

4.1.1. Feature Sorting

The training set is separately fed into each filter to output the corresponding ranked feature sequence, where the top-ranked features are more significant.
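As a rough illustration of what a filter produces at this step, the sketch below ranks features by a simple ratio of between-class to within-class variance. This criterion is only a stand-in chosen for brevity and is not one of the filters used in this paper; the function name `rank_features` and the row-wise data layout are likewise assumptions.

```python
import numpy as np

def rank_features(X, y):
    """X: (N, D) samples in rows; y: (N,) class labels.
    Returns feature indexes sorted from most to least significant."""
    overall_mean = X.mean(axis=0)
    between = np.zeros(X.shape[1])
    within = np.zeros(X.shape[1])
    for c in np.unique(y):
        Xc = X[y == c]
        class_mean = Xc.mean(axis=0)
        between += len(Xc) * (class_mean - overall_mean) ** 2
        within += ((Xc - class_mean) ** 2).sum(axis=0)
    score = between / (within + 1e-12)   # small constant avoids division by zero
    return np.argsort(-score)            # descending score

# Toy usage: feature 1 separates the two classes, feature 0 does not.
X = np.array([[0.0, 1.0], [1.0, 1.1], [0.5, 0.9],
              [0.2, 5.0], [0.9, 5.1], [0.4, 4.9]])
y = np.array([0, 0, 0, 1, 1, 1])
ranked = rank_features(X, y)
print(ranked)
```

Whatever criterion a filter uses, its output in the training stage is an ordering of this kind, from which the top r indexes are later taken.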

4.1.2. BPSO

After sorting the features, the number of selected features, r, remains unknown. Previous studies [27] assigned the same r to all filters, potentially resulting in a suboptimal r value. Similar to the filter, the ISRC has a tunable parameter, k, which is impractical to fix arbitrarily. Hence, each base model composed of a filter and an ISRC requires careful tuning to determine the optimal r and k, thereby enhancing the classification performance. Both r and k are positive integers, and it is not easy to acquire the optimal r and k simultaneously because the problem is NP-hard in nature. Additionally, the traditional grid search strategy is impracticable due to the vast search space. Consequently, we turn to BPSO, a swarm intelligence optimization algorithm known for its global search capability. Moreover, the binary-coded particles in BPSO can naturally represent integer values for r and k.

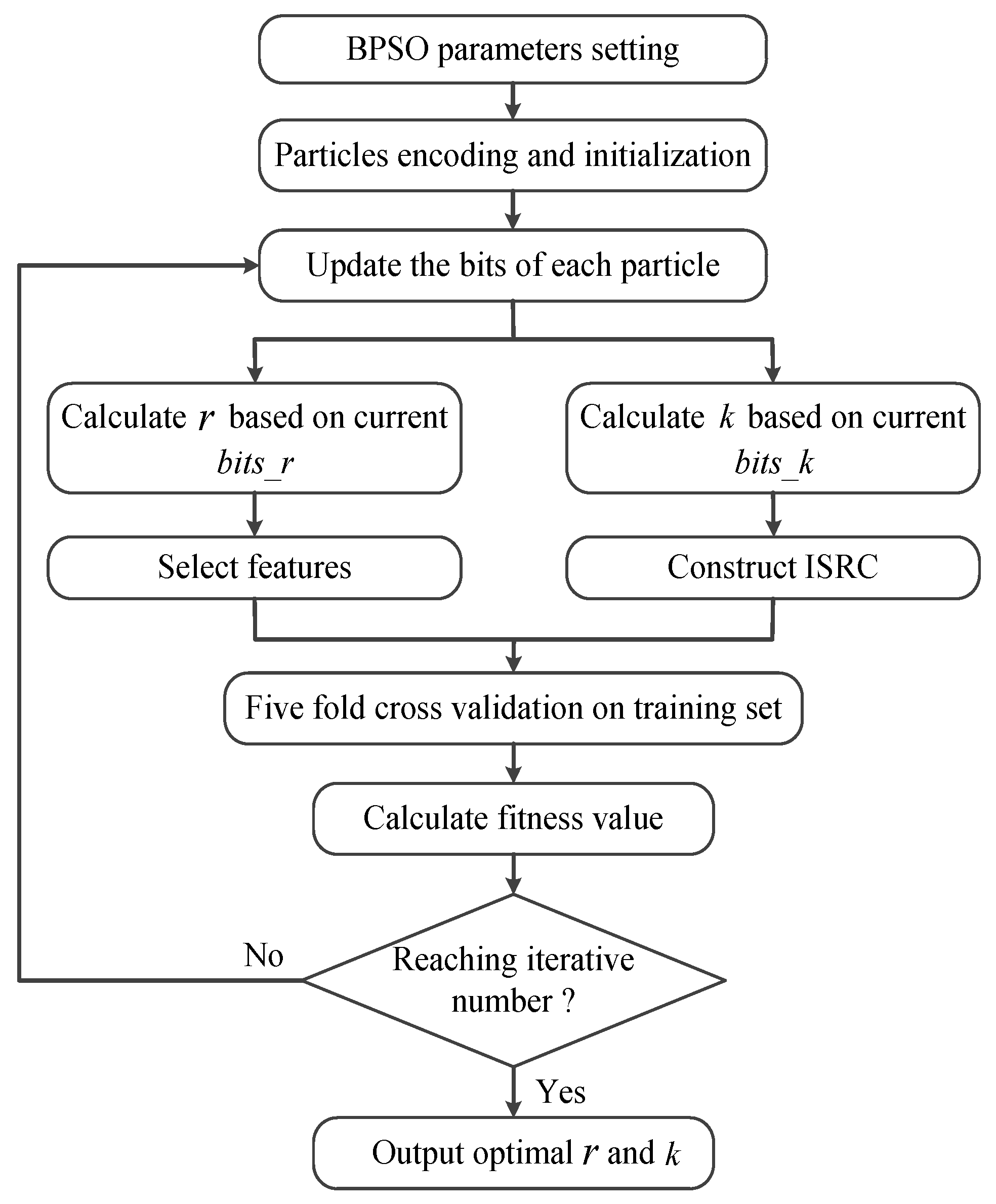

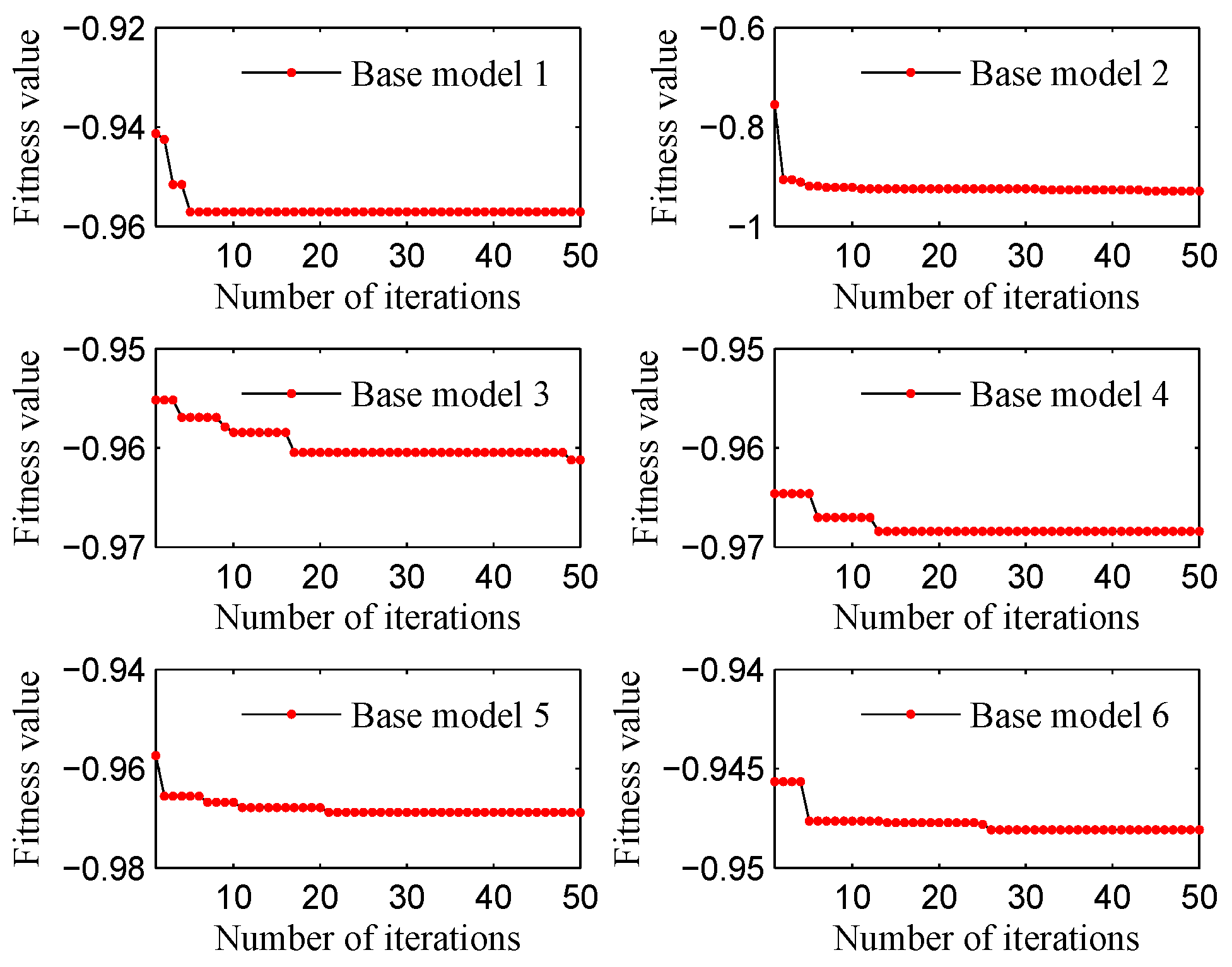

BPSO is performed on each base model in turn to obtain the optimal r and k for each base model. The stepwise flowchart of BPSO for each base model is presented in Figure 2, and the specific implementation procedure is available in Algorithm 1.

| Algorithm 1 Optimization procedure of BPSO. |

| Input: the number of iterations, the number of particles, the number of encoded bits for each particle, and the training dataset |

| Initialization: the position matrix, the velocity matrix, the optimal position of each particle, the optimal value of each particle, and the global optimal value |

- 1: for each iteration do

- 2: for each particle do

- 3: For the current particle, calculate the fitness value in (7) combined with the training dataset

- 4: Compare the fitness value with the optimal value of the particle and the global optimal value

- 5: Update the optimal value of the particle and the global optimal value

- 6: end for

Introduce acceleration factors, random numbers, and compute the inertia weight

- 7: for each particle do

- 8: Update the velocity as described in (1)

- 9: Limit the updated velocity

- 10: Update the position of the particle accordingly

- 11: end for

- 12: end for

Step 1: The parameters related to BPSO are set: the two acceleration factors; the maximum and minimum inertia weights; the maximum number of iterations; the particle number; the number of bits for calculating r; and the number of bits for calculating k. Four of these settings are fixed throughout this paper, and the remaining three parameters will be given in the later experiments.

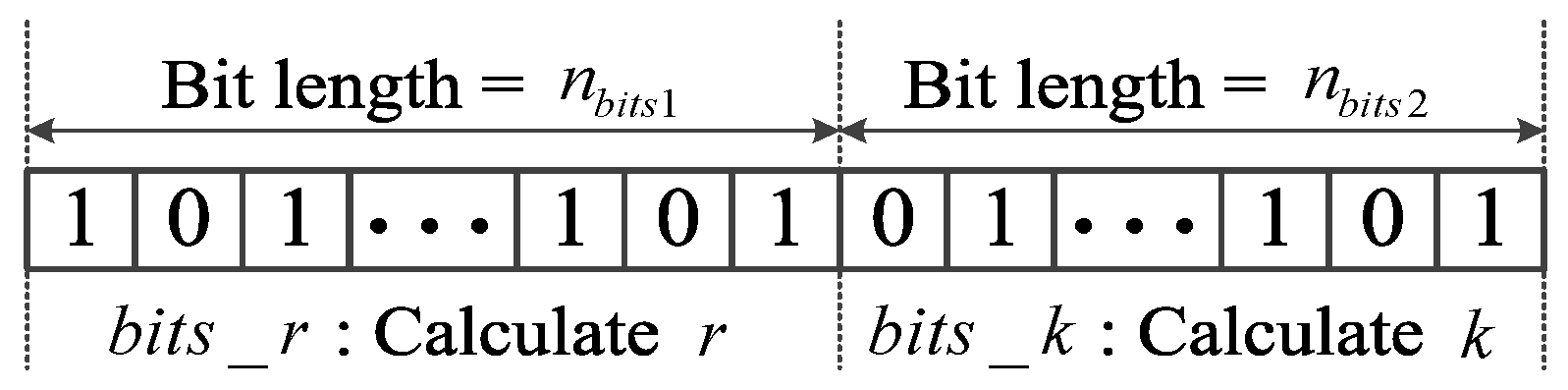

Step 2: All particles are encoded with random values of 0 or 1 for initialization. It should be noted that there are a total of

bits for coding each particle composed of two parts, as illustrated in

Figure 3. The first part (

) and the second part (

) aim to denote the values of

r and

k, respectively. Given a code containing

m bits, the value it denotes is

where

refers to the value of the

i-th bit, which equals 0 or 1. According to the definition of Equation (

6), the values of

r and

k are easily calculated by setting

and

, respectively. Taking

and

as examples, the calculated values are

and

.

Step 3: The BPSO iteration is carried out by updating the bits of each particle. Based on the current particles, the values of r and k are calculated via Equation (6); then, the corresponding features are selected and the ISRC is constructed. After that, the fitness value of each particle is calculated based on the calculated r and k, which is acquired by means of fivefold cross-validation. To be specific, the training set, reduced to the selected r features, is divided into five equal folds, of which four folds are used for training and the remaining one for testing. Each fold is used for testing once, so five classification accuracies are obtained, which are denoted as

. Subsequently, the fitness value is defined as

where the first term denotes the average classification accuracy of fivefold cross-validation on the training set. The second term acts as a penalty: if two particles achieve the same average classification accuracy, the particle with the smaller r is preferred. An extreme situation arises when r = 0 or k = 0; the fitness value in such cases is set to positive infinity.

Step 4: Judge whether the number of iterations has reached its maximum value. If so, the obtained optimal r and k are output; otherwise, Step 3 is repeated to continue the iteration.
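Steps 2 and 3 can be made concrete with a short sketch of particle decoding and fitness evaluation. Several points are assumptions rather than details taken from this paper: the bit order (most significant bit first), the helper names `decode`, `split_particle`, `fivefold_accuracies`, and `cost`, the hypothetical `make_predict` factory standing in for the ISRC, and the penalty weight. Because invalid particles receive positive infinity, the sketch treats the fitness as a quantity to be minimized and therefore uses the mean error rate plus a small penalty proportional to r; the exact form of Equation (7) is not reproduced.

```python
import numpy as np

def decode(bits):
    """Binary code -> non-negative integer (ASSUMPTION: MSB first)."""
    value = 0
    for b in bits:
        value = 2 * value + int(b)
    return value

def split_particle(particle, m_r):
    """First m_r bits encode r, the remaining bits encode k."""
    return decode(particle[:m_r]), decode(particle[m_r:])

def fivefold_accuracies(features, labels, predict, seed=0):
    """features: (N, r); predict(train_X, train_y, test_X) -> labels.
    `predict` is a hypothetical classifier callable (e.g. an ISRC wrapper)."""
    idx = np.random.default_rng(seed).permutation(len(labels))
    folds = np.array_split(idx, 5)
    accs = []
    for i in range(5):
        test = folds[i]
        train = np.concatenate([folds[j] for j in range(5) if j != i])
        pred = predict(features[train], labels[train], features[test])
        accs.append(float(np.mean(pred == labels[test])))
    return accs

def cost(particle, m_r, ranked, X, y, make_predict, penalty=1e-3):
    """Lower is better. ranked: feature indexes sorted by one filter."""
    r, k = split_particle(particle, m_r)
    if r == 0 or k == 0:
        return float("inf")              # invalid particle
    accs = fivefold_accuracies(X[:, ranked[:r]], y, make_predict(k))
    # ASSUMPTION: error rate plus a small penalty that prefers fewer features.
    return (1.0 - np.mean(accs)) + penalty * r / len(ranked)

print(decode([1, 0, 1]), split_particle([0, 1, 1, 1, 0], 3))
```

With this encoding, a particle of all zeros decodes to r = 0 and k = 0, which is the extreme situation mentioned in Step 3.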

Through the process in Figure 2, each base model obtains its optimal r and k independently. After training, the values of k in the six ISRCs, together with the six groups of indexes of the features selected by the six filters, are stored for use in the subsequent testing stage.
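The overall loop of Algorithm 1 and Steps 1 to 4 can be sketched as a generic BPSO routine. This is a textbook-style sketch under stated assumptions, not the exact update rule of this paper: it uses a linearly decreasing inertia weight, a symmetric velocity clamp `v_max`, and the common sigmoid transfer function to turn velocities into bit probabilities. All default parameter values and the function name `bpso` are illustrative, and the objective is minimized.

```python
import numpy as np

def bpso(cost, n_bits, n_particles=20, n_iter=50, c1=2.0, c2=2.0,
         w_max=0.9, w_min=0.4, v_max=6.0, seed=0):
    """Minimize cost(bits) over binary strings of length n_bits."""
    rng = np.random.default_rng(seed)
    pos = rng.integers(0, 2, size=(n_particles, n_bits))   # random 0/1 codes
    vel = np.zeros((n_particles, n_bits))
    pbest_pos = pos.copy()
    pbest_val = np.full(n_particles, np.inf)
    gbest_pos, gbest_val = pos[0].copy(), np.inf
    for t in range(n_iter):
        # Evaluate every particle and refresh personal and global bests.
        for p in range(n_particles):
            val = cost(pos[p])
            if val < pbest_val[p]:
                pbest_val[p], pbest_pos[p] = val, pos[p].copy()
            if val < gbest_val:
                gbest_val, gbest_pos = val, pos[p].copy()
        # Inertia weight decreases linearly from w_max to w_min (assumption).
        w = w_max - (w_max - w_min) * t / max(n_iter - 1, 1)
        r1 = rng.random((n_particles, n_bits))
        r2 = rng.random((n_particles, n_bits))
        vel = w * vel + c1 * r1 * (pbest_pos - pos) + c2 * r2 * (gbest_pos - pos)
        vel = np.clip(vel, -v_max, v_max)                  # limit the velocity
        prob = 1.0 / (1.0 + np.exp(-vel))                  # sigmoid transfer
        pos = (rng.random((n_particles, n_bits)) < prob).astype(int)
    return gbest_pos, gbest_val

# Toy usage: find the 4-bit code whose decimal value is closest to 5.
toy_cost = lambda bits: abs(int("".join(str(int(b)) for b in bits), 2) - 5)
best_bits, best_val = bpso(toy_cost, n_bits=4)
print(best_bits, best_val)
```

In the setting of this paper, `cost` would wrap the cross-validated fitness of one base model, and the routine would be run once per base model so that each obtains its own r and k.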

4.2. Testing Stage

This stage works by inputting the training set and the testing sample into each base model to obtain six single outputs. Then, the six single outputs are aggregated to acquire the ensemble output by means of a certain aggregation strategy. The detailed steps are as follows.

4.2.1. Output of Each Base Model

Taking base model 1 as an example, the training set $X$ and the testing sample $y$ both undergo feature selection based on the indexes determined by LS. After feature selection, both the training set and the testing sample are fed into the ISRC with the optimal k, and the four steps of the ISRC in Section 3 are carried out sequentially. Finally, the predicted label of the testing sample $y$ is acquired, which corresponds to its health condition. Simultaneously, the reconstruction residual vector defined in Equation (5) is also obtained, which is denoted as $\mathbf{e}^{1} = [e_1^{1}, \dots, e_C^{1}]$, where $e_c^{1}$ refers to the reconstruction residual corresponding to the c-th class for $y$. Similarly, the remaining five base models generate the predicted labels of $y$ along with their respective reconstruction residual vectors, which are separately denoted as $\mathbf{e}^{2}$, $\mathbf{e}^{3}$, $\mathbf{e}^{4}$, $\mathbf{e}^{5}$, and $\mathbf{e}^{6}$.

4.2.2. Aggregation

Six predict labels are obtained for

, which must be further processed to obtain the ultimate output via the aggregation strategy. A commonly used approach is the simple voting approach, as explored in [

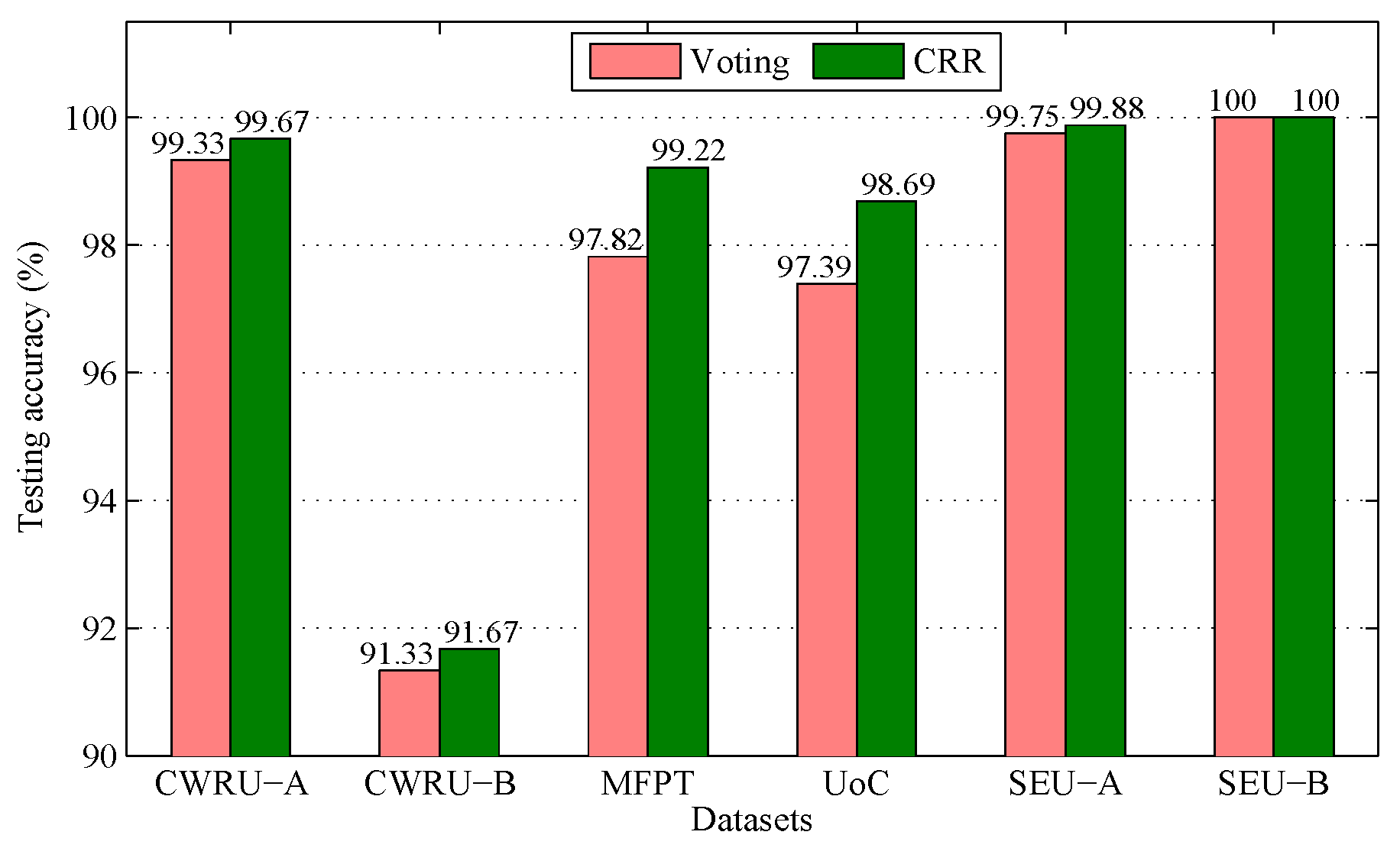

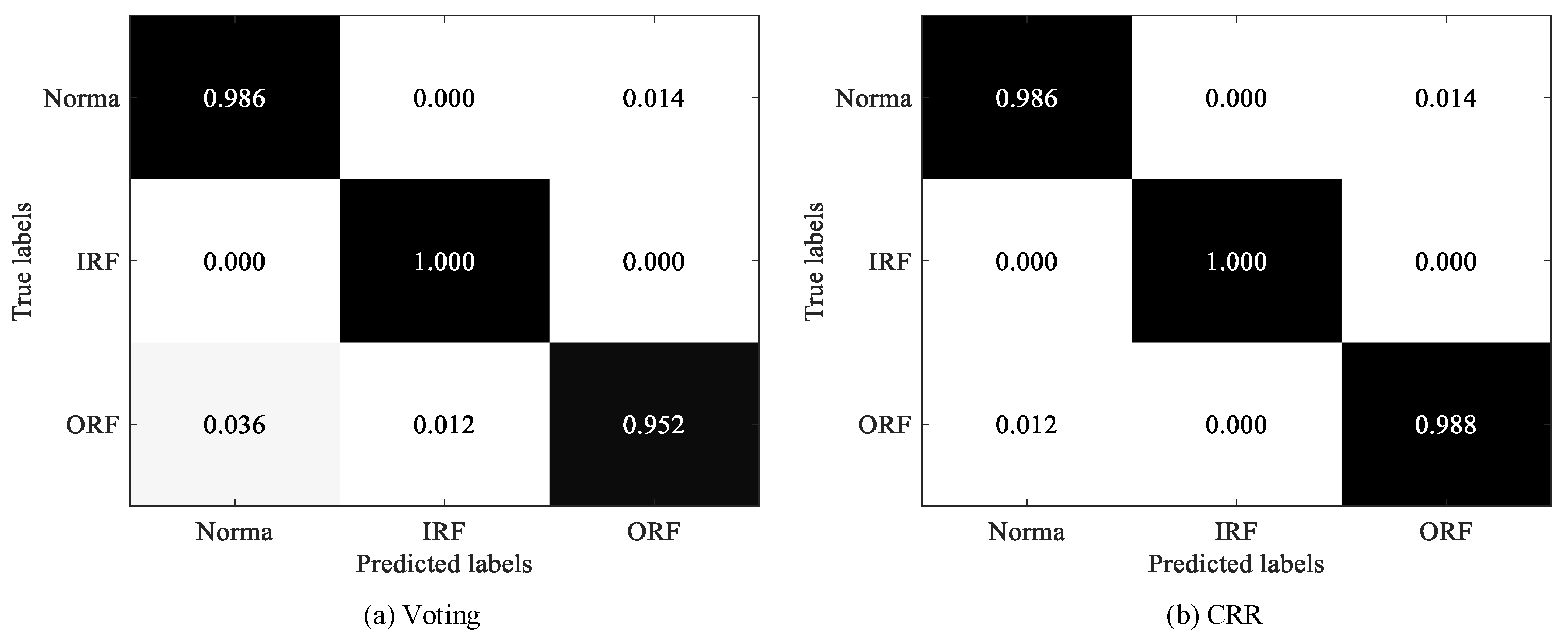

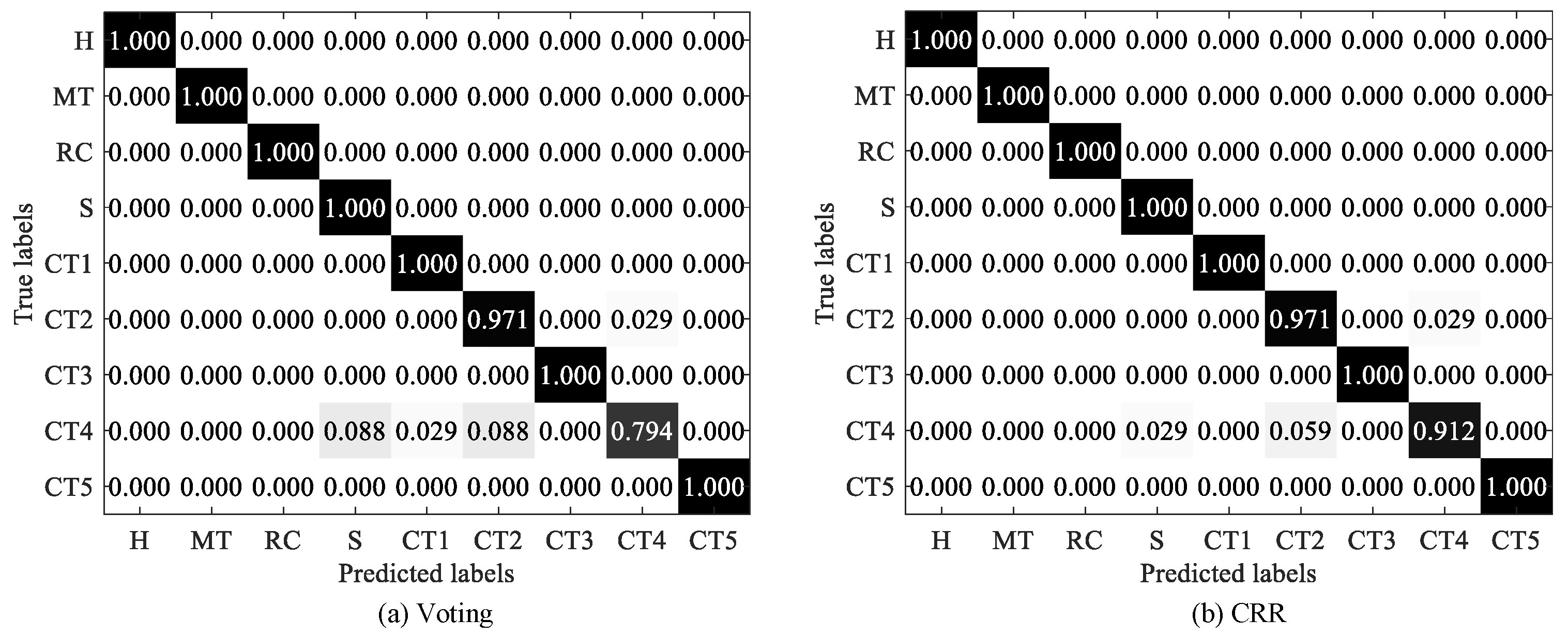

27]. Nevertheless, the voting strategy may suffer from three weaknesses, as follows. First, the voting strategy fails to provide an accurate judgment of when two classes receive the same maximum number of votes. Second, there may be two similarly small reconstruction residuals in

for

, which obliterates the difference between such two classes. If we arbitrarily determine the predicted label of the

i-th base model based on these two values, the results may become considerably biased. Third, the voting strategy relies on most base models performing well to achieve an optimal result. Nevertheless, this requirement can not be always ensured.

To overcome these weaknesses, this work proposes an alternative strategy named the cumulative reconstruction residual (CRR) strategy, which is inspired by cumulative probability as discussed in [14]. The rationale of CRR is fairly simple: the category of the testing sample, $y$, is determined as the health condition with the minimum cumulative reconstruction residual. Mathematically, we denote $\mathbf{e}^{\mathrm{CRR}} = \sum_{i=1}^{6} \mathbf{e}^{i} = [e_1^{\mathrm{CRR}}, \dots, e_C^{\mathrm{CRR}}]$ as the cumulative reconstruction residual vector, where $\mathbf{e}^{i}$ is the reconstruction residual vector output by the i-th base model and $e_c^{\mathrm{CRR}}$ is the cumulative reconstruction residual of the c-th class for $y$. The label of $y$ is predicted as $\arg\min_{c} e_c^{\mathrm{CRR}}$. In comparison to voting, CRR offers three significant advantages. First, since CRR makes a decision for $y$ based on the calculated $\mathbf{e}^{\mathrm{CRR}}$, it effectively avoids the ambiguous situation in which two classes acquire the same maximum number of votes, which is common under the voting strategy. Second, CRR assesses the cumulative reconstruction residual across all base models, thereby amplifying the differences between the classes and enabling explicit and accurate predictions. Third, CRR bases the ensemble result on the cumulative value of the reconstruction residual, making it more tolerant than voting, as it can accommodate the poorer performance of some base models in certain cases.
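The difference between voting and CRR can be seen in a small numerical sketch. The residual values below are invented purely for illustration, and the function names `vote_counts` and `crr` are assumptions; the only rule taken from the text is that CRR sums the residual vectors of the base models and picks the class with the smallest total.

```python
import numpy as np

def vote_counts(residuals):
    """residuals: (n_models, C). Each base model votes for the class
    with its smallest reconstruction residual."""
    winners = np.argmin(residuals, axis=1)
    return np.bincount(winners, minlength=residuals.shape[1])

def crr(residuals):
    """Cumulative reconstruction residual: sum over base models, then
    choose the class with the minimum cumulative residual."""
    cumulative = residuals.sum(axis=0)
    return int(np.argmin(cumulative)), cumulative

# Illustrative residuals for six base models and three classes.
residuals = np.array([
    [0.30, 0.32, 0.38],   # models 1-3 prefer class 0, but only narrowly
    [0.31, 0.33, 0.36],
    [0.32, 0.33, 0.35],
    [0.45, 0.10, 0.45],   # models 4-6 prefer class 1 by a wide margin
    [0.40, 0.15, 0.45],
    [0.42, 0.12, 0.46],
])
votes = vote_counts(residuals)
label, cumulative = crr(residuals)
print(votes, label, cumulative)
```

In this example the vote is tied at three against three between classes 0 and 1, whereas the cumulative residuals (2.20, 1.35, and 2.45) single out class 1, because CRR keeps the margin information that voting discards.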

6. Conclusions

This paper proposes an ensemble approach for intelligent fault diagnosis of machinery, which consists of several filters and classifiers. To overcome the limitations of the SRC, we propose an improved variant of the SRC, namely the ISRC, for fault classification. Six filters are utilized, with each filter combined with the ISRC to constitute a base model. For each base model, the filter selects a subset of features for training and testing the ISRC; here, two hyper-parameters involved in the filter and the ISRC approaches are optimized by the BPSO algorithm. The outputs of all the base models are combined by CRR, replacing the commonly used voting strategy. Furthermore, six datasets related to bearings and gears are utilized to evaluate the performance of the proposed approach. Through several experiments, the following conclusions are drawn:

(1) Feature selection is essential, as it enhances diagnostic accuracy by selecting the most discriminative features.

(2) Combining multiple filters and classifiers for fault diagnosis is more effective than using a single filter and a single classifier, as it can reduce the variability in the results over different datasets.

(3) The two hyper-parameters involved in the filter and the classifier of each base model should be optimized instead of being manually assigned the same value, which promotes higher diagnostic accuracy.

(4) In comparison to the SRC, the developed ISRC not only inherits its merit of high classification accuracy but also overcomes its drawback of high computational time. The ISRC therefore emerges as a promising classifier.

(5) The designed CRR outperforms the voting approach, as it effectively addresses three limitations of the voting strategy. Therefore, CRR is more suitable for aggregation.

In this work, we focus on developing a BPSO-optimized ensemble approach to achieve high diagnosis performance, and the method is evaluated only on six regular datasets, where sufficient and identical numbers of samples are provided for the normal condition and each faulty condition. In practice, however, very few, if any, faulty examples (labeled or unlabeled) are available due to safety considerations [35]. Few-shot and even zero-shot fault diagnosis is a current research hotspot and is of great practical significance in mechanical fault diagnosis. Therefore, we will explore this area in the future.