AI-Generated Fall Data: Assessing LLMs and Diffusion Model for Wearable Fall Detection

Abstract

1. Introduction

- Can LLMs generate accelerometer data specific to gender, age, or joint?

- Do LLM-generated data perform better or align more closely with real data than diffusion-based methods?

- Do LLM-generated data improve the performance of the model for fall detection tasks?

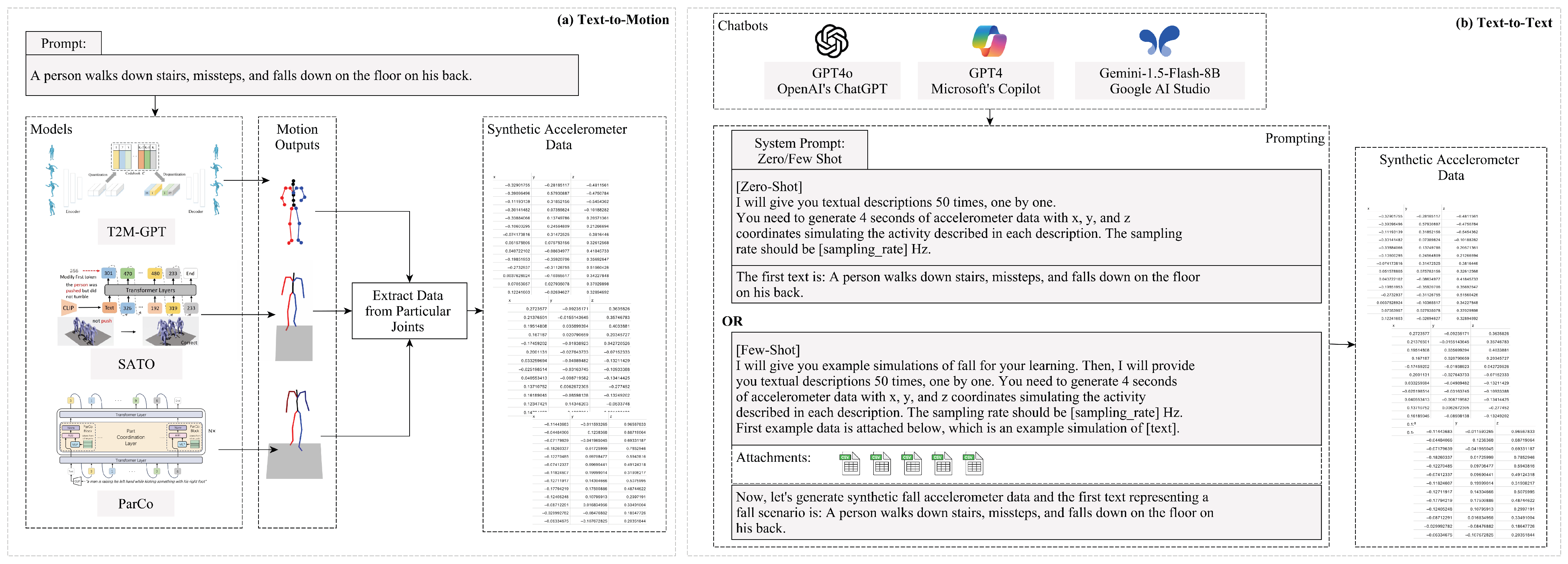

- Data Generation: First, we generate synthetic fall data using two categories of pre-trained LLMs: text-to-motion and text-to-text generation models. These models receive prompts describing fall scenarios and produce accelerometer data accordingly.

- Qualitative and Quantitative Analysis: We compare the distributions of synthetic and real data, both qualitatively and quantitatively, to assess how closely the generated data align with real recordings.

- Fall Detection Evaluation: We investigate the impact of augmenting real fall data with synthetic fall data when training a Long Short-Term Memory (LSTM)-based fall detection model. The performance of the augmented model is then compared against a baseline model trained exclusively on real data.

- Ablation Study: Finally, we conduct an ablation study to evaluate the impacts of the quantity of synthetic data, prompting techniques, and baseline dataset characteristics when combined with synthetic data.
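
As an illustration of the augmentation step described above, the following is a minimal sketch rather than the implementation used in the study. The function name `augment_training_set`, the 0/1 label encoding, and the toy windows are assumptions introduced here; the only idea taken from the text is that synthetic fall windows are added to the real training data, while the baseline uses real data alone.

```python
import random


def augment_training_set(real_train, synthetic_falls, seed=0):
    """Return a shuffled training set of real windows plus synthetic fall windows.

    real_train: list of (window, label) pairs from a real dataset.
    synthetic_falls: list of generated windows; each is labelled 1 (fall),
    which assumes the generator produces fall events only.
    """
    combined = list(real_train) + [(window, 1) for window in synthetic_falls]
    random.Random(seed).shuffle(combined)  # seeded for reproducible ordering
    return combined


# Toy windows: each window is a short list of (x, y, z) samples (hypothetical values).
real_train = [
    ([(0.0, 0.0, 1.0)] * 4, 0),   # activity of daily living
    ([(0.1, 2.5, -1.0)] * 4, 1),  # real fall
]
synthetic_falls = [
    [(0.2, 2.0, -0.8)] * 4,
    [(0.0, 3.1, -1.2)] * 4,
]

baseline = list(real_train)                                   # real data only
augmented = augment_training_set(real_train, synthetic_falls)  # real + synthetic
print(len(baseline), len(augmented))
```

Both training sets would then be used to fit the same classifier, so that any difference in the resulting scores can be attributed to the added synthetic windows.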

2. Related Work

2.1. Non-Generative Data Augmentation

2.2. Generative Simulation-Based Approaches

2.3. Generative AI with Learned Representations

2.4. Observed Challenges and Research Framing

3. Methodology

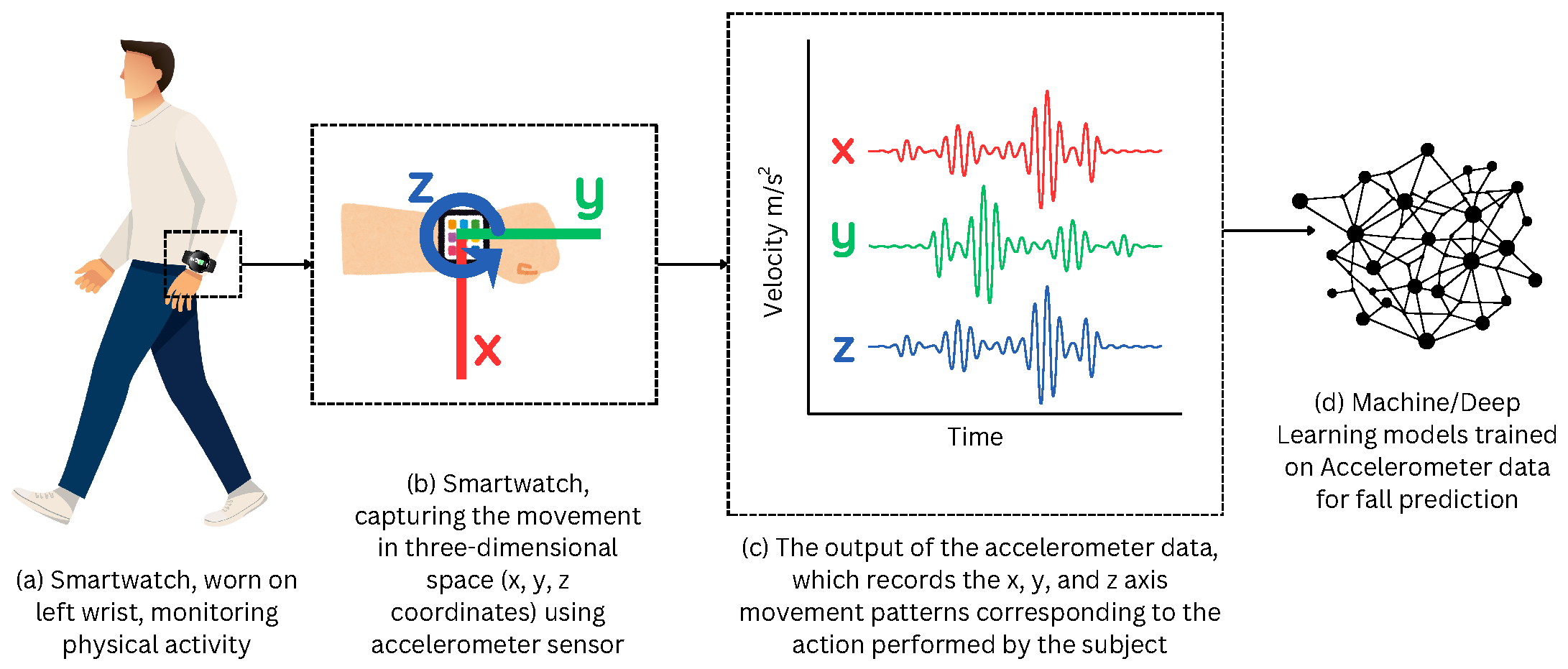

3.1. Synthetic Data Generation

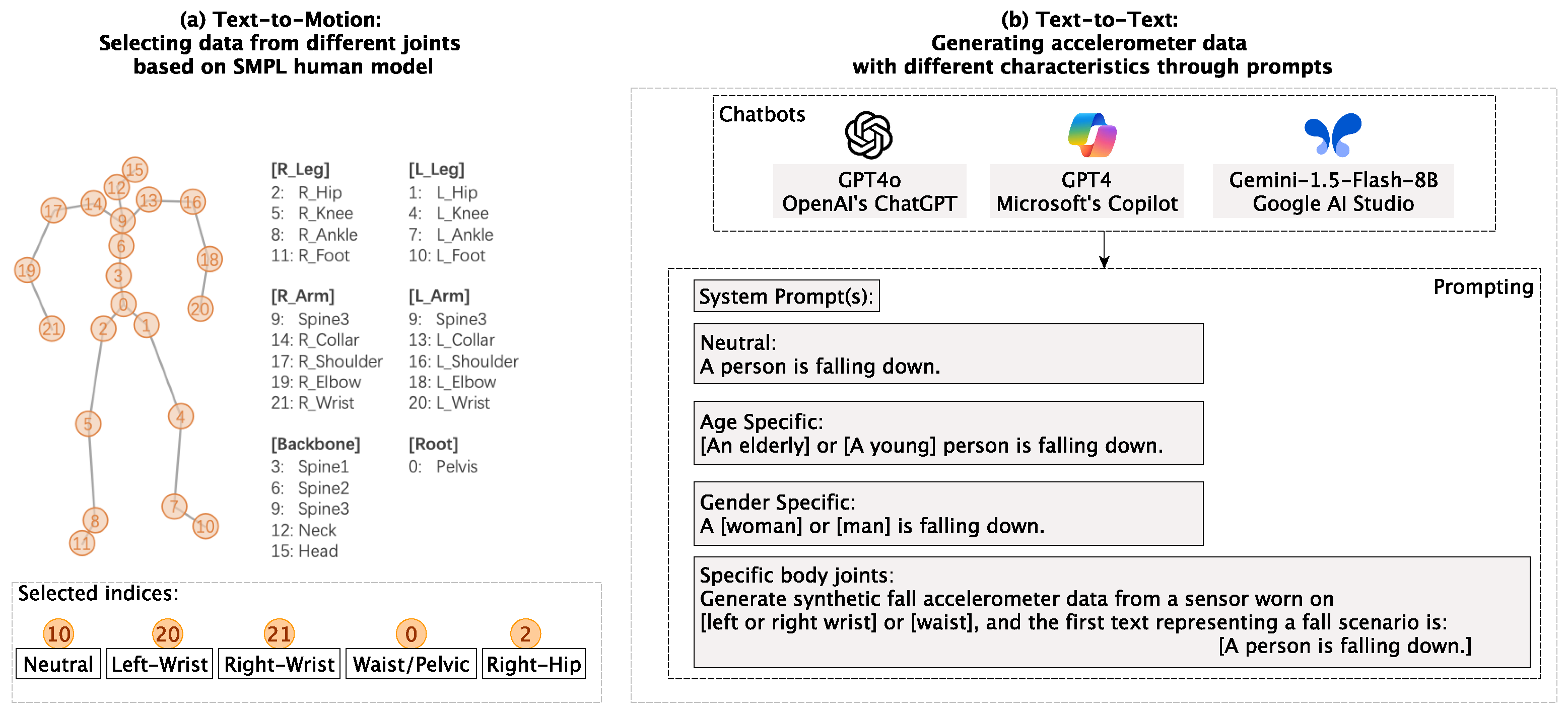

3.1.1. Text-to-Motion Generation

3.1.2. Text-to-Text Generation

3.2. Baseline Preparation

3.2.1. Baseline Datasets

3.2.2. Diffusion-Generated Dataset

3.2.3. Data Preparation for Training and Testing a Fall Detection Model

3.3. Fall Detection Classifier

4. Implementation Details and Evaluation Criteria

5. Results and Discussion

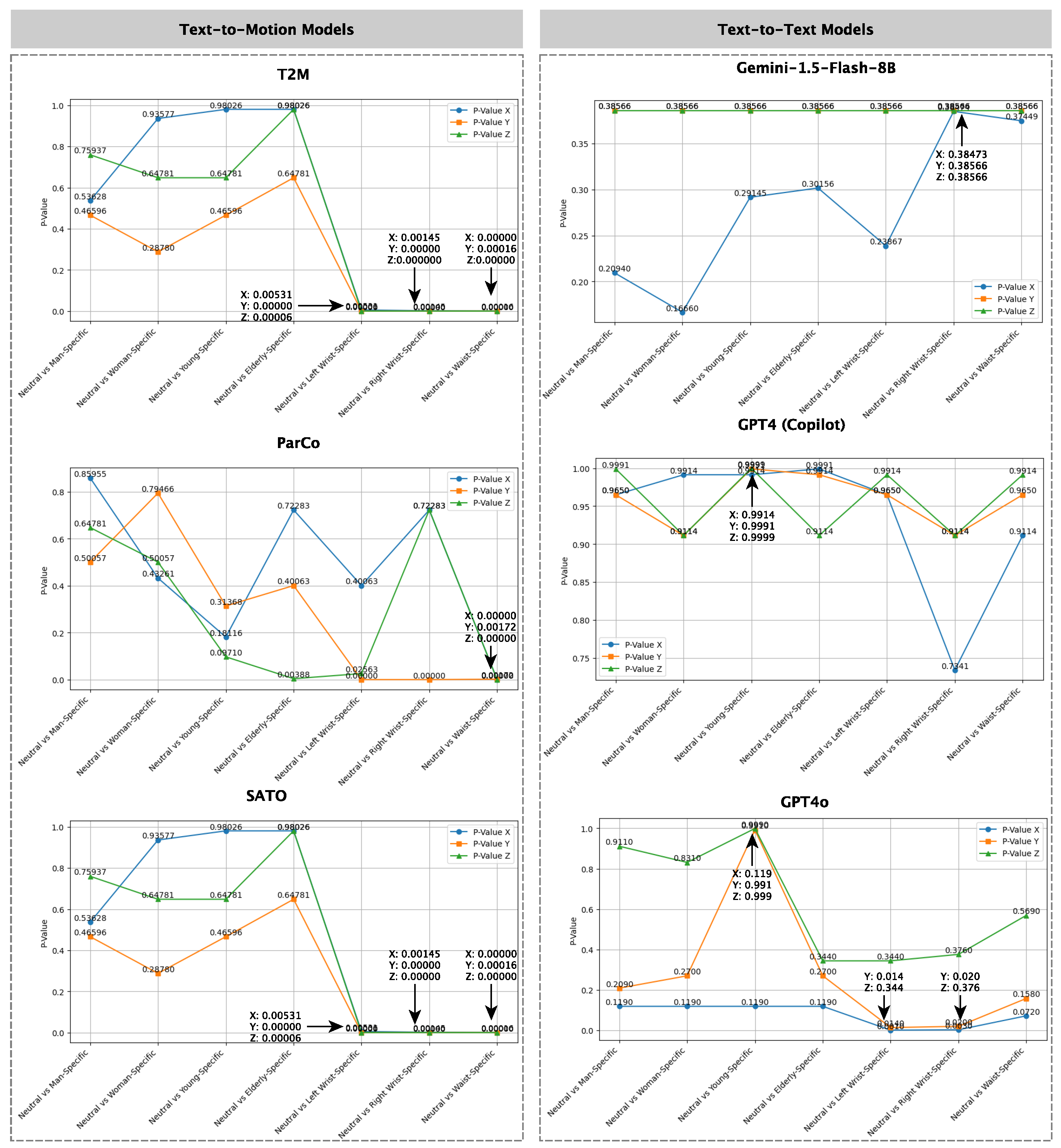

5.1. Can LLMs Generate Accelerometer Data Specific to Gender, Age, and Joint?

5.2. Do LLM-Generated Data Perform Better or Align More Closely with Real Data than Diffusion-Based Methods?

5.3. Do LLM-Generated Data Improve the Performance of the Model for a Fall Detection Task?

6. Ablation Study

- Do the baseline dataset characteristics impact the fall detection task using synthetic data?

- Does the quantity of LLM-generated data affect the accuracy of fall prediction models?

- Which prompting strategy is more effective for generating diverse fall data: human-designed scenarios or self-generated scenarios?

6.1. Do the Baseline Dataset Characteristics Impact the Fall Detection Task Using Synthetic Data?

6.2. Which Prompting Strategy Is More Effective for Generating Diverse Fall Data—Human-Designed Scenarios or Self-Generated Scenarios?

Listing 1. Prompting pipeline for generating synthetic fall scenarios and corresponding accelerometer data using an LLM.

System Prompt: I want you to create 50 scenarios of persons falling. Each scenario must be unique.

Response: List of 50 textual descriptions of fall scenarios.

System Prompt: Now, for all of these 50 scenarios, create accelerometer data of 4 s with x, y, z columns at sampling speed [sampling_rate] Hz. The output CSV must contain 4 columns with names x, y, and z in lower letters. The CSV must be semicolon-separated.

Response: output.CSV
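
To make the output format requested in Listing 1 concrete, the sketch below parses a semicolon-separated response with lowercase `x`, `y`, `z` columns and checks that it contains 4 s of samples at the requested sampling rate. It is an illustrative sketch under stated assumptions: the function name `parse_accelerometer_csv` is hypothetical, the text is assumed to hold one scenario per CSV block, and any extra column is ignored because the listing does not name it.

```python
import csv
import io

WINDOW_SECONDS = 4  # duration requested in the prompt of Listing 1


def parse_accelerometer_csv(text, sampling_rate, seconds=WINDOW_SECONDS):
    """Parse one semicolon-separated x;y;z block returned by an LLM.

    Returns (samples, complete), where samples is a list of (x, y, z) floats
    and complete is True when the block holds seconds * sampling_rate rows.
    """
    reader = csv.DictReader(io.StringIO(text.strip()), delimiter=";")
    missing = {"x", "y", "z"} - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"missing columns: {sorted(missing)}")
    # Rows with missing or non-numeric values raise an exception here.
    samples = [(float(r["x"]), float(r["y"]), float(r["z"])) for r in reader]
    return samples, len(samples) == seconds * sampling_rate


# Hypothetical response: 8 rows, i.e. 4 s at a toy rate of 2 Hz.
demo = "x;y;z\n" + "\n".join("0.0;0.0;1.0" for _ in range(8))
samples, complete = parse_accelerometer_csv(demo, sampling_rate=2)
print(len(samples), complete)
```

A check of this kind is useful because a 4 s window implies very different row counts across datasets, for example 80 rows at 20 Hz but 800 rows at 200 Hz.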

6.3. Does the Quantity of LLM-Generated Data Affect the Accuracy of Fall Prediction Models?

7. Key Findings and Applicability of LLMs for Fall Detection

1. Can LLMs generate accelerometer data specific to gender, age, and joint placement? Our results indicate that text-to-motion models (T2M, SATO, and ParCo) effectively capture joint-specific variations but fail to differentiate demographic attributes such as gender and age. Text-to-text models (GPT4o, GPT4, and Gemini), on the other hand, struggled to introduce either joint or demographic variations, producing only minor differences in response to joint-specific prompts. This makes them less effective for applications requiring age- or gender-specific biomechanical variations.

2. Do LLM-generated data align more closely with real data than diffusion-based methods? When evaluating alignment with real data, Diffusion-TS consistently outperformed all LLM-generated data, achieving the lowest JSD and highest Coverage scores across all datasets. Among LLM-based methods, text-to-motion models (T2M, SATO, and ParCo) demonstrated better alignment than text-to-text models, capturing more realistic motion distributions. However, text-to-text models exhibited high variance and spikiness, particularly in datasets with higher sampling rates such as KFall (100 Hz) and SisFall (200 Hz). This suggests that LLMs lose specificity as the sampling rate increases, leading to poor alignment. Although few-shot prompting slightly improved data realism, it also introduced noise and instability, particularly in high-frequency datasets, further limiting the reliability of LLM-generated synthetic data.

3. Do LLM-generated data improve fall detection model performance? The impact of LLM-generated data on fall detection performance varied with dataset characteristics. In the SMM dataset, performance declined across all synthetic methods, with T2M causing the smallest drop (−4.05%). This reduction was largely due to two factors: (1) the use of a left wrist sensor, which captures subtle, low-amplitude movements that provide weak fall indicators, and (2) temporal mismatch, i.e., SMM data include only the impact phase, while synthetic data modeled full transitions, leading to poor alignment. In contrast, UMAFall showed the greatest performance gain, with GPT4-FS improving the F1-score by 56.83%. This improvement is likely due to the low sampling rate (20 Hz) of the UMAFall dataset and its limited activity complexity, which made it easier for synthetic data to complement the original distribution. KFall and SisFall experienced moderate improvements, although high-frequency sampling in SisFall (200 Hz) made it more sensitive to noise introduced by few-shot (FS) prompting. Specifically, GPT4o-FS reduced performance in SisFall by 7.10%. Overall, text-to-motion models consistently outperformed text-to-text models in maintaining alignment with baseline data. While Diffusion-TS offered the most stable results across all datasets, it showed limited performance gain, suggesting that its well-formed outputs may lack the fall-specific variability required for generalization.

- Applicability of LLMs for Fall Detection

8. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Method | Sensor Placement Control | Demographic Specificity | Temporal Dynamics | Limitations |

|---|---|---|---|---|

| GANs (WGAN, CTGAN, TGAN) | ✗ | ✗ | Moderate | Mode collapse, tuning instability |

| Probabilistic Models (SDV-PAR) | ✗ | ✗ | Low–Moderate | Poor realism, limited expressiveness |

| Pose Estimation from Video | ✓ (indirect) | ✗ | Moderate | Sensitive to occlusion, video quality |

| 3D Simulation (ElderSim, I3D) | ✓ | ✗ | High | High compute cost, low diversity |

| Diffusion Models (M2D2M, MoTe) | ✓ | ✗ | High | Requires large data, lacks fine control |

| Digital Twins (OpenSim) | ✓ | ✓ (potential) | Very High | Needs calibration, poor generalizability |

| LLMs (text-to-motion) | ✓ | ✗ | High | No demographic control, fine-tuning cost |

| Dataset | Participants | Age (Years) | Gender (M/F) | Falls | ADLs | Sensors Used | Sampling Rate (Hz) |

|---|---|---|---|---|---|---|---|

| SMM | 42 (16 young, 26 old) | 23 (young), 65.5 (old) | 11M, 5F (young); 12M, 14F (old) | 5 types | 9 types | Huawei Smartwatch (left wrist), Nexus Smartphone (right hip) | 32 |

| KFall | 32 (all young) | 24.9 (young) | 32M, 0F | 15 types | 21 types | LPMS-B2 (lower back) | 100 |

| UMAFall | 17 (all young) | 19–28 (young) | 10M, 7F | 3 types | 8 types | SimpleLink SensorTag (waist, right wrist) | 20 |

| SisFall | 38 (23 young, 15 old) | 19–30 (young), 60–75 (old) | 11M, 12F (young); 8M, 7F (old) | 15 types | 19 types | ADXL345 (waist) | 200 |

| Index | Prompts |

|---|---|

| 1 | An elderly person is falling down. |

| 2 | An elderly person sits, becomes unconscious, then falls on his left, and lies on the floor. |

| 3 | An elderly person walks, becomes unconscious, then falls on his head, and lies on the floor. |

| 4 | An elderly person walks, then he falls down on his face, and stays on the floor. |

| 5 | An elderly person falls down on his right side, and lies on the ground. |

| 6 | A person stands still, wobbles, leans right suddenly, then falls down, and tucks in tight on the floor. |

| 7 | A person walks, slips suddenly, and falls on his back on the floor. |

| 8 | A person climbs a ladder, loses grip on one rung, and falls sideways. |

| 9 | A person walks on gravel, twists their ankle, and falls forward onto their hands and knees down to the floor. |

| 10 | A person runs, then slips and falls flat on the floor. |

| 11 | A person walks, suddenly trips, loses his balance, and ends up lying on the floor. |

| 12 | A person runs, slips, and FALLS FLAT on the floor. |

| 13 | A person walks, suddenly trips, loses his balance, and falls down on the floor, unable to get up. |

| 14 | A person walks, suddenly trips, loses his balance, and falls down and then ends up lying on the floor. |

| 15 | A person missed a chair and fell on the floor on his head. |

| 16 | A person falls asleep, falls down on the floor on his head. |

| 17 | A person is standing up, and falls down on the floor on his head. |

| 18 | A person falls down on the floor on his head. |

| 19 | A person walks, falls asleep, and then falls down on the floor on his head. |

| 20 | A child rides a bike too fast, loses control, and tumbles onto the grass with a scraped knee. |

| 21 | A person is stepping up, and falls down on the floor on his head. |

| 22 | A person walks, and then falls down on his head on the floor. |

| 23 | A person stands up from a chair, and falls down on the floor on his head. |

| 24 | A person bends to pick an object, and falls down on the floor on his head. |

| 25 | A person climbs stairs and falls down on the floor on his head. |

| 26 | A person kneels down to pick an object, slips, and falls on his head on the floor. |

| 27 | A person missed a chair and fell on the floor on his left. |

| 28 | A person missed a chair and fell on the floor on his back. |

| 29 | A person bends to the left, picks up something, becomes unconscious, and falls down on the floor on his head. |

| 30 | A person turns right, and then falls down on the floor on his head. |

| 31 | A person walks forward, becomes unconscious, and falls down on the floor on his head. |

| 32 | A person is walking. They suddenly trip, lose their balance, and end up lying on the floor. |

| 33 | A person walking suddenly trips, loses their balance, and ends up lying on the floor, unable to get up. |

| 34 | A person walking suddenly trips, loses their balance, and ends up lying on the floor. |

| 35 | A person is standing upright, then suddenly trips and ends up lying in the fetal position. |

| 36 | A person stands up. Then they descend to the floor, lies sprawled out. |

| 37 | A person attempts to get out of their chair, but ends up hitting their head on the floor and lying sprawled out. |

| 38 | A person is standing upright. They do a half turn before they lose their balance and descend to the floor. They lie with their legs pulled up to their chest. |

| 39 | A person is taking confident strides, until they trip hitting their head as they lie on the ground sprawled out. |

| 40 | A person walks forward and trips ending on the floor. |

| 41 | A person walks backwards and trips and falls ending on the floor. |

| 42 | A person walks on a wet floor, slips, then falls and lands on their side. |

| 43 | An elderly person stumbles while stepping off a curb, falls, and lands on their hip. |

| 44 | A person reaches for an object on a high shelf, loses balance, and falls backward on the floor. |

| 45 | A person runs down a hill, loses control, and rolls to a stop on the ground. |

| 46 | A person stands on a chair to change a light bulb, the chair tips, and they fall to the floor. |

| 47 | A person trips over his own feet, falls on the ground, and ends up lying there. |

| 48 | A person rides a scooter, hits a bump, and falls, landing on their knee. |

| 49 | A person leans too far back in a chair, and he falls backward on the floor. |

| 50 | A person walks downstairs, missteps, and falls down on the floor on his back. |

| Dataset | Baseline | Diffusion-TS | Text-to-Motion: T2M | Text-to-Motion: ParCo | Text-to-Motion: SATO | Text-to-Text: Gemini-Flash-8B (Zeroshot) | Text-to-Text: Gemini-Flash-8B (Fewshot) | Text-to-Text: GPT4 (Copilot) (Zeroshot) | Text-to-Text: GPT4 (Copilot) (Fewshot) | Text-to-Text: GPT4o (ChatGPT) (Zeroshot) | Text-to-Text: GPT4o (ChatGPT) (Fewshot) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| SMM | 0.740 | 0.680 (−8.11%) | 0.710 (−4.05%) | 0.680 (−8.11%) | 0.648 (−12.43%) | 0.636 (−14.05%) | 0.662 (−10.54%) | 0.578 (−21.89%) | 0.626 (−15.41%) | 0.588 (−20.54%) | 0.620 (−16.22%) |
| KFall | 0.778 | 0.854 (+9.76%) | 0.902 (+15.94%) | 0.870 (+11.84%) | 0.902 (+15.94%) | 0.794 (+2.06%) | 0.848 (+8.99%) | 0.878 (+12.87%) | 0.822 (+5.65%) | 0.898 (+15.43%) | 0.892 (+14.65%) |
| UMAFall | 0.542 | 0.652 (+20.30%) | 0.630 (+16.23%) | 0.698 (+28.78%) | 0.712 (+31.18%) | 0.575 (+6.09%) | 0.702 (+29.52%) | 0.630 (+16.23%) | 0.850 (+56.83%) | 0.580 (+7.01%) | 0.756 (+39.48%) |
| SisFall | 0.732 | 0.748 (+2.19%) | 0.742 (+1.37%) | 0.758 (+3.55%) | 0.784 (+7.10%) | 0.752 (+2.73%) | 0.750 (+2.46%) | 0.772 (+5.46%) | 0.776 (+6.01%) | 0.782 (+6.83%) | 0.680 (−7.10%) |
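
The percentages in parentheses are consistent with the relative change of each score with respect to the baseline column. The short sketch below reproduces three entries of the table under that assumption; the helper name `relative_change` is introduced here for illustration only.

```python
def relative_change(baseline_score, augmented_score):
    """Relative change of a score with respect to the baseline, in percent."""
    return 100.0 * (augmented_score - baseline_score) / baseline_score


# (description, baseline score, score with synthetic data), values from the table
examples = [
    ("SMM, T2M", 0.740, 0.710),
    ("UMAFall, GPT4 (Copilot) Fewshot", 0.542, 0.850),
    ("SisFall, GPT4o (ChatGPT) Fewshot", 0.732, 0.680),
]

for name, base, augmented in examples:
    print(f"{name}: {relative_change(base, augmented):+.2f}%")
# SMM, T2M: -4.05%
# UMAFall, GPT4 (Copilot) Fewshot: +56.83%
# SisFall, GPT4o (ChatGPT) Fewshot: -7.10%
```

Because the change is relative, a low baseline such as UMAFall (0.542) produces large percentages for moderate absolute gains.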

| Dataset | Baseline | Diffusion-TS | Text-to-Motion: T2M | Text-to-Motion: ParCo | Text-to-Motion: SATO | Text-to-Text: Gemini-Flash-8B (Zeroshot) | Text-to-Text: Gemini-Flash-8B (Fewshot) | Text-to-Text: Copilot (Zeroshot) | Text-to-Text: Copilot (Fewshot) | Text-to-Text: GPT4o (Zeroshot) | Text-to-Text: GPT4o (Fewshot) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| SMM (right hip) | 0.8075 | 0.778 (−3.65%) | 0.812 (+0.56%) | 0.856 (+6.01%) | 0.746 (−7.62%) | 0.762 (−5.63%) | 0.682 (−15.54%) | 0.806 (−0.19%) | 0.754 (−6.63%) | 0.772 (−4.40%) | 0.754 (−6.63%) |
| UMAFall (waist) | 0.744 | 0.922 (+23.92%) | 0.854 (+14.78%) | 0.850 (+14.25%) | 0.892 (+19.89%) | 0.946 (+27.15%) | 0.710 (−4.57%) | 0.888 (+19.35%) | 0.946 (+27.15%) | 0.888 (+19.35%) | 0.702 (−5.65%) |

| Dataset | Baseline | GPT4o (Zeroshot) | GPT4o (Auto) | Copilot (Zeroshot) | Copilot (Auto) | Gemini-Flash-8B (Zeroshot) | Gemini-Flash-8B (Auto) |
|---|---|---|---|---|---|---|---|
| SMM (left wrist) | 0.740 | 0.588 (−20.54%) | 0.724 (−2.16%) | 0.578 (−21.89%) | 0.714 (−3.51%) | 0.636 (−14.05%) | 0.626 (−15.41%) |
| KFall (waist) | 0.778 | 0.898 (+15.42%) | 0.892 (+14.65%) | 0.878 (+12.85%) | 0.874 (+12.34%) | 0.794 (+2.06%) | 0.850 (+9.25%) |
| UMAFall (right wrist) | 0.542 | 0.580 (+7.01%) | 0.840 (+54.98%) | 0.630 (+16.24%) | 0.660 (+21.77%) | 0.575 (+6.09%) | 0.723 (+33.39%) |
| SisFall (waist) | 0.732 | 0.782 (+6.83%) | 0.768 (+4.92%) | 0.772 (+5.46%) | 0.774 (+5.74%) | 0.752 (+2.73%) | 0.772 (+5.46%) |

| Dataset | Baseline | Diffusion-TS | Text-to-Motion Models (T2M+ParCo+SATO) | Text-to-Text Models (Gemini+GPT4+GPT4o) | Auto (Gemini+GPT4+GPT4o) |

|---|---|---|---|---|---|

| SMM (left wrist) | 0.740 | 0.680 (−8.11%) | 0.730 (−1.35%) | 0.746 (+0.81%) | 0.626 (−15.41%) |

| KFall (waist) | 0.778 | 0.854 (+9.77%) | 0.846 (+8.74%) | 0.824 (+5.91%) | 0.902 (+15.94%) |

| UMAFall (right wrist) | 0.542 | 0.652 (+20.30%) | 0.680 (+25.46%) | 0.642 (+18.45%) | 0.690 (+27.30%) |

| SisFall (waist) | 0.732 | 0.748 (+2.19%) | 0.744 (+1.64%) | 0.762 (+4.10%) | 0.738 (+0.82%) |

| Aspect | Text-to-Text LLMs (GPT4, GPT4o, Gemini) | Text-to-Motion Models (T2M, SATO, ParCo) | Diffusion-TS | Remarks |

|---|---|---|---|---|

| Joint-specific motion | Limited | Strong | N/A | T2M models capture body-part motion more realistically |

| Demographic sensitivity (age/gender) | Weak | Weak | N/A | None of the models showed demographic variation |

| Alignment with real data (JSD, Coverage) | Moderate to poor, unstable in high frequency | Moderate | Strong | Diffusion-TS maintains stability across datasets |

| Fall detection performance (F1-score) | Dataset-dependent; best on UMAFall | Consistent gains across datasets | Stable but not always highest | T2M often yields highest gains |

| Sampling rate robustness | Poor at high frequency (≥100 Hz) | Moderate | Strong | LLMs degrade as sampling rate increases |

| Prompt scalability | Manual or Auto; labor-intensive | Manual; labor-intensive | N/A | Prompt engineering is a major bottleneck for LLMs |

| Computational cost | Low (inference only) | High (requires fine-tuning) | Moderate (training required) | T2M fine-tuning is costly but effective |

| Deployability on wearables | Limited (due to quantization, noise) | Limited (requires model compression) | More practical | Diffusion-TS offers better stability |
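
The alignment row in the table above refers to the Jensen-Shannon divergence (JSD). As a minimal sketch of how such a score can be obtained from two sets of one-dimensional samples (for example, acceleration magnitudes), the code below bins both sets over a shared range and compares the resulting histograms. The number of bins, the base-2 logarithm (which bounds JSD between 0 and 1), the function names, and the toy values are all assumptions made for illustration, not the evaluation settings of the study.

```python
import math


def histogram(values, bins, lo, hi):
    """Normalised histogram of values over equal-width bins on [lo, hi]."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for v in values:
        index = min(int((v - lo) / width), bins - 1)  # v == hi goes to the last bin
        counts[index] += 1
    return [c / len(values) for c in counts]


def kl_divergence(p, q):
    """Kullback-Leibler divergence in bits; terms with p == 0 contribute 0."""
    return sum(a * math.log2(a / b) for a, b in zip(p, q) if a > 0)


def jsd(p, q):
    """Jensen-Shannon divergence between two discrete distributions."""
    m = [(a + b) / 2 for a, b in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


def jsd_between_samples(real, synthetic, bins=20):
    """Bin both sample sets over their shared range, then compute JSD."""
    lo = min(min(real), min(synthetic))
    hi = max(max(real), max(synthetic))
    if hi == lo:  # all samples identical, distributions coincide
        return 0.0
    return jsd(histogram(real, bins, lo, hi), histogram(synthetic, bins, lo, hi))


real = [0.9, 1.0, 1.1, 1.0, 2.5, 3.0]    # hypothetical real magnitudes
close = [1.0, 1.0, 1.1, 0.9, 2.4, 3.0]   # hypothetical well-aligned synthetic data
far = [5.0, 5.2, 5.1, 5.3, 5.0, 5.1]     # hypothetical poorly aligned synthetic data

print(jsd_between_samples(real, close), jsd_between_samples(real, far))
```

Lower values indicate closer alignment: identical histograms give 0, and histograms with no overlapping bins give 1 under the base-2 convention used here.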

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alamgeer, S.; Souissi, Y.; Ngu, A. AI-Generated Fall Data: Assessing LLMs and Diffusion Model for Wearable Fall Detection. Sensors 2025, 25, 5144. https://doi.org/10.3390/s25165144
