Research on Deep Learning-Based Multi-Level Cross-Domain Foreign Object Detection in Power Transmission Lines

Abstract

1. Introduction

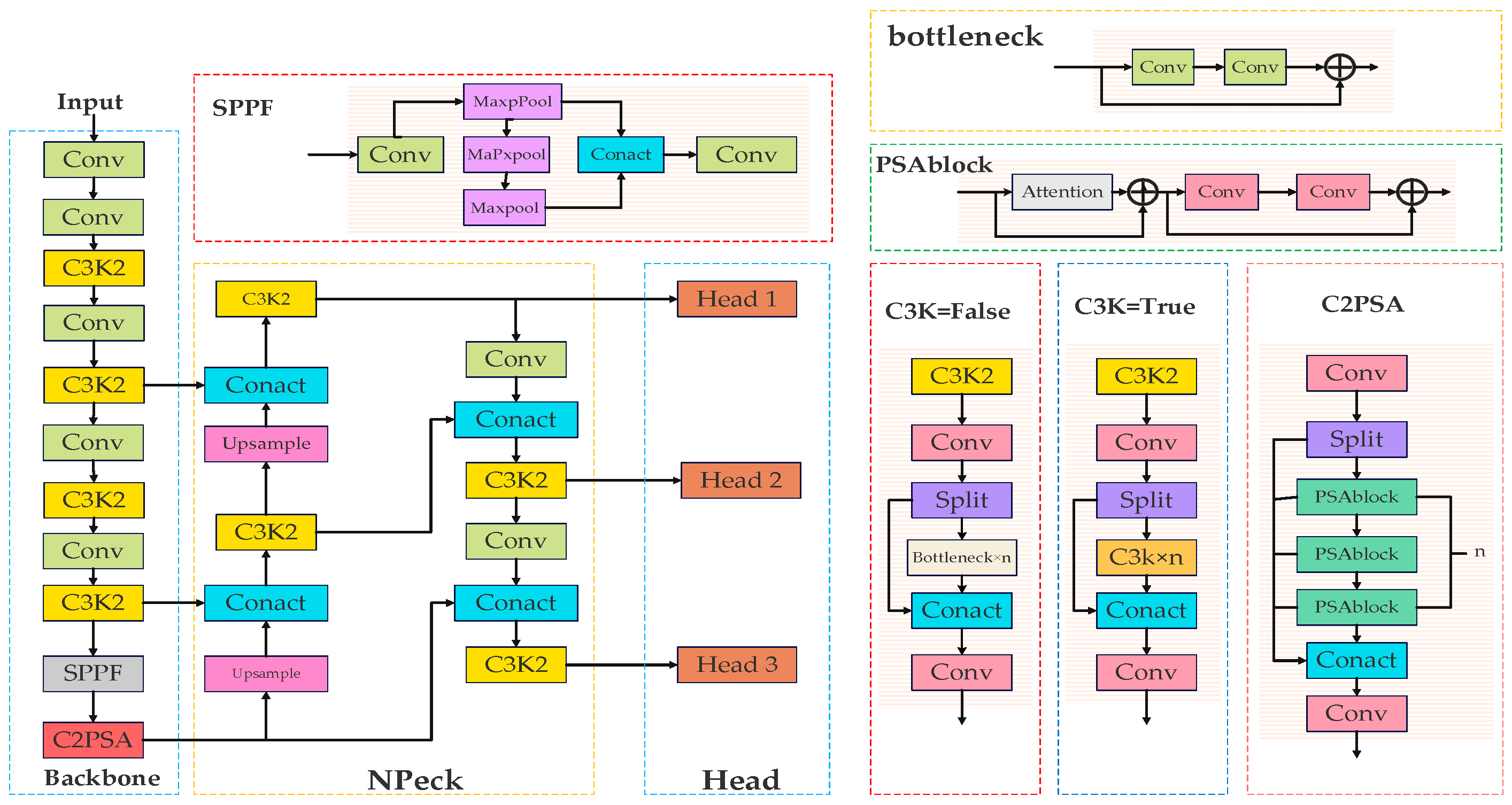

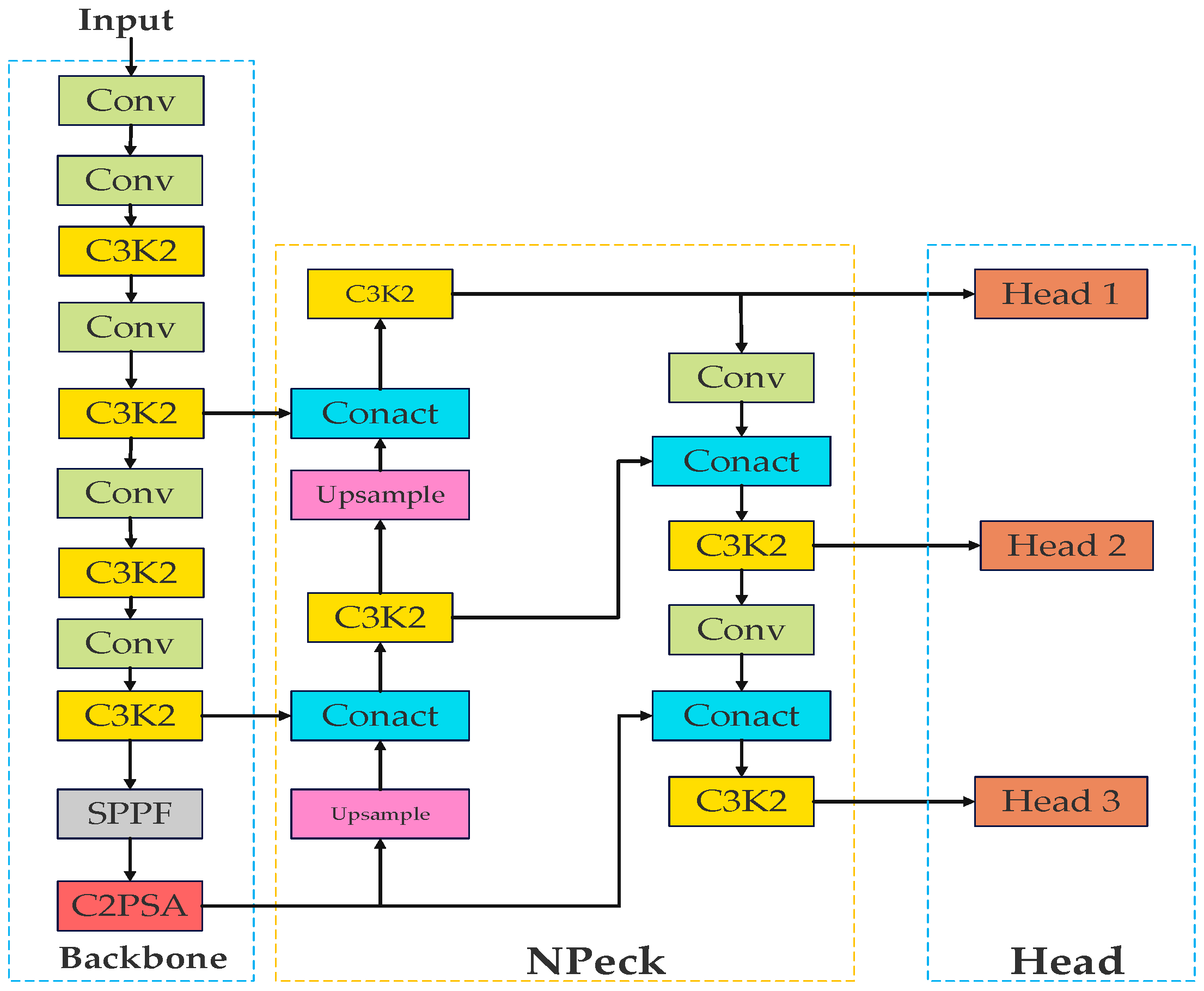

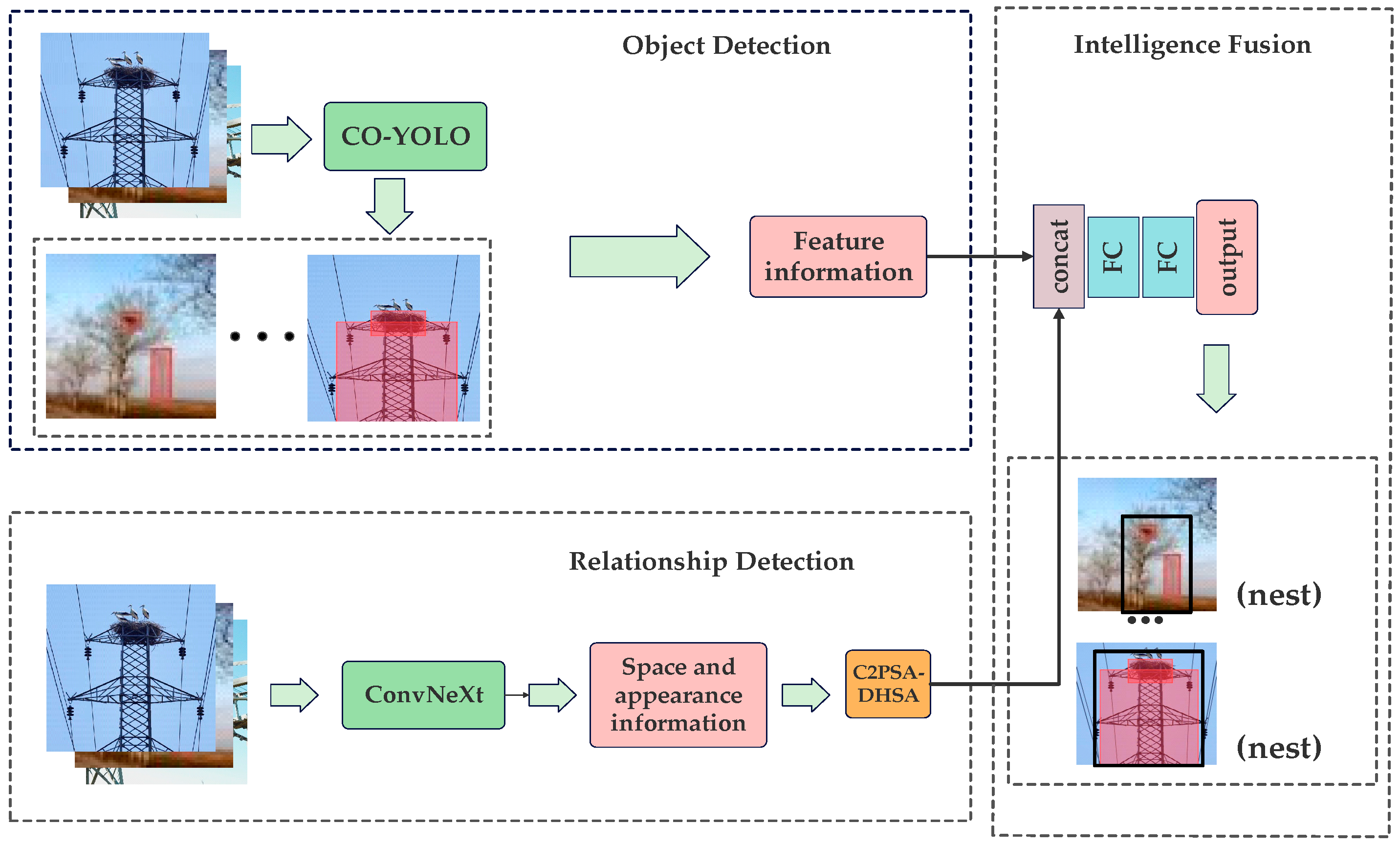

- A structural enhancement of YOLOv11 that uses the C3k2-Dual, NonLocalBlockND, C2PSA-DHSA, and DySample modules together with the PIoU loss, in combination with ConvNeXt, enabling improved detection of small, dense, and occluded targets with faster inference, tailored for real-time transmission line scenarios.

- A novel multi-level cross-domain fusion framework is proposed that combines object-level features and spatial semantic cues using ConvNeXt-B, thereby strengthening feature representation and detection robustness.

- Integration of Bayesian optimization for automated hyperparameter tuning, leading to improved convergence speed and overall detection performance.

2. Models and Methods

2.1. Object Detection Part

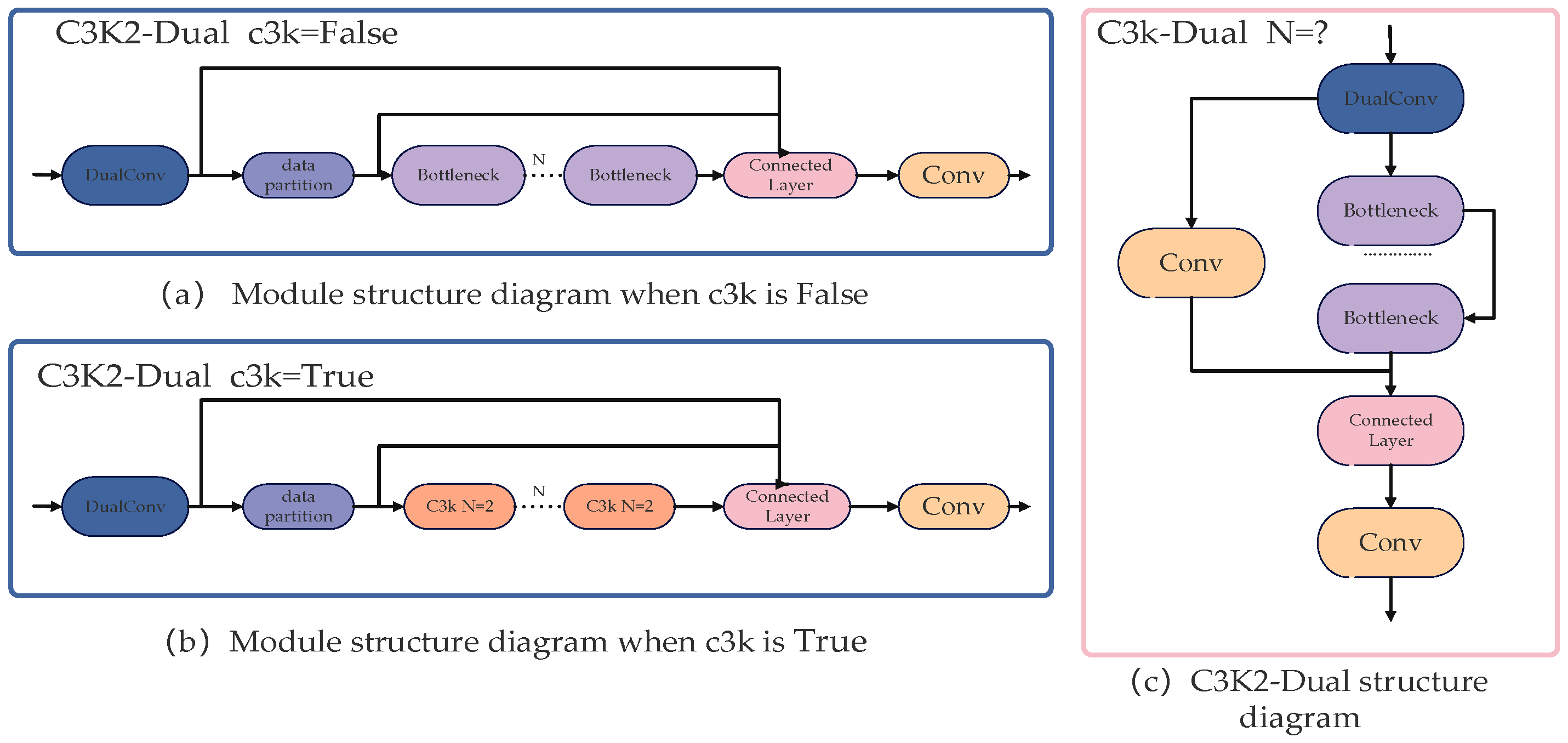

2.1.1. C3k2-Dual Module

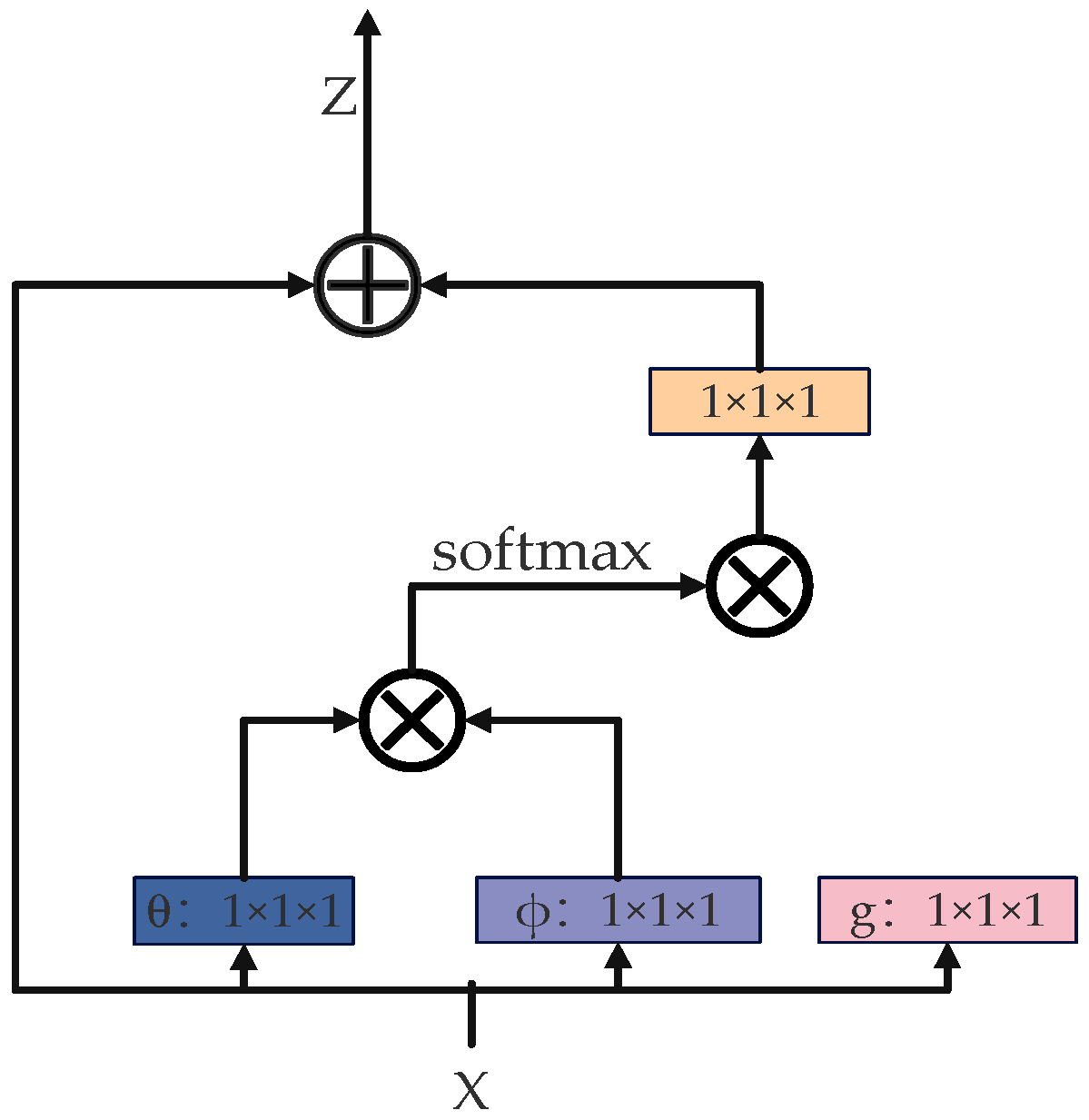

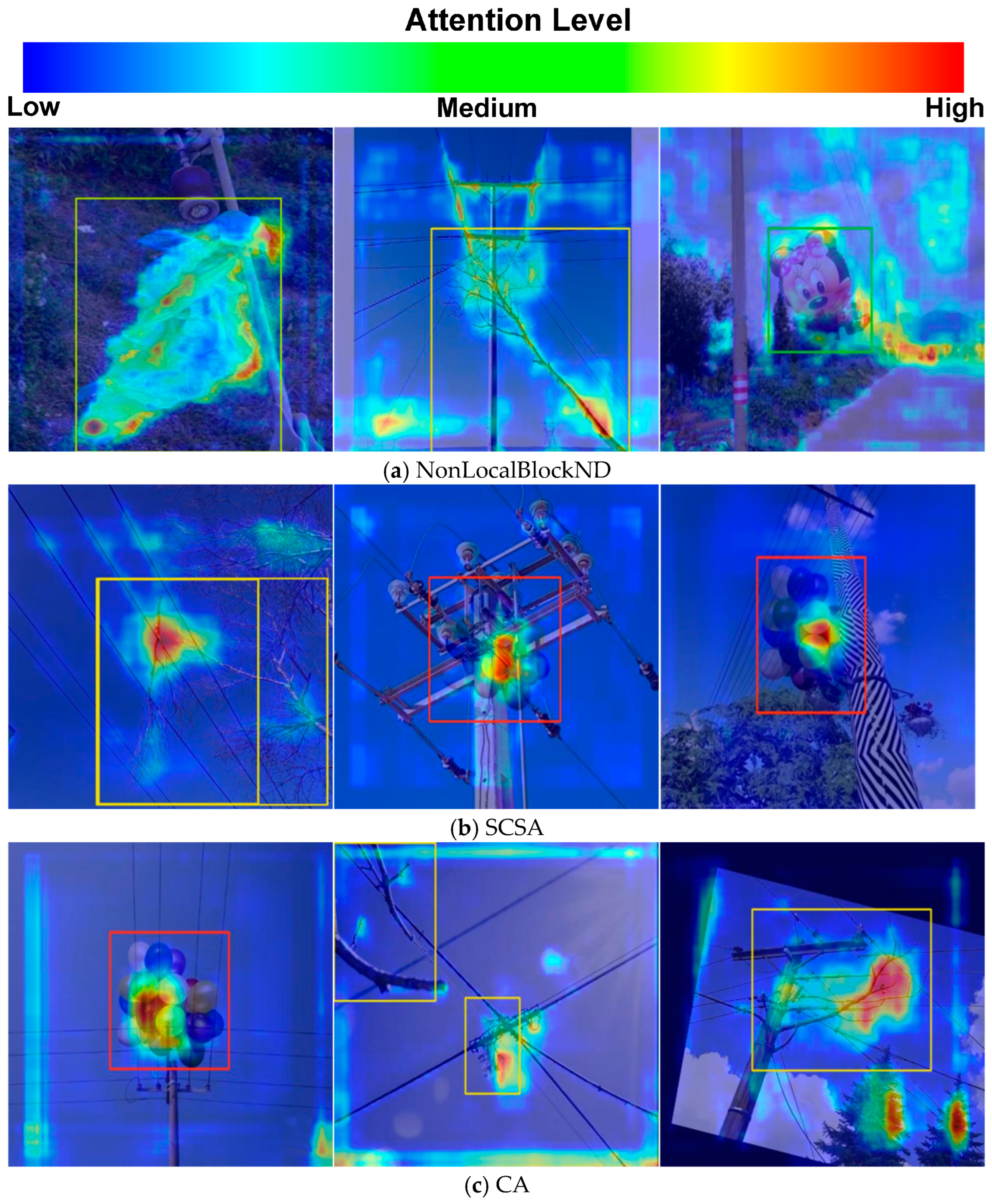

2.1.2. NonLocalBlockND Module

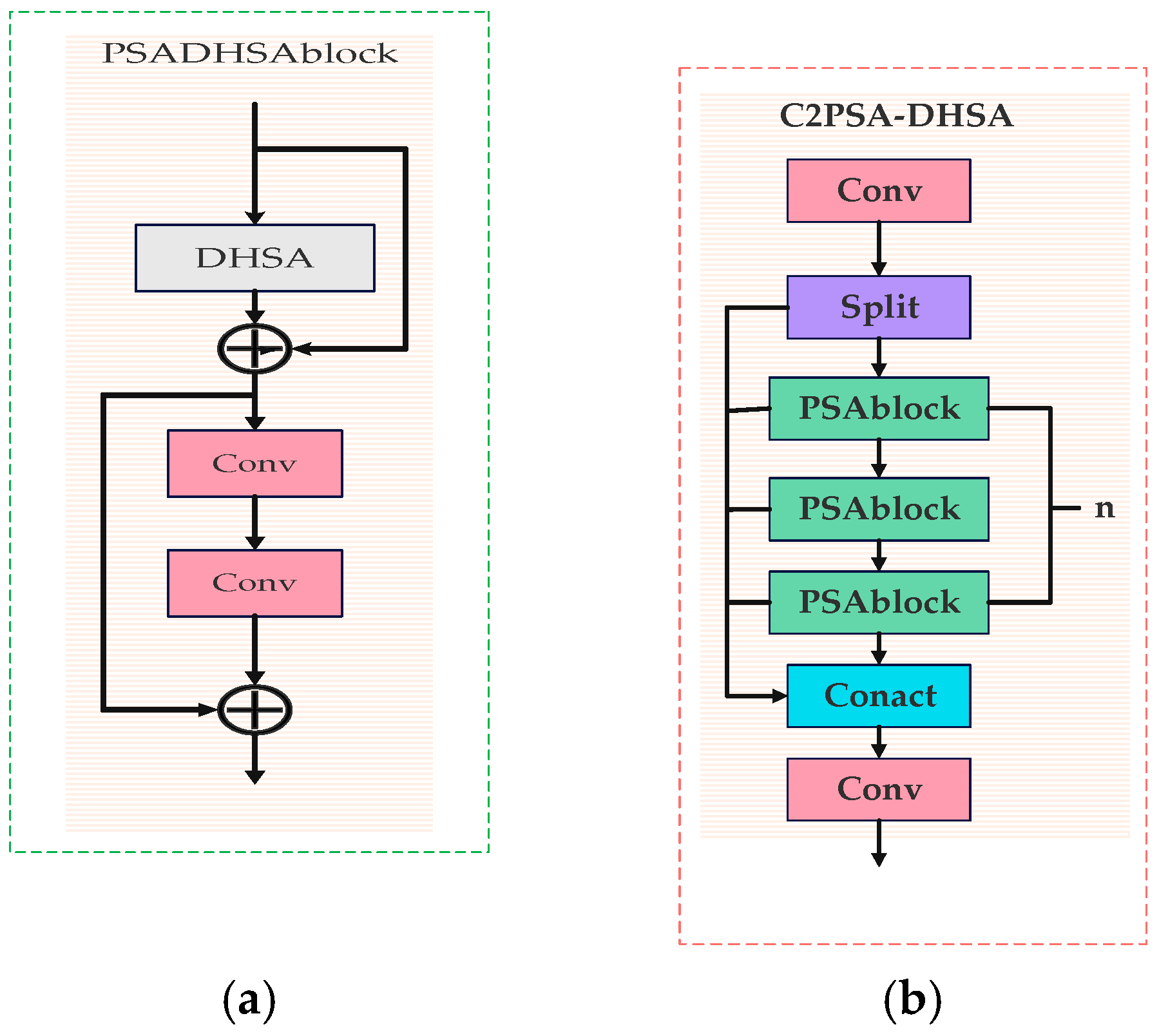

2.1.3. C2PSA-DHSA Module

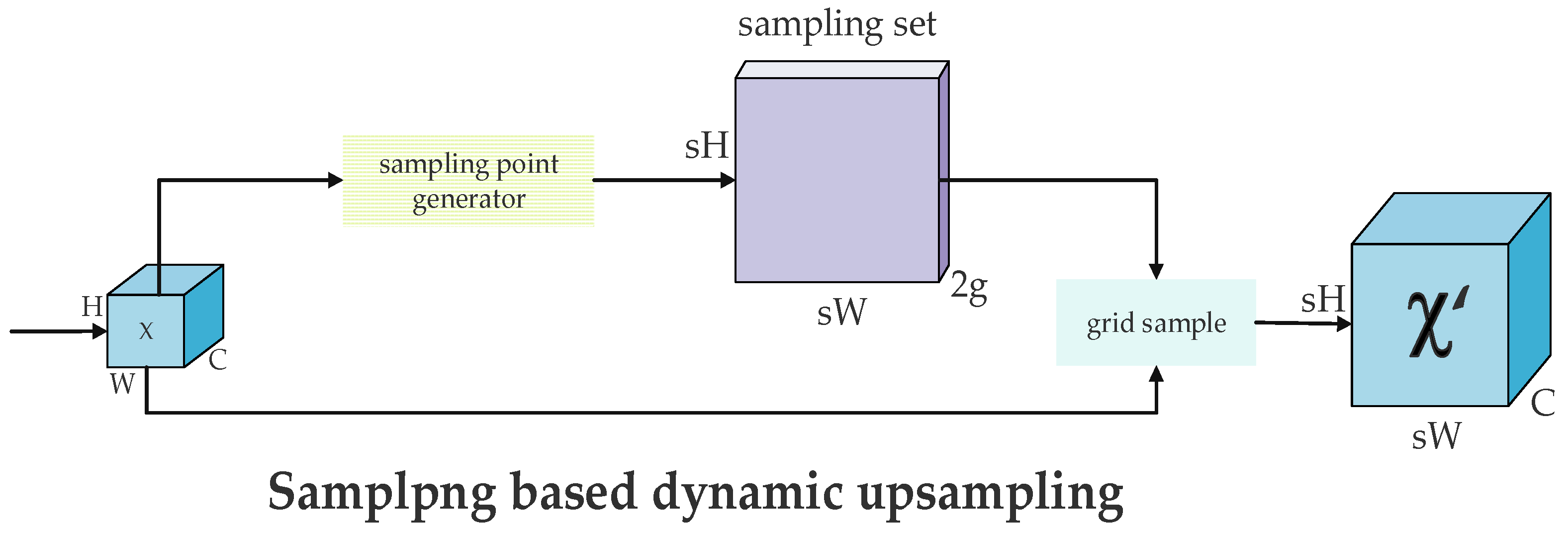

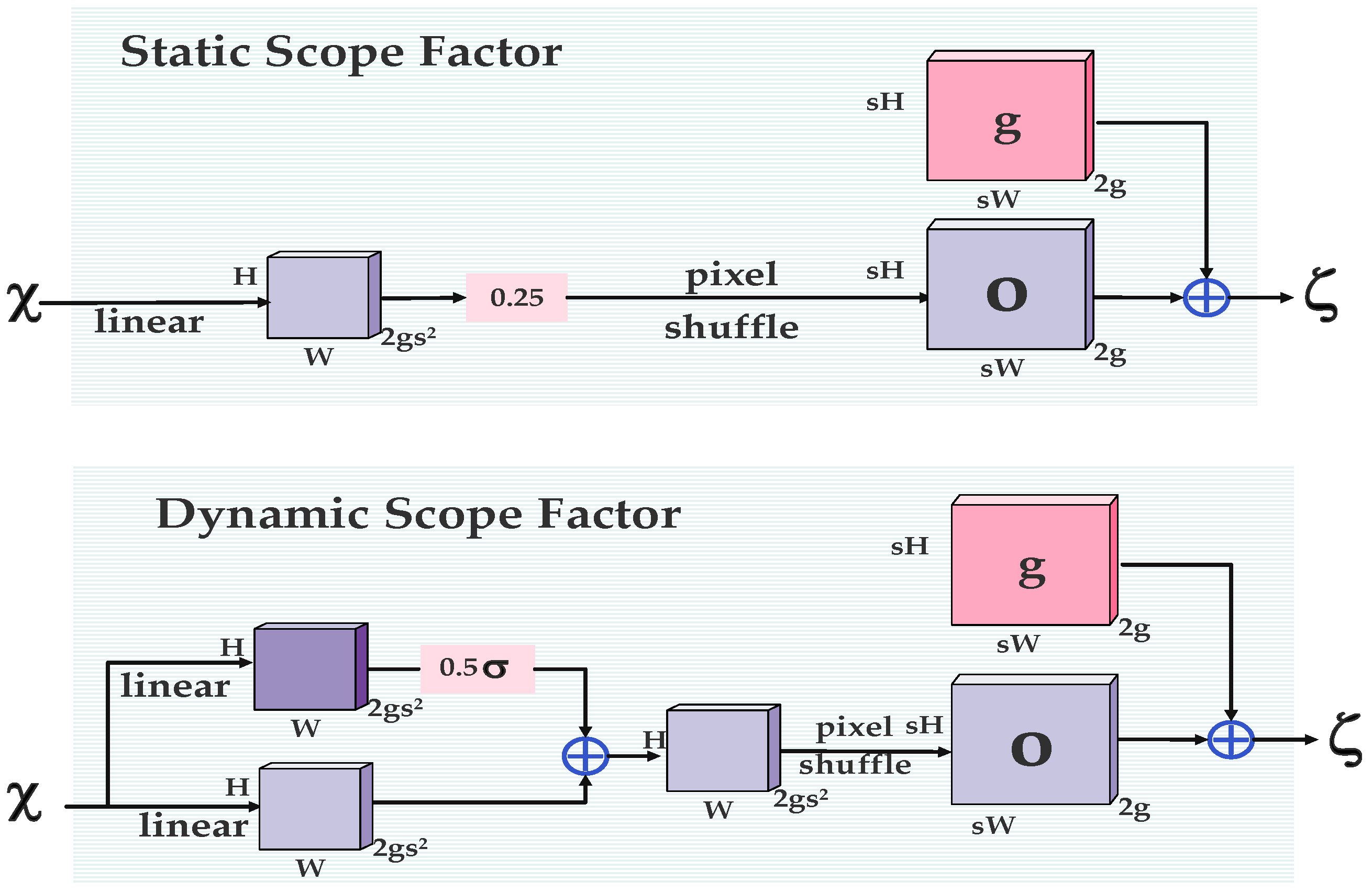

2.1.4. DySample Module

2.1.5. Loss Function

2.1.6. Improved Network Model

2.2. Relationship Detection

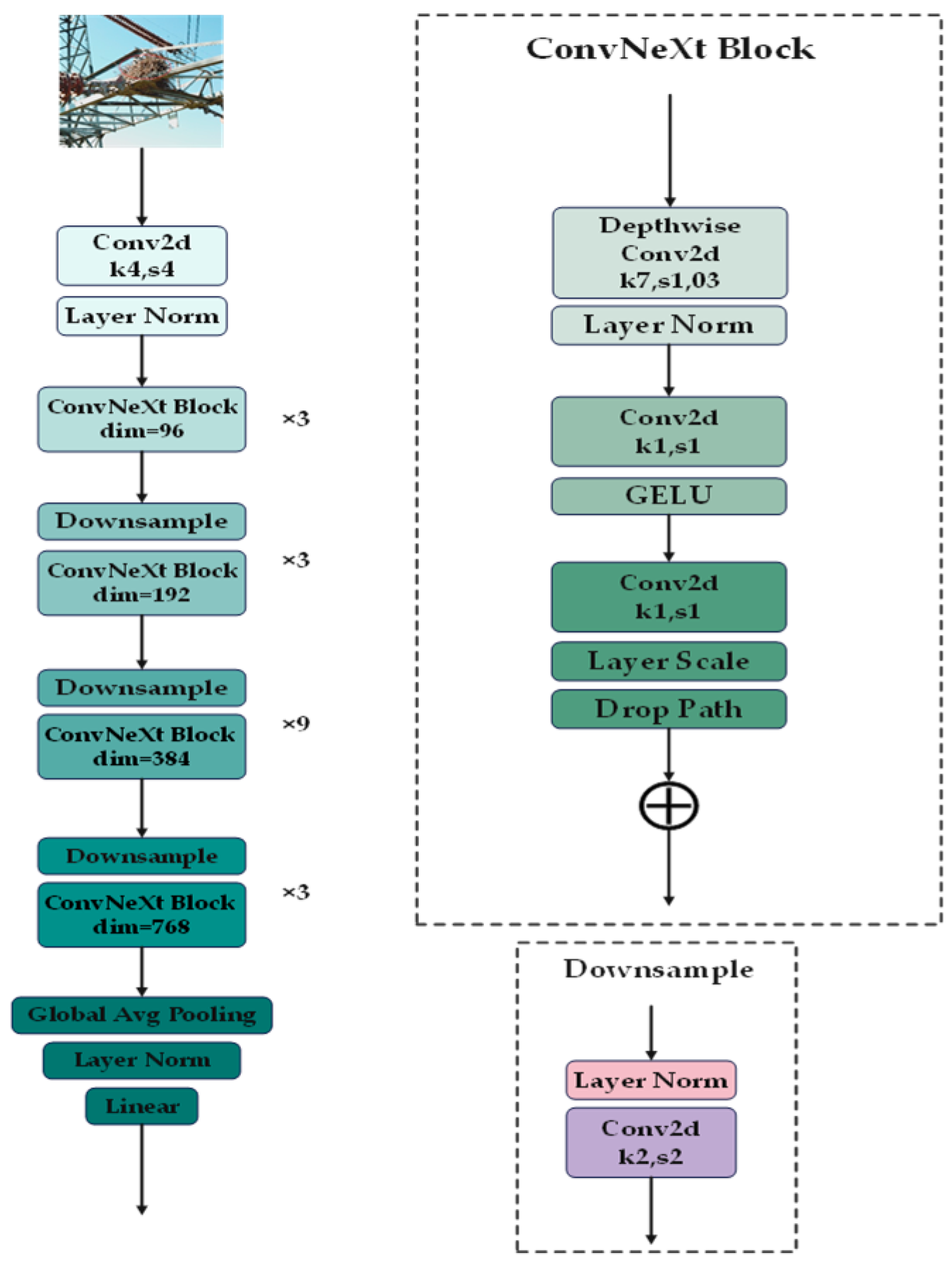

ConvNeXT-B

2.3. CO-YOLO

3. Experiments and Analyses

3.1. Experimental Settings

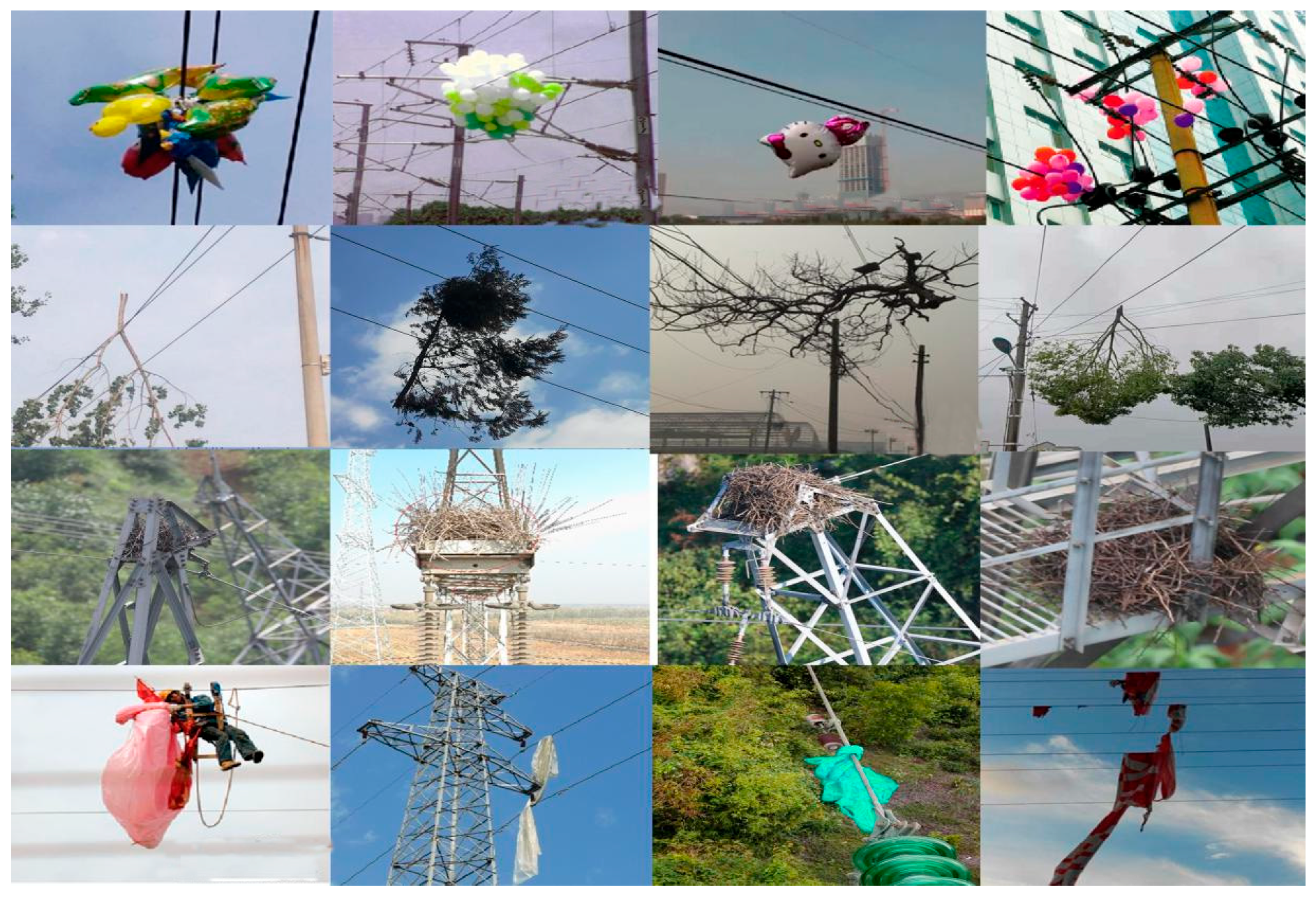

3.2. Datasets

- Training set: 70% of the total samples, used for model parameter training;

- Validation set: 15%, used for hyperparameter tuning and model selection;

- Test set: 15%, used for final performance evaluation.
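The 70/15/15 partition above can be sketched as a seeded shuffle followed by slicing. This is only an illustrative sketch: the function name `split_indices`, the seed, and the choice of 2081 samples (the size of the enhanced dataset reported in the dataset table) are assumptions, not the authors' actual splitting procedure.

```python
import random


def split_indices(n_samples, train_frac=0.70, val_frac=0.15, seed=0):
    """Shuffle sample indices and split them into train/val/test lists.

    Hypothetical helper: the paper states only the 70/15/15 ratios.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # seeded for reproducibility
    n_train = int(n_samples * train_frac)
    n_val = int(n_samples * val_frac)
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test


# Assumed total: the 2081 enhanced samples listed in the dataset table.
train_idx, val_idx, test_idx = split_indices(2081)
print(len(train_idx), len(val_idx), len(test_idx))
```

Assigning the remainder to the test set keeps the three subsets disjoint and exhaustive even when the fractions do not divide the sample count evenly.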

3.3. Evaluation Metrics

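- Accuracy measures the proportion of all predictions that are correct, as defined in Equation (5):

  $$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{5}$$

- Precision reflects the proportion of predicted positive samples that are indeed positive, as shown in Equation (6):

  $$\text{Precision} = \frac{TP}{TP + FP} \tag{6}$$

- Recall (also called Sensitivity) captures the proportion of actual positive samples that are correctly identified, as shown in Equation (7):

  $$\text{Recall} = \frac{TP}{TP + FN} \tag{7}$$

Here TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.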
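As a minimal sketch, the three metrics can be computed directly from confusion-matrix counts using their standard definitions. The function names and the example counts below are hypothetical and are not taken from the paper's experiments.

```python
def accuracy(tp, tn, fp, fn):
    """Fraction of all predictions that are correct."""
    total = tp + tn + fp + fn
    return (tp + tn) / total if total else 0.0


def precision(tp, fp):
    """Fraction of predicted positives that are truly positive."""
    denom = tp + fp
    return tp / denom if denom else 0.0


def recall(tp, fn):
    """Fraction of actual positives that were detected."""
    denom = tp + fn
    return tp / denom if denom else 0.0


# Hypothetical counts, chosen only to illustrate the arithmetic.
tp, tn, fp, fn = 90, 880, 10, 20
print(accuracy(tp, tn, fp, fn), precision(tp, fp), recall(tp, fn))
```

The zero-denominator guards return 0.0 when a class has no predictions or no ground-truth instances; that convention is a design choice of this sketch.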

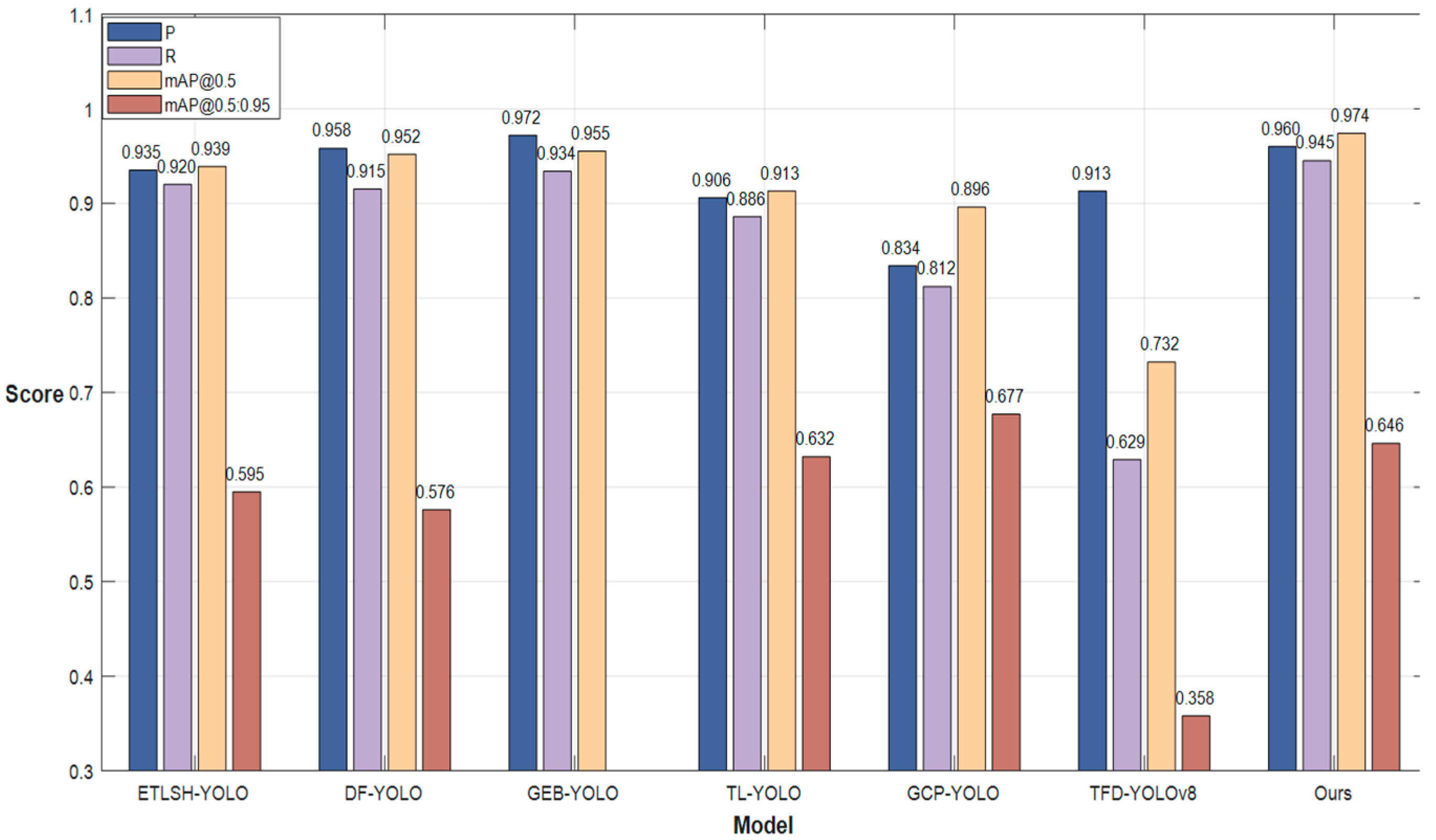

3.4. Benchmark Comparison Experiment

3.5. Subsection

3.6. Ablation Study

3.7. Attention Visualization Comparison Experiment

3.8. Generalized Experiments

4. Conclusions

- Compared to YOLOv11, CO-YOLO improves mAP@0.5 by 1.9% and mAP@0.5:0.95 by 2.2%, with only a 12.1% reduction in FPS.

- Compared to ETLSH-YOLO, it improves mAP@0.5 and mAP@0.5:0.95 by 3.7% and 8.6%, respectively.

- When tested against TFD-YOLOv8, mAP@0.5 and mAP@0.5:0.95 increased by 33.0% and 80.4%, respectively.
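The figures above can be checked against the values in the comparison tables. The sketch below assumes, based on the arithmetic rather than on any statement in the paper, that the YOLOv11 mAP gains are absolute differences in percentage points, while the ETLSH-YOLO, TFD-YOLOv8, and FPS figures are relative changes. The helper names are hypothetical.

```python
def absolute_gain(new, old):
    """Difference in percentage points for metrics on a 0-1 scale."""
    return (new - old) * 100.0


def relative_change(new, old):
    """Change of `new` relative to `old`, in percent."""
    return (new - old) / old * 100.0


# CO-YOLO vs. YOLOv11 (values from the benchmark comparison table).
yolo11_map50 = absolute_gain(0.984, 0.965)
yolo11_map5095 = absolute_gain(0.661, 0.639)
yolo11_fps = relative_change(303, 345)

# CO-YOLO vs. ETLSH-YOLO and TFD-YOLOv8 (values from the table of
# transmission-line detectors, where "Ours" is listed as 0.974 / 0.646).
etlsh_map50 = relative_change(0.974, 0.939)
etlsh_map5095 = relative_change(0.646, 0.595)
tfd_map50 = relative_change(0.974, 0.732)
tfd_map5095 = relative_change(0.646, 0.358)

for name, value in [
    ("YOLOv11 mAP@0.5 (points)", yolo11_map50),
    ("YOLOv11 mAP@0.5:0.95 (points)", yolo11_map5095),
    ("YOLOv11 FPS (%)", yolo11_fps),
    ("ETLSH-YOLO mAP@0.5 (%)", etlsh_map50),
    ("ETLSH-YOLO mAP@0.5:0.95 (%)", etlsh_map5095),
    ("TFD-YOLOv8 mAP@0.5 (%)", tfd_map50),
    ("TFD-YOLOv8 mAP@0.5:0.95 (%)", tfd_map5095),
]:
    print(f"{name}: {value:.2f}")
```

Under these assumptions the computed values agree with the reported ones to within about 0.1, which is consistent with rounding.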

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Chivunga, J.N.; Lin, Z.; Blanchard, R. Power Systems’ Resilience: A Comprehensive Literature Review. Energies 2023, 16, 7256.

- Vazquez, D.A.Z.; Qiu, F.; Fan, N.; Sharp, K. Wildfire mitigation plans in power systems: A literature review. IEEE Trans. Power Syst. 2022, 37, 3540–3551.

- Ferreira, V.H.; Zanghi, R.; Fortes, M.Z.; Sotelo, G.G.; Silva, R.D.B.M.; Souza, J.C.S.; Guimarães, C.H.C.; Gomes, S., Jr. A survey on intelligent system application to fault diagnosis in electric power system transmission lines. Electr. Power Syst. Res. 2016, 136, 135–153.

- Cozza, A.; Pichon, L. Echo response of faults in transmission lines: Models and limitations to fault detection. IEEE Trans. Microw. Theory Tech. 2016, 64, 4155–4164.

- Affijulla, S.; Tripathy, P. A robust fault detection and discrimination technique for transmission lines. IEEE Trans. Smart Grid 2017, 9, 6348–6358.

- Kumar, B.R.; Mohapatra, A.; Chakrabarti, S.; Kumar, A. Phase angle-based fault detection and classification for protection of transmission lines. Int. J. Electr. Power Energy Syst. 2021, 133, 107258.

- Chen, Z.; Yang, J.; Feng, Z.; Zhu, H. RailFOD23: A dataset for foreign object detection on railroad transmission lines. Sci. Data 2024, 11, 72.

- Wu, Y.; Zhao, S.; Xing, Z.; Wei, Z.; Li, Y.; Li, Y. Detection of foreign objects intrusion into transmission lines using diverse generation model. IEEE Trans. Power Deliv. 2023, 38, 3551–3560.

- Liu, C.; Ma, L.; Sui, X.; Guo, N.; Yang, F.; Yang, X.; Huang, Y.; Wang, X. YOLO-CSM-based component defect and foreign object detection in overhead transmission lines. Electronics 2023, 13, 123.

- Tang, C.; Dong, H.; Huang, Y.; Han, T.; Fang, M.; Fu, J. Foreign object detection for transmission lines based on Swin Transformer V2 and YOLOX. Vis. Comput. 2024, 40, 3003–3021.

- Peng, L.; Wang, K.; Zhou, H.; Li, H.; Yu, P. Detection of bolt defects on transmission lines based on multi-scale YOLOv7. IEEE Access 2024, 12, 156639–156650.

- Fahim, S.R.; Sarker, Y.; Sarker, S.K.; Sheikh, M.R.I.; Das, S.K. Self attention convolutional neural network with time series imaging based feature extraction for transmission line fault detection and classification. Electr. Power Syst. Res. 2020, 187, 106437.

- Kumar, V.R.; Jeyanthy, P.A.; Kesavamoorthy, R. Optimization-assisted CNN model for fault classification and site location in transmission lines. Int. J. Image Graph. 2024, 24, 2450008.

- Zunair, H.; Khan, S.; Hamza, A.B. RSUD20K: A dataset for road scene understanding in autonomous driving. In Proceedings of the 2024 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 27–30 October 2024; pp. 708–714.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Boston, MA, USA, 7–12 June 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In European Conference on Computer Vision; Springer International Publishing: Cham, Switzerland, 2016; pp. 21–37.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J. YOLOv10: Real-time end-to-end object detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.

- Nguyen, D.T.; Nguyen, T.N.; Kim, H.; Lee, H.J. A high-throughput and power-efficient FPGA implementation of YOLO CNN for object detection. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2019, 27, 1861–1873.

- Renwei, T.; Zhongjie, Z.; Yongqiang, B.; Ming, G.; Zhifeng, G. Key parts of transmission line detection using improved YOLO v3. Int. Arab J. Inf. Technol. 2021, 18, 747–754.

- Yaru, W.; Lilong, F.; Xiaoke, S.; Zhuo, Q.U.; Ke, Y.A.N.G.; Qianming, W.A.N.G.; Yongjie, Z.H.A.I. TFD-YOLOv8: A transmission line foreign object detection method. J. Graph. 2024, 45, 901.

- Li, S.J.; Liu, Y.X.; Li, M.; Ding, L. DF-YOLO: Highly accurate transmission line foreign object detection algorithm. IEEE Access 2023, 11, 108398–108406.

- Yu, C.; Liu, Y.; Zhang, W.; Zhang, X.; Zhang, Y.; Jiang, X. Foreign objects identification of transmission line based on improved YOLOv7. IEEE Access 2023, 11, 51997–52008.

- Zhao, L.; Zhang, Y.; Dou, Y.; Jiao, Y.; Liu, Q. ETLSH-YOLO: An Edge–Real-Time transmission line safety hazard detection method. Symmetry 2024, 16, 1378.

- Zheng, J.; Liu, H.; He, Q.; Hu, J. GEB-YOLO: A novel algorithm for enhanced and efficient detection of foreign objects in power transmission lines. Sci. Rep. 2024, 14, 15769.

- Shao, Y.; Zhang, R.; Lv, C.; Luo, Z.; Che, M. TL-YOLO: Foreign-Object detection on power transmission line based on improved YOLOv8. Electronics 2024, 13, 1543.

- Duan, P.; Liang, X. An Improved YOLOv8-Based Foreign Detection Algorithm for Transmission Lines. Sensors 2024, 24, 6468.

- Xu, W.; Xiwen, C.; Haibin, C.; Yi, C.; Jun, Z. Foreign object detection method in transmission lines based on improved YOLOv8n. In Proceedings of the 10th International Symposium on System Security, Safety, and Reliability (ISSSR), IEEE, Xiamen, China, 30–31 March 2024; pp. 196–200.

| Item | Configuration |
|---|---|
| Operating System | Windows 11 |
| CPU | 12th Gen Intel Core™ i9-12900H |
| GPU | NVIDIA GeForce RTX 3060 Laptop GPU (6 GB) |
| Python | 3.8 |
| PyTorch | 2.1.2 |
| CUDA | 10.0 |

| Number / Type | Nest | Branch | Balloon | Plastic | All |
|---|---|---|---|---|---|
| Origin | 315 | 132 | 352 | 180 | 979 |
| Enhanced | 576 | 498 | 679 | 328 | 2081 |

| Model | Type | GFLOPs | Params | P (Precision) | R (Recall) | mAP@0.5 | mAP@0.5:0.95 | FPS |
|---|---|---|---|---|---|---|---|---|
| Faster R-CNN | Two-stage | 198 | 42,875,000 | 0.576 | 0.601 | 0.956 | 0.577 | 192 |
| Mask R-CNN | Two-stage | 227 | 44,396,000 | 0.535 | 0.598 | 0.957 | 0.56 | 184 |
| Cascade R-CNN | Two-stage | 201 | 69,161,000 | 0.567 | 0.622 | 0.962 | 0.591 | 225 |
| Fast R-CNN | Two-stage | 131 | 40,700,000 | 0.596 | 0.618 | 0.966 | 0.591 | 186 |
| Libra R-CNN | Two-stage | 180 | 41,627,000 | 0.567 | 0.632 | 0.978 | 0.585 | 210 |
| YOLOv5 | One-stage | 7.1 | 2,503,724 | 0.962 | 0.958 | 0.974 | 0.633 | 323 |
| YOLOv8 | One-stage | 8.1 | 3,006,428 | 0.961 | 0.918 | 0.966 | 0.628 | 294 |
| YOLOv10 | One-stage | 8.2 | 2,695,976 | 0.917 | 0.93 | 0.952 | 0.629 | 313 |
| YOLOv11 | One-stage | 6.3 | 2,582,932 | 0.958 | 0.946 | 0.965 | 0.639 | 345 |
| Ours | One-stage | 7.2 | 2,821,696 | 0.96 | 0.945 | 0.984 | 0.661 | 303 |

| Model | Params | P | R | mAP@0.5 | mAP@0.5:0.95 | FPS |
|---|---|---|---|---|---|---|
| CBAM | 2,648,822 | 0.967 | 0.929 | 0.974 | 0.634 | 286 |
| ECA | 2,624,535 | 0.961 | 0.967 | 0.978 | 0.638 | 286 |
| SCSA | 2,730,908 | 0.959 | 0.962 | 0.986 | 0.638 | 278 |
| Ours | 2,715,156 | 0.962 | 0.963 | 0.984 | 0.649 | 294 |

| Model | Params | P | R | mAP@0.5 | mAP@0.5:0.95 |
|---|---|---|---|---|---|
| C2PSA-ACmix | 2,737,044 | 0.943 | 0.947 | 0.974 | 0.625 |
| C2PSA-DAT | 2,755,228 | 0.971 | 0.952 | 0.968 | 0.633 |
| C2PSA-EMA | 2,677,564 | 0.946 | 0.944 | 0.965 | 0.633 |
| C2PSA-FL | 2,743,900 | 0.881 | 0.856 | 0.921 | 0.545 |
| Ours | 2,637,080 | 0.955 | 0.956 | 0.971 | 0.637 |

| Model | Params | P | R | mAP@0.5 | mAP@0.5:0.95 | FPS |
|---|---|---|---|---|---|---|
| C3K2-MLLAB | 2,729,596 | 0.937 | 0.96 | 0.972 | 0.639 | 203 |
| C3K2-RFA | 2,953,804 | 0.952 | 0.964 | 0.969 | 0.643 | 185 |
| C3K2-SC | 2,775,852 | 0.961 | 0.95 | 0.983 | 0.634 | 250 |
| Ours | 2,877,420 | 0.934 | 0.95 | 0.975 | 0.644 | 256 |

| Model | Params | P | R | mAP@0.5 | mAP@0.5:0.95 | FPS |
|---|---|---|---|---|---|---|
| EIoU | 2,726,812 | 0.954 | 0.956 | 0.973 | 0.624 | 313 |
| WIoU | 2,726,812 | 0.961 | 0.95 | 0.976 | 0.635 | 294 |
| DIoU | 2,726,812 | 0.951 | 0.954 | 0.972 | 0.65 | 294 |
| SIoU | 2,726,812 | 0.954 | 0.956 | 0.973 | 0.624 | 303 |

| Model | P | R | mAP@0.5 | mAP@0.5:0.95 |
|---|---|---|---|---|
| ETLSH-YOLO [27] | 0.935 | 0.920 | 0.939 | 0.595 |
| DF-YOLO [25] | 0.958 | 0.915 | 0.952 | 0.576 |
| GEB-YOLO [28] | 0.972 | 0.934 | 0.955 | — |
| TL-YOLO [29] | 0.906 | 0.886 | 0.913 | 0.632 |
| GCP-YOLO [30] | 0.834 | 0.812 | 0.896 | 0.677 |
| TFD-YOLOv8 [31] | 0.913 | 0.629 | 0.732 | 0.358 |
| Ours | 0.960 | 0.945 | 0.974 | 0.646 |

| Model | Modules enabled (√; among C2PSA-DHSA, C3k2-Dual, NonLocalBlockND, DySample, Loss) | GFLOPs | Params | P | R | mAP@0.5 | mAP@0.5:0.95 | FPS |
|---|---|---|---|---|---|---|---|---|
| YOLOv11 | | 6.3 | 2,582,932 | 0.958 | 0.946 | 0.965 | 0.639 | 345 |
| YOLOv11 | √ | 6.4 | 2,637,080 | 0.955 | 0.956 | 0.971 | 0.637 | 294 |
| YOLOv11 | √ | 6.3 | 2,595,284 | 0.958 | 0.956 | 0.974 | 0.638 | 270 |
| YOLOv11 | √ √ | 7.5 | 2,931,568 | 0.97 | 0.961 | 0.979 | 0.642 | 294 |
| YOLOv11 | √ √ | 6.5 | 2,769,304 | 0.97 | 0.944 | 0.982 | 0.654 | 270 |
| YOLOv11 | √ √ | 6.4 | 2,649,432 | 0.96 | 0.917 | 0.965 | 0.633 | 270 |
| YOLOv11 | √ √ √ | 7.6 | 3,063,792 | 0.961 | 0.941 | 0.973 | 0.646 | 263 |
| YOLOv11 | √ √ √ | 7.5 | 2,943,920 | 0.964 | 0.907 | 0.97 | 0.634 | 263 |
| YOLOv11 | √ √ √ | 7.6 | 3,021,996 | 0.962 | 0.926 | 0.972 | 0.645 | 286 |
| YOLOv11 | √ √ √ | 6.5 | 2,781,656 | 0.965 | 0.941 | 0.978 | 0.643 | 263 |
| YOLOv11 | √ √ √ √ | 7.6 | 3,076,144 | 0.952 | 0.946 | 0.969 | 0.632 | 263 |
| Ours | √ √ √ √ √ | 7.2 | 2,821,696 | 0.96 | 0.945 | 0.984 | 0.641 | 303 |

| Datasets | P | R | mAP@0.5 | mAP@0.5:0.95 |
|---|---|---|---|---|
| FOTL | 0.920 | 0.926 | 0.945 | 0.621 |
| CPLID | 0.898 | 0.861 | 0.928 | 0.533 |
| Ours | 0.960 | 0.945 | 0.984 | 0.661 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, Q.; Wang, X.; Su, Y.; Jiang, W.; Zhang, Z.; Shen, F.; Zhu, L. Research on Deep Learning-Based Multi-Level Cross-Domain Foreign Object Detection in Power Transmission Lines. Sensors 2025, 25, 5141. https://doi.org/10.3390/s25165141
