Small UAV Target Detection Algorithm Using the YOLOv8n-RFL Based on Radar Detection Technology

Abstract

1. Introduction

- (1) We propose the YOLOv8n-RFL model for UAV detection. The detection algorithm builds on the mathematical model of the UAV echo signal and converts the time-domain echo signal into the range-Doppler (RD) plane. With the RD planar graph as input to the YOLOv8n backbone network, the C2f-RVB and C2f-RVBE modules extract UAV targets from the complex background and produce more feature maps containing multi-scale UAV feature information, improving the model’s ability to extract features.

- (2)

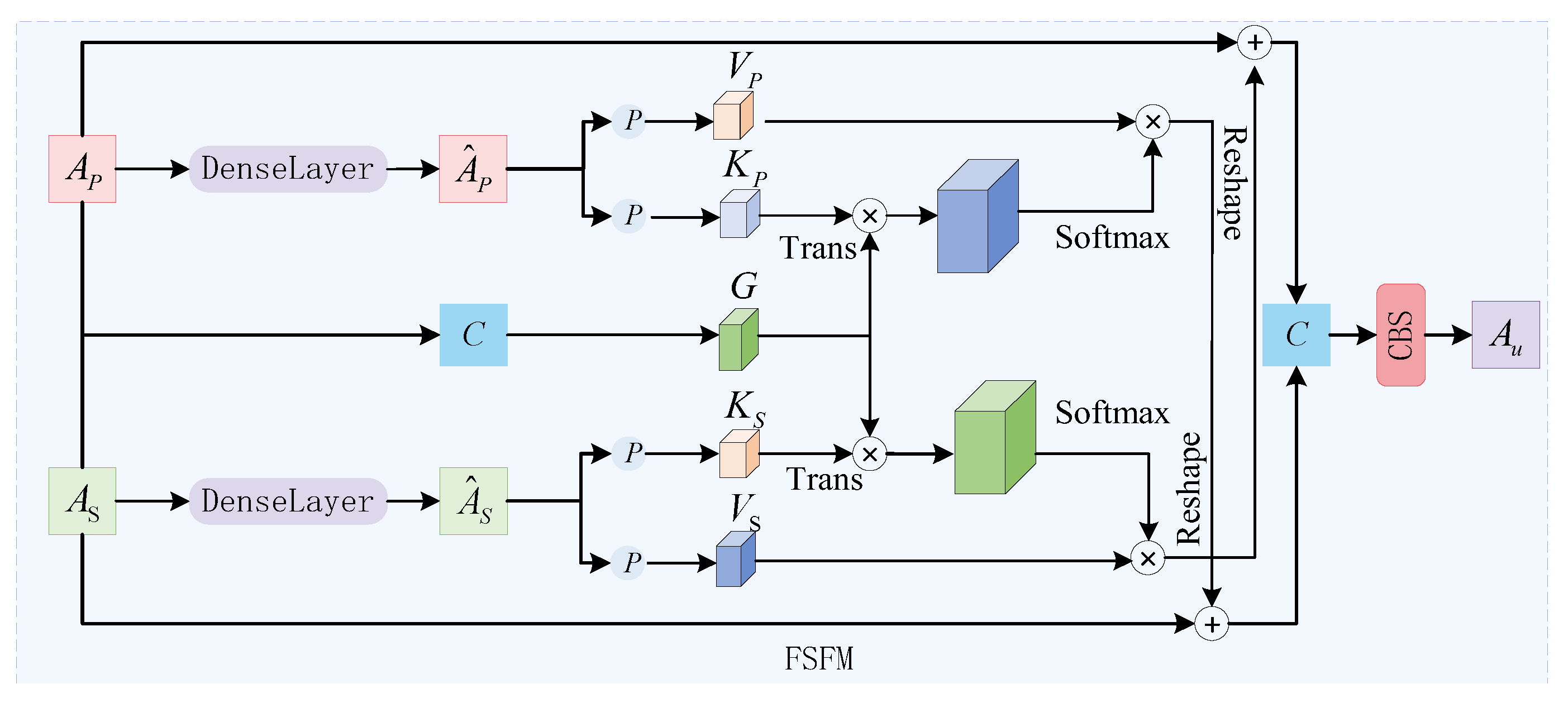

- We integrate shallow features from the backbone network and the deep features from the neck network through the FSFM to generate high-quality fused feature maps with rich details and deep semantic information, and then use the LWSD detection head to perform the UAV features recognition based on the generated fused feature maps.

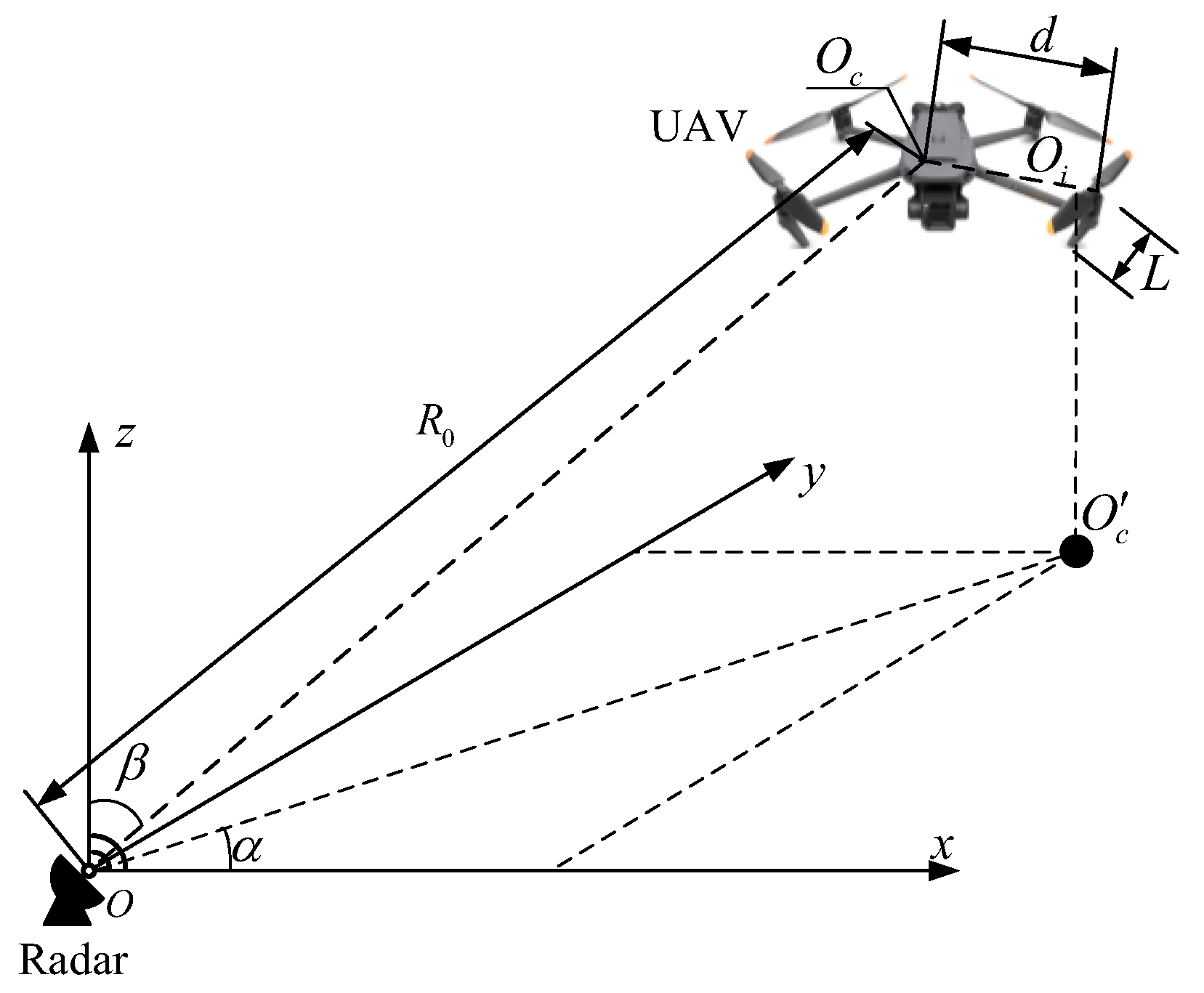

2. The Mathematical Model of Unmanned Aerial Vehicle (UAV) Echo Based on Radar Detection Technology

2.1. Basic Methods for Radar Detection of UAVs

2.2. The Mathematical Model of the UAV Echo

3. The UAV Detection Method Based on YOLOv8-RFL

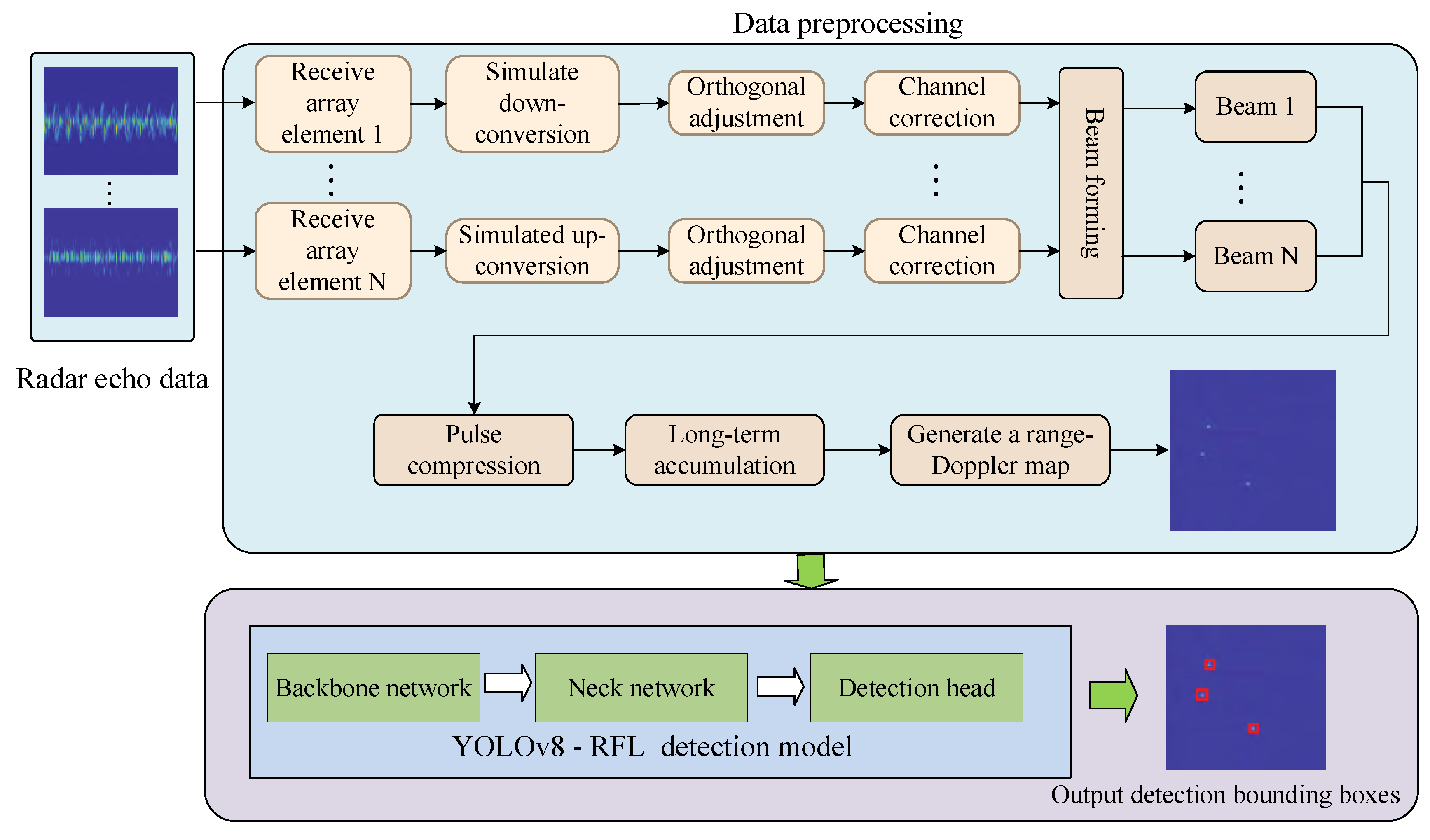

3.1. Overall Idea of the Detection of UAV

- (1) Radar signal reception. Each receiving array element independently acquires the signal reflected from the UAV target. To ensure precise signal processing, the data from the receiving array elements are generally corrected externally to eliminate errors introduced by hardware or environmental factors. Through down-conversion and quadrature demodulation, the UAV echo signal is converted to a baseband signal, which simplifies subsequent digital signal processing.

- (2) After channel correction, down-conversion, and quadrature demodulation, the signals are consistent across the receiving channels and the phase and amplitude errors between channels are reduced, which guarantees the correct combination of the multi-channel signals.

- (3) Perform beamforming on the corrected signal. Beamforming is the weighted combination of data from each receiving channel for a specific direction, which achieves spatial filtering: it generates beams in different directions to enhance the UAV target signal from the look direction while suppressing interference from other directions. Pulse compression is then applied to the received radar echo signals to improve range resolution. Finally, long-term accumulation performs Doppler processing on the pulse-compressed signal to form the RD planar graph.

- (4) The range-Doppler (RD) map is formed by the long-term accumulation process, which mainly comprises moving target detection (MTD) and moving target indication (MTI). MTD performs Doppler processing on the pulse-compressed signal, and MTI further suppresses static or slow-moving background clutter to highlight moving targets. The YOLOv8-RFL detection model is then applied to the RD planar graph to detect the UAV target.
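
The pulse-compression and Doppler-processing stages described in steps (3) and (4) can be illustrated with a small, self-contained sketch. It is not the authors' implementation: the chirp reference, the scene (one moving target plus one stronger static return), the two-pulse canceller used as the MTI stage, and the choice to apply the canceller before the slow-time transform are all assumptions made for illustration. A naive DFT stands in for an FFT so that the example needs only the standard library.

```python
import cmath
import math

def dft(x):
    """Naive DFT (O(N^2)); adequate for a small demonstration."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def pulse_compress(echo, ref):
    """Matched filter: correlate one fast-time echo with the reference pulse."""
    n, m = len(echo), len(ref)
    return [sum(echo[i + j] * ref[j].conjugate() for j in range(m) if i + j < n)
            for i in range(n)]

def mti_two_pulse(pulses):
    """Two-pulse canceller: subtract successive pulses to remove static returns."""
    return [[b - a for a, b in zip(prev, cur)]
            for prev, cur in zip(pulses, pulses[1:])]

def range_doppler_map(pulses, ref):
    """Return rd[doppler_bin][range_bin] magnitudes."""
    compressed = [pulse_compress(p, ref) for p in pulses]
    cancelled = mti_two_pulse(compressed)
    n_doppler, n_range = len(cancelled), len(cancelled[0])
    # Doppler processing: one slow-time DFT per range bin.
    spectra = [dft([cancelled[p][r] for p in range(n_doppler)])
               for r in range(n_range)]
    return [[abs(spectra[r][d]) for r in range(n_range)]
            for d in range(n_doppler)]

# Hypothetical scene: 17 pulses of 32 fast-time samples each.
N_PULSES, N_RANGE, PULSE_LEN = 17, 32, 8
ref = [cmath.exp(1j * math.pi * j * j / PULSE_LEN) for j in range(PULSE_LEN)]  # chirp
TARGET_DELAY, TARGET_DOPPLER_BIN = 10, 4   # moving target (unit amplitude)
CLUTTER_DELAY, CLUTTER_AMP = 20, 5.0       # static return, stronger than the target

pulses = []
for p in range(N_PULSES):
    echo = [0j] * N_RANGE
    # The target phase advances from pulse to pulse (Doppler shift).
    doppler = cmath.exp(2j * math.pi * TARGET_DOPPLER_BIN * p / (N_PULSES - 1))
    for j, s in enumerate(ref):
        echo[TARGET_DELAY + j] += doppler * s
        echo[CLUTTER_DELAY + j] += CLUTTER_AMP * s
    pulses.append(echo)

rd = range_doppler_map(pulses, ref)
peak_doppler, peak_range = max(
    ((d, r) for d in range(len(rd)) for r in range(len(rd[0]))),
    key=lambda dr: rd[dr[0]][dr[1]],
)
print(peak_doppler, peak_range)
```

In this synthetic scene the static return cancels in the MTI stage, so the strongest cell of the map is the moving target at Doppler bin 4 and range bin 10. A map of this kind is what the detection model takes as its input image.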

3.2. The UAV Detection Method

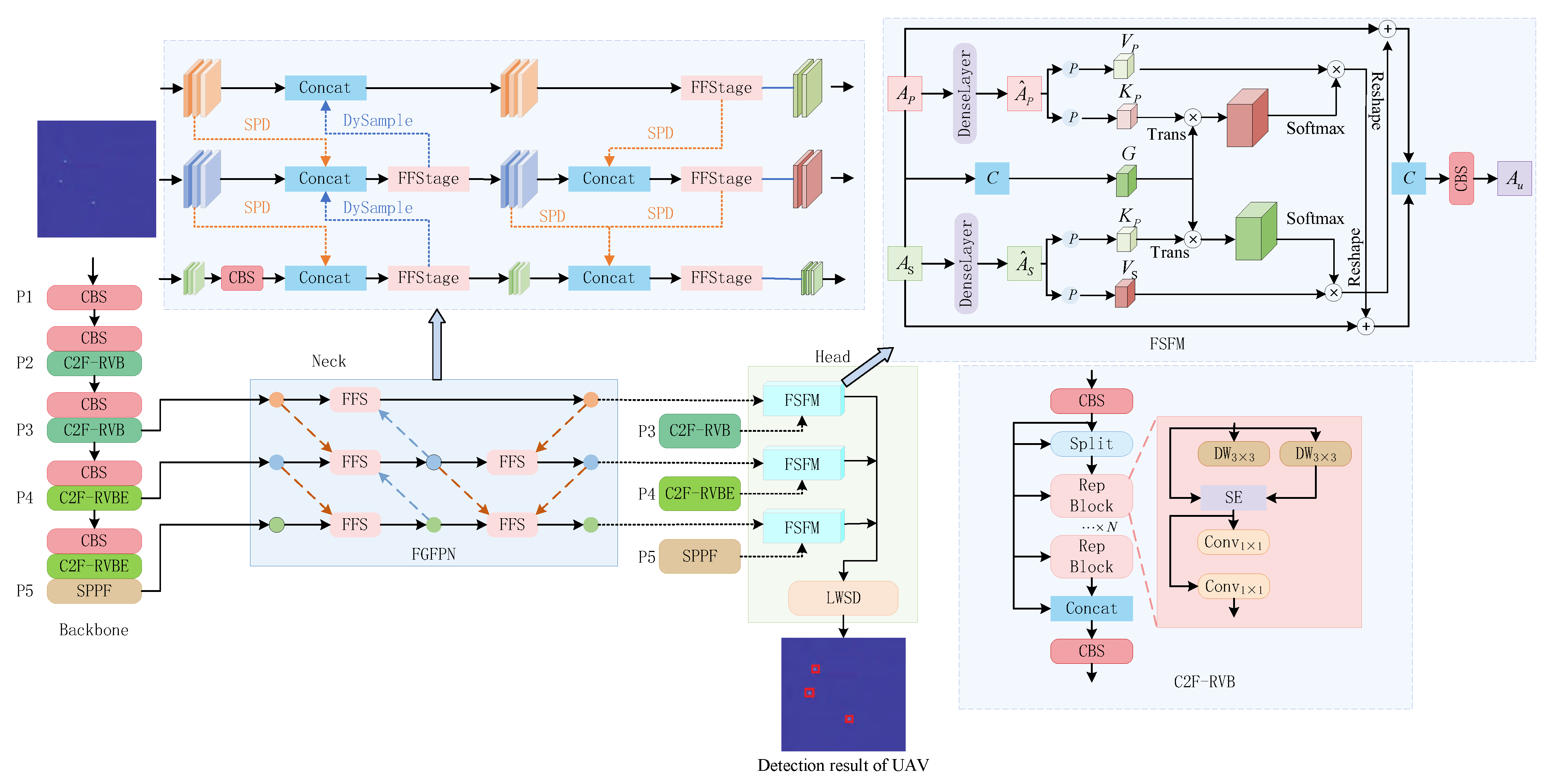

3.2.1. The Design of the YOLOv8-RFL Detection Model

- (1) The radar RD planar graph is fed into the backbone network, where the C2f-RVB and C2f-RVBE modules extract the UAV target from the complex background and produce feature maps containing multi-scale UAV feature information. The FGFPN neck network then aggregates feature maps of different resolutions from the backbone network, improving the efficiency and accuracy of feature fusion.

- (2) The FSFM integrates the shallow features from the backbone network with the deep features from the neck network to generate high-quality fused feature maps with rich details and deep semantic information. The LWSD detection head then performs more accurate classification on the fused feature maps and outputs the UAV detection results.
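
The idea of fusing shallow and deep features through cross-attention can be sketched generically as follows. This is only an illustration of scaled dot-product cross-attention, not the FSFM itself: the internals of the module are not given here, so the choice of shallow features as queries and deep features as keys and values, the absence of learned projections, the residual addition, and the toy token values are all assumptions.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, keys, values):
    """Scaled dot-product attention: each query attends over all keys."""
    d = len(queries[0])
    weights = [softmax([sum(q[i] * k[i] for i in range(d)) / math.sqrt(d)
                        for k in keys])
               for q in queries]
    attended = [[sum(w[j] * values[j][c] for j in range(len(values)))
                 for c in range(len(values[0]))]
                for w in weights]
    return attended, weights

def fuse(shallow, deep):
    """Residual fusion (assumed): shallow tokens query the deep tokens."""
    attended, weights = cross_attention(shallow, deep, deep)
    fused = [[s + a for s, a in zip(s_row, a_row)]
             for s_row, a_row in zip(shallow, attended)]
    return fused, weights

# Hypothetical feature tokens: 2 shallow tokens and 3 deep tokens of width 2.
shallow_tokens = [[1.0, 0.0], [0.0, 1.0]]
deep_tokens = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
fused, weights = fuse(shallow_tokens, deep_tokens)
```

Each shallow token receives a weighted mix of the deep tokens, with the largest weight on the deep token it is most similar to, so the fused map keeps the shallow detail while borrowing semantic context.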

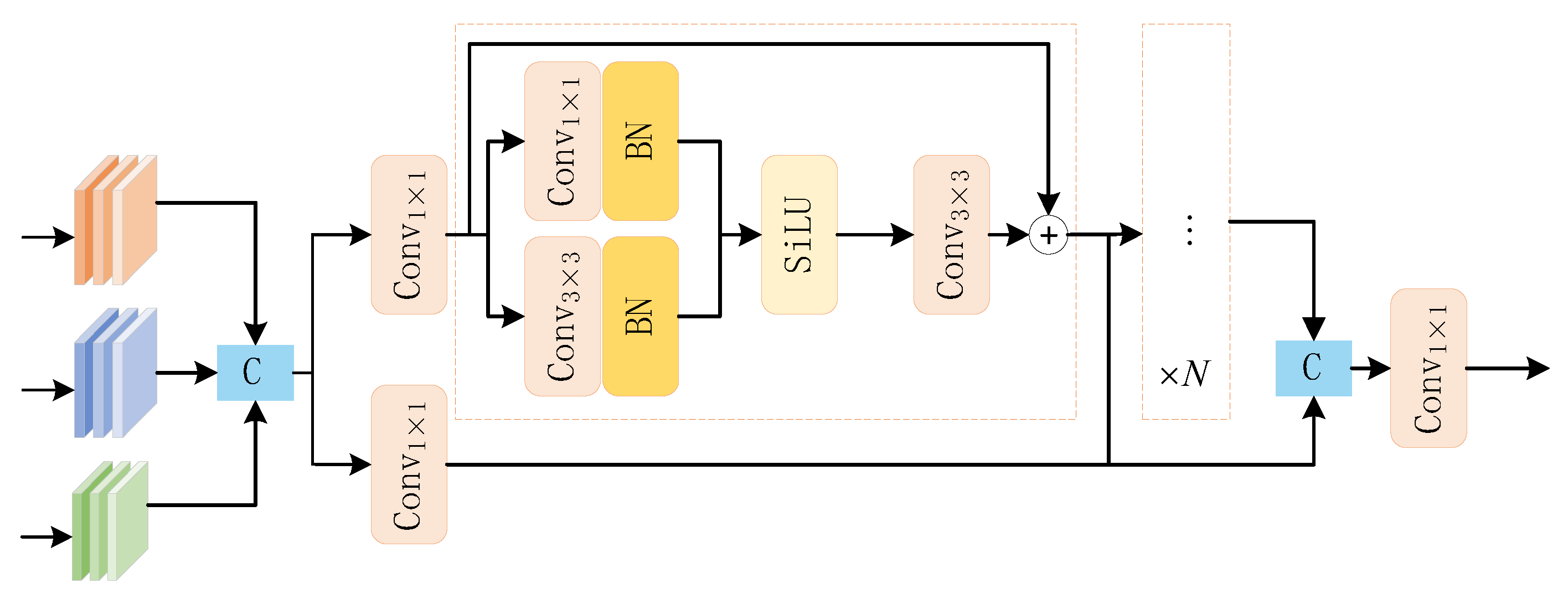

3.2.2. The Improved C2f Feature Extraction Module

3.2.3. The Generalized Feature Pyramid Network Based on Feature Focusing

3.2.4. The Feature Semantic Fusion Module Based on Cross-Attention Mechanism

3.2.5. The Lightweight Detection Head Based on Weight Sharing

4. Experiment and Analysis

4.1. Network Training and Radar Parameters

4.2. Evaluation Indicators

4.3. Experiment Comparative Analysis

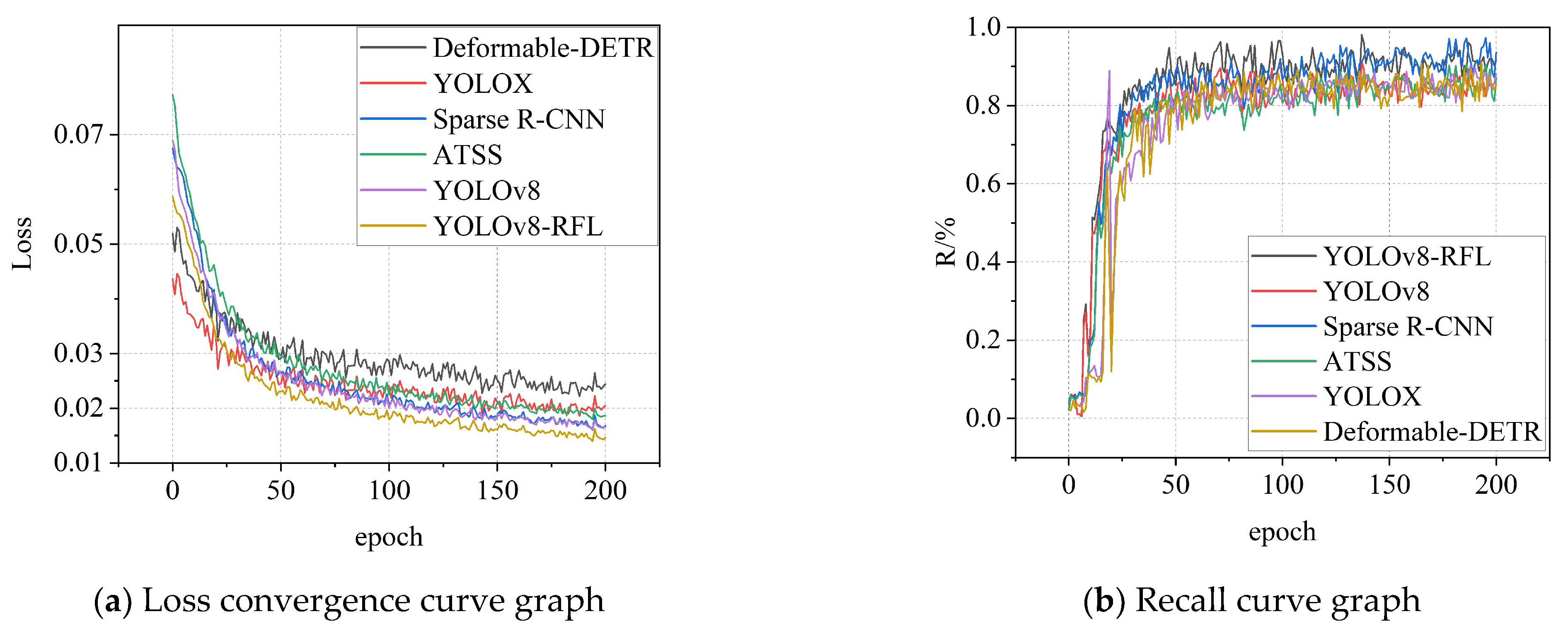

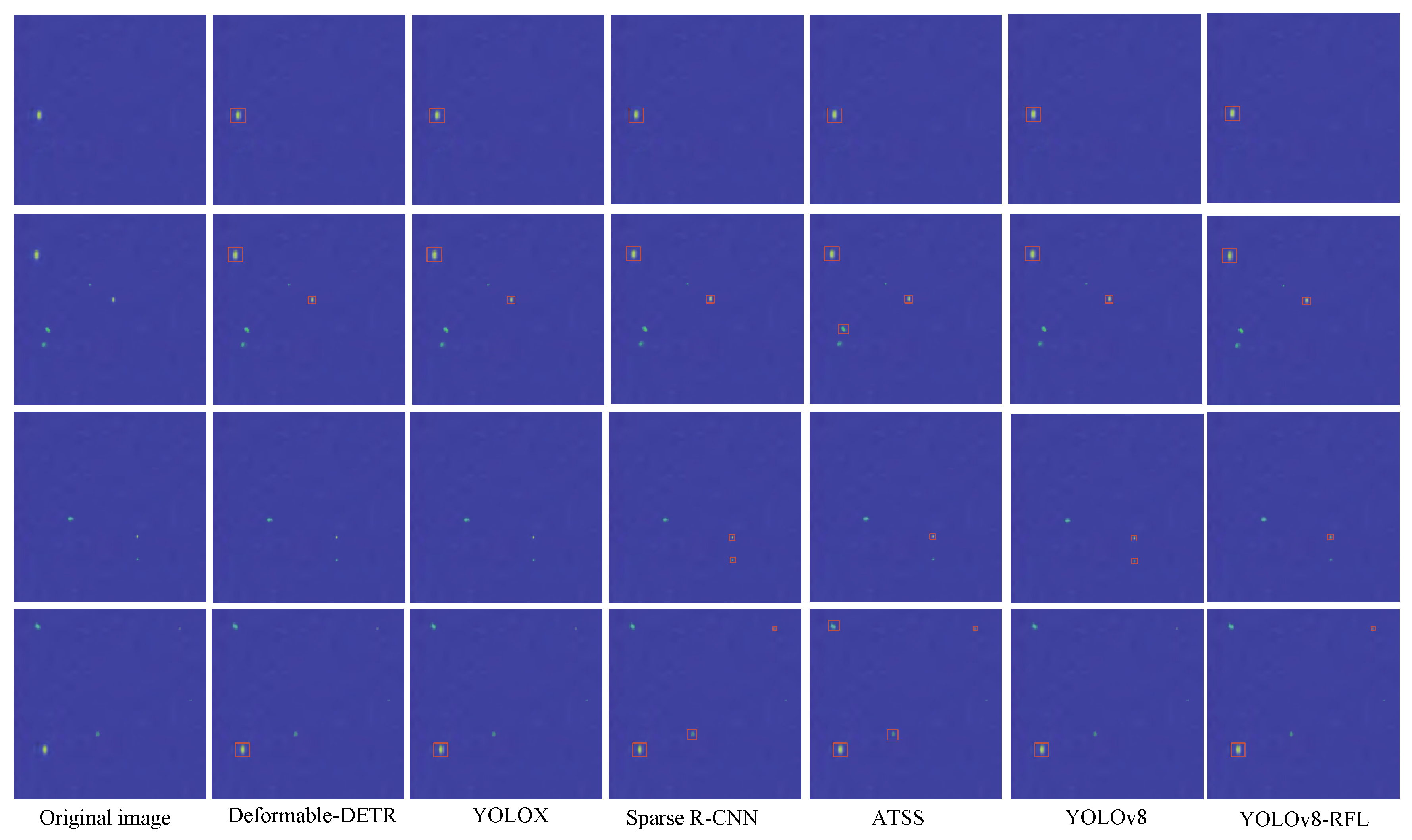

4.3.1. Comparative Experiments of Different Models

4.3.2. The Proposed Method Compared with CFAR

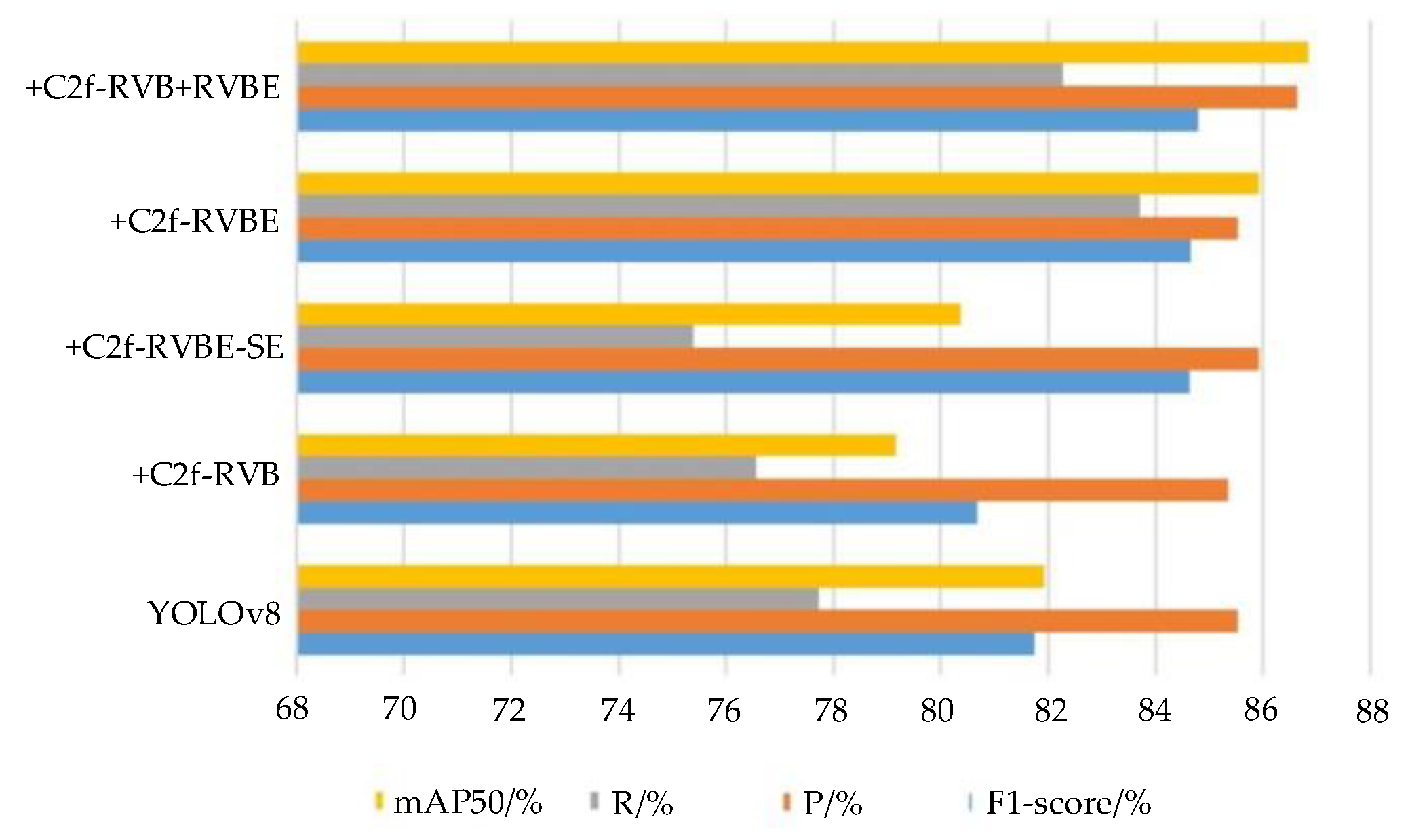

4.3.3. Comparative Experiments with the C2f Module

4.3.4. Ablation Tests

5. Conclusions

- (1) When radar is used to detect unmanned aerial vehicle (UAV) targets, the echo signal formed by the UAV is uncertain, so the signal output by the radar sensor exhibits obvious aliasing, and it is difficult to analyze the UAV target information quickly and effectively. The proposed YOLOv8n-RFL detection model uses the mathematical model of the radar echo signal to form the RD planar graph for identification. Detection on the RD planar graph showed that the combination of the C2f-RVB and C2f-RVBE modules in YOLOv8n-RFL effectively mitigated the impact of complex background information on the extraction of UAV echo information, enabling the backbone network to capture richer UAV information and significantly enhancing the model’s ability to extract UAV features.

- (2) The proposed FGFPN neck network adopts the idea of “feature focusing-diffusion”, which strengthens the fusion and interaction of features of different scales across the levels of the neck network and improves the representation of UAV features. In addition, the FSFM, based on the cross-attention mechanism, fuses shallow features rich in detail with deep features rich in semantic information, so that key UAV features are not overlooked and the detection and recognition rate of UAVs improves.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Parameter Name | Parameter Setting |
|---|---|
| Operating system | Windows |
| CPU | 12th Gen Intel(R) Core(TM) i7-12700F |
| GPU | NVIDIA GeForce RTX 3060 Ti |
| Python | 3.9.13 |
| PyTorch | 1.13.1 |
| CUDA | 12.4 |
| Training cycle | 200 |
| Initial learning rate | 0.001 |
| Optimizer | Adam |
| Batch size | 16 |
| Sample normalization | 640 × 640 |

| Parameter Name | Parameter Value |
|---|---|
| Working frequency | 35.64 GHz |
| Transmission power | 10 W (5% duty cycle) |
| Pulse width | 0.1–20 μs |
| Pulse repetition frequency | 32 kHz |
| Fast sampling frequency | 500 MHz |
| Doppler closure ratio | 0.0025 |
| Accumulated frame count | 1024 |
| Number of sampling points | 1000 |
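
As a rough guide to what these radar parameters imply for the RD map, the short calculation below derives a few standard quantities from the tabulated values. It is a sketch under stated assumptions: the "accumulated frame count" is taken to be the number of pulses in one coherent processing interval, and the range figure is the fast-time sample spacing, not the range resolution, which depends on the signal bandwidth and is not listed in the table.

```python
C = 299_792_458.0          # speed of light, m/s

carrier_hz = 35.64e9       # working frequency (from the table)
prf_hz = 32e3              # pulse repetition frequency (from the table)
fs_hz = 500e6              # fast sampling frequency (from the table)
n_pulses = 1024            # accumulated frame count, ASSUMED to be pulses per interval

wavelength_m = C / carrier_hz
range_sample_spacing_m = C / (2 * fs_hz)        # range extent of one fast-time sample
unambiguous_range_m = C / (2 * prf_hz)          # farthest range before pulses overlap
doppler_bin_hz = prf_hz / n_pulses              # width of one Doppler bin
velocity_bin_mps = wavelength_m / 2 * doppler_bin_hz
max_unambiguous_velocity_mps = wavelength_m * prf_hz / 4   # +/- limit

print(f"wavelength            : {wavelength_m * 1e3:.2f} mm")
print(f"range sample spacing  : {range_sample_spacing_m:.3f} m")
print(f"unambiguous range     : {unambiguous_range_m:.0f} m")
print(f"Doppler bin width     : {doppler_bin_hz:.2f} Hz")
print(f"velocity bin width    : {velocity_bin_mps:.3f} m/s")
print(f"max unambiguous speed : +/-{max_unambiguous_velocity_mps:.1f} m/s")
```

Under these assumptions each Doppler bin is 31.25 Hz wide, which at a wavelength of about 8.4 mm corresponds to roughly 0.13 m/s per bin, fine enough to separate slow UAV motion from static background.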

| Algorithm | P/% | R/% | mAP50/% | F1-Score/% | FPS |
|---|---|---|---|---|---|
| Deformable-DETR | 81.49 | 71.27 | 76.52 | 75.91 | 42.62 |
| YOLOX | 85.83 | 77.56 | 81.17 | 81.54 | 57.38 |
| Sparse R-CNN | 81.31 | 75.39 | 80.38 | 78.26 | 42.65 |
| ATSS | 81.28 | 78.31 | 81.28 | 79.75 | 47.65 |
| YOLOv8 | 85.53 | 77.72 | 81.92 | 81.74 | 58.24 |
| YOLOv8-RFL | 87.65 | 84.27 | 87.14 | 86.48 | 62.28 |
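
For readers unfamiliar with the precision (P), recall (R), and F1-score columns, the sketch below shows the usual definitions in terms of detection counts. The counts in the example are hypothetical and are not taken from the experiments; how the tabulated values were averaged is not stated here, so they need not be reproducible from this formula alone.

```python
def precision_recall_f1(tp, fp, fn):
    """Standard detection metrics from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0.0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)   # harmonic mean of P and R
    return precision, recall, f1

# Hypothetical counts: 90 correct detections, 10 false alarms, 30 missed targets.
p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
print(f"P = {p:.2%}, R = {r:.2%}, F1 = {f1:.2%}")
```

Precision penalizes false alarms and recall penalizes missed targets, so a detector that raises recall without losing precision, as in the last row of the table, improves F1 as well.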

| Parameter Name | Parameter Value |
|---|---|
| Number of protection units | 8 |
| Reference unit number | 16 |
| Number of sorting orders | 18 |
| Threshold factor | 25 |
| Doppler threshold | 8 |
| Distance threshold | 3 |
| Match speed threshold | 7 |
| Match distance threshold | 7 |
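
To make the role of the first four CFAR parameters concrete, the following is a minimal one-dimensional ordered-statistic CFAR sketch. Several points are assumptions rather than facts from the table: that the detector is of the ordered-statistic type (suggested by the "sorting order" parameter), that the protection and reference counts apply per side of the cell under test, and that the threshold factor multiplies the selected reference power. The Doppler, distance, and matching thresholds are not modeled, and the input profile is synthetic.

```python
import math

def os_cfar(power, guard=8, ref=16, order=18, factor=25.0):
    """1-D ordered-statistic CFAR over a list of non-negative power samples.

    guard and ref are counted per side of the cell under test (assumption),
    so each threshold is estimated from 2 * ref reference cells.
    """
    half = guard + ref
    detections = []
    for i in range(half, len(power) - half):
        leading = power[i - half:i - guard]            # ref cells before the guard band
        trailing = power[i + guard + 1:i + half + 1]   # ref cells after the guard band
        ranked = sorted(leading + trailing)
        threshold = factor * ranked[order - 1]         # order-th smallest reference cell
        if power[i] > threshold:
            detections.append(i)
    return detections

# Deterministic synthetic profile: slowly varying background plus one strong cell.
profile = [1.0 + 0.5 * math.sin(i) for i in range(200)]
profile[100] = 1000.0
detections = os_cfar(profile)
print(detections)   # [100]
```

Because the threshold is taken from a ranked reference cell and not from the mean, a single strong return inside the reference window barely shifts it, which is the usual motivation for ordered-statistic CFAR in multi-target scenes.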

| C2f-RVB | C2f-RVBE | FFStage | FSFM | FGFPN | P/% | R/% | mAP/% | FPS |
|---|---|---|---|---|---|---|---|---|
|  |  |  |  |  | 85.53 | 77.72 | 81.92 | 58.24 |
| √ |  |  |  |  | 85.34 | 80.12 | 79.17 | 57.38 |
|  | √ |  |  |  | 85.65 | 76.56 | 83.23 | 57.54 |
|  |  | √ |  |  | 86.30 | 81.45 | 84.76 | 58.57 |
|  |  |  | √ |  | 85.75 | 79.81 | 83.54 | 58.19 |
|  |  |  |  | √ | 86.00 | 80.50 | 84.32 | 58.31 |
| √ | √ | √ | √ | √ | 87.65 | 84.27 | 87.14 | 62.28 |
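
The overall effect of the ablation can be read off by comparing the first row (no added modules) with the last row (all modules enabled). The short calculation below does this arithmetic using only the values in those two rows; the variable names are illustrative.

```python
# Values copied from the first (baseline) and last (all modules) ablation rows.
baseline = {"P": 85.53, "R": 77.72, "mAP": 81.92, "FPS": 58.24}
full_model = {"P": 87.65, "R": 84.27, "mAP": 87.14, "FPS": 62.28}

# Absolute gains: percentage points for P, R and mAP; frames per second for FPS.
gains = {name: round(full_model[name] - baseline[name], 2) for name in baseline}
print(gains)   # {'P': 2.12, 'R': 6.55, 'mAP': 5.22, 'FPS': 4.04}
```

The largest gain is in recall (6.55 percentage points), followed by mAP (5.22 points), with precision and frame rate improving by smaller margins.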

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shi, Z.; Lei, Z. Small UAV Target Detection Algorithm Using the YOLOv8n-RFL Based on Radar Detection Technology. Sensors 2025, 25, 5140. https://doi.org/10.3390/s25165140
