The State of the Art and Potentialities of UAV-Based 3D Measurement Solutions in the Monitoring and Fault Diagnosis of Quasi-Brittle Structures

Abstract

1. Introduction

2. Non-Contact Monitoring Techniques

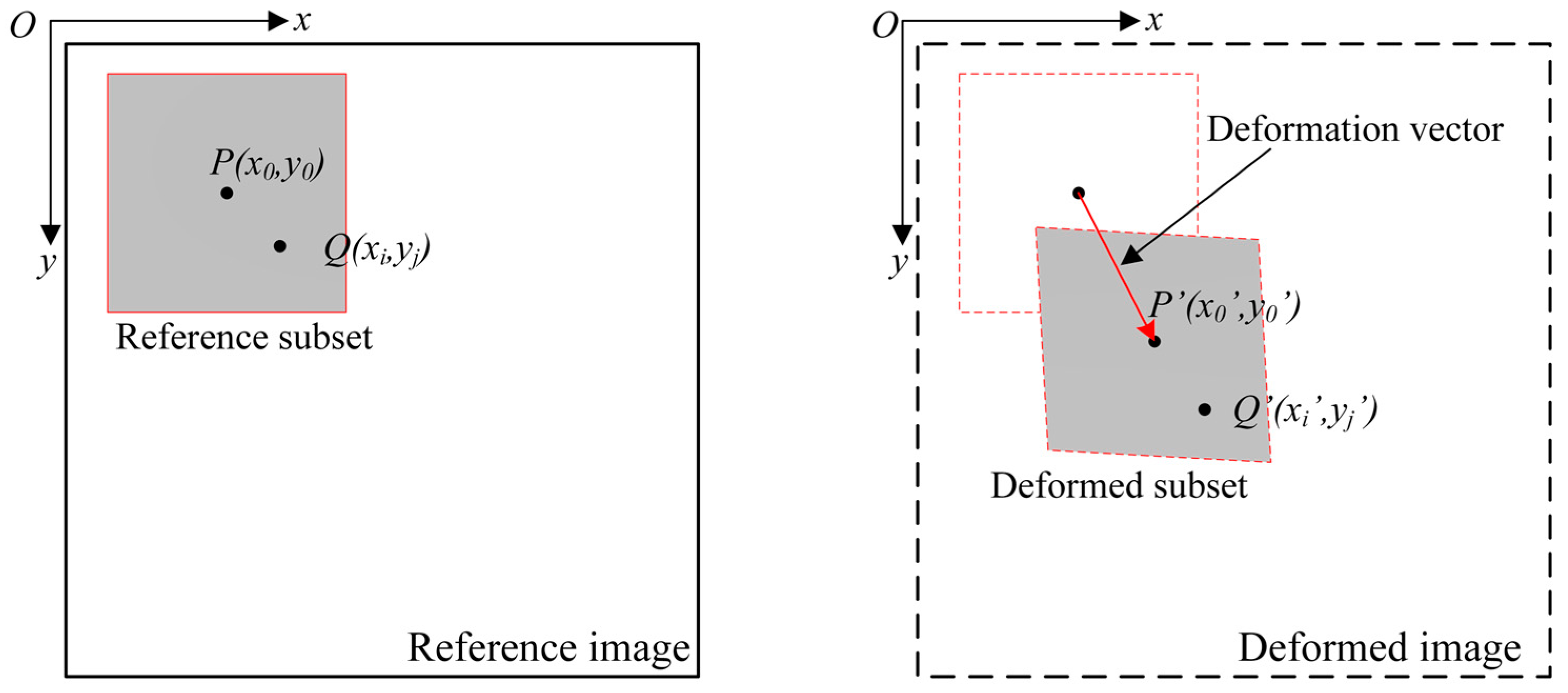

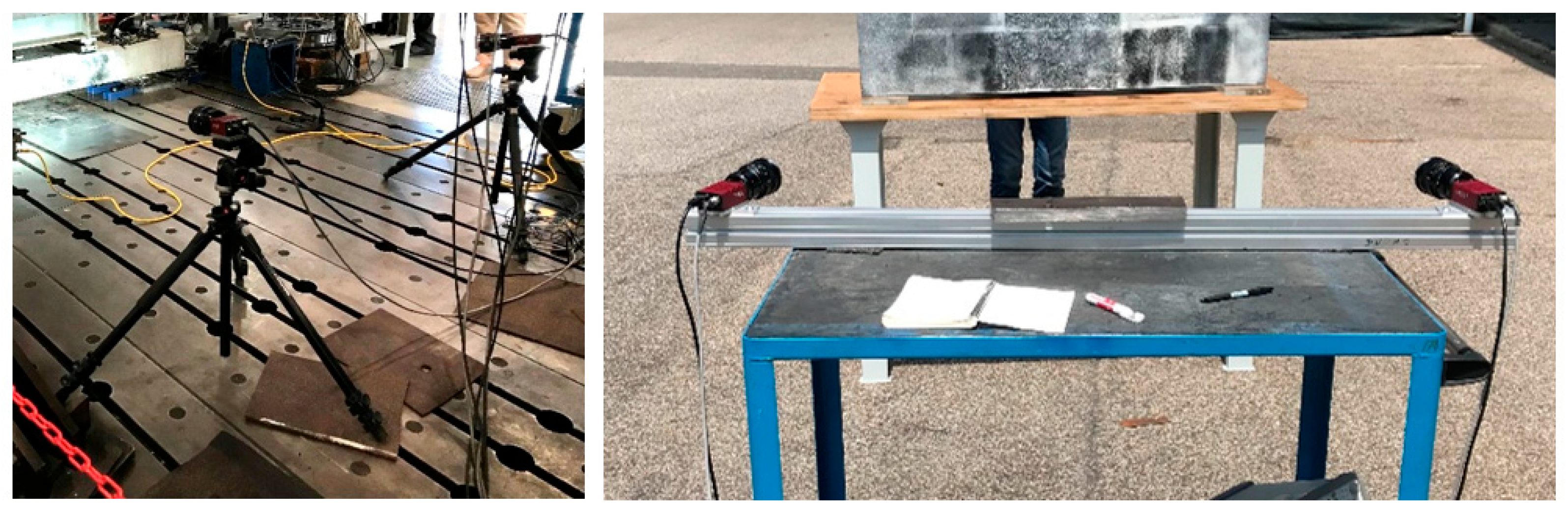

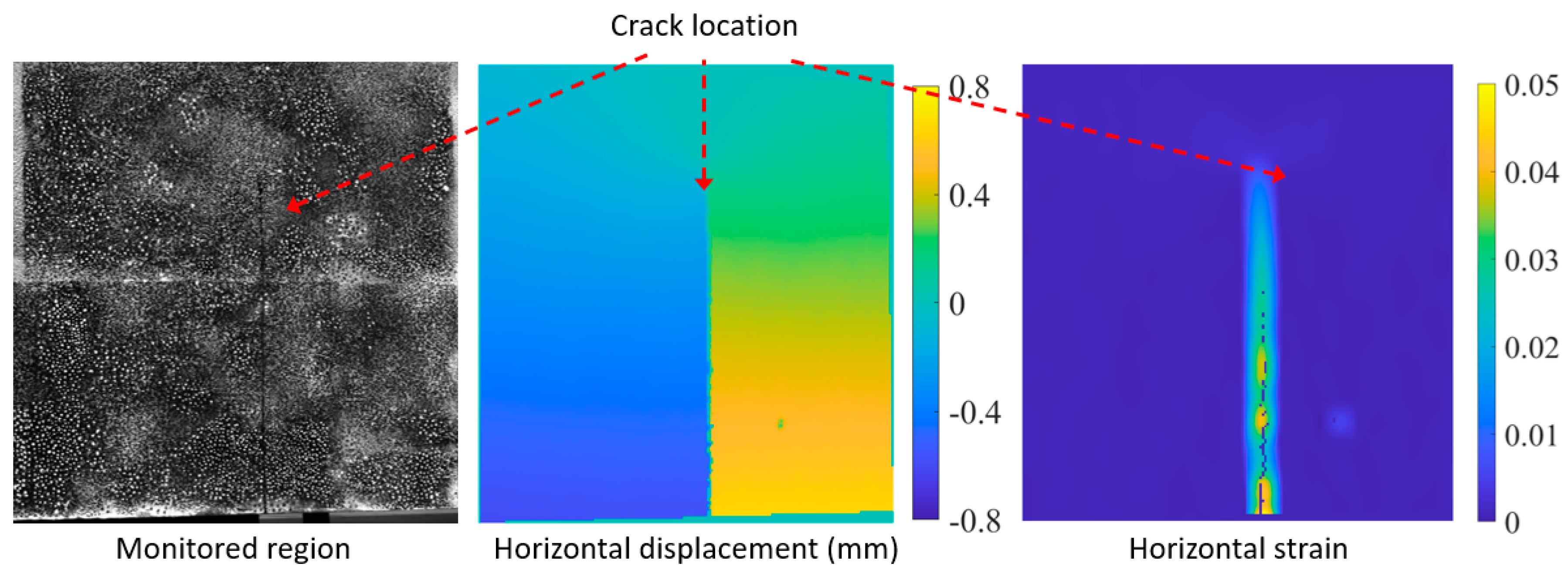

2.1. Digital Image Correlation (DIC)

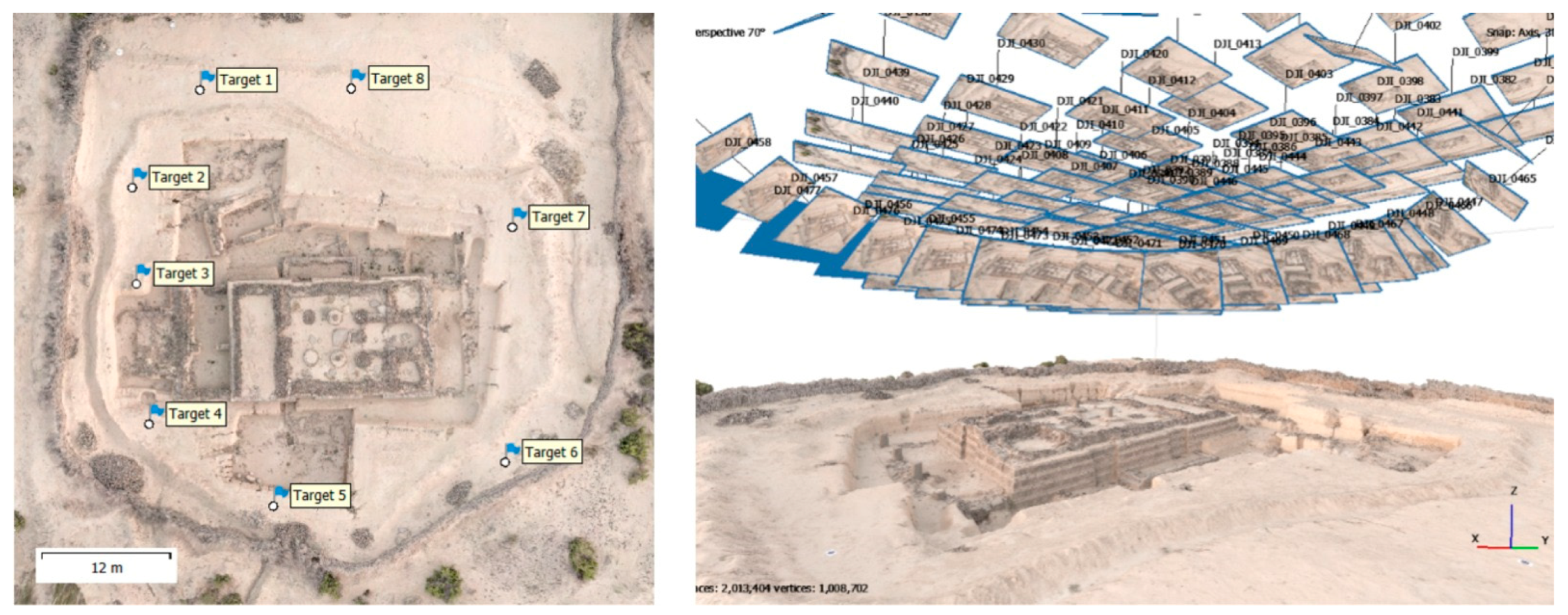

2.2. Photogrammetry

- The identification of a set of features present in each image, typically performed with the scale-invariant feature transform (SIFT) algorithm [72].

- Robust feature identification and tracking, normally obtained with the random sample consensus (RANSAC) algorithm [73], which iteratively computes the rigid motion (rotation and translation) of one camera with respect to another, taking corresponding keypoints in the images as a starting point.

- Camera alignment and automatic calibration, obtained by finding correspondences between the features in several images [74].

- Three-dimensional reconstruction, obtained by means of stereo triangulation between pairs of images sharing a set of features [75].
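As a rough illustration of the last step in the list above, the sketch below triangulates one 3D point from a pair of matched image features using the midpoint method. It is a simplified stand-in, not the procedure of [75] or of any specific photogrammetry package. Both cameras are assumed to be ideal pinhole cameras with identical intrinsics and axes aligned with the world frame. The function names `pixel_to_ray` and `triangulate_midpoint`, the focal length, the principal point and the 1 m baseline are hypothetical choices made for this example.

```python
import math


def pixel_to_ray(u, v, focal_px, cx, cy):
    """Back-project pixel (u, v) into a viewing-ray direction.

    Assumption: ideal pinhole camera (no lens distortion) whose axes are
    aligned with the world frame; focal_px, cx and cy are in pixels.
    """
    return (u - cx, v - cy, focal_px)


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def triangulate_midpoint(c1, d1, c2, d2):
    """Triangulate from two rays c1 + s*d1 and c2 + t*d2.

    Returns (point, gap): point is the midpoint of the shortest segment
    joining the two rays, gap is the length of that segment (zero when
    the rays intersect exactly).
    """
    w = tuple(p - q for p, q in zip(c1, c2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b  # equals |d1|^2 |d2|^2 sin^2(angle between rays)
    if abs(denom) <= 1e-12 * a * c:
        raise ValueError("rays are (nearly) parallel; depth is not recoverable")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = tuple(p + s * q for p, q in zip(c1, d1))
    p2 = tuple(p + t * q for p, q in zip(c2, d2))
    point = tuple((x + y) / 2.0 for x, y in zip(p1, p2))
    return point, math.dist(p1, p2)


# Hypothetical setup: two cameras 1 m apart along x, focal length 1000 px,
# principal point at (500, 500). The same feature is observed at (625, 550)
# in the first image and at (375, 550) in the second.
ray1 = pixel_to_ray(625, 550, 1000, 500, 500)
ray2 = pixel_to_ray(375, 550, 1000, 500, 500)
point, gap = triangulate_midpoint((0.0, 0.0, 0.0), ray1, (1.0, 0.0, 0.0), ray2)
```

With these made-up observations the two rays meet at a point close to (0.5, 0.2, 4.0) m, and `gap` is close to zero. With real matches the rays rarely intersect exactly, so `gap` can serve as a simple indicator of how consistent a feature pair is with the estimated camera poses.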

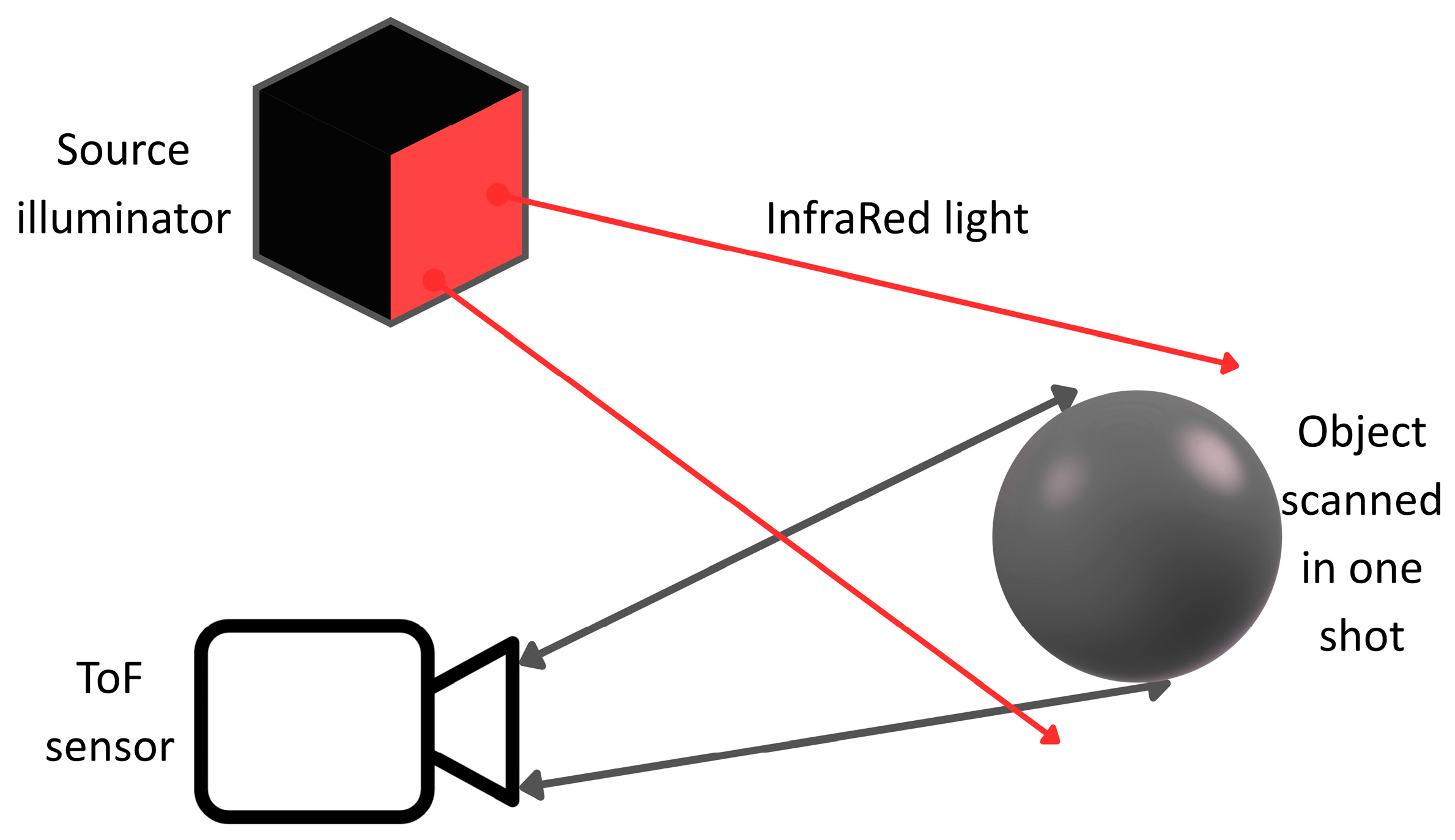

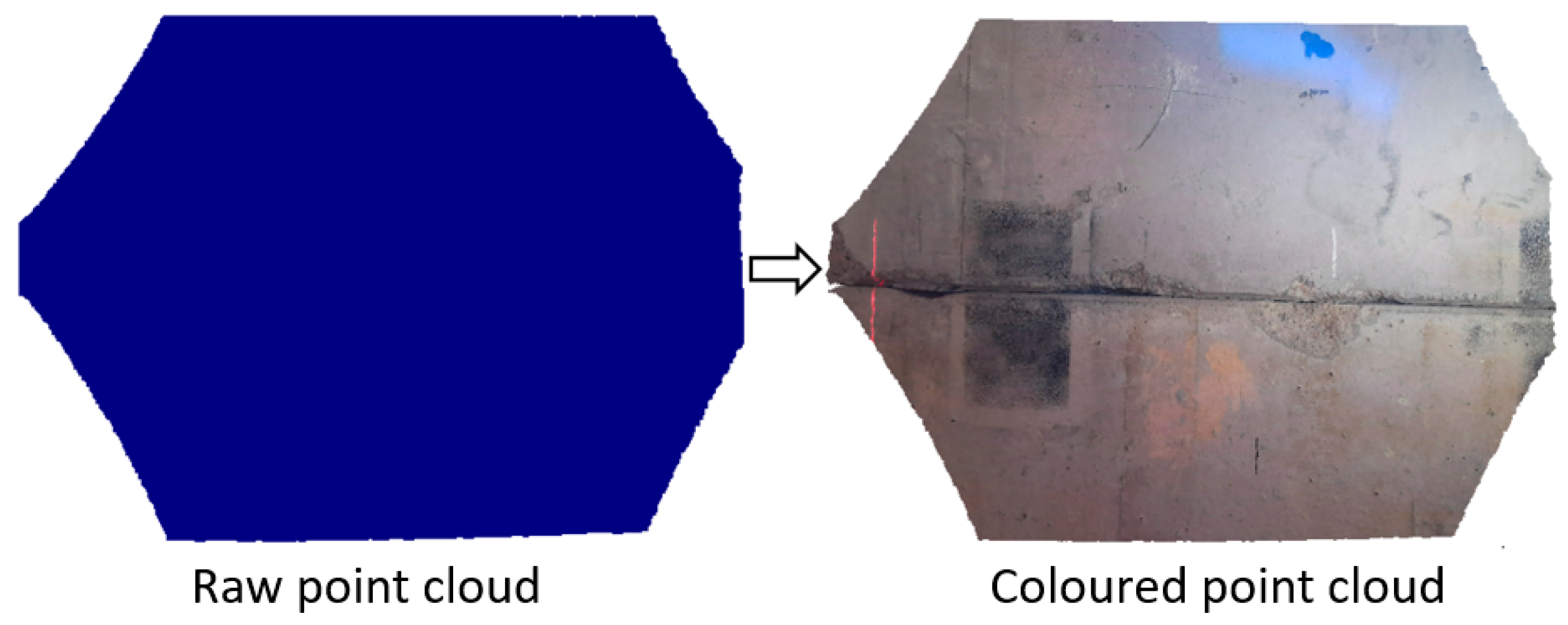

2.3. ToF and Laser Scanners

3. Lighting Issues

4. Learning Tools

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Farrar, C.R.; Dervilis, N.; Worden, K. The past, present and future of structural health monitoring: An overview of three ages. Strain 2025, 61, e12495.

- Prakash, G.; Dugalam, R.; Barbosh, M.; Sadhu, A. Recent advancement of concrete dam health monitoring technology: A systematic literature review. Structures 2022, 44, 766–784.

- Bolzon, G.; Frigerio, A.; Hajjar, M.; Nogara, C.; Zappa, E. Structural health assessment of existing dams based on non-destructive testing, physics-based models and machine learning tools. NDTE Int. 2025, 150, 103271.

- Figueiredo, E.; Brownjohn, J. Three decades of statistical pattern recognition paradigm for SHM of bridges. Struct. Health Monit. 2022, 21, 3018–3054.

- Zhang, J.; Cenci, J.; Becue, V.; Koutra, S. The overview of the conservation and renewal of the industrial Belgian heritage as a vector for cultural regeneration. Information 2021, 12, 27.

- Soleymani, A.; Jahangir, H.; Nehdi, M.L. Damage detection and monitoring in heritage masonry structures: Systematic review. Constr. Build. Mater. 2023, 397, 132402.

- Ubertini, F.; Comanducci, G.; Cavalagli, N. Vibration-based structural health monitoring of a historic bell-tower using output-only measurements and multivariate statistical analysis. Struct. Health Monit. 2016, 15, 438–457.

- Chisari, C.; Zizi, M.; De Matteis, G. Dynamic model identification of the medieval bell tower of Casertavecchia (Italy). Eng. Fail. Anal. 2025, 167, 109055.

- Masciotta, M.G.; Pellegrini, D. Tracking the variation of complex mode shapes for damage quantification and localization in structural systems. Mech. Syst. Signal Process. 2022, 169, 108731.

- Azmi, M.; Paultre, P. Three-dimensional analysis of concrete dams including contraction joint non-linearity. Eng. Struct. 2002, 24, 757–771.

- Mata, J.; Miranda, F.; Lorvão Antunes, A.; Romão, X.; Santos, J. Characterization of relative movements between blocks observed in a concrete dam and definition of thresholds for novelty identification based on machine learning models. Water 2023, 15, 297.

- Verstrynge, E.; De Wilder, K.; Drougkas, A.; Voet, E.; Van Balen, K.; Wevers, M. Crack monitoring in historical masonry with distributed strain and acoustic emission sensing techniques. Constr. Build. Mater. 2018, 162, 898–907.

- Bamonte, P.; Cardani, G.; Condoleo, P.; Taliercio, A. Crack patterns in double-wall industrial masonry chimneys: Possible causes and numerical modelling. J. Cult. Herit. 2021, 47, 133–142.

- Giuseppetti, G.; Donghi, G.; Marcello, A. Experimental investigations and numerical modeling for the analysis of AAR process related to Poglia dam: Evolutive scenarios and design solutions. In Dam Maintenance and Rehabilitation; Taylor & Francis: London, UK, 2003; pp. 183–191.

- Human, O.; Oosthuizen, C. Notes on the behaviour of a 65 year old concrete arch dam affected by AAR (mainly based on visual observations). In Proceedings of the 84th Annual ICOLD Meeting, Johannesburg, South Africa, 15–20 May 2016.

- Campos, A.; López, C.M.; Blanco, A.; Aguado, A. Structural diagnosis of a concrete dam with cracking and high nonrecoverable displacements. J. Perform. Constr. Facil. 2016, 30, 04016021.

- Sangirardi, M.; Altomare, V.; De Santis, S.; de Felice, G. Detecting damage evolution of masonry structures through computer-vision-based monitoring methods. Buildings 2022, 12, 831.

- Germanese, D.; Leone, G.R.; Moroni, D.; Pascali, M.A.; Tampucci, M. Long-term monitoring of crack patterns in historic structures using UAVs and planar markers: A preliminary study. J. Imaging 2018, 4, 99.

- Reagan, D.; Sabato, A.; Niezrecki, C. Feasibility of using digital image correlation for unmanned aerial vehicle structural health monitoring of bridges. Struct. Health Monit. 2018, 17, 1056–1072.

- Liu, B.; Collier, J.; Sarhosis, V. Digital image correlation based crack monitoring on masonry arch bridges. Eng. Fail. Anal. 2025, 169, 109185.

- Belloni, V.; Sjölander, A.; Ravanelli, R.; Crespi, M.; Nascetti, A. Crack Monitoring from Motion (CMfM): Crack detection and measurement using cameras with non-fixed positions. Autom. Constr. 2023, 156, 105072.

- Hatir, M.E.; Ince, I.; Korkanç, M. Intelligent detection of deterioration in cultural stone heritage. J. Build. Eng. 2021, 44, 102690.

- Yu, R.; Li, P.; Shan, J.; Zhu, H. Structural state estimation of earthquake-damaged building structures by using UAV photogrammetry and point cloud segmentation. Measurement 2022, 202, 111858.

- Qing, Y.; Ming, D.; Wen, Q.; Weng, Q.; Xu, L.; Chen, Y.; Zhang, Y.; Zeng, B. Operational earthquake-induced building damage assessment using CNN-based direct remote sensing change detection on superpixel level. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102899.

- Sengupta, P.; Chakraborty, S. A state-of-the-art review on model reduction and substructuring techniques in finite element model updating for structural health monitoring applications. Arch. Comput. Methods Eng. 2025, 32, 3031–3062.

- Li, X.; Zhang, F.-L.; Xiang, W.; Liu, W.-X.; Fu, S.-J. Structural health monitoring system based on digital twins and real-time data-driven methods. Structures 2024, 70, 107739.

- Parente, L.; Falvo, E.; Castagnetti, C.; Grassi, F.; Mancini, F.; Rossi, P.; Capra, A. Image-based monitoring of cracks: Effectiveness analysis of an open-source machine learning-assisted procedure. J. Imaging 2022, 8, 22.

- Malekloo, A.; Ozer, E.; Alhamaydeh, M.; Girolami, M. Machine learning and structural health monitoring overview with emerging technology and high-dimensional data source highlights. Struct. Health Monit. 2022, 21, 1906–1955.

- Flah, M.; Nunez, I.; Ben Chaabene, W.; Nehdi, M.L. Machine learning algorithms in civil structural health monitoring: A systematic review. Arch. Comput. Methods Eng. 2021, 28, 2621–2643.

- Hariri-Ardebili, A.; Mahdavi, G.; Nuss, L.K.; Lall, U. The role of artificial intelligence and digital technologies in dam engineering: Narrative review and outlook. Eng. Appl. Artif. Intell. 2023, 126, 106813.

- Mishra, M. Machine learning techniques for structural health monitoring of heritage buildings: A state-of-the-art review and case studies. J. Cult. Herit. 2021, 47, 227–245.

- Reis, H.C.; Turk, V.; Ustuner, M.; Yildiz, C.M.K.; Tatli, R. Post-seismic structural assessment: Advanced crack detection through complex feature extraction using pre-trained deep learning and machine learning integration. Earth Sci. Inform. 2025, 18, 133.

- Sutton, M.; Orteu, J.; Schreier, H. Image Correlation for Shape, Motion and Deformation Measurements: Basic Concepts, Theory and Applications; Springer Science & Business Media: New York, NY, USA, 2009.

- Pan, B.; Qian, K.; Xie, H.; Asundi, A. Two-dimensional digital image correlation for in-plane displacement and strain measurement: A review. Meas. Sci. Tech. 2009, 20, 062001.

- Hanke, K.; Grussenmeyer, P. Architectural photogrammetry: Basic theory, procedures, tools. ISPRS Comm. V Symp. 2002, 5, 300–339.

- Hansard, M.; Lee, S.; Choi, O.; Horaud, R. Time-of-Flight Cameras: Principles, Methods and Applications; Springer Science & Business Media: New York, NY, USA, 2012; Available online: https://link.springer.com/book/10.1007/978-1-4471-4658-2 (accessed on 22 January 2025).

- Morgenthal, G.; Hallermann, N. Quality assessment of unmanned aerial vehicle (UAV) based visual inspection of structures. Adv. Struct. Eng. 2014, 17, 289–302.

- Hu, X.; Assaad, R. The use of unmanned ground vehicles (mobile robots) and unmanned aerial vehicles (drones) in the civil infrastructure asset management sector: Applications, robotic platforms, sensors, and algorithms. Expert. Syst. Appl. 2023, 232, 120897.

- Kapoor, M.; Katsanos, E.; Nalpantidis, L.; Winkler, J.; Thöns, S. Structural Health Monitoring and Management with Unmanned Aerial Vehicles: Review and Potentials; BYG-R-454; Technical University of Denmark: Lyngby, Denmark, 2021; Available online: https://www.byg.dtu.dk/forskning/publikationer/byg_rapporter (accessed on 25 July 2025).

- Duque, L.; Seo, J.; Wacker, J. Synthesis of unmanned aerial vehicle applications for infrastructures. J. Perform. Constr. Facil. 2018, 32, 04018046.

- Sony, S.; Laventure, S.; Sadhu, A. A literature review of next-generation smart sensing technology in structural health monitoring. Struct. Control Health Monit. 2019, 26, e2321.

- Freeman, M.; Kashani, M.; Vardanega, P. Aerial robotic technologies for civil engineering: Established and emerging practice. J. Unmanned Veh. Syst. 2021, 9, 75–91.

- Peters, W.; Ranson, W. Digital imaging techniques in experimental stress analysis. Opt. Eng. 1982, 21, 427–431.

- Pan, B. Digital image correlation for surface deformation measurement: Historical developments, recent advances and future goals. Meas. Sci. Tech. 2018, 29, 082001.

- Hoult, N.; Dutton, M.; Hoag, A.; Take, W. Measuring crack movement in reinforced concrete using digital image correlation: Overview and application to shear slip measurements. Proc. IEEE 2016, 104, 1561–1574.

- Fayyad, T.; Lees, J. Experimental investigation of crack propagation and crack branching in lightly reinforced concrete beams using digital image correlation. Eng. Fract. Mech. 2017, 182, 487–505.

- Liu, R.; Zappa, E.; Collina, A. Vision-based measurement of crack generation and evolution during static testing of concrete sleepers. Eng. Fract. Mech. 2020, 224, 106715.

- Zhang, F.; Zarate Garnica, G.; Yang, Y.; Lantsoght, E.; Sliedrecht, H. Monitoring shear behavior of prestressed concrete bridge girders using acoustic emission and digital image correlation. Sensors 2020, 20, 5622.

- Gencturk, B.; Hossain, K.; Kapadia, A.; Labib, E.; Mo, Y. Use of digital image correlation technique in full-scale testing of prestressed concrete structures. Measurement 2014, 47, 505–515.

- De Wilder, K.; Lava, P.; Debruyne, D.; Wang, Y.; De Roeck, G.; Vandewalle, L. Experimental investigation on the shear capacity of prestressed concrete beams using digital image correlation. Eng. Struct. 2015, 82, 82–92.

- Omondi, B.; Aggelis, B.; Sol, H.; Sitters, C. Improved crack monitoring in structural concrete by combined acoustic emission and digital image correlation techniques. Struct. Health Monit. 2016, 15, 359–378.

- Hajjar, M.; Bolzon, G.; Zappa, E. Experimental and numerical analysis of fracture in prestressed concrete. Procedia Struct. Integr. 2023, 47, 354–358.

- Thériault, F.; Noël, M.; Sanchez, L. Simplified approach for quantitative inspections of concrete structures using digital image correlation. Eng. Struct. 2022, 252, 113725.

- Hajjar, M. UAV Monitoring of Dam Joints: A Feasibility Study. Ph.D. Thesis, Politecnico di Milano, Milan, Italy, 2025. Available online: https://www.politesi.polimi.it/handle/10589/233315 (accessed on 1 March 2025).

- Ngeljaratan, L.; Moustafa, M. System identification of large-scale bridges using target-tracking digital image correlation. Front. Built Environ. 2019, 5, 85.

- Ngeljaratan, L.; Moustafa, M. Structural health monitoring and seismic response assessment of bridge structures using target-tracking digital image correlation. Eng. Struct. 2020, 213, 110551.

- Dizaji, M.; Harris, D.; Alipour, M. Integrating visual sensing and structural identification using 3D-digital image correlation and topology optimization to detect and reconstruct the 3D geometry of structural damage. Struct. Health Monit. 2022, 21, 2804–2833.

- Kumar, D.; Chiang, C.; Lin, Y. Experimental vibration analysis of large structures using 3D DIC technique with a novel calibration method. J. Civ. Struct. Health Monit. 2022, 12, 391–409.

- Malesa, M.; Malowany, K.; Pawlicki, J.; Kujawinska, M.; Skrzypczak, P.; Piekarczuk, A.; Tomasz, L.; Zagorski, A. Non-destructive testing of industrial structures with the use of multi-camera Digital Image Correlation method. Eng. Fail. Anal. 2016, 69, 122–134.

- Malowany, K.; Malesa, M.; Kowaluk, T.; Kujawinska, M. Multi-camera digital image correlation method with distributed fields of view. Opt. Lasers Eng. 2017, 98, 198–204.

- Dizaji, M.; Harris, D.; Kassner, B.; Hill, J. Full-field non-destructive image-based diagnostics of a structure using 3D digital image correlation and laser scanner techniques. J. Civ. Struct. Health Monit. 2021, 11, 1415–1428.

- Khadka, A.; Afshar, A.; Zadeh, M.; Baqersad, J. Strain monitoring of wind turbines using a semi-autonomous drone. Wind. Eng. 2022, 46, 296–307.

- Kumarapu, K.; Mesapam, S.; Keesara, V.; Shukla, A.; Manapragada, N.; Javed, B. RCC structural deformation and damage quantification using unmanned aerial vehicle image correlation technique. Appl. Sci. 2022, 12, 6574.

- Kalaitzakis, M.; Kattil, S.; Vitzilaios, N.; Rizos, D.; Sutton, M. Dynamic structural health monitoring using a DIC-enabled drone. In Proceedings of the 2019 International Conference on Unmanned Aircraft Systems, Atlanta, GA, USA, 11–14 June 2019; pp. 321–327.

- Kalaitzakis, M.; Vitzilaios, N.; Rizos, D.; Sutton, M. Drone-based StereoDIC: System development, experimental validation and infrastructure application. Exp. Mech. 2021, 61, 981–996.

- Stuart, C.; Vitzilaios, N.; Rizos, D.; Sutton, M. Railroad Bridge Inspection Using Drone-Based Digital Image Correlation. United States-Department of Transportation-Federal Railroad Administration-Office of Research, Development, and Technology, 2023, RR 23-02. Available online: https://railroads.dot.gov/sites/fra.dot.gov/files/2023-02/Railroad%20Bridge%20Inspection%20Drone-Based.pdf (accessed on 18 February 2025).

- Mousa, M.; Yussof, M.; Udi, U.; Nazri, F.; Kamarudin, M.; Parke, G.; Ghahari, S. Application of digital image correlation in structural health monitoring of bridge infrastructures: A review. Infrastructures 2021, 6, 176.

- Ye, X.; Dong, C.; Liu, T. A review of machine vision-based structural health monitoring: Methodologies and applications. J. Sens. 2016, 1, 7103039-10.

- Feng, D.; Feng, M. Computer vision for SHM of civil infrastructure: From dynamic response measurement to damage detection-A review. Eng. Struct. 2018, 156, 105–117.

- Niezrecki, C.; Baqersad, J.; Sabato, A. Digital image correlation techniques for non-destructive evaluation and structural health monitoring. Handb. Adv. Non-Destr. Eval. 2018, 46, 1545–1590.

- Blikharskyy, Y.; Kopiika, N.; Khmil, R.; Selejdak, J.; Blikharskyy, Z. Review of development and application of digital image correlation method for study of stress-strain state of RC structures. Appl. Sci. 2022, 12, 10157.

- Lowe, D. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; pp. 1150–1157.

- Fischler, M.; Bolles, R. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Schonberger, J.; Frahm, J. Structure-from-motion revisited. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4104–4113.

- Martínez-Carricondo, P.; Agüera-Vega, F.; Carvajal-Ramírez, F. Use of UAV-photogrammetry for quasi-vertical wall surveying. Remote Sens. 2020, 12, 2221.

- Fonstad, M.; Dietrich, J.; Courville, B.; Jensen, J.; Carbonneau, P. Topographic structure from motion: A new development in photogrammetric measurement. Earth Surf. Process Landf. 2013, 38, 421–430.

- Murtiyoso, A.; Grussenmeyer, P.; Koehl, M.; Freville, T. Acquisition and Processing Experiences of Close Range UAV Images for the 3D Modeling of Heritage Buildings. In Digital Heritage Progress in Cultural Heritage: Documentation, Preservation, and Protection; EuroMed: Limassol, Cyprus, 2016; Volume 10058, pp. 420–431.

- Murtiyoso, A.; Koehl, M.; Grussenmeyer, P.; Freville, T. Acquisition and processing protocols for UAV images: 3D modeling of historical buildings using photogrammetry. In Proceedings of the 26th CIPA Int Symposium ISPRS, Ottawa, ON, Canada, 28 August–1 September 2017; Volume 4, pp. 163–170.

- Aicardi, I.; Chiabrando, F.; Grasso, N.; Lingua, A.; Noardo, F.; Spanò, A. UAV photogrammetry with oblique images: First analysis on data acquisition and processing. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 835–842.

- Ulvi, A. Using UAV photogrammetric technique for monitoring, change detection, and analysis of archeological excavation sites. J. Comp. Cult. Herit. 2022, 15, 1–19.

- Fiz, J.; Martín, P.; Cuesta, R.; Subías, E.; Codina, D.; Cartes, A. Examples and results of aerial photogrammetry in archeology with UAV: Geometric documentation, high resolution multispectral analysis, models and 3D printing. Drones 2022, 6, 59.

- Fritsch, D.; Becker, S.; Rothermel, M. Modeling facade structures using point clouds from dense image matching. In Proceedings of the International Conference on Advances in Civil, Structural and Mechanical Engineering, Cape Town, South Africa, 2–4 September 2013; Institute of Research Engineers and Doctors: New York, NY, USA, 2013; pp. 57–64.

- Bori, M.; Hussein, Z. Integration the low cost camera images with the google earth dataset to create a 3D model. Civ. Eng. J. 2022, 6, 446–458.

- Liu, Y.; Nie, X.; Fan, J.; Liu, X. Image-based crack assessment of bridge piers using unmanned aerial vehicles and three-dimensional scene reconstruction. Comput. Aided Civ. Infrastruct. Eng. 2020, 35, 511–529.

- Ioli, F.; Pinto, A.; Pinto, L. UAV photogrammetry for metric evaluation of concrete bridge cracks. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 43, 1025–1032.

- Buffi, G.; Manciola, P.; Grassi, S.; Barberini, M.; Gambi, A. Survey of the Ridracoli dam: UAV-based photogrammetry and traditional topographic techniques in the inspection of vertical structures. Geomat. Nat. Hazards Risk 2017, 8, 1562–1579.

- Ridolfi, E.; Buffi, G.; Venturi, S.; Manciola, P. Accuracy analysis of a dam model from drone surveys. Sensors 2017, 17, 1777.

- Henriques, M.; Roque, D. Unmanned aerial vehicles (UAV) as a support to visual inspections of concrete dams. In Proceedings of the Second International Dam World Conference 2015, Lisbon, Portugal, 21–24 April 2015; National Laboratory for Civil Engineering: Lisbon, Portugal, 2015; pp. 1–12.

- Texas Instruments. Time-of-Flight Camera—An Introduction. Available online: https://www.ti.com/lit/wp/sloa190b/sloa190b.pdf (accessed on 10 May 2025).

- He, Y.; Chen, S. Recent advances in 3D data acquisition and processing by time-of-flight camera. IEEE Access 2019, 7, 12495–12510.

- Lumileds. Infrared Illumination for Time-of-Flight Applications. Available online: https://lumileds.com/wp-content/uploads/files/WP35.pdf (accessed on 17 May 2025).

- Andersen, M.; Jensen, T.; Lisouski, P.; Mortensen, A.; Hansen, M.; Gregersen, T.; Ahrendt, P. Kinect Depth Sensor Evaluation for Computer Vision Applications; Technical Report ECE-TR-6; Aarhus University: Aarhus, Denmark, 2012; pp. 1–37.

- Paredes, A.; Song, Q.; Conde, M. Performance evaluation of state-of-the-art high-resolution time-of-flight cameras. IEEE Sens. J. 2023, 23, 13711–13727.

- Tölgyessy, M.; Dekan, M.; Chovanec, Ľ.; Hubinský, P. Evaluation of the azure kinect and its comparison to kinect v1 and kinect v2. Sensors 2021, 21, 413.

- Albert, J.; Owolabi, V.; Gebel, A.; Brahms, C.; Granacher, U.; Arnrich, B. Evaluation of the pose tracking performance of the azure kinect and kinect v2 for gait analysis in comparison with a gold standard: A pilot study. Sensors 2020, 20, 5104.

- Li, L.; Liu, X.; Patel, M.; Zhang, L. Depth camera-based model for studying the effects of muscle loading on distal radius fracture healing. Comput. Biol. Med. 2023, 164, 107292.

- Brambilla, C.; Marani, R.; Romeo, L.; Nicora, M.; Storm, F.; Reni, G.; Malosio, M.; D’Orazio, T.; Scano, A. Azure Kinect performance evaluation for human motion and upper limb biomechanical analysis. Heliyon 2023, 9, e21606.

- Ramasubramanian, A.; Kazasidis, M.; Fay, B.; Papakostas, N. On the evaluation of diverse vision systems towards detecting human pose in collaborative robot applications. Sensors 2024, 24, 578.

- Khoa, T.; Lam, P.; Nam, N. An efficient energy measurement system based on the TOF sensor for structural crack monitoring in architecture. J. Inf. Telecommun. 2023, 7, 56–72.

- Marchisotti, D.; Zappa, E. Uncertainty mitigation in drone-based 3D scanning of defects in concrete structures. In Proceedings of the 2022 IEEE International Instrumentation and Measurement Technology Conference, Ottawa, ON, Canada, 16–19 May 2022; pp. 1–6.

- Marchisotti, D.; Zappa, E. Feasibility study of drone-based 3-D measurement of defects in concrete structures. IEEE Trans. Instrum. Meas. 2022, 71, 1–11.

- Toriumi, F.; Bittencourt, T.; Futai, M. UAV-based inspection of bridge and tunnel structures: An application review. Rev. IBRACON De. Estrut. E Mater. 2022, 16, e16103.

- Fryskowska, A. Accuracy assessment of point clouds geo-referencing in surveying and documentation of historical complexes. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 161–165.

- Hassan, A.; Fritsch, D. Integration of laser scanning and photogrammetry in 3D/4D cultural heritage preservation—A review. Int. J. Appl. Sci. Tech. 2019, 9, 16.

- Ulvi, A. Documentation, three-dimensional (3D) modelling and visualization of cultural heritage by using Unmanned Aerial Vehicle (UAV) photogrammetry and terrestrial laser scanners. Int. J. Remote Sens. 2021, 42, 1994–2021.

- Pinto, L.; Bianchini, F.; Nova, V.; Passoni, D. Low-cost UAS photogrammetry for road infrastructures inspection. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43, 1145–1150.

- Yang, H.; Xu, X.; Neumann, I. The benefit of 3D laser scanning technology in the generation and calibration of FEM models for health assessment of concrete structures. Sensors 2014, 14, 21889–21904.

- Xu, X.; Bureick, J.; Yang, H.; Neumann, I. TLS-based composite structure deformation analysis validated with laser tracker. Compos. Struct. 2018, 202, 60–65.

- Li, X.; Deng, E.; Wang, Y.; Ni, Y. 3D laser scanning for predicting the alignment of large-span segmental precast assembled concrete cable-stayed bridges. Autom. Constr. 2023, 155, 105056.

- Zarate Garnica, G.; Lantsoght, E.; Yang, Y. Monitoring structural responses during load testing of reinforced concrete bridges: A review. Struct. Infrastruct. Eng. 2022, 18, 1558–1580.

- Wang, C.; Yang, X.; Xi, X.; Nie, S.; Dong, P. LiDAR Remote Sensing Principles. In Introduction to LiDAR Remote Sensing; Taylor & Francis: Abingdon, UK, 2024.

- Lee, D.; Jung, M.; Yang, W.; Kim, A. Lidar odometry survey: Recent advancements and remaining challenges. Intell. Serv. Robot. 2024, 17, 95–118.

- Lenda, G.; Marmol, U. Integration of high-precision UAV laser scanning and terrestrial scanning measurements for determining the shape of a water tower. Measurement 2023, 218, 113178.

- Kelly, C.; Wilkinson, B.; Abd-Elrahman, A.; Cordero, O.; Lassiter, H. Accuracy assessment of low-cost lidar scanners: An analysis of the Velodyne HDL–32E and Livox Mid–40′s temporal stability. Remote Sens. 2022, 14, 4220.

- Gaspari, F.; Ioli, F.; Barbieri, F.; Belcore, E.; Pinto, L. Integration of UAV-lidar and UAV-photogrammetry for infrastructure monitoring and bridge assessment. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 43, 995–1002.

- Aldao, E.; González-Jorge, H.; González-deSantos, L.; Fontenla-Carrera, G.; Martínez-Sánchez, J. Validation of solid-state LiDAR measurement system for ballast geometry monitoring in rail tracks. Infrastructures 2023, 8, 63.

- Tong, W. An evaluation of digital image correlation criteria for strain mapping applications. Strain 2005, 41, 167–175.

- Haddadi, H.; Belhabib, S. Use of rigid-body motion for the investigation and estimation of the measurement errors related to digital image correlation technique. Opt. Lasers Eng. 2008, 46, 185–196.

- Guo, X.; Li, Y.; Suo, T.; Liang, J. De-noising of digital image correlation based on stationary wavelet transform. Opt. Lasers Eng. 2017, 90, 161–172.

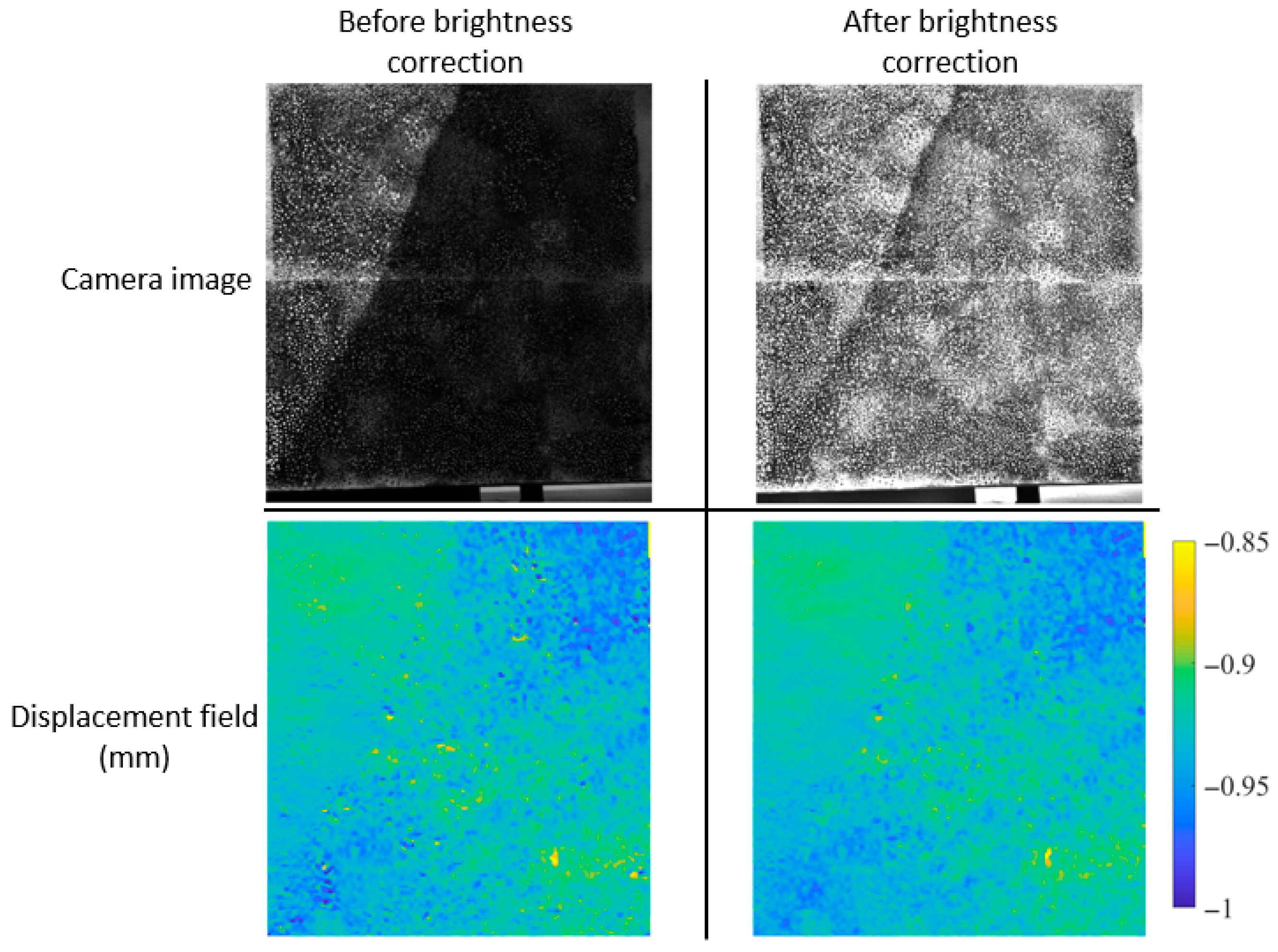

- Sciuti, V.; Canto, R.; Neggers, J.; Hild, F. On the benefits of correcting brightness and contrast in global digital image correlation: Monitoring cracks during curing and drying of a refractory castable. Opt. Lasers Eng. 2021, 136, 106316.

- Gu, G.; Wang, Y. Uneven intensity change correction of speckle images using morphological top-hat transform in digital image correlation. Imaging Sci. J. 2015, 63, 488–494.

- Zhou, H.; Shu, D.; Wu, C.; Wang, Q.; Wang, Q. Image illumination adaptive correction algorithm based on a combined model of bottom-hat and improved gamma transformation. Arab. J. Sci. Eng. 2023, 48, 3947–3960.

- Jobson, D.; Rahman, Z.; Woodell, G. Properties and performance of a center/surround Retinex. IEEE Trans. Image Process. 1997, 6, 451–462.

- Sun, L.; Tang, C.; Xu, M.; Lei, Z. Non-uniform illumination correction based on multi-scale Retinex in digital image correlation. Appl. Opt. 2021, 60, 5599–5609.

- Texas Instruments. Introduction to Time-of-Flight Long Range Proximity and Distance Sensor System Design. Available online: https://www.ti.com/lit/an/sbau305b/sbau305b.pdf?ts=1732838735208 (accessed on 22 May 2025).

- Dung, C.; Anh, L.D. Autonomous concrete crack detection using deep fully convolutional neural network. Autom. Constr. 2019, 99, 52–58.

- Yu, C.; Du, J.; Li, M.; Li, Y.; Li, W. An improved U-Net model for concrete crack detection. Mach. Learn. Appl. 2022, 10, 100436.

- Chen, Y.; Wu, R.; Puranam, A. Multi-task deep learning for crack segmentation and quantification in RC structures. Autom. Constr. 2024, 166, 105599.

- Weng, Y.; Shan, J.; Lu, Z.; Lu, X.; Spencer, B., Jr. Homography-based structural displacement measurement for large structures using unmanned aerial vehicles. Comput. Aided Civ. Infrastruct. Eng. 2021, 36, 1114–1128.

- Yoon, H.; Shin, J.; Spencer, B., Jr. Structural displacement measurement using an unmanned aerial system. Comput. Aided Civ. Infrastruct. Eng. 2018, 33, 183–192.

- Jeong, E.; Seo, J.; Wacker, J. Grayscale drone inspection image enhancement framework for advanced bridge defect measurement. Transp. Res. Rec. 2021, 2675, 603–612.

- Jeong, E.; Seo, J.; Wacker, J. UAV-aided bridge inspection protocol through machine learning with improved visibility images. Expert. Syst. Appl. 2022, 197, 116791.

- Lei, B.; Wang, N.; Xu, P.; Song, G. New crack detection method for bridge inspection using UAV incorporating image processing. J. Aerosp. Eng. 2018, 31, 04018058.

- Bhowmick, S.; Nagarajaiah, S.; Veeraraghavan, A. Vision and deep learning-based algorithms to detect and quantify cracks on concrete surfaces from UAV videos. Sensors 2020, 20, 6299.

- Li, X.; Li, L.; Liu, Z.; Peng, Z.; Liu, S.; Zhou, S.; Chai, X.; Jiang, K. Dam crack detection studies by UAV based on YOLO algorithm. In Proceedings of the 2023 2nd International Conference on Robotics, Artificial Intelligence and Intelligent Control (RAIIC), Mianyang, China, 11–13 August 2023; pp. 104–108.

- Shan, J.; Huang, P.; Loong, C.; Liu, M. Rapid full-field deformation measurements of tall buildings using UAV videos and deep learning. Eng. Struct. 2024, 305, 117741.

- Ji, R.; Sorosh, S.; Lo, E.; Norton, T.; Driscoll, J.; Kuester, F.; Barbosa, A.; Simpson, B.; Hutchinson, T. Application framework and optimal features for UAV-based earthquake-induced structural displacement monitoring. Algorithms 2025, 18, 66.

- Koch, C.; Georgieva, K.; Kasireddy, V.; Akinci, B.; Fieguth, P. A review on computer vision based defect detection and condition assessment of concrete and asphalt civil infrastructure. Adv. Eng. Inform. 2015, 29, 196–210.

- Ai, D.; Jiang, G.; Lam, S.; He, P.; Li, C. Computer vision framework for crack detection of civil infrastructure-A review. Eng. Appl. Artif. Intell. 2023, 117, 105478.

- Luo, K.; Kong, X.; Zhang, J.; Hu, J.; Li, J.; Tang, H. Computer vision-based bridge inspection and monitoring: A review. Sensors 2023, 23, 7863.

- Khan, M.; Kee, S.; Pathan, A.; Nahid, A. Image Processing Techniques for Concrete Crack Detection: A Scientometrics Literature Review. Remote Sens. 2023, 15, 2400.

| | Average Noise (mm) | Standard Deviation (mm) |
|---|---|---|
| Cameras on tripods | 0.01 | 0.02 |
| Cameras on a UAV | 0.05 | 0.05 |

| Reference Number | Application Details | Quantity Measured |
|---|---|---|
| [75] | UAV photogrammetry | 3D model: accuracy of 30 mm |
| [83] | UAV photogrammetry | 3D model: accuracy up to 800 mm |
| [84] | UAV photogrammetry + image processing + back-projection | Measured crack openings: 2 to 4 mm (mean error 13%) |
| [85] | UAV photogrammetry + image processing + back-projection | Measured crack openings: 3 to 20 mm (mean error 1 mm) |
| [86] | UAV photogrammetry | 3D model: accuracy of 15 mm |

| Measurement Range | Depth Error |
|---|---|
| 1 m | 1 mm |
| 1.5 m | 2.4 mm |
| 2.5 m | 3.4 mm |
| 3 m | 3.7 mm |

| Technique | Smallest Measured Crack/Defect (mm) | Camera Resolution | Reference Number |
|---|---|---|---|
| DIC | 0.05 | 2 MP | [19] |
| Photogrammetry | 2 | 15.6 MP | [84] |
| ToF | 3.5 | 0.37 MP | [101] |

| | Before Correction | After Correction |
|---|---|---|
| Horizontal displacement (mm) | 0.0145 | 0.0128 |
| Vertical displacement (mm) | 0.0164 | 0.0121 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hajjar, M.; Zappa, E.; Bolzon, G. The State of the Art and Potentialities of UAV-Based 3D Measurement Solutions in the Monitoring and Fault Diagnosis of Quasi-Brittle Structures. Sensors 2025, 25, 5134. https://doi.org/10.3390/s25165134
