Ensemble Learning Framework for Anomaly Detection in Autonomous Driving Systems

Abstract

Highlights

- Our proposed ensemble models for anomaly detection in autonomous driving systems consistently outperform individual models in all accuracy metrics on two datasets.

- Ensemble learning also significantly reduced false positive rates, thereby enhancing overall system reliability.

- The findings underscore the efficacy of ensemble learning in enhancing resilience and precision of anomaly detection systems in autonomous vehicles.

- Enhanced anomaly detection bolsters public confidence in autonomous driving systems.

1. Introduction

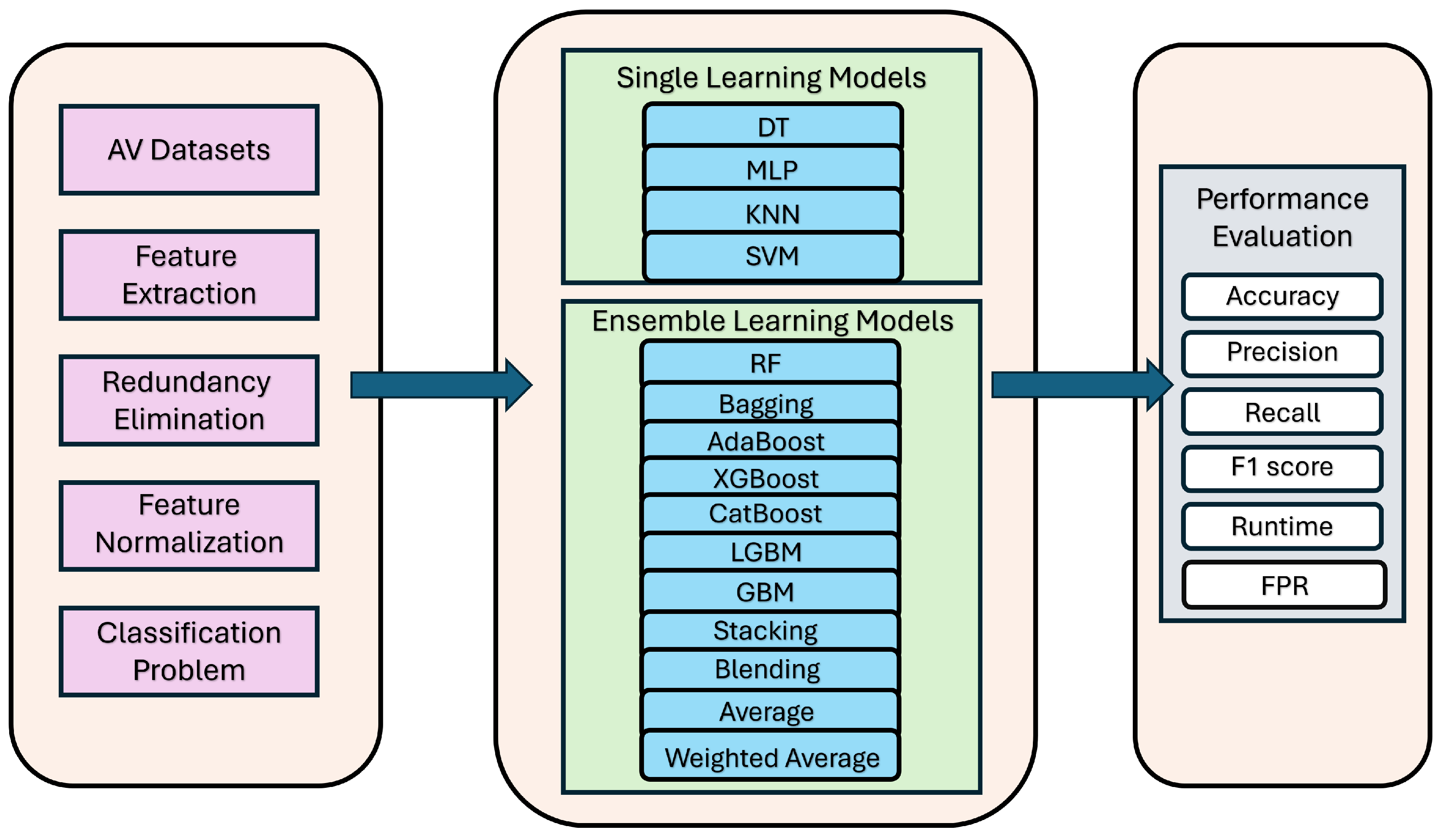

- We propose an ensemble learning framework for anomaly detection in autonomous driving systems. In our framework, we perform a comprehensive evaluation of various ensemble learning methods along with individual models.

- We evaluate 11 ensemble learning models: random forest (RF), bagging classifier (Bagging), adaptive boosting (AdaBoost), eXtreme Gradient Boosting (XGBoost), categorical boosting (CatBoost), light gradient boosting machine (LGBM), gradient boosting machine (GBM), average (Avg), weighted average (W. Avg), stacking (Stacking), and blending (Blending). These ensembles achieve superior predictive performance compared to single-classifier models.

- We conduct a rigorous evaluation of the proposed ensemble framework using two distinct real-world autonomous driving datasets with different characteristics—the VeReMi and Sensor datasets.

- We provide insights into strengths, limitations, and trade-offs of different ensemble learning methods for the anomaly detection task for autonomous vehicles.

- We release our source code to facilitate its use in anomaly detection for autonomous driving. The repository includes the two datasets, preprocessing scripts, and hyperparameter tuning settings needed to fully reproduce our results. We encourage researchers to use this resource for further development and to build additional models. The source code is available at https://github.com/Nazat28/Ensemble-Models-for-Classification-on-Autonomous-Vehicle-Dataset (accessed on 1 August 2025).
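As an illustration of the two simplest combiners named in the contributions above (Avg and W. Avg), the following is a minimal sketch, not the released implementation. It assumes that each base model outputs a probability of the anomalous class for every sample; the probabilities, weights, and the 0.5 threshold are hypothetical values chosen for the example. Stacking and blending differ in that they replace the fixed weights with a meta-model trained on the base-model outputs, which is not shown here.

```python
from typing import List, Sequence


def average_ensemble(probs: Sequence[Sequence[float]]) -> List[float]:
    """Avg combiner: unweighted mean of per-model anomaly probabilities."""
    n_models = len(probs)
    # zip(*probs) iterates over samples, yielding one probability per model.
    return [sum(column) / n_models for column in zip(*probs)]


def weighted_average_ensemble(
    probs: Sequence[Sequence[float]], weights: Sequence[float]
) -> List[float]:
    """W. Avg combiner: mean of probabilities weighted per model."""
    if len(probs) != len(weights):
        raise ValueError("one weight is required per base model")
    total = sum(weights)
    return [
        sum(w * p for w, p in zip(weights, column)) / total
        for column in zip(*probs)
    ]


def to_labels(scores: Sequence[float], threshold: float = 0.5) -> List[int]:
    """Map scores to labels: 1 = anomalous, 0 = normal (assumed threshold)."""
    return [1 if s >= threshold else 0 for s in scores]


# Hypothetical outputs of three base models on four samples.
base_probs = [
    [0.9, 0.2, 0.6, 0.4],
    [0.8, 0.1, 0.4, 0.7],
    [0.7, 0.3, 0.2, 0.1],
]

avg_scores = average_ensemble(base_probs)
w_avg_scores = weighted_average_ensemble(base_probs, weights=[1.0, 3.0, 1.0])

avg_labels = to_labels(avg_scores)
w_avg_labels = to_labels(w_avg_scores)
```

In this toy example the two combiners disagree on the last sample: giving the second model a larger weight pushes its score above the threshold, which shows how weighting can change the ensemble decision.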

2. Related Work

2.1. Anomaly Detection in AV

2.2. Ensemble Learning in Autonomous Driving

3. The Problem Statement

3.1. Anomaly Types in AVs

3.2. Anomaly Detection Systems for AVs

3.3. Shortcomings of Base Learner Models

3.4. Main Benefits of Popular Ensemble Methods

4. Materials and Methods

4.1. End-to-End Framework for Autonomous Driving Systems

4.2. Innovative Value of Our Ensemble Learning Framework in Autonomous Driving Context

5. Foundations of Evaluation

- What are the best base (individual) models for a given autonomous driving dataset?

- Which ensemble method has the best performance for a given autonomous driving dataset?

- What is the performance of different classes of AI models for anomaly detection in our two autonomous driving datasets in terms of accuracy, precision, recall, F1, false positive rate, and runtime?

- What are the limitations and strengths of ensemble learning methods when applied to anomaly detection in autonomous driving systems?
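The metrics named in these questions can be computed from the four confusion-matrix counts. The sketch below is one plain-Python way to do so under the assumption that label 1 denotes an anomalous sample and label 0 a normal one; the label vectors and the `timed` helper are hypothetical illustrations, not part of the evaluated framework.

```python
import time
from typing import Callable, Dict, Sequence, Tuple


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall, F1, and false positive rate (FPR)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    def safe_div(num: float, den: float) -> float:
        # Return 0.0 instead of raising when a denominator is empty.
        return num / den if den else 0.0

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    return {
        "accuracy": safe_div(tp + tn, len(pairs)),
        "precision": precision,
        "recall": recall,
        "f1": safe_div(2 * precision * recall, precision + recall),
        "fpr": safe_div(fp, fp + tn),
    }


def timed(fn: Callable, *args) -> Tuple[object, float]:
    """Run fn(*args) and return its result with the elapsed wall-clock seconds."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


# Hypothetical labels: 4 anomalous and 6 normal samples.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

metrics, elapsed_seconds = timed(binary_metrics, y_true, y_pred)
```

Because F1 is the harmonic mean of precision and recall, a precision of 0.82 and a recall of 0.91, for instance, give an F1 of about 0.86.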

5.1. Dataset Description

5.2. Experimental Setup

6. Evaluation Results

6.1. Performance of Ensemble Learning Models

6.1.1. Binary Classification

6.1.2. Multiclass Classification

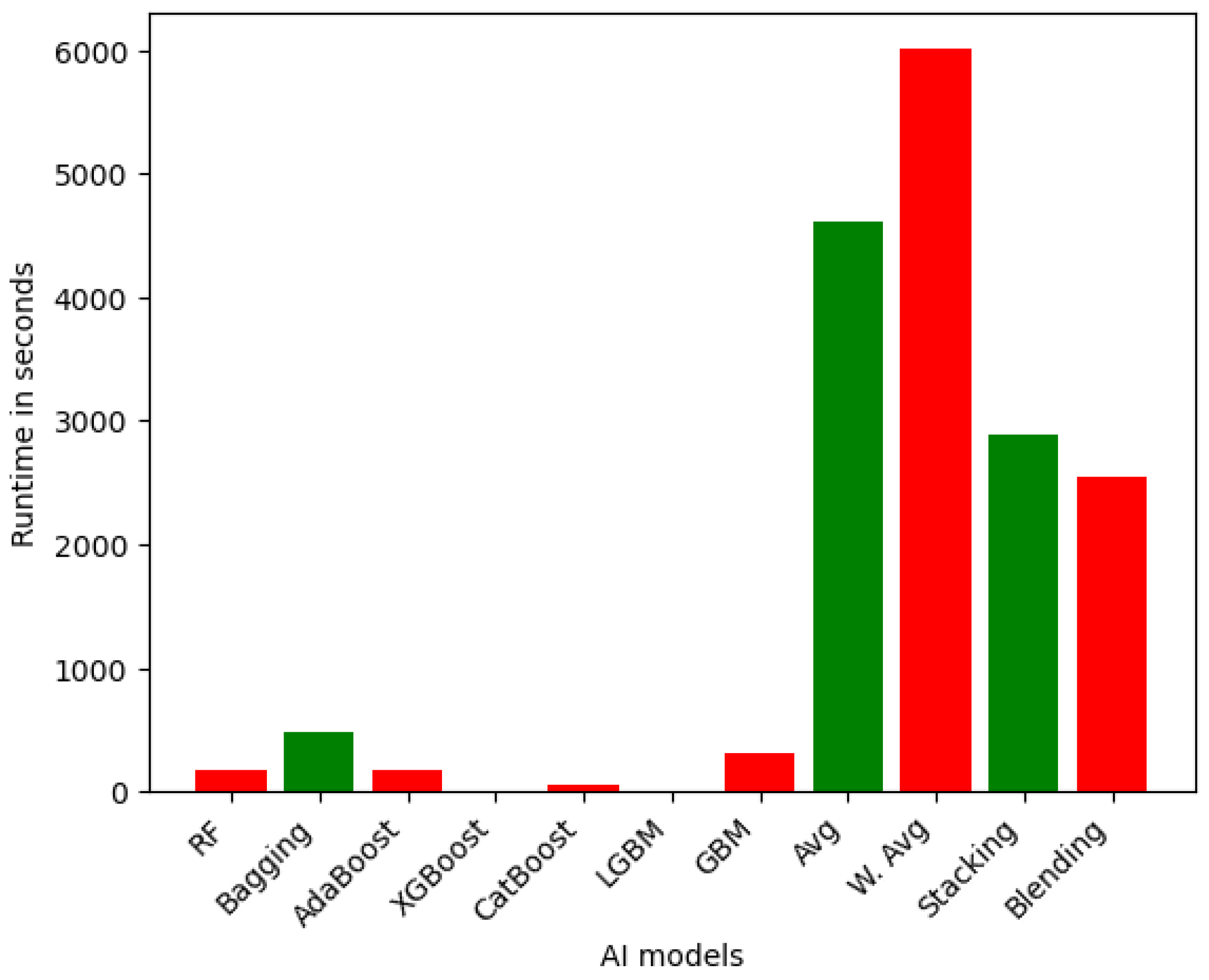

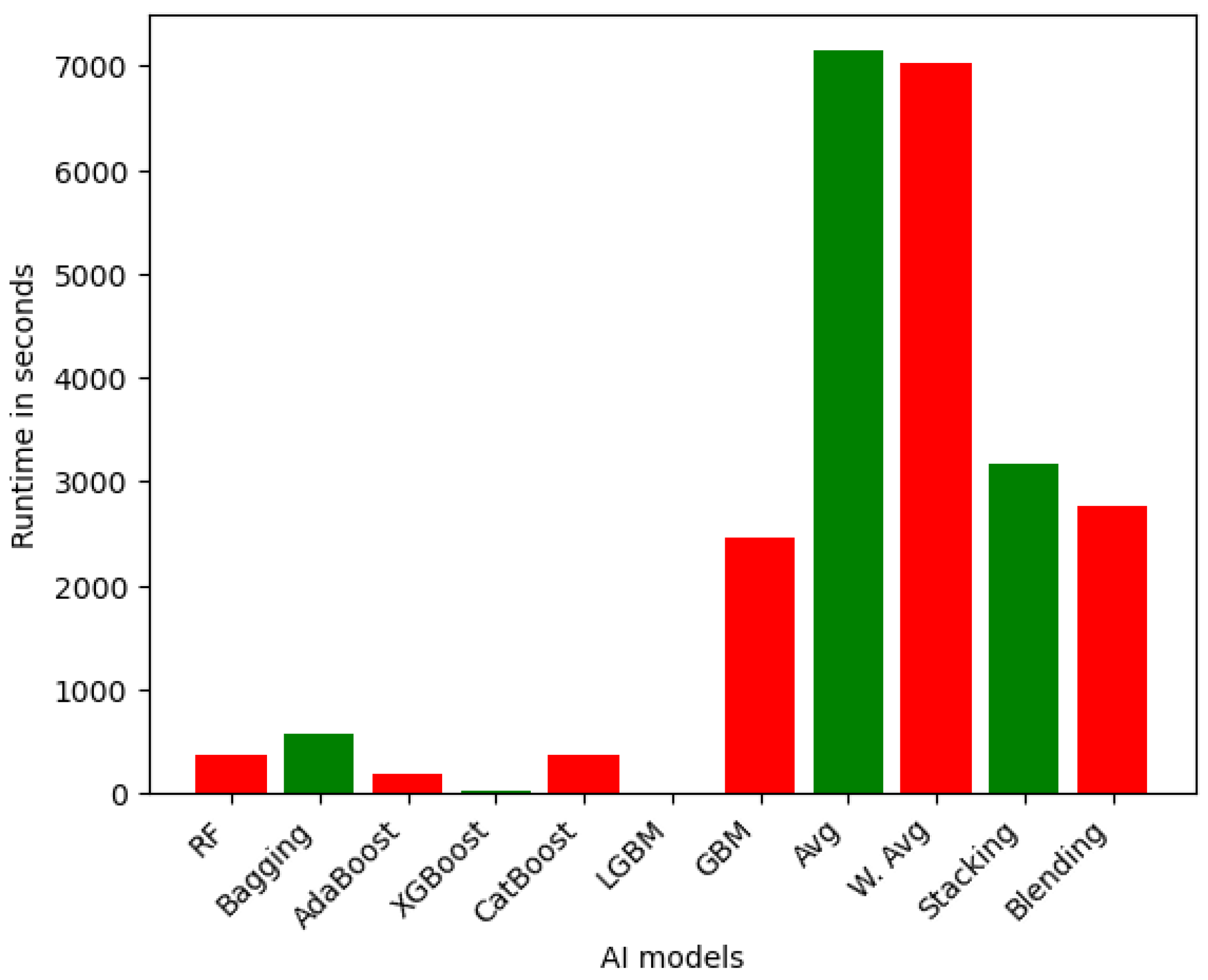

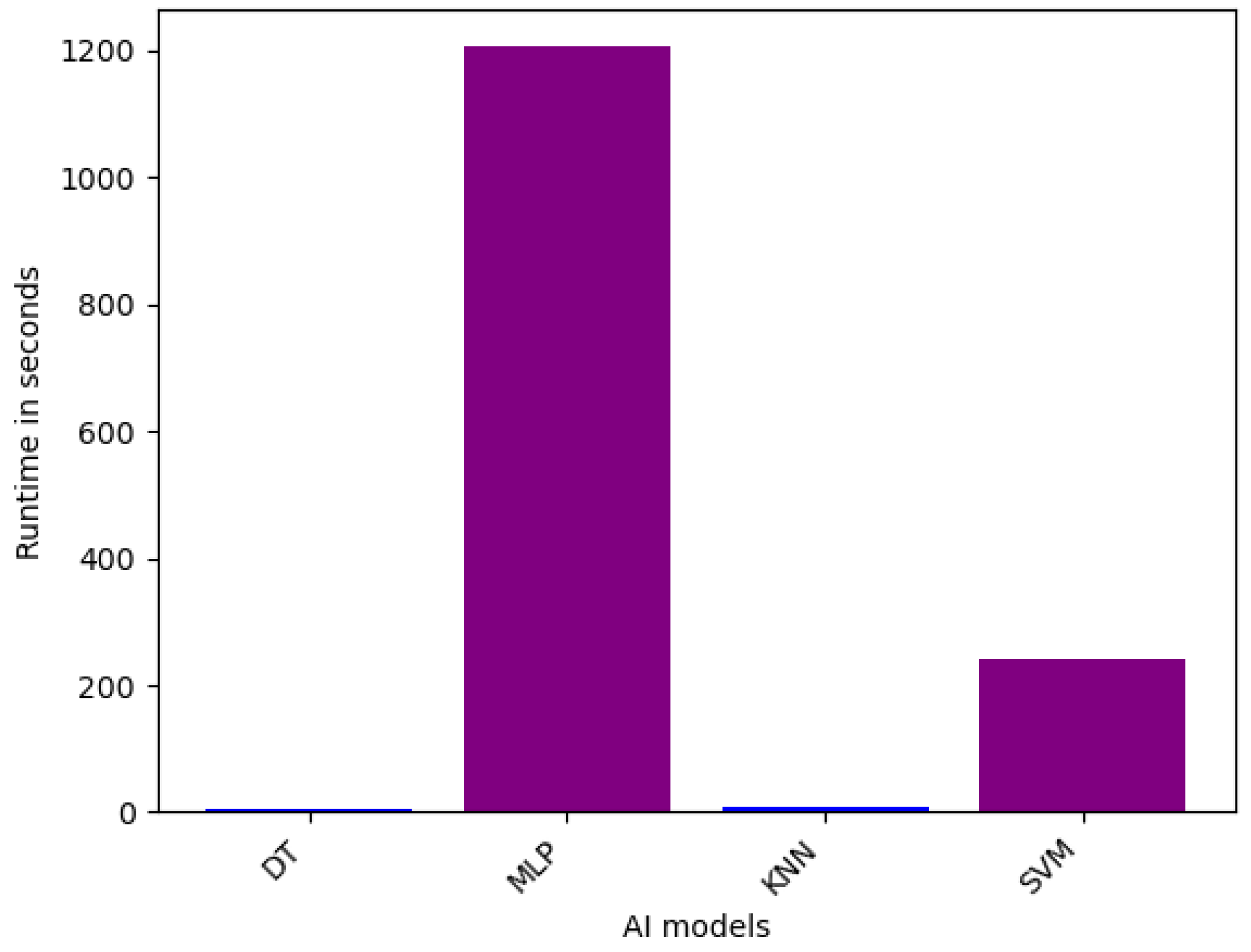

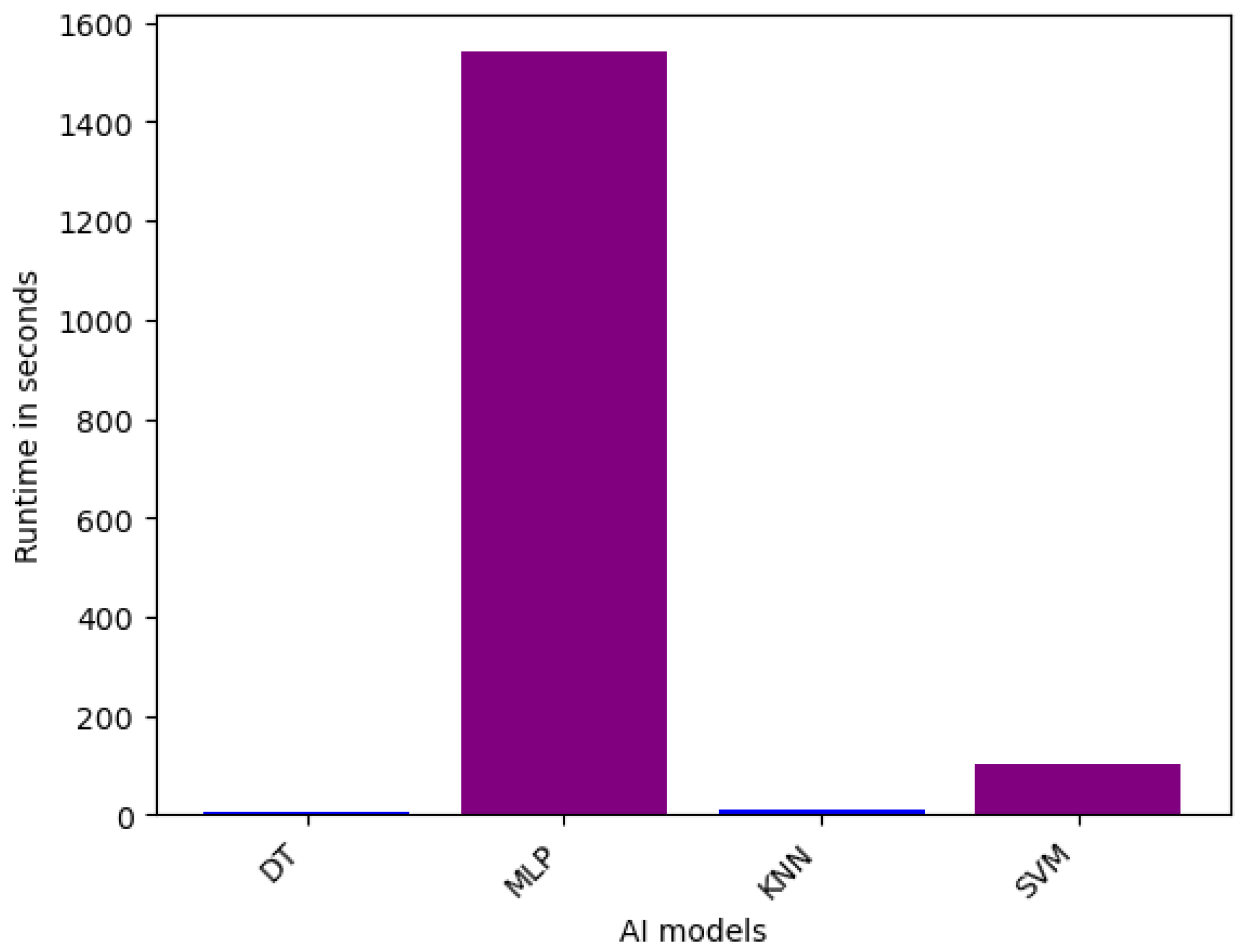

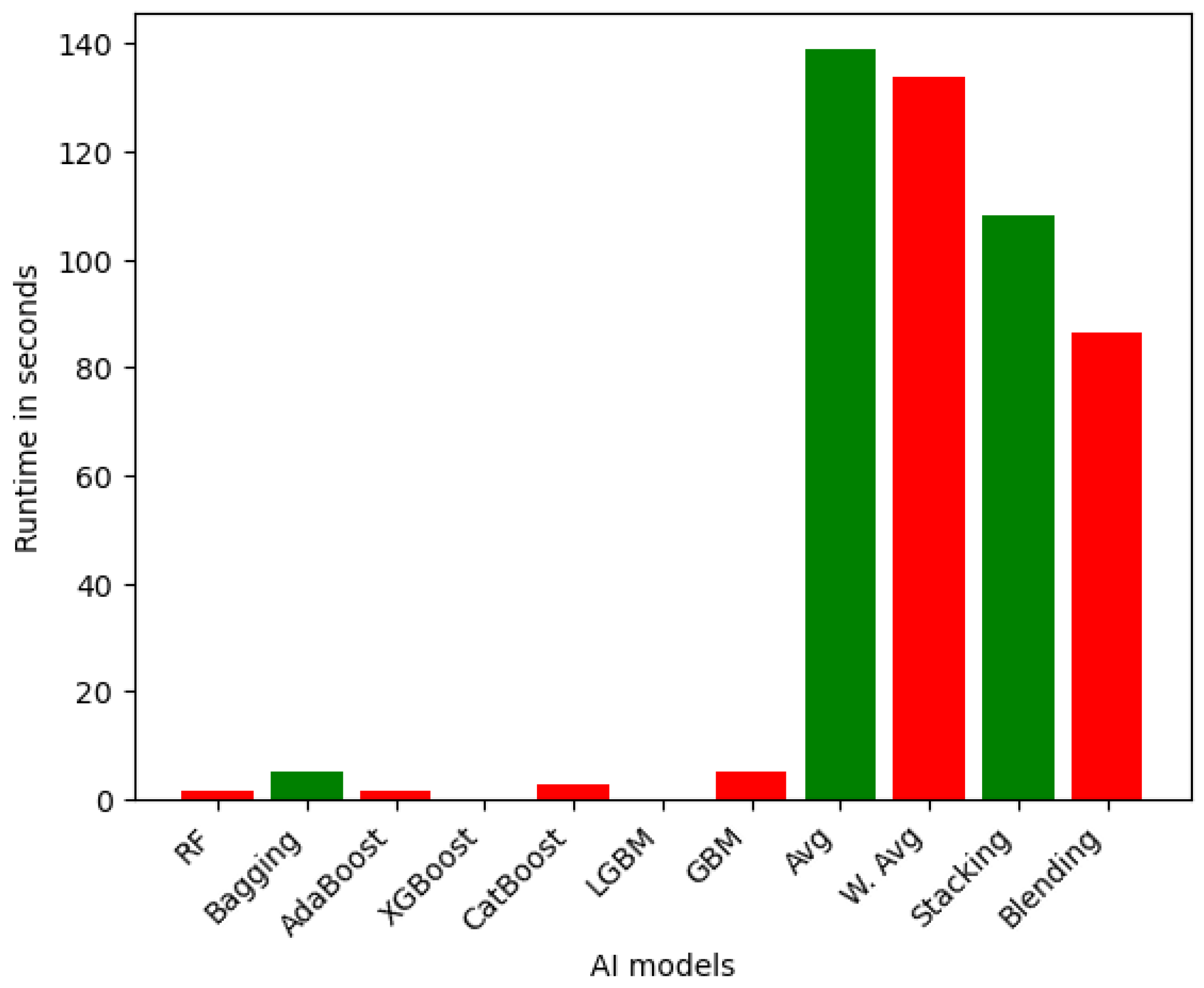

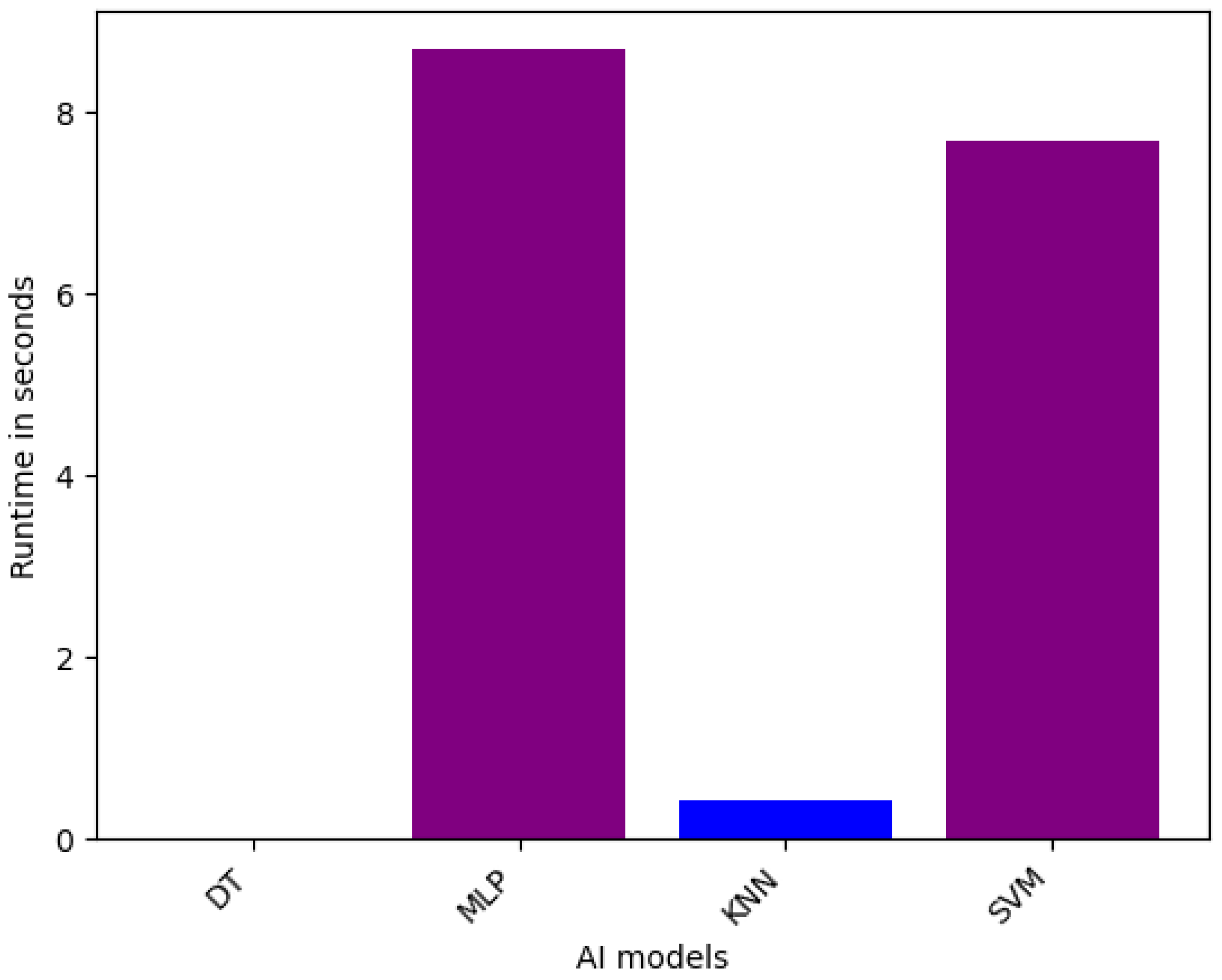

6.1.3. Runtime Analysis

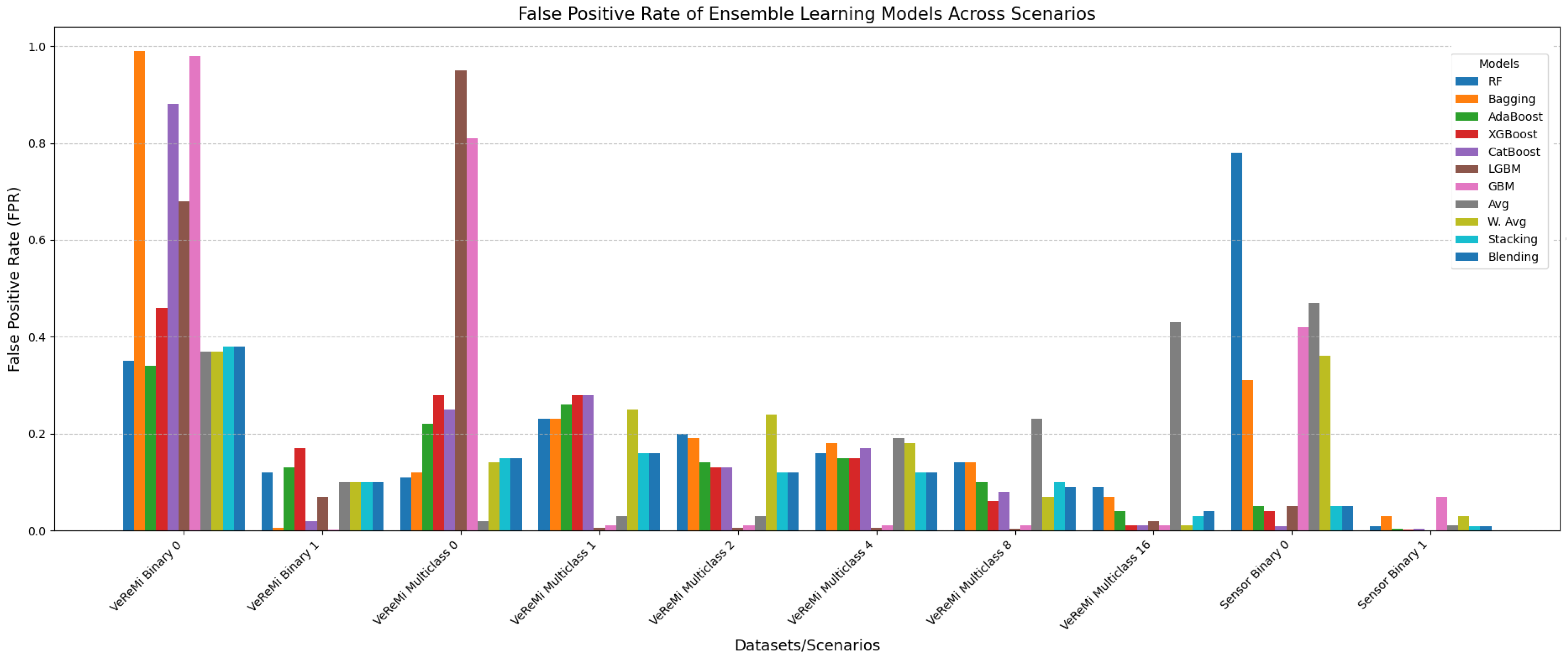

6.1.4. False Positive Rate Analysis

6.2. Effect of Hyperparameters of Ensemble Learning Models

6.2.1. VeReMi Dataset

6.2.2. Sensor Dataset

6.2.3. Guidelines and Insights from Hyperparameter Tuning

6.3. Comparative Analysis and Summary of Evaluation Results

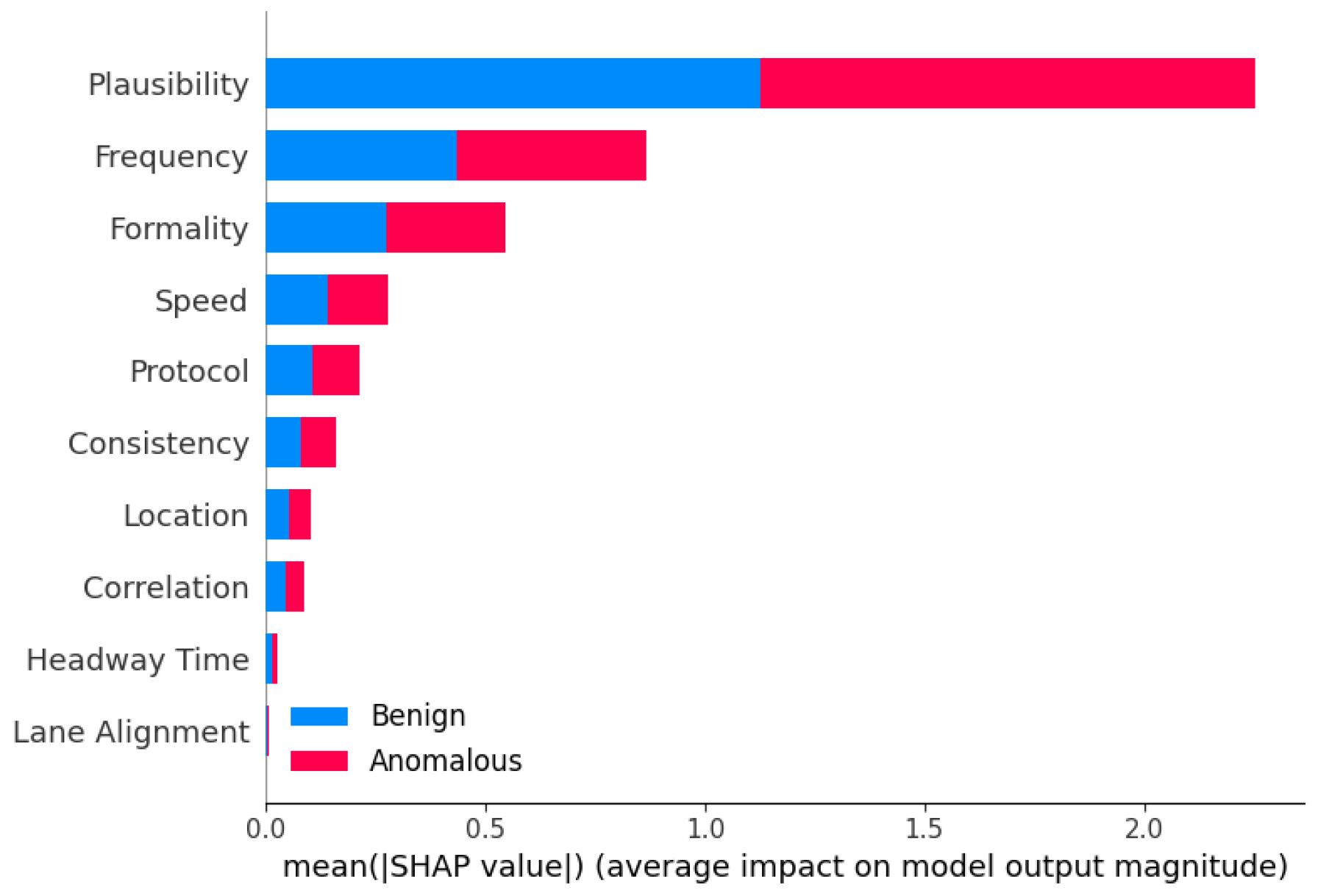

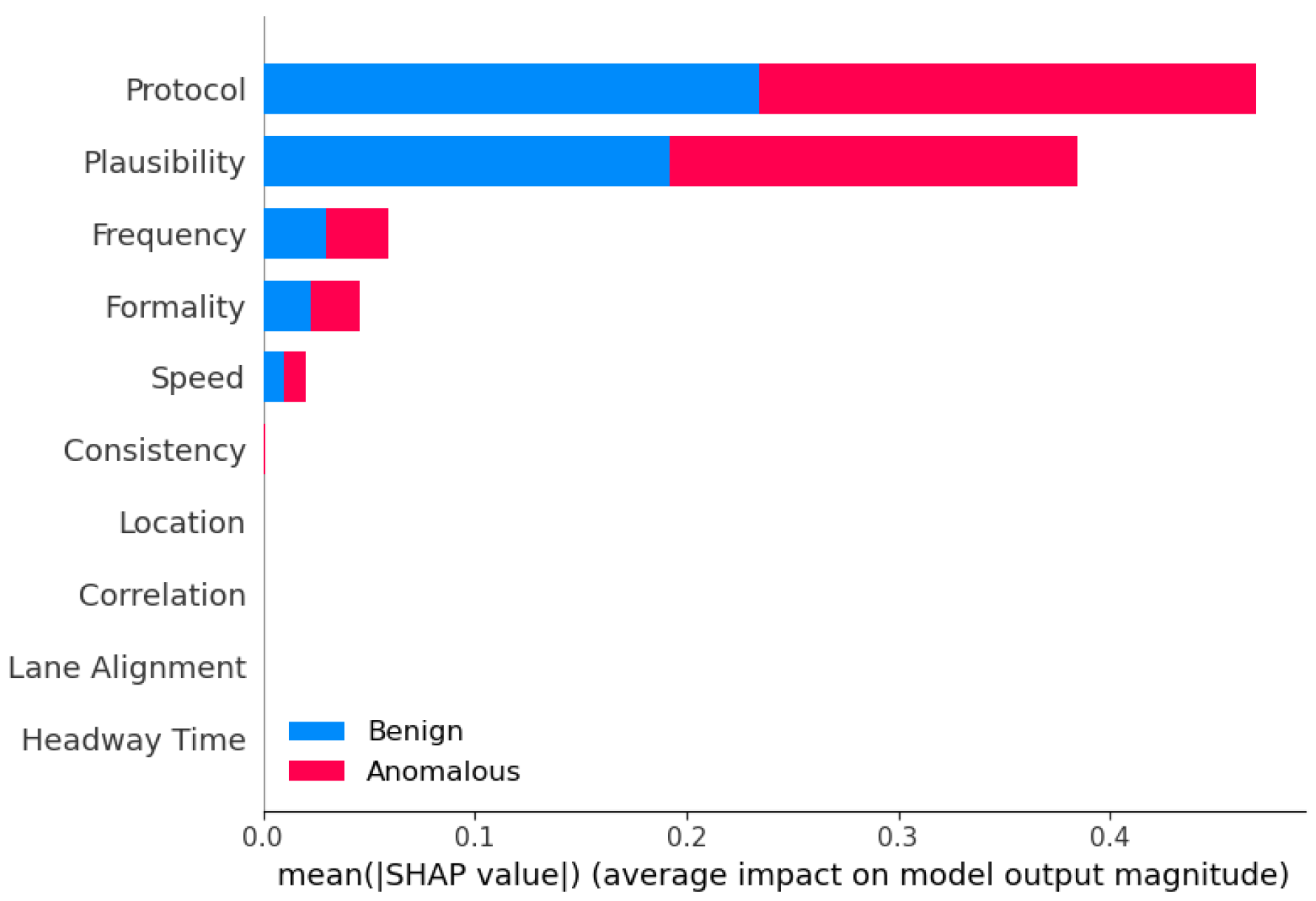

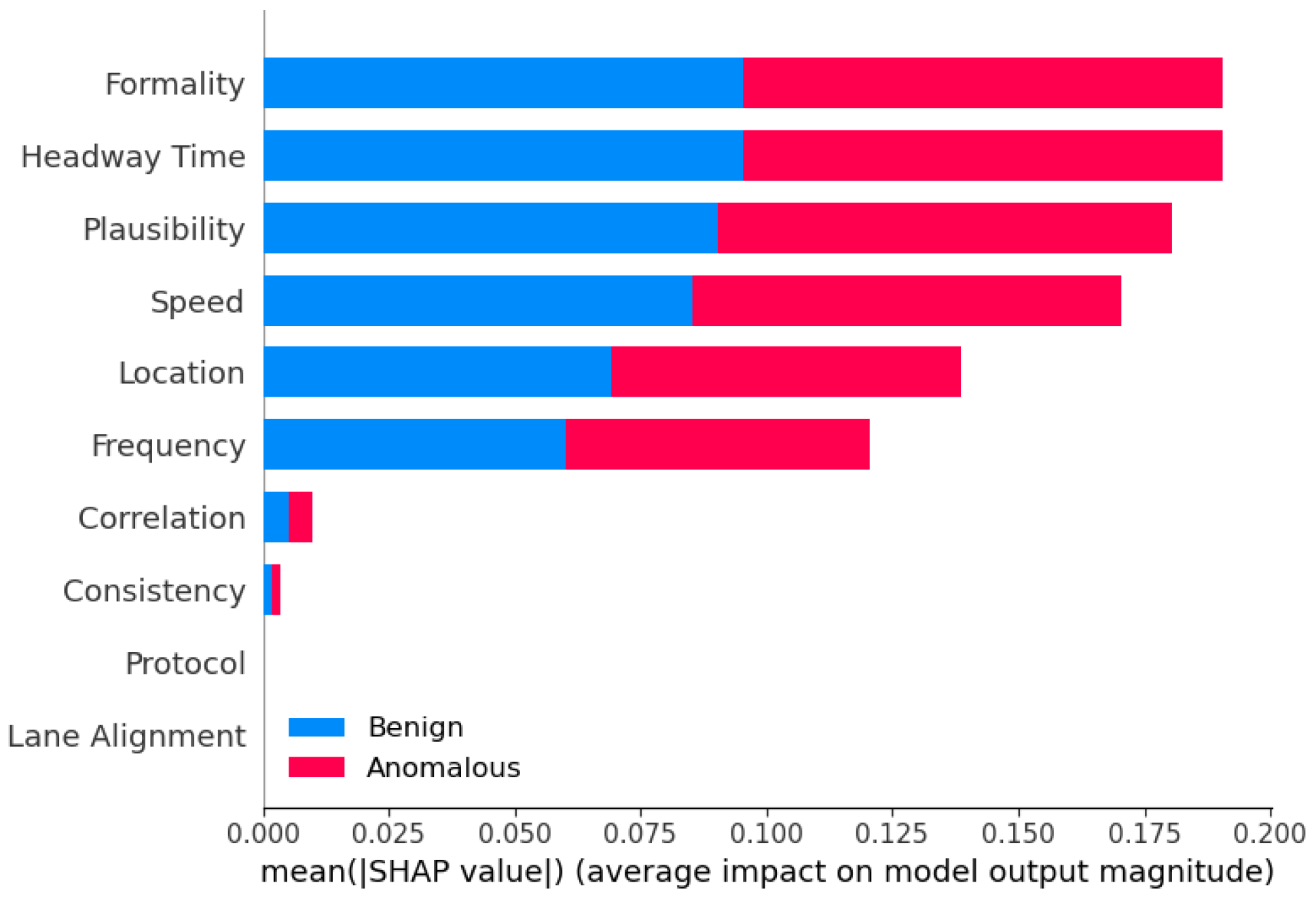

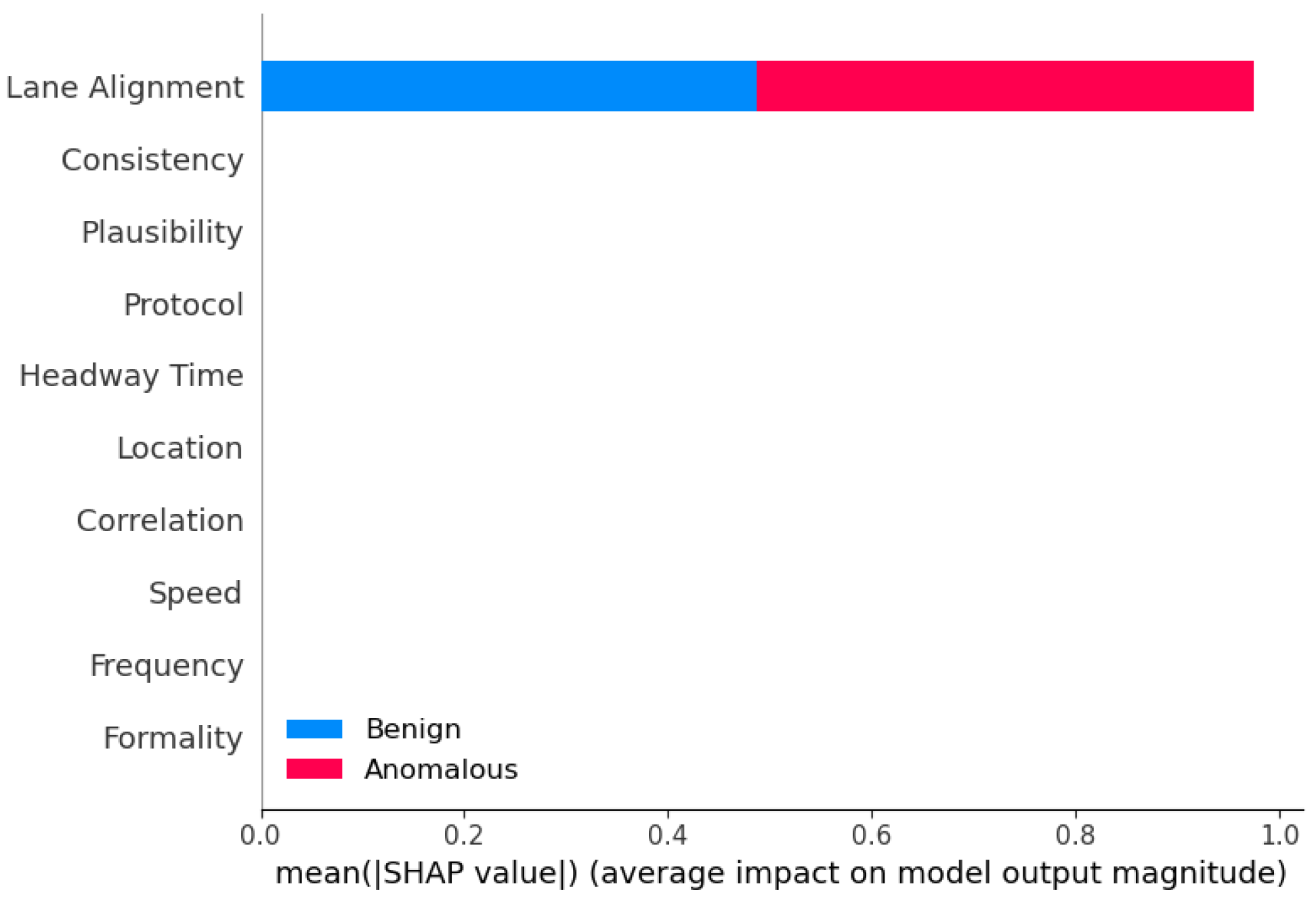

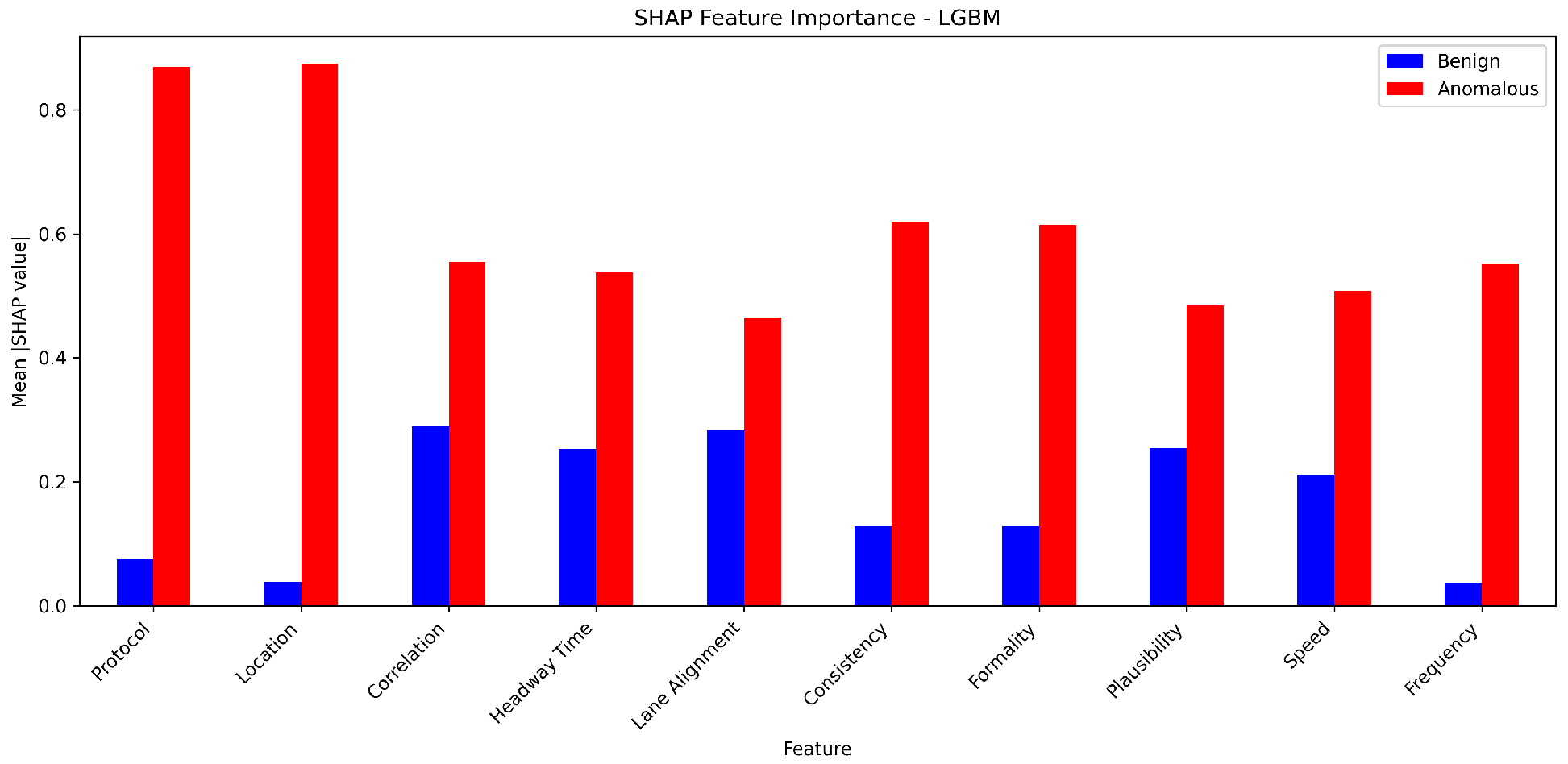

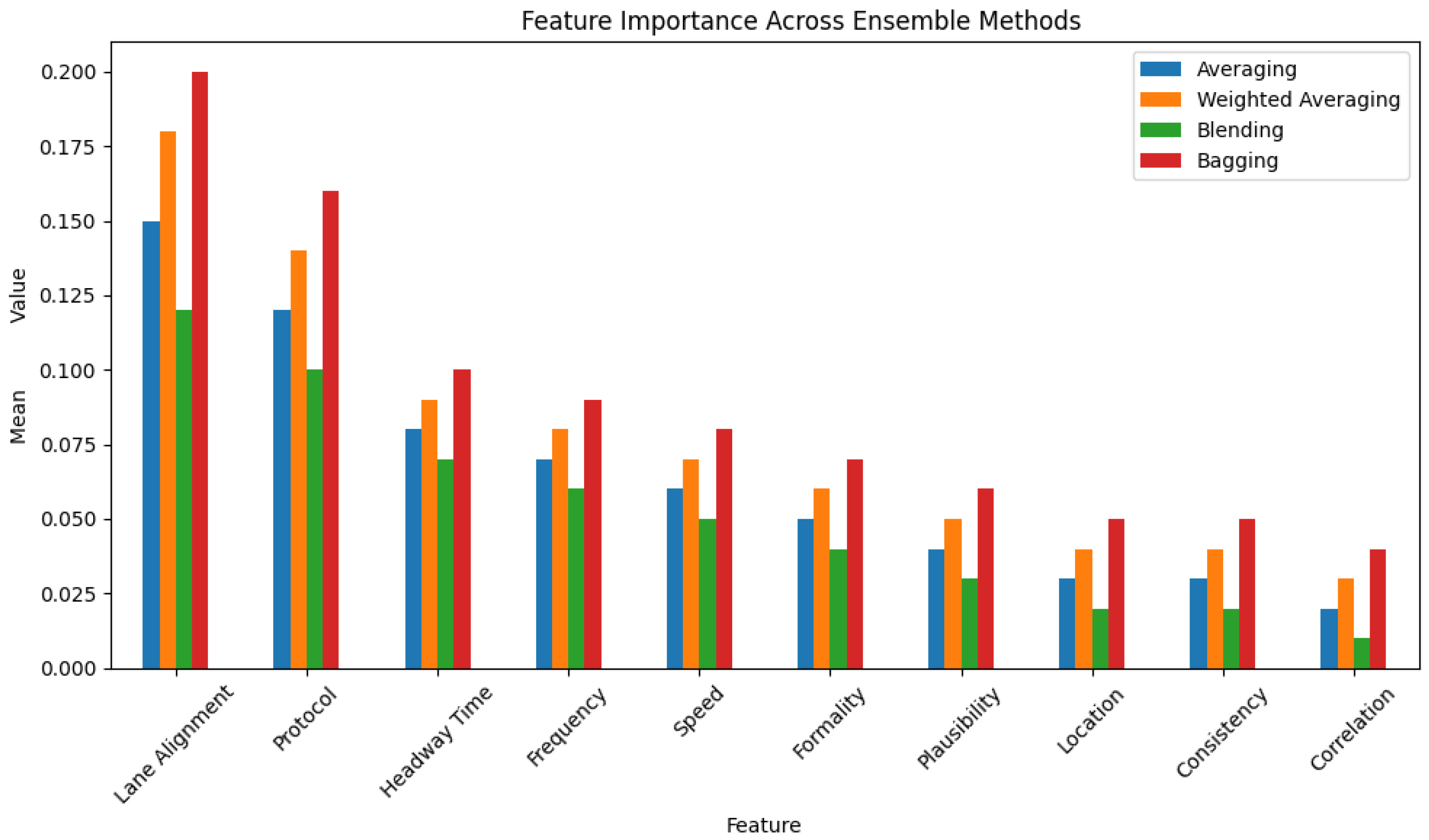

6.4. Interpretability Analysis of Top Features for Different Methods

7. Discussion

7.1. Main Insights and Related Discussion

7.1.1. Superiority of Ensemble Learning over Single Models

7.1.2. Trade-Off Between Performance Metrics and Hyperparameter Tuning

7.1.3. Computational Efficiency Considerations and Optimizations for Deployment

7.2. Use of AI Notation Instead of ML

7.3. Usage of MLP and Traditional AI Models

7.4. Limitations

7.4.1. Limited Dataset Diversity

7.4.2. Computational Complexity and Scalability Concerns

7.5. Explaining Decision-Making of Ensemble Methods

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |

|---|---|

| AVs | Autonomous Vehicles |

| VANET | Vehicular Ad Hoc Network |

| KNN | K-nearest Neighbors |

| ADB | Aggressive Driving Behavior |

| RF | Random Forest |

| AdaBoost | Adaptive Boosting |

| XGBoost | eXtreme Gradient Boosting |

| CatBoost | Categorical Boosting |

| LGBM | Light Gradient Boosting Machine |

| Avg | Averaging |

| W. Avg | Weighted Average |

| Blend | Blending |

| M-CNN | Modified-Convolutional Neural Network |

| MLP | Multilayer Perceptron |

| LSTM | Long Short-Term Memory |

| SVM | Support Vector Machine |

| DT | Decision Tree |

| FDI | False Data Injection |

| OBU | Onboard Unit |

Appendix A. AI Models and Hyper-Parameters

Appendix A.1. AI Models and Hyperparameters Details

Appendix A.1.1. Base Models

Appendix A.1.2. Ensemble Methods

References

1. Hussain, R.; Hussain, F.; Zeadally, S. Integration of VANET and 5G Security: A review of design and implementation issues. Future Gener. Comput. Syst. 2019, 101, 843–864.

2. Nazat, S.; Abdallah, M. Anomaly Detection Framework for Securing Next Generation Networks of Platoons of Autonomous Vehicles in a Vehicle-to-Everything System. In Proceedings of the 9th ACM Cyber-Physical System Security Workshop, Melbourne, VIC, Australia, 10–14 July 2023; CPSS ’23; pp. 24–35.

3. Gillani, M.; Niaz, H.A.; Tayyab, M. Role of machine learning in WSN and VANETs. Int. J. Electr. Comput. Eng. Res. 2021, 1, 15–20.

4. Agrawal, K.; Alladi, T.; Agrawal, A.; Chamola, V.; Benslimane, A. NovelADS: A novel anomaly detection system for intra-vehicular networks. IEEE Trans. Intell. Transp. Syst. 2022, 23, 22596–22606.

5. Aziz, S.; Faiz, M.T.; Adeniyi, A.M.; Loo, K.H.; Hasan, K.N.; Xu, L.; Irshad, M. Anomaly detection in the internet of vehicular networks using explainable neural networks (xNN). Mathematics 2022, 10, 1267.

6. Song, Z.; He, Z.; Li, X.; Ma, Q.; Ming, R.; Mao, Z.; Pei, H.; Peng, L.; Hu, J.; Yao, D.; et al. Synthetic datasets for autonomous driving: A survey. IEEE Trans. Intell. Veh. 2023, 9, 1847–1864.

7. Su, J.; Jiang, C.; Jin, X.; Qiao, Y.; Xiao, T.; Ma, H.; Wei, R.; Jing, Z.; Xu, J.; Lin, J. Large Language Models for Forecasting and Anomaly Detection: A Systematic Literature Review. arXiv 2024, arXiv:2402.10350.

8. Vanerio, J.; Casas, P. Ensemble-learning approaches for network security and anomaly detection. In Proceedings of the Workshop on Big Data Analytics and Machine Learning for Data Communication Networks, Los Angeles, CA, USA, 21 August 2017; pp. 1–6.

9. Rao, R.V.; Kumar, S. Enhancing Anomaly Detection and Intrusion Detection Systems in Cybersecurity through Machine Learning Techniques. Int. J. Innov. Sci. Res. Technol. 2023, 8, 2321–2328.

10. Zhang, C.; Ma, Y. Ensemble Machine Learning: Methods and Applications; Springer: Berlin/Heidelberg, Germany, 2012.

11. Zhang, H.; Fu, R. An Ensemble Learning—Online Semi-Supervised Approach for Vehicle Behavior Recognition. IEEE Trans. Intell. Transp. Syst. 2022, 23, 10610–10626.

12. Wang, H.; Wang, X.; Han, J.; Xiang, H.; Li, H.; Zhang, Y.; Li, S. A Recognition Method of Aggressive Driving Behavior Based on Ensemble Learning. Sensors 2022, 22, 644.

13. Gupta, M.; Upadhyay, V.; Kumar, P.; Al-Turjman, F. Implementation of autonomous driving using Ensemble-M in simulated environment. Soft Comput. 2021, 25, 12429–12438.

14. Du, R.; Feng, R.; Gao, K.; Zhang, J.; Liu, L. Self-supervised point cloud prediction for autonomous driving. IEEE Trans. Intell. Transp. Syst. 2024, 25, 17452–17467.

15. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

16. Brownlee, J. Stacking Ensemble Machine Learning With Python. 2021. Available online: https://machinelearningmastery.com/stacking-ensemble-machine-learning-with-python/ (accessed on 11 July 2025).

17. Van Der Heijden, R.W.; Lukaseder, T.; Kargl, F. Veremi: A dataset for comparable evaluation of misbehavior detection in vanets. In Proceedings of the Security and Privacy in Communication Networks: 14th International Conference, SecureComm 2018, Singapore, 8–10 August 2018; Proceedings, Part I. Springer: Berlin/Heidelberg, Germany, 2018; pp. 318–337.

18. Müter, M.; Groll, A.; Freiling, F.C. A structured approach to anomaly detection for in-vehicle networks. In Proceedings of the 2010 Sixth International Conference on Information Assurance and Security, Atlanta, GA, USA, 23–25 August 2010; IEEE: Piscataway Township, NJ, USA, 2010; pp. 92–98.

19. Nazat, S.; Arreche, O.; Abdallah, M. On Evaluating Black-Box Explainable AI Methods for Enhancing Anomaly Detection in Autonomous Driving Systems. Sensors 2024, 24, 3515.

20. Rajendar, S.; Kaliappan, V.K. Sensor Data Based Anomaly Detection in Autonomous Vehicles using Modified Convolutional Neural Network. Intell. Autom. Soft Comput. 2022, 32, 859.

21. Zekry, A.; Sayed, A.; Moussa, M.; Elhabiby, M. Anomaly detection using IoT sensor-assisted ConvLSTM models for connected vehicles. In Proceedings of the 2021 IEEE 93rd Vehicular Technology Conference (VTC2021-Spring), Virtual, 25–28 April 2021; IEEE: Piscataway Township, NJ, USA, 2021; pp. 1–6.

22. Alsulami, A.A.; Abu Al-Haija, Q.; Alqahtani, A.; Alsini, R. Symmetrical simulation scheme for anomaly detection in autonomous vehicles based on LSTM model. Symmetry 2022, 14, 1450.

23. Prathiba, S.B.; Raja, G.; Anbalagan, S.; S, A.K.; Gurumoorthy, S.; Dev, K. A Hybrid Deep Sensor Anomaly Detection for Autonomous Vehicles in 6G-V2X Environment. IEEE Trans. Netw. Sci. Eng. 2023, 10, 1246–1255.

24. van Wyk, F.; Wang, Y.; Khojandi, A.; Masoud, N. Real-Time Sensor Anomaly Detection and Identification in Automated Vehicles. IEEE Trans. Intell. Transp. Syst. 2020, 21, 1264–1276.

25. Javed, A.R.; Usman, M.; Rehman, S.U.; Khan, M.U.; Haghighi, M.S. Anomaly Detection in Automated Vehicles Using Multistage Attention-Based Convolutional Neural Network. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4291–4300.

26. Teng, S.; Li, X.; Li, Y.; Li, L.; Xuanyuan, Z.; Ai, Y.; Chen, L. Scenario Engineering for Autonomous Transportation: A New Stage in Open-Pit Mines. IEEE Trans. Intell. Veh. 2024, 9, 4394–4404.

27. Li, X.; Miao, Q.; Li, L.; Ni, Q.; Fan, L.; Wang, Y.; Tian, Y.; Wang, F.Y. Sora for Scenarios Engineering of Intelligent Vehicles: V&V, C&C, and Beyonds. IEEE Trans. Intell. Veh. 2024, 9, 3117–3122.

28. Xiang, J.; Nan, Z.; Song, Z.; Huang, J.; Li, L. Map-Free Trajectory Prediction in Traffic with Multi-Level Spatial-Temporal Modeling. IEEE Trans. Intell. Veh. 2024, 9, 3258–3270.

29. Yin, J.; Li, L.; Mourelatos, Z.P.; Liu, Y.; Gorsich, D.; Singh, A.; Tau, S.; Hu, Z. Reliable Global Path Planning of Off-Road Autonomous Ground Vehicles Under Uncertain Terrain Conditions. IEEE Trans. Intell. Veh. 2024, 9, 1161–1174.

30. Ranft, B.; Stiller, C. The Role of Machine Vision for Intelligent Vehicles. IEEE Trans. Intell. Veh. 2016, 1, 8–19.

31. Xing, Y.; Lv, C.; Wang, H.; Cao, D.; Velenis, E.; Wang, F.Y. Driver Activity Recognition for Intelligent Vehicles: A Deep Learning Approach. IEEE Trans. Veh. Technol. 2019, 68, 5379–5390.

32. Liang, L.; Ma, H.; Zhao, L.; Xie, X.; Hua, C.; Zhang, M.; Zhang, Y. Vehicle detection algorithms for autonomous driving: A review. Sensors 2024, 24, 3088.

33. Thaker, J.; Jadav, N.K.; Tanwar, S.; Bhattacharya, P.; Shahinzadeh, H. Ensemble Learning-based Intrusion Detection System for Autonomous Vehicle. In Proceedings of the 2022 Sixth International Conference on Smart Cities, Internet of Things and Applications (SCIoT), Mashhad, Iran, 14–15 September 2022; pp. 1–6.

34. Du, H.P.; Nguyen, A.D.; Nguyen, D.T.; Nguyen, H.N.; Nguyen, D.H.P. A Novel Deep Ensemble Learning to Enhance User Authentication in Autonomous Vehicles. In IEEE Transactions on Automation Science and Engineering; IEEE: Piscataway Township, NJ, USA, 2023; pp. 1–12.

35. Lombacher, J.; Hahn, M.; Dickmann, J.; Wöhler, C. Object classification in radar using ensemble methods. In Proceedings of the 2017 IEEE MTT-S International Conference on Microwaves for Intelligent Mobility (ICMIM), Nagoya, Japan, 19–21 March 2017; pp. 87–90.

36. Li, W.; Qiu, F.; Li, L.; Zhang, Y.; Wang, K. Simulation of Vehicle Interaction Behavior in Merging Scenarios: A Deep Maximum Entropy-Inverse Reinforcement Learning Method Combined With Game Theory. IEEE Trans. Intell. Veh. 2024, 9, 1079–1093.

37. Kamel, J.; Wolf, M.; van Der Heijden, R.W.; Kaiser, A.; Urien, P.; Kargl, F. VeReMi Extension: A Dataset for Comparable Evaluation of Misbehavior Detection in VANETs. In Proceedings of the 2020 IEEE International Conference on Communications (ICC), Dublin, Ireland, 7–11 June 2020.

38. Parra, G.D.L.T. Distributed AI-Defense for Cyber Threats on Edge Computing Systems. Ph.D. Thesis, The University of Texas at San Antonio, San Antonio, TX, USA, 2021.

39. Taylor, A. Anomaly-Based Detection of Malicious Activity in In-Vehicle Networks. Ph.D. Thesis, Université d’Ottawa/University of Ottawa, Ottawa, ON, Canada, 2017.

40. Khalil, R.A.; Safelnasr, Z.; Yemane, N.; Kedir, M.; Shafiqurrahman, A.; Saeed, N. Advanced Learning Technologies for Intelligent Transportation Systems: Prospects and Challenges. IEEE Open J. Veh. Technol. 2024, 5, 397–427.

41. DCunha, S.D. Is AI Shifting The Human-in-the-Loop Model In Cybersecurity? 2017. Available online: https://datatechvibe.com/ai/is-ai-shifting-the-human-in-the-loop-model-in-cybersecurity/ (accessed on 21 October 2021).

42. Mijalkovic, J.; Spognardi, A. Reducing the false negative rate in deep learning based network intrusion detection systems. Algorithms 2022, 15, 258.

43. Pang, G.; Shen, C.; Van Den Hengel, A. Deep anomaly detection with deviation networks. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 353–362.

44. Chowdhury, A.; Karmakar, G.; Kamruzzaman, J.; Jolfaei, A.; Das, R. Attacks on self-driving cars and their countermeasures: A survey. IEEE Access 2020, 8, 207308–207342.

45. Santini, S.; Salvi, A.; Valente, A.S.; Pescapè, A.; Segata, M.; Cigno, R.L. A consensus-based approach for platooning with inter-vehicular communications. In Proceedings of the 2015 IEEE Conference on Computer Communications (INFOCOM), Hong Kong, 26 April–1 May 2015; IEEE: Piscataway Township, NJ, USA, 2015; pp. 1158–1166.

46. Limbasiya, T.; Teng, K.Z.; Chattopadhyay, S.; Zhou, J. A systematic survey of attack detection and prevention in connected and autonomous vehicles. Veh. Commun. 2022, 37, 100515.

47. Mankodiya, H.; Obaidat, M.S.; Gupta, R.; Tanwar, S. XAI-AV: Explainable artificial intelligence for trust management in autonomous vehicles. In Proceedings of the 2021 International Conference on Communications, Computing, Cybersecurity, and Informatics (CCCI), Virtual, 15–17 October 2021; IEEE: Piscataway Township, NJ, USA, 2021; pp. 1–5.

48. Tuv, E.; Borisov, A.; Runger, G.; Torkkola, K. Feature selection with ensembles, artificial variables, and redundancy elimination. J. Mach. Learn. Res. 2009, 10, 1341–1366.

49. Chandola, V.; Banerjee, A.; Kumar, V. Anomaly detection: A survey. ACM Comput. Surv. (CSUR) 2009, 41, 1–58.

50. Singh, D.; Singh, B. Investigating the impact of data normalization on classification performance. Appl. Soft Comput. 2020, 97, 105524.

51. Seni, G.; Elder, J. Ensemble Methods in Data Mining: Improving Accuracy Through Combining Predictions; Morgan & Claypool Publishers: San Rafael, CA, USA, 2010.

52. Dixit, P.; Bhattacharya, P.; Tanwar, S.; Gupta, R. Anomaly detection in autonomous electric vehicles using AI techniques: A comprehensive survey. Expert Syst. 2022, 39, e12754.

53. Song, Y.Y.; Ying, L. Decision tree methods: Applications for classification and prediction. Shanghai Arch. Psychiatry 2015, 27, 130.

54. Li, W.; Yi, P.; Wu, Y.; Pan, L.; Li, J. A new intrusion detection system based on KNN classification algorithm in wireless sensor network. J. Electr. Comput. Eng. 2014, 2014, 240217.

55. Tao, P.; Sun, Z.; Sun, Z. An improved intrusion detection algorithm based on GA and SVM. IEEE Access 2018, 6, 13624–13631.

56. Mebawondu, J.O.; Alowolodu, O.D.; Mebawondu, J.O.; Adetunmbi, A.O. Network intrusion detection system using supervised learning paradigm. Sci. Afr. 2020, 9, e00497.

57. Dorogush, A.V.; Ershov, V.; Gulin, A. CatBoost: Gradient boosting with categorical features support. arXiv 2018, arXiv:1810.11363.

58. Jin, D.; Lu, Y.; Qin, J.; Cheng, Z.; Mao, Z. SwiftIDS: Real-time intrusion detection system based on LightGBM and parallel intrusion detection mechanism. Comput. Secur. 2020, 97, 101984.

59. Yulianto, A.; Sukarno, P.; Suwastika, N.A. Improving adaboost-based intrusion detection system (IDS) performance on CIC IDS 2017 dataset. J. Phys. Conf. Ser. 2019, 1192, 012018.

60. Dhaliwal, S.S.; Nahid, A.A.; Abbas, R. Effective intrusion detection system using XGBoost. Information 2018, 9, 149.

61. H2O.ai. H2O Gradient Boosting Machine. Available online: https://docs.h2o.ai/h2o/latest-stable/h2o-docs/data-science/gbm.html (accessed on 22 April 2024).

62. Waskle, S.; Parashar, L.; Singh, U. Intrusion detection system using PCA with random forest approach. In Proceedings of the 2020 International Conference on Electronics and Sustainable Communication Systems (ICESC), Coimbatore, India, 2–4 July 2020; IEEE: Piscataway Township, NJ, USA, 2020; pp. 803–808.

63. Bühlmann, P. Bagging, boosting and ensemble methods. In Handbook of Computational Statistics: Concepts and Methods; Springer: Berlin/Heidelberg, Germany, 2012; pp. 985–1022.

64. Zounemat-Kermani, M.; Batelaan, O.; Fadaee, M.; Hinkelmann, R. Ensemble machine learning paradigms in hydrology: A review. J. Hydrol. 2021, 598, 126266.

65. Idrissi Khaldi, M.; Erraissi, A.; Hain, M.; Banane, M. Comparative Analysis of Supervised Machine Learning Classification Models. In The International Conference on Artificial Intelligence and Smart Environment; Springer: Berlin/Heidelberg, Germany, 2024; pp. 321–326.

66. Kumari, S.; Upadhaya, A. Investigating role of SVM, decision tree, KNN, ANN in classification of diabetic patient dataset. In International Conference on Artificial Intelligence on Textile and Apparel; Springer: Berlin/Heidelberg, Germany, 2023; pp. 431–442.

67. Bisong, E. The Multilayer Perceptron (MLP). In Building Machine Learning and Deep Learning Models on Google Cloud Platform: A Comprehensive Guide for Beginners; Apress: Berkeley, CA, USA, 2019; pp. 401–405.

68. Mahesh, B.L.; Kaur, S. Exploring Machine Learning Algorithms for Detecting Cyber Attacks and Threats. In Proceedings of the 2024 9th International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 16–18 December 2024; IEEE: Piscataway Township, NJ, USA, 2024; pp. 1–8.

69. Nazat, S.; Li, L.; Abdallah, M. XAI-ADS: An Explainable Artificial Intelligence Framework for Enhancing Anomaly Detection in Autonomous Driving Systems. IEEE Access 2024, 12, 48583–48607.

70. Nwakanma, C.I.; Ahakonye, L.A.C.; Njoku, J.N.; Odirichukwu, J.C.; Okolie, S.A.; Uzondu, C.; Ndubuisi Nweke, C.C.; Kim, D.S. Explainable artificial intelligence (XAI) for intrusion detection and mitigation in intelligent connected vehicles: A review. Appl. Sci. 2023, 13, 1252.

71. Taslimasa, H.; Dadkhah, S.; Neto, E.C.P.; Xiong, P.; Ray, S.; Ghorbani, A.A. Security issues in Internet of Vehicles (IoV): A comprehensive survey. Internet Things 2023, 22, 100809.

72. Boualouache, A.; Engel, T. A survey on machine learning-based misbehavior detection systems for 5g and beyond vehicular networks. IEEE Commun. Surv. Tutorials 2023, 25, 1128–1172.

| Related Works | Datasets Used | AI Models | Methods | Focus |

|---|---|---|---|---|

| Our Work | VeReMi, Sensor datasets | Ensemble Learning (11 ensemble methods) | Ensemble Learning Framework | Robust anomaly detection in AVs and VANETs |

| Rajendar et al. (2022) [20] | Sensor Data | M-CNN | Anomaly Detection | Sudden abnormalities in AVs |

| Zekry et al. (2021) [21] | IoT Sensor Data | CNN, Kalman Filtering | Anomaly Detection | Anomalous behaviors in AVs |

| Alsulami et al. (2022) [22] | AV System Data | LSTM | Anomaly Detection | False Data Injection (FDI) attacks |

| Prathiba et al. (2023) [23] | Attack Data | Cooperative Analytics | Anomaly Detection | Anomalies in AV networks |

| Hybrid Deep Anomaly Detection (HDAD) [23] | Shared Sensor Data | Hybrid Deep Learning | Anomaly Detection | Harmful activities in AVs |

| Time-Series Anomaly Detection [24] | Time-Series Data | N/A | Anomaly Detection | Cyber intrusions, malfunctioning sensors |

| CNN-based LSTM Model [25] | Signal Data | CNN, LSTM | Anomaly Detection | Anomalous or healthy signals |

| Scenario Engineering in AVs [26] | N/A | N/A | Scenario Engineering | Trustworthiness, robustness in AVs |

| Generative AI Models for Scenario Engineering [27] | N/A | Generative AI (Sora) | Scenario Generation | Intelligent vehicle scenarios |

| Efficient Trajectory Prediction [28] | Argoverse and nuScenes Datasets | Attention, LSTM, GCN, Transformers | Trajectory Prediction | Spatial–temporal information |

| Reliability-based Path Planning [29] | Deformable Terrain Data | RRT* | Path Planning | Off-road AVs |

| Advanced Driver Assistance Systems [30] | N/A | CNNs | Various | AV features, object recognition, localization |

| Driver Activity Recognition System [31] | ImageNet Dataset | CNN (AlexNet, GoogLeNet, ResNet50) | Activity Recognition | Driving activities, distracted behaviors |

| Semi-supervised K-NN-based Ensemble Learning [11] | Maneuvering Behavior Data | K-NN Ensemble | Semi-supervised Learning | Classifying maneuvering behaviors |

| Ensemble-based Intrusion Detection System [33] | N/A | Ensemble Learning | Intrusion Detection | Classifying malicious and benign data requests |

| Ensemble-based Anomaly Detection [13] | Vehicle Data | Ensemble Learning | Anomaly Detection | Identifying potential faults |

| PelFace [34] | Face Data | Ensemble Learning | Face-based Authentication | Enhancing face-based authentication |

| Hybrid Ensemble Approach [35] | Radar Data | Random Forest, CNN | Object Classification | Classifying objects using radar data |

| Data-driven Method for Virtual Merging Scenarios [36] | N/A | N/A | Markov Decision Processes, Game Theory | Modeling vehicle behavior |

| | VeReMi Dataset | Sensor Dataset |

|---|---|---|

| Number of Labels | 2 and 6 | 2 |

| Number of Features | 6 | 10 |

| Dataset Size | 993,834 | 10,000 |

| Training Sample | 695,684 | 7000 |

| Testing Sample | 298,150 | 3000 |

| Normal Samples No. | 664,131 | 5000 |

| Anomalous Samples No. | 329,703 | 5000 |
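The counts in the table above can be cross-checked with a few lines of arithmetic. The sketch below only re-uses the numbers from the table; the dictionary layout and the derived field names are hypothetical. It confirms that the training and testing samples add up to the dataset size and reports the implied training fraction and anomaly share.

```python
# Counts copied from the dataset table; the key names are illustrative.
datasets = {
    "VeReMi": {
        "total": 993_834, "train": 695_684, "test": 298_150,
        "normal": 664_131, "anomalous": 329_703,
    },
    "Sensor": {
        "total": 10_000, "train": 7_000, "test": 3_000,
        "normal": 5_000, "anomalous": 5_000,
    },
}

summary = {}
for name, d in datasets.items():
    # Both partitions must account for every sample exactly once.
    assert d["train"] + d["test"] == d["total"]
    assert d["normal"] + d["anomalous"] == d["total"]
    summary[name] = {
        "train_fraction": round(d["train"] / d["total"], 3),
        "anomalous_fraction": round(d["anomalous"] / d["total"], 3),
    }
```

Both datasets follow a 70/30 train/test split. The Sensor dataset is balanced, whereas roughly one third of the VeReMi samples are anomalous, which is worth keeping in mind when comparing accuracy across the two datasets.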

| Models | Acc | Prec | Rec | F1 |

|---|---|---|---|---|

| RF | 0.80 | 0.82 | 0.91 | 0.86 |

| Bagging | 0.80 | 0.82 | 0.90 | 0.86 |

| AdaBoost | 0.73 | 0.74 | 0.91 | 0.82 |

| XGBoost | 0.70 | 0.70 | 0.96 | 0.81 |

| CatBoost | 0.70 | 0.70 | 0.96 | 0.81 |

| LGBM | 0.70 | 0.71 | 0.94 | 0.81 |

| GBM | 0.68 | 0.68 | 1.00 | 0.81 |

| Avg | 0.80 | 0.83 | 0.89 | 0.86 |

| W. Avg | 0.80 | 0.83 | 0.89 | 0.86 |

| Stacking | 0.80 | 0.83 | 0.89 | 0.86 |

| Blending | 0.80 | 0.83 | 0.89 | 0.86 |

| Models | Acc | Prec | Rec | F1 |

|---|---|---|---|---|

| DT | 0.79 | 0.82 | 0.87 | 0.84 |

| MLP | 0.66 | 0.67 | 0.96 | 0.79 |

| KNN | 0.78 | 0.82 | 0.85 | 0.84 |

| SVM | 0.55 | 0.54 | 0.66 | 0.60 |

| Models | Acc | Prec | Rec | F1 |

|---|---|---|---|---|

| RF | 0.90 | 0.90 | 0.98 | 0.94 |

| Bagging | 0.89 | 0.91 | 0.96 | 0.93 |

| AdaBoost | 0.99 | 0.99 | 1.00 | 0.99 |

| XGBoost | 0.98 | 0.97 | 1.00 | 0.99 |

| CatBoost | 1.00 | 1.00 | 1.00 | 1.00 |

| LGBM | 0.99 | 0.99 | 1.00 | 0.99 |

| GBM | 0.86 | 0.88 | 0.96 | 0.92 |

| Avg | 0.86 | 0.86 | 0.99 | 0.92 |

| W. Avg | 0.89 | 0.90 | 0.97 | 0.93 |

| Stacking | 0.98 | 0.98 | 0.99 | 0.99 |

| Blending | 0.99 | 0.99 | 0.99 | 0.99 |

| Models | Acc | Prec | Rec | F1 |

|---|---|---|---|---|

| DT | 0.85 | 0.89 | 0.92 | 0.90 |

| MLP | 0.89 | 0.93 | 0.93 | 0.93 |

| KNN | 0.84 | 0.85 | 0.97 | 0.91 |

| SVM | 0.88 | 0.90 | 0.95 | 0.92 |

| Models | Acc | Prec | Rec | F1 |

|---|---|---|---|---|

| RF | 0.65 | 0.79 | 0.94 | 0.86 |

| Bagging | 0.66 | 0.76 | 0.98 | 0.86 |

| AdaBoost | 0.66 | 0.69 | 0.98 | 0.81 |

| XGBoost | 0.67 | 0.67 | 1.00 | 0.80 |

| CatBoost | 0.67 | 0.68 | 1.00 | 0.81 |

| LGBM | 0.67 | 0.68 | 0.99 | 0.80 |

| GBM | 0.66 | 0.70 | 0.97 | 0.82 |

| Avg | 0.65 | 0.76 | 0.91 | 0.83 |

| W. Avg | 0.65 | 0.76 | 0.91 | 0.83 |

| Stacking | 0.72 | 0.79 | 0.96 | 0.87 |

| Blending | 0.72 | 0.78 | 0.96 | 0.87 |

| Models | Acc | Prec | Rec | F1 |

|---|---|---|---|---|

| DT | 0.66 | 0.72 | 0.97 | 0.83 |

| MLP | 0.67 | 0.67 | 1.00 | 0.80 |

| KNN | 0.65 | 0.77 | 0.95 | 0.85 |

| SVM | 0.67 | 0.67 | 1.00 | 0.80 |

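| Model | RF | RF | RF | RF | Bagging | Bagging | Bagging | Bagging | AdaBoost | AdaBoost | AdaBoost | AdaBoost | XGBoost | XGBoost | XGBoost | XGBoost |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Hyperparameter | max_depth | max_depth | n_estimators | n_estimators | max_depth | max_depth | n_estimators | n_estimators | max_depth | max_depth | n_estimators | n_estimators | max_depth | max_depth | n_estimators | n_estimators |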

| Values | 4 | 50 | 100 | 200 | 4 | 50 | 100 | 200 | 4 | 50 | 100 | 200 | 6 | 50 | 100 | 200 |

| Acc | 0.67 | 0.80 | 0.80 | 0.80 | 0.67 | 0.80 | 0.80 | 0.80 | 0.70 | 0.80 | 0.80 | 0.80 | 0.69 | 0.75 | 0.75 | 0.75 |

| Prec | 0.67 | 0.83 | 0.83 | 0.83 | 0.67 | 0.82 | 0.82 | 0.82 | 0.71 | 0.84 | 0.83 | 0.84 | 0.70 | 0.79 | 0.79 | 0.79 |

| Rec | 1.00 | 0.88 | 0.88 | 0.88 | 1.00 | 0.90 | 0.90 | 0.90 | 0.93 | 0.86 | 0.87 | 0.86 | 0.97 | 0.86 | 0.86 | 0.86 |

| F-1 | 0.80 | 0.86 | 0.86 | 0.86 | 0.80 | 0.86 | 0.86 | 0.86 | 0.80 | 0.85 | 0.85 | 0.85 | 0.81 | 0.82 | 0.82 | 0.82 |

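| Model | CatBoost | CatBoost | CatBoost | CatBoost | LGBM | LGBM | LGBM | LGBM | GBM | GBM | GBM | GBM | | | | |

| Hyperparameter | depth | depth | lr | lr | num_boost_round | num_boost_round | lr | lr | max_depth | max_depth | n_estimators | n_estimators | | | | |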

| Values | 6 | 10 | 0.5 | 0.8 | 100 | 500 | 0.01 | 0.5 | 10 | 50 | 10 | 50 | ||||

| Acc | 0.70 | 0.74 | 0.71 | 0.74 | 0.70 | 0.72 | 0.70 | 0.72 | 0.68 | 0.80 | 0.68 | 0.80 | ||||

| Prec | 0.70 | 0.75 | 0.72 | 0.75 | 0.70 | 0.73 | 0.70 | 0.73 | 0.68 | 0.83 | 0.68 | 0.83 | ||||

| Rec | 0.96 | 0.91 | 0.94 | 0.91 | 0.96 | 0.93 | 0.96 | 0.93 | 1.00 | 0.88 | 1.00 | 0.88 | ||||

| F-1 | 0.81 | 0.82 | 0.81 | 0.82 | 0.81 | 0.82 | 0.81 | 0.82 | 0.81 | 0.85 | 0.81 | 0.85 | ||||

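| Model | RF | RF | RF | RF | Bagging | Bagging | Bagging | Bagging | AdaBoost | AdaBoost | AdaBoost | AdaBoost | XGBoost | XGBoost | XGBoost | XGBoost |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Hyperparameter | max_depth | max_depth | n_estimators | n_estimators | max_depth | max_depth | n_estimators | n_estimators | max_depth | max_depth | n_estimators | n_estimators | max_depth | max_depth | n_estimators | n_estimators |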

| Values | 4 | 50 | 100 | 200 | 4 | 50 | 100 | 200 | 4 | 50 | 100 | 200 | 6 | 50 | 100 | 200 |

| Acc | 0.81 | 0.82 | 0.91 | 0.91 | 0.83 | 0.82 | 0.90 | 0.90 | 0.98 | 0.98 | 0.84 | 0.85 | 0.99 | 0.98 | 0.97 | 0.98 |

| Prec | 0.80 | 0.81 | 0.91 | 0.91 | 0.83 | 0.84 | 0.91 | 0.91 | 0.98 | 0.98 | 0.90 | 0.90 | 0.99 | 0.98 | 0.97 | 0.98 |

| Rec | 1.00 | 1.00 | 0.98 | 0.98 | 0.98 | 0.96 | 0.97 | 0.97 | 1.00 | 0.99 | 0.89 | 0.91 | 1.00 | 1.00 | 0.99 | 1.00 |

| F-1 | 0.89 | 0.90 | 0.94 | 0.94 | 0.90 | 0.89 | 0.94 | 0.94 | 0.99 | 0.99 | 0.90 | 0.91 | 0.99 | 0.99 | 0.98 | 0.99 |

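| Model | CatBoost | CatBoost | CatBoost | CatBoost | LGBM | LGBM | LGBM | LGBM | GBM | GBM | GBM | GBM | | | | |

| Hyperparameter | lr | lr | depth | depth | lr | lr | num_boost_round | num_boost_round | lr | lr | max_depth | max_depth | | | | |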

| Values | 0.03 | 0.5 | 6 | 10 | 0.5 | 0.8 | 100 | 500 | 0.01 | 0.5 | 10 | 50 | ||||

| Acc | 0.97 | 1.00 | 1.00 | 0.96 | 0.99 | 0.99 | 0.99 | 0.99 | 0.86 | 0.92 | 0.92 | 0.85 | ||||

| Prec | 0.96 | 1.00 | 1.00 | 0.96 | 0.99 | 0.99 | 0.99 | 0.99 | 0.88 | 0.92 | 0.92 | 0.90 | ||||

| Rec | 1.00 | 1.00 | 1.00 | 0.99 | 1.00 | 1.00 | 1.00 | 1.00 | 0.96 | 0.98 | 0.98 | 0.92 | ||||

| F-1 | 0.98 | 1.00 | 1.00 | 0.97 | 1.00 | 1.00 | 1.00 | 1.00 | 0.92 | 0.95 | 0.95 | 0.91 | ||||

| Higher Metrics (ACC, PRE, REC, F1) | Sensor | VeReMi | Overall |

|---|---|---|---|

| Best Setup | Ensemble | Ensemble | Ensemble |

| Best Models | CatBoost, LGBM, Stacking, Blending, AdaBoost | RF, Bagging, Avg, W. Avg, Stacking, Blending, LGBM, DT | Blending, Stacking, CatBoost, DT, LGBM, RF, AdaBoost |

| Lower FPR (%) | Sensor | VeReMi | Overall |

| Best Setup | Ensemble | Ensemble/Single | Ensemble |

| Best Models | RF, AdaBoost, Stacking, CatBoost, Bagging, LGBM, MLP | RF, AdaBoost, Stacking, DT, KNN | RF, AdaBoost, Stacking |

| Runtime | Sensor | VeReMi | Overall |

| Fastest Models (less than 1 min in all level variants) | RF, Bagging, AdaBoost, XGBoost, CatBoost, LGBM, GBM, DT, MLP, KNN, SVM | LGBM, XGBoost, AdaBoost, DT, KNN | AdaBoost, XGBoost, LGBM, DT, KNN |

| Average Models (between 1 and 10 min in all level variants) | Avg, W. Avg, Stacking, Blending | RF, CatBoost, Bagging, GBM, SVM | Avg, W. Avg, GBM, Stacking, Blending, SVM |

| Slowest Models (more than 10 min in all level variants) | - | Stacking, Blending, Avg, W. Avg, MLP | Stacking, Blending, Avg, W. Avg, MLP |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Nazat, S.; Alayed, W.; Li, L.; Abdallah, M. Ensemble Learning Framework for Anomaly Detection in Autonomous Driving Systems. Sensors 2025, 25, 5105. https://doi.org/10.3390/s25165105