A Real-Time Mature Hawthorn Detection Network Based on Lightweight Hybrid Convolutions for Harvesting Robots

Abstract

1. Introduction

2. Materials and Methods

2.1. Construction of Hawthorn Image Dataset in Complex Environments

2.1.1. Image Dataset Collection

2.1.2. Image Dataset Augmentation and Processing

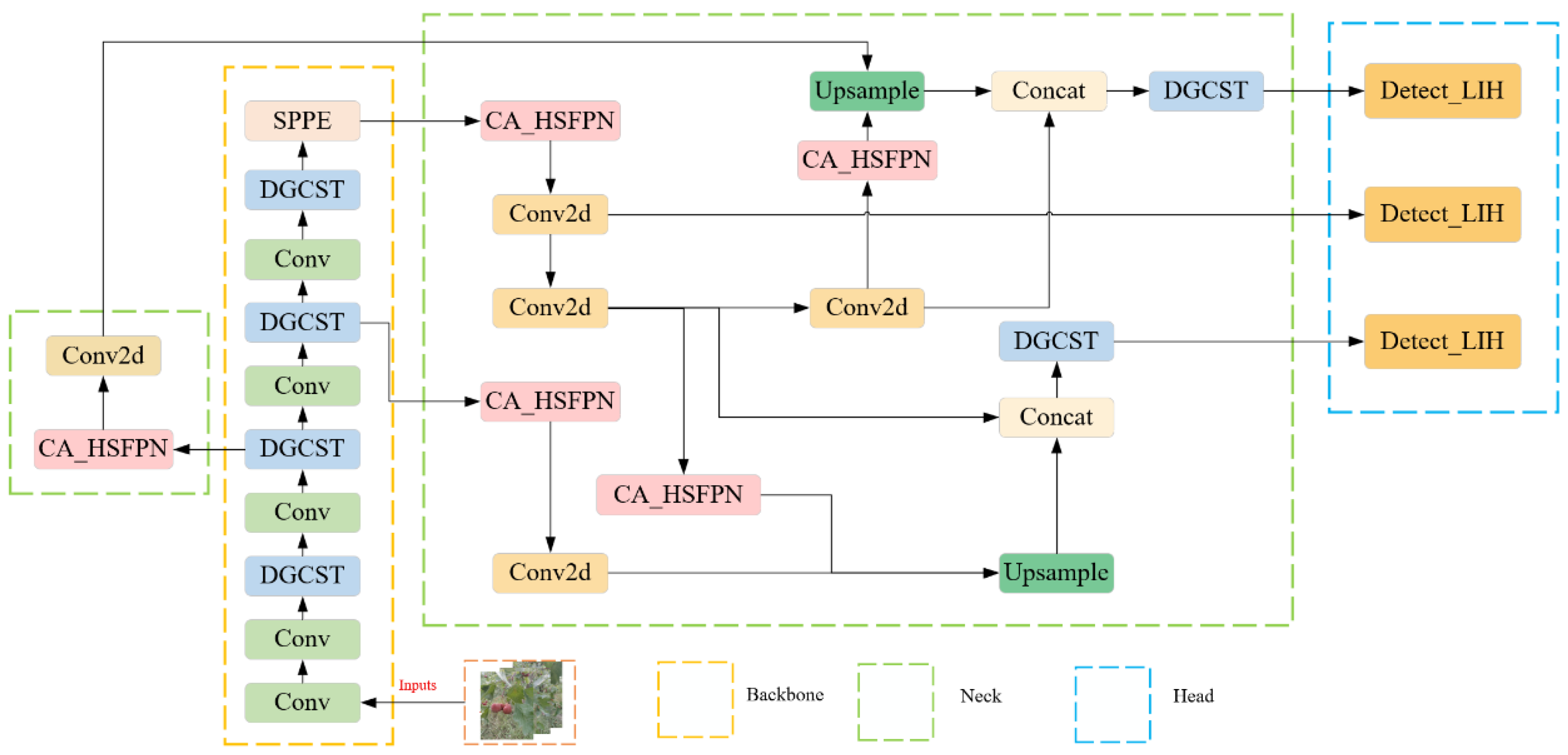

2.2. The YOLO-DCL Hawthorn Detection Model Based on Lightweight Convolution

- (1)

- Replacing the original C2f module in YOLOv8n’s backbone network with a dynamic group convolution shuffle transformer (DGCST) module to improve feature extraction, especially in complex backgrounds.

- (2)

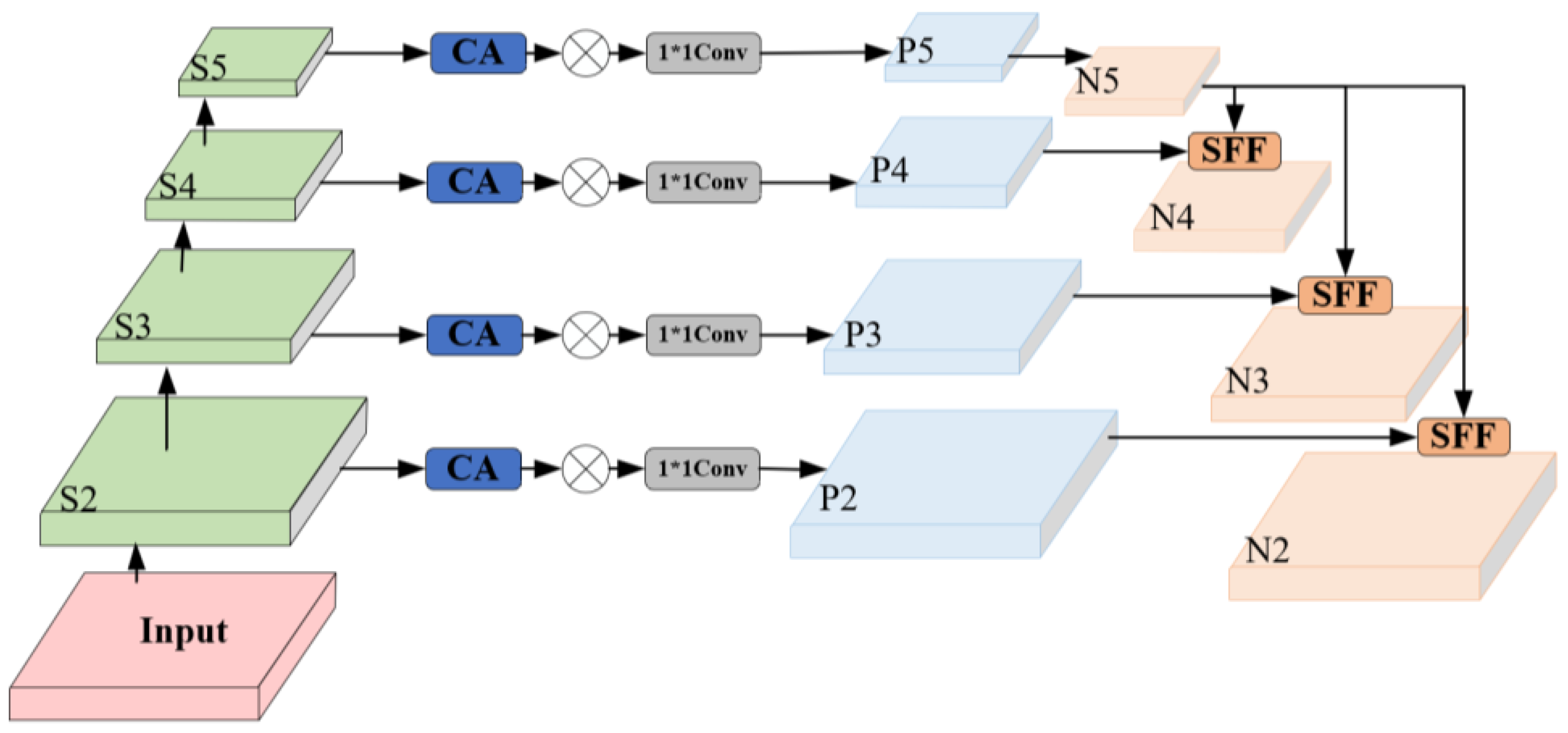

- In the neck network, incorporating the coordinate attention (CA) mechanism into the feature pyramid network (FPN) to construct a hierarchical scale-aware pyramid feature network (HSPFN), which improves the C2f structure.

- (3)

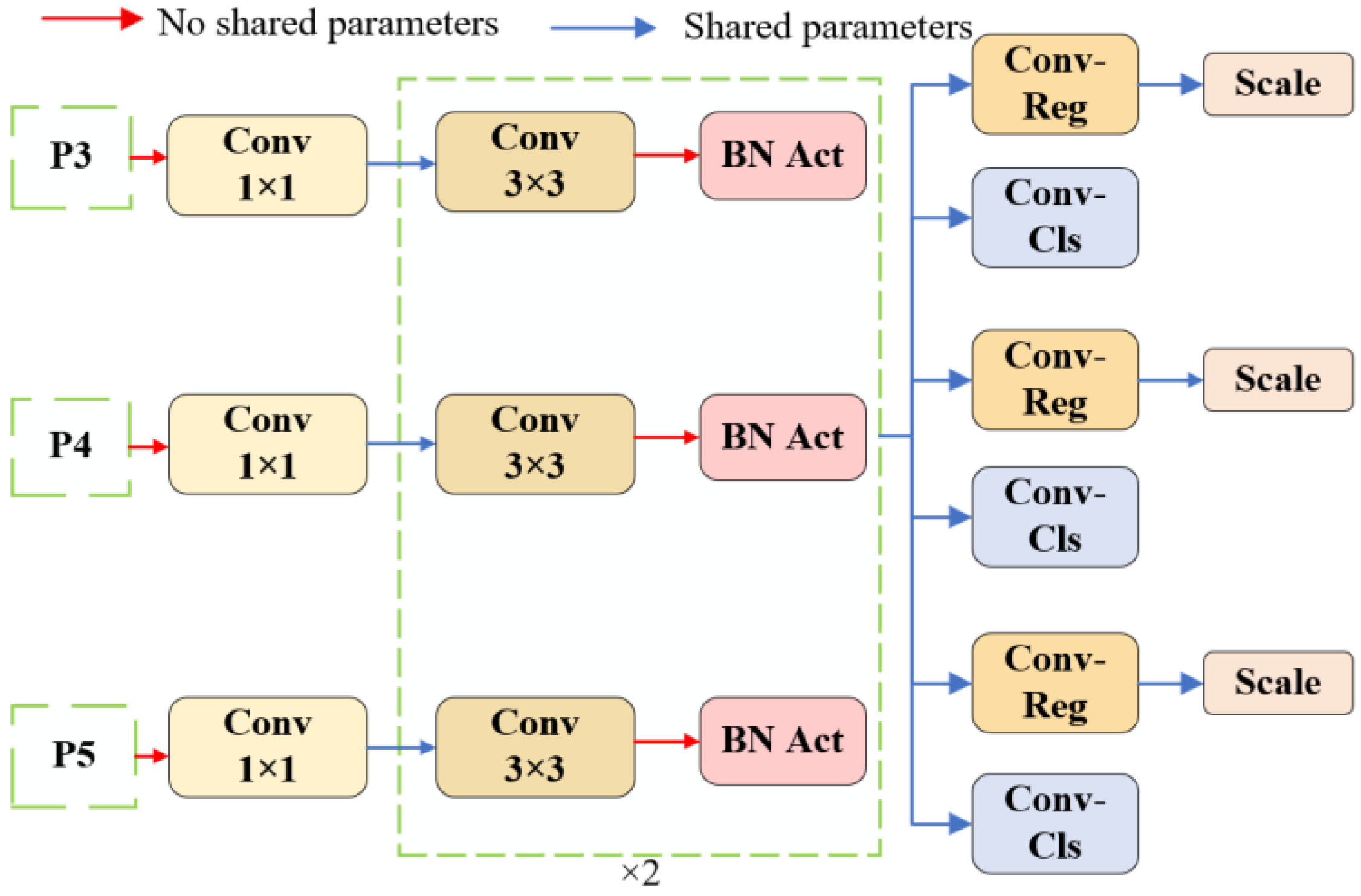

- Designing a new lightweight detection head, Detect_LIH, based on shared convolution and batch normalization to significantly reduce model parameters and computational load while maintaining detection performance.

- (4)

- Replacing the default CIoU loss function with the PIoUv2 loss function to improve detection accuracy and accelerate model convergence, optimizing the bounding box regression process more effectively.

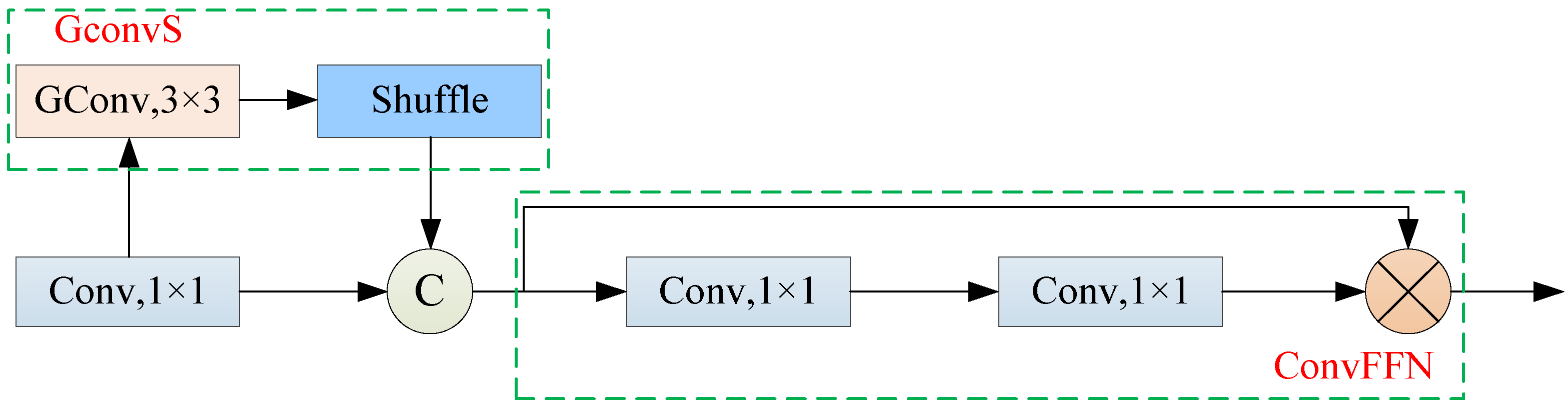

2.2.1. Dynamic Group Convolution Shuffle Transformer Module
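Item (1) in Section 2.2 names the ingredients of DGCST (dynamic group convolution and a shuffle operation) without giving their internal wiring. As a minimal sketch, assuming the module follows a grouped convolution with a ShuffleNet-style channel shuffle (an assumption: the Shuffle Transformer itself shuffles spatial windows), the code below shows the shuffle permutation and why grouping divides convolution weights by the group count. The input-dependent group selection of dynamic group convolution is not modeled, and the function names are hypothetical.

```python
from typing import List


def channel_shuffle(channels: List[int], groups: int) -> List[int]:
    """ShuffleNet-style channel shuffle over a list of channel indices.

    Channels are viewed as a (groups x channels_per_group) grid, transposed and
    flattened, so each output group receives one channel from every input group.
    """
    n = len(channels)
    if n % groups != 0:
        raise ValueError("number of channels must be divisible by groups")
    per_group = n // groups
    # Row g of the grid holds channels [g * per_group, (g + 1) * per_group).
    grid = [channels[g * per_group:(g + 1) * per_group] for g in range(groups)]
    # Reading the grid column by column is the transpose; the list is the flattened result.
    return [grid[g][i] for i in range(per_group) for g in range(groups)]


def conv_params(c_in: int, c_out: int, k: int, groups: int = 1) -> int:
    """Weight count of a k x k convolution with `groups` groups (bias omitted)."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    # Each output channel only sees c_in / groups input channels.
    return (c_in // groups) * c_out * k * k


if __name__ == "__main__":
    print(channel_shuffle(list(range(8)), groups=2))
    dense = conv_params(64, 64, 3)
    grouped = conv_params(64, 64, 3, groups=4)
    print(dense, grouped, dense / grouped)
```

With 8 channels in 2 groups the shuffle yields `[0, 4, 1, 5, 2, 6, 3, 7]`, interleaving the two groups so that the next grouped convolution can mix information across them; a 3 × 3, 64-to-64 convolution drops from 36,864 to 9,216 weights with 4 groups.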

2.2.2. Hierarchical Scale Feature Pyramid Network (HSFPN)
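Item (2) in Section 2.2 builds the HSFPN by adding coordinate attention (CA) to the FPN. The sketch below shows only the CA computation on a single C × H × W feature map in plain Python: directional average pooling along width and height, a shared 1 × 1 reduction with a non-linearity, then one sigmoid gate per row and per column. It is a simplified illustration under stated assumptions: batch normalization and biases are omitted, h-swish is assumed as the non-linearity, and the weight matrices are hypothetical inputs. Where CA sits inside the HSFPN is not modeled.

```python
import math
from typing import List

Tensor3D = List[List[List[float]]]  # indexed as x[c][h][w]
Matrix = List[List[float]]          # indexed as m[out][in]


def _sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def _h_swish(v: float) -> float:
    # h-swish(v) = v * ReLU6(v + 3) / 6
    return v * min(max(v + 3.0, 0.0), 6.0) / 6.0


def _pointwise(weights: Matrix, column: List[float]) -> List[float]:
    # A 1x1 convolution at one spatial position is a matrix-vector product over channels.
    return [sum(w * v for w, v in zip(row, column)) for row in weights]


def coordinate_attention(x: Tensor3D, w_reduce: Matrix, w_h: Matrix, w_w: Matrix) -> Tensor3D:
    """Simplified coordinate attention (no batch dimension, no BN, no biases).

    w_reduce: (C/r x C) shared 1x1 conv; w_h and w_w: (C x C/r) per-direction 1x1 convs.
    """
    C, H, W = len(x), len(x[0]), len(x[0][0])
    # 1) Directional average pooling: over width -> C x H, over height -> C x W.
    z_h = [[sum(x[c][h]) / W for h in range(H)] for c in range(C)]
    z_w = [[sum(x[c][h][w] for h in range(H)) / H for w in range(W)] for c in range(C)]
    # 2) Concatenate the H and W positions, then shared 1x1 conv + non-linearity.
    positions = [[z_h[c][h] for c in range(C)] for h in range(H)]
    positions += [[z_w[c][w] for c in range(C)] for w in range(W)]
    f = [[_h_swish(v) for v in _pointwise(w_reduce, col)] for col in positions]
    # 3) Split back into the two directions and compute sigmoid gates.
    g_h = [[_sigmoid(v) for v in _pointwise(w_h, col)] for col in f[:H]]  # H x C
    g_w = [[_sigmoid(v) for v in _pointwise(w_w, col)] for col in f[H:]]  # W x C
    # 4) Scale every element by the gate of its row and the gate of its column.
    return [[[x[c][h][w] * g_h[h][c] * g_w[w][c] for w in range(W)]
             for h in range(H)] for c in range(C)]
```

Because each gate depends on one coordinate only, the output keeps position information along both axes, which is the property CA adds over plain channel attention. With all-zero weights every gate equals 0.5, so each element is simply scaled by 0.25.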

2.2.3. Detect_LIH Lightweight Detection Head
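Item (3) in Section 2.2 attributes Detect_LIH's savings to shared convolution and batch normalization, but the exact layer layout is not given here. The following hypothetical parameter count illustrates the general idea: a head whose pyramid levels each own their 3 × 3 conv stack, versus one where each level is first projected to a common width and then reuses a single shared conv stack. The level widths (64/128/256), hidden width, layer count and per-level BN are all assumptions, and only the conv stem is counted, not the final prediction layers.

```python
from typing import List


def conv_bn_params(c_in: int, c_out: int, k: int) -> int:
    """Learnable parameters of a bias-free k x k conv + BatchNorm (gamma and beta only)."""
    return c_in * c_out * k * k + 2 * c_out


def head_stem_params(channels: List[int], hidden: int, num_convs: int, shared: bool) -> int:
    """Parameter count of a multi-scale detection-head stem (hypothetical layout).

    channels: input width of each pyramid level.
    shared=False: each level owns `num_convs` 3x3 conv+BN layers.
    shared=True: each level gets a 1x1 conv+BN projection to `hidden` channels,
    then all levels reuse one stack of 3x3 conv weights; BN stays per level
    (assumption), so only the BN terms grow with the number of levels.
    """
    levels = len(channels)
    if not shared:
        total = 0
        for c in channels:
            total += conv_bn_params(c, hidden, 3)
            total += (num_convs - 1) * conv_bn_params(hidden, hidden, 3)
        return total
    total = sum(conv_bn_params(c, hidden, 1) for c in channels)  # per-level projections
    total += num_convs * hidden * hidden * 3 * 3                  # shared 3x3 conv weights
    total += levels * num_convs * 2 * hidden                      # per-level BN gamma/beta
    return total


if __name__ == "__main__":
    widths = [64, 128, 256]
    separate = head_stem_params(widths, hidden=64, num_convs=2, shared=False)
    shared = head_stem_params(widths, hidden=64, num_convs=2, shared=True)
    print(separate, shared, round(separate / shared, 2))
```

Under these assumptions the stem shrinks from 369,408 to 103,552 parameters, roughly 3.6 times fewer. Keeping BN separate per level is a common way to let shared weights cope with the different feature statistics at each scale while adding only a few hundred parameters.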

2.2.4. Loss Function Optimization and Improvement
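Item (4) in Section 2.2 replaces CIoU with PIoUv2. The sketch below follows the formulation of the Powerful-IoU (PIoU) loss as we understand it: a penalty factor P measures the edge-to-edge offsets between the predicted and target boxes, normalized by the target's width and height; PIoU adds f(P) = 1 − exp(−P²) to 1 − IoU; PIoU v2 multiplies that loss by a non-monotonic attention term u(λq) = 3λq·exp(−(λq)²) with q = exp(−P). The value λ = 1.3 is only an illustrative choice, not necessarily the setting used for YOLO-DCL, and this scalar version omits the batching and gradient handling a training framework would need.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Plain intersection-over-union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def penalty_factor(pred: Box, gt: Box) -> float:
    """P: mean absolute edge offset, normalized by the target box width and height."""
    w_gt, h_gt = gt[2] - gt[0], gt[3] - gt[1]
    dw = abs(pred[0] - gt[0]) + abs(pred[2] - gt[2])
    dh = abs(pred[1] - gt[1]) + abs(pred[3] - gt[3])
    return (dw / w_gt + dh / h_gt) / 4.0


def piou_loss(pred: Box, gt: Box) -> float:
    """PIoU loss: (1 - IoU) + f(P), with f(P) = 1 - exp(-P^2)."""
    p = penalty_factor(pred, gt)
    return (1.0 - iou(pred, gt)) + (1.0 - math.exp(-p * p))


def piou_v2_loss(pred: Box, gt: Box, lam: float = 1.3) -> float:
    """PIoU v2: PIoU loss scaled by u(lam * q) = 3 * (lam*q) * exp(-(lam*q)^2), q = exp(-P)."""
    q = math.exp(-penalty_factor(pred, gt))
    x = lam * q
    u = 3.0 * x * math.exp(-x * x)
    return u * piou_loss(pred, gt)


if __name__ == "__main__":
    target = (10.0, 10.0, 50.0, 40.0)
    for pred in [(10.0, 10.0, 50.0, 40.0), (12.0, 9.0, 52.0, 42.0), (60.0, 60.0, 80.0, 90.0)]:
        print(pred, round(piou_loss(pred, target), 4), round(piou_v2_loss(pred, target), 4))
```

Since x·exp(−x²) peaks at x = 1/√2, the attention term gives the largest weight to predictions of intermediate quality and down-weights both near-perfect and very poor boxes, which is the intended effect of the non-monotonic focusing mechanism.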

2.3. Performance Evaluation Metrics
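The result tables report precision (P), recall (R) and mAP@0.5. As an illustration of how these are usually computed, the sketch below evaluates one image and one class: detections are matched greedily to ground truth in order of confidence at IoU ≥ 0.5 (Pascal VOC style), and AP is the area under the precision envelope with all-point interpolation. Treating hawthorn as a single class (so mAP@0.5 equals AP@0.5) is an assumption, and training frameworks may use a different interpolation scheme, so values can differ slightly from those in the tables. A real evaluation accumulates matches over the whole test set before computing the curve.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def evaluate(detections: Sequence[Tuple[float, Box]], gts: Sequence[Box],
             iou_thr: float = 0.5) -> Tuple[float, float, float]:
    """Precision, recall and AP@iou_thr for one class on one image.

    detections: (confidence, box) pairs; gts: ground-truth boxes.
    """
    if not gts:
        raise ValueError("need at least one ground-truth box")
    matched = [False] * len(gts)
    tp_flags: List[int] = []
    # Greedy matching, highest confidence first.
    for _, box in sorted(detections, key=lambda d: d[0], reverse=True):
        overlaps = [iou(box, g) for g in gts]
        j = max(range(len(gts)), key=lambda k: overlaps[k])
        if overlaps[j] >= iou_thr and not matched[j]:
            matched[j] = True
            tp_flags.append(1)
        else:
            tp_flags.append(0)  # low overlap, or a duplicate of an already-matched box
    precisions: List[float] = []
    recalls: List[float] = []
    tp = fp = 0
    for flag in tp_flags:
        tp += flag
        fp += 1 - flag
        precisions.append(tp / (tp + fp))
        recalls.append(tp / len(gts))
    # All-point interpolation: area under the monotonically non-increasing precision envelope.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    ap = sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / len(gts)
    return precision, recall, ap
```

For example, two fruits detected correctly but ranked below one high-confidence false positive give P = 2/3, R = 1 and AP = 2/3, showing how confidence ordering affects AP even when every object is found.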

2.4. Environment Configuration and Training Strategy

3. Results and Analysis

3.1. Performance Comparison of Different Feature Extraction Networks

3.2. Ablation Study

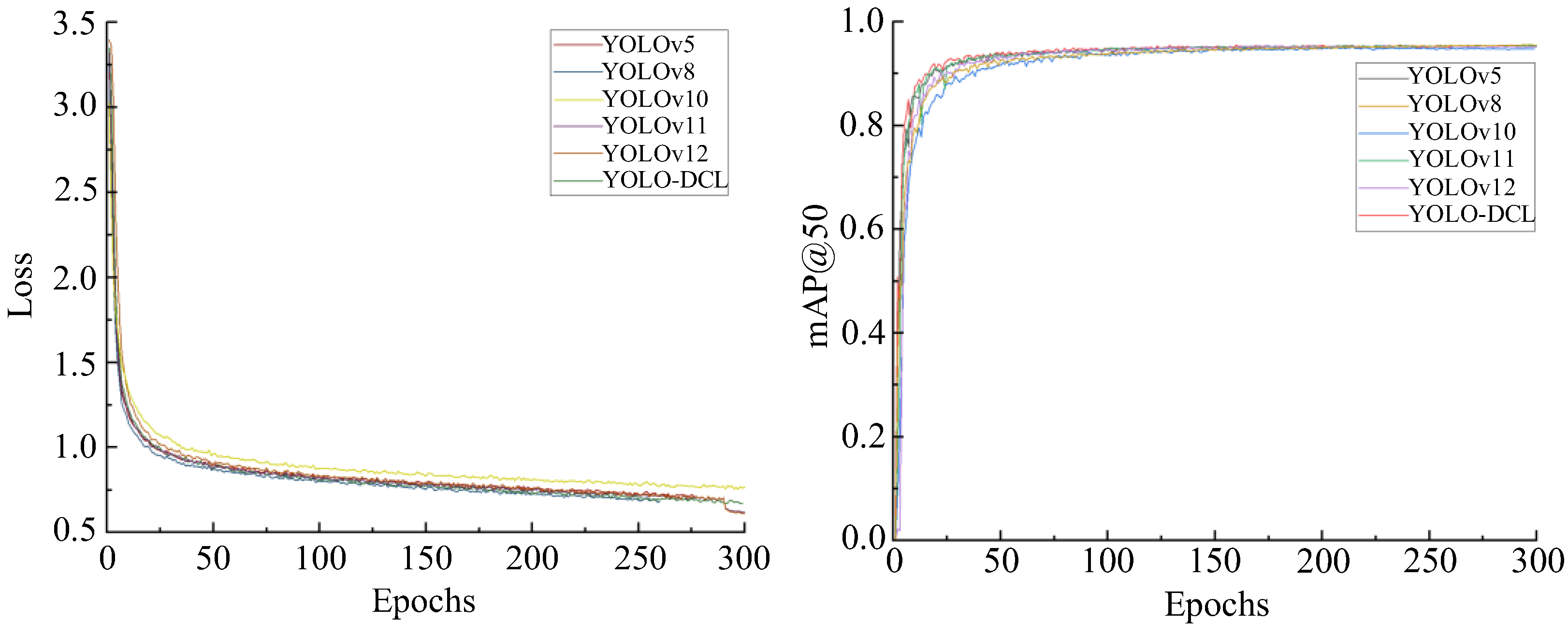

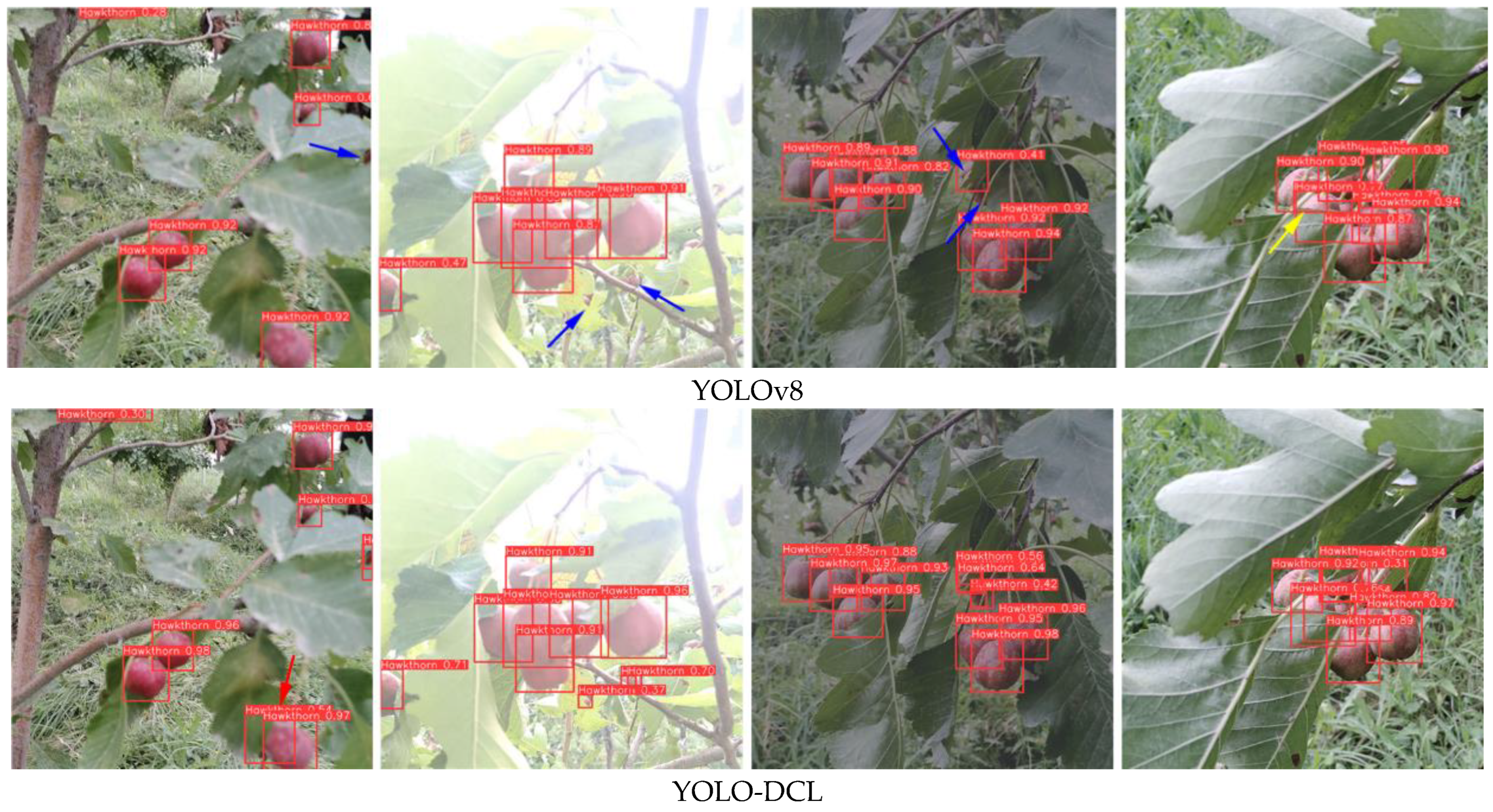

3.3. Performance Comparison with Mainstream Models

3.4. Performance Comparison in Different Orchard Scenarios

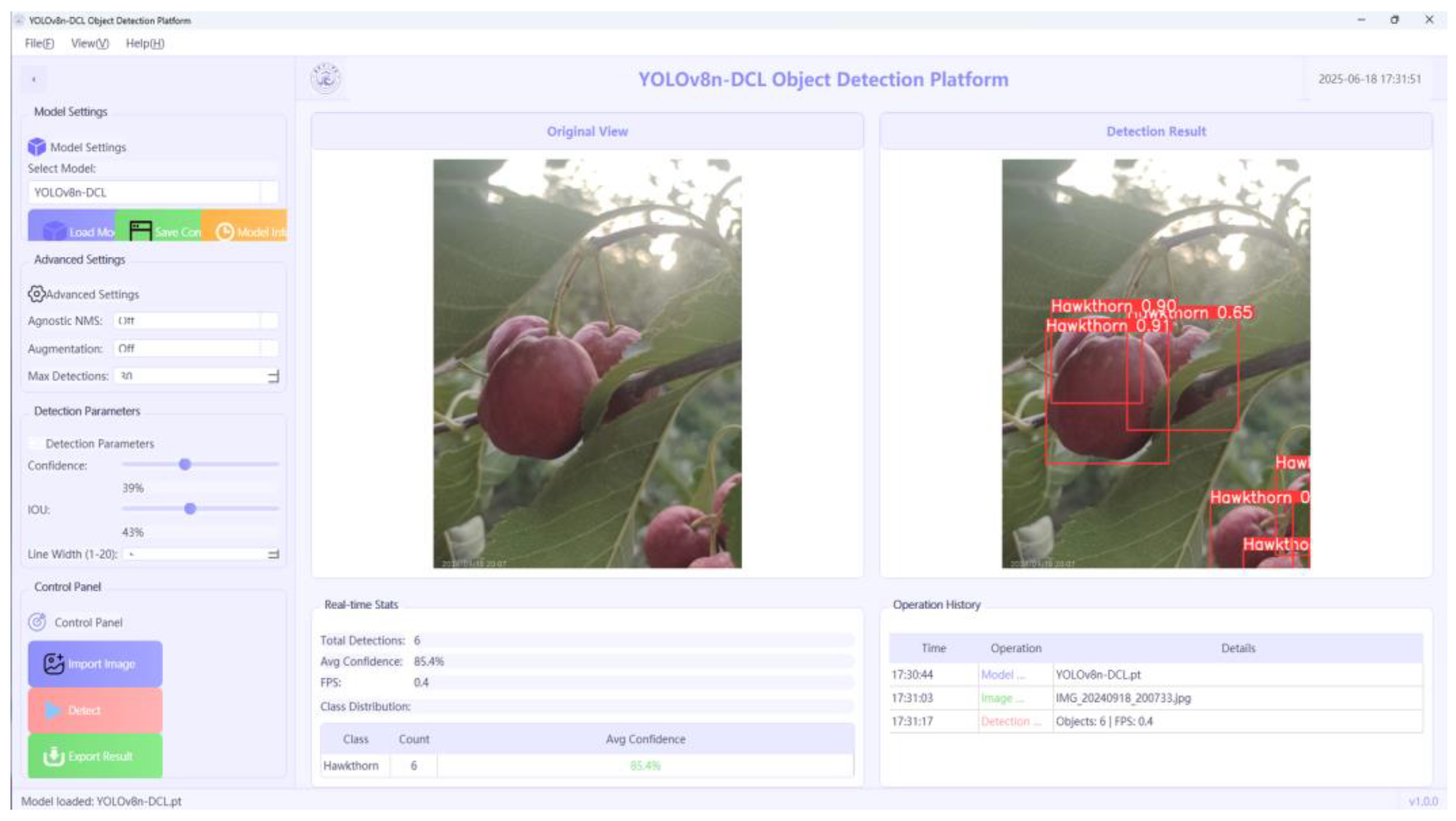

3.5. Edge Device Deployment and Validation

4. Conclusions

- (1) To enhance detection efficiency and real-time performance, YOLO-DCL incorporates several key architectural modifications. The C2f module in the baseline model’s backbone is replaced by the DGCST module, the neck network is redesigned as an HSFPN, and the Detect_LIH detection head is introduced. Furthermore, the loss function is changed from CIoU to PIoUv2. Together, these enhancements raise mAP@0.5 by 0.3 percentage points over the baseline (from 95.3% to 95.6%) while reducing parameters by 60.0%, computational cost by 41.5%, and model size by 58.7%.

- (2) To further validate YOLO-DCL’s efficacy in detecting small hawthorn targets, it was compared against mainstream models: Fast R-CNN, SSD, YOLOv5, YOLOv6, YOLOv7, YOLOv8, YOLOv10, YOLOv11, and YOLOv12. YOLO-DCL achieves the best overall performance, combining the lowest model complexity among the compared models (1.2 M parameters, 4.8 GFLOPs, 2.46 MB) with the highest mAP@0.5 (95.6%). This demonstrates its potential as an efficient solution for hawthorn detection in orchard environments on low-computation platforms.

- (3) Experimental validation on a Jetson Xavier platform demonstrated the effectiveness of a real-time hawthorn detection system developed with the PySide6 framework. Compared with the baseline, the proposed YOLO-DCL model achieved a 32.4% increase in frames per second (FPS) and a 41.1% reduction in inference latency. These gains indicate that the model can meet the real-time operational requirements of robotic fruit harvesting systems.
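The efficiency figures in conclusion (1) can be checked against the baseline (YOLOv8n) and final (all four modules) rows of the ablation table. The short script below is only an arithmetic check: the dictionary keys are our own labels, and the accuracy gain is computed as an absolute difference in percentage points rather than a relative change.

```python
def relative_reduction(baseline: float, improved: float) -> float:
    """Percentage reduction of `improved` relative to `baseline`."""
    return 100.0 * (baseline - improved) / baseline


# Values copied from the ablation table: YOLOv8n row vs. the row with all four modules.
baseline = {"params_M": 3.0, "flops_G": 8.2, "weights_MB": 5.95, "map50": 95.3}
yolo_dcl = {"params_M": 1.2, "flops_G": 4.8, "weights_MB": 2.46, "map50": 95.6}

if __name__ == "__main__":
    for key in ("params_M", "flops_G", "weights_MB"):
        print(key, round(relative_reduction(baseline[key], yolo_dcl[key]), 1))
    # mAP@0.5 is already a percentage, so its gain is reported in percentage points.
    print("map50_gain_pp", round(yolo_dcl["map50"] - baseline["map50"], 1))
```

The script reproduces the 60.0%, 41.5% and 58.7% reductions and the 0.3-point mAP@0.5 gain.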

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Model | mAP@0.5/% | Params/M | FLOPs/G | Weights/MB |
|---|---|---|---|---|
| YOLOv8-C2f | 95.3 | 3.0 | 8.2 | 5.95 |
| YOLOv8-ConvNextV2 | 95.5 | 5.7 | 14.1 | 11.0 |
| YOLOv8-EfficientViT | 95.3 | 4.0 | 9.5 | 8.32 |
| YOLOv8-FasterNet | 95.3 | 4.2 | 10.7 | 8.2 |
| YOLOv8-MobileNetV4 | 95.0 | 5.7 | 22.6 | 11.1 |
| YOLOv8-LSKNet | 94.4 | 5.9 | 19.8 | 11.5 |
| YOLOv8-Ghost | 95.0 | 2.3 | 6.3 | 4.65 |
| YOLOv8-DGCST | 95.3 | 2.2 | 6.3 | 4.45 |

| Model | DGCST | HSFPN | Detect_LIH | PIoUv2 | Params/M | FLOPs/G | P/% | R/% | mAP@0.5/% | Weights/MB |
|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv8n | × | × | × | × | 3.0 | 8.2 | 91.8 | 89.9 | 95.3 | 5.95 |
| | √ | × | × | × | 2.2 | 6.3 | 93.5 | 87.3 | 95.3 | 4.45 |
| | √ | √ | × | × | 1.5 | 5.9 | 91.0 | 89.8 | 95.5 | 3.16 |
| | √ | √ | √ | × | 1.2 | 4.8 | 93.1 | 88.3 | 95.5 | 2.46 |
| | √ | √ | √ | √ | 1.2 | 4.8 | 91.6 | 90.1 | 95.6 | 2.46 |

| Model | Params/M | FLOPs/G | P/% | R/% | mAP@0.5/% | Weights/MB |
|---|---|---|---|---|---|---|
| Fast R-CNN | 136.7 | 369.7 | 65.4 | 84.5 | 83.5 | 108 |
| SSD | 23.6 | 273.2 | 88.1 | 67.0 | 78.0 | 90.6 |
| YOLOv5 | 2.5 | 7.2 | 92.6 | 88.7 | 95.4 | 5.02 |
| YOLOv6 | 4.2 | 11.9 | 93.3 | 87.9 | 95.0 | 32.7 |
| YOLOv7 | 6.0 | 13.2 | 91.7 | 90.5 | 95.3 | 11.6 |
| YOLOv8 | 3.0 | 8.2 | 91.8 | 89.8 | 95.3 | 5.95 |
| YOLOv10 | 2.7 | 8.4 | 91.8 | 88.2 | 94.8 | 5.48 |
| YOLOv11 | 2.5 | 6.4 | 93.8 | 88.5 | 95.3 | 5.22 |
| YOLOv12 | 2.5 | 6.5 | 92.7 | 87.5 | 95.3 | 5.25 |
| YOLO-DCL | 1.2 | 4.8 | 91.6 | 90.1 | 95.6 | 2.46 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ma, B.; Chen, B.; Li, X.; Wang, L.; Wang, D. A Real-Time Mature Hawthorn Detection Network Based on Lightweight Hybrid Convolutions for Harvesting Robots. Sensors 2025, 25, 5094. https://doi.org/10.3390/s25165094
