Abstract

Vehicle detection is a key technology for intelligent traffic management and autonomous driving. However, current deep learning-based vehicle detection models face several challenges in practical applications: slow detection speeds, high computational cost and large parameter counts, high missed-detection and false-detection rates in target-dense environments, and difficulty of deployment on edge devices with limited computing power and memory. To address these issues, this paper proposes an improved vehicle detection method called SMA-YOLO, based on the YOLOv7 model. Firstly, MobileNetV3 is adopted as the new backbone network to lighten the model. Secondly, the SimAM attention mechanism is incorporated to suppress background interference and enhance small-target detection capability. Additionally, the ACON activation function replaces the original SiLU activation function in the YOLOv7 model to improve detection accuracy. Lastly, SIoU replaces CIoU in the loss function to accelerate model convergence. Experiments on the UA-DETRAC dataset demonstrate that the proposed SMA-YOLO model is substantially lighter than YOLOv7, with a significantly smaller model size, lower computational requirements, and fewer parameters. It greatly improves detection speed while also achieving slightly higher detection accuracy. This provides a feasible solution for deploying a vehicle detection model on embedded devices for real-time detection.

1. Introduction

Intelligent traffic systems [1,2] play a crucial role in urban transportation planning, traffic accident reduction, and vehicle–road coordination. Vehicle detection technology, as the core and foundation of intelligent traffic systems, has gained significant attention for its applications in unmanned driving [3,4], traffic accident detection [5,6], driving violation detection [7,8], and vehicle path planning [9]. However, current vehicle detection technology still has problems with limited detection accuracy and speed, which cannot meet the requirements for real-time and accurate vehicle detection. Additionally, the existing vehicle detection algorithms are complex and require high computational capabilities, making them difficult to deploy on embedded devices.

Recent advancements in vehicle detection have evolved from traditional computer vision techniques to deep learning-based approaches. Early methods relied on handcrafted features such as Haar cascades [10] and the Histogram of Oriented Gradient-Support Vector Machine (HOG-SVM) [11], which struggled with occlusions and lighting variations. The emergence of convolutional neural networks significantly improved robustness, with two-stage detectors like Faster Region-based Convolutional Neural Network (Faster R-CNN) [12] achieving high accuracy at the cost of real-time performance. Subsequent one-stage detectors, including You Only Look Once (YOLO) [13] and its variants (e.g., YOLOv4 [14], YOLOv7 [15]), prioritized speed by simplifying network architectures. Despite these advancements, there is still a need for further improvements in detection accuracy and speed to fully meet the demands of real-time and precise vehicle detection in intelligent traffic systems.

Existing vehicle detection models have complex structures, large numbers of parameters and high computational costs, which makes them difficult to deploy on embedded devices with limited storage and computation capacity. To address these problems, we replace the backbone of YOLOv7 [15] with MobileNetV3 [16], constructing a lightweight vehicle detection model. To compensate for the loss of accuracy caused by the lightweight backbone network, we first replace the original Sigmoid Linear Unit (SiLU) activation function in the YOLOv7 network with the Activate or Not (ACON) function [17], which improves the model’s generalization ability and the efficiency of information transfer in each feature layer. Next, we incorporate the Simple Parameter-Free Attention Module (SimAM) [18] into the backbone network, which improves the model’s ability to extract features. Finally, we use SCYLLA Intersection over Union (SIoU) [19] to optimize the loss function, which improves the model’s detection performance. Experimental results demonstrate that the proposed SMA-YOLO model not only improves the detection speed and meets the real-time requirements for vehicle detection but also enhances accuracy and detection performance.

The main contributions of this paper are as follows:

- (1) A lightweight road vehicle detection model called SMA-YOLO for embedded devices with limited storage and computing power is constructed by replacing the backbone network of the YOLOv7 model with MobileNetV3.

- (2) The ACON activation function is introduced to replace the SiLU activation function in the original YOLOv7 model, which enhances the model’s generalization ability and the information transfer efficiency between feature layers.

- (3) The SimAM attention mechanism module is integrated in the backbone network, which effectively improves the feature extraction ability of the model.

- (4) The SIoU is adopted as the loss function to optimize the model and further enhance the detection performance.

2. Related Work

Current vehicle detection techniques can be categorized into three main approaches: traditional vision-based, wireless sensor-based, and deep learning-based detection. Traditional vision-based techniques typically follow three stages: selecting the target region, extracting target feature information, and classifying these features. However, these methods suffer from several drawbacks: redundant candidate windows, long processing times, low detection efficiency, high rates of missed and false detections, stringent equipment requirements, and the poor robustness and generalization performance of manually designed features. These limitations make it difficult to complete detection tasks in complex environments. Sensor-based vehicle detection methods, which use ultrasonic [20,21], microwave [22,23], geomagnetic [24,25] or inductive coil technology [26,27], represent the current mainstream approach for parking lot vehicle detection. Their disadvantages include a cumbersome installation process, strict environmental requirements, and inapplicability to complex road traffic scenes.

With the development of deep-learning technology, target detection methods based on deep learning have gradually become a prominent research topic [28,29,30,31,32]. Current deep learning-based target detection algorithms can be divided into two categories: single-stage regression-based algorithms and two-stage region proposal-based algorithms. Representative two-stage algorithms include Region-based Convolutional Neural Network (R-CNN) [33], Fast R-CNN [34], and Faster R-CNN [12]. These algorithms offer higher localization precision and accuracy but suffer from slower training and lower detection speeds. Conversely, single-stage detection algorithms, such as Single Shot Multi-Box Detector (SSD) [35], YOLO [13], EfficientDet [36], and RetinaNet [37], provide high real-time performance and fast detection speeds, at the cost of slightly lower accuracy compared to two-stage algorithms.

In recent years, many scholars have proposed a series of improvements to address the shortcomings of different target detection algorithms. Li et al. [38] proposed the Cascade Multi-Scale Region-based Convolutional Neural Network (CMS R-CNN) algorithm which utilizes cascaded multi-scale regions to combine high-resolution information with rich semantic information, thus alleviating the difficulty in detecting small vehicles. Li et al. [39] introduced a cross-layer fusion multi-object detection and recognition algorithm based on Faster R-CNN. This approach uses the five-layer structure of VGG16 (Visual Geometry Group) to obtain more characteristic information, achieving better effects. Chen et al. [40] incorporated the Inception module into the SSD algorithm, improving the detection accuracy while maintaining a higher detection rate. Simon et al. [41] used the sum of imaginary and real fractions and a specific regression strategy to improve the YOLOv2 model to obtain the Euler-Region-Proposal Network (E-RPN) model, which further improves detection accuracy.

In the study of vehicle detection algorithms for embedded systems and resource-constrained devices, researchers have developed numerous efficient and lightweight vehicle detection networks. Ge et al. [42] proposed a lightweight vehicle detection network based on an improved YOLOv3-tiny model. They optimized the feature extraction network by replacing the original feature extractor with DarkNet-19 and ResNet-18, enhancing detection accuracy. Furthermore, they used the K-means algorithm to cluster nine anchor boxes for multi-scale prediction, especially for small targets. Wu et al. [43] enhanced YOLOv3-tiny to develop a lightweight vehicle detection network. They introduced a spatial pyramid pooling structure and the K-means++ clustering algorithm to enhance feature extraction and bounding box prediction. Moreover, they replaced the Intersection over Union (IoU) loss function with the Generalized Intersection over Union (GIoU) loss to improve localization accuracy. The model was compressed by pruning redundant channels using the scaling factor of the batch normalization layer. Taheri Tajar et al. [44] developed a lightweight YOLOv3-tiny vehicle detection model by pruning and simplifying the original network. They trained the model on the BIT-Vehicle dataset and excluded unnecessary layers. Collectively, these studies demonstrate that lightweight modifications to YOLOv3-tiny can significantly improve detection performance while maintaining real-time speed in embedded systems and resource-constrained devices.

Yuan and Xu [45] proposed a lightweight vehicle detection algorithm by improving YOLOv4. They replaced the original CSPDarknet53 backbone network with MobileNetV3 for feature extraction and used depthwise separable convolution in the enhanced feature extraction networks, Spatial Pyramid Pooling (SPP) and Path Aggregation Network (PANet), to further reduce parameters. Additionally, the loss function was redesigned using a weighting method to address the imbalance in object detection data. The optimized YOLOv4 model improved accuracy by 0.53% while reducing model parameters by 78%, showing its effectiveness in achieving a balance between accuracy and efficiency. Bie et al. [46] proposed the YOLOv5n-L algorithm for real-time vehicle detection. They incorporated depthwise separable convolution and the C3Ghost module to reduce model parameters and improve detection speed. A Squeeze-and-Excitation attention mechanism was integrated into the backbone network to enhance accuracy and suppress environmental interference. Furthermore, a bidirectional feature pyramid network was employed for multi-scale feature fusion. Compared with the baseline models, this method achieves higher detection accuracy at lower computational cost and reduces the storage and computing requirements of the platform, so it can be readily deployed on resource-constrained devices. Liu et al. [47] introduced YOLOv8-FDD, employing feature-sharing and dynamic-interaction detection heads alongside multi-scale feature enhancements to achieve a more lightweight and accurate model for real-time vehicle detection in traffic scenarios. Xie et al. [48] presented YOLO-ACE, optimizing the YOLOv10 backbone and neck architecture and applying knowledge distillation to create a highly efficient detector with improved accuracy for vehicle and pedestrian perception in autonomous driving.

YOLOv7 has demonstrated superior performance in vehicle detection tasks across diverse scenarios, achieving an optimal balance between speed and accuracy. Its architecture, featuring Extended Efficient Layer Aggregation Network (E-ELAN) modules and compound model scaling, optimizes gradient paths and computational efficiency, enabling robust multi-scale feature extraction critical for detecting vehicles of varying sizes in complex urban or aerial environments [15,49]. YOLOv7-RAR [50] enhances YOLOv7 with a Res3Unit backbone and ACmix hybrid attention to improve small-target recognition under occlusions and lighting variations. Lightweight adaptations of YOLOv7 further extend its applicability. For instance, YOLOv7-RDD [51] employs DSConv and SimAM attention to reduce computational complexity while maintaining high precision in pavement distress detection. Though focused on road defects, these methodologies—including spatial pyramid pooling optimizations (SPPCSPD) and SIoU loss—are transferable to vehicle detection, particularly enhancing small-object localization and reducing redundant predictions.

3. Improved Model SMA-YOLO Based on YOLOv7

The proposed model, SMA-YOLO, is an improved YOLOv7-based vehicle detection method featuring three key upgrades: (1) replacing the backbone with MobileNetV3 to reduce parameters; (2) incorporating SimAM attention to enhance small-target feature extraction; and (3) swapping SiLU activations with ACON to boost detection accuracy. Additional optimization includes adopting SIoU loss for faster convergence.

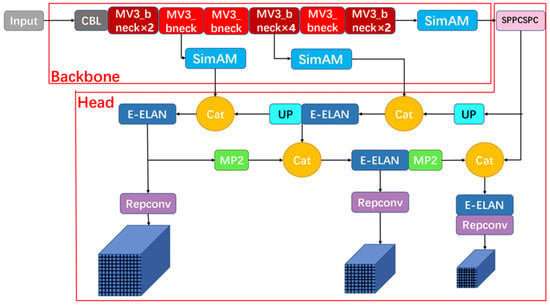

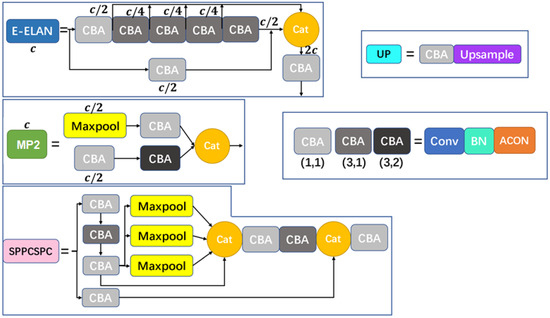

The SMA-YOLO model structure is shown in Figure 1, and the detailed structure of each module is shown in Figure 2. In particular, the structure of the CBA module is Conv+BN+ACON, which accelerates and optimizes the convolutional neural network. The efficient aggregation network E-ELAN can improve the accuracy and efficiency of target detection. The SPPCSPC structure connects the SPP (Spatial Pyramid Pooling) module and the CSPC (Cross Stage Partial Connections) module in series to obtain richer feature representation, which improves the accuracy of the image classification task while guaranteeing the high efficiency of the network. The main role of the MP2 module is to introduce multiscale information into the deep neural network, so as to improve the network’s target object perception and classification accuracy.

Figure 1. Overall structure of the SMA-YOLO model.

Figure 2. Structure of each module in the SMA-YOLO model.

3.1. Feature Extraction Network MobileNetV3

To reduce model complexity, this paper introduces MobileNetV3 [16] as a substitute for the original backbone network in YOLOv7. MobileNetV3 is available in two versions, MobileNetV3-Large and MobileNetV3-Small, which differ in the number of channels and the number of bottleneck blocks. In this paper, MobileNetV3-Small is adopted; its network structure is detailed in Table 1.

Table 1. Network structure of MobileNetV3-Small [16].

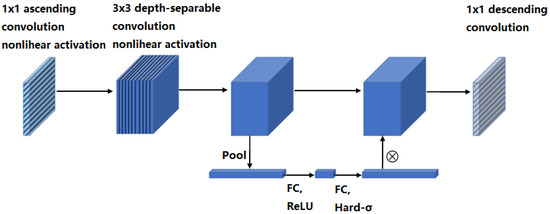

The structure of the bottleneck module in the network is shown in Figure 3. The input feature map is first expanded to a higher-dimensional space by a 1 × 1 expansion convolution, followed by a 3 × 3 depthwise convolution, to which the SE (Squeeze-and-Excitation) attention mechanism is applied to adjust the weights of the different channels. Finally, a 1 × 1 projection convolution reduces the dimensionality again, and a residual connection adds the input to the output when the stride is 1.

Figure 3. Structure of the bottleneck module in MobileNetV3-Small network.
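The parameter saving behind this bottleneck design can be checked with simple arithmetic. The sketch below is illustrative and not the authors' code: it counts the weights of a dense k × k convolution and of its depthwise separable counterpart (a k × k depthwise convolution followed by a 1 × 1 pointwise convolution). The function names and the channel width of 96 are assumptions chosen for illustration, and biases, batch normalization and SE weights are ignored.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a dense k x k convolution (bias omitted)."""
    return k * k * c_in * c_out


def separable_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a k x k depthwise convolution plus a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in      # one k x k filter per input channel
    pointwise = c_in * c_out      # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical layer: 96 input channels, 96 output channels, 3 x 3 kernel.
dense = standard_conv_params(96, 96, 3)
light = separable_conv_params(96, 96, 3)
ratio = light / dense
print(dense, light, round(ratio, 4))
```

The ratio equals 1/C_out + 1/k², so for 3 × 3 kernels the separable form needs roughly one ninth of the weights once the channel count is large.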

3.2. ACON Activation Function

In this paper, the ACON [17] activation function is adopted to replace the original SiLU function in the YOLOv7 model, as shown in the definition of the CBA module in Figure 2. The ACON activation function is obtained as a smooth approximation of the Maxout family of functions. It can adaptively switch between linear and nonlinear activation, and it is non-saturating and sparse, which mitigates the dying-neuron problem. This design enables each neuron to adaptively choose whether to activate or not, which helps to improve the generalization ability of the model and the information transfer efficiency of each feature layer, while also reducing the computation and complexity of the model to a certain extent.

ACON-C is a generalized activation framework in the ACON family [17], defined using Equation (1):

$$f_{\text{ACON-C}}(x) = (p_1 - p_2)\,x \cdot \sigma\big(\beta (p_1 - p_2)\,x\big) + p_2 x \tag{1}$$

where $p_1$ and $p_2$ are two learnable parameters that functionally control the values of the upper and lower bounds, which can be adaptively tuned in the network; $\sigma$ denotes the sigmoid activation function; and $\beta$ is a smoothing factor that controls whether the neuron is activated ($\beta$ is 0 for no activation).
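The behaviour of ACON-C is easiest to see on scalars. The following minimal sketch assumes the standard ACON-C form from [17], f(x) = (p1 − p2)·x·σ(β(p1 − p2)·x) + p2·x; the function names and the sample parameter values are illustrative assumptions, and in a real network p1, p2 and β would be learned per channel.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def acon_c(x: float, p1: float, p2: float, beta: float) -> float:
    """ACON-C activation for a single scalar input."""
    d = (p1 - p2) * x
    return d * sigmoid(beta * d) + p2 * x


# beta = 0: the unit is linear, f(x) = (p1 + p2) / 2 * x.
linear = acon_c(2.0, 1.0, 0.25, 0.0)

# Large beta: the unit approaches max(p1 * x, p2 * x).
nearly_max_pos = acon_c(2.0, 1.0, 0.25, 50.0)
nearly_max_neg = acon_c(-2.0, 1.0, 0.25, 50.0)

# p1 = 1, p2 = 0, beta = 1 recovers SiLU, x * sigmoid(x).
silu_like = acon_c(0.7, 1.0, 0.0, 1.0)
print(linear, nearly_max_pos, nearly_max_neg, silu_like)
```

Setting p1 = 1, p2 = 0 and β = 1 reproduces SiLU, which is why ACON can be regarded as a learnable generalization of the activation it replaces.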

3.3. SimAM Attention Mechanisms

Existing attention modules usually combine spatial and channel attention mechanisms, either in series or in parallel, to facilitate information selection during visual processing. In the human brain, however, these two attentional mechanisms coexist and act jointly rather than as separate stages. Therefore, we introduced the SimAM attention mechanism [18], which assigns a unique weight to each neuron. The location of the SimAM attention mechanism introduced into SMA-YOLO is shown in Figure 1.

SimAM is a parameter-free attention mechanism based on an energy function, which calculates the importance weight of each neuron directly by optimizing the energy function. The design of SimAM is inspired by the spatial suppression theory in neuroscience, where active neurons inhibit the activity of surrounding neurons, thereby assigning higher priority to the active neurons. Its core weighting process can be expressed as Equation (2):

$$e_t^{*} = \frac{4(\hat{\sigma}^2 + \lambda)}{(t - \hat{\mu})^2 + 2\hat{\sigma}^2 + 2\lambda} \tag{2}$$

where $e_t^{*}$ is the minimal energy of the target neuron $t$, $\hat{\mu}$ is the mean of all neurons except $t$, $\hat{\sigma}^2$ is the variance of all neurons except $t$, and $\lambda$ is the regularization coefficient, used to balance the energy function.

The lower the energy, the more the neuron is distinguished from the surrounding neurons and the higher its importance. According to the definition of the attentional mechanism, the features need to be augmented using Equation (3):

$$\tilde{X} = \operatorname{sigmoid}\!\left(\frac{1}{E}\right) \odot X \tag{3}$$

where $\operatorname{sigmoid}$ is a function that maps real numbers to the range between 0 and 1, $E$ is the energy matrix in which each element is the energy value of one pixel position, $\odot$ is the element-wise multiplication operator, and $X$ is the input feature matrix. The formula weights the original feature matrix $X$ by the sigmoid of the inverse energy to produce the enhanced feature matrix $\tilde{X}$.
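The weighting described above can be sketched for a single channel in plain Python. This is an illustrative sketch under stated assumptions, not the authors' implementation: it uses the closed-form inverse energy 1/e = (t − μ)² / (4(σ² + λ)) + 0.5, computes one mean and variance per channel (a common simplification of the "all neurons except t" statistics), and takes λ = 1e-4 as an assumed default. The function name `simam_channel` and the toy feature map are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def simam_channel(channel, lam=1e-4):
    """Return (weights, refined) for one channel given as a list of rows.

    lam is the regularization coefficient lambda (assumed default).
    """
    values = [v for row in channel for v in row]
    n_others = len(values) - 1                  # neurons other than the target
    mean = sum(values) / len(values)            # channel mean shared by all targets
    var = sum((v - mean) ** 2 for v in values) / n_others
    weights, refined = [], []
    for row in channel:
        # Inverse energy: large when a neuron stands out from the channel mean.
        w_row = [sigmoid((v - mean) ** 2 / (4.0 * (var + lam)) + 0.5) for v in row]
        weights.append(w_row)
        refined.append([w * v for w, v in zip(w_row, row)])
    return weights, refined


# Toy 3 x 3 feature map with one distinctive neuron in the centre.
feature = [[0.1, 0.1, 0.1],
           [0.1, 0.9, 0.1],
           [0.1, 0.1, 0.1]]
weights, refined = simam_channel(feature)
print(round(weights[1][1], 3), round(weights[0][0], 3))
```

The distinctive centre neuron receives the largest weight, while the uniform background neurons all receive the same, smaller weight. No learnable parameters are involved.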

3.4. Loss Function

Localization loss in the YOLOv7 network model is calculated using CIoU, which is defined in Equation (4):

$$L_{\mathrm{CIoU}} = 1 - \mathrm{IoU} + \frac{\rho^2(b, b^{gt})}{c^2} + \alpha v \tag{4}$$

where $\mathrm{IoU}$ represents the intersection over union between the predicted and real frames, $b$ represents the predicted frame, $b^{gt}$ represents the real frame, $\rho$ is the Euclidean distance between the centroids of the predicted frame and the real frame, $c$ represents the diagonal length of the smallest enclosing frame of the predicted and real frames, $\alpha$ denotes the balance parameter, and $v$ measures the disparity in the width-to-height ratio of the predicted frame to the real frame. $\alpha$ and $v$ can be calculated using Equation (5) and Equation (6), respectively.

$$\alpha = \frac{v}{(1 - \mathrm{IoU}) + v} \tag{5}$$

$$v = \frac{4}{\pi^2}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^2 \tag{6}$$

where $w^{gt}$ and $h^{gt}$ denote the width and height of the real frame, and $w$ and $h$ denote the width and height of the predicted frame, respectively.
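A short numerical sketch makes the role of the aspect-ratio term concrete. It assumes the standard CIoU definition (1 − IoU, plus the squared centre distance normalized by the squared enclosing-box diagonal, plus the weighted aspect-ratio term v), with boxes given as (cx, cy, w, h). The function names and the example boxes are illustrative assumptions.

```python
import math


def corners(box):
    """Convert (cx, cy, w, h) to (x1, y1, x2, y2)."""
    cx, cy, w, h = box
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


def ciou_loss(pred, gt):
    """Return (CIoU loss, aspect-ratio term v) for two (cx, cy, w, h) boxes."""
    px1, py1, px2, py2 = corners(pred)
    gx1, gy1, gx2, gy2 = corners(gt)
    inter_w = max(0.0, min(px2, gx2) - max(px1, gx1))
    inter_h = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = inter_w * inter_h
    union = pred[2] * pred[3] + gt[2] * gt[3] - inter
    iou = inter / union

    # Squared centre distance and squared diagonal of the smallest enclosing box.
    rho2 = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2
    c2 = (max(px2, gx2) - min(px1, gx1)) ** 2 + (max(py2, gy2) - min(py1, gy1)) ** 2

    # Aspect-ratio disparity v and its balance weight alpha.
    v = 4.0 / math.pi ** 2 * (math.atan(gt[2] / gt[3]) - math.atan(pred[2] / pred[3])) ** 2
    alpha = v / ((1.0 - iou) + v) if v > 0 else 0.0
    return 1.0 - iou + rho2 / c2 + alpha * v, v


# Same centre and same 2:1 aspect ratio, but the prediction is half the size.
loss, v = ciou_loss((5.0, 5.0, 4.0, 2.0), (5.0, 5.0, 8.0, 4.0))
print(loss, v)
```

In this example the prediction is clearly too small, yet v is exactly 0 because both boxes share a 2:1 aspect ratio, so the loss reduces to 1 − IoU and the aspect-ratio penalty contributes nothing.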

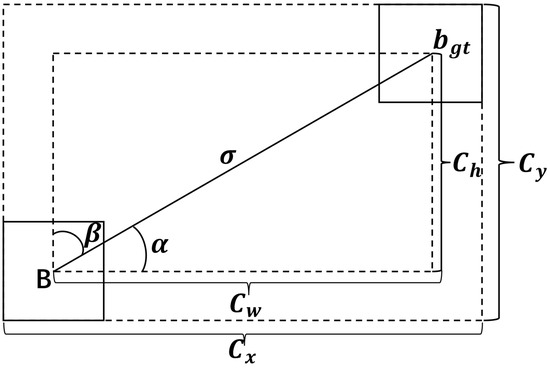

When the width-to-height ratios of the predicted frame and the real frame are the same, $v$ is equal to 0. In this case, the aspect-ratio penalty term vanishes and the CIoU loss function becomes less effective. Therefore, the SIoU loss function [19] was selected instead of CIoU in this study. An angular cost is introduced in the SIoU loss function to redefine the distance term, which reduces the total degrees of freedom of the loss function. The meanings of the parameters in the SIoU loss function are shown in Figure 4.

Figure 4. Schematic of the parameters of the SIoU loss function.

The SIoU loss function is divided into three parts: angle cost, distance cost and shape cost.

(1) Angle Cost

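Adding an angle-aware component minimizes the number of distance-related variables. The prediction is first aligned with the X or Y axis, and the subsequent calculations are then performed along that axis. $\alpha$ denotes the angular difference between the predicted frame and the real frame (Figure 4; not to be confused with the balance parameter of CIoU), and the convergence process minimizes $\alpha$ if $\alpha \le \frac{\pi}{4}$, otherwise $\beta$ is minimized. $\beta$ is computed as shown in Equation (7):

$$\beta = \frac{\pi}{2} - \alpha \tag{7}$$

The angular cost is calculated using Equations (8)–(11):

$$\Lambda = 1 - 2\sin^2\!\left(\arcsin(x) - \frac{\pi}{4}\right) \tag{8}$$

$$x = \frac{c_h}{\sigma} = \sin(\alpha) \tag{9}$$

$$\sigma = \sqrt{\left(b_{c_x}^{gt} - b_{c_x}\right)^2 + \left(b_{c_y}^{gt} - b_{c_y}\right)^2} \tag{10}$$

$$c_h = \max\left(b_{c_y}^{gt}, b_{c_y}\right) - \min\left(b_{c_y}^{gt}, b_{c_y}\right) \tag{11}$$

where $b_{c_x}$ and $b_{c_y}$ denote the center coordinates of the prediction frame, and $b_{c_x}^{gt}$ and $b_{c_y}^{gt}$ denote the center coordinates of the real frame.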

(2) Distance Cost

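Distance cost measures the distance between the center point of the real frame and the predicted frame and is calculated using Equation (12):

$$\Delta = \sum_{t = x, y} \left(1 - e^{-\gamma \rho_t}\right) \tag{12}$$

where $\rho_t$ and $\gamma$ are parameters used to regulate the distance cost function, and $\rho_x$, $\rho_y$ and $\gamma$ are calculated using Equation (13), Equation (14) and Equation (15), respectively:

$$\rho_x = \left(\frac{b_{c_x}^{gt} - b_{c_x}}{C_w}\right)^2 \tag{13}$$

$$\rho_y = \left(\frac{b_{c_y}^{gt} - b_{c_y}}{C_h}\right)^2 \tag{14}$$

$$\gamma = 2 - \Lambda \tag{15}$$

where $C_w$ and $C_h$ are the width and height of the smallest enclosing frame of the predicted and real frames, and $\Lambda$ denotes the angular cost. When $\alpha \to 0$, the distance cost decreases significantly, and when $\alpha \to \frac{\pi}{4}$, the distance cost is elevated.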

(3) Shape Cost

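Shape cost is defined by Equation (16):

$$\Omega = \sum_{t = w, h} \left(1 - e^{-\omega_t}\right)^{\theta} \tag{16}$$

where $\theta$ and $\omega_t$ are parameters used to adjust the shape of the shape cost function. The value of $\theta$ reflects the shape cost of different datasets; when $\theta$ is set to 1, the model will immediately optimize the aspect ratio of the detection frame and restrict its free movement. To determine the value of $\theta$, the author of SIoU [19] used a genetic algorithm to conduct experiments on different datasets, setting the range of $\theta$ from 2 to 6. The formulas for $\omega_w$ and $\omega_h$ are given in Equation (17) and Equation (18), respectively, when $t$ takes the values of $w$ and $h$.

$$\omega_w = \frac{\left|w - w^{gt}\right|}{\max\left(w, w^{gt}\right)} \tag{17}$$

$$\omega_h = \frac{\left|h - h^{gt}\right|}{\max\left(h, h^{gt}\right)} \tag{18}$$

where $w$ and $h$ are the width and height of the prediction frame, and $w^{gt}$ and $h^{gt}$ denote the width and height of the real frame, respectively. In summary, the final improved regression loss function is defined in Equation (19):

$$L_{\mathrm{SIoU}} = 1 - \mathrm{IoU} + \frac{\Delta + \Omega}{2} \tag{19}$$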
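The three costs can be combined in a few lines of plain Python. The sketch below follows the SIoU formulation of [19] as commonly stated: angle cost Λ = 1 − 2 sin²(arcsin(x) − π/4) with x the sine of the angle between box centres, distance cost Σ(1 − e^(−γρ)) with γ = 2 − Λ, shape cost Σ(1 − e^(−ω))^θ, and loss 1 − IoU + (Δ + Ω)/2. The function names, the (cx, cy, w, h) box format and θ = 4 are assumptions for illustration, not the authors' implementation.

```python
import math


def corners(box):
    """Convert (cx, cy, w, h) to (x1, y1, x2, y2)."""
    cx, cy, w, h = box
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


def siou_loss(pred, gt, theta=4.0):
    """Return (SIoU loss, angle cost) for two (cx, cy, w, h) boxes."""
    px1, py1, px2, py2 = corners(pred)
    gx1, gy1, gx2, gy2 = corners(gt)

    # IoU term.
    inter = (max(0.0, min(px2, gx2) - max(px1, gx1))
             * max(0.0, min(py2, gy2) - max(py1, gy1)))
    iou = inter / (pred[2] * pred[3] + gt[2] * gt[3] - inter)

    # Width and height of the smallest enclosing box.
    enc_w = max(px2, gx2) - min(px1, gx1)
    enc_h = max(py2, gy2) - min(py1, gy1)

    # Angle cost: 0 when the centres are axis-aligned, 1 at 45 degrees.
    dx, dy = gt[0] - pred[0], gt[1] - pred[1]
    sigma = math.hypot(dx, dy)
    sin_alpha = min(1.0, abs(dy) / sigma) if sigma > 0 else 0.0
    angle = 1.0 - 2.0 * math.sin(math.asin(sin_alpha) - math.pi / 4) ** 2

    # Distance cost, modulated by the angle cost through gamma.
    gamma = 2.0 - angle
    rhos = ((dx / enc_w) ** 2, (dy / enc_h) ** 2)
    dist = sum(1.0 - math.exp(-gamma * rho) for rho in rhos)

    # Shape cost from relative width and height mismatch.
    omega_w = abs(pred[2] - gt[2]) / max(pred[2], gt[2])
    omega_h = abs(pred[3] - gt[3]) / max(pred[3], gt[3])
    shape = sum((1.0 - math.exp(-om)) ** theta for om in (omega_w, omega_h))

    return 1.0 - iou + (dist + shape) / 2.0, angle


# Same centre and aspect ratio, prediction half the size of the ground truth.
loss, _ = siou_loss((5.0, 5.0, 4.0, 2.0), (5.0, 5.0, 8.0, 4.0))
print(round(loss, 4))
```

For this pair of boxes, which share an aspect ratio, the shape cost still penalizes the size mismatch, so the loss is slightly above 1 − IoU = 0.75.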

The SIoU loss function expresses the complexity of the target detection task more comprehensively, reduces the probability that the value of the penalty term is 0, improves the regression accuracy and stability of the loss function, and reduces the prediction error. The optimized loss function is calculated using Equation (20):

$$L = W_{box} L_{box} + W_{cls} L_{cls} \tag{20}$$

where $L_{box}$ and $L_{cls}$ are the regression and categorization losses, respectively, and $W_{box}$ and $W_{cls}$ are the weights of the regression and categorization losses, respectively.

4. Experiments and Discussions

4.1. Experiment Preparation

4.1.1. Environment Configuration

Experiments were performed on an Intel(R) Xeon(R) Silver 4210 CPU with an NVIDIA GeForce RTX 2080 GPU with 10.8 GB of video memory. The deep-learning framework used was PyTorch (1.8.1+cu102), and the Python version was 3.7. Multi-threaded data reading was disabled due to device limitations.

4.1.2. Dataset

In order to validate the detection performance of the proposed SMA-YOLO model, the experiments in this paper were evaluated using the UA-DETRAC dataset [52]. This dataset consists of more than 80,000 real road vehicle images, which are traffic vehicle images collected from roads in 24 different locations in Beijing and Tianjin. The images were taken from more than 60 videos that included four clearly labeled target objects: car, bus, van, and other. In the experiments in this study, the training set, validation set and test set of the dataset were divided in a ratio of 3:1:1.
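A 3:1:1 partition can be reproduced with a seeded shuffle, as in the minimal sketch below. The paper does not state whether the split was made per image or per video sequence, nor which random seed was used. The per-image split, the seed value, the function name `split_indices` and the example count of 1000 images are therefore assumptions for illustration only.

```python
import random


def split_indices(n_items, ratios=(3, 1, 1), seed=0):
    """Shuffle item indices and cut them into train/val/test by the given ratios."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)       # seeded for reproducibility
    total = sum(ratios)
    n_train = n_items * ratios[0] // total
    n_val = n_items * ratios[1] // total
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]           # remainder goes to the test set
    return train, val, test


# Hypothetical example with 1000 images.
train, val, test = split_indices(1000)
print(len(train), len(val), len(test))
```

Because consecutive video frames are highly correlated, a per-sequence split may give a stricter estimate of generalization than the per-image split sketched here.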

4.1.3. Evaluation Metrics

In this paper, average precision (AP), mean average precision (mAP) of all categories, number of parameters (Params), number of floating-point operations (GFLOPs), model size, and frames per second (FPS) were used as the evaluation metrics.
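For reference, AP for one class can be computed as the area under its precision-recall curve, and mAP as the mean of the per-class APs. The sketch below assumes that detections have already been matched to ground-truth boxes (true positive or false positive) and uses all-point interpolation. The paper does not state which interpolation was used, so this choice, the function names and the toy detections are assumptions.

```python
def average_precision(detections, n_gt):
    """AP for one class.

    detections: list of (confidence, is_true_positive) pairs.
    n_gt: number of ground-truth boxes of this class.
    """
    if n_gt == 0 or not detections:
        return 0.0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / n_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the area under the stepwise precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(ap_per_class):
    """mAP is the unweighted mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


# Toy example: three detections of one class, two ground-truth boxes.
ap = average_precision([(0.9, True), (0.8, False), (0.7, True)], n_gt=2)
print(round(ap, 4))
```

FPS is simply the number of processed frames divided by the elapsed inference time, and GFLOPs and Params are properties of the network that do not depend on the test data.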

4.1.4. Implementation Details

The input images were uniformly resized to 640 × 640 pixels during training before being fed into the target detection model. All models used in this study’s experiments employed pre-training weights obtained from training on the COCO-Train2017 dataset. This included the backbone network, MobileNetV3, which was initialized with these pre-trained weights. We fine-tuned the entire model, including the backbone and the detection head, during the training process. This approach ensured that all models benefitted from the rich feature representations learned on the COCO-Train2017 dataset.

The training phase was set to 80 epochs with a batch size of 64. The initial learning rate was $1 \times 10^{-3}$, decaying to a final rate of $1 \times 10^{-5}$ using cosine annealing. Momentum and weight decay were set to 0.937 and 0.0005, respectively. Model parameters were optimized using the Adam optimizer. During testing, the confidence threshold was 0.1, the IoU threshold was 0.25, and each image was limited to 100 prediction boxes. For evaluation, MINOVERLAP was set to 0.5 to compute mAP@0.5.
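The learning-rate schedule described above can be written out explicitly. The sketch assumes the usual cosine annealing formula, lr(t) = lr_min + ½(lr_max − lr_min)(1 + cos(πt/T)), updated once per epoch without warm-up. The per-epoch update, the absence of warm-up and the function name `cosine_lr` are assumptions, since the paper only gives the initial rate, the final rate and the number of epochs.

```python
import math


def cosine_lr(epoch, total_epochs=80, lr_max=1e-3, lr_min=1e-5):
    """Cosine-annealed learning rate at a given epoch (0 <= epoch <= total_epochs)."""
    cos_term = 0.5 * (1.0 + math.cos(math.pi * epoch / total_epochs))
    return lr_min + (lr_max - lr_min) * cos_term


# Learning rate at the start, the midpoint and the end of training.
schedule = [cosine_lr(e) for e in (0, 40, 80)]
print(schedule)
```

The rate falls slowly at first, fastest around the midpoint (where it equals the average of the two end values), and flattens again as it approaches the final rate.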

4.2. Experimental Results and Analysis

4.2.1. Ablation Study

To verify the contribution of each proposed module to detection performance relative to the YOLOv7 model, ablation experiments were conducted, and the results are shown in Table 2.

Table 2. Results of the ablation experiments in terms of parameters, GFLOPs, model size, mAP, and FPS.

From Table 2, it can be seen that, with the YOLOv7 model as the baseline, the addition of the SimAM attention mechanism reduced the parameters and computation of the model by 25.88% and 66.48%, respectively. The model size was reduced by 22.84%, the mAP improved by 0.47%, and the FPS improved by 42.46%. After the loss function was recalculated using SIoU, the model’s parameters, computation and size did not change significantly, but the mAP improved by 0.69% and the FPS decreased by 19.94%, which demonstrates a more significant improvement in the detection accuracy of the model with only a small reduction in FPS. After replacing the backbone network with MobileNetV3, although the mAP slightly decreased by 0.75%, the number of parameters and the computation of the model were reduced by 88.17% and 90.22%, respectively. The size of the model was reduced by 79.35%, and the FPS improved by 97.12%. After replacing the activation function with ACON, the number of parameters, the amount of computation, and the size of the model were improved by 1.43%, 0.47%, and 3.28%, respectively, the model’s mAP improved by 0.44%, and the FPS improved by 3.97%. Finally, by incorporating all the improved techniques into one model, the resulting SMA-YOLO model achieved an 88.20% reduction in parameters, a 90.12% reduction in computation, a 79.42% reduction in model size, a 0.28% improvement in mAP, and a 66.77% improvement in FPS when compared to the baseline YOLOv7 model.

Analyzing the data in Table 2, we observed that replacing the backbone of YOLOv7 with MobileNetV3 effectively reduced the number of model parameters and increased the FPS. Specifically, the introduction of MobileNetV3 as the backbone led to a significant reduction in computational complexity, thereby enhancing the inference speed of the model. In contrast, SMA-YOLO introduced additional modules such as SimAM, SIoU, and ACON into the YOLOv7+MobileNetV3 model. While keeping the parameters roughly the same, these enhancements increased the mAP but reduced the FPS. This trade-off is due to the additional computational overhead introduced by these advanced modules, which improve the accuracy of the model at the cost of increased computational requirements.

The experimental results show that our proposed SMA-YOLO model outperforms the initial YOLOv7 model in terms of complexity, occupied memory size, detection speed and accuracy.

4.2.2. Comparison with State-of-the-Art Methods

In this section, using the same experimental conditions, the proposed SMA-YOLO model and state-of-the-art approaches were trained on the same training set and tested on the same test set, and the results are shown in Table 3.

Table 3. Comparison results of model size, speed, accuracy, number of parameters and GFLOPs using the UA-DETRAC dataset.

From the experimental results shown in Table 3, it can be seen that compared with the YOLOv7 model, the proposed model SMA-YOLO’s size is 79.42% smaller, the FPS is improved by 66.77%, the number of parameters is reduced by 88.20%, the computation is reduced by 90.12%, and the mAP is improved by 0.28%. Compared to classical models preceding YOLOv7 (Table 3, rows above YOLOv7), SMA-YOLO demonstrates significantly enhanced lightweight characteristics, achieving accelerated detection speed, reduced parameter count, and lower computational complexity while maintaining superior accuracy. Compared to newer YOLO variants succeeding YOLOv7 (Table 3, rows below YOLOv7), SMA-YOLO retains detection accuracy advantages while strategically balancing speed–precision trade-offs.

4.2.3. Comparison of Visualization Results

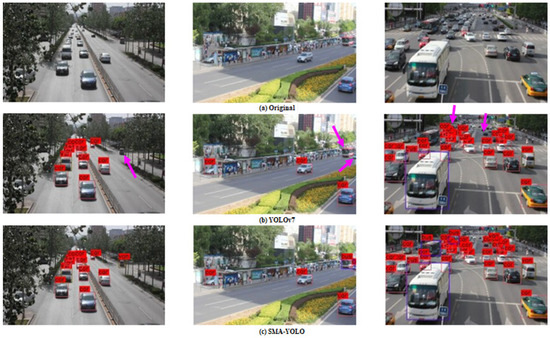

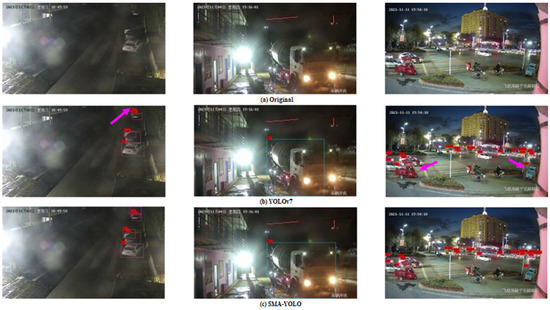

In order to compare the detection effect of the proposed SMA-YOLO model with respect to the YOLOv7 model, we selected some representative detection results for comparison, and the results are shown in Figure 5 and Figure 6.

Figure 5. Comparison of detection results between YOLOv7 and SMA-YOLO in a road scene.

Figure 6. Comparison of detection results between YOLOv7 and SMA-YOLO in a night scene.

Comparing the three images in the first column of Figure 5, it can be seen that the proposed SMA-YOLO model was able to correctly detect the trucks that were missed by the YOLOv7 model, indicated by pink arrows. Comparing the three images in the second column of Figure 5, it can be seen that the YOLOv7 model missed the buses and the cars indicated by pink arrows in the distance, while the proposed SMA-YOLO model was able to accurately detect the locations and types of these two targets. As can be seen by comparing the three images in the third column of Figure 5, in terms of vehicle density, the YOLOv7 model missed the small targets in the distance and the partially covered vehicles indicated by pink arrows, while the proposed SMA-YOLO model was able to detect these missed vehicles correctly. Therefore, we may conclude that our proposed SMA-YOLO model can detect small and distant targets in the image more accurately, with better detection in complex road scenes.

The comparison results of the YOLOv7 model and the SMA-YOLO model proposed in this paper for the detection of vehicles at night are shown in Figure 6. Comparing the three images in the first column of Figure 6, it can be seen that the YOLOv7 model missed the detection of the truck indicated by the pink arrow due to insufficient light and strong interference at night, while the proposed SMA-YOLO model successfully detected the location of the truck and was able to identify its category. Comparing the three images in the second column of Figure 6, it can be seen that the lights of the mixer truck were highly intrusive and the far rear lighting of the truck was very harsh. Although the YOLOv7 model was able to detect the mixer truck, the location and size of the detection frame were incorrect, while the detection frame generated by the proposed SMA-YOLO model more closely matched the outline of the mixer truck. Comparing the three images in the third column of Figure 6, it can be seen that the YOLOv7 model incorrectly classified the tricycle as a car (as indicated by pink arrows), while the SMA-YOLO model was able to classify it correctly. These experiments show that the proposed SMA-YOLO model exhibited more accurate detection of ambiguous vehicles in complex nighttime scenes and generated detection frames that fit the contours of the detected vehicles more closely.

4.3. Discussion

In the field of object detection, there is often a trade-off between model speed (FPS) and accuracy (mAP). As shown in Table 3, SMA-YOLO demonstrates significantly enhanced lightweight characteristics compared to classical models preceding YOLOv7 (Table 3, rows above YOLOv7). SMA-YOLO achieves accelerated detection speed, reduced parameter count, and lower computational complexity, while maintaining superior accuracy. This is due to the incorporation of advanced modules such as the SimAM attention mechanism, which enhances the detection of small objects and overall accuracy but increases computational complexity.

Compared to newer YOLO variants succeeding YOLOv7 (the rows below YOLOv7 in Table 3), SMA-YOLO retains its detection accuracy advantage while balancing the speed–precision trade-off. Some of these models employ more lightweight network structures and reach a higher FPS, but they sacrifice some detection accuracy to achieve faster inference. SMA-YOLO achieves a higher mAP, which makes it suitable for applications where detection accuracy is paramount.

A critical limitation involves accuracy degradation under adverse weather (e.g., heavy rain/fog), where compromised image quality impedes object detection. While current evaluations use standard datasets under typical conditions, future work will rigorously assess robustness on specialized benchmarks like the RTTS Hazy Dataset to quantify weather-induced performance drops and develop adaptive preprocessing modules for real-world deployment resilience.

The model’s efficacy also diminishes on low-resolution images, which carry insufficient feature detail, because SMA-YOLO’s design, including its use of SimAM, prioritizes high-resolution inputs. Future iterations will incorporate super-resolution preprocessing and lightweight architectures optimized for low-quality visuals to ensure reliable detection in resource-constrained environments.

5. Conclusions

To address the problems that current vehicle detection algorithms are still not effective enough in complex traffic road scenarios and are difficult to deploy on edge devices with limited computing resources and memory, this paper has proposed SMA-YOLO, an improved vehicle detection model based on YOLOv7. To enhance the flexibility and generalization performance of the vehicle detection model across different environments, we made several key improvements: introducing the SimAM attention mechanism, adopting MobileNetV3 for feature extraction, replacing the SiLU activation function with ACON, and substituting SIoU for CIoU in the loss calculation. These modifications reduced the model’s size without decreasing its accuracy and significantly improved the detection speed. Future research will focus on deploying the proposed model on resource-constrained embedded devices and investigating its performance under real-world conditions, including harsh weather and low-resolution images.
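The relationship between the SiLU and ACON activation functions mentioned above can be illustrated with a short sketch. It assumes the ACON-C form, f(x) = (p1 − p2)·x·σ(β(p1 − p2)x) + p2·x; which ACON variant and which parameter initialization SMA-YOLO uses is not restated here, so the scalar parameters `p1`, `p2`, and `beta` below are illustrative only (in practice they are learned).

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def silu(x):
    """SiLU (Swish with beta = 1): x * sigmoid(x)."""
    return x * sigmoid(x)


def acon_c(x, p1=1.0, p2=0.0, beta=1.0):
    """ACON-C activation with scalar parameters (assumed form, see lead-in)."""
    d = (p1 - p2) * x
    return d * sigmoid(beta * d) + p2 * x


for x in (-2.0, 0.0, 2.0):
    # With p1 = 1, p2 = 0, beta = 1 the two functions coincide.
    print(x, silu(x), acon_c(x))

# beta = 0 gives a linear response; a large beta approaches max(p1*x, p2*x).
print(acon_c(2.0, beta=0.0), acon_c(-2.0, beta=50.0))
```

Under this assumed form, SiLU is the special case p1 = 1, p2 = 0, β = 1, so ACON can be read as a generalization in which β controls whether a unit responds linearly or non-linearly.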

Author Contributions

Methodology, Y.S. and Z.T.; software, Y.S.; validation, Y.S. and Y.L.; writing—original draft preparation, Y.S., H.L. and Z.T.; writing—review and editing, Z.T. and H.L.; visualization, Y.S. and Y.L.; supervision, Z.T.; funding acquisition, Z.T. and H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This study was partially supported by the scientific research project of Zhejiang Provincial Department of Education (No. 23115101-F) and the scientific research project of Keyi College, Zhejiang Sci-Tech University (No. KY2024001).

Data Availability Statement

The UA-DETRAC dataset [52] was used to validate the models.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Dimitrakopoulos, G.; Demestichas, P. Intelligent transportation systems. IEEE Veh. Technol. Mag. 2010, 5, 77–84.

2. Dong, L. Research on the industrial development of intelligent transportation system in China. In Proceedings of the 2020 5th International Conference on Electromechanical Control Technology and Transportation, Nanchang, China, 15–17 May 2020.

3. Liang, L.; Ma, H.; Zhao, L.; Xie, X.; Hua, C.; Zhang, M.; Zhang, Y. Vehicle Detection Algorithms for Autonomous Driving: A Review. Sensors 2024, 24, 3088.

4. Srivastava, S.; Narayan, S.; Mittal, S. A survey of deep learning techniques for vehicle detection from UAV images. J. Syst. Archit. 2021, 117, 102152.

5. Zhou, Z.; Dong, X.; Li, Z.; Yu, K.; Ding, C.; Yang, Y. Spatio-Temporal Feature Encoding for Traffic Accident Detection in VANET Environment. IEEE Trans. Intell. Transp. Syst. 2022, 23, 19772–19781.

6. Adewopo, V.; Elsayed, N. Smart City Transportation: Deep Learning Ensemble Approach for Traffic Accident Detection. IEEE Access 2024, 12, 59134–59147.

7. Ren, Y. Intelligent Vehicle Violation Detection System Under Human–Computer Interaction and Computer Vision. Int. J. Comput. Intell. Syst. 2024, 17, 40.

8. Sinha, D.; Divya, S.; Anjali, C.; Keethigha, R.K. Traffic Signal Violation Detection System using YOLOv3. In Proceedings of the 2024 International Conference on Cognitive Robotics and Intelligent Systems (ICC-ROBINS), Coimbatore, India, 17–19 April 2024.

9. Reda, M.; Onsy, A.; Haikal, A.Y.; Ghanbari, A. Path planning algorithms in the autonomous driving system: A comprehensive review. Robot. Auton. Syst. 2024, 174, 104630.

10. Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. CVPR 2001, Kauai, HI, USA, 8–14 December 2001.

11. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 21–23 September 2005.

12. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

13. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

14. Bochkovskiy, A.; Wang, C.; Liao, H. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

15. Wang, C.; Bochkovskiy, A.; Liao, H. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

16. Howard, A.; Sandler, M.; Chu, G.; Chen, L.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. arXiv 2019, arXiv:1905.02244.

17. Ma, N.; Zhang, X.; Liu, M.; Sun, J. Activate or not: Learning customized activation. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

18. Yang, L.; Zhang, R.Y.; Li, L. SimAM: A simple, parameter-free attention module for convolutional neural networks. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021.

19. Gevorgyan, Z. SIoU loss: More powerful learning for bounding box regression. arXiv 2022, arXiv:2205.12740.

20. Nesti, T.; Boddana, S.; Yaman, B. Ultra-Sonic Sensor Based Object Detection for Autonomous Vehicles. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Vancouver, BC, Canada, 18–22 June 2023.

21. Poza-Lujan, J.L.; Uribe-Chavert, P.; Posadas-Yagüe, J.L. Low-cost modular devices for on-road vehicle detection and characterisation. Des. Autom. Embed. Syst. 2023, 27, 85–102.

22. Sohail, M.; Khan, A.U.; Sandhu, M.; Shoukat, I.A.; Jafri, M.; Shin, H. Radar sensor based machine learning approach for precise vehicle position estimation. Sci. Rep. 2023, 13, 13837.

23. Fan, Y.; Tian, S.; Sheng, Q.; Li, J.; Chen, J.; Wang, B.; Ma, J. A coarse-to-fine vehicle detection in large SAR scenes based on GL-CFAR and PRID R-CNN. Int. J. Remote Sens. 2023, 44, 2518–2547.

24. Yao, R.; Uchiyama, T. Analysis of Magnetic Signatures for Vehicle Detection Using Dual-Axis Magneto-Impedance Sensors. IEEE Sens. J. 2023, 24, 8721–8730.

25. He, R.; Mao, G.; Hui, Y.; Cheng, Q. Geomagnetic Sensor Based Abnormal Parking Detection in Smart Roads. In Proceedings of the 2023 IEEE Global Communications Conference, Kuala Lumpur, Malaysia, 4–8 December 2023.

26. Oluwatobi, A.N.; Tayo, A.O.; Oladele, A.T.; Adesina, G.R. The design of a vehicle detector and counter system using inductive loop technology. Procedia Comput. Sci. 2012, 183, 493–503.

27. Ali, S.S.M.; George, B.; Vanajakshi, L.; Venkatraman, J. A Multiple Inductive Loop Vehicle Detection System for Heterogeneous and Lane-Less Traffic. IEEE Trans. Instrum. Meas. 2012, 61, 1353–1360.

28. Wang, Z.; Zhan, J.; Duan, C.; Guan, X.; Lu, P.; Yang, K. A Review of Vehicle Detection Techniques for Intelligent Vehicles. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 3811–3831.

29. Song, Y.; Hong, S.; Hu, C.; He, P.; Tao, L.; Tie, Z.; Ding, C. MEB-YOLO: An Efficient Vehicle Detection Method in Complex Traffic Road Scenes. Comput. Mater. Contin. 2023, 75, 5761–5784.

30. SP, K.; Mohandas, P. DETR-SPP: A fine-tuned vehicle detection with transformer. Multimed. Tools Appl. 2024, 83, 25573–25594.

31. Tao, L.; Hong, S.; Lin, Y.; Chen, Y.; He, P.; Tie, Z. A Real-Time License Plate Detection and Recognition Model in Unconstrained Scenarios. Sensors 2024, 24, 2791.

32. Gao, X.; Yu, A.; Tan, J.; Gao, X.; Zeng, X.; Wu, C. GSD-YOLOX: Lightweight and more accurate object detection models. J. Vis. Commun. Image Represent. 2024, 98, 104009.

33. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

34. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015.

35. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016.

36. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision & Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

37. Lin, T.Y.; Goyal, P.; Girshick, R.; Dollár, P. Focal loss for dense object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

38. Li, Z.; Wang, C.; Wang, Q.; Yang, W. CMS R-CNN: An efficient cascade multi-scale region-based convolutional neural network for accurate 2D small vehicle detection. In Proceedings of the 2019 Chinese Automation Congress (CAC), Hangzhou, China, 22–24 November 2019.

39. Li, C.; Qu, Z.; Wang, S.; Liu, L. A method of cross-layer fusion multi-object detection and recognition based on improved faster R-CNN model in complex traffic environment. Pattern Recognit. Lett. 2021, 145, 127–134.

40. Chen, W.; Qiao, Y.; Li, Y. Inception-SSD: An improved single shot detector for vehicle detection. J. Ambient Intell. Humaniz. Comput. 2020, 13, 5047–5053.

41. Simon, M.; Milz, S.; Amende, K.; Gross, H.M. Complex-YOLO: An euler-region-proposal for real-time 3D object detection on point clouds. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 9–14 September 2018.

42. Ge, P.; Guo, L.; He, D.; Huang, L. Light-weighted vehicle detection network based on improved YOLOv3-tiny. Int. J. Distrib. Sens. Netw. 2022, 18, 15501329221080665.

43. Wu, H.; Hua, Y.; Zou, H.; Ke, G. A lightweight network for vehicle detection based on embedded system. J. Supercomput. 2022, 78, 18209–18224.

44. Taheri Tajar, A.; Ramazani, A.; Mansoorizadeh, M. A lightweight Tiny-YOLOv3 vehicle detection approach. J. Real-Time Image Process. 2021, 18, 2389–2401.

45. Yuan, D.L.; Xu, Y. Lightweight Vehicle Detection Algorithm Based on improved YOLOv4. Eng. Lett. 2021, 29, 277–286.

46. Bie, M.; Liu, Y.; Li, G.; Hong, J.; Li, J. Real-time vehicle detection algorithm based on a lightweight You-Only-Look-Once (YOLOv5n-L) approach. Expert Syst. Appl. 2023, 213, 119108.

47. Liu, X.; Wang, Y.; Yu, D.; Yuan, Z. YOLOv8-FDD: A Real-Time Vehicle Detection Method Based on Improved YOLOv8. IEEE Access 2024, 12, 136280–136296.

48. Xie, Y.; Du, D.; Bi, M. YOLO-ACE: A Vehicle and Pedestrian Detection Algorithm for Autonomous Driving Scenarios Based on Knowledge Distillation of YOLOv10. IEEE Internet Things J. 2025, 12, 30086–30097.

49. Qin, Z.; Chen, D.; Wang, H. MCA-YOLOv7: An Improved UAV Target Detection Algorithm Based on YOLOv7. IEEE Access 2024, 12, 42642–42649.

50. Zhang, Y.; Sun, Y.; Wang, Z.; Jiang, Y. YOLOv7-RAR for Urban Vehicle Detection. Sensors 2023, 23, 1801.

51. Ning, Z.; Wang, H. YOLOv7-RDD: A Lightweight Efficient Pavement Distress Detection Model. IEEE Trans. Intell. Transp. Syst. 2024, 25, 6994–7002.

52. Lyu, S.; Chang, M.; Du, D.; Wen, L.; Qi, H.; Li, Y.; Wei, Y.; Ke, L.; Hu, T.; Coco, M.; et al. UA-DETRAC 2017: Report of AVSS2017 & IWT4S challenge on advanced traffic monitoring. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance, Lecce, Italy, 29 August–1 September 2017.

53. Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO series in 2021. arXiv 2021, arXiv:2107.08430.

54. Yang, G.; Feng, W.; Jin, J.; Lei, Q.; Li, X.; Gui, G.; Wang, W. Face Mask Recognition System with YOLOV5 Based on Image Recognition. In Proceedings of the 2020 IEEE 6th International Conference on Computer and Communications, Chengdu, China, 11–14 December 2020.

55. Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. CenterNet: Keypoint triplets for object detection. arXiv 2019, arXiv:1904.08189.

56. Long, X.; Deng, K.; Wang, G.; Zhang, Y.; Dang, Q.; Gao, Y.; Shen, H.; Ren, J.; Han, S.; Ding, E.; et al. PP-YOLO: An effective and efficient implementation of object detector. arXiv 2020, arXiv:2007.12099.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).