Boxing Punch Detection and Classification Using Motion Tape and Machine Learning

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Details

2.1.1. Materials

2.1.2. Sensor Fabrication

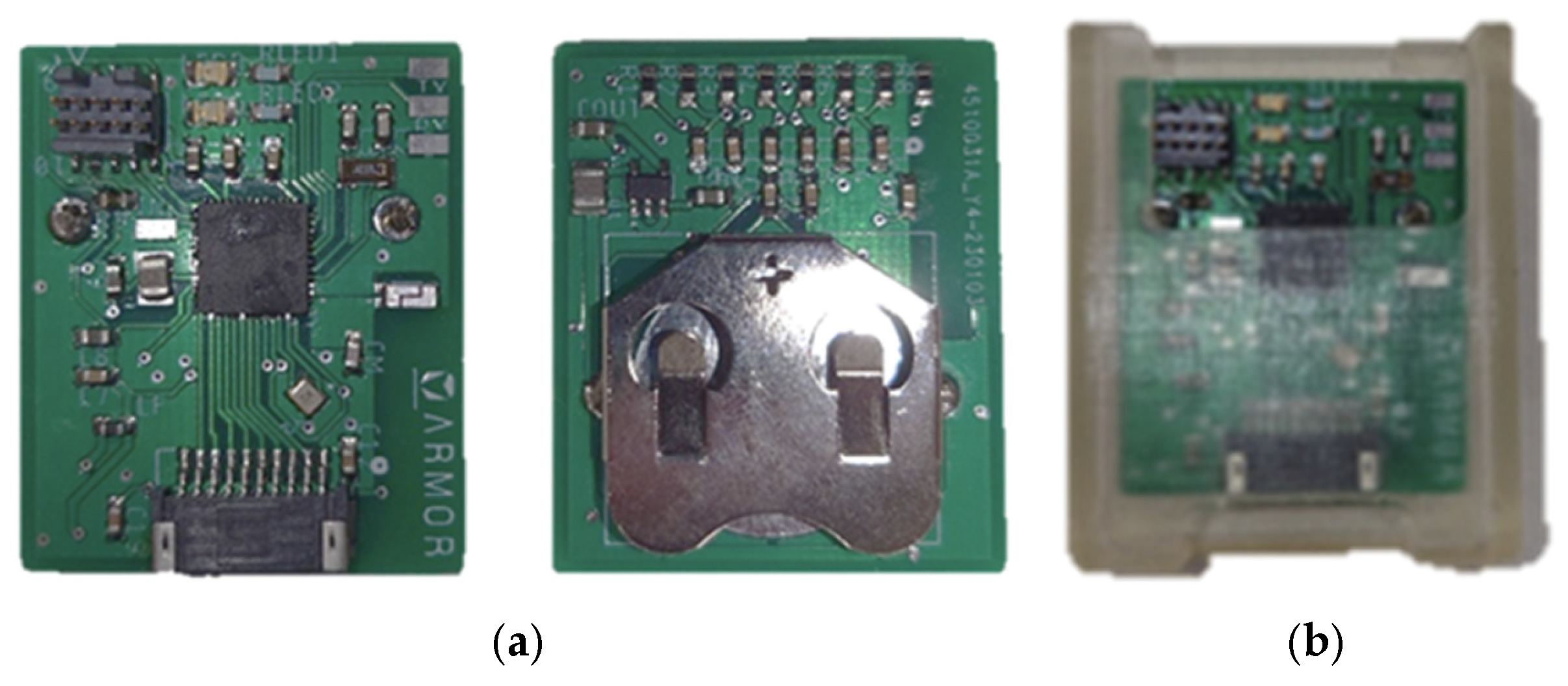

2.1.3. Wireless Sensing Node

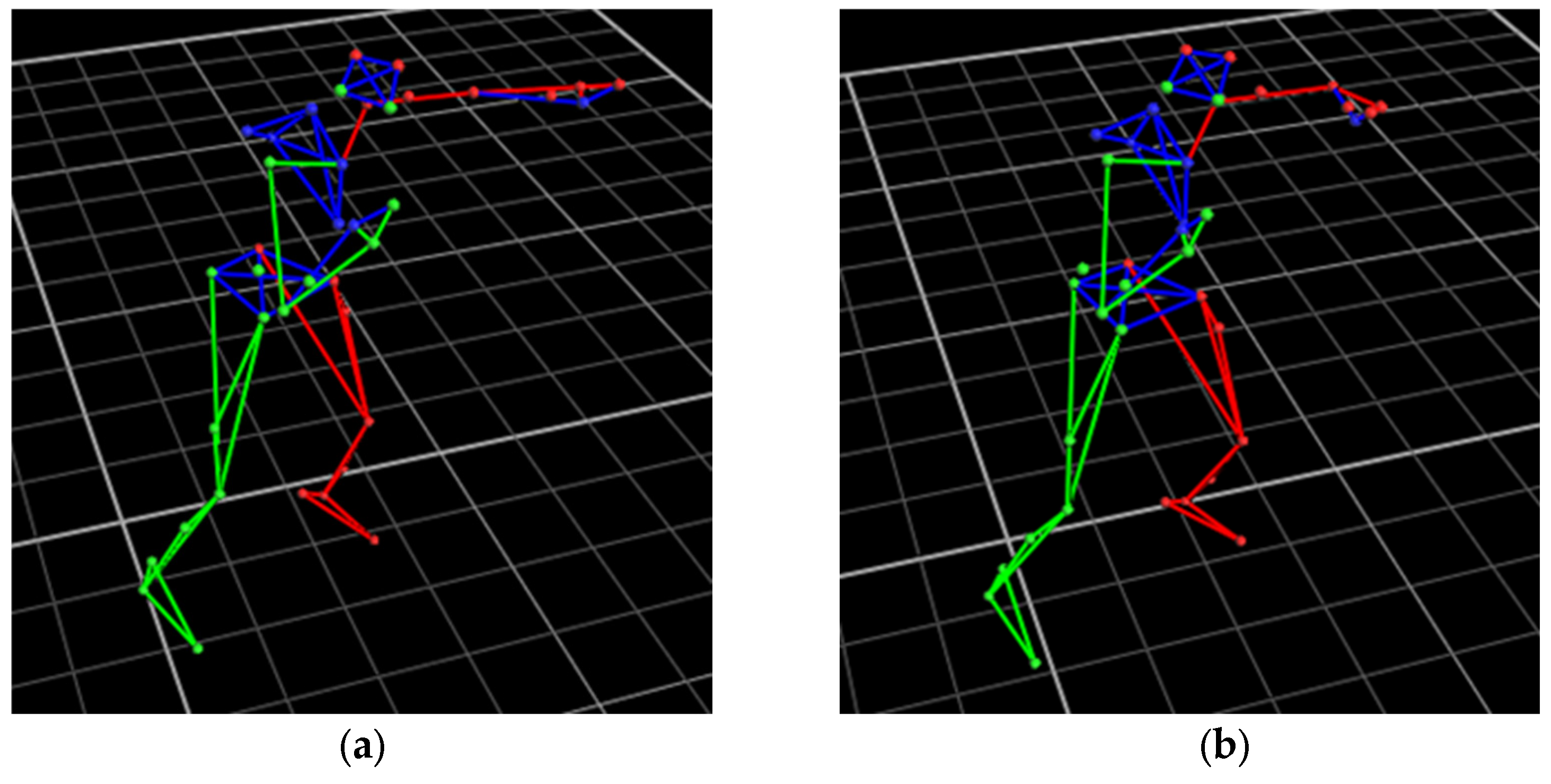

2.1.4. Optical Motion Capture

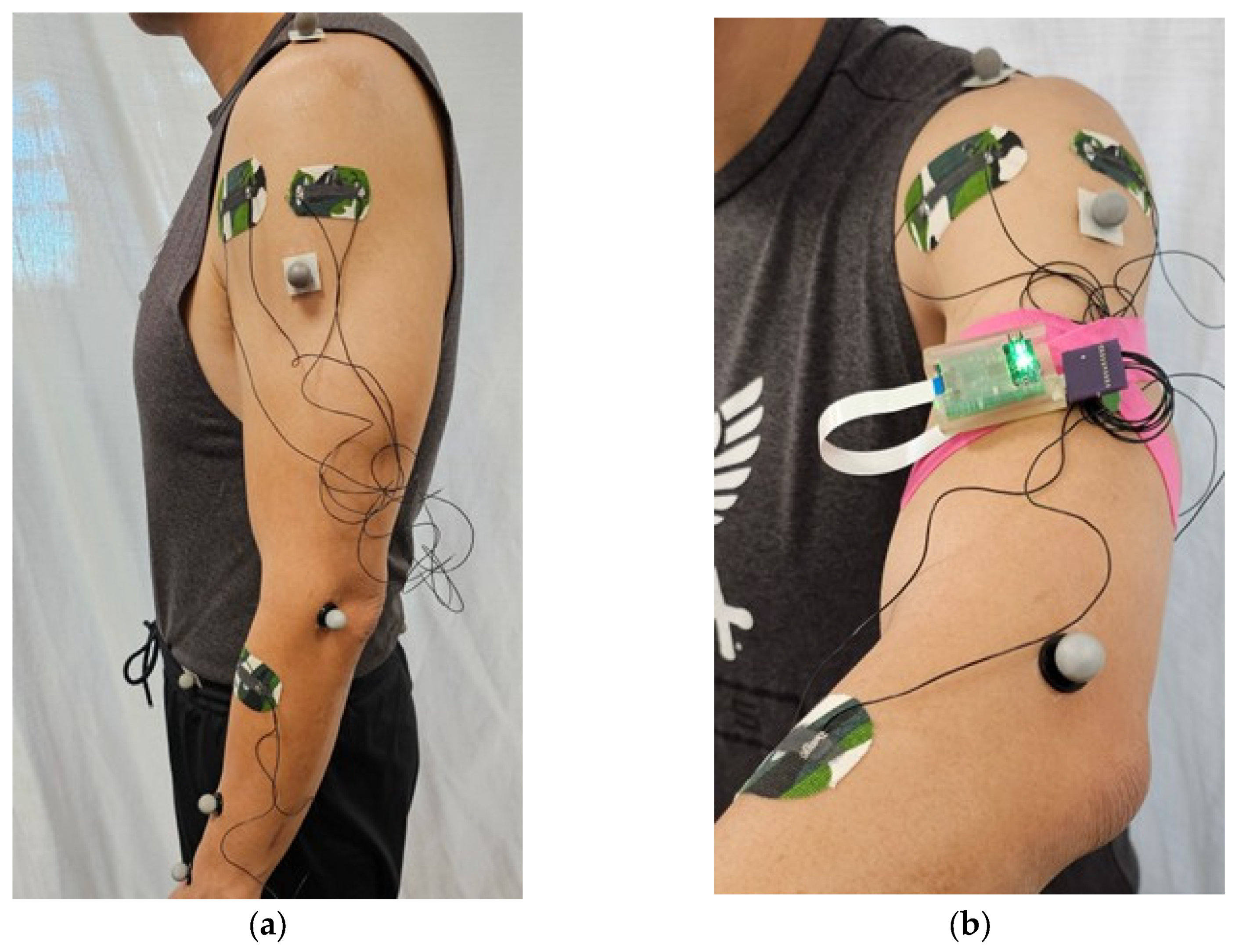

2.1.5. Human Participant Study for Boxing

2.2. Data Processing Method

2.3. Punch Detection Method

| Algorithm 1. Punch detection procedure by Vales-Alonso et al. [6] | |
|---|---|
| 1 | Input: Points , Powers , Distances , Calibration cluster , , |
| 2 | Output: Punch times , , , |
| 3 | Parameters: , , , , , |
| 4 | ; |
| 5 | ; |
| 6 | for all i; |
| 7 | repeat |
| 8 | for all i do |
| | //Possible punch section |
| 9 | if , otherwise 0; |
| 10 | end |
| | //Punch confirmation |
| 11 | if less than consecutive 1s; |
| 12 | for each i such that do |
| | //Threshold updating |
| 13 | for to ; |
| 14 | end |
| 15 | ; |
| 16 | until ; |
| | //Time extraction |
| 17 | ; |
| 18 | for each i such that do |
| 19 | number of consecutive 1s starting at index i; |
| 20 | Append time of index to ; |
| 21 | end |
| 22 | Return |
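The mathematical symbols in Algorithm 1 did not survive extraction, so the sketch below illustrates only the structure that remains legible: a binary flag per sample (line 9), removal of flagged runs too short to be punches (line 11), and one time stamp per confirmed run (lines 17–21). It is a hypothetical Python rendering, not the implementation of Vales-Alonso et al. [6]. It assumes a single distance signal compared with a fixed threshold, omits the threshold-updating loop (lines 13–16), and reports the middle sample of each run as the punch time. The names `flag_candidates`, `confirm_punches`, `extract_times`, `threshold`, and `min_run` are illustrative.

```python
from typing import Iterator, List, Sequence, Tuple


def runs_of_ones(flags: Sequence[int]) -> Iterator[Tuple[int, int]]:
    """Yield (start index, length) for every run of consecutive 1s."""
    i = 0
    while i < len(flags):
        if flags[i] == 1:
            start = i
            while i < len(flags) and flags[i] == 1:
                i += 1
            yield start, i - start
        else:
            i += 1


def flag_candidates(distances: Sequence[float], threshold: float) -> List[int]:
    """Line 9 (sketch): 1 where the distance exceeds a fixed threshold, otherwise 0."""
    return [1 if d > threshold else 0 for d in distances]


def confirm_punches(flags: Sequence[int], min_run: int) -> List[int]:
    """Line 11 (sketch): reset runs shorter than min_run samples to 0."""
    confirmed = list(flags)  # work on a copy; the input is left unchanged
    for start, length in runs_of_ones(flags):
        if length < min_run:
            confirmed[start:start + length] = [0] * length
    return confirmed


def extract_times(confirmed: Sequence[int], times: Sequence[float]) -> List[float]:
    """Lines 17-21 (sketch): one time stamp per confirmed run.

    Assumption: the middle sample of each run is reported as the punch time.
    """
    return [times[start + length // 2] for start, length in runs_of_ones(confirmed)]


if __name__ == "__main__":
    distances = [0.1, 2.5, 2.7, 0.2, 3.0, 3.1, 3.2, 2.9, 0.1]  # hypothetical signal
    times = [k / 100 for k in range(len(distances))]  # hypothetical 100 Hz sampling
    flags = flag_candidates(distances, threshold=1.0)
    confirmed = confirm_punches(flags, min_run=3)
    print(flags)                            # [0, 1, 1, 0, 1, 1, 1, 1, 0]
    print(confirmed)                        # [0, 0, 0, 0, 1, 1, 1, 1, 0]
    print(extract_times(confirmed, times))  # [0.06]
```

In this toy signal the two-sample excursion at indices 1–2 is discarded as too short, while the four-sample run at indices 4–7 is kept and reported once, which is the behavior lines 11 and 18–20 describe.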

2.4. Punch Classification Models

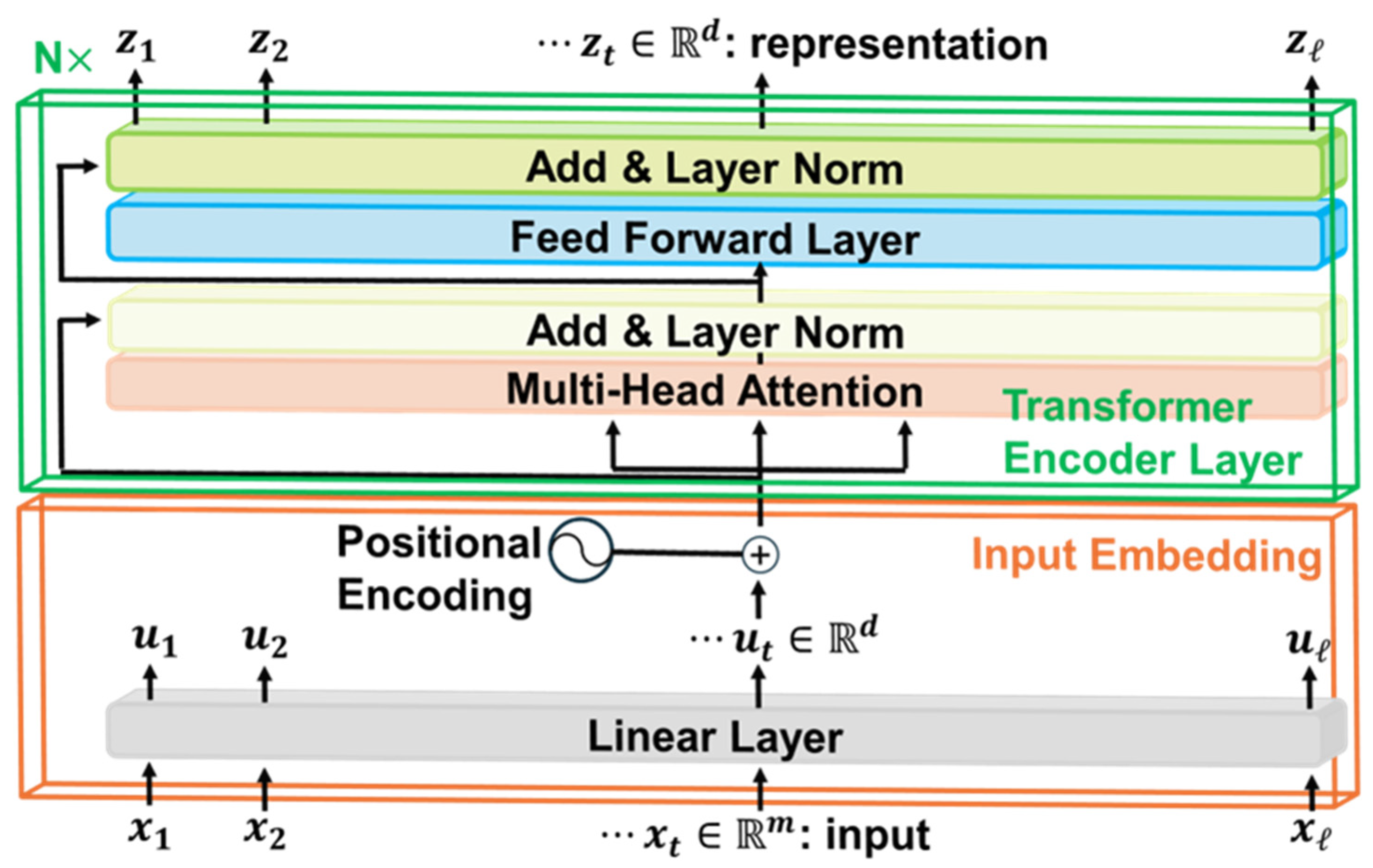

2.4.1. Time Series Transformer

2.4.2. MiniRocket

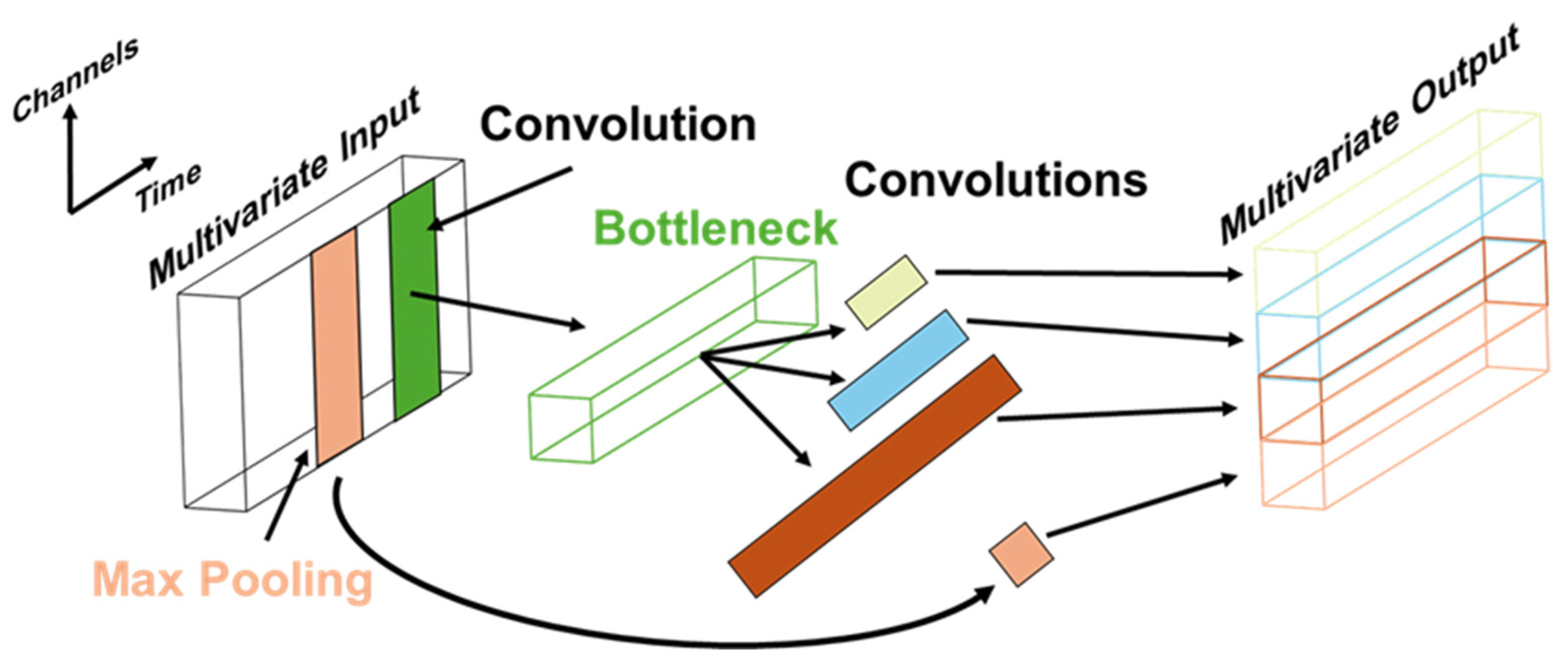

2.4.3. InceptionTime

3. Results and Discussion

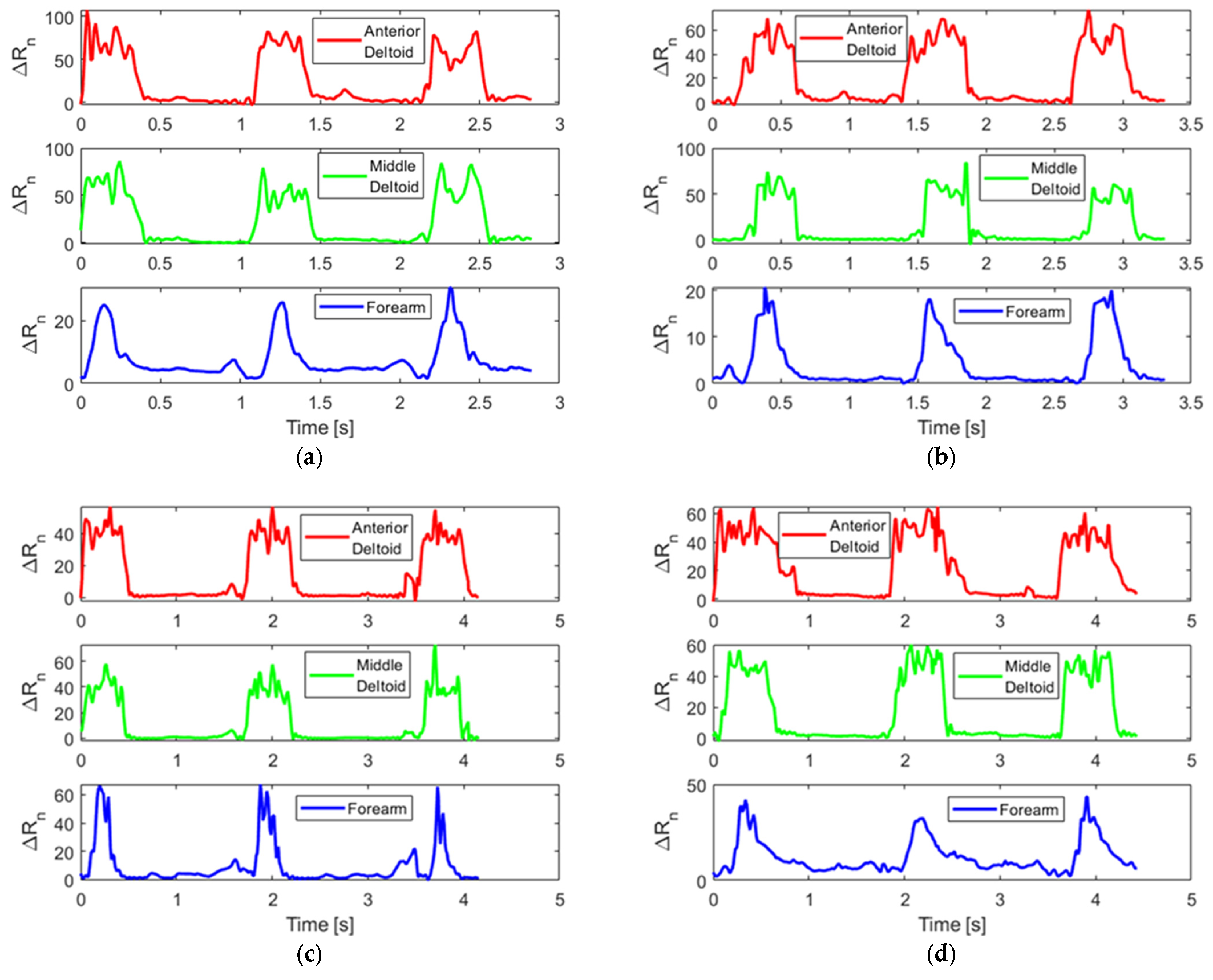

3.1. Dataset Visualization

3.2. Punch Detection

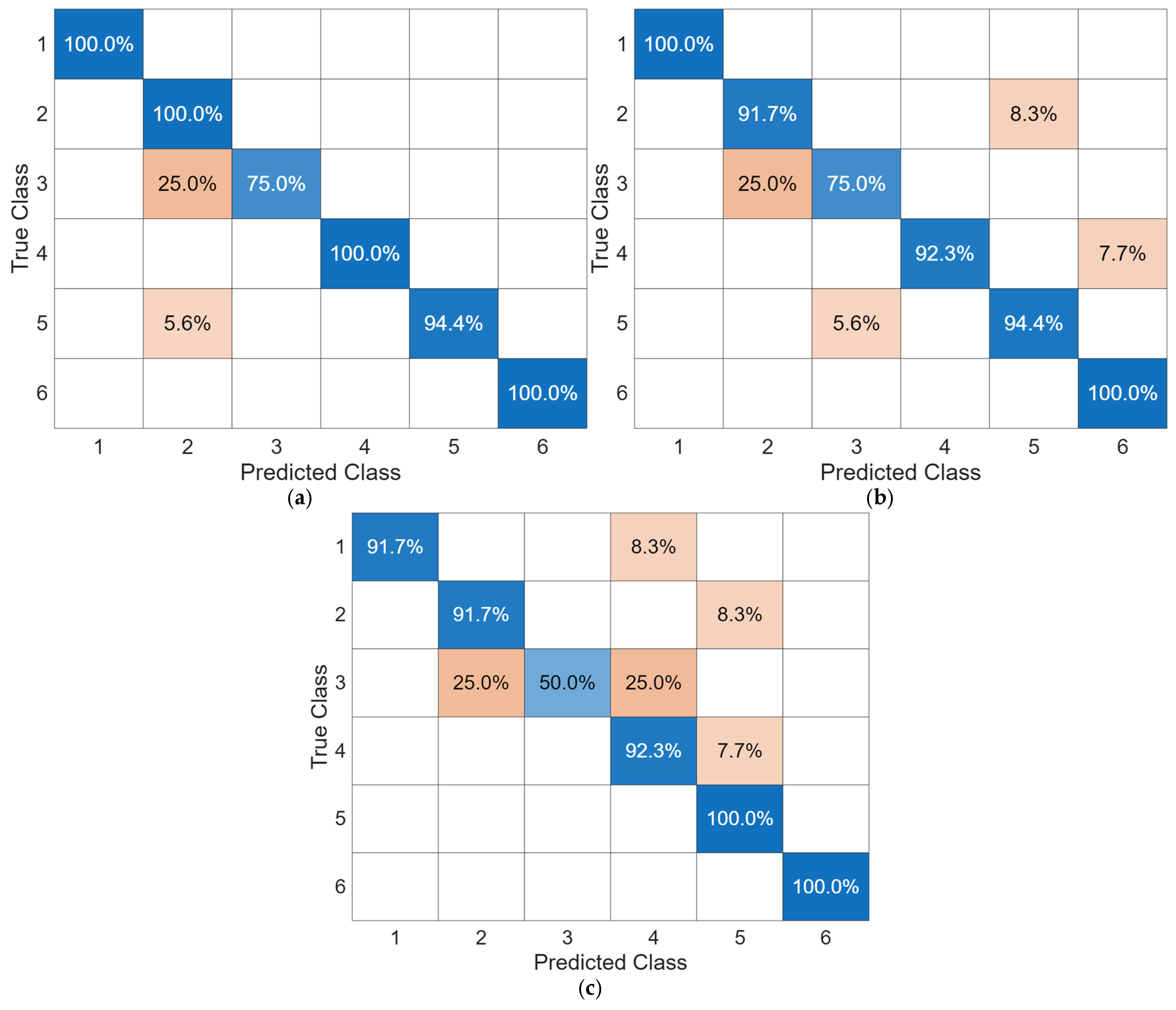

3.3. Punch Classification

3.4. Discussion of Limitations

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SVM | Support Vector Machine |
| IMU | Inertial Measurement Unit |
| DAQ | Data Acquisition |
| GNS | Graphene Nanosheets |
| EC | Ethyl Cellulose |
| ETOH | 200 Proof Ethyl Alcohol |
| K-Tape | Kinesiology Tape |
| MCU | Microcontroller Unit |
| ADC | Analog-to-Digital Converter |
| BLE | Bluetooth Low Energy |
| SLA | Stereolithography |
| MOCAP | Motion Capture |
| IR | Infrared |
| PCB | Printed Circuit Board |
| PC | Personal Computer |
| BOB | Body Opponent Bag |
| TST | Time Series Transformer |
| ML | Machine Learning |
| MiniRocket | Minimally Random Convolutional Kernel Transform |
| CMA-ES | Covariance Matrix Adaptation Evolution Strategies |
| PSO | Particle Swarm Optimization |
| Cobyla | Constrained Optimization by Linear Approximation |
| Fast-GA | Fast Genetic Algorithm |
| LLM | Large Language Model |
| NLP | Natural Language Processing |
| PPV | Proportion of Positive Values |
| CNN | Convolutional Neural Network |

References

1. Lindner, J. Boxing Popularity Statistics and Trends in 2024. Gitnux. Available online: https://gitnux.org/boxing-popularity-statistics/ (accessed on 3 May 2024).

2. PunchLab. PunchLab | Track Punching Bag, Follow Combat Workouts. Available online: https://punchlab.net/ (accessed on 22 April 2024).

3. Kasiri-Bidhendi, S.; Fookes, C.; Morgan, S.; Martin, D.T.; Sridharan, S. Combat Sports Analytics: Boxing Punch Classification Using Overhead Depth Imagery. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 9 December 2015; IEEE Computer Society: New York, NY, USA, 2015; pp. 4545–4549.

4. Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297.

5. Stefański, P.; Jach, T.; Kozak, J. Classification of Punches in Olympic Boxing Using Static RGB Cameras. In Proceedings of the Computational Collective Intelligence, Leipzig, Germany, 9–11 September 2024; Nguyen, N.T., Botzheim, J., Gulyás, L., Núñez, M., Treur, J., Vossen, G., Kozierkiewicz, A., Eds.; Springer Nature: Cham, Switzerland, 2023; pp. 540–551.

6. Vales-Alonso, J.; González-Castaño, F.J.; López-Matencio, P.; Gil-Castiñeira, F. A Nonsupervised Learning Approach for Automatic Characterization of Short-Distance Boxing Training. IEEE Trans. Syst. Man Cybern. Syst. 2023, 53, 7038–7052.

7. Buśko, K.; Staniak, Z.; Szark-Eckardt, M.; Nikolaidis, P.T.; Mazur-Różycka, J.; Łach, P.; Michalski, R.; Gajewski, J.; Górski, M. Measuring the Force of Punches and Kicks among Combat Sport Athletes Using a Modified Punching Bag with an Embedded Accelerometer. Acta Bioeng. Biomech. 2016, 18, 47–54.

8. Hykso. The Science Behind Hykso. Available online: https://shop.hykso.com/pages/the-science-behind-hykso (accessed on 24 February 2025).

9. Chua, J. Using Wearable Sensors in Combat Sports. Available online: https://sportstechnologyblog.com/2019/09/02/using-wearable-sensors-in-combat-sports/ (accessed on 22 April 2024).

10. StrikeTec. Experience StrikeTec. Available online: https://striketec.com/ (accessed on 22 April 2024).

11. Hanada, Y.; Hossain, T.; Yokokubo, A.; Lopez, G. BoxerSense: Punch Detection and Classification Using IMUs. In Proceedings of the Sensor- and Video-Based Activity and Behavior Computing, Zürich, Switzerland, 20–22 October 2021; Ahad, M.A.R., Inoue, S., Roggen, D., Fujinami, K., Eds.; Springer Nature: Singapore, 2022; pp. 95–114.

12. Lin, Y.A.; Zhao, Y.; Wang, L.; Park, Y.; Yeh, Y.J.; Chiang, W.H.; Loh, K.J. Graphene K-Tape Meshes for Densely Distributed Human Motion Monitoring. Adv. Mater. Technol. 2021, 6, 2000861.

13. Lee, A.; Dionicio, P.; Farcas, E.; Godino, J.; Patrick, K.; Wyckoff, E.; Loh, K.J.; Gombatto, S. Physical Therapists’ Acceptance of a Wearable, Fabric-Based Sensor System (Motion Tape) for Use in Clinical Practice: Qualitative Focus Group Study. JMIR Hum. Factors 2024, 11, e55246.

14. Huang, S.-C.; Lin, Y.-A.; Pierce, T.; Wyckoff, E.; Loh, K.J. Measuring the Golf Swing Pattern Using Motion Tape for Feedback and Fault Detection. In Proceedings of the 14th International Workshop on Structural Health Monitoring, Stanford, CA, USA, 12–14 September 2023.

15. Manna, K.; Wang, L.; Loh, K.J.; Chiang, W.-H. Printed Strain Sensors Using Graphene Nanosheets Prepared by Water-Assisted Liquid Phase Exfoliation. Adv. Mater. Interfaces 2019, 6, 1900034.

16. Loh, K.; Lin, Y.-A. Smart Elastic Fabric Tape for Distributed Skin Strain, Movement, and Muscle Engagement Monitoring. U.S. Patent 2024/12,156,725, 3 December 2024.

17. Pierce, T.; Lin, Y.-A.; Loh, K.J. Wireless Gait and Respiration Monitoring Using Nanocomposite Sensors. In Proceedings of the 14th International Workshop on Structural Health Monitoring, Stanford, CA, USA, 12–14 September 2023.

18. Davis, R.B.; Õunpuu, S.; Tyburski, D.; Gage, J.R. A Gait Analysis Data Collection and Reduction Technique. Hum. Mov. Sci. 1991, 10, 575–587.

19. Zerveas, G.; Jayaraman, S.; Patel, D.; Bhamidipaty, A.; Eickhoff, C. A Transformer-Based Framework for Multivariate Time Series Representation Learning. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, Singapore, 14–18 August 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 2114–2124.

20. Mahalanobis, P.C. Reprint of: Mahalanobis, P.C. (1936) “On the Generalised Distance in Statistics.” Sankhya A 2018, 80, 1–7.

21. Dempster, A.; Schmidt, D.F.; Webb, G.I. MiniRocket: A Very Fast (Almost) Deterministic Transform for Time Series Classification. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, Singapore, 14–18 August 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 248–257.

22. Ismail Fawaz, H.; Lucas, B.; Forestier, G.; Pelletier, C.; Schmidt, D.F.; Weber, J.; Webb, G.I.; Idoumghar, L.; Muller, P.-A.; Petitjean, F. InceptionTime: Finding AlexNet for Time Series Classification. Data Min. Knowl. Discov. 2020, 34, 1936–1962.

23. Bagnall, A.; Lines, J.; Bostrom, A.; Large, J.; Keogh, E. The Great Time Series Classification Bake Off: A Review and Experimental Evaluation of Recent Algorithmic Advances. Data Min. Knowl. Discov. 2017, 31, 606–660.

24. Tan, C.W.; Bergmeir, C.; Petitjean, F.; Webb, G.I. UCR Time Series Extrinsic Regression Archive; Monash University: Melbourne, Australia; UEA: Norwich, UK, 2020.

25. Oguiza, I.; Rodriguez-Fernandez, V.; Neoh, D.; filipj8; J-M; Kainkaryam, R.; Mistry, D.; Yang, Z.; Williams, D.; Cho, R.; et al. TimeseriesAI/Tsai: v0.3.9. Available online: https://zenodo.org/records/10647659 (accessed on 10 August 2025).

26. Bennet, P.; Doerr, C.; Moreau, A.; Rapin, J.; Teytaud, F.; Teytaud, O. Nevergrad: Black-Box Optimization Platform. ACM SIGEVOlution 2021, 14, 8–15.

27. Hansen, N.; Ostermeier, A. Adapting Arbitrary Normal Mutation Distributions in Evolution Strategies: The Covariance Matrix Adaptation. In Proceedings of the IEEE International Conference on Evolutionary Computation, Nagoya, Japan, 20–22 May 1996; pp. 312–317.

28. Jones, D.R.; Schonlau, M.; Welch, W.J. Efficient Global Optimization of Expensive Black-Box Functions. J. Glob. Optim. 1998, 13, 455–492.

29. Kennedy, J.; Eberhart, R. Particle Swarm Optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

30. Powell, M.J.D. A Direct Search Optimization Method That Models the Objective and Constraint Functions by Linear Interpolation. In Advances in Optimization and Numerical Analysis; Gomez, S., Hennart, J.-P., Eds.; Springer: Dordrecht, The Netherlands, 1994; pp. 51–67. ISBN 978-94-015-8330-5.

31. Doerr, B.; Le, H.P.; Makhmara, R.; Nguyen, T.D. Fast Genetic Algorithms. In Proceedings of the Genetic and Evolutionary Computation Conference, Berlin, Germany, 1 July 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 777–784.

32. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4 December 2017; Curran Associates Inc.: Red Hook, NY, USA, 2017; pp. 6000–6010.

33. OpenAI. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774.

34. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

35. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

36. Lin, M.; Chen, Q.; Yan, S. Network in Network. arXiv 2014, arXiv:1312.4400.

37. Lin, Y.-A.; Loh, K.J. Wearable Patterned Nanocomposite Circuits for Temperature Compensated Strain Sensing. Smart Mater. Struct. 2025, 34, 065036.

| Set Number | Punch Types | Number of Trials | Weight | Shadowboxing | Description |
|---|---|---|---|---|---|
| 1 | Jab | 5 | - | Yes | Jabs shadowboxing |
| 2 | Jab | 5 | 5 lb | Yes | Jabs shadowboxing with 5 lb dumbbell |
| 3 | Jab | 2 | - | No | Jabs striking heavy bag |
| 4 | Lead hook | 5 | - | Yes | Lead hooks shadowboxing |
| 5 | Lead hook | 5 | 5 lb | Yes | Lead hooks shadowboxing with 5 lb dumbbell |
| 6 | Lead hook | 2 | - | No | Lead hooks striking heavy bag |

| Models | Batch Size | Learning Rate | Dropout (Encoder) | Dropout (Fully Connected) | Dropout (Conv) | Max Dilations | Number of Filters | Conv Kernel Size (ks) | Number of Layers |
|---|---|---|---|---|---|---|---|---|---|
| TST | 39 | 1.386 × 10⁻³ | 1.084 × 10⁻⁵ | 3.815 × 10⁻⁵ | - | - | - | - | 4 |
| MiniRocket | 32 | 0.560 | - | 1.047 × 10⁻⁵ | - | 39 | - | - | - |
| InceptionTime | 31 | 1.072 × 10⁻³ | - | 1.125 × 10⁻⁴ | 5.544 × 10⁻⁵ | - | 25 | 28 | - |

| Punch Types | | | | |
|---|---|---|---|---|
| Jabs | 92.5% | 92.5% | 100% | 96.1% |
| Jabs (5 lb) | 89.6% | 89.6% | 100% | 94.5% |
| Jabs (BOB) | 97.6% | 97.6% | 100% | 98.8% |
| Lead hooks | 88.8% | 100% | 88.8% | 94.1% |
| Lead hooks (5 lb) | 99.0% | 100% | 99.0% | 99.5% |
| Lead hooks (BOB) | 70.4% | 97.4% | 71.7% | 82.6% |
| Overall | 90.5% | 95.8% | 94.3% | 95.0% |
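The column headers of the punch detection table were lost in extraction, but each row is internally consistent with two standard relationships: the fourth column matches the F1 score (the harmonic mean) of the second and third columns, which are presumably precision (PPV) and recall, and the first column matches TP/(TP + FP + FN) written in terms of those same two quantities. The sketch below recomputes both from the reported percentages. The column interpretation and the 0.15 percentage-point tolerance for rounding are assumptions made here, not definitions taken from the paper.

```python
from typing import Dict, Tuple

# Percentages copied from the punch detection table, columns 1-4 in order.
ROWS: Dict[str, Tuple[float, float, float, float]] = {
    "Jabs": (92.5, 92.5, 100.0, 96.1),
    "Jabs (5 lb)": (89.6, 89.6, 100.0, 94.5),
    "Jabs (BOB)": (97.6, 97.6, 100.0, 98.8),
    "Lead hooks": (88.8, 100.0, 88.8, 94.1),
    "Lead hooks (5 lb)": (99.0, 100.0, 99.0, 99.5),
    "Lead hooks (BOB)": (70.4, 97.4, 71.7, 82.6),
    "Overall": (90.5, 95.8, 94.3, 95.0),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def tp_fraction(precision: float, recall: float) -> float:
    """TP / (TP + FP + FN) expressed through precision P and recall R.

    (TP + FP + FN) / TP = (TP + FP) / TP + (TP + FN) / TP - 1 = 1/P + 1/R - 1
    """
    return 1 / (1 / precision + 1 / recall - 1)


def row_is_consistent(cols: Tuple[float, float, float, float], tol: float = 0.15) -> bool:
    """Compare columns 1 and 4 with values recomputed from columns 2 and 3."""
    c1, c2, c3, c4 = cols
    p, r = c2 / 100, c3 / 100
    return (abs(100 * f1_score(p, r) - c4) <= tol
            and abs(100 * tp_fraction(p, r) - c1) <= tol)


if __name__ == "__main__":
    for name, cols in ROWS.items():
        p, r = cols[1] / 100, cols[2] / 100
        print(f"{name:<18} F1 = {100 * f1_score(p, r):5.1f}%  "
              f"TP/(TP+FP+FN) = {100 * tp_fraction(p, r):5.1f}%  "
              f"consistent: {row_is_consistent(cols)}")
```

Because both formulas are symmetric in the two middle columns, the check holds whichever of them is precision; it also shows why the "Lead hooks (BOB)" row has the lowest first-column value, since a recall of 71.7% pulls both the F1 score and TP/(TP + FP + FN) down despite a precision near 97%.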

| Punch Types | TST | MiniRocket | InceptionTime |
|---|---|---|---|
| Jabs | 100% | 100% | 91.7% |
| Jabs (5 lb) | 100% | 91.7% | 91.7% |
| Jabs (BOB) | 75.0% | 75.0% | 50.0% |
| Lead hooks | 100% | 92.3% | 92.3% |
| Lead hooks (5 lb) | 94.4% | 94.4% | 100% |
| Lead hooks (BOB) | 100% | 100% | 100% |
| Overall | 96.9% | 93.8% | 92.3% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huang, S.-C.; Pierce, T.; Lin, Y.-A.; Loh, K.J. Boxing Punch Detection and Classification Using Motion Tape and Machine Learning. Sensors 2025, 25, 5027. https://doi.org/10.3390/s25165027
