This paper uses a range of evaluation metrics to compare the signal before and after noise reduction. Specifically, the trained model saved after training is used to run inference on the test set, and the metrics are computed between the denoised signal produced by inference and the original clean signal. In addition, time–frequency analysis of the denoised and original signals is used to present the experimental results more fully. In the metrics below, the predicted value is the denoised signal (i.e., the model output), and the true value is the original clean (noise-free) signal.

6.1. Evaluation Metrics

SNR (signal-to-noise ratio) [

25]: In this manuscript, the SNR is defined here for the first time. SNR measures the ratio between signal power and noise power. It is usually used to represent the strength of a signal in a noisy environment. The higher the SNR value, the stronger the useful information in the signal is compared with the noise, which indicates that the quality of the signal is better:

where

is the power of the signal and

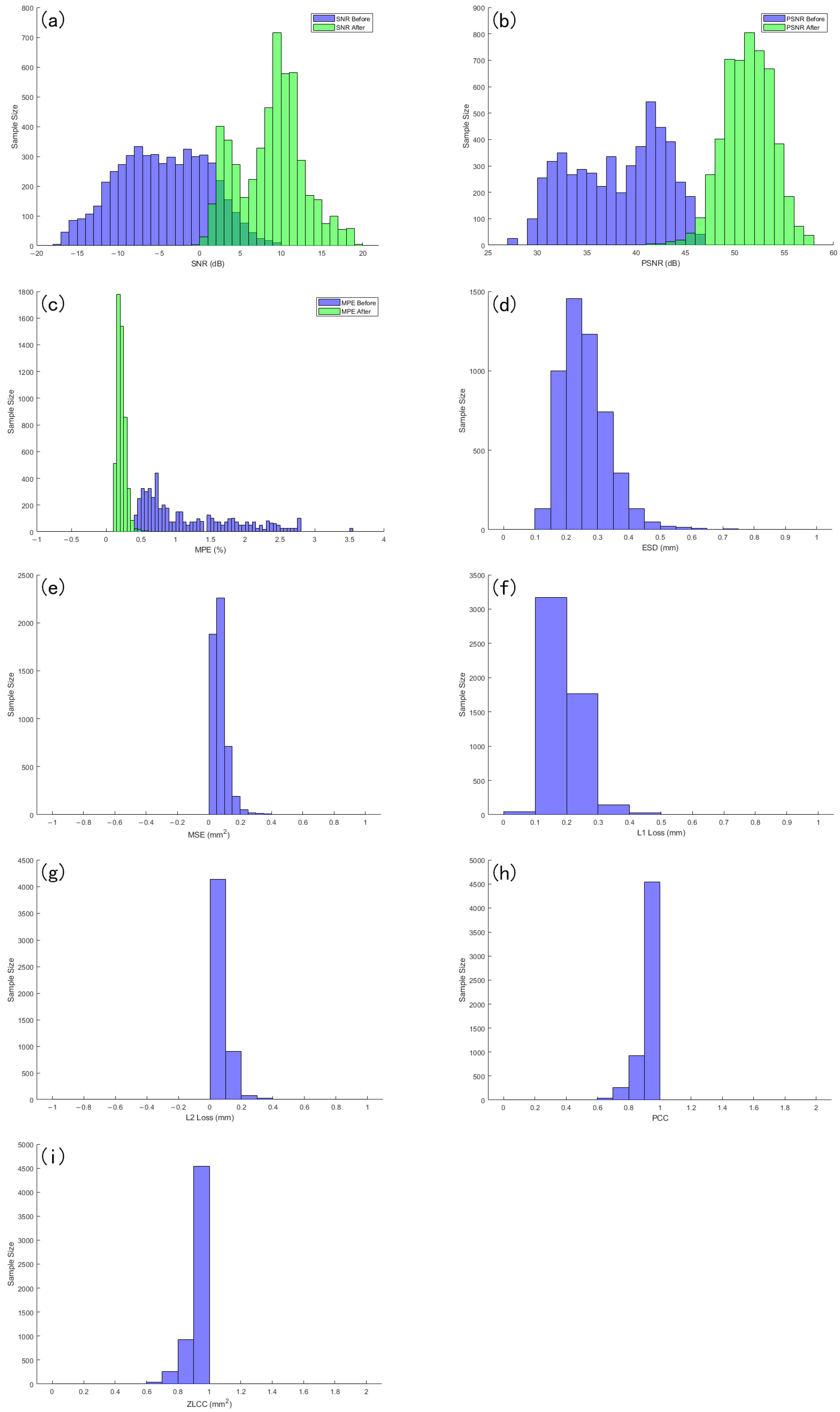

is the power of the noise. In practical applications, the power of signal and noise is usually estimated by its mean square value. To assess the overall denoising performance of the proposed model across the entire dataset, a histogram-based comparison was conducted based on the signal-to-noise ratio (SNR). As illustrated in

Figure 19a, the x-axis represents the SNR values, while the y-axis indicates the number of samples corresponding to each SNR range. The results reveal a significant rightward shift in the distribution after denoising, demonstrating that a majority of the signals achieved higher SNR values compared to their original noisy counterparts. This confirms that the model effectively enhances the signal quality and reduces noise across a wide range of input conditions.
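The SNR computation can be sketched as follows. This is a minimal illustration that assumes the standard decibel form SNR = 10·log10(P_signal / P_noise), estimates both powers by mean square values, and treats the difference between a signal and the clean reference as the noise. The names `mean_square` and `snr_db` and the toy sequences are illustrative assumptions, not the paper's code or data.

```python
import math

def mean_square(x):
    """Mean square value of a sequence, used here as a power estimate."""
    return sum(v * v for v in x) / len(x)

def snr_db(clean, estimate):
    """SNR in dB, treating (estimate - clean) as the noise component."""
    noise = [e - c for c, e in zip(clean, estimate)]
    p_noise = mean_square(noise)
    if p_noise == 0.0:
        return math.inf  # identical signals: no residual noise
    return 10.0 * math.log10(mean_square(clean) / p_noise)

# Toy sequences (assumed for illustration only).
clean = [1.0, -1.0, 1.0, -1.0]
noisy = [1.5, -0.5, 1.5, -0.5]     # constant error of 0.5
denoised = [1.1, -0.9, 1.1, -0.9]  # constant error of 0.1

print(snr_db(clean, noisy), snr_db(clean, denoised))
```

Computing `snr_db` once for the noisy input and once for the model output, per test sample, gives the two sets of values that a before/after histogram such as Figure 19a compares.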

PSNR (peak signal-to-noise ratio) [26]: PSNR measures the peak signal-to-noise ratio between the denoised signal and the clean signal. It expresses the difference between the two through the mean square error (MSE), converted to a signal-to-noise ratio on a logarithmic scale. The higher the PSNR, the better the reconstruction quality of the signal:

$$\mathrm{PSNR} = 10\log_{10}\left(\frac{\mathrm{MAX}^2}{\mathrm{MSE}}\right)$$

where $\mathrm{MAX}$ is the maximum possible value of the signal and $\mathrm{MSE}$ is the mean square error.

Figure 19b shows the distribution of the number of samples across PSNR intervals before and after denoising. The horizontal axis represents the PSNR value, and the vertical axis represents the number of samples in each interval. Before denoising, the PSNR is mostly concentrated in the lower range with a relatively scattered distribution. After denoising, the distribution shifts clearly to the right and the number of samples in the higher PSNR range increases markedly, indicating that denoising improves signal quality. Most samples show a substantial PSNR gain, demonstrating the advantage of the proposed method in signal fidelity.
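A minimal sketch of the PSNR computation, assuming the common form PSNR = 10·log10(MAX² / MSE). The source does not state how the maximum possible value is chosen for vibration signals, so taking it as the largest absolute value of the clean signal is an assumption here, as are the function name `psnr_db` and the toy data.

```python
import math

def psnr_db(clean, estimate, peak=None):
    """PSNR in dB between a clean reference and an estimate."""
    mse = sum((c - e) ** 2 for c, e in zip(clean, estimate)) / len(clean)
    if peak is None:
        # Assumption: use the largest absolute clean amplitude as MAX.
        peak = max(abs(c) for c in clean)
    if mse == 0.0:
        return math.inf  # perfect reconstruction
    return 10.0 * math.log10(peak ** 2 / mse)

# Toy data (illustrative only): every point is off by 0.2, peak is 2.
clean = [0.0, 1.0, 2.0, -2.0]
estimate = [0.2, 1.2, 2.2, -1.8]
print(psnr_db(clean, estimate))
```

Passing an explicit `peak` (for example, a sensor's full-scale value) keeps PSNR values comparable across samples whose amplitudes differ.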

MPE (mean percentage error) [27]: MPE measures the relative error between the reconstructed signal and the clean signal. It computes the ratio of the error at each point to the real signal and averages over all data points. Because it expresses the error relative to the signal amplitude, MPE helps in evaluating the performance of the noise reduction model:

$$\mathrm{MPE} = \frac{100\%}{N}\sum_{i=1}^{N}\frac{y_i - \hat{y}_i}{y_i}$$

where $y_i$ and $\hat{y}_i$ are the real signal and the reconstructed signal at the $i$th point, respectively, and $N$ is the number of points.

Figure 19c shows the distribution of the number of samples across MPE intervals before and after denoising. The horizontal axis represents the MPE value, and the vertical axis represents the number of samples in each interval. Before noise reduction, the MPE distribution is biased towards larger values, indicating that the noisy signal deviates strongly from the clean signal. After denoising, the distribution shifts clearly towards low values, becomes more concentrated, and contains far fewer high-error samples. This change indicates that the noise reduction method reduces the relative error of the signal and improves the accuracy and consistency of signal reconstruction.
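A minimal sketch of the MPE computation, assuming the usual signed definition (the mean of (y_i − ŷ_i) / y_i, expressed in percent). Two choices here are assumptions not stated in the source: points where the clean signal is numerically zero are skipped to avoid division by zero, and an optional `absolute` flag gives the absolute-value variant. The name `mpe_percent` is illustrative.

```python
def mpe_percent(clean, estimate, absolute=False, eps=1e-12):
    """Mean percentage error of an estimate relative to a clean reference."""
    terms = []
    for c, e in zip(clean, estimate):
        if abs(c) <= eps:
            continue  # assumption: skip points where the reference is ~0
        ratio = (c - e) / c
        terms.append(abs(ratio) if absolute else ratio)
    if not terms:
        raise ValueError("no points with a non-zero reference value")
    return 100.0 * sum(terms) / len(terms)

# Toy data (illustrative only).
clean = [2.0, 4.0]
estimate = [1.0, 5.0]
print(mpe_percent(clean, estimate))                  # signed errors partly cancel
print(mpe_percent(clean, estimate, absolute=True))   # no cancellation
```

With the signed form, positive and negative errors can cancel, so a small MPE does not by itself guarantee small pointwise errors; the absolute variant avoids this.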

ESD (error standard deviation) [28]: ESD is the standard deviation of the signal reconstruction error and reflects how strongly the error fluctuates. The smaller the ESD, the smaller the fluctuation of the reconstruction error and the better the denoising effect:

$$\mathrm{ESD} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(e_i - \bar{e}\right)^2}, \qquad e_i = y_i - \hat{y}_i$$

where $y_i$ and $\hat{y}_i$ are the real and reconstructed signals at the $i$th point, $\bar{e}$ is the mean error, and $N$ is the number of points. ESD is mainly used to evaluate the stability and consistency of the model during signal reconstruction.

Figure 19d shows the ESD distribution of the denoised signal, with the horizontal axis representing the ESD value after denoising and the vertical axis representing the number of samples in the corresponding interval. The ESD values of most samples fall in the lower range and the histogram is concentrated, indicating that the reconstruction error of the denoised signal fluctuates little around its mean. This shows that the proposed denoising method reconstructs the signal stably and consistently.
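A minimal sketch of the error standard deviation, assuming the population form (division by N rather than N − 1; the source does not say which is used). The function name `esd` and the toy data are illustrative.

```python
import math

def esd(clean, estimate):
    """Standard deviation of the pointwise reconstruction error."""
    errors = [c - e for c, e in zip(clean, estimate)]
    mean_err = sum(errors) / len(errors)
    variance = sum((x - mean_err) ** 2 for x in errors) / len(errors)
    return math.sqrt(variance)

# Toy data (illustrative only).
clean = [0.0, 0.0, 0.0, 0.0]
fluctuating = [1.0, -1.0, 1.0, -1.0]  # error alternates in sign
offset = [0.5, 0.5, 0.5, 0.5]         # constant bias, no fluctuation

print(esd(clean, fluctuating), esd(clean, offset))
```

Because the mean error is subtracted, a constant bias contributes nothing to ESD. It therefore measures how much the error fluctuates and complements, rather than replaces, magnitude metrics such as MSE.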

MSE (mean square error) [29]: MSE is a commonly used error measure that quantifies the difference between the real signal and the reconstructed signal. It squares the error at each sample point and takes the mean, which quantifies the accuracy of signal reconstruction. The smaller the MSE, the smaller the difference between the reconstructed signal and the original signal:

$$\mathrm{MSE} = \frac{1}{N}\sum_{i=1}^{N}\left(y_i - \hat{y}_i\right)^2$$

where $y_i$ and $\hat{y}_i$ are the real signal and the reconstructed signal, respectively, and $N$ is the total number of sample points of the signal.

Figure 19e shows the distribution of the MSE of the denoised signal across value ranges. The horizontal axis represents the MSE interval, and the vertical axis represents the number of samples within that interval. The MSE values of most samples are concentrated in the lower range, and the histogram is clearly skewed towards the left, indicating that the error between the denoised signal and the reference signal is relatively small.
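The per-sample MSE values and the interval counts behind a histogram such as Figure 19e can be sketched as follows. The bin edges, the helper name `histogram_counts`, and the three toy signal pairs are assumptions for illustration; they are not the paper's data or binning.

```python
def mse(clean, estimate):
    """Mean square error between two equal-length sequences."""
    if len(clean) != len(estimate):
        raise ValueError("sequences must have the same length")
    return sum((c - e) ** 2 for c, e in zip(clean, estimate)) / len(clean)

def histogram_counts(values, edges):
    """Count values falling in half-open bins [edges[k], edges[k + 1])."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for k in range(len(edges) - 1):
            if edges[k] <= v < edges[k + 1]:
                counts[k] += 1
                break
    return counts

# Toy test set: three (clean, denoised) pairs, not data from the paper.
pairs = [
    ([1.0, 2.0], [1.0, 2.0]),
    ([1.0, 2.0], [1.1, 2.1]),
    ([1.0, 2.0], [1.5, 2.5]),
]
per_sample_mse = [mse(c, d) for c, d in pairs]
counts = histogram_counts(per_sample_mse, [0.0, 0.1, 0.2, 0.3])
print(counts)
```

Each entry of `counts` is the number of test samples whose MSE falls in the corresponding interval, which is the quantity plotted on the vertical axis.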

L1 loss (mean absolute error) [30]: L1 loss is the average absolute value of the signal reconstruction error and is mainly used to measure the accuracy of the model. Compared with MSE, L1 loss is less sensitive to outliers. The smaller the L1 loss, the smaller the error between the reconstructed signal and the original signal:

$$L_1 = \frac{1}{N}\sum_{i=1}^{N}\left|y_i - \hat{y}_i\right|$$

where $y_i$ and $\hat{y}_i$ are the real and reconstructed signals at the $i$th point and $N$ is the number of points. L1 loss is mainly used for regression tasks and signal denoising, especially in the presence of large noise.

Figure 19f shows that the error values are mainly distributed close to zero: the absolute error of most samples remains low, and only a small number of samples fall in the high-value region. This distribution indicates that, after denoising, the model restores the signal accurately in most cases and that the error varies little between samples. In other words, the concentration of the L1 loss at low values reflects both the improvement in denoising accuracy and the robustness of the method across different samples.
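The different outlier sensitivity of the L1 loss and the squared-error loss can be illustrated with a short sketch. The function names and the toy error patterns are assumptions chosen for illustration only.

```python
def l1_loss(clean, estimate):
    """Mean absolute error."""
    return sum(abs(c - e) for c, e in zip(clean, estimate)) / len(clean)

def l2_loss(clean, estimate):
    """Mean square error."""
    return sum((c - e) ** 2 for c, e in zip(clean, estimate)) / len(clean)

# Toy data: a uniform small error versus the same error plus one spike.
clean = [0.0] * 10
small = [0.1] * 10
spike = [0.1] * 9 + [5.0]

l1_ratio = l1_loss(clean, spike) / l1_loss(clean, small)
l2_ratio = l2_loss(clean, spike) / l2_loss(clean, small)
print(l1_ratio, l2_ratio)
```

A single large deviation raises the squared-error loss far more than the absolute-error loss, because squaring weights each error by its own magnitude.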

L2 loss (mean square error) [31]: L2 loss is a commonly used loss function that measures the mean square error between the reconstructed signal and the real signal. Because the error is squared, L2 loss is sensitive to outliers and penalizes large errors heavily, which helps suppress them in many practical tasks. The smaller the L2 loss, the smaller the difference between the reconstructed signal and the original signal:

$$L_2 = \frac{1}{N}\sum_{i=1}^{N}\left(y_i - \hat{y}_i\right)^2$$

where $y_i$ and $\hat{y}_i$ are the real and reconstructed signals at the $i$th point and $N$ is the number of points. L2 loss is often used as the optimization objective in model training, especially in regression and reconstruction tasks.

Figure 19g shows the distribution of the number of samples of the denoised signal across L2 loss intervals. The distribution is clearly concentrated at low values, indicating that most samples perform well on the mean square error (L2 loss) metric and have small reconstruction errors.

The Pearson correlation coefficient (PCC; commonly denoted $r$ or $\rho$) is a standardized measure of the degree of linear correlation between two continuous variables. It is defined as the ratio of the covariance to the product of the respective standard deviations, so its range is strictly limited to $[-1, 1]$: $r = 1$ indicates complete positive correlation, $r = -1$ indicates complete negative correlation, and $r = 0$ indicates no linear relationship. The population Pearson correlation coefficient is denoted $\rho_{X,Y}$ and is defined as the ratio of the covariance of the random variables $X$ and $Y$ to the product of their standard deviations:

$$\rho_{X,Y} = \frac{\operatorname{cov}(X, Y)}{\sigma_X \sigma_Y}$$

where $\sigma_X$ and $\sigma_Y$ are the standard deviations of $X$ and $Y$, respectively. The corresponding sample Pearson correlation coefficient $r$ (also known as the Pearson product–moment correlation coefficient) can be expressed as

$$r = \frac{\sum_{i=1}^{n}\left(x_i - \bar{x}\right)\left(y_i - \bar{y}\right)}{\sqrt{\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^2}\,\sqrt{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}}$$

where $\bar{x}$ and $\bar{y}$ are the sample means. The numerator is proportional to the sample covariance and the denominator to the product of the sample standard deviations; the common normalization factor cancels.

Figure 19h shows the distribution of the number of samples of the denoised signal across PCC intervals. The PCC values are mainly concentrated in the high-correlation interval, with the overall distribution close to 1, indicating that most samples maintain a high degree of linear correlation with the reference signal after denoising. This result indicates that the proposed method not only performs well on error metrics but also preserves the global trend of the signal.
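A minimal sketch of the sample Pearson correlation coefficient, written in the product–moment form in which the normalization factors of the covariance and the standard deviations cancel. The function name `pearson_r` and the toy sequences are illustrative assumptions.

```python
import math

def pearson_r(x, y):
    """Sample Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0.0 or syy == 0.0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)

# Toy data (illustrative only).
x = [1.0, 2.0, 3.0, 4.0]
print(pearson_r(x, [2.0, 4.0, 6.0, 8.0]))    # perfectly linear, same direction
print(pearson_r(x, [8.0, 6.0, 4.0, 2.0]))    # perfectly linear, opposite direction
print(pearson_r(x, [1.0, -1.0, -1.0, 1.0]))  # no linear relationship
```

The coefficient is invariant to scaling and offset of either signal, so a value near 1 indicates that the waveform shape is preserved, not that the amplitudes match.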

Zero-lag cross-correlation (ZLCC) [32] is defined as the value of the cross-correlation function at lag $\tau = 0$. It quantifies the similarity between two signals $f$ and $g$ without any time shift. The non-normalized ZLCC is an integral in continuous time and a vector inner product in discrete time, and it reflects the synchronization of the original signals at the energy level. For continuous signals $f(t)$ and $g(t)$,

$$\mathrm{ZLCC} = (f \star g)(0) = \int_{-\infty}^{\infty} \overline{f(t)}\, g(t)\, dt$$

where “⋆” denotes the cross-correlation operation and the overline denotes complex conjugation, which is omitted for real-valued signals. For real-valued discrete sequences $f[n]$ and $g[n]$ of finite length $N$,

$$\mathrm{ZLCC} = \sum_{n=0}^{N-1} f[n]\, g[n]$$

which is the inner product of the two sequences at zero-lag alignment.

The normalized zero-lag cross-correlation (ZNCC) is obtained by subtracting the respective mean values $\mu_f$ and $\mu_g$ and dividing by the standard deviations $\sigma_f$ and $\sigma_g$:

$$\mathrm{ZNCC} = \frac{1}{N}\sum_{n=0}^{N-1}\frac{\left(f[n] - \mu_f\right)\left(g[n] - \mu_g\right)}{\sigma_f\, \sigma_g}$$

The range of the non-normalized ZLCC is not fixed: a positive value indicates that the two signals reinforce each other in phase under the current alignment, a negative value indicates opposite phase, and the magnitude depends on the signal energy. The ZNCC is strictly limited to $[-1, 1]$: 1 means fully synchronous positive correlation, $-1$ means fully synchronous negative correlation, and 0 means no linear synchronization.

Figure 19i shows the sample size distribution of the denoised signal in different ZLCC value ranges. It can be seen that the ZLCC values are mostly concentrated in the high correlation region close to 1, indicating that without introducing time delay, the denoised signal and the reference signal are highly aligned in the time domain, with good synchronization and similarity. This distribution characteristic indicates that the proposed method can effectively preserve the phase information and overall morphology of the original signal while suppressing noise interference, demonstrating strong time-domain consistency recovery ability.
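The difference between the non-normalized and normalized zero-lag cross-correlation can be sketched for real-valued discrete sequences. The function names `zlcc` and `zncc`, the use of the population standard deviation (division by N), and the toy sequences are assumptions for illustration.

```python
import math

def zlcc(f, g):
    """Non-normalized zero-lag cross-correlation: the inner product of f and g."""
    return sum(a * b for a, b in zip(f, g))

def zncc(f, g):
    """Normalized zero-lag cross-correlation, bounded to [-1, 1]."""
    n = len(f)
    mean_f = sum(f) / n
    mean_g = sum(g) / n
    std_f = math.sqrt(sum((a - mean_f) ** 2 for a in f) / n)
    std_g = math.sqrt(sum((b - mean_g) ** 2 for b in g) / n)
    if std_f == 0.0 or std_g == 0.0:
        raise ValueError("ZNCC is undefined for a constant sequence")
    cov = sum((a - mean_f) * (b - mean_g) for a, b in zip(f, g)) / n
    return cov / (std_f * std_g)

# Toy data (illustrative only): g is f scaled by 10, h is f inverted.
f = [1.0, 2.0, 3.0, 4.0]
g = [10.0, 20.0, 30.0, 40.0]
h = [-1.0, -2.0, -3.0, -4.0]

print(zlcc(f, f), zlcc(f, g))  # grows with signal energy
print(zncc(f, g), zncc(f, h))  # independent of scale; sign follows phase
```

Only the normalized form yields values that can be read against a fixed scale such as "close to 1", since the non-normalized value changes with the amplitude of either signal.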

Table 5a,b list the average values of the evaluation metrics on the test set after denoising, giving a comprehensive view of the denoising performance of the proposed method.
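Averaging per-sample metrics over a test set, as in a summary table of this kind, can be sketched as follows. The helper name `average_metrics`, the metric keys, and the numbers are placeholders for illustration; they are not results from the paper.

```python
def average_metrics(per_sample):
    """Average each metric over a list of per-sample metric dictionaries."""
    if not per_sample:
        raise ValueError("at least one sample is required")
    keys = per_sample[0].keys()
    return {k: sum(s[k] for s in per_sample) / len(per_sample) for k in keys}

# Placeholder values, NOT results from the paper.
toy_results = [
    {"snr_db": 10.0, "mse": 0.5},
    {"snr_db": 20.0, "mse": 0.25},
]
averages = average_metrics(toy_results)
print(averages)
```

One design choice to keep in mind: averaging SNR or PSNR values that are already in decibels is not the same as converting the average power ratio to decibels. The source does not state which convention the table uses.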

6.3. Comparative Experiments

In the comparative experiments, all models were trained and evaluated on the same vibration signal dataset. The proposed BiL-DCAE model was systematically compared with multiple advanced deep learning models, including RNN, GRU, Transformer, DCAE, LSTM-DAE, U-Net, and BiLSTM, as well as several classical denoising methods, including low-pass filtering, band-pass filtering, mean filtering, Savitzky–Golay filtering, Variational Mode Decomposition (VMD), wavelet transform, Wiener filtering, and Kalman filtering.

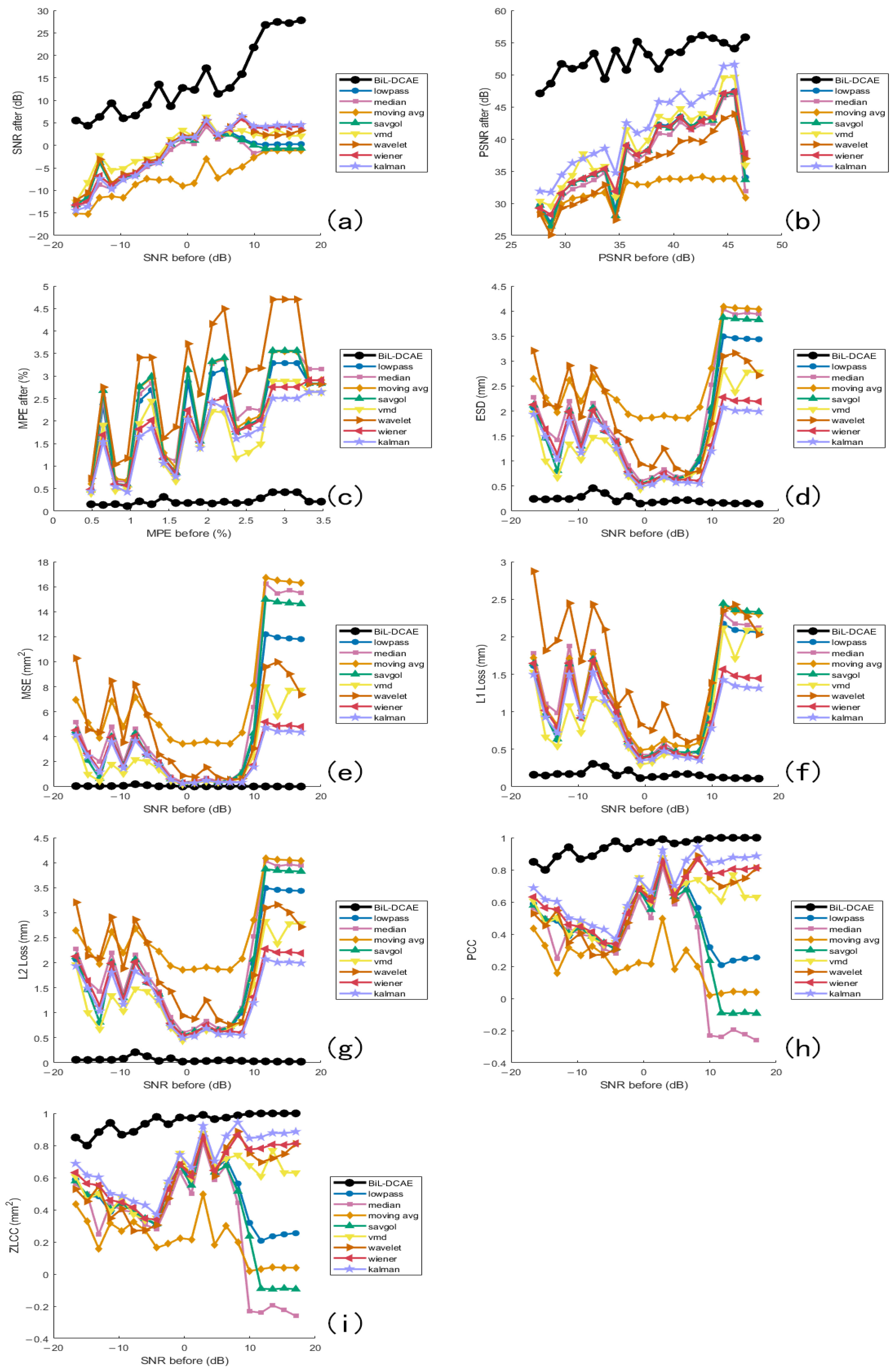

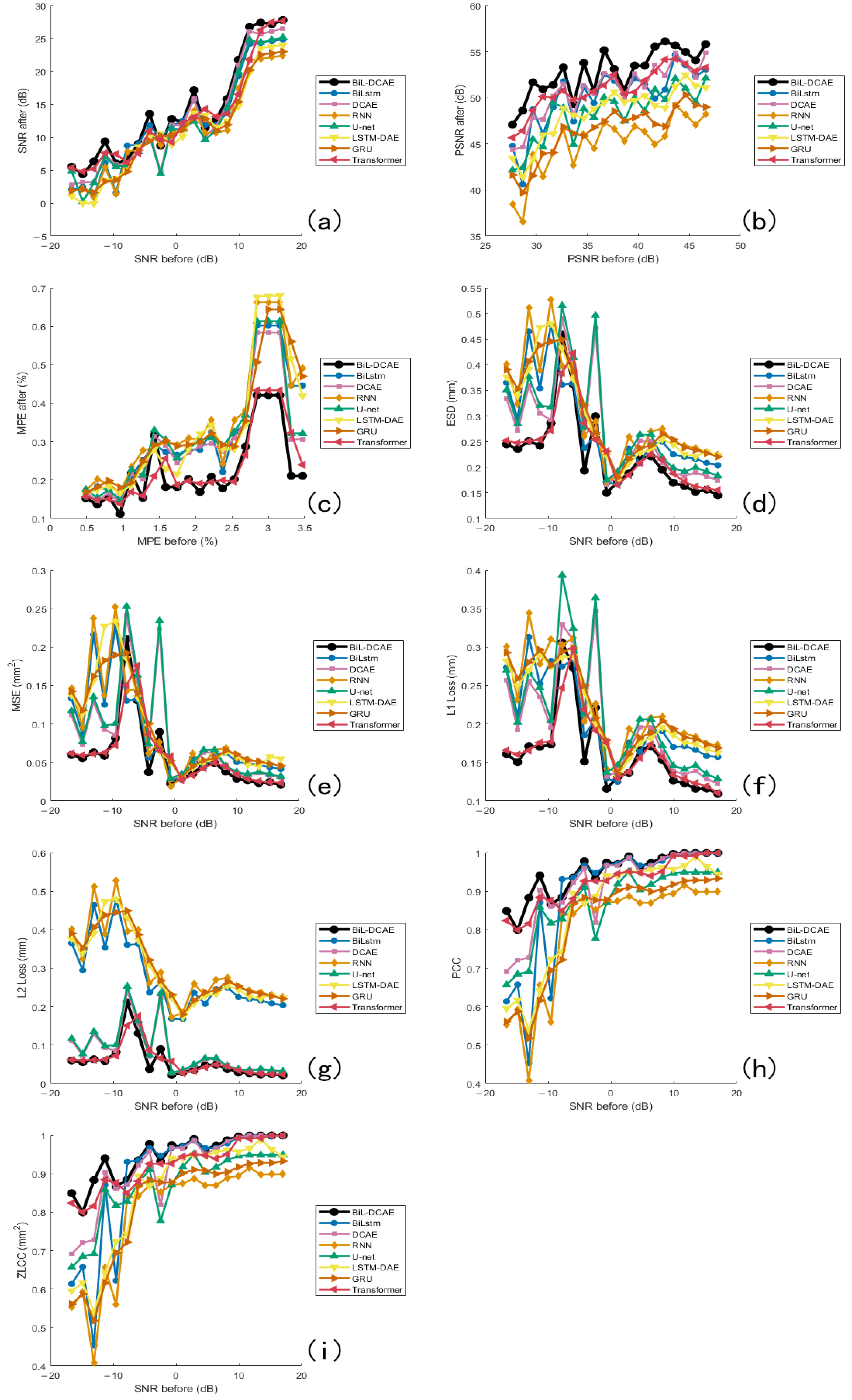

To improve clarity, the comparative results are presented in two figures: Figure 20 illustrates the comparison between BiL-DCAE and various classical denoising methods across multiple quantitative metrics, while Figure 21 provides a comprehensive comparison with advanced deep learning models.

For classical methods, BiL-DCAE outperforms all baselines by a wide margin; the Kalman filter ranks second but still shows a noticeable gap. Wiener filtering, VMD, and wavelet transform form the mid-performing group, while simple filters such as low-pass, Savitzky–Golay, mean, and moving average filters rank lowest, highlighting their limited capacity to handle non-stationary and complex noise.

In the deep learning group, BiL-DCAE consistently achieves the highest scores across all metrics, followed by Transformer-based models. Notably, Transformer approaches exhibit SNR performance comparable to BiL-DCAE in high-SNR regions but show greater instability across noise levels, reflecting their sensitivity to data distribution and hyperparameter settings. While BiL-DCAE achieves higher PSNR values overall, Transformers slightly outperform it in a few localized cases for ESD, MPE, and MSE, indicating their strong global modeling capability. However, BiL-DCAE demonstrates superior consistency across the entire range, particularly in relative error (MPE) and error stability (ESD), which we attribute to its hybrid convolutional and bidirectional temporal modeling.

In L1 and L2 losses, BiL-DCAE maintains competitive performance, with L2 loss nearly matching Transformer in high-SNR regions, suggesting robust reconstruction under low-noise conditions. Correlation-based metrics (PCC and ZLCC) show that BiL-DCAE, Transformer, DCAE, and BiLSTM converge to similarly high values in high-SNR regions, indicating their shared ability to maintain overall waveform morphology. Overall ranking trends across metrics consistently place BiL-DCAE first, followed by Transformer, with DCAE, U-Net, and BiLSTM forming a secondary group, and RNN/GRU trailing due to their limited capacity for capturing long-term bidirectional dependencies.

These findings highlight that the hybrid architecture of BiL-DCAE, which combines convolutional layers for local time–frequency feature extraction and bidirectional LSTM modules for long-term temporal modeling, provides a significant advantage in suppressing noise while preserving essential signal characteristics.