1. Introduction

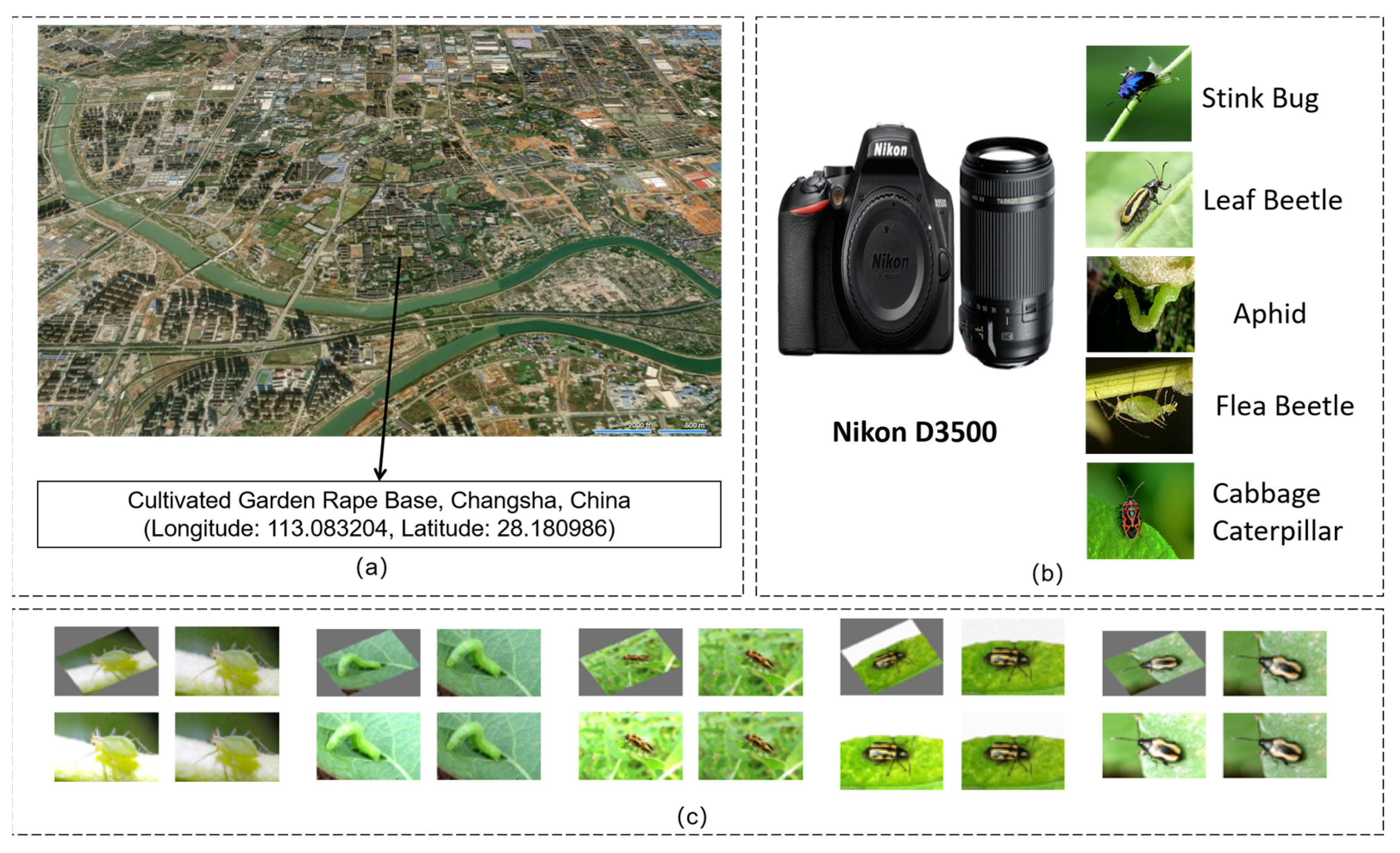

Rapeseed, one of China’s major economic crops, is frequently attacked by pests such as aphids, cabbage worms, stink bugs, flea beetles, and leaf beetles during its growth cycle [1]. These pests not only reduce yields but also seriously threaten crop quality and economic returns. Traditional prevention and control rely mainly on experience and routine field observation, which suffer from information lags and delayed responses. Monitoring and early-warning technologies for rapeseed pests and diseases are therefore of great practical significance. With advances in deep learning, automated detection methods based on computer vision have become an active research topic.

In recent years, deep learning-based object detection has shown significant potential in agricultural pest identification and has become a research focus in smart agricultural management. For example, Gan et al. [2] proposed an improved YOLOv8-DBW model for corn leaf pest detection that significantly improved detection accuracy while reducing model size, making it suitable for mobile deployment. Zhao et al. [3] combined YOLOv5 with drone imagery to accurately identify pests and diseases in the rice field canopy, verifying the practicality of deep models in field scenarios. Xie et al. [4] designed the SEDCN-YOLOv8 model for cucumber pest detection against complex natural backgrounds, introducing deformable convolution and attention mechanisms to improve robustness to small targets and occlusion. These studies reflect the wide application of object detection to crop pest and disease identification.

The existing mainstream target detection methods can be divided into two categories: two-stage and single-stage. Two-stage methods such as R-CNN [

5] and Faster R-CNN [

6] achieve high accuracy by first generating candidate regions and then performing classification and regression, but the computational complexity is high; single-stage methods such as SSD [

7] and the YOLO series [

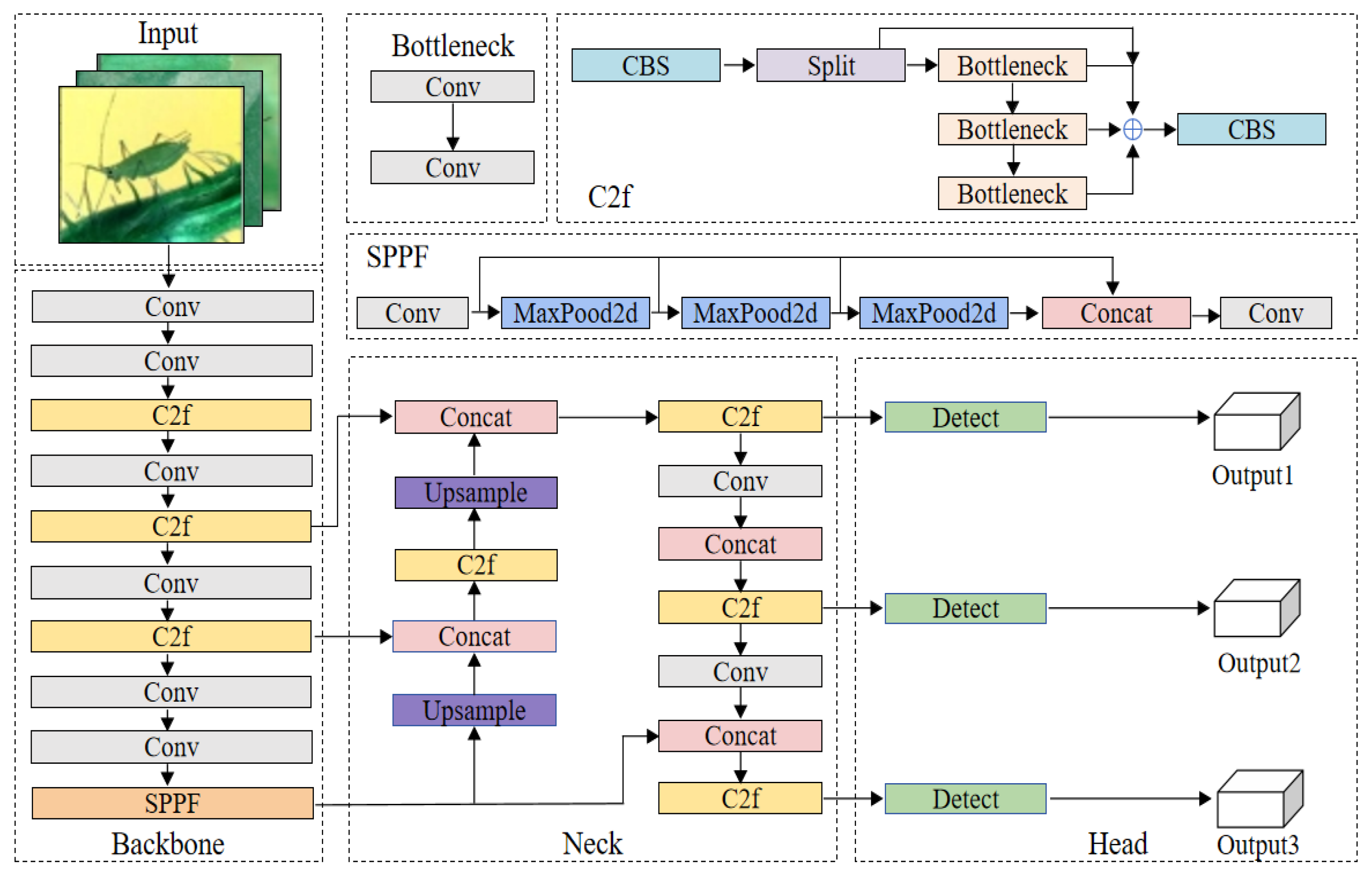

8] directly locate and identify targets in an end-to-end manner. With the advantages of simple structure, fast inference speed, and convenient deployment, they are more suitable for resource-constrained agricultural automation scenarios. Since its introduction in 2016, the YOLO series has been continuously iterated, gradually optimizing the balance between detection accuracy, speed, and robustness. The YOLOv8 [

9] architecture is well-known for its real-time target detection performance. It uses the CSPDarknet53 backbone network with a C2f module, which replaces the traditional CSPLayer to improve feature extraction and small target detection capabilities. Its Neck combines the Spatial Pyramid Pooling Fast (SPPF) layer, which accelerates calculations through fixed-size feature maps to achieve efficient multi-scale detection. The decoupled Head design handles target, classification, and regression tasks separately, thereby improving detection accuracy. However, YOLOv8 still has problems such as a large number of parameters and high computational cost, and its deployment in edge devices or embedded systems faces challenges, especially in meeting the real-time and low-power requirements in agricultural scenarios.
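The reason SPPF is cheaper than SPP without changing its output can be checked numerically. The sketch below is a simplified single-channel NumPy illustration, not the Ultralytics module: the 1 × 1 convolutions that surround the pooling in the real layer are omitted, and the function names are our own. Chaining a stride-1 5 × 5 max pool twice and three times reproduces the 9 × 9 and 13 × 13 pools of the original SPP exactly.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def max_pool_same(x, k):
    """Stride-1 max pooling with 'same' padding (pad value -inf) on a 2-D float array."""
    p = k // 2
    padded = np.pad(x, p, mode="constant", constant_values=-np.inf)
    windows = sliding_window_view(padded, (k, k))  # shape (H, W, k, k)
    return windows.max(axis=(-2, -1))


def spp(x):
    # SPP: parallel pools with kernels 5, 9 and 13, concatenated with the input.
    return np.stack([x, max_pool_same(x, 5), max_pool_same(x, 9), max_pool_same(x, 13)])


def sppf(x):
    # SPPF: one 5 x 5 pool applied three times in sequence; intermediate outputs are kept.
    y1 = max_pool_same(x, 5)
    y2 = max_pool_same(y1, 5)  # same receptive field as a 9 x 9 pool
    y3 = max_pool_same(y2, 5)  # same receptive field as a 13 x 13 pool
    return np.stack([x, y1, y2, y3])


x = np.random.default_rng(0).standard_normal((16, 16))
print(np.array_equal(spp(x), sppf(x)))  # True
```

Each sequential pool reuses the previous result, so SPPF only ever evaluates 5 × 5 windows, whereas SPP also evaluates 9 × 9 and 13 × 13 windows directly.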

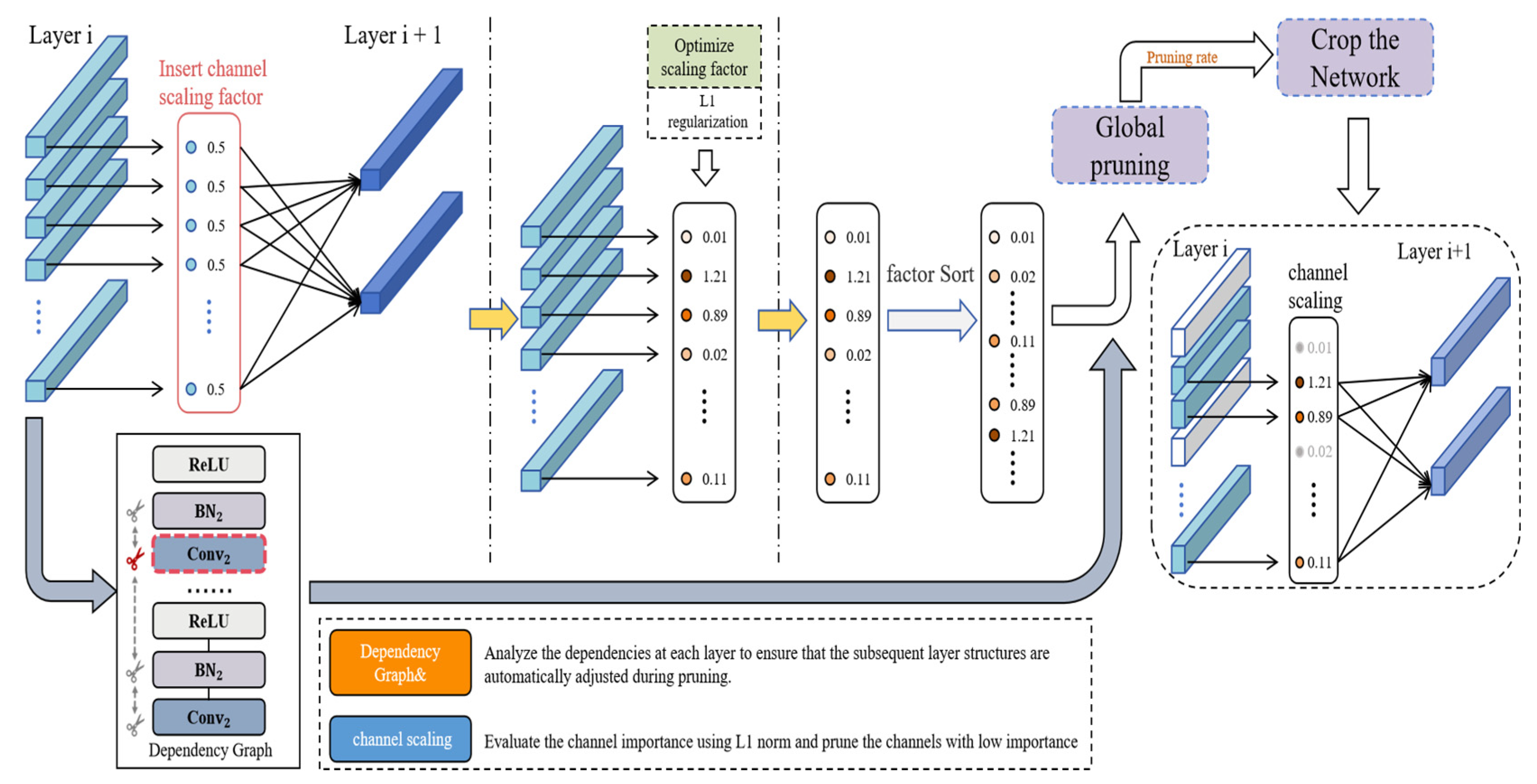

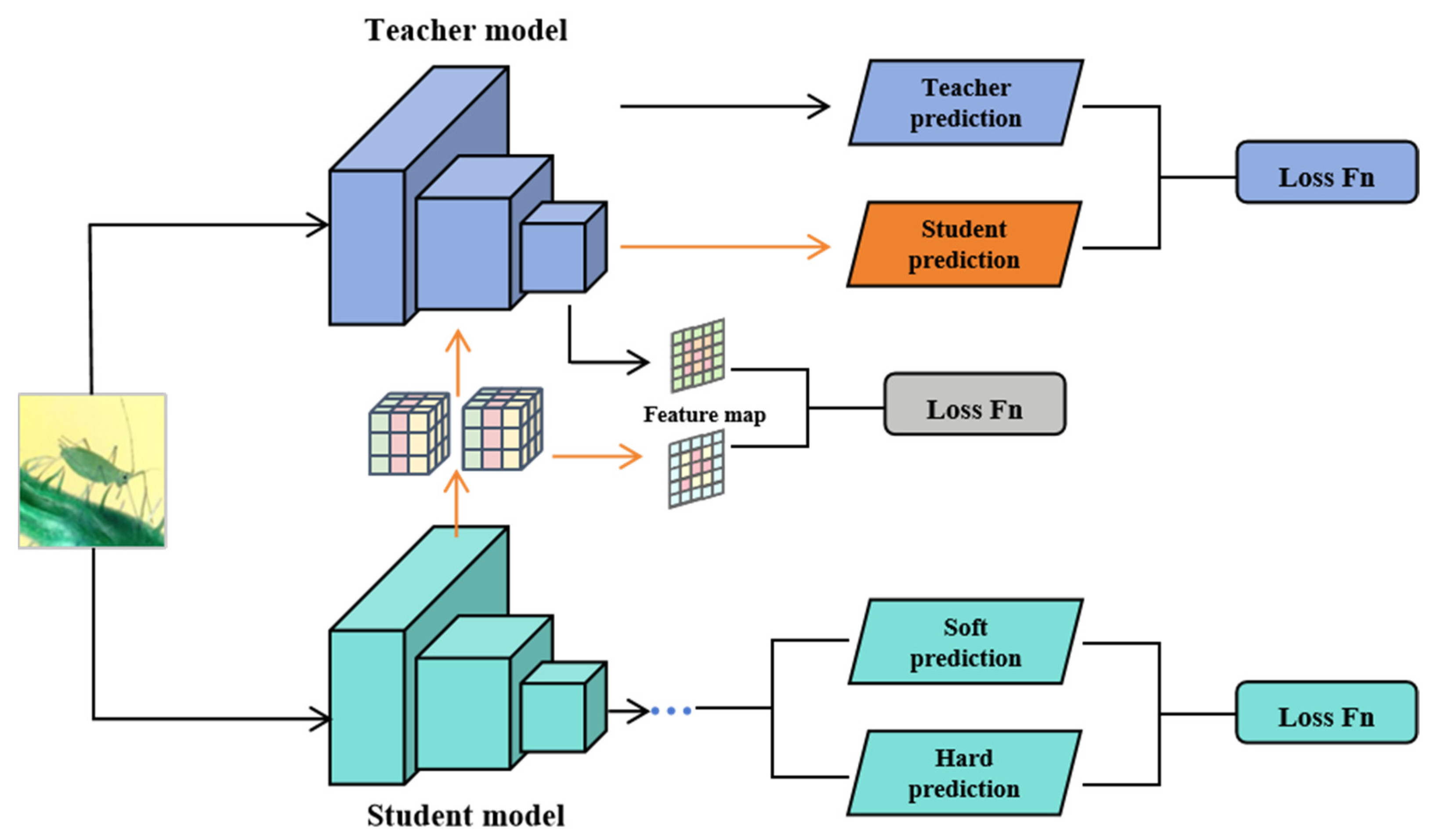

In response to these bottlenecks, model compression has become a key research direction for improving the deployability of detection models. Its core idea is to reduce model complexity through lightweight design, pruning, quantization, and knowledge distillation. Pruning removes redundant structures by evaluating parameter importance, as in weight-based unstructured pruning [10] and structured pruning based on BN channel scaling factors [11]. Knowledge distillation improves lightweight student models by transferring knowledge from large teacher models. The soft-label distillation proposed by Hinton et al. [12] laid the theoretical foundation; Zagoruyko et al. [13] strengthened the transfer of intermediate features through attention maps; and Park et al. [14] further improved generalization by transferring knowledge about the relationships between samples.
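For reference, the soft-label objective of Hinton et al. [12] fits in a few lines. The NumPy sketch below is the classic classification formulation (temperature-softened softmax, KL divergence, scaled by T²), not the LMGD loss proposed in this paper; the function names and the default temperature T = 4 are illustrative assumptions.

```python
import numpy as np


def log_softmax(logits, T=1.0):
    """Temperature-scaled log-softmax over the last axis, computed stably."""
    z = np.asarray(logits, dtype=np.float64) / T
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))


def kd_loss(student_logits, teacher_logits, T=4.0):
    """Soft-label distillation: T^2 * KL(p_teacher || p_student), averaged over the batch."""
    log_p_t = log_softmax(teacher_logits, T)
    log_p_s = log_softmax(student_logits, T)
    p_t = np.exp(log_p_t)
    kl = (p_t * (log_p_t - log_p_s)).sum(axis=-1)
    # Soft-target gradients scale as 1/T^2, so the T^2 factor keeps their weight stable.
    return float(T * T * kl.mean())


teacher = np.array([[4.0, 1.0, 0.5], [0.2, 3.0, 0.1]])
student = np.array([[2.0, 1.5, 0.5], [0.5, 1.0, 0.8]])
print(kd_loss(student, teacher))  # positive; zero only when the softened distributions match
```

YOLOv8's classification branch produces per-class sigmoid scores rather than a softmax, so logit distillation on a detection head has to adapt this formulation rather than use it verbatim.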

Existing studies have verified the effectiveness of related techniques in agricultural pest detection. For example, Wang et al. [15] combined hyperspectral imaging with 3D convolution to suppress noise and capture spectral–spatial features; Xiao et al. [16] constructed a lightweight network using one-dimensional convolution and an attention mechanism; Kuzuhara et al. [17] designed a two-stage detection framework (YOLOv8 region proposals followed by Xception-based re-identification); and Ullah et al. [18] developed DeepPestNet, which improves generalization through data augmentation. However, these works mostly rely on a single compression strategy, which makes it difficult to achieve an optimal trade-off between model compactness and detection performance, especially in complex multi-target scenarios.

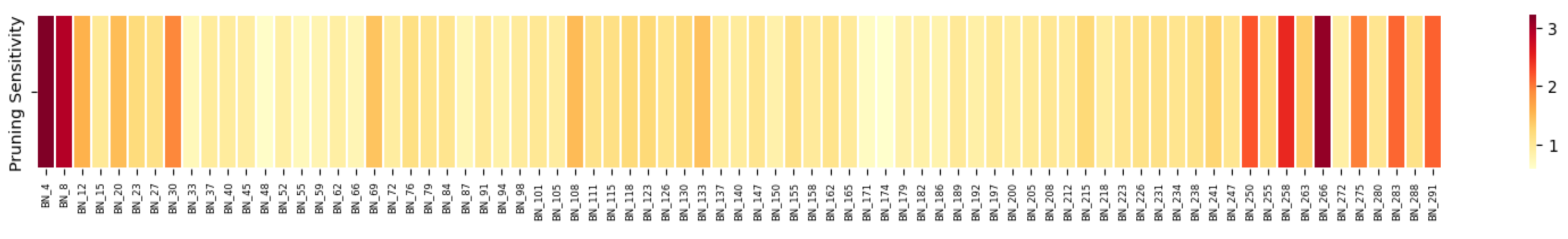

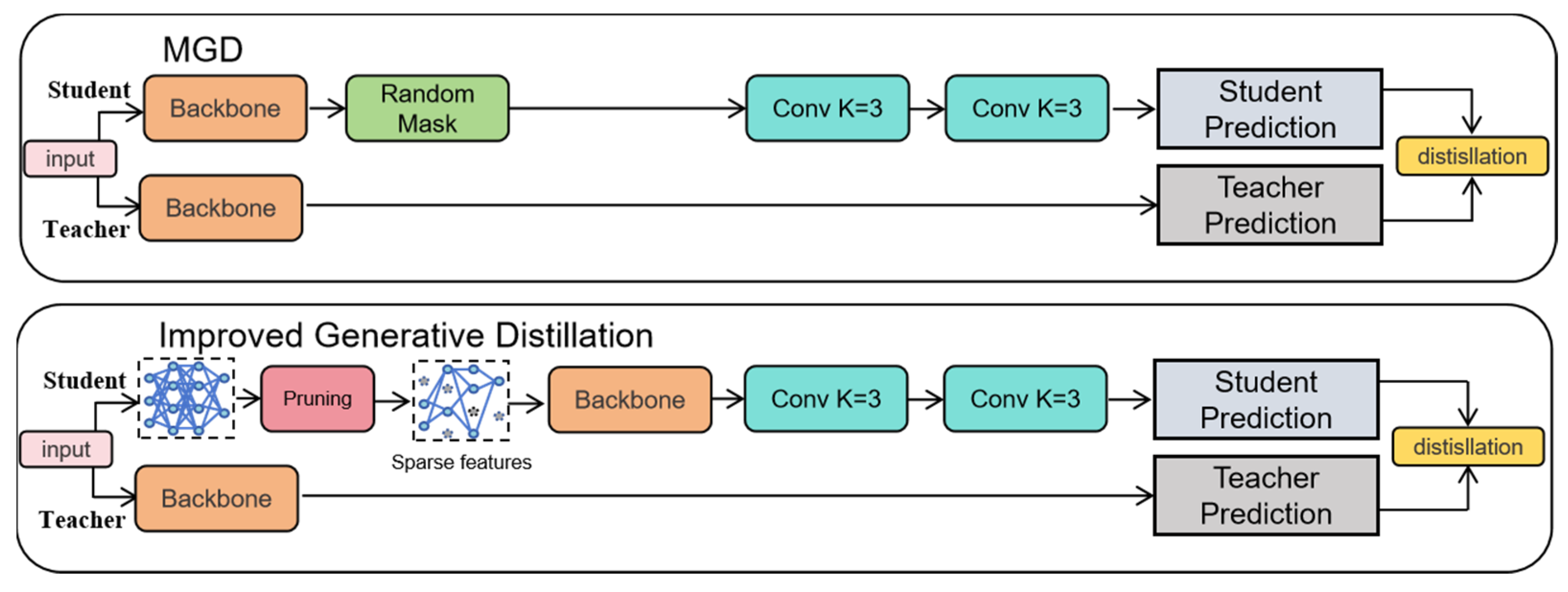

To this end, this study uses YOLOv8s as the baseline model and introduces targeted enhancements for field detection scenarios, particularly in terms of detection performance and deployment efficiency. Specifically, we calculate the mean gamma (scaling) coefficient of all BatchNorm layers in YOLOv8s, use it as a pruning sensitivity metric, and perform global pruning to obtain a lighter, more compact model. Furthermore, because traditional distillation methods struggle to adequately represent the sparse features that remain after pruning, we propose LMGD, a collaborative distillation strategy that combines logit distillation with improved generative distillation. Logit distillation uses a single detection head for distillation between the compact model and the teacher model, leveraging high-level semantic and decision-level information to help evaluate the quality of the student model’s feature extraction. Improved generative distillation helps the student model learn the structural and representational patterns that the teacher abstracts from the data, improving its understanding of details and local structure. Combined, the two give the compact model more comprehensive knowledge. The main contributions of this work are as follows:

A compression framework combining channel pruning and dual distillation strategies is designed to improve inference speed while maintaining accuracy.

A rapeseed pest image dataset covering five typical pest categories is developed to support model training and evaluation.

Comprehensive comparative experiments demonstrate the effectiveness of the proposed model in balancing detection performance and deployment efficiency. While achieving 96.7% mAP@0.5, 93.2% precision, and 92.7% recall, the model reduces the parameter count from 11.2 M to 4.4 M and the FLOPs from 28.3 G to 10.01 G, reductions of approximately 60.7% and 64.6%, respectively. This improves inference efficiency at the cost of only a 0.1 percentage-point drop in mAP@0.5. The improved model achieves a measured frame rate of 11.76 FPS on a Jetson Nano edge device, demonstrating its suitability for real-time inference on low-power hardware.
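The reduction percentages quoted above follow directly from the before and after values. The short check below uses only numbers stated in this paper (parameters in millions, FLOPs in G); the helper name is our own.

```python
def percent_reduction(before, after):
    """Relative reduction in percent: 100 * (before - after) / before."""
    return 100.0 * (before - after) / before


# Values reported in the text (parameters in millions, FLOPs in G).
params_cut = percent_reduction(11.2, 4.4)   # about 60.7
flops_cut = percent_reduction(28.3, 10.01)  # about 64.6
map_drop_pp = 96.8 - 96.7                   # mAP@0.5 drop, in percentage points

print(f"params -{params_cut:.1f}%, FLOPs -{flops_cut:.1f}%, mAP@0.5 -{map_drop_pp:.1f} pp")
# params -60.7%, FLOPs -64.6%, mAP@0.5 -0.1 pp
```

With the 28.6 G FLOPs listed for YOLOv8s in Section 4.2, the same formula gives a 65.0% reduction; the 64.6% figure corresponds to the 28.3 G baseline.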

4. Experimental Results and Analysis

4.1. Experimental Configuration and Assessment Metrics

The experiments were conducted on Windows 11 with an Intel i7-13700KF CPU, an NVIDIA RTX 4090 GPU, and 128 GB of system memory. The model was implemented in PyTorch 2.5.1 with Python 3.8. It was trained from scratch for 200 epochs with a 224 × 224 input resolution and a batch size of 32, using a fixed random seed to ensure reproducibility. Adam was used as the optimizer with an initial learning rate of 2 × 10⁻⁵. Data loading was accelerated by adjusting the number of CPU worker threads, and all other parameters were left at their defaults. Pruning was performed with the pruning-torch tool, and no pretrained weights were loaded.
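The reported training settings can be collected in one place, together with the seeding step that makes runs repeatable. In the sketch below, the key names follow the Ultralytics training-argument convention, which is an assumption (the paper does not say which training interface was used), and the seed value is a placeholder because the paper does not report it.

```python
import random

import numpy as np

# Training settings reported in Section 4.1 (key names follow the Ultralytics convention).
TRAIN_CONFIG = {
    "epochs": 200,
    "imgsz": 224,          # 224 x 224 input resolution
    "batch": 32,
    "optimizer": "Adam",
    "lr0": 2e-5,           # initial learning rate, 2 x 10^-5
    "pretrained": False,   # trained from scratch, no pretrained weights
    "seed": 0,             # placeholder: the actual seed value is not reported
}


def set_seed(seed):
    """Seed Python's and NumPy's RNGs; with PyTorch, also call torch.manual_seed(seed)."""
    random.seed(seed)
    np.random.seed(seed)
```

Calling `set_seed(TRAIN_CONFIG["seed"])` before building the data loaders and the model is the usual place for this step; full GPU determinism additionally depends on framework-level settings.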

Performance evaluation covered both model lightweighting and detection accuracy. The pruning phase used parameter count, FLOPs, and mAP@0.5 as core metrics; the distillation phase additionally used mAP@0.5:0.95, recall, and precision. AP is the area under the precision–recall curve and reflects the model’s overall recognition ability for a given class. mAP averages AP over all classes: mAP@0.5 uses an IoU threshold of 0.5, while mAP@0.5:0.95 further averages over IoU thresholds from 0.5 to 0.95, reflecting the robustness of localization. Recall reflects the missed-detection rate and precision the false-detection rate. TP, FP, and FN denote the numbers of pests correctly detected, falsely detected, and missed, respectively.

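The metrics are computed as follows:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

$$AP = \int_{0}^{1} P(R)\,dR, \qquad mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

where $N$ is the number of pest classes.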
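These definitions translate into code as follows. This is a minimal NumPy sketch that assumes detections have already been matched to ground truth at a fixed IoU threshold (the matching itself is not shown), and it uses all-point interpolation for AP. The Ultralytics evaluator instead interpolates at 101 recall points, so its values can differ slightly.

```python
import numpy as np


def precision_recall(tp, fp, fn):
    """P = TP / (TP + FP), R = TP / (TP + FN); 0.0 when a denominator is zero."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r


def pr_curve(scores, is_tp, n_gt):
    """Cumulative recall and precision over detections ranked by confidence."""
    order = np.argsort(-np.asarray(scores, dtype=float))
    tp = np.asarray(is_tp, dtype=float)[order]
    ctp, cfp = np.cumsum(tp), np.cumsum(1.0 - tp)
    return ctp / n_gt, ctp / (ctp + cfp)


def average_precision(recall, precision):
    """All-point interpolated AP: area under the monotone precision envelope."""
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([0.0], precision, [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]  # make precision non-increasing in recall
    step = np.where(r[1:] != r[:-1])[0]       # indices where recall changes
    return float(np.sum((r[step + 1] - r[step]) * p[step + 1]))


# mAP@0.5 averages per-class AP at IoU 0.5; mAP@0.5:0.95 also averages over these thresholds.
IOU_THRESHOLDS = np.linspace(0.5, 0.95, 10)

recall, precision = pr_curve([0.9, 0.8, 0.7], [1, 0, 1], n_gt=2)
print(average_precision(recall, precision))  # 0.8333... (= 5/6)
```

mAP is then `np.mean` of the per-class AP values, computed once per IoU threshold for mAP@0.5:0.95.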

4.2. Main Results

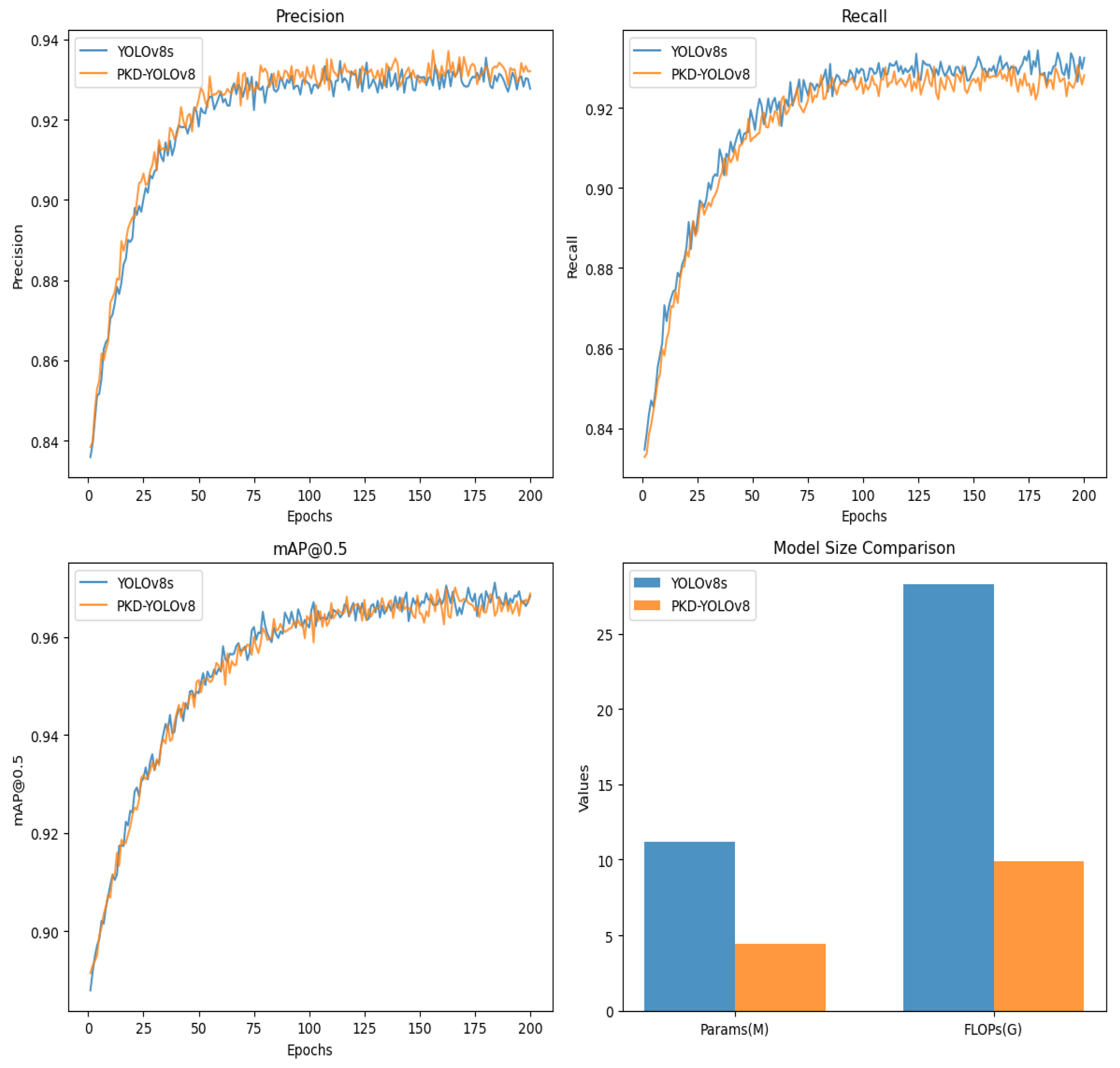

In this section, we conduct extensive experiments on our rapeseed pest image dataset, ACEFP, to validate the feasibility and superiority of our approach. The experimental results are shown in Figure 7.

Figure 7 compares the baseline YOLOv8s model with the improved PKD-YOLOv8 model in terms of precision, recall, mAP@0.5, parameters, and FLOPs. The P and R values of both models fluctuate significantly during the first 110 epochs and gradually stabilize over the remaining 90 epochs, with the final P and R values of PKD-YOLOv8 reaching 93.2% and 92.7%, respectively. The mAP@0.5 curve fluctuates significantly during the first 130 epochs and gradually stabilizes over the remaining 70. In terms of model size, PKD-YOLOv8 reduces the parameter count by 60.7% and FLOPs by 64.6% relative to YOLOv8s. Overall, PKD-YOLOv8 achieves 96.7% mAP@0.5, only 0.1 percentage points below the 96.8% of YOLOv8s, so detection accuracy is essentially preserved despite the substantial reduction in model size.

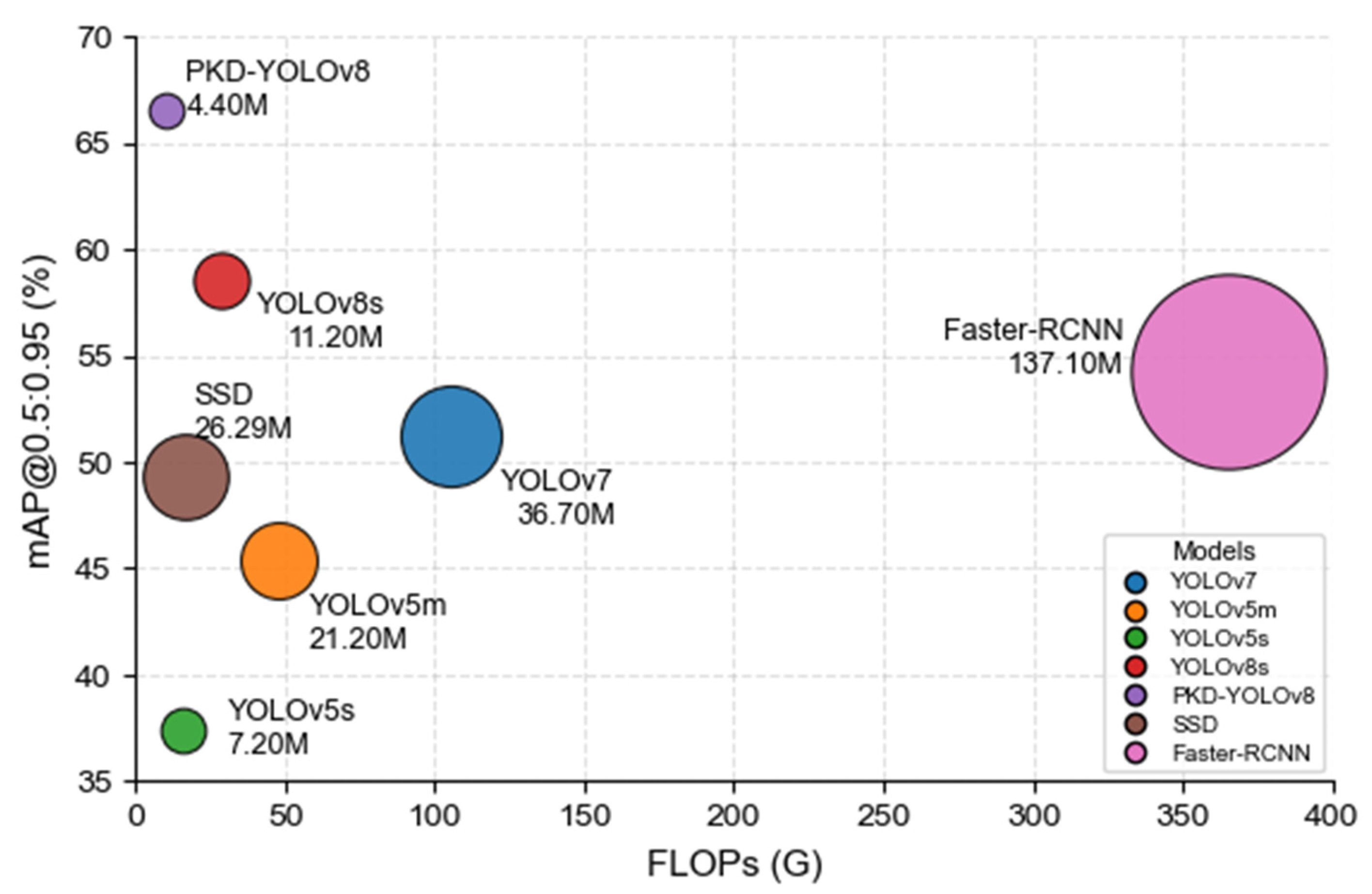

To further demonstrate the effectiveness of our approach, we evaluated seven object detection models (YOLOv7, YOLOv5m, YOLOv5s, YOLOv8s, SSD, Faster R-CNN, and the proposed PKD-YOLOv8) under the same training conditions and on the same dataset. The experimental results are shown in Table 2. Among the conventional YOLO models, YOLOv7 and YOLOv5m achieve mAP@0.5:0.95 values of 51.2% and 45.4%, respectively, but at a high computational cost of 105.6 G and 48.2 G FLOPs and with 36.7 M and 21.2 M parameters, respectively. Lightweight alternatives such as YOLOv5s reduce complexity substantially, to 15.8 G FLOPs and only 7.2 M parameters, but at the expense of lower accuracy (37.4% mAP@0.5:0.95). In comparison, the baseline YOLOv8s, with 28.6 G FLOPs and 11.2 M parameters, achieves 58.6% mAP@0.5:0.95, offering a better basic balance between accuracy and cost.

For further comparison, we also evaluated the classic SSD [7] and Faster R-CNN [30] models. SSD achieved an mAP of 49.3% with 16.6 G FLOPs and 26.29 M parameters. Although Faster R-CNN achieved a relatively high mAP of 54.3%, its computational cost and parameter count were substantial, at 370.2 G FLOPs and 137.10 M parameters.

As shown in Figure 8, all baseline models achieve lower accuracy than the proposed PKD-YOLOv8, which reaches 66.5% mAP@0.5:0.95. Notably, compared with YOLOv8s, it reduces the parameter count and FLOPs by 60.7% and 64.6%, respectively, highlighting its potential for real-time pest detection in resource-limited agricultural environments.

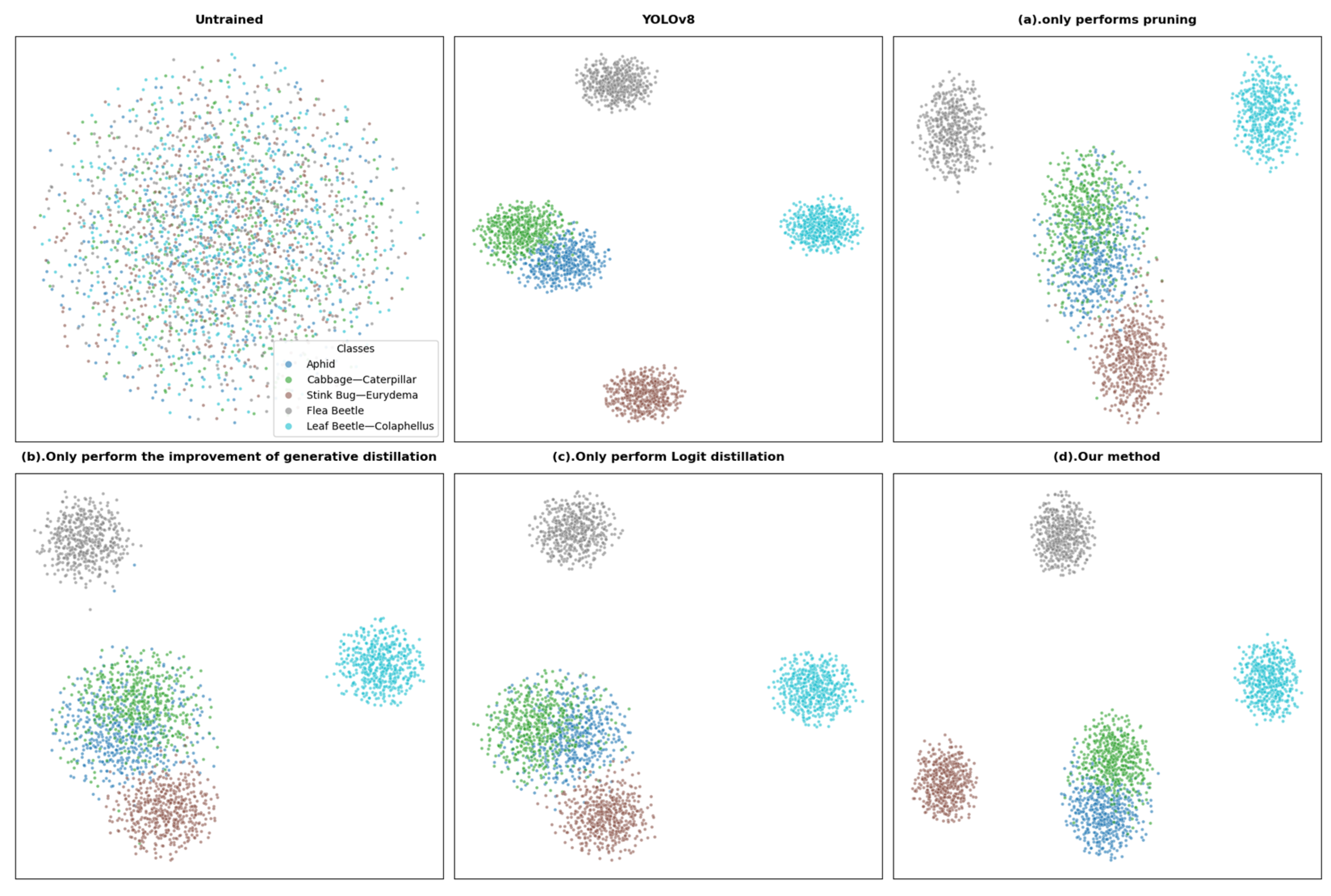

4.3. Visual Presentation of the PKD Framework

To further demonstrate the effectiveness and interpretability of our method, we extracted features from the original data and from each trained model and used t-SNE to reduce them to two dimensions for visualization. The results are shown in Figure 9.
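A minimal version of this visualization pipeline is sketched below with scikit-learn’s `TSNE`. It assumes one feature vector per annotated object (for example, pooled backbone features; which layer the features come from is our assumption, as it is not specified). Because t-SNE plots are qualitative, the sketch also returns a silhouette score computed on the original features as a single number for class separation.

```python
import numpy as np
from sklearn.manifold import TSNE
from sklearn.metrics import silhouette_score


def tsne_view(features, labels, perplexity=30.0, seed=0):
    """Return a 2-D t-SNE embedding and the silhouette score of the raw features.

    features: (n_samples, n_dims) array, one vector per object (hypothetical input).
    labels:   (n_samples,) integer pest-class labels.
    perplexity must be smaller than n_samples.
    """
    emb = TSNE(n_components=2, perplexity=perplexity, init="pca",
               random_state=seed).fit_transform(features)
    # Silhouette lies in [-1, 1]; higher means tighter clusters and wider gaps between them.
    # It is computed on the original features because t-SNE distorts global distances.
    score = silhouette_score(features, labels)
    return emb, float(score)
```

The embedding can then be drawn with, for example, `plt.scatter(emb[:, 0], emb[:, 1], c=labels)`, producing one panel per model as in Figure 9.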

The figure shows the feature-space distribution of the different models on the target pest classification task. The untrained features are mixed and disordered, whereas the YOLOv8 baseline model forms clearly defined clusters for each pest, indicating strong class discrimination.

Figure 9a (pruning only) shows blurred cluster boundaries and overlapping categories, indicating that pruning compromises representational capabilities.

Figure 9b (improved generative distillation only) shows a distribution close to the baseline, but with increased cluster radius and the presence of outliers, indicating that it preserves structural information but lacks fine-grained discrimination.

Figure 9c (logit distillation only) forms clear clusters but exhibits unusually compact regions, reflecting overconfidence caused by its reliance on soft labels.

Figure 9d (our approach) achieves the best overall result, with clustering close to the baseline and superior inter-class spacing. Its effectiveness is primarily attributed to the following:

Logit distillation utilizes a shared detection head between the student and teacher models, leveraging high-level semantic and decision-making information to aid in evaluating the student model’s feature extraction quality. Improved generative distillation can help the student model learn the structure and representation patterns of the data abstracted by the teacher model, thereby improving the model’s understanding of details and local structures. When combined, the compact model can acquire more comprehensive knowledge.

4.4. Ablation Study

To comprehensively evaluate the model, we adopt YOLOv8s as the baseline and conduct a series of ablation experiments on the effectiveness of each module, the choice of pruning ratio, and the selection of the knowledge distillation strategy.

4.4.1. Effectiveness of Each Module

We selectively added or removed individual modules for comparison. As shown in Table 3, the accuracy of the pruned-only model (Group A) drops significantly to 95.6% mAP@0.5 because no knowledge is transferred. Introducing improved generative distillation (Group B) restores mAP@0.5 to 96.3% by reconstructing the multi-scale features of the teacher network, while logit distillation alone (Group C), which leverages the semantic supervision of the teacher’s detection head, raises it to 96.4%. When the two are optimized jointly (Group D), this dual feature- and output-level supervision achieves 96.7% mAP@0.5, close to the 96.8% of the original teacher model. These results indicate that improved generative distillation mitigates the loss of fine-grained information caused by pruning by enhancing local structure perception, whereas logit distillation transfers high-level semantic decision logic. The two are complementary and together alleviate feature degradation.

4.4.2. Model Performance with Different Pruning Ratios

The pruning ratio is a key hyperparameter in our method. A higher pruning ratio reduces the number of parameters substantially but also increases the risk of removing key channels, which degrades the final predictions. To find the optimal pruning ratio, we conducted a series of experiments, whose results are shown in Table 4. Based on a comparison of model performance at different ratios, we selected 70% as the optimal pruning ratio.
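The global BatchNorm-γ criterion described in the Introduction can be sketched as follows. This NumPy version operates on a hypothetical dictionary of per-layer γ vectors (in PyTorch these would be read from each `BatchNorm2d.weight`). It treats the pruning ratio as the fraction of all BN channels removed under a single global threshold and reports each layer’s mean |γ| as its sensitivity. Both interpretations are assumptions: the 70% ratio selected here corresponds to a 60.7% parameter reduction, so the paper’s exact definition of the ratio may differ.

```python
import numpy as np


def global_bn_prune_masks(bn_gammas, ratio):
    """Boolean keep-masks per layer from one global threshold on |gamma|.

    bn_gammas: dict of layer name -> 1-D array of BN scale factors (hypothetical input).
    ratio: fraction of all channels, pooled across layers, to remove.
    """
    all_g = np.concatenate([np.abs(g) for g in bn_gammas.values()])
    threshold = np.quantile(all_g, ratio)  # global rather than per-layer threshold
    masks = {}
    for name, g in bn_gammas.items():
        keep = np.abs(g) > threshold
        if not keep.any():  # never remove every channel of a layer
            keep[np.argmax(np.abs(g))] = True
        masks[name] = keep
    return masks


def layer_sensitivity(bn_gammas):
    """Mean |gamma| per layer; low values mark layers that tolerate pruning better."""
    return {name: float(np.mean(np.abs(g))) for name, g in bn_gammas.items()}


gammas = {"a": np.array([0.1, 0.2, 0.9, 1.0]), "b": np.array([0.05, 0.01, 0.3, 0.02])}
masks = global_bn_prune_masks(gammas, ratio=0.5)
print({k: m.tolist() for k, m in masks.items()})
```

A global threshold lets layers with uniformly small γ lose more channels than layers with large γ, which is why the per-layer sensitivity and the pruning ratio interact.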

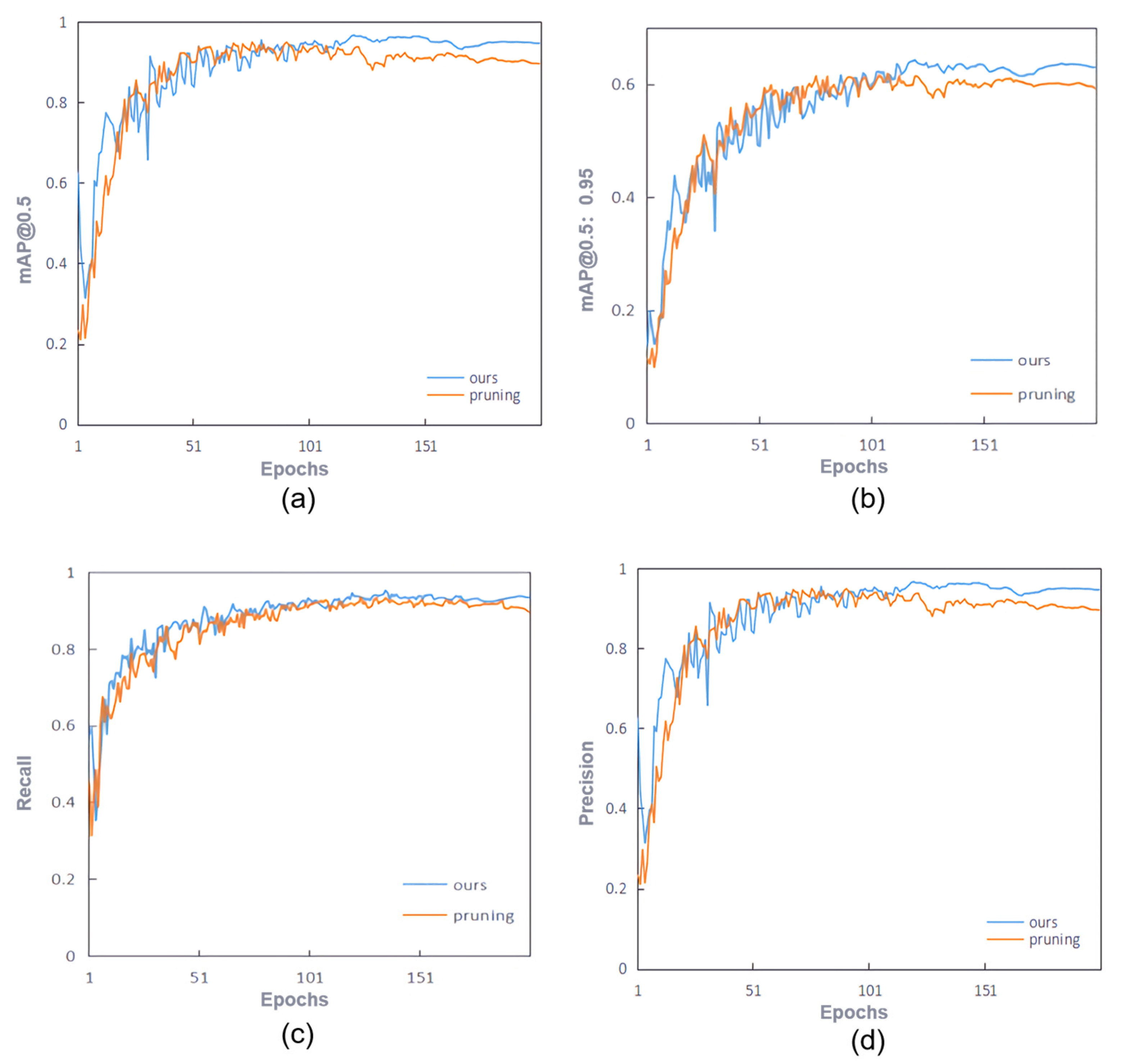

4.4.3. Model Performance Under Different Knowledge Distillation Strategies

To validate the effectiveness of our method, we compared the model fine-tuned with LMGD against the compact model pruned at a 70% ratio on four metrics: mAP@0.5, mAP@0.5:0.95, recall, and precision. The experimental results are shown in Figure 10. Our method outperforms the compact model on all four metrics. The two achieve similar detection accuracy during the first 100 epochs, but as training proceeds, LMGD pulls ahead and the gap widens. This suggests that LMGD helps prevent overfitting and maintains consistently good performance on the test set.

To evaluate the method more comprehensively, we compared several classic knowledge distillation strategies for the compact model. The experimental results are shown in Table 5. In terms of mAP@0.5, our method reaches 96.7%, which is 0.9, 0.4, 0.4, 0.6, and 1.0 percentage points higher than KD [31], MGD [32], CrossKD [33], CWD [34], and FitNet [26], respectively. At a 70% pruning ratio, its accuracy is only 0.1 percentage points lower than that of the teacher model before pruning and 1.1 points higher than that of the pruned student model. For individual categories, our method’s accuracy exceeds that of the comparison methods by at least 0.1 percentage points in most cases and is at most 0.2 points lower otherwise.

To further illustrate our method, we visualize the detection results of each knowledge distillation strategy.

Figure 11 shows the detections of the different models for five types of rapeseed pests. For aphids, most models identify the target correctly, but the Student and CrossKD models show slight deviations; our method’s bounding boxes fit the insect body more closely and have higher confidence. For cabbage worms, the bounding boxes of traditional methods are slightly wider and easily overlap with the background, whereas our method’s boxes tightly enclose the insect body, giving more accurate detection. For stink bugs, flea beetles, and leaf beetles, methods such as MGD and FitNet show bounding-box shifts caused by complex backgrounds, while our method maintains high accuracy, with boxes closely matching the insect outline. Notably, on some rapeseed pest samples the KD method generates multiple bounding boxes, whereas the other methods do not; this may stem from limitations in KD’s feature transfer process. Overall, our method shows higher detection accuracy, more precise bounding boxes, and more reliable predictions in the five pest detection tasks shown in Figure 11, further validating its detection performance and adaptability.

4.5. Model Deployment and Inference Performance Evaluation on Jetson Nano

To verify the deployability and real-time performance of the proposed lightweight approach in real edge computing scenarios, we used an NVIDIA Jetson Nano B01 (NVIDIA Corporation; Santa Clara, CA, USA) embedded platform to measure the model’s inference speed on physical hardware.

The Jetson Nano is an embedded development board designed specifically for edge computing and lightweight AI applications. Its compact size (100 × 80 × 29 mm) facilitates integration and deployment. The platform combines an NVIDIA Maxwell-architecture GPU with 128 CUDA cores, a quad-core ARM Cortex-A57 CPU (1.43 GHz), and 4 GB of LPDDR4 memory shared between the CPU and GPU, supporting parallel inference and efficient data processing.

Table 6 shows the detailed hardware configuration of the Jetson Nano.

To run the model on the Jetson Nano, it is first converted from PyTorch to ONNX format. A deployment environment is then set up with the JetPack SDK, which includes TensorRT, CUDA, and cuDNN, and TensorRT is used to apply graph optimization and reduced-precision conversion to the ONNX model to improve inference efficiency.

After deployment, we ran the same inference tests for the original YOLOv8 model and the lightweight PKD-YOLOv8 model on the same test set, recording the average single-frame inference time and the corresponding frame rate (FPS). All tests were performed in the Jetson Nano’s high-performance mode. The results are shown in Table 7.

The results show that the original YOLOv8 model’s average single-frame inference time on the Jetson Nano is 137 ms (7.29 FPS). PKD-YOLOv8, optimized through pruning and distillation, reduces the average inference time to 85 ms (11.76 FPS), a latency reduction of approximately 38% and a throughput increase of about 61%. This enhances the model’s real-time inference capability on low-power edge devices and validates the practicality of the approach in real-world deployment scenarios.
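Latency and frame rate are related by FPS = 1000 / latency (ms), so the percentages above follow from the measured times. The sketch below shows a simple way to collect such numbers. `infer` stands for any hypothetical per-frame inference callable (for example, a wrapper around a TensorRT engine), and the warm-up count is an arbitrary choice rather than the paper’s protocol.

```python
import time
from statistics import mean


def fps_from_latency(latency_ms):
    """Frames per second for a given average single-frame latency in milliseconds."""
    return 1000.0 / latency_ms


def benchmark(infer, frames, warmup=10):
    """Average single-frame latency (ms) and FPS of `infer` over `frames`."""
    for frame in frames[:warmup]:
        infer(frame)  # untimed warm-up (lazy initialization, clock ramp-up)
    times_ms = []
    for frame in frames:
        start = time.perf_counter()
        infer(frame)
        times_ms.append((time.perf_counter() - start) * 1000.0)
    latency = mean(times_ms)
    return latency, fps_from_latency(latency)


# Reported latencies: 137 ms (original) and 85 ms (PKD-YOLOv8).
base, pkd = 137.0, 85.0
print(f"{fps_from_latency(base):.2f} -> {fps_from_latency(pkd):.2f} FPS")
print(f"latency -{100 * (base - pkd) / base:.0f}%, throughput +{100 * (base / pkd - 1):.0f}%")
# 7.30 -> 11.76 FPS
# latency -38%, throughput +61%
```

The 7.29 FPS reported in the table corresponds to 1000 / 137 = 7.299 truncated rather than rounded.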

5. Conclusions

This paper addresses the challenges of model deployment and limited detection accuracy in rapeseed field pest identification. We constructed a field dataset containing five common pest types and proposed a collaborative compression learning method that combines model pruning and knowledge distillation. Based on a structural analysis of YOLOv8 and a layer sensitivity evaluation, we performed structured pruning and introduced an improved knowledge distillation strategy to raise the detection performance of the lightweight model. The effectiveness of the proposed method was verified through a series of experiments in a unified experimental environment. Our method achieved 96.7% mAP@0.5, 93.2% precision, and 92.7% recall while reducing the parameter count from 11.2 M to 4.4 M and the FLOPs from 28.3 G to 10.01 G, reductions of approximately 60.7% and 64.6%, respectively. Inference efficiency thus improved at the cost of only a 0.1 percentage-point drop in mAP@0.5. We hope that the proposed method will contribute to future performance improvements in the YOLO family. Nevertheless, this study has limitations: data collection was concentrated in a specific region, which limits sample diversity, and the model has not yet been deployed and tested on actual agricultural equipment, so system integration and real-time performance require further verification.

Future work will focus on two aspects: first, deploying the model on actual agricultural terminal devices such as drones and mobile terminals, combined with high-resolution image acquisition and wireless transmission, to achieve efficient field pest monitoring; second, expanding data sources by continuously collecting pest images across different times, locations, and weather conditions to improve the model’s generalization and robustness.

In addition, although the proposed method was developed for YOLOv8, its core strategies (pruning-aware analysis and collaborative compression with distillation) do not depend on YOLOv8-specific components and could be extended to other YOLO models, such as YOLOv9 through YOLOv12, that share similar architectural patterns. This compatibility with other YOLO versions increases the method’s practical utility.