Optimizing MFCC Parameters for Breathing Phase Detection

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

3.1. Dataset Description

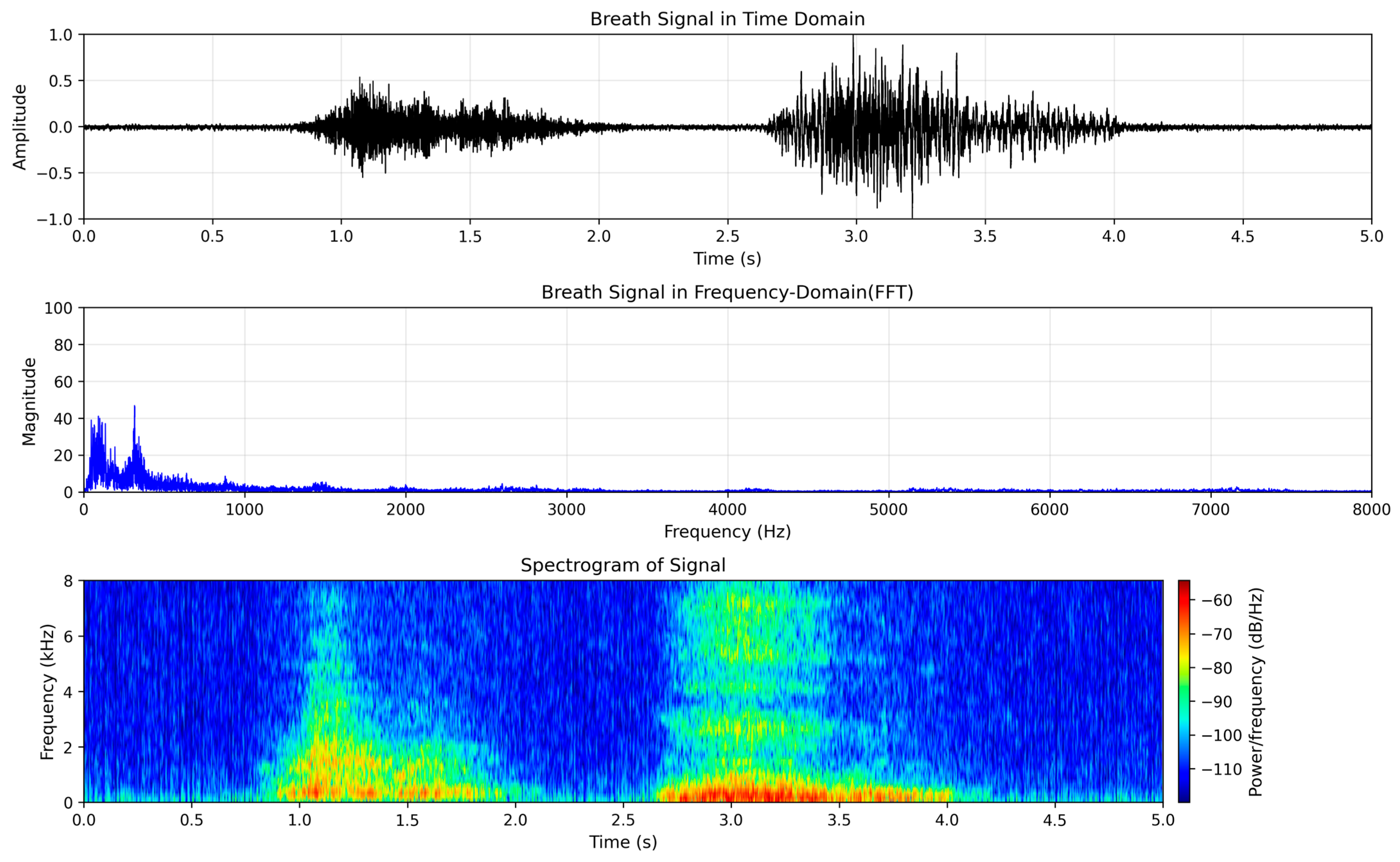

3.2. Pre-Processing

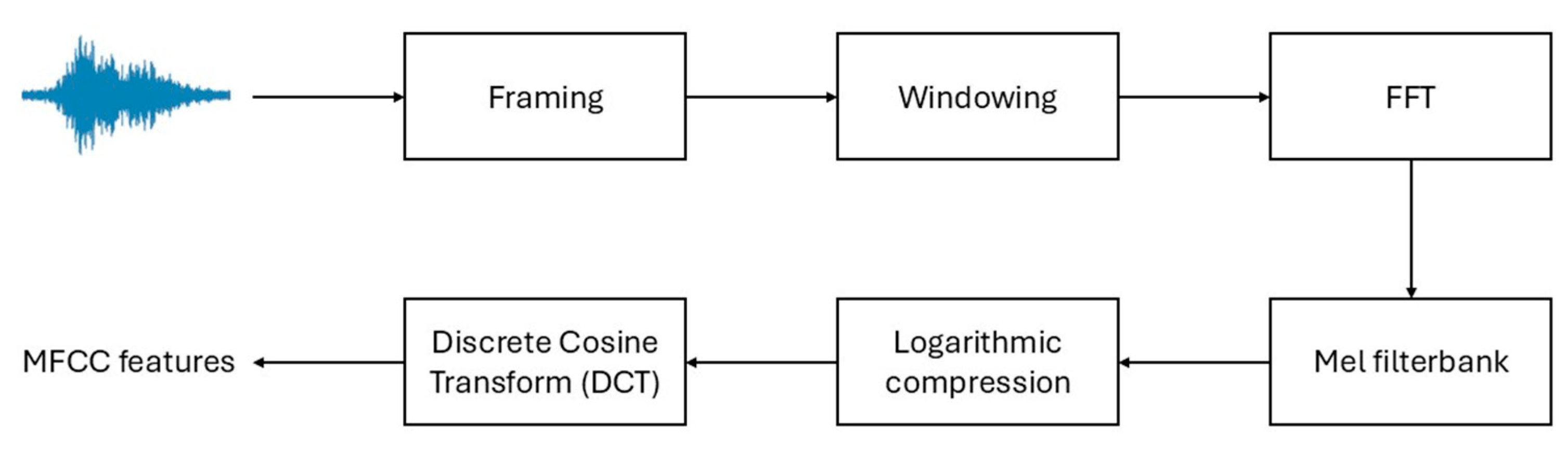

3.3. Mel Frequency Cepstral Coefficients Feature Extraction

3.4. SVM Classifier

4. Results

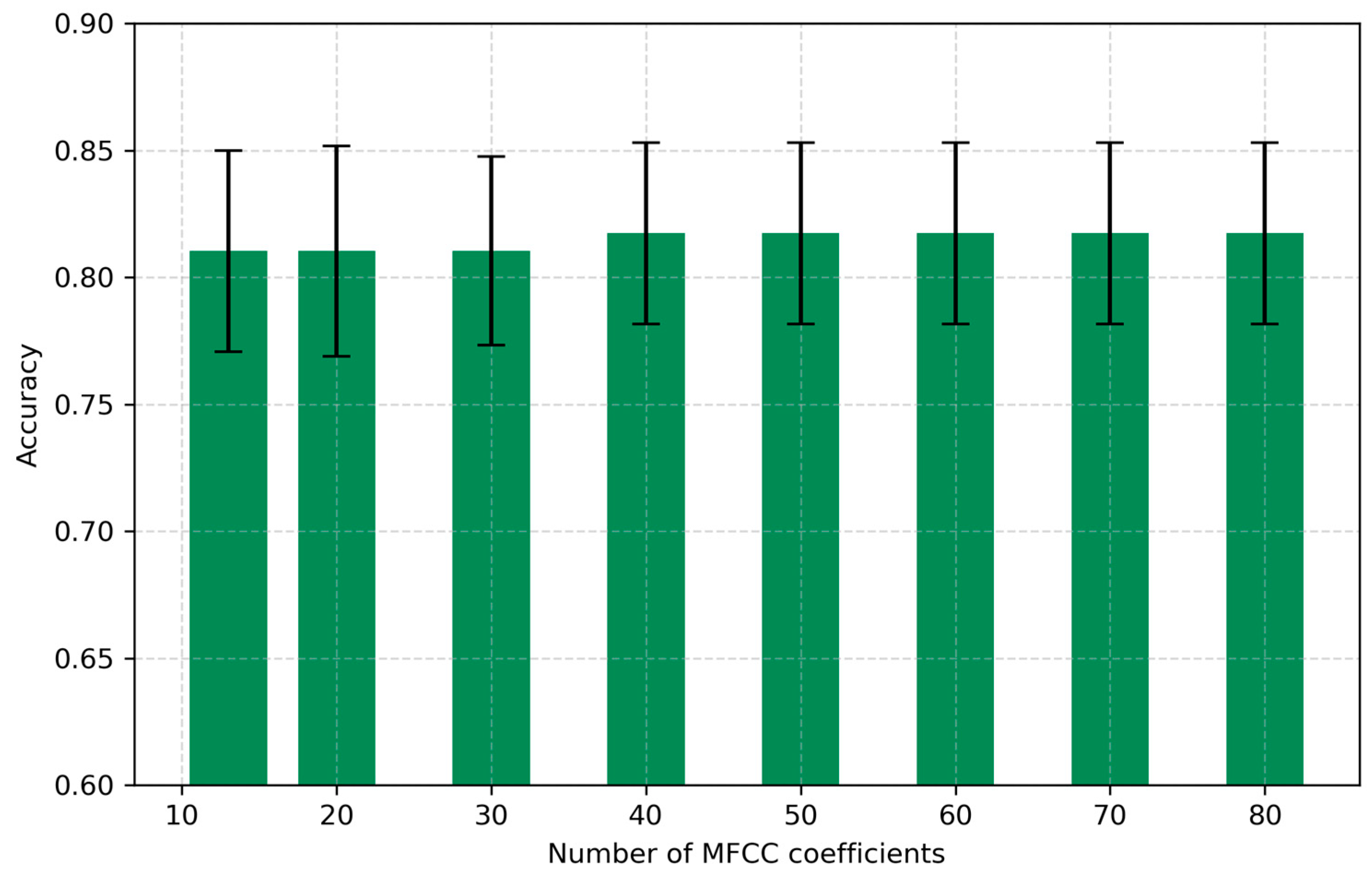

4.1. Number of Coefficients

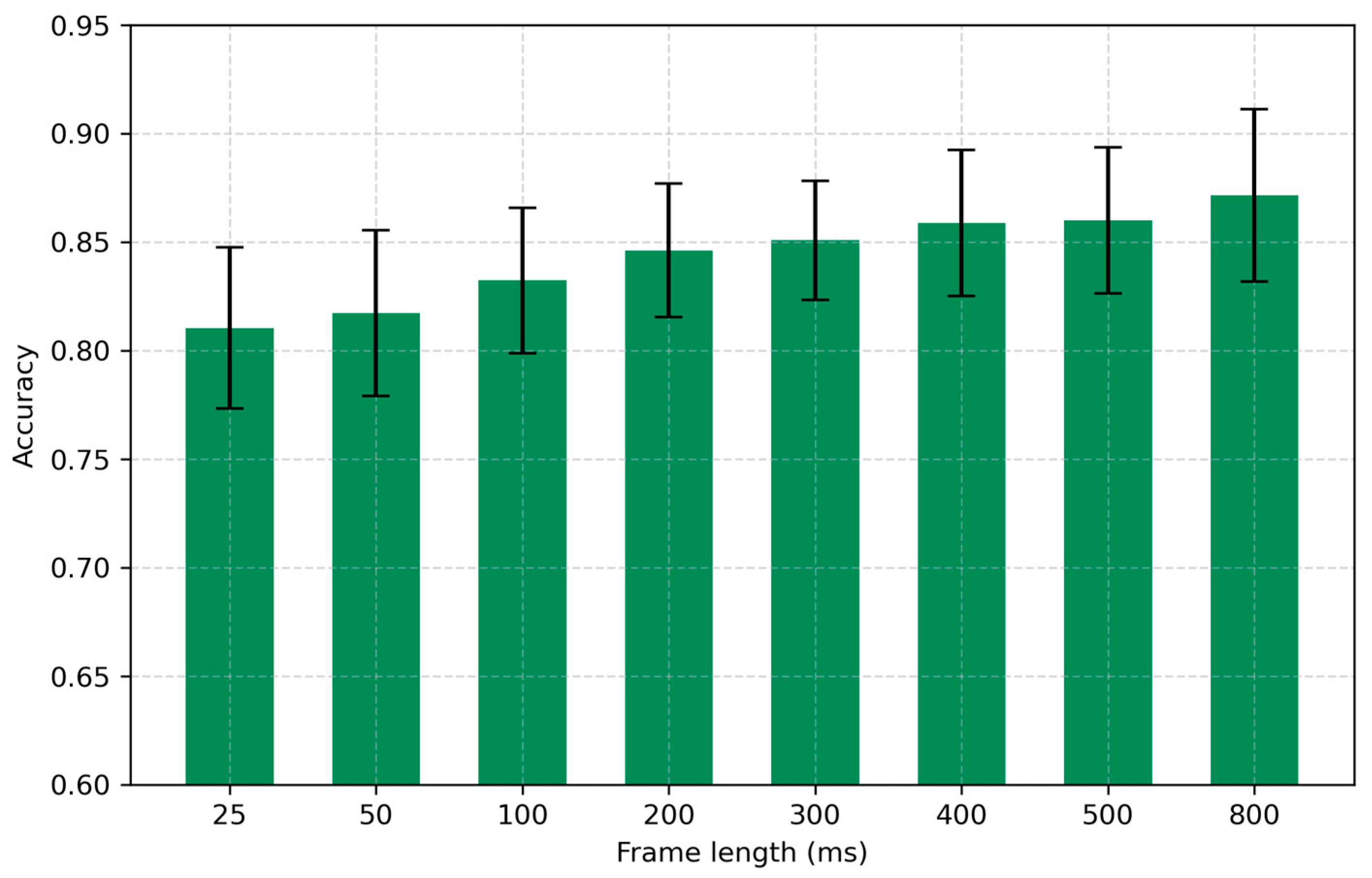

4.2. Frame Length

4.3. Latency-Accuracy Trade-Off

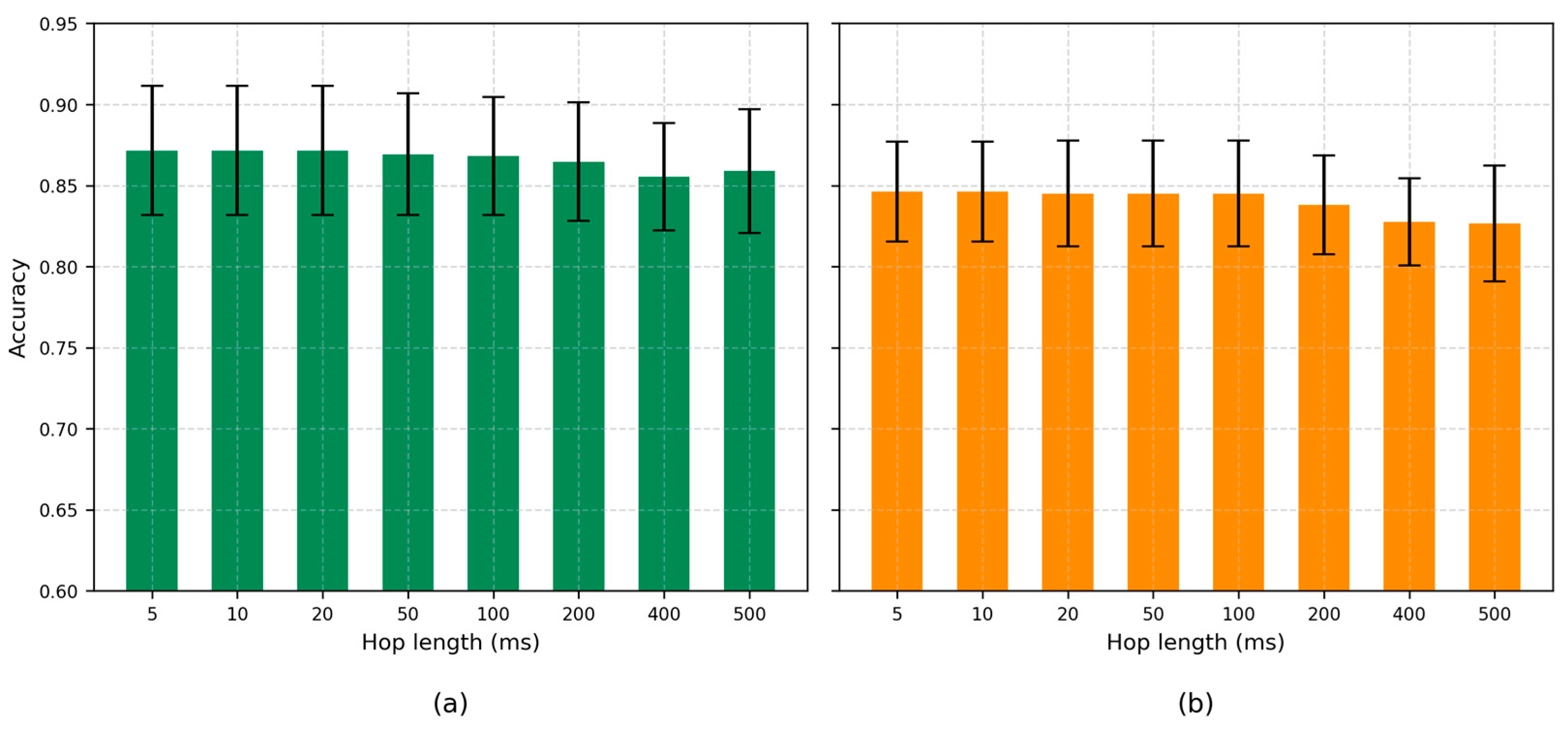

4.4. Hop Length

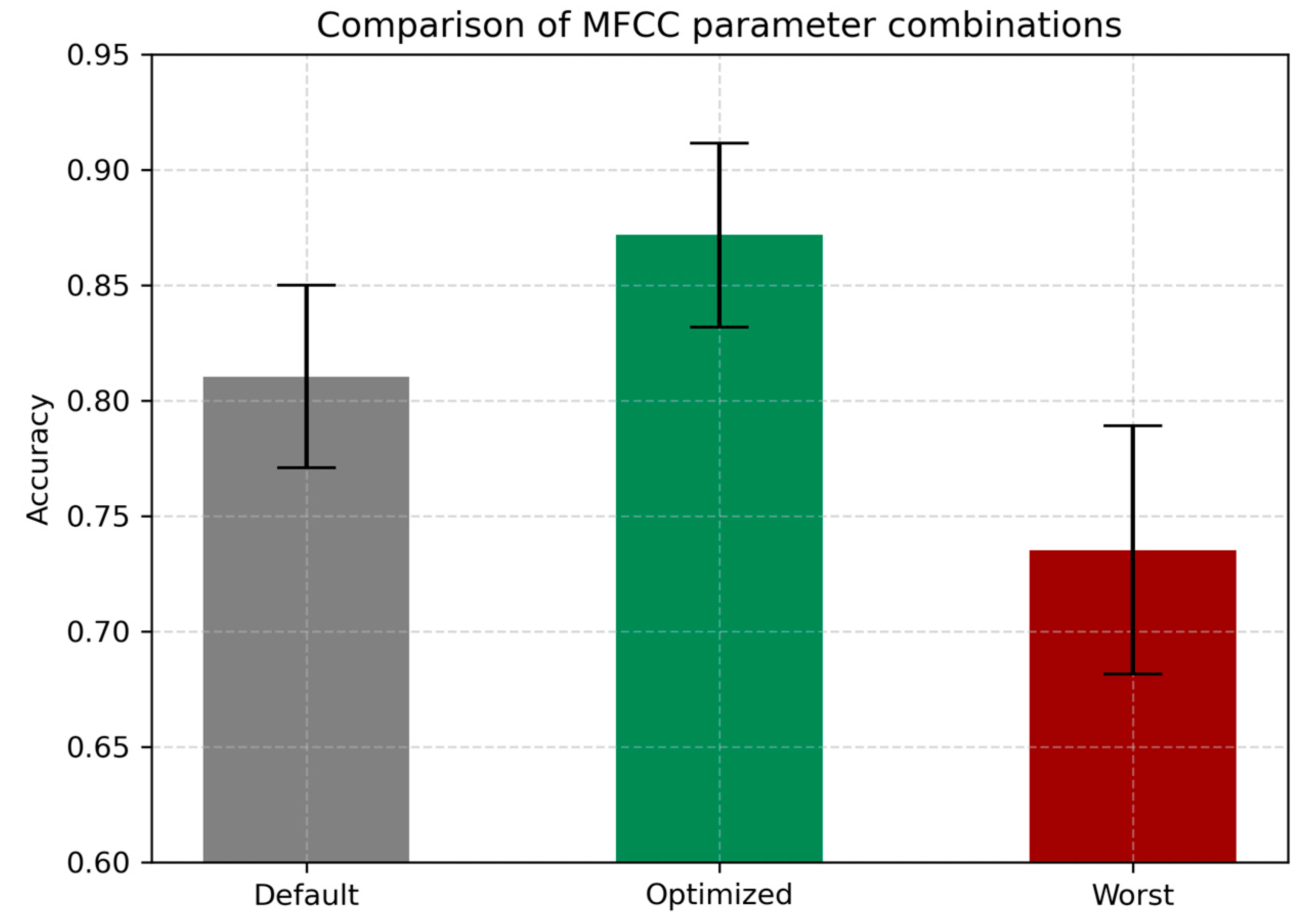

4.5. Optimal Combination of Parameters

4.6. Comparison with Deep Learning Models

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AUC | Area Under the Curve |
| COPD | Chronic Obstructive Pulmonary Disease |
| COVID-19 | Coronavirus Disease 2019 |
| EER | Equal Error Rate |
| FFT | Fast Fourier Transform |
| LSTM | Long Short-Term Memory |
| MFCCs | Mel Frequency Cepstral Coefficients |
| OSA | Obstructive Sleep Apnea |
| PCM | Pulse Code Modulation |
| RBF | Radial Basis Function |
| RF | Random Forest |
| ROC | Receiver Operating Characteristic |
| STD | Standard Deviation |
| SVD | Saarbrücken Voice Disorders |
| SVM | Support Vector Machine |
| WAV | Waveform audio file format |

References

- Del Negro, C.A.; Funk, G.D.; Feldman, J.L. Breathing matters. Nat. Rev. Neurosci. 2018, 19, 351–367.

- Dempsey, J.A.; Welch, J.F. Control of breathing. Semin. Respir. Crit. Care Med. 2023, 44, 627–649.

- Ashhad, S.; Kam, K.; Del Negro, C.A.; Feldman, J.L. Breathing rhythm and pattern and their influence on emotion. Annu. Rev. Neurosci. 2022, 45, 223–247.

- Mitsea, E.; Drigas, A.; Skianis, C. Breathing, attention & consciousness in sync: The role of breathing training, metacognition & virtual reality. Tech. Soc. Sci. J. 2022, 29, 79–97.

- Soroka, T.; Ravia, A.; Snitz, K.; Honigstein, D.; Weissbrod, A.; Gorodisky, L.; Weiss, T.; Perl, O.; Sobel, N. Humans have nasal respiratory fingerprints. Curr. Biol. 2025, 35, 3011–3021.e3.

- Zaccaro, A.; Piarulli, A.; Laurino, M.; Garbella, E.; Menicucci, D.; Neri, B.; Gemignani, A. How breath-control can change your life: A systematic review on psycho-physiological correlates of slow breathing. Front. Hum. Neurosci. 2018, 12, 409421.

- Landry, V.; Matschek, J.; Pang, R.; Munipalle, M.; Tan, K.; Boruff, J.; Li-Jessen, N.Y. Audio-based digital biomarkers in diagnosing and managing respiratory diseases: A systematic review and bibliometric analysis. Eur. Respir. Rev. 2025, 34, 240246.

- Jeong, H.; Yoo, J.H.; Goh, M.; Song, H. Deep breathing in your hands: Designing and assessing a DTx mobile app. Front. Digit. Health 2024, 6, 1287340.

- Shih, C.H.; Tomita, N.; Lukic, Y.X.; Reguera, Á.H.; Fleisch, E.; Kowatsch, T. Breeze: Smartphone-based acoustic real-time detection of breathing phases for a gamified biofeedback breathing training. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2019, 3, 1–30.

- Agrawal, V.; Naik, V.; Duggirala, M.; Athavale, S. Calm: A mobile based deep breathing game with biofeedback. In Proceedings of the Extended Abstracts of the 2020 Annual Symposium on Computer-Human Interaction in Play, Virtual Event, Canada, 2–4 November 2020.

- Latifi, S.A.; Ghassemian, H.; Imani, M. Feature extraction and classification of respiratory sound and lung diseases. In Proceedings of the 2023 6th International Conference on Pattern Recognition and Image Analysis (IPRIA), Qom, Iran, 14–16 February 2023.

- Lurie, A.; Roche, N. Obstructive sleep apnea in patients with chronic obstructive pulmonary disease: Facts and perspectives. COPD J. Chronic Obs. Pulm. Dis. 2021, 18, 700–712.

- Vitazkova, D.; Foltan, E.; Kosnacova, H.; Micjan, M.; Donoval, M.; Kuzma, A.; Kopani, M.; Vavrinsky, E. Advances in respiratory monitoring: A comprehensive review of wearable and remote technologies. Biosensors 2024, 14, 90.

- Iannella, G.; Pace, A.; Bellizzi, M.G.; Magliulo, G.; Greco, A.; De Virgilio, A.; Croce, E.; Gioacchini, F.M.; Re, M.; Costantino, A.; et al. The global burden of obstructive sleep apnea. Diagnostics 2025, 15, 1088.

- Adeloye, D.; Song, P.; Zhu, Y.; Campbell, H.; Sheikh, A.; Rudan, I. Global, regional, and national prevalence of, and risk factors for, chronic obstructive pulmonary disease (COPD) in 2019: A systematic review and modelling analysis. Lancet Respir. Med. 2022, 10, 447–458.

- Song, P.; Adeloye, D.; Salim, H.; Dos Santos, J.P.; Campbell, H.; Sheikh, A.; Rudan, I. Global, regional, and national prevalence of asthma in 2019: A systematic analysis and modelling study. J. Glob. Health 2022, 12, 04052.

- Migliaccio, G.M.; Russo, L.; Maric, M.; Padulo, J. Sports performance and breathing rate: What is the connection? A narrative review on breathing strategies. Sports 2023, 11, 103.

- Sikora, M.; Mikołajczyk, R.; Łakomy, O.; Karpiński, J.; Żebrowska, A.; Kostorz-Nosal, S.; Jastrzębski, D. Influence of the breathing pattern on the pulmonary function of endurance-trained athletes. Sci. Rep. 2024, 14, 1113.

- Balban, M.Y.; Neri, E.; Kogon, M.M.; Weed, L.; Nouriani, B.; Jo, B.; Holl, G.; Zeitzer, J.M.; Spiegel, D.; Huberman, A.D. Brief structured respiration practices enhance mood and reduce physiological arousal. Cell Rep. Med. 2023, 4, 100895.

- Toussaint, L.; Nguyen, Q.A.; Roettger, C.; Dixon, K.; Offenbächer, M.; Kohls, N.; Hirsch, J.; Sirois, F. Effectiveness of progressive muscle relaxation, deep breathing, and guided imagery in promoting psychological and physiological states of relaxation. Evid.-Based Complement. Altern. Med. 2021, 2021, 5924040.

- Cook, J.; Umar, M.; Khalili, F.; Taebi, A. Body acoustics for the non-invasive diagnosis of medical conditions. Bioengineering 2022, 9, 149.

- Lalouani, W.; Younis, M.; Emokpae Jr, R.N.; Emokpae, L.E. Enabling effective breathing sound analysis for automated diagnosis of lung diseases. Smart Health 2022, 26, 100329.

- Davis, S.; Mermelstein, P. Comparison of parametric representations for monosyllabic word recognition in continuously spoken sentences. IEEE Trans. Acoust. Speech Signal Process. 1980, 28, 357–366.

- Abdul, Z.K.; Al-Talabani, A.K. Mel frequency cepstral coefficient and its applications: A review. IEEE Access 2022, 10, 122136–122158.

- Yan, Y.; Simons, S.O.; van Bemmel, L.; Reinders, L.G.; Franssen, F.M.; Urovi, V. Optimizing MFCC parameters for the automatic detection of respiratory diseases. Appl. Acoust. 2025, 228, 110299.

- Ruinskiy, D.; Lavner, Y. An effective algorithm for automatic detection and exact demarcation of breath sounds in speech and song signals. IEEE Trans. Audio Speech Lang. Process. 2007, 15, 838–850.

- Duckitt, W.D.; Tuomi, S.K.; Niesler, T.R. Automatic detection, segmentation and assessment of snoring from ambient acoustic data. Physiol. Meas. 2006, 27, 1047–1056.

- Kim, T.; Kim, J.W.; Lee, K. Detection of sleep disordered breathing severity using acoustic biomarker and machine learning techniques. Biomed. Eng. Online 2018, 17, 16.

- Srivastava, A.; Jain, S.; Miranda, R.; Patil, S.; Pandya, S.; Kotecha, K. Deep learning based respiratory sound analysis for detection of chronic obstructive pulmonary disease. PeerJ Comput. Sci. 2021, 7, e369.

- Pahar, M.; Niesler, T. Machine learning based COVID-19 detection from smartphone recordings: Cough, breath and speech. arXiv 2021, arXiv:2104.02477.

- Bardou, D.; Zhang, K.; Ahmad, S.M. Lung sounds classification using convolutional neural networks. Artif. Intell. Med. 2018, 88, 58–69.

- Dash, T.K.; Mishra, S.; Panda, G.; Satapathy, S.C. Detection of COVID-19 from speech signal using bio-inspired based cepstral features. Pattern Recognit. 2021, 117, 107999.

- Ingco, W.E.M.; Reyes, R.S.; Abu, P.A.R. Development of a spectral feature extraction using enhanced MFCC for respiratory sound analysis. In Proceedings of the 2019 International SoC Design Conference (ISOCC), Jeju, Republic of Korea, 6–9 October 2019.

- Ingco, W.E.M.; Abu, P.A.R.; Reyes, R.S. Performance evaluation of an intelligent lung sound classifier based on an enhanced MFCC model. In Proceedings of the 2021 7th International Conference on Electrical, Electronics and Information Engineering (ICEEIE), Malang, Indonesia, 2 October 2021.

- Fahed, V.S.; Doheny, E.P.; Lowery, M.M. Random forest classification of breathing phases from audio signals recorded using mobile devices. In Proceedings of the Interspeech 2023, Dublin, Ireland, 20–24 August 2023.

- Mehrban, M.H.K.; Voix, J.; Bouserhal, R.E. Classification of breathing phase and path with in-ear microphones. Sensors 2024, 24, 6679.

- Tran-Anh, D.; Vu, N.H.; Nguyen-Trong, K.; Pham, C. Multi-task learning neural networks for breath sound detection and classification in pervasive healthcare. Pervasive Mob. Comput. 2022, 86, 101685.

- Tirronen, S.; Kadiri, S.R.; Alku, P. The effect of the MFCC frame length in automatic voice pathology detection. J. Voice 2024, 38, 975–982.

- Laborde, S.; Iskra, M.; Zammit, N.; Borges, U.; You, M.; Sevoz-Couche, C.; Dosseville, F. Slow-paced breathing: Influence of inhalation/exhalation ratio and of respiratory pauses on cardiac vagal activity. Sustainability 2021, 13, 7775.

- Gupta, S.; Jaafar, J.; Ahmad, W.W.; Bansal, A. Feature extraction using MFCC. Signal Image Process. Int. J. 2013, 4, 101–108.

- Siam, A.I.; Elazm, A.A.; El-Bahnasawy, N.A.; El Banby, G.M.; Abd El-Samie, F.E. PPG-based human identification using Mel-frequency cepstral coefficients and neural networks. Multimed. Tools Appl. 2021, 80, 26001–26019.

- McFee, B.; Raffel, C.; Liang, D.; Ellis, D.P.W.; McVicar, M.; Battenberg, E.; Nieto, O. librosa: Audio and music signal analysis in python. In Proceedings of the 14th Python in Science Conference, Austin, TX, USA, 11–17 July 2015.

- Kramer, O. Scikit-learn. In Machine Learning for Evolution Strategies; Springer: Berlin/Heidelberg, Germany, 2016; pp. 45–53.

- Cervantes, J.; Garcia-Lamont, F.; Rodríguez-Mazahua, L.; Lopez, A. A comprehensive survey on support vector machine classification: Applications, challenges and trends. Neurocomputing 2020, 408, 189–215.

- Hershey, S.; Chaudhuri, S.; Ellis, D.P.W.; Gemmeke, J.F.; Jansen, A.; Moore, R.C.; Plakal, M.; Platt, D.; Saurous, R.A.; et al. CNN architectures for large-scale audio classification. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017.

- Gemmeke, J.F.; Ellis, D.P.W.; Freedman, D.; Jansen, A.; Lawrence, W.; Moore, R.C.; Plakal, M.; Ritter, M. Audio set: An ontology and human-labeled dataset for audio events. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Haider, N.S. Respiratory sound denoising using empirical mode decomposition, hurst analysis and spectral subtraction. Biomed. Signal Process. Control 2021, 64, 102313.

- Emmanouilidou, D.; McCollum, E.D.; Park, D.E.; Elhilali, M. Adaptive noise suppression of pediatric lung auscultations with real applications to noisy clinical settings in developing countries. IEEE Trans. Biomed. Eng. 2015, 62, 2279–2288.

- Upadhyay, N.; Karmakar, A. Speech enhancement using spectral subtraction-type algorithms: A comparison and simulation study. Procedia Comput. Sci. 2015, 54, 574–584.

- Ramasubramanian, V.; Vijaywargi, D. Speech enhancement based on hypothesized Wiener filtering. In Proceedings of the Interspeech 2008, Brisbane, Australia, 22–26 September 2008.

- Podder, P.; Hasan, M.M.; Islam, M.R.; Sayeed, M. Design and implementation of Butterworth, Chebyshev-I and elliptic filter for speech signal analysis. Int. J. Comput. Appl. 2014, 98, 12–18.

- Haider, N.S.; Behera, A.K. Respiratory sound denoising using sparsity-assisted signal smoothing algorithm. Biocybern. Biomed. Eng. 2022, 42, 481–493.

- Ali, M.A.; Shemi, P.M. An improved method of audio denoising based on wavelet transform. In Proceedings of the 2015 IEEE International Conference on Power, Instrumentation, Control and Computing (PICC), Thrissur, India, 9–11 December 2015.

- Lee, C.S.; Li, M.; Lou, Y.; Dahiya, R. Restoration of lung sound signals using a hybrid wavelet-based approach. IEEE Sens. J. 2022, 22, 19700–19712.

- Dong, G.; Zhang, Z.; Sun, P.; Zhang, M. Adaptive differential denoising for respiratory sounds classification. arXiv 2025, arXiv:2506.02505.

- Rocha, B.M.; Filos, D.; Mendes, L.; Serbes, G.; Ulukaya, S.; Kahya, Y.P.; Jakovljevic, N.; Turukalo, T.L.; Vogiatzis, I.M.; Perantoni, E.; et al. An open access database for the evaluation of respiratory sound classification algorithms. Physiol. Meas. 2019, 40, 035001.

- Bhattacharya, D.; Sharma, N.K.; Dutta, D.; Chetupalli, S.R.; Mote, P.; Ganapathy, S.; Chandrakiran, C.; Nori, S.; Suhail, K.K.; Gonuguntla, S.; et al. Coswara: A respiratory sounds and symptoms dataset for remote screening of SARS-CoV-2 infection. Sci. Data 2023, 10, 397.

- Heitmann, J.; Glangetas, A.; Doenz, J.; Dervaux, J.; Shama, D.M.; Garcia, D.H.; Benissa, M.R.; Cantais, A.; Perez, A.; Müller, D. DeepBreath: Automated detection of respiratory pathology from lung auscultation in 572 pediatric outpatients across 5 countries. NPJ Digit. Med. 2023, 6, 104.

| Frame Length (ms) | Latency (s) | Accuracy (mean ± STD) |
|---|---|---|
| 200 | 0.200 | 0.8462 ± 0.0308 |
| 300 | 0.300 | 0.8508 ± 0.0273 |
| 400 | 0.400 | 0.8589 ± 0.0337 |
| 800 | 0.800 | 0.8716 ± 0.0397 |
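
In the table above, the latency column equals the frame length: a frame-based detector has to buffer one complete analysis window before it can classify it. The arithmetic can be sketched in Python as follows. The 16 kHz sampling rate and the 5 s signal are hypothetical values chosen for illustration (the sampling rate is not given in this section), the 10 ms hop is the value listed for the current study in the comparison table, and frames are assumed to be unpadded.

```python
# Hypothetical sampling rate: the recording rate is not stated in this section.
SAMPLE_RATE_HZ = 16_000


def ms_to_samples(duration_ms: float, sample_rate_hz: int = SAMPLE_RATE_HZ) -> int:
    """Convert a duration in milliseconds to a whole number of samples."""
    return round(duration_ms * sample_rate_hz / 1000)


def framing_summary(frame_ms: float, hop_ms: float, signal_s: float,
                    sample_rate_hz: int = SAMPLE_RATE_HZ) -> dict:
    """Describe how a signal is cut into overlapping analysis frames.

    Assumes no padding, so only complete frames are counted.
    """
    frame_len = ms_to_samples(frame_ms, sample_rate_hz)
    hop_len = ms_to_samples(hop_ms, sample_rate_hz)
    n_samples = round(signal_s * sample_rate_hz)
    # Number of complete frames that fit into the signal.
    n_frames = 0 if n_samples < frame_len else 1 + (n_samples - frame_len) // hop_len
    return {
        "frame_len_samples": frame_len,
        "hop_len_samples": hop_len,
        "n_frames": n_frames,
        # The first decision cannot be made before one full frame is buffered.
        "min_latency_s": frame_len / sample_rate_hz,
    }


for frame_ms in (200, 300, 400, 800):
    print(frame_ms, framing_summary(frame_ms, hop_ms=10, signal_s=5.0))
```

Ignoring processing time, this buffering delay grows linearly with the frame length, which is the cost traded against the accuracy increase from 0.8462 to 0.8716 in the table.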

| Configuration | Accuracy | AUC | F1 | Precision | EER |
|---|---|---|---|---|---|
| Worst | 0.7352 | 0.8855 | 0.7437 | 0.7382 | 0.1760 |
| Default | 0.8096 | 0.9338 | 0.8165 | 0.8079 | 0.1295 |
| Optimized | 0.8716 | 0.9663 | 0.8764 | 0.8725 | 0.0899 |
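
The EER column in the table above reports the operating point at which the false positive rate equals the false negative rate, so lower is better. A minimal pure-Python sketch of how it can be estimated from binary classifier scores follows; it is a generic illustration, not the evaluation code behind these results, and the labels and scores in the usage lines are hypothetical.

```python
from typing import Sequence


def equal_error_rate(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Approximate the EER of a binary classifier.

    labels: 1 for the positive class, 0 for the negative class.
    scores: higher values mean "more likely positive".
    Every distinct score is tried as a threshold (predict positive when
    score >= threshold). The EER is taken at the threshold where the false
    positive rate (FPR) and false negative rate (FNR) are closest, and is
    reported as their mean.
    """
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one positive and one negative example")

    best_gap, best_eer = float("inf"), 1.0
    for threshold in sorted(set(scores)):
        false_pos = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= threshold)
        false_neg = sum(1 for y, s in zip(labels, scores) if y == 1 and s < threshold)
        fpr, fnr = false_pos / n_neg, false_neg / n_pos
        if abs(fpr - fnr) < best_gap:
            best_gap, best_eer = abs(fpr - fnr), (fpr + fnr) / 2
    return best_eer


# Hypothetical scores: one negative (0.6) outranks one positive (0.4).
labels = [0, 0, 0, 0, 1, 1, 1, 1]
scores = [0.1, 0.2, 0.3, 0.6, 0.4, 0.7, 0.8, 0.9]
print(equal_error_rate(labels, scores))  # 0.25
```

A single threshold sweep is coarse on small test sets; evaluation libraries typically compute the full ROC curve and interpolate between its points to locate the FPR/FNR crossing.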

| Model/Feature | Accuracy | AUC | F1 | Precision | EER |
|---|---|---|---|---|---|
| SVM (MFCC, optimized) | 0.8716 | 0.9663 | 0.8764 | 0.8725 | 0.0899 |
| VGGish + RF | 0.8713 | 0.9598 | 0.8802 | 0.8822 | 0.0960 |
| YAMNet + RF | 0.7871 | 0.9372 | 0.7984 | 0.7952 | 0.1295 |
| MobileNetV2 + MFCC | 0.8416 | 0.9594 | 0.8548 | 0.8538 | 0.1088 |
| MobileNetV2 + Spectrogram | 0.8416 | 0.9661 | 0.8496 | 0.8517 | 0.1041 |

| Study | Problem | Dataset | Modality | Classifier | n_mfcc | Frame Length (ms) | Hop Length (ms) | Accuracy |
|---|---|---|---|---|---|---|---|---|
| Tirronen et al., 2024 [38] | Voice pathology detection | SVD | Speech | SVM | 13 (default) | 500 | 5 (default) | 66.4% |
| Yan et al., 2025 [25] | Automatic detection of respiratory diseases | Cambridge COVID-19 Sound database | Speech, Cough, and Breath | SVM/LSTM | 30 | 25 | 5 | 81.1%/79.2% |
| Yan et al., 2025 [25] | Automatic detection of respiratory diseases | Coswara | Speech, Cough, and Breath | SVM/LSTM | 40 | 25 | 5 | 80.6%/79.6% |
| Yan et al., 2025 [25] | Automatic detection of respiratory diseases | SVD | Speech | SVM/LSTM | 30 | 25 | 5 | 71.7%/71.9% |
| Current study | Breathing phase detection | Proprietary dataset | Breath | SVM | 30 | 300 | 10 | 85.08% |
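
The comparison table characterises each MFCC configuration by three parameters: the number of coefficients (n_mfcc), the frame length and the hop length. The reference list cites librosa; the sketch below instead spells out the standard MFCC pipeline (framing, windowing, power spectrum, mel filterbank, log compression, DCT) in NumPy so that the role of each parameter is visible, using the current study's values of 30 coefficients, 300 ms frames and a 10 ms hop. It is an illustration under stated assumptions, not the authors' implementation: the 16 kHz sampling rate, Hamming window, HTK-style mel formula, 40-band filterbank, power-of-two FFT size and synthetic test tone are all hypothetical choices.

```python
import numpy as np


def hz_to_mel(f):
    """HTK-style mel scale (one of several common variants)."""
    return 2595.0 * np.log10(1.0 + np.asarray(f, dtype=float) / 700.0)


def mel_to_hz(m):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (np.asarray(m, dtype=float) / 2595.0) - 1.0)


def mel_filterbank(n_mels, n_fft, sr):
    """Triangular filters whose centres are equally spaced on the mel scale."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2), n_mels + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sr).astype(int)
    fbank = np.zeros((n_mels, n_fft // 2 + 1))
    for i in range(1, n_mels + 1):
        left, centre, right = bins[i - 1], bins[i], bins[i + 1]
        for k in range(left, centre):  # rising edge of the triangle
            fbank[i - 1, k] = (k - left) / (centre - left)
        for k in range(centre, right):  # falling edge of the triangle
            fbank[i - 1, k] = (right - k) / (right - centre)
    return fbank


def dct_matrix(n_out, n_in):
    """First n_out rows of the orthonormal DCT-II basis."""
    n = np.arange(n_in)
    k = np.arange(n_out)[:, None]
    basis = np.cos(np.pi * k * (2 * n + 1) / (2 * n_in))
    basis[0] *= np.sqrt(1.0 / n_in)
    basis[1:] *= np.sqrt(2.0 / n_in)
    return basis


def mfcc(signal, sr, n_mfcc=30, frame_ms=300.0, hop_ms=10.0, n_mels=40):
    """Return an (n_frames, n_mfcc) matrix of MFCCs for a mono signal."""
    signal = np.asarray(signal, dtype=float)
    frame_len = int(round(sr * frame_ms / 1000))  # frame length in samples
    hop_len = int(round(sr * hop_ms / 1000))      # hop length in samples
    n_fft = 1 << (frame_len - 1).bit_length()     # next power of two >= frame_len
    if len(signal) < frame_len:
        raise ValueError("signal is shorter than one frame")
    n_frames = 1 + (len(signal) - frame_len) // hop_len
    # Row i holds samples [i * hop_len, i * hop_len + frame_len).
    idx = hop_len * np.arange(n_frames)[:, None] + np.arange(frame_len)[None, :]
    frames = signal[idx] * np.hamming(frame_len)
    power = np.abs(np.fft.rfft(frames, n=n_fft, axis=1)) ** 2 / n_fft
    mel_energy = power @ mel_filterbank(n_mels, n_fft, sr).T
    log_mel = np.log(mel_energy + 1e-10)  # small floor avoids log(0)
    return log_mel @ dct_matrix(n_mfcc, n_mels).T


# Usage: a synthetic 2 s, 440 Hz tone at a hypothetical 16 kHz rate.
sr = 16_000
t = np.arange(2 * sr) / sr
tone = 0.5 * np.sin(2 * np.pi * 440.0 * t)
coeffs = mfcc(tone, sr)
print(coeffs.shape)  # (171, 30)
```

In librosa, the corresponding arguments of `librosa.feature.mfcc` are `n_mfcc`, `n_fft` (or `win_length`) and `hop_length`, all given in samples rather than milliseconds. Its defaults differ from this sketch in several details (for example, it centres and pads frames and uses a different mel formula by default), so coefficient values will not match exactly.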

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhantleuova, A.K.; Makashev, Y.K.; Duzbayev, N.T. Optimizing MFCC Parameters for Breathing Phase Detection. Sensors 2025, 25, 5002. https://doi.org/10.3390/s25165002
