1. Introduction

In recent years, the rapid advancement of Internet of Things (IoT) technology has led to a significant increase in the deployment of sensor devices and smart systems. IoT applications span various fields, including smart cities, agricultural monitoring, infrastructure management, and healthcare, resulting in an exponential rise in the number of deployed IoT devices [1]. According to a report, the number of IoT devices worldwide is expected to reach approximately 4.4 billion by 2025, raising concerns about the increasing load on communication networks [2]. As the large-scale deployment of IoT devices progresses, the shortage of communication resources has become a serious issue. The operation of numerous devices within the same communication area leads to frequent channel contention and interference, causing increased communication delays and packet loss [3]. In addition, many IoT devices operate on battery power, and in many cases, recharging or replacing batteries frequently is impractical due to deployment constraints. Therefore, achieving efficient communication under limited energy availability is a crucial challenge. However, network congestion and packet collisions force devices to retransmit data to meet the Quality of Service (QoS) requirements of IoT applications, thereby increasing power consumption. This issue is particularly significant in high-density IoT networks, where declining energy efficiency can severely impact overall system performance.

Low Power Wide Area (LPWA) technology has gained significant attention for enabling long-range, low-power communications [4,5]. In a typical LPWA network, devices can communicate with base stations located several kilometers to over ten kilometers away, facilitating the management of widely distributed IoT devices [6]. Additionally, the extended battery lifespan of LPWA-enabled devices, often lasting several years, minimizes the need for frequent maintenance, contributing to reduced operational costs for large-scale IoT systems. Long Range (LoRa) is one of the most widely adopted LPWA technologies, along with Sigfox and Narrowband IoT (NB-IoT) [7]. Because LoRa offers advantages over other LPWA standards in communication range, low power consumption, and license-free operational flexibility, this paper focuses on LoRa technology.

However, since the traditional LoRa network employs Aloha as the media access control (MAC) protocol [8], each device transmits data at random intervals, and multiple devices using the same channel simultaneously may cause collisions [9]. When a collision occurs, the data packet cannot be received correctly, necessitating retransmission. This retransmission increases network load, causing further interference and creating a vicious cycle. Furthermore, as IoT adoption expands and more devices connect to LoRa networks, channel interference becomes a significant issue. Although LoRa supports multiple channels, improper channel selection may lead to congestion, reducing communication success rates [10]. Therefore, inter-channel interference must be considered, requiring dynamic channel selection based on congestion conditions. Additionally, LoRa devices that repeatedly retransmit data consume more energy. In environments with high channel interference, the likelihood of failed transmissions rises, leading to excessive battery usage [9]. Moreover, transmission parameter settings significantly impact energy consumption. For instance, setting the transmission power (TP) too high wastes energy, while setting it too low reduces the communication range, increasing the probability of transmission failures [3]. In such cases, devices must attempt multiple retransmissions, further degrading energy efficiency. Therefore, selecting an appropriate TP based on the communication environment is essential. Furthermore, bandwidth (BW) settings also affect communication stability and energy efficiency. A wider BW allows for faster data transmission but decreases spectrum efficiency and may increase interference [11]. Conversely, a narrower BW improves communication stability but increases transmission time, leading to higher energy consumption and latency.
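As a rough illustration of why contention grows with device density under Aloha, the classical pure-ALOHA model with Poisson arrivals gives a collision-free probability of exp(−2G), where G is the offered load in packets per packet airtime. The sketch below evaluates this expression; the function name, the 0.1 s airtime, and the 10 s reporting interval are illustrative assumptions, and the model ignores the capture effect and the use of multiple channels, so it is not a prediction for any particular LoRa deployment.

```python
import math


def pure_aloha_success_probability(n_devices, airtime_s, interval_s):
    """Probability that a packet sees no overlap under pure ALOHA.

    Assumes Poisson arrivals on a single shared channel (textbook model).
    """
    # Offered load G: average number of packets started per packet airtime.
    offered_load = n_devices * airtime_s / interval_s
    # A packet is vulnerable for two airtimes, hence the factor 2.
    return math.exp(-2.0 * offered_load)


if __name__ == "__main__":
    for n in (10, 20, 30):
        p = pure_aloha_success_probability(n, airtime_s=0.1, interval_s=10.0)
        print(f"{n} devices: P(no collision) = {p:.3f}")
```

Under these assumed values the collision-free probability falls monotonically as devices are added, which matches the qualitative trend described above.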

As described above, the communication performance of the LoRa network is highly influenced by transmission parameters such as channel, TP, and BW. Proper configuration of these parameters can enhance communication success rates and energy efficiency. In LoRa networks, transmission parameter configuration methods can be broadly classified into two approaches: centralized and distributed. In the centralized approach, the network server (NS) monitors the communication status of the network and assigns optimal transmission parameters to LoRa devices [12,13]. The primary advantage of this method is its ability to achieve global optimization of parameters, considering the entire network [14]. Specifically, the NS aggregates information such as device locations, communication success rates, and interference conditions, allowing it to configure optimal channels and TP to maximize the overall performance of the LoRa network. However, the centralized approach has several drawbacks. For instance, as the number of devices increases, the processing load on the NS intensifies, eventually reaching its capacity limits and restricting the scalability of the system [15]. Additionally, frequent communication between devices and the server is required to update parameters, making it challenging to ensure real-time responsiveness. Furthermore, the implementation and operational costs are high due to the need for network-wide management, central servers, and sophisticated optimization algorithms.

On the other hand, in the distributed approaches [

16,

17,

18,

19,

20,

21,

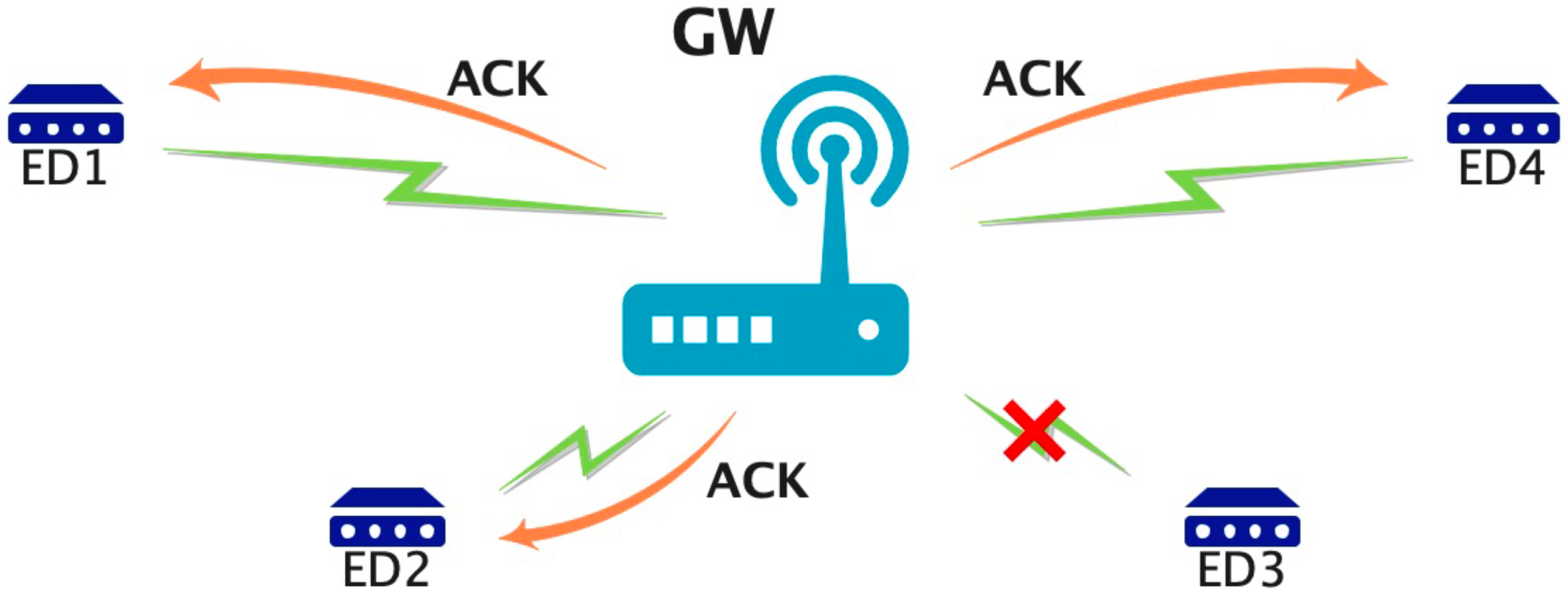

22], each device independently observes its surrounding environment and autonomously adjusts its transmission parameters. Devices can learn from their local information, such as communication success rates and acknowledgment (ACK) information to select appropriate transmission parameters, thereby enhancing network performance. A key advantage of this approach is the distribution of network load, reducing dependency on a central server and mitigating the scalability issues associated with an increasing number of devices [

23]. Additionally, since parameter adjustments are made without relying on a central server, devices can quickly adapt to environmental changes, improving real-time response. However, the distributed approaches also present challenges. For instance, in resource-constrained environments such as LoRa devices, executing learning processes with high computational load is challenging, leading to difficulty in practical applications and slower convergence and delays in overall network optimization. Furthermore, for IoT devices with power constraints, an increase in computational load results in higher energy consumption, making continuous learning difficult [

16,

24]. To address this issue and ensure consistent adaptation across devices, it is essential to develop lightweight learning algorithms that can operate efficiently within the resource limitations of LoRa devices, reducing computational load while maintaining adaptability. Refs. [

17,

18,

19,

20,

21,

22] proposed lightweight distributed transmission parameter selection approaches that can run on actual IoT devices. However, energy efficiency is not well considered in this work [

25].

As discussed above, centralized resource allocation approaches suffer from scalability limitations and communication overhead, whereas decentralized approaches require high computational costs or do not consider the optimization of energy efficiency. To solve these problems, this paper proposes a lightweight distributed reinforcement learning method aimed at improving both communication success rates and energy efficiency in LoRa networks. In the proposed approach, each LoRa end device independently learns and dynamically selects the optimal channel, TP, and BW based on its local communication environment, using reinforcement learning. The method employs a UCB1-tuned algorithm to balance exploration and exploitation in the parameter selection process. Moreover, energy consumption information is integrated into the learning framework to ensure that devices can reduce power usage while maintaining high transmission success rates. The proposed scheme is designed to be computationally lightweight and feasible for implementation on resource-constrained IoT devices, enabling adaptive communication even in high-density network scenarios. The main contributions of this paper are summarized as follows:

By introducing a lightweight reinforcement learning approach instead of complex optimization algorithms, the proposed method enables operation under resource-constrained conditions. Specifically, the proposed method utilizes the Upper Confidence Bound (UCB)1-tuned algorithm for transmission parameter selection, making it feasible for IoT devices with limited memory capacity and computational ability.

The proposed method incorporates energy consumption considerations in the reward design of the UCB1-tuned algorithm to improve energy efficiency. Specifically, the learning process balances TP and channel selection while ensuring communication reliability based on the ACK information and selected TP. Unlike conventional methods that primarily focus on maximizing communication success rates, this study aims to optimize both energy efficiency and communication quality. By considering the trade-off between power consumption and transmission success rates, the method enhances both transmission efficiency and energy savings in LoRa systems.

To further improve the spectrum and energy efficiency, the BW selection is introduced into our proposed method. Experimental results indicate that the success rate and energy efficiency can be significantly enhanced when the density of the LoRa network is higher by adding BW selection.

To validate the practicality of the proposed method, experiments are conducted using real LoRa devices in a high-density network. The proposed method is compared with conventional approaches such as ADR-Lite, fixed parameter selection, and the ε-greedy method in terms of transmission success rates and energy efficiency. The performance evaluation results demonstrate that the proposed method achieves the highest transmission success rates and energy efficiency.
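To make the learning component concrete, the following is a minimal sketch of the standard UCB1-tuned index policy applied to a fixed list of parameter combinations (arms). It is not the authors' implementation: the class name `UCB1Tuned` and the reward function `energy_aware_reward`, including its linear TP penalty and the `penalty` weight, are hypothetical stand-ins, since the reward design itself is defined elsewhere in the paper. The only assumption carried over from the text is that the reward depends on the ACK result and the selected TP.

```python
import math


class UCB1Tuned:
    """Standard UCB1-tuned policy; rewards are expected to lie in [0, 1]."""

    def __init__(self, n_arms):
        self.counts = [0] * n_arms      # number of times each arm was played
        self.sums = [0.0] * n_arms      # sum of rewards per arm
        self.sq_sums = [0.0] * n_arms   # sum of squared rewards per arm
        self.total = 0                  # total number of plays

    def select(self):
        # Play every arm once before the index is defined.
        for arm, n in enumerate(self.counts):
            if n == 0:
                return arm
        log_t = math.log(self.total)
        best_arm, best_index = 0, float("-inf")
        for arm, n in enumerate(self.counts):
            mean = self.sums[arm] / n
            variance = max(self.sq_sums[arm] / n - mean * mean, 0.0)
            # Upper bound on the variance, capped at 1/4 (max for [0, 1] rewards).
            v = variance + math.sqrt(2.0 * log_t / n)
            index = mean + math.sqrt((log_t / n) * min(0.25, v))
            if index > best_index:
                best_arm, best_index = arm, index
        return best_arm

    def update(self, arm, reward):
        self.counts[arm] += 1
        self.sums[arm] += reward
        self.sq_sums[arm] += reward * reward
        self.total += 1


def energy_aware_reward(ack, tp_dbm, tp_min=-3, tp_max=13, penalty=0.5):
    """Hypothetical reward: 0 without ACK, otherwise higher for lower TP."""
    if not ack:
        return 0.0
    return 1.0 - penalty * (tp_dbm - tp_min) / (tp_max - tp_min)
```

Each arm needs only three stored values (a count and two running sums), which is what keeps this family of algorithms feasible on devices with limited memory.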

This paper is structured as follows. Section 2 reviews the related work. Section 3 introduces the system model and problem formulation. Section 4 describes the proposed method. Section 5 presents the performance evaluation. Section 6 concludes this paper and gives directions for future research.

5. Performance Evaluation

In this section, we evaluate the performance of the proposed method. First, we analyze the impact of channel and TP selection on the transmission success rate and energy efficiency, comparing the proposed method (UCB1-tuned) with conventional methods (ε-greedy, ADR-Lite, and Fixed Allocation). Next, we evaluate the benefit of adding BW selection by comparing the performance with and without BW selection. Finally, we compare the performance of the proposed method with other algorithms (ε-greedy, ADR-Lite, and Fixed Allocation) and demonstrate its effectiveness in terms of transmission success rate and energy efficiency.

5.1. Performance Evaluation of Channel and TP Selection

In this subsection, we evaluate the selection ratio of each TP value, the transmission success rate, and the energy efficiency of the proposed method. Here, in the proposed method, each LoRa ED independently selects the channel and TP using the UCB1-tuned algorithm. A comparison with several other algorithms is also carried out. Each result is the average of five experiments.

5.1.1. Experimental Environment and Parameter Settings

In this work, LoRa EDs implementing the proposed method were set up as shown in Figure 2. The transmitter and receiver sides consist of the LoRa EDs and the GW, respectively. The transmitters generate and transmit data. The receiver processes the received data to observe the transmission success rate and energy efficiency of each transmitter. Three receivers were set up to emulate the GW, and each was assigned a different receive channel. The number of LoRa EDs varies from 10 to 30 in the performance evaluations. In our deployment, the distance between adjacent end devices was approximately 10 cm, the distance between adjacent gateways was approximately 8 cm, and the distance between each gateway and its associated end devices ranged from 10 cm to 100 cm. Each device starts operating at a random time and transmits data every 10 s. The BW and SF are set to 125 kHz and 7, respectively. The number of retransmissions is set to 0. Each LoRa ED selects one channel from five channels, i.e., {920.6, 921.0, 921.4, 921.8, 922.2} MHz, and one TP from the TP set {−3, 1, 5, 9, 13} dBm. The GW can only receive the transmitted data on the following three channels: {921.0, 921.4, 921.8} MHz. The number of transmissions for each LoRa ED is set to 200. The parameters of the energy consumption model used in the performance evaluation comprise three energy values in mWh, one power value in mW, and two sizes in bytes, one of which ranges from 36 to 44 bytes. The model also uses the wake-up time of the LoRa device, the processing time for selecting transmission parameters by the micro-controller, and the reception time of the device, all of which are measured in the experiments. The details of the experimental parameters are summarized in Table 3.
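To give a sense of scale for the TP set, the short sketch below converts each level from dBm to milliwatts using the standard relation P(mW) = 10^(dBm/10). The helper name `dbm_to_mw` is introduced here for illustration. Note that this is radiated RF power, not the electrical power drawn by the radio, which depends on the hardware and is not derived here.

```python
TP_SET_DBM = [-3, 1, 5, 9, 13]  # TP levels used in the experiments


def dbm_to_mw(dbm):
    """Convert a power level in dBm to milliwatts."""
    return 10 ** (dbm / 10)


if __name__ == "__main__":
    for tp in TP_SET_DBM:
        print(f"{tp:>3} dBm = {dbm_to_mw(tp):6.2f} mW")
```

The 16 dB span between the lowest and highest level corresponds to roughly a 40-fold difference in radiated power, which is why the choice of TP matters for energy efficiency.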

5.1.2. Comparison Methods

To examine the effectiveness of the proposed method, we compare it with the ε-greedy-based, ADR-Lite, and fixed transmission parameter allocation methods. ε-greedy is the simplest MAB algorithm, where LoRa EDs select the combination of the channel and TP with the largest reward with probability 1 − ε and randomly select a combination with probability ε. Specifically, ε is defined as a decreasing function of t, where t represents the number of transmissions. As the transmission count increases, ε decreases, encouraging exploitation of learned strategies over time. The fixed allocation method pre-assigns channels evenly to transmitters and transmits at the minimum TP. The ADR-Lite algorithm was introduced as a centralized method, but it is implemented as a distributed method in this performance evaluation. In the ADR-Lite algorithm, the LoRa ED sorts the TP in increasing order, while the channels are ordered according to the channel situation. Specifically, the list of the transmission parameters is set as {{CH1, −3 dBm}, {CH9, −3 dBm}, {CH3, −3 dBm}, {CH5, −3 dBm}, {CH7, −3 dBm}, {CH1, 1 dBm}, {CH9, 1 dBm}, {CH3, 1 dBm}, …, {CH1, 13 dBm}, {CH9, 13 dBm}, {CH3, 13 dBm}, {CH5, 13 dBm}, {CH7, 13 dBm}}, where CH1, CH3, CH5, CH7, and CH9 are the channels at 920.6, 921.0, 921.4, 921.8, and 922.2 MHz, respectively. CH1 and CH9 are unavailable to the receiver and can therefore be regarded as the channels with the worst situation. The further back a combination is located in the transmission parameter list, the higher its TP and the better its channel situation. In the ADR-Lite algorithm, the LoRa ED starts communication with the last combination in the list. If the transmission is successful, the next parameter set is the one midway between the first set in the list and the previously selected set; if it fails, the next parameter set is the one midway between the last set in the list and the previously selected set.
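The ADR-Lite selection rule as described in this subsection can be sketched as follows. The list construction follows the ordering given in the text (TP increasing, and within each TP level the channels ordered CH1, CH9, CH3, CH5, CH7). The rounding direction at the midpoint is not stated in the text, so rounding down on success and up on failure is an assumption made here, as are the names `PARAMETER_LIST` and `next_index`.

```python
import math

CHANNEL_ORDER = ["CH1", "CH9", "CH3", "CH5", "CH7"]  # worst to best, per the text
TP_ORDER_DBM = [-3, 1, 5, 9, 13]                      # increasing TP

# TP is the outer (slow) key and the channel the inner (fast) key.
PARAMETER_LIST = [(ch, tp) for tp in TP_ORDER_DBM for ch in CHANNEL_ORDER]


def next_index(previous, success, last=len(PARAMETER_LIST) - 1):
    """Return the list index to use for the next transmission.

    Success: move to the midpoint between the first entry and the previous one.
    Failure: move to the midpoint between the previous entry and the last one.
    Rounding direction is an assumption (down on success, up on failure).
    """
    if success:
        return previous // 2
    return math.ceil((previous + last) / 2)


if __name__ == "__main__":
    index = len(PARAMETER_LIST) - 1  # start from the last combination
    for outcome in (True, True, False, True):
        print(PARAMETER_LIST[index], "success" if outcome else "failure")
        index = next_index(index, outcome)
```

Because the next index depends only on the previous index and its outcome, the rule carries no longer-term statistics about which channels tend to succeed.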

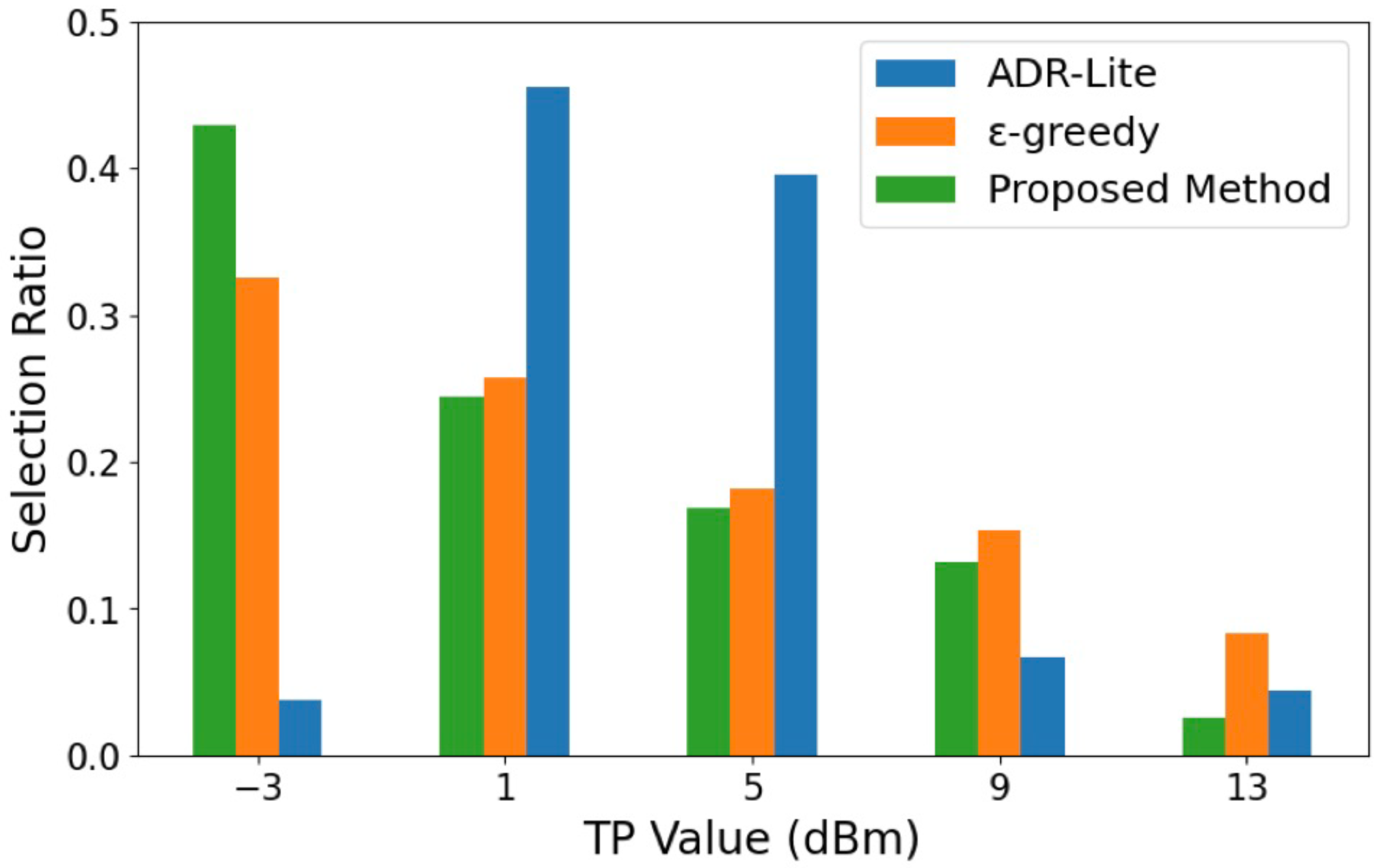

5.1.3. Selection Ratio of Each TP Level

Figure 3 shows the proportion of TP selected when the transmission was successful for each algorithm in the experiment conducted with 30 transmitters. Fixed allocation is omitted in the results because it transmits at the minimum TP for all transmissions.

As shown in Figure 3, our proposed method achieves the highest proportion of selecting the minimum power. The ε-greedy algorithm required a longer exploration period than the proposed method, which led to a higher likelihood of selecting higher transmission power levels. In addition, ADR-Lite showed the lowest rate of selecting the minimum power level, primarily due to its tendency to increase the transmission power immediately after a single transmission failure. Such behavior may contribute to reduced energy efficiency, especially in dense network environments.

5.1.4. Transmission Success Rate

Figure 4 shows the transmission success rate with varying numbers of transmitters for each algorithm.

As shown in Figure 4, the transmission success rate decreases as the number of transmitters increases. This is likely due to increased traffic leading to channel congestion and communication collisions. In addition, our proposed method achieves the highest success rate for every tested number of transmitters. Although fixed allocation assigned channels evenly, its performance was worse than that of the MAB (our proposed and ε-greedy-based) methods. This is because it does not consider the states of other devices in the surroundings. Moreover, the ADR-Lite method cannot avoid channels with low transmission success rates, as it only considers the result (successfully transmitted or not) of the previous transmission.

5.1.5. Energy Efficiency

In this subsection, we evaluate the average energy efficiency of each method based on the formulation in Section 3.2. Specifically, we use the definition of energy efficiency for each selected transmission parameter combination as given in (8) and compute the average energy efficiency of all devices over all transmissions for each method.

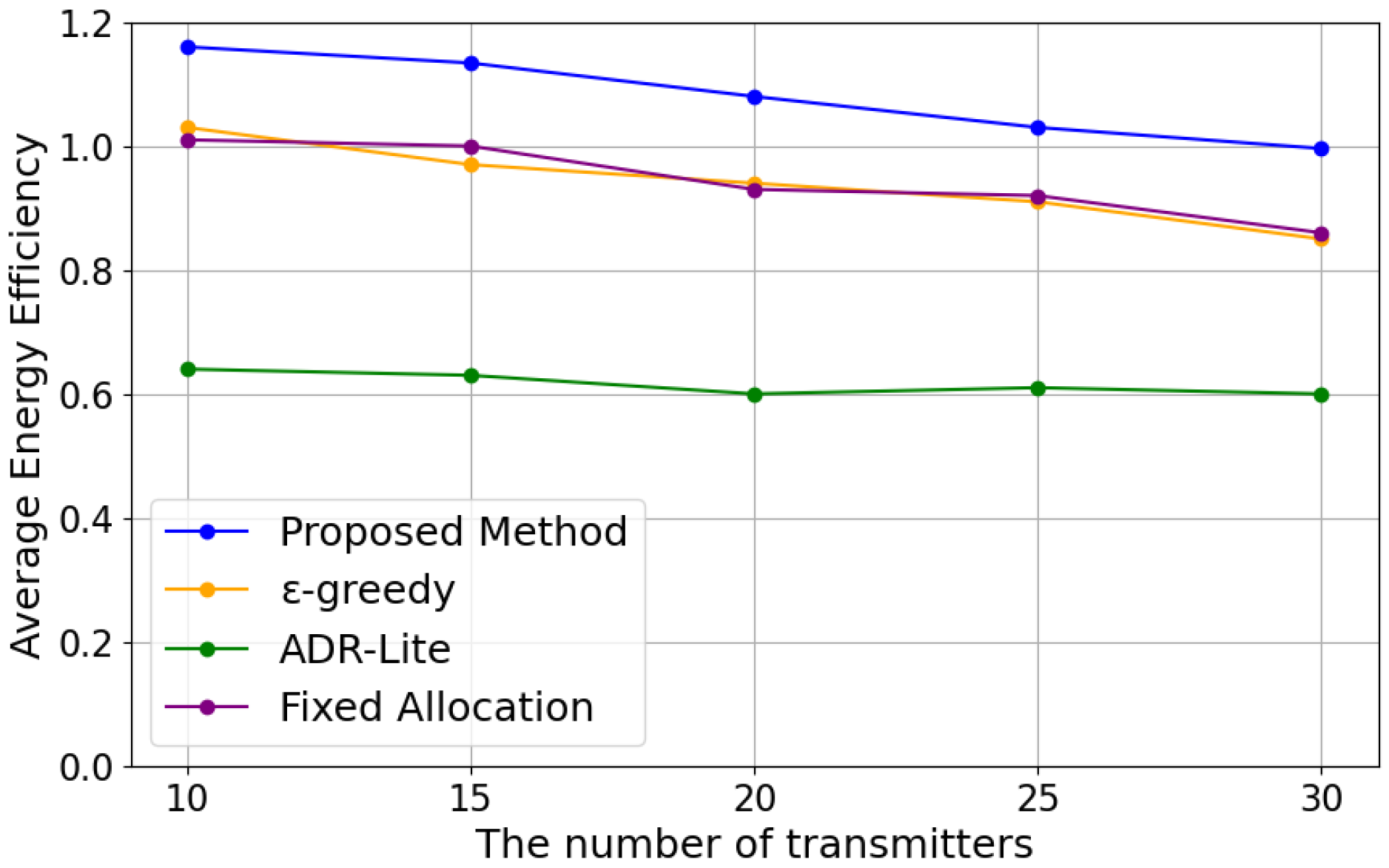

Figure 5 shows the results in energy efficiency with the varying numbers of LoRa EDs for each algorithm.

As shown in Figure 5, energy efficiency decreases as the number of transmitters increases. This is likely due to increased traffic leading to lower transmission success rates and more devices selecting larger TP. In addition, our proposed method achieves the best performance for every tested number of transmitters, followed by the ε-greedy method. This is because our proposed method is better at selecting lower TP. The ADR-Lite method performed poorly in energy efficiency because it selected a larger TP to avoid transmission failures. Despite assigning the minimum TP, fixed allocation performed worse in energy efficiency than our proposed method because it had a lower transmission success rate. Therefore, the trade-off between the level of the selected TP and the transmission success rate is also important for energy efficiency.

5.2. Performance Evaluation of the Proposed Method with and Without BW Selection

In this subsection, we compare the performance of the proposed method (UCB1-tuned) with and without BW selection in terms of transmission success rate and energy efficiency. Each result represents the average value obtained from ten experimental trials.

5.2.1. Experimental Environment and Parameter Settings

This experiment follows the same setup as described in Section 5.1, with the differences summarized in Table 4. In this experiment, BW selection is enabled, allowing each device to dynamically choose between 125 kHz and 250 kHz. Due to this change, the available channels also differ from those in Section 5.1. Specifically, when BW is 125 kHz, the available channels are {920.6, 920.8, 921.0} MHz, whereas when BW is 250 kHz, the available channels are {920.7, 921.1} MHz. Additionally, the transmission interval is set to 12 s, and the payload size ranges from 41 to 50 bytes. The payload size varies slightly between transmissions because each transmitted packet includes information such as the device ID, the selected transmission parameters, the number of transmission attempts, and the counts of successful and failed transmissions. For the comparison method, BW is fixed at 125 kHz, and the available channels are set to {920.6, 920.8, 921.0, 921.2, 921.4} MHz.
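The BW-dependent channel sets above define the action set each device learns over. The sketch below enumerates it and checks which 125 kHz and 250 kHz channels overlap in frequency. It assumes the TP set is unchanged from Section 5.1 and treats the occupied bandwidth as equal to the nominal BW, which is a simplification; the names `ARMS` and `overlaps` are introduced here for illustration.

```python
CHANNELS_MHZ_BY_BW_KHZ = {
    125: [920.6, 920.8, 921.0],
    250: [920.7, 921.1],
}
TP_SET_DBM = [-3, 1, 5, 9, 13]  # assumed unchanged from Section 5.1

# One arm per (BW, center frequency, TP) combination.
ARMS = [
    (bw, freq, tp)
    for bw, freqs in CHANNELS_MHZ_BY_BW_KHZ.items()
    for freq in freqs
    for tp in TP_SET_DBM
]


def overlaps(bw1_khz, f1_mhz, bw2_khz, f2_mhz):
    """True if two channels share spectrum, using nominal BW as the width."""
    separation_khz = abs(f1_mhz - f2_mhz) * 1000.0
    return separation_khz < (bw1_khz + bw2_khz) / 2.0


if __name__ == "__main__":
    print(len(ARMS), "arms")
    for wide in CHANNELS_MHZ_BY_BW_KHZ[250]:
        hit = [n for n in CHANNELS_MHZ_BY_BW_KHZ[125] if overlaps(250, wide, 125, n)]
        print(f"250 kHz @ {wide} MHz overlaps 125 kHz channels at {hit}")
```

Under these assumptions, each 250 kHz channel shares spectrum with at least one 125 kHz channel, so choosing the wider BW shortens the transmission but does not remove it from contention with narrowband transmissions.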

5.2.2. Transmission Success Rate

Figure 6 shows the transmission success rate under different numbers of transmitters. The results indicate that the transmission success rate tends to decrease as the number of devices increases. In the proposed method, the success rate was 90.53% in an environment with 10 devices, decreasing to 80.87% with 20 devices and 77.79% with 30 devices. On the other hand, in the comparison method, the success rate was 90.99% with 10 devices, which was almost equivalent to that of the proposed method, but it decreased to 79.51% with 20 devices and 72.94% with 30 devices, showing a more significant drop as the number of devices increased. In the environment with 30 devices in particular, the proposed method outperformed the comparison method by 4.85 percentage points, confirming its advantage in scenarios where a large number of devices coexist.
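The gap between the two methods can be expressed either in percentage points or as a relative improvement, and the two differ. The short sketch below computes both from the success rates reported above; the dictionary and function names are introduced here for illustration.

```python
# Success rates (%) reported in the text, keyed by number of devices.
WITH_BW_SELECTION = {10: 90.53, 20: 80.87, 30: 77.79}
WITHOUT_BW_SELECTION = {10: 90.99, 20: 79.51, 30: 72.94}


def gap_percentage_points(n_devices):
    """Absolute difference between the two success rates."""
    return WITH_BW_SELECTION[n_devices] - WITHOUT_BW_SELECTION[n_devices]


def gap_relative_percent(n_devices):
    """Improvement relative to the method without BW selection."""
    base = WITHOUT_BW_SELECTION[n_devices]
    return 100.0 * (WITH_BW_SELECTION[n_devices] / base - 1.0)


if __name__ == "__main__":
    for n in (10, 20, 30):
        print(f"{n} devices: {gap_percentage_points(n):+.2f} points, "
              f"{gap_relative_percent(n):+.2f}% relative")
```

At 30 devices the difference is 4.85 percentage points, which corresponds to a relative improvement of about 6.65%.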

The primary factor contributing to this difference in success rates is considered to be the BW selection. In the comparison method, all transmission channels were fixed at BW 125 kHz, limiting the number of available channels to five. In contrast, the proposed method included both BW 125 kHz and BW 250 kHz as selectable options, with additional channels available for BW 250 kHz. A wider BW reduces transmission time for the same amount of data, potentially lowering the risk of transmission overlap and interference. This mechanism is particularly beneficial in environments where multiple devices transmit simultaneously, as shorter transmission durations decrease the probability of interference with other devices. As a result, the proposed method effectively mitigates the decline in success rate even in high-density device environments. These findings demonstrate that the proposed method enables adaptive communication control that responds to environmental changes, particularly maintaining a higher success rate as the number of devices increases. Moving forward, further evaluations under different environmental conditions and the optimization of BW selection will be essential to achieving even higher transmission success rates.

5.2.3. Energy Efficiency

Figure 7 shows the results in energy efficiency with the varying numbers of LoRa EDs for each algorithm.

As shown in Figure 7, energy efficiency tends to decrease in both methods as the number of devices increases. In the proposed method, the energy efficiency was 0.97 at 10 devices, decreasing to 0.89 at 20 devices and 0.84 at 30 devices. In the comparison method, the energy efficiency was also 0.97 at 10 devices, equivalent to the proposed method. However, as the number of devices increased, it dropped more sharply to 0.85 at 20 devices and 0.78 at 30 devices. Notably, at 30 devices, the proposed method with BW selection maintained approximately 7.69% higher energy efficiency (in relative terms) than the comparison method, indicating that it sustains efficiency better under load.

One of the key factors contributing to this difference is the same as that of the transmission success rate. That is, BW is fixed at 125 kHz for the comparison method, resulting in longer transmission times, which may lead to increased energy consumption. In contrast, the proposed method allows the selection of BW 250 kHz, which shortens the transmission time for the same amount of data. In LoRa communication, reducing the transmission time (ToA: Time on Air) directly contributes to lower energy consumption. Therefore, the use of BW 250 kHz is considered to have played a crucial role in improving energy efficiency. Based on these considerations, the proposed method demonstrates its effectiveness in mitigating the decline in energy efficiency, especially in environments with a large number of devices. The selection of BW 250 kHz contributes to energy efficiency improvement by reducing transmission time, even while introducing a potential risk of interference. For future research, it will be important to conduct a more detailed analysis of the impact of interference and optimize the BW selection strategy to establish a more efficient communication method.
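The effect of BW on time on air can be checked with the time-on-air formula published in the Semtech SX127x datasheets. The sketch below evaluates it for SF 7 and a 45-byte payload (within the 41 to 50 byte range used here). The coding rate of 4/5, the 8-symbol preamble, the explicit header, and the enabled CRC are assumptions, since these settings are not stated in this section.

```python
import math


def lora_time_on_air(payload_bytes, sf, bw_hz, coding_rate=1,
                     preamble_symbols=8, explicit_header=True,
                     crc=True, low_data_rate_opt=False):
    """Time on air in seconds (Semtech SX127x datasheet formula).

    coding_rate: 1 for 4/5 up to 4 for 4/8.
    """
    t_sym = (2 ** sf) / bw_hz                  # symbol duration
    ih = 0 if explicit_header else 1           # implicit-header flag
    de = 1 if low_data_rate_opt else 0         # low data rate optimization
    numerator = 8 * payload_bytes - 4 * sf + 28 + 16 * int(crc) - 20 * ih
    payload_symbols = 8 + max(
        math.ceil(numerator / (4 * (sf - 2 * de))) * (coding_rate + 4), 0
    )
    return (preamble_symbols + 4.25 + payload_symbols) * t_sym


if __name__ == "__main__":
    for bw in (125_000, 250_000):
        toa_ms = 1000.0 * lora_time_on_air(45, sf=7, bw_hz=bw)
        print(f"BW {bw // 1000} kHz: ToA = {toa_ms:.3f} ms")
```

Because the symbol duration is 2^SF / BW and the symbol count does not depend on BW, doubling the BW from 125 kHz to 250 kHz halves the time on air for the same payload and SF.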

5.3. Performance Comparison of the Proposed Method with Other Algorithms

In this subsection, we evaluate the transmission success rate and the energy efficiency of the proposed method and compare it with several other algorithms. Each result is the average of ten experiments.

5.3.1. Experimental Environment and Comparison Methods

This experiment follows the same setup as described in Section 5.2. Therefore, each device dynamically selects a BW of either 125 kHz or 250 kHz, with the available channels being {920.6, 920.8, 921.0} MHz for 125 kHz and {920.7, 921.1} MHz for 250 kHz. The comparison methods in this experiment are the same as those in Section 5.1, but some settings differ. In Fixed Allocation, the BW is fixed at 250 kHz, and the available channels, 920.7 and 921.1 MHz, are evenly assigned. The ADR-Lite algorithm remains the same as in Section 5.1, but the parameter set differs, using {CH1, 250 kHz, −3 dBm}, {CH2, 250 kHz, −3 dBm}, {CH1, 250 kHz, 1 dBm}, {CH2, 250 kHz, 1 dBm}, {CH1, 250 kHz, 5 dBm}, {CH2, 250 kHz, 5 dBm}, …, {CH2, 125 kHz, 9 dBm}, {CH3, 125 kHz, 9 dBm}, {CH1, 125 kHz, 13 dBm}, {CH2, 125 kHz, 13 dBm}, {CH3, 125 kHz, 13 dBm}.

5.3.2. Transmission Success Rate

Figure 8 shows the transmission success rate with varying numbers of transmitters for each algorithm. As shown in Figure 8, the success rate of each method tends to decrease overall as the number of transmitting devices increases. When the number of devices was 10, all methods maintained a success rate of over 80%. However, at 20 devices, a decrease of approximately 5–10% was observed, and at 30 devices, the success rate declined further. Notably, the ε-greedy method showed a significant drop in success rate as the number of devices increased, decreasing from 83.20% at 10 devices to 70.75% at 30 devices. While the fixed allocation and ADR-Lite methods exhibited relatively stable results against the increase in the number of devices, they still could not maintain as high a success rate as the proposed method.

The best-performing method was the proposed method. It achieved a success rate of 90.53% at 10 devices, 80.87% at 20 devices, and 77.79% at 30 devices, recording the highest success rate across all device numbers. These results indicate that the proposed method maintains relatively stable performance even as the number of devices increases. Among the other methods, the ε-greedy method in particular tends to experience a significant drop in success rate because it performs exploratory parameter selection, and as the environment becomes more complex, it becomes increasingly difficult to find optimal transmission parameters. The fixed allocation method struggled to maintain success rates as the number of transmissions increased since it could not adapt to channel competition or environmental changes. ADR-Lite, on the other hand, adjusts parameters based on whether the previous transmission was successful, offering short-term adaptability. However, because it does not consider long-term channel usage conditions, it tends to be biased toward specific channels or transmission conditions, and its overall success rate stagnates.

Figure 9 shows the change in the success rate as the number of transmissions increases when the number of transmitters is fixed at 30. From Figure 9, it can be seen that the proposed method gradually outperforms the other methods as the number of transmissions increases. In the early stage, where the number of transmissions is small and the parameter selection is mainly exploratory, the success rate of the proposed method is lower than that of ADR-Lite and the fixed allocation method. However, as the number of transmissions increases, the proposed method is able to utilize the learning results obtained during the exploration phase to select more appropriate transmission parameters, resulting in a higher success rate compared to the other methods. These results indicate that the proposed method can effectively improve communication performance by leveraging accumulated knowledge over time, even in environments with high channel competition.

The reason why the proposed method outperformed the other methods can be attributed to its ability to adaptively select multiple parameters, including channel, TP, and BW. In particular, while ADR-Lite bases its adjustments only on previous success or failure, the proposed method considers a broader range of information, making it more adaptable to environmental changes. Even when channel competition intensified due to an increase in the number of devices, the proposed method exhibited a more gradual decline in success rate compared to other methods, demonstrating its effectiveness in maintaining stable communication.

5.3.3. Energy Efficiency

Figure 10 shows the results in energy efficiency with the varying numbers of LoRa EDs for each algorithm.

As shown in Figure 10, energy efficiency tends to decrease as the number of transmitters increases. In an environment with 10 devices, all methods recorded an energy efficiency of 0.8 or higher. However, at 20 devices, there was an overall decrease of approximately 0.05, and at 30 devices, the efficiency declined even further. Notably, the ε-greedy method exhibited a significant decrease in energy efficiency as the number of devices increased, dropping from 0.89 at 10 devices to 0.76 at 30 devices. The primary reason for this decline is that the ε-greedy method frequently performs unnecessary transmissions during its trial-and-error process, leading to excessive energy consumption. On the other hand, the fixed allocation method had the lowest energy efficiency, and this gap widened as the number of devices increased. This is because the fixed allocation method does not adapt to environmental changes and continues operating under the same transmission conditions. As the number of devices increases, transmission competition becomes more intense, leading to a higher likelihood of transmission failure. Consequently, more energy is wasted, significantly reducing overall efficiency. In contrast, the proposed method maintained the highest energy efficiency, achieving 0.97 with 10 devices, 0.89 with 20 devices, and 0.84 with 30 devices. These results demonstrate that the proposed method effectively suppresses the increase in energy consumption while maintaining stable efficiency, even as the number of devices grows. Even ADR-Lite, which exhibited relatively high energy efficiency, declined from 0.95 to 0.83, showing that sustaining efficiency in a large-scale device environment becomes increasingly challenging.

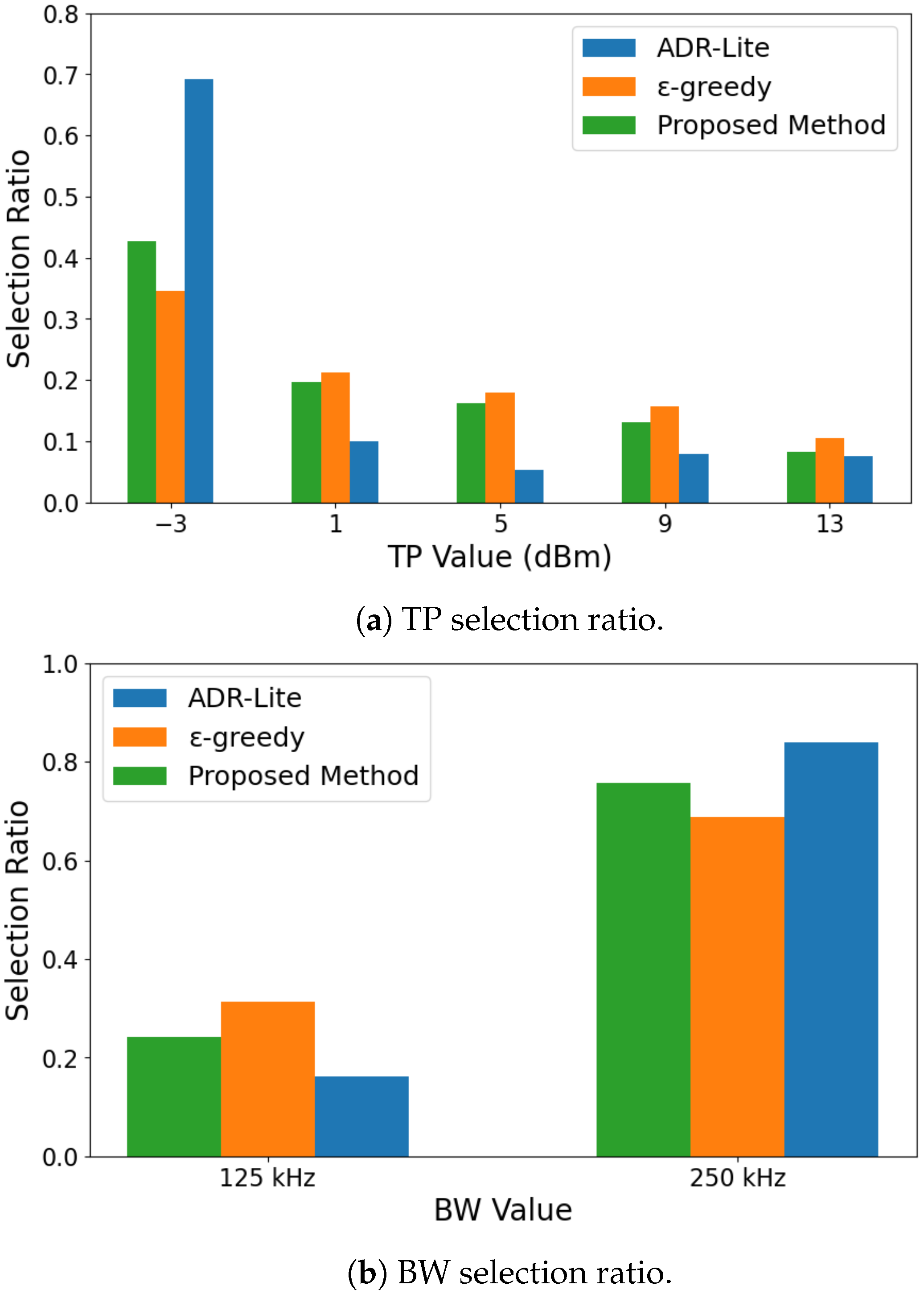

The key factor contributing to these results is the selection strategy for TP and BW. Figure 11a,b illustrate the proportion of TP and BW selected for successful transmissions in an experiment with 30 transmitters. ADR-Lite had the highest proportion of selecting the minimum TP and the widest BW, followed by the proposed method. However, the proposed method achieved the highest energy efficiency, highlighting the significant impact of balancing TP and BW selection on energy consumption. Specifically, a wider BW shortens transmission time, potentially reducing energy consumption. However, a wider BW is also more susceptible to interference, increasing packet loss. Additionally, lowering TP can reduce energy consumption, but it may also degrade communication quality, making transmission failures more likely. Since there is a trade-off in selecting TP and BW, the proposed method balances these factors, leading to improved energy efficiency.
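The effect of BW and TP on per-packet energy can be made concrete with a short calculation. The sketch below uses the time-on-air formula from the Semtech SX127x datasheet and approximates energy as radiated power multiplied by time on air. The 20-byte payload, the coding rate, the preamble length, and the function names are illustrative assumptions rather than values taken from the experiments, and the energy figure ignores amplifier efficiency and the radio's baseline current draw.

```python
import math


def lora_time_on_air(payload_bytes, sf, bw_hz, coding_rate=1,
                     preamble_symbols=8, explicit_header=True, crc=True):
    """Approximate LoRa time on air in seconds (Semtech SX127x formula).

    coding_rate is 1..4, corresponding to 4/5..4/8.
    """
    t_sym = (2 ** sf) / bw_hz
    # Low data rate optimization is required when a symbol exceeds 16 ms.
    de = 1 if t_sym > 0.016 else 0
    ih = 0 if explicit_header else 1
    numerator = 8 * payload_bytes - 4 * sf + 28 + 16 * int(crc) - 20 * ih
    denominator = 4 * (sf - 2 * de)
    payload_symbols = 8 + max(
        math.ceil(numerator / denominator) * (coding_rate + 4), 0)
    t_preamble = (preamble_symbols + 4.25) * t_sym
    return t_preamble + payload_symbols * t_sym


def radiated_energy_mj(tp_dbm, toa_s):
    """Radiated energy in millijoules: power in mW times duration in s.

    Assumption: only radiated power is counted, not total device draw.
    """
    return (10 ** (tp_dbm / 10)) * toa_s


if __name__ == "__main__":
    # Hypothetical 20-byte payload at SF 7 for several BW/TP combinations.
    for bw_khz in (125, 250, 500):
        toa = lora_time_on_air(20, 7, bw_khz * 1000)
        for tp_dbm in (2, 8, 14):
            energy = radiated_energy_mj(tp_dbm, toa)
            print(f"BW={bw_khz} kHz TP={tp_dbm} dBm "
                  f"ToA={toa * 1000:.2f} ms E={energy:.3f} mJ")
```

With SF held constant, doubling BW halves the time on air, and each 3 dB reduction in TP roughly halves the radiated power. The calculation covers only the cost of one attempt; a failed attempt spends that energy without delivering a packet, which is the other side of the trade-off.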

The reason why the proposed method outperformed other methods in terms of energy efficiency is that it maintains a high transmission success rate, thereby reducing packet loss and minimizing energy consumption. In particular, ADR-Lite adjusts parameters solely based on past transmission results, whereas the proposed method considers a broader range of information, allowing it to select optimal transmission parameters more effectively. As a result, even when channel competition intensifies due to an increasing number of devices, the proposed method can sustain a high success rate while minimizing unnecessary energy consumption. These findings indicate that the proposed method is the most stable in terms of energy efficiency and is well-suited for high-density environments.

6. Discussion

The proposed method demonstrated its effectiveness in terms of transmission success rate and energy efficiency in an experimental environment with 30 LoRa end devices. However, to apply this method to larger and denser network environments, several technical challenges must be addressed. For instance, as network density increases, the existing parameter space, consisting of combinations of channel, SF, BW, and TP, may become insufficient to avoid transmission collisions and congestion. One possible approach is to expand the parameter space by increasing the number of available channels, adding more selectable SF values, and increasing the number of TP levels. However, expanding the set of selectable parameters inevitably enlarges the action space of the reinforcement learning algorithm, which leads to longer exploration times and higher memory requirements for storing the learning history of each parameter set.
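The growth of the action space is multiplicative in the number of options per parameter, which a few lines of code make explicit. In the sketch below, every parameter count and the 12-byte per-arm storage figure are hypothetical values chosen for illustration; they are not the configuration used in the experiments.

```python
from itertools import product


def build_arms(channels, sfs, bws_khz, tps_dbm):
    """Enumerate every (channel, SF, BW, TP) combination as one arm."""
    return list(product(channels, sfs, bws_khz, tps_dbm))


def history_bytes(n_arms, bytes_per_arm=12):
    """Memory for per-arm statistics.

    Assumption: 12 bytes per arm, e.g. a 4-byte selection count plus
    two 4-byte floats (reward sum and sum of squared rewards).
    """
    return n_arms * bytes_per_arm


if __name__ == "__main__":
    # Hypothetical baseline: 5 channels, 1 SF, 2 BWs, 3 TP levels.
    small = build_arms(range(5), [7], [125, 250], [2, 8, 14])
    # Hypothetical expansion: 8 channels, 3 SFs, 3 BWs, 4 TP levels.
    large = build_arms(range(8), [7, 8, 9], [125, 250, 500], [2, 6, 10, 14])
    for name, arms in (("baseline", small), ("expanded", large)):
        print(f"{name}: {len(arms)} arms, {history_bytes(len(arms))} bytes")
```

Under these assumed counts the arm set grows from 30 to 288, and the storage for learning histories grows by the same factor, which is significant on a microcontroller with only a few kilobytes of SRAM.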

In addition, the proposed method is designed to minimize the computational load on each device. At each transmission attempt, the UCB1-tuned index is calculated for all parameter sets (arms), and the set with the highest score is selected. The computational complexity per loop is linear in the number of arms K, i.e., $O(K)$, and the calculations consist primarily of basic arithmetic and logarithmic operations, making them computationally lightweight. Nevertheless, increasing the number of arms significantly increases the required memory and the time needed for convergence. In our implementation on an Arduino Pro Mini, approximately 58% of the available Flash memory and 67% of the SRAM were utilized, indicating that even with the current parameter space size, memory constraints are already a limiting factor. Therefore, appropriate control of the action space becomes essential when handling a larger number of parameters.
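The per-transmission selection step can be sketched as follows. The index is the standard UCB1-tuned formula from the bandit literature; the class and method names, and the assumption that rewards lie in [0, 1], are illustrative and do not reproduce the firmware running on the devices.

```python
import math


class UCB1Tuned:
    """Minimal UCB1-tuned bandit storing three statistics per arm."""

    def __init__(self, n_arms):
        self.counts = [0] * n_arms      # times each arm was selected
        self.sums = [0.0] * n_arms      # sum of rewards per arm
        self.sq_sums = [0.0] * n_arms   # sum of squared rewards per arm
        self.total = 0                  # total number of selections

    def select(self):
        # Try every arm once before the index is defined.
        for arm, n in enumerate(self.counts):
            if n == 0:
                return arm
        log_t = math.log(self.total)
        best_arm, best_index = 0, float("-inf")
        for arm, n in enumerate(self.counts):  # single O(K) pass
            mean = self.sums[arm] / n
            # Upper bound on the reward variance of this arm.
            variance_bound = max(
                0.0,
                self.sq_sums[arm] / n - mean * mean
                + math.sqrt(2.0 * log_t / n))
            index = mean + math.sqrt(
                (log_t / n) * min(0.25, variance_bound))
            if index > best_index:
                best_arm, best_index = arm, index
        return best_arm

    def update(self, arm, reward):
        """Record the reward (assumed to be in [0, 1]) for one attempt."""
        self.counts[arm] += 1
        self.sums[arm] += reward
        self.sq_sums[arm] += reward * reward
        self.total += 1
```

Each call to `select` makes one pass over the arms using only additions, multiplications, divisions, one logarithm, and square roots, so its cost is linear in K. The three per-arm arrays are the learning history whose size grows with the action space.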

Moreover, while this study fixed the SF at 7, the method can be extended to support multiple SF values by including SF as a selectable parameter. However, such an extension would further increase the size of the action space, resulting in greater exploration burden and memory usage. Furthermore, scaling the method to hundreds of end devices may introduce additional challenges, such as a higher probability of transmission collisions and limitations in downlink capacity for ACK responses.

To address these challenges, our future work will aim to improve exploration efficiency and memory usage by incorporating action space pruning and by adopting learning architectures that structurally decompose or hierarchically organize the parameter space. We will also consider integrating offline learning in the initial phase to preselect promising parameter combinations, thereby reducing online exploration costs. Additionally, we will explore partitioning the parameter space among devices to avoid redundant learning and reduce contention, thereby distributing memory usage more efficiently. Furthermore, collaborative learning will be investigated to further reduce duplicated exploration and improve overall learning efficiency. Through these approaches, we aim to achieve a balance between scalability and implementation feasibility, enabling practical and cost-effective deployment of the proposed method in dense LoRa network environments.

7. Conclusions

This paper proposed an autonomous decentralized transmission parameter selection method using reinforcement learning to improve energy efficiency in LoRa networks. The proposed method enables each device to learn and select an appropriate channel, TP, and BW by utilizing ACK information and TP, aiming to balance transmission success rate and energy efficiency. The method was implemented on actual LoRa devices and evaluated in a high-density LoRa network. The experimental results demonstrated that the proposed method outperformed conventional methods in both transmission success rate and energy efficiency. Notably, the method maintained a high success rate while reducing power consumption by utilizing lower TP and wider BW. These results suggest that the proposed method is effective even for resource-constrained IoT devices.

In future work, optimizing other transmission parameters, including dynamic selection of the SF, will be necessary to further enhance both energy efficiency and communication quality. Specifically, dynamically adjusting the SF based on device location and surrounding communication conditions may lead to further improvements in energy efficiency. Additionally, extending the applicability of the proposed method to long-range communication environments and mobility scenarios requires further algorithmic improvements. Although LoRa supports long-range communication, signal attenuation and interference become more significant in distant areas, making adaptive parameter control that can maintain high transmission success rates and energy efficiency even more critical. Furthermore, mobility support is an essential future consideration. The proposed method is primarily designed for static IoT devices, but for mobile applications such as logistics tracking and smart city monitoring, the wireless environment changes continuously over time, requiring real-time adaptability. To achieve this, it is crucial to optimize the algorithm to improve its learning speed while ensuring feasibility on resource-limited edge devices. Building upon the findings of this study, we will advance research towards constructing a more energy-efficient LoRa network that can adapt to long-range communication environments and mobility scenarios.