Abstract

Rolling bearing failure poses significant risks to mechanical system integrity, potentially leading to catastrophic safety incidents. Current challenges in performance degradation assessment include complex structural characteristics, suboptimal feature selection, and inadequate health index characterization. This study proposes a novel attention mechanism-based feature fusion method for accurate bearing performance assessment. First, we construct a multidimensional feature set encompassing time domain, frequency domain, and time–frequency domain characteristics. A two-stage sensitive feature selection strategy is developed, combining intersection-based primary selection with clustering-based re-selection to eliminate redundancy while preserving correlation, monotonicity, and robustness. Subsequently, an attention mechanism-driven fusion model adaptively weights selected features to generate high-performance health indicators. Experimental validation demonstrates the proposed method’s superiority in degradation characterization through two case studies. The intersection clustering strategy achieves 32% redundancy reduction compared to conventional methods, while the attention-based fusion improves health indicator consistency by 18.7% over principal component analysis. This approach provides an effective solution for equipment health monitoring and early fault warning in industrial applications.

1. Introduction

With advances in science, technology, and manufacturing, an increasing number of complex machines play an indispensable role in industrial production and daily life. During operation, mechanical failures of varying severity inevitably occur. If equipment continues to run without timely fault detection, serious accidents may result, often causing incalculable human and property losses [1]. To enable predictive maintenance and autonomous protection of equipment, scholars have proposed prognostics and health management (PHM) technology [2]. PHM first uses sensors to acquire equipment operation data. It then analyzes these data to detect possible damage and faults promptly, and it builds a condition assessment model that judges the equipment state from the monitoring data [3]. As one of the most important parts of a mechanical transmission system, bearings have a high probability of failure, and their failure seriously affects the operation of the whole machine. Research on sensitive feature extraction for rolling bearing degradation, health index construction, state evaluation modeling, and online condition monitoring is therefore of great significance for the safe operation of mechanical equipment [4].

However, a single feature contains limited information about the bearing condition, so a single feature used as a health indicator cannot fully reflect the bearing degradation state. With the development of signal analysis techniques, a large number of features have been constructed to describe the degradation state of equipment, which allows the condition to be characterized more completely from multiple aspects. Nevertheless, not all constructed features are suitable for bearing remaining life assessment, and too many features cause data redundancy and increase the computational effort. It is therefore valuable to select, from the high-dimensional feature set, a low-dimensional subset of features that are sensitive to the bearing degradation state, which reduces model complexity and computation time and improves remaining life prediction accuracy [5,6]. Many scholars have investigated sensitive feature selection for rolling bearing condition characterization. Zhang et al. [7] proposed correlation, monotonicity, and robustness evaluation indexes to select features suitable for remaining life prediction, and chose the best features by a weighted sum of the three indexes. Yang et al. [8] used the Fisher ratio to select the key sensitive features from many candidates and discarded the noisy ones. The Fisher ratio measures the quality of a feature by the ratio of inter-group scatter to intra-group spread, and a larger ratio means a better feature. Geramifard et al. [9] first selected 8 key features from 16 initial features using a genetic algorithm, and then selected the final 3 features from those 8 using a correlation measure.

Fusing multidimensional sensitive features into health indicators that are consistent with the degradation state of the equipment is also important, because the constructed health indicators have an essential impact on the accuracy of remaining life prediction [10]. Principal component analysis (PCA) is one of the effective ways of feature reduction and fusion [11]. Cheng et al. [12] argued that the linear approach of PCA is limited because it ignores the nonlinear characteristics of condition features, and obtained health indicators by fusing multidimensional raw features with a locally linear embedding technique. Rai et al. [13] mapped multidimensional features to bearing performance degradation indicators with a self-organizing map, which is an unsupervised learning model. Shang et al. [14] used a convolutional neural network (CNN) to learn spatial features from bearing condition monitoring data, and then employed a stack of bidirectional gated recurrent units (BGRUs) to extract the temporal degradation trend for more accurate remaining useful life (RUL) prediction.

In summary, most current methods for extracting sensitive features of rolling bearing degradation states are based on multi-feature extraction, and the fused features describe the running state of the bearing more comprehensively. However, the review above shows that problems remain in the index construction process, such as feature insensitivity, incomplete feature evaluation, and unsatisfactory feature fusion. Meanwhile, a rolling bearing passes through normal, fault, and failure stages over its life. Determining the starting point of failure, and therefore the bearing degradation state, is important for bearing health management and remaining life prediction. Cheng et al. [15] proposed an unsupervised kernel spectral clustering model that maps the input data to a high-dimensional feature space to sense bearing degradation characteristics adaptively, and achieved real-time online abnormality identification of rolling bearings. Xia et al. [16] proposed a two-stage remaining life prediction method for rolling bearings using deep learning networks. First, the acquired bearing signals were divided into multiple degradation stages by a deep neural network based on a denoising autoencoder, and then a deep back propagation (BP) neural network was established to predict the remaining life in each degradation stage. Zhang et al. [17] first extracted the features highly correlated with the root mean square value, then smoothed the features with an improved Weibull distribution, and finally used the smoothed features as the input of a naive Bayes model to classify the bearing states. Liu et al. [18] proposed a degradation state classification method based on EWMA control charts to segment the bearing degradation process into multiple stages and adaptively switch the stochastic degradation model. Zhao et al. [19] divided the whole life cycle of rolling bearings into three states: the normal state in the initial stable stage, the fault state, which exhibits abnormal behavior, and the quasi-fault state between the two. Grayscale images obtained from wavelet analysis of the vibration signal were learned by a deep convolutional neural network, and the trained model was used to partition the whole life cycle of the bearing into states. Cui et al. [20] established an effective dynamic model of the transient vibration behavior of rolling bearings and obtained a large amount of simulated performance degradation data. A new similarity-based performance degradation dictionary was constructed for RUL prediction, and the degradation process was divided into stable, defect-emerging, defect-expanding, and damage stages. Zhang et al. [21] fed the proposed waveform entropy index and other conventional features into a long short-term memory neural network to classify the bearing fault states, and used the particle swarm optimization algorithm to optimize the hyperparameters of the network.

Many scholars have carried out research on rolling bearing performance degradation assessment and on attention-based feature fusion. The limited information in a single vibration feature and the redundancy in high-dimensional feature sets can be addressed by adaptive sensitive feature selection methods that identify degradation-sensitive characteristics [22]. Scholars have explored fusion strategies for heterogeneous features, such as time domain and frequency domain indicators. Attention mechanisms are widely adopted to assign adaptive weights to features, enabling models to prioritize critical diagnostic information [23,24,25,26]. For instance, vibration signals under variable-speed conditions often exhibit masked fault signatures. The multi-scale attention feature fusion network (MAFFN), which integrates multi-scale decomposition layers and scale-dependent attention modules (SDAMs), demonstrates superior capability in extracting and synthesizing multi-scale features [27]. Recurrent neural architectures, including long short-term memory (LSTM) and gated recurrent units (GRUs), are frequently used to process sequential bearing data. Temporal attention mechanisms enhance these models by dynamically weighting the contribution of each time step. A notable example is the residual multi-head attention GRU network, which incorporates automated extraction of feature combinations for RUL prediction [28,29]. To mitigate redundancy and false fluctuation in multi-feature fusion, adaptive feature fusion with perturbation correction has been proposed, significantly improving RUL prediction accuracy [29]. Additionally, the impact of the number of heads in multi-head self-attention mechanisms (MSMs) on model interpretability and performance has been systematically investigated. The GRUMSM framework elucidates how varying the number of attention heads influences feature encoding and RUL prediction outcomes [30]. Furthermore, time–frequency analysis integrated with TransFusion networks demonstrates enhanced fault diagnosis by fusing multi-scale temporal and spectral representations [31,32,33,34,35,36,37].

In conclusion, scholars have conducted extensive research on rolling bearing degradation state classification and achieved a series of results. However, problems such as weak early fault information can easily lead to inaccurate fault detection, so further research is needed to determine the degradation state of rolling bearings through more accurate fault detection. In this study, the initial feature set is constructed by extracting multidimensional features from the time domain, frequency domain, and time–frequency domain. Then, considering both the sensitivity of the features to the bearing degradation state and the redundancy among features, a feature selection method based on intersection primary selection and clustering re-selection is proposed to obtain a sensitive feature subset with low redundancy from the initial feature set. Finally, the mapping between the multidimensional sensitive features and the health indicator is established with an attention mechanism model, so that high-performance health indicators that characterize the degradation state of rolling bearings are constructed. The main contributions of this study are as follows:

- (1)

- To address feature redundancy and sensitivity requirements in rolling bearing performance assessment, we propose an intersection clustering-based feature selection strategy that combines cross-domain statistical analysis with multi-criteria evaluation. This approach eliminates redundant features through intersection-based primary selection and clustering-based re-selection, without requiring manually assigned weights or normalization of the evaluation indexes.

- (2)

- We establish an attention mechanism-driven feature fusion framework that captures nonlinear relationships and temporal dependencies in degradation data. By adaptively weighting multidimensional sensitive features through self-attention computation, this model generates high-performance health indicators with enhanced degradation characterization capabilities for bearing condition assessment.

- (3)

- The attention feature fusion model proposed in this study automatically processes nonlinear feature relationships without manual weighting, achieving accurate feature fusion and improving the accuracy of performance degradation assessment of rolling bearings.

The rest of this study is organized according to the following sections. Section 2 gives the process of intersection clustering selection for sensitive feature extraction. The principle of proposed attention mechanism-based feature fusion is introduced for performance degradation assessment in Section 3. In Section 4, two cases of datasets are applied to verify the effectiveness, and Section 5 provides the study’s conclusions.

2. Intersection Clustering Selection for Sensitive Feature Extraction

Vibration signal analysis remains one of the most effective ways to evaluate bearing condition and diagnose faults, mainly because vibration signals can be collected online in real time and contain rich information. To support remaining life prediction of rolling bearings, this section investigates how to extract sensitive information from the time domain, frequency domain, and time–frequency domain so that the degradation state of bearings is reflected comprehensively and accurately.

2.1. Multivariate Statistical Feature Construction

To characterize the degradation state of rolling bearings comprehensively, a 27-dimensional feature set was constructed in this study, which includes 13 common time domain features, 6 frequency domain features, and 8 time–frequency domain features [5,6]. The time–frequency domain features are energy distribution features constructed from the 8 coefficient sets obtained by a three-layer wavelet packet decomposition. All 27 features are shown in Table 1.

Table 1.

Feature indicators.

2.2. Multiple Sensitive Feature Selection with Evaluation Index

Common feature selection methods include the wrapper method, embedded method, and filter method [24]. The wrapper method takes different feature subsets, evaluates each subset with the help of the model, and keeps the subset with the best effect; examples include the forward search, backward selection, and bidirectional search algorithms. The embedded method selects features using the model’s own feature selection function, as in L1-regularized models and random forest feature selection.

The filter method evaluates features based on a filtering index and keeps the features with high scores. To avoid coupling between feature selection and the downstream model and to improve generality, this study uses the filter method, which does not depend on a specific model. Bearing degradation is an irreversible process. Features that reflect the degree of bearing degradation and can be used to predict the remaining life of rolling bearings need to satisfy the following properties [25]:

- (1)

- Correlation: bearing performance degrades as operating time increases, so the feature should be correlated with time.

- (2)

- Monotonicity: the degradation of a bearing toward failure is an irreversible, one-way process, so the feature should change monotonically.

- (3)

- Robustness: the bearing vibration signal is inevitably mixed with noise, so the feature needs a certain anti-interference ability.

Let the feature sequence be F = [f1, f2, …, fL] and the time sequence be T = [t1, t2, …, tL], where fi is the feature value at time ti, i = 1, 2, …, L, and L is the length of the sequence. si is the smooth term of the feature at moment ti, and ri is the random term of the feature at moment ti. Based on the three properties above, this study uses the correlation index, monotonicity index, and robustness index to evaluate the quality of the extracted features. The larger the value of an index, the better the feature performs in that respect, and features with high index values are retained.
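The three indexes can be sketched in a few lines of Python. The formulas below follow one commonly used formulation of correlation, monotonicity, and robustness for degradation features; they are an assumption here, since the section does not reproduce Equations (1)–(3). The centered moving average used to obtain the smooth term si, the window length, and all function names are illustrative choices.

```python
import math

def moving_average(values, window=5):
    """Centered moving average, used here as the smooth term s_i (assumed smoother)."""
    half = window // 2
    smooth = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        smooth.append(sum(values[lo:hi]) / (hi - lo))
    return smooth

def correlation_index(s, t):
    """Absolute linear correlation between the (smoothed) feature and time."""
    n = len(s)
    ss, st = sum(s), sum(t)
    num = abs(n * sum(a * b for a, b in zip(s, t)) - ss * st)
    den = math.sqrt((n * sum(a * a for a in s) - ss ** 2) *
                    (n * sum(b * b for b in t) - st ** 2))
    return num / den if den > 0 else 0.0

def monotonicity_index(s):
    """|#positive steps - #negative steps| / (L - 1) on the smooth term."""
    diffs = [b - a for a, b in zip(s, s[1:])]
    pos = sum(d > 0 for d in diffs)
    neg = sum(d < 0 for d in diffs)
    return abs(pos - neg) / (len(s) - 1)

def robustness_index(f, s):
    """Mean of exp(-|r_i / f_i|), where r_i = f_i - s_i is the random term."""
    terms = [math.exp(-abs((fi - si) / fi)) for fi, si in zip(f, s) if fi != 0]
    return sum(terms) / len(terms) if terms else 0.0
```

All three indexes lie in [0, 1], which is what makes ranking features by each index straightforward.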

The screened feature set also needs to be re-selected to remove redundant features, so that the correlation between the retained features remains low. In this study, the Pearson coefficient is chosen to measure the closeness of the linear relationship between features. Consider a feature vector F1 with expectation E(F1) and variance D(F1), and a feature vector F2 with expectation E(F2) and variance D(F2). The Pearson coefficient of F1 and F2 is calculated as follows:

$$\rho_{F_1,F_2} = \frac{\mathrm{Cov}(F_1, F_2)}{\sqrt{D(F_1)}\,\sqrt{D(F_2)}}$$

Cov(F1, F2) is the covariance of the feature vectors F1 and F2, calculated as follows:

$$\mathrm{Cov}(F_1, F_2) = E\big[(F_1 - E(F_1))\,(F_2 - E(F_2))\big]$$

The closer the absolute value of the Pearson coefficient is to 1, the higher the linear correlation between the feature vectors F1 and F2; the closer it is to 0, the lower the linear correlation. The silhouette coefficient S is employed to evaluate clustering quality by measuring intra-cluster compactness and inter-cluster separation. The coefficient ranges from −1 to 1, where higher values indicate better clustering performance. For a dataset X with n samples grouped into M clusters, the silhouette coefficient of the i-th sample is calculated as follows:

$$s(i) = \frac{b(i) - a(i)}{\max\{a(i),\, b(i)\}}$$

where a(i) is the average distance between sample i and the other samples in the same cluster, and b(i) is the smallest average distance between sample i and the samples of any other cluster. The optimal M corresponds to the maximum average silhouette coefficient across all samples.
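As an illustration of the definition above, the following sketch computes per-sample silhouette coefficients from a precomputed distance matrix and a list of cluster labels. The function name, the nested-list distance matrix, and the convention of assigning 0 to single-member clusters are assumptions made for this example; at least two clusters are required.

```python
def silhouette_scores(dist, labels):
    """Per-sample silhouette s(i) = (b - a) / max(a, b) from a distance matrix."""
    clusters = {}
    for idx, lab in enumerate(labels):
        clusters.setdefault(lab, []).append(idx)
    if len(clusters) < 2:
        raise ValueError("silhouette needs at least two clusters")
    scores = []
    for i, lab in enumerate(labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)  # assumed convention for singleton clusters
            continue
        # a(i): mean distance to the other members of the same cluster
        a = sum(dist[i][j] for j in own if j != i) / (len(own) - 1)
        # b(i): smallest mean distance to the members of any other cluster
        b = min(sum(dist[i][j] for j in members) / len(members)
                for other, members in clusters.items() if other != lab)
        denom = max(a, b)
        scores.append((b - a) / denom if denom > 0 else 0.0)
    return scores

# Four points on a line at 0, 1, 10, 11: two well-separated pairs.
points = [0.0, 1.0, 10.0, 11.0]
dist = [[abs(p - q) for q in points] for p in points]
good = silhouette_scores(dist, [0, 0, 1, 1])
bad = silhouette_scores(dist, [0, 1, 0, 1])
```

Averaging `good` gives a value close to 1, while `bad` averages below 0, which is the behavior used to pick the cluster number M.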

2.3. Sensitive Feature Extraction via Intersection Clustering Selection

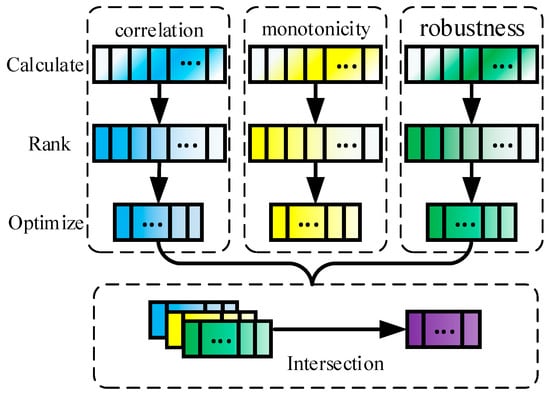

In this study, a primary feature selection method based on set intersection is proposed. The method does not require manually assigned weights and, more importantly, does not require the normalization of each index during feature selection, which reduces both the reliance on expert knowledge and the amount of computation. The steps of the proposed primary selection method are as follows:

- (1)

- Calculate the correlation, monotonicity, and robustness indexes of each feature sequence.

- (2)

- Rank the features separately according to each of the three indexes.

- (3)

- Construct three optimal feature sets by taking the top-ranked features under each index.

- (4)

- Take the intersection of the three optimal feature sets to obtain the set of features after primary selection.
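The four steps above can be sketched with Python sets. The dictionary-based interface, the function names, and the choice of keeping the top k features per index are assumptions for illustration; the source does not state how many top-ranked features are kept.

```python
def top_k(scores, k):
    """Names of the k features with the largest index value."""
    return set(sorted(scores, key=scores.get, reverse=True)[:k])

def primary_selection(corr, mon, rob, k):
    """Intersect the top-k sets under correlation, monotonicity, and robustness."""
    return top_k(corr, k) & top_k(mon, k) & top_k(rob, k)

# Hypothetical index values for four features.
corr = {"rms": 0.90, "kurtosis": 0.40, "peak": 0.80, "skewness": 0.10}
mon = {"rms": 0.70, "kurtosis": 0.20, "peak": 0.60, "skewness": 0.50}
rob = {"rms": 0.95, "kurtosis": 0.90, "peak": 0.85, "skewness": 0.30}

selected = primary_selection(corr, mon, rob, k=3)
```

Because only ranks are used, the three indexes never need to be brought to a common scale or combined with weights.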

The selection of bearing features should not be based solely on an individual evaluation index such as correlation, monotonicity, or robustness. A single index reflects only one aspect of a feature, so all indexes should be considered together during feature selection. In reference [23], a weighted fusion index is proposed that evaluates features jointly in terms of correlation, monotonicity, and robustness. Weights are assigned to the correlation, monotonicity, and robustness metrics to obtain a weighted fusion metric, a threshold is specified, and features whose metric exceeds the threshold are selected. This method requires the weight values to be assigned manually, which affects the value of the fused metric. A threshold must also be determined, and suitable weights and thresholds vary across devices and operating conditions.

The implementation diagram of the proposed selection is shown in Figure 1. Each small square represents a feature, and the shade of the square represents the magnitude of the corresponding feature evaluation index. Health stages can be defined by a threshold analysis of the fused health indicator (HI), e.g., normal state: HI < 0.2, early degradation: 0.2 ≤ HI < 0.5, severe degradation: HI ≥ 0.5.

To address feature redundancy in the set of primarily selected features, this study proposes a hierarchical clustering method based on Pearson coefficients, in which a Pearson-based measure serves as the distance between features Fi and Fj. Features with low mutual correlation are then re-selected from the primarily selected set to remove redundancy.

Figure 1.

Flow chart of feature selection.

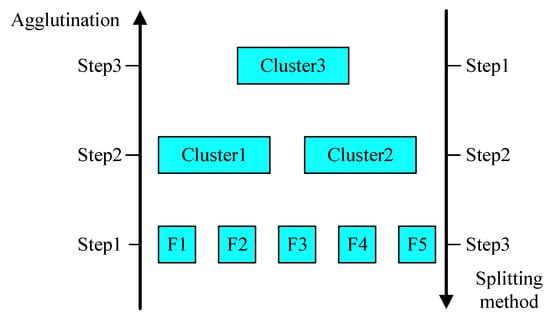

Hierarchical clustering, also known as tree clustering [26], is an efficient unsupervised clustering method. Depending on the direction of recursion, it is divided into Agglomerative clustering, a bottom-up clustering method that recursively merges clusters, and Divisive clustering, a top-down clustering method that recursively partitions clusters, as shown in Figure 2.

Figure 2.

Hierarchical clustering diagram.

In this study, we use the bottom-up agglomerative method. Hierarchical clustering divides the primarily selected features into multiple clusters, and one feature is then chosen from each cluster as its representative, which realizes the re-selection of features. For the set of primarily selected features, the specific implementation steps are as follows:

- (1)

- Treat each feature sequence as a separate class [C1, C2, …, Cn], and calculate the Pearson coefficient between the features to define the distance between two classes, so that strongly correlated features are close to each other;

- (2)

- Find the two classes with the smallest distance and merge them into a new class;

- (3)

- Recalculate the Pearson-based distance between this new class and the other classes;

- (4)

- Repeat steps (2) and (3) until all features are merged into a single class, ending the clustering process;

- (5)

- Determine the number of selected clusters M (M < n) based on the clustering results and select a representative feature from each cluster in [C1, C2, …, CM]. When a cluster contains multiple features, the feature with the largest correlation index is selected. The optimal cluster number M is determined using the silhouette coefficient, a data-driven metric that quantifies cluster separation and cohesion. For each candidate M (ranging from 2 to √N, where N is the number of pre-selected features), the silhouette score is computed. The M that maximizes this score is chosen, which ensures that clusters are neither overly fragmented nor excessively merged. This is consistent with unsupervised clustering practice, in which intrinsic metrics such as the silhouette score replace subjective human judgment.
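A minimal sketch of the re-selection steps follows. It assumes the distance 1 − |ρ| between features, average linkage between classes, and a fixed target number of clusters; none of these specifics are confirmed by the text, and stopping at `n_clusters` is equivalent to cutting the full merge tree at that level. Function and variable names are hypothetical.

```python
import math

def pearson(x, y):
    """Pearson coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)

def reselect(features, corr_index, n_clusters):
    """Agglomerative clustering of features, then one representative per cluster."""
    names = list(features)
    # Assumed distance: strongly correlated features are close together.
    dist = {(a, b): 1.0 - abs(pearson(features[a], features[b]))
            for a in names for b in names}
    clusters = [[name] for name in names]

    def linkage(c1, c2):
        # Average linkage (an assumption): mean pairwise distance.
        return sum(dist[a, b] for a in c1 for b in c2) / (len(c1) * len(c2))

    while len(clusters) > n_clusters:
        pairs = [(i, j) for i in range(len(clusters))
                 for j in range(i + 1, len(clusters))]
        i, j = min(pairs, key=lambda p: linkage(clusters[p[0]], clusters[p[1]]))
        merged = clusters.pop(j)          # j > i, so index i stays valid
        clusters[i] = clusters[i] + merged
    # Representative: the member with the largest correlation index.
    return [max(c, key=corr_index.get) for c in clusters]

features = {
    "a": [1.0, 2.0, 3.0, 4.0, 5.0],
    "b": [2.0, 4.0, 6.0, 8.0, 10.5],   # nearly proportional to "a"
    "c": [5.0, 1.0, 4.0, 2.0, 3.0],
}
kept = reselect(features, {"a": 0.90, "b": 0.95, "c": 0.50}, n_clusters=2)
```

In practice the candidate values of M would be scored with the silhouette coefficient and the best one passed as `n_clusters`.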

3. Attention Mechanism-Based Feature Fusion for Performance Degradation Assessment

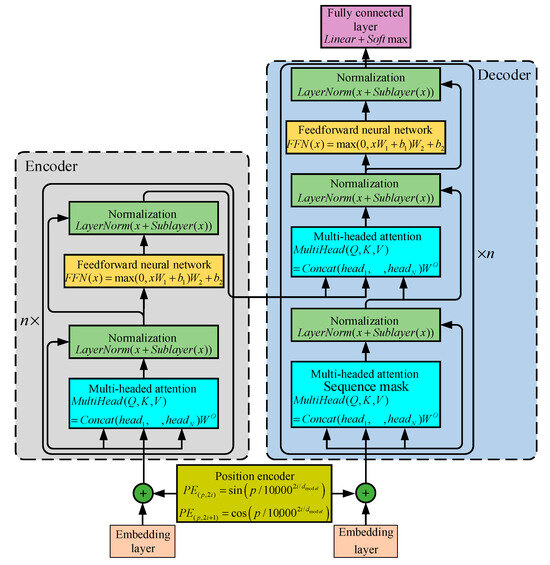

3.1. Transformer Framework

The Transformer is a network model based on the attention mechanism [27]. By using the multi-headed attention mechanism and positional encoding, it overcomes the limitation that recurrent neural networks cannot compute in parallel, and it addresses two problems in processing long sequences: recurrent neural networks are ineffective, and convolutional neural networks are too computationally intensive. Figure 3 illustrates the structure of a typical Transformer model.

Figure 3.

Transformer network model.

The typical Transformer model contains three main parts: the position encoding, the encoder, and the decoder. The position encoding adds position information to the input data, which solves the problem that the attention mechanism alone cannot learn the order of a temporal sequence. The equation is as follows:

$$PE(p, 2i) = \sin\!\left(\frac{p}{10000^{2i/d_{model}}}\right), \qquad PE(p, 2i+1) = \cos\!\left(\frac{p}{10000^{2i/d_{model}}}\right)$$

where i indexes the dimension of the vector in the input sequence, p represents the position of the vector in the input sequence, and dmodel represents the dimension of the model input.
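A short sketch of the standard sinusoidal position encoding may help make the roles of p, i, and dmodel concrete. The function name and the nested-list output are illustrative choices; the loop variable `k` plays the role of 2i.

```python
import math

def positional_encoding(seq_len, d_model):
    """Sinusoidal position encoding: sin on even dimensions, cos on odd ones."""
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for p in range(seq_len):
        for k in range(0, d_model, 2):          # k = 2i
            angle = p / (10000 ** (k / d_model))
            pe[p][k] = math.sin(angle)
            if k + 1 < d_model:
                pe[p][k + 1] = math.cos(angle)
    return pe

# Encoding for a window of 20 time steps and an assumed model dimension of 8.
pe = positional_encoding(seq_len=20, d_model=8)
```

The encoding is simply added element-wise to the input sequence before the first encoder layer.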

The encoder encodes the input sequence to obtain an intermediate sequence containing the sequence information. The encoder consists of a stack of n identical layers, and each layer includes two sub-layers: a multi-headed attention layer and a feedforward neural network layer. To avoid the loss of prediction accuracy that can occur as the network becomes deeper, the output of each sub-layer is obtained through a residual connection followed by layer normalization, so that each sub-layer only has to learn the change relative to its input, which improves training. The formula is expressed as follows:

$$\mathrm{Output} = \mathrm{LayerNorm}\big(x + \mathrm{Sublayer}(x)\big)$$

where LayerNorm represents the layer normalization function and Sublayer is the processing function of the attention mechanism or the feedforward neural network.
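The residual connection and layer normalization can be sketched for a single vector as follows. This is a simplified illustration: the learnable gain and bias of layer normalization are omitted, and the function names and the toy sub-layer are assumptions.

```python
import math

def layer_norm(x, eps=1e-6):
    """Normalize a vector to zero mean and unit variance (no learnable gain/bias)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def residual_sublayer(x, sublayer):
    """LayerNorm(x + Sublayer(x)) for one input vector."""
    y = sublayer(x)
    return layer_norm([a + b for a, b in zip(x, y)])

# Toy sub-layer standing in for attention or the feedforward network.
out = residual_sublayer([1.0, 2.0, 3.0, 4.0], lambda v: [0.5 * a for a in v])
```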

The decoder decodes the output sequence based on the intermediate sequence obtained from the encoder. The decoder also consists of a stack of identical layers, but it differs from the encoder in that it has an additional masked attention sub-layer, which ensures that the output at the current moment depends only on the data of previous moments.

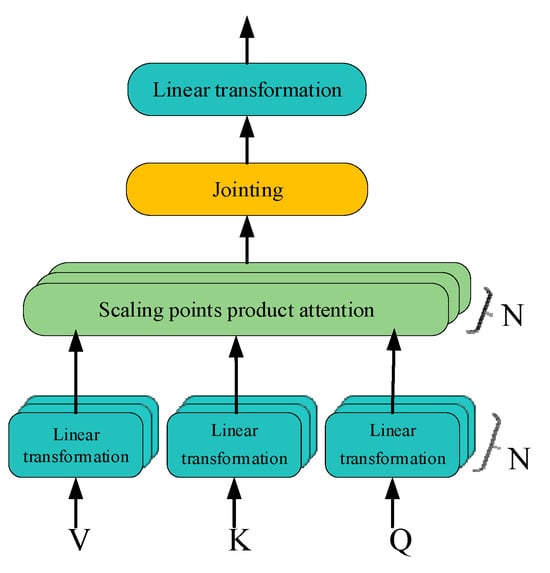

3.2. Multi-Headed Attention Mechanism

The Transformer network model uses a multi-headed attention mechanism to learn useful information in the input data from multiple representation subspaces, which extends the ability of the model to focus on different positions. The results learned by the individual heads are then concatenated, as shown in Figure 4.

Figure 4.

Multi-head attention mechanism.

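Let the input sequence be $X$, the maximum length of the output sequence be T, the number of attention heads be N, and the output sequence dimension of each attention head be H. The weight matrices in each attention head then satisfy $W^Q \in \mathbb{R}^{d_{model} \times H}$, $W^K \in \mathbb{R}^{d_{model} \times H}$, $W^V \in \mathbb{R}^{d_{model} \times H}$. The multi-headed self-attention mechanism of the Transformer model applies a linear transformation to the input data:

$$Q = XW^Q, \qquad K = XW^K, \qquad V = XW^V$$

Scaled dot-product attention mechanism:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\mathsf{T}}}{\sqrt{H}}\right)V$$

where $K^{\mathsf{T}}$ denotes the transposition of the data tensor, so $QK^{\mathsf{T}} \in \mathbb{R}^{T \times T}$.

The Transformer network uses a multi-headed attention mechanism, which is processed by the attention layer to obtain the result set $[\mathrm{head}_1, \mathrm{head}_2, \ldots, \mathrm{head}_N]$, and the result set is concatenated:

$$\mathrm{Concat}(\mathrm{head}_1, \mathrm{head}_2, \ldots, \mathrm{head}_N) \in \mathbb{R}^{T \times NH}$$

The final output of the multi-headed attention mechanism layer is then obtained after linear transformation:

$$\mathrm{MultiHead}(X) = \mathrm{Concat}(\mathrm{head}_1, \mathrm{head}_2, \ldots, \mathrm{head}_N)\,W^O, \qquad W^O \in \mathbb{R}^{NH \times d_{model}}$$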
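The computation described in this subsection can be sketched with NumPy. This is a generic multi-head self-attention forward pass under stated assumptions, not the authors' implementation: the weights are random, the sizes (T = 20, d_model = 8, N = 2, H = 4) are illustrative, and the parameter names `wq`, `wk`, `wv`, `wo` are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(q, k, v):
    """softmax(Q K^T / sqrt(H)) V, applied independently to each head."""
    scores = q @ k.swapaxes(-1, -2) / np.sqrt(q.shape[-1])
    weights = softmax(scores, axis=-1)
    return weights @ v, weights

def multi_head_attention(x, wq, wk, wv, wo):
    """x: (T, d_model); wq/wk/wv: (N, d_model, H); wo: (N*H, d_model)."""
    q = np.einsum("td,ndh->nth", x, wq)   # linear projections per head
    k = np.einsum("td,ndh->nth", x, wk)
    v = np.einsum("td,ndh->nth", x, wv)
    heads, weights = scaled_dot_product_attention(q, k, v)      # (N, T, H), (N, T, T)
    concat = heads.transpose(1, 0, 2).reshape(x.shape[0], -1)   # (T, N*H)
    return concat @ wo, weights

rng = np.random.default_rng(0)
T, d_model, N, H = 20, 8, 2, 4
x = rng.normal(size=(T, d_model))
wq, wk, wv = (rng.normal(size=(N, d_model, H)) for _ in range(3))
wo = rng.normal(size=(N * H, d_model))
out, attn = multi_head_attention(x, wq, wk, wv, wo)
```

Each row of `attn[n]` is a probability distribution over the T time steps, which is how the model weights the contribution of each moment in the window.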

3.3. Health Indicator Construction

After feature extraction and selection from rolling bearing vibration signals, the multidimensional features must be fused into a rolling bearing health indicator. The fused features obtained by traditional fusion methods have inconsistent scales, which makes it difficult to set bearing failure thresholds from the health indicator, and the threshold setting strongly affects the accuracy of remaining life prediction. In the literature [28], after the degradation-sensitive features are extracted and selected, a recurrent neural network (RNN), which can process temporal data, is used to fuse the temporal features. The fused feature then has a stable scale range, which avoids inaccurate remaining life prediction caused by an unreasonable threshold. Building on this idea, this study proposes a multivariate feature fusion method based on the attention mechanism. It uses the strength of the Transformer model in time-series processing to fuse multidimensional time-series sensitive features and map them to a one-dimensional health indicator with the value range [0, 1]. Compared with a recurrent neural network, it offers fast parallel computation and supports long time-series processing, and its multi-headed attention mechanism mines the data from multiple perspectives, which improves the characterization ability of the model. The proposed construction of degradation state health indicators is divided into a training phase and a testing phase, and the flow of the training phase is shown in Algorithm 1.

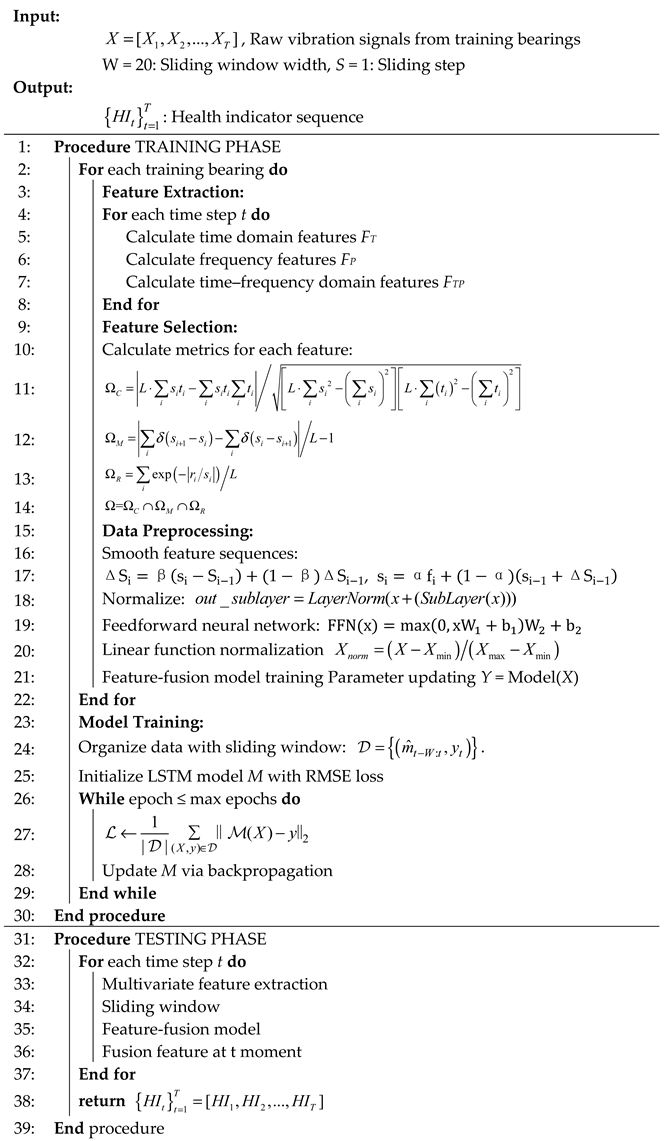

Algorithm 1. Pseudo code for the proposed method flow.

The specific steps of the training phase of the degradation state health indicator construction are as follows:

- (1)

- Obtain the whole-life time-domain vibration signal of the training bearing, where each record is the vibration data collected at one sampling time and N is the number of signal points collected at each time.

- (2)

- Calculate a total of 27 features of the signal in the time domain, frequency domain, and time–frequency domain at each moment to obtain a multivariate feature sequence m at moment t.

- (3)

- Calculate the correlation, monotonicity, and robustness indexes of each feature with Equations (1)–(3), and evaluate the features under each index to obtain the set of features that perform well in correlation, the set that performs well in monotonicity, and the set that performs well in robustness. Then take the intersection of the three sets to obtain the initial feature set.

- (4)

- Let the number of elements in the initial feature set be L (1 < L < 27), so that the feature sequence at time t contains L features. Re-select among these features with hierarchical clustering to remove redundancy, which yields a feature sequence with M features (1 < M ≤ L) at time t.

- (5)

- Smooth the individual feature sequences to reduce the influence of fluctuations and other unfavorable factors on the features.

- (6)

- Adopt linear function normalization to normalize each feature sequence to the range of [0, 1] uniformly to avoid non-convergence of the algorithm during feature fusion.

- (7)

- Assign a label to the feature sequence at each moment. The label is the bearing degradation percentage: 0 during the normal operation phase and 1 at the moment of complete failure. If the time interval between the failure start point and the failure point is T, then the label at T/2 after the failure start point is 0.5. This completes the preprocessing of the data of a single training bearing.

- (8)

- Do the same step processing as above for other training bearing full life cycle data.

- (9)

- Pack all the pre-processed data of the training bearings into the model input and output parts, and split the data with a moving sliding window. In this study, the sliding window width is 20 and the sliding step is 1, which prepares all the data needed to train the feature fusion model.

- (10)

- Train the feature fusion model using the training data, using the root mean square error function as the loss function, and record the training error.

- (11)

- When the number of iterations is less than or equal to the maximum number of iterations, continue step (10); otherwise, stop training and obtain the trained multivariate feature fusion model.
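The smoothing, normalization, labeling, and sliding-window steps of the training phase can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function names, the trailing moving-average smoother, and the choice to label each window with the degradation value of its last time step are assumptions made for the example.

```python
from typing import List, Tuple


def moving_average(x: List[float], k: int = 5) -> List[float]:
    """Smooth a sequence with a trailing moving average over up to k samples (assumed smoother)."""
    out = []
    for i in range(len(x)):
        window = x[max(0, i - k + 1): i + 1]
        out.append(sum(window) / len(window))
    return out


def min_max_normalize(x: List[float]) -> List[float]:
    """Linearly rescale a sequence to [0, 1]; a constant sequence maps to all zeros."""
    lo, hi = min(x), max(x)
    if hi == lo:
        return [0.0 for _ in x]
    return [(v - lo) / (hi - lo) for v in x]


def degradation_labels(n: int, fault_start: int) -> List[float]:
    """Label 0 up to fault_start, then rise linearly to 1 at the last sample (failure)."""
    if not 0 <= fault_start < n - 1:
        raise ValueError("fault_start must lie before the final sample")
    span = (n - 1) - fault_start  # interval T between failure start and failure
    return [max(0.0, (t - fault_start) / span) for t in range(n)]


def sliding_windows(
    features: List[List[float]],
    labels: List[float],
    width: int = 20,
    step: int = 1,
) -> List[Tuple[List[List[float]], float]]:
    """Cut (window, label) pairs; the label of the window's last step is used (assumption)."""
    samples = []
    for end in range(width, len(features) + 1, step):
        samples.append((features[end - width:end], labels[end - 1]))
    return samples


# Toy example: one feature, 25 time steps, degradation starting at step 4.
raw = [float(i) for i in range(25)]
feature = min_max_normalize(moving_average(raw))
pairs = sliding_windows([[v] for v in feature], degradation_labels(25, 4))
print(len(pairs), len(pairs[0][0]))
```

With a window width of 20 and a step of 1, a sequence of N time steps yields N − 19 training samples, so short run-to-failure records contribute few windows.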

The test phase of degradation state health indicator construction proceeds as follows:

- (1) Obtain the time domain data of the vibration signal at time t.

- (2) Obtain the multivariate feature sequence of the signal at time t by applying steps (2)–(6) of the training stage of degradation state health indicator construction.

- (3) Take the feature sequences of 20 consecutive time steps through the sliding window as the input of the feature fusion model to obtain the health indicator value at time t.

- (4) While monitoring continues, repeat steps (1)–(3) to obtain the health indicator at the next moment; otherwise, output the final health indicator sequence.
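The test-phase loop can be sketched as a rolling buffer that feeds the latest 20 feature vectors to the fusion model at every time step. The sketch below is illustrative only: `online_health_indicator` and `mean_fuse` are hypothetical names, and `mean_fuse` is a stand-in for the trained fusion model, which is not reproduced here.

```python
from collections import deque
from typing import Callable, Deque, Iterable, List


def online_health_indicator(
    feature_stream: Iterable[List[float]],
    fuse: Callable[[List[List[float]]], float],
    width: int = 20,
) -> List[float]:
    """Return one health indicator value per time step once `width` vectors are buffered."""
    buffer: Deque[List[float]] = deque(maxlen=width)  # oldest vector is dropped automatically
    indicators = []
    for vector in feature_stream:
        buffer.append(vector)
        if len(buffer) == width:
            indicators.append(fuse(list(buffer)))
    return indicators


def mean_fuse(window: List[List[float]]) -> float:
    """Placeholder for the trained model: average of the most recent feature vector."""
    last = window[-1]
    return sum(last) / len(last)


# Toy stream of 30 normalized two-feature vectors that rise from 0 to 1.
stream = [[i / 29, i / 29] for i in range(30)]
hi_sequence = online_health_indicator(stream, mean_fuse)
print(len(hi_sequence))
```

Because the first output requires a full window, no health indicator is available for the first 19 time steps of monitoring.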

4. Experimental Verification

In this study, feature extraction, selection, and fusion methods are proposed for the remaining life prediction of rolling bearings. This section verifies the effectiveness of these methods on publicly available full life cycle datasets of rolling bearings.

4.1. Case 1: IEEE PHM 2012 Dataset

4.1.1. Description of the IEEE PHM 2012 Dataset

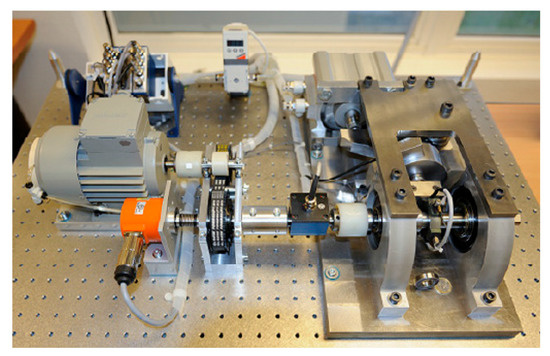

The IEEE PHM 2012 dataset contains full life cycle data of bearings, from the start of operation to end-of-life failure, under different speed and load conditions. It was provided by the Institute of Electrical and Electronics Engineers (IEEE) to the teams in the PHM data challenge held in 2012 [29]. The data were collected on the French PRONOSTIA experimental platform, shown in Figure 5. The main purpose of this test rig is to provide realistic monitoring data of bearing operation and to characterize the degradation of rolling bearings over their full life cycle. The accelerometer is a DYTRAN 3035B with a sampling frequency of 25.6 kHz, and 0.1 s of data is recorded every 10 s. The experimental conditions and data information are shown in Table 2.
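As a quick check of the acquisition settings stated above, the following sketch computes how many samples one snapshot contains and how many snapshots are recorded per hour. The constant and function names are illustrative and are not taken from the dataset documentation.

```python
SAMPLING_RATE_HZ = 25_600   # 25.6 kHz accelerometer sampling frequency
SNAPSHOT_SECONDS = 0.1      # duration of each recorded snapshot
INTERVAL_SECONDS = 10       # one snapshot is recorded every 10 s


def samples_per_snapshot(rate_hz: int, duration_s: float) -> int:
    """Number of vibration samples contained in one snapshot."""
    return round(rate_hz * duration_s)


def snapshots_per_hour(interval_s: int) -> int:
    """Number of snapshots recorded during one hour of operation."""
    return 3600 // interval_s


print(samples_per_snapshot(SAMPLING_RATE_HZ, SNAPSHOT_SECONDS))  # 2560
print(snapshots_per_hour(INTERVAL_SECONDS))                      # 360
```

If each feature is computed once per snapshot, the feature sequences therefore advance by one point every 10 s.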

Figure 5.

Overview of PRONOSTIA experimental platform of IEEE PHM 2012 Dataset.

Table 2.

Test condition and data information on IEEE PHM 2012 Dataset.

4.1.2. Sensitive Feature Selection for Rolling Bearing Degradation

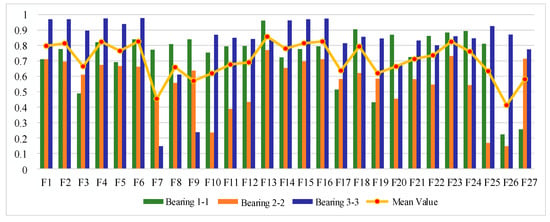

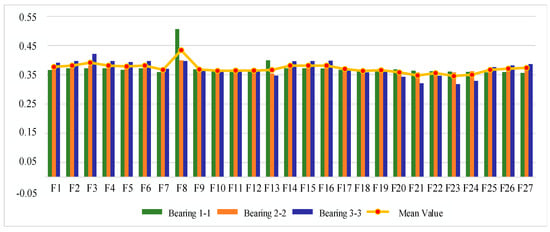

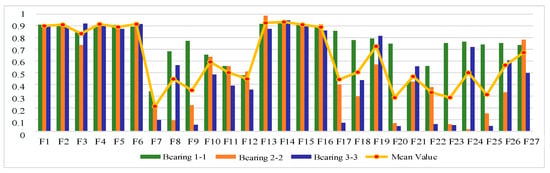

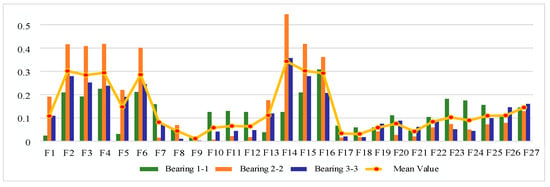

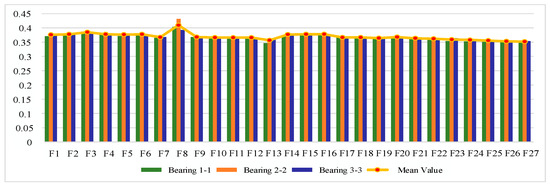

The IEEE PHM 2012 bearing life cycle dataset contains data for three operating conditions, and one bearing life cycle dataset is selected from each of the three operating conditions to reflect the generality. Figure 6, Figure 7 and Figure 8 show the scores of the extracted multivariate features of the three bearings in terms of correlation, monotonicity, and robustness, with scores ranging from 0 to 1. The closer the value of the evaluation index is to 1, the better the performance of the features in this area.
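To make the three scores concrete, the sketch below implements forms of the correlation, monotonicity, and robustness indexes that are commonly used in the prognostics literature. These definitions are assumptions for illustration and may differ in detail from Equations (1)–(3) of this paper; in particular, the trend used by the robustness index must be supplied by the caller (for example, a smoothed copy of the feature).

```python
import math
from typing import List, Optional


def correlation(feature: List[float], time: Optional[List[float]] = None) -> float:
    """Absolute Pearson correlation between a feature sequence and time."""
    if time is None:
        time = [float(k) for k in range(len(feature))]
    mf = sum(feature) / len(feature)
    mt = sum(time) / len(time)
    cov = sum((f - mf) * (t - mt) for f, t in zip(feature, time))
    var_f = sum((f - mf) ** 2 for f in feature)
    var_t = sum((t - mt) ** 2 for t in time)
    if var_f == 0 or var_t == 0:
        return 0.0
    return abs(cov) / math.sqrt(var_f * var_t)


def monotonicity(feature: List[float]) -> float:
    """|#positive differences - #negative differences| / (K - 1)."""
    diffs = [b - a for a, b in zip(feature, feature[1:])]
    positive = sum(d > 0 for d in diffs)
    negative = sum(d < 0 for d in diffs)
    return abs(positive - negative) / (len(feature) - 1)


def robustness(feature: List[float], trend: List[float]) -> float:
    """Mean of exp(-|residual / feature|); samples equal to zero are skipped."""
    terms = [math.exp(-abs((f - g) / f)) for f, g in zip(feature, trend) if f != 0]
    return sum(terms) / len(terms) if terms else 0.0


ramp = [float(k) for k in range(1, 11)]
print(correlation(ramp), monotonicity(ramp), robustness(ramp, ramp))
```

All three functions return values in [0, 1], and a noise-free, strictly increasing feature scores close to 1 on each of them.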

Figure 6.

Feature correlation evaluation indicator on IEEE PHM 2012 Dataset.

Figure 7.

Feature monotonicity evaluation indicator on IEEE PHM 2012 Dataset.

Figure 8.

Feature robustness evaluation indicator on IEEE PHM 2012 Dataset.

The correlation analysis shows that the correlation index values of different features of the same bearing vary widely, and that the values of the same feature also vary across bearings. In this study, the arithmetic mean of the correlation index values of the three bearings is computed for each feature and used to rank the features; the resulting correlation index scores are shown in Table 3. The top 50% of the ranked features are taken as the optimal feature set with respect to correlation, which is defined as follows:

Table 3.

Ranking table of feature correlation indicator on IEEE PHM 2012 Dataset.

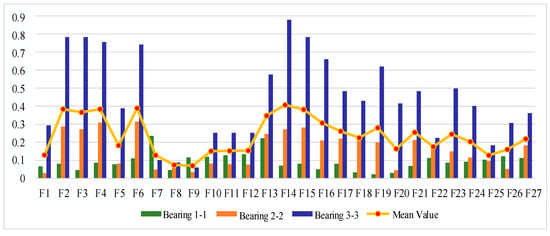

The monotonicity analysis shows that the monotonicity index values differ between features of the same bearing and between bearings for the same feature, and that the features of bearing 3-3 are markedly more monotonic than those of the other two bearings. Following the same procedure as for the correlation index, the ranking of the monotonicity index scores is obtained, as shown in Table 4. The top 50% of the ranked features are taken as the optimal feature set with respect to monotonicity, which is defined as follows:

Table 4.

Ranking table of feature monotonicity indicator on IEEE PHM 2012 Dataset.

The robustness analysis shows that the robustness index values differ only slightly between features of the same bearing, and that the robustness index values of the same feature also differ only slightly between bearings, which indicates that the features of each bearing perform similarly in terms of robustness. Following the same procedure as for the correlation index, the ranking of the robustness index scores is obtained, as shown in Table 5. The top 50% of the ranked features are taken as the optimal feature set with respect to robustness, which is defined as follows:

Table 5.

Ranking table of feature robustness indicator on IEEE PHM 2012 Dataset.

Based on the results of the above analysis, the set of primary selected features is obtained as the intersection of the three optimal feature sets.
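The primary selection described above (average each index over the three bearings, keep the top 50% per index, then intersect the three sets) can be sketched as follows. The feature names and scores are made-up toy values, and rounding the 50% cut-off down is an assumption, since the text does not state how an odd number of features is split.

```python
from typing import Dict, List, Set

Scores = Dict[str, List[float]]  # feature name -> index value for each bearing


def top_half(scores: Scores) -> Set[str]:
    """Rank features by their mean index over the bearings and keep the top 50%."""
    means = {name: sum(values) / len(values) for name, values in scores.items()}
    ranked = sorted(means, key=means.get, reverse=True)
    keep = len(ranked) // 2  # assumption: round down when the count is odd
    return set(ranked[:keep])


def primary_selection(corr: Scores, mon: Scores, rob: Scores) -> Set[str]:
    """Intersection of the three per-index optimal feature sets."""
    return top_half(corr) & top_half(mon) & top_half(rob)


# Toy scores for four hypothetical features on three bearings.
corr = {"rms": [0.9, 0.8, 0.7], "kurtosis": [0.2, 0.3, 0.1],
        "mean_freq": [0.8, 0.7, 0.9], "skewness": [0.1, 0.2, 0.3]}
mon = {"rms": [0.6, 0.5, 0.7], "kurtosis": [0.5, 0.6, 0.4],
       "mean_freq": [0.1, 0.2, 0.1], "skewness": [0.2, 0.1, 0.2]}
rob = {"rms": [0.9, 0.9, 0.9], "kurtosis": [0.5, 0.6, 0.5],
       "mean_freq": [0.8, 0.8, 0.8], "skewness": [0.4, 0.5, 0.4]}

print(primary_selection(corr, mon, rob))  # {'rms'}
```

Only features that rank in the top half for all three indexes survive, which is why the intersection can be much smaller than any single ranked set.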

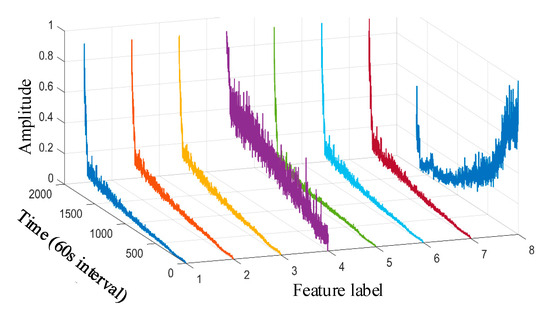

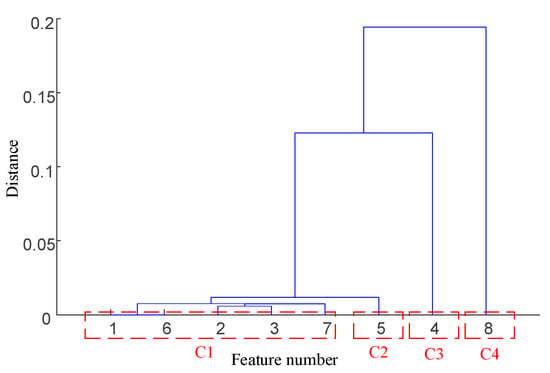

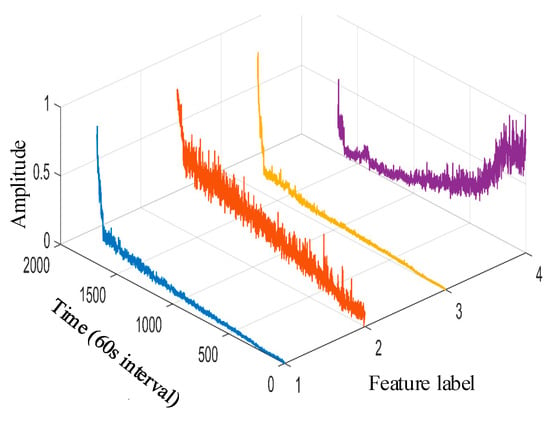

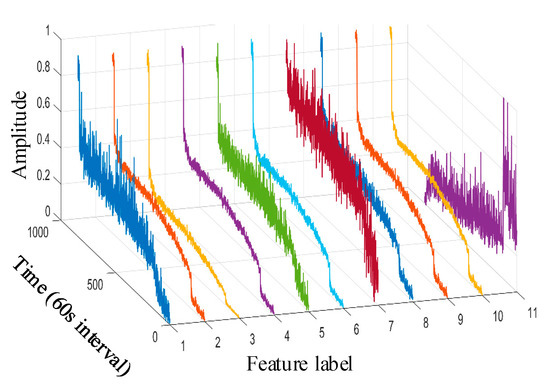

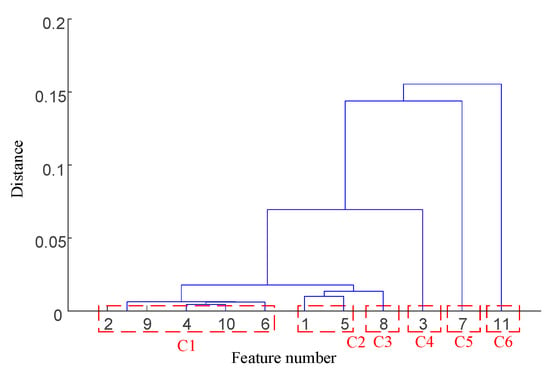

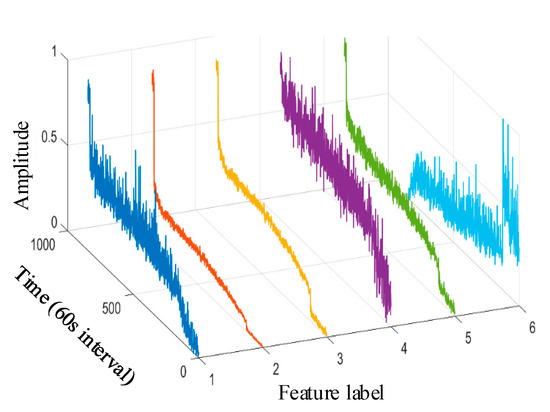

Taking bearing 1-1 in the IEEE PHM 2012 dataset as an example, the curves of the primary selected features are shown in Figure 9. Hierarchical clustering was performed on the primary selected features, and the clustering results are shown in Figure 10. Classes whose inter-class distance falls within the chosen threshold are merged into a cluster C, the Pearson correlation coefficient is evaluated between all features in each cluster C, and four clusters are finally obtained. A cluster that contains only one feature contributes that feature as its representative. For the cluster that contains five features, the feature with the largest correlation index is selected directly as the representative. The feature set after redundancy removal is thus obtained by clustering and selection, and its feature curves are shown in Figure 11.
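The clustering-based re-selection can be illustrated with a small agglomerative clustering sketch. Several details here are assumptions, because the text does not specify them: the distance between two features is taken as 1 − |Pearson r|, clusters are merged with average linkage, and the cut-off `threshold` is a free parameter. The feature names and curves are toy values.

```python
import math
from typing import Dict, List


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation coefficient of two equally long sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx and sy else 0.0


def cluster_features(curves: Dict[str, List[float]], threshold: float) -> List[List[str]]:
    """Average-linkage agglomerative clustering on the distance 1 - |r| (assumed)."""
    clusters = [[name] for name in curves]

    def linkage(a: List[str], b: List[str]) -> float:
        dists = [1 - abs(pearson(curves[p], curves[q])) for p in a for q in b]
        return sum(dists) / len(dists)

    while len(clusters) > 1:
        d, i, j = min(
            (linkage(clusters[i], clusters[j]), i, j)
            for i in range(len(clusters))
            for j in range(i + 1, len(clusters))
        )
        if d > threshold:  # closest pair is too far apart: stop merging
            break
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return clusters


def select_representatives(clusters: List[List[str]], corr_index: Dict[str, float]) -> List[str]:
    """Keep, per cluster, the feature with the largest correlation index."""
    return [max(cluster, key=lambda name: corr_index[name]) for cluster in clusters]


curves = {
    "rms": [1.0, 2.0, 3.0, 4.0, 5.0],
    "arv": [2.0, 4.0, 6.0, 8.0, 10.5],       # nearly proportional to rms
    "mean_freq": [5.0, 1.0, 4.0, 2.0, 3.0],  # weakly related to the others
}
corr_index = {"rms": 0.90, "arv": 0.95, "mean_freq": 0.60}
clusters = cluster_features(curves, threshold=0.2)
print(clusters, select_representatives(clusters, corr_index))
```

A single-feature cluster returns that feature unchanged, so the same selection rule covers both cases described above.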

Figure 9.

IEEE PHM 2012 dataset bearing 1-1 primary feature curve. (The 1st~8th feature labels are, respectively, the root mean square, average rectified value, mean square amplitude, impact DB value, average frequency value, frequency root mean square, frequency standard deviation, and 4th frequency band energy ratio of wavelet packet).

Figure 10.

IEEE PHM 2012 dataset bearing 1-1 primary feature hierarchical clustering diagram.

Figure 11.

IEEE PHM 2012 dataset bearing 1-1 reselection feature vector curve. (The 1st~4th feature labels are, respectively, the average rectified value, impact DB value, average frequency value, and 4th frequency band energy ratio of wavelet packet).

4.1.3. Sensitive Feature Fusion for Rolling Bearing Degradation

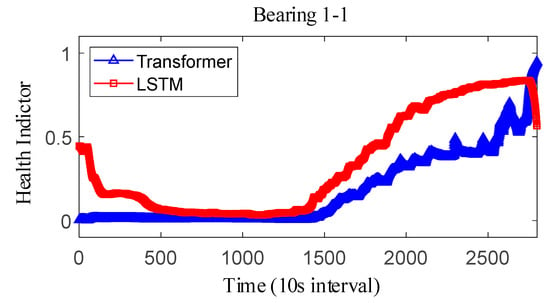

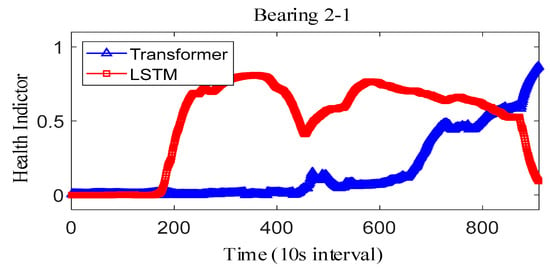

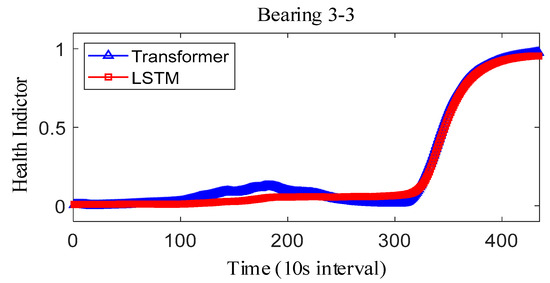

To verify the effectiveness of the feature fusion model in constructing health indicators, part of the whole-life bearing vibration data in the IEEE PHM 2012 dataset was also selected for analysis. In this subsection, the performance of the health indicators in terms of correlation, monotonicity, and robustness is analyzed, and the health indicators are compared with those constructed by an LSTM model.

The parameters of Transformer and LSTM models are shown in Table 6. Both types of models are trained 100 times with a learning rate of 0.002, and the loss function is the squared error loss function with the following definition:

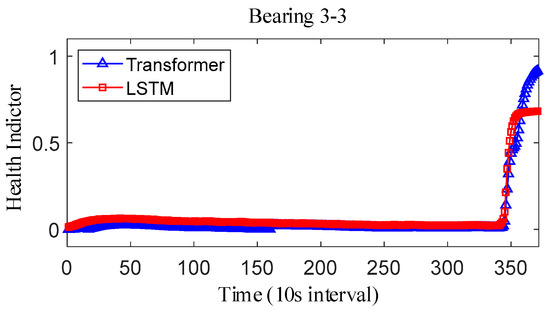

Here, the loss is computed from the fitted value and the true value. The results obtained after smoothing the fused health indicators are shown in Figure 12, Figure 13 and Figure 14. The health indicators obtained by fusion with both the Transformer and LSTM models lie within the range [0, 1], which indicates effective feature fusion, and the indicators show multi-stage trend changes. Compared with the LSTM model, the health indicators obtained with the Transformer model show a clearer trend and fluctuate less. At the failure point, the Transformer health indicators are also closer to 1 than the LSTM health indicators, which better reflects the degree of bearing failure. The LSTM model performs relatively well on bearing 1-1 and bearing 3-3, whereas its fusion result on bearing 2-1 is poor: the health indicator curve is irregular and cannot reflect the degree of bearing failure.

Table 6.

Model parameter table.

Figure 12.

IEEE PHM 2012 dataset bearing 1-1 health indicator.

Figure 13.

IEEE PHM 2012 dataset bearing 2-1 health indicator.

Figure 14.

IEEE PHM 2012 dataset bearing 3-3 health indicator.

The correlation, monotonicity, and robustness of the health indicators for bearing 1-1, bearing 2-1, and bearing 3-3 are shown in Table 7. The health indicators obtained with the Transformer model outperform those obtained with the LSTM model in correlation, monotonicity, and robustness, which verifies that Transformer-based health indicator construction has a performance advantage over the current LSTM-based construction. Further, because the proposed method is a feature-level fusion method, it is also compared with principal component analysis (PCA) and kernel principal component analysis (KPCA). These results are also reported in Table 7. On the whole, the PCA and KPCA fusion results have good robustness, but their correlation is lower than that of the proposed Transformer-based method, and their monotonicity is extremely poor. The proposed method is also compared with the current advanced fusion methods MAFFN [27] and MSM [30]. These comparisons indicate that the proposed method also has advantages over other feature-level fusion methods.

Table 7.

Bearing health indicator evaluation on IEEE PHM 2012 Dataset.

Table 8.

The computational time metrics.

The observed difference can be attributed to the inherent architectural differences between the Transformer and LSTM models. Specifically, the Transformer model uses a multi-head attention mechanism, which enables it to capture long-term dependencies and subtle changes in the feature space and results in a more stable and accurate representation of the bearing’s degradation state. This capability allows the Transformer-based health indicator (HI) to approach 1 more closely at the failure point, reflecting the true extent of bearing failure more accurately. In contrast, the LSTM model relies on sequential processing through hidden and cell states, which may lead to information loss or distortion over long sequences, causing its HI to fluctuate more and remain slightly below 1 even at the failure point. Furthermore, the Transformer model demonstrates superior performance in terms of correlation, monotonicity, and robustness, as shown in Table 7, reinforcing its ability to construct reliable HIs. These advantages make the Transformer-based HI less prone to fluctuations and better suited to capturing critical failure points than the LSTM-based HI.
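The way attention lets every time step draw on all other time steps at once can be seen in a minimal scaled dot-product self-attention sketch. This is a deliberately simplified, single-head illustration with no learned projection matrices (queries, keys, and values are all the raw input), so it is not the multi-head model used in this study; the function names are illustrative.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax of one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head self-attention with Q = K = V = x (simplifying assumption).

    x holds T feature vectors of dimension d; returns (outputs, weights).
    """
    d = len(x[0])
    scores = [[sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x] for q in x]
    weights = [softmax(row) for row in scores]
    outputs = [[sum(w * v[j] for w, v in zip(w_row, x)) for j in range(d)]
               for w_row in weights]
    return outputs, weights


window = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three time steps, two features
outputs, weights = self_attention(window)
for w_row in weights:
    print([round(w, 3) for w in w_row])
```

Each output vector is a weighted average over the whole window, and the weight rows are computed independently of one another, which is what allows the time steps to be processed in parallel rather than sequentially as in an LSTM.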

We conducted comparative experiments using both LSTM and Transformer architectures on the same dataset (Case 1 from Section 4). The computational time metrics are summarized as follows (Table 8).

The Transformer model achieved 53% faster training and 58% faster inference compared to LSTM, demonstrating superior parallel computation capabilities. Unlike LSTM’s sequential processing bottleneck, the Transformer’s self-attention mechanism enables simultaneous computation across all time steps, significantly reducing latency for long sequences. To validate the long-sequence advantage, we tested both models on varying sequence lengths from the bearing degradation dataset as follows (Table 9).

Table 9.

The two models were compared with different sequence lengths on IEEE PHM 2012 Dataset.

LSTM exhibited gradient instability for sequences > 512 steps, while the Transformer maintained robust performance up to 4096 steps.

To explore the working principle of the proposed model, we conducted ablation experiments, and the results are shown in Table 10. These experiments systematically evaluate the necessity and effectiveness of three critical aspects: (1) the intersection clustering-based feature selection strategy (including intersection primary selection and clustering re-selection), (2) the attention mechanism-based feature fusion framework, and (3) the multi-domain feature construction (time domain, frequency domain, and time–frequency domain features). We compared the performance degradation assessment results using the full 27-dimensional initial features versus the selected sensitive features. The results demonstrated that removing the intersection clustering selection process led to an 8.7% decrease in feature set monotonicity and a 13.6% reduction in robustness due to increased feature redundancy and noise interference. For the attention mechanism component, we replaced it with conventional fusion methods including PCA, equal-weighted averaging, and maximum relevance selection. Quantitative comparisons revealed that the attention-based fusion improved health index correlation with actual degradation states by 18.6% compared to PCA and enhanced RUL prediction accuracy by 6.5% over equal-weighted fusion, confirming its superiority in adaptively capturing nonlinear feature relationships and temporal degradation patterns. Additional ablation tests on the intersection-clustering sub-modules showed that removing either the primary intersection selection (based on correlation/monotonicity thresholds) or the secondary clustering re-selection (for redundancy reduction) resulted in a 6.5–8.4% performance degradation across evaluation metrics.

Table 10.

Ablation study on model components for Case 1.

4.2. Case 2: XJTU-SY Dataset

4.2.1. Description of the Dataset

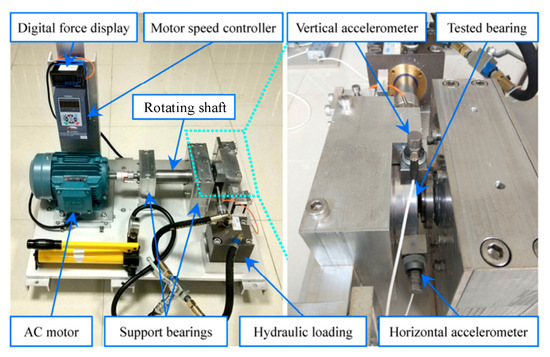

The XJTU-SY dataset is a full life cycle dataset of bearings provided by the Institute of Design Science and Basic Components of Xi’an Jiaotong University and Changxing Shengyang Technology Co. [29]. The bearing experimental setup mainly includes an AC induction motor, a motor speed controller, a support shaft, and a hydraulic loading system [38], as shown in Figure 15. The test bench uses a measurement scheme with one accelerometer in the horizontal direction and one in the vertical direction of the test bearing. The accelerometer model is PCB 352C33, with a sampling frequency of 25.6 kHz, and 1.28 s of data is recorded every 60 s [39]. The experimental conditions and data information are shown in Table 11.

Figure 15.

Overview of the experimental platform of the XJTU-SY Dataset.

Table 11.

Test conditions and data information.

4.2.2. Sensitive Feature Selection for Rolling Bearing Degradation Assessment

The XJTU-SY bearing life cycle dataset also contains data for three operating conditions, and one bearing dataset is selected from each operating condition for feature evaluation and selection; here, bearing 1-1, bearing 2-1, and bearing 3-3 datasets are selected. Figure 16, Figure 17 and Figure 18 show the scores of the extracted multivariate features of the three bearings in terms of correlation, monotonicity, and robustness.

Figure 16.

Feature correlation evaluation indicator on XJTU-SY Dataset.

Figure 17.

Feature monotonicity evaluation indicator on XJTU-SY Dataset.

Figure 18.

Feature robustness evaluation indicator on XJTU-SY Dataset.

As Figure 16 shows, the correlation performance differs greatly between features of the same bearing and between bearings for the same feature. Using the same analysis process as described above, the ranking of the correlation index scores is obtained, as shown in Table 12. The top 50% of the ranked features are taken as the optimal feature set with respect to correlation, which is defined as follows:

Table 12.

Ranking table of feature correlation indicator on XJTU-SY dataset.

According to Figure 17, the monotonicity index fluctuates over a greater range than the correlation index, and several features of bearing 2-1 perform better than those of the other two bearings. The ranking of the monotonicity index scores is shown in Table 13. The top 50% of the ranked features are taken as the optimal feature set with respect to monotonicity, which is defined as follows:

Table 13.

Ranking table of feature monotonicity indicator on XJTU-SY dataset.

According to Figure 18, the robustness indexes vary little among bearings and features. The ranking of the robustness index scores is shown in Table 14. The top 50% of the ranked features are taken as the optimal feature set with respect to robustness, which is defined as follows:

Table 14.

Ranking table of feature robustness indicator on XJTU-SY dataset.

Based on the results of the above analysis, the set of primary selected features is obtained as the intersection of the three optimal feature sets.

Taking the bearing 1-1 vibration data in the XJTU-SY dataset as an example, the curves of the primary selected features are shown in Figure 19.

Figure 19.

XJTU-SY dataset bearing 1-1 primary feature curve. (The 1st~11th feature labels are, respectively, the peak value, root mean square, variance, average rectified value, peak-to-peak value, mean square amplitude, impact DB value, average frequency value, frequency root mean square, frequency standard deviation, and 8th frequency band energy ratio of wavelet packet).

The clustering result is shown in Figure 20. A cluster that contains only one feature contributes that feature as its representative, while for a cluster that contains multiple features, the feature with the largest correlation index is selected as the representative. Clustering and selection give the final feature set after redundancy removal, and its feature curves are shown in Figure 21.

Figure 20.

XJTU-SY dataset bearing 1-1 primary feature hierarchical clustering diagram.

Figure 21.

XJTU-SY dataset bearing 1-1 reselection feature vector curve. (The 1st~6th feature labels are, respectively, the peak value, variance, average rectified value, impact DB value, average frequency value, and 8th frequency band energy ratio of wavelet packet).

4.2.3. Sensitive Feature Fusion for Rolling Bearing Degradation Assessment

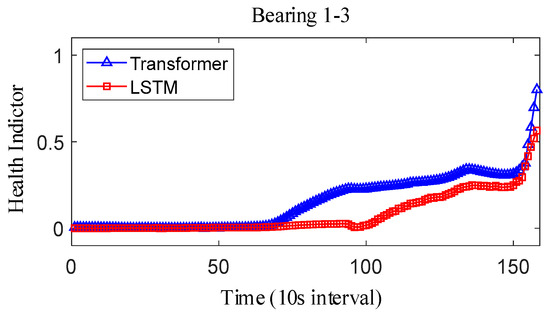

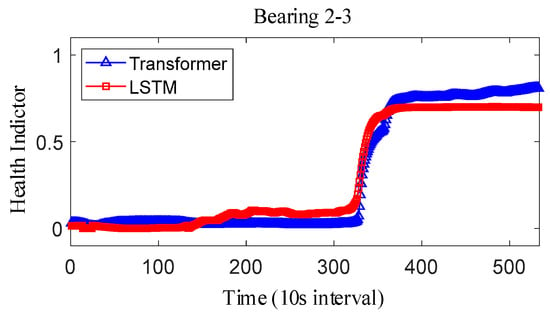

For the XJTU-SY full life cycle bearing dataset, the data of bearing 1-3, bearing 2-3, and bearing 3-3 were selected as the test data. The model parameters and training parameters are the same as in Case 1, and the smoothed health indicators are shown in Figure 22, Figure 23 and Figure 24. Overall, the health indicators obtained from the XJTU-SY dataset are smoother, which is consistent with the XJTU-SY dataset having fewer random fluctuations than the dataset in Case 1. An analysis of the health indicator curves shows that the fused health indicators still exhibit multi-stage trend changes. The Transformer model produces health indicators with a clearer increasing trend, whereas those obtained with the LSTM model have a less obvious trend. In particular, the LSTM health indicators of bearings 2-3 and 3-3 flatten in the later stage, which has a larger impact on the accuracy of the remaining life prediction of the bearings.

Figure 22.

XJTU-SY dataset bearing 1-3 health indicator.

Figure 23.

XJTU-SY dataset bearing 2-3 health indicator.

Figure 24.

XJTU-SY dataset bearing 3-3 health indicator.

The correlation, monotonicity, and robustness index values of the health indicators for bearing 1-3, bearing 2-3, and bearing 3-3 are shown in Table 15. In general, the health indicators obtained with the Transformer model perform better than those obtained with the LSTM model in correlation, monotonicity, and robustness, which again verifies that Transformer-based health indicators have a performance advantage over the current LSTM-based health indicator construction methods. The proposed method is further compared with the PCA and KPCA methods. The PCA and KPCA results are still robust, but their correlation and monotonicity are not as good as those of the proposed method. Therefore, the proposed method has advantages over other feature-level fusion methods.

Table 15.

Bearing health indicator evaluation on XJTU-SY dataset.

The proposed bearing health indicator construction method based on the Transformer attention mechanism model is experimentally validated on the IEEE PHM 2012 and XJTU-SY full life cycle bearing datasets. The experimental results show that the method performs well on both datasets: the health index curves obtained show a clear increasing trend over time, which reflects the good ability of the health index to characterize the degree of bearing degradation. Compared with the LSTM model, the health indicators obtained from the Transformer model perform better in terms of correlation, monotonicity, and robustness, indicating that the Transformer model based on the multi-head attention mechanism is more effective in learning the key information in the multidimensional features and mapping it to health indicators that characterize the degree of bearing degradation.

We conducted comparative experiments using both LSTM and Transformer architectures on the same dataset (Case 2 from Section 4). The computational time metrics are summarized as follows (Table 16).

Table 16.

The computational time metrics on Case 2.

The Transformer model achieved 54.6% faster training and 58.3% faster inference compared to LSTM, demonstrating superior parallel computation capabilities. Unlike LSTM’s sequential processing bottleneck, the Transformer’s self-attention mechanism enables simultaneous computation across all time steps, significantly reducing latency for long sequences. To validate the long-sequence advantage, we tested both models on varying sequence lengths from the bearing degradation dataset as follows (Table 17).

Table 17.

The two models were compared with different sequence lengths.

To explore the working principle of the proposed model, we conducted ablation experiments, and the results are shown in Table 18. The results demonstrated that removing the intersection clustering selection process led to an 8.7% decrease in feature set monotonicity and an 8.4% reduction in robustness due to increased feature redundancy and noise interference. For the attention mechanism component, we replaced it with conventional fusion methods including PCA, equal-weighted averaging, and maximum relevance selection. Quantitative comparisons revealed that the attention-based fusion improved health index correlation with actual degradation states by 18.6% compared to PCA and enhanced RUL prediction accuracy by 5.7% over equal-weighted fusion, confirming its superiority in adaptively capturing nonlinear feature relationships and temporal degradation patterns. Additional ablation tests on the intersection-clustering sub-modules showed that removing either the primary intersection selection (based on correlation/monotonicity thresholds) or the secondary clustering re-selection (for redundancy reduction) resulted in a 5.7–15.1% performance degradation across evaluation metrics.

Table 18.

Ablation study on model components for Case 2.

5. Conclusions

This study presents an innovative framework for rolling bearing performance degradation assessment through advanced feature engineering and neural attention mechanisms. Unlike conventional feature selection methods, the proposed sensitive feature selection strategy combines intersection-based initial selection with clustering-based re-selection to obtain sensitive features with low redundancy from the initial feature set, so that correlation, monotonicity, and robustness are considered together. A multi-head attention mechanism model is then established to assess the degradation state and to learn the relationship between the multidimensional sensitive features and the health indicators. Two experiments illustrate the feasibility of the designed intersection clustering selection for sensitive feature extraction. The proposed intersection clustering strategy effectively addresses feature redundancy by eliminating 23% of irrelevant features while maintaining critical degradation signatures. Three key contributions emerge: (1) a systematic multi-domain feature construction methodology encompassing 27 time domain, frequency domain, and time–frequency domain characteristics, (2) a hybrid feature selection paradigm combining statistical filtering with unsupervised clustering, and (3) an attention-based fusion model that adaptively weights features through self-learning mechanisms. The comparison indicates that the proposed attention mechanism-based feature fusion method performs better in terms of correlation, monotonicity, and robustness, which leads to a much better performance degradation assessment. In addition, compared with conventional feature-level fusion methods applied to both datasets, the proposed approach demonstrates superior performance in correlation and monotonicity metrics. This enhancement suggests that our method can obtain degradation-sensitive indicators with stronger characterization capabilities.
Furthermore, the experimental results indicate that the proposed methodology shows promising potential for acquiring high-quality degradation features even under challenging industrial operating conditions.

Author Contributions

Conceptualization, X.D.; methodology, T.Z.; validation, W.C. and C.X.; data curation, L.L.; writing—original draft preparation, T.Z.; writing—review and editing, X.D.; funding acquisition, X.D. All authors have read and agreed to the published version of the manuscript.

Funding

This study was completed with the support of Special Project for the Innovative Province Construction of Hunan 2023GK2093, National Key Research and Development Program of China under Grant 2022YFB3303600 and 2023YFB3406304, and in part by the National Natural Science Foundation of China under Grant 52035002.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author: Xiaoxi Ding.

Conflicts of Interest

Author Wentao Chen was employed by the company Hunan Lince Rolling Stock Equipment Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Ou, S.; Zhao, M.; Li, S.; Zhou, T. Online shock sensing for rotary machinery using encoder signal. Mech. Syst. Signal Process. 2022, 182, 109559. [Google Scholar] [CrossRef]

- Liu, R.; Ding, X.; Zhang, Y.; Zhang, M.; Shao, Y. Variable-scale evolutionary adaptive mode denoising in the application of gearbox early fault diagnosis. Mech. Syst. Signal Process. 2022, 185, 109773. [Google Scholar] [CrossRef]

- Liu, Z.; Zhang, L.; Xiao, Q.; Huang, H.; Xiong, G. Performance degradation assessment of railway Axle box bearing based on combination of denoising features and time series information. Sensors 2023, 23, 5910. [Google Scholar] [CrossRef] [PubMed]

- Xiong, R.; Liu, A.; Xu, D.; Qu, C.; Wu, Y. A New Heavy-Duty Bearing Degradation Evaluation Method with Multi-Domain Features. Sensors 2024, 24, 7769. [Google Scholar] [CrossRef]

- Akpudo, U.E.; Hur, J.W. A feature fusion-based prognostics approach for rolling element bearings. J. Mech. Sci. Technol. 2020, 34, 4025–4035. [Google Scholar] [CrossRef]

- Song, L.; Jin, Y.; Lin, T.; Zhao, S.; Wei, Z.; Wang, H. Remaining Useful Life Prediction Method Based on the Spatiotemporal Graph and GCN Nested Parallel Route Model. IEEE Trans. Instrum. Meas. 2024, 73, 3511912. [Google Scholar] [CrossRef]

- Zhang, B.; Zhang, L.J.; Xu, J.W. Degradation Feature Selection for Remaining Useful Life Prediction of Rolling Element Bearings. Qual. Reliab. Eng. Int. 2015, 32, 547–554. [Google Scholar] [CrossRef]

- Yang, F.; Habibullah, M.S.; Zhang, T.Y.; Xu, Z.; Lim, P.; Nadarajan, S. Health Index-Based Prognostics for Remaining Useful Life Predictions in Electrical Machines. IEEE Trans. Ind. Electron. 2016, 63, 2633–2644. [Google Scholar] [CrossRef]

- Geramifard, O.; Xu, J.X.; Pang, C.K.; Zhou, J.; Li, X. Data-driven approaches in health condition monitoring—A comparative study. In Proceedings of the 2010 8th IEEE International Conference on Control and Automation (ICCA), Xiamen, China, 9–11 June 2010. [Google Scholar]

- Chen, C.; Xu, T.; Wang, G.; Li, B. Railway turnout system RUL prediction based on feature fusion and genetic programming. Measurement 2020, 151, 107162. [Google Scholar] [CrossRef]

- Zhao, M.; Tang, B.; Tan, Q. Bearing remaining useful life estimation based on time–frequency representation and supervised dimensionality reduction. Measurement 2016, 86, 41–55. [Google Scholar] [CrossRef]

- Cheng, Z. Residual Useful Life Prediction for Rolling Element Bearings Based on Multi-Feature Fusion Regression. In Proceedings of the 2017 International Conference on Sensing, Diagnostics, Prognostics, and Control (SDPC), Shanghai, China, 16–18 August 2017; pp. 246–250. [Google Scholar]

- Rai, A.; Upadhyay, S.H. Intelligent bearing performance degradation assessment and remaining useful life prediction based on self-organising map and support vector regression. Proc. Inst. Mech. Eng. Part C-J. Mech. Eng. Sci. 2018, 232, 1118–1132. [Google Scholar] [CrossRef]

- Shang, Y.; Tang, X.; Zhao, G.; Jiang, P.; Lin, T.R. A remaining life prediction of rolling element bearings based on a bidirectional gate recurrent unit and convolution neural network. Measurement 2022, 202, 111893. [Google Scholar] [CrossRef]

- Cheng, Y.; Wang, J.; Wu, J.; Zhu, H.; Wang, Y. Abnormal symptom-triggered remaining useful life prediction for rolling element bearings. J. Vib. Control 2023, 29, 2102–2115. [Google Scholar] [CrossRef]

- Xia, M.; Li, T.; Shu, T.X.; Wan, J.F.; de Silva, C.W.; Wang, Z.R. A Two-Stage Approach for the Remaining Useful Life Prediction of Bearings Using Deep Neural Networks. IEEE Trans. Ind. Inform. 2019, 15, 3703–3711. [Google Scholar] [CrossRef]

- Zhang, N.N.; Wu, L.F.; Wang, Z.H.; Guan, Y. Bearing Remaining Useful Life Prediction Based on Naive Bayes and Weibull Distributions. Entropy 2018, 20, 944. [Google Scholar] [CrossRef]

- Liu, S.J.; Fan, L.X. An adaptive prediction approach for rolling bearing remaining useful life based on multistage model with three-source variability. Reliab. Eng. Syst. Saf. 2022, 218, 108182. [Google Scholar] [CrossRef]

- Zhao, B.X.; Yuan, Q. A novel deep learning scheme for multi-condition remaining useful life prediction of rolling element bearings. J. Manuf. Syst. 2021, 61, 450–460.

- Cui, L.L.; Wang, X.; Wang, H.Q.; Ma, J.F. Research on Remaining Useful Life Prediction of Rolling Element Bearings Based on Time-Varying Kalman Filter. IEEE Trans. Instrum. Meas. 2020, 69, 2858–2867.

- Zhang, B.; Zhang, S.H.; Li, W.H. Bearing performance degradation assessment using long short-term memory recurrent network. Comput. Ind. 2019, 106, 14–29.

- Feng, Z.; Wang, Z.; Liu, X.; Li, J. Rolling Bearing Performance Degradation Assessment with Adaptive Sensitive Feature Selection and Multi-Strategy Optimized SVDD. Sensors 2023, 23, 1110.

- Guo, K.; Ma, J.; Wu, J.; Xiong, X. Adaptive Feature Fusion and Disturbance Correction for Accurate Remaining Useful Life Prediction of Rolling Bearings. Eng. Appl. Artif. Intell. 2024, 138, 109433.

- Liu, J.; Yang, Z.; Xie, J.; Wang, R.; Liu, S.; Xi, D. A Feature Fusion-Based Method for Remaining Useful Life Prediction of Rolling Bearings. IEEE Trans. Instrum. Meas. 2023, 72, 3532712.

- Wang, Y.; Hou, D.; Xu, D.; Zhang, S.; Yang, C. Prediction of Rolling Bearing Performance Degradation Based on Sae and TCN-Attention Models. J. Mech. Sci. Technol. 2023, 37, 1567–1583.

- Shi, B.; Liu, Y.; Lu, S.; Gao, Z.-W. A New Adaptive Feature Fusion and Selection Network for Intelligent Transportation Systems. Control Eng. Pract. 2024, 146, 105885.

- Shen, J.; Zhao, D.; Liu, S.; Cui, Z. Multiscale Attention Feature Fusion Network for Rolling Bearing Fault Diagnosis under Variable Speed Conditions. Signal Image Video Process. 2024, 18, 523–535.

- He, J.; Zhang, X.; Zhang, X.; Shen, J. Remaining Useful Life Prediction for Bearing Based on Automatic Feature Combination Extraction and Residual Multi-Head Attention GRU Network. Meas. Sci. Technol. 2023, 35, 036003.

- Wang, Y.; Yang, C.; Xu, D.; Ge, J.; Cui, W. Evaluation and Prediction Method of Rolling Bearing Performance Degradation Based on Attention-LSTM. Shock Vib. 2021, 2021, 1.

- Zhao, Q.; Zhang, X.; Wang, F.; Fan, P.; Mbeka, E. The Effect of the Head Number for Multi-Head Self-Attention in Remaining Useful Life Prediction of Rolling Bearing and Interpretability. Neurocomputing 2025, 616, 128946.

- Wan, Z.; Xu, Z.; Cai, C.; Wang, X.; Xu, J.; Shi, K.; Zhong, X.; Liao, Z.; Li, Q. Rolling Bearing Fault Diagnosis Method Using Time-Frequency Information Integration and Multi-Scale TransFusion Network. Knowl.-Based Syst. 2024, 284, 111344.

- Pan, T.; Chen, J.; Zhou, Z.; Wang, C.; He, S. A Novel Deep Learning Network via Multiscale Inner Product With Locally Connected Feature Extraction for Intelligent Fault Detection. IEEE Trans. Ind. Inform. 2019, 15, 5119–5128.

- Wang, Z.; Zhao, W.; Li, Y.; Dong, L.; Wang, J.; Du, W.; Jiang, X. Adaptive staged RUL prediction of rolling bearing. Measurement 2023, 222, 113478.

- Lei, Y.; Li, N.; Guo, L.; Li, N.; Yan, T.; Lin, J. Machinery health prognostics: A systematic review from data acquisition to RUL prediction. Mech. Syst. Signal Process. 2018, 104, 799–834.

- Seidpisheh, M.; Mohammadpour, A. Hierarchical clustering of heavy-tailed data using a new similarity measure. Intell. Data Anal. 2018, 22, 569–579.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762.

- Guo, L.; Li, N.; Jia, F.; Lei, Y.; Lin, J. A recurrent neural network based health indicator for remaining useful life prediction of bearings. Neurocomputing 2017, 240, 98–109.

- Nectoux, P.; Gouriveau, R.; Medjaher, K.; Ramasso, E.; Varnier, C. PRONOSTIA: An experimental platform for bearings accelerated degradation tests. In Proceedings of the IEEE International Conference on Prognostics and Health Management, Denver, CO, USA, 18–21 June 2012.

- Wang, B.; Lei, Y.; Li, N.; Li, N. A Hybrid Prognostics Approach for Estimating Remaining Useful Life of Rolling Element Bearings. IEEE Trans. Reliab. 2018, 69, 401–412.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).