1. Introduction

Perception systems for autonomous vehicles and robotic platforms have made rapid progress in recent years. Among the many emerging approaches, multimodal sensor fusion has become a prominent research direction [1,2], enabling systems to take advantage of the complementary strengths of various sensor modalities. RGB cameras offer detailed color and texture information, which is valuable for object classification under well-lit conditions. Thermal cameras capture heat signatures, making them effective in low light or visually obscured environments. Meanwhile, LiDAR sensors generate dense and accurate 3D point clouds, supporting precise spatial location and geometric reasoning regardless of ambient lighting. However, each sensor type also has inherent limitations [3,4]. RGB cameras are highly sensitive to illumination and may fail in darkness or in adverse weather. Thermal cameras often suffer from low spatial resolution and reduced effectiveness when temperature contrasts are weak. LiDAR sensors, though accurate, can struggle with certain material surfaces and exhibit sparsity at longer distances. These limitations have motivated the development of sensor fusion techniques to construct more robust and comprehensive perception systems [5,6,7,8,9,10]. Fusion methods are generally categorized into three types: early fusion, intermediate fusion, and late fusion. Early fusion integrates raw sensor data at the input level, which can lead to higher accuracy. Intermediate fusion balances accuracy and computational speed by fusing intermediate feature representations. Late fusion combines outputs at the decision level, offering simplicity. In recent years, combining multiple fusion strategies has also attracted attention.

To enhance the effectiveness of point cloud acquisition, we employ multiple LiDAR sensors with complementary characteristics, leveraging the specific strengths of each type [2,4]. While some LiDARs offer superior stability and performance in close-range environments, others are optimized for long-range sensing. Combining different LiDAR types enables the system to compensate for individual sensor limitations and helps mitigate the density imbalance of point clouds, ensuring high-resolution perception across both near and far ranges. However, deploying multiple LiDAR sensors in close proximity introduces the risk of sensor interference, where overlapping laser pulses may degrade measurement quality. These practical challenges underscore the need for a robust calibration strategy that not only ensures accurate spatial and temporal alignment, but also explicitly models the unique characteristics of each sensor to achieve coherent and reliable multi-LiDAR fusion. In addition, the integration of LiDAR with 360-degree RGB and thermal cameras represents a significant advancement over conventional narrow field-of-view systems. Panoramic imaging mitigates blind spots, providing omnidirectional perception ideal for complex urban scenarios [11,12,13]. Motivated by these benefits, we propose a temporally aware feature-level fusion approach that accumulates sequential LiDAR frames and aligns them with 360-degree images for robust multimodal perception.

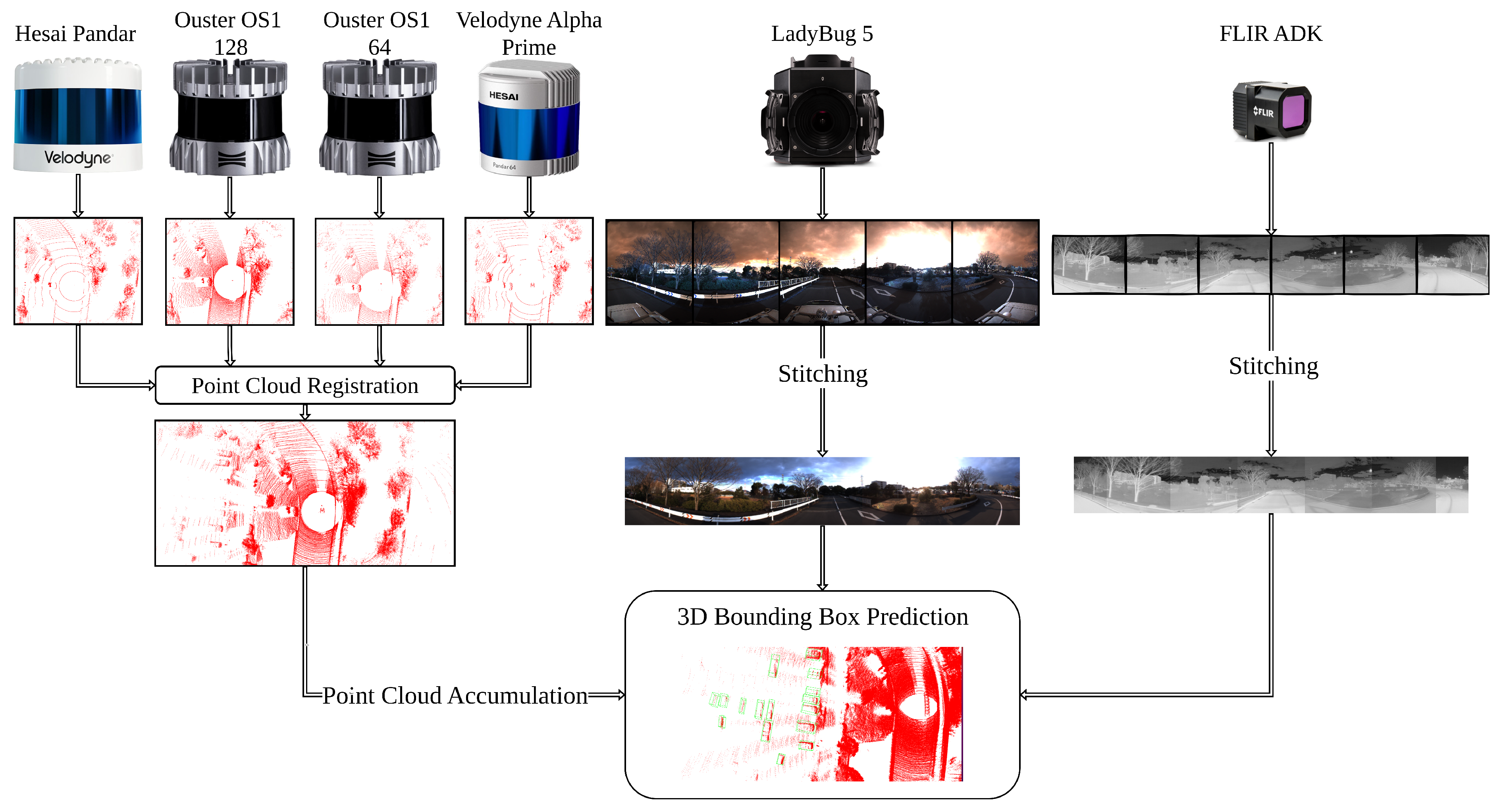

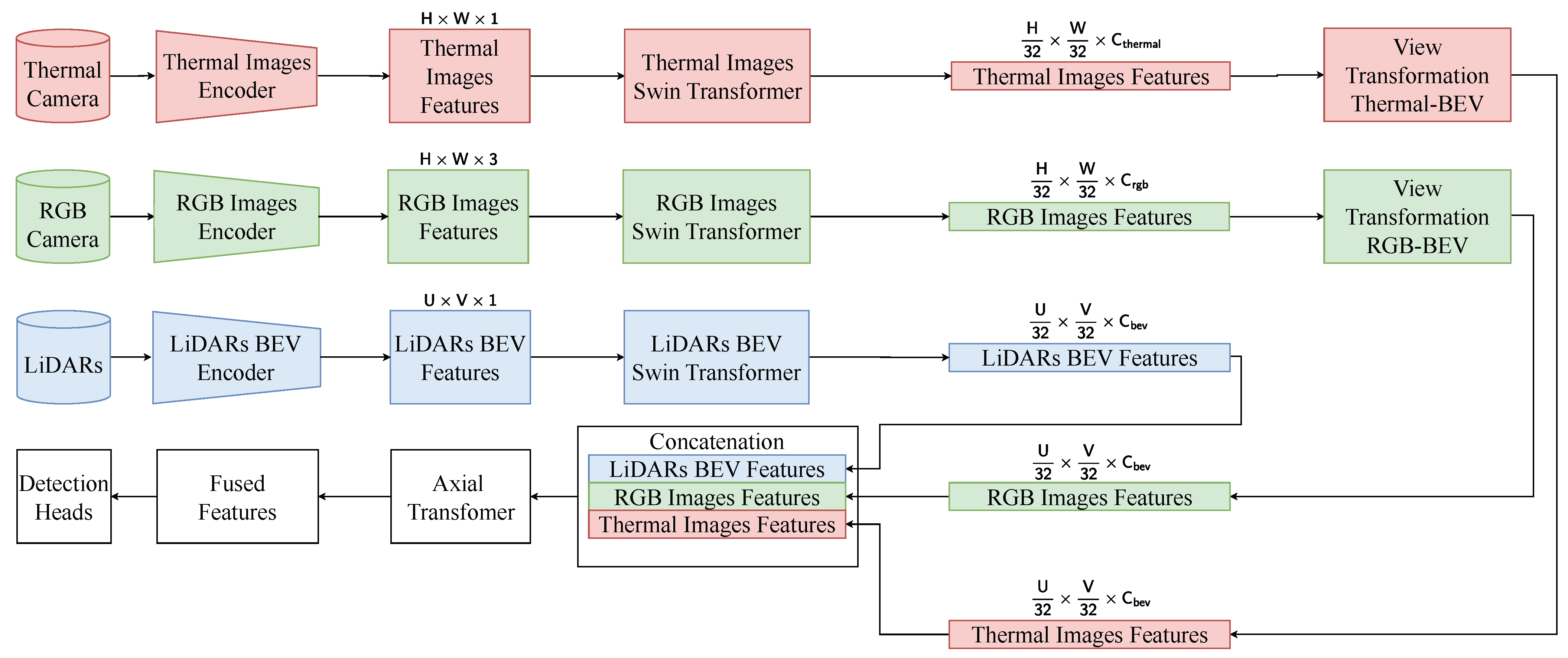

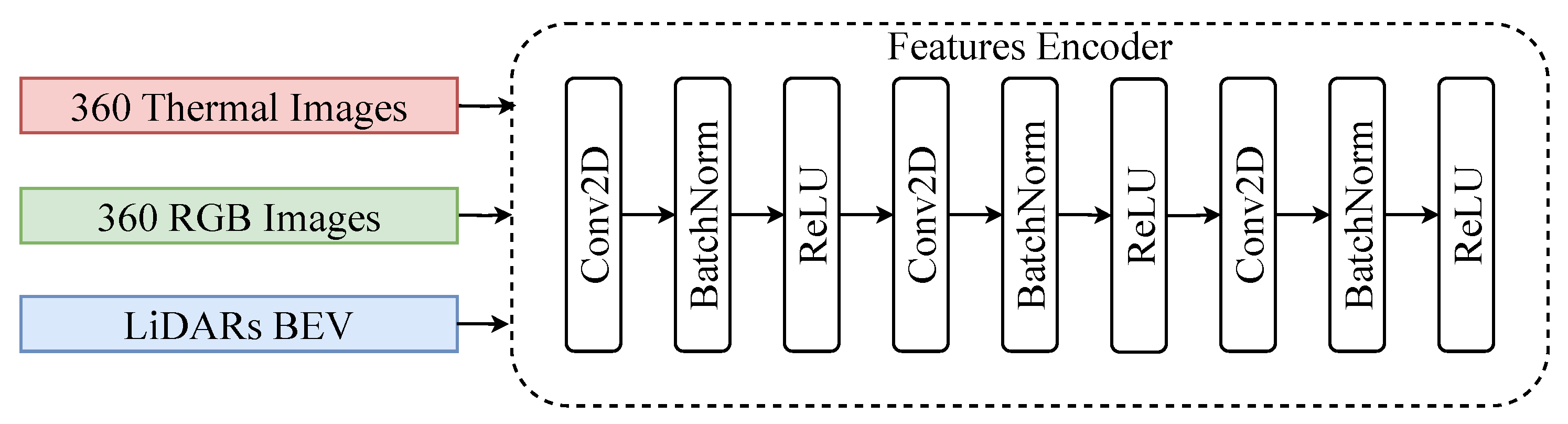

Figure 1 illustrates the overall architecture of the proposed system. Four LiDAR sensors are first calibrated to form a unified 3D acquisition system, which significantly increases the spatial coverage and density of point cloud data. In addition, a set of RGB cameras and a set of thermal cameras are stitched to form a 360-degree RGB camera and a 360-degree thermal camera, respectively. This 360-degree design ensures full environmental awareness from both visual and thermal perspectives. To further enhance spatial resolution and compensate for temporal sparsity or occlusions, multiple consecutive LiDAR frames are temporally accumulated. This accumulation increases point density, preserving critical geometry despite occlusions or material properties. The resulting fused LiDAR frames are then aligned with the 360-degree RGB and thermal images through geometric calibration. Finally, the aligned multimodal data are passed through a unified early-fusion and intermediate-fusion framework for object detection. This architecture allows the system to learn in two stages, contributing to robust and accurate object recognition. The main contributions of this work are summarized as follows:

- 1.

We propose a novel calibration strategy specifically designed to enhance alignment accuracy between multiple LiDAR sensors. By addressing both spatial discrepancies and temporal misalignments, this strategy improves global consistency in point cloud integration. It is particularly effective in setups involving wide fields of view or overlapping sensor coverage, ensuring that the fused point clouds maintain geometric fidelity and can be reliably used in object detection.

- 2.

We develop an enhanced multimodal fusion framework that jointly utilizes LiDAR point clouds, 360-degree RGB images, and 360-degree thermal images to achieve robust object detection in complex environments. Each modality contributes complementary information: LiDAR point clouds provide precise spatial geometry, RGB images capture rich texture and color cues, and thermal images ensure reliability under poor lighting or adverse weather. Our fusion approach is designed to fully exploit 360-degree perception while maintaining spatial and temporal consistency across the modalities. As a result, the system is resistant to sensor-specific limitations that can affect single-modality detection.

- 3.

This paper is structured as follows.

Section 2 provides a detailed overview of the state of the art in sensor fusion and calibration techniques, with a particular emphasis on approaches relevant to LiDAR and camera systems. This section serves as the foundation for understanding the technical motivations behind our proposed methods.

Section 3 introduces our novel calibration strategy, which addresses both LiDAR-to-LiDAR and LiDAR-to-camera alignment. We describe the methodology in detail and highlight its adaptability in dynamic and targetless settings.

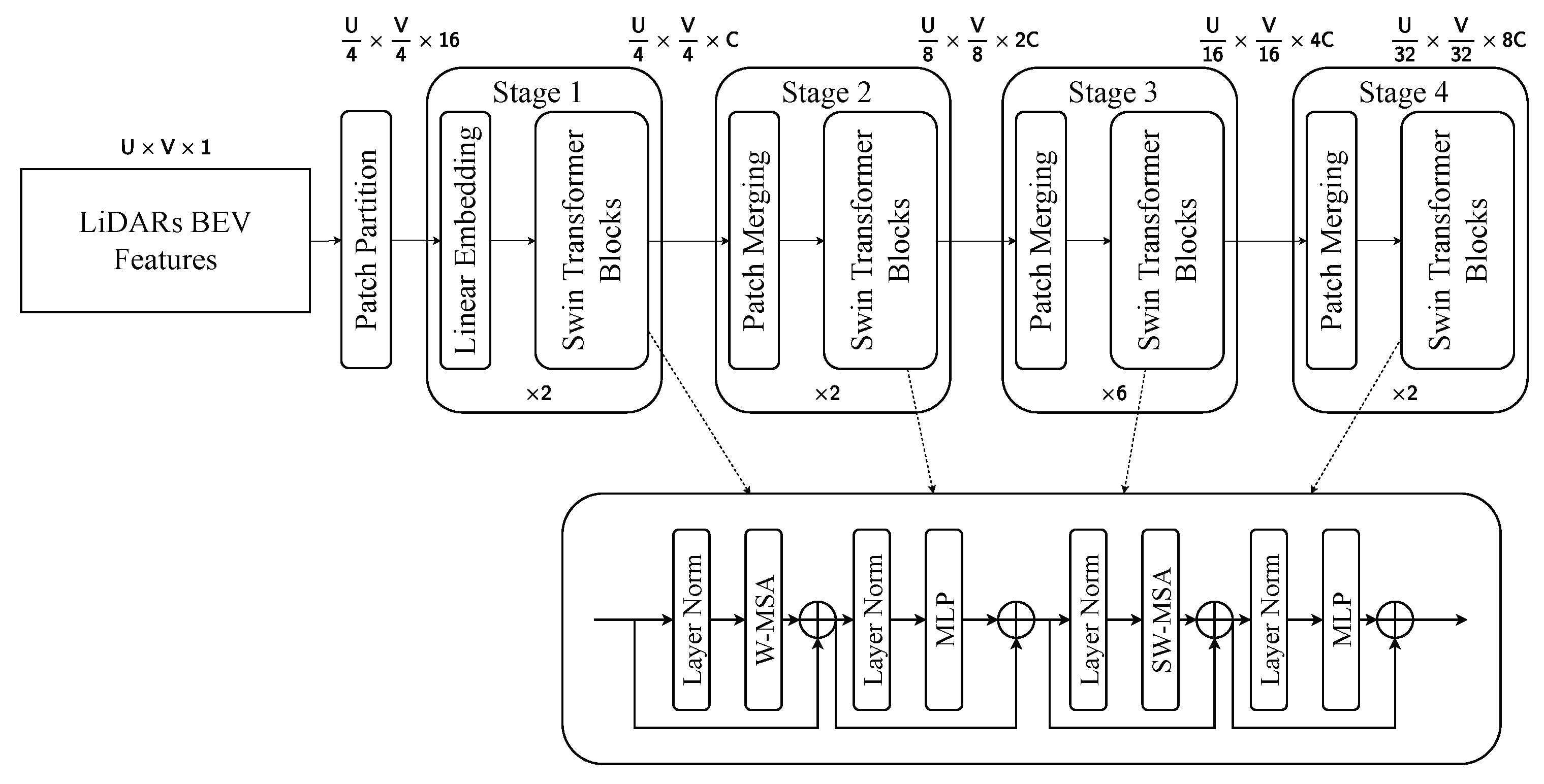

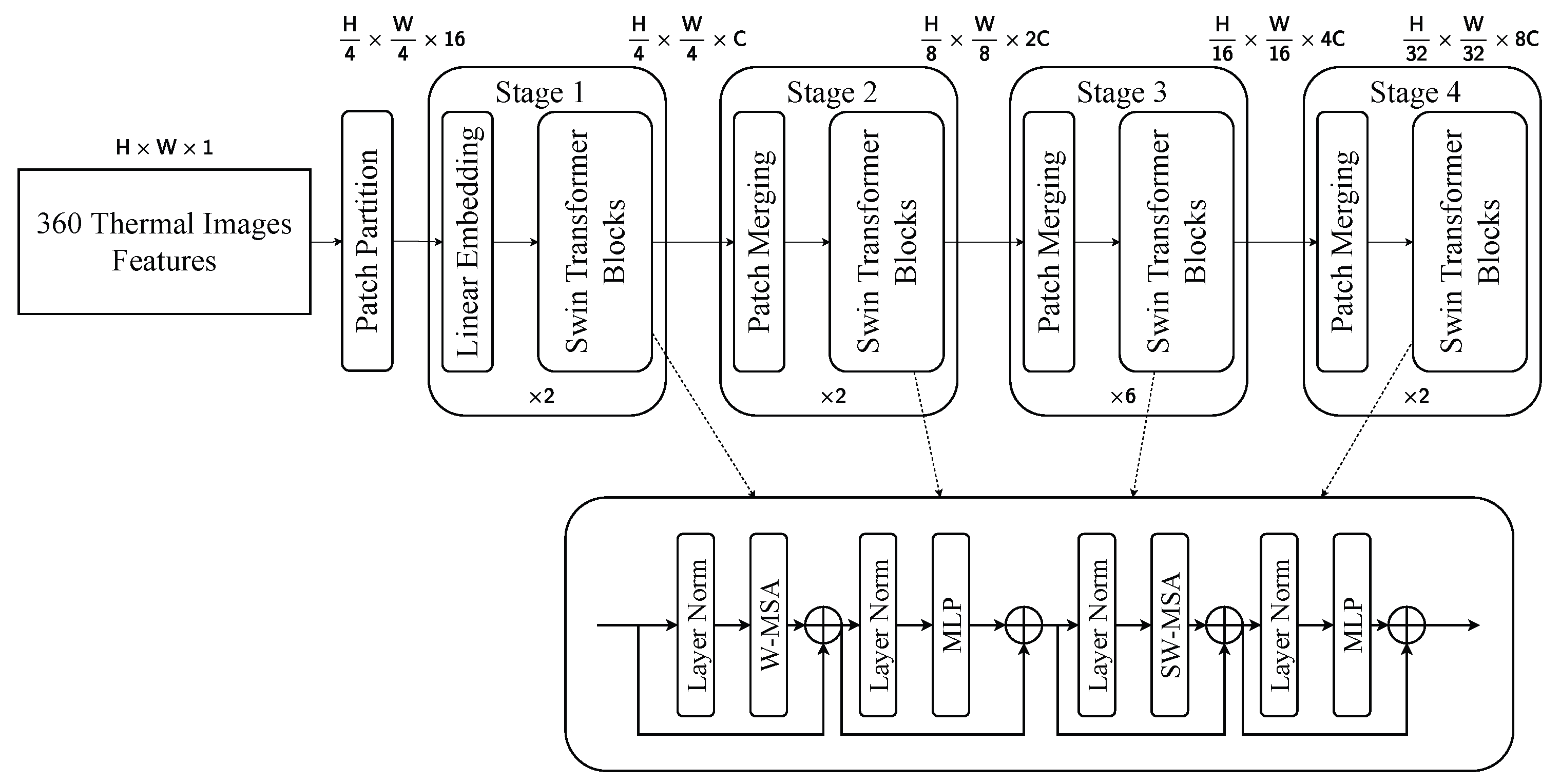

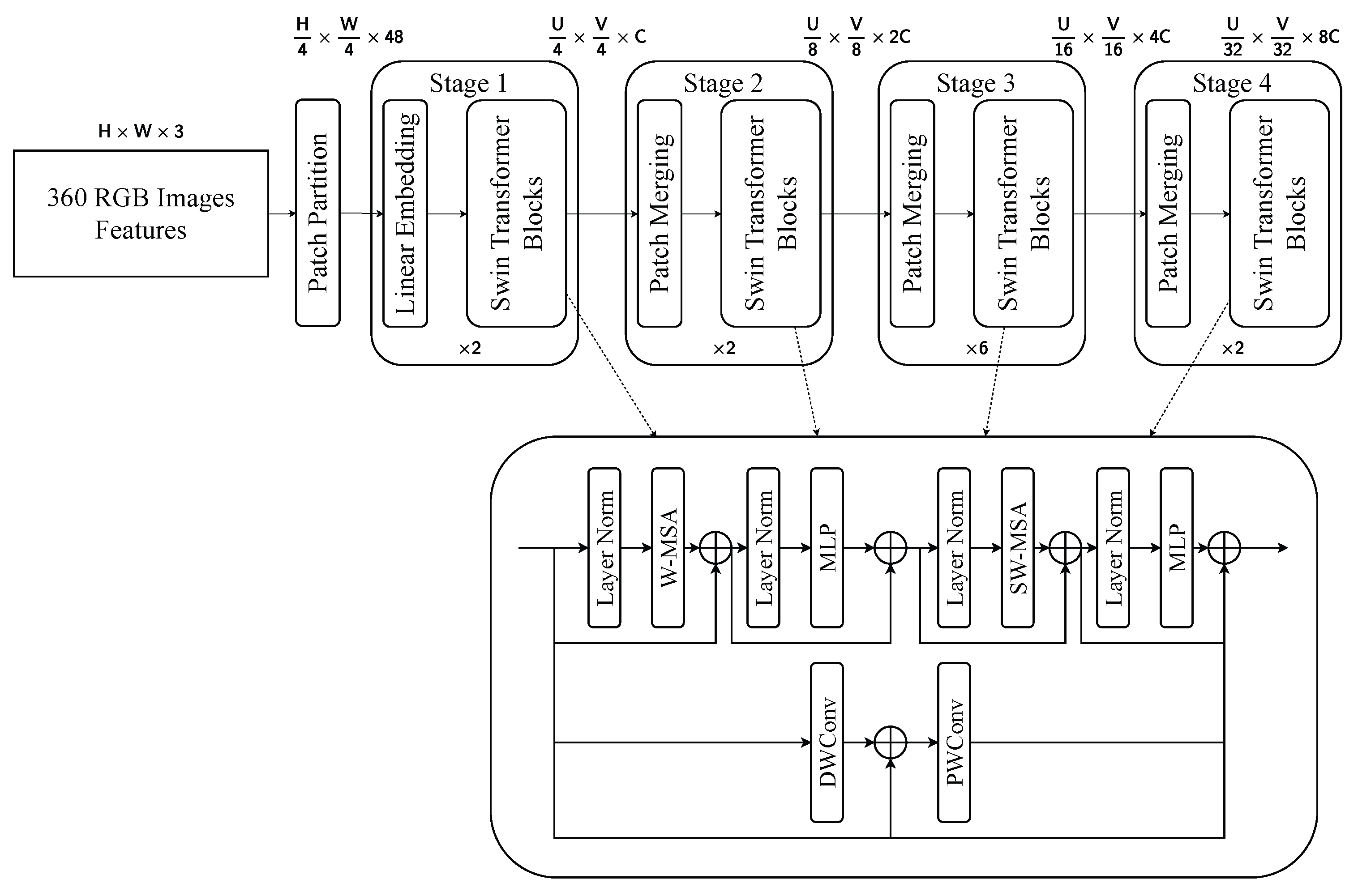

Section 4 outlines the architecture and design of our multimodal fusion framework for 3D object detection, emphasizing the integration of LiDAR point clouds, 360-degree RGB images, and thermal imaging.

Section 5 presents a detailed quantitative and qualitative evaluation of the proposed methods, including ablation studies and performance benchmarks.

Section 6 offers in-depth discussions on the implications of our findings, potential limitations, and directions for future research. Finally, Section 7 concludes the paper by summarizing our key contributions and highlighting the broader impact of our work on the field of autonomous driving and multimodal perception.

3. Multimodal Calibration

To enhance spatial coverage and reduce the occurrence of missing points caused by occlusions, material reflectivity, or limited sensor field of view, we employ a multi-LiDAR setup consisting of four different LiDAR sensors. Each LiDAR offers distinct advantages in resolution and vertical coverage. By strategically combining data from these complementary sensors, we effectively compensate for blind spots or sparse regions that would otherwise remain unobserved when relying on a single LiDAR. This configuration enables robust 3D scene reconstruction in complex urban environments [29].

In this paper, we used Ouster-128 LiDAR, Ouster-64 LiDAR, Velodyne Alpha Prime LiDAR, Hesai Pandar LiDAR [

30], six FLIR ADK cameras [

31], and the LadyBug 5 camera [

32] as shown in

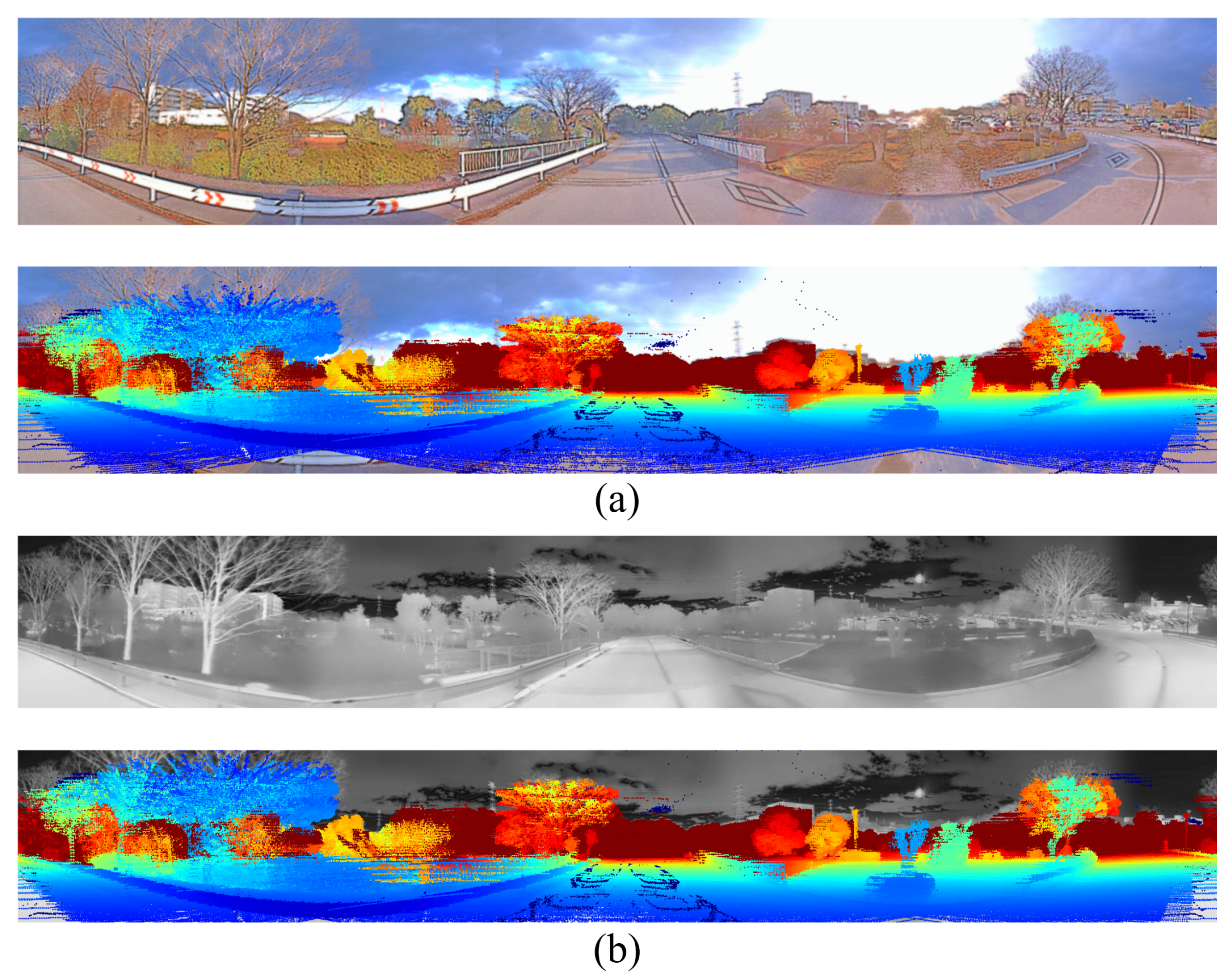

Figure 3 to record the dataset and evaluate the results. LadyBug camera and the FLIR ADK cameras were arranged in a unified structure to capture 360-degree images. The 360 RGB camera and the 360 thermal camera were combined, as shown in

Figure 4. For RGB images, we applied the Retinex algorithm to enhance color fidelity and improve visibility under varying lighting conditions. For thermal images, contrast-limited adaptive histogram equalization was employed to normalize information across the six thermal cameras, enhancing local contrast and preserving structural details.

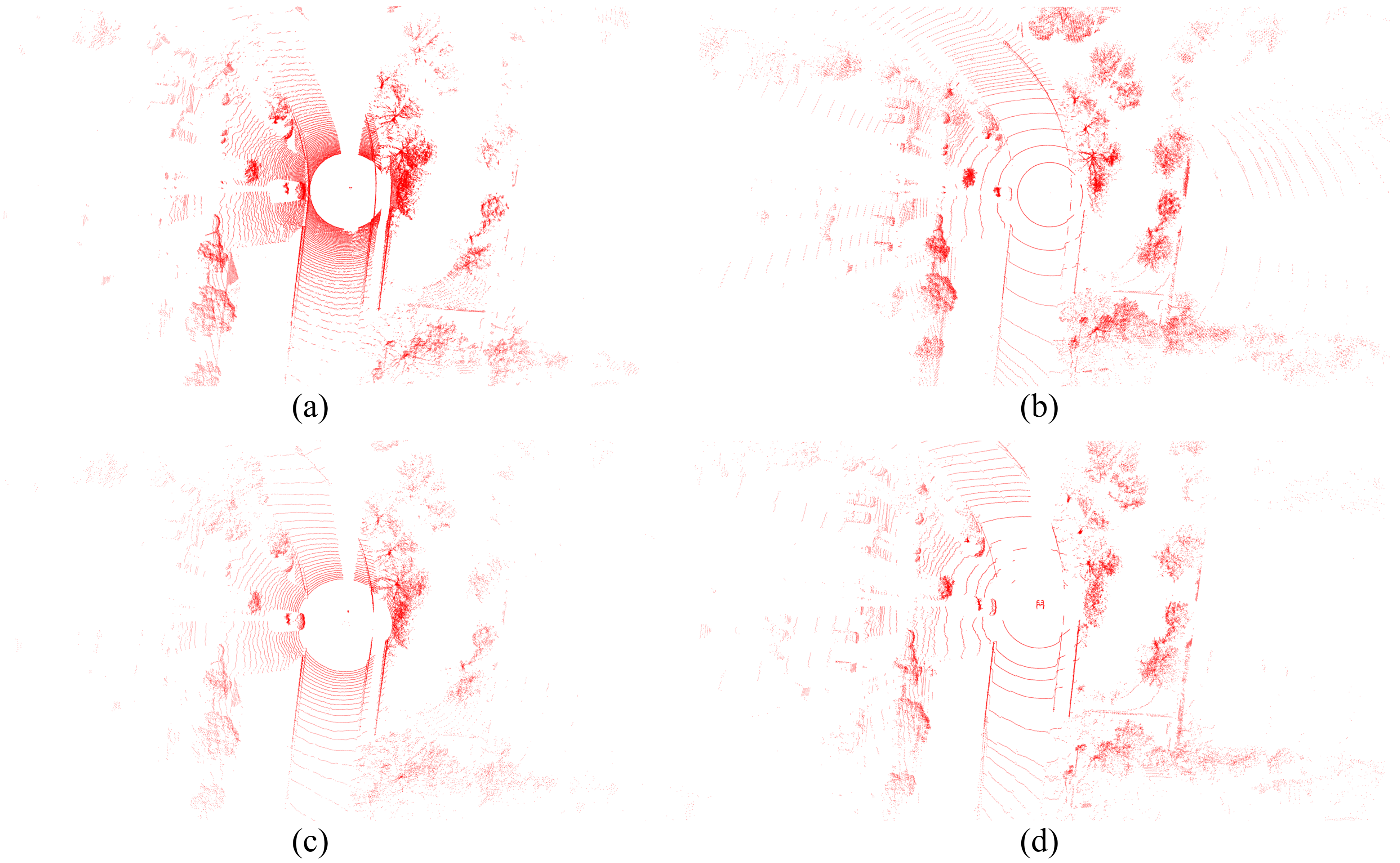

Figure 5 shows the result of projecting the point clouds of the four LiDAR sensors into 3D space. The Ouster OS1-128 LiDAR captures data through 128 vertical channels, providing exceptionally dense point clouds at close range. This high density is particularly effective for capturing fine-grained geometric details of objects at close or mid-distance, making it well suited for extracting structural features and precise object boundaries. However, one limitation of this sensor is its sensitivity to material and surface properties; objects with low reflectivity, unusual colors, or complex shapes may yield sparse or missing points. The Velodyne Alpha Prime also features 128 channels, but is optimized for long-range sensing. Its laser arrangement provides superior point coverage at extended distances, which is critical for early detection and tracking of far-field objects in autonomous driving. However, the point density of this LiDAR is quite low in close proximity, which can make it difficult to detect moving objects near the vehicle.

The Ouster OS1-64 is structurally similar to OS1-128, with a focus on high-density point capture in the close or middle range. Although it produces fewer points overall due to fewer channels, OS1-64 benefits from greater stability and lower data throughput, making it more robust in continuous operation. This sensor effectively complements OS1-128 by filling in the gaps when the latter suffers from point dropouts or signal loss. The Hesai Pandar LiDAR offers a distinct advantage in mid-range and long-range detection. Moreover, the Hesai Pandar is known for its high stability and consistent performance in prolonged operation.

By integrating these four LiDAR sensors, the system constructs a synthetic perception layer that significantly increases the density of spatial points and the robustness in all distance ranges, as shown in Figure 6. This multi-LiDAR configuration allows the system to generate rich and continuous 3D point clouds within a single frame, capturing detailed geometry from the near field to far field. As a result, the proposed system not only provides full 360-degree coverage but also adapts effectively to the requirements of diverse operational scenarios ranging from urban navigation to high-speed highway driving. In multi-LiDAR fusion scenarios, point cloud alignment across sensors with heterogeneous characteristics is essential for reliable 3D perception.

Iterative Closest Point (ICP) algorithms have been widely adopted as a fundamental solution for rigid registration of 3D point clouds, particularly in LiDAR-based perception systems. The ICP algorithm [33] is known for its conceptual simplicity, ease of implementation, and rapid convergence when the initial misalignment is small. However, its reliance on strict point-to-point correspondence makes it highly sensitive to outliers and sensor noise. To improve robustness, Generalized ICP (GICP) extends the classical formulation by modeling each point as a Gaussian distribution and incorporating local surface geometry through covariance estimation [34]. By blending point-to-point and point-to-plane metrics, GICP enhances convergence in partially overlapping or noisy scenes. However, it remains sensitive to inaccurate normal estimation and introduces considerable computational overhead, particularly when operating on dense point clouds. Several other variants have emerged to address the limitations of classical ICP. VICP improves robustness in dynamic environments but introduces a dependence on the reliability of external motion cues. Beyond these, learning-based methods such as PRNet and RPM-Net leverage deep neural networks to learn feature descriptors and soft correspondences, achieving superior robustness in complex environments. However, these methods require substantial training data and are often less interpretable or generalizable than their classical counterparts.

To address the limitations of classical ICP, we propose an improved registration method using the Log-Cosh loss function in the Lie group SE(3) [35]. The Log-Cosh loss function is resistant to outliers typically caused by sensor noise and calibration errors, and it maintains gradient stability, leading to improved convergence behavior. Furthermore, rather than linearizing the transformation in Euclidean space, we perform optimization directly on SE(3), allowing for more accurate and consistent pose updates. The proposed geometric formulation not only accelerates convergence but also achieves a more effective balance between robustness, accuracy, and computational efficiency. However, this gain in accuracy comes at the cost of increased per-iteration computational complexity. As a result, when implemented on the same hardware, the overall runtime may not differ significantly from that of traditional methods, despite the improved convergence results. Overall, our approach significantly enhances the stability and performance of multi-LiDAR registration, especially under challenging conditions such as noisy, sparse, or incomplete point cloud data.
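A minimal sketch of how such a registration loop could look is given below. It assumes nearest-neighbor correspondences, an iteratively reweighted Gauss-Newton step whose weights come from the derivative of the Log-Cosh loss, and a left-multiplicative update on SE(3). The function names, the `scale` parameter, and the brute-force matching are illustrative assumptions and not the authors' implementation.

```python
import numpy as np

def hat(w):
    """Skew-symmetric matrix such that hat(w) @ v equals the cross product w x v."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])

def exp_se3(xi):
    """Exponential map from a twist xi = (rho, phi) to a 4x4 matrix in SE(3)."""
    rho, phi = xi[:3], xi[3:]
    theta = np.linalg.norm(phi)
    K = hat(phi)
    if theta < 1e-8:  # first-order expansion for very small rotations
        R, V = np.eye(3) + K, np.eye(3) + 0.5 * K
    else:
        a = np.sin(theta) / theta
        b = (1.0 - np.cos(theta)) / theta**2
        c = (theta - np.sin(theta)) / theta**3
        R = np.eye(3) + a * K + b * (K @ K)
        V = np.eye(3) + b * K + c * (K @ K)
    T = np.eye(4)
    T[:3, :3], T[:3, 3] = R, V @ rho
    return T

def nearest(points, target):
    """Brute-force nearest neighbor in `target` for every row of `points`."""
    d2 = ((points[:, None, :] - target[None, :, :]) ** 2).sum(axis=-1)
    return target[d2.argmin(axis=1)]

def logcosh_icp(src, tgt, iters=30, scale=0.1, known_pairs=False):
    """Estimate T in SE(3) aligning src to tgt under a Log-Cosh loss (assumed form)."""
    T = np.eye(4)
    for _ in range(iters):
        y = src @ T[:3, :3].T + T[:3, 3]          # transformed source points
        q = tgt if known_pairs else nearest(y, tgt)
        r = y - q                                  # residual per pair
        n = np.linalg.norm(r, axis=1) / scale
        # IRLS weight from d/dn log(cosh(n)) = tanh(n): close to 1 for small
        # residuals and decaying like 1/n for large ones (outliers).
        w = np.where(n > 1e-12, np.tanh(n) / np.maximum(n, 1e-12), 1.0)
        H, g = np.zeros((6, 6)), np.zeros(6)
        for yi, ri, wi in zip(y, r, w):
            J = np.hstack([np.eye(3), -hat(yi)])   # d(exp(xi) y)/d xi at xi = 0
            H += wi * (J.T @ J)
            g += wi * (J.T @ ri)
        xi = np.linalg.solve(H + 1e-9 * np.eye(6), -g)
        T = exp_se3(xi) @ T                        # update stays on SE(3)
        if np.linalg.norm(xi) < 1e-10:
            break
    return T
```

Calling `logcosh_icp(src, tgt)` with two `(N, 3)` arrays returns a 4x4 homogeneous transform. The `known_pairs=True` switch skips matching and is only meant for checking the solver on synthetic data where row `i` of both arrays is the same physical point.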

Let

be the source point clouds and

be the target point clouds. The goal is to estimate a rigid transformation as in Equation (1).

The residual vector for each pair of points is defined as in Equation (2):

Using this residual, Equations (3)–(5) together define the Log-Cosh ICP optimization framework, where the classical squared loss function is replaced by the Log-Cosh function, and the transformation is updated iteratively.

After each iteration,

is updated via
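One formulation consistent with the description above is sketched here. The symbols $\mathbf{p}_i$, $\mathbf{q}_i$, $\mathbf{T} = (\mathbf{R}, \mathbf{t})$, $\mathbf{r}_i$, the scale $s$, and the twist $\boldsymbol{\xi}$, as well as the left-multiplicative update, are assumptions chosen for illustration, and the exact form of Equations (1)–(5) may differ:

```latex
\begin{align}
\mathbf{T}^{*} &= \arg\min_{\mathbf{T} \in SE(3)} \sum_{i=1}^{N} \rho\big(\lVert \mathbf{r}_i(\mathbf{T}) \rVert\big), \\
\mathbf{r}_i(\mathbf{T}) &= \mathbf{R}\,\mathbf{p}_i + \mathbf{t} - \mathbf{q}_i, \\
\rho(r) &= \log\cosh\!\left(\frac{r}{s}\right), \qquad
\frac{d\rho}{dr} = \frac{1}{s}\tanh\!\left(\frac{r}{s}\right), \\
\mathbf{T} &\leftarrow \exp\!\big(\boldsymbol{\xi}^{\wedge}\big)\,\mathbf{T}, \qquad \boldsymbol{\xi} \in \mathbb{R}^{6}.
\end{align}
```

For small residuals $\log\cosh(r/s) \approx r^{2}/(2s^{2})$, so the loss behaves like the squared loss, while for large residuals it grows like $|r|/s - \log 2$, which bounds the influence of outliers. The derivative $\tanh(r/s)/s$ is smooth and bounded, which is one way to read the gradient stability mentioned above.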

In this study, we build on our previous research [36,37]. These studies contribute to a feature-based, targetless calibration framework for multimodal sensor systems. Unlike conventional target-based approaches, which rely on artificial markers or controlled environments, the targetless paradigm demonstrates superior adaptability to unstructured and dynamic scenes, eliminates the logistical overhead of target deployment, and enhances generalizability across varying sensor configurations.

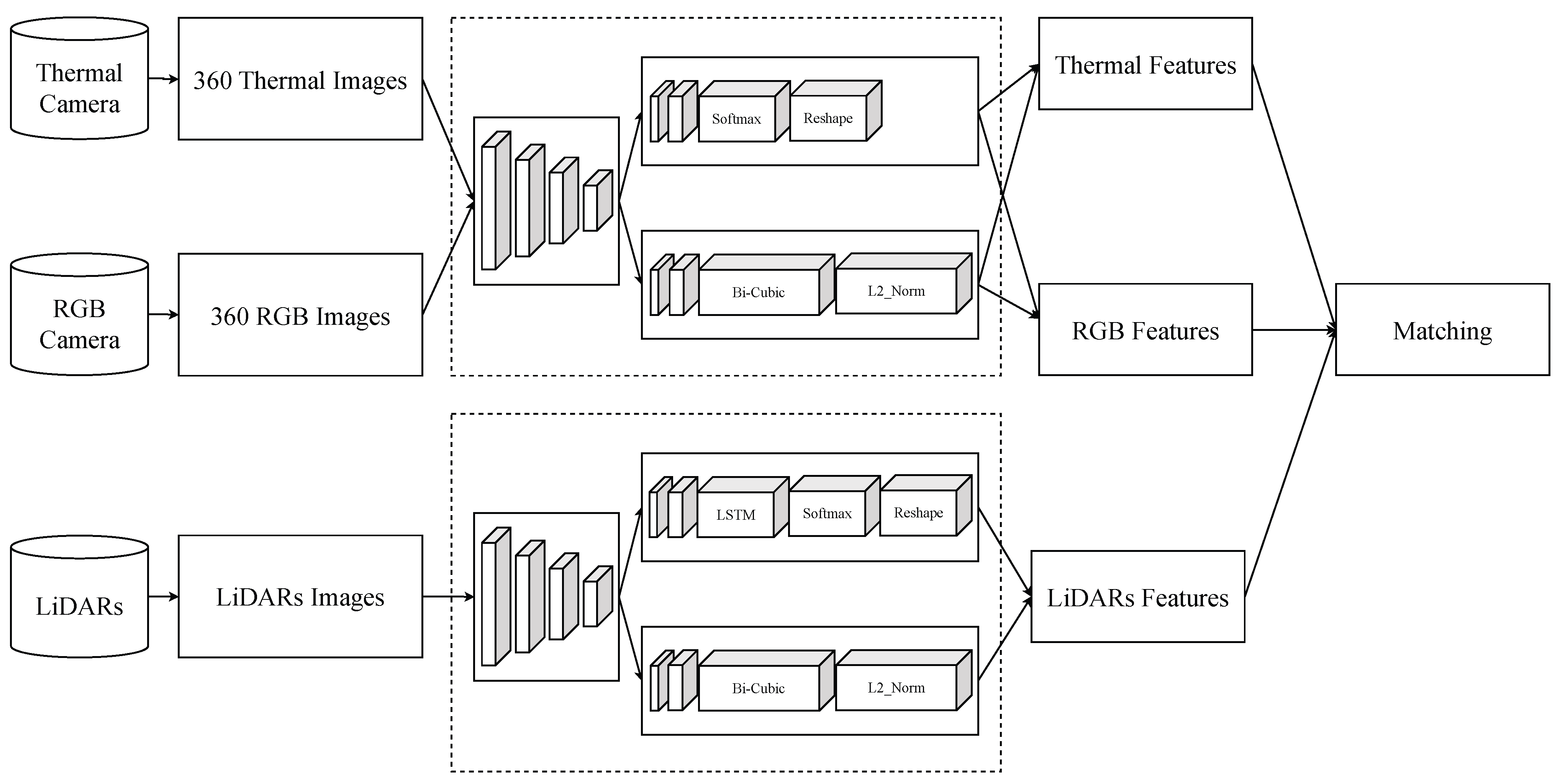

Our calibration pipeline begins with the extraction of salient features from both 2D images and 3D point clouds using the SuperPoint detector [38], which provides robust and repeatable key points across different modalities, as in Figure 7. The features of the LiDAR images, 360 RGB images, and 360 thermal images are matched by the Euclidean distance, as in Equation (6), and the histogram of oriented gradients, as in Equation (7). RGB images are processed with Retinex decomposition to enhance fine details in poorly lit areas without compromising natural colors [39]. Outliers are then filtered using the RANSAC algorithm [40] with the Mahalanobis distance [41], as in Equation (8).

Q is the set of points,

is the vector of points,

is the mean vector of the set, and

S is the covariance matrix of the set. The calibration results are shown in Figure 8.
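As a rough illustration of the Mahalanobis gating step, the sketch below computes the distance of each point to the mean of its set using the sample covariance, then keeps points under a threshold. The function names, the regularization term `eps`, and the threshold value are hypothetical, and how this distance is combined with RANSAC in the actual pipeline is not specified here.

```python
import numpy as np

def mahalanobis(points, eps=1e-9):
    """Mahalanobis distance of each row of `points` to the mean of the set."""
    points = np.asarray(points, dtype=float)
    mu = points.mean(axis=0)                       # mean vector of the set
    S = np.cov(points, rowvar=False)               # covariance matrix of the set
    S_inv = np.linalg.inv(S + eps * np.eye(points.shape[1]))
    diff = points - mu
    d2 = np.einsum("ij,jk,ik->i", diff, S_inv, diff)
    return np.sqrt(np.maximum(d2, 0.0))

def inlier_mask(points, threshold=3.0):
    """Boolean mask of points whose distance is within `threshold` (assumed value)."""
    return mahalanobis(points) <= threshold
```

In a matching context, `points` could hold the 2D reprojection residuals of candidate correspondences, so that matches far from the bulk of the residual distribution are discarded.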

5. Experiment Results

To evaluate the effectiveness of our proposed method, we performed experiments on a comprehensive dataset comprising synchronized RGB images, thermal images, and LiDAR point clouds. The RGB imagery was captured using five out of six cameras from the Ladybug5 system, each with a horizontal field of view (FoV) of 90 degrees, and thermal images were acquired from six FLIR ADK cameras, each offering a 75-degree FoV. The two camera systems were arranged in a circular configuration to approximate panoramic thermal coverage.

The LiDAR data were collected from Ouster 128, Ouster 64, Velodyne Alpha Prime, and Hesai Pandar. The Ouster OS1-128 and OS1-64 devices have vertical FoVs ranging from to degrees, with 128 and 64 laser channels, respectively. The Velodyne Alpha Prime is configured with 128 channels and a vertical FoV ranging from to degrees, making it suitable for long-range high-resolution sensing. Meanwhile, Hesai Pandar64 provides 64 laser channels and a vertical FoV of approximately to degrees, offering balanced performance for mid-range perception. In all the evaluation sections, we only evaluated the accuracy of car detection.

The input data consist of LiDAR point clouds with 3,487,243 points per frame, 360 RGB images with a resolution of , and 360 thermal images with a resolution of . In the model, the Adam optimizer uses a learning rate of 0.0001, a momentum of 0.9, and a weight decay of 0.01. The model is trained for 80 epochs with a batch size of 16. This study was conducted with an RTX 3050 GPU.

5.1. Comparison with State-of-the-Art Registration Methods

We first assessed the precision of our calibration procedure by comparing it with several calibration methods. These methods are applied in LiDAR camera calibration and registration. For comparison, all methods were initialized with the same sensor configuration. To quantitatively assess alignment quality, we used two metrics: Root Mean Square Error [52] and Recall [53].

- 1.

Root Mean Square Error (RMSE) measures the average Euclidean distance between the corresponding pairs of points after registration. It serves as an indicator of how well the transformed source point clouds align with the target. In our implementation, these correspondences are not manually annotated, but are established automatically during the registration process. Specifically, the algorithm performs iterative nearest-neighbor matching to determine point-to-point correspondences, which are then refined throughout the optimization. Only inliers, points within a predefined distance threshold, are used in the RMSE computation to ensure that the metric reflects a meaningful alignment accuracy. A lower RMSE indicates a more precise and reliable transformation.

- 2.

Recall measures the ability of the detector to find all relevant objects in the image. High recall means that the model detects most of the actual objects, even if some detections are inaccurate or redundant. A True Positive (TP) result indicates a correct detection and a False Negative (FN) result indicates a ground truth object that is not detected.
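The two metrics can be sketched as follows, assuming the usual definitions: RMSE as the root of the mean squared nearest-neighbor distance over inlier pairs, and Recall as TP / (TP + FN). The function names and the `max_dist` parameter are illustrative, and the brute-force matching is only suitable for small point sets.

```python
import numpy as np

def inlier_rmse(src, tgt, T, max_dist):
    """RMSE over nearest-neighbor pairs closer than `max_dist` after applying T to src."""
    src, tgt = np.asarray(src, dtype=float), np.asarray(tgt, dtype=float)
    moved = src @ T[:3, :3].T + T[:3, 3]
    # Distance from every transformed source point to its nearest target point.
    d = np.linalg.norm(moved[:, None, :] - tgt[None, :, :], axis=-1).min(axis=1)
    inliers = d[d < max_dist]
    if inliers.size == 0:
        return float("nan")
    return float(np.sqrt(np.mean(inliers ** 2)))

def recall(tp, fn):
    """Recall = TP / (TP + FN); returns 0.0 when there are no ground-truth objects."""
    total = tp + fn
    return tp / total if total else 0.0
```

For example, `inlier_rmse(src, tgt, np.eye(4), max_dist=0.5)` evaluates the residual misalignment of two clouds that are already expressed in the same frame.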

Table 1 presents the registration performance of various methods in a dataset consisting of 100 frame pairs collected under normal weather conditions. The results show that our proposed method achieves the highest Recall and the lowest RMSE among the compared methods, indicating a highly accurate and consistent alignment. Compared to classical and modern methods, our method outperforms all others in both accuracy and geometric alignment quality. Overall, the results confirm that not only is our model theoretically robust, but it also delivers superior practical performance in 3D registration tasks under standard environmental conditions.

5.2. Comparison with State-of-the-Art Detectors

To thoroughly and rigorously evaluate the effectiveness and robustness of our proposed system, we conducted comprehensive comparisons with several state-of-the-art approaches that have been widely applied in object detection research. The methods selected for this comparison reflect their prominence in current research and their practical applicability in single- and multimodal sensing systems. All evaluated methods and our proposed approach are consistently trained, validated, and tested using the same experimental setup, dataset, and sensor configurations to ensure the fairness and integrity of comparative analysis. Furthermore, we consider that a considerable portion of previous research has focused primarily on evaluating detection algorithms under optimal environmental conditions with clear visibility and minimal disturbances. Such an idealized scenario may not sufficiently represent the challenges encountered in practical deployments. To address this limitation in a comprehensive way, we expand our evaluation approach into two distinct phases to fully reflect realistic conditions.

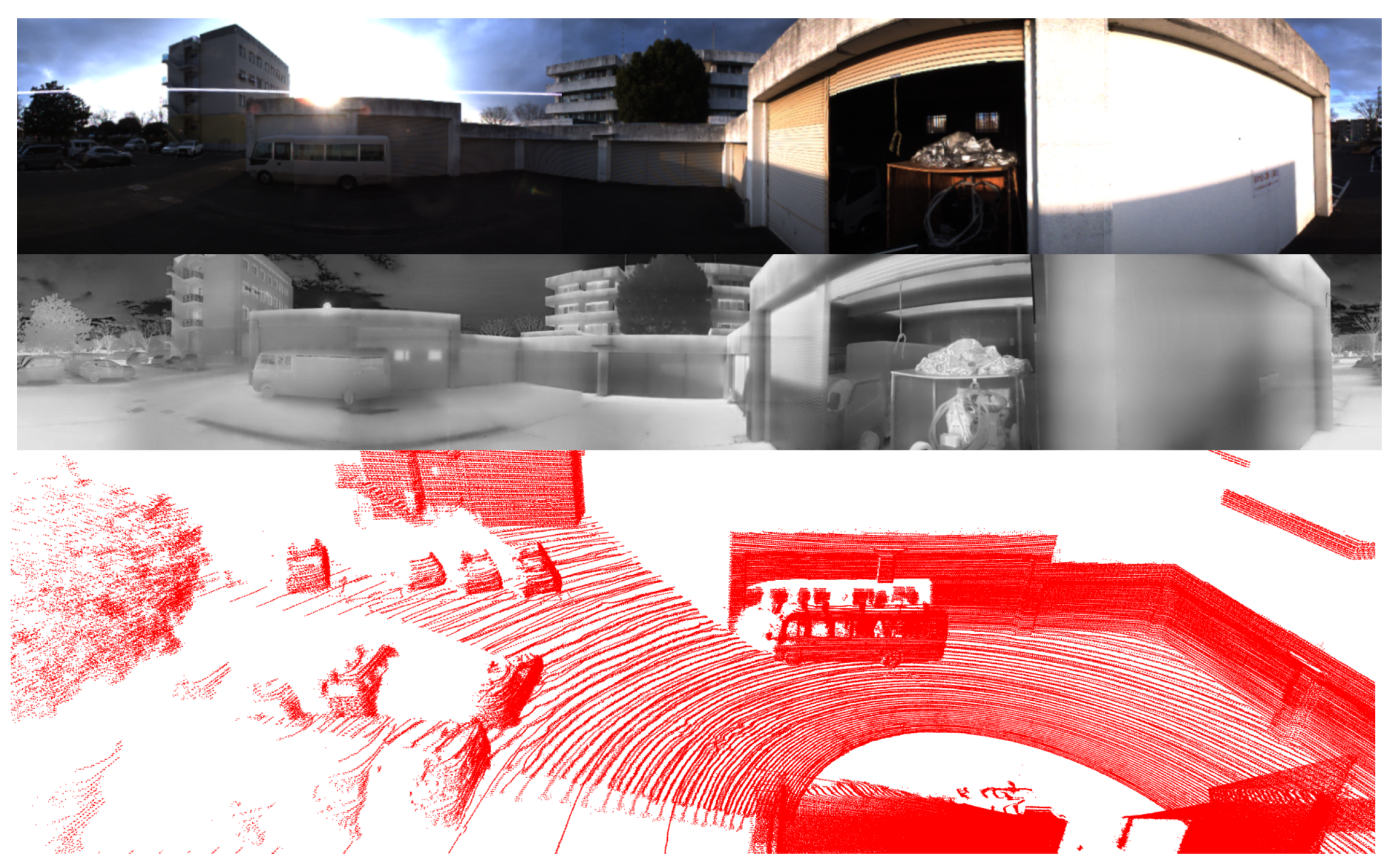

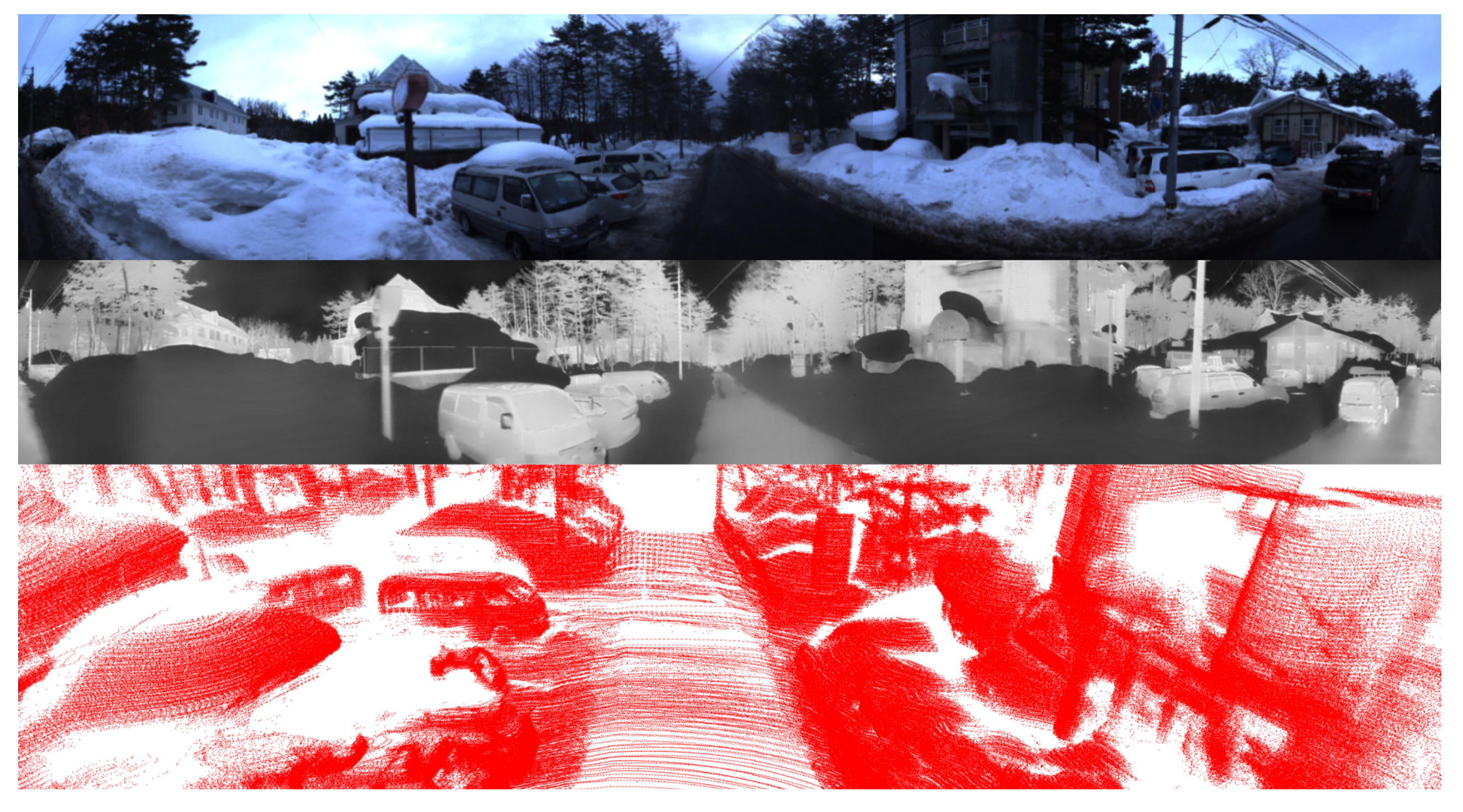

In the first evaluation, we performed experiments using datasets collected under normal environmental conditions characterized by clear weather and ideal lighting. This dataset represents a scenario in which most multimodal object detection algorithms typically demonstrate high performance and reliability. In contrast, the second and third evaluations involve rigorous testing using datasets acquired under adverse environmental conditions, including scenarios with reflective surfaces, thermal interference, and poor visibility due to snow or nighttime darkness. These challenging circumstances significantly impact sensor effectiveness and pose notable difficulties for detection algorithms. By implementing this two-phase evaluation framework, we aim to systematically assess and highlight the performance, strengths, and limitations of each fusion method in varying operational scenarios. To quantify and objectively analyze detection results, we use standard performance metrics, including Intersection over Union and Average Precision, so that our findings are both reliable and widely comparable within the research community:

- 1.

Intersection over Union (IoU) [59] is a measure of the overlap between the predicted bounding box and the ground truth bounding box. A detection is considered correct if the IoU between the predicted and ground truth box exceeds a predefined threshold. The area of overlap is the shared area between the predicted box and the ground truth box. The area of union is the total area covered by both boxes.

- 2.

The Average Precision (AP) [53] in Equations (14), (16) and (17) is a measure of the general accuracy of the model in detecting objects, calculated based on the Precision–Recall curve at various prediction thresholds. The higher and more stable the prediction, the larger the AP. There are two types of AP commonly used in 3D object detection problems: $AP_{BEV}$ and $AP_{3D}$. $AP_{BEV}$ compares the predicted box and the ground truth box in terms of location and area in a two-dimensional (bird's-eye view) plane. $AP_{3D}$ compares the predicted box and the ground truth box in terms of location and volume in a three-dimensional space. A False Positive (FP) result indicates a predicted object that does not correspond to any ground truth object.
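The two metrics above can be illustrated with a short sketch. It makes simplifying assumptions: boxes are axis-aligned (3D detection benchmarks typically use rotated boxes), and AP is taken as the area under the precision-recall curve with all-point interpolation, which may differ from the sampling scheme used in this paper. All function names are hypothetical.

```python
import numpy as np

def iou_axis_aligned(a, b):
    """IoU of two axis-aligned boxes given as (mins..., maxs...): 4 values in 2D, 6 in 3D."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    d = a.size // 2
    lo = np.maximum(a[:d], b[:d])
    hi = np.minimum(a[d:], b[d:])
    inter = np.prod(np.clip(hi - lo, 0.0, None))   # overlap area (2D) or volume (3D)
    union = np.prod(a[d:] - a[:d]) + np.prod(b[d:] - b[:d]) - inter
    return float(inter / union) if union > 0 else 0.0

def average_precision(scores, is_tp, num_gt):
    """Area under the precision-recall curve using all-point interpolation."""
    order = np.argsort(-np.asarray(scores, dtype=float))
    tp = np.asarray(is_tp, dtype=bool)[order]
    cum_tp, cum_fp = np.cumsum(tp), np.cumsum(~tp)
    rec = cum_tp / max(num_gt, 1)                  # TP / (TP + FN)
    prec = cum_tp / (cum_tp + cum_fp)              # TP / (TP + FP)
    mrec = np.concatenate([[0.0], rec, [1.0]])
    mpre = np.concatenate([[0.0], prec, [0.0]])
    # Replace precision by its running maximum from the right (the PR envelope).
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]
    idx = np.flatnonzero(mrec[1:] != mrec[:-1])
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))
```

Here `is_tp` marks each detection as a true positive when its IoU with an unmatched ground truth box exceeds the chosen threshold; using the 2D form of `iou_axis_aligned` for that test corresponds to the BEV variant and the 3D form to the volumetric variant.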

We will evaluate and compare the algorithm’s performance across three distinct environmental conditions. The first scenario corresponds to typical conditions without significant environmental interference. The second scenario involves data collection in a snowy environment. The final scenario is set at night. Under normal conditions, both LiDAR sensors and cameras operate optimally, facilitating accurate and straightforward object detection using any type of sensor. In the snowy environment, LiDAR sensors and thermal cameras become more susceptible to interference from their surroundings, whereas RGB cameras maintain relatively stable performance. In contrast, at night, RGB cameras experience significant performance degradation compared to LiDAR and thermal cameras. Conducting experiments across multiple environmental scenarios allows us to thoroughly evaluate and validate the robustness and effectiveness of our proposed method. We evaluated the performance with as .

5.2.1. Evaluation Under Normal Conditions

Table 2 presents a comparative analysis of several methods under normal environmental conditions. In this scenario, sensors operate at their full potential, without interference from weather conditions or variations due to time of day. An illustrative example is provided in Figure 17, which highlights the clarity and high fidelity of the captured data. Any noise or point cloud distortions predominantly originate from the inherent properties of LiDAR systems when they interact with specific materials or object geometries, rather than from external environmental factors.

5.2.2. Evaluation Under Snowy Daytime Conditions

In Table 3, we present a comparative evaluation of various methods under snowy conditions. In this experiment, data acquisition was carried out during the daytime, enabling RGB cameras to collect high-quality imagery, as exemplified in Figure 18. However, the snowy environment introduced significant interference to both LiDAR sensors and thermal cameras. Noise points are generated by substantial laser light reflection from snow and ice. This phenomenon significantly diminishes the quality of point cloud data, thus impairing the precision and accuracy of object detection tasks utilizing LiDAR sensors. In addition, the cold environment associated with snow induces thermal uniformity, thereby reducing the thermal contrast between objects and surroundings. Among the sensor modalities evaluated, RGB cameras generally experience the least degradation in performance, as they preserve essential visual attributes such as color, shape, and texture. Although the predominantly white background created by snow may reduce contrast, it typically does not lead to significant deterioration in detection performance, particularly compared to the challenges encountered by LiDAR.

The results shown in Table 3 clearly illustrate the advantage of incorporating RGB cameras compared to LiDAR-only approaches. LiDAR-only methods experience substantial performance declines under snowy conditions due to environmental interference. In contrast, methods that employ a combination of LiDAR and RGB cameras exhibit stability in both BEV and 3D object detection. Generally, in the snowy conditions evaluated here, the integration of RGB cameras emerges as the most impactful strategy to improve the overall 3D object detection reliability and accuracy. Compared with existing approaches that operate on a single frame, our proposed method demonstrates noticeable improvements in detection performance under snow conditions. Moreover, the presence of outliers is significantly reduced across varying distances due to the comprehensive spatial coverage provided by the four LiDAR sensors at close, medium, and long ranges.

5.2.3. Evaluation Under Snowy Nighttime Conditions

In this evaluation, we compare the performance of various methods on a snowy night, as reported in Table 4. In such environments, the quality of images captured by RGB cameras deteriorates significantly due to the absence of natural illumination, even when image enhancement tools such as Retinex are used, as shown in Figure 19. As a result, the detection performance that relies on RGB data becomes unreliable. Under these challenging lighting conditions, LiDAR sensors and thermal cameras demonstrate clear advantages. LiDAR sensors operate independently of ambient light and thus remain stable and reliable at night. The absence of sunlight minimizes background interference, leading to cleaner point clouds with reduced noise levels.

Thermal cameras also perform more effectively at night, as thermal contrast between objects and the surrounding environment tends to be greater in the absence of solar radiation. This increased contrast improves the clarity and distinctiveness of thermal signatures, allowing thermal cameras to detect objects with greater precision. Although cold temperatures, especially during winter, can reduce the thermal difference between objects and the environment, thermal imaging still generally outperforms RGB imaging under these conditions. Although RGB cameras may still be usable at night when supported by artificial lighting, their performance remains inconsistent and prone to errors. In contrast, fusion-based approaches that integrate LiDAR with thermal imaging exhibit stronger robustness and reliability. The performance difference between systems with and without RGB cameras becomes less significant at night.

Similarly to the above situations, the use of multiple LiDAR sensors offers substantial benefits by increasing point cloud density and further reducing spatial noise. In low light conditions, where sunlight interference is absent, all four LiDAR sensors tend to operate more stably, producing cleaner and more consistent point cloud data. The denser and more reliable point distribution improves the system’s capacity for accurate spatial perception. The experimental results clearly demonstrate that our approach, when supported by multiple LiDAR sensors, outperforms several existing methods.

5.3. Ablation Studies

In the previous sections, we compared our proposed method with several existing approaches under different conditions. Although that comparison provides a broad overview of performance, the ablation studies conduct a more focused and detailed analysis to further demonstrate the effectiveness and robustness of our method.

5.3.1. Evaluation of Effectiveness of LiDAR Sensors at Different Distances

To further understand the performance and limitations of different LiDAR configurations, we performed a distance-based evaluation of 3D object detection. In real-world autonomous systems, objects appear in varying ranges from the sensor, and the effectiveness of a LiDAR can vary significantly with distance because of differences in resolution, point density, and sensor placement. Therefore, it is essential to assess whether sensor fusion strategies can maintain detection quality in the near, mid, and far ranges.

Table 5 presents the 3D Average Precision measured in distance intervals.

The configuration using four LiDAR sensors consistently achieves the best performance across all distance intervals. This confirms that multisensor fusion can effectively combine the complementary strengths of each individual sensor while reducing their limitations, offering broader spatial coverage and more robust environmental representation. Generally, instead of relying on a single sensor, combining four LiDAR sensors demonstrates clear advantages by improving detection performance across all spatial ranges.
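A distance-based evaluation of this kind needs objects to be grouped by range before a metric is computed per group. The sketch below shows one way to do that grouping, assuming planar distance from the sensor origin; the function name and the interval edges in the usage note are hypothetical and are not the intervals used in Table 5.

```python
import numpy as np

def split_by_range(centers, edges):
    """Group object indices by planar (x, y) distance from the sensor origin.

    `edges` is an increasing list of interval boundaries in meters. Group k holds
    objects with edges[k-1] <= distance < edges[k]; the first group is everything
    below edges[0] and the last group is everything at or beyond edges[-1].
    """
    centers = np.asarray(centers, dtype=float)
    dist = np.linalg.norm(centers[:, :2], axis=1)
    bin_ids = np.digitize(dist, edges)
    return [np.flatnonzero(bin_ids == k) for k in range(len(edges) + 1)]
```

With, for instance, `edges=[20, 40]`, the three returned index arrays select near, mid, and far objects, and a detection metric such as 3D AP can then be computed on each subset separately.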

5.3.2. Evaluation of Effectiveness of Modalities

To examine the contribution of individual sensing modalities under adverse conditions, we evaluated 3D detection performance using different combinations of LiDAR, RGB camera, and thermal camera. Specifically, we consider scenarios under snowfall and nighttime, where perception becomes particularly challenging due to reduced visibility.

Table 6 presents the Average Precision under these conditions for various configurations of modality.

The results indicate that using only LiDAR, or selectively disabling either the RGB or thermal branch, still produces acceptable detection outputs in some situations, since each modality, on its own or in partial combinations, can contribute meaningful cues. However, the integration of LiDAR, RGB, and thermal data provides a more complete understanding of the scene, enhancing stability. These findings underscore the importance of multimodal fusion in real-world applications where environmental variability is inevitable.

5.3.3. Evaluation of Effectiveness of Each Module

To evaluate the individual contribution of each module in our architecture, we conducted an ablation study by systematically replacing key components with simpler alternatives. Specifically, the focal loss is replaced with the standard cross-entropy loss, the Swin Transformer is replaced with average pooling, and the axial attention fusion module is replaced with a simple feature concatenation strategy. We also evaluated the effect of removing the depthwise separable convolution, which is designed to improve efficiency in the fusion process.

As shown in Table 7, each simplified configuration leads to a decrease in detection performance, indicating that the original design of each module plays a significant role in the final result. Simpler alternatives may offer speed, but combining all modules yields the highest detection accuracy.
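To make the first ablation concrete, the sketch below contrasts binary focal loss with standard cross-entropy. The defaults `gamma=2.0` and `alpha=0.25` are the commonly used values from the original focal loss formulation and are assumptions here; the values used in this work are not stated in this section.

```python
import numpy as np

def cross_entropy(p, y):
    """Mean binary cross-entropy for predicted probabilities p and labels y in {0, 1}."""
    p = np.clip(np.asarray(p, dtype=float), 1e-7, 1.0 - 1e-7)
    y = np.asarray(y, dtype=float)
    p_t = np.where(y == 1, p, 1.0 - p)             # probability of the true class
    return float(np.mean(-np.log(p_t)))

def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Mean binary focal loss: -alpha_t * (1 - p_t)^gamma * log(p_t)."""
    p = np.clip(np.asarray(p, dtype=float), 1e-7, 1.0 - 1e-7)
    y = np.asarray(y, dtype=float)
    p_t = np.where(y == 1, p, 1.0 - p)
    alpha_t = np.where(y == 1, alpha, 1.0 - alpha)
    # The (1 - p_t)^gamma factor shrinks the loss of well-classified examples,
    # so hard examples dominate the gradient.
    return float(np.mean(-alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)))
```

Setting `gamma=0` and `alpha=0.5` reduces the focal loss to half the cross-entropy, which shows that the cross-entropy variant in the ablation corresponds to removing the down-weighting of easy examples.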

5.4. Qualitative Results

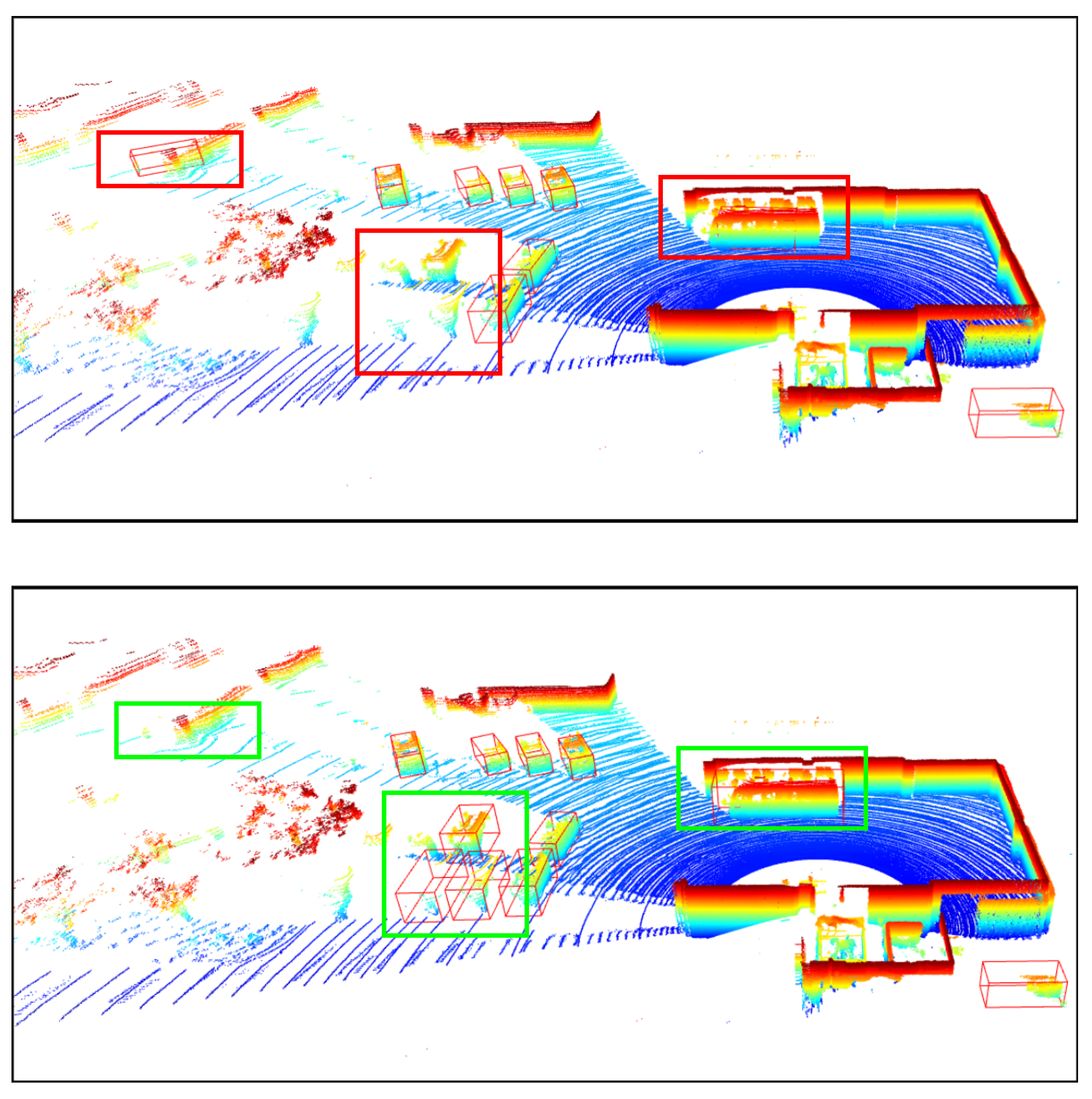

In this section, we present the qualitative results of our proposed model under particularly challenging conditions, as shown in Figure 20. RGB images are significantly degraded due to insufficient illumination or strong light interference, which limits their effectiveness. In contrast, in snowy daytime conditions as in Figure 21, LiDAR sensors often suffer from severe noise caused by snowflakes and reflective surfaces, while thermal cameras may experience reduced contrast due to uniformly low temperatures.

The comparison between the two scenarios underscores the effectiveness of the proposed multimodal fusion framework in addressing a wide range of visual challenges. In nighttime environments, the RGB modality is significantly degraded due to limited illumination, intense glare from artificial lights, and increased visual noise. Under such conditions, thermal imaging offers greater stability, while LiDAR gives a reliable geometric structure for accurate 3D localization. In contrast, snowy daytime conditions introduce a different set of challenges. The presence of heavy snow results in strong reflectivity, which adversely impacts the quality of LiDAR measurements. At the same time, uniformly low temperatures reduce the effectiveness of thermal imaging in distinguishing objects, except for those with notable heat emission. Despite these difficulties, the predicted 3D bounding boxes remain consistently well aligned across all modalities, demonstrating the effectiveness of the proposed approach. These results confirm that the integration of RGB, thermal, and LiDAR data enables the system to adapt to varying environmental conditions and maintain high detection accuracy, even when individual sensing modalities are compromised.

6. Discussion

Although this paper presents several advances, some challenges remain. First, although the use of 360 cameras largely removes blind spots and widens the field of view, a strong light source shining directly on the overlap region between two adjacent cameras produces light streaks that cannot be removed by conventional balancing algorithms, as in Figure 22.

In addition, the use of multiple LiDAR sensors in multiple locations helps to eliminate some blind spots and increase the density of points, but proper sensor placement is crucial to avoid laser interference. Furthermore, using LiDAR sensors with different designs requires careful intensity normalization. Using identical LiDAR sensors avoids intensity normalization, but sensors with the same or very similar point distributions cause the density of points in near and far areas to differ significantly. For example, if, instead of four LiDAR sensors with different point distributions from near to far, we use four Ouster-128 LiDAR sensors, the density of points in the near area will increase dramatically, and as a consequence, deep learning methods may overfit to objects at close distances.