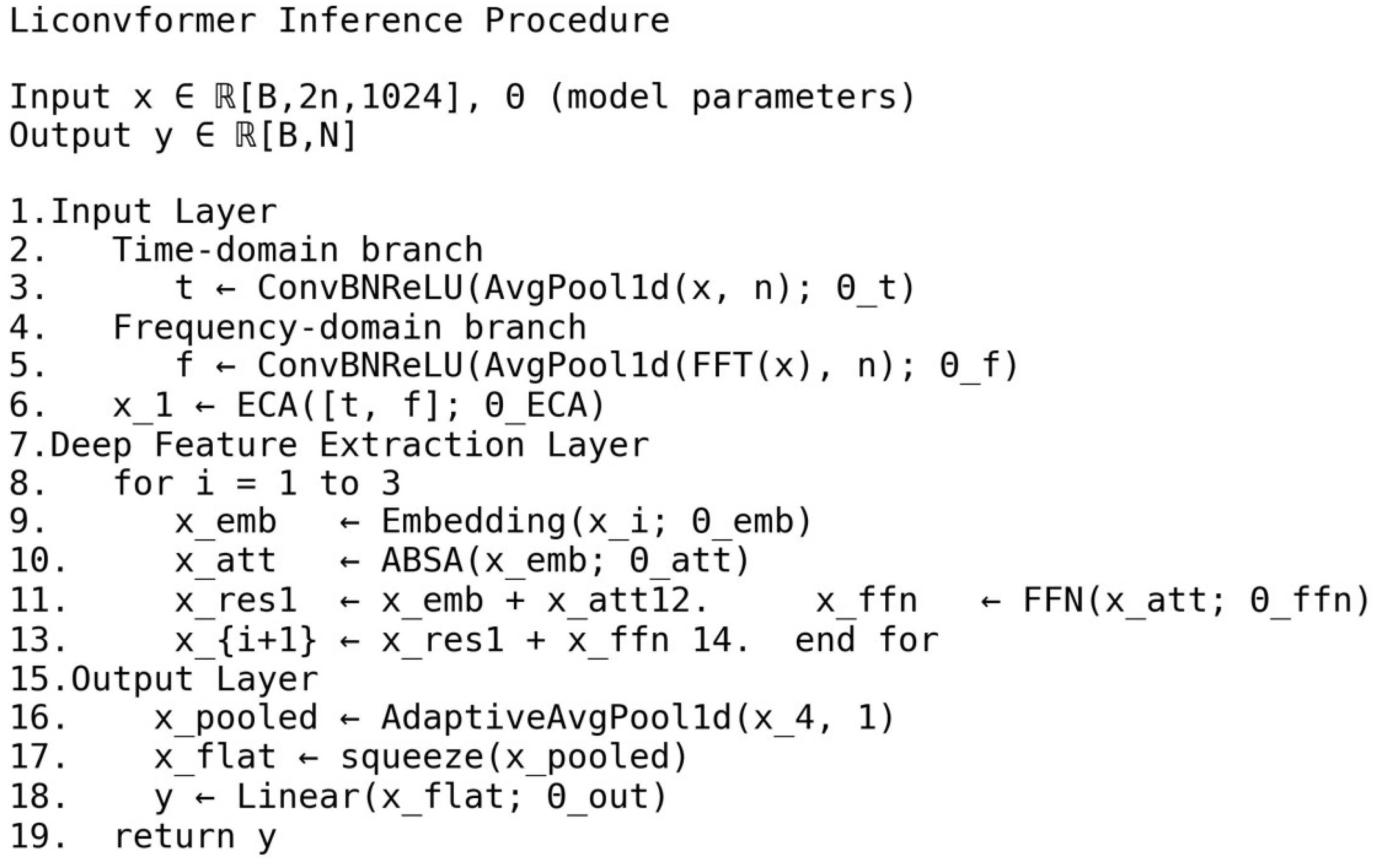

4.1. Preparation

This study systematically compares the proposed YConvFormer method with seven benchmark models: the traditional CNN architecture ResNet18; four state-of-the-art ConvFormer models, namely LiConvFormer (proposed in 2024), CLFormer (proposed in 2022), ConvFormer-NSE (proposed in 2023), and LiteFormer (proposed in 2024); and two dual-modal network models, DCNN and CCFT (proposed in 2024).

To assess the robustness of the proposed method in a real industrial noise environment, and to simulate potential distribution discrepancies between training and testing data in manufacturing scenarios, two types of noise were intentionally injected into the test set [47]. This emulates real-world disturbances and allows the model's robustness to be evaluated under challenging conditions. The noise injection is defined as follows:

$$\tilde{x}_i = \alpha\, x_i + n_i, \qquad n_i \sim \mathcal{N}(0, \lambda), \qquad \alpha \sim \mathcal{N}(1, \lambda) \tag{12}$$

where $x_i$ represents the original signal sampling points and $\tilde{x}_i$ represents the sampling points after noise injection; $n_i$ denotes Gaussian noise following a normal distribution with a mean of 0 and a variance of λ; and $\alpha$ is the scaling factor, which follows a Gaussian distribution with a mean of 1 and a variance of λ. The larger the variance λ, the greater the discrepancy between the training and test sets.
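A minimal sketch of the test-time noise injection follows. It assumes one reading of the noise model described above: a single scaling factor drawn from N(1, λ) multiplies the clean sample, and zero-mean Gaussian noise with variance λ is added point-wise. Whether the scaling factor is drawn per sample or per point is an assumption, and the function name `inject_test_noise` is hypothetical. Because λ is a variance, the standard deviation passed to `random.gauss` is √λ, so at λ = 0 the sample is returned unchanged.

```python
import math
import random
from typing import List, Optional, Sequence


def inject_test_noise(signal: Sequence[float], lam: float,
                      seed: Optional[int] = None) -> List[float]:
    """Corrupt one test sample with multiplicative and additive Gaussian noise.

    Assumed model (illustrative): x_noisy[i] = alpha * x[i] + n[i], where
    alpha ~ N(1, lam) is one scaling factor per sample and n[i] ~ N(0, lam).
    `lam` is a variance, so the standard deviation used is sqrt(lam).
    """
    if lam < 0:
        raise ValueError("variance lam must be non-negative")
    rng = random.Random(seed)           # local generator for reproducibility
    sigma = math.sqrt(lam)
    alpha = rng.gauss(1.0, sigma)       # multiplicative scaling factor
    return [alpha * x + rng.gauss(0.0, sigma) for x in signal]
```

For example, `inject_test_noise(sample, 0.6, seed=0)` produces one strongly corrupted test sample; fixing the seed keeps the corrupted test set identical across the compared models.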

To ensure a fair comparison across models, all methods use exactly the same training configuration: the AdamW optimizer is employed with an initial learning rate of 0.001 and a weight decay of 0.01. The learning rate scheduling adopts the ReduceLROnPlateau strategy, where the learning rate is reduced to 10% of its current value if the validation loss does not improve for 5 consecutive epochs. The minimum learning rate is limited to 0.00001. The batch size is fixed at 32, and the model is trained for a total of 100 epochs.
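This shared configuration maps onto standard PyTorch components: `torch.optim.AdamW(model.parameters(), lr=1e-3, weight_decay=0.01)` and `torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, mode="min", factor=0.1, patience=5, min_lr=1e-5)`, stepped once per epoch with the validation loss. To show how the schedule behaves without a PyTorch dependency, the sketch below re-implements the plateau rule in plain Python. The class name `PlateauLR` is hypothetical; it mirrors PyTorch's defaults (relative improvement threshold of 1e-4, no cooldown).

```python
import math


class PlateauLR:
    """Plain-Python sketch of a ReduceLROnPlateau-style schedule (mode='min').

    Mirrors PyTorch's default rule: an epoch counts as an improvement only if
    val_loss < best * (1 - threshold); once the number of consecutive
    non-improving epochs exceeds `patience`, the LR is multiplied by `factor`
    and clipped at `min_lr`.
    """

    def __init__(self, lr: float = 1e-3, factor: float = 0.1, patience: int = 5,
                 min_lr: float = 1e-5, threshold: float = 1e-4, eps: float = 1e-8):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.min_lr = min_lr
        self.threshold = threshold
        self.eps = eps
        self.best = math.inf
        self.num_bad_epochs = 0

    def step(self, val_loss: float) -> float:
        """Update with one epoch's validation loss and return the current LR."""
        if val_loss < self.best * (1.0 - self.threshold):
            self.best = val_loss
            self.num_bad_epochs = 0
        else:
            self.num_bad_epochs += 1
        if self.num_bad_epochs > self.patience:
            new_lr = max(self.lr * self.factor, self.min_lr)
            if self.lr - new_lr > self.eps:  # skip negligible changes
                self.lr = new_lr
            self.num_bad_epochs = 0
        return self.lr
```

Note that the reduction fires only when the count of non-improving epochs exceeds `patience`. With `patience=5`, PyTorch therefore cuts the learning rate on the sixth consecutive epoch without improvement. After two cuts (1e-3 to 1e-4 to 1e-5), the `min_lr` floor holds it at 1e-5.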

To evaluate our model, we adopted accuracy, model complexity (parameters and FLOPs), recall, precision, and F1-score as key performance metrics [48,49]. To ensure robustness, we conducted five repeated experiments, computed these metrics for each run, and report their averaged values. We followed an iterative train–validation strategy: after each epoch, model performance was evaluated on the validation set; in the latter half of training, the checkpoint with the highest validation accuracy was saved and used for the final test-set evaluation. No cross-validation was performed.
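The evaluation protocol can be sketched in plain Python. The text does not state how precision, recall, and F1-score are averaged over classes, so macro-averaging is assumed here. `select_checkpoint` encodes the rule of picking the best validation epoch from the latter half of training. `summarize_runs` reports the mean and the sample standard deviation over repeated runs; the choice of the sample rather than the population standard deviation is also an assumption. All function names are hypothetical.

```python
import statistics
from typing import Dict, Sequence, Tuple


def macro_scores(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision, recall, and F1 (assumed averaging)."""
    labels = sorted(set(y_true) | set(y_pred))
    precisions, recalls, f1s = [], [], []
    for c in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    return {
        "accuracy": accuracy,
        "precision": statistics.mean(precisions),  # unweighted mean over classes
        "recall": statistics.mean(recalls),
        "f1": statistics.mean(f1s),
    }


def select_checkpoint(val_accs: Sequence[float]) -> int:
    """Index of the best validation epoch, searched only in the latter half."""
    start = len(val_accs) // 2
    return max(range(start, len(val_accs)), key=lambda i: val_accs[i])


def summarize_runs(values: Sequence[float]) -> Tuple[float, float]:
    """Mean and sample standard deviation of one metric over repeated runs."""
    return statistics.mean(values), statistics.stdev(values)
```

For example, `select_checkpoint([0.5, 0.9, 0.6, 0.7, 0.8, 0.75])` returns 4: the 0.9 peak lies in the first half of training and is ignored.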

The experimental environment is configured as follows: PyTorch 1.12.0, an Intel Core i5-12600KF CPU, and an NVIDIA GeForce RTX 4060 Ti GPU.

4.2. Performance on the XJTU Dataset

The experiment uses a publicly available dataset collected from a planetary gearbox at Xi’an Jiaotong University [

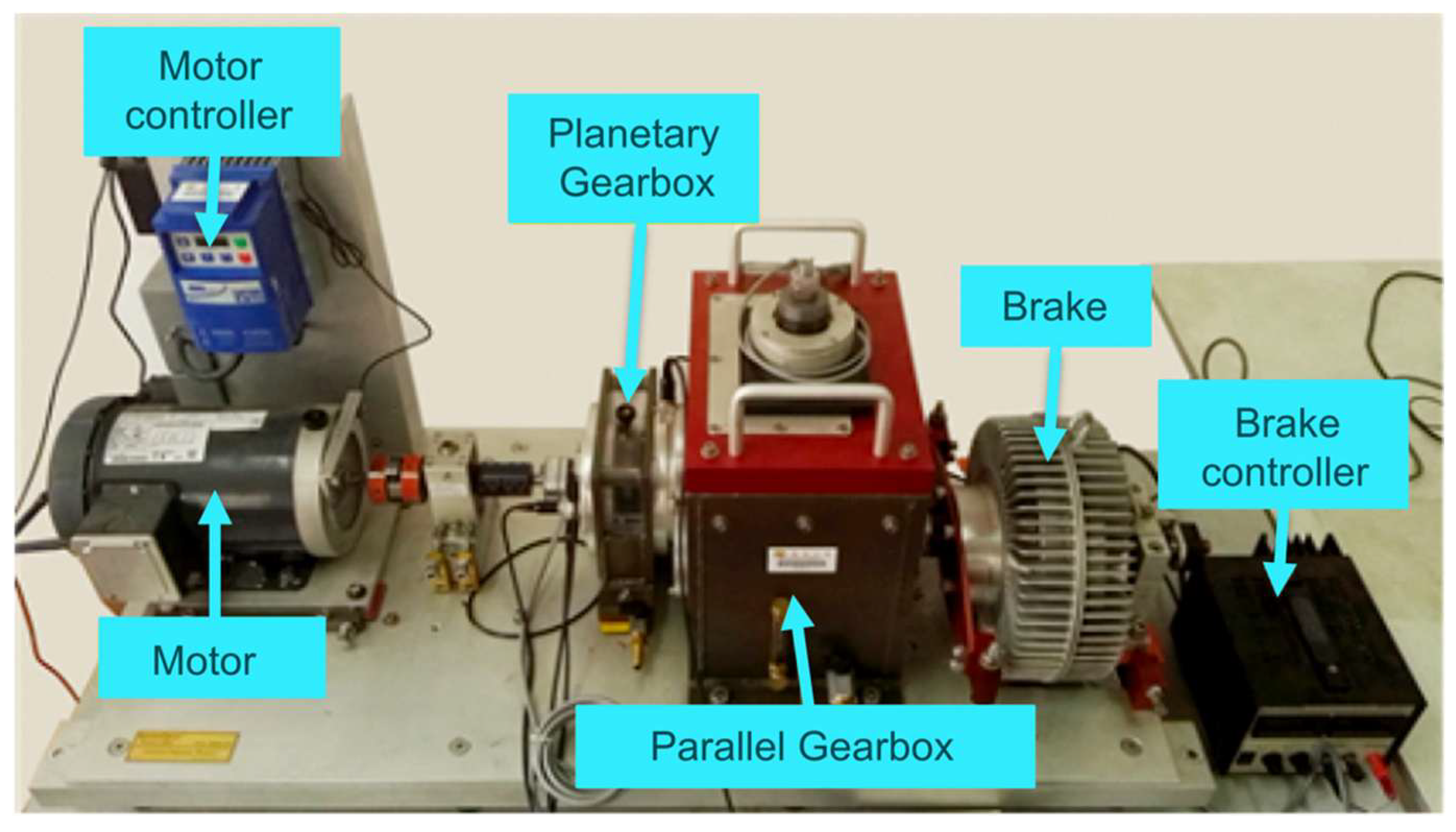

50], with the experimental setup shown in

Figure 7a. The test rig consists of a motor, controller, planetary gearbox, parallel gearbox, brake, and accelerometers for collecting vibration signals in both horizontal and vertical directions.

In the experiment, vibration signals were synchronously collected in the axial and radial directions at a constant motor speed of 1800 rpm and a sampling frequency of 20,480 Hz. The dataset covers four predefined fault modes for the planetary gearbox and four for the bearings, totaling eight fault states. In addition, signals from the healthy state were collected as a benchmark, resulting in nine state categories in total, as shown in Figure 7b. All vibration samples have a fixed length of 1024 data points, for a total of 10,800 samples. The detailed sample information is shown in Table 2. This partitioning ensures that the training set contains enough samples for the model to fully learn each fault’s features, while retaining ample samples in the test set for a stable and reliable evaluation.
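A quick arithmetic check puts these acquisition parameters in physical terms. At 20,480 Hz, a 1024-point sample spans 50 ms. At 1800 rpm (a 30 Hz shaft frequency), that window covers 1.5 motor shaft revolutions. A DFT of one sample has a bin spacing of 20 Hz. The helper below, with the hypothetical name `window_stats`, simply performs these divisions.

```python
def window_stats(fs_hz: float, n_points: int, rpm: float) -> dict:
    """Physical span and spectral resolution of one fixed-length sample."""
    duration_s = n_points / fs_hz          # time covered by one sample
    shaft_hz = rpm / 60.0                  # shaft rotation frequency
    return {
        "duration_s": duration_s,
        "shaft_revolutions": duration_s * shaft_hz,
        "fft_bin_hz": fs_hz / n_points,    # DFT frequency resolution
        "nyquist_hz": fs_hz / 2.0,         # highest representable frequency
    }


print(window_stats(20480, 1024, 1800))
```

This resolution bounds how finely any frequency-domain input built from a single 1024-point sample can separate nearby spectral components.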

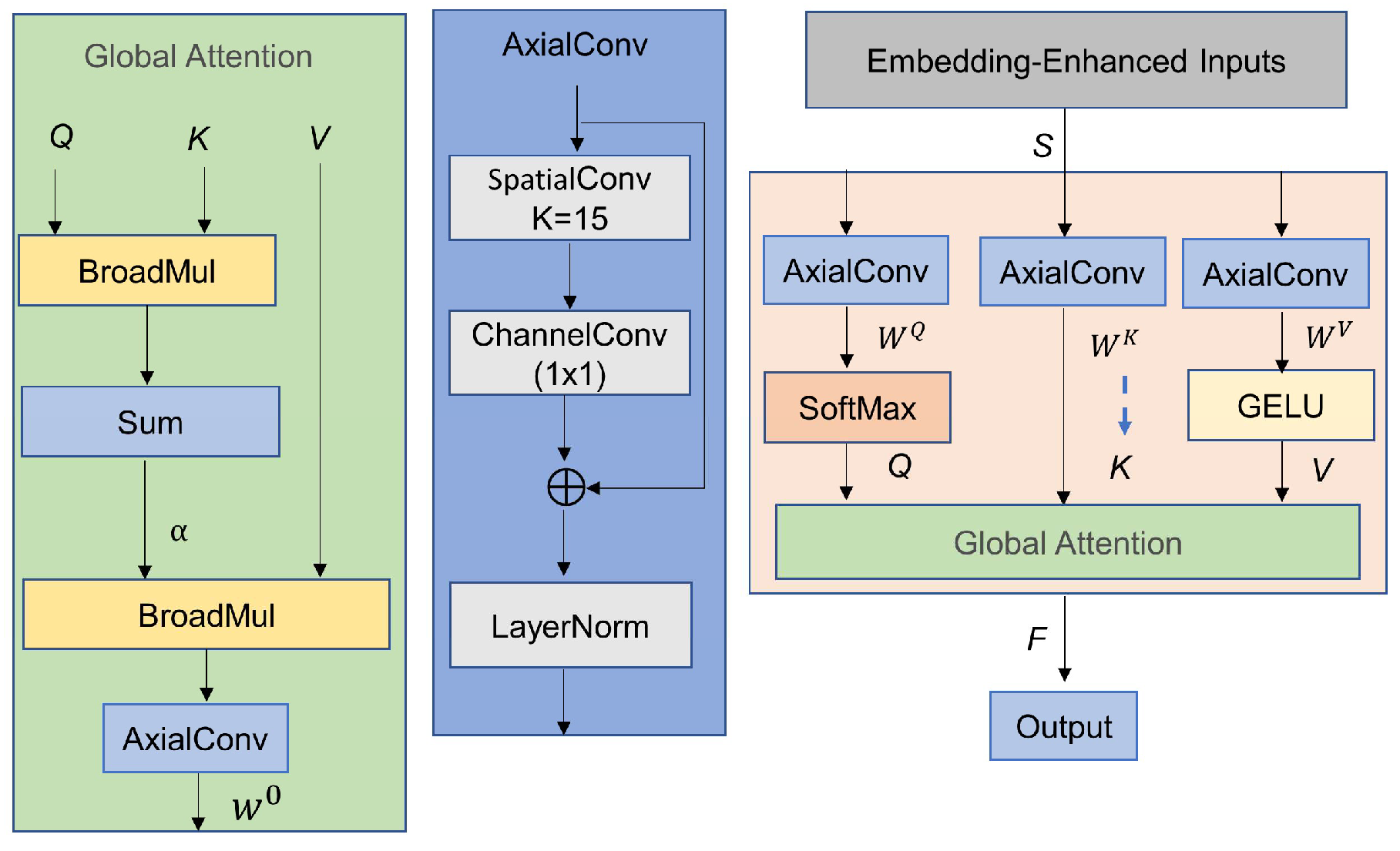

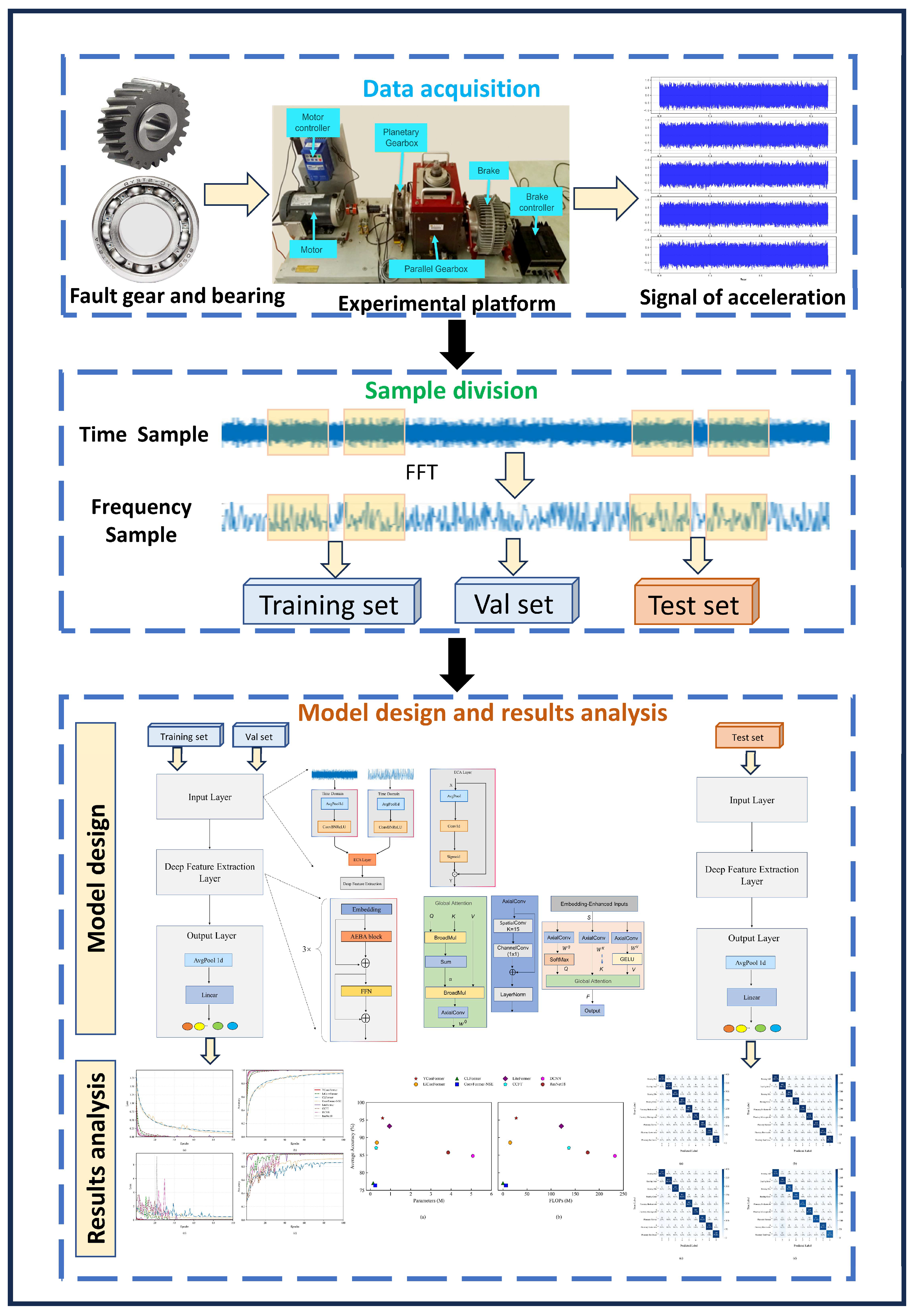

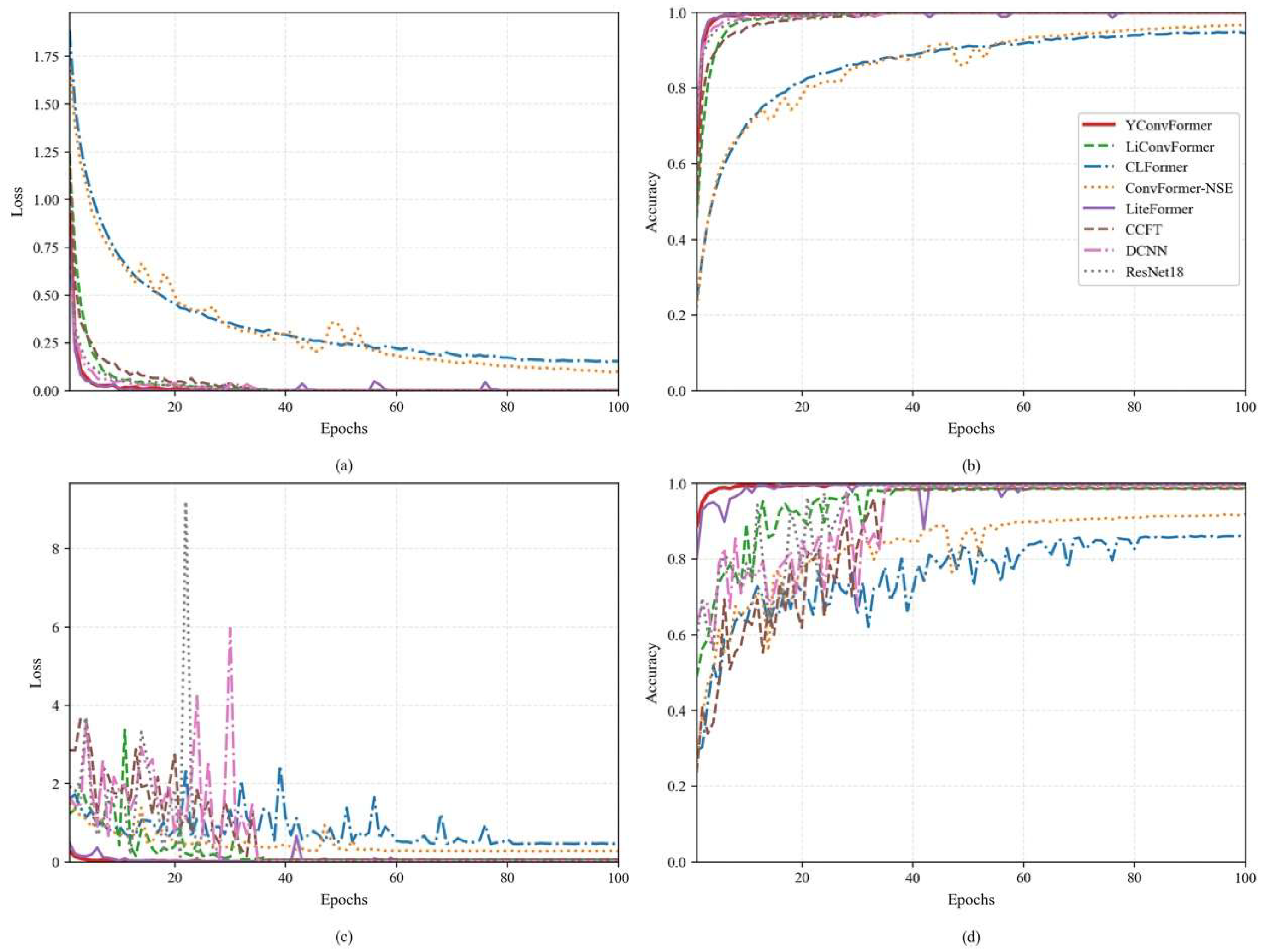

As shown in Figure 8, the average training and validation loss and accuracy curves over the ten experiments indicate the following. In the early iterations, the loss and accuracy fluctuations on the training set were significantly smaller than those on the validation set for all methods. After 60 training epochs, all models exhibited stable low loss and high accuracy on both the training and validation sets. Nevertheless, the validation accuracy of CLFormer and ConvFormer-NSE lagged noticeably behind that of the remaining six approaches. This can be attributed to the feature dimension reduction strategy used by these two models, which discards high-frequency details of the vibration signals and thereby weakens their ability to analyze multi-dimensional fault features. Although DCNN and CCFT eventually reached ideal loss and accuracy values, their early curves oscillated severely. This is a typical symptom of over-parameterization, associated with unstable gradients during training, low computational efficiency, and poor interpretability. The proposed YConvFormer showed average loss and accuracy fluctuations only slightly higher than those of LiteFormer. However, it exhibited the best stability and convergence during validation, indicating a consistent parameter optimization trajectory. This low-fluctuation behavior is consistent with the model effectively capturing the spatiotemporal correlations of fault features and supports its strong generalization ability and noise robustness.

The comprehensive analysis in Table 3 indicates that YConvFormer achieves the best balance among noise robustness, computational efficiency, and diagnostic accuracy. Specifically, under strong noise interference (λ = 0.6), YConvFormer achieves an average accuracy of 87.93%. This is 6.52 percentage points higher than the best baseline, LiteFormer (81.41%), and 18.69 percentage points higher than the traditional CNN model ResNet18 (69.24%). Under low-noise conditions (λ = 0 and λ = 0.2), YConvFormer exhibits negligible variability, with standard deviations of 0 and 0.200, respectively, lower than those of all competing models. At higher noise levels, its standard deviation increases to 1.9545 at λ = 0.4 and to 2.5768 at λ = 0.6. Nevertheless, its pronounced accuracy advantage under these conditions establishes YConvFormer as the most effective architecture overall.

Furthermore, YConvFormer is computationally efficient, with only 0.604 M parameters (63.8% of LiteFormer’s) and 27.646 M FLOPs, an 88.1% reduction compared with DCNN. CLFormer has fewer parameters (0.143 M) and ConvFormer-NSE requires fewer FLOPs (6.270 M), but their accuracies under high noise drop to 65.96% and 60.58%, respectively. These are 21.97 and 27.35 percentage points lower than that of YConvFormer. These results demonstrate that the proposed architecture achieves both robustness and a lightweight design. Overall, the experimental data confirm that YConvFormer outperforms current advanced models in noise adaptability, resource efficiency, and real-time performance, offering a more feasible and robust diagnostic solution for industrial scenarios.

Figure 9 provides a comprehensive comparison of diagnostic performance and computational complexity under various noise levels. The proposed YConvFormer combines strong noise robustness with high computational efficiency. With only 0.604 M parameters and 27.646 M FLOPs, it maintains an average accuracy of 87.93% under strong noise (λ = 0.6), significantly outperforming all comparison models. Compared with the second-best performer, LiteFormer (81.41% accuracy), YConvFormer achieves a 6.52 percentage point gain while reducing the parameter count by 36.2% and FLOPs by 77.2%. Compared with the lightweight CLFormer, it delivers 21.97 percentage points higher accuracy with only 0.461 M additional parameters. Relative to the conventional CNN model ResNet18, YConvFormer reduces both the parameter count and the computational cost by 84.3% while improving accuracy by 18.69 percentage points. These results affirm the model’s ability to balance robustness, accuracy, and efficiency, making it well suited for real-time industrial fault diagnosis under noisy conditions.
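The comparisons above mix absolute accuracy differences, in percentage points, with relative changes, in percent. The two hypothetical helpers below make the distinction explicit using figures quoted in the text. The 21.97-point gap between YConvFormer (87.93%) and CLFormer (65.96%) corresponds to a relative accuracy gain of about 33.3%. The extra 0.461 M parameters relative to CLFormer’s 0.143 M is a relative increase of about 322%.

```python
def pp_gain(acc_a: float, acc_b: float) -> float:
    """Absolute gain of model A over model B, in percentage points (inputs in %)."""
    return acc_a - acc_b


def relative_change(new: float, old: float) -> float:
    """Relative change of `new` with respect to `old`, in percent.

    Negative values indicate a reduction (e.g., fewer parameters or FLOPs).
    """
    return (new - old) / old * 100.0


print(round(pp_gain(87.93, 65.96), 2))          # absolute accuracy gap
print(round(relative_change(87.93, 65.96), 1))  # relative accuracy gain
print(round(relative_change(0.604, 0.143), 1))  # relative parameter increase
```

Reporting accuracy gaps in percentage points and complexity changes in relative percent, as most of the section does, avoids ambiguity about which quantity is meant.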

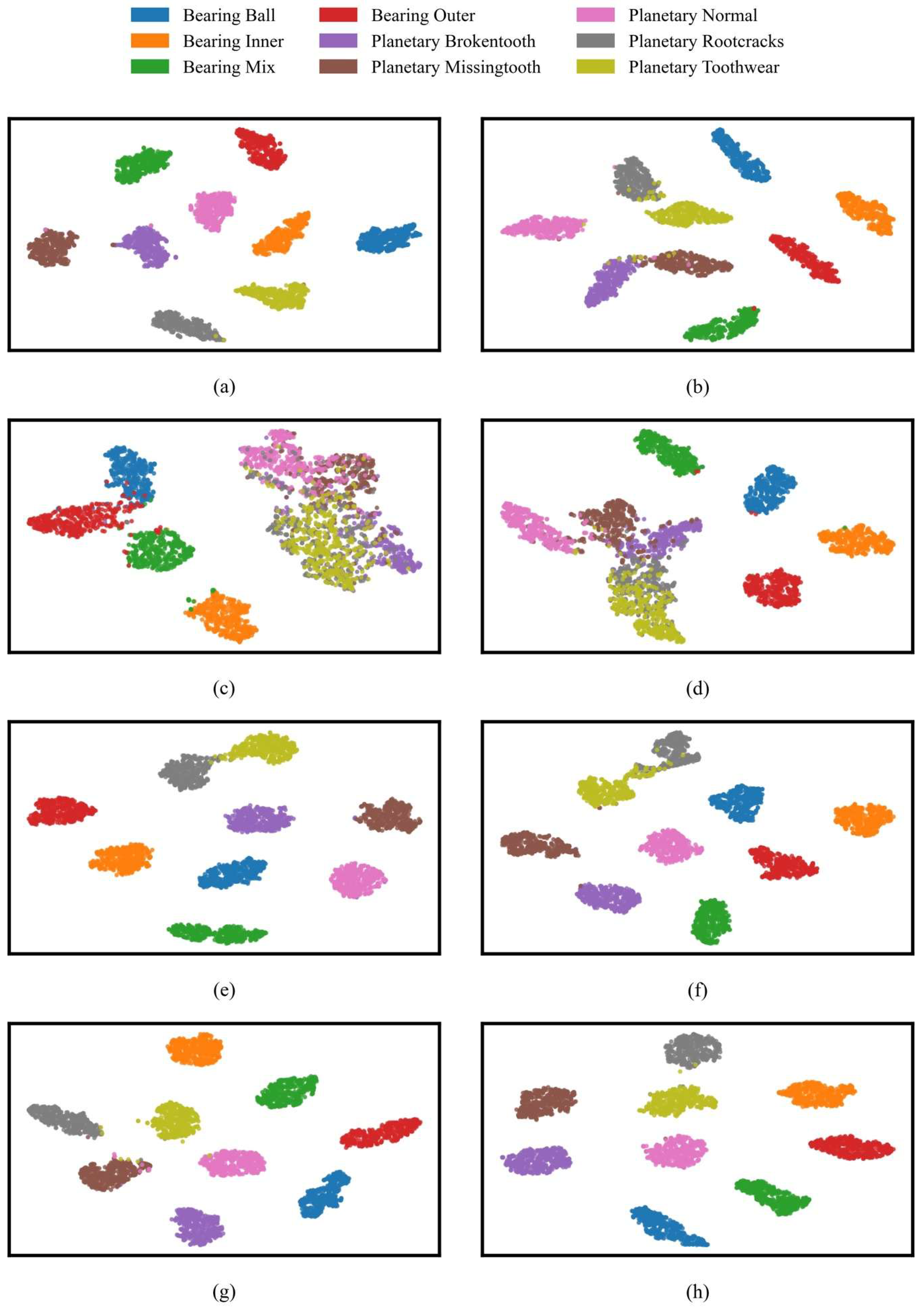

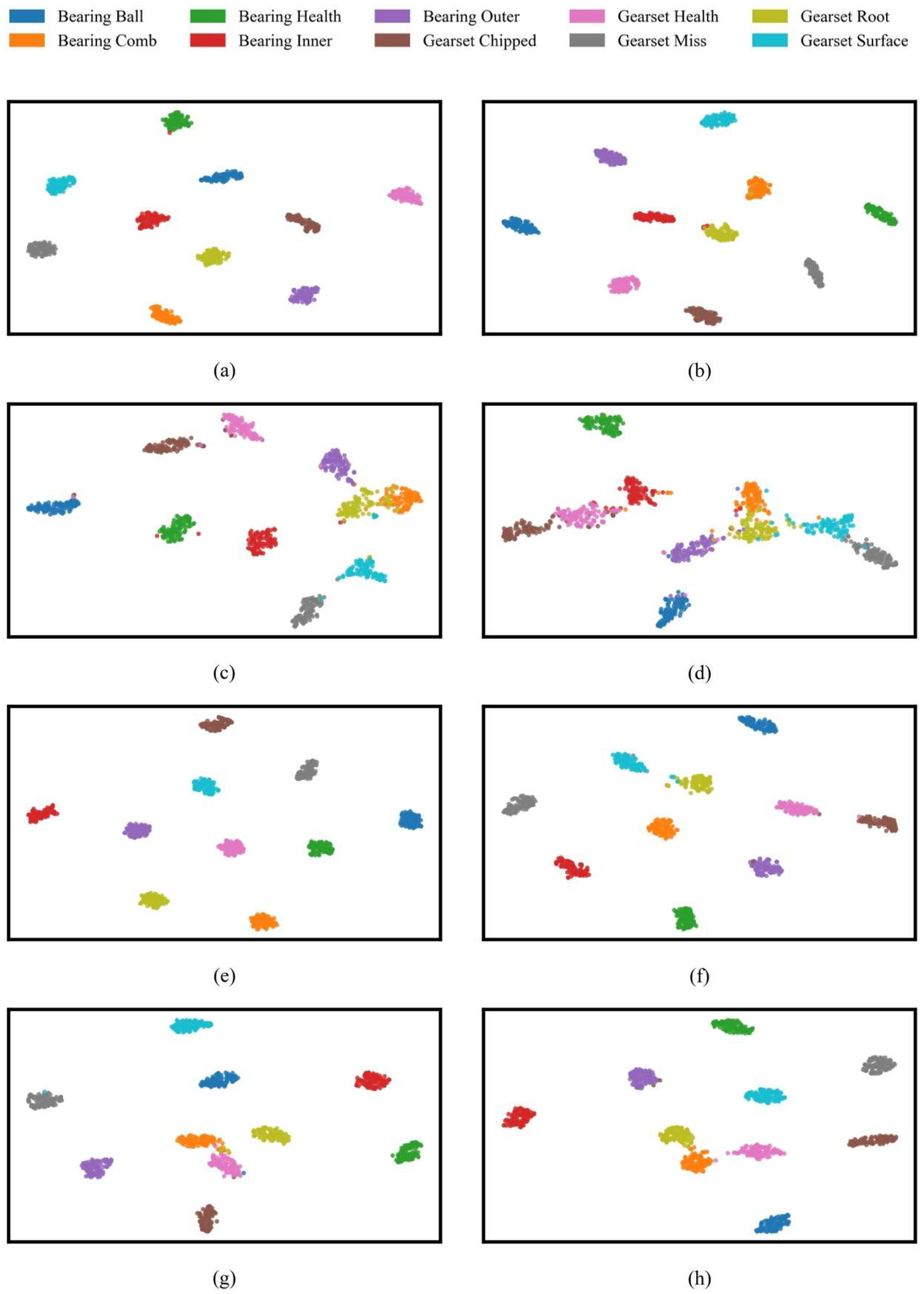

Figure 10 illustrates the two-dimensional t-SNE distribution of the feature representations produced by the eight models under noise-free conditions (λ = 0). Most notably, the features extracted by YConvFormer are highly discriminable. Samples of each fault type form compact, distinct clusters in the 2D space, with high intra-class density and clear boundaries between fault states, indicating that YConvFormer accurately captures fine-grained feature differences between fault and normal states. The second-best model, LiteFormer, clusters most healthy-state samples. However, some fault categories, such as root crack and tooth wear, have overlapping edge samples, and its feature separability is noticeably weaker than that of YConvFormer. The lightweight CNN–Transformer models CLFormer and ConvFormer-NSE show substantial mixing and dispersion in their feature distributions, with large overlaps between the root crack and tooth wear clusters, reflecting information loss caused by excessive dimension compression. Traditional deep models such as ResNet18 show blurred normal-state clusters, with scattered normal samples that are poorly separated from the fault clusters. This observation is consistent with the sharp drop in their average accuracy to 68.41% and 69.24% under high noise, revealing insufficient noise resistance at the feature level. Importantly, these differences in feature-space distribution closely track the performance trends. While maintaining a lightweight architecture, YConvFormer strengthens the separability between fault and normal states through an efficient feature encoding mechanism. As a result, it maintains an average accuracy of 87.93% at λ = 0.6, well above the other models. The t-SNE visualization thus helps clarify the mechanism behind YConvFormer’s superior classification accuracy and noise robustness.

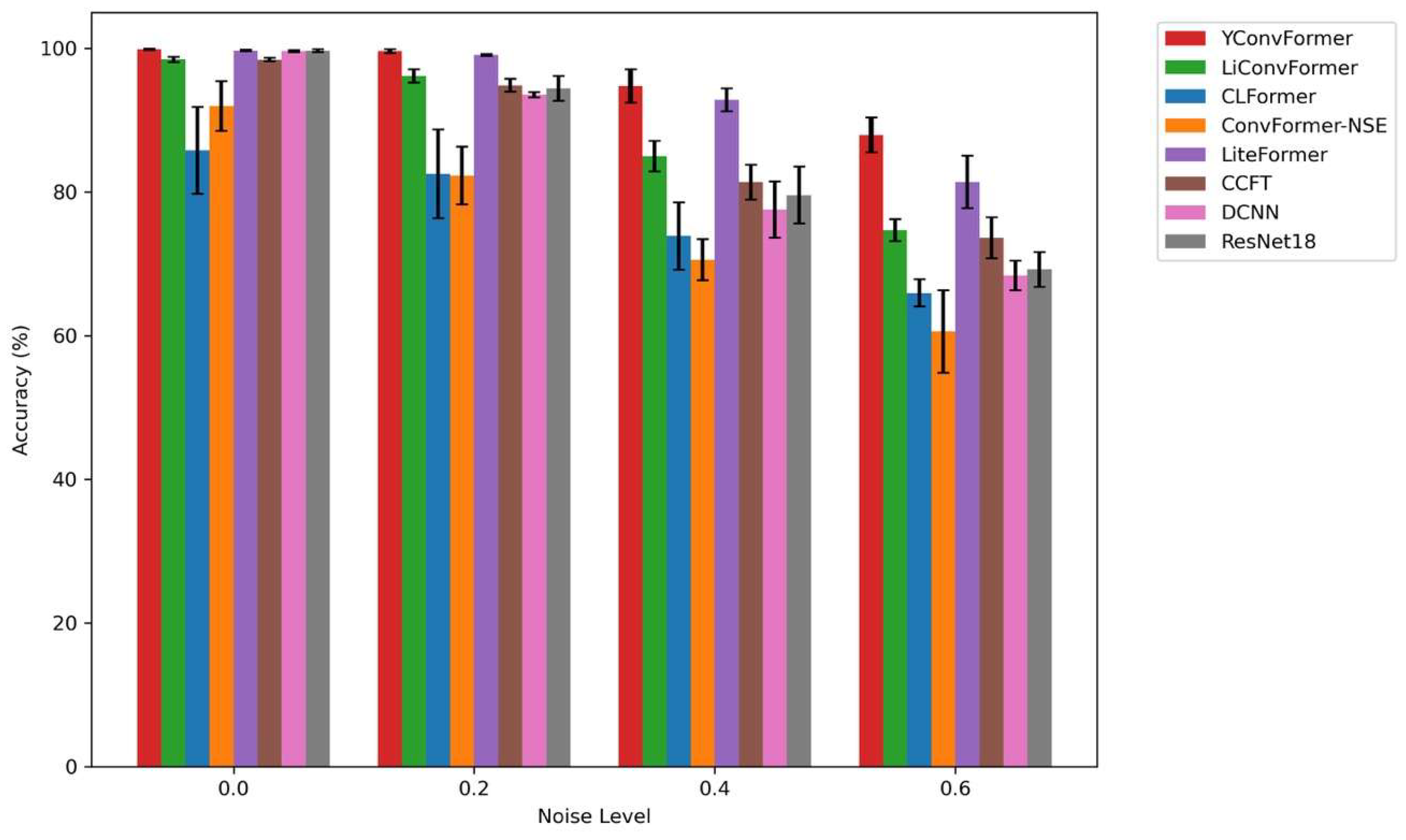

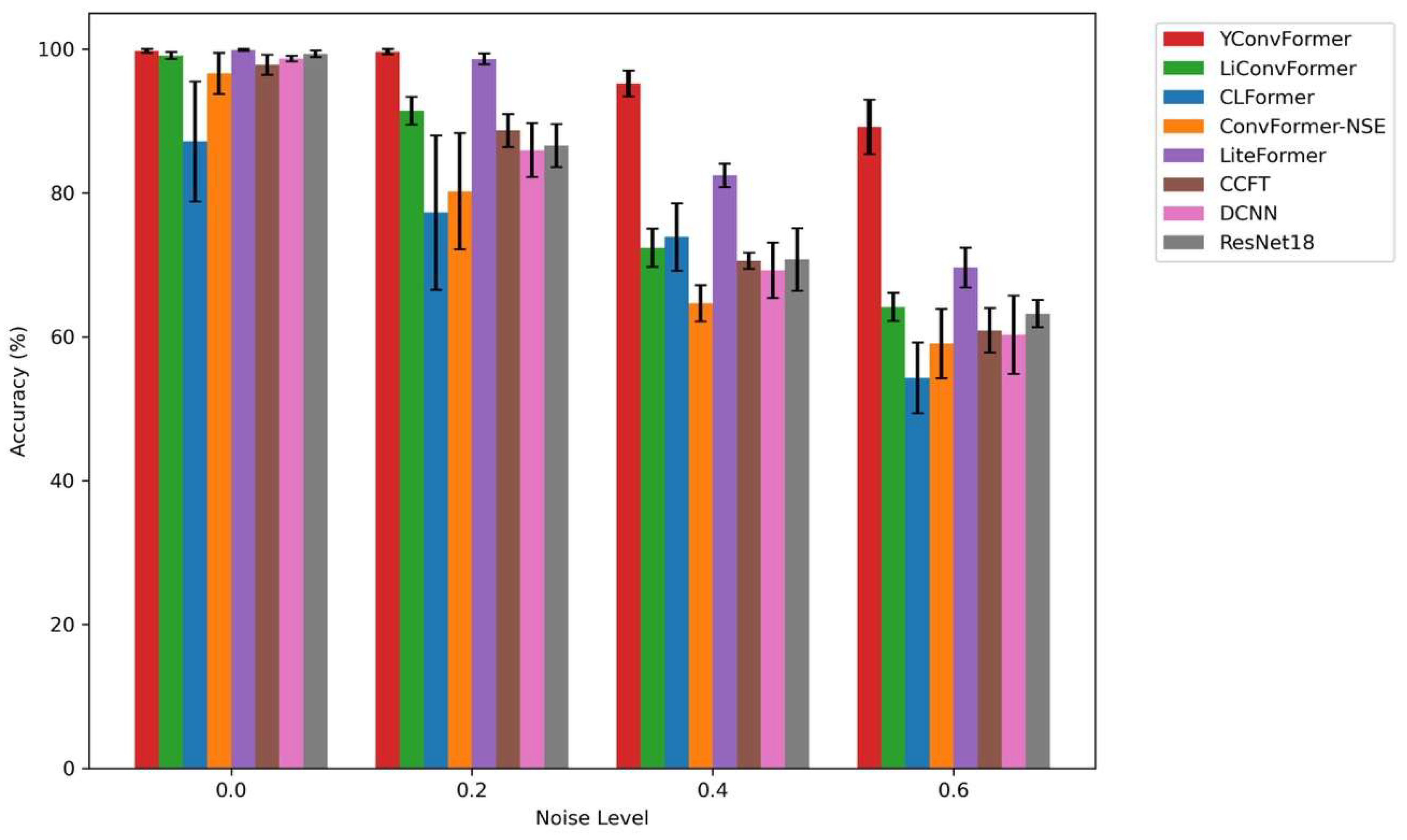

Figure 11 presents the diagnostic accuracy and fluctuation of the eight models across four noise levels. YConvFormer combines leading accuracy with high stability across the entire noise range. At λ = 0, its accuracy approaches 100%, on par with LiteFormer and ResNet18, and it has the shortest error bars, reflecting stable feature extraction. At λ = 0.2, the accuracies of CLFormer and ConvFormer-NSE decrease significantly, whereas YConvFormer maintains about 99.60%, approximately 0.51 percentage points above the next-best model, LiteFormer. At λ = 0.4, YConvFormer reaches 94.77%, far surpassing lightweight models such as CLFormer (73.88%) and ConvFormer-NSE (70.58%). It also exceeds the traditional strong baseline ResNet18 (79.58%) by 15.19 percentage points. Most impressively, at λ = 0.6, YConvFormer maintains 87.93%, clearly outperforming LiteFormer (81.41%) and exceeding ResNet18 by 18.69 percentage points. Its error bars are also noticeably shorter than those of the other models, further supporting the effectiveness of its noise-robust feature enhancement mechanism. In contrast, LiteFormer achieves accuracy similar to YConvFormer’s at low noise, but its error bars widen sharply as noise increases. At λ = 0.6, its fluctuation range expands significantly, reflecting insufficient noise stability. Overall, the bar chart quantifies the superiority of YConvFormer along three dimensions: accuracy, rate of accuracy decay under noise, and result stability.

Table 4 shows that YConvFormer consistently outperforms the other models, particularly in precision, recall, and F1-score. Under low noise (λ = 0 and λ = 0.2), YConvFormer achieves near-perfect precision, recall, and F1-score (0.9986). Even as noise increases (λ = 0.4 and λ = 0.6), its performance remains strong, with F1-scores of 0.9487 and 0.8839, respectively.

In contrast, other models like LiConvFormer show a significant decline under high noise. At λ = 0.6, its F1-score drops to 0.7461, much lower than YConvFormer. DCNN and LiteFormer, while maintaining relatively higher performance, also experience notable drops as noise increases. For DCNN, the F1-score decreases from 0.9959 at λ = 0 to 0.6936 at λ = 0.6, while LiteFormer drops from 0.9971 at λ = 0 to 0.8164 at λ = 0.6. CLFormer and ConvFormer-NSE show even larger declines, with F1-scores of 0.6550 and 0.6094 at λ = 0.6, respectively, highlighting their lower noise resilience. Overall, YConvFormer’s ability to maintain high performance under increasing noise levels makes it the most effective model in terms of noise robustness and diagnostic accuracy.

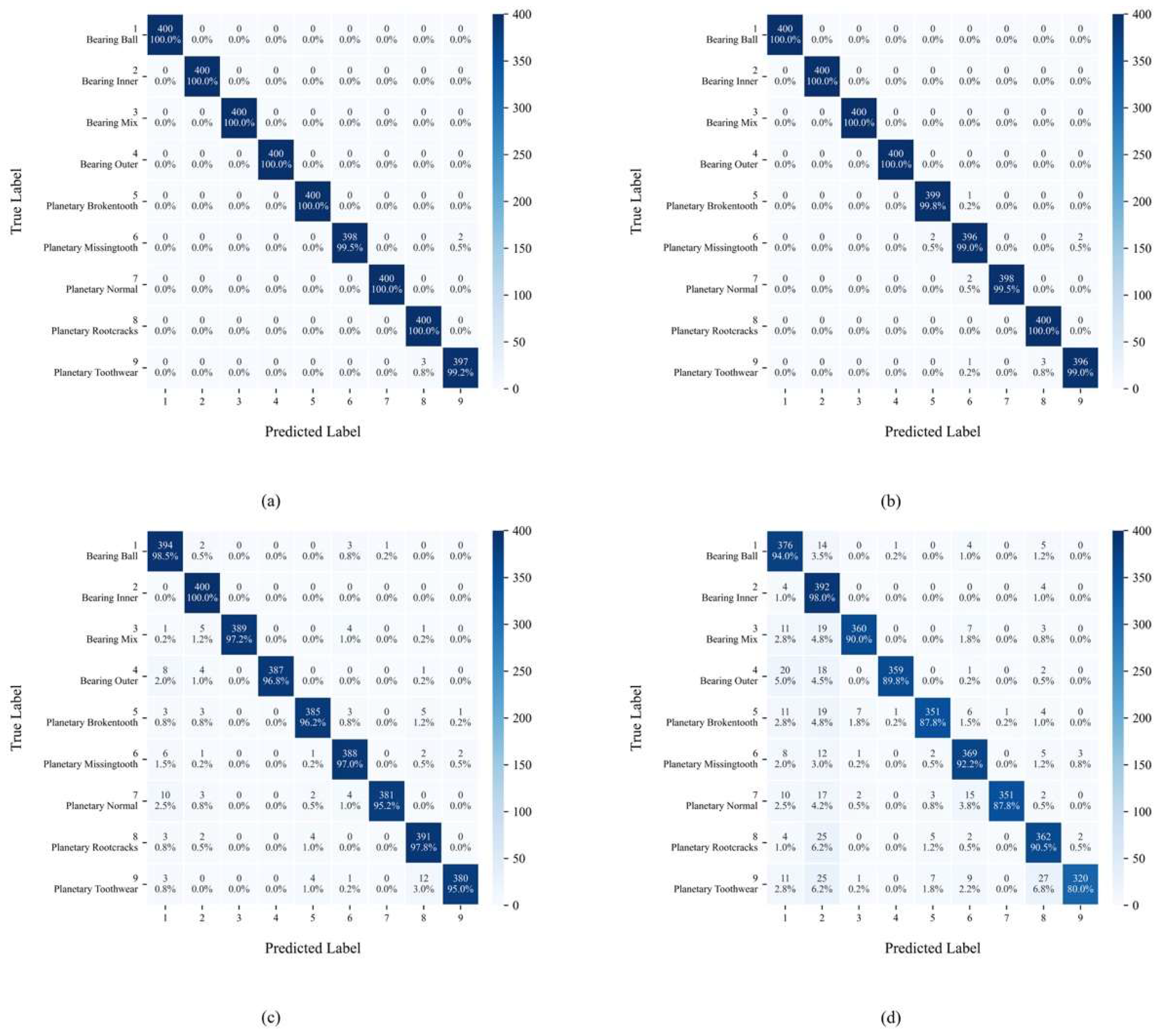

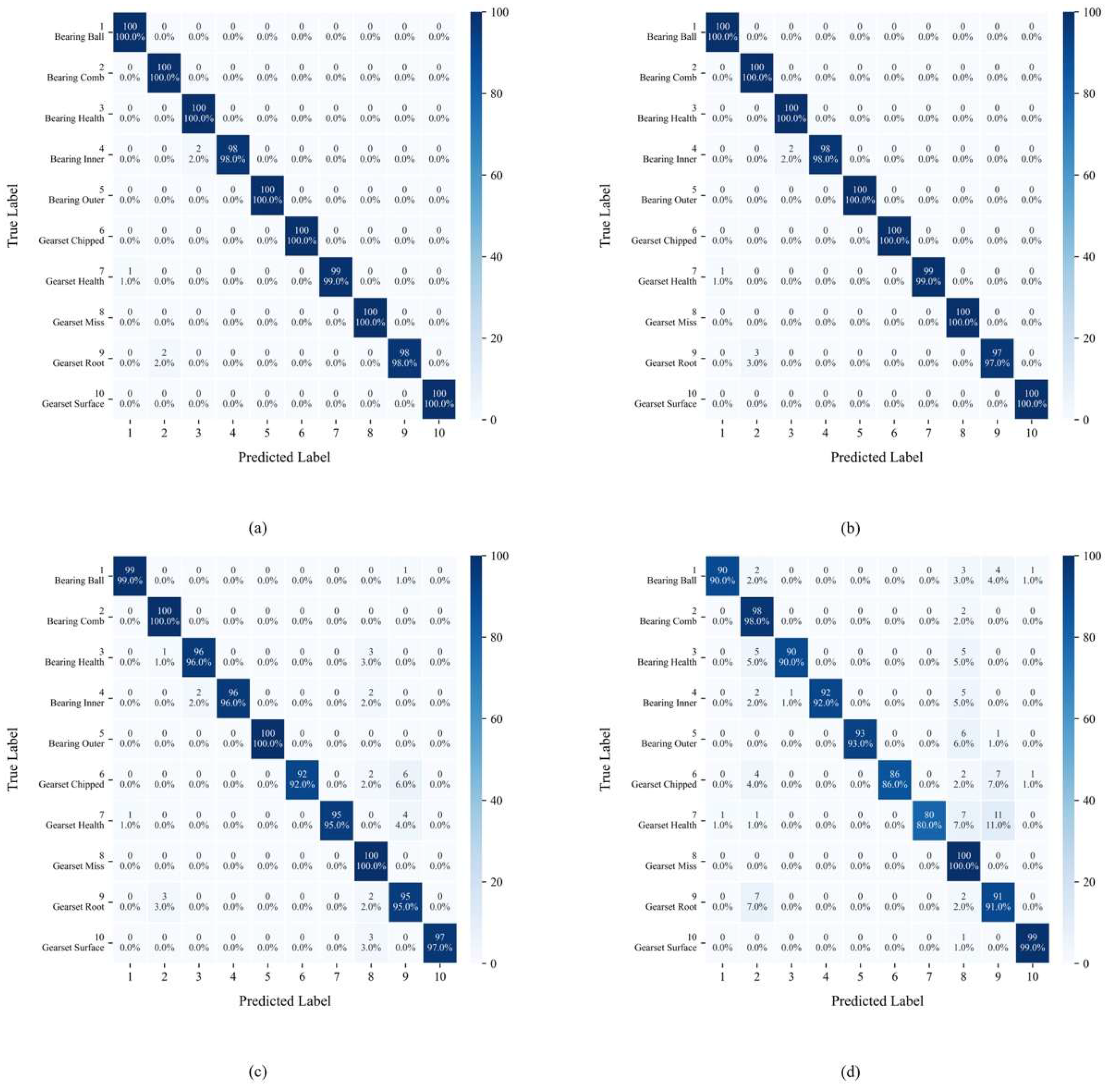

Figure 12 displays the confusion matrices of YConvFormer at four noise levels. The size and color depth of each matrix element reflect the frequency of the predicted category, and the diagonal values represent the classification accuracy of each true class. In Figure 12a, all classes except classes 6 and 9 achieve 100% classification accuracy, and only a very small number of samples are misclassified. This confirms the model’s strong ability to discriminate features in clean signals. In Figure 12b, the diagonal values decrease slightly for each true class. Notably, the number of misclassifications remains in single digits, and the errors are concentrated in semantically adjacent categories, such as Brokentooth and Missingtooth, indicating that noise only slightly affects the discrimination of edge samples. In Figure 12c, the diagonal values decrease further and misclassifications spread across more categories. However, the predictions for each true class remain concentrated around the diagonal, indicating that the model still anchors on core feature dimensions under moderate noise and classifies most samples correctly. In Figure 12d, the diagonal proportion drops markedly and cross-category misclassifications increase. Even so, YConvFormer’s diagonal proportion remains higher than the average accuracy of the other models at the same noise level in Table 3. Moreover, the misclassified samples do not show a disordered pattern of random confusion across all categories. This confirms that its noise-robust mechanism preserves key discriminative information and keeps misclassifications semantically plausible even under extreme noise. In summary, the confusion matrices reveal YConvFormer’s robust classification behavior across noise levels along three dimensions: intra-class cohesion, cross-class confusion patterns, and performance degradation under increasing noise. This provides fine-grained, feature-level evidence of its reliability in complex industrial noise environments.
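The per-class accuracies read off the diagonal correspond to a row-normalized confusion matrix, i.e., per-class recall. A minimal standard-library sketch follows. The function names are hypothetical and classes are indexed from 0. `top_confusions` lists the largest off-diagonal cells, which is one simple way to check whether errors concentrate in a few semantically adjacent class pairs rather than spreading randomly.

```python
from typing import List, Sequence, Tuple


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int],
                     n_classes: int) -> List[List[int]]:
    """cm[t][p] counts samples of true class t predicted as class p."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def per_class_accuracy(cm: List[List[int]]) -> List[float]:
    """Diagonal of the row-normalized matrix (per-class recall)."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(cm)]


def top_confusions(cm: List[List[int]], k: int = 3) -> List[Tuple[int, int, int]]:
    """The k largest off-diagonal cells as (count, true_class, predicted_class)."""
    off = [(cm[t][p], t, p) for t in range(len(cm)) for p in range(len(cm))
           if t != p and cm[t][p] > 0]
    return sorted(off, reverse=True)[:k]
```

If a few cells dominate the output of `top_confusions`, the errors are structured; a long tail of small off-diagonal counts would instead indicate the random confusion pattern the text rules out.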

4.3. Performance on the SEU Dataset

The second dataset used for model validation is provided by Southeast University (SEU) [51]. The experimental platform, shown in Figure 13, consists mainly of a motor, motor controller, planetary gearbox, parallel gearbox, brake, and brake controller. The SEU gearbox dataset was collected on a drivetrain dynamics simulator (DDS) under two speed–load configurations (RS-LC): 20 Hz–0 V and 30 Hz–2 V. Vibration signals were collected for eight fault states (four gear faults and four bearing faults), one normal gear state, and one normal bearing state, so the dataset includes 10 types of data. Signals from eight channels were recorded, and the vibration signal of the second channel was used as the model input.

In this paper, the SEU gearbox dataset is preprocessed, and the data under the 20 Hz–0 V speed–load configuration are selected for the experiment. The data are first divided into training, validation, and test sets with no overlap between them. The vibration signals are then segmented with a non-overlapping sliding window, so that each sample contains 1024 points. All samples in the training set are used for training. Half of the samples in the validation set and half of those in the test set are randomly selected for validation and testing, respectively. Noise is added to the test set according to Equation (12).
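The preprocessing steps can be sketched as follows. The sketch assumes that each raw record is split into contiguous, disjoint training, validation, and test parts before windowing, so that no window straddles two subsets. The 1024-point non-overlapping window and the random selection of half the validation and test samples follow the text; the default split fractions and all function names are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

Window = List[float]


def split_record(signal: Sequence[float], train_frac: float = 0.5,
                 val_frac: float = 0.25) -> Tuple[list, list, list]:
    """Split one raw record into contiguous, disjoint train/val/test parts.

    The test part takes whatever remains after the train and val parts.
    """
    n_train = int(len(signal) * train_frac)
    n_val = int(len(signal) * val_frac)
    return (list(signal[:n_train]),
            list(signal[n_train:n_train + n_val]),
            list(signal[n_train + n_val:]))


def segment_non_overlapping(signal: Sequence[float], win: int = 1024) -> List[Window]:
    """Cut a 1-D signal into consecutive, non-overlapping windows of `win` points.

    Trailing points that cannot fill a whole window are dropped.
    """
    n_windows = len(signal) // win
    return [list(signal[i * win:(i + 1) * win]) for i in range(n_windows)]


def random_half(samples: Sequence[Window], seed: int = 0) -> List[Window]:
    """Randomly keep half of the samples (rounded down), without replacement."""
    rng = random.Random(seed)
    return rng.sample(list(samples), len(samples) // 2)


def build_splits(record: Sequence[float], win: int = 1024, seed: int = 0):
    """Split first, then window each part, then subsample val and test."""
    train_raw, val_raw, test_raw = split_record(record)
    train = segment_non_overlapping(train_raw, win)
    val = random_half(segment_non_overlapping(val_raw, win), seed)
    test = random_half(segment_non_overlapping(test_raw, win), seed + 1)
    return train, val, test
```

Splitting before windowing is the design choice that guarantees the "no overlap" property. Windowing first and then shuffling windows into subsets would let adjacent, highly correlated windows land in different subsets.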

Table 5 shows the dataset division. This partitioning ensures the training set provides ample samples for the model to learn robust fault representations, while reserving 25% each for validation and testing to support reliable hyperparameter tuning and unbiased performance evaluation.

Table 6 reaffirms the findings of the first experiment: YConvFormer consistently outperforms the competing models in noise robustness. Although its accuracy is slightly lower than those of LiteFormer and ResNet18 at low noise (λ = 0), it maintains a significant lead at higher noise levels. Under strong noise (λ = 0.6), YConvFormer performs notably better than models such as LiteFormer, DCNN, and ResNet18, confirming its superior feature extraction and noise resilience. Its standard deviation at λ = 0.6 is relatively higher than those of some of its peers, but its markedly superior accuracy under these conditions makes it the overall best-performing model.

Figure 14 shows that the t-SNE analysis in this experiment mirrors the previous findings, where YConvFormer exhibits clear, compact feature clusters with minimal overlap. This highlights its exceptional ability to distinguish between fault and normal states, even under noisy conditions. The performance of LiteFormer is still strong under low noise but shows increasing feature overlap as noise levels rise, further emphasizing YConvFormer’s robust discriminability.

The bar chart in Figure 15 shows that YConvFormer again demonstrates its dual advantage of accuracy and stability. At higher noise levels (λ = 0.4 and λ = 0.6), its accuracy drops much less than that of the other models, particularly CLFormer and LiConvFormer, which decline sharply. These results corroborate the findings of the first experiment: YConvFormer’s robust feature extraction mechanism keeps performance degradation small even under challenging conditions.

Table 7 confirms the findings of the experiment on the XJTU dataset: YConvFormer consistently leads in noise robustness. At low noise (λ = 0), it records precision = 0.9975, recall = 0.9974, and F1-score = 0.9974—just behind LiteFormer (0.9986) and ahead of ResNet18 (0.9935). As noise rises, YConvFormer retains its advantage. Under strong noise (λ = 0.6), it achieves precision = 0.9174, recall = 0.8962, and F1-score = 0.8984, surpassing both LiteFormer and ResNet18 and demonstrating superior stability in noisy conditions. Although its standard deviation at λ = 0.6 is higher than some peers, its accuracy secures its status as the optimal model overall.

Figure 16 presents the confusion matrix that further supports the conclusions drawn in the first experiment. Even under extreme noise, YConvFormer shows superior ability in maintaining high intra-class cohesion and preventing random misclassification. The misclassifications primarily occur between semantically similar classes, which is indicative of the model’s noise-robust feature extraction and classification capability.

4.4. Ablation Experiment

This section validates the effectiveness of the proposed modules on both the SEU and XJTU datasets. The data partitioning follows the same method as described earlier.

Table 8 presents the fault diagnosis performance of time-domain (TD), frequency-domain (FD), and time–frequency combined (TFE) inputs with the AEBA module on the XJTU and SEU datasets. Most significantly, TFE achieves the highest accuracy at every noise level on both datasets, demonstrating the stability of time–frequency fusion. TD achieves high accuracy at λ = 0, but its accuracy drops sharply as noise increases: at λ = 0.6 it falls to 0.7834 on XJTU and 0.6566 on SEU. Meanwhile, its standard deviation rises from 0.1790 to 3.3128 on XJTU (and from 0.3000 to 1.5969 on SEU), indicating growing inconsistency under noise. This shows that time-domain signals are highly susceptible to noise interference and that the lack of frequency-domain information degrades performance under high noise. FD input is more robust to noise than TD. However, it consistently yields lower accuracy than TFE because it lacks time-domain transient details, and its standard deviation also grows from 0.1000 to 3.3151 on XJTU (and from 0.2301 to 2.5315 on SEU), reflecting similar stability issues at high noise. By contrast, TFE combines the transient pulse features of the time domain with the energy distribution features of the frequency domain, enhancing fault information through complementary feature fusion. On XJTU, TFE has the lowest standard deviation at both ends of the noise range (0.0000 at λ = 0 and 2.5768 at λ = 0.6), and on SEU it has the lowest at λ = 0 (0.1854). At λ = 0.6 on SEU, however, its standard deviation (3.0800) exceeds those of FD (2.5315) and TD (1.5969). Its higher accuracy (0.8920 vs. 0.8708 for FD) nevertheless makes it the best-performing input. Across all noise scenarios on both datasets, TFE consistently leads, strongly validating the necessity and effectiveness of time–frequency joint inputs for gearbox fault diagnosis.
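This section does not specify how the TFE input is constructed. As a rough illustration only, the sketch below assumes that each sample is paired with its single-sided DFT amplitude spectrum. This yields a time-domain view and a frequency-domain view that a two-branch network could consume; how YConvFormer actually fuses the two is not assumed. A naive O(N²) DFT keeps the example dependency-free; in practice, an FFT such as `numpy.fft.rfft` would be used. The function names are hypothetical.

```python
import cmath
import math
from typing import List, Sequence


def dft_amplitude(x: Sequence[float]) -> List[float]:
    """Amplitude |X[k]| / N for bins k = 0 .. N // 2 (naive O(N^2) DFT, not doubled)."""
    n = len(x)
    amps = []
    for k in range(n // 2 + 1):
        acc = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        amps.append(abs(acc) / n)
    return amps


def time_frequency_views(x: Sequence[float]) -> List[List[float]]:
    """Hypothetical TFE-style input: [raw waveform, amplitude spectrum]."""
    return [list(x), dft_amplitude(x)]


# A pure tone in bin 4 of a 32-point window shows up as a single spectral peak.
tone = [math.cos(2 * math.pi * 4 * t / 32) for t in range(32)]
time_view, freq_view = time_frequency_views(tone)
```

For a 1024-point sample, this yields a 1024-value waveform and a 513-bin spectrum. The time view keeps transient impulses; the spectrum concentrates periodic fault energy into a few bins, which is the complementarity the ablation attributes to TFE.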

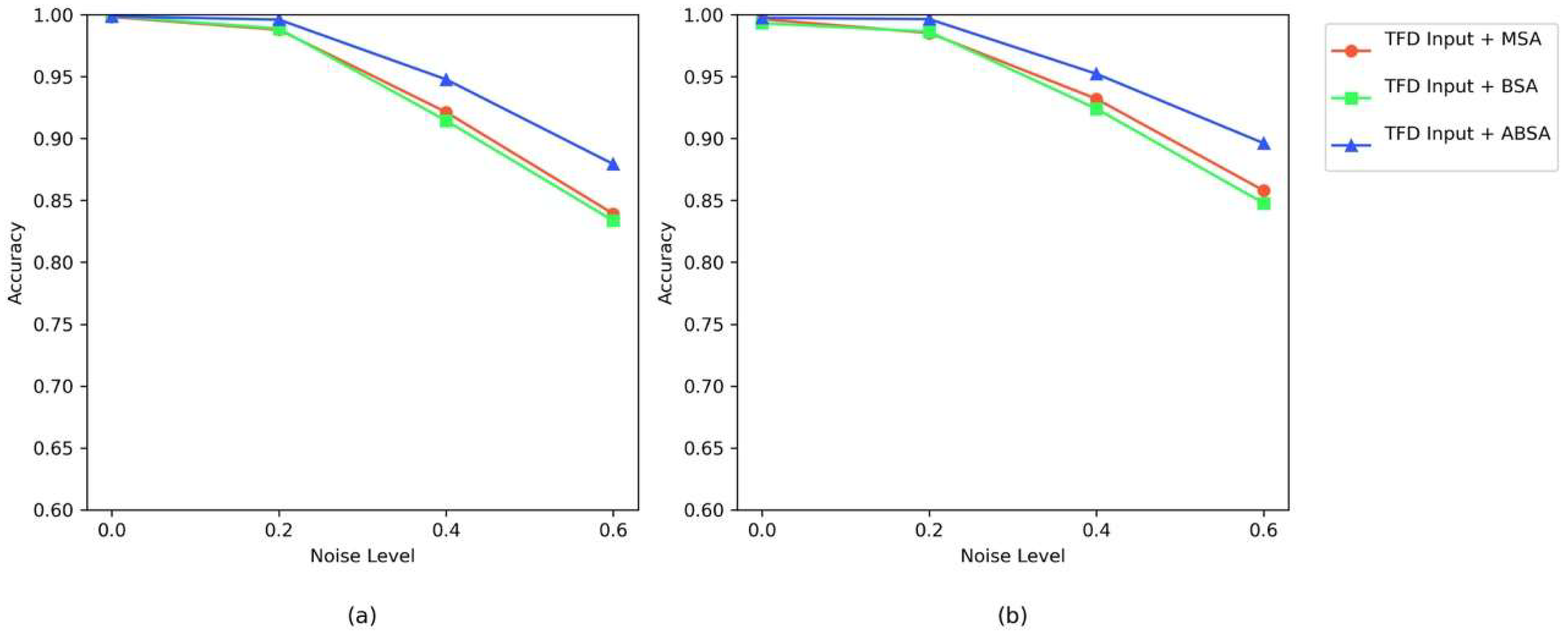

Figure 17 presents the average accuracy curves for the different inputs (time-domain TD, frequency-domain FD, and time–frequency combined TFE) with the AEBA module on the two datasets. The TFE input shows the strongest noise robustness across all noise levels. In Figure 17a, under low noise, the accuracy of TFE is comparable to that of TD and FD. Under high noise, however, TFE shows the smallest accuracy drop, from approximately 0.99 to 0.87, considerably less than TD (0.99 to 0.78) and FD (0.99 to 0.79). In Figure 17b, at λ = 0.6, TFE still maintains an accuracy of about 0.88, while TD drops to just 0.65. On this dataset, the diagnostic performance of FD is comparable to that of TFE and far better than that of TD. This is because the SEU signals are more susceptible to noise, and frequency-domain input retains fault information better, yielding stronger noise resistance [52]. This aligns with the experimental result that YConvFormer outperforms LiteFormer by 19.58% under strong noise, demonstrating that incorporating frequency-domain information significantly enhances accuracy. Both subplots show that the time–frequency joint input preserves complementary information, namely time-domain transient features and frequency-domain energy distribution, which enhances fault discriminability under noise. The consistently leading performance of TFE on both datasets further demonstrates the gain in noise robustness provided by the time–frequency fusion strategy. It also offers empirical support for the input-domain design used with the AEBA module.

Table 9 presents the fault diagnosis accuracy of TFE input combined with different attention mechanisms (MSA, BSA, and AEBA) on the XJTU and SEU datasets. TFE input with AEBA performs robustly on both datasets and across all noise levels from λ = 0 to λ = 0.6. In low-noise scenarios (λ = 0 and λ = 0.2), the accuracies and standard deviations of MSA, BSA, and AEBA are similar. In high-noise scenarios (λ = 0.4 and λ = 0.6), however, AEBA shows a significant advantage over both MSA and BSA. Although its standard deviation remains at an intermediate level, its markedly higher accuracy makes it the best-performing method.

Figure 18 displays the noise-robustness accuracy curves for TFE input combined with the different attention mechanisms (MSA, BSA, and AEBA) on both datasets. Most notably, the model incorporating axial decomposition (AEBA) shows the best noise resistance across all noise levels. Axial decomposition allows AEBA to decouple the multi-dimensional dependencies in time–frequency features, preventing attention restricted to a single dimension from overlooking critical information. In addition, its expanded receptive field strengthens cross-scale feature associations, enabling the model to reliably capture the time–frequency coupling patterns of faults even under noise interference. Ultimately, the consistently superior performance of AEBA on both datasets validates the improvement in noise resistance brought by axial decomposition and receptive-field optimization.
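The sketch below implements generic axial self-attention on a 2-D feature map to illustrate what axial decomposition means. Attention is computed along each row and then along each column, instead of over all positions at once. This is not the paper’s AEBA: the sketch has no learned query/key/value projections, no multi-head split, and no receptive-field expansion, and its names are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Grid = List[List[Vector]]  # indexed as [row][col][channel]


def attend_1d(seq: List[Vector]) -> List[Vector]:
    """Scaled dot-product self-attention over one sequence, with Q = K = V = seq."""
    d = len(seq[0])
    out = []
    for q in seq:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in seq]
        top = max(scores)  # subtract the max before exp for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        out.append([sum(w * v[c] for w, v in zip(weights, seq)) / total
                    for c in range(d)])
    return out


def axial_attention(x: Grid) -> Grid:
    """Row-wise attention followed by column-wise attention on a 2-D feature map."""
    n_rows, n_cols = len(x), len(x[0])
    # Pass 1: each row attends only within itself.
    after_rows = [attend_1d(row) for row in x]
    # Pass 2: each column attends only within itself.
    cols = [attend_1d([after_rows[r][c] for r in range(n_rows)])
            for c in range(n_cols)]
    # Transpose back to [row][col][channel].
    return [[cols[c][r] for c in range(n_cols)] for r in range(n_rows)]
```

For an H × W map, full self-attention compares all (HW)² position pairs, whereas the two axial passes compare HW(H + W) pairs. This saving is why axial factorization is a common way to keep attention tractable on 2-D time–frequency feature maps.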