1. Introduction

Proxy re-signature schemes provide a mechanism for the transitive authentication of cross-domain digital identities. In proxy re-signature, a proxy (P) transforms signatures on the same message between two distinct signers. Specifically, using a re-signature key, proxy P can convert delegator A’s signature on a given message into a signature on that same message that verifies under delegatee B’s public key. The re-signed signature is indistinguishable from one genuinely generated by delegatee B, while remaining clearly distinguishable from delegator A’s original signature. Proxy re-signature has applications in fields such as Digital Rights Management (DRM), cross-domain certificate interoperability, and access control in distributed systems. A prime example is international travel document systems. Consider a traveler (C) who holds an electronic signature issued by their home country (E) and seeks entry into country F. The border control agency in country F, acting as the proxy, first verifies the validity of this signature. Upon successful verification, the agency converts it into an electronic signature that conforms to country F’s standards. Relevant authorities within country F can then verify the traveler’s identity, and thereby achieve transnational authentication, using only the public key of country F’s border control agency. This process obviates the need to manage and maintain complex transnational certificate chains.
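To make the transformation described above concrete, the following is a sketch of a classical pairing-based bidirectional re-signature of the kind studied in [2]. It is given only as an illustration and is not the lattice-based scheme proposed in this paper. The symbols are assumptions introduced here: $g$ generates a group $\mathbb{G}_1$ of prime order $q$, $e:\mathbb{G}_1\times\mathbb{G}_1\to\mathbb{G}_T$ is a bilinear pairing, $H$ hashes messages into $\mathbb{G}_1$, and $a$, $b$ are the private keys of A and B.

$$
\begin{aligned}
\text{Keys:}\quad & pk_A = g^{a},\qquad pk_B = g^{b},\qquad rk_{A\to B} = b/a \bmod q \\
\text{Sign (A):}\quad & \sigma_A = H(m)^{a} \\
\text{ReSign (P):}\quad & \sigma_B = \sigma_A^{\,rk_{A\to B}} = H(m)^{a\cdot b/a} = H(m)^{b} \\
\text{Verify:}\quad & e(\sigma_B, g) = e\big(H(m), pk_B\big)
\end{aligned}
$$

In this sketch the proxy checks $e(\sigma_A, g) = e(H(m), pk_A)$ before re-signing, mirroring the border agency's verification step. The output $H(m)^{b}$ is exactly what B would have produced, which is why the re-signed signature cannot be told apart from one generated by B.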

The concept of proxy re-signature was first introduced by Blaze et al. [1]. Ateniese and Hohenberger [2] formally defined the security model and explored additional application scenarios. Early applications primarily focused on smart card key updates, enabling the dynamic expansion of terminal device key spaces using proxy re-signatures. Subsequently, their use has expanded to areas such as anonymous group signatures and distributed system path attestation. For instance, in distributed network routing verification, data packets can leverage a chain of proxy re-signatures to prove their complete transmission path, with verifiers requiring only the public key of the terminal node. However, these early proxy re-signature schemes were predominantly based on certificate-based public-key cryptosystems. In such systems, the public keys of the delegatee and delegator must be obtained from certificates prior to signature verification, leading to significant certificate distribution and management challenges. To address the bottleneck of certificate management, researchers [3,4] have developed various identity-based proxy re-signature (IBPRS) schemes. These schemes use user identity information as public keys, thereby avoiding reliance on certificates.

Nevertheless, in IBPRS schemes, the private keys of both the delegatee and delegator are generated by a Private Key Generator (PKG). This inherently introduces a key escrow problem [

5], as the PKG possesses knowledge of all user private keys, enabling it to potentially eavesdrop on communications or to forge user signatures. To resolve both certificate management and key escrow issues, scholars have begun investigating certificateless proxy re-signature (CLPRS) schemes. Guo et al. [

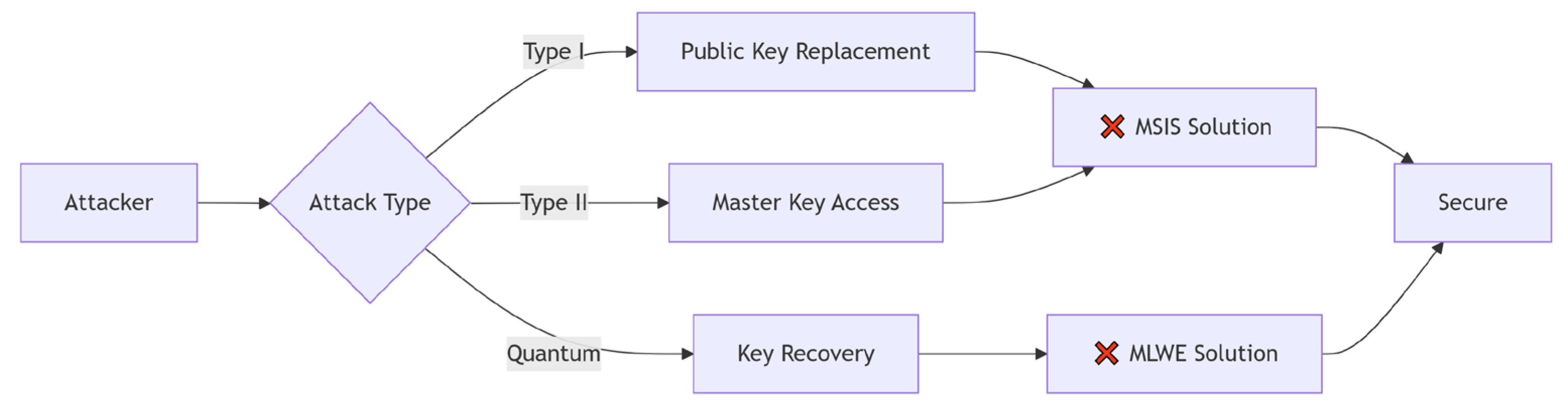

6] combined certificateless cryptography with proxy re-signature, proposing the first bidirectional CLPRS scheme. Although this approach eliminated certificate dependency and avoided the key escrow problem, a concrete security proof was not provided. The proxy re-signature schemes discussed previously are all based on traditional public key cryptography, with their security relying on the presumed intractability of problems such as large integer factorization and discrete logarithm problems. This reliance introduces inherent security vulnerabilities to the system. With the advent of quantum computing, the security of public-key cryptosystems founded on classically hard number-theoretic problems faces a significant challenge. As Shor [

7] demonstrated, the advancement of quantum computing renders problems like discrete logarithm and integer factorization computationally tractable. Thus, developing post-quantum certificateless proxy re-signature schemes is the only comprehensive approach to resolving the certificate management bottleneck, key escrow problem, and imminent quantum computational threat faced by current proxy re-signature technologies.

To address these problems, we introduce a computationally efficient post-quantum certificateless proxy re-signature scheme based on algebraic lattices. This scheme is designed to guarantee the security and integrity of communication messages exchanged among the Key Generation Center (KGC), proxy, delegator, and delegatee, even in quantum attack environments.

3. Related Work

Current research hotspots in proxy re-signature primarily encompass identity-based schemes, lightweight schemes for the Internet of Things (IoT), certificateless schemes, and post-quantum secure schemes. Dutta et al. [8] proposed an identity-based unidirectional proxy re-signature scheme; however, it is susceptible to private key leakage. Tian et al. [9] constructed an identity-based bidirectional proxy re-signature scheme. However, its re-signature process requires joint computation involving the private keys of both the delegator and delegatee, which increases key management complexity. Zhang et al. [10] introduced an identity-based non-interactive proxy re-signature scheme tailored for Mobile Edge Computing. While this scheme reduces computational overhead by avoiding bilinear pairing operations, its reliance on the hardness of large integer factorization leaves it vulnerable to quantum attacks. For mobile Internet environments, Lei et al. [11] proposed a unidirectional, variable-threshold proxy re-signature scheme with shorter signatures, lower computational cost, more efficient verification, and improved adaptability; the construction was also proven secure in the standard model against both collusion and adaptive chosen-message attacks. Nevertheless, its reliance on hardness assumptions over bilinear pairing groups means that it cannot withstand quantum attacks.

Within the certificateless setting, Fan et al. [12] remedied the limitations of the scheme devised by Tian et al. [9], presenting a certificateless proxy re-signature scheme with better operational efficiency and shorter private keys. In a separate study, Wu et al. [13] proposed a flexible unidirectional certificateless proxy re-signature scheme. However, because this scheme depends on hardness assumptions over bilinear pairing groups, it cannot resist quantum attacks. Zhang et al. [14] developed a revocable certificateless proxy re-signature scheme that supports signature evolution within Electronic Health Record (EHR) sharing systems. Specifically, it facilitates dynamic user management and enables efficient revocation and updating of signatures in response to evolving data requirements. However, this scheme also lacks resistance to quantum attacks.

Currently, post-quantum signature schemes predominantly fall into three main classes: hash-based, multivariate-polynomial-based, and lattice-based. The security of hash-based signatures rests on the collision resistance of the underlying hash functions; however, such schemes are often limited in both signature compactness and execution speed. Multivariate polynomial signatures, on the other hand, are known to be vulnerable to algebraic cryptanalysis, which can undermine their claimed security. In contrast to these two paradigms, lattice-based signatures offer notable strengths in computational performance and security. The foundation for lattice-based public-key cryptography was laid by Ajtai [15], who demonstrated a fundamental connection between the average-case difficulty and worst-case intractability of certain lattice problems. Following this foundational work, Gentry [16], in the course of developing signature schemes from lattices, introduced the precise notion of a ‘one-way trapdoor function.’ In [17], Lyubashevsky introduced a novel methodology for converting identification schemes into signature schemes using the Fiat-Shamir transform [18,19]. This approach incorporates an ‘abort’ mechanism that discards any signature value that might leak private key information, thereby ensuring that the output signature values follow a uniform distribution. Later, Lyubashevsky [20] employed rejection sampling to generalize this technique to arbitrary target distributions and demonstrated that signature schemes based on the Learning With Errors (LWE) problem could achieve smaller public key sizes.
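The ‘abort’ idea can be illustrated with a one-dimensional toy model. The sketch below is not the construction of [17] or [20]: it replaces polynomial vectors with single integers, and the bounds `B` and `BETA` and the function names are hypothetical. It shows only the core step: a response `z = y + cs` is released when it falls inside a range that every possible secret-dependent shift `cs` can reach, so the accepted values are uniform and independent of `cs`.

```python
import random

B = 1000     # hypothetical bound on the uniform masking value y
BETA = 50    # hypothetical bound on the secret-dependent shift |c*s|

def sign_with_aborts(cs, rng):
    """Return (z, attempts), restarting until z = y + cs is safe to release."""
    assert abs(cs) <= BETA
    attempts = 0
    while True:
        attempts += 1
        y = rng.randint(-B, B)      # fresh uniform mask on every attempt
        z = y + cs
        if abs(z) <= B - BETA:      # accept only inside the shift-independent range
            return z, attempts

def accepted_outputs(cs):
    """Exhaustively list the accepted z over every possible mask y."""
    return sorted(y + cs for y in range(-B, B + 1) if abs(y + cs) <= B - BETA)
```

For every admissible shift, `accepted_outputs(cs)` contains each integer in `[-(B - BETA), B - BETA]` exactly once, so an accepted `z` carries no information about `cs`. With these toy bounds about 95% of attempts are accepted; the remaining attempts are the aborts.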

Building upon Lyubashevsky’s signature scheme [20], Tian et al. [21] introduced a lattice-based certificateless signature scheme that is notable for its shorter private keys and enhanced efficiency compared with other contemporary schemes. Subsequently, Xie et al. [22] proposed a versatile unidirectional lattice-based proxy re-signature scheme. However, a significant drawback of their approach is that users generate their own public and private keys entirely by themselves, which creates vulnerabilities to attacks from malicious users. Furthermore, their scheme risks exposing the delegatee’s private key during the generation of the re-signature key. Fan et al. [23] developed a lattice-based re-signature method proven secure in the CCA-PRE model. Nonetheless, this scheme is susceptible to man-in-the-middle attacks and requires more storage for re-signatures. Jiang et al. [24] put forward a lattice-based proxy re-signature scheme that permits a message to be re-signed multiple times. Chen et al. [25] proposed a unidirectional, multi-use lattice-based proxy re-signature construction. This scheme uses private re-signature keys and allows an individual message to undergo an unbounded number of re-signing operations. Separately, Luo et al. [26] proposed an attribute-based proxy re-signature scheme built on standard lattices. Using dual-mode cryptographic techniques, Zhou et al. [27] developed a certificateless proxy re-signature scheme designed to resist collusion attacks; however, no efficiency analysis of this scheme was provided, and it suffers from large signature sizes.

To date, research on certificateless proxy re-signature schemes based on algebraic lattices is not extensive. This scarcity is primarily because certificateless signature schemes constructed using algebraic lattices often have large signatures or private keys, which places a notable burden on storage resources. To address the challenge of large signatures, Güneysu et al. [28] proposed splitting each value into two components: its higher-order bits and its lower-order bits. The lower-order bits can be dropped provided that their removal does not alter the rounding outcome of the higher-order bits, which reduces storage requirements. Concurrently, Bai et al. [29] introduced a method for discarding signature subcomponents, which implicitly incorporates a proof of knowledge of the noise within the overall proof of knowledge of the private key. Dilithium, standardized by NIST as a post-quantum signature algorithm (ML-DSA in FIPS 204), uses compression strategies comparable to those of Güneysu et al. It further makes use of ‘hints’ within its rounding operations to avert failures during verification. Furthermore, Dilithium is implemented on algebraic lattices, and its security is based on the presumed hardness of the Module Small Integer Solution (MSIS) and Module Learning With Errors (MLWE) problems [30]. Because its design incorporates uniform key sampling, the scheme resists known algebraic and subfield attacks. For the NIST-selected parameter sets of Dilithium (e.g., security levels 2, 3, and 5), solving the corresponding MLWE and MSIS instances remains computationally infeasible under the best currently known classical and quantum attacks [31]. In summary, existing certificateless proxy re-signature schemes are typically either built on traditional hardness assumptions, rendering them vulnerable to quantum attacks, or achieve quantum security at the cost of low storage and computational efficiency, making them unsuitable for practical deployment. Consequently, there is substantial value in designing lattice-based certificateless proxy re-signature schemes that achieve both high efficiency and strong security guarantees.
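The high-order/low-order split described above can be sketched as follows. This is a simplified, Dilithium-style decomposition written for illustration, not code from [28] or from the scheme in this paper. The modulus `Q` is the one used by Dilithium and `GAMMA2` is one of its published rounding ranges, assumed here; the function names are hypothetical.

```python
Q = 8380417               # modulus used by Dilithium
GAMMA2 = (Q - 1) // 32    # assumed rounding range (one published Dilithium choice)
ALPHA = 2 * GAMMA2        # width of one high-bits bucket

def centered_mod(r, m):
    """Representative of r modulo m in the interval (-m/2, m/2]."""
    r %= m
    return r - m if r > m // 2 else r

def decompose(r):
    """Split r into (high, low) such that r = high * ALPHA + low (mod Q)."""
    r %= Q
    low = centered_mod(r, ALPHA)
    if r - low == Q - 1:          # wrap-around bucket at the top of the range
        return 0, low - 1
    return (r - low) // ALPHA, low

def high_bits(r):
    """Keep only the high-order part; the low-order part is what gets dropped."""
    return decompose(r)[0]

def recompose(high, low):
    """Inverse of decompose, used here only to check the split."""
    return (high * ALPHA + low) % Q
```

With these constants, `high_bits(5 * ALPHA + 1000)` and `high_bits(5 * ALPHA + 1000 + 37)` are both 5: a small perturbation far from a bucket boundary leaves the high-order part unchanged, so the low-order part can be omitted. Next to a boundary the same perturbation can move the value into the neighbouring bucket, which is the case that Dilithium's ‘hints’ are meant to handle.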

8. Limitations and Future Work

While the proposed scheme offers significant advantages over comparable lattice-based schemes in terms of post-quantum security, its certificateless design, and computational and storage efficiency, its widespread deployment in resource-constrained environments, such as the Internet of Things (IoT), requires acknowledging its inherent limitations.

First, concerning energy consumption, our performance evaluation indicates that the proposed scheme outperforms existing solutions in terms of computational overhead. Nevertheless, the underlying lattice-based cryptographic operations are inherently computationally intensive. Furthermore, the rejection-sampling mechanism integral to the scheme has a non-constant execution time. Although the average number of repetitions is acceptable, the iteration count for signature generation still varies from one signature to the next. This non-determinism can cause fluctuations in the energy consumption of a device, posing a considerable challenge for battery-dependent IoT terminals that demand extended operational lifetimes. Second, the scalability of the system presents another key concern. The system model of the scheme depends on a centralized Key Generation Center (KGC) for the issuance of partial private keys. In large-scale IoT ecosystems comprising millions of devices, this centralized KGC is likely to become a performance bottleneck and a single point of failure. Third, and of critical importance, this paper details the scheme’s design only at the algorithmic level. Although the officially specified Dilithium algorithm is purported to resist side-channel attacks, its resilience on physical hardware is not guaranteed. The potential for key information leakage through side-channel attacks, such as power analysis or timing attacks, requires empirical validation once the scheme is implemented in physical devices. Finally, the scheme’s design focuses primarily on the authenticity of identities and the unforgeability of messages, without optimizing privacy features such as signature unlinkability or signer anonymity. This may increase the risk of privacy leakage in highly sensitive application scenarios such as electronic health record (EHR) sharing.
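The variability in signing time attributed to rejection sampling above can be made explicit with a simple model. Assume, as a simplification, that each signing attempt is accepted independently with the same probability $p$; the value of $p$ depends on the parameter set and is not fixed here. The number of attempts $N$ is then geometrically distributed:

$$
\Pr[N = k] = (1-p)^{k-1}\,p, \qquad \mathbb{E}[N] = \frac{1}{p}, \qquad \operatorname{Var}[N] = \frac{1-p}{p^{2}}.
$$

Under this model the mean is bounded but the variance is nonzero for any $p < 1$, so the number of attempts, and with it the per-signature energy cost, has no fixed upper bound. This is the source of the fluctuation described for battery-powered devices.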

In conclusion, our future work will focus on four core directions: (1) Empirical validation: Validating the scheme’s deployment feasibility and efficiency advantages on resource-constrained end devices through concrete IoT use cases (e.g., smart health monitoring or environmental sensor networks). (2) Fine-grained hardware-software co-optimization: Researching and developing constant-time implementations of our scheme to eliminate timing channels and flatten energy consumption. (3) Enhanced scalability: Exploring distributed or hierarchical KGC architectures to alleviate centralization bottlenecks. (4) Strengthened security: Integrating mature side-channel countermeasures and conditional privacy preservation into the scheme implementation, with formal proofs of security.