GAPO: A Graph Attention-Based Reinforcement Learning Algorithm for Congestion-Aware Task Offloading in Multi-Hop Vehicular Edge Computing

Abstract

1. Introduction

- We propose a novel GNN-based state encoder that effectively captures global contextual information and spatiotemporal dependencies from the complex network topology, overcoming the problem of incomplete state information in traditional RL methods.

- We design a novel attention-based policy network within an Actor–Critic framework that intelligently selects the optimal offloading destination while collaboratively determining the continuous ratios for offloading and resource allocation.

- We develop a comprehensive multi-objective reward function that guides the agent to simultaneously minimize task completion latency and, crucially, to alleviate network congestion on multi-hop communication links.

- Through comprehensive simulations and ablation studies, we demonstrate that GAPO significantly outperforms traditional heuristics and standard DRL approaches in both latency and congestion mitigation.

2. Related Work

2.1. Traditional and Heuristic Approaches

2.2. Deep Reinforcement Learning for Task Offloading

2.3. Emerging GNN-Based DRL Methods

2.4. Comparison with State-of-the-Art and GAPO’s Contributions

3. System Model

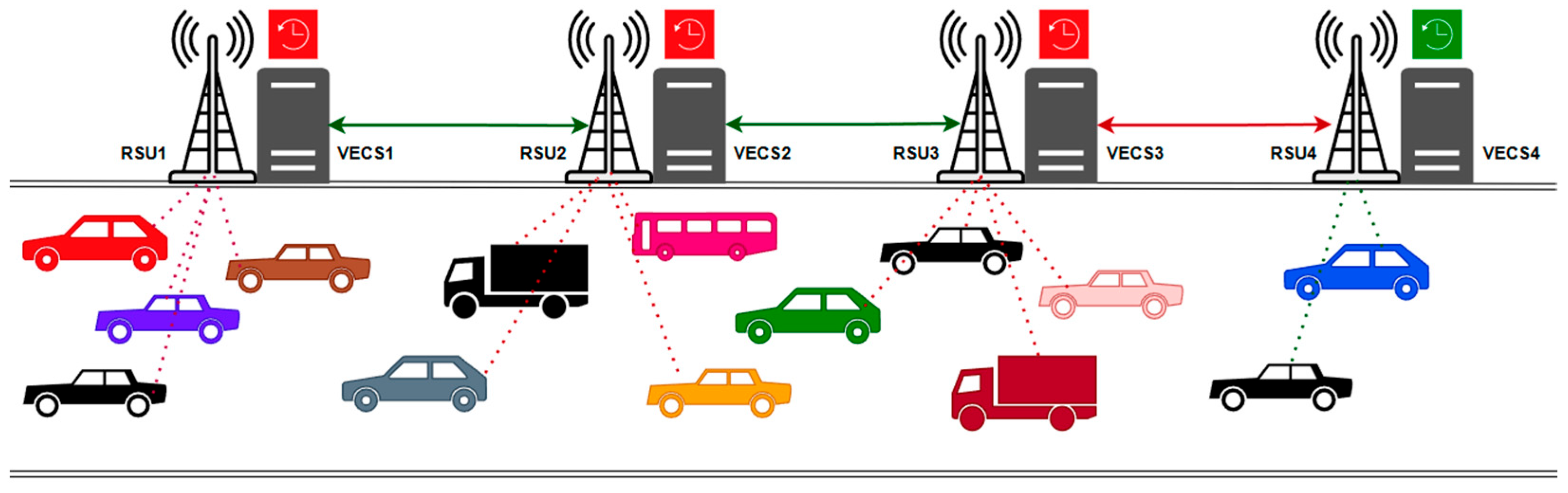

3.1. System Architecture

3.2. Network Graph Representation

Each node is described by the following features:

- Node type: A one-hot encoding vector indicating whether the node is a vehicle or an RSU.

- Computational capacity: The node’s maximum processing rate (in Gcps).

- Current computational load: The instantaneous CPU utilization of the node.

- Available computational resources: The node’s currently unallocated computational resources.

- Location: The node’s coordinates, which change over time for vehicles and are fixed for RSUs.

- Node degree: The number of connections the node has in the graph.

Each link is described by the following features:

- Link type: A one-hot encoding indicating whether it is a V2R or R2R link.

- Bandwidth capacity: The maximum data rate of the link.

- Current link load: The instantaneous utilization of the link.

- Communication latency: The estimated transmission latency of the link.

- Link reliability: The packet loss rate of the link.
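As a minimal sketch of how the node and link features listed above could be packed into arrays for a GNN, the code below assumes NumPy, a hypothetical `Node` record, links supplied as tuples, and available resources approximated as capacity × (1 − load). That last formula is our assumption, not a definition taken from the model.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Node:
    """Hypothetical node record; field names are illustrative only."""
    is_rsu: bool
    capacity_gcps: float   # computational capacity (giga-cycles per second)
    load: float            # current utilization in [0, 1]
    x: float               # coordinates (vehicles move, RSUs are fixed)
    y: float


def build_graph_features(nodes, links):
    """Return (node_feats, edge_index, edge_feats).

    links: list of (u, v, is_r2r, bandwidth_mbps, load, latency_s, loss_rate),
    with each undirected link listed once.
    """
    degree = np.zeros(len(nodes))
    for u, v, *_ in links:
        degree[u] += 1
        degree[v] += 1

    node_feats = np.array([
        [float(not n.is_rsu), float(n.is_rsu),          # one-hot type: [vehicle, RSU]
         n.capacity_gcps, n.load,
         n.capacity_gcps * (1.0 - n.load),              # available resources (assumed form)
         n.x, n.y, degree[i]]
        for i, n in enumerate(nodes)
    ])
    edge_index = np.array([[lk[0] for lk in links], [lk[1] for lk in links]])
    edge_feats = np.array([
        [float(not r2r), float(r2r), bw, ld, lat, loss]  # one-hot type: [V2R, R2R]
        for _, _, r2r, bw, ld, lat, loss in links
    ])
    return node_feats, edge_index, edge_feats
```

Because many GNN libraries expect directed edges, each undirected link would typically be added in both directions before message passing.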

3.3. Task Processing and Communication Latency Model

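- Offloading Destination Selection: The discrete destination node chosen for the task.

- Offloading Ratio: The continuous fraction of the task that is offloaded to the destination.

- Computational Resource Allocation Ratio: The continuous fraction of the destination’s computational resources allocated to the task.

- Transmission Time:

- Queuing Time: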
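The transmission and queuing terms listed above can be illustrated with a short sketch. It assumes that transmission time is data size divided by link bandwidth (1 KB = 1024 bytes). As a stand-in for the paper’s queuing model, it uses Kingman’s G/G/1 approximation of the mean waiting time, with utilization capped at the 0.99 stability factor from the simulation parameters. How GAPO actually applies that factor may differ, and the function names are hypothetical.

```python
def transmission_time(data_kb: float, bandwidth_mbps: float) -> float:
    """Time (s) to send data_kb kilobytes over a link of bandwidth_mbps megabits per second."""
    bits = data_kb * 1024 * 8           # assumes 1 KB = 1024 bytes
    return bits / (bandwidth_mbps * 1e6)


def kingman_wait(arrival_rate: float, service_rate: float,
                 ca2: float = 1.0, cs2: float = 1.0,
                 stability: float = 0.99) -> float:
    """Kingman's G/G/1 approximation of the mean queuing delay (s).

    arrival_rate, service_rate: tasks per second.
    ca2, cs2: squared coefficients of variation of inter-arrival and service times
    (ca2 = cs2 = 1 recovers the exact M/M/1 waiting time).
    Utilization is capped at `stability` so the estimate stays finite under overload.
    """
    rho = min(arrival_rate / service_rate, stability)
    return (rho / (1.0 - rho)) * ((ca2 + cs2) / 2.0) / service_rate
```

For a 1024 KB task on the 30 Mbps R2R backbone, `transmission_time(1024, 30)` is about 0.28 s per hop. The cap means an overloaded queue yields a large but finite delay estimate rather than a division by zero.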

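| Algorithm 1: Multi-Hop Path Latency Calculation |
|---|
| Initialize the best path and the best latency to None. |
| For each candidate RSU: |
| If the vehicle is directly connected to the RSU, use the V2R link latency. |
| Else, accumulate the R2R latency hop by hop along the RSU path; if such a path exists, extend the V2R hop with it (⊕ is path concatenation); otherwise, continue with the next candidate. |
| If the resulting latency is lower than the best latency, update the best path and the best latency. |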
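The following sketch illustrates the kind of computation Algorithm 1 performs, assuming the linear RSU chain of the highway scenario (consistent with the discussion in Section 6). Given the V2R latencies of the RSUs a vehicle reaches directly and the per-hop R2R latencies, it returns the lowest-latency RSU path to a target. The function name and input layout are hypothetical, and the per-link latencies are taken as inputs rather than computed from the latency model.

```python
import math
from typing import Dict, List, Optional, Tuple


def multihop_latency(target: int, v2r: Dict[int, float], r2r: List[float]
                     ) -> Tuple[Optional[List[int]], float]:
    """Lowest-latency path from a vehicle to RSU `target` on a linear RSU chain.

    v2r: V2R latency (s) to each RSU the vehicle can reach directly.
    r2r: r2r[i] is the latency (s) of the R2R link between RSU i and RSU i + 1.
    The returned path lists RSUs only; the vehicle is the implicit first hop.
    """
    if target in v2r:                      # direct V2R link: single hop
        return [target], v2r[target]
    best_path, best_lat = None, math.inf
    for entry, lat in v2r.items():         # try every RSU the vehicle can reach
        step = 1 if target > entry else -1
        chain = list(range(entry, target + step, step))   # entry ... target
        # accumulate R2R latency hop by hop along the chain
        hops = sum(r2r[min(a, b)] for a, b in zip(chain, chain[1:]))
        if lat + hops < best_lat:
            best_path, best_lat = chain, lat + hops
    return best_path, best_lat
```

If the vehicle reaches no RSU, the function returns `(None, inf)`, mirroring the case in which no feasible path exists.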

3.4. Computation Model and Total Latency Analysis

- Fully Local Execution:

- Full Offloading to an RSU:

- Partial Offloading:

- Minimize Average Task Processing Latency

- Minimize Average R2R Link Congestion
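The three execution modes and the two objectives can be tied together in a short sketch. It makes three modeling assumptions for illustration, none of which are the paper’s stated equations. First, under partial offloading the local and offloaded parts run in parallel, so completion time is the larger of the two branches. Second, the offloaded branch pays communication and queuing delay before computing on its allocated share of the RSU. Third, the two objectives are combined by a weighted sum with illustrative weights. All names are hypothetical.

```python
from statistics import mean


def completion_latency(cycles_gc: float, offload_ratio: float, alloc_ratio: float,
                       f_local_gcps: float, f_edge_gcps: float,
                       comm_latency: float, queue_wait: float = 0.0) -> float:
    """End-to-end latency (s) of one task.

    offload_ratio = 0 -> fully local execution; offload_ratio = 1 -> full offloading.
    comm_latency: V2R plus any R2R latency for the offloaded data (computed elsewhere).
    """
    t_local = (1.0 - offload_ratio) * cycles_gc / f_local_gcps
    if offload_ratio == 0.0:
        return t_local
    t_edge = offload_ratio * cycles_gc / (alloc_ratio * f_edge_gcps)
    t_remote = comm_latency + queue_wait + t_edge
    return max(t_local, t_remote)   # both parts are assumed to run in parallel


def objective(latencies, r2r_congestion, w_latency=1.0, w_congestion=1.0):
    """Weighted sum of average task latency and average R2R link congestion (weights assumed)."""
    return w_latency * mean(latencies) + w_congestion * mean(r2r_congestion)
```

With the simulation defaults (0.25 Gcps on board, a 25 Gcps RSU, and a 1 Gc task), fully local execution takes 4 s. Full offloading with 0.28 s of communication latency takes about 0.32 s, which shows why offloading dominates unless the network path is congested.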

4. Graph Attention Policy Optimization (GAPO)

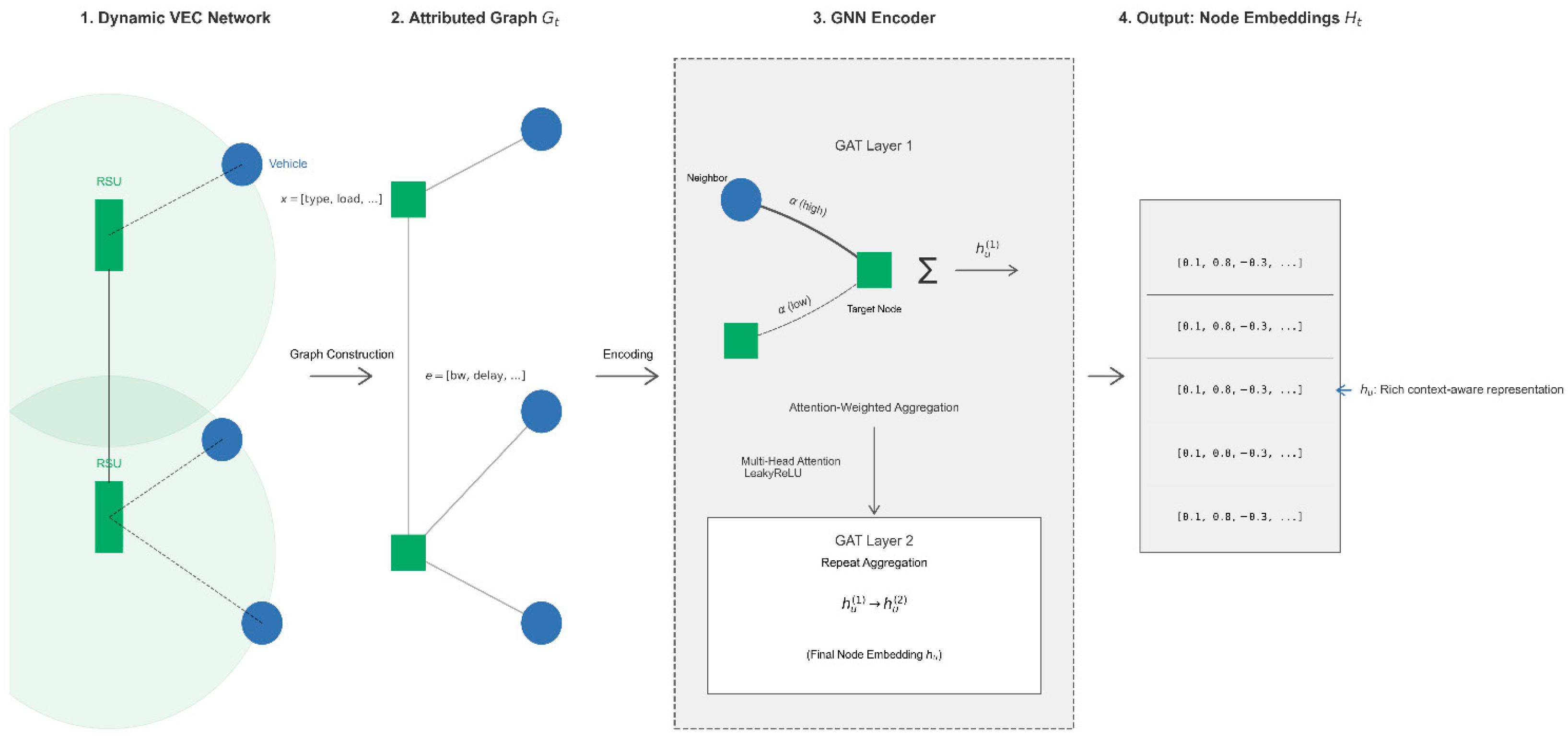

4.1. Overview of the GAPO Algorithm Framework

4.2. GNN Network and Embeddings
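The GATv2 attention of Brody et al. [20], which Section 6 names as the kind of GNN used by GAPO, can be sketched as a single-head NumPy layer. The scoring function applies the attention vector after the nonlinearity, e = aᵀ LeakyReLU(W_dst h_i + W_src h_j), and this ordering is what distinguishes GATv2 from the original GAT. The sketch omits multi-head concatenation (the hyperparameters list two heads), edge features, bias, and the output nonlinearity. Whether GAPO’s encoder uses edge features is not stated here, and all names are hypothetical.

```python
import numpy as np


def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)


def gatv2_layer(h, edge_index, W_src, W_dst, a):
    """Single-head GATv2-style message passing.

    h: (N, F) node features; edge_index: (2, E) rows (source j, target i);
    W_src, W_dst: (F, D) projections; a: (D,) attention vector.
    Nodes without incoming edges get a zero output, so self-loops are usually added.
    """
    n = h.shape[0]
    src, dst = edge_index
    hs, hd = h @ W_src, h @ W_dst
    # GATv2 score: nonlinearity first, then the attention vector
    scores = leaky_relu(hd[dst] + hs[src]) @ a              # one score per edge
    # softmax over the incoming edges of each target node
    seg_max = np.full(n, -np.inf)
    np.maximum.at(seg_max, dst, scores)
    w = np.exp(scores - seg_max[dst])
    denom = np.zeros(n)
    np.add.at(denom, dst, w)
    alpha = w / denom[dst]
    # aggregate attention-weighted neighbour messages
    out = np.zeros((n, hs.shape[1]))
    np.add.at(out, dst, alpha[:, None] * hs[src])
    return out
```

Every edge is touched a constant number of times, so one layer costs time linear in the number of edges, and each node’s update depends only on its incoming neighbors.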

4.3. Attention-Based Actor–Critic Policy Network

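| Algorithm 2: Actor Network Forward Pass for Joint Action Generation |
|---|
| Phase A: Select Offloading Target via Attention |
| For each candidate target, compute an attention score between the query and the candidate’s embedding. |
| Mask scores for invalid/unavailable targets. |
| Apply Softmax to the masked scores and select the offloading target. |
| Phase B: Determine Continuous Ratios |
| If the selected target index is 0, set the ratio to 1; otherwise, sample the continuous offloading and resource allocation ratios from their distributions. |
| Calculate total log probability log π from the distributions. |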
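A minimal NumPy sketch of the two-phase forward pass follows. It assumes scaled dot-product scoring between a query (built from the task and source vehicle) and the candidate embeddings, a masked softmax for the target, and Beta distributions with concentration softplus(·) + 1 for the two continuous ratios. Treating index 0 as local execution, with offloading ratio 0 and allocation ratio 1, is our reading of Phase B and not a confirmed detail. In training, these operations would run in an autodiff framework; all names here are hypothetical.

```python
from math import lgamma, log

import numpy as np


def beta_log_prob(x, a, b):
    """Log density of Beta(a, b) at x in (0, 1)."""
    return lgamma(a + b) - lgamma(a) - lgamma(b) + (a - 1) * log(x) + (b - 1) * log(1 - x)


def actor_forward(query, keys, valid, ratio_logits, rng):
    """Return (target, offload_ratio, alloc_ratio, log_prob).

    query: (D,) embedding of the task and source vehicle.
    keys: (K, D) candidate embeddings; index 0 is assumed to be local execution.
    valid: (K,) boolean mask of usable targets (at least one True).
    ratio_logits: (4,) raw network outputs for the two Beta distributions.
    """
    # Phase A: attention scores, masking, and categorical target selection
    scores = keys @ query / np.sqrt(query.size)
    scores = np.where(valid, scores, -np.inf)
    probs = np.exp(scores - scores.max())
    probs /= probs.sum()
    target = int(rng.choice(len(probs), p=probs))
    log_prob = float(np.log(probs[target]))

    # Phase B: continuous ratios (assumed fixed for local execution)
    if target == 0:
        return target, 0.0, 1.0, log_prob
    a1, b1, a2, b2 = np.logaddexp(0.0, ratio_logits) + 1.0   # softplus + 1 keeps Beta unimodal
    offload = float(rng.beta(a1, b1))
    alloc = float(rng.beta(a2, b2))
    log_prob += beta_log_prob(offload, a1, b1) + beta_log_prob(alloc, a2, b2)
    return target, offload, alloc, float(log_prob)
```

Summing the categorical and Beta log-probabilities gives the joint log π that a policy-gradient update needs, because the three action components are sampled independently given the state.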

4.4. Policy Optimization Algorithm
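GAPO’s hyperparameters (γ = 0.99, λ = 0.95, 10 epochs per update cycle, value and entropy loss coefficients of 0.5 and 0.01) and its references to GAE [21] and PPO [22] suggest a PPO-style update. Under that assumption, the advantage computation and the clipped loss can be sketched in NumPy as shown below. The clip range of 0.2 is the common PPO default and is assumed, since it is not listed in the hyperparameters. A real implementation would compute this loss in an autodiff framework so that it can be backpropagated.

```python
import numpy as np


def gae(rewards, values, dones, last_value, gamma=0.99, lam=0.95):
    """Generalized Advantage Estimation.

    values[t] = V(s_t); last_value = V(s_T) for bootstrapping;
    dones[t] = 1.0 if the episode ended after step t.
    Returns (advantages, value targets).
    """
    T = len(rewards)
    adv = np.zeros(T)
    next_adv, next_value = 0.0, last_value
    for t in reversed(range(T)):
        nonterminal = 1.0 - dones[t]
        delta = rewards[t] + gamma * next_value * nonterminal - values[t]   # TD error
        next_adv = delta + gamma * lam * nonterminal * next_adv
        adv[t] = next_adv
        next_value = values[t]
    return adv, adv + np.asarray(values)


def ppo_loss(logp_new, logp_old, adv, value_pred, returns, entropy,
             clip=0.2, vf_coef=0.5, ent_coef=0.01):
    """Clipped surrogate policy loss + value loss - entropy bonus."""
    ratio = np.exp(logp_new - logp_old)
    surrogate = np.minimum(ratio * adv, np.clip(ratio, 1 - clip, 1 + clip) * adv)
    policy_loss = -surrogate.mean()
    value_loss = ((value_pred - returns) ** 2).mean()
    return policy_loss + vf_coef * value_loss - ent_coef * entropy.mean()
```

The clipping keeps each of the repeated epochs over the same 2048-sample buffer from moving the policy too far from the one that collected the data.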

4.5. Convergence and Complexity Analysis

5. Experimental Analysis

5.1. Experimental Setup

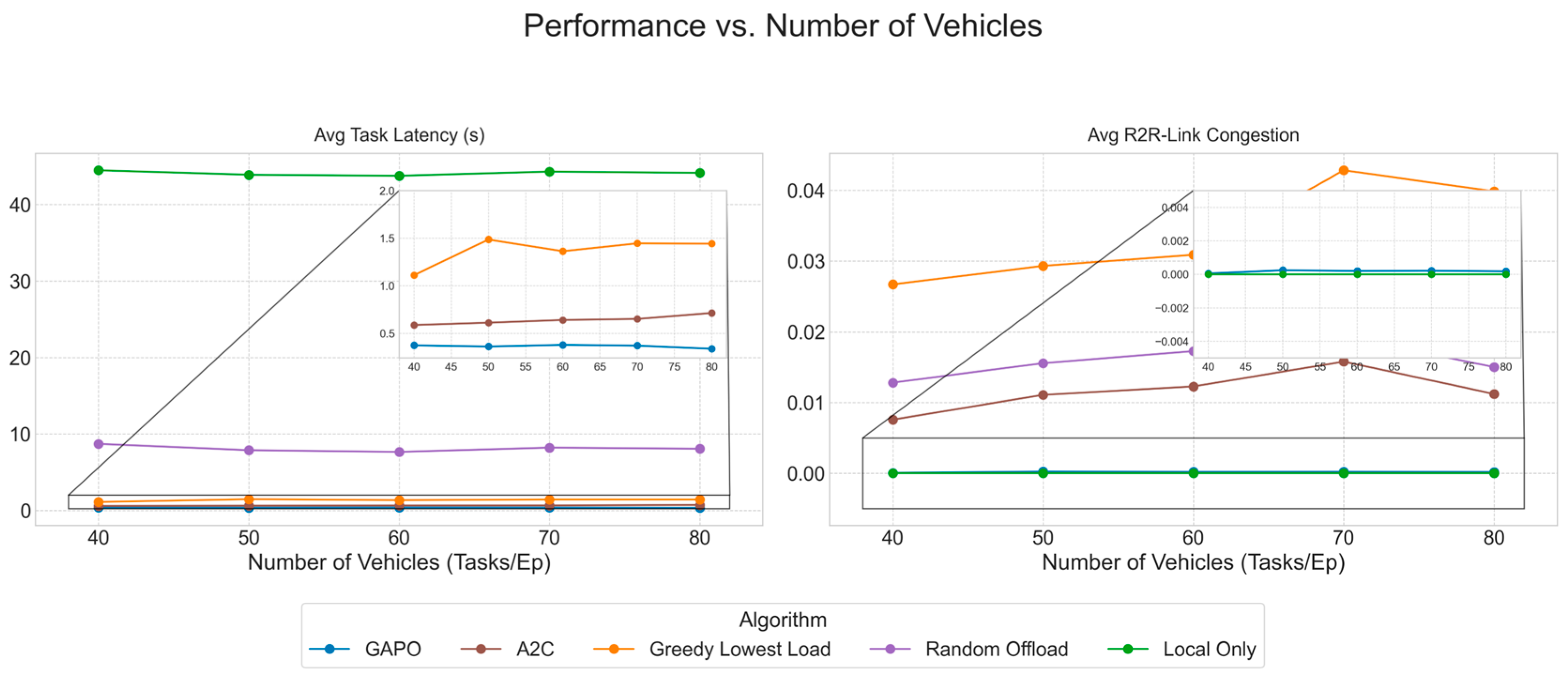

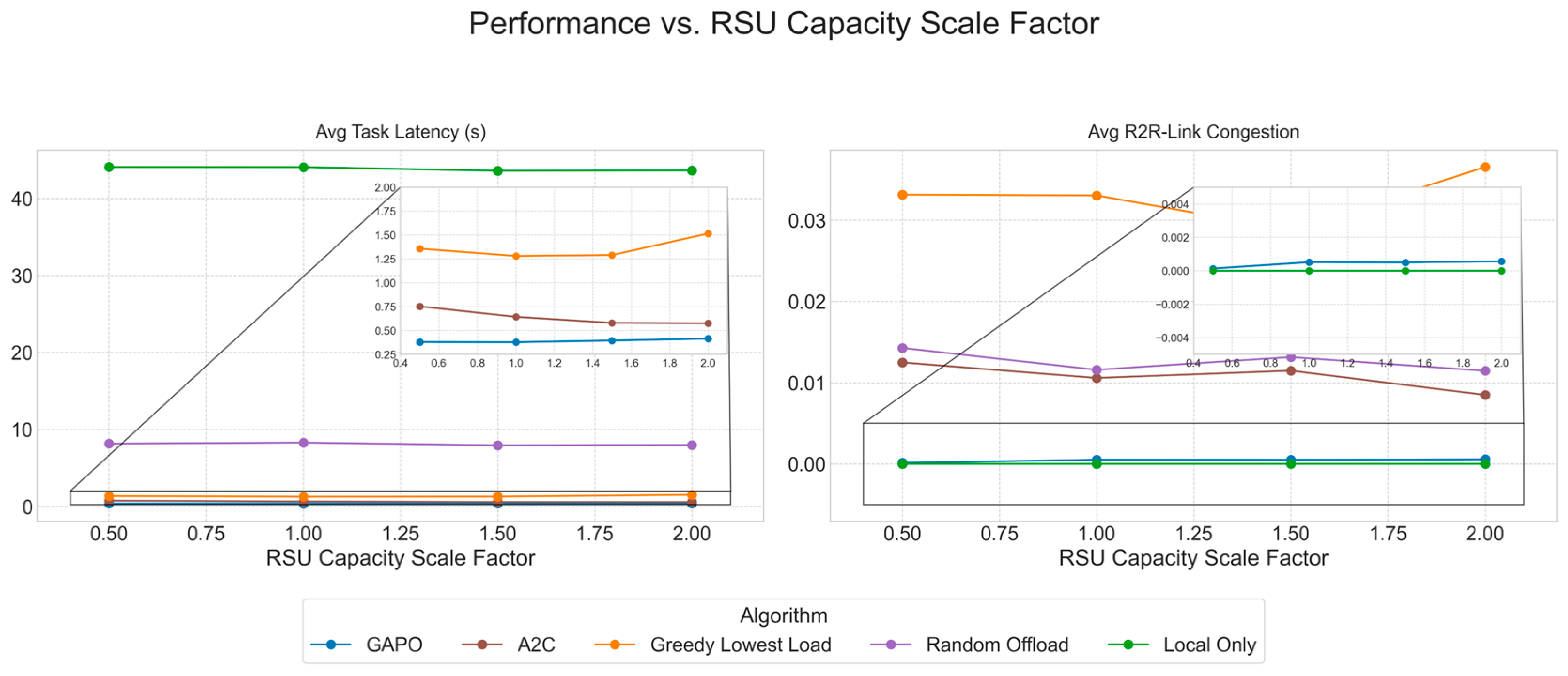

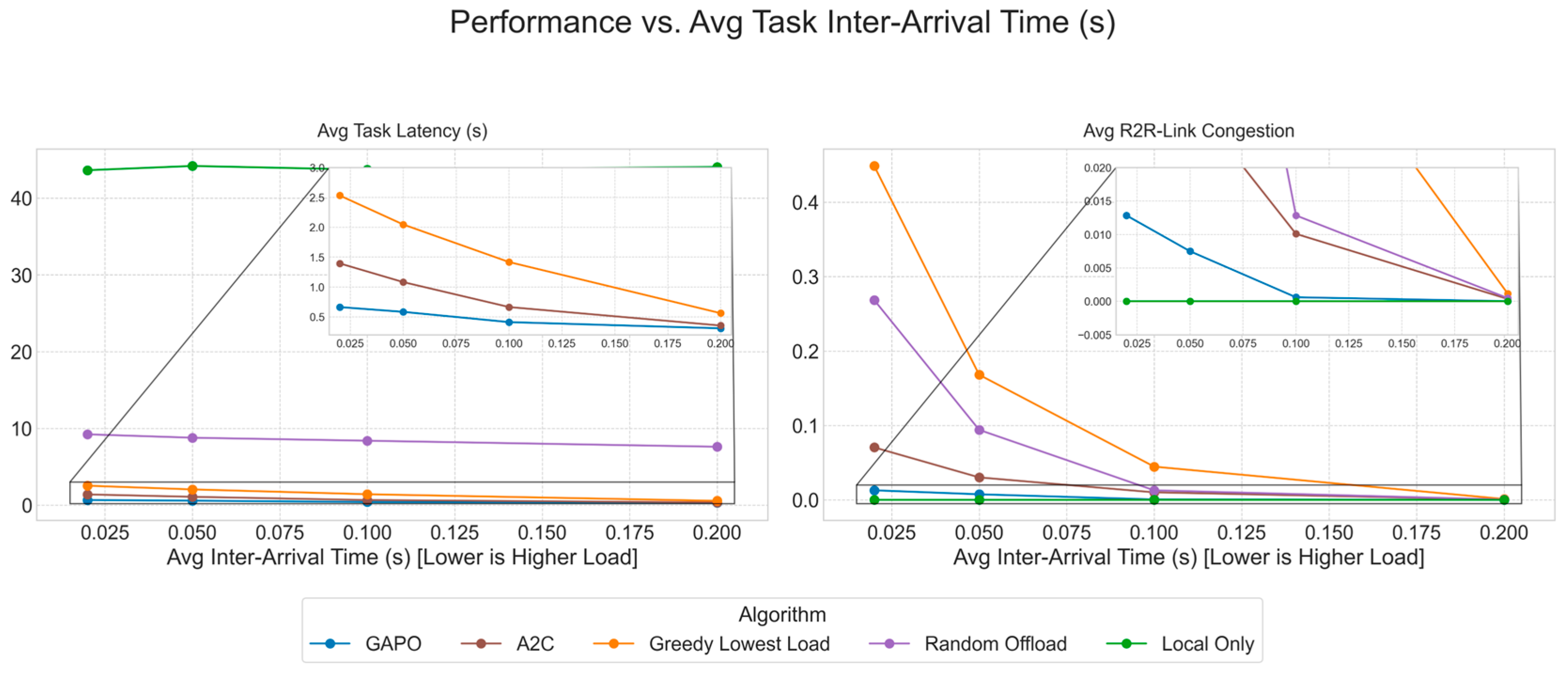

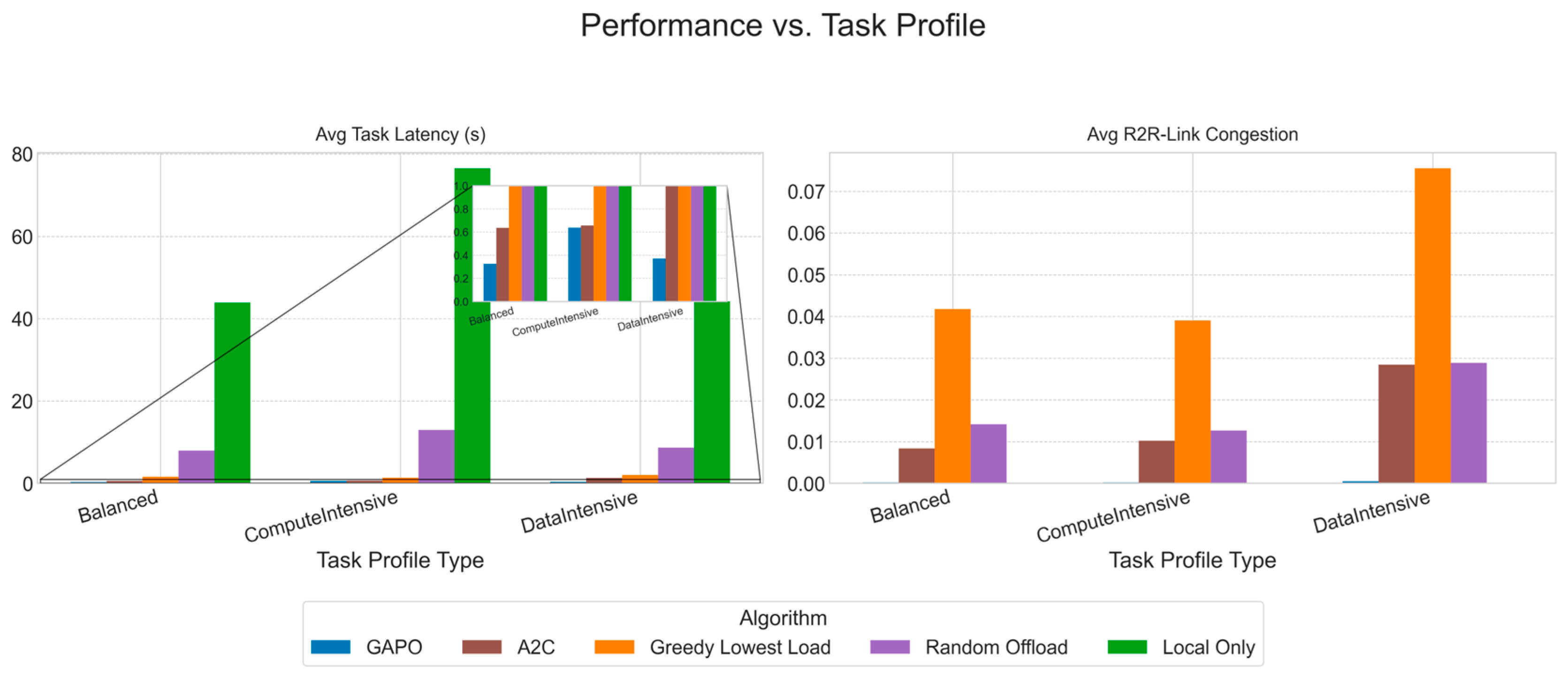

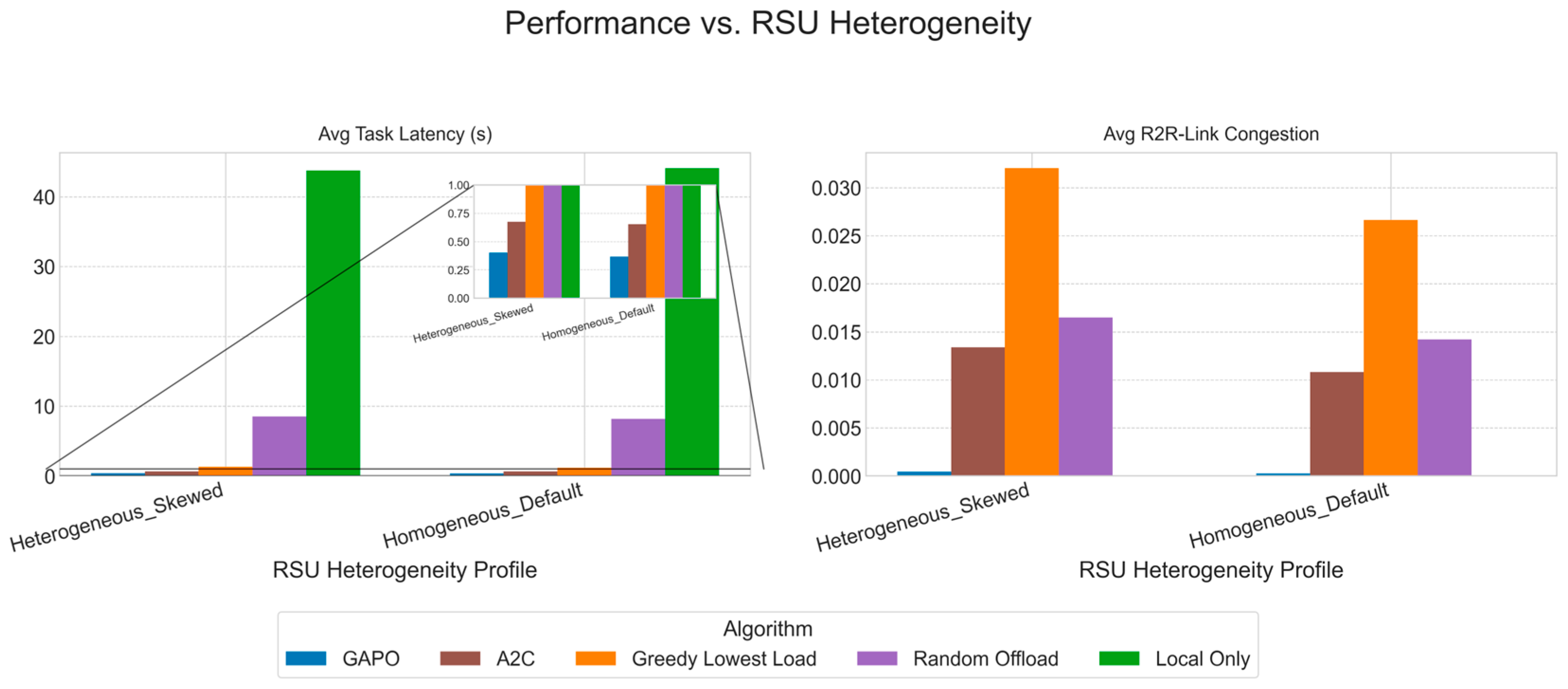

5.2. Comparative Experiments

- Local-Only: All tasks generated by vehicles are processed on their local on-board units, without any network offloading. This strategy completely avoids network communication latency and congestion but is limited by the finite computational power of the vehicles.

- Random Offload: A simple baseline in which each vehicle decides at random, selecting either an available RSU as the offloading target or local processing. Offloaded tasks are fully offloaded.

- Greedy Lowest Load: A heuristic in which, at decision time, the vehicle evaluates the expected computational load of every reachable computing node, including itself, and greedily selects the node with the lowest load as the offloading target.

- A2C (Advantage Actor–Critic) [23]: A classic reinforcement learning algorithm. To adapt it to the complex environment of this study, we designed a hand-crafted feature-engineering module that transforms the graph-structured observation into a fixed-length vector. Specifically, it extracts aggregated features for the current task, the source vehicle, and each RSU, such as whether the RSU is directly connected to the source vehicle, the number of hops and estimated latency of the multi-hop path to the RSU, and the RSU’s own computational load. These features are concatenated into a single vector and fed into a standard MLP-based Actor–Critic network. A2C’s performance therefore depends heavily on the completeness and effectiveness of these hand-crafted features, in stark contrast to GAPO’s core idea of learning network state representations automatically with a GNN.
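The fixed-length observation used by the A2C baseline can be sketched as follows. The exact feature set and ordering are not specified beyond the examples above, so the dictionary keys and the four per-RSU features here are illustrative assumptions.

```python
import numpy as np


def a2c_observation(task, vehicle, rsus):
    """Flatten a graph observation into a fixed-length vector for an MLP Actor-Critic.

    task: {"data_kb", "cycles_gc"}; vehicle: {"capacity_gcps", "load"};
    rsus: list of {"direct", "hops", "est_latency", "load"} in a fixed RSU order.
    """
    parts = [task["data_kb"], task["cycles_gc"], vehicle["capacity_gcps"], vehicle["load"]]
    for r in rsus:
        parts += [float(r["direct"]),   # direct V2R connectivity to the source vehicle
                  r["hops"],            # hop count of the multi-hop path
                  r["est_latency"],     # estimated path latency (s)
                  r["load"]]            # RSU computational load
    return np.asarray(parts, dtype=np.float32)
```

The vector length, 4 + 4 × (number of RSUs), is tied to the RSU count and ordering. This is one reason such hand-crafted encodings transfer across topologies less naturally than a GNN encoder.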

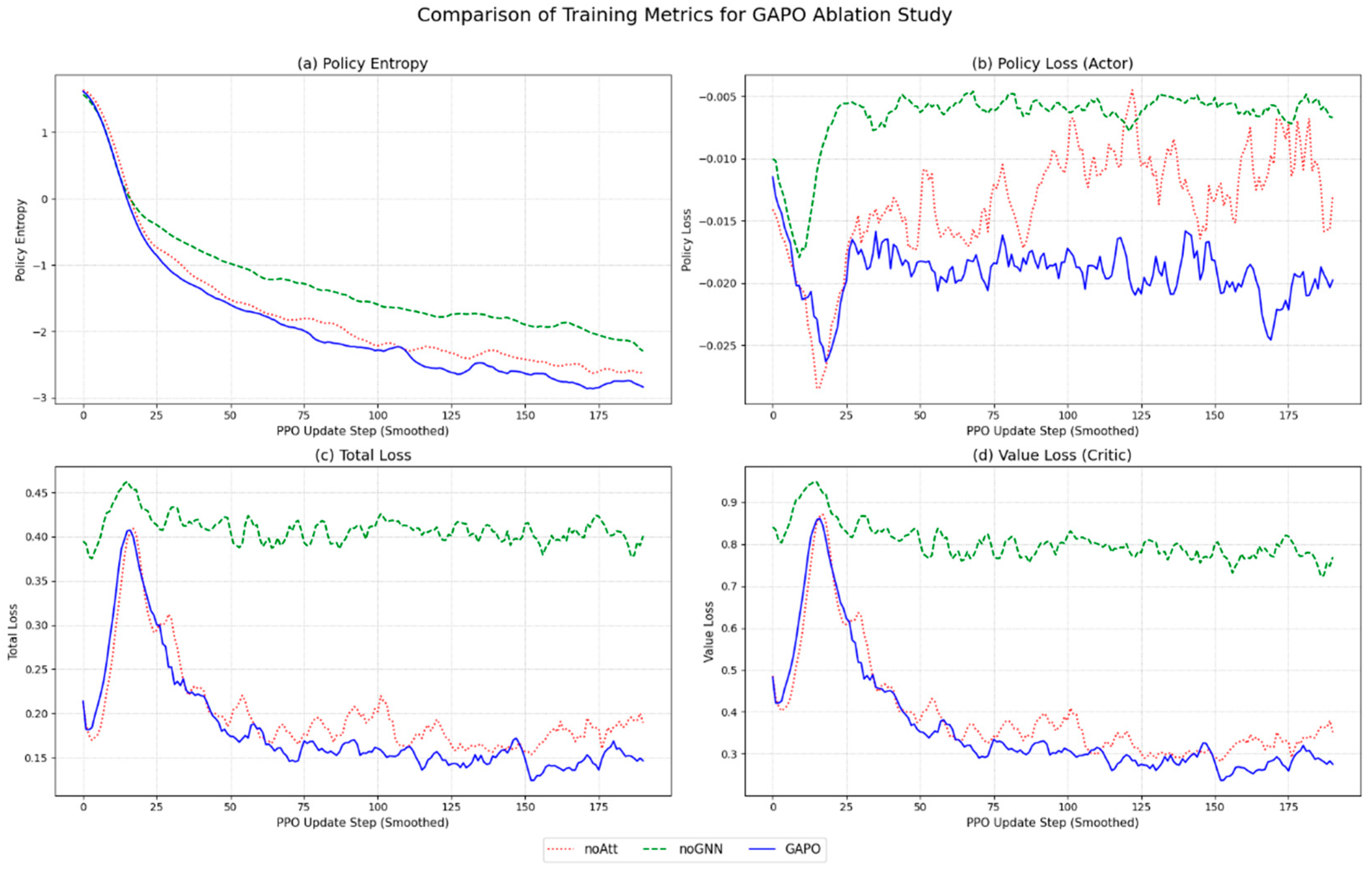

5.3. Ablation Study

- GAPO: The complete algorithm proposed in this paper, using both the GNN encoder and the query-based attention mechanism for target selection.

- noAtt: This variant removes the query-based attention module from the Actor network. Instead, it concatenates the source vehicle’s embedding, each candidate target’s embedding, and the task features, scores each candidate with a simple MLP, and selects a target via Softmax. It still uses the GNN encoder to obtain node embeddings but loses the explicit query-based matching of task requirements against target characteristics.

- noGNN: This variant replaces the GNN encoder with a standard multi-layer perceptron (MLP) that processes each node’s features independently, ignoring the network topology entirely. Because it cannot learn node context through neighbor aggregation, its state awareness degenerates to that of traditional flattened-state-vector methods.
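The scoring head of the noAtt variant can be sketched as below: each candidate is scored by an MLP over the concatenation of the source embedding, the candidate embedding, and the task features, followed by a softmax. The single ReLU hidden layer and the parameter shapes are assumptions, and the names are hypothetical.

```python
import numpy as np


def noatt_target_probs(src_emb, cand_embs, task_feat, W1, b1, w2):
    """Score K candidates with an MLP on [source || candidate || task] and apply softmax.

    src_emb: (D,), cand_embs: (K, D), task_feat: (T,), W1: (2D + T, H), b1: (H,), w2: (H,).
    """
    k = cand_embs.shape[0]
    x = np.concatenate([np.tile(src_emb, (k, 1)), cand_embs, np.tile(task_feat, (k, 1))], axis=1)
    hidden = np.maximum(0.0, x @ W1 + b1)      # ReLU hidden layer
    logits = hidden @ w2                       # one score per candidate
    p = np.exp(logits - logits.max())
    return p / p.sum()
```

Unlike query-based attention, nothing here forms an explicit query from the task, so any matching between task needs and target characteristics must be learned implicitly by the MLP weights.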

6. Discussion

- State Representation and Scalability: An urban grid would contain more RSU nodes and a more complex edge set, which increases the size of the input graph. GAPO’s GNN architecture is well suited to this setting. By design, GNNs such as GATv2 operate on local neighborhoods: the cost of updating a single node’s embedding scales with its number of neighbors, not with the total number of nodes in the graph, so one message-passing layer costs time roughly linear in the number of edges. This locality keeps GAPO’s state representation module scalable even in larger, denser network environments.

- Multi-Hop Pathfinding: The pathfinding algorithm in our current implementation implicitly assumes a linear chain of RSUs. For an urban grid, this module would need to be replaced with a more general graph traversal algorithm. This is a straightforward modification. The RSU network can be treated as a subgraph, where the edges are dynamically weighted by the real-time communication latency calculated by our model. A standard algorithm like Dijkstra’s could then be directly applied to find the latency-optimal path between any two RSU nodes. This would allow GAPO to identify the most efficient multi-hop communication routes through the urban mesh network without changing the core RL decision-making logic.

- V2V Communication at Intersections: Urban intersections present rich opportunities for multi-hop V2V relaying, which is not a primary focus in our highway model. Extending GAPO to fully leverage this would be a promising direction for future research. This could involve dynamically including high-value vehicle nodes into the pathfinding subgraph, further enriching the offloading options.
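The Dijkstra-based replacement for the pathfinding module described above can be sketched with Python’s `heapq`. The RSU subgraph is given as an adjacency list whose edge weights stand in for the real-time R2R latencies; the graph layout and the function name are hypothetical.

```python
import heapq
import math
from typing import Dict, List, Optional, Tuple

Graph = Dict[int, List[Tuple[int, float]]]   # RSU -> [(neighbour RSU, link latency in s)]


def latency_optimal_path(graph: Graph, source: int, target: int
                         ) -> Tuple[Optional[List[int]], float]:
    """Dijkstra's algorithm over a latency-weighted RSU subgraph (weights must be >= 0)."""
    dist = {source: 0.0}
    prev: Dict[int, int] = {}
    heap = [(0.0, source)]
    done = set()
    while heap:
        d, u = heapq.heappop(heap)
        if u in done:
            continue                 # stale heap entry
        done.add(u)
        if u == target:
            break                    # target distance is final once popped
        for v, w in graph.get(u, []):
            nd = d + w
            if nd < dist.get(v, math.inf):
                dist[v], prev[v] = nd, u
                heapq.heappush(heap, (nd, v))
    if target not in dist:
        return None, math.inf
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return path[::-1], dist[target]
```

Because R2R latencies change with link load, the weights would be refreshed before each decision. With a binary heap, each query costs O((V + E) log V), which is small for the number of RSUs in an urban district.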

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Zhang, J.; Letaief, K.B. Mobile Edge Intelligence and Computing for the Internet of Vehicles. Proc. IEEE 2019, 108, 246–261.

2. Mach, P.; Becvar, Z. Mobile Edge Computing: A Survey on Architecture and Computation Offloading. IEEE Commun. Surv. Tutor. 2017, 19, 1628–1656.

3. Mao, Y.; You, C.; Zhang, J.; Huang, K.; Letaief, K.B. A Survey on Mobile Edge Computing: The Communication Perspective. IEEE Commun. Surv. Tutor. 2017, 19, 2322–2358.

4. Filali, A.; Abouaomar, A.; Cherkaoui, S.; Kobbane, A.; Guizani, M. Multi-Access Edge Computing: A Survey. IEEE Access 2020, 8, 197017–197046.

5. Dai, Y.; Xu, D.; Maharjan, S.; Zhang, Y. Joint Load Balancing and Offloading in Vehicular Edge Computing and Networks. IEEE Internet Things J. 2018, 6, 4377–4387.

6. Sahni, Y.; Cao, J.; Yang, L.; Ji, Y. Multi-Hop Multi-Task Partial Computation Offloading in Collaborative Edge Computing. IEEE Trans. Parallel Distrib. Syst. 2020, 32, 1133–1145.

7. Han, X.; Gao, G.; Ning, L.; Wang, Y.; Zhang, Y. A semidefinite relaxation approach for the offloading problem in edge computing. Comput. Electr. Eng. 2022, 98, 107728.

8. Li, Y.; Zhang, X.; Sun, Y.; Wang, W.; Lei, B. Spatiotemporal Non-Uniformity-Aware Online Task Scheduling in Collaborative Edge Computing for Industrial Internet of Things. IEEE Trans. Mob. Comput. 2025, 1–18.

9. Ke, Q.; Silka, J.; Wieczorek, M.; Bai, Z.; Wozniak, M. Deep Neural Network Heuristic Hierarchization for Cooperative Intelligent Transportation Fleet Management. IEEE Trans. Intell. Transp. Syst. 2022, 23, 16752–16762.

10. Zielonka, A.; Sikora, A.; Woźniak, M. Fuzzy rules intelligent car real-time diagnostic system. Eng. Appl. Artif. Intell. 2024, 135, 108648.

11. Liu, X.; Gao, C.; Zhao, J.; Chen, Y. Deep Reinforcement Learning for Task Offloading in Vehicular Edge Computing. In Proceedings of the IEEE International Conference on Communications (ICC), Denver, CO, USA, 9–13 June 2024; pp. 1–6.

12. Moon, S.; Lim, Y. Federated Deep Reinforcement Learning Based Task Offloading with Power Control in Vehicular Edge Computing. Sensors 2022, 22, 9595.

13. Ji, M.; Wu, Q.; Fan, P.; Cheng, N.; Chen, W.; Wang, J.; Letaief, K.B. Graph Neural Networks and Deep Reinforcement Learning-Based Resource Allocation for V2X Communications. IEEE Internet Things J. 2024, 12, 3613–3628.

14. Polowczyk, A.; Polowczyk, A.; Woźniak, M. Graph Neural Network via Dynamic Weights and LSTM with Attention for Traffic Forecasting. Procedia Comput. Sci. 2025, 257, 119–126.

15. Wu, J.; Zou, Y.; Zhang, X.; Liu, J.; Sun, W.; Du, G. Dependency-Aware Task Offloading Strategy via Heterogeneous Graph Neural Network and Deep Reinforcement Learning. IEEE Internet Things J. 2025, 12, 22915–22933.

16. Busacca, F.; Palazzo, S.; Raftopoulos, R.; Schembra, G. MANTRA: An Edge-Computing Framework based on Multi-Armed Bandit for Latency- and Energy-aware Job Offloading in Vehicular Networks. In Proceedings of the 2023 IEEE 9th International Conference on Network Softwarization (NetSoft), Madrid, Spain, 19–23 June 2023; pp. 143–151.

17. Chen, S.; Li, W.; Sun, J.; Pace, P.; He, L.; Fortino, G. An Efficient Collaborative Task Offloading Approach Based on Multi-Objective Algorithm in MEC-Assisted Vehicular Networks. IEEE Trans. Veh. Technol. 2025, 74, 11249–11263.

18. IEEE Std 802.11p-2010; IEEE Standard for Information Technology—Telecommunications and Information Exchange between Systems—Local and Metropolitan Area Networks—Specific Requirements—Part 11: Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) Specifications—Amendment 6: Wireless Access in Vehicular Environments. IEEE: New York, NY, USA, 2010.

19. Bose, S.K. An Introduction to Queueing Systems; Springer Science & Business Media: New York, NY, USA, 2013.

20. Brody, S.; Alon, U.; Yahav, E. How Attentive are Graph Attention Networks? arXiv 2022, arXiv:2105.14491.

21. Schulman, J.; Moritz, P.; Levine, S.; Jordan, M.; Abbeel, P. High-dimensional continuous control using generalized advantage estimation. In Proceedings of the 4th International Conference on Learning Representations (ICLR), San Juan, Puerto Rico, 2–4 May 2016.

22. Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal Policy Optimization Algorithms. arXiv 2017, arXiv:1707.06347.

23. Mnih, V.; Badia, A.P.; Mirza, M.; Graves, A.; Lillicrap, T.; Harley, T.; Silver, D.; Kavukcuoglu, K. Asynchronous Methods for Deep Reinforcement Learning. In Proceedings of the 33rd International Conference on Machine Learning (ICML), New York, NY, USA, 19–24 June 2016; pp. 1928–1937.

24. Zhao, Z.; Perazzone, J.; Verma, G.; Segarra, S. Congestion-Aware Distributed Task Offloading in Wireless Multi-Hop Networks Using Graph Neural Networks. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 8951–8955.

| Symbol | Description |
|---|---|
|  | Set of all vehicles in the system. |
|  | Set of all roadside units. |
|  | The attributed graph representing the network state at the current time step. |
|  | Set of all nodes at the current time step. |
|  | Set of all communication links at the current time step. |
|  | Node and edge attribute matrices. |
|  | A computational task. |
|  | Data size of the task (in KB). |
|  | Computational complexity of the task (in giga-cycles). |
|  | The joint action taken for the task. |
|  | Discrete offloading destination for the task. |
|  | Continuous offloading ratio for the task. |
|  | Continuous computational resource allocation ratio for the task. |
|  | Latency for local computation. |
|  | Latency for computation on an edge server. |
|  | Communication latency over a V2R or R2R link. |
|  | Total end-to-end completion latency for a task. |
|  | Average task latency and average R2R link congestion. |
|  | The multi-objective function to be minimized. |

| Parameter | Description | Value |
|---|---|---|
| Highway Length | Total length of the highway in the simulation | 1000 m |
| RSU Coverage Radius | Signal coverage range of each RSU | 200 m |
| Default RSU Count | Number of RSUs in the baseline scenario | 5 |
| RSU Default Compute Capacity | Set of heterogeneous RSU compute capacities | {15, 20, 25, 30, 35} Gcps |
| Vehicle Local Compute Capacity | On-board compute capacity of a single vehicle | 0.25 Gcps |
| R2R Link Base Bandwidth | Backbone link bandwidth between RSUs | 30 Mbps |
| Default Task Arrival Interval | Average time interval between task generations | 0.1 s |
| Task Data Size | Range of data size to be transmitted for tasks | (512, 1024) KB |
| Task Computation Size | Range of computation cycles required for tasks | (0.5, 1.5) Gc |
| G/G/1 Stability Factor | Factor used to calculate the effective service rate | 0.99 |
| State Averaging Window | Time window for EWMA calculation | 1 s |

| Hyperparameter | Value |
|---|---|
| Learning Rate | 1 × 10⁻⁴ |
| Discount Factor (γ) | 0.99 |
| GAE Smoothing Parameter (λ) | 0.95 |
| Training Epochs per Update Cycle | 10 |
| Experience Replay Buffer Size | 2048 |
| Mini-batch Size for Updates | 4 |
| Entropy Loss Coefficient | 0.01 |
| Value Function Loss Coefficient | 0.5 |
| GNN Output Node Embedding Dimension | 32 |
| GAT Attention Head Count | 2 |
| MLP Hidden Layer Dimension in the Actor Network | 64 |
| MLP Hidden Layer Dimension in the Critic Network | 64 |

| Model | Average Task Latency (s) | Average R2R Link Congestion Rate |
|---|---|---|
| GAPO | 0.374 (±0.178) | 0.05% |
| noAtt | 0.477 (±0.197) | 0.09% |
| noGNN | 0.681 (±0.264) | 1.64% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, H.; Li, X.; Li, C.; Yao, L. GAPO: A Graph Attention-Based Reinforcement Learning Algorithm for Congestion-Aware Task Offloading in Multi-Hop Vehicular Edge Computing. Sensors 2025, 25, 4838. https://doi.org/10.3390/s25154838
