1. Introduction

Industrial robot arms have long been used to increase the efficiency of modern manufacturing processes owing to their high precision, repeatability, and speed [1]. They are widely used in critical processes such as pick-and-place, assembly, packaging, and material handling, and their capabilities in these applications directly affect the performance of production lines [2,3]. However, high-precision and high-accuracy positioning and manipulation of objects in dynamic and complex industrial environments pose significant challenges, especially in cases that require 6D (six-dimensional) pose estimation, i.e., three-dimensional position and three-dimensional orientation [4,5]. This study addresses the problem of picking metal plates that lie in different positions on a brake pad production line at their center of gravity, using a vacuum–magnetic gripper mounted at the end point of the robot arm, and placing them in designated empty slots with high precision. The task is complicated by the varying orientations and positions of the plates and requires high accuracy in both picking and placing. For example, if the gripper reaches the correct position but does not approach the metal plate with the correct orientation, it may fail to grasp the plate at its targeted center point because of the plate’s weight, the magnetic field effect, and any inclination of the plate relative to the production line. A metal plate that is not picked with the desired position and orientation may then not be placed correctly in the empty target slot. This problem is a critical obstacle to increasing precision and minimizing the margin of error in industrial production.

For estimating the 6D pose of objects, cameras are the most widely used technology in industrial applications [6], although sensors such as lasers [7], IMU-based string encoders [8], and tactile sensors [9] are occasionally used. In this study, a fixed eye-to-hand RGB-D camera is used because RGB-D cameras provide both color and depth information of objects simultaneously, which supports more accurate pose estimation. To process the real-time images obtained from the camera and to compare the calculated 6D pose values, the actual dimensional parameters of the metal plates, taken from their 3D computer-aided design (CAD) models, are needed as reference values. To computationally estimate the 6D pose of objects, deep learning-based or traditional analytics-based solutions are often integrated with image processing techniques.

The pioneering studies that have become reference points in the literature on 6D object pose estimation [10,11,12,13,14] divide the approaches to this problem into two main categories: learning-based and non-learning-based techniques. Among the traditional non-learning-based techniques from the early years, template matching-based and feature-based methods are still used today. LineMOD [15], the best known of the template matching methods, estimates the 6D pose of an object by comparing previously created 2D/3D templates from different viewpoints with the input image and selecting the best matching pose. Feature-based methods, the other important non-learning-based family, estimate the 6D pose by matching 2D key points in the image with the corresponding 3D points in the object model and then solving for the pose using geometric techniques such as perspective-n-point (PnP) and random sample consensus (RANSAC) [16]. For target objects exhibiting distinct textural features, traditional techniques such as the Hough Transform (HT) [17], Scale-Invariant Feature Transform (SIFT) [18], Speeded-Up Robust Features (SURF) [19], and Oriented FAST and Rotated BRIEF (ORB) [20] are often used for feature extraction and reliable pose estimation. In recent years, 6D pose estimation with these traditional techniques has been further developed and used in robotic grasping applications. In [21], the authors presented an approach for detecting multiple textureless rigid objects and localizing them in 3D with high accuracy from RGB-D data. The authors of [22] presented a framework called Latent-Class Hough Forests for 3D pose estimation in heavily cluttered and occluded scenes. Ref. [23] proposed a coarse-to-fine approach using only shape and contour data, selecting similar projection images to create many-to-one 2D–3D correspondences while emphasizing outlier rejection and leveraging geometric matching to guide pose estimation robustly. The authors in [5,24] developed the bunch-of-lines descriptor (BOLD) method to identify and match contour lines by fully using the geometric information of textureless metal objects in industrial applications. The study in [25] introduced a 3D point cloud pose estimation method using geometric information prediction to enhance accuracy and speed in robotic grasping of industrial parts by analyzing appearance characteristics and point cloud geometry. The study in [26] contributed a probabilistic smoothing method for stable object pose tracking in robot control using real and synthetic datasets. The study in [27] proposed a grasping pose estimation framework using point cloud fusion and filtering from a single-view RGB-D image.
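The consensus idea behind RANSAC, which the feature-based methods above pair with PnP to reject wrong 2D–3D matches, can be illustrated on a much simpler problem. The following is a minimal sketch on a toy 2D line-fitting task, not a PnP solver and not the procedure of any cited work; the function names, the threshold, the iteration count, and the data are all hypothetical.

```python
import random


def fit_line(p, q):
    """Return (a, b, c) with a*x + b*y + c = 0 through p and q, scaled so a^2 + b^2 = 1."""
    (x1, y1), (x2, y2) = p, q
    a, b = y2 - y1, x1 - x2
    norm = (a * a + b * b) ** 0.5
    if norm == 0.0:
        return None  # degenerate sample: both points coincide
    a, b = a / norm, b / norm
    return a, b, -(a * x1 + b * y1)


def ransac_line(points, threshold=0.05, iterations=200, seed=0):
    """Keep the two-point line hypothesis supported by the most inliers."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    best_model, best_inliers = None, []
    for _ in range(iterations):
        p, q = rng.sample(points, 2)  # minimal sample for a line
        model = fit_line(p, q)
        if model is None:
            continue
        a, b, c = model
        # Inliers are points whose distance to the hypothesised line is small.
        inliers = [pt for pt in points if abs(a * pt[0] + b * pt[1] + c) <= threshold]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = model, inliers
    return best_model, best_inliers


# Hypothetical data: ten points on y = 2x + 1 plus three gross outliers.
inlier_points = [(float(x), 2.0 * x + 1.0) for x in range(10)]
outlier_points = [(1.0, 9.0), (4.0, -3.0), (7.0, 30.0)]
model, inliers = ransac_line(inlier_points + outlier_points)
slope = -model[0] / model[1]
print(len(inliers), round(slope, 6))
```

In a pose estimation pipeline the line would be replaced by a pose hypothesis computed from a minimal set of 2D–3D correspondences, and the point-to-line distance by the reprojection error.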

Learning-based 6D pose estimation techniques include direct regression (e.g., PoseNet and SSD-6D), key point-based (e.g., PVNet and HybridPose), dense correspondence (e.g., DenseFusion and CosyPose), template matching (e.g., PPF-FoldNet), pose refinement (e.g., DeepIM and RePose), and differentiable rendering (e.g., DPOD and NeMo) methods. These methods rely on deep learning to predict object poses from RGB or RGB-D data with varying trade-offs in accuracy, robustness, and computational efficiency. Direct regression methods predict the 6D pose of the object from an input image in an end-to-end manner using deep neural networks, as demonstrated by PoseNet [28], which regresses camera pose from RGB images; SSD-6D [29], which combines detection and pose regression; and Deep-6DPose [30], which directly outputs key points and pose through a CNN. Key point-based methods predict the 6D pose by first detecting 2D key points (e.g., object corners or semantic points) in the image and then solving the pose using PnP algorithms, as seen in PVNet [31], which predicts vector fields for key point localization, and HybridPose [32], which combines key points with edge vectors and symmetry constraints for robust estimation. Dense correspondence methods establish per-pixel 2D–3D mappings between the input image and object model before computing the 6D pose, as demonstrated by DenseFusion [33], which fuses RGB and depth features for pixel-wise pose prediction, and CosyPose [34], which leverages dense matching for robust multi-object pose estimation in cluttered scenes. Template matching with deep features aligns input images with 3D object representations using learned feature descriptors, as exemplified by PPF-FoldNet [35], which encodes geometric Point Pair Features via deep learning, and Oberweger et al.’s method in [36], which employs CNNs to predict template matches for robust pose estimation under occlusion and textureless conditions. Pose refinement methods iteratively optimize an initial coarse pose estimate through learned correction mechanisms, as demonstrated by DeepIM [37], which uses iterative feature matching between observed and rendered images, and RePose [38], which employs differentiable rendering to analytically refine poses in a neural framework. Differentiable rendering-based methods optimize the estimation of 6D pose by comparing neural renderings of predicted poses without real images using gradient-based refinement, as exemplified by DPOD [39], which combines detection with differentiable silhouette matching, and NeMo [40], which aligns predicted and rendered surface normal maps for pose optimization.

Hybrid approaches combine the flexibility of deep learning with the robustness of traditional geometric methods in 6D pose estimation. They typically perform CNN-based feature extraction (key point, correspondence, and segmentation) in the learning phase and final pose optimization with PnP, RANSAC, iterative closest point (ICP), or differentiable rendering in the geometric phase. Among the aforementioned methods, prominent hybrid examples are DenseFusion [33], PVNet [31], HybridPose [32], CosyPose [34], and DPOD [39]. Tekin et al. introduced YOLO6D in [41], a CNN that estimates the 2D projections of 3D bounding box corners, enabling pose estimation with PnP from the resulting 2D–3D correspondences; the underlying You Only Look Once (YOLO) detector was first developed in [42]. Sundermeyer et al. introduced augmented autoencoders (AAEs) in [43], an extension of denoising autoencoders, which reduce the synthetic-to-real domain gap by training the model to be invariant to such discrepancies. Hodan et al. proposed a hybrid EPOS method in [44] that learns compressed 3D surface features and combines them with PnP and RANSAC. The authors in [45] proposed a region-based key point detection transformer, which uses set prediction and voting mechanisms to estimate the 6D pose in robotic grasping applications. Recent work in [46] demonstrated a vision-based pick-and-place system using an eye-in-hand camera and deep learning (YOLOv7 combined with GANs) to achieve real-time object recognition and precise robotic control in both simulated and real-world environments. In general, most of the above-mentioned geometry-based, learning-based, and hybrid methods focus on pose estimation itself rather than on real-world applications, and the literature contains very few academic studies dealing with real problems in industrial applications. In our study, we address the problem of 6D pose estimation using a camera for a high-precision pick-and-place application of a metal brake pad plate with a robot arm in a real industrial application.

The learning-based and hybrid approaches in the literature usually require large datasets and intensive training processes, which can create difficulties in terms of efficiency and real-time performance in practical industrial applications. In this study, an analytical 6D pose estimation method with lower computational complexity is presented, developed specifically for a particular industrial task. Existing studies also generally do not take into account critical practical issues, such as the robot arm’s end-effector failing to grasp a heavy metal plate at its center even when it approaches the object at the correct position. Therefore, in this paper, the estimation of the 6D pose of an object encountered in a real industrial application is combined with robotic grasp planning to demonstrate the accuracy of the proposed method. In this respect, this study is one of the few that address vision-based high-precision 6D pose estimation and an industrial robotic pick-and-place application in an integrated manner.

In this study, we address the challenge of high-precision 6D pose estimation for a real-world industrial application by developing and validating a complete vision-guided robotic system. The main contributions of this study are summarized as follows:

A lightweight, analytics-based 6D pose estimation method is developed and implemented in a real-world robotic pick-and-place system without requiring deep learning or large training datasets.

The proposed method is experimentally validated on a Staubli TX2-60L robot arm integrated with an RGB-D camera and a vacuum–magnetic gripper in an actual brake pad production line.

The system demonstrates high accuracy in real-time 6D pose estimation in four different object placement scenarios, achieving positional errors less than 2 mm and angular errors less than .

A comparative analysis is presented against state-of-the-art hybrid YOLO-based methods (e.g., YOLO + PnP/RANSAC), highlighting the accuracy, time-efficiency, consistency, and robustness of the proposed approach.
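To make the positional and angular error figures in the contributions above concrete, the following sketch shows one common way such errors can be computed between an estimated and a reference pose. It is an illustration under stated assumptions, not the evaluation procedure of this study: the Euclidean distance and the geodesic rotation angle are assumed metrics, and the function names and sample values are hypothetical.

```python
import math


def position_error(p_est, p_ref):
    """Euclidean distance between estimated and reference positions (same unit as the inputs)."""
    return math.dist(p_est, p_ref)


def rotation_error_deg(r_est, r_ref):
    """Angle of the relative rotation between two 3x3 rotation matrices, in degrees."""
    # trace(r_ref^T * r_est) equals the sum of element-wise products of the two matrices.
    trace = sum(r_ref[i][j] * r_est[i][j] for i in range(3) for j in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # clamp against rounding
    return math.degrees(math.acos(cos_angle))


def rot_z(angle_deg):
    """Rotation matrix for a rotation about the z-axis (helper for the example only)."""
    c, s = math.cos(math.radians(angle_deg)), math.sin(math.radians(angle_deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


# Hypothetical values in millimetres and degrees.
pos_err = position_error((101.0, 49.0, 20.0), (100.0, 50.0, 20.0))
rot_err = rotation_error_deg(rot_z(12.0), rot_z(10.0))
print(round(pos_err, 3), round(rot_err, 3))
```

Per-axis errors (one value for each translation and each Euler angle) are an equally valid choice and may be closer to how a particular experiment reports its results.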

Although this study focuses on planar metallic brake pad plates due to their relevance in the specific industrial problem addressed, the proposed analytical 6D pose estimation approach is designed to be adaptable to broader object categories. Furthermore, while the current industrial setup benefits from relatively stable lighting and minimal occlusion, future adaptations may require addressing dynamic environmental factors such as varying illumination, partial object visibility, or complex geometries. The subsequent sections are organized as follows:

Section 2 provides a detailed description of the robotic setup, focusing on the six-degree-of-freedom Staubli TX2-60L robot arm and the forward kinematic model created using the Denavit–Hartenberg (DH) method.

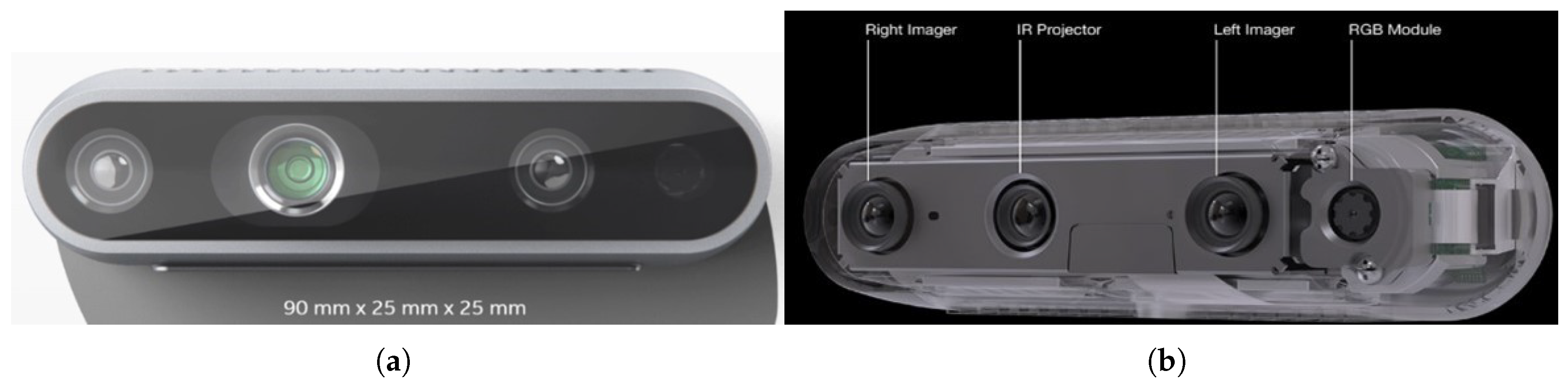

Section 3 outlines the industrial camera setup, including the specifications of the Intel RealSense D435 camera, the calibration process for the camera and robot frames, and the LabVIEW software developed for image acquisition.

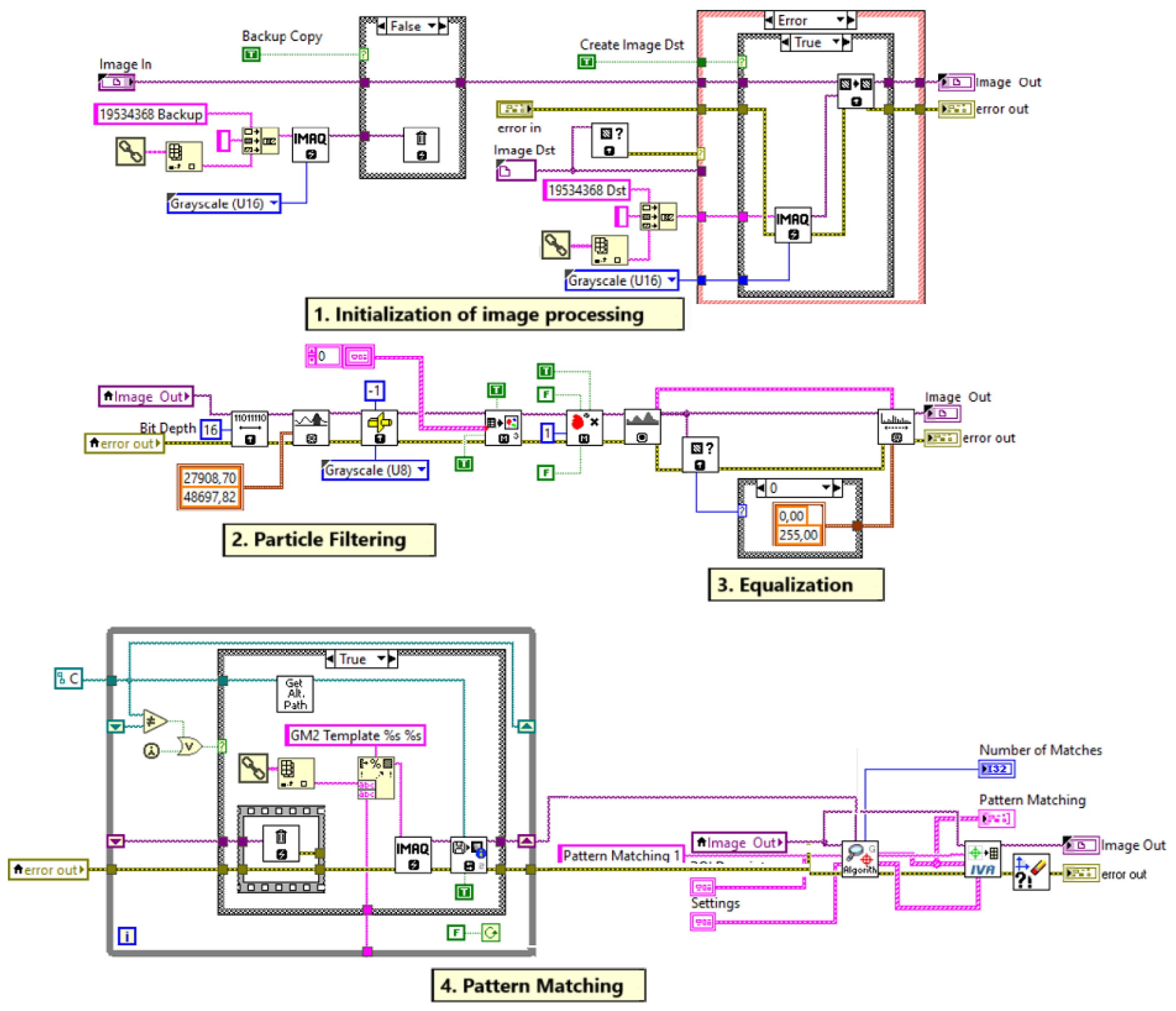

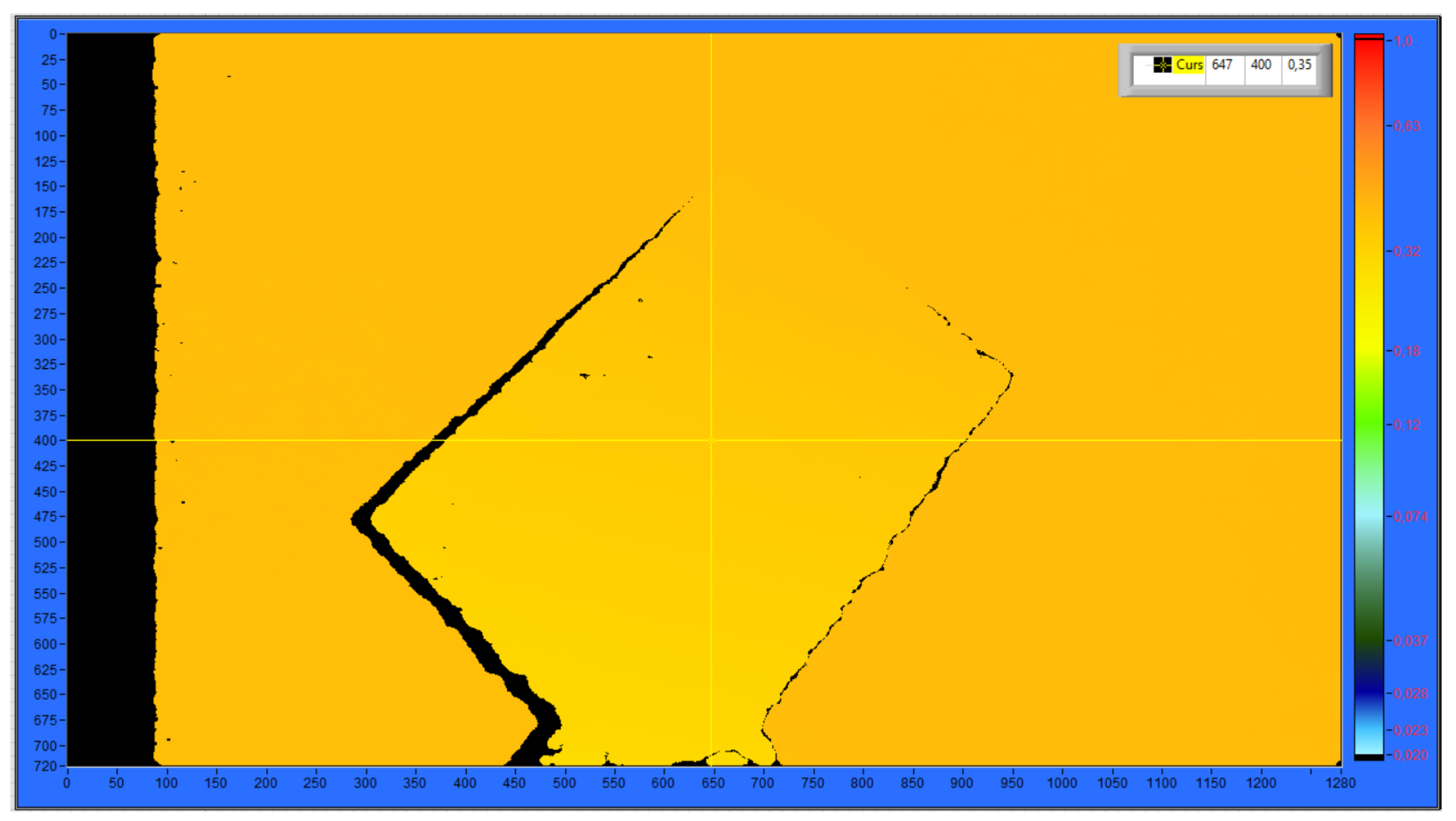

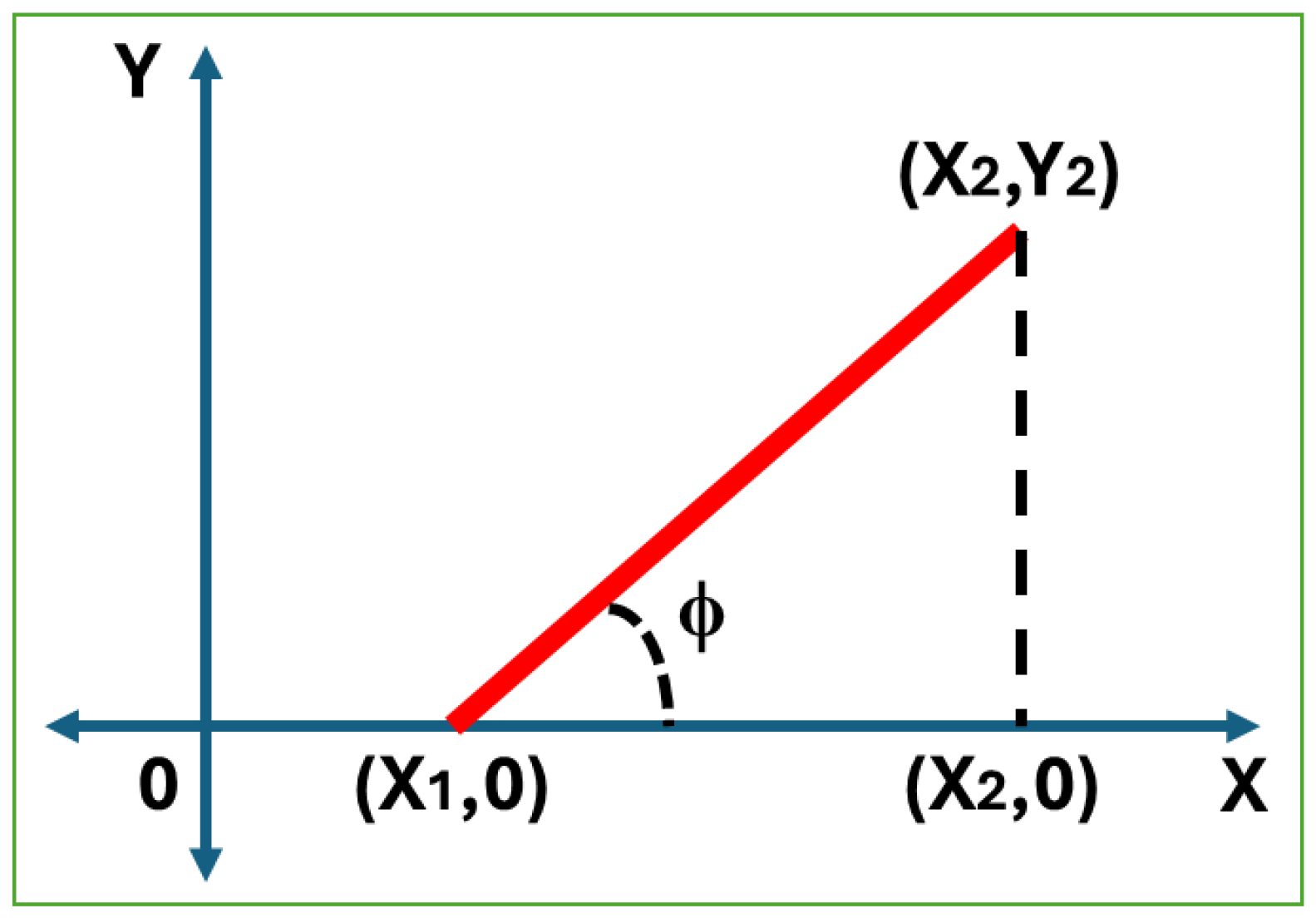

Section 4 explains our proposed analytics-based solution, which uses the NI Vision library to process images and an inclination angle method to calculate the object’s 6D pose. For comparative analysis, Section 5 details the implementation of alternative hybrid methods, which use the deep learning-based algorithm YOLO-v8 for key point detection and various geometric algorithms (PnP/RANSAC) for pose estimation. Then, Section 6 presents the experimental studies, describing the pick-and-place application software and the four different test scenarios and discussing the results, which validate the high precision of our proposed analytical method against the hybrid approach. Finally, this study is concluded in Section 7.

2. Robotic Setup

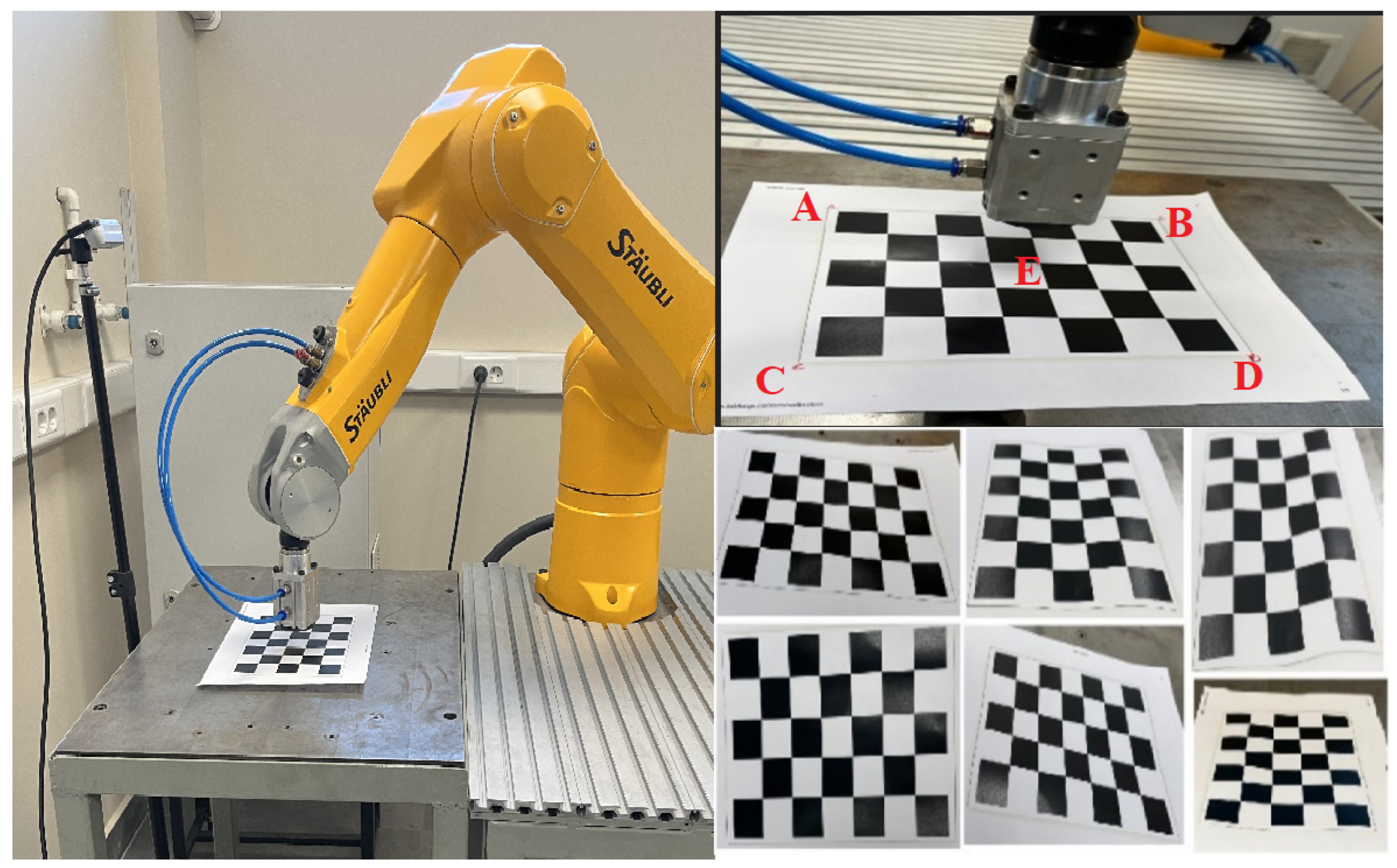

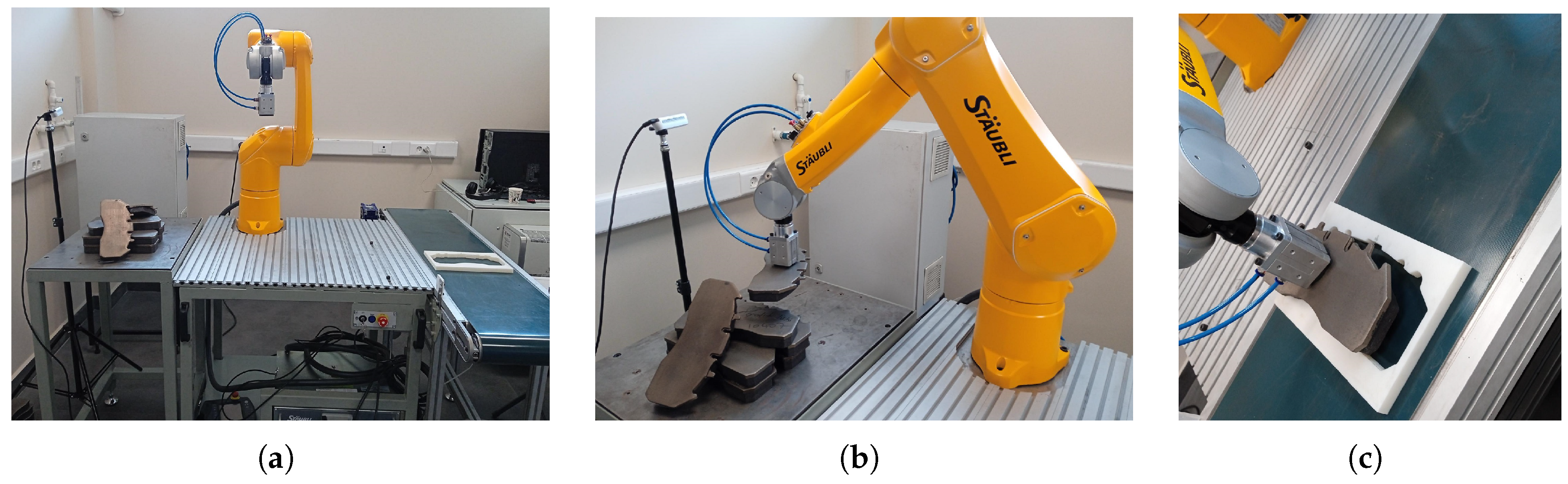

In this study, a fixed eye-to-hand camera and a robot arm are used to pick metal plates that lie in bulk at random, unknown positions and to place them in empty slots with specified poses during a brake pad production stage. For this real industrial pick-and-place application, a Staubli TX2-60L model robot arm and an Intel RealSense D435 camera are used. The general structure of the system setup is shown in Figure 1.

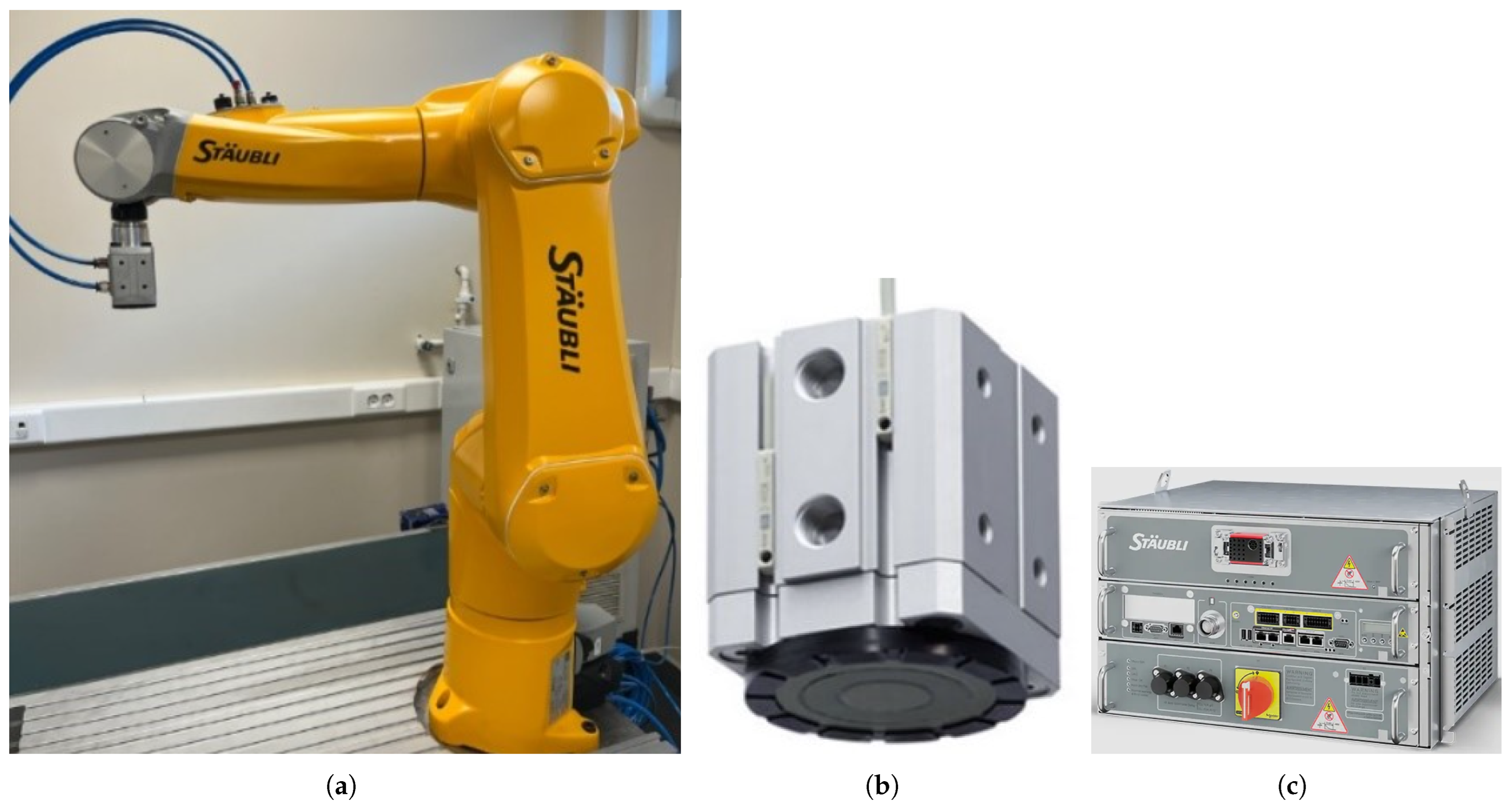

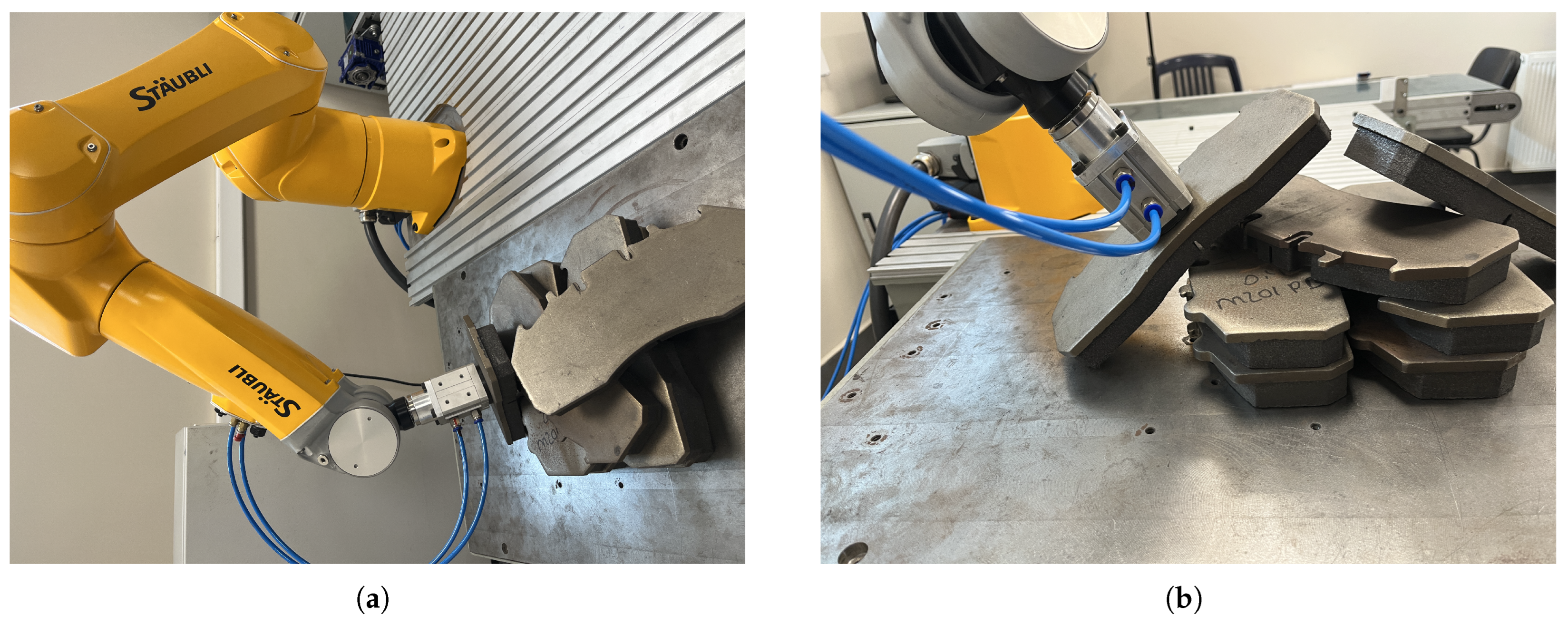

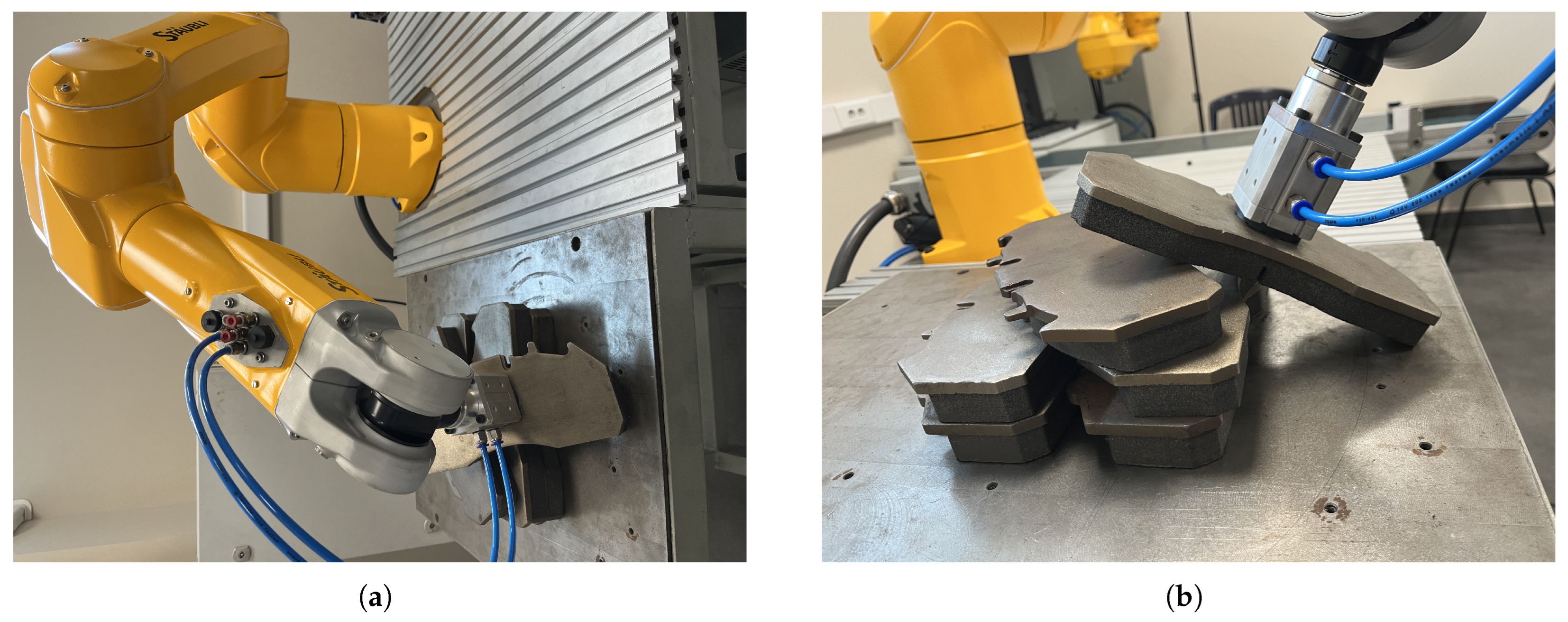

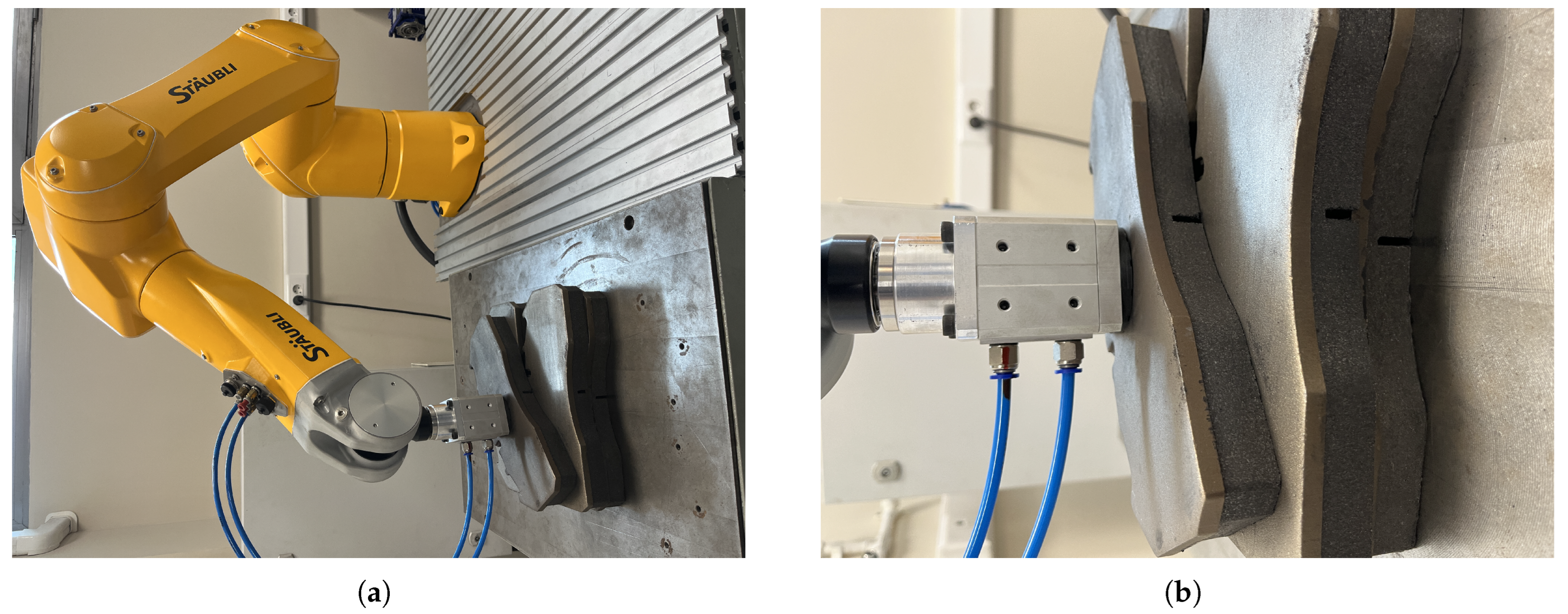

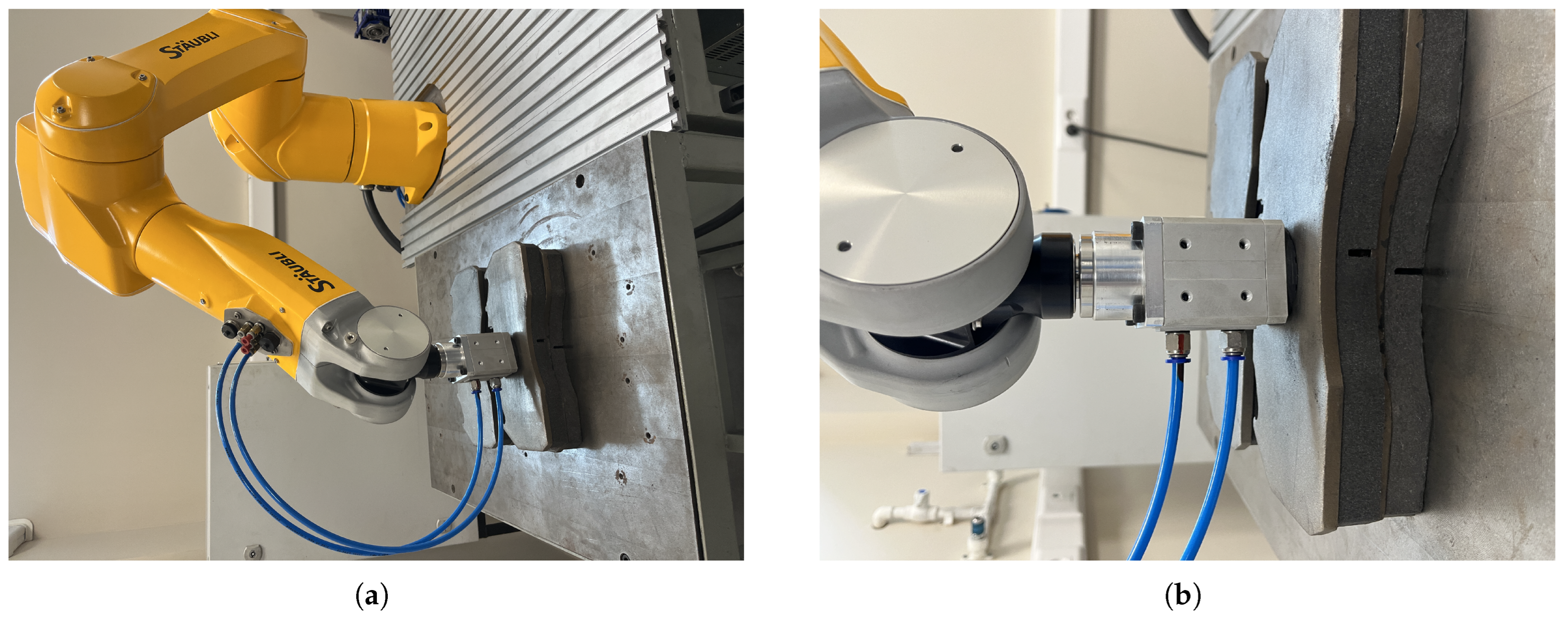

The Staubli TX2-60L model is a six-degree-of-freedom (DOF) robot arm, as shown in Figure 2a. The robot arm operates in a spherical workspace, has a reach of approximately 1 m, and can carry payloads of up to 3.7 kg. This TX2-60L model is equipped with 19-bit absolute encoders and is ready to operate without initialization. It has a repeatability of ±0.02 mm and is used for high-precision tasks such as assembly or parts handling. Due to its compact size, fast movement, and high repeatability, this robot is suitable for a wide range of operations, especially pick-and-place tasks that require speed and accuracy. To perform the pick-and-place operations on the metal plates, a vacuum–magnetic gripper that can hold up to approximately 3.5 kg is mounted on the robot’s end-effector, as shown in Figure 2b. The open-architecture CS9 controller, shown in Figure 2c, connects the robot to the computer via the Modbus TCP/IP industrial communication protocol and is widely used in production because it can be integrated rapidly.

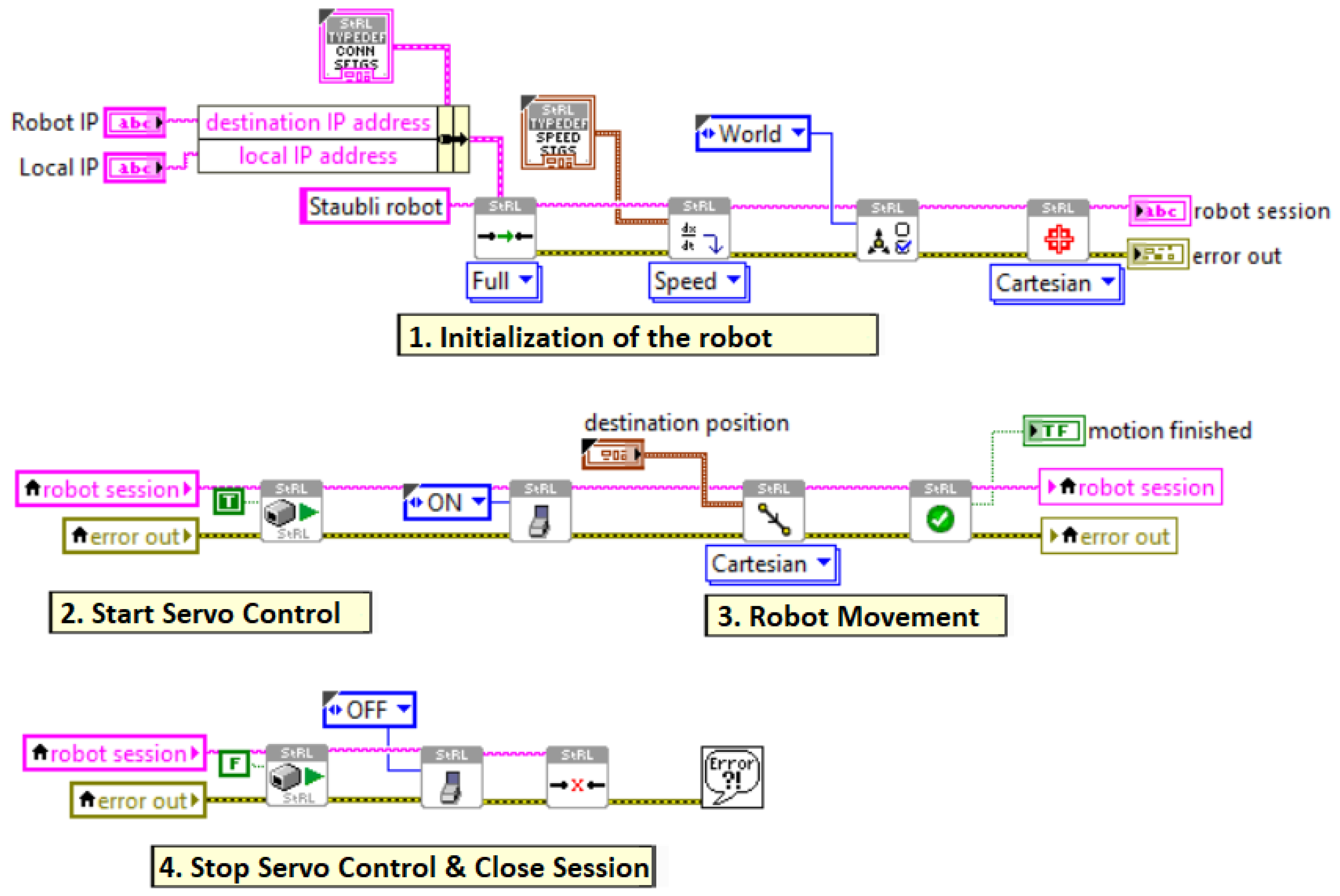

In order for the robot to perform the task of picking up the metal plates, first the 6D pose of the objects is calculated with the RGB-D data coming from the camera. Then, the pose of the target object in Cartesian space is converted to the target end-effector pose of the robot arm using camera calibration calculations. In order for the robot to pick up the objects quickly and precisely with a correct trajectory strategy, the target trajectory positions of the robot’s end-effector are sent to the CS9 controller. The CS9 controller performs the movement of the metal plate held at the end-effector of the robot from the picked point to the place point using the encoder data in the joint motors and forward kinematic calculations.
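The conversion from the camera frame to the robot base frame described above can be sketched as a product of homogeneous transforms. The following is a minimal illustration, not the calibration procedure used in this study: the matrices `T_base_cam` (camera pose in the base frame) and `T_cam_obj` (object pose in the camera frame) and all numeric values are hypothetical, and both rotations are restricted to the z-axis for brevity.

```python
import math


def make_transform(yaw_deg, translation):
    """4x4 homogeneous transform: rotation about z by yaw_deg, then a translation."""
    c, s = math.cos(math.radians(yaw_deg)), math.sin(math.radians(yaw_deg))
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def mat_mul(m, n):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(m[i][k] * n[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


# Hypothetical eye-to-hand calibration result: camera frame expressed in the base frame (metres).
T_base_cam = make_transform(90.0, (0.50, 0.10, 0.80))
# Hypothetical object pose estimated in the camera frame.
T_cam_obj = make_transform(30.0, (0.05, -0.02, 0.60))

# Object pose in the robot base frame, usable as the target end-effector pose.
T_base_obj = mat_mul(T_base_cam, T_cam_obj)
position = [T_base_obj[0][3], T_base_obj[1][3], T_base_obj[2][3]]
yaw_deg = math.degrees(math.atan2(T_base_obj[1][0], T_base_obj[0][0]))
print([round(v, 3) for v in position], round(yaw_deg, 3))
```

Because the camera is fixed, `T_base_cam` is constant after calibration, so only `T_cam_obj` has to be recomputed for each new image.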

In this study, forward kinematic calculations are performed to find the position and orientation of the end-effector frame {T} of a 6-DOF Staubli robot arm in Cartesian space with respect to the base frame {B}. A standard Denavit–Hartenberg (DH) method [47] is used to create the kinematic model of the robot and to perform forward kinematic calculations. This method defines the geometric relationship between each joint and link with four DH parameters ($\alpha$, $a$, $d$, and $\theta$). According to the link frame assignments of the Staubli TX2-60L robot arm we created, as shown in Figure 3, the DH parameters for each link are determined as in Table 1.

In Table 1, $\alpha$ is the twist angle between two joint axes, $a$ is the distance between two adjacent joint axes, $d$ is the translation from one link to the other along the joint axis, and $\theta$ is the angle of rotation about the joint axis.

The variable DH parameters of this robot arm, all six joints of which are rotary, are the joint angles $\theta$ for each joint, which are measured from the encoder data in the motors/actuators. The other three DH parameters of the robot arm ($\alpha$, $a$, and $d$) are fixed. These constant parameters are

m,

m,

m, and

m. Based on the standard definitions of the DH parameters [

48], link-to-link transformation matrices are formed as

where

and

represent the sine and cosine of the joint angle

, respectively, and

and

represent the sine and cosine of the twist angle

, respectively.

When each link-to-link transformation matrix is calculated from

to

and multiplied by all, the transformation matrix that gives the pose information of the end-effector of the robot arm

with respect to the base is found as

The equations for the

position vector entries (

,

, and

) obtained from the base-to-end-effector transformation matrix in (

2) can be calculated according to the DH parameters as

Using the 3 × 3 rotation matrix of the robot arm obtained from the base-to-end-effector transformation matrix in (

2), the Euler angles (roll

, pitch

, and yaw

) representing the orientation of the end-effector in Cartesian space can be calculated according to the DH parameters as

where

and

.
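The forward kinematic chain described in this section can be sketched in a few lines. The following is a minimal illustration that assumes the classic (distal) DH convention, in which each link transform is Rot_z(θ)·Trans_z(d)·Trans_x(a)·Rot_x(α), and a roll-pitch-yaw extraction for R = Rz(yaw)·Ry(pitch)·Rx(roll); the modified DH convention orders the elementary transforms differently. The function names are hypothetical, and the two-link planar arm is a placeholder, not the TX2-60L parameter set of Table 1.

```python
import math


def dh_matrix(alpha, a, d, theta):
    """Link-to-link transform under the classic DH convention (assumed here)."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def mat_mul(m, n):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(m[i][k] * n[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def forward_kinematics(dh_rows, joint_angles):
    """Chain the link transforms; each row holds the fixed (alpha, a, d) of one link."""
    pose = [[float(i == j) for j in range(4)] for i in range(4)]  # identity
    for (alpha, a, d), theta in zip(dh_rows, joint_angles):
        pose = mat_mul(pose, dh_matrix(alpha, a, d, theta))
    return pose


def roll_pitch_yaw(pose):
    """Angles for R = Rz(yaw) * Ry(pitch) * Rx(roll); ill-defined at pitch = +/-90 degrees."""
    roll = math.atan2(pose[2][1], pose[2][2])
    pitch = math.atan2(-pose[2][0], math.hypot(pose[0][0], pose[1][0]))
    yaw = math.atan2(pose[1][0], pose[0][0])
    return roll, pitch, yaw


# Placeholder two-link planar arm with unit link lengths (NOT the TX2-60L values).
PLANAR_DH = [(0.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
pose = forward_kinematics(PLANAR_DH, [math.pi / 2, 0.0])
position = [pose[0][3], pose[1][3], pose[2][3]]
roll, pitch, yaw = roll_pitch_yaw(pose)
print([round(v, 6) for v in position], round(math.degrees(yaw), 3))
```

For a six-joint arm, `dh_rows` would hold six (α, a, d) rows and `joint_angles` the six encoder readings, with any fixed joint offsets added to the angles beforehand.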

7. Conclusions

This work addressed the critical challenge of high-precision 6D pose estimation for robotic pick-and-place applications in a real industrial production line. An integrated system combining a Staubli TX2-60L industrial robot, an Intel RealSense RGB-D camera, and a novel analytics-based software solution built in LabVIEW and NI Vision was successfully developed. The proposed analytical method proved to be highly effective in accurately calculating the 6D pose of metal plates in real time. In extensive experimental trials in four challenging scenarios, the system demonstrated exceptional performance, achieving position estimation errors of less than 2 mm and rotation estimation errors of less than . This level of precision allowed the robot to successfully perform the pick-and-place task in all trials and accurately place the metal plates in a tight 2 mm tolerance slot.

In a comparative analysis, the developed analytical solution significantly outperformed popular hybrid methods that combine the YOLO-v8 deep learning-based object detector with geometric algorithms such as PnP and RANSAC. The hybrid methods produced larger prediction errors, which prevented the robot from reliably picking up and placing the metal plates. These findings highlight the value of a dedicated analytics-based approach for specific industrial applications where high accuracy and robustness are crucial. They also indicate that, for well-defined industrial problems, traditional geometry-focused machine vision techniques can be a more effective alternative to learning-based models such as YOLO and its hybrid solutions, providing a validated, practical, and highly reliable solution for automating complex manipulation tasks.

Although our study primarily addresses high-precision pose estimation within a semi-static industrial setting, we recognize that real-world applications frequently introduce complexities such as dynamic conveyors, moving targets, and fluctuating lighting or occlusion. However, the modular nature of our proposed method allows for adaptation to such dynamic environments. This would involve integrating elements such as high-speed imaging, motion synchronization, or predictive control. Extending this methodology to these more challenging scenarios would require custom setups, including robot-specific control strategies, customized grippers, and potentially multiple camera configurations to effectively manage occlusion and speed constraints.

The proposed method was experimentally validated using rigid and planar metallic brake pad plates due to their relevance in the target industrial application. However, the underlying analytical approach can be adapted to other types of objects with appropriate modifications to perception or manipulation systems. For example, handling reflective or non-planar objects may require different camera types (e.g., polarization or structured light sensors), lighting control, or alternative end-effectors. The current system also demonstrated robustness under cluttered scenes, with randomly oriented and overlapping objects. Future work will explore extending the method to non-metallic or deformable objects, integrating multi-view perception, and applying it in other industrial domains beyond automotive assembly lines.