Low-Latency Edge-Enabled Digital Twin System for Multi-Robot Collision Avoidance and Remote Control

Abstract

1. Introduction

- A novel DT-based multi-robot collision avoidance system that integrates real-time predictive modeling and trajectory optimization to enhance navigation accuracy.

- An edge computing-based smart observer architecture that preprocesses and extracts critical motion data before transmission, significantly reducing bandwidth usage compared to conventional raw sensor data transmission.

- A communication-efficient feature-based data transmission model that replaces raw video feeds with compressed trajectory data, achieving lower latency and improved real-time responsiveness.

- An implementation of an edge-enabled digital twin networked control system (E-DTNCS) that demonstrates real-world synchronization between physical robots and their digital counterparts.

- Extensive experimental validation comparing the proposed system with conventional sensor-based and remote-controlled approaches, demonstrating significant improvements in collision avoidance accuracy and latency reduction.

2. Related Works

3. System Model

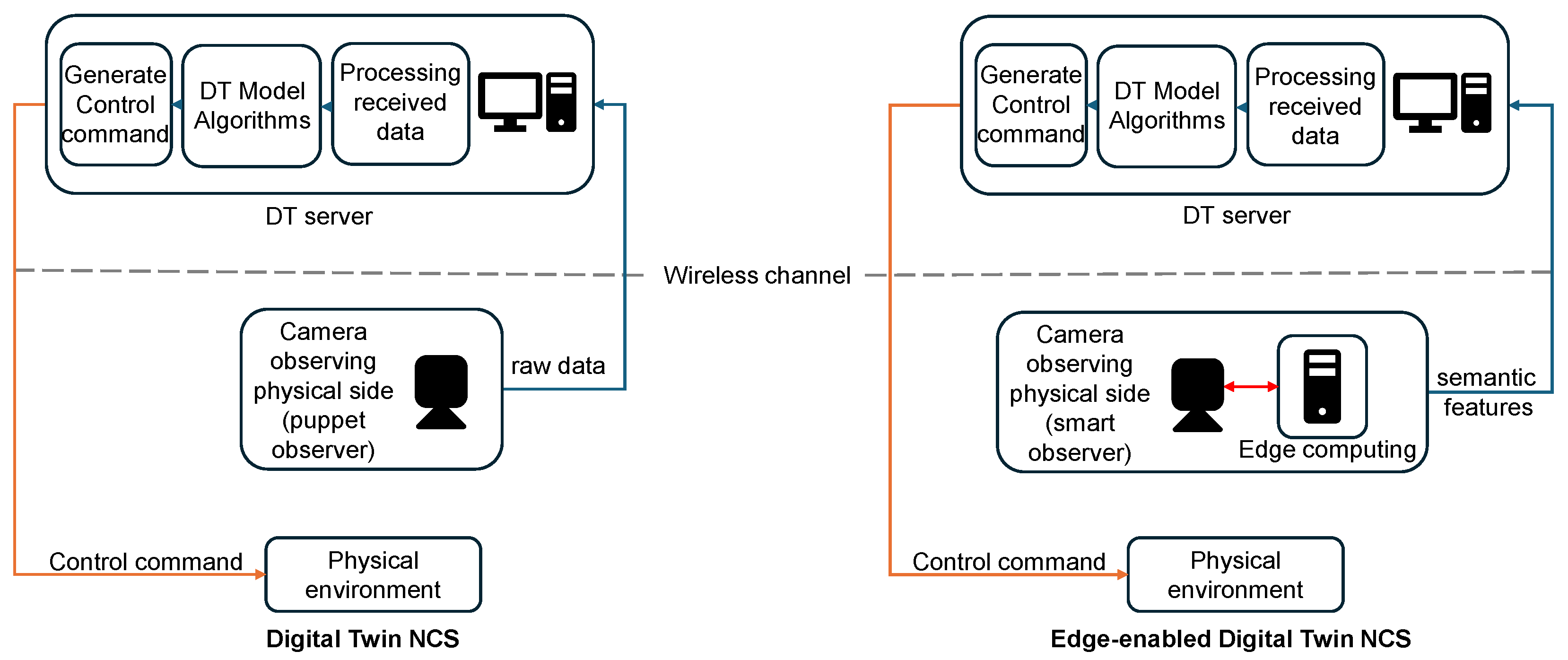

3.1. System Architecture

- The Physical Layer (Mobile Vehicle): This layer consists of a fleet of $N$ mobile robots navigating in a shared physical workspace. Each robot is a simple agent with basic locomotion capabilities and is responsible for executing control commands received from the DT server.

- The Edge Layer (Smart Observer): This layer comprises an off-board sensing unit, such as a camera mounted with a view of the workspace, connected to an edge-computing device (e.g., a single-board computer). This smart observer captures raw sensor data (e.g., video frames) of the entire operational area, performs real-time feature extraction to determine the state of each robot, and transmits this compact feature information to the DT server.

- The Digital Twin Layer (DT Server): A remote server that hosts the digital twin of the entire multi-robot system. It receives feature data from the edge observer, updates the state of the virtual robots in the simulation, runs predictive collision avoidance algorithms, and generates and sends corrective control commands back to the physical robots via a wireless network.
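To make the difference between raw frames and feature packets concrete, the following is a minimal sketch of how the edge observer could serialize per-robot features. The wire format (`RECORD`), the field choice, and the function names are hypothetical and are not taken from the paper; only the 640 × 480 frame resolution comes from Section 4.2.

```python
import struct

# Hypothetical wire format (an assumption, not the paper's): little-endian
# robot id (uint16), x and y in pixels (float32 each), capture time in ms (uint32).
RECORD = struct.Struct("<HffI")


def encode_features(detections, t_ms):
    """Pack a list of (robot_id, x, y) tuples into one datagram payload."""
    return b"".join(RECORD.pack(rid, x, y, t_ms) for rid, x, y in detections)


def decode_features(payload):
    """Unpack a payload back into (robot_id, x, y, t_ms) tuples."""
    return [RECORD.unpack_from(payload, offset)
            for offset in range(0, len(payload), RECORD.size)]


# Uncompressed size of one RGB frame at the resolution reported in Section 4.2.
RAW_FRAME_BYTES = 640 * 480 * 3

payload = encode_features([(1, 120.5, 88.0), (2, 301.25, 240.0)], t_ms=1000)
print("feature payload bytes:", len(payload))
print("raw frame bytes:", RAW_FRAME_BYTES)
```

Under these assumptions the payload grows linearly with the number of robots and is independent of image resolution, which is the property the feature-based design relies on. A comparison against a compressed video stream would give a smaller ratio than the uncompressed figure used here.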

3.2. Robot State Representation

3.3. Network Communication and Low-Latency Requirements

3.3.1. End-to-End Latency Model

3.3.2. Delay-Robust Safety Condition

3.3.3. Lyapunov Stability with Time-Evolving Distance Error Bound

4. Edge-Enabled Digital Twin Collision Avoidance Framework

4.1. Framework Overview

- Sensing: A smart observer with a global view of the workspace captures a video stream of the robots.

- Edge Feature Extraction: Instead of transmitting the high-bandwidth video stream, the edge device processes each frame locally to extract low-dimensional features, specifically, the coordinates of each robot.

- Feature Transmission: These compact feature packets are transmitted over a wireless network to the DT server. This step significantly reduces the data payload compared to sending raw images, as shown in the results in Section 6.

- Digital Twin Synchronization: The DT server receives the feature packets and updates the states of the corresponding virtual robots in its simulation environment.

- Predictive Collision Avoidance: The server runs the Guarding Circle algorithm on the updated digital twin states to predict and identify potential future collisions.

- Actuation: If a potential collision is detected, the server issues a control command (e.g., DecelerateOrStop) to the appropriate robot(s) to prevent the collision.
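The synchronization step in the pipeline above can be illustrated with a small sketch. It assumes that each feature packet carries a robot identifier, a position, and a timestamp, and that the twin estimates velocity by finite differences; the class and method names (`VirtualRobot`, `DigitalTwin`, `sync`, `predict`) are hypothetical and do not come from the paper.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VirtualRobot:
    """State of one virtual robot in the twin (illustrative fields only)."""
    x: float = 0.0
    y: float = 0.0
    vx: float = 0.0
    vy: float = 0.0
    t: Optional[float] = None  # timestamp of the last accepted update


class DigitalTwin:
    def __init__(self):
        self.robots = {}

    def sync(self, robot_id, x, y, t):
        """Update a virtual robot from one feature packet."""
        robot = self.robots.setdefault(robot_id, VirtualRobot())
        if robot.t is not None:
            if t <= robot.t:
                return robot  # stale or out-of-order packet: keep current state
            dt = t - robot.t
            # Finite-difference velocity estimate (an assumption of this sketch).
            robot.vx = (x - robot.x) / dt
            robot.vy = (y - robot.y) / dt
        robot.x, robot.y, robot.t = x, y, t
        return robot

    def predict(self, robot_id, horizon):
        """Constant-velocity position prediction `horizon` seconds ahead."""
        robot = self.robots[robot_id]
        return (robot.x + robot.vx * horizon, robot.y + robot.vy * horizon)


twin = DigitalTwin()
twin.sync(1, 0.0, 0.0, 0.0)
twin.sync(1, 0.5, 0.0, 0.5)
print(twin.predict(1, 2.0))
```

Discarding packets whose timestamp is not newer than the stored one is one simple way to tolerate reordering on a lossy wireless link; a real deployment might instead filter or smooth the estimates.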

4.2. Edge-Based Feature Extraction

- Frame Acquisition: Frames are acquired with the Raspberry Pi Camera V2.1 at a resolution of 640 × 480 pixels and a frame rate of 30 FPS. Acquisition is handled by imutils.VideoStream, which wraps the PiCamera interface for asynchronous frame grabbing. This configuration favors real-time operation while maintaining adequate image quality for low-level feature analysis. Each raw frame is a dense, high-dimensional RGB signal $I(x, y, c)$, where $(x, y)$ are pixel coordinates and $c$ denotes the color channel.

- Color Segmentation: To make object detection robust to lighting, the RGB image is converted to the HSV color space. HSV separates chromatic content (Hue) from intensity (Value), which provides robustness against illumination changes and shadows. Gaussian blurring with a fixed-size kernel is applied to suppress high-frequency noise:

  $$I_{\text{blur}} = G * I$$

  where $G$ is the Gaussian kernel and $*$ denotes convolution. A color mask is then applied using an empirically calibrated range in HSV space. This generates a binary image in which white pixels represent regions of interest (ROIs).

- Blob Analysis: The binary mask often contains noise and fragmented components. To refine it, two morphological operations are applied:

  - Erosion with a kernel: removes small isolated noise.

  - Dilation with a kernel: restores the size of significant regions.

  Mathematically, erosion and dilation of the binary mask $B$ are defined as follows:

  $$B \ominus K = \{\, z \mid K_z \subseteq B \,\}, \qquad B \oplus K = \{\, z \mid \hat{K}_z \cap B \neq \emptyset \,\}$$

  where $K$ is the structuring element (kernel), $K_z$ is $K$ translated by $z$, and $\hat{K}$ is the reflection of $K$. Contours in the filtered mask are then extracted using OpenCV’s findContours(). This yields a set of external contours, each representing a detected object or robot marker.

- Centroid Extraction: Each contour is a set of boundary pixels. To compute its geometric center (centroid), image moments are used. For a binary shape $S$, the spatial moment of order $(p + q)$ is defined as follows:

  $$M_{pq} = \sum_{(x, y) \in S} x^{p} y^{q}$$

  For centroid computation:

  - $M_{00}$: area of the blob.

  - $M_{10}$, $M_{01}$: first-order spatial moments.

  The centroid is then derived as follows:

  $$c_x = \frac{M_{10}}{M_{00}}, \qquad c_y = \frac{M_{01}}{M_{00}}$$

  In the implementation, contours with very small areas (e.g., noise) are filtered out by imposing a minimum radius threshold in pixels. Each valid detection is annotated and appended to the output structure.
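The segmentation, morphology, and centroid steps can be traced end to end on a toy image. The sketch below is a dependency-free illustration written with the Python standard library, not the OpenCV-based implementation used on the edge device. The HSV bounds, the 3 × 3 neighbourhood, the zero-valued border handling, and all function names are assumptions chosen for the example.

```python
import colorsys


def hsv_mask(rgb, lo, hi):
    """Binary mask: 1 where a pixel's (h, s, v) lies within [lo, hi] per channel."""
    mask = []
    for row in rgb:
        out = []
        for r, g, b in row:
            hsv = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            out.append(int(all(l <= v <= h for v, l, h in zip(hsv, lo, hi))))
        mask.append(out)
    return mask


def _morph(mask, combine):
    """Apply min or max over a 3x3 neighbourhood; outside pixels count as 0."""
    h, w = len(mask), len(mask[0])

    def at(y, x):
        return mask[y][x] if 0 <= y < h and 0 <= x < w else 0

    return [[combine(at(y + dy, x + dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1))
             for x in range(w)] for y in range(h)]


def erode(mask):
    return _morph(mask, min)   # keeps a pixel only if its whole neighbourhood is set


def dilate(mask):
    return _morph(mask, max)   # sets a pixel if any neighbour is set


def centroid(mask):
    """Centroid (c_x, c_y) from moments M00, M10, M01; None for an empty mask."""
    m00 = sum(v for row in mask for v in row)
    if m00 == 0:
        return None
    m10 = sum(x * v for row in mask for x, v in enumerate(row))
    m01 = sum(y * v for y, row in enumerate(mask) for v in row)
    return (m10 / m00, m01 / m00)


# Toy 7x7 frame: a 3x3 green marker plus one isolated green noise pixel.
GREEN, BLACK = (0, 255, 0), (0, 0, 0)
frame = [[BLACK] * 7 for _ in range(7)]
for y in range(2, 5):
    for x in range(3, 6):
        frame[y][x] = GREEN
frame[0][0] = GREEN

mask = hsv_mask(frame, lo=(0.25, 0.5, 0.5), hi=(0.45, 1.0, 1.0))
cleaned = dilate(erode(mask))
print(centroid(cleaned))
```

Erosion followed by dilation removes the isolated pixel while restoring the marker to its original extent, so the centroid is computed on the marker alone. In OpenCV the corresponding calls are `cv2.inRange`, `cv2.erode`, `cv2.dilate`, and `cv2.moments`, whose border handling differs from the simplification used here.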

4.3. Guarding Circle Collision Avoidance Algorithm

Algorithm 1. Guarding-Circle Collision Avoidance.
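As an illustration of the kind of check a guarding-circle scheme performs, the sketch below is an interpretation under stated assumptions, not a reproduction of Algorithm 1. It assumes every robot carries a circle of equal radius, that robots move at constant velocity over a short prediction horizon, and that the robot with the larger identifier yields. The function names and the yielding rule are hypothetical; only the `DecelerateOrStop` command name comes from Section 4.1.

```python
import math
from itertools import combinations


def min_separation(a, b, horizon):
    """Smallest distance between two constant-velocity robots within [0, horizon]."""
    px, py = b["x"] - a["x"], b["y"] - a["y"]        # relative position
    vx, vy = b["vx"] - a["vx"], b["vy"] - a["vy"]    # relative velocity
    speed_sq = vx * vx + vy * vy
    if speed_sq == 0:
        t = 0.0  # no relative motion: separation is constant
    else:
        # Time of closest approach, clamped to the prediction window.
        t = max(0.0, min(horizon, -(px * vx + py * vy) / speed_sq))
    return math.hypot(px + vx * t, py + vy * t)


def guarding_circle_commands(robots, guard_radius, horizon):
    """Return {robot_id: command} for robots whose guarding circles would overlap."""
    commands = {}
    for i, j in combinations(sorted(robots), 2):
        if min_separation(robots[i], robots[j], horizon) < 2 * guard_radius:
            commands[j] = "DecelerateOrStop"  # assumed rule: larger id yields
    return commands


# Intersection-style example: two robots approach the origin at 0.16 m/s,
# while a third robot is parked far from both paths.
robots = {
    1: {"x": -1.0, "y": 0.0, "vx": 0.16, "vy": 0.0},
    2: {"x": 0.0, "y": -1.0, "vx": 0.0, "vy": 0.16},
    3: {"x": 5.0, "y": 5.0, "vx": 0.0, "vy": 0.0},
}
print(guarding_circle_commands(robots, guard_radius=0.15, horizon=10.0))
```

Using the closed-form time of closest approach avoids stepping through the horizon in small increments. The horizon itself is a tuning choice in this sketch: with a short horizon the same two robots would not yet trigger a command.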

5. Experimental Setup

5.1. Experimental Environment

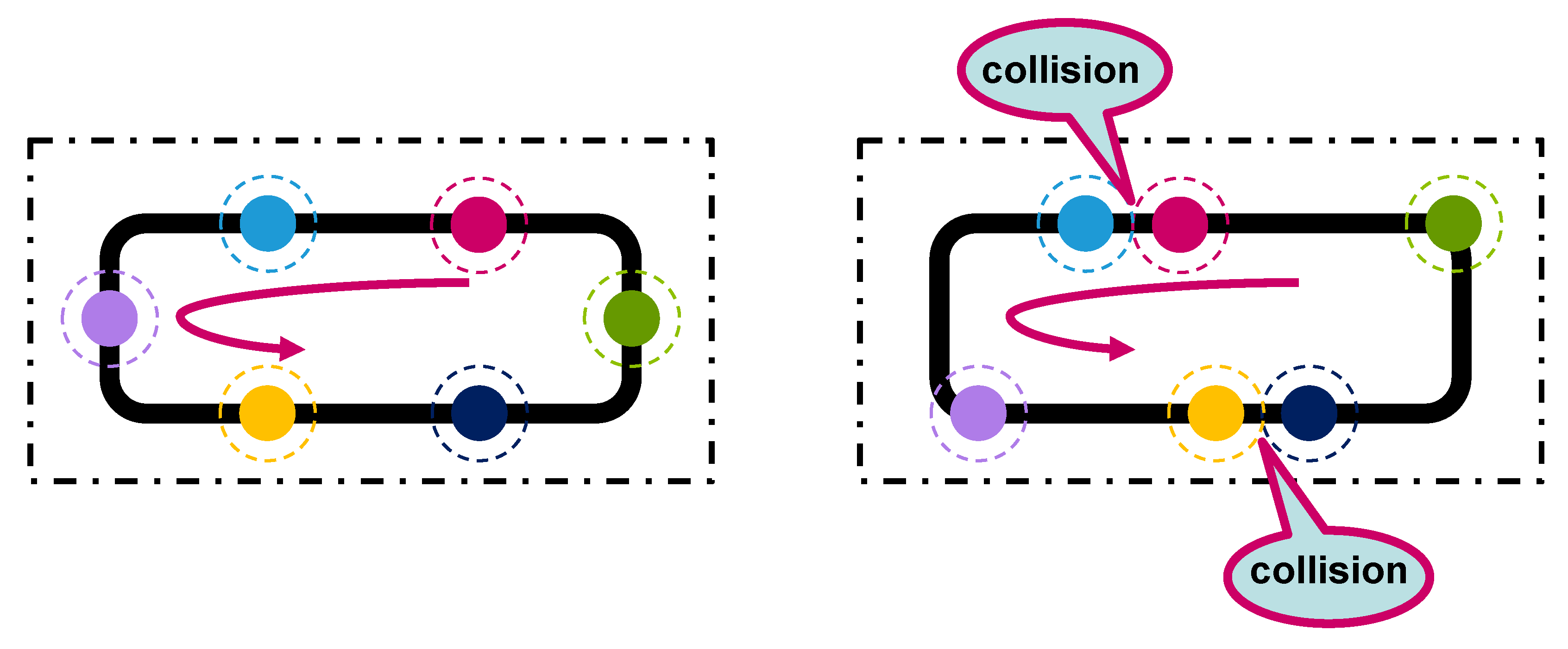

5.1.1. Intersection Collision

5.1.2. Rear-End Collision (Loop Collision)

5.2. Experimental Procedure

- Four nominal speeds (0.13, 0.14, 0.16, 0.17 m/s);

- Two traffic patterns—simple loop and intersection.

6. Results and Discussion

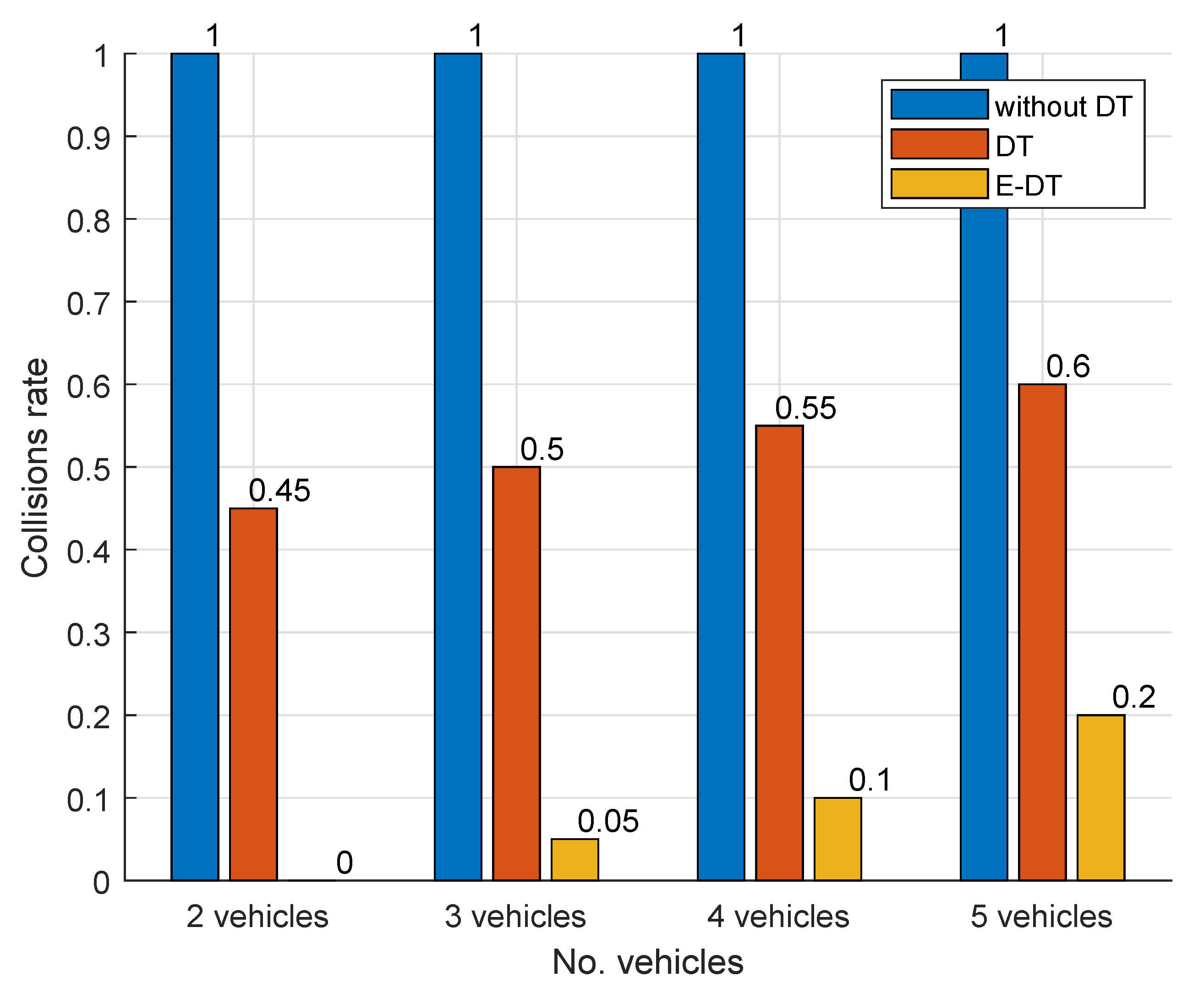

6.1. Collision Avoidance Comparison

6.2. Inter-Robot Distance Stability Under Varying Fleet Sizes

6.3. Communication Efficiency

6.4. Validating E-DTNCS Reliability Under Channel Impairments

7. Limitations and Future Work

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CPU | Central Processing Unit |
| DT | Digital Twin |
| DTNCS | Digital Twin Networked Control Systems |
| E-DT | Edge-Enabled Digital Twin |
| E-DTNCS | Edge-Enabled Digital Twin Networked Control System |
| GB | Gigabyte |
| GPIO | General Purpose Input/Output |
| IR | Infrared |
| LiDAR | Light Detection and Ranging |
| PER | Packet Error Rate |
| SNR | Signal-to-Noise Ratio |
| UDP | User Datagram Protocol |
| WLAN | Wireless Local Area Network |


| Specification | Value |
|---|---|
| SoC | Broadcom BCM2837B0 |
| Core Type | Cortex-A53 64-bit |
| No. of Cores | 4 |
| GPU | VideoCore IV |
| CPU Clock Speed | 1.4 GHz |
| RAM | 1 GB LPDDR2 |
| Power Requirements | 1.13 A @ 5 V |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mtowe, D.P.; Long, L.; Kim, D.M. Low-Latency Edge-Enabled Digital Twin System for Multi-Robot Collision Avoidance and Remote Control. Sensors 2025, 25, 4666. https://doi.org/10.3390/s25154666
